Swarm Optimization and Machine Learning Applied to PE Malware Detection towards Cyber Threat Intelligence

Abstract

1. Introduction

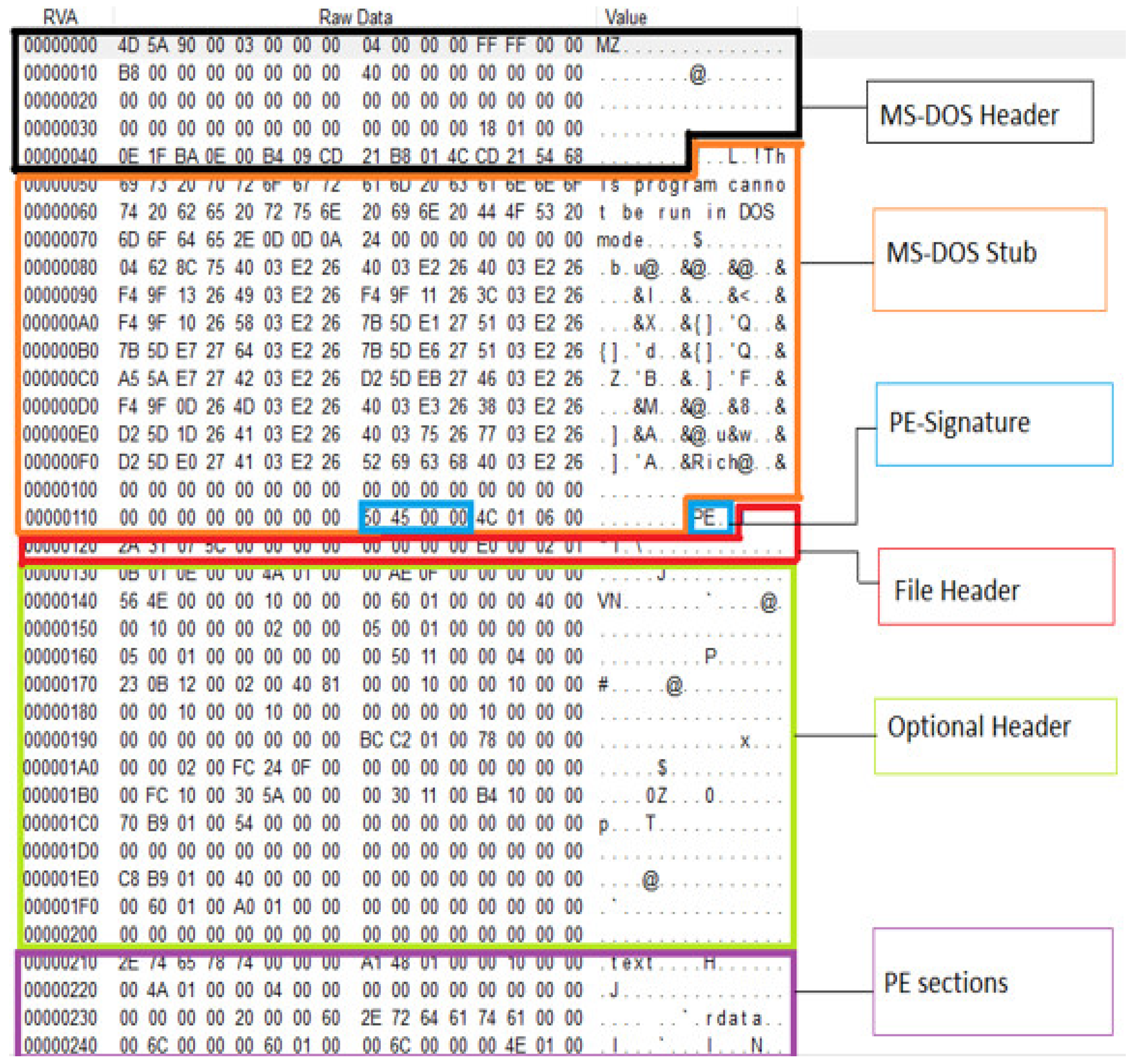

Overview of PE File Header Attributes

- Developing novel swarm-optimization-based algorithms that minimize the set of PE header attributes.

- Evaluating performance on existing benchmark datasets with various ML classifiers.

- Proposing an updated dataset of pure PE file header attributes, called SOMLAP (Swarm Optimization and Machine Learning Applied to PE Malware Detection).

- Comparing performance against existing works and validating the SOMLAP dataset.

2. Literature Review

3. SOMLAP Dataset Construction

3.1. Features Taken from DOS_Header

| S. No. | Field | Description |
|---|---|---|
| 1. | Blp | Bytes on the last page of the file |
| 2. | Fp | Pages in the file |
| 3. | Rn | Number of relocations |
| 4. | Prhdr | Size of the header in paragraphs |
| 5. | Minpar | Minimum extra paragraphs required |
| 6. | Maxpar | Maximum extra paragraphs required |
| 7. | Ivalss | Initial value of SS |
| 8. | Ivalsp | Initial value of SP |
| 9. | doscksum | Checksum value |
| 10. | Iip | Initial value of the instruction pointer (IP) |
| 11. | Ics | Initial value of CS |
| 12. | Rta | File address of the relocation table |
| 13. | Ovn | Overlay number |
| 14. | Idoem | OEM identifier |
| 15. | Infoem | OEM information |
| 16. | exehdradr | File address of the new EXE (PE) header |
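The 16 DOS_Header attributes above appear to follow, in order, the IMAGE_DOS_HEADER fields e_cblp through e_ovno, then e_oemid, e_oeminfo, and e_lfanew (the reserved e_res arrays are skipped). The sketch below reads them with Python's standard struct module. It is an illustration under that mapping assumption, not the authors' extraction code, and the names `DOS_FIELDS`, `DOS_FMT`, and `dos_header_features` are hypothetical.

```python
import struct

# Attribute names from the DOS_Header table, in the order the corresponding
# fields appear in IMAGE_DOS_HEADER (assumed mapping).
DOS_FIELDS = [
    "Blp", "Fp", "Rn", "Prhdr", "Minpar", "Maxpar", "Ivalss", "Ivalsp",
    "doscksum", "Iip", "Ics", "Rta", "Ovn", "Idoem", "Infoem", "exehdradr",
]

# IMAGE_DOS_HEADER, 64 bytes, little-endian:
# e_magic, 13 WORDs (e_cblp..e_ovno), e_res[4], e_oemid, e_oeminfo,
# e_res2[10], e_lfanew (DWORD).
DOS_FMT = "<2s13H8s2H20sI"


def dos_header_features(data: bytes) -> dict:
    """Return the 16 DOS_Header attributes of an MZ/PE file image."""
    if len(data) < struct.calcsize(DOS_FMT):
        raise ValueError("file too small for a DOS header")
    magic, *words, _res, oemid, oeminfo, _res2, lfanew = struct.unpack_from(DOS_FMT, data, 0)
    if magic != b"MZ":
        raise ValueError("missing MZ signature")
    # 13 leading WORDs plus e_oemid, e_oeminfo, e_lfanew -> 16 attributes
    return dict(zip(DOS_FIELDS, list(words) + [oemid, oeminfo, lfanew]))
```

For a file on disk, `dos_header_features(open(path, "rb").read())` returns a dictionary keyed by the table's attribute names; `exehdradr` is the offset at which the PE signature and COFF header begin.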

3.2. Features Gathered from the COFF_Header (File_Header)

| S. No. | Field | Description |
|---|---|---|
| 17. | mach | Target machine type for which the file was compiled |
| 18. | nsec | Number of sections (section table entries following the headers) |
| 19. | tds | Binary flag indicating whether the file's timestamp lies between 1980 and the present |
| 20. | ptrst | Pointer to the symbol table |
| 21. | stcnt | Number of symbol table entries |
| 22. | ohs | Size of the Optional_Header |
| 23. | char | Characteristics (file attribute flags) |
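The COFF header is the 20-byte IMAGE_FILE_HEADER that immediately follows the 4-byte "PE\0\0" signature at the offset stored in e_lfanew (exehdradr). A minimal standard-library sketch of features 17 to 23 follows. The table only says that tds is binary, so the exact rule used here (1 when the stamp lies between 1980-01-01 UTC and the current time) is an assumption, as are the helper names.

```python
import struct
import time
from typing import Optional

COFF_FIELDS = ["mach", "nsec", "tds", "ptrst", "stcnt", "ohs", "char"]
COFF_FMT = "<HHIIIHH"        # IMAGE_FILE_HEADER: 20 bytes, little-endian
EPOCH_1980 = 315_532_800     # 1980-01-01 00:00:00 UTC as a Unix timestamp


def coff_header_features(data: bytes, now: Optional[float] = None) -> dict:
    """Return the 7 COFF_Header attributes, with 'tds' reduced to a 0/1 flag."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)  # e_lfanew
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    feats = dict(zip(COFF_FIELDS, struct.unpack_from(COFF_FMT, data, pe_offset + 4)))
    now = time.time() if now is None else now
    # Assumed rule: 1 if TimeDateStamp lies between 1980-01-01 and `now`, else 0.
    feats["tds"] = int(EPOCH_1980 <= feats["tds"] <= now)
    return feats
```

Passing `now` explicitly keeps the flag reproducible when a dataset is rebuilt later.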

3.3. Features Extracted from the Optional_Header

| S. No. | Field | Description |
|---|---|---|
| 24. | sig | Signature (magic number) of the image |
| 25. | majlv | Major linker version |
| 26. | minlv | Minor linker version |
| 27. | codesize | Combined size of all code sections |
| 28. | initdatsize | Size of initialized data |
| 29. | uninitdatsize | Size of uninitialized data |
| 30. | adrentpt | Address of the entry point |
| 31. | cbase | Base address of the code section |
| 32. | dbase | Base address of the data section |
| 33. | ibase | Preferred base address of the image when loaded |
| 34. | secalign | Alignment of sections loaded into memory (bytes) |
| 35. | filealign | Alignment of raw section data in the image file (bytes) |
| 36. | majosver | Major version of the required OS |
| 37. | minosver | Minor version of the required OS |
| 38. | majiver | Major version of the image file |
| 39. | miniver | Minor version of the image file |
| 40. | majssver | Major version of the subsystem |
| 41. | minssver | Minor version of the subsystem |
| 42. | win32vv | Reserved (0 by default) |
| 43. | soi | Size of the image, including all headers, as loaded in memory (bytes) |
| 44. | soh | Combined size of all headers |
| 45. | optcksum | Checksum of the image |
| 46. | ss | Subsystem required to run the executable |
| 47. | dllch | DLL characteristics |
| 48. | sosr | Size of stack reserve |
| 49. | sosc | Size of stack commit |
| 50. | sohr | Size of heap reserve |
| 51. | sohc | Size of heap commit |
| 52. | ldflg | Loader flags (obsolete) |
| 53. | ndirent | Number of data directory entries in the Optional_Header |
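Features 24 to 53 match, in order, the fixed part of the Optional_Header that precedes the data directories. One detail matters in practice: 64-bit (PE32+) images, identified by the magic value 0x20B, have no BaseOfData field and use 64-bit ImageBase and stack/heap sizes. The sketch below handles both layouts; reporting dbase as 0 for PE32+ files is an assumed convention, not something stated in the text, and the function and constant names are hypothetical.

```python
import struct

OPT_FIELDS = [
    "sig", "majlv", "minlv", "codesize", "initdatsize", "uninitdatsize",
    "adrentpt", "cbase", "dbase", "ibase", "secalign", "filealign",
    "majosver", "minosver", "majiver", "miniver", "majssver", "minssver",
    "win32vv", "soi", "soh", "optcksum", "ss", "dllch",
    "sosr", "sosc", "sohr", "sohc", "ldflg", "ndirent",
]
PE32_MAGIC, PE32PLUS_MAGIC = 0x10B, 0x20B
# Fixed part of the optional header (data directories excluded).
PE32_FMT = "<HBB6I3I6H4I2H6I"          # 96 bytes, includes BaseOfData
PE32PLUS_FMT = "<HBB5IQ2I6H4I2H4Q2I"   # 112 bytes, no BaseOfData, 64-bit sizes


def optional_header_features(data: bytes, offset: int) -> dict:
    """Parse attributes 24-53 from the optional header starting at `offset`."""
    (magic,) = struct.unpack_from("<H", data, offset)
    if magic == PE32_MAGIC:
        values = list(struct.unpack_from(PE32_FMT, data, offset))
    elif magic == PE32PLUS_MAGIC:
        values = list(struct.unpack_from(PE32PLUS_FMT, data, offset))
        values.insert(8, 0)  # assumed placeholder: PE32+ has no BaseOfData
    else:
        raise ValueError(f"unknown optional-header magic {magic:#x}")
    return dict(zip(OPT_FIELDS, values))
```

The optional header starts 24 bytes after e_lfanew (4-byte signature plus 20-byte COFF header), so a typical call is `optional_header_features(data, exehdradr + 24)`.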

3.4. Section Table

| S. No. | Field | Description |
|---|---|---|
| 54. | Text_mscfaddr | File (physical) address |
| 55. | Text_secsize | Size of the section when loaded into memory |
| 56. | Text_byteaddr | Address of the first byte of the section when loaded into memory |
| 57. | Text_datsize | Size of the initialized data on disk |
| 58. | Text_ptrrawdat | File pointer to the first page of the section's raw data in the COFF file |
| 59. | Text_ptrreloc | File pointer to the beginning of the relocation entries |
| 60. | Text_ptrlinenum | File pointer to the line-number entries |
| 61. | Text_numrelocs | Number of relocation entries for the section |
| 62. | Text_numlinenums | Number of line-number entries for the section |
| 63. | Text_char | Section characteristics |
| 64. | Text_entro (derived) | Entropy computed over the section; a derived attribute, not a field of the section header |

| S. No. | Field | Description |
|---|---|---|
| 65. | Idata_mscfaddr | File (physical) address |
| 66. | Idata_secsize | Size of the section when loaded into memory |
| 67. | Idata_byteaddr | Address of the first byte of the section when loaded into memory |
| 68. | Idata_datsize | Size of the initialized data on disk |
| 69. | Idata_ptrrawdat | File pointer to the first page of the section's raw data in the COFF file |
| 70. | Idata_ptrreloc | File pointer to the beginning of the relocation entries |
| 71. | Idata_ptrlinenum | File pointer to the line-number entries |
| 72. | Idata_numrelocs | Number of relocation entries for the section |
| 73. | Idata_numlinenums | Number of line-number entries for the section |
| 74. | Idata_char | Section characteristics |
| 75. | Idata_entro (derived) | Entropy computed over the section; a derived attribute, not a field of the section header |

| S. No. | Field | Description |
|---|---|---|
| 76. | Rsrc_mscfaddr | File (physical) address |
| 77. | Rsrc_secsize | Size of the section when loaded into memory |
| 78. | Rsrc_byteaddr | Address of the first byte of the section when loaded into memory |
| 79. | Rsrc_datsize | Size of the initialized data on disk |
| 80. | Rsrc_ptrrawdat | File pointer to the first page of the section's raw data in the COFF file |
| 81. | Rsrc_ptrreloc | File pointer to the beginning of the relocation entries |
| 82. | Rsrc_ptrlinenum | File pointer to the line-number entries |
| 83. | Rsrc_numrelocs | Number of relocation entries for the section |
| 84. | Rsrc_numlinenums | Number of line-number entries for the section |
| 85. | Rsrc_char | Section characteristics |
| 86. | Rsrc_entro (derived) | Entropy computed over the section; a derived attribute, not a field of the section header |

| S. No. | Field | Description |
|---|---|---|
| 87. | Data_mscfaddr | File (physical) address |
| 88. | Data_secsize | Size of the section when loaded into memory |
| 89. | Data_byteaddr | Address of the first byte of the section when loaded into memory |
| 90. | Data_datsize | Size of the initialized data on disk |
| 91. | Data_ptrrawdat | File pointer to the first page of the section's raw data in the COFF file |
| 92. | Data_ptrreloc | File pointer to the beginning of the relocation entries |
| 93. | Data_ptrlinenum | File pointer to the line-number entries |
| 94. | Data_numrelocs | Number of relocation entries for the section |
| 95. | Data_numlinenums | Number of line-number entries for the section |
| 96. | Data_char | Section characteristics |
| 97. | Data_entro (derived) | Entropy computed over the section; a derived attribute, not a field of the section header |

| S. No. | Field | Description |
|---|---|---|
| 98. | bss_phyaddr | File (physical) address |
| 99. | bss_virsize | Size of the section when loaded into memory |
| 100. | bss_viraddr | Address of the first byte of the section when loaded into memory |
| 101. | bss_datsize | Size of the initialized data on disk |
| 102. | bss_ptrrawdat | File pointer to the first page of the section's raw data in the COFF file |
| 103. | bss_char | Section characteristics |
| 104. | bss_entro (derived) | Entropy computed over the section; a derived attribute, not a field of the section header |

| S. No. | Field | Description |
|---|---|---|
| 105. | bss_phyaddr | File (physical) address |
| 106. | bss_virsize | Size of the section when loaded into memory |
| 107. | bss_viraddr | Address of the first byte of the section when loaded into memory |
| 108. | bss_char | Section characteristics |
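Each section-table entry is a 40-byte IMAGE_SECTION_HEADER, and the derived *_entro attributes are entropies of the section contents. The sketch below assumes byte-level Shannon entropy in bits per byte (range 0 to 8) computed over the section's raw data on disk; the text does not state the exact formula. It emits the 11-attribute layout used for the Text, Idata, Rsrc, and Data sections (the bss tables use different names and a subset of fields), and assumes that mscfaddr and secsize both come from the header's Misc union, which stores PhysicalAddress and VirtualSize in the same four bytes. All names are hypothetical.

```python
import math
import struct
from collections import Counter

SECTION_FMT = "<8s6I2HI"  # IMAGE_SECTION_HEADER, 40 bytes, little-endian


def shannon_entropy(data: bytes) -> float:
    """Byte-level Shannon entropy in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    n = len(data)
    ent = 0.0
    for count in Counter(data).values():
        p = count / n
        ent -= p * math.log2(p)
    return ent


def section_features(data: bytes, hdr_offset: int, prefix: str) -> dict:
    """Attributes of one section header plus the derived entropy attribute."""
    (_name, misc, vaddr, rawsize, rawptr, relocptr, lineptr,
     nrelocs, nlines, chars) = struct.unpack_from(SECTION_FMT, data, hdr_offset)
    raw = data[rawptr:rawptr + rawsize]  # section bytes as stored on disk
    return {
        f"{prefix}_mscfaddr": misc,      # Misc union read as PhysicalAddress
        f"{prefix}_secsize": misc,       # same union read as VirtualSize
        f"{prefix}_byteaddr": vaddr,
        f"{prefix}_datsize": rawsize,
        f"{prefix}_ptrrawdat": rawptr,
        f"{prefix}_ptrreloc": relocptr,
        f"{prefix}_ptrlinenum": lineptr,
        f"{prefix}_numrelocs": nrelocs,
        f"{prefix}_numlinenums": nlines,
        f"{prefix}_char": chars,
        f"{prefix}_entro": shannon_entropy(raw),
    }
```

Section headers start at e_lfanew + 24 + ohs; selecting an entry by its Name field (for example `.text` for the `Text` prefix) is likewise an assumption. High entropy values (close to 8) are typical of packed or encrypted sections, which is why this derived attribute is useful for detection.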

Algorithm 1. Feature extraction from the malware and benign executable files

Input: The set of malware and benign executables

Output: Dataset with 108 features
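The body of Algorithm 1 is not recoverable from this text, so the following is only a hedged outline of the surrounding loop: walk the malware and benign folders, label each file (1 for malware and 0 for benign, an assumed encoding), apply a per-file feature extractor, skip files that fail to parse, and write one CSV row per sample. The extractor is passed in as a callable, for example a function that parses the header fields listed in Sections 3.1 to 3.4; `build_dataset` and the `class` column name are hypothetical.

```python
import csv
import os
import struct
from typing import Callable, Dict

Extractor = Callable[[bytes], Dict[str, float]]


def build_dataset(malware_dir: str, benign_dir: str, extract: Extractor, out_csv: str) -> int:
    """Write one labeled feature row per parseable file; return the row count."""
    rows = []
    for label, root in ((1, malware_dir), (0, benign_dir)):
        for dirpath, _dirs, files in os.walk(root):
            for fname in sorted(files):
                with open(os.path.join(dirpath, fname), "rb") as fh:
                    data = fh.read()
                try:
                    row = dict(extract(data))
                except (ValueError, struct.error):
                    continue  # skip malformed or non-PE files
                row["class"] = label
                rows.append(row)
    if not rows:
        return 0
    fieldnames = sorted({key for row in rows for key in row})
    with open(out_csv, "w", newline="") as fh:
        # restval=0 fills attributes a file lacks (e.g., an absent section)
        writer = csv.DictWriter(fh, fieldnames=fieldnames, restval=0)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

Filling absent attributes with 0 keeps every row at the same width; how the SOMLAP dataset actually encodes a missing section is not stated here.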

- An extension of the benchmark dataset [29] to investigate possible improvements in malware detection accuracy;

- An increased number of samples, 51,409 in total;

- Updated malware samples from VirusShare and benign samples from Windows 10;

- An increased attribute count of 108, including features from six sections of the section table;

- Purely PE-header-based attributes, intended to demonstrate that PE header fields alone support efficient malware detection.

4. Significant Feature Selection Using Swarm Optimization

4.1. Ant Colony Optimization for Feature Selection

Algorithm 2. Ant Colony Optimization Wrapper Feature Selection Algorithm

Input: Labeled training and evaluation datasets, number of features, number of ants, number of iterations, initial pheromone concentration, and tuning parameters

Output: A subset of the feature set that maximizes the fitness

Auxiliary: Pheromone matrix, ant solutions, ant fitness values, and the best solution
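Only the inputs and outputs of Algorithm 2 survive here, so the following is a generic binary ACO wrapper sketch, not a reconstruction of the authors' algorithm. Each feature carries two pheromone trails (include and exclude); an ant includes feature i with probability tau_on^alpha / (tau_on^alpha + tau_off^alpha); after each iteration all trails evaporate by rho and the best-so-far ant deposits q times its fitness along its choices. The `fitness` callable stands in for the decision-tree wrapper: in practice it would train a DT on the selected columns and score it on evaluation data, possibly combined as omega * accuracy + (1 - omega) * (1 - k/n), an assumed, commonly used form. Defaults mirror alpha = 0.5, rho = 0.1, q = 0.5, no_ants = 10, and no_iter = 10 from the parameter table; beta and epsilon are not modeled, and all names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Fitness = Callable[[Sequence[int]], float]


def aco_feature_selection(
    n_features: int,
    fitness: Fitness,
    n_ants: int = 10,
    n_iter: int = 10,
    alpha: float = 0.5,
    rho: float = 0.1,
    q: float = 0.5,
    tau0: float = 1.0,
    seed: int = 0,
) -> Tuple[List[int], float]:
    """Binary ACO wrapper: returns (best feature mask, its fitness)."""
    rng = random.Random(seed)
    # tau[i] = [pheromone for excluding feature i, pheromone for including it]
    tau = [[tau0, tau0] for _ in range(n_features)]
    best_mask = [1] * n_features  # start from the full feature set
    best_fit = fitness(best_mask)
    for _ in range(n_iter):
        colony = []
        for _ in range(n_ants):
            mask = []
            for off, on in tau:
                p_on = on ** alpha / (on ** alpha + off ** alpha)
                mask.append(1 if rng.random() < p_on else 0)
            if not any(mask):  # a wrapper needs at least one feature
                mask[rng.randrange(n_features)] = 1
            colony.append((fitness(mask), mask))
        it_fit, it_mask = max(colony, key=lambda ant: ant[0])
        if it_fit > best_fit:
            best_fit, best_mask = it_fit, it_mask
        # Evaporate every trail, then deposit along the best-so-far path.
        for i, bit in enumerate(best_mask):
            tau[i][0] *= 1.0 - rho
            tau[i][1] *= 1.0 - rho
            tau[i][bit] += q * max(best_fit, 0.0)
    return best_mask, best_fit
```

Because the search starts from the full feature set and only accepts strict improvements, the returned subset is never worse (under `fitness`) than using all features.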

4.2. Cuckoo Search Optimization for Feature Selection

Algorithm 3. Binary Cuckoo Search Optimization Wrapper Feature Selection Algorithm

Input: Labeled training and evaluation datasets, number of features, number of nests, number of iterations, and tuning parameters

Output: A subset of the feature set that maximizes the fitness

Auxiliary: Nests, i.e., candidate solutions in a binary representation of the features; the best solution; and the fitness of the nests

4.3. Grey Wolf Optimization

Algorithm 4. Grey Wolf Optimization Wrapper Feature Selection Algorithm

Input: Labeled training data, evaluation dataset, number of features, number of iterations, and tuning parameters

Output: A subset of the feature set that maximizes the fitness

Auxiliary: Wolves, i.e., candidate solutions in a binary representation of the features; the alpha, beta, and delta wolves; the best solution; and the fitness of the wolves
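Algorithm 4 is likewise only outlined, so the sketch below shows a generic binary Grey Wolf Optimizer wrapper following the update rules of Mirjalili et al. [48] with a sigmoid transfer function of the kind used by Emary et al. [49]. Wolves hold continuous positions in [0, 1]; the three fittest (alpha, beta, delta) guide every wolf through A = 2a*r1 - a and C = 2*r2, with a decreasing linearly from 2 to 0; and each coordinate becomes a bit with probability 1 / (1 + exp(-10 (x - 0.5))). Clamping positions to [0, 1], the guard that keeps at least one feature, and all names are assumptions; `fitness` again stands in for the decision-tree wrapper, and the defaults mirror no_wolfs = 10 and no_iter = 10 from the parameter table.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Fitness = Callable[[Sequence[int]], float]


def _to_bits(x: List[float], rng: random.Random) -> List[int]:
    """Sigmoid transfer: bit d is 1 with probability 1 / (1 + exp(-10 (x_d - 0.5)))."""
    bits = [1 if rng.random() < 1.0 / (1.0 + math.exp(-10.0 * (xd - 0.5))) else 0
            for xd in x]
    if not any(bits):  # keep at least one feature
        bits[rng.randrange(len(bits))] = 1
    return bits


def gwo_feature_selection(n_features: int, fitness: Fitness, n_wolves: int = 10,
                          n_iter: int = 10, seed: int = 0) -> Tuple[List[int], float]:
    """Binary grey wolf wrapper: returns (best feature mask, its fitness)."""
    if n_wolves < 3:
        raise ValueError("GWO needs at least three wolves (alpha, beta, delta)")
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(n_features)] for _ in range(n_wolves)]
    masks = [_to_bits(p, rng) for p in pos]
    fits = [fitness(m) for m in masks]
    best_mask, best_fit = masks[0][:], float("-inf")
    for t in range(n_iter + 1):
        ranked = sorted(range(n_wolves), key=fits.__getitem__, reverse=True)
        if fits[ranked[0]] > best_fit:
            best_fit, best_mask = fits[ranked[0]], masks[ranked[0]][:]
        if t == n_iter:
            break  # final ranking done; no further moves
        leaders = [pos[k][:] for k in ranked[:3]]  # alpha, beta, delta
        a = 2.0 - 2.0 * t / n_iter                 # decreases linearly from 2 toward 0
        for i in range(n_wolves):
            new = []
            for d in range(n_features):
                total = 0.0
                for lead in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    total += lead[d] - A * abs(C * lead[d] - pos[i][d])
                new.append(min(1.0, max(0.0, total / 3.0)))  # average, clamp to [0, 1]
            pos[i] = new
            masks[i] = _to_bits(new, rng)
            fits[i] = fitness(masks[i])
    return best_mask, best_fit
```

Early on, |A| can exceed 1 and wolves overshoot the leaders (exploration); as a shrinks toward 0 they converge on the alpha, beta, and delta positions (exploitation).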

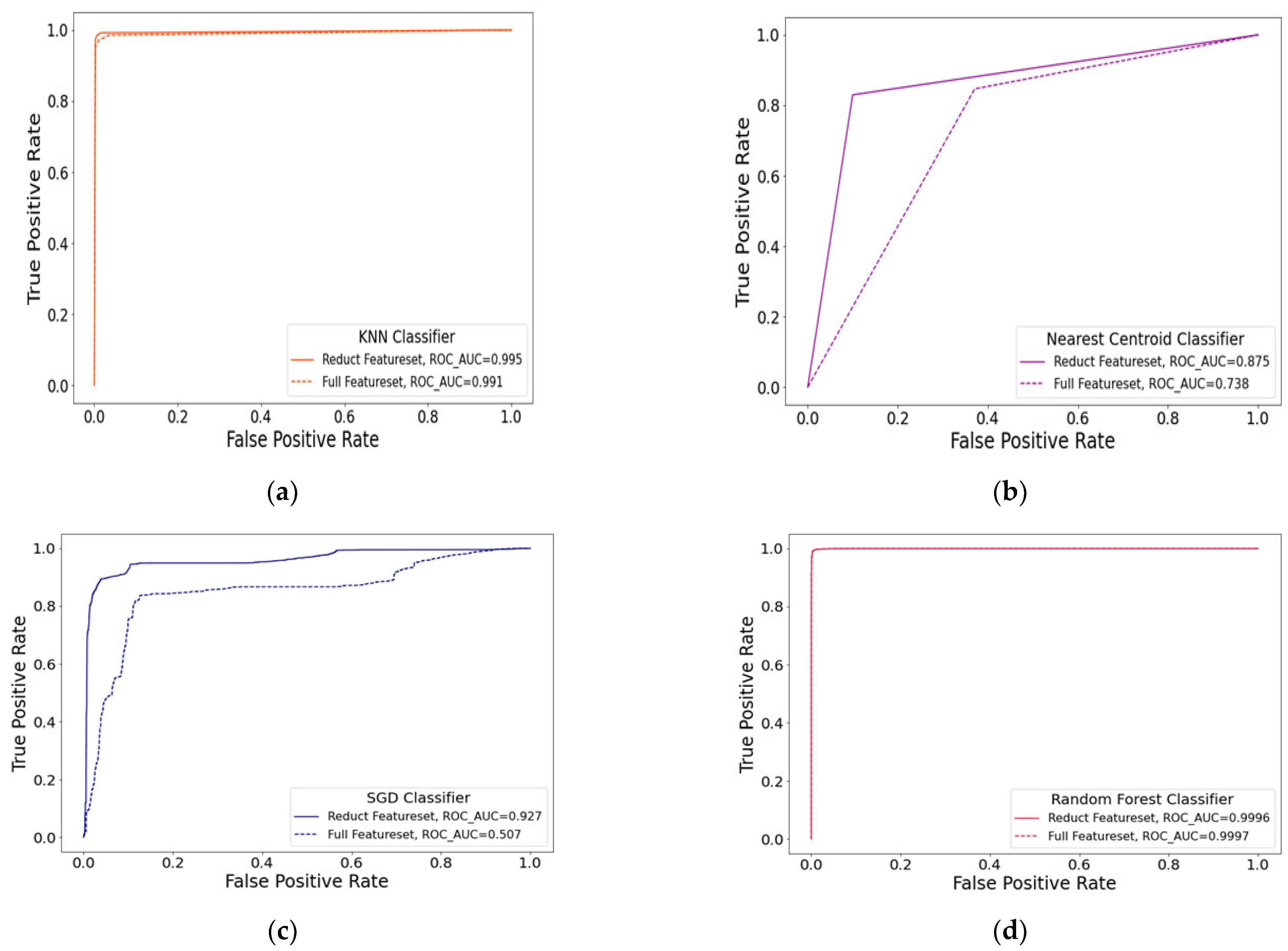

5. Results and Discussion

5.1. Free Parameters and Classifier Selection

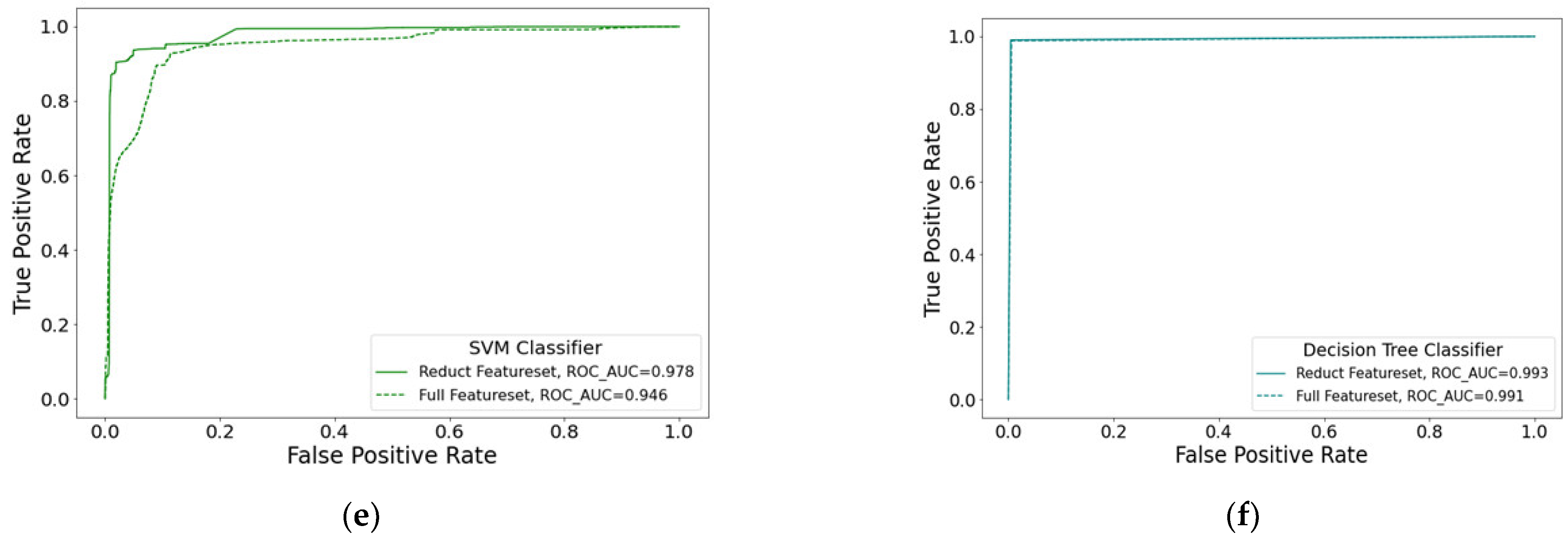

5.2. Evaluation of the ACO-DT, CSO-DT, and GWO-DT Wrappers on the Benchmark ClaMP Dataset

| FS Algorithm | No. of Features Selected (With Best Accuracy) | Features Selected from DOS_Header (6 Features, 1–6) | Features Selected from COFF_Header (17 Features, 7–23) | Features Selected from Optional_Header (37 Features, 24–60) | Features Selected from Other_Fields (9 Features, 61–69) |
|---|---|---|---|---|---|
| ACO-DT | 18 | 1, 2, 3, 6 | 7, 8, 12, 13, 16, 17, 21 | 28, 30, 32, 37, 43, 44, 45 | NIL |
| CSO-DT | 26 | 1, 5 | 7, 8, 9, 10, 11, 13, 17, 18, 21, 23 | 29, 34, 38, 43, 44, 47, 52, 55, 56, 57, 60 | 62, 63, 66 |
| GWO-DT | 37 | 1, 2 | 8, 9, 10, 11, 12, 13, 14, 16, 17, 18, 19, 21 | 24, 27, 29, 30, 31, 32, 34, 35, 36, 37, 39, 43, 46, 47, 52, 59, 60 | 62, 63, 64, 66, 68, 69 |

5.3. Evaluation of the ACO-DT, CSO-DT, and GWO-DT Wrappers on the Proposed SOMLAP Dataset

| S. No. | Paper | Number of Samples | ML Technique | Feature Selection Technique | Total Number of Features | Number of Features after Reduction | Accuracy (after Reduction) |
|---|---|---|---|---|---|---|---|
| 1. | Belaoued and Mazouzi [19] (2015) | 552 | NA | Chi-square value | 590 | 50 | NA |
| 2. | Salehi et al. [18] (2014) | 1211 | RF, J48 DT, NB | Threshold frequency | NA | 6 | 98.4% |
| 3. | Walenstein et al. [16] (2010) | 23,906 | NB, J48, SVM, RF, IB5 | Information gain | 1867 | 15 | 99.8% |
| 4. | Elovici et al. [15] (2007) | 30,430 | ANN, DT, NB | Fisher score | 5500 | 300 (5-grams) | 95.5% |
| 5. | Kumar et al. [29] (2017) | 5180 | RF, LR, LDA, DT, NB, kNN | NA | 53 (raw) + 68 (integrated) | NA | NA |
| 6. | Penmatsa et al. [30] (2020) | 5180 | RF, SVM, NB, DT | ACORS algorithm | 53 (raw) + 68 (integrated) | 4 raw + 2 integrated | 90.55% |
| 7. | Maleki et al. [27] (2019) | 971 | SVM, RF, NN, ID3, NB | Forward feature selection | 30 | 8 | 98.26% |
| 8. | Vidyarthi et al. [23] (2017) | 180 | SVM | Information gain | NA | 232 | 92% |
| 9. | Chen et al. [28] (2021) | 1000 (malicious) + 1069 (benign) | XGBoost | PCA | NA | 79 | 99.56% |
| 10. | This paper | 51,409 (19,809 malware + 31,600 benign) | RF, DT | ACO, CSO, GWO wrappers | 108 | 12 (ACO-DT) | 99.37% |

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Statcounter Global Stats—Browser, OS, Search Engine including Mobile Usage Share. Available online: https://gs.statcounter.com/os-market-share (accessed on 21 October 2022).
2. Damaševičius, R.; Venčkauskas, A.; Toldinas, J.; Grigaliunas, S. Ensemble-Based Classification Using Neural Networks and Machine Learning Models for Windows PE Malware Detection. Electronics 2021, 10, 485.
3. Pietrek, M. Peering Inside the PE—A Tour of the Win 32 Portable Executable File Format. Microsoft Syst. J. 1994, 9, 15–38.
4. Schultz, M.G.; Eskin, E.; Zadok, E.; Stolfo, S.J. Data Mining Methods for Detection of New Malicious Executables. In Proceedings of the 2001 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 14–16 May 2001.
5. Ye, Y.; Wang, D.; Li, T.; Ye, D.; Jiang, Q. An Intelligent PE-Malware Detection System Based on Association Mining. J. Comput. Virol. 2008, 4, 323–334.
6. Choi, Y.-S.; Kim, I.-K.; Oh, J.-T.; Ryou, J.-C. PE File Header Analysis-Based Packed PE File Detection Technique (PHAD). In Proceedings of the International Symposium on Computer Science and its Applications, Hobart, TAS, Australia, 13–15 October 2008.
7. Wang, T.-Y.; Wu, C.-H.; Hsieh, C.-C. Detecting Unknown Malicious Executables Using Portable Executable Headers. In Proceedings of the 2009 Fifth International Joint Conference on INC, IMS and IDC, Seoul, Republic of Korea, 25–27 August 2009.
8. Wikibooks. PE Files. Available online: https://en.wikibooks.org/wiki/X86_Disassembly/Windows_Executable_Files#PE_Files (accessed on 21 October 2022).
9. Kim, S. PE Header Analysis for Malware Detection; San Jose State University Library: San Jose, CA, USA, 2019.
10. Namita; Prachi. PE File-Based Malware Detection Using Machine Learning. In Proceedings of International Conference on Artificial Intelligence and Applications; Springer: Singapore, 2021; pp. 113–123.
11. Wang, J.-H.; Deng, P.S.; Fan, Y.-S.; Jaw, L.-J.; Liu, Y.-C. Virus Detection Using Data Mining Techniques. In Proceedings of the IEEE 37th Annual 2003 International Carnahan Conference on Security Technology, Taipei, Taiwan, 14–16 October 2003.
12. Sung, A.H.; Xu, J.; Chavez, P.; Mukkamala, S. Static Analyzer of Vicious Executables (SAVE). In Proceedings of the 20th Annual Computer Security Applications Conference, Tucson, AZ, USA, 6–10 December 2004.
13. Kolter, J.Z.; Maloof, M.A. Learning to Detect Malicious Executables in the Wild. In Proceedings of the 2004 ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’04); ACM Press: New York, NY, USA, 2004.
14. Moskovitch, R.; Stopel, D.; Feher, C.; Nissim, N.; Elovici, Y. Unknown Malcode Detection via Text Categorization and the Imbalance Problem. In Proceedings of the 2008 IEEE International Conference on Intelligence and Security Informatics, Taipei, Taiwan, 17–20 June 2008.
15. Elovici, Y.; Shabtai, A.; Moskovitch, R.; Tahan, G.; Glezer, C. Applying Machine Learning Techniques for Detection of Malicious Code in Network Traffic. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; pp. 44–50.
16. Walenstein, A.; Hefner, D.J.; Wichers, J. Header Information in Malware Families and Impact on Automated Classifiers. In Proceedings of the 2010 5th International Conference on Malicious and Unwanted Software, Nancy, France, 19–20 October 2010.
17. Ye, Y.; Li, T.; Jiang, Q.; Wang, Y. CIMDS: Adapting Postprocessing Techniques of Associative Classification for Malware Detection. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2010, 40, 298–307.
18. Salehi, Z.; Sami, A.; Ghiasi, M. Using Feature Generation from API Calls for Malware Detection. Comput. Fraud Secur. 2014, 2014, 9–18.
19. Belaoued, M.; Mazouzi, S. A Real-Time PE-Malware Detection System Based on CHI-Square Test and PE-File Features. In IFIP Advances in Information and Communication Technology; Springer International Publishing: Cham, Switzerland, 2015; pp. 416–425.
20. Akour, M.; Alsmadi, I.; Alazab, M. The Malware Detection Challenge of Accuracy. In Proceedings of the 2016 2nd International Conference on Open Source Software Computing (OSSCOM), Beirut, Lebanon, 1–3 December 2016.
21. Zatloukal, F.; Znoj, J. Malware Detection Based on Multiple PE Headers Identification and Optimization for Specific Types of Files. J. Adv. Eng. Comput. 2017, 1, 153.
22. David, B.; Filiol, E.; Gallienne, K. Structural Analysis of Binary Executable Headers for Malware Detection Optimization. J. Comput. Virol. Hacking Tech. 2017, 13, 87–93.
23. Vidyarthi, D.; Choudhary, S.P.; Rakshit, S.; Kumar, C.R.S. Malware Detection by Static Checking and Dynamic Analysis of Executables. Int. J. Inf. Secur. Priv. 2017, 11, 29–41.
24. Raff, E.; Sylvester, J.; Nicholas, C. Learning the PE Header, Malware Detection with Minimal Domain Knowledge. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security; ACM: New York, NY, USA, 2017.
25. Sophos. Available online: https://www.sophos.com/de-de/medialibrary/PDFs/technical-papers/sophoslabs-machine-learning-tp.pdf (accessed on 21 October 2022).
26. Zhang, J. MLPdf: An Effective Machine Learning Based Approach for PDF Malware Detection. arXiv 2018, arXiv:1808.06991.
27. Maleki, N.; Bateni, M.; Rastegari, H. An Improved Method for Packed Malware Detection Using PE Header and Section Table Information. Int. J. Comput. Netw. Inf. Secur. 2019, 11, 9–17.
28. Chen, Z.; Zhang, X.; Kim, S. A Learning-Based Static Malware Detection System with Integrated Feature. Intell. Autom. Soft Comput. 2021, 27, 891–908.
29. Kumar, A.; Kuppusamy, K.S.; Aghila, G. A Learning Model to Detect Maliciousness of Portable Executable Using Integrated Feature Set. J. King Saud Univ. Comput. Inf. Sci. 2019, 31, 252–265.
30. Penmatsa, R.K.V.; Kalidindi, A.; Mallidi, S.K.R. Feature Reduction and Optimization of Malware Detection System Using Ant Colony Optimization and Rough Sets. Int. J. Inf. Secur. Priv. 2020, 14, 95–114.
31. VirusShare. Available online: https://virusshare.com/ (accessed on 21 October 2022).
32. Pefile. PyPI. Available online: https://pypi.org/project/pefile/ (accessed on 21 October 2022).
33. Microsoft. Karl-Bridge-Microsoft. PE Format. Available online: https://docs.microsoft.com/en-us/windows/win32/debug/pe-format (accessed on 21 October 2022).
34. Wikibooks. x86 Disassembly/Windows Executable Files. Available online: https://en.wikibooks.org/wiki/X86_Disassembly/Windows_Executable_Files (accessed on 21 October 2022).
35. Chen, R.-C.; Dewi, C.; Huang, S.-W.; Caraka, R.E. Selecting Critical Features for Data Classification Based on Machine Learning Methods. J. Big Data 2020, 7, 1–26.
36. Keogh, E.; Mueen, A. Curse of Dimensionality. In Encyclopedia of Machine Learning and Data Mining; Springer: Boston, MA, USA, 2017; pp. 314–315.
37. Tabakhi, S.; Moradi, P.; Akhlaghian, F. An Unsupervised Feature Selection Algorithm Based on Ant Colony Optimization. Eng. Appl. Artif. Intell. 2014, 32, 112–123.
38. Vanaja, R.; Mukherjee, S. Novel Wrapper-Based Feature Selection for Efficient Clinical Decision Support System. In Advances in Data Science; Springer: Singapore, 2019; pp. 113–129.
39. Dorigo, M.; Di Caro, G. Ant Colony Optimization: A New Meta-Heuristic. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Washington, DC, USA, 6–9 July 1999.
40. Gambardella, L.M.; Dorigo, M. Solving Symmetric and Asymmetric TSPs by Ant Colonies. In Proceedings of the IEEE International Conference on Evolutionary Computation, Nagoya, Japan, 20–22 May 1996.
41. Blum, C.; Sampels, M. Ant Colony Optimization for FOP Shop Scheduling: A Case Study on Different Pheromone Representations. In Proceedings of the 2002 Congress on Evolutionary Computation. CEC’02 (Cat. No.02TH8600), Honolulu, HI, USA, 12–17 May 2002.
42. Al-Ani, A. Ant Colony Optimization for Feature Subset Selection. Int. J. Comput. Inf. Eng. 2007, 1, 999–1002.
43. Sivagaminathan, R.K.; Ramakrishnan, S. A Hybrid Approach for Feature Subset Selection Using Neural Networks and Ant Colony Optimization. Expert Syst. Appl. 2007, 33, 49–60.
44. Yang, X.-S.; Deb, S. Engineering Optimization by Cuckoo Search. arXiv 2010, arXiv:1005.2908.
45. Aziz, M.A.E.; Hassanien, A.E. Modified Cuckoo Search Algorithm with Rough Sets for Feature Selection. Neural Comput. Appl. 2018, 29, 925–934.
46. Alia, A.F.; Taweel, A. Feature Selection Based on Hybrid Binary Cuckoo Search and Rough Set Theory in Classification for Nominal Datasets. Int. J. Inf. Technol. Comput. Sci. 2017, 9, 63–72.
47. Wang, G. A Comparative Study of Cuckoo Algorithm and Ant Colony Algorithm in Optimal Path Problems. MATEC Web Conf. 2018, 232, 03003.
48. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.
49. Emary, E.; Zawbaa, H.M.; Hassanien, A.E. Binary Grey Wolf Optimization Approaches for Feature Selection. Neurocomputing 2016, 172, 371–381.
50. Al-Tashi, Q.; Abdul Kadir, S.J.; Rais, H.M.; Mirjalili, S.; Alhussian, H. Binary Optimization Using Hybrid Grey Wolf Optimization for Feature Selection. IEEE Access 2019, 7, 39496–39508.

| ACO | CSO | GWO |
|---|---|---|
| omega = 0.95; alpha = 0.5; beta = 0.3; rho = 0.1; q = 0.5; epsilon = 0.001; no_ants = 10; no_iter = 10 | omega = 0.95; alpha = −0.5; pa = 0.25; lam = 0.01; no_nests = 10; no_iter = 10 | omega = 0.95; no_wolfs = 10; no_iter = 10 |

| Exp. No. | Classifier | Accuracy (Full Features) | Accuracy (Reduced Features) | Solution Length | Time Taken (s) (10 Ants, 2 Iterations) |
|---|---|---|---|---|---|
| 1 | KNN | 98.25% | 99.05% | 18 | 262,380 |
| 2 | Nearest Centroid (NC) | 71.25% | 89.14% | 14 | 154.3 |
| 3 | Random Forest (RF) | 99.37% | 99.40% | 17 | 141,140 |
| 4 | Gaussian NB (GNB) | 61.73% | 95.49% | 15 | 358 |
| 5 | SVM | 89.93% | 94.11% | 18 | 6430 |
| 6 | Decision Tree (DT) | 99.09% | 99.31% | 13 | 4365 |

| Run No. | DT Wrapper FS Algorithm | Accuracy with Full Features | No. of Features Selected (out of 69) | Run Time (s) | Accuracy after Feature Selection | Iteration in Which the Best Solution Was Found |
|---|---|---|---|---|---|---|
| 1 | ACO-DT | 97.50% | 18 | 2093.68 | 98.016% | 7 |
| | CSO-DT | | 26 | 2.141 | 97.312% | 10 |
| | GWO-DT | | 37 | 1.022 | 96.929% | 3 |
| 2 | ACO-DT | 97.24% | 19 | 2127.89 | 97.184% | 3 |
| | CSO-DT | | 29 | 2.256 | 96.865% | 6 |
| | GWO-DT | | 19 | 1.05 | 96.673% | 9 |
| 3 | ACO-DT | 97.185% | 24 | 2216.10 | 97.696% | 1 |
| | CSO-DT | | 39 | 2.198 | 97.696% | 10 |
| | GWO-DT | | 24 | 1.046 | 97.248% | 4 |
| 4 | ACO-DT | 98.144% | 21 | 2354.46 | 97.824% | 4 |
| | CSO-DT | | 25 | 2.235 | 97.502% | 2 |
| | GWO-DT | | 19 | 1.099 | 96.609% | 6 |
| 5 | ACO-DT | 96.993% | 20 | 2834.20 | 97.760% | 3 |
| | CSO-DT | | 20 | 2.259 | 97.057% | 10 |
| | GWO-DT | | 19 | 0.960 | 95.841% | 6 |
| Avg. | ACO-DT | 97.528% | 20.4 | 2325.66 | 97.696% | 3.6 |
| | CSO-DT | | 27.8 | 2.2178 | 97.286% | 7.6 |
| | GWO-DT | | 23.6 | 1.035 | 91.261% | 5.6 |

| Run No. | DT Wrapper FS Algorithm | Accuracy with Full Features | No. of Features Selected (out of 108) | Run Time (s) | Accuracy after Feature Selection | Iteration in Which the Best Solution Was Found |
|---|---|---|---|---|---|---|
| 1 | ACO-DT | 99.144% | 7 | 22,339 | 99.053% | 3 |
| | CSO-DT | | 30 | 35.67 | 98.878% | 6 |
| | GWO-DT | | 22 | 20.499 | 99.008% | 7 |
| 2 | ACO-DT | 99.189% | 9 | 22,901 | 99.17% | 8 |
| | CSO-DT | | 29 | 36.88 | 98.956% | 8 |
| | GWO-DT | | 24 | 19.31 | 98.988% | 6 |
| 3 | ACO-DT | 99.163% | 10 | 24,458 | 99.163% | 4 |
| | CSO-DT | | 39 | 38.19 | 99.202% | 4 |
| | GWO-DT | | 23 | 19.127 | 99.079% | 4 |
| 4 | ACO-DT | 99.131% | 12 | 28,960 | 99.377% | 4 |
| | CSO-DT | | 29 | 38.011 | 99.137% | 8 |
| | GWO-DT | | 23 | 19.501 | 99.196% | 3 |
| 5 | ACO-DT | 99.286% | 10 | 25,066 | 99.202% | 4 |
| | CSO-DT | | 33 | 36.742 | 99.124% | 5 |
| | GWO-DT | | 24 | 19.176 | 99.170% | 9 |
| Avg. | ACO-DT | 99.182% | 10 | 24,744.8 | 99.193% | 4.6 |
| | CSO-DT | | 32 | 37.098 | 99.059% | 6.2 |
| | GWO-DT | | 23.2 | 19.522 | 99.088% | 5.8 |

| FS Algorithm | No. of Features Selected (With Best Accuracy) | Features Selected from DOS_Header (16 Features, 1–16) | Features Selected from COFF_Header (7 Features, 17–23) | Features Selected from Optional_Header (30 Features, 24–53) | Features Selected from Sections_Header (55 Features, 54–108) |
|---|---|---|---|---|---|
| ACO-DT | 12 | 2, 10, 16 | 23 | 25, 28, 37, 44, 45, 46 | 80, 86 |
| CSO-DT | 29 | 1, 2, 6, 13, 16 | 22 | 24, 25, 28, 31, 35, 44, 45, 49, 50 | 55, 56, 67, 71, 73, 76, 85, 87, 89, 90, 91, 96, 97, 104 |
| GWO-DT | 23 | 11 | 18, 22 | 24, 25, 38, 40, 44 | 57, 58, 59, 61, 64, 66, 75, 76, 82, 84, 87, 89, 93, 102, 105 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kattamuri, S.J.; Penmatsa, R.K.V.; Chakravarty, S.; Madabathula, V.S.P. Swarm Optimization and Machine Learning Applied to PE Malware Detection towards Cyber Threat Intelligence. Electronics 2023, 12, 342. https://doi.org/10.3390/electronics12020342
