Abstract

The development of deepfake technology, based on deep learning, has made it easier to create images of fake human faces that are indistinguishable from the real thing. Many deepfake methods and programs are publicly available and can be used maliciously, for example, by creating fake social media accounts with images of non-existent human faces. To prevent the misuse of such fake images, several deepfake detection methods have been proposed as a countermeasure and have proven capable of detecting deepfakes with high accuracy when the target deepfake model has been identified. However, the existing approaches are not robust to partial editing and/or occlusion caused by masks, glasses, or manual editing, all of which can lead to an unacceptable drop in accuracy. In this paper, we propose a novel deepfake detection approach based on a dynamic configuration of an ensemble model that consists of deepfake detectors. These deepfake detectors are based on convolutional neural networks (CNNs) and are specialized to detect deepfakes by focusing on individual parts of the face. We demonstrate that a dynamic selection of face parts and an ensemble of selected CNN models is effective at realizing highly accurate deepfake detection even from partly edited and occluded images.

1. Introduction

Along with the rapid development of deep learning, artificial intelligence (AI) technologies for creating images and videos, a type of generative AI, have made significant strides over the past few decades. It is extremely difficult for people to distinguish artificial digital images generated by these technologies from images captured by digital cameras. AI-based image generation has potential for a wide range of applications, such as movie acting, business advertising, and game creation.

On the other hand, AI-based image generation can be abused. For example, social media accounts that upload high-quality fake profile images could be used for fraudulent purposes. Generating fake images with deep learning is called “deepfake”. According to the classification in [1], deepfake approaches can be categorized into four types: (1) synthesis, (2) attribute editing, (3) identity swap, and (4) face reenactment. Synthesis (e.g., PGGAN [2], StyleGAN [3], and StyleGAN2 [4]) creates a fake facial image of someone who does not exist in the real world. Attribute editing (e.g., STGAN [5], StarGAN [6], and StarGAN v2 [7]) alters a person’s facial features, such as their hairstyle or facial hair, or adds or removes signs of aging. Identity swap (e.g., FaceSwap [8]) replaces one person’s face with another’s. Finally, in face reenactment (e.g., Face2Face [9]), the person in an image remains the same, but his/her facial expression is changed.

In this paper, we mainly focus on detecting deepfakes in the form of synthesized images. Synthesis approaches can be classified into generative adversarial networks (GANs) and diffusion models. A GAN consists of two neural network models, a generator and a discriminator, which are trained alternately and adversarially. The generator is trained to generate high-quality images that the discriminator falsely classifies as real, while the discriminator is trained to precisely distinguish the fake images generated by the generator from real images. The diffusion probabilistic model (DPM) [10] is another type of synthesis approach; it achieves high-quality image generation by dividing the process of generating an image from input noise into simpler steps, such as iteratively and gradually removing noise. Recently, Stable Diffusion [11], an extension of DPM, has attracted considerable attention, even from non-expert users, because it enables them to control image generation via text prompts. To prevent the abuse of image generation techniques, it is important to develop techniques that allow the early detection of a wide variety of fake images.

In recent years, various deepfake detection methods using different clues have been investigated. Photo response non-uniformity (PRNU) is caused by pixel-to-pixel sensitivity differences of an image sensor, and it can be treated as the fingerprint of a camera. PRNU-based deepfake detection was proposed in [12]. This approach can detect a deepfake even from a partially edited facial image. However, applying PRNU-based deepfake detection to rotated and/or scaled images is very challenging. Deepfake detection using deep neural networks (DNNs) [13,14,15,16] has also been explored.

If a fake image is created using an existing deepfake generator, and the source of the image has been identified, then deepfake detection is relatively easy and achieves relatively high accuracy. However, there are challenges associated with achieving reliable and practical deepfake detection. References [17,18] address the issue of improving the generalization performance of deepfake detection models to achieve high deepfake detection accuracy, even when the model used to generate the fake images is unknown. Reference [17] reported that data augmentation is effective at improving the generalization of deepfake detectors. Reference [18] proposed deepfake detection that extracts low-level visual cues that are applicable to a wider range of fake image sources by re-synthesizing input images before classification.

Existing synthesis detection approaches assume that real and synthesized images are input without modification and that the invisible and visible artifacts generated by a deepfake generator appear throughout the facial image. However, we also need to consider scenarios in which the artifacts are available only in part of the fake image or are degraded. For example, parts of the face may be edited manually or through inpainting methods to partially change its appearance. Such editing can partially destroy the artifacts and make synthesized images more difficult to detect. In such cases, we need to detect deepfakes from the areas that retain the artifacts generated by a deepfake generator. Analyzing small areas of a facial image therefore helps to realize deepfake detection that is robust to manual editing and inpainting. On the other hand, because invisible artifacts are typically weak signals, it is difficult to extract them completely separated from scene content, such as the textures of the background and face. In particular, detecting a synthesized image using only a small area of a facial image is challenging because only a small amount of contaminated artifacts can be obtained.

Because the eye, cornea, nose, and mouth are major facial components, detecting whether each face part is synthesized is useful for detecting synthesized images. In [19], inconsistent corneal specular highlights are used to identify deepfakes, because the left and right corneas should have consistent specular highlights in real images under certain lighting conditions. Because this method uses only the cornea regions, it is robust to the occlusion of other face parts. However, even real images may not have clear corneal specular highlights, and the accuracy is not sufficient for images captured under various lighting conditions. Moreover, this technique cannot identify fake images in which the corneas are occluded by sunglasses. Parts of real face images may also be hidden by sunglasses, masks, hands, etc. Therefore, it is important to achieve face-part-based deepfake detection that is robust to partial occlusion and editing.

In this paper, we focus on the challenges of deepfake detection for partly occluded or edited facial images. Our approach is based on an ensemble of convolutional neural network (CNN) models. Each CNN model is specialized for one facial part and can concentrate on analyzing the artifacts in that part. By limiting the type of scene content in the input images, we encourage each CNN model to extract more appropriate features of the artifacts, even from small and/or low-resolution images. For small facial-part images, the CNN models achieve much higher accuracy than state-of-the-art methods and are useful for detecting deepfakes part by part. Moreover, the ensemble of these CNN models further enhances deepfake detection performance. Unlike a typical ensemble model, which is built in advance, our ensemble model is generated dynamically for each image according to the results of face-part detection. Our approach is robust to occlusion because only visible face parts are used. Moreover, for high-resolution images, the proposed ensemble model achieves highly accurate deepfake detection comparable to that of state-of-the-art methods. The contributions of this paper are as follows:

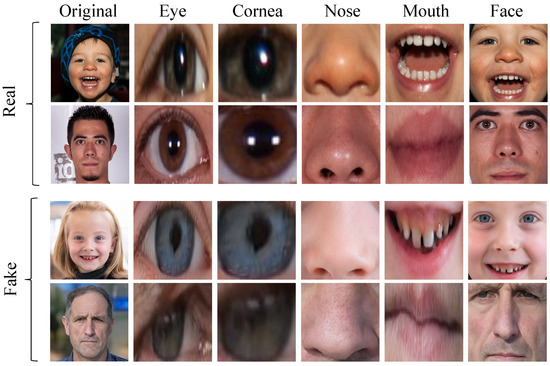

- We propose specialized CNN-based deepfake detectors for different face parts and analyze their effectiveness in terms of deepfake detection. In this paper, we construct deepfake detectors that are specialized for five different facial parts and evaluate their performance. As shown in Figure 1, we focus on the eye, cornea, nose, mouth, and face as facial parts.

Figure 1. Examples of facial images and their five types of face part images. The images of the first two and last two rows are from the FFHQ dataset [20] and StyleGAN2 dataset [21], respectively.

- We evaluate various ensemble models consisting of different combinations of the CNN-based deepfake detectors and demonstrate that the ensemble technique achieves sufficiently high accuracy that is comparable with that of deepfake detection that examines the whole face.

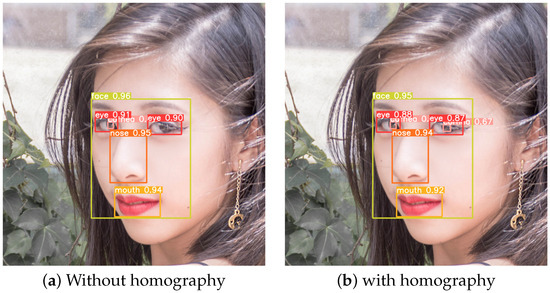

- We develop a face-part detector based on YOLOv8 [22]. We achieve face-part detection that is robust to different face directions by training with data augmentation that mimics 3D face rotation using a homography technique.

- We realize the dynamic selection of deepfake detectors that focus on different face parts, which is based on the results of face-part detection.

- We demonstrate that the proposed method achieves reliable deepfake detection that is more robust to partial occlusion and editing than the state-of-the-art methods.

This paper is an extended version of the conference paper presented in [23]; the third to fifth contributions are new.

The rest of this paper is organized as follows: The proposed method is introduced in Section 2. In Section 3, we analyze the effectiveness of each CNN model specialized for a single face part and of various ensemble models combining these CNN models. We evaluate face-part detection in Section 4. We compare our approach, which is based on dynamic ensemble selection, with state-of-the-art methods in Section 5. We discuss the potential and challenges of deepfake detection based on face parts and their combination in Section 6. Finally, we present our conclusions in Section 7.

2. Face Part-Based Ensemble Deepfake Detector

2.1. Flowchart of the Proposed Method

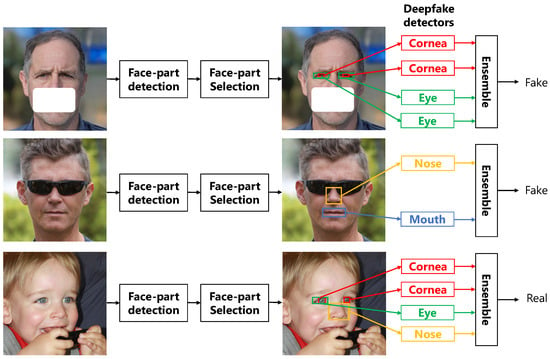

Figure 2 shows an outline of the proposed deepfake detection method. First, we input an image to a face-part detector and obtain candidate bounding boxes and their confidence scores for each class. We consider five classes that correspond to the eye, cornea, nose, mouth, and face. Based on the confidence scores, we select the face parts to be used for deepfake detection. Next, we crop the image patches corresponding to the selected face parts and resize them to 64 × 64 pixels. After that, we input each resized patch to a binary real/fake classifier specialized for that face part and obtain the probability distribution over the real and fake classes as output. Lastly, we obtain the final output of the ensemble deepfake detector by averaging the probability distributions of all selected classifiers.
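The crop-and-resize step can be illustrated with a minimal NumPy sketch. The helper name `crop_and_resize`, the `(x1, y1, x2, y2)` pixel box format, and nearest-neighbour interpolation are assumptions made for illustration; the text only states that each selected patch is resized to the classifier's 64 × 64 input.

```python
import numpy as np

PATCH_SIZE = 64  # input resolution of each face-part classifier (64 x 64 pixels)


def crop_and_resize(image: np.ndarray, box, size: int = PATCH_SIZE) -> np.ndarray:
    """Crop an (H, W[, C]) image to box = (x1, y1, x2, y2) and resize it to size x size.

    Nearest-neighbour sampling keeps this sketch dependency-free; the paper
    does not specify which interpolation method is used.
    """
    h, w = image.shape[:2]
    # Clip the box to the image and round outward to integer pixel indices.
    x1 = int(np.clip(np.floor(box[0]), 0, w - 1))
    y1 = int(np.clip(np.floor(box[1]), 0, h - 1))
    x2 = int(np.clip(np.ceil(box[2]), x1 + 1, w))
    y2 = int(np.clip(np.ceil(box[3]), y1 + 1, h))
    patch = image[y1:y2, x1:x2]
    ph, pw = patch.shape[:2]
    # Map each output pixel centre back to the nearest source pixel.
    rows = np.minimum(((np.arange(size) + 0.5) * ph / size).astype(int), ph - 1)
    cols = np.minimum(((np.arange(size) + 0.5) * pw / size).astype(int), pw - 1)
    return patch[rows[:, None], cols[None, :]]


if __name__ == "__main__":
    img = np.zeros((256, 256, 3), dtype=np.uint8)
    print(crop_and_resize(img, (100.4, 80.0, 140.0, 110.0)).shape)
```

In a real pipeline, a library resize (e.g., bilinear) would normally replace the nearest-neighbour step; the output shape is what the downstream classifier depends on.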

Figure 2.

The outline of the proposed deepfake detection based on the dynamic selection of ensemble models. The first two facial images are from the StyleGAN2 dataset [21]; the white region was added to mimic a mask. The third one is from the FFHQ dataset [20].

This approach achieves high robustness against occlusion and different face directions based on the following points. First, we improve the accuracy of a face-part detector for various directions of facial images by training with data augmentation based on homography. Second, we realize deepfake detectors that can distinguish fake images from real ones using only one type of face part. Third, by selecting appropriate face parts for deepfake detection based on the results of face-part detection, we realize an ensemble model that is robust against occlusion and the misidentification of face parts.

2.2. Face-Part Detection and Selection

In recent years, neural network-based object detection models have developed rapidly. In this study, we employ YOLOv8 [22], one of the state-of-the-art object detection models. We create bounding-box annotations for five classes: eye, cornea, nose, mouth, and face. The face region is defined as the smallest bounding box that includes the eyebrows in addition to the eyes, nose, and mouth. Faces in images on social media are not always front facing. Therefore, it is important to build a face-part detector that can precisely detect face parts in facial images facing various directions. Robust face-part detection would require a large number of facial images facing various directions, together with their annotations, but preparing them requires a significant amount of time and human labor. Instead, we introduce homography-based data augmentation, which is widely used in many applications, such as facial emotion recognition [24].

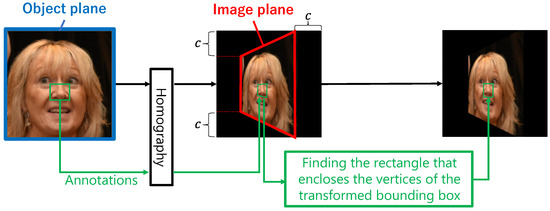

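Homography is a mapping of an object plane to an image plane, as shown in Figure 3. Let $(x, y)$ and $(u, v)$ be the object and image plane coordinates of a point, respectively. In addition, let $\tilde{\mathbf{x}} = (x, y, 1)^\top$ and $\tilde{\mathbf{u}} = (u, v, 1)^\top$ be their homogeneous coordinates, respectively. The homography matrix is denoted by

$$H = \begin{pmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{pmatrix}.$$

Homography is represented by $\tilde{\mathbf{u}} \simeq H \tilde{\mathbf{x}}$, where $\simeq$ denotes equality up to a nonzero scale factor; that is,

$$u = \frac{h_{11}x + h_{12}y + h_{13}}{h_{31}x + h_{32}y + h_{33}}, \qquad v = \frac{h_{21}x + h_{22}y + h_{23}}{h_{31}x + h_{32}y + h_{33}}.$$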

Figure 3.

Data augmentation based on homography and generation of the corresponding annotations. The profile image is from FFHQ [20].

Because the homography matrix is defined only up to scale and therefore has just eight degrees of freedom, we can set $h_{33}$ to one. Each pair of an object plane coordinate and the corresponding image plane coordinate yields two equations. Therefore, we can estimate all the remaining elements of $H$ from the correspondences between the four vertices of the object plane and those of the image plane. Using the same homography matrix $H$, we obtain the transformed bounding box on the image plane for each annotation. Then, we generate a new axis-aligned rectangular bounding box that encloses the vertices of the transformed bounding box.

In our experiments, the four vertices of the object plane are mapped to the four vertices of the image plane as follows: Let be the coordinates of the four vertices of the object plane, where W and H are the width and height, respectively. The coordinates of the four vertices of the image plane are set to or , where c is a parameter that determines the image plane. We set c to or . In Figure 3, c is set as .
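The homography estimation and bounding-box regeneration described in this subsection can be sketched in NumPy. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the trapezoidal target in the usage example (controlled by a hypothetical `c = 0.2`) is chosen only for demonstration, because the exact vertex settings used in the experiments are not given here. With $h_{33}$ fixed to one, each vertex correspondence contributes two linear equations, so four vertices yield an 8 × 8 system.

```python
import numpy as np


def estimate_homography(src, dst) -> np.ndarray:
    """Estimate the 3x3 homography mapping four src points to four dst points.

    With h33 fixed to 1, u * (h31 x + h32 y + 1) = h11 x + h12 y + h13 (and the
    analogous equation for v) is linear in the eight unknowns h11..h32.
    """
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.asarray(a, float), np.asarray(b, float))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(hmat: np.ndarray, points) -> np.ndarray:
    """Map (N, 2) points with hmat, dividing by the homogeneous coordinate."""
    pts = np.asarray(points, float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ hmat.T
    return homog[:, :2] / homog[:, 2:3]


def warp_box(hmat: np.ndarray, box):
    """Warp an (x1, y1, x2, y2) box and return the enclosing axis-aligned box."""
    x1, y1, x2, y2 = box
    corners = apply_homography(hmat, [(x1, y1), (x2, y1), (x2, y2), (x1, y2)])
    (nx1, ny1), (nx2, ny2) = corners.min(axis=0), corners.max(axis=0)
    return nx1, ny1, nx2, ny2


if __name__ == "__main__":
    width, height, c = 1024, 1024, 0.2  # hypothetical image size and parameter
    src = [(0, 0), (width, 0), (width, height), (0, height)]
    # Hypothetical target: shrink the right edge to mimic a head turned sideways.
    dst = [(0, 0), (width, c * height), (width, (1 - c) * height), (0, height)]
    homography = estimate_homography(src, dst)
    print(warp_box(homography, (400, 450, 600, 550)))  # new nose-like box
```

The same matrix is applied to the image itself (e.g., with a perspective-warp routine) and to every annotation box, which is what keeps the generated labels consistent with the augmented image.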

2.3. Ensemble of Deepfake Detectors

We now explain face-part selection and the adaptive generation of an ensemble model. First, we input a facial image to the YOLOv8-based face-part detector described in Section 2.2 and obtain candidate bounding boxes with confidence scores. Then, we select the face parts whose confidence scores are greater than or equal to a given threshold (0.8 in the experiments of Section 5). Finally, we form an ensemble model that averages the outputs of the CNN models specialized for the selected face parts.
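The selection-and-averaging step can be sketched in plain Python. The sketch assumes that each detection is a `(part, confidence, patch)` tuple and that each specialized classifier is a callable returning the fake-class probability; both conventions and the name `dynamic_ensemble` are hypothetical. Because the two class probabilities of a binary classifier sum to one, averaging only the fake-class probability is equivalent to averaging the full real/fake distributions. How multiple detections of the same class (for example, two eyes) are combined is not specified in the text; here each one is simply scored and included in the average.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

# (part name, detector confidence, cropped 64 x 64 patch); the patch type is left open.
Detection = Tuple[str, float, object]


def dynamic_ensemble(
    detections: Sequence[Detection],
    classifiers: Dict[str, Callable[[object], float]],
    threshold: float = 0.8,
) -> Tuple[float, List[Tuple[str, float]]]:
    """Average the fake-class probabilities of the part classifiers whose
    detections reach the confidence threshold.

    Returns the ensemble probability and the per-part probabilities, so the
    per-part evidence can also be shown to the user.
    """
    per_part = [
        (part, classifiers[part](patch))
        for part, conf, patch in detections
        if conf >= threshold and part in classifiers
    ]
    if not per_part:
        raise ValueError("no face part passed the confidence threshold")
    return mean(p for _, p in per_part), per_part


if __name__ == "__main__":
    # Stand-in classifiers returning fixed fake-class probabilities (hypothetical).
    classifiers = {"eye": lambda patch: 0.92, "cornea": lambda patch: 0.88,
                   "nose": lambda patch: 0.75, "mouth": lambda patch: 0.40}
    detections = [("eye", 0.97, None), ("cornea", 0.91, None),
                  ("nose", 0.85, None), ("mouth", 0.35, None)]  # mouth occluded
    prob, parts = dynamic_ensemble(detections, classifiers)
    print(round(prob, 3), parts)
```

Restricting the `classifiers` dictionary to the eye, cornea, nose, and mouth models gives the kind of four-part ensemble evaluated in Section 5; an occluded part whose confidence falls below the threshold simply drops out of the average.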

A relatively small CNN suffices for each classifier because it only needs to classify a small image region corresponding to one face part. In the experiments, we employ the ResNet-18 [25] architecture for each classifier. Each classifier is trained with real and fake images of the corresponding face part. The input image size is set to 64 × 64 pixels.

3. Evaluation of Deepfake Detection Using Face Parts

In this section, we build specialized CNN models for deepfake detection using individual face parts and analyze which parts, or combinations of parts, contribute to identifying real or fake faces.

3.1. Environment

We used PyTorch 1.11.0 with Python 3.7.13. We trained the CNN models on Google Colaboratory and evaluated them on a desktop PC with a 3.80 GHz Core i7 CPU, 48.0 GB of memory, and an NVIDIA RTX 3090 GPU.

3.2. Dataset Preparation for Training and Analysis

In this experiment, a face part dataset was generated using real images from the Flickr-Faces-HQ (FFHQ) [20] and fake images from the StyleGAN2 [21] dataset. We used YOLOv5 [26] trained with only 101 annotated images to roughly and automatically generate face-part patches from real and fake images. We show the evaluation of the proposed face-part detector based on YOLOv8 in Section 4.

The 5000 real images of FFHQ (00000-04999) and 5000 fake images of StyleGAN2 (00000-004999) were used for training and validation. We show the statistics of extracted face-part patches for training and validation in Table 1. In addition, from 5000 real images of FFHQ (05000-09999) and 5000 fake images of StyleGAN2 (005000-009999), we selected 618 real images and 618 fake images, in which all face parts were detected, for evaluation.

Table 1.

Statistics of training and validation dataset generated from images of FFHQ [20] and StyleGAN2 [21] for evaluating deepfake detection performance of single models and ensemble models.

3.3. Evaluation Method

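In this paper, fake (real) images were regarded as samples of the positive (negative) class. We evaluated the deepfake detection performance with recall, precision, F1-score, and accuracy, defined as follows:

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP},$$

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where TP (TN) represents the number of fake (real) images that are correctly classified, and FP (FN) represents the number of real (fake) images that are falsely classified.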
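The four metrics can be computed from ground-truth and predicted labels as in the following sketch, with fake = 1 (positive) and real = 0 (negative). The function name is hypothetical, and returning 0.0 when a denominator is zero is a convention of this sketch rather than one stated in the paper.

```python
def detection_metrics(y_true, y_pred):
    """Recall, precision, F1-score, and accuracy with fake = 1 (positive), real = 0."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)  # fake classified as fake
    tn = sum(t == 0 and p == 0 for t, p in pairs)  # real classified as real
    fp = sum(t == 0 and p == 1 for t, p in pairs)  # real classified as fake
    fn = sum(t == 1 and p == 0 for t, p in pairs)  # fake classified as real
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs)
    return {"recall": recall, "precision": precision, "f1": f1, "accuracy": accuracy}


if __name__ == "__main__":
    print(detection_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1]))
```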

3.4. Generation of Single and Ensemble Models

Using the training dataset shown in Table 1, we trained a single ResNet-18 [25] model with an input size of 64 × 64 pixels for each face part. The ResNet-18 models specialized for the eye, cornea, nose, mouth, and face are denoted by , , , , and , respectively; the suffix of each single model is the initial letter of the corresponding face part. Each single model is trained with the cross-entropy loss. Moreover, we generated ten ensemble models consisting of two single models, ten consisting of three, five consisting of four, and one consisting of all five single models. Each ensemble model is denoted by a suffix that concatenates the suffixes of its constituent single models. For example, represents an ensemble model consisting of , , and . We also trained another ResNet-18 model, denoted by , whose input layer’s size is , which enables us to analyze faces at a higher resolution.
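The 26 ensemble configurations follow from choosing two to five of the five face parts: $\binom{5}{2} + \binom{5}{3} + \binom{5}{4} + \binom{5}{5} = 10 + 10 + 5 + 1 = 26$. The sketch below enumerates them with `itertools.combinations` and labels each ensemble by concatenating the single-model suffix letters; the letter order e, c, n, m, f is an assumption for illustration.

```python
from itertools import combinations

# Suffix letter (initial of the face part) -> face part.
PARTS = {"e": "eye", "c": "cornea", "n": "nose", "m": "mouth", "f": "face"}

# Ensembles of k single models for k = 2..5, named by concatenated suffixes.
ensembles = {
    k: ["".join(combo) for combo in combinations(PARTS, k)] for k in range(2, 6)
}

if __name__ == "__main__":
    for k, names in ensembles.items():
        print(k, len(names), names)
    print("total:", sum(len(v) for v in ensembles.values()))  # total: 26
```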

In training, the cross-entropy loss was employed as a loss function, and Adam with a learning rate of 0.001 was used as an optimizer. The number of training epochs was set to 100. The batch size was 32. The model that achieved the best validation accuracy during training was used for evaluation with the test dataset.

Table 2 shows the F1-score, accuracy, and inference time for the five single models and the 26 ensemble models of different combinations of single models. The inference time was measured with a batch size of 256.

Table 2.

Evaluation of deepfake detection performance of 5 single models trained with cross-entropy loss and 26 ensemble models. (The data are from Table 2 of [23]).

The first five rows of Table 2 show the results of the five single models. These results show that every face part contributes significantly to deepfake detection. In this experiment, the single model specialized for the cornea achieved the best F1-score. Considering both detection complexity, including annotation effort, and deepfake detection accuracy, the cornea was the most suitable part for deepfake detection, although there was room to improve the performance of the single models specialized for other face parts. However, because the cornea is the smallest facial part, it is difficult to detect in low-resolution images. Larger face parts, such as the nose and mouth, also achieve relatively high deepfake detection performance, so it is important to use these parts effectively.

Next, we analyzed whether combining two single models improves deepfake detection performance. First, we focused on the cornea, whose single model achieved the best F1-score of 0.997. The ensembles of two models including the cornea slightly improved the F1-score to 0.998–0.999. Next, we focused on the mouth, whose single model achieved the worst F1-score of 0.977. The ensembles of two models including the mouth significantly improved the F1-score to 0.993–0.999. Similarly, every ensemble model achieved a higher F1-score than the single models it contains. Moreover, the ensembles of three (four) models achieved F1-scores of at least 0.997 (0.998). Thus, any combination of face parts has high potential to improve deepfake detection performance.

Of all the models, and achieved the fastest and slowest processing times, respectively. In fact, although the GPU inference times for and were similar, the pre-processing needed to resize the extracted patches to pixels differed. The pre-processing for the face patch took more time because of its larger size.

We also compared the best ensemble models consisting of 1–4 of and with the single model that receives a face patch of pixels as an input. The comparison results are shown in Table 3. As shown, the ensemble models were faster than and achieved comparable deepfake detection performance. These results show that using the entire face is not mandatory for deepfake detection. Even if only some parts of the face are used, the same level of deepfake detection accuracy can be achieved as when the entire face of a higher resolution is used. The ensemble model is also superior in terms of execution time and computational cost because the computational cost of each single model can be reduced.

Table 3.

Deepfake detection performance of the best ensemble models for each CNN model trained on face-part images and the single model trained on face images. (The data are from Table 3 of [23]).

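In the dataset of Table 1, the number of real images differs from that of fake images for each face part. To mitigate this class imbalance, we also trained the single models with focal loss [27]. Let $y \in \{\pm 1\}$ specify the ground-truth class and $p \in [0, 1]$ be the estimated probability for the class with $y = 1$. To represent the focal loss compactly, $p_t$ is defined by

$$p_t = \begin{cases} p & \text{if } y = 1, \\ 1 - p & \text{otherwise.} \end{cases}$$

The focal loss is defined by

$$\mathrm{FL}(p_t) = -(1 - p_t)^{\gamma} \log(p_t),$$

where $\gamma \ge 0$ is the focusing parameter; with $\gamma = 0$, the focal loss reduces to the cross-entropy loss.

Based on preliminary experiments, we set $\gamma$ to 0.5, 0, 0.5, 1.0, and 2.0 for the eye, cornea, nose, mouth, and face, respectively.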
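The focal loss can be written in a few lines of plain Python, assuming that the per-part parameter listed above is the focusing parameter $\gamma$ of [27]. This scalar sketch (hypothetical function names) only shows that $\gamma = 0$ recovers the cross-entropy loss and that a larger $\gamma$ down-weights well-classified examples; in training, the same expression would be applied element-wise to a batch of predictions.

```python
import math

# Focusing parameter per face part, assuming the values above refer to gamma.
GAMMA = {"eye": 0.5, "cornea": 0.0, "nose": 0.5, "mouth": 1.0, "face": 2.0}


def p_t(p: float, y: int) -> float:
    """Probability assigned to the ground-truth class; y is +1 or -1."""
    return p if y == 1 else 1.0 - p


def focal_loss(p: float, y: int, gamma: float) -> float:
    """FL(p_t) = -(1 - p_t)**gamma * log(p_t)."""
    pt = p_t(p, y)
    return -((1.0 - pt) ** gamma) * math.log(pt)


def cross_entropy(p: float, y: int) -> float:
    """Binary cross-entropy -log(p_t), the gamma = 0 special case."""
    return -math.log(p_t(p, y))


if __name__ == "__main__":
    for gamma in sorted(set(GAMMA.values())):
        # Ratio to cross-entropy: 1.0 when gamma = 0, and it shrinks for the
        # easy example (p_t = 0.95) much faster than for the hard one (p_t = 0.30).
        easy = focal_loss(0.95, 1, gamma) / cross_entropy(0.95, 1)
        hard = focal_loss(0.30, 1, gamma) / cross_entropy(0.30, 1)
        print(f"gamma={gamma}: easy ratio={easy:.3f}, hard ratio={hard:.3f}")
```

Under this reading, the cornea setting of 0 makes its loss identical to the cross-entropy loss, while the face model (2.0) concentrates most strongly on hard examples.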

Table 4 shows the evaluation results of five single models trained with focal loss and 26 ensemble models. We can see that , which suffers from serious class imbalance, improves the F1-score significantly by using the focal loss. The F1-score for and also increases, while that for the cornea and face decreases. The trend is similar to that in Table 2. We can see that any face part can contribute to deepfake detection and any combination of single models improves the F1-score.

Table 4.

Evaluation of deepfake detection performance of 5 single models trained with focal loss and 26 ensemble models.

4. Evaluation of Face-Part Detection

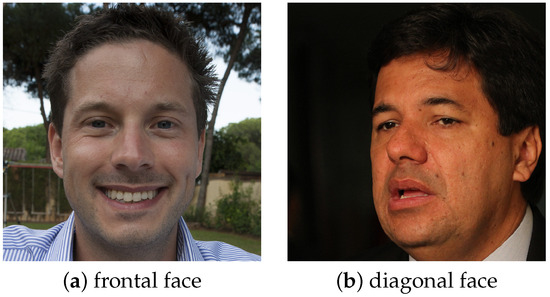

We prepared 1000 annotated images from FFHQ and 388 annotated images generated by StyleGAN3 [28] for face-part detection, and trained two YOLOv8s models on the FFHQ images, one with and one without homography-based data augmentation. Moreover, we prepared 100 frontal face images and 100 diagonal face images from the FFHQ dataset as test data. Figure 4 shows examples of frontal and diagonal faces.

Figure 4.

Examples of test face images. The profile images are from FFHQ [20].

We show the average precision (AP) of each class and mean average precision (mAP) of face-part detection in Table 5. As shown in the table, we improved the detection performance for each face part for diagonal faces using data augmentation based on homography. We realized accurate face-part detection in both cases of frontal and diagonal faces. Moreover, YOLOv8s achieved higher mAP than YOLOv5s. Therefore, we selected YOLOv8s in the following experiments. An example of a face-part detection result by YOLOv8s trained with homography is shown in Figure 5.

Table 5.

mAP of face-part detection without and with homography.

Figure 5.

Examples of face-part detection. The images are from FFHQ [20].

5. Evaluation of Dynamic Ensemble Selection

Using the proposed face-part detector, we generated a face-part image dataset with which to train a ResNet-18 specialized for each face part. To show that our approach can be widely applied, we used StyleGAN [29] images instead of StyleGAN2 images as fake images. Table 6 shows the number of training and validation images for each class. We used the face images in 5000 images (00000-04999) of the FFHQ dataset and 5000 images (000000-004999) of the StyleGAN dataset. We also used test datasets consisting of 1894 images (06000-07999) from the FFHQ dataset and 1894 images (006000-007999) from the StyleGAN dataset.

Table 6.

Training and validation dataset for deepfake detection generated from FFHQ and StyleGAN.

First, we evaluated the performance of deepfake detection on small areas of facial images. In this experiment, we used cornea and nose images that were extracted from the test images by the YOLOv8s model and scaled down to 64 × 64 pixels. Note that resizing degrades the artifacts generated by StyleGAN. We compared the proposed method with four state-of-the-art methods [17,18,30,31]. The proposed method dynamically generates an ensemble model consisting of the eye, cornea, mouth, and nose models for the parts detected by the face-part detector with a threshold of 0.8. The method proposed in [17] employs a ResNet-50 model trained with data augmentation, such as blurring and compression. The method in [18] uses a re-synthesizer to extract low-level and general visual cues. Reference [30] evaluated seven methods [13,17,32,33,34,35,36] and their variants and selected the best model, a modified ResNet-50 in which the downsampling of the first layer is removed. Reference [31] employed a static ensemble of EfficientNet-B4 models trained on orthogonal datasets, that is, datasets that differ in semantic content and in how the images were post-processed and compressed.

Table 7 shows the F1-scores for cornea and nose images. The existing methods are specialized for relatively high-resolution images and assume that most of the face is visible in the input. Although they achieve high deepfake detection accuracy for such images, they are not effective when only small facial parts are available. In contrast, our approach determines part by part whether each face part was generated by a deepfake generator. Even from small face-part images of 64 × 64 pixels, it can extract artifacts useful for deepfake detection and analyze each face part precisely, because each single model is specialized for extracting effective features from small-area, low-resolution, and degraded artifacts.

Table 7.

F1-score comparison of deepfake detection for cornea and nose images of 64 × 64 pixels.

We also created four types of occluded test datasets from the original test dataset. Figure 6 shows examples of occluded images of each test dataset. The upper left and lower right coordinates of the occluded area were determined based on the bounding box of the detected parts to generate the occlusion image. Figure 6a,b represent test images of small eye occlusion (SEO) and large eye occlusion (LEO), respectively. In SEO, the right eye’s upper-left (lower-right) coordinate is used as the upper-left (lower-right) coordinate of the occlusion region. Moreover, LEO expanded the occlusion region by multiplying the upper-left coordinate by 0.95 and the lower-right coordinate by 1.05. Figure 6c,d represent test images of small mouth and nose occlusion (SMNO) and large mouth and nose occlusion (LMNO), respectively. SMNO (LMNO) used x-coordinates of the right eye and y-coordinates of the nose for the upper-left coordinates, and x-coordinates of the left eye and y-coordinates of the mouth for the lower-right coordinates. LMNO expanded and shifted the occlusion region by multiplying the y-coordinates by 1.1. Moreover, we created a resized test dataset by resizing all test images into pixels.
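The four occlusion rules amount to box arithmetic on the detected part boxes. This NumPy sketch is hypothetical in several respects: boxes are `(x1, y1, x2, y2)` pixel tuples, the subject's right eye is assumed to appear on the left of the image, the "y-coordinates of the nose" and "of the mouth" are read as the top of the nose box and the bottom of the mouth box, and the occluder is filled with white as in Figure 2. The text does not fix these details.

```python
import numpy as np

Box = tuple  # (x1, y1, x2, y2) in pixels


def occlusion_box(kind: str, right_eye: Box, left_eye: Box, nose: Box, mouth: Box) -> Box:
    """Return the occlusion rectangle for SEO, LEO, SMNO, or LMNO (assumed reading)."""
    if kind in ("SEO", "LEO"):
        # SEO: the right-eye box itself.
        x1, y1, x2, y2 = right_eye
        if kind == "LEO":
            # LEO: scale upper-left by 0.95 and lower-right by 1.05.
            x1, y1, x2, y2 = 0.95 * x1, 0.95 * y1, 1.05 * x2, 1.05 * y2
    elif kind in ("SMNO", "LMNO"):
        # Upper-left: x of the right eye, y of the nose.
        # Lower-right: x of the left eye, y of the mouth.
        x1, y1 = right_eye[0], nose[1]
        x2, y2 = left_eye[2], mouth[3]
        if kind == "LMNO":
            # LMNO: multiply both y-coordinates by 1.1 (expands and shifts down).
            y1, y2 = 1.1 * y1, 1.1 * y2
    else:
        raise ValueError(f"unknown occlusion type: {kind}")
    return x1, y1, x2, y2


def occlude(image: np.ndarray, box: Box, value: int = 255) -> np.ndarray:
    """Return a copy of image with the box (clipped to the image) filled by value."""
    h, w = image.shape[:2]
    x1, y1 = max(int(box[0]), 0), max(int(box[1]), 0)
    x2, y2 = min(int(np.ceil(box[2])), w), min(int(np.ceil(box[3])), h)
    out = image.copy()
    out[y1:y2, x1:x2] = value
    return out
```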

Figure 6.

Examples of occluded images of each test dataset. (a–d) Small eye occlusion (SEO), large eye occlusion (LEO), small mouth and nose occlusion (SMNO), and large mouth and nose occlusion (LMNO), respectively. The images are generated from an image of FFHQ [20].

Next, Table 8 shows the F1-score of deepfake detection for non-occluded images, together with the normalized F1-score, which is the F1-score for occluded or resized images divided by that for non-occluded images. Although the F1-score of the proposed method is slightly lower than that of [30], the proposed method not only achieves deepfake detection from small facial parts but also achieves the same level of accuracy as the state-of-the-art methods for the original face images. Based on the normalized F1-score, we analyzed how sensitive each method was to occlusion. For the method proposed in [17], occlusions decreased the normalized F1-score by 1.0–3.7%. Similarly, for the method proposed in [18], the normalized F1-score was reduced by 1.0–3.0%. In contrast, the reduction in the normalized F1-score for the proposed method was only 0.30–0.50%. The proposed method appropriately selected face parts and realized more robust deepfake detection against occlusion. The methods proposed in [30,31] also achieved high normalized F1-scores. For resized images, the methods proposed in [17,18,31] reduced the normalized F1-score significantly, whereas the proposed method and the method proposed in [30] maintained high normalized F1-scores. We show examples of deepfake detection results in Figure 7.

Table 8.

F1-score comparison of deepfake detection performance for partly occluded and resized images.

Figure 7.

Examples of deepfake detection results obtained using the proposed method. The value next to each bounding box is the fake-class probability predicted by the corresponding CNN model. The value at the bottom left is the fake-class probability predicted by the ensemble model. The first and third images are from StyleGAN [29], and the second one is from FFHQ [20].

6. Discussion

There are many existing studies on deep learning-based deepfake detection, such as [13,14,15,16,17,18,30,31], which have shown that high accuracy can be achieved when the model used to generate the fake images is identified. However, whether these methods remain applicable when parts of the image have been hidden or additionally edited has not been adequately discussed. In such cases, only part of the artifacts generated by a deepfake generator is available for deepfake detection. The analysis results in this paper show that the accuracy of most existing methods, which extract features from the entire face, can degrade greatly under partial occlusion and editing such as resizing.

On the other hand, we demonstrated that information from the entire face is not necessary for highly accurate deepfake detection. Even small regions corresponding to facial parts, such as the corneas, eyes, nose, and mouth, contain sufficient information to discriminate fake images. Existing models are suited to extracting effective features from relatively wide areas of facial images. However, they have difficulty extracting effective features from small areas, because such areas contain only a small amount of artifacts, which may additionally be contaminated. We proposed simple but powerful CNN models, each specialized for an individual face part. These CNN models can concentrate on extracting effective features from patches of similar texture, and they are more robust to contamination and degradation of artifacts. This enables us to detect deepfakes even if the artifacts generated by a deepfake generator remain only in a small area. We also showed that accuracy can be further improved by an ensemble of deepfake detectors that focus on different parts of the face. For these reasons, the proposed method achieves robust deepfake detection against partial occlusion and editing.
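The dynamic ensemble described above can be sketched as follows. This is a minimal illustration, not the authors' implementation. The `PartDetection` record, the part names, and the rule that keeps a part only if the face-part detector's confidence reaches a hypothetical `min_det_conf` threshold are all assumptions. Parts hidden by a mask or glasses are assumed not to be detected at all, so they never enter the average.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import fmean
from typing import Optional


@dataclass
class PartDetection:
    part: str          # face part, e.g. "eye", "cornea", "nose", "mouth", "face"
    det_conf: float    # confidence reported by the face-part detector (assumed)
    fake_prob: float   # P(fake) from the CNN specialized for this part


def ensemble_fake_probability(detections: list[PartDetection],
                              min_det_conf: float = 0.5) -> Optional[float]:
    """Simple average of P(fake) over the face parts that pass selection.

    Occluded parts are assumed to be missing from `detections` altogether;
    parts detected with low confidence are dropped by the threshold.
    Returns None when no part is selected.
    """
    selected = [d.fake_prob for d in detections if d.det_conf >= min_det_conf]
    return fmean(selected) if selected else None


# A masked face: the mouth is not detected and the nose box is unreliable.
detections = [
    PartDetection("eye", det_conf=0.92, fake_prob=0.81),
    PartDetection("cornea", det_conf=0.88, fake_prob=0.77),
    PartDetection("face", det_conf=0.97, fake_prob=0.64),
    PartDetection("nose", det_conf=0.31, fake_prob=0.20),
]
print(ensemble_fake_probability(detections))
```

Because the set of averaged models changes with each image, an occluded part removes one vote instead of corrupting a single whole-face feature vector.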

Another advantage of the proposed method is that it can show users the class probability for each facial part in addition to that for the whole facial image, as shown in Figure 7. This is a new capability for a detector of synthesized images. If heavy editing leaves artifacts in only part of the image, both conventional methods and the proposed ensemble model risk classifying a fake image as real when the face is evaluated as a whole. Because the proposed method provides class probabilities for the major parts of the face, it can alert the user that the image may have been generated by a GAN.
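A per-part report of this kind could look like the sketch below. The 0.5 threshold, the part names, and the probabilities are hypothetical. The verdict uses a simple average as in the ensemble. Any part whose own fake-class probability exceeds the threshold is flagged, even when the averaged verdict is "real".

```python
from __future__ import annotations


def classify_with_part_alerts(part_probs: dict[str, float],
                              threshold: float = 0.5) -> tuple[str, list[str]]:
    """Return the ensemble verdict and the face parts that individually look fake."""
    if not part_probs:
        raise ValueError("no face parts were selected")
    ensemble = sum(part_probs.values()) / len(part_probs)   # simple average
    verdict = "fake" if ensemble > threshold else "real"
    flagged = sorted(name for name, p in part_probs.items() if p > threshold)
    return verdict, flagged


# Heavy editing left GAN artifacts only around the eyes (hypothetical values).
verdict, flagged = classify_with_part_alerts(
    {"eye": 0.91, "nose": 0.22, "mouth": 0.18, "face": 0.35})
print(verdict, flagged)
```

Here the averaged verdict is "real", but the eye patch is still reported to the user as suspicious.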

On the other hand, there is still room for improvement in the proposed method. Because each single model takes a 64 × 64 low-resolution image as input, face-part patches cropped from higher-resolution images must be downscaled before being fed to the model. This downscaling inevitably degrades the artifacts present in high-resolution images. Some of the images misclassified in our experiments might be classified correctly by a CNN model that accepts higher-resolution input. To achieve more reliable deepfake detection, it may be necessary to train CNN models specific to different resolutions and to select one adaptively based on the resolution of the input image. Additionally, deepfake detection fails when the bounding box estimated for a face part is inaccurate. In this paper, we used a simple average for the ensemble model because it is one of the simplest aggregation methods and has a low computational cost, and our experimental results showed that even a simple average effectively improves accuracy. However, not all detected face parts are equally effective for deepfake detection. Therefore, the weight of each face part should be determined by jointly considering the patch size, the bounding-box accuracy, and the ease of artifact extraction.
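One possible form of the weighting suggested above is sketched below. The weight formula is a hypothetical choice for illustration, since the paper does not specify one. It multiplies three terms: a patch-size term, the detector's box confidence (used as a proxy for bounding-box accuracy), and a per-part `prior` standing in for the ease of artifact extraction. When all weights are equal, it reduces to the simple average used in this paper.

```python
from __future__ import annotations

from dataclasses import dataclass

INPUT_SIDE = 64  # side length of the 64 x 64 input expected by each single model


@dataclass
class Part:
    name: str          # face part, e.g. "eye", "cornea", "nose", "mouth", "face"
    fake_prob: float   # P(fake) from the CNN specialized for this part
    box_conf: float    # detector confidence, a hypothetical proxy for box accuracy
    patch_side: int    # shorter side of the cropped patch, in pixels
    prior: float       # hypothetical per-part reliability (ease of artifact extraction)


def part_weight(p: Part) -> float:
    # Hypothetical rule: patches smaller than the model input must be upsampled,
    # which adds no detail, so they are down-weighted; larger patches get 1.0.
    size_term = min(p.patch_side / INPUT_SIDE, 1.0)
    return size_term * p.box_conf * p.prior


def weighted_ensemble(parts: list[Part]) -> float:
    """Weighted average of per-part P(fake); equal weights give the simple average."""
    weights = [part_weight(p) for p in parts]
    total = sum(weights)
    if total == 0:
        raise ValueError("no usable face part")
    return sum(w * p.fake_prob for w, p in zip(weights, parts)) / total


parts = [
    Part("eye", fake_prob=0.9, box_conf=1.0, patch_side=64, prior=1.0),
    Part("mouth", fake_prob=0.1, box_conf=0.5, patch_side=32, prior=1.0),
]
print(round(weighted_ensemble(parts), 3))
```

The extra cost over the simple average is one multiplication per part, so the low computational cost that motivated the simple average is preserved.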

Generalization of deepfake detection to unseen generators, discussed in [17,18], is an important issue. This paper focuses on exploring the possibility of deepfake detection using face parts and does not address improving generalization performance. However, the approaches to improving generalization via data augmentation and image re-synthesis proposed in [17,18] could be incorporated into the proposed method, and it would be interesting to analyze the synergistic effects of such combinations.

7. Conclusions

In this paper, we proposed a deepfake detection method based on an ensemble model that combines deepfake detectors focused on face-part images (eyes, corneas, nose, mouth, and face). The proposed method selects face parts so as to exclude occluded parts and parts unsuitable for deepfake detection, and it adaptively builds an ensemble of the CNN models corresponding to the selected parts. We found that important clues for deepfakes are hidden in small patches corresponding to face parts and that deepfakes can be detected with relatively high accuracy even from a single face part. We also found that combining deepfake detectors specialized for each face part improves detection performance. Furthermore, we demonstrated that the proposed dynamic ensemble selection provides robust deepfake detection against partial occlusion and editing. In future work, we will explore effective ways to build a generalized deepfake detector that is not only robust against partial occlusion but also applicable to fake images generated by unknown deepfake approaches. We will also investigate how to assign weights to face parts to build an accurate ensemble model with low computational cost.

Author Contributions

Conceptualization, A.K. and Y.T.; methodology, A.K., R.H., Y.T. and J.S.; software, A.K. and R.H.; validation, A.K., R.H. and Y.T.; formal analysis, A.K., R.H., Y.T., J.S. and Y.O.; investigation, A.K., R.H., Y.T. and Y.O.; resources, Y.T.; data curation, Y.T.; writing—original draft preparation, A.K., R.H. and Y.T.; writing—review and editing, A.K., R.H., Y.T., J.S. and Y.O.; visualization, A.K., R.H. and Y.T.; supervision, Y.T.; project administration, Y.T.; funding acquisition, Y.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JSPS KAKENHI Grant Number JP20K11813 and the SEI Group CSR Foundation.

Data Availability Statement

The FFHQ dataset is available at https://github.com/NVlabs/ffhq-dataset. The StyleGAN dataset is available at https://github.com/NVlabs/stylegan. The StyleGAN2 dataset is available at https://github.com/NVlabs/stylegan2.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Wang, R.; Huang, Z.; Chen, Z.; Liu, L.; Chen, J.; Wang, L. Anti-Forgery: Towards a Stealthy and Robust DeepFake Disruption Attack via Adversarial Perceptual-aware Perturbations. arXiv 2022, arXiv:2206.00477.

2. Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of GANs for improved quality, stability, and variation. arXiv 2017, arXiv:1710.10196.

3. Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

4. Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

5. Liu, M.; Ding, Y.; Xia, M.; Liu, X.; Ding, E.; Zuo, W.; Wen, S. STGAN: A unified selective transfer network for arbitrary image attribute editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3673–3682.

6. Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8789–8797.

7. Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.W. StarGAN v2: Diverse image synthesis for multiple domains. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8188–8197.

8. Faceswap. Available online: https://faceswap.dev/ (accessed on 21 August 2023).

9. Thies, J.; Zollhofer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2Face: Real-time face capture and reenactment of RGB videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2387–2395.

10. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

11. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis With Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

12. Koopman, M.; Rodriguez, A.M.; Geradts, Z. Detection of deepfake video manipulation. In Proceedings of the 20th Irish Machine Vision and Image Processing Conference (IMVIP), Belfast, Northern Ireland, 29–31 August 2018; pp. 133–136.

13. Marra, F.; Gragnaniello, D.; Cozzolino, D.; Verdoliva, L. Detection of GAN-generated fake images over social networks. In Proceedings of the 2018 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), IEEE, Miami, FL, USA, 10–12 April 2018; pp. 384–389.

14. Nguyen, H.H.; Yamagishi, J.; Echizen, I. Capsule-forensics: Using capsule networks to detect forged images and videos. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, Brighton, UK, 12–17 May 2019; pp. 2307–2311.

15. Hsu, C.C.; Zhuang, Y.X.; Lee, C.Y. Deep fake image detection based on pairwise learning. Appl. Sci. 2020, 10, 370.

16. Nirkin, Y.; Wolf, L.; Keller, Y.; Hassner, T. DeepFake detection based on discrepancies between faces and their context. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6111–6121.

17. Wang, S.Y.; Wang, O.; Zhang, R.; Owens, A.; Efros, A.A. CNN-generated images are surprisingly easy to spot... for now. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8695–8704.

18. He, Y.; Yu, N.; Keuper, M.; Fritz, M. Beyond the spectrum: Detecting deepfakes via re-synthesis. arXiv 2021, arXiv:2105.14376.

19. Hu, S.; Li, Y.; Lyu, S. Exposing GAN-generated faces using inconsistent corneal specular highlights. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, Toronto, ON, Canada, 6–11 June 2021; pp. 2500–2504.

20. Flickr-Faces-HQ Dataset (FFHQ). Available online: https://github.com/NVlabs/ffhq-dataset (accessed on 21 August 2023).

21. StyleGAN2—Official TensorFlow Implementation. Available online: https://github.com/NVlabs/stylegan2 (accessed on 21 August 2023).

22. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 21 August 2023).

23. Kawabe, A.; Haga, R.; Tomioka, Y.; Okuyama, Y.; Shin, J. Fake Image Detection Using An Ensemble of CNN Models Specialized For Individual Face Parts. In Proceedings of the 2022 IEEE 15th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC), Penang, Malaysia, 19–22 December 2022; pp. 72–77.

24. Li, Y.Y.; Cheng, H.J.; Liu, Y.; Shen, C.T. Edge-Computing Convolutional Neural Network with Homography-Augmented Data for Facial Emotion Recognition. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3287–3291.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

26. Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Fang, J.; Michael, K.; Montes, D.; Nadar, J.; Skalski, P.; et al. ultralytics/yolov5: V6.1-TensorRT, TensorFlow Edge TPU and OpenVINO Export and Inference (v6.1). Zenodo. Available online: https://zenodo.org/record/6222936 (accessed on 21 August 2023).

27. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

28. Karras, T.; Aittala, M.; Laine, S.; Härkönen, E.; Hellsten, J.; Lehtinen, J.; Aila, T. Alias-Free Generative Adversarial Networks. In Proceedings of the NeurIPS, Virtual, 13 December 2021.

29. StyleGAN—Official TensorFlow Implementation. Available online: https://github.com/NVlabs/stylegan (accessed on 21 August 2023).

30. Gragnaniello, D.; Cozzolino, D.; Marra, F.; Poggi, G.; Verdoliva, L. Are GAN generated images easy to detect? A critical analysis of the state-of-the-art. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), IEEE, Shenzhen, China, 5–9 July 2021; pp. 1–6.

31. Mandelli, S.; Bonettini, N.; Bestagini, P.; Tubaro, S. Detecting GAN-Generated Images by Orthogonal Training of Multiple CNNs. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 3091–3095.

32. Boroumand, M.; Chen, M.; Fridrich, J.J. Deep Residual Network for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1181–1193.

33. Zhang, X.; Karaman, S.; Chang, S.F. Detecting and simulating artifacts in GAN fake images. In Proceedings of the 2019 IEEE International Workshop on Information Forensics and Security (WIFS), IEEE, Delft, The Netherlands, 9–12 December 2019; pp. 1–6.

34. Xuan, X.; Peng, B.; Wang, W.; Dong, J. On the generalization of GAN image forensics. In Proceedings of the Chinese Conference on Biometric Recognition, Zhuzhou, China, 12–13 October 2019; pp. 134–141.

35. Nataraj, L.; Mohammed, T.M.; Chandrasekaran, S.; Flenner, A.; Bappy, J.H.; Roy-Chowdhury, A.K.; Manjunath, B. Detecting GAN generated fake images using co-occurrence matrices. arXiv 2019, arXiv:1903.06836.

36. Chai, L.; Bau, D.; Lim, S.N.; Isola, P. What makes fake images detectable? Understanding properties that generalize. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXVI 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 103–120.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).