Pairwise Guided Multilayer Cross-Fusion Network for Bird Image Recognition

Abstract

1. Introduction

- (1)

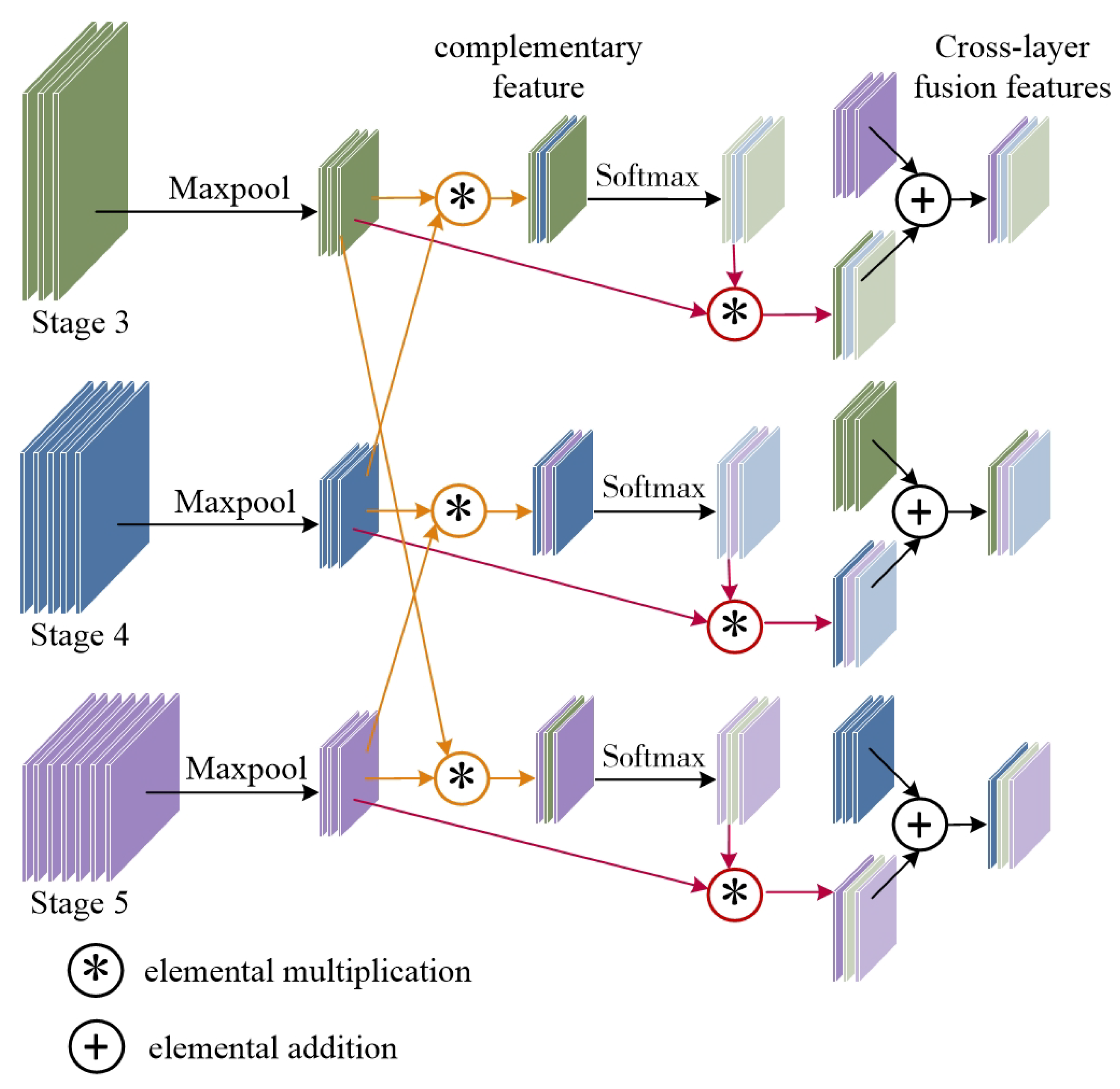

- We present the Cross-Layer Fusion Module (CLFM) to interact with features from different stages to enrich semantic features.

- (2)

- We also propose the Deep Semantic Localization Module (DSLM) to strengthen highly responsive feature regions and to weaken the influence of the background.

- (3)

- We introduce a Semantic Guidance Loss (SGloss) to react to the spatial gap between different categories and to enhance the generalization of models.

2. Related Works

3. Methods

3.1. Network Architecture

3.2. Cross-Layer Fusion Module (CLFM)

3.3. Deep Semantic Localization Module (DSLM)

3.4. Optimized Loss Function

4. Experiment

4.1. Datasets

4.2. Experiment Details

5. Results and Discussions

5.1. Evaluation Indexes
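The abbreviations of this paper include TP, FN, FP and TN, and the result tables report accuracy in percent. The sketch below shows how such indexes are conventionally computed from the four counts. The function name `confusion_metrics` and the example counts are illustrative assumptions, not values from the paper; precision and recall are included only as the usual companions of accuracy.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Return accuracy, precision and recall from confusion-matrix counts."""
    total = tp + fp + fn + tn
    # Accuracy: share of all predictions that are correct.
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that are truly positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of actual positives that were recovered.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = confusion_metrics(tp=90, fp=10, fn=5, tn=95)
print({name: round(value * 100, 1) for name, value in metrics.items()})
```

For a multi-class benchmark such as CUB-200-2011, top-1 accuracy reduces to the number of correctly classified test images divided by the number of test images.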

5.2. Results on the CUB-200-2011

5.3. Results on the Stanford Cars and FGVC-Aircraft

5.4. Ablation Study

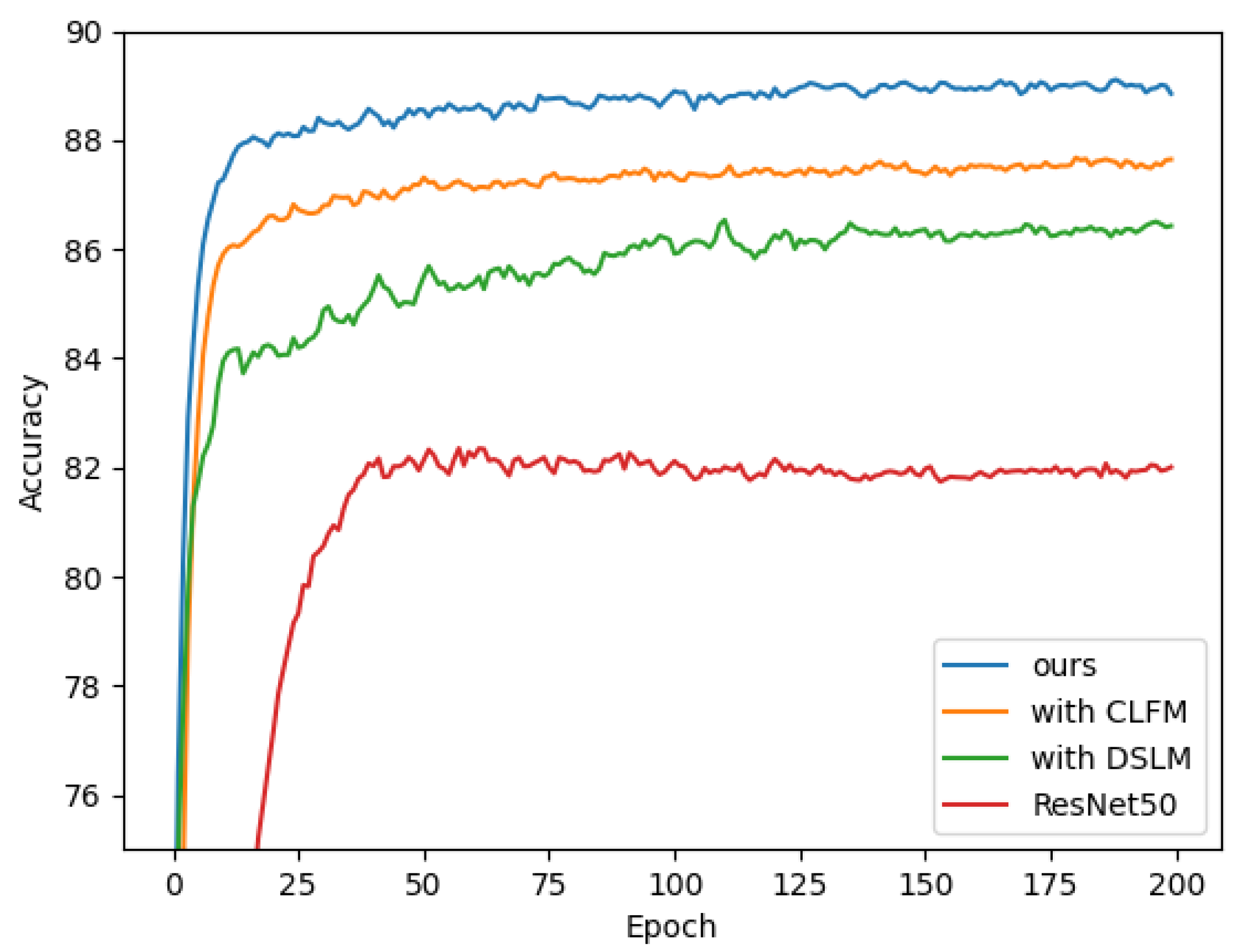

5.4.1. Effects of DSLM and CLFM

5.4.2. Effects of Mixup

5.4.3. Effects of Loss

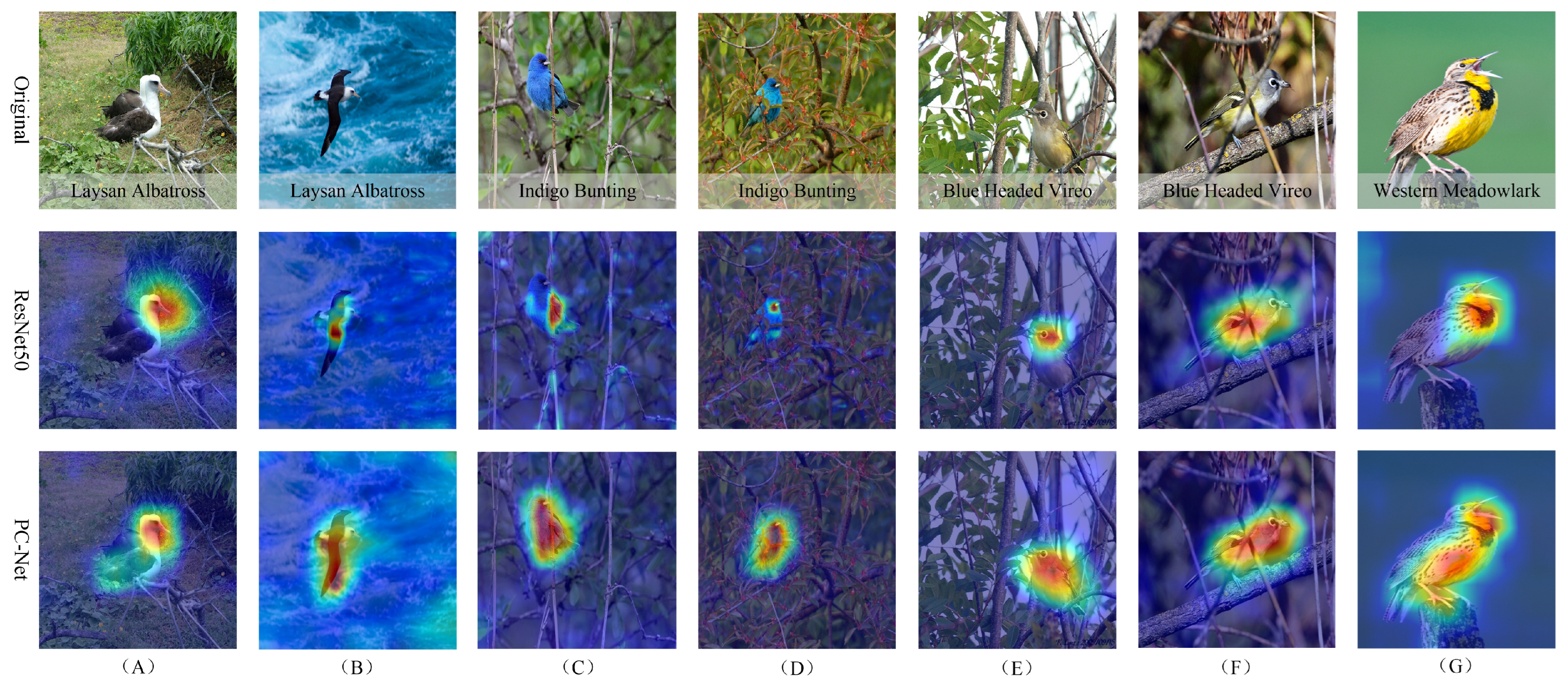

5.5. Visualization

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CUB-200-2011 | Caltech-UCSD Birds-200-2011 dataset |
| PC-Net | Progressive Cross-Union Network |
| CV | Computer Vision |
| CNN | Convolutional Neural Network |
| Part R-CNN | Region-Based Convolutional Neural Network |
| MA-CNN | Multi-Attention Convolutional Neural Network |
| TASN | Trilinear Attention Sampling Network |
| RA-CNN | Recurrent Attention Convolutional Neural Network |
| B-CNN | Bilinear Convolutional Network |
| SBP-CNN | Semantic Bilinear Pooling CNN |
| API-Net | Attentive Pairwise Interaction Network |
| DTD | Describable Textures Dataset |
| CLFM | Cross-Layer Fusion Module |
| DSLM | Deep Semantic Localization Module |
| SGloss | Semantic Guidance Loss |
| CELoss | Cross-Entropy Loss |
| FGVC-Aircraft | Fine-Grained Visual Classification of Aircraft |
| SGD | Stochastic Gradient Descent |
| Cross-X | Cross-X Learning |
| FGVC | Fine-Grained Visual Classification |
| PC-DenseNet | Pairwise Confusion based on DenseNet |
| PCA-Net | Progressive Co-Attention Network |
| TP | True Positive |
| FN | False Negative |
| FP | False Positive |
| TN | True Negative |

References

1. Yang, X.; Tan, X.; Chen, C.; Wang, Y. The influence of urban park characteristics on bird diversity in Nanjing, China. Avian Res. 2020, 11, 45.

2. Xie, J.; Zhong, Y.; Zhang, J.; Liu, S.; Ding, C.; Triantafyllopoulos, A. A review of automatic recognition technology for bird vocalizations in the deep learning era. Ecol. Inform. 2022, 73, 101927.

3. Wei, X.S.; Xie, C.W.; Wu, J.; Shen, C. Mask-CNN: Localizing parts and selecting descriptors for fine-grained bird species categorization. Pattern Recognit. 2018, 76, 704–714.

4. Zhang, N.; Donahue, J.; Girshick, R.; Darrell, T. Part-based R-CNNs for fine-grained category detection. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014. Part I. Volume 13, pp. 834–849.

5. Branson, S.; Van Horn, G.; Belongie, S.; Perona, P. Bird species categorization using pose normalized deep convolutional nets. arXiv 2014, arXiv:1406.2952.

6. Huang, S.; Xu, Z.; Tao, D.; Zhang, Y. Part-stacked CNN for fine-grained visual categorization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1173–1182.

7. Lin, D.; Shen, X.; Lu, C.; Jia, J. Deep lac: Deep localization, alignment and classification for fine-grained recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1666–1674.

8. Ding, Y.; Ma, Z.; Wen, S.; Xie, J.; Chang, D.; Si, Z.; Wu, M.; Ling, H. AP-CNN: Weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 2021, 30, 2826–2836.

9. Zheng, H.; Fu, J.; Mei, T.; Luo, J. Learning multi-attention convolutional neural network for fine-grained image recognition. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5209–5217.

10. Zheng, H.; Fu, J.; Zha, Z.J.; Luo, J. Looking for the devil in the details: Learning trilinear attention sampling network for fine-grained image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5012–5021.

11. Fu, J.; Zheng, H.; Mei, T. Look closer to see better: Recurrent attention convolutional neural network for fine-grained image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4438–4446.

12. Lin, T.Y.; RoyChowdhury, A.; Maji, S. Bilinear CNN models for fine-grained visual recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1449–1457.

13. Li, X.; Yang, C.; Chen, S.L.; Zhu, C.; Yin, X.C. Semantic bilinear pooling for fine-grained recognition. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: New York, NY, USA, 2021; pp. 3660–3666.

14. Kong, S.; Fowlkes, C. Low-rank bilinear pooling for fine-grained classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 365–374.

15. Zhang, T.; Chang, D.; Ma, Z.; Guo, J. Progressive co-attention network for fine-grained visual classification. In Proceedings of the 2021 International Conference on Visual Communications and Image Processing (VCIP), Novotel Munich, Germany, 5–8 December 2021; IEEE: New York, NY, USA, 2021; pp. 1–5.

16. Luo, W.; Yang, X.; Mo, X.; Lu, Y.; Davis, L.S.; Li, J.; Yang, J.; Lim, S.N. Cross-x learning for fine-grained visual categorization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8242–8251.

17. Zhuang, P.; Wang, Y.; Qiao, Y. Learning attentive pairwise interaction for fine-grained classification. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13130–13137.

18. Zhang, Z.; Sabuncu, M. Generalized cross entropy loss for training deep neural networks with noisy labels. Adv. Neural Inf. Process. Syst. 2018, 31, 8792–8802.

19. Dubey, A.; Gupta, O.; Guo, P.; Raskar, R.; Farrell, R.; Naik, N. Pairwise confusion for fine-grained visual classification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 70–86.

20. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

21. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

22. Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset. 2011. Available online: https://api.semanticscholar.org/CorpusID:16119123 (accessed on 8 September 2023).

23. Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3d object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 554–561.

24. Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-grained visual classification of aircraft. arXiv 2013, arXiv:1306.5151.

25. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

26. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

27. Loshchilov, I.; Hutter, F. Sgdr: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

28. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic Differentiation in Pytorch. 2017. Available online: https://openreview.net/forum?id=BJJsrmfCZ (accessed on 8 September 2023).

29. Chang, D.; Ding, Y.; Xie, J.; Bhunia, A.K.; Li, X.; Ma, Z.; Wu, M.; Guo, J.; Song, Y.Z. The devil is in the channels: Mutual-channel loss for fine-grained image classification. IEEE Trans. Image Process. 2020, 29, 4683–4695.

30. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Reference | Object | Backbone | Contributions | Limitations |
|---|---|---|---|---|
| [9] | Bird, Car, Aircraft | VGG | Learns more features by acquiring multiple discriminative regions from the feature channels. | Features are extracted from parts, so the target as a whole is not captured. |
| [10] | Cars, Naturalist Species | ResNet | Selects hundreds of detailed parts and extracts useful feature representations from them to improve classification performance. | |
| [11] | Bird, Car, Dog | VGG | Performs multiscale feature extraction to generate attention regions from coarse to fine. | |
| [12] | Bird, Car, Aircraft | VGG | Uses bilinear pooling to fuse information from different channels, transforming first-order statistics into second-order ones and thus improving model performance. | The high number of parameters and the computational effort make the model not cost-effective. |
| [13] | Bird, Car, Aircraft | VGG, ResNet | Improves bilinear pooling into a semantics-differentiated double branch. | |
| [14] | Bird, Car, Aircraft, Textures | VGG | Reduces the large parameter count of bilinear networks by means of two parameter approximations. | |
| [17] | Bird, Car, Aircraft, Dog | ResNet, DenseNet | Proposes pairwise interactions via mutual learning vectors to capture the semantic differences among them. | Direct feature interaction across different images, which leads to poor results. |
| [19] | Bird, Car, Aircraft, Dog, Flower | ResNet, DenseNet, Bilinear CNN | Proposes a simplified conditional probability to mitigate the overfitting problem. | |

| Dataset Name | Number of Classes | Train | Test |
|---|---|---|---|
| CUB-200-2011 | 200 | 5994 | 5794 |
| Stanford Cars | 196 | 8144 | 8041 |
| FGVC-Aircraft | 100 | 6667 | 3333 |

| Methods | Backbone | Accuracy/% |
|---|---|---|
| B-CNN [12] | VGG | 84.1 |
| RA-CNN [11] | VGG | 85.3 |
| MA-CNN [9] | VGG | 86.5 |
| SBP-CNN [13] | VGG | 87.8 |
| SBP-CNN [13] | ResNet50 | 88.9 |
| Cross-X [16] | ResNet50 | 87.7 |
| API-Net [17] | ResNet50 | 87.7 |
| TASN [10] | ResNet50 | 87.9 |
| PCA-Net [15] | ResNet50 | 88.3 |
| API-Net [17] | ResNet101 | 88.6 |
| PCA-Net [15] | ResNet101 | 88.9 |
| PC-DenseNet [19] | DenseNet | 86.8 |
| Ours | ResNet50 | 89.2 |

| Parameters | Value |
|---|---|
| FLOPs | 37.4 G |
| Params | 45.4 M |
| Inference time | 0.02 s |
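As a rough reading of these figures, the parameter count and inference time can be converted into weight storage and throughput. This is a back-of-the-envelope sketch only: it assumes 32-bit floating-point weights and that the reported 0.02 s is the time for a single image, neither of which is stated in the table.

```python
# Values taken from the complexity table above.
params_millions = 45.4
inference_seconds = 0.02

# Assumption: weights are stored as float32 (4 bytes per parameter).
bytes_per_param = 4

# Approximate weight storage in megabytes (1 MB = 1e6 bytes).
weight_megabytes = params_millions * 1e6 * bytes_per_param / 1e6

# Assumption: 0.02 s is the latency of one image, so throughput is its inverse.
images_per_second = 1.0 / inference_seconds

print(f"weights: about {weight_megabytes:.1f} MB")
print(f"throughput: about {images_per_second:.0f} images/s")
```

Under these assumptions the weights occupy roughly 181.6 MB and the network processes about 50 images per second.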

| Methods | Backbone | Stanford Cars/% | FGVC-Aircraft/% |
|---|---|---|---|
| B-CNN [12] | VGG | 91.3 | 84.1 |
| RA-CNN [11] | VGG | 92.5 | 85.3 |
| MA-CNN [9] | VGG | 92.8 | 86.5 |
| SBP-CNN [13] | VGG | 93.2 | 87.8 |
| SBP-CNN [13] | ResNet50 | 94.3 | 88.9 |
| Cross-X [16] | ResNet50 | 94.5 | 87.7 |
| API-Net [17] | ResNet50 | 94.8 | 87.7 |
| PCA-Net [15] | ResNet50 | 94.3 | 88.3 |
| API-Net [17] | ResNet101 | 94.9 | 88.6 |
| PCA-Net [15] | ResNet101 | 94.6 | 88.9 |
| Ours | ResNet50 | 94.6 | 93.0 |

| ResNet50 | DSLM | CLFM | Accuracy/% |
|---|---|---|---|
| Y | N | N | 82.5 |
| Y | Y | N | 86.4 |
| Y | N | Y | 87.8 |
| Y | Y | Y | 89.2 |
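The contribution of each module can be read off this table as a gain over the plain ResNet50 baseline. The short sketch below just performs that subtraction; the dictionary keys are labels chosen here for readability, not names used in the paper.

```python
# Accuracies (%) copied from the ablation table above.
ablation = {
    "ResNet50": 82.5,
    "ResNet50 + DSLM": 86.4,
    "ResNet50 + CLFM": 87.8,
    "ResNet50 + DSLM + CLFM": 89.2,
}

baseline = ablation["ResNet50"]

# Gain in percentage points of every configuration over the baseline.
gains = {
    name: round(acc - baseline, 1)
    for name, acc in ablation.items()
    if name != "ResNet50"
}

for name, gain in gains.items():
    print(f"{name}: +{gain} points")
```

DSLM alone adds 3.9 points and CLFM alone adds 5.3 points, while both together add 6.7 points, so the two gains overlap rather than simply adding up.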

| Methods | Accuracy/% |
|---|---|
| Without mixup | 86.1 |
| With mixup | 86.4 |
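For orientation, mixup as defined in its original formulation [21] blends two training samples and their labels with a single weight drawn from a Beta distribution. The sketch below follows that general definition in plain Python on toy vectors; the function name `mixup_pair`, the value `alpha=1.0` and the toy inputs are assumptions made for illustration, and the settings used in this paper's experiments are not given in this section.

```python
import random


def mixup_pair(x_a, y_a, x_b, y_b, alpha=1.0, rng=random):
    """Blend two samples and their one-hot labels with one shared weight."""
    # Mixing weight in [0, 1], drawn from Beta(alpha, alpha).
    lam = rng.betavariate(alpha, alpha)
    # The same convex combination is applied to inputs and to labels.
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x_mix, y_mix, lam


# Toy example: two 2-element "images" with one-hot labels for two classes.
rng = random.Random(0)  # seeded generator so the run is repeatable
x_mix, y_mix, lam = mixup_pair(
    [0.0, 1.0], [1.0, 0.0],
    [1.0, 0.0], [0.0, 1.0],
    alpha=1.0, rng=rng,
)
print(lam, x_mix, y_mix)
```

Because the label is mixed with the same weight as the input, the mixed label still sums to one and can be used directly with a soft-target cross-entropy loss.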

| Methods | Accuracy/% |
|---|---|
| CELoss | 87.4 |
| CELoss + SGloss | 89.2 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lei, J.; Jin, Y.; Huang, L.; Ji, Y.; Yang, S. Pairwise Guided Multilayer Cross-Fusion Network for Bird Image Recognition. Electronics 2023, 12, 3817. https://doi.org/10.3390/electronics12183817
