An Efficient Combination of Genetic Algorithm and Particle Swarm Optimization for Scheduling Data-Intensive Tasks in Heterogeneous Cloud Computing

Abstract

1. Introduction

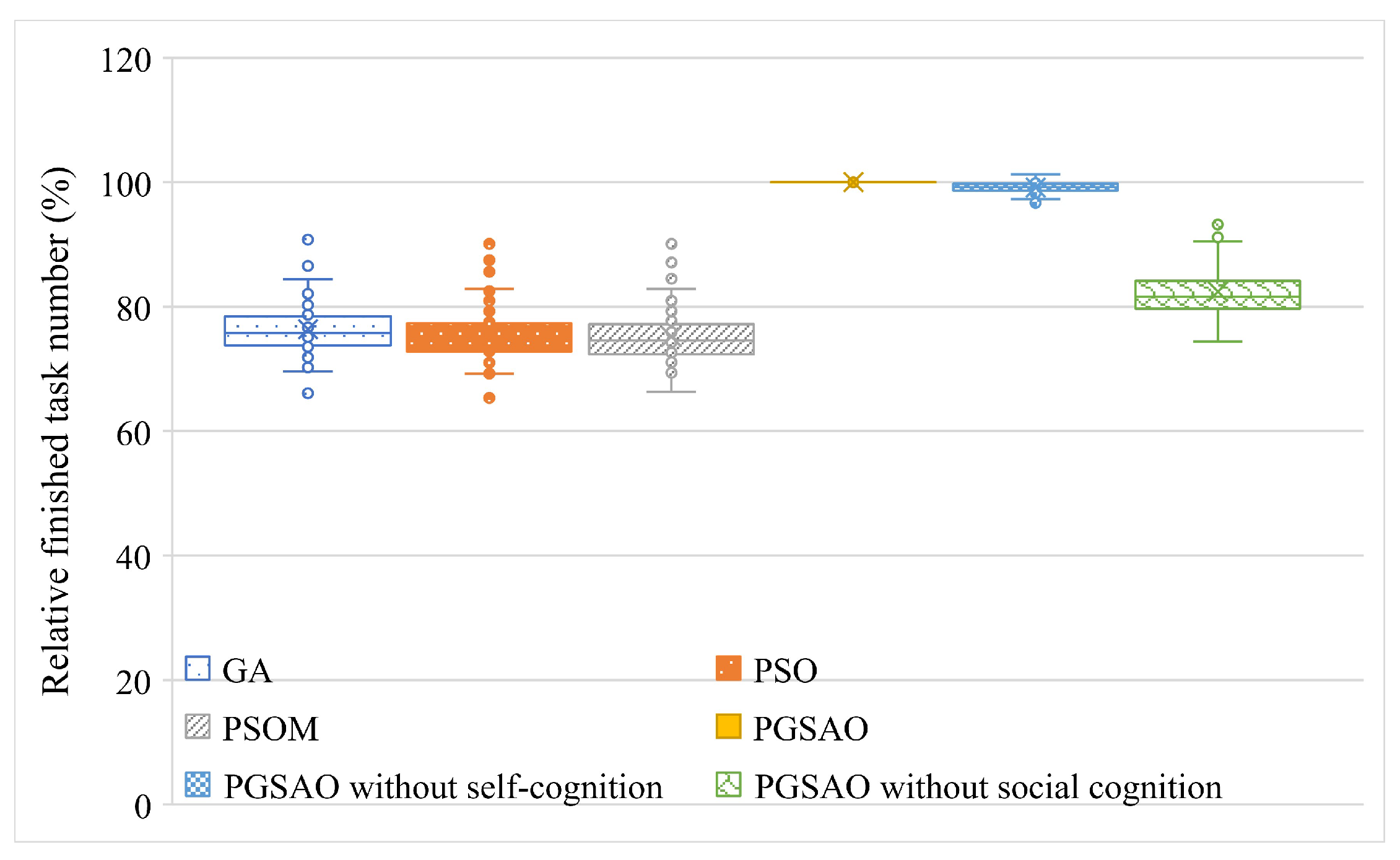

- We formulate the task scheduling problem with deadline constraints as a combinatorial optimization problem for heterogeneous cloud computing. There are two optimization objectives: the number of tasks finished within their respective deadlines (the major one) and resource utilization (the minor one), which quantify user satisfaction and resource efficiency, respectively.

- We propose a hybrid heuristic algorithm (PGSAO) for the task scheduling problem by integrating GA into PSO. In PGSAO, each individual corresponds to a task scheduling solution, represented with an integer-encoding strategy. During the population evolution phase, PGSAO applies crossover and mutation operators to each individual to produce offspring, where the individual is crossed not only with another individual (as in GA) but also with its personal best and the global best codes (the self-cognition and social cognition of PSO). After each operator, every individual is replaced by its best offspring.

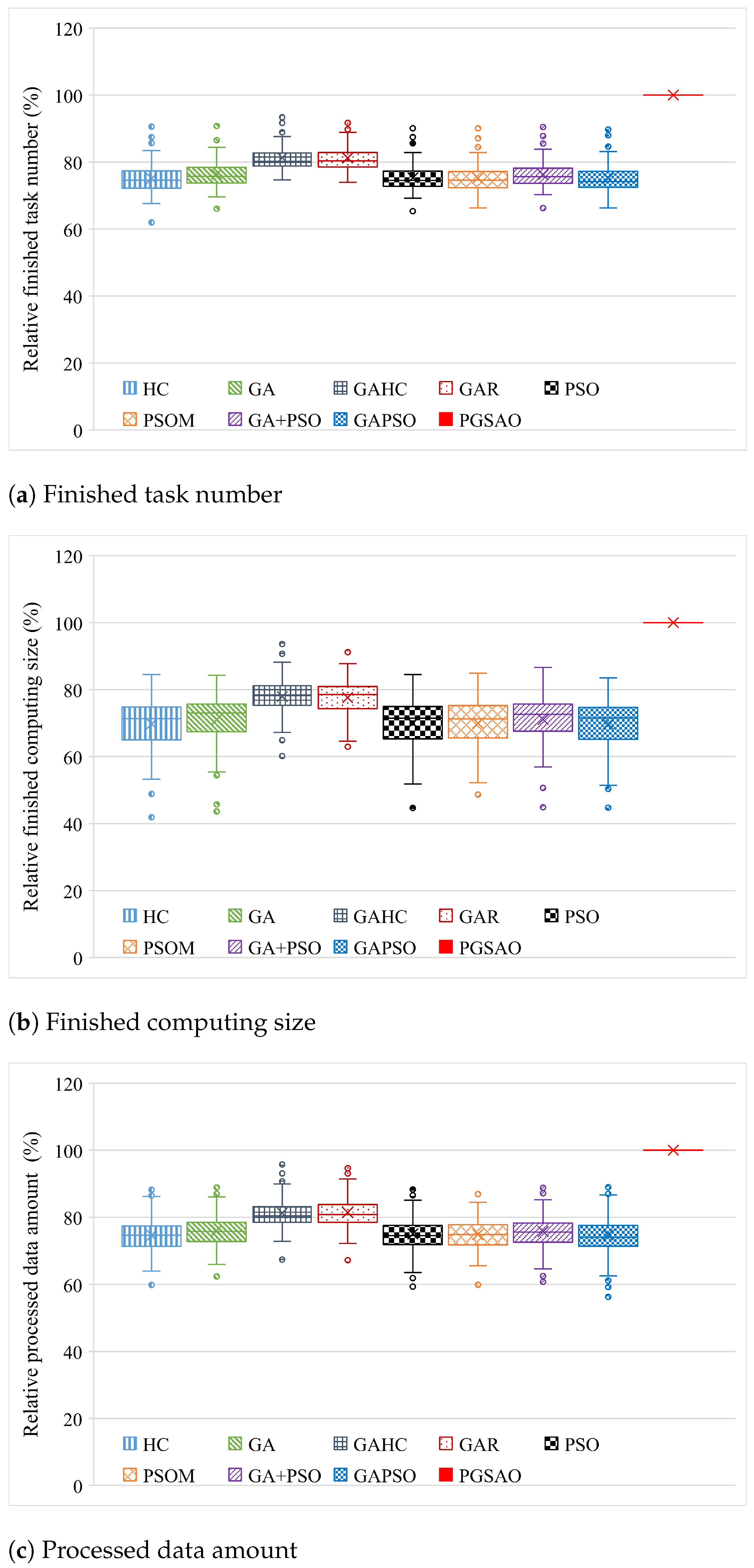

- We conduct extensive simulated experiments to evaluate the performance of PGSAO. Experimental results show that PGSAO achieves 23.0–33.2% more finished tasks and 27.9–43.7% higher resource utilization, on average, than eight other methods, which verifies its performance superiority.

2. Related Works

3. Problem Formulation

4. Hybrid GA and PSO Scheduling Algorithm

Algorithm 1 PGSAO: the hybrid GA and PSO scheduling

- (1)

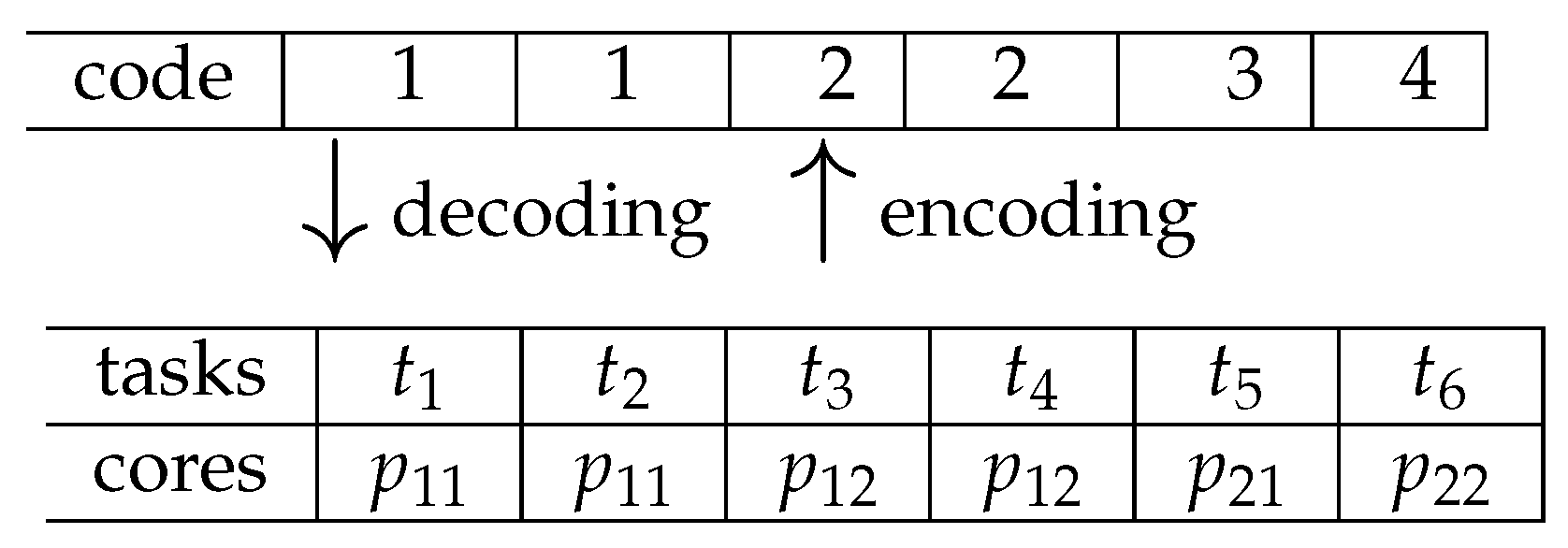

- PGSAO randomly initializes several starting points (codes of particles) for following searches (line 1), and evaluates the fitness of each code (line 2). Meanwhile, PGSAO records the personal best code as its initialized one for each particle (line 3), and the global best code as the best one in all codes of particles (line 4).

- (2) PGSAO explores and exploits the solution space by updating the codes of particles with the crossover and mutation operators of GA as well as the self-cognition and social cognition ideas of PSO (lines 5–16). In detail, PGSAO repeats the following steps for each particle (line 6) until the predefined termination condition is reached (line 5).

- (a)

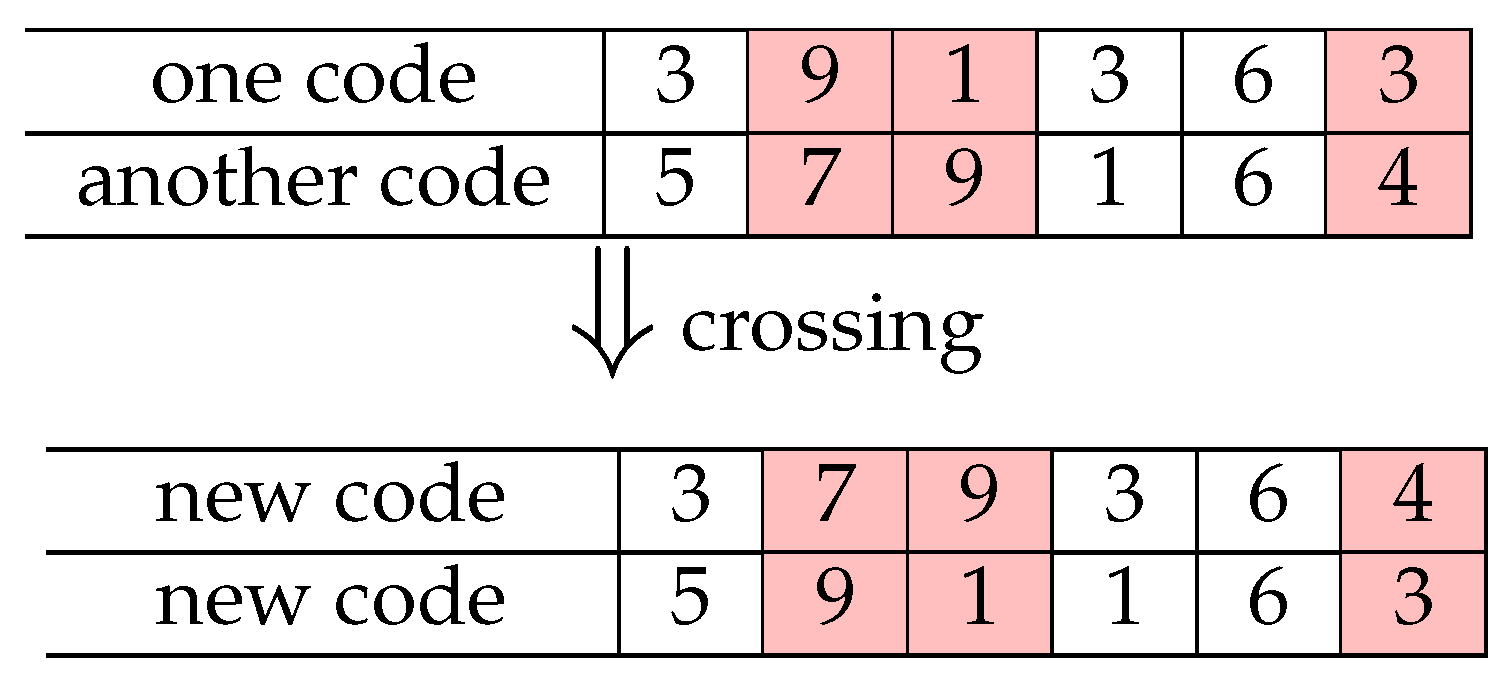

- With set crossover probability, PGSAO crosses the particle’s code with the code of another particle, its personal best code and the global best code, respectively, by uniform crossover operator. The latter two crossovers are exploiting the self-cognition and social cognition in the population, respectively.

- (b) For each of the six new codes produced by the crossover operators in the previous step, PGSAO evaluates its fitness and compares it with that of the personal best code and the global best code, respectively. When the new code has better fitness, the personal/global best code is updated to the new code.

- (c) PGSAO updates the particle’s code to the best of the above six new codes. This step combines the position-updating strategy of PSO and the tournament selection operator of GA.

- (d)

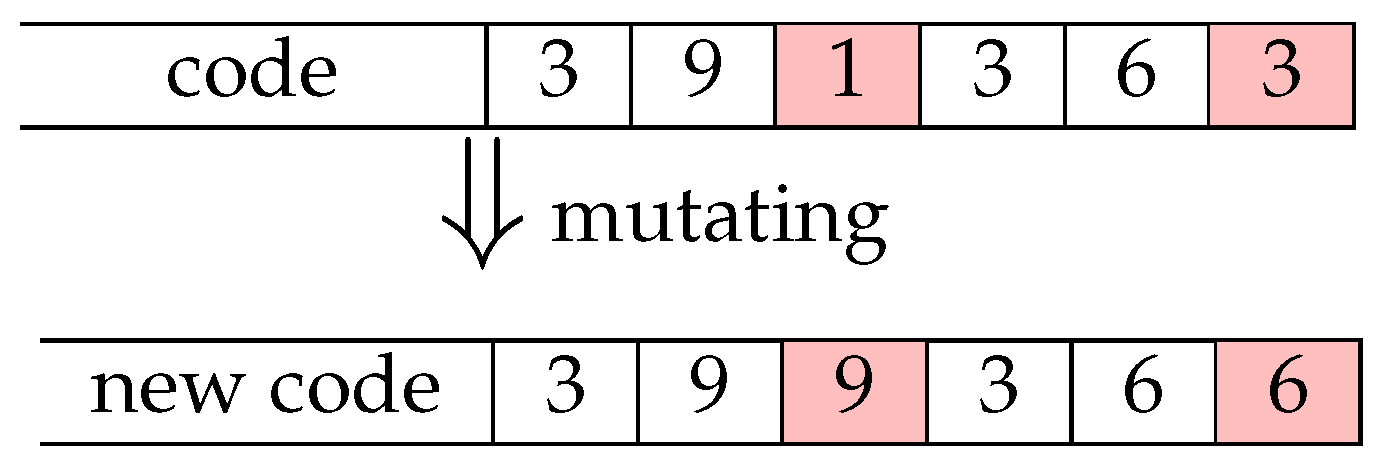

- PGSAO performs a uniform mutation operator on the particle’s code, with set mutation probability. This produces a new code.

- (e) PGSAO repeats steps 2b and 2c for the new code produced by the mutation operator in the previous step.

- (3) PGSAO returns the best solution decoded from the global best code (lines 17 and 18).
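The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `pgsao_sketch`, `uniform_crossover`, `uniform_mutation`, and `update_bests` are hypothetical, the code is assumed to be a list of integers (gene i is the resource index of task i), the mutation probability is assumed to apply per gene, the termination condition is assumed to be a fixed iteration budget, and the fitness is a toy function.

```python
import random

def uniform_crossover(a, b, rng):
    """Swap each gene between two codes with probability 0.5 (assumed); two offspring."""
    c1, c2 = list(a), list(b)
    for i in range(len(c1)):
        if rng.random() < 0.5:
            c1[i], c2[i] = c2[i], c1[i]
    return c1, c2

def uniform_mutation(code, n_resources, rate, rng):
    """Redraw each gene uniformly at random with probability `rate` (assumed per gene)."""
    return [rng.randrange(n_resources) if rng.random() < rate else g for g in code]

def update_bests(code, k, pbest, gbest, fitness):
    """Step 2b: replace the personal/global best when the new code is fitter."""
    if fitness(code) > fitness(pbest[k]):
        pbest[k] = list(code)
    if fitness(code) > fitness(gbest):
        gbest = list(code)
    return gbest

def pgsao_sketch(fitness, n_tasks, n_resources, pop_size=20, iters=50,
                 p_cross=0.8, p_mut=0.1, seed=0):
    rng = random.Random(seed)
    # Step 1: random codes, personal bests, and the global best.
    pop = [[rng.randrange(n_resources) for _ in range(n_tasks)]
           for _ in range(pop_size)]
    pbest = [list(c) for c in pop]
    gbest = list(max(pop, key=fitness))
    for _ in range(iters):  # assumed termination condition: iteration budget
        for k in range(pop_size):
            if rng.random() < p_cross:
                # Step 2a: cross with another particle, the personal best, the global best.
                other = pop[rng.choice([j for j in range(pop_size) if j != k])]
                offspring = []
                for mate in (other, pbest[k], gbest):
                    offspring.extend(uniform_crossover(pop[k], mate, rng))
                # Steps 2b-2c: the best of the six offspring replaces the code.
                pop[k] = max(offspring, key=fitness)
                gbest = update_bests(pop[k], k, pbest, gbest, fitness)
            # Steps 2d-2e: mutate, adopt the mutated code, and update the bests.
            pop[k] = uniform_mutation(pop[k], n_resources, p_mut, rng)
            gbest = update_bests(pop[k], k, pbest, gbest, fitness)
    # Step 3: return the global best code and its fitness.
    return gbest, fitness(gbest)

# Toy usage: fitness counts genes that match a hypothetical target assignment.
target = [i % 3 for i in range(8)]
toy_fitness = lambda code: sum(1 for g, t in zip(code, target) if g == t)
best_code, best_score = pgsao_sketch(toy_fitness, n_tasks=8, n_resources=3)
```

Because only the best of the six offspring can improve on the stored bests, comparing that single code against the personal and global bests has the same effect as comparing all six.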

4.1. Encoding Method
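PGSAO represents each scheduling solution with an integer-encoding strategy. The sketch below shows one common form of such an encoding, assuming (the surviving text does not confirm this) that gene i holds the index of the resource assigned to task i. The function name `decode` is hypothetical.

```python
from collections import defaultdict

def decode(code):
    """Map an integer code to {resource index: [task indices]} (assumed encoding)."""
    assignment = defaultdict(list)
    for task, resource in enumerate(code):
        assignment[resource].append(task)
    return dict(assignment)

# Tasks 0 and 2 go to resource 2, task 1 to resource 0, task 3 to resource 1.
schedule = decode([2, 0, 2, 1])
```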

4.2. Crossover Operator
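Step (2)(a) of PGSAO uses the uniform crossover operator. A minimal sketch of uniform crossover on two integer codes follows, assuming the standard form in which each gene position is swapped between the parents with probability 0.5. The name `uniform_crossover` and the `swap_prob` parameter are illustrative, not taken from the paper.

```python
import random

def uniform_crossover(parent_a, parent_b, swap_prob=0.5, rng=random):
    """Return two offspring; each gene position is swapped with probability swap_prob."""
    child_a, child_b = list(parent_a), list(parent_b)
    for i in range(len(child_a)):
        if rng.random() < swap_prob:
            child_a[i], child_b[i] = child_b[i], child_a[i]
    return child_a, child_b

# Each crossover yields two offspring, so crossing a particle with three mates
# (another particle, its personal best, the global best) yields six new codes.
a, b = uniform_crossover([0, 0, 0, 0], [1, 1, 1, 1], rng=random.Random(1))
```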

4.3. Mutation Operator
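Step (2)(d) of PGSAO applies a uniform mutation operator to the particle's code. A minimal sketch follows, assuming that the mutation probability applies independently to each gene and that a mutated gene is redrawn uniformly from the valid resource indices. The names `uniform_mutation`, `n_resources`, and `rate` are hypothetical.

```python
import random

def uniform_mutation(code, n_resources, rate=0.1, rng=random):
    """Redraw each gene uniformly from range(n_resources) with probability `rate`."""
    return [rng.randrange(n_resources) if rng.random() < rate else gene
            for gene in code]

mutated = uniform_mutation([0, 1, 2, 1, 0], n_resources=3, rate=0.5,
                           rng=random.Random(7))
```

A redrawn gene may take its previous value again, so the effective change rate is slightly below `rate`.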

4.4. Complexity Analysis

5. Performance Evaluation

5.1. Experiment Environment

- GA with HC (GAHC) was proposed in [37]. It performs one iteration of HC on each individual before the evolution of GA. In addition, GAHC replaces the selection operator with a replacement strategy similar to the particle-updating idea used in PSO.

- GA with replacement (GAR) uses replacement instead of the selection operator in GA; it is the same as GAHC except that GAR does not use the HC operator.

- Particle swarm optimization (PSO) is also one of the most popular algorithms for the task-scheduling problem, e.g., [40]. PSO mimics the movement behaviour of a bird flock, fish school, or insect swarm to evolve its population.

- PSO with mutation (PSOM) improves PSO with a mutation operator [31]. It applies the mutation operator to each particle after each evolution of PSO.

- GA+PSO applies GA in the first half of the population evolution and then uses PSO to evolve the population produced by GA in the second half [32].

- GAPSO first uses GA to evolve its population and then applies PSO to the evolved population, in each iteration [33].

- User Satisfaction has a great influence on the income and reputation of clouds [41]. Three metrics are used to quantify it: the number (N), computing size, and cumulated input data amount of accepted tasks.

- Resource Efficiency determines the operating cost of clouds to a large extent. It is quantified by the following three metrics: the overall resource utilization (U), energy efficiency in computing, and energy efficiency in data processing. The latter two are the finished computing size and the processed data amount, respectively, per unit of energy consumed.

- Processing Efficiency is the task processing rate on the cloud. Two metrics are applied for its evaluation: the finished computing size and the processed data amount per time unit.
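The ratio-based metrics described above can be computed as in the following sketch. It covers only the quantities whose definitions are stated in the text (finished computing size and processed data amount, per energy unit and per time unit). The function name, argument names, and result keys are hypothetical, and the units are left to the caller.

```python
def efficiency_metrics(finished_computing_size, processed_data_amount,
                       energy_consumed, elapsed_time):
    """Return the energy-efficiency and processing-efficiency ratios."""
    if energy_consumed <= 0 or elapsed_time <= 0:
        raise ValueError("energy_consumed and elapsed_time must be positive")
    return {
        # Resource efficiency: work done per unit of energy consumed.
        "energy_eff_computing": finished_computing_size / energy_consumed,
        "energy_eff_data": processed_data_amount / energy_consumed,
        # Processing efficiency: work done per time unit.
        "computing_rate": finished_computing_size / elapsed_time,
        "data_rate": processed_data_amount / elapsed_time,
    }

metrics = efficiency_metrics(finished_computing_size=1000.0,
                             processed_data_amount=200.0,
                             energy_consumed=50.0,
                             elapsed_time=10.0)
```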

5.2. Experiment Results

5.2.1. User Satisfaction

5.2.2. Resource Efficiency

5.2.3. Processing Efficiency

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACO | Ant colony algorithm |
| BNLP | Binary nonlinear programming problems |
| CSA | Cuckoo search algorithm |
| CWO | Cat swarm optimization |
| DE | Differential evolution |
| DFS | Distributed file system |
| EDF | Earliest deadline first |
| EO | Extremal optimization |
| GA | Genetic algorithm |
| GAHC | GA with HC |
| GAR | GA with replacement |
| GSA | Gravitational search algorithm |
| GWO | Grey wolf optimizer |
| HC | Hill climbing |
| HEFT | Heterogeneous earliest finish time |
| MI | Million instructions |
| MIPS | MI per second |
| PSO | Particle swarm optimization |
| PSOM | PSO with mutation |
| SA | Simulated annealing |
| SSA | Salp swarm algorithm |
| VM | Virtual machines |
| WOA | Whale optimization algorithm |

References

- Statista Inc. Public Cloud Services End-User Spending Worldwide from 2017 to 2023. 2022. Available online: https://www.statista.com/statistics/273818/global-revenue-generated-with-cloud-computing-since-2009/ (accessed on 1 August 2023).

- Statista Inc. Europe: Cloud Computing Market Size Forecast 2017–2030. 2022. Available online: https://www.statista.com/statistics/1260032/european-cloud-computing-market-size/ (accessed on 1 August 2023).

- Guo, J.; Chang, Z.; Wang, S.; Ding, H.; Feng, Y.; Mao, L.; Bao, Y. Who Limits the Resource Efficiency of My Datacenter: An Analysis of Alibaba Datacenter Traces. In Proceedings of the International Symposium on Quality of Service, New York, NY, USA, 24–25 June 2019; IWQoS ’19; Article ID: 39. pp. 1–10.

- Katal, A.; Dahiya, S.; Choudhury, T. Energy efficiency in cloud computing data centers: A survey on software technologies. Clust. Comput. 2023, 26, 1845–1875.

- Ghafari, R.; Kabutarkhani, F.H.; Mansouri, N. Task scheduling algorithms for energy optimization in cloud environment: A comprehensive review. Clust. Comput. 2022, 25, 1035–1093.

- Jamil, B.; Ijaz, H.; Shojafar, M.; Munir, K.; Buyya, R. Resource Allocation and Task Scheduling in Fog Computing and Internet of Everything Environments: A Taxonomy, Review, and Future Directions. ACM Comput. Surv. 2022, 54, 233.

- Du, J.; Leung, J.Y.T. Complexity of Scheduling Parallel Task Systems. SIAM J. Discret. Math. 1989, 2, 473–487.

- Durasević, M.; Jakobović, D. Heuristic and metaheuristic methods for the parallel unrelated machines scheduling problem: A survey. Artif. Intell. Rev. 2023, 56, 3181–3289.

- S., V.C.S.; S., A.H. Nature inspired meta heuristic algorithms for optimization problems. Computing 2022, 104, 251–269.

- Abualigah, L.; Elaziz, M.A.; Khasawneh, A.M.; Alshinwan, M.; Ibrahim, R.A.; Al-qaness, M.A.A.; Mirjalili, S.; Sumari, P.; Gandomi, A.H. Meta-heuristic optimization algorithms for solving real-world mechanical engineering design problems: A comprehensive survey, applications, comparative analysis, and results. Neural Comput. Appl. 2022, 34, 4081–4110.

- Dinachali, B.P.; Jabbehdari, S.; Javadi, H.H.S. A pricing approach for optimal use of computing resources in cloud federation. J. Supercomput. 2023, 79, 3055–3094.

- Jahangard, L.R.; Shirmarz, A. Taxonomy of green cloud computing techniques with environment quality improvement considering: A survey. Int. J. Energy Environ. Eng. 2022, 13, 1247–1269.

- Chi, H.R.; Wu, C.K.; Huang, N.F.; Tsang, K.F.; Radwan, A. A Survey of Network Automation for Industrial Internet-of-Things Towards Industry 5.0. IEEE Trans. Ind. Inform. 2023, 19, 2065–2077.

- Katoch, S.; Chauhan, S.S.; Kumar, V. A review on genetic algorithm: Past, present, and future. Multimed. Tools Appl. 2021, 80, 8091–8126.

- Houssein, E.H.; Gad, A.G.; Hussain, K.; Suganthan, P.N. Major Advances in Particle Swarm Optimization: Theory, Analysis, and Application. Swarm Evol. Comput. 2021, 63, 100868.

- Shao, K.; Song, Y.; Wang, B. PGA: A New Hybrid PSO and GA Method for Task Scheduling with Deadline Constraints in Distributed Computing. Mathematics 2023, 11, 1548.

- Nabi, S.; Ahmed, M. PSO-RDAL: Particle swarm optimization-based resource- and deadline-aware dynamic load balancer for deadline constrained cloud tasks. J. Supercomput. 2022, 78, 4624–4654.

- Nabi, S.; Ahmad, M.; Ibrahim, M.; Hamam, H. AdPSO: Adaptive PSO-Based Task Scheduling Approach for Cloud Computing. Sensors 2022, 22, 920.

- Pirozmand, P.; Hosseinabadi, A.A.R.; Farrokhzad, M.; Sadeghilalimi, M.; Mirkamali, S.; Slowik, A. Multi-objective hybrid genetic algorithm for task scheduling problem in cloud computing. Neural Comput. Appl. 2021, 33, 13075–13088.

- Pradhan, R.; Satapathy, S.C. Energy Aware Genetic Algorithm for Independent Task Scheduling in Heterogeneous Multi-Cloud Environment. J. Sci. Ind. Res. 2022, 81, 776–784.

- Malti, A.N.; Hakem, M.; Benmammar, B. Multi-objective task scheduling in cloud computing. Concurr. Comput. Pract. Exp. 2022, 34, e7252.

- Mangalampalli, S.; Swain, S.K.; Mangalampalli, V.K. Multi Objective Task Scheduling in Cloud Computing Using Cat Swarm Optimization Algorithm. Arab. J. Sci. Eng. 2022, 47, 1821–1830.

- Mangalampalli, S.; Swain, S.K.; Mangalampalli, V.K. Prioritized Energy Efficient Task Scheduling Algorithm in Cloud Computing Using Whale Optimization Algorithm. Wirel. Pers. Commun. 2022, 126, 2231–2247.

- Aghdashi, A.; Mirtaheri, S.L. Novel dynamic load balancing algorithm for cloud-based big data analytics. J. Supercomput. 2022, 78, 4131–4156.

- Belgacem, A.; Beghdad-Bey, K. Multi-objective workflow scheduling in cloud computing: Trade-off between makespan and cost. Clust. Comput. 2022, 25, 579–595.

- Aktan, M.N.; Bulut, H. Metaheuristic task scheduling algorithms for cloud computing environments. Concurr. Comput. Pract. Exp. 2022, 34, e6513.

- Pradeep, K.; Ali, L.J.; Gobalakrishnan, N.; Raman, C.J.; Manikandan, N. CWOA: Hybrid Approach for Task Scheduling in Cloud Environment. Comput. J. 2022, 65, 1860–1873.

- Pirozmand, P.; Javadpour, A.; Nazarian, H.; Pinto, P.; Mirkamali, S.; Ja’fari, F. GSAGA: A hybrid algorithm for task scheduling in cloud infrastructure. J. Supercomput. 2022, 78, 17423–17449.

- Jain, R.; Sharma, N. A quantum inspired hybrid SSA–GWO algorithm for SLA based task scheduling to improve QoS parameter in cloud computing. Clust. Comput. 2022, 1–24.

- Cheikh, S.; Walker, J.J. Solving Task Scheduling Problem in the Cloud Using a Hybrid Particle Swarm Optimization Approach. Int. J. Appl. Metaheuristic Comput. 2022, 13, 1–25.

- Hafsi, H.; Gharsellaoui, H.; Bouamama, S. Genetically-modified Multi-objective Particle Swarm Optimization approach for high-performance computing workflow scheduling. Appl. Soft Comput. 2022, 122, 108791.

- Nwogbaga, N.E.; Latip, R.; Affendey, L.S.; Rahiman, A.R.A. Attribute reduction based scheduling algorithm with enhanced hybrid genetic algorithm and particle swarm optimization for optimal device selection. J. Cloud Comput. 2022, 11, 15.

- Xian Wang, B.; Wu, P.; Arefzaeh, M. A new method for task scheduling in fog-based medical healthcare systems using a hybrid nature-inspired algorithm. Concurr. Comput. Pract. Exp. 2022, 34, e7155.

- MathWorks, I. Optimization Toolbox: Solve Linear, Quadratic, Conic, Integer, and Nonlinear Optimization Problems. 2022. Available online: https://ww2.mathworks.cn/en/products/optimization.html (accessed on 1 August 2023).

- Jong, K.A.D.; Spears, W.M. A formal analysis of the role of multi-point crossover in genetic algorithms. Ann. Math. Artif. Intell. 1992, 5, 1–26.

- Nabi, S.; Ahmed, M. OG-RADL: Overall performance-based resource-aware dynamic load-balancer for deadline constrained Cloud tasks. J. Supercomput. 2021, 77, 7476–7508.

- Hussain, A.A.; Al-Turjman, F. Hybrid Genetic Algorithm for IOMT-Cloud Task Scheduling. Wirel. Commun. Mob. Comput. 2022, 2022, 6604286.

- Wang, B.; Wang, C.; Huang, W.; Song, Y.; Qin, X. Security-aware task scheduling with deadline constraints on heterogeneous hybrid clouds. J. Parallel Distrib. Comput. 2021, 153, 15–28.

- Athmani, M.E.; Arbaoui, T.; Mimene, Y.; Yalaoui, F. Efficient Heuristics and Metaheuristics for the Unrelated Parallel Machine Scheduling Problem with Release Dates and Setup Times. In Proceedings of the Genetic and Evolutionary Computation Conference, Boston, MA, USA, 9–13 July 2022; GECCO ’22. pp. 177–185.

- Teraiya, J.; Shah, A. Optimized scheduling algorithm for soft Real-Time System using particle swarm optimization technique. Evol. Intell. 2022, 15, 1935–1945.

- Wang, B.; Cheng, J.; Cao, J.; Wang, C.; Huang, W. Integer particle swarm optimization based task scheduling for device-edge-cloud cooperative computing to improve SLA satisfaction. PeerJ Comput. Sci. 2022, 8, e893.

| #Core | | |
|---|---|---|
| 110 | 175 | |
| 125 | 210 | |
| 210 | 300 | |
| 350 | 500 | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shao, K.; Fu, H.; Wang, B. An Efficient Combination of Genetic Algorithm and Particle Swarm Optimization for Scheduling Data-Intensive Tasks in Heterogeneous Cloud Computing. Electronics 2023, 12, 3450. https://doi.org/10.3390/electronics12163450
