Enhancing Privacy-Preserving Intrusion Detection through Federated Learning

Abstract

1. Introduction

2. Background

2.1. Federated Learning for Anomaly Intrusion Detection System

- Privacy Preservation: Federated learning ensures privacy by allowing for the creation of a global IDS model without sharing the sensitive security logs of the individual branches or entities. By training on distributed data without transmitting the actual data, federated learning protects the privacy and confidentiality of sensitive information. This is particularly crucial in an IDS, where network traffic and security event data can be highly sensitive.

- Enhanced Security: Federated learning provides security benefits by keeping the data on local devices and minimizing data transfers to a central server. This approach reduces the risk of unauthorized access, interception, or the theft of data during transmission, which is a significant concern in IDS applications.

- Improved Efficiency: In large-scale IDS applications, traditional server-based approaches may require transferring substantial amounts of data to a central server for analysis, thereby leading to high communication costs. Federated learning minimizes data transfers by only sharing model updates, thus resulting in reduced communication costs. This efficiency gain is particularly valuable in scenarios with limited network bandwidth and significant data volumes.

- Scalability: Federated learning is highly scalable, thus making it suitable for IDS applications involving distributed data across multiple devices or locations. For instance, in smart city networks with numerous sensors monitoring traffic, federated learning can develop a global IDS model that analyzes data from all sensors while maintaining the data locally. It enables the effective analysis and detection of security events across the entire network.

- Collaborative Approach: Federated learning allows for collaboration among multiple entities without compromising data privacy and security. In an IDS, where different organizations or departments may need to work together, federated learning enables each entity to train its local model on its own data while contributing to a shared global model. This collaboration enhances the overall performance of the IDS by incorporating diverse data sources and perspectives.

- Real-Time Updates: With federated learning, local models can be retrained on each device as new data arrives, and their updates are sent to the central server at the end of each training round. This enables the IDS to adapt swiftly to changing network conditions and detect new threats in near real time.

- Improved Accuracy: Federated learning leverages a larger and more diverse dataset, thereby leading to enhanced accuracy and performance. By training the model on diverse data from different sources, the IDS becomes capable of detecting a wider range of threats, thus ultimately improving the overall accuracy.

- Reduced Training Time: Compared to traditional centralized approaches, federated learning significantly reduces the training time and resource requirements. By training the model on local devices, the IDS achieves improved efficiency and expedites the training process.

- Cost-Saving: Federated learning offers cost savings by utilizing existing devices on the network for model training. Organizations can leverage their infrastructure without the need for additional hardware or software investments, thus resulting in cost-effective IDS deployment and maintenance.

By leveraging these advantages, federated learning is a promising approach for developing advanced, privacy-preserving, efficient, and collaborative IDS solutions.

2.2. Federated Learning Architecture

2.3. Federated Learning Techniques

2.4. Aggregation in Federated Learning

1. Federated Averaging (FedAvg): FedAvg is a widely used aggregation method in FL. In FedAvg, the local model updates from clients, typically gradients or model weights, are averaged to obtain the global model update. The central server computes the average of the local updates and applies it to the global model. FedAvg is simple to implement and achieves good performance in many FL scenarios.

2. Weighted Federated Averaging: Weighted Federated Averaging is an extension of FedAvg that assigns different weights to different clients during the aggregation process. The weights can be based on various criteria, such as the number of samples or the performance of the clients’ local models. The central server computes the weighted average of the local model updates, taking the assigned weights into account. Weighted federated averaging allows for the differential treatment of clients based on their contributions or capabilities [8].

3. Federated Averaging with Momentum (FedAvgM): Federated averaging with momentum (FedAvgM) is an extension of federated averaging that incorporates momentum into the update process. In traditional gradient descent, momentum is used to accelerate the convergence of the optimization algorithm by taking past gradients into account [10]. Similarly, in FedAvgM, the past gradients from the participating devices are used to accelerate the convergence of the federated optimization algorithm. The update equations for FedAvgM are as follows:

$$m_t = \beta\, m_{t-1} + \eta\, g_t, \qquad \theta_t = \theta_{t-1} - m_t,$$

where $m_t$ is the momentum vector at time $t$, $\beta$ is the momentum coefficient, $\eta$ is the learning rate, $g_t$ is the average gradient of the participating devices at time $t$, and $\theta_t$ is the updated model at time $t$. FedAvgM has been shown to improve the convergence rate and final accuracy of federated learning, especially when the participating devices have heterogeneous data distributions. To provide a deeper understanding of FedAvgM, we define the following variables:

- $\theta_t$ = current global model parameters;

- $\theta_{t-1}$ = previous global model parameters;

- $\Delta\theta_i$ = local model update of device $i$;

- $m$ = the momentum term;

- $L_i$ = the local loss of device $i$.

- (a) Initialization: $\theta_0$ and $m$ are initialized by the central server.

- (b) Client update: Each client device $i$ updates its local model parameters $\theta_i$ by minimizing its local loss function $L_i$. The local model update for device $i$ is given by

$$\Delta\theta_i = \theta_i - \theta_t.$$

- (c) Server aggregation: The central server aggregates the local model updates from all client devices to obtain the current aggregated local model update, denoted $G$. The aggregation sums the local model updates across all client devices and divides by the total number of client devices ($N$) to obtain the average:

$$G = \frac{1}{N}\sum_{i=1}^{N} \Delta\theta_i.$$

- (d) Momentum update: The momentum term $m$ is updated using

$$m \leftarrow \beta\, m + (\theta_t - \theta_{t-1}) + G,$$

where $\beta$ is the momentum parameter. The formula combines the previous value of $m$, the difference between the current and previous global model parameters, and the aggregated local model update to calculate the new value of $m$.

4. Secure Aggregation: Secure aggregation is an aggregation method that focuses on ensuring privacy and security in FL. It typically involves using cryptographic techniques, such as secure multiparty computation (SMPC) or homomorphic encryption, to protect the privacy of local model updates during the aggregation process. Secure aggregation can provide strong privacy guarantees, but it may also introduce additional computational overhead and complexity compared to other aggregation methods.
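The FedAvg and weighted federated averaging rules in items 1 and 2 both reduce to a coordinate-wise mean of the client parameter vectors. The following is a minimal Python sketch, assuming each client's model has already been flattened into a list of floats; the function name `federated_average` and the use of sample counts as weights are illustrative assumptions, not the authors' implementation. In the original FedAvg formulation of McMahan et al. [22], each client is weighted by its share of the training samples, which corresponds to passing `client_sizes`.

```python
from typing import Optional, Sequence

Vector = list[float]


def federated_average(client_weights: Sequence[Vector],
                      client_sizes: Optional[Sequence[float]] = None) -> Vector:
    """Coordinate-wise (weighted) mean of flattened client parameter vectors.

    client_sizes=None gives every client the same weight (plain averaging);
    otherwise client k contributes client_sizes[k] / sum(client_sizes),
    e.g. its number of local training samples (weighted averaging).
    """
    if not client_weights:
        raise ValueError("need at least one client update")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all client vectors must have the same length")
    if client_sizes is None:
        client_sizes = [1.0] * len(client_weights)
    if len(client_sizes) != len(client_weights):
        raise ValueError("need exactly one weight per client")
    total = float(sum(client_sizes))
    if total <= 0:
        raise ValueError("client weights must sum to a positive value")
    return [
        sum(n * w[j] for n, w in zip(client_sizes, client_weights)) / total
        for j in range(dim)
    ]


if __name__ == "__main__":
    clients = [[1.0, 2.0], [3.0, 6.0]]
    print(federated_average(clients))          # [2.0, 4.0]
    print(federated_average(clients, [1, 3]))  # [2.5, 5.0]
```

Passing `client_sizes=[1, 3]` shifts the result towards the second client, which is exactly the differential treatment of clients that weighted federated averaging is meant to provide.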
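The momentum idea behind FedAvgM (item 3) can be illustrated with a server that keeps a momentum vector across rounds. This sketch assumes the common heavy-ball form $m_t = \beta m_{t-1} + \eta g_t$, $\theta_t = \theta_{t-1} - m_t$, with $g_t$ the mean of the client gradients; the class name `FedAvgMServer` and the default values of β and η are hypothetical choices, and the demo uses a toy quadratic loss rather than an IDS model.

```python
from typing import Sequence

Vector = list[float]


class FedAvgMServer:
    """Server-side federated averaging with momentum (heavy-ball form).

    Each round: g_t = mean of client gradients,
                m_t = beta * m_{t-1} + lr * g_t,
                theta_t = theta_{t-1} - m_t.
    """

    def __init__(self, theta0: Vector, beta: float = 0.9, lr: float = 0.1):
        self.theta = list(theta0)        # global model parameters
        self.m = [0.0] * len(theta0)     # momentum vector, starts at zero
        self.beta = beta                 # momentum coefficient
        self.lr = lr                     # server learning rate

    def step(self, client_grads: Sequence[Vector]) -> Vector:
        n = len(client_grads)
        dim = len(self.theta)
        # g_t: coordinate-wise average of the gradients sent by the clients
        g = [sum(grad[j] for grad in client_grads) / n for j in range(dim)]
        # m_t keeps an exponentially decaying memory of past averaged gradients
        self.m = [self.beta * mj + self.lr * gj for mj, gj in zip(self.m, g)]
        # theta_t moves the global model against the momentum direction
        self.theta = [tj - mj for tj, mj in zip(self.theta, self.m)]
        return self.theta


if __name__ == "__main__":
    # Toy setting: client i has loss 0.5 * (theta - c_i)**2, gradient theta - c_i.
    centers = [1.0, 2.0, 3.0]
    server = FedAvgMServer([0.0])
    for _ in range(300):
        server.step([[server.theta[0] - c] for c in centers])
    print(server.theta)  # close to [2.0], the minimiser of the average loss
```

Because each toy client's loss is $\frac{1}{2}(\theta - c_i)^2$, the average loss is minimized at the mean of the $c_i$, so the global parameter approaches 2.0. With β = 0 the class reduces to plain averaged gradient descent, which makes it easy to compare the two update rules.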
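One common way to realize the secure aggregation described in item 4 without homomorphic encryption is pairwise additive masking: every pair of clients shares a random mask that one client adds and the other subtracts, so the server can recover only the sum of the updates. The sketch below is a simplified, hypothetical illustration under strong assumptions: the pairwise seeds are assumed to have been agreed on beforehand (practical protocols derive them with a key-agreement step), updates are assumed to be quantized to non-negative integers, and client dropouts are not handled.

```python
import random
from itertools import combinations

MODULUS = 2**32  # all arithmetic is done modulo 2**32


def pairwise_mask(seed: int, dim: int) -> list[int]:
    """Both clients of a pair derive the same mask from their shared seed."""
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(dim)]


def mask_update(client_id: int, update: list[int],
                pair_seeds: dict[tuple[int, int], int]) -> list[int]:
    """Add (first id of a pair) or subtract (second id) one mask per peer."""
    masked = [x % MODULUS for x in update]
    for (i, j), seed in pair_seeds.items():
        if client_id not in (i, j):
            continue
        sign = 1 if client_id == i else -1  # i adds, j subtracts, so the pair cancels
        mask = pairwise_mask(seed, len(update))
        masked = [(x + sign * r) % MODULUS for x, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: list[list[int]]) -> list[int]:
    """Server side: summing the masked vectors cancels every pairwise mask."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]


if __name__ == "__main__":
    updates = {0: [5, 1, 7], 1: [2, 2, 2], 2: [10, 0, 3]}  # quantised updates
    # Assumption: each pair (i, j) with i < j already shares a secret seed.
    seed_source = random.SystemRandom()
    seeds = {pair: seed_source.randrange(2**63)
             for pair in combinations(sorted(updates), 2)}
    masked = [mask_update(cid, u, seeds) for cid, u in updates.items()]
    print(aggregate(masked))  # [17, 3, 12]
```

The server sees only masked vectors, yet `aggregate` returns `[17, 3, 12]`, the exact sum of the three updates, because each pairwise mask is added once and subtracted once modulo `MODULUS`.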

2.5. Data Selection

1. Random selection: Clients are randomly chosen from the available participating clients in each round. This approach gives all clients an equal chance of being selected and is simple to implement. However, it does not take into account the heterogeneity of the clients’ data or their suitability for the task at hand. Because a different mix of clients contributes in each round, random selection also helps prevent the global model from overfitting to the data of a few clients.

2. Stratified selection: Clients are selected in a stratified manner, taking into account their data characteristics, such as data distribution or data size. For example, clients with larger data sizes or more representative data distributions may be given a higher probability of selection. This approach aims to ensure that the selected clients are representative of the overall data distribution and can contribute more meaningfully to the global model.

3. Proactive selection: Clients are proactively chosen based on certain criteria, such as their data quality, model performance, or resource availability. For example, clients with higher data quality or better computational resources may be given a higher priority for selection. This approach aims to prioritize clients who are likely to provide higher-quality updates to the global model, potentially leading to faster convergence or better performance.

4. Dynamic selection: Clients are dynamically selected based on their recent performance or contribution to the global model. For example, clients that have recently provided better updates or have been less frequently selected in previous rounds may be given a higher probability of selection. This approach aims to adaptively adjust the client selection based on their recent performance, which can potentially improve the overall performance of the federated learning process.
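The four client-selection strategies above can be sketched as small functions over per-client metadata. Everything here is a hypothetical illustration: the `ClientInfo` fields, the scoring rules, and the staleness weight are assumptions, and stratified selection is approximated by the size-proportional sampling given as the example in item 2 (weighted sampling without replacement using the Efraimidis-Spirakis key method).

```python
import random
from dataclasses import dataclass


@dataclass
class ClientInfo:
    """Hypothetical metadata the server is assumed to hold for each client."""
    cid: str
    num_samples: int            # local dataset size (must be > 0)
    quality: float              # e.g. data-quality or resource score
    last_gain: float            # recent contribution to the global model
    rounds_since_selected: int  # how long the client has been idle


def random_selection(clients, k, rng):
    """Uniform sampling without replacement: every client is equally likely."""
    return rng.sample(clients, k)


def size_weighted_selection(clients, k, rng):
    """Sample k clients without replacement, favouring larger datasets.

    Efraimidis-Spirakis: draw u ~ U[0, 1) per client, use key u ** (1 / n),
    and keep the k largest keys.
    """
    keyed = [(rng.random() ** (1.0 / c.num_samples), c) for c in clients]
    keyed.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in keyed[:k]]


def proactive_selection(clients, k):
    """Deterministically keep the k clients with the highest quality score."""
    return sorted(clients, key=lambda c: c.quality, reverse=True)[:k]


def dynamic_selection(clients, k, staleness_weight=0.1):
    """Rank by recent contribution plus a bonus for rounds spent unselected."""
    def priority(c):
        return c.last_gain + staleness_weight * c.rounds_since_selected
    return sorted(clients, key=priority, reverse=True)[:k]


if __name__ == "__main__":
    rng = random.Random(0)
    pool = [ClientInfo("a", 100, 0.9, 0.1, 0),
            ClientInfo("b", 10, 0.5, 0.3, 5),
            ClientInfo("c", 1000, 0.7, 0.0, 1)]
    print([c.cid for c in proactive_selection(pool, 2)])  # ['a', 'c']
    print([c.cid for c in dynamic_selection(pool, 1)])    # ['b']
```

The functions differ only in how they rank clients: `random_selection` ignores metadata, `size_weighted_selection` favours larger datasets stochastically, and `proactive_selection` and `dynamic_selection` are deterministic top-k rules over a quality score and a recency-adjusted contribution score, respectively.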

3. Related Work

4. Preserving Privacy in Federated Learning for Intrusion Detection

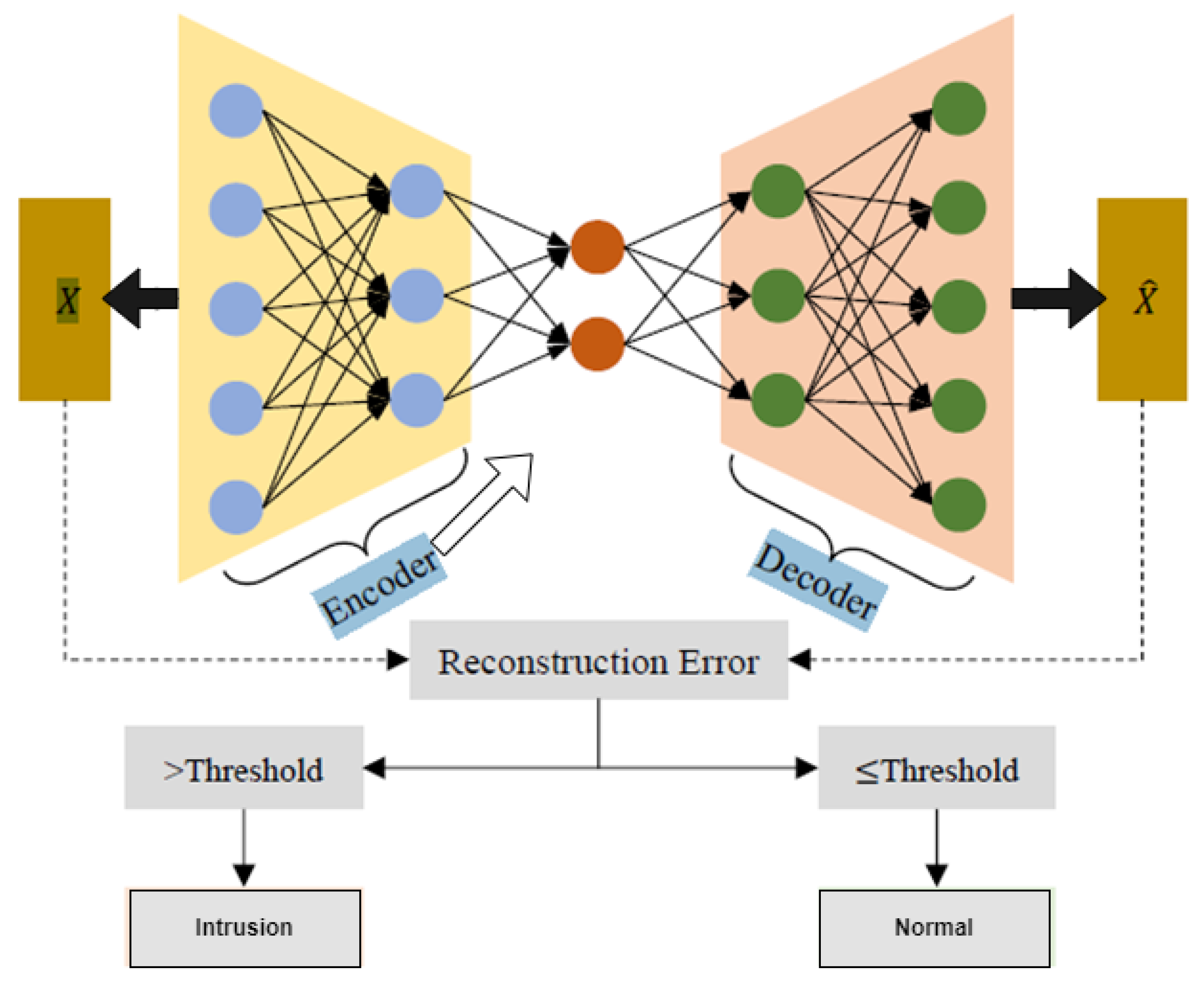

Anomaly Detection with Autoencoder Model

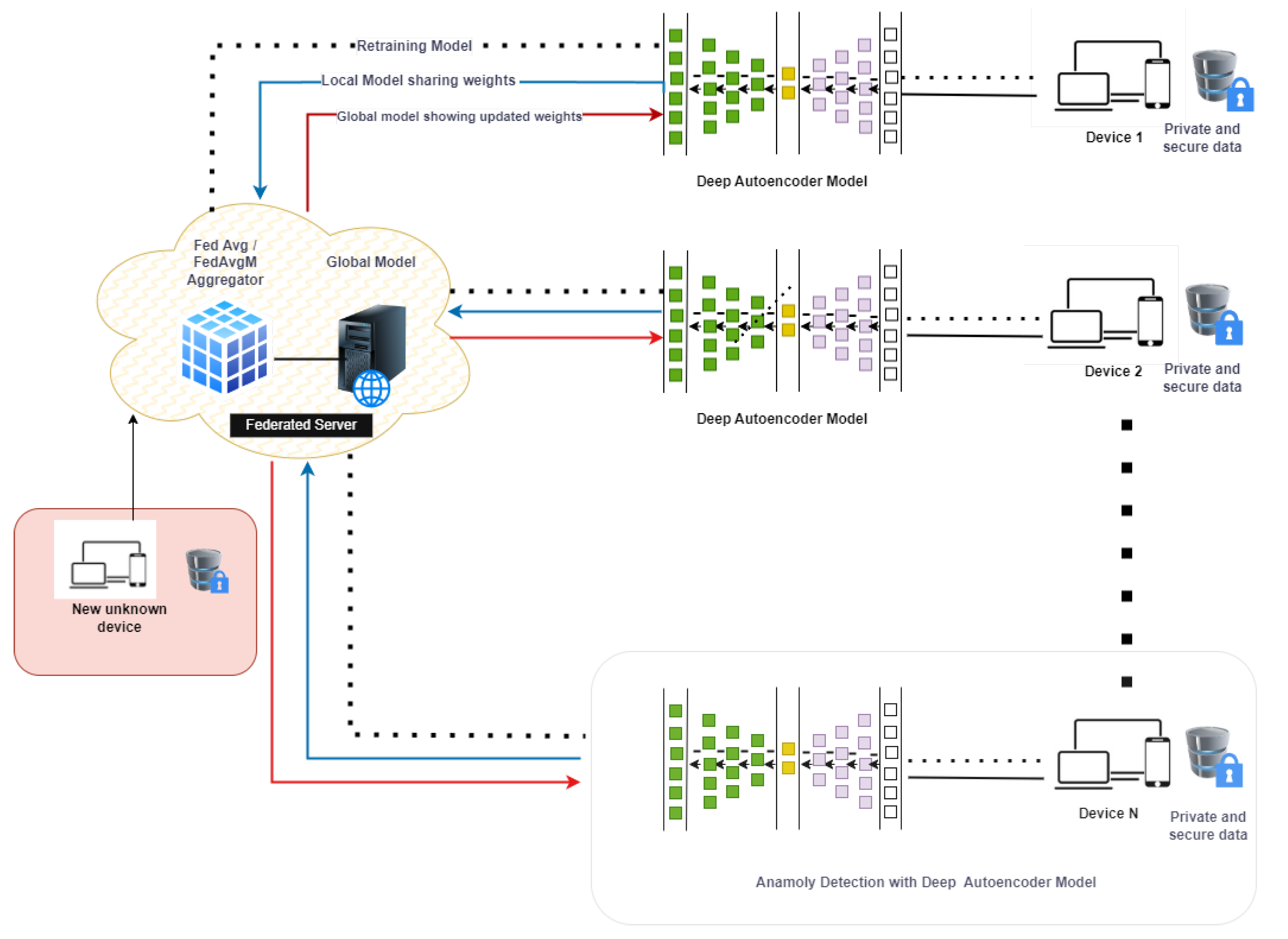

FELIDS (Federated-Learning-Based Intrusion Detection System)

1. The server initializes the global model parameters.

2. Each client independently trains a local model on its own local data, following the FL training procedure.

3. The clients securely send their local model updates to the server through the secure gRPC channel.

4. The server aggregates the received local model updates, typically using the federated averaging (FedAvg) algorithm.

5. The server updates the global model parameters using the aggregated model updates.

6. Steps 2–5 are repeated for the specified number of FL rounds (R), allowing the models to iteratively improve and converge towards a better intrusion detection model.

7. After the FL process is complete, the final global model is deployed for intrusion detection across the system.
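The seven steps above can be simulated end to end in a few lines. This is a hedged sketch, not the FELIDS implementation: it assumes a linear model trained with full-batch gradient descent, sample-count-weighted FedAvg at the server, and in-process function calls in place of the secure gRPC channel; `local_train` and `run_federated_training` are hypothetical names.

```python
Vector = list[float]
Dataset = list[tuple[Vector, float]]  # (features, target) pairs held by one client


def local_train(w: Vector, data: Dataset, epochs: int, lr: float) -> Vector:
    """Step 2: full-batch gradient descent on half the mean squared error."""
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def run_federated_training(clients: list[Dataset], dim: int,
                           rounds: int, epochs: int, lr: float) -> Vector:
    w_global = [0.0] * dim                   # Step 1: server initialises the model
    total = sum(len(d) for d in clients)
    for _ in range(rounds):                  # Step 6: repeat for R rounds
        # Steps 2-3: clients train locally and return only their weights
        # (the secure gRPC transport is replaced by a plain function call here).
        local = [local_train(w_global, d, epochs, lr) for d in clients]
        # Steps 4-5: FedAvg, weighting each client by its number of samples
        w_global = [sum(len(d) * w[j] for d, w in zip(clients, local)) / total
                    for j in range(dim)]
    return w_global                          # Step 7: final model to deploy


if __name__ == "__main__":
    # Toy clients whose targets all follow y = 2*x1 - x2.
    clients = [
        [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0)],
        [([1.0, 1.0], 1.0), ([1.0, 0.0], 2.0)],
        [([0.0, 1.0], -1.0), ([1.0, -1.0], 3.0)],
    ]
    print(run_federated_training(clients, dim=2, rounds=100, epochs=5, lr=0.1))
```

Each toy client's data is generated from y = 2x₁ − x₂, so the global weights approach [2.0, −1.0] even though the server only ever receives weight vectors, never raw samples.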

Algorithm 1: Start Server algorithm

- D: The entire dataset used for training the machine learning model.

- B: The number of clients or subsets into which the dataset is divided during training.

- E: The number of local training epochs for each client during each round of FedAvg.

- Preprocessed dataset: The dataset obtained after applying the preprocess function to D.

- w: The model parameters (weights and biases) shared between the clients and the server.

- b: The local dataset batch on each client, obtained by splitting the preprocessed dataset into B subsets.

- η: The learning rate, which controls the step size of the model parameter updates.

- ∇ℓ(w; b): The gradient of the loss function ℓ with respect to w, computed on the local batch b.
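The notation above (D, B, E, w, η and ∇ℓ(w; b)) maps onto a short client-side training routine. The following sketch assumes a binary intrusion label (1 = attack, 0 = normal), min-max scaling as the `preprocess` step, logistic loss for ℓ, and an extra `batch_size` parameter for the mini-batches b; these are illustrative assumptions rather than the paper's choices, and all function names are hypothetical.

```python
import math
import random

Vector = list[float]
Sample = tuple[Vector, int]  # (features, label): 1 = attack, 0 = normal


def preprocess(D: list[Sample]) -> list[Sample]:
    """Hypothetical preprocessing step: min-max scale each feature to [0, 1]."""
    dim = len(D[0][0])
    lows = [min(x[j] for x, _ in D) for j in range(dim)]
    highs = [max(x[j] for x, _ in D) for j in range(dim)]
    spans = [h - lo if h > lo else 1.0 for lo, h in zip(lows, highs)]
    return [([(x[j] - lows[j]) / spans[j] for j in range(dim)], y) for x, y in D]


def split(D_pre: list[Sample], B: int, rng: random.Random) -> list[list[Sample]]:
    """Shuffle the preprocessed data and deal it round-robin into B client subsets."""
    shuffled = list(D_pre)
    rng.shuffle(shuffled)
    return [shuffled[k::B] for k in range(B)]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def grad_loss(w: Vector, b: list[Sample]) -> Vector:
    """Gradient of the mean logistic loss on batch b; w[-1] is the bias."""
    g = [0.0] * len(w)
    for x, y in b:
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])
        for j, xj in enumerate(x):
            g[j] += (p - y) * xj / len(b)
        g[-1] += (p - y) / len(b)
    return g


def client_update(w: Vector, local_data: list[Sample], E: int, eta: float,
                  batch_size: int, rng: random.Random) -> Vector:
    """E local epochs of mini-batch SGD: w <- w - eta * grad_loss(w, b)."""
    w = list(w)
    for _ in range(E):
        order = list(local_data)
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            b = order[start:start + batch_size]
            w = [wj - eta * gj for wj, gj in zip(w, grad_loss(w, b))]
    return w


if __name__ == "__main__":
    rng = random.Random(0)
    D = [([float(v)], int(v > 5)) for v in range(11)]  # toy one-feature data
    shards = split(preprocess(D), B=2, rng=rng)
    w = client_update([0.0, 0.0], shards[0], E=20, eta=0.5, batch_size=2, rng=rng)
    print(w)  # [feature weight, bias] after local training on shard 0
```

In a full round, the server would call `split` once, send w to the B clients, and average the vectors returned by `client_update`.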

Algorithm 2: Client algorithm

5. Experiments and Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Khraisat, A.; Alazab, A. A critical review of intrusion detection systems in the internet of things: Techniques, deployment strategy, validation strategy, attacks, public datasets and challenges. Cybersecurity 2021, 4, 18.

2. Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20.

3. Alazab, A.; Khraisat, A.; Singh, S. A Review on the Internet of Things (IoT) Forensics: Challenges, Techniques, and Evaluation of Digital Forensic Tools. In Digital Forensics-Challenges and New Frontiers; Reilly, D.D., Ed.; IntechOpen: Rijeka, Croatia, 2023; Chapter 10.

4. Alazab, A.; Khraisat, A.; Alazab, M.; Singh, S. Detection of obfuscated malicious JavaScript code. Future Internet 2022, 14, 217.

5. Agrawal, S.; Sarkar, S.; Aouedi, O.; Yenduri, G.; Piamrat, K.; Alazab, M.; Bhattacharya, S.; Maddikunta, P.K.R.; Gadekallu, T.R. Federated learning for intrusion detection system: Concepts, challenges and future directions. Comput. Commun. 2022, 195, 346–361.

6. Victor, N.; Alazab, M.; Bhattacharya, S.; Magnusson, S.; Maddikunta, P.K.R.; Ramana, K.; Gadekallu, T.R. Federated learning for iout: Concepts, applications, challenges and opportunities. arXiv 2022, arXiv:2207.13976.

7. Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

8. Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J.; Alazab, A. Hybrid Intrusion Detection System Based on the Stacking Ensemble of C5 Decision Tree Classifier and One Class Support Vector Machine. Electronics 2020, 9, 173.

9. Ghimire, B.; Rawat, D.B. Recent advances on federated learning for cybersecurity and cybersecurity for federated learning for internet of things. IEEE Internet Things J. 2022, 9, 8229–8249.

10. Sun, T.; Li, D.; Wang, B. Decentralized federated averaging. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4289–4301.

11. Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

12. Fereidooni, H.; Marchal, S.; Miettinen, M.; Mirhoseini, A.; Möllering, H.; Nguyen, T.D.; Rieger, P.; Sadeghi, A.R.; Schneider, T.; Yalame, H.; et al. SAFELearn: Secure aggregation for private federated learning. In Proceedings of the 2021 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 27 May 2021; IEEE: Piscataway, NJ, USA; pp. 56–62.

13. Liu, Y.; Kang, Y.; Xing, C.; Chen, T.; Yang, Q. A secure federated transfer learning framework. IEEE Intell. Syst. 2020, 35, 70–82.

14. Hu, L.; Yan, H.; Li, L.; Pan, Z.; Liu, X.; Zhang, Z. MHAT: An efficient model-heterogenous aggregation training scheme for federated learning. Inf. Sci. 2021, 560, 493–503.

15. Elahi, F.; Fazlali, M.; Malazi, H.T.; Elahi, M. Parallel fractional stochastic gradient descent with adaptive learning for recommender systems. IEEE Trans. Parallel Distrib. Syst. 2022, 1–14.

16. So, J.; He, C.; Yang, C.S.; Li, S.; Yu, Q.; Ali, R.E.; Guler, B.; Avestimehr, S. Lightsecagg: A lightweight and versatile design for secure aggregation in federated learning. Proc. Mach. Learn. Syst. 2022, 4, 694–720.

17. Xing, H.; Xiao, Z.; Qu, R.; Zhu, Z.; Zhao, B. An efficient federated distillation learning system for multitask time series classification. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

18. Friha, O.; Ferrag, M.A.; Shu, L.; Maglaras, L.; Choo, K.K.R.; Nafaa, M. FELIDS: Federated learning-based intrusion detection system for agricultural Internet of Things. J. Parallel Distrib. Comput. 2022, 165, 17–31.

19. Attota, D.C.; Mothukuri, V.; Parizi, R.M.; Pouriyeh, S. An ensemble multi-view federated learning intrusion detection for IoT. IEEE Access 2021, 9, 117734–117745.

20. Rahman, S.A.; Tout, H.; Talhi, C.; Mourad, A. Internet of things intrusion detection: Centralized, on-device, or federated learning? IEEE Netw. 2020, 34, 310–317.

21. Nguyen, T.D.; Marchal, S.; Miettinen, M.; Fereidooni, H.; Asokan, N.; Sadeghi, A.R. DÏoT: A federated self-learning anomaly detection system for IoT. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 756–767.

22. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Ft. Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

23. Alazab, A.; Khraisat, A.; Singh, S.; Bevinakoppa, S.; Mahdi, O.A. Routing Attacks Detection in 6LoWPAN-Based Internet of Things. Electronics 2023, 12, 1320.

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Federated Averaging [10] | Central server aggregates model updates from multiple clients and sends updated model back to clients. | Efficient, scales well to a large number of clients, and preserves the privacy of client data. | Slow convergence due to communication bottleneck; potential bias towards more frequently updated clients. |
| Federated Learning with Differential Privacy [11] | Adds noise to model updates to protect client privacy. | Provides strong privacy guarantees; allows for more diverse client participation. | Introduces noise to model updates, which may reduce model accuracy. |
| Federated Learning with Secure Aggregation [12] | Utilizes secure multiparty computation to aggregate model updates without revealing client data. | Provides strong privacy guarantees; preserves client data privacy, even in case of compromised server. | Computationally expensive; may require specialized hardware. |
| Federated Transfer Learning [13] | Clients transfer knowledge learned from their local data to a shared model. | Enables learning across domains and improves model generalization. | Requires similar data distributions across clients, which may lead to bias if clients have vastly different data. |
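The differential privacy row of the table can be made concrete with the clip-and-noise step commonly used in differentially private federated learning: each update is scaled down to a maximum L2 norm, and Gaussian noise proportional to that bound is added. This is a minimal sketch with hypothetical parameter names; it does not compute the resulting (ε, δ) guarantee, which depends on the noise level, client sampling, and number of rounds and requires a privacy accountant, and the exact mechanism analysed in [11] may differ.

```python
import math
import random


def privatize_update(update: list[float], clip_norm: float,
                     noise_multiplier: float, rng: random.Random) -> list[float]:
    """Clip the update to L2 norm <= clip_norm, then add Gaussian noise.

    The noise standard deviation is noise_multiplier * clip_norm, so the noise
    scale is tied to the maximum influence a single client's update can have.
    """
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [u * factor for u in update]
    sigma = noise_multiplier * clip_norm
    return [c + rng.gauss(0.0, sigma) for c in clipped]


if __name__ == "__main__":
    rng = random.Random(0)
    # No noise: only clipping, approximately [0.6, 0.8]
    print(privatize_update([3.0, 4.0], clip_norm=1.0, noise_multiplier=0.0, rng=rng))
    # Clipping plus Gaussian noise with standard deviation 1.1
    print(privatize_update([3.0, 4.0], clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Setting `noise_multiplier=0` reduces the function to pure norm clipping, which makes it easy to separate the accuracy cost of clipping from that of the added noise, the trade-off listed under Disadvantages.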

| Aggregation Method | Description | Use Cases |
|---|---|---|
| Federated Averaging [14] | Average of the model parameters from all participating devices is taken as the updated model | Image classification, speech recognition, natural language processing |
| Federated Stochastic Gradient Descent (FSGD) [15] | Aggregation of stochastic gradients from all participating devices to update the global model | Healthcare, finance, edge computing |
| Federated Learning with Secure Aggregation (FSA) [16] | Encrypted or masked model updates are transferred from participating devices to a central server, where the aggregation is performed with the help of secure multiparty computation (MPC) | Privacy-sensitive applications such as healthcare and finance |
| Federated Distillation [17] | Model compression technique where a smaller, more lightweight model is trained on the global model using knowledge distillation | Mobile devices and Internet of Things (IoT) devices |

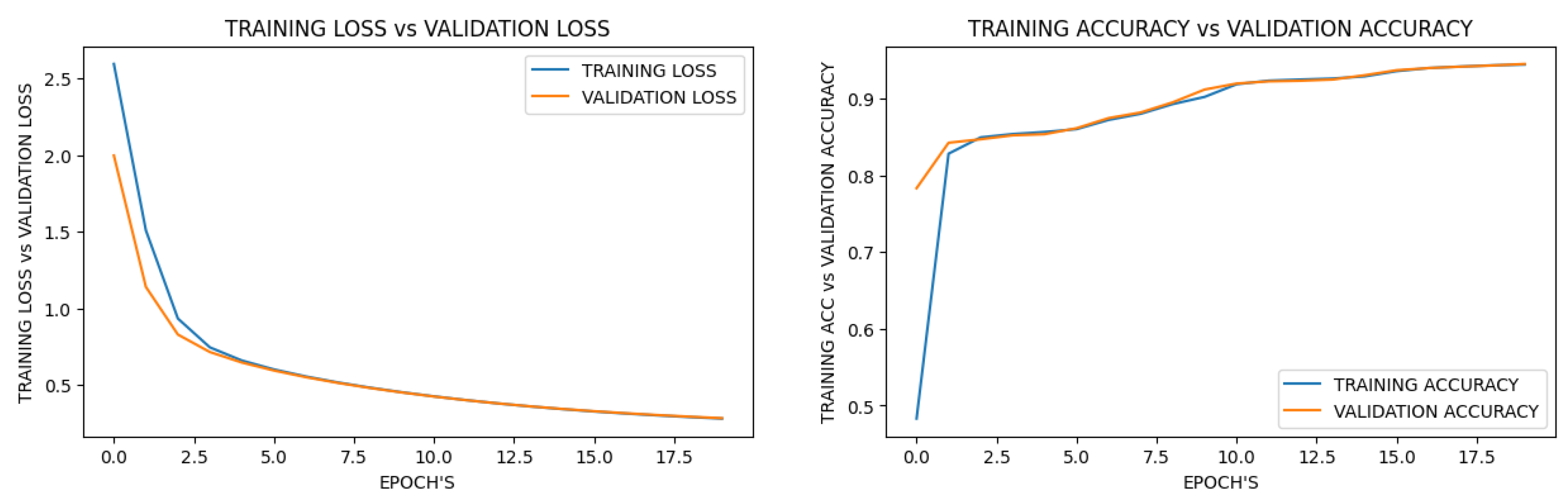

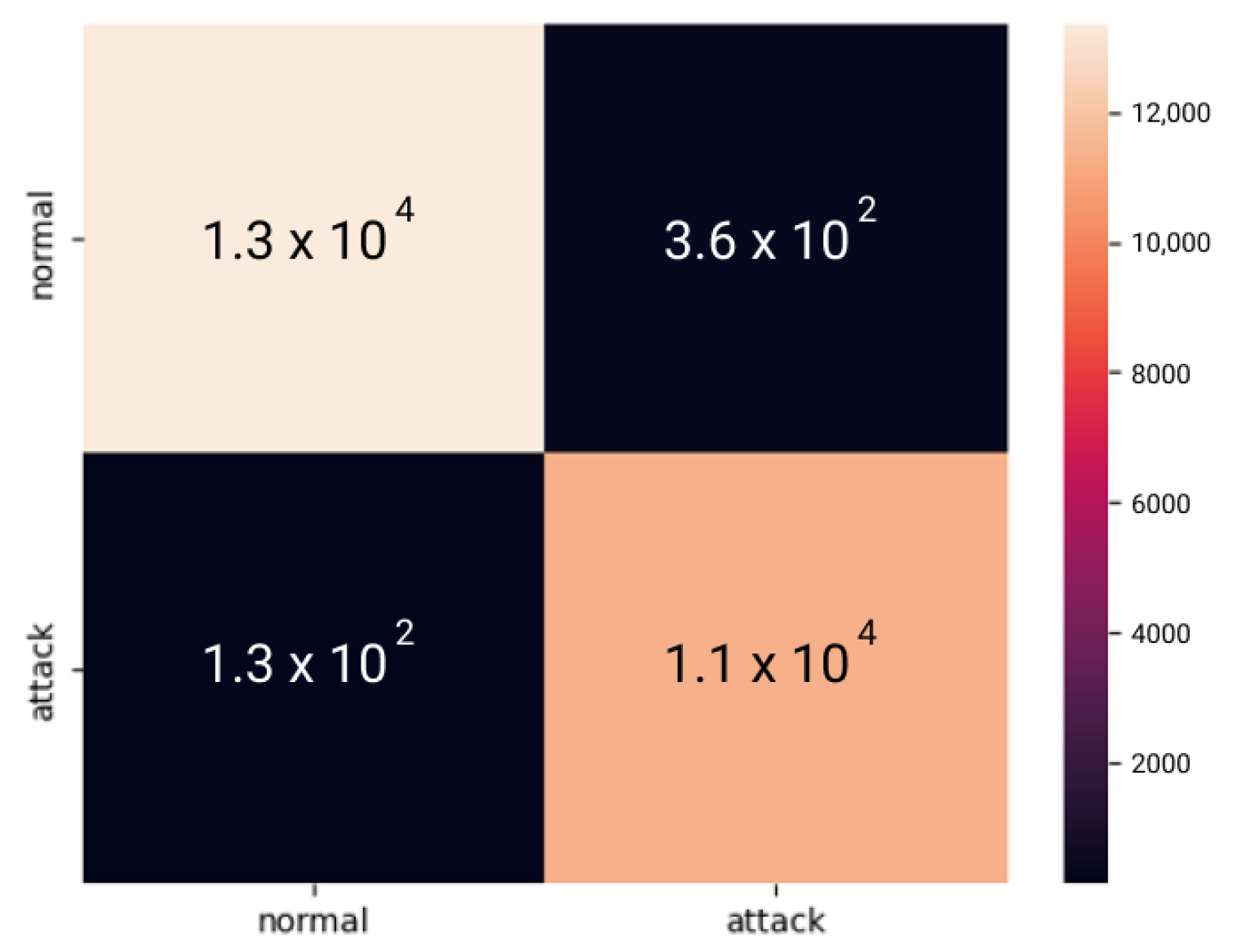

| Round (FL) / Epoch (DL) | Deep Learning Accuracy (%) | Deep Learning Loss | Federated Learning Accuracy (%) | Federated Learning Loss |
|---|---|---|---|---|
| 1st | 78.33 | 1.9984 | 94.40 | 0.5441 |
| 5th | 85.37 | 0.6459 | 96.54 | 18.1923 |
| 10th | 91.19 | 0.4505 | 97.15 | 38.5893 |
| 20th | 94.53 | 0.2824 | 97.77 | 58.3108 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alazab, A.; Khraisat, A.; Singh, S.; Jan, T. Enhancing Privacy-Preserving Intrusion Detection through Federated Learning. Electronics 2023, 12, 3382. https://doi.org/10.3390/electronics12163382
