Abstract

Detecting anomalies, intrusions, and security threats in network traffic, including Internet of Things (IoT) traffic, requires processing large volumes of sensitive data, which raises privacy and security concerns. Federated learning, a distributed machine learning approach, enables multiple parties to collaboratively train a shared model while keeping data decentralized and private. In a federated learning environment, instead of training and evaluating the model on a single machine, each client trains a local model that has the same structure as the others but is fitted to a different local dataset. These local models are then communicated to an aggregation server, which employs federated averaging to aggregate them and produce an optimized global model. This approach offers significant benefits for developing efficient and effective intrusion detection system (IDS) solutions. In this research, we investigated the effectiveness of federated learning for IDSs and compared it with that of traditional deep learning models. Our findings demonstrate that federated learning with random client selection achieved higher accuracy and lower loss than traditional deep learning, particularly in scenarios emphasizing data privacy and security. Our experiments highlight the capability of federated learning to create global models without sharing sensitive data, thereby mitigating the risks associated with data breaches or leakage. The results suggest that federated averaging in federated learning has the potential to revolutionize the development of IDS solutions, making them more secure, efficient, and effective.

1. Introduction

In recent years, machine learning (ML) has emerged as a powerful tool for detecting intrusions in computer networks. However, the effectiveness of traditional machine learning approaches relies heavily on the availability of large and diverse datasets, which can be challenging to obtain in practice [1,2]. This challenge is further exacerbated by the distributed and dynamic nature of modern computer networks, which generate vast amounts of data in real time and in heterogeneous formats [3]. Additionally, the centralized nature of traditional machine learning approaches raises significant concerns regarding data privacy and security, especially in sensitive domains such as healthcare, finance, and national security. The risk of data breaches, data leakage, and unauthorized access to sensitive information can undermine the trust in and adoption of machine learning solutions in these domains, thereby limiting their potential impact and effectiveness [4].

To overcome these challenges, federated learning has emerged as a promising approach for developing effective and efficient intrusion detection systems (IDSs). Federated learning allows multiple parties or clients to collaboratively train a shared model while keeping their data decentralized and private [5]. Instead of sending data to a central server for training, each client trains the model locally on its own data and shares only model updates with a central aggregator or server. The server aggregates the model updates from multiple clients and updates the global model, which is then sent back to clients for further iterations. This process is repeated iteratively to refine the global model without sharing the raw data across clients [6]. Such a model is considered useful in distributed computing or IoT network computing. Federated learning offers several advantages over traditional machine learning approaches for IDSs. First, it enables the development of more accurate and robust models by leveraging the diversity of data across multiple clients. Second, it allows for the creation of global models without compromising the privacy and security of sensitive data. Third, it can reduce the communication costs and computational resources required for training large-scale machine learning models. Fourth, it can improve the scalability and efficiency of IDS solutions by enabling the participation of many clients with varying degrees of data heterogeneity [6].

In our research study, we aimed to evaluate the effectiveness of federated learning in developing intrusion detection systems (IDSs) using the NSL-KDD dataset, which is a popular dataset for network intrusion detection [7,8]. To achieve this, we implemented a horizontal federated learning architecture with an average aggregation and random selection of clients to participate in each round of training. We compared the performance of our federated learning model with a traditional deep learning model that was trained on a centralized dataset. Our experimental results showed that the federated learning approach outperformed the traditional deep learning approach in terms of accuracy and loss. This improvement was particularly noticeable in scenarios where data privacy and security posed critical concerns, as federated learning allowed us to develop a robust IDS solution while preserving the privacy of the individual client data.

Our findings demonstrate that federated learning can be a highly effective approach for developing IDS solutions in real-world scenarios where data privacy and security are major concerns. Specifically, the federated learning model achieved an accuracy of 98.067% and a lower loss than the traditional deep learning model. Higher accuracy and lower loss indicate that the federated learning approach is more effective at detecting intrusions in computer networks. This can lead to more accurate and reliable intrusion detection systems, which in turn can help prevent security breaches and protect sensitive data in domains such as healthcare, finance, and national security. Moreover, the use of a horizontal federated learning architecture with average aggregation and random client selection can further enhance the effectiveness and efficiency of the federated learning approach for IDSs. This architecture exposes the global model to a diverse set of training data spread across the clients, which helps prevent overfitting and improves the generalizability of the model. The average aggregation method helps mitigate the heterogeneity of the local datasets so that the global model reflects the data of all participating clients. Additionally, the random selection of clients gives every client an equal opportunity to participate in the training process and contribute to the final model.

The remainder of this paper is organized as follows. In the next section, we provide a brief overview of federated learning and its benefits for IDSs. Next, we present related work. Then, we describe the research methodology used in this study. Finally, we present the expected outcomes and significance of this research.

2. Background

2.1. Federated Learning for Anomaly Intrusion Detection System

Federated learning offers numerous advantages for developing effective and efficient intrusion detection system (IDS) solutions [9]. The following are the key benefits that make federated learning a promising approach for IDS solutions:

- Privacy Preservation: Federated learning ensures privacy by allowing for the creation of a global IDS model without sharing the sensitive security logs of the individual branches or entities. By training on distributed data without transmitting the actual data, federated learning protects the privacy and confidentiality of sensitive information. This is particularly crucial in an IDS, where network traffic and security event data can be highly sensitive.

- Enhanced Security: Federated learning provides security benefits by keeping the data on local devices and minimizing data transfers to a central server. This approach reduces the risk of unauthorized access, interception, or the theft of data during transmission, which is a significant concern in IDS applications.

- Improved Efficiency: In large-scale IDS applications, traditional server-based approaches may require transferring substantial amounts of data to a central server for analysis, thereby leading to high communication costs. Federated learning minimizes data transfers by only sharing model updates, thus resulting in reduced communication costs. This efficiency gain is particularly valuable in scenarios with limited network bandwidth and significant data volumes.

- Scalability: Federated learning is highly scalable, thus making it suitable for IDS applications involving distributed data across multiple devices or locations. For instance, in smart city networks with numerous sensors monitoring traffic, federated learning can develop a global IDS model that analyzes data from all sensors while maintaining the data locally. It enables the effective analysis and detection of security events across the entire network.

- Collaborative Approach: Federated learning allows for collaboration among multiple entities without compromising data privacy and security. In an IDS, where different organizations or departments may need to work together, federated learning enables each entity to train its local model on its own data while contributing to a shared global model. This collaboration enhances the overall performance of the IDS by incorporating diverse data sources and perspectives.

- Real-Time Updates: With federated learning, local models are trained in real time on each device, and updates are sent to the central server immediately. This enables the IDS to adapt swiftly to changing network conditions and detect new threats in real time.

- Improved Accuracy: Federated learning leverages a larger and more diverse dataset, thereby leading to enhanced accuracy and performance. By training the model on diverse data from different sources, the IDS becomes capable of detecting a wider range of threats, thus ultimately improving the overall accuracy.

- Reduced Training Time: Compared to traditional centralized approaches, federated learning significantly reduces the training time and resource requirements. By training the model on local devices, the IDS achieves improved efficiency and expedites the training process.

- Cost-Saving: Federated learning offers cost savings by utilizing existing devices on the network for model training. Organizations can leverage their infrastructure without the need for additional hardware or software investments, thus resulting in cost-effective IDS deployment and maintenance.

By leveraging these advantages, federated learning proves to be a promising approach for developing advanced, privacy-preserving, efficient, and collaborative IDS solutions.

2.2. Federated Learning Architecture

Federated learning architecture encompasses two distinct approaches: horizontal federated learning and vertical federated learning, which both enable collaborative model training without compromising data privacy.

Horizontal federated learning involves multiple clients, such as different organizations or network segments, that possess the same set of features but have different examples of those features. For instance, various organizations may have network traffic logs with the same features but from their respective networks. In this architecture, clients collaborate to train a shared intrusion detection model without directly sharing their raw traffic data. This process typically entails clients training local models on their respective traffic logs and exchanging model updates with a central server. The server aggregates these updates and distributes the updated model back to the clients. Horizontal federated learning enhances intrusion detection accuracy across multiple networks while respecting data privacy.

Vertical federated learning, on the other hand, involves multiple clients, such as different sensors or network monitoring tools, with each possessing different sets of features but sharing the same set of examples. For instance, these clients may have diverse data sources, such as network traffic logs, system logs, or sensor data, but they all pertain to the same network or system. The clients collaborate to train a shared intrusion detection model by combining their respective features without exposing their raw data to each other. The process involves clients training local models on their specific data sources and exchanging model updates with one another. By leveraging these updates, the clients collectively train a shared model that incorporates diverse sources of data while still preserving the privacy of each data source. Vertical federated learning thus enhances intrusion detection accuracy by incorporating a broader range of data while maintaining data source privacy.
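The difference between the two architectures comes down to how one logical data table is partitioned among clients. The following is a minimal sketch, assuming a toy table of six traffic records with four features; the array `X`, the client counts, and the variable names are illustrative assumptions and are not taken from the experiments in this paper.

```python
import numpy as np

# Toy traffic table: 6 flows (rows) x 4 features (columns). Values are arbitrary.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))

# Horizontal FL: clients share the feature space but hold different examples (rows).
horizontal_parts = np.array_split(X, 3, axis=0)

# Vertical FL: clients hold the same examples but different feature subsets (columns).
vertical_parts = np.array_split(X, 2, axis=1)

horizontal_shapes = [p.shape for p in horizontal_parts]  # [(2, 4), (2, 4), (2, 4)]
vertical_shapes = [p.shape for p in vertical_parts]      # [(6, 2), (6, 2)]
```

In the horizontal case every client can train the same model structure on its own rows, which is the setting used in the rest of this paper; in the vertical case no single client sees a complete feature vector.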

2.3. Federated Learning Techniques

Federated learning techniques have emerged as powerful approaches for training machine learning models collaboratively while preserving data privacy and security. These techniques enable organizations to harness the collective intelligence of decentralized devices without compromising the confidentiality of sensitive data. The four key techniques within federated learning (federated averaging, federated learning with differential privacy, federated learning with secure aggregation, and federated transfer learning) are compared in Table 1.

Table 1.

Comparison of federated learning techniques.

2.4. Aggregation in Federated Learning

The aggregation methods in federated learning are summarized and compared in Table 2.

Table 2.

Aggregation methods in federated learning.

The aggregation process typically occurs at a central server or aggregator that is responsible for receiving local model updates from clients and combining them to generate a global model. There are several common methods for aggregation in FL:

1. Federated Averaging (FedAvg): FedAvg is a widely used aggregation method in FL. In FedAvg, the local model updates from clients, typically in the form of gradients or model weights, are averaged to obtain the global model update. The central server computes the average of the local updates and applies it to the global model. FedAvg is simple to implement and can achieve good performance in many FL scenarios.

2. Weighted Federated Averaging: Weighted Federated Averaging is an extension of FedAvg that assigns different weights to different clients during the aggregation process. The weights can be based on various criteria, such as the number of samples or the performance of the clients’ local models. The central server computes the weighted average of the local model updates, thereby taking into account the assigned weights. Weighted federated averaging allows for the differential treatment of clients based on their contributions or capabilities [8].

3. Federated Averaging with Momentum (FedAvgM): Federated averaging with momentum (FedAvgM) is an extension of federated averaging that incorporates momentum into the update process. In traditional gradient descent, momentum is used to accelerate the convergence of the optimization algorithm by taking into account the past gradients [10]. Similarly, in FedAvgM, the past gradients from the participating devices are used to accelerate the convergence of the federated optimization algorithm. The updating equation for FedAvgM is as follows:

   $$v_t = \beta v_{t-1} + \eta g_t, \qquad w_t = w_{t-1} - v_t,$$

   where $v_t$ is the momentum vector at time $t$, $\beta$ is the momentum coefficient, $\eta$ is the learning rate, $g_t$ is the average gradient of the participating devices at time $t$, and $w_t$ is the updated model at time $t$.

   FedAvgM has been shown to improve the convergence rate and final accuracy of the federated learning algorithm, especially in scenarios where the participating devices have a heterogeneous data distribution. To provide a deeper understanding of FedAvgM, we defined the following variables:

   - $w_t$ = the current global model parameters;

   - $w_{t-1}$ = the previous global model parameters;

   - $\Delta w_i$ = the local model update of device $i$;

   - $m$ = the momentum term;

   - $L_i$ = the local loss.

   (a) Initialization: $w_0$ and $m$ are initialized by the central server.

   (b) Client Update: Each client device $i$ updates its local model parameters $w_i$ by minimizing its local loss function $L_i$. The local model update for device $i$ is given by

   $$\Delta w_i = w_i - w_t.$$

   (c) Server Aggregation: The central server aggregates the local model updates from all the devices to obtain the current aggregated local model update, which is denoted as $G$. The aggregation is performed by summing up all the local model updates across all client devices and dividing by the total number of client devices ($N$) to obtain the average:

   $$G = \frac{1}{N} \sum_{i=1}^{N} \Delta w_i.$$

   (d) Momentum Update: The momentum term $m$ is updated with momentum parameter $\beta$. The update combines the previous value of $m$, the difference between the current and previous global model parameters ($w_t - w_{t-1}$), and the aggregated local model update $G$ to calculate the new value of $m$.

4. Secure Aggregation: Secure aggregation is an aggregation method that focuses on ensuring privacy and security in FL. It typically involves using cryptographic techniques, such as secure multiparty computation (SMPC) or homomorphic encryption, to protect the privacy of local model updates during the aggregation process. Secure aggregation can provide strong privacy guarantees, but it may also introduce additional computational overhead and complexity compared to other aggregation methods.
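The first three aggregation rules described above can be illustrated with a short NumPy sketch. It is a simplified illustration under stated assumptions, not the implementation used in our experiments: model parameters are flat vectors, the function and class names (`fedavg`, `weighted_fedavg`, `FedAvgMServer`) are hypothetical, and the momentum variant uses one common server-side formulation, v ← βv + ηg followed by w ← w − v, where g is the gap between the current global model and the clients' average.

```python
import numpy as np

def fedavg(updates):
    """Plain FedAvg: unweighted mean of the client parameter vectors."""
    return np.mean(np.stack(updates), axis=0)

def weighted_fedavg(updates, weights):
    """Weighted FedAvg: weights (e.g., sample counts) are normalized to sum to 1."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return np.tensordot(w, np.stack(updates), axes=1)

class FedAvgMServer:
    """Server-side momentum applied to the averaged client result (assumed formulation)."""

    def __init__(self, w0, beta=0.9, lr=1.0):
        self.w = np.asarray(w0, dtype=float)
        self.v = np.zeros_like(self.w)
        self.beta, self.lr = beta, lr

    def step(self, client_weights):
        # Pseudo-gradient: distance from the current global model to the client average.
        g = self.w - fedavg(client_weights)
        self.v = self.beta * self.v + self.lr * g
        self.w = self.w - self.v
        return self.w

clients = [np.array([1.0, 2.0]), np.array([3.0, 4.0])]
plain = fedavg(clients)                        # array([2., 3.])
weighted = weighted_fedavg(clients, [1, 3])    # array([2.5, 3.5])
```

With `beta=0.0` and `lr=1.0`, one `step` of `FedAvgMServer` reduces to plain FedAvg; a non-zero `beta` carries part of the previous update into the next round.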

2.5. Data Selection

Client data selection in federated learning (FL) refers to the process of determining which clients, among the available participating clients in a federated learning system, are selected to contribute their data for model training in each round or iteration of the FL process. Client data selection is an important aspect of FL, as it can significantly impact the performance, convergence, and privacy of the trained global model.

In FL, multiple clients (e.g., mobile devices and edge servers) each hold their local data, and the global model is trained by aggregating the local updates from these clients. The clients are typically heterogeneous in terms of their data distribution, data size, and data quality, which can result in variations in their local model updates. Therefore, the selection of the clients for model training can affect the representativeness of the global model and its ability to generalize well to unseen data. There are several common strategies for client data selection in FL:

1. Random selection: Clients are randomly chosen from the available participating clients in each round. This approach gives all clients an equal chance of being selected and is simple to implement. However, it does not take into account the heterogeneity of the clients’ data or their suitability for the task at hand. Over many rounds, random selection also exposes the global model to a diverse set of client data, which helps to prevent overfitting.

2. Stratified selection: Clients are selected in a stratified manner, taking into account their data characteristics, such as data distribution or data size. For example, clients with larger data sizes or more representative data distributions may be given a higher probability of selection. This approach aims to ensure that the selected clients are representative of the overall data distribution and can contribute more meaningfully to the global model.

3. Proactive selection: Clients are proactively chosen based on certain criteria, such as their data quality, model performance, or resource availability. For example, clients with higher data quality or better computational resources may be given a higher priority for selection. This approach aims to prioritize clients who are likely to provide higher-quality updates to the global model, thereby potentially leading to faster convergence or better performance.

4. Dynamic selection: Clients are dynamically selected based on their recent performance or contribution to the global model. For example, clients that have recently provided better updates or have been less frequently selected in previous rounds may be given a higher probability of selection. This approach adaptively adjusts the client selection based on recent performance, which can potentially improve the overall performance of the federated learning process.

The choice of client data selection strategy in FL depends on various factors, such as the specific requirements of the task, the characteristics of the available clients, the privacy and security constraints, and the system architecture. The selection strategy should be carefully designed to ensure fairness, representation, and efficiency in the federated learning process while respecting privacy and security concerns.
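As an illustration of the first two strategies, the sketch below samples clients uniformly at random and, alternatively, with probability proportional to local dataset size. The function names, the rounding rule for the number of selected clients, and the toy sample counts are assumptions made for this example; the size-weighted variant is only one simple way to realize the idea behind stratified selection.

```python
import numpy as np

def random_selection(num_clients, fraction, rng):
    """Uniformly sample max(1, round(fraction * num_clients)) distinct client indices."""
    m = max(1, int(round(fraction * num_clients)))
    return rng.choice(num_clients, size=m, replace=False)

def size_weighted_selection(sample_counts, fraction, rng):
    """Sample distinct clients with probability proportional to local dataset size."""
    counts = np.asarray(sample_counts, dtype=float)
    m = max(1, int(round(fraction * len(counts))))
    return rng.choice(len(counts), size=m, replace=False, p=counts / counts.sum())

rng = np.random.default_rng(42)
uniform_pick = random_selection(num_clients=10, fraction=0.3, rng=rng)
weighted_pick = size_weighted_selection([100, 100, 5000, 100, 100], fraction=0.4, rng=rng)
```

Uniform sampling treats all clients alike, whereas the size-weighted variant favors the client holding 5000 records; which behavior is preferable depends on the fairness and representativeness requirements discussed above.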

3. Related Work

Federated learning has gained significant attention in the field of intrusion detection as a privacy-preserving approach that allows multiple entities to collaboratively train models without sharing sensitive data. In this section, we provide a survey of the related works on intrusion detection using federated learning, thus highlighting the contributions and advancements made by researchers in this domain.

Friha et al. [18] proposed FELIDS, a federated learning-based intrusion detection system designed to secure agricultural-IoT infrastructures. The system focuses on protecting data privacy by employing local learning, in which devices share model updates with an aggregation server to improve the detection model. To enhance the security of the agricultural IoT, the FELIDS system utilizes three deep learning classifiers: deep neural networks, convolutional neural networks, and recurrent neural networks. The performance of the proposed intrusion detection system was evaluated using three distinct datasets: CSE-CIC-IDS2018, MQTTset, and InSDN. The experimental results revealed that FELIDS outperformed traditional centralized machine learning methods by demonstrating superior accuracy in detecting attacks while ensuring the privacy of IoT device data.

Attota et al. [19] proposed MV-FLID, a multiview federated-learning-based intrusion detection approach for IoT networks. The authors addressed the limitations of traditional intrusion detection methods and highlighted the need for more insightful and privacy-preserving approaches. MV-FLID leverages multiview learning and federated learning techniques to improve attack detection and classification while preserving data privacy. The evaluation results demonstrated that MV-FLID achieved a higher accuracy compared to traditional methods. Although the authors claimed that MV-FLID preserved data privacy through federated learning, this paper did not delve into the privacy mechanisms employed, nor did it address potential vulnerabilities. A more thorough discussion of privacy-preserving techniques, including encryption methods or differential privacy, would enhance the credibility of the proposed approach in terms of protecting sensitive IoT data.

Rahman et al. [20] introduced an intrusion detection system (IDS) for IoT networks based on federated learning (FL). They performed three different experiments, namely, centralized, device-based, and federated learning, using the NSL-KDD dataset. The results of their experimental evaluation demonstrated that the FL-based IDS achieved an accuracy of approximately 83.09%, which closely matched the performance of the centralized model.

Nguyen et al. [21] proposed an intrusion detection system (IDS) for the IoT that utilizes federated learning (FL) and incorporates an automated technique that is tailored to specific device types. When tested in a physical implementation involving devices infected with the Mirai malware, this work demonstrated an average attack detection rate of 95.6% with a response time of 257 ms. Additionally, the system generated minimal false alarms. However, it is important to note that the proposed model was designed solely to detect attacks targeting IoT devices. Other potential threats that may affect components elsewhere in the ecosystem, including advanced networking technologies such as SDN and services such as FTP and SSH, were not taken into consideration.

4. Preserving Privacy in Federated Learning for Intrusion Detection

Anomaly Detection with Autoencoder Model

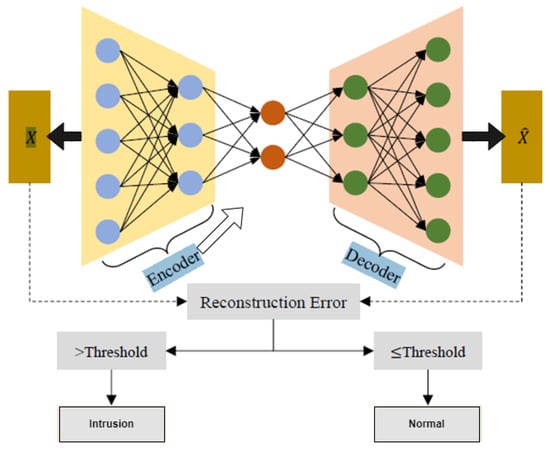

An autoencoder was used to build an intrusion detection system. Autoencoders are a type of neural network that can be leveraged for anomaly detection in intrusion detection systems. In this approach, autoencoders are trained exclusively on normal network traffic data. During the training phase, the autoencoder learned to encode the normal traffic patterns into a compressed representation using an encoder network. It then decoded this representation back into the original input data using a decoder network. The training objective was to minimize the reconstruction error, which measures the difference between the input data and the reconstructed output.

In the anomaly detection phase, the trained autoencoder was applied to new, unseen network traffic instances. By calculating the reconstruction error between the input and the reconstructed output, the system could assess the similarity between the observed traffic and the learned normal patterns. Instances with higher reconstruction errors were classified as anomalies, thereby indicating potential intrusions or attacks. To differentiate between normal and anomalous instances, a threshold was set. Instances with reconstruction errors above the threshold were flagged as anomalies, whereas those below the threshold were considered normal.

The autoencoder consists of an encoder and a decoder network. The encoder maps the input data $X$ to a lower-dimensional representation $Z$ through the encoding function $h$ such that $Z = h(X)$, and the decoder maps the lower-dimensional representation $Z$ to the output data $Y$ through the decoding function $g$ such that $Y = g(Z)$. The goal of the autoencoder is to learn a compressed representation of the input data that captures its essential features while minimizing the difference between the input data and the reconstructed data, i.e., to minimize the reconstruction error

$$L(X, Y) = \lVert X - Y \rVert^2,$$

where $X$ is the input data, and $Y$ is the reconstructed data.

The training process involves minimizing the reconstruction error between the input data and the reconstructed data, which can be achieved by optimizing the parameters of the encoder and decoder networks using gradient descent or other optimization algorithms. Once the autoencoder is trained, it can be used for various tasks such as encoding input data to obtain lower-dimensional representations, generating new data samples from the learned representations, or using the encoder as a feature extraction module for downstream tasks. In the context of anomaly detection, the trained autoencoder was used to reconstruct new, unseen network traffic data, and the reconstruction error was compared to a predefined threshold. If the reconstruction error was above the threshold, the network traffic was classified as an anomaly. This approach is particularly useful for detecting novel or unknown anomalies, as the autoencoder can detect any deviations from the normal patterns it has learned during training. Figure 1 presents a visual representation of the approach used for detecting anomalies with an autoencoder.

Figure 1.

Visualizing anomaly detection with autoencoders.
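The thresholding step described above can be sketched in a few lines. This is an illustrative example rather than the model used in our experiments: a linear autoencoder fitted with a singular value decomposition stands in for the deep autoencoder, the data are synthetic, and the 99th-percentile threshold rule and all variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "normal" traffic: 8 features that lie near a 2-D subspace, plus small noise.
basis = rng.normal(size=(2, 8))
normal_train = rng.normal(size=(500, 2)) @ basis + 0.05 * rng.normal(size=(500, 8))
normal_test = rng.normal(size=(100, 2)) @ basis + 0.05 * rng.normal(size=(100, 8))
attack_test = 3.0 * rng.normal(size=(100, 8))  # does not follow the normal subspace

# Linear autoencoder fitted with SVD (a stand-in for the deep autoencoder).
mean = normal_train.mean(axis=0)
_, _, vt = np.linalg.svd(normal_train - mean, full_matrices=False)
components = vt[:2]  # rows span the learned 2-D code space

def encode(x):
    return (x - mean) @ components.T   # Z = h(X)

def decode(z):
    return z @ components + mean       # Y = g(Z)

def reconstruction_error(x):
    """Per-record mean squared difference between input and reconstruction."""
    return np.mean((x - decode(encode(x))) ** 2, axis=1)

# Threshold: 99th percentile of the error on normal training traffic (an assumed rule).
threshold = np.percentile(reconstruction_error(normal_train), 99)

false_alarm_rate = np.mean(reconstruction_error(normal_test) > threshold)
detection_rate = np.mean(reconstruction_error(attack_test) > threshold)
```

Because the model is fitted on normal traffic only, records that do not follow the learned structure reconstruct poorly and fall above the threshold. Lowering the percentile trades more false alarms for fewer missed attacks.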

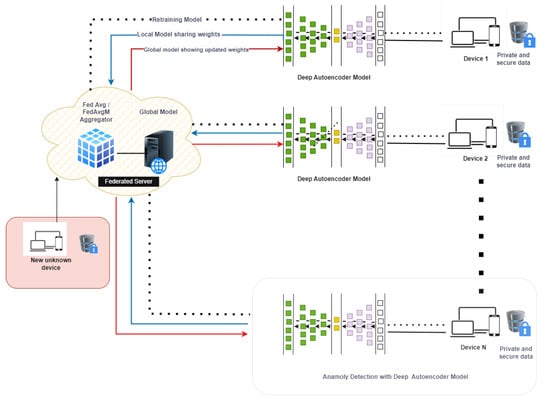

FELIDS (Federated-Learning-Based Intrusion Detection System)

One way to implement federated averaging is through the horizontal architecture of federated machine learning, as shown in Figure 2. In this architecture, the data are distributed across N devices, and each device trains its local model using only the data it has access to. A deep autoencoder can be used as the local model for each client to learn a compressed representation of the client’s data. Once the local model has been trained on the client device, the model parameters are sent to the federated server, which aggregates the parameters from all client devices using a federated averaging algorithm. This allows the server to update the global model with the combined knowledge from all the clients while preserving the privacy of each client’s data.

Figure 2.

Horizontal architecture intrusion detection system.

To initiate the federated learning (FL) process in the federated-learning-based intrusion detection system (FELIDS), the FELIDS server begins by selecting a fraction C of K edge nodes, referred to as FELIDS clients, to participate in the computation for a specified number of FL rounds, denoted as R. The goal is to collaboratively train a robust intrusion detection model.

To ensure secure and private data exchange between the clients and the server, a secure gRPC (Google remote procedure call) channel is established. This channel has built-in SSL/TLS (Secure Sockets Layer/Transport Layer Security) support, which enables client and server authentication as well as encryption of all communication between them. The secure channel protects the confidentiality and integrity of the data exchanged during the FL process. Once all the selected clients are connected through the secure gRPC channel, the FL process follows the procedure outlined in Figure 2 and in Algorithms 1 and 2, which are adapted from the FedAvg algorithm [22].

The FL process works as follows:

1. The server initializes the global model parameters.

2. Each client independently trains a local model on its own local data, following the FL training procedure.

3. The clients send their local model updates to the server through the secure gRPC channel.

4. The server aggregates the received local model updates, typically by using the federated averaging (FedAvg) algorithm.

5. The server updates the global model parameters using the aggregated model updates.

6. Steps 2–5 are repeated for the specified number of FL rounds (R), allowing the model to iteratively improve and converge towards a better intrusion detection model.

7. After the FL process is completed, the final global model is deployed for intrusion detection across the system.

Once the clients are connected, the algorithm performs FedAvg, which involves selecting a subset of clients and sending them the current model parameters. Each selected client then trains the model locally using its private data and returns the updated model parameters to the server. The server aggregates the received parameters from all clients, computes the new global model, and sends it back to all the clients. This process is repeated for a fixed number of communication rounds.

Finally, the algorithm releases the clients and is terminated. The aim of this approach is to improve the accuracy of the machine learning model while maintaining privacy by keeping the training data at the client side without having to share it with the server.

Algorithm 1. Start Server algorithm.

Algorithm 1 is a high-level description of “StartServer”, which initializes the federated learning process. The algorithm takes three parameters: K (the total number of FELIDS clients), C (the fraction of clients chosen to participate in each round), and R (the number of federated learning rounds to perform). The main loop of the algorithm runs until all K clients have connected to the server. Inside this loop, the “FedAvg” algorithm is utilized, which performs R rounds of federated learning. In each round, a random subset of clients is chosen to participate, and each selected client trains its local model starting from the current global model. The updated local models are then aggregated to create a new global model. After all the rounds are completed, the clients are released from the process. The following presents a legend for the algorithm:

- D: The entire dataset used for training the machine learning model.

- B: The number of clients or subsets into which the dataset is divided during training.

- E: The number of local training epochs for each client during each round of FedAvg.

- : The preprocessed dataset obtained after applying the preprocess function to D.

- w: The model parameters (weights and biases) shared between clients and the server.

- : The local dataset batch on each client obtained by splitting into B subsets.

- η: The learning rate, which controls the step size of the model parameter updates.

- ∇ℓ(w; b): The gradient of the loss function with respect to w computed on the local batch b.

Algorithm 2. Client algorithm.

Algorithm 2 is the FELIDS client algorithm for federated averaging (FedAvg), which is used in the federated-learning-based intrusion detection system (FELIDS). This algorithm is executed by each FELIDS client k to train the global model using its private data. Initially, the client downloads the generic model from the FELIDS server. Then, the clients train the generic model locally and in parallel, each on its own private data, and each generates a new local set of weights. Each client has a preprocessed dataset that is split into local minibatches of size B, and the client computes the new weights by updating the old ones using the learning rate and the average gradient over each minibatch. Once the updated parameters have been computed, the client sends them to the FELIDS server. In contrast to centralized learning, FELIDS clients share only the updated model parameters, which were trained on the local data. The FELIDS server aggregates the updated parameters from the different FELIDS clients and creates a new global model by applying the average update. Finally, the FELIDS server forwards the updated global model parameters to all FELIDS clients for further enhancement using their new local data.
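The client-side update can be illustrated with a short sketch. The code below is an assumption-laden stand-in rather than the FELIDS implementation: it uses a logistic regression model in place of the deep model, takes B as the minibatch size as described in the paragraph above, and the function name `client_update` and its arguments are introduced here only for illustration. Each step applies the rule w ← w − η · (average gradient over the minibatch).

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def client_update(w, X, y, batch_size, epochs, lr, seed=0):
    """Run E epochs of minibatch SGD starting from the global weights w.

    Returns the new local weights; the input w is left unchanged.
    Logistic regression is used here as a simple stand-in model.
    """
    rng = np.random.default_rng(seed)
    w = w.copy()
    n = X.shape[0]
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the local data each epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            preds = sigmoid(X[idx] @ w)
            # Mean gradient of the log loss over the minibatch.
            grad = X[idx].T @ (preds - y[idx]) / len(idx)
            w -= lr * grad
    return w
```

Only the returned weight vector would be sent to the server; the arrays `X` and `y` never leave the client.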

5. Experiments and Results

The NSL-KDD dataset was preprocessed, split into training and test sets, and used to train both the deep learning and the federated learning models. In the federated learning setup, a secure communication protocol was established between the central server and the clients to protect data privacy and security; local model updates were aggregated into a global model, which was evaluated on the test dataset. The NSL-KDD dataset contained 125,973 records in the training set and 22,544 records in the test set. The training set was further split into training and validation subsets using a 4:1 ratio [7].
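To make the 4:1 split concrete, the following sketch computes the resulting subset sizes for the 125,973 training records. The function name `train_val_split`, the random shuffling, and the choice to round the validation share down are assumptions for illustration; the source does not state how the split was implemented.

```python
import numpy as np


def train_val_split(n_records, train_parts=4, val_parts=1, seed=0):
    """Shuffle record indices and split them by a train:validation ratio."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_records)
    # Integer division rounds the validation share down (an assumption).
    n_val = n_records * val_parts // (train_parts + val_parts)
    return order[n_val:], order[:n_val]


train_idx, val_idx = train_val_split(125_973)
print(len(train_idx), len(val_idx))
```

Under these assumptions, the split yields 100,779 training records and 25,194 validation records.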

The federated learning setup entailed the following: We set up a secure communication protocol between the central server and the clients, including data encryption and authentication mechanisms, to ensure the privacy and security of the data. We defined the global model architecture and hyperparameters to be used in the federated learning process.

The local model update entailed the following: Each client trained its local model using its local dataset. We selected a suitable machine learning algorithm and trained the local model on each client’s dataset. The local model parameters, represented by , were updated for each client.

The global model aggregation entailed the following: We aggregated the local model updates using federated averaging or a customized aggregation function to obtain the global model M. Following training, we evaluated the model’s accuracy using the test dataset.

We evaluated the performance of the global model M using the test dataset from the NSL-KDD dataset, through which we measured its accuracy and other intrusion detection metrics.

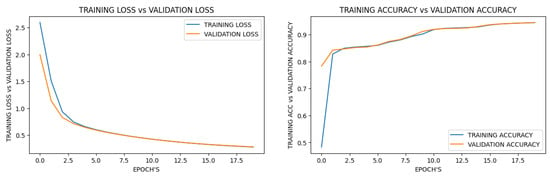

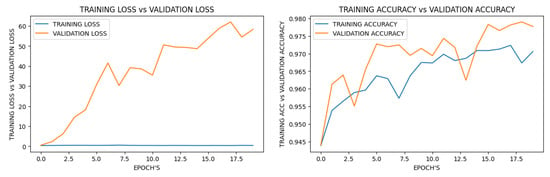

Table 3 compares deep learning and federated learning in terms of their accuracy and loss values. The table has two columns, one for deep learning and one for federated learning, and each row represents a specific epoch (deep learning) or round (federated learning) of the training process. Overall, federated learning achieved a higher accuracy than deep learning at each of the reported rounds, but from the fifth round onward this higher accuracy was accompanied by much higher loss values. These findings can be influenced by the choice of dataset and model architecture, so the results may vary depending on these factors. In the first row, the initial accuracy was 78.33% for deep learning and 94.40% for federated learning, and the initial loss was 1.9984 and 0.5441, respectively.

Table 3.

Testing results for deep learning and federated learning.

As the training progressed, the accuracy of both models improved and the loss of the deep learning model decreased, whereas the loss of the federated learning model increased. At the fifth round or epoch, federated learning reached an accuracy of 96.54% with a loss of 18.1923, compared with an accuracy of 85.37% and a loss of 0.6459 for deep learning.

At the tenth round or epoch, federated learning remained ahead in accuracy (97.15% versus 91.19% for deep learning) but had a much higher loss (38.5893 versus 0.4505). Finally, at the twentieth round or epoch, federated learning achieved its highest accuracy of 97.77% with a loss of 58.3108, while deep learning achieved an accuracy of 94.53% and a loss of 0.2824.

Overall, the table shows that federated learning can achieve a higher accuracy than deep learning throughout training, although this comes at the cost of a higher loss in later rounds. However, it is worth noting that the results may vary depending on the specific dataset and model architecture used.

Figure 3 and Figure 4 demonstrate that the federated learning approach achieved acceptable accuracy and loss even in the first round, whereas the deep learning method required up to 15 epochs to achieve comparable performance. Additionally, the results indicate that, on a per-round or per-epoch basis, the amount of available data was the same for both approaches, yet federated learning outperformed deep learning. However, as the number of rounds increased, the validation loss in federated learning also increased, thus indicating that overfitting occurred earlier in the federated learning approach.

Figure 3.

Deep learning results.

Figure 4.

Federated learning results.

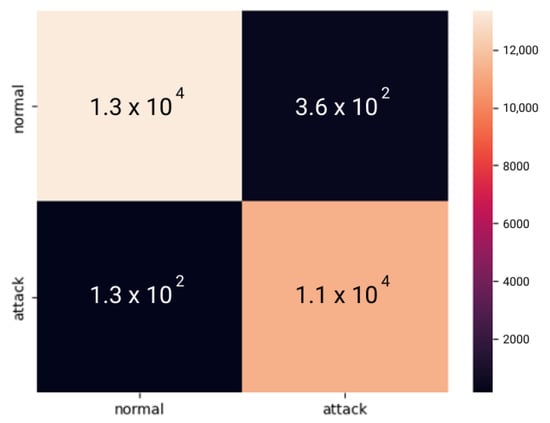

Figure 5 shows the federated learning confusion matrix. It provides a clear breakdown of the true positives, true negatives, false positives, and false negatives, from which metrics such as accuracy, precision, recall, and the F1-score can be calculated. A higher F1-score indicates a better balance between precision and recall, thus suggesting a more reliable classifier for binary classification tasks [23]. The model achieved an accuracy of 98.067%, meaning that it classified approximately 98% of all instances correctly. With a precision of 0.974, 97.4% of the instances that the model classified as positive were truly positive. The F1-score, which combines precision and recall, was 98.210%, thus indicating a high overall performance. The true positive rate (TPR), also known as sensitivity or recall, was 0.99058, thus indicating that the model correctly identified 99.058% of the positive instances. Moreover, the model had a low false positive rate (FPR) of 0.03075, meaning that about 3.1% of the negative instances were incorrectly flagged as positive.

Figure 5.

Federated learning confusion matrix.

At the chosen threshold, the confusion matrix in Figure 5 corresponds to an accuracy of 98.067%, a precision of 0.974, an F1-score of 98.210%, a TPR of 0.99058, and an FPR of 0.03075. These metrics suggest that the federated learning model combines a high accuracy, precision, and F1-score with a low false positive rate.
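The relationship between the confusion matrix and these metrics can be made explicit with a short sketch. The function `binary_metrics` and the example counts are hypothetical, since the raw cell counts of Figure 5 are not reproduced in the text; only the formulas are standard. The final lines check that the reported precision and TPR are consistent with the reported F1-score.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute standard binary classification metrics from confusion matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # true positive rate (TPR)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "fpr": fp / (fp + tn),  # false positive rate
        "f1": 2 * precision * recall / (precision + recall),
    }


# Hypothetical counts, chosen only to exercise the formulas.
example = binary_metrics(tp=90, fp=10, tn=880, fn=20)

# Consistency check using the precision and TPR reported in the text.
p, r = 0.974, 0.99058
f1_from_reported = 2 * p * r / (p + r)
```

The harmonic mean of the reported precision and TPR is approximately 0.982, which agrees with the reported F1-score of 98.210% up to the rounding of the precision value.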

Federated learning in intrusion detection systems has several limitations and challenges, including data heterogeneity, communication overhead, data imbalance, security and privacy concerns, model aggregation vulnerabilities, client availability issues, the lack of a global view, and difficulties in handling model drift. Addressing these challenges is essential to make FL in IDSs more effective and secure.

6. Conclusions

In conclusion, our research focused on evaluating the effectiveness of federated learning for enhancing privacy-preserving intrusion detection systems (IDSs). Federated learning, as a distributed machine learning approach, allows for the collaborative training of a shared model while maintaining data decentralization and privacy. Through our experiments and comparisons with traditional deep learning models, we demonstrated that federated learning, with a random selection of clients, outperforms deep learning in terms of accuracy in an IDS, and in terms of loss in the first round, although its loss increased in later rounds. This advantage is particularly significant in scenarios where data privacy and security are critical concerns. By leveraging federated learning, we were able to develop global models without the need to share sensitive data, thereby reducing the risks associated with data breaches or leakage. The results of our study indicate that federated learning has the potential to revolutionize the development of more effective, efficient, and secure IDS solutions.

Our research study utilized the NSL-KDD dataset, which is a widely used dataset for network intrusion detection, and implemented a horizontal federated learning architecture with average aggregation and random client selection. The experimental findings consistently demonstrated the superiority of the federated learning approach over traditional deep learning methods in terms of accuracy, whereas its loss was lower only in the first round. This research has significant implications, as it opens avenues for the development of more accurate and reliable intrusion detection systems that can effectively prevent security breaches and protect sensitive data in various domains such as healthcare, finance, and national security. Future research in this area can further explore enhancements to the federated learning approach, investigate its application to different datasets, and address the challenges related to scalability and heterogeneous client environments. Overall, federated learning holds great promise for advancing privacy-preserving intrusion detection systems and strengthening network security.

Author Contributions

Conceptualization, A.A. and A.K.; methodology, A.A. and A.K.; software, A.K. and S.S.; validation, A.K.; formal analysis, A.A. and A.K.; investigation, A.A.; resources, A.K. and T.J.; data curation, A.A. and S.S.; writing—original draft preparation, A.A. and S.S.; writing—review and editing, T.J.; visualization, S.S.; project administration, T.J.; funding acquisition, A.A. and T.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khraisat, A.; Alazab, A. A critical review of intrusion detection systems in the internet of things: Techniques, deployment strategy, validation strategy, attacks, public datasets and challenges. Cybersecurity 2021, 4, 18.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20.

- Alazab, A.; Khraisat, A.; Singh, S. A Review on the Internet of Things (IoT) Forensics: Challenges, Techniques, and Evaluation of Digital Forensic Tools. In Digital Forensics-Challenges and New Frontiers; Reilly, D.D., Ed.; IntechOpen: Rijeka, Croatia, 2023; Chapter 10.

- Alazab, A.; Khraisat, A.; Alazab, M.; Singh, S. Detection of obfuscated malicious JavaScript code. Future Internet 2022, 14, 217.

- Agrawal, S.; Sarkar, S.; Aouedi, O.; Yenduri, G.; Piamrat, K.; Alazab, M.; Bhattacharya, S.; Maddikunta, P.K.R.; Gadekallu, T.R. Federated learning for intrusion detection system: Concepts, challenges and future directions. Comput. Commun. 2022, 195, 346–361.

- Victor, N.; Alazab, M.; Bhattacharya, S.; Magnusson, S.; Maddikunta, P.K.R.; Ramana, K.; Gadekallu, T.R. Federated learning for IoUT: Concepts, applications, challenges and opportunities. arXiv 2022, arXiv:2207.13976.

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J.; Alazab, A. Hybrid Intrusion Detection System Based on the Stacking Ensemble of C5 Decision Tree Classifier and One Class Support Vector Machine. Electronics 2020, 9, 173.

- Ghimire, B.; Rawat, D.B. Recent advances on federated learning for cybersecurity and cybersecurity for federated learning for internet of things. IEEE Internet Things J. 2022, 9, 8229–8249.

- Sun, T.; Li, D.; Wang, B. Decentralized federated averaging. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4289–4301.

- Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

- Fereidooni, H.; Marchal, S.; Miettinen, M.; Mirhoseini, A.; Möllering, H.; Nguyen, T.D.; Rieger, P.; Sadeghi, A.R.; Schneider, T.; Yalame, H.; et al. SAFELearn: Secure aggregation for private federated learning. In Proceedings of the 2021 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 27 May 2021; IEEE: Piscataway, NJ, USA; pp. 56–62.

- Liu, Y.; Kang, Y.; Xing, C.; Chen, T.; Yang, Q. A secure federated transfer learning framework. IEEE Intell. Syst. 2020, 35, 70–82.

- Hu, L.; Yan, H.; Li, L.; Pan, Z.; Liu, X.; Zhang, Z. MHAT: An efficient model-heterogenous aggregation training scheme for federated learning. Inf. Sci. 2021, 560, 493–503.

- Elahi, F.; Fazlali, M.; Malazi, H.T.; Elahi, M. Parallel fractional stochastic gradient descent with adaptive learning for recommender systems. IEEE Trans. Parallel Distrib. Syst. 2022, 1–14.

- So, J.; He, C.; Yang, C.S.; Li, S.; Yu, Q.; Ali, R.E.; Guler, B.; Avestimehr, S. Lightsecagg: A lightweight and versatile design for secure aggregation in federated learning. Proc. Mach. Learn. Syst. 2022, 4, 694–720.

- Xing, H.; Xiao, Z.; Qu, R.; Zhu, Z.; Zhao, B. An efficient federated distillation learning system for multitask time series classification. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

- Friha, O.; Ferrag, M.A.; Shu, L.; Maglaras, L.; Choo, K.K.R.; Nafaa, M. FELIDS: Federated learning-based intrusion detection system for agricultural Internet of Things. J. Parallel Distrib. Comput. 2022, 165, 17–31.

- Attota, D.C.; Mothukuri, V.; Parizi, R.M.; Pouriyeh, S. An ensemble multi-view federated learning intrusion detection for IoT. IEEE Access 2021, 9, 117734–117745.

- Rahman, S.A.; Tout, H.; Talhi, C.; Mourad, A. Internet of things intrusion detection: Centralized, on-device, or federated learning? IEEE Netw. 2020, 34, 310–317.

- Nguyen, T.D.; Marchal, S.; Miettinen, M.; Fereidooni, H.; Asokan, N.; Sadeghi, A.R. DÏoT: A federated self-learning anomaly detection system for IoT. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 756–767.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Ft. Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Alazab, A.; Khraisat, A.; Singh, S.; Bevinakoppa, S.; Mahdi, O.A. Routing Attacks Detection in 6LoWPAN-Based Internet of Things. Electronics 2023, 12, 1320.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).