SPE-ACGAN: A Resampling Approach for Class Imbalance Problem in Network Intrusion Detection Systems

Abstract

1. Introduction

- For NIDS, we propose SPE-ACGAN, a resampling method that combines the self-paced ensemble (SPE) with the auxiliary classifier GAN (ACGAN) to alleviate data imbalance. It reduces the number of majority-class samples and increases the number of minority-class samples so that the training set becomes more balanced.

- We merge the CICIDS-2017 and CICIDS-2018 datasets into a new dataset named CICIDS-17-18. CICIDS-17-18 is both larger and more imbalanced, which makes it a more demanding test of the effectiveness of SPE-ACGAN.

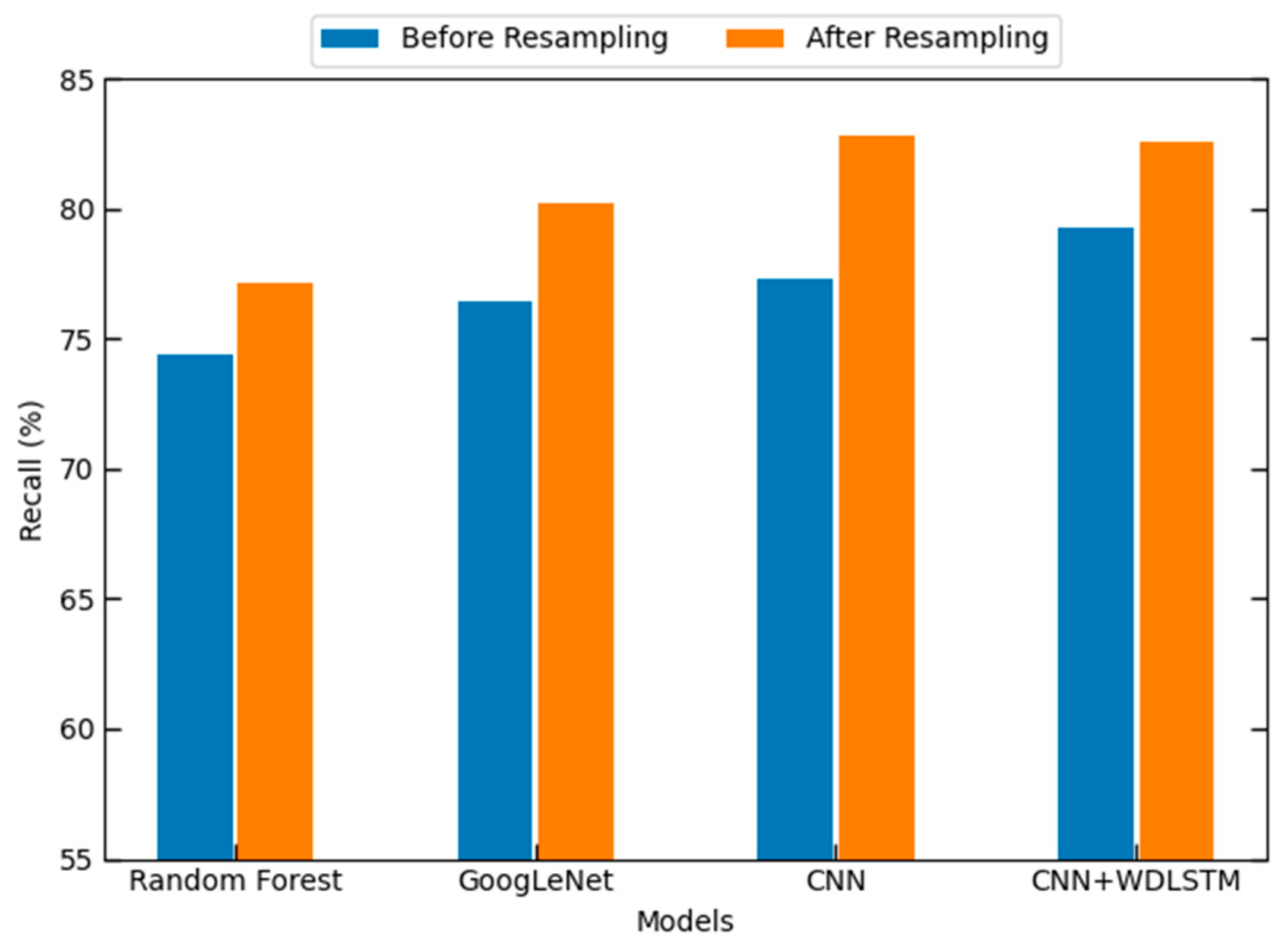

- We evaluate the proposed method on the three datasets above (CICIDS-2017, CICIDS-2018, and CICIDS-17-18) and compare it with existing resampling methods. Applying it improves the performance metrics of typical NIDS models such as Random Forest, GoogLeNet, CNN, and CNN + WDLSTM.
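The two-sided idea in the first contribution (shrink the majority classes, grow the minority classes) can be sketched as follows. This is only an illustrative skeleton, not the authors' method: plain random undersampling stands in for SPE, repetition of existing samples stands in for ACGAN-generated samples, and the function name `resample` and the parameters `majority_cap` and `minority_floor` are hypothetical.

```python
import random
from collections import Counter


def resample(labels, majority_cap, minority_floor, seed=0):
    """Return indices of a rebalanced training set.

    Assumption: random undersampling replaces SPE, and repeating existing
    samples replaces ACGAN generation. Only the control flow is illustrated.
    """
    rng = random.Random(seed)

    # Group sample indices by class label.
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    selected = []
    for idxs in by_class.values():
        if len(idxs) > majority_cap:
            # Majority class: keep a random subset of size majority_cap.
            idxs = rng.sample(idxs, majority_cap)
        elif len(idxs) < minority_floor:
            # Minority class: top up to minority_floor by repeating samples.
            idxs = idxs + rng.choices(idxs, k=minority_floor - len(idxs))
        selected.extend(idxs)
    return selected


# Toy label list with one dominant class and one very rare class.
labels = ["Benign"] * 800 + ["DDos"] * 150 + ["Heartbleed"] * 5
picked = resample(labels, majority_cap=300, minority_floor=50)
balanced = Counter(labels[i] for i in picked)
print(balanced)
```

In this toy run the dominant class is cut to 300 samples, the mid-sized class is left untouched, and the rare class is raised to 50 samples, so the class ratio narrows from both ends.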

2. Related Work

3. Methods and Materials

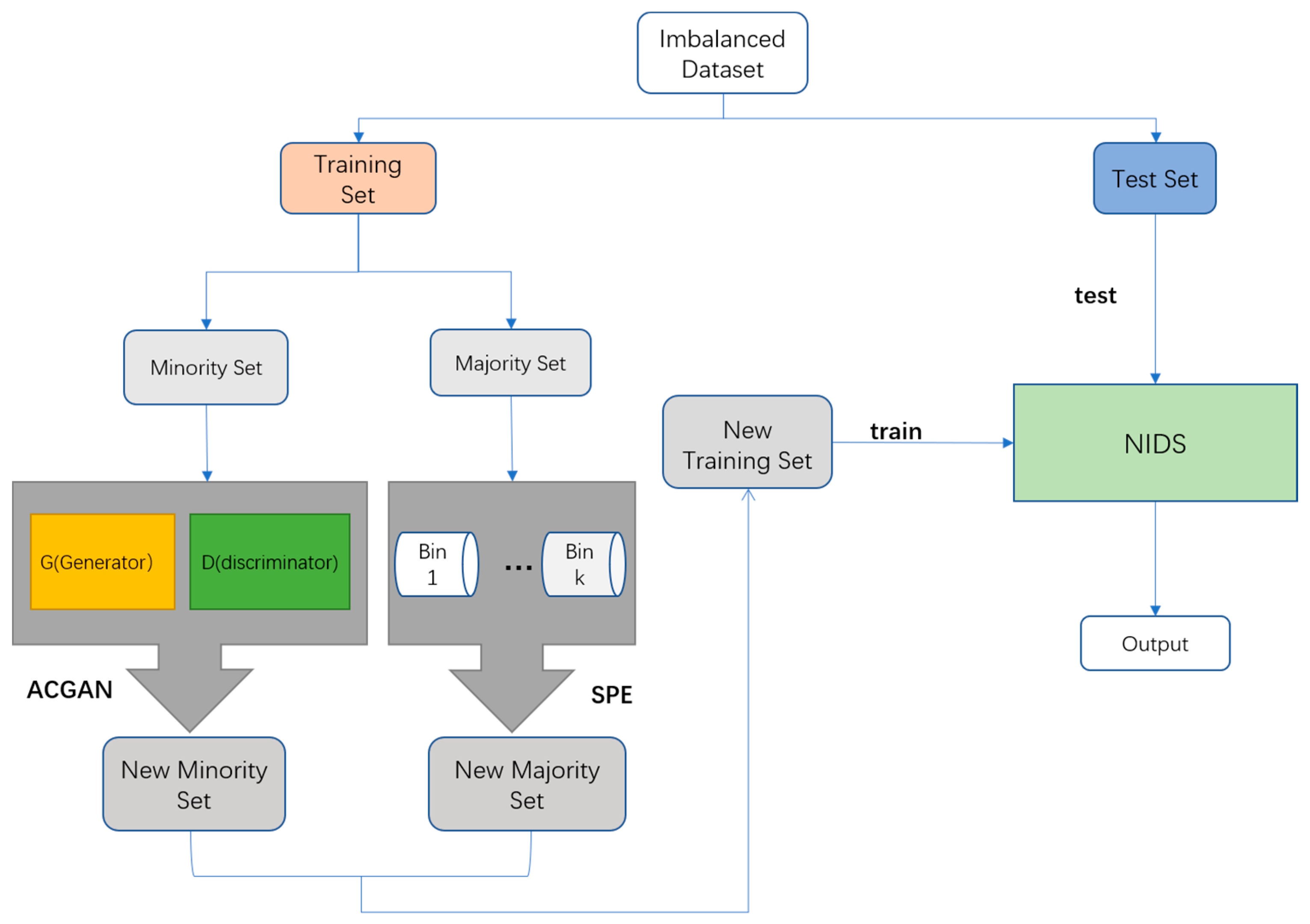

3.1. SPE-ACGAN

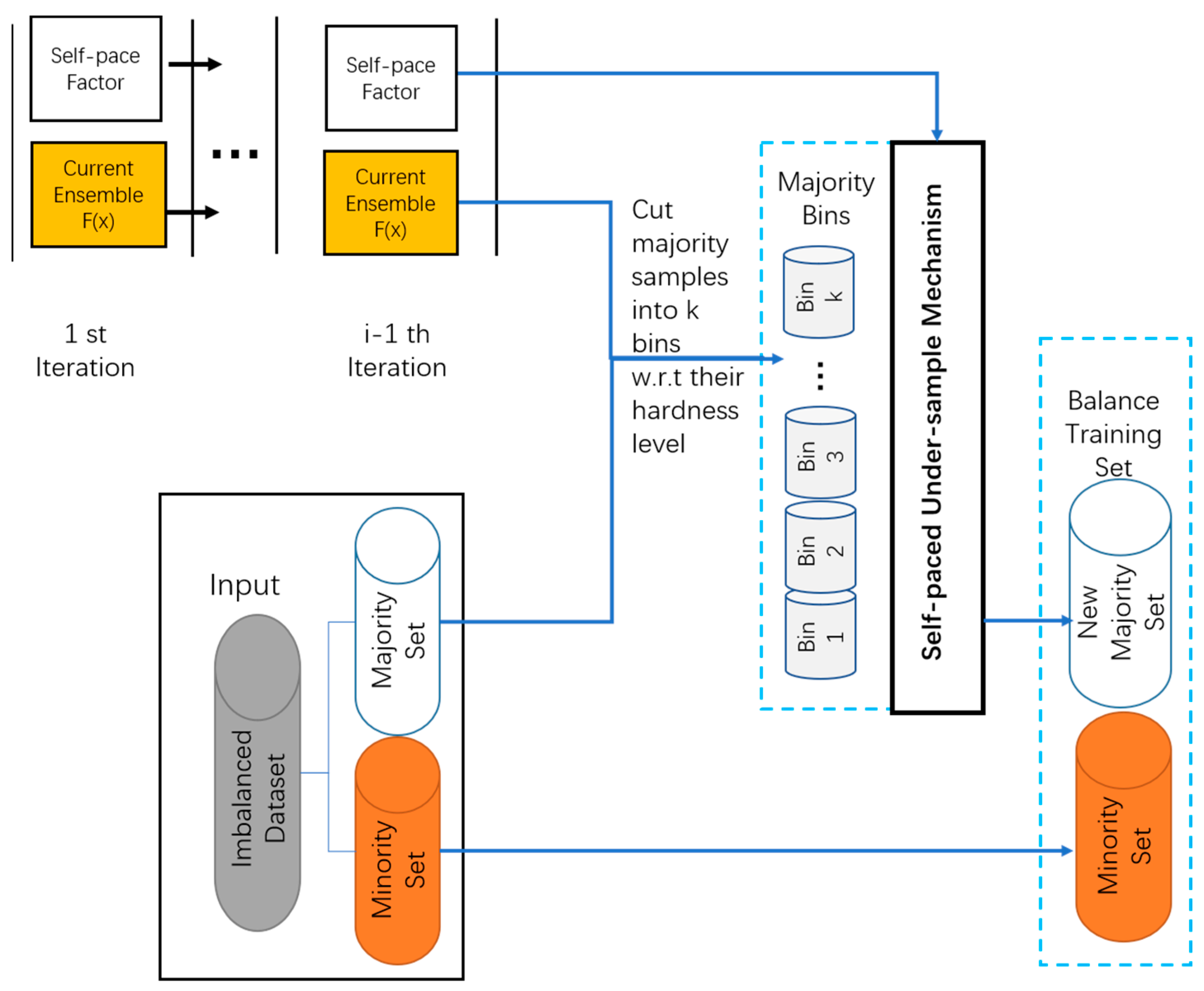

3.1.1. SPE

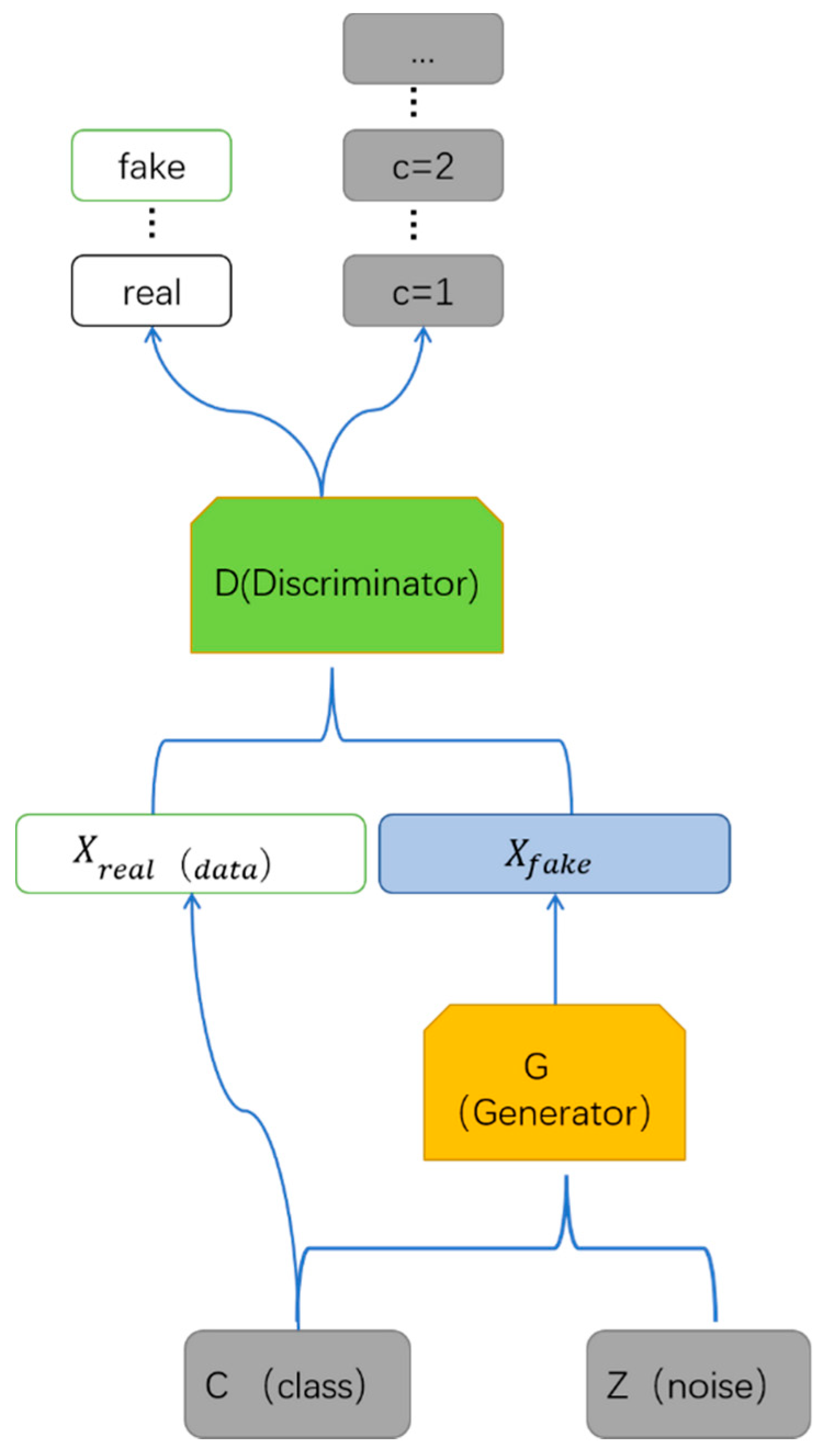

3.1.2. ACGAN

3.1.3. Overall Model Architecture

3.2. Details of the SPE-ACGAN

3.2.1. Dataset

3.2.2. Dataset Resampling

4. Experimentation and Result Analysis

4.1. Experimental Setup

4.2. Performance Metrics

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Oversampling | Undersampling |
|---|---|---|
| SMOTE | √ | |
| RUS | | √ |
| GAN | √ | |
| SPE-ACGAN (our method) | √ | √ |

| Class | Samples of CICIDS-2017 | Composition (%) | Samples of CICIDS-2018 | Composition (%) | Samples of CICIDS-17-18 | Composition (%) |
|---|---|---|---|---|---|---|
| Benign | 2,273,097 | 80.301 | 6,376,223 | 76.041 | 8,649,320 | 76.023 |
| FTP-Patator | 7938 | 0.281 | 193,353 | 2.306 | 201,291 | 1.771 |
| SSH-Patator | 5897 | 0.209 | 187,588 | 2.237 | 193,485 | 1.702 |
| Bot | 1966 | 0.070 | 285,289 | 3.402 | 287,255 | 2.523 |
| DDos | 128,027 | 4.523 | 687,840 | 8.203 | 815,867 | 7.176 |
| Dos GoldenEye | 10,293 | 0.364 | 461,911 | 5.509 | 472,204 | 4.155 |
| Dos Hulk | 231,073 | 8.163 | 41,507 | 0.495 | 272,580 | 2.401 |
| Dos Slowhttptest | 5499 | 0.195 | 139,889 | 1.668 | 145,388 | 1.282 |
| Dos Slowloris | 5796 | 0.205 | 10,989 | 0.131 | 16,785 | 0.148 |
| Heartbleed | 11 | 0.001 | 0 | 0 | 11 | 0.001 |
| Infiltration | 36 | 0.001 | 161,095 | 1.921 | 161,131 | 1.416 |
| Port Scan | 158,930 | 5.615 | 0 | 0 | 158,930 | 1.397 |
| Web Attack-Brute Force | 1507 | 0.054 | 610 | 0.001 | 2117 | 0.001 |
| Web Attack-Sql Injection | 21 | 0.001 | 86 | 0.001 | 107 | 0.001 |
| Web Attack-XSS | 652 | 0.024 | 229 | 0.001 | 881 | 0.001 |
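The per-class sample counts of the merged dataset in the table above are the sums of the CICIDS-2017 and CICIDS-2018 counts. A minimal sketch of that bookkeeping follows, assuming only the per-class counts printed in the table; the variable names are illustrative, and the sketch does not cover how the underlying flow records are actually merged.

```python
from collections import Counter

# Per-class sample counts copied from the table above.
cicids_2017 = Counter({
    "Benign": 2_273_097, "FTP-Patator": 7_938, "SSH-Patator": 5_897,
    "Bot": 1_966, "DDos": 128_027, "Dos GoldenEye": 10_293,
    "Dos Hulk": 231_073, "Dos Slowhttptest": 5_499, "Dos Slowloris": 5_796,
    "Heartbleed": 11, "Infiltration": 36, "Port Scan": 158_930,
    "Web Attack-Brute Force": 1_507, "Web Attack-Sql Injection": 21,
    "Web Attack-XSS": 652,
})
# Heartbleed and Port Scan have no samples in CICIDS-2018, so they are omitted.
cicids_2018 = Counter({
    "Benign": 6_376_223, "FTP-Patator": 193_353, "SSH-Patator": 187_588,
    "Bot": 285_289, "DDos": 687_840, "Dos GoldenEye": 461_911,
    "Dos Hulk": 41_507, "Dos Slowhttptest": 139_889, "Dos Slowloris": 10_989,
    "Infiltration": 161_095, "Web Attack-Brute Force": 610,
    "Web Attack-Sql Injection": 86, "Web Attack-XSS": 229,
})

# Counter addition sums the counts class by class.
merged = cicids_2017 + cicids_2018
total = sum(merged.values())

# Share of each class in the merged dataset, in percent.
composition = {name: 100 * count / total for name, count in merged.items()}

# Ratio between the largest and the smallest class, a simple imbalance measure.
imbalance_ratio = max(merged.values()) / min(merged.values())

print(merged["Benign"], round(composition["Benign"], 2))
print(f"largest/smallest class ratio: {imbalance_ratio:,.0f}")
```

The ratio between the Benign class and the 11 Heartbleed samples illustrates why the merged dataset is described as highly imbalanced.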

| Class | Before Resampling (CICIDS-2017) | Composition (%) | After Resampling | Composition (%) |
|---|---|---|---|---|
| Benign | 1,818,477 | 80.301 | 300,000 | 52.685 |
| FTP-Patator | 7938 | 0.281 | 17,938 | 3.153 |
| SSH-Patator | 6350 | 0.209 | 16,350 | 2.874 |
| Bot | 1572 | 0.07 | 11,572 | 2.034 |
| DDos | 102,421 | 4.523 | 30,726 | 5.401 |
| Dos GoldenEye | 8234 | 0.364 | 18,234 | 3.205 |
| Dos Hulk | 184,858 | 8.163 | 55,457 | 9.748 |
| Dos Slowhttptest | 4399 | 0.195 | 15,499 | 2.724 |
| Dos Slowloris | 4636 | 0.205 | 14,636 | 2.573 |
| Heartbleed | 8 | 0.001 | 10,008 | 1.759 |
| Infiltration | 28 | 0.001 | 10,028 | 1.763 |
| Port Scan | 127,144 | 5.615 | 38,143 | 6.705 |
| Web Attack-Brute Force | 1295 | 0.054 | 11,295 | 1.810 |
| Web Attack-Sql Injection | 16 | 0.001 | 10,016 | 1.761 |
| Web Attack-XSS | 521 | 0.023 | 10,521 | 1.847 |

| Class | Before Resampling (CICIDS-2018) | Composition (%) | After Resampling | Composition (%) |
|---|---|---|---|---|
| Benign | 509,778 | 76.041 | 509,778 | 49.708 |
| FTP-Patator | 154,682 | 2.306 | 46,404 | 4.569 |
| SSH-Patator | 150,070 | 2.237 | 45,021 | 6.742 |
| Bot | 228,231 | 3.402 | 68,469 | 8.427 |
| DDos | 550,272 | 8.203 | 165,081 | 16.255 |
| Dos GoldenEye | 369,528 | 5.509 | 11,858 | 1.671 |
| Dos Hulk | 33,205 | 0.495 | 16,602 | 11.257 |
| Dos Slowhttptest | 111,911 | 1.668 | 33,573 | 1.635 |
| Dos Slowloris | 8791 | 0.131 | 18,791 | 1.850 |
| Heartbleed | 0 | 0 | 0 | 0 |
| Infiltration | 128,876 | 1.921 | 38,662 | 3.807 |
| Port Scan | 0 | 0 | 0 | 0 |
| Web Attack-Brute Force | 488 | 0.001 | 10,488 | 1.033 |
| Web Attack-Sql Injection | 68 | 0.001 | 10,068 | 0.991 |
| Web Attack-XSS | 183 | 0.001 | 10,183 | 0.992 |

| Class | Before Resampling (CICIDS-17-18) | Composition (%) | After Resampling | Composition (%) |
|---|---|---|---|---|
| Benign | 6,919,456 | 76.023 | 700,000 | 49.793 |
| FTP-Patator | 161,032 | 1.771 | 48,309 | 3.463 |
| SSH-Patator | 154,788 | 1.702 | 46,436 | 3.328 |
| Bot | 229,804 | 2.523 | 68,941 | 4.942 |
| DDos | 652,693 | 7.176 | 195,807 | 14.035 |
| Dos GoldenEye | 377,763 | 4.155 | 113,328 | 8.123 |
| Dos Hulk | 218,064 | 2.401 | 65,419 | 4.689 |
| Dos Slowhttptest | 116,311 | 1.282 | 34,893 | 2.501 |
| Dos Slowloris | 13,428 | 0.148 | 13,428 | 0.963 |
| Heartbleed | 8 | 0.001 | 10,008 | 0.713 |
| Infiltration | 128,904 | 1.416 | 38,671 | 0.646 |
| Port Scan | 127,144 | 1.397 | 38,143 | 2.771 |
| Web Attack-Brute Force | 1693 | 0.001 | 11,634 | 0.834 |
| Web Attack-Sql Injection | 86 | 0.001 | 10,086 | 0.723 |
| Web Attack-XSS | 704 | 0.001 | 10,704 | 0.761 |

| Method | CICIDS-2017 P (%) | CICIDS-2017 R (%) | CICIDS-2017 F1 (%) | CICIDS-2018 P (%) | CICIDS-2018 R (%) | CICIDS-2018 F1 (%) |
|---|---|---|---|---|---|---|
| Random Forest | 92.17 | 93.79 | 92.97 | 91.68 | 89.65 | 90.65 |
| GoogLeNet | 92.88 | 94.53 | 93.69 | 92.94 | 91.39 | 91.71 |
| CNN | 96.68 | 98.05 | 97.36 | 93.62 | 92.10 | 92.34 |
| CNN + WDLSTM | 98.07 | 98.42 | 98.24 | 94.97 | 94.88 | 94.63 |
| Our Proposed + Random Forest | 93.03 | 94.93 | 93.97 | 92.70 | 90.64 | 91.66 |
| Our Proposed + GoogLeNet | 93.34 | 94.10 | 93.72 | 93.17 | 92.43 | 92.80 |
| Our Proposed + CNN | 96.85 | 98.11 | 97.48 | 94.71 | 93.33 | 94.01 |
| Our Proposed + CNN + WDLSTM | 98.68 | 98.88 | 98.78 | 95.92 | 96.13 | 96.02 |
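The table reports precision (P), recall (R), and F1. As a small illustration, the sketch below uses the standard definition of F1 as the harmonic mean of precision and recall, which reproduces several of the reported rows. It is an assumption that the scores were computed this way: the text here does not state how the per-class values were averaged, and with class-wise averaging the reported F1 need not equal the harmonic mean of the averaged P and R. The helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0  # Avoid division by zero when both scores are zero.
    return 2 * precision * recall / (precision + recall)


# Random Forest on CICIDS-2017: P = 92.17, R = 93.79 (values from the table).
print(round(f1_score(92.17, 93.79), 2))  # 92.97

# Our Proposed + CNN + WDLSTM on CICIDS-2017: P = 98.68, R = 98.88.
print(round(f1_score(98.68, 98.88), 2))  # 98.78
```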

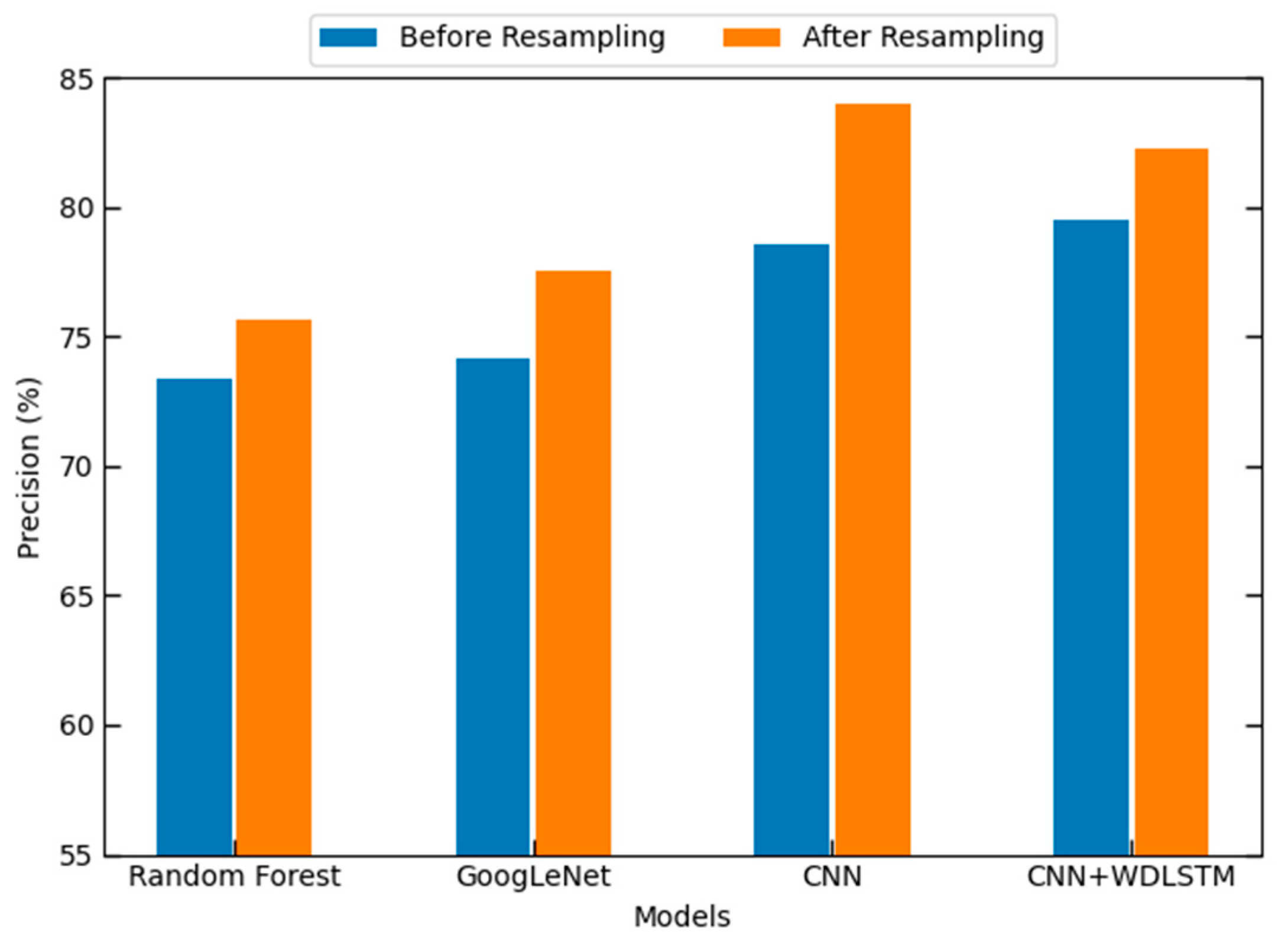

| Method | CICIDS-17-18 P (%) | CICIDS-17-18 R (%) | CICIDS-17-18 F1 (%) |
|---|---|---|---|
| Random Forest | 73.34 | 74.37 | 70.79 |
| GoogLeNet | 74.16 | 76.40 | 75.26 |
| CNN | 78.53 | 77.30 | 77.91 |
| CNN + WDLSTM | 79.46 | 79.24 | 78.82 |
| RUS + Random Forest | 75.13 | 75.15 | 75.14 |
| RUS + GoogLeNet | 76.58 | 79.36 | 77.94 |
| RUS + CNN | 78.38 | 78.23 | 75.03 |
| RUS + CNN + WDLSTM | 77.68 | 79.06 | 78.36 |
| SMOTE + Random Forest | 74.76 | 79.87 | 76.23 |
| SMOTE + GoogLeNet | 77.82 | 80.14 | 78.96 |
| SMOTE + CNN | 78.09 | 81.96 | 75.32 |
| SMOTE + CNN + WDLSTM | 80.55 | 81.67 | 81.11 |
| SPE + Random Forest | 75.58 | 75.22 | 75.40 |
| SPE + GoogLeNet | 75.69 | 77.58 | 76.57 |
| SPE + CNN | 80.53 | 80.12 | 80.32 |
| SPE + CNN + WDLSTM | 81.02 | 81.82 | 81.42 |
| ACGAN + Random Forest | 74.44 | 74.55 | 74.49 |
| ACGAN + GoogLeNet | 75.55 | 77.52 | 76.52 |
| ACGAN + CNN | 78.98 | 77.21 | 78.08 |
| ACGAN + CNN + WDLSTM | 78.36 | 77.06 | 77.70 |
| Our Proposed + Random Forest | 75.63 | 77.14 | 76.38 |
| Our Proposed + GoogLeNet | 77.57 | 80.20 | 78.86 |
| Our Proposed + CNN | 83.94 | 82.78 | 81.66 |
| Our Proposed + CNN + WDLSTM | 82.23 | 82.54 | 82.38 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, H.; Xu, J.; Xiao, Y.; Hu, L. SPE-ACGAN: A Resampling Approach for Class Imbalance Problem in Network Intrusion Detection Systems. Electronics 2023, 12, 3323. https://doi.org/10.3390/electronics12153323
