Abstract

PDF has become a major attack vector for delivering malware and compromising systems and networks, due to its popularity and widespread usage across platforms. PDF provides a flexible file structure that facilitates the embedding of different types of content such as JavaScript, encoded streams, images, executable files, etc. This enables attackers to embed malicious code and to hide its functionality within seemingly benign non-executable documents. As a result, a large proportion of current automated detection systems are unable to effectively detect PDF files with concealed malicious content. To mitigate this problem, a novel approach is proposed in this paper based on ensemble learning with enhanced static features, which is used to build an explainable and robust malicious PDF document detection system. The proposed system is resilient against reverse mimicry injection attacks compared to the existing state-of-the-art learning-based malicious PDF detection systems. The recently released Evasive-PDFMal2022 dataset was used to investigate the efficacy of the proposed system. Based on this dataset, an overall classification accuracy greater than 98% was observed with five ensemble learning classifiers. Furthermore, the proposed system, which employs new anomaly-based features, was evaluated on a reverse mimicry attack dataset containing three different types of content injection attacks, i.e., embedded JavaScript, embedded malicious PDF, and embedded malicious EXE. The experiments conducted on the reverse mimicry dataset showed that the Random Committee ensemble learning model achieved 100% detection rates for embedded EXE and embedded JavaScript, and a 98% detection rate for embedded PDF, based on our enhanced feature set.

1. Introduction

Malicious documents are an increasingly common means by which attackers propagate malware. This has been made possible by the growing number of unsuspecting document users and by the failure of modern antivirus software to detect such documents [1]. Portable Document Format (PDF) has become a major attack vector because of its flexibility, widespread cross-platform usage, and the ease of embedding different types of content such as encoded streams, JavaScript code, executable files, etc. Since PDF files are not perceived to be as dangerous as EXE files, they are usually treated with less caution by users. Thus, they can be used as an effective means to launch social engineering attacks, for example to deliver ransomware. In [2], it was reported that Sophos Labs discovered a spam campaign where a variant of the Locky ransomware was launched by a VBA macro hidden in a Word document that was deeply nested inside a PDF file. The malicious PDF file was spread by email as an attachment. Such malicious components can be embedded in PDF files using tools such as Metasploit. Furthermore, PDF files pose a higher risk compared to Portable Executables since the embedded content can be encrypted or encoded [3]. PDF documents have also been used in targeted attacks and advanced persistent threat (APT) campaigns to accomplish one or more stages of a multi-stage attack, for instance, the MiniDuke APT campaign [4], where infected PDF files that targeted an Adobe Reader vulnerability (i.e., CVE-2013-0640) were used for the first stage of the attack.

The detection of malicious PDF documents is made more challenging by the fact that the PDF format is complex and susceptible to a wide range of attacks, many of which take advantage of legitimate PDF functionality, e.g., the embedding and encoding of a wide variety of content types. Several static and dynamic analysis tools are available to facilitate manual analysis of PDF documents for potentially malicious content. Examples of such tools include PDFiD [5], PeePDF [6], PhoneyPDF [7], and PDF Walker [8]. However, the volume of malicious PDF files that are constantly emerging makes it infeasible for the security community to rely on manual analysis alone. While signatures can be utilized to facilitate automated analysis to detect malicious files, this comes with its own set of challenges, including susceptibility to obfuscation and the aging of signatures as new types of attacks appear.

To overcome these limitations, learning-based systems have been proposed by researchers based on different types of features. Two popular kinds of features used in current learning-based PDF malware detection systems are JavaScript-based features and structural features. Learning-based systems that utilize JavaScript-based features extract them by analyzing embedded JavaScript code to detect malicious behaviour, for example in PJScan [9] or Lux0R [10]. Such systems, however, are only effective for detecting malicious PDF files that contain JavaScript code. Examples of proposed learning-based systems that rely on structural features of PDF files include PDF Slayer [11], Hidost [12], and PDFRate [13]. The use of structural features with machine learning became more widespread because it enables fast automated detection of a wide variety of attacks, including newly appearing variants. Recently, detection systems that employ visualization-based features have also been proposed. For example, the authors of [14] proposed a system where PDF files are first converted to grayscale images before extracting visualization-based features for machine learning.

According to [15], one of the problems with machine learning-based classifiers in the PDF malware detection domain is that mimicry attacks and reverse mimicry attacks are quite effective against them. A reverse mimicry attack involves injecting or embedding malicious content into benign PDF files such that the features of the benign file will effectively mask the presence of the embedded malicious content from being detected by detection systems. It is a form of evasive adversarial attack that can be performed on a large scale using automated tools. Existing machine learning-based solutions such as PDF Slayer [11], Hidost [12], and PDFRate [13] have been shown to have limited robustness against reverse mimicry attacks. Even the more recent attempt at utilizing visualization techniques for high accuracy PDF malware detection presented in [14] did not show substantial resilience in the reverse mimicry attack experiments.

Hence, despite the advances that have been made in learning-based PDF malware detection, the resilience of such systems against evasive or adversarial attacks remains a significant challenge. In order to mitigate the problem, this paper proposes a novel approach that uses an enhanced feature set, which extends existing structural features with anomaly-based ones, and utilizes the power of ensemble learning to provide a high-accuracy malicious PDF detection system that is also resilient against injection-based adversarial attacks. The main contributions of this paper are as follows:

- The paper proposes an ensemble learning-based system that employs an enhanced feature set comprising structural and anomaly-based features. This feature set is a unique one that is designed to enable robust and effective detection of malicious PDF files including those that employ evasive techniques.

- The novel anomaly-based features that enable robust maldoc detection are described, discussing their impact on the performance of the learning-based detection system, as well as its resilience to reverse mimicry attacks.

- An extensive performance evaluation of the proposed system for malicious PDF detection is undertaken, using the recently released Evasive-PDFMal2022 dataset. The results showed that the ensemble learners demonstrated high accuracy with the enhanced feature set.

- Furthermore, several experiments are performed using a publicly available reverse mimicry attack dataset consisting of three types of injection attacks. A comparative analysis with several existing systems is presented to demonstrate the robustness of our proposed approach against reverse mimicry attacks. We also present explanations of the models' predictions in each attack scenario using the SHapley Additive exPlanations (SHAP) approach.

This paper is organized as follows: after the Introduction in Section 1, Section 2 gives an overview of the PDF file format and is followed by related work in Section 3. Section 4 presents the development of the proposed system and describes the new anomaly-based features that are incorporated with structural features to enable more robust malicious PDF file detection. The experiments and results are discussed in Section 5 and Section 6. Finally, the paper is concluded in Section 7 with recommendations for future work.

2. Structure of a PDF File

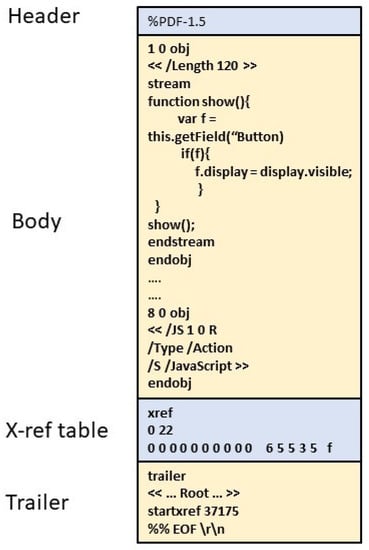

PDF was created as a versatile format to enable sharing of text, rich media, images, etc. in a consistent way, independent of hardware or software platforms. It was invented by Adobe in 1993 and has now become one of the most widely used standards for sharing documents. The PDF format was standardized as the open standard ISO 32000-1:2008 [16]. The typical structure of a PDF document is shown in Figure 1 and consists of four parts:

Figure 1. The sections of a typical PDF file.

- The header: contains PDF file version information according to the ISO standard.

- The body: This section typically contains the contents that are displayed to the user. It consists of a number of objects that define the operations to be performed by the file. The body section also contains the embedded data such as text, images, scripting code, etc., which are also represented as objects. Within an object, operations such as decompression or decryption of data are defined if needed and will typically take place during the rendering of the file.

- The cross-reference (x-ref) table: This contains a list of the offsets of each object that is to be rendered within the file by the reader application. The offsets within the x-ref table make it possible to randomly access any of the objects in the file. The x-ref table is also the section that enables incremental updates to a document, as allowed by the PDF standard. Thus, when a document is updated, extra x-ref tables and trailers are appended at the end of the document.

- The trailer: The trailer is a special object corresponding to the last section of the file. It points to the object identified by the /Root tag, which is the first object that will be rendered by the document viewer. The offset of the start of the x-ref table is also located in the trailer. The last line of the file, which is the end-of-file string ‘%%EOF’, is also part of the trailer section.

When a PDF reader displays a file, it begins from the trailer object and parses each indirect object referenced by the x-ref table, decompressing the data as it goes, so that all pages, texts, images, and other components of the PDF file are progressively rendered. This means that a PDF file is organized as a graph of objects that contain instructions for the PDF reader, which represent the operations to be performed for presenting the file contents to the user [17].
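The layout described in this section can be probed with a few lines of Python. The following is only a minimal sketch and not the parser used in this paper: the function name `summarize_pdf_layout` and the tiny hand-built sample file are illustrative assumptions, and real-world files (e.g., those with compressed cross-reference streams or malformed trailers) require a proper parser.

```python
import re

def summarize_pdf_layout(data: bytes) -> dict:
    """Report the header version, the last startxref offset, and section counts."""
    header = re.match(rb"%PDF-(\d\.\d)", data)
    # Each 'startxref' keyword is followed by the byte offset of an x-ref table;
    # a reader starts from the last one in the file.
    offsets = re.findall(rb"startxref\s+(\d+)", data)
    return {
        "version": header.group(1).decode() if header else None,
        "startxref_offset": int(offsets[-1]) if offsets else None,
        # More than one %%EOF marker suggests incremental updates.
        "eof_markers": data.count(b"%%EOF"),
        "trailers": len(re.findall(rb"\btrailer\b", data)),
    }

# Hypothetical, heavily simplified file used only to exercise the function.
sample = (
    b"%PDF-1.7\n"
    b"1 0 obj\n<< /Type /Catalog >>\nendobj\n"
    b"xref\n0 2\n0000000000 65535 f \n0000000009 00000 n \n"
    b"trailer\n<< /Size 2 /Root 1 0 R >>\n"
    b"startxref\n45\n%%EOF\n"
)
print(summarize_pdf_layout(sample))
```

A file that has been incrementally updated would report more than one trailer and more than one end-of-file marker, since each update appends a new x-ref table and trailer.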

3. Related Work

Learning-based approaches to detecting malware and malicious content in PDF documents have proliferated in recent years due to the drive to create new approaches that will enhance or complement existing anti-malware systems. In [3], the authors proposed an approach to detect malicious content embedded in PDF documents. They focused on data encoded in the ‘stream’ tag along with other structural information. Their method decrypts encrypted blocks and decodes encoded blocks within the stream tags and also utilizes other structural features. These are given to a decision tree for classification. Although the paper claims that the method is effective against mimicry attacks, no empirical evaluation was presented to support the claim. In [18], a method for detecting and classifying suspicious PDF files based on a YARA scan and a structural scan is presented. The system inspects PDF documents to search for features that are important in labelling PDF documents as suspicious. In [10], a system to detect malicious JavaScript embedded in PDF files was presented. The system was called ‘Lux 0n discriminant References’ (Lux0R). The authors of [9] presented PJScan, a tool designed to extract JavaScript from a malicious file and to derive its lexical properties via a tokenizer. The output, which is a token sequence, is then used to train a machine learning algorithm to detect malicious JavaScript-bearing PDF files.

Jeong, Woo, and Kang [19] presented a convolutional neural network (CNN) designed to take the byte sequence of a stream object contained within a PDF file and predict whether the input sequence contains malicious actions or not. The CNN model achieved superior performance compared to traditional machine learning classifiers including SVM, Decision Tree, Naive Bayes, and Random Forest. Albahar et al. [20] presented two learning-based models for detection of malicious PDFs and experimented on 30,797 infected and benign documents collected from the Contagio dataset and VirusTotal. Their first model was a CNN model that used tree-based PDF file structure as features and yielded 99.33% accuracy; the second model was an ensemble SVM model with different kernels which used n-gram with object content encoding as features and yielded an accuracy of 97.3%. In [21], Bazzi and Onozato used LibSVM to build a classification model which utilizes features extracted from a report generated through dynamic analysis with Cuckoo sandbox. The study used 6000 samples for training and 10,904 samples for testing, obtaining an accuracy of 97.45%.

In [22], a PDF maldoc detection system was proposed based on extracting features with PDFiD and PeePDF. The authors used both tools to extract keyword and structural features and used malicious document heuristics to derive an additional set of features. Through feature selection, the top 14 important features were selected, which led to an improved accuracy of up to 97.9% for the ML classifier. Zhang proposed MLPdf in [23], which uses an MLP classifier to detect PDF malware. The system extracted a group of high-quality features from two real-world datasets that contained 105,000 malicious and benign PDF documents. The MLP model achieved a detection rate of 95.12% and a low false positive rate of 0.08%. Jiang et al. [24] applied semi-supervised learning to the problem of malicious PDF document detection by extracting structural features together with statistical features based on entropy sequences using the wavelet energy spectrum. They then employed a random sub-sampling approach to train multiple sub-classifiers, with their method achieving an accuracy of 94%.

The authors of [25] did a performance comparison of machine learning classifiers to traditional AV solutions by experimenting on PDF documents with embedded JavaScript. They used 995 samples for training, 217 samples for validation, and 500 samples for testing and obtained 92%, 50%, and 96% accuracy with Random Forest, SVM, and MLP, respectively. In [14], the authors applied image visualization techniques of byte plot and Markov plot and extracted various image features from both. They evaluated the performance using the Contagio PDF dataset, obtaining very good results when testing with samples from the same dataset. They also evaluated their models on a reverse mimicry attack dataset, with very limited success but showing slightly improved robustness over the PDF Slayer approach. They experimented with both Markov plot and byte plot visualization methods, applying various image processing techniques used in extracting features to train RF, K-Nearest Neighbor (KNN), and Decision Tree (DT) classifiers. The best method (byte plot + Gabor Filter + Random Forest) achieved an F1-score of 99.48%.

Al-Haija, Odeh, and Hazem proposed in [26] a detection system for identifying benign and malicious PDF files. Their proposed system used an optimally-trained AdaBoost decision tree and their experiments were performed using the Evasive-PDFMal2022 dataset [27] (which is also used in this paper). Their system achieved 98.4% prediction accuracy with 98.80% precision, 98.90% sensitivity, and a 98.8% F1-score. In [28], the authors also utilized the Evasive-PDFMal2022 dataset and applied an enhanced structural feature set to investigate the efficacy of the enhanced set. Seven machine learning classifiers were evaluated on the dataset using the enhanced features, and improved classification accuracy was noticed with 5 out of 7 of the classifiers compared to the baseline scenario without the enhanced features.

In [29], a system for detecting evasive PDF malware was proposed based on Stacking ensemble learning. The detection system is based on a set of 28 static features which were divided into ‘general’ and ‘structural’ features. Their system was evaluated on the Contagio dataset, yielding an accuracy of 99.89% and an F1-score of 99.86%. They also evaluated the system on their newly generated Evasive-PDFMal2022 dataset [27], for which they achieved 98.69% accuracy and a 98.77% F1-score.

From our review of related work, it is evident that several of the proposed learning-based detectors utilized features extracted only from JavaScript obtained from the PDF files, e.g., [9,10,19]. While such systems may be able to detect PDF files incorporating content injection attacks that involve embedded JavaScript, they may not be effective against other types of content embedding attacks, e.g., those involving embedded PDF, Word, EXE, or other types of content. Other works, such as [22,23,26,29], utilized structural features in their work, but did not evaluate their approach against any type of adversarial attacks. Different from the existing works, this paper aims to improve the robustness of malicious PDF document detection by enhancing structural features with novel anomaly-based features and utilizing the enhanced feature set to train ensemble learning classifiers. Furthermore, we present experiments to demonstrate the resilience of our proposed approach to reverse mimicry injection attacks, enabled by the new anomaly-based features.

4. Methodology

This section presents our proposed approach to automated ensemble learning-based malicious PDF detection, which is based on an enhanced feature set consisting of 35 features (29 structural features and 6 anomaly-based features). These features are extracted from the labeled files that have been set aside as the training set. The instances consisting of the 35 extracted features are fed into ensemble learning classification algorithms to learn the distinguishing characteristics of benign and malicious PDF files, thus enabling the prediction and classification of unlabeled PDF files as benign or malicious, as shown in Figure 2. The methods used in building the proposed PDF classification system are discussed in the following sub-sections.

Figure 2. Proposed enhanced features-based approach.

4.1. Datasets

Evasive-PDFMal2022 dataset: The first dataset used for the study in this paper is a recently generated evasive PDF dataset (Evasive-PDFMal2022) [27] which was released by Issakhani et al. [29]. This dataset has been generated as an improved version of the well-known Contagio PDF dataset which has been utilized extensively in previous works. According to [29], the Contagio dataset has several drawbacks which include (a) a high proportion of duplicate samples with very high similarity, which was estimated as 44% of the entire dataset and (b) lack of sufficient diversity of samples within each class of the dataset. Thus, the new dataset aims to address the flaws found with the Contagio dataset and provide a more realistic and representative dataset of the PDF distribution. It consists of 10,025 PDF file samples with no duplicate entries (4468 benign and 5557 malicious).

PDF reverse mimicry dataset: This was the second dataset utilized in our study. It is used to evaluate the robustness of our proposed approach to content injection attacks designed to disguise malicious content by embedding them within benign PDF files. This is known as the reverse mimicry attack, and it is a form of evasive adversarial attack that can be performed on a large scale using automated tools. The reverse mimicry dataset [30] consists of 1500 benign PDF files with embedded malicious components and is available online from the Pattern Recognition and Applications lab (PRAlab), University of Cagliari, Italy. The dataset consists of 500 PDF files containing embedded JavaScript, 500 PDF files containing embedded PDF, and 500 PDF files containing embedded EXE payload. Further details on how these reverse mimicry files were created can be found in [17]. Note that the detection of malicious PDF files created by altering a benign file through such reverse mimicry attacks is a challenging task. This is because the injected file will still retain the characteristics of a benign PDF file, thus making it hard for learning algorithms to discriminate effectively.

4.2. Feature Extraction of Structural Feature Set

Our proposed ensemble learning-based detection system is based on 35 features: 29 structural features and 6 features that are based on anomalies, i.e., properties that are rarely observed in regular harmless files. The structural features we used are similar to those found in previous works; the anomaly-based features, however, are novel features aimed at improving the performance of the learning-based detectors. The features were extracted by using our extended version of the open-source PDFMalyzer tool available from [31]. PDFMalyzer is based on PDFiD and PyMuPDF, and it enabled the 29 structural features to be extracted. By extending the tool using Python scripts, we were able to extract the new anomaly-based features and combine them with the 29 structural features into a feature vector to represent each of the PDF files being used in our experiments. The initial set of 29 structural features is listed in Table 1.

Table 1. Initial feature set containing 29 structural features (NK: non-keyword based, K: keyword-based).
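To illustrate how keyword-based structural features of this kind can be obtained, the sketch below counts PDF name keywords in the raw bytes of a file, decoding `#xx` hex escapes so that an obfuscated name such as `/J#61vaScript` is still counted as `/JavaScript`. This is a simplified assumption-laden example, not the PDFMalyzer or PDFiD implementation: the `KEYWORDS` tuple is an illustrative subset rather than the contents of Table 1, the function names are hypothetical, and names hidden inside compressed streams are not examined.

```python
import re

# Illustrative subset of keywords only; not the full feature list of Table 1.
KEYWORDS = ("/JS", "/JavaScript", "/EmbeddedFile", "/OpenAction")

def decode_name(raw: bytes) -> str:
    """Undo #xx hex escapes in a PDF name, e.g. /J#61vaScript -> /JavaScript."""
    decoded = re.sub(
        rb"#([0-9A-Fa-f]{2})",
        lambda m: bytes([int(m.group(1), 16)]),
        raw,
    )
    return decoded.decode("latin-1")

def count_keywords(data: bytes) -> dict:
    """Count occurrences of each keyword among the name tokens in the raw bytes."""
    counts = {k: 0 for k in KEYWORDS}
    # A name starts with '/' and runs until whitespace or a delimiter
    # (simplified: the PDF standard also treats '{', '}', and '%' as delimiters).
    for raw in re.findall(rb"/[^\s/<>\[\]()]+", data):
        name = decode_name(raw)
        if name in counts:
            counts[name] += 1
    return counts

# Hypothetical fragment with one obfuscated /JavaScript name.
fragment = (
    b"<< /Type /Action /S /J#61vaScript /JS (app.alert(1);) >>\n"
    b"<< /Type /EmbeddedFile >>"
)
print(count_keywords(fragment))
```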

4.3. Enhancing the Structural Feature Set with New Features

Structural features are related to the characteristics of the name object present in the PDF file [17]. Structural features have the ability to detect the presence of different types of embedded contents such as JavaScript or ActionScript, which can aid in the detection of malicious PDF files. Note that the keywords representing the structural features could be missed if a deliberate attempt has been made to evade their detection, e.g., through obfuscation, or due to errors from the analysis tools being used to extract the features. These uncertainties in feature extraction motivated the derivation of new (anomaly-based) features to improve robustness. The proposed anomaly-based features are described next.

When a user directly modifies an existing PDF file, this creates a new x-ref table and trailer which are added to the file. This means that a manually updated PDF file will typically have more than one trailer and x-ref table. Hence, the feature vector of a benign file should consist of more than one occurrence of /trailer, /xref, and /startxref features. Thus, having only one occurrence of those features in the feature vector should be considered an anomaly. Based on this reasoning, we defined two new features (mal_trait1 and mal_trait2) derived by observing the number of /trailer, /xref, or /startxref occurrences (which are typically the same) together with keyword features that are indicative of possible malicious content. These indicators of malicious content for each of these two new features include (a) presence of JavaScript and (b) the presence of one or more embedded files. The anomaly-based features are explained below:

- mal_trait1: This is a new feature being proposed to represent the situation where /xref, /trailer, and /startxref are found only once in the PDF file, but with JavaScript detected within the file as well. This could indicate the injection or embedding of JavaScript code with an automated tool (such as Metasploit), since having only one occurrence of the aforementioned three keywords does not suggest user modification.

- mal_trait2: This is a new feature being proposed to represent the situation where /xref, /trailer, and /startxref are only found once in the PDF file, but an embedded file is also detected (regardless of whether JavaScript is present or not). This could also indicate that another file was injected or embedded within the PDF file using an automated tool (such as Metasploit), since having only one occurrence of the aforementioned three keywords does not suggest user modification.

- mal_trait3: The purpose of this new feature is to search for the presence of both JavaScript code and embedded files within the PDF file. The intuition behind this feature is that the JavaScript code can be used to launch a malicious embedded file.

- diff_obj: This feature captures anomalies observed with the opening and closing tags of objects in a PDF file as described in [22]. Each object in the file is expected to begin with an opening tag (obj) and have a corresponding closing tag (endobj). A difference in the occurrences of the opening and closing tags indicates possible file corruption (usually a missing closing tag). Such corruption can be a deliberate obfuscation technique designed to bypass parsing tools that strictly conform to the PDF standard. On the other hand, the file will still be rendered correctly by PDF readers, thus enabling the intended malicious activity to occur.

- diff_stream: This feature captures anomalies in a similar manner to diff_obj, by recording the occurrences of ‘stream’ and ‘endstream’, which are the opening and closing tags of stream objects. According to [22], this evasive technique of omitting a stream object tag is intended to corrupt the file such that parsing tools within detectors will be confused, but the file will still be rendered and shown to the user by reader applications.

- mal_traits_all: This is a new composite feature that is intended to help with the identification of files that exhibit one or more of the above five anomalous features. The intuition behind this is to create a robust feature that will maintain its relevance even if new techniques evolve to defeat a subset of the new features. For instance, the ability to obfuscate the /trailer, /xref, or /startxref values may produce errors in capturing the mal_trait1 and mal_trait2 features or make them obsolete in the future. However, mal_traits_all will still remain relevant in the presence of such obfuscation because it is created as a compound feature. Moreover, the failure of extraction tools could lead to missing or erroneous values for some of the standard features. The mal_traits_all feature therefore provides an indicator that has resilience against the occurrence of such errors.
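The six anomaly-based features described above can be derived from keyword counts along the following lines. This is a hedged sketch rather than the extraction script used in this paper: the function name `anomaly_features`, the dictionary keys for the counts, and the encoding of the three mal_trait features and mal_traits_all as 0/1 flags are assumptions made for illustration, since the exact value encoding is not specified in the text.

```python
def anomaly_features(counts: dict) -> dict:
    """Derive the six anomaly-based features from keyword counts (assumed keys)."""
    # Exactly one xref/trailer/startxref: no sign of a user-made incremental update.
    single_update = all(
        counts.get(k, 0) == 1 for k in ("xref", "trailer", "startxref")
    )
    has_js = counts.get("/JS", 0) + counts.get("/JavaScript", 0) > 0
    has_embedded = counts.get("/EmbeddedFile", 0) > 0

    feats = {
        "mal_trait1": int(single_update and has_js),
        "mal_trait2": int(single_update and has_embedded),
        "mal_trait3": int(has_js and has_embedded),
        # Mismatch between opening and closing tags.
        "diff_obj": abs(counts.get("obj", 0) - counts.get("endobj", 0)),
        "diff_stream": abs(counts.get("stream", 0) - counts.get("endstream", 0)),
    }
    # Composite flag: set when any of the five features above is non-zero.
    feats["mal_traits_all"] = int(any(feats.values()))
    return feats

# Hypothetical counts: a single-trailer file with JavaScript and one unclosed object.
injected = {
    "xref": 1, "trailer": 1, "startxref": 1,
    "/JS": 1, "/JavaScript": 1, "/EmbeddedFile": 0,
    "obj": 12, "endobj": 11, "stream": 3, "endstream": 3,
}
print(anomaly_features(injected))
```

Because mal_traits_all is computed from all five indicators, it stays set in this example through diff_obj even if the JavaScript keywords were missed by the extractor.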

4.4. Ensemble Learning Classifiers

In this section, we provide brief descriptions of the ensemble learners used in our proposed system. The ensemble classifiers are first evaluated using the initial 29 structural features, and then using all 35 features, including the anomaly-based ones. The trained ensemble learners are also evaluated on the three reverse mimicry attack sets. The results of these experiments are presented in Section 5.

4.4.1. Random Committee

This is an ensemble learner that utilizes randomizable base classifiers to build an ensemble. It builds each base classifier using a different random number seed but based on the same data. Hence, a randomizable base classifier must be chosen, as it does not accept non-randomizable classifiers such as J48, Simple Logistic, or rule-based classifiers. Random Committee uses the same type of base classifier for every member, e.g., Random Tree. Different seeds are used to generate different random numbers for the underlying base learner which, although it uses the same mechanism, will produce a different model as a result of being initialized differently. With Random Committee, since each base learner is built from the same data, diversity of models can only come from random behaviour. The outcomes of these models are averaged to generate a final prediction.
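The mechanism can be sketched in plain Python as follows. This is an illustrative toy and not the classifier configuration used in our experiments: `RandomStump` is a hypothetical, deliberately weak stand-in for a randomizable base learner such as Random Tree, and the two-feature data is made up. What the sketch shows is the committee principle: every member sees the same data, differs only in its seed, and the members' outputs are averaged.

```python
import random

class RandomStump:
    """Hypothetical weak randomizable learner: random feature, random threshold."""

    def __init__(self, seed):
        self.rng = random.Random(seed)

    def fit(self, X, y):
        self.feature = self.rng.randrange(len(X[0]))
        values = [row[self.feature] for row in X]
        self.threshold = self.rng.uniform(min(values), max(values))
        above = [lab for row, lab in zip(X, y) if row[self.feature] > self.threshold]
        below = [lab for row, lab in zip(X, y) if row[self.feature] <= self.threshold]
        prior = sum(y) / len(y)
        # Estimated probability of the malicious class (label 1) on each side.
        self.p_above = sum(above) / len(above) if above else prior
        self.p_below = sum(below) / len(below) if below else prior
        return self

    def predict_proba(self, row):
        return self.p_above if row[self.feature] > self.threshold else self.p_below

def random_committee(X, y, n_members=10):
    """Train every member on the same data; only the seed differs."""
    return [RandomStump(seed).fit(X, y) for seed in range(1, n_members + 1)]

def committee_proba(members, row):
    """Average the members' outputs to obtain the final prediction."""
    return sum(m.predict_proba(row) for m in members) / len(members)

# Made-up two-feature data: label 1 = malicious, 0 = benign.
X = [[0, 0], [1, 0], [0, 1], [5, 4], [6, 5], [5, 6]]
y = [0, 0, 0, 1, 1, 1]
members = random_committee(X, y)
print(committee_proba(members, [6, 6]), committee_proba(members, [0, 0]))
```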

4.4.2. Random Subspace

Random Subspace [32] is an ensemble learner that trains each of its models on a randomly chosen subspace of the feature space, i.e., on the training samples restricted to a random subset of the features. The outputs of the models are then combined by a simple majority vote.

4.4.3. Random Forest

Random Forest [33] combines decision trees with bagging (bootstrap aggregating), and retains many of the benefits of decision trees while being able to handle a large number of features. Each model in the ensemble uses a randomly drawn subset of the training set, and the combined outcome is derived from a majority vote, with each model having equal weight. Random Forest has been widely applied to different classification problems, and generally shows very good performance compared to other non-ensemble learners in many problem domains.
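The two ingredients named above, bootstrap sampling and an equally weighted majority vote, can be sketched as follows. The helper names `bootstrap_sample` and `majority_vote` are hypothetical, and the decision-tree training step itself is omitted, so this is an outline of the bagging principle rather than a Random Forest implementation.

```python
import random
from collections import Counter

def bootstrap_sample(X, y, rng):
    """Draw len(X) training instances with replacement (the bagging step)."""
    idx = [rng.randrange(len(X)) for _ in X]
    return [X[i] for i in idx], [y[i] for i in idx]

def majority_vote(predictions):
    """Combine equally weighted member predictions for a single instance."""
    return Counter(predictions).most_common(1)[0][0]

rng = random.Random(0)
X = [[1], [2], [3], [4]]
y = [0, 0, 1, 1]
# Each tree in the ensemble would be trained on its own resampled copy.
X_boot, y_boot = bootstrap_sample(X, y, rng)
print(X_boot, y_boot)
print(majority_vote([1, 0, 1]))
```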

4.4.4. AdaBoost

AdaBoost is based on Boosting, which incrementally builds an ensemble by training each new model instance to emphasize the training instances that were misclassified in previous iterations. Boosting [34] iteratively builds a succession of models, with each one being trained on a dataset in which previously misclassified instances are given more weight. All of the models are then weighted according to their success and the outputs are combined by voting or averaging. With AdaBoost, the training set does not need to be large to achieve good results, since the same training set is used iteratively.
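The reweighting step can be made concrete with a small example. The sketch below follows the classic binary AdaBoost formulation, in which a model with weighted error ε receives the vote weight α = ½ ln((1 − ε)/ε); the implementation used in our experiments may differ in detail, and the function name `adaboost_round` is an assumption.

```python
import math

def adaboost_round(weights, correct):
    """One reweighting step of classic binary AdaBoost.

    weights: current instance weights (summing to 1)
    correct: booleans, True where the current model classified the instance correctly
    Assumes the weighted error lies strictly between 0 and 1.
    """
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    alpha = 0.5 * math.log((1 - error) / error)  # model weight in the final vote
    # Shrink the weights of correct instances, grow those of misclassified ones.
    updated = [
        w * math.exp(-alpha if ok else alpha) for w, ok in zip(weights, correct)
    ]
    total = sum(updated)
    return alpha, [w / total for w in updated]

# Four equally weighted instances, the last one misclassified.
alpha, new_weights = adaboost_round([0.25] * 4, [True, True, True, False])
print(alpha, new_weights)
```

In this example the single misclassified instance ends the round holding half of the total weight, so the next model in the succession is pushed to classify it correctly.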

4.4.5. Stacking

This is also called Stacked Generalization [35]. It combines multiple base learners by introducing the concept of a meta-learner and can be used to combine models of different types, unlike boosting or bagging-based ensemble learners. The training set is split into two non-overlapping sets and the first part is used to train the base learners while testing them on the second part. Using the prediction/classification outcomes from the test set as inputs, and correct labels as outputs, the meta-learner is trained to derive a final classification outcome.
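A toy version of this procedure is sketched below. Everything in it is illustrative: the two base learners are fixed hypothetical threshold rules standing in for models already trained on the first part of the data, the held-out data is made up, and the meta-learner is a simple lookup table rather than the classifier used in our experiments.

```python
from collections import Counter, defaultdict

def fit_meta_learner(base_outputs, labels):
    """Learn the most common true label for each tuple of base-learner outputs."""
    table = defaultdict(Counter)
    for outputs, label in zip(base_outputs, labels):
        table[tuple(outputs)][label] += 1
    fallback = Counter(labels).most_common(1)[0][0]
    lookup = {key: c.most_common(1)[0][0] for key, c in table.items()}
    return lambda outputs: lookup.get(tuple(outputs), fallback)

# Hypothetical base learners, assumed to have been trained on the first part.
base_learners = [
    lambda x: int(x[0] > 0),  # rule on feature 0
    lambda x: int(x[1] > 2),  # rule on feature 1
]

# Second, non-overlapping part of the training set (made-up values).
holdout_X = [(0, 0), (1, 0), (0, 5), (3, 4), (2, 9), (0, 1)]
holdout_y = [0, 0, 1, 1, 1, 0]

# Base-learner predictions on the held-out part become the meta-learner's inputs.
meta_inputs = [[h(x) for h in base_learners] for x in holdout_X]
meta = fit_meta_learner(meta_inputs, holdout_y)

def stacked_predict(x):
    return meta([h(x) for h in base_learners])

print(stacked_predict((4, 0)), stacked_predict((0, 7)))
```

Here the meta-learner learns from the held-out predictions that the second rule is the reliable one, and it follows that rule when the two base learners disagree.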

5. Experiments and Results

In this section, we present the results of the experiments for quantifying the impact of the new features on the performance of ensemble learning classifiers (the core component of our proposed overall approach). In the previous section, we already explained how the features provide resilience against some obfuscation and extraction errors. Ideally, the new features should not have a negative impact on the classification accuracy when incorporated with the existing ones. First, a baseline experiment is performed where we train the five ensemble classifiers using only the original 29 features. Afterwards, a second set of experiments is carried out with the enhanced set containing all the 35 features. The configurations of the ensemble learners are shown in Table 2.

Table 2. Ensemble Classifier Configurations.

5.1. Original Feature Set Results

Table 3 presents the 10-fold cross-validation results of the five ensemble classifiers trained using the original 29 structural features extracted from the PDF samples in the dataset. These results are based on the 10,025 samples of the Evasive-PDFMal2022 dataset. From the table, it can be observed that the Random Forest, Random Committee, and Random Subspace models each yielded an overall accuracy above 99%. The Stacking and AdaBoost models obtained an overall accuracy of 98.78% and 98.63%, respectively. These results show that the ensemble learners performed well with the 29 baseline structural features, since all of the classifiers showed >98% accuracy.

Table 3. Ensemble classifiers results without new features (10-fold CV).
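For readers unfamiliar with the evaluation protocol, the following sketch shows how 10-fold cross-validation partitions the 10,025 samples so that every sample is used for testing exactly once, together with the accuracy measure. The function names are hypothetical, the shuffling seed is arbitrary, and the plain (non-stratified) split is an assumption; the tooling used in our experiments may partition the data differently.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is tested exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test

def accuracy(y_true, y_pred):
    """Fraction of instances whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

splits = list(k_fold_indices(10025))
# Each fold holds roughly one tenth of the dataset.
print(len(splits), sorted({len(test) for _, test in splits}))
```

The overall accuracy reported for a classifier is then obtained by training on each training partition, predicting the corresponding test fold, and scoring the pooled predictions.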

5.2. Enhanced Feature Set Results

Table 4 presents the 10-fold cross-validation results of the five ensemble classifiers trained using the enhanced set with 35 features. These results are based on the 10,025 samples of the Evasive-PDFMal2022 dataset. From the table, it can be observed that the performance of Random Forest improved, with the overall accuracy increasing slightly to 99.33%. The AdaBoost and Stacking models also improved, with accuracy rising to 98.83% and 98.84%, respectively. On the other hand, the overall accuracy of Random Subspace and of Random Committee each dropped slightly, by 0.06%. These results show that the introduction of the new features did not have a material negative impact on the ensemble classifiers. However, our main goal is to examine whether these features provide resilience by improving the performance of the models in adversarial scenarios. Our next set of experiments on the reverse mimicry attack dataset examines the impact of the novel features on the performance of the ensemble learning models.

Table 4.

Ensemble classifiers results with the new features (10-fold CV).

5.3. Investigating the Effect of the New Features against the Reverse Mimicry Attacks

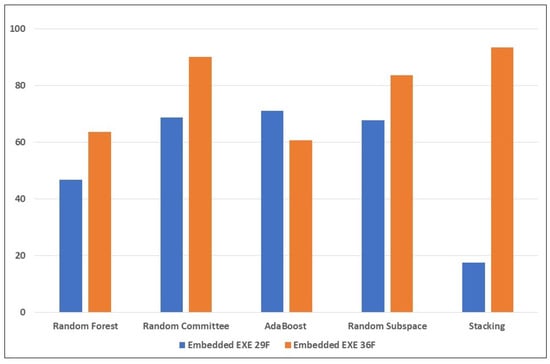

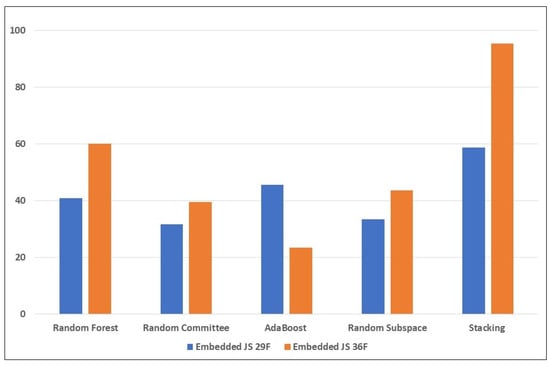

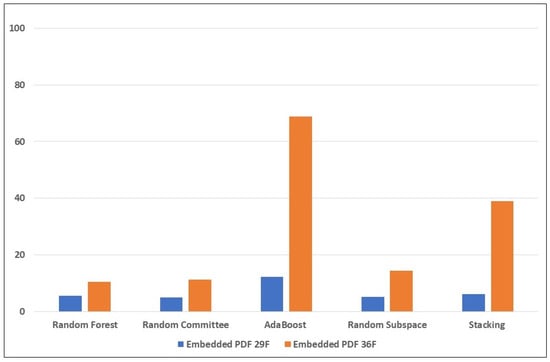

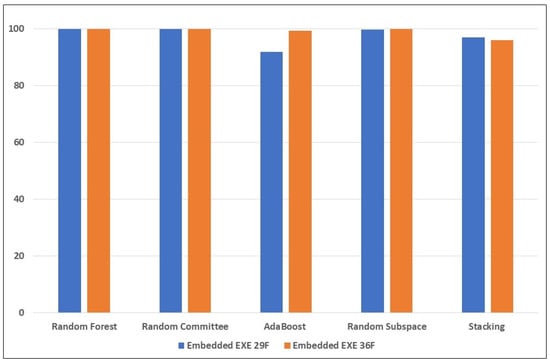

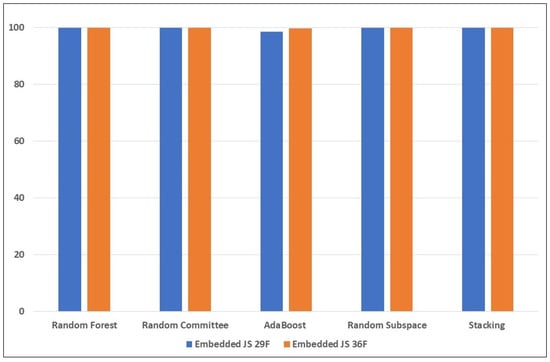

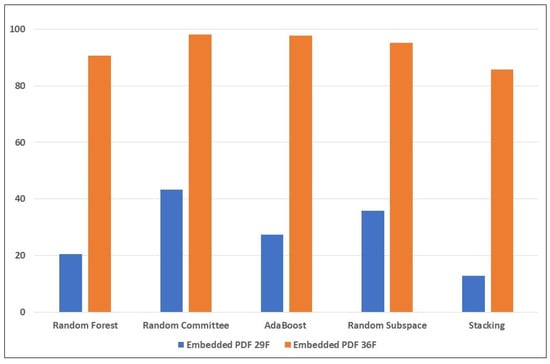

As mentioned earlier, the reverse mimicry dataset consists of three content injection attacks, each with 500 samples: (a) embedded executable, (b) embedded JavaScript, and (c) embedded PDF. The experiments were conducted by training the ensemble models with all of the Evasive-PDFMal2022 samples and then using each 500-sample attack set in the reverse mimicry dataset as a testing set. The models were first trained with only the 29 baseline structural features and then evaluated on the attack samples. The same process was repeated with the full set of 35 features, including the new anomaly-based features. The results of these experiments are shown in Table 5 and Table 6. The numbers in brackets in the table headings denote the number of samples used in the evaluation (a few of the initial samples failed during the experiments).

Table 5.

Reverse mimicry attack dataset—ensemble classifiers results without the new features.

Table 6.

Reverse mimicry attack dataset—ensemble classifiers results with the new features.
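Since every sample in an attack set is malicious, the detection rate is simply the share of evaluated samples that a model flags as malicious. The sketch below shows this calculation under an assumed encoding (1 for flagged, 0 for missed, `None` for a sample that failed during processing); the function name and the example predictions are hypothetical and are not taken from the tables.

```python
def detection_rate(predictions):
    """Return (detected, evaluated, percentage) for an all-malicious test set.

    predictions: 1 = flagged malicious, 0 = missed, None = sample failed
    during processing and is excluded from the denominator.
    """
    evaluated = [p for p in predictions if p is not None]
    detected = sum(evaluated)
    return detected, len(evaluated), 100.0 * detected / len(evaluated)

# Hypothetical example: five attack samples, one of which failed processing.
detected, evaluated, rate = detection_rate([1, 0, 1, None, 1])
```

Excluding failed samples from the denominator is why the counts in brackets in the table headings can be slightly lower than 500.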

From Table 5 (without the new features), it can be seen that the best result for embedded EXE was obtained by AdaBoost, with 71.2%, i.e., 355 samples detected. For the embedded JavaScript, the best was the Stacking model, which detected 293 samples (58.8%). In the embedded PDF set, the highest detection rate was only 12.2% (61 samples). This shows that the embedded PDF was the most challenging attack to detect. One possible reason for this could be the lack of structural features (keywords) that directly indicate when a PDF file is embedded within another PDF file. By contrast, the feature set contains two keywords directly related to JavaScript, which may make it easier to detect embedded JavaScript attacks. Another possible reason could be the way the embedded PDF attack was crafted. The embedded PDF can be used to nest other features which will not appear within the parent benign PDF, thus tricking the classifier into predicting the sample as benign.

From Table 6 (with the new anomaly-based features), there is significant improvement in the detection of the mimicry attacks. Random Committee and Stacking detected 449 (90.1%) and 466 (93.6%) samples of the embedded EXE attack, respectively. For the embedded JavaScript, Stacking also obtained 477 (95%) detected samples, while for the embedded PDF, the highest was AdaBoost, with 68.9% (344 samples). Again, this highlights how challenging it is to detect the PDF embedding attack, for the reasons mentioned earlier. However, there is improvement compared to the results in the previous table; this can be attributed to the new anomaly-based features introduced into the feature set. The significant improvement in the performance of the Stacking learning model highlights the impact of the anomaly-based features introduced into the mix. The new features mal_trait2, mal_trait3, and mal_traits_all are most likely to be responsible for enhancing the ability of the ensemble learners to detect more embedded EXE samples. The new feature mal_trait2 is likely to have had the most impact in improving the models’ ability to detect embedded PDF samples. Figure 3, Figure 4 and Figure 5 visually depict the percentages of detected samples with and without the new features for each of the three types of reverse mimicry attacks investigated.

Figure 3.

Performance of the ensemble learners on the embedded EXE reverse mimicry samples (with and without the new features).

Figure 4.

Performance of the ensemble learners on the embedded JS reverse mimicry samples (with and without the new features).

Figure 5.

Performance of the ensemble learners on the embedded PDF reverse mimicry samples (with and without the new features).

5.4. Experimenting with Training Set Augmentation

At the initial stage of our investigation, we hypothesized that the detection of adversarial samples could be facilitated by augmenting the training set with some examples from the attack dataset. This is expected to enable the classifier models to learn the characteristics of the adversarial samples and be equipped to classify new unseen examples correctly. Based on this hypothesis, another set of experiments was performed, where 10% of the samples from each type of content injection attack set was taken and used to augment the training set. The results of the experiments are shown in Table 7 and Table 8.

Table 7.

Reverse mimicry attack dataset—ensemble classifiers results with training set augmentation but without the new features.

Table 8.

Reverse mimicry attack dataset—ensemble classifiers results with training set augmentation and the new features included.
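The augmentation step can be sketched as follows. This is a minimal illustration under stated assumptions: the function name, the random selection, and the fixed seed are hypothetical, and the sketch assumes that the samples moved into the training set are excluded from the corresponding test set.

```python
import random

def augment_with_attacks(train_set, attack_sets, fraction=0.10, seed=0):
    """Move a fraction of each attack set into the training set.

    train_set: list of (sample, label) pairs.
    attack_sets: dict mapping an attack name to a list of samples, all malicious.
    Returns the augmented training set and the remaining samples per attack.
    """
    rng = random.Random(seed)
    augmented = list(train_set)
    held_out = {}
    for name, samples in attack_sets.items():
        n_move = round(len(samples) * fraction)
        moved = set(rng.sample(range(len(samples)), n_move))
        # Attack samples are labelled malicious (1) when added to the training set.
        augmented += [(samples[i], 1) for i in sorted(moved)]
        # Assumption: moved samples are not reused for testing.
        held_out[name] = [s for i, s in enumerate(samples) if i not in moved]
    return augmented, held_out

# Placeholder identifiers stand in for feature vectors.
train_set = [(f"train_{i}", i % 2) for i in range(20)]
attack_sets = {
    "embedded_exe": [f"exe_{i}" for i in range(500)],
    "embedded_js": [f"js_{i}" for i in range(500)],
    "embedded_pdf": [f"pdf_{i}" for i in range(500)],
}
augmented, held_out = augment_with_attacks(train_set, attack_sets)
```

With 500 samples per attack type and a 10% fraction, 50 samples from each attack set are added to the training set.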

From Table 7, the performance of the ensemble learners when trained without the new features seems to confirm our hypothesis in the case of the embedded EXE and embedded JavaScript reverse mimicry attacks. The Random Forest and Random Committee models detected all the samples from both attacks. However, they still performed poorly when tested with the embedded PDF sample set, despite the training set having been augmented with 50 samples from the embedded PDF set. Augmenting the training set with adversarial examples clearly made the embedded EXE and embedded JavaScript mimicry attacks much easier to detect, even without the new features.

In Table 8, the results show dramatic improvement when the new anomaly-based features were utilized in the training and testing sets (after augmenting the training set with adversarial samples). Figure 6, Figure 7 and Figure 8 visually depict the percentages of detected samples with and without the new features, and with data augmentation, for each of the three types of reverse mimicry attacks investigated. It can be seen that Random Committee detected 98% of the embedded PDF attacks compared to only 43.2% without the new features. These results show that data augmentation as a means to improve detection of adversarial samples is effective only when it is combined with the right feature set. A possible explanation for the significant improvement in embedded PDF detection due to the new features is as follows: it is highly likely that the combination of the anomaly-based features with other features produced new patterns that were learned by the ensemble models, and these patterns were present in the samples with which the training set was augmented. In a nutshell, we can conclude that the new anomaly-based features significantly enhanced the robustness of the ensemble learning models against reverse mimicry attacks via content injection.

Figure 6.

Performance of the ensemble learners on the embedded EXE reverse mimicry samples (with and without the new features), using training set augmented with attack samples.

Figure 7.

Performance of the ensemble learners on the embedded JS reverse mimicry samples (with and without the new features), using training set augmented with attack samples.

Figure 8.

Performance of the ensemble learners on the embedded PDF reverse mimicry samples (with and without the new features), using training set augmented with attack samples.

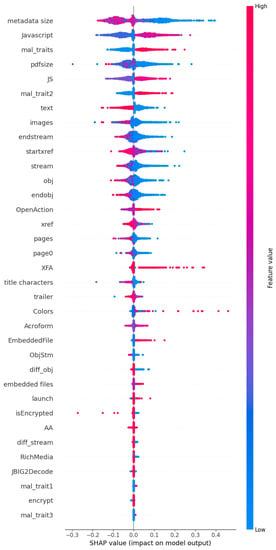

5.5. Explaining and Interpreting the Ensemble Model Using SHapley Additive exPlanations

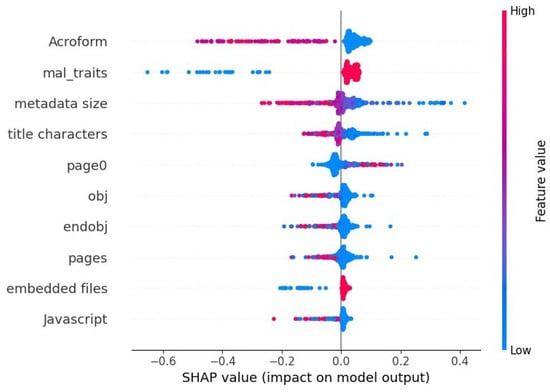

In this section, we explain the ensemble model for evasive malicious PDF detection using SHapley Additive exPlanations (SHAP). SHAP was introduced by Lundberg and Lee in 2017 [36] as a model-agnostic method of explaining machine learning models based on Shapley values taken from cooperative game theory. SHAP determines the impact of each feature by comparing the model's output with and without that feature, averaged over the possible combinations of the remaining features. Thus, it provides an understanding of how much each feature contributes to the prediction. Figure 9 shows a SHAP summary chart from which we can visualize the importance of the features and their impact on predictions. This plot was generated by building an ensemble model with 80% of the dataset and testing on the remaining 20%. The features are sorted in descending order of SHAP value magnitudes over all testing samples. The SHAP values are also used to show the distribution of the impacts each feature has on the prediction. The colour represents the feature value, with red indicating high and blue indicating low.

Figure 9.

Each feature's impact on the model's predictions as determined by SHAP, for the ensemble model's prediction on test samples that do not contain reverse mimicry content injection.
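To make the notion of a Shapley value concrete, the sketch below computes exact Shapley values by enumerating every subset of features for a tiny hypothetical scoring function. The model, the three feature positions, and the all-zero baseline are assumptions chosen for illustration; practical SHAP implementations rely on approximations or on model structure, because this enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline)."""
    n = len(x)

    def value(subset):
        # Features in the subset take their real value, the rest the baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

def toy_model(features):
    """Hypothetical score: one additive feature plus an interaction term."""
    return 2 * features[0] + features[1] * features[2]

x = [1, 1, 1]
baseline = [0, 0, 0]
phi = shapley_values(toy_model, x, baseline)
```

For this toy model the first feature receives a contribution of 2, and the two interacting features share their joint contribution equally (0.5 each). The contributions sum to the difference between the model output for `x` and for the baseline, which is the additivity property that makes SHAP values straightforward to read from a summary chart.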

From Figure 9, we can see that metadata size, JavaScript, and mal_traits_all were the three features with the most impact, according to the SHAP values. The plot also shows that when the metadata size is low (blue), the model predicts positively, i.e., as a malicious PDF, in most cases. However, when the metadata size is large (red), the prediction is pushed towards classifying the document as benign. We can also see that in most cases when JavaScript or JS is present (red), the model predicts malicious PDF, while it predicts benign PDF if it is absent (blue). When mal_traits is present (red), malicious PDF is predicted, and when it is not present (blue), in many instances this led to a prediction of benign PDF. The same is true for mal_trait2. The plot also shows that for many test samples, when text, images, and the number of streams are low (blue endstream, stream) or the number of objects is low (blue obj and endobj), the PDF is likely to be predicted as malicious. For text, high values (red) indicate benign PDF in many cases, which makes sense because those will be genuine documents as opposed to crafted PDFs that have been manipulated for nefarious purposes. The plot also shows that the model predicts malicious PDF for many instances where XFA, OpenAction, and EmbeddedFile were present (red). Note that these plots only relate to the particular test set that was used and will differ for another test set with a different distribution of the features.

In Figure 10, the SHAP summary chart depicts the impacts of the top 10 features on an ensemble model's prediction on the embedded EXE reverse mimicry test set. It shows that the presence of Acroform (which is indicative of potential manual input into the document) has a negative impact on the prediction (i.e., benign is predicted), while its absence has the opposite effect. A negative impact is also seen when metadata size is large (red) or there is a large number of objects or pages in the PDF document. The presence of mal_traits (i.e., any of the new anomaly features) leads to a positive prediction, and so does the presence of embedded files.

Figure 10.

Each feature's impact on the model's predictions as determined by SHAP, for the ensemble model's prediction on test samples that consist of embedded EXE within the PDF files.

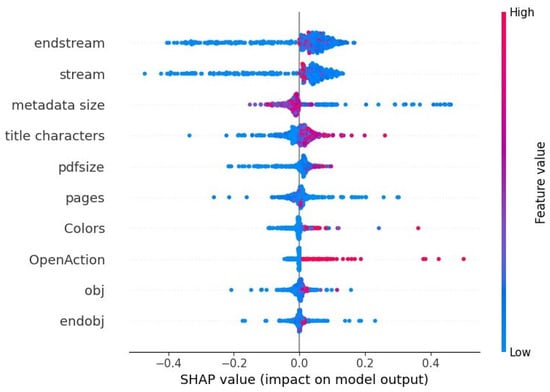

The SHAP summary chart in Figure 11 depicts the impacts of the top 10 features on an ensemble model's prediction on the embedded PDF reverse mimicry test set. It shows that a high number of streams (endstream and stream being red) indicates malicious PDF, while in some cases a low number of streams also indicates malicious PDF. This could mean that a combination with other features influences the prediction, or that some of these instances were incorrectly classified. The figure also shows that positive predictions (i.e., malicious PDF) are made when metadata size is low, when title characters are absent and the PDF size is large (which can be an indicator of an embedded PDF), and when OpenAction (which could be used to manipulate the embedded PDF) is present (red).

Figure 11.

Each feature's impact on the model's predictions as determined by SHAP, for the ensemble model's prediction on test samples that consist of embedded PDF within the PDF files.

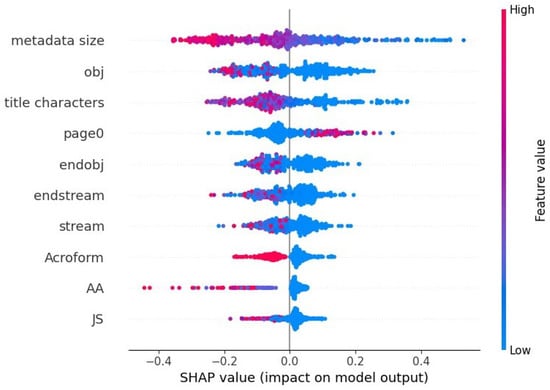

In Figure 12, the impacts of the top 10 features on an ensemble model's prediction on the embedded JavaScript reverse mimicry test set are shown. The model's positive (malicious PDF) predictions are associated with low metadata size, a smaller number of objects, fewer title characters, fewer streams, and the absence of Acroform. The presence of Acroform, the JS keyword, and the AA feature seem to be indicators of negative (benign PDF) prediction amongst the samples of embedded JavaScript from the reverse mimicry dataset used to analyze the model with SHAP. In a nutshell, these SHAP summary charts demonstrate the explainability of the models, which is crucial for increasing trust in our proposed approach while giving us insight into the models' decision-making.

Figure 12.

Each feature's impact on the model's predictions as determined by SHAP, for the ensemble model's prediction on test samples that consist of embedded JavaScript within the PDF files.

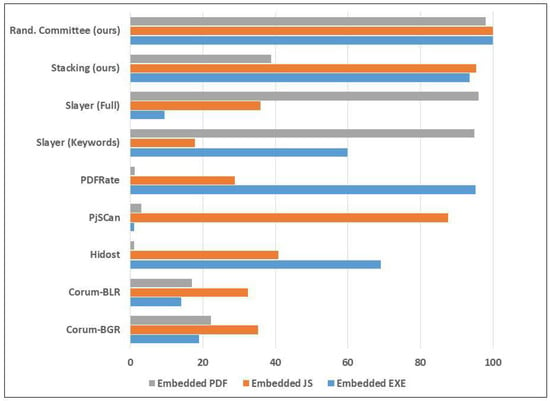

5.6. Comparing Our Results with Existing Works

In this section we present a comparison of our approach to other works in the literature using the reverse mimicry dataset. In a reverse mimicry attack, malicious content is injected into a benign file. This type of attack does not exploit any specific knowledge of the attacked system [17]. The dataset of 1500 evasive PDF files with injected content was used in [17] to evaluate several existing PDF detectors (Hidost, PJScan, PDFRate, Slayer Neo—Keyword and Full versions).

Corum et al. [30] employed visualization techniques for PDF malware detection. The results of their model on the reverse mimicry dataset are shown in Table 9. The best result from that paper was obtained using the Byte plot + Gabor + Random Forest classifier, which detected only 95 out of about 500 samples for EXE embedding; 176 out of 500 for JS embedding; and 111 out of 500 for PDF embedding. In Table 9 and Figure 13 this approach is named Corum-BGR. Their Byte plot + Local entropy + Random Forest approach detected only 70 out of 500 for EXE embedding; 162 out of 500 for JS embedding; and 85 out of 500 for PDF embedding. In Table 9 and Figure 13 this approach is named Corum-BLR.

Table 9.

Reverse mimicry attack dataset—comparison with existing works.

Figure 13.

Performance of the ensemble learners on the reverse mimicry samples, with training set augmented with attack samples.

From Table 9, the results of the first two rows indicate that the visualization techniques, which were reported to have achieved high accuracies on the Contagio PDF dataset, performed poorly when tested with the reverse mimicry attack samples. On the other hand, even though Slayer Neo struggled to detect embedded EXE and embedded JavaScript, it was quite effective in detecting embedded PDF attacks. This is because of the way the system was designed. Moreover, note that PDFRate was the only existing system that detected a high percentage of embedded JavaScript. This is because it was created specifically to detect JavaScript-bearing malicious PDF files. Note that these tools and approaches constitute the state-of-the-art in the domain of malicious PDF document detection. Table 9 and Figure 13 both illustrate that the approach proposed in this paper outperforms these state-of-the-art methods in terms of resilience to reverse mimicry content injection attacks.

6. Limitations of Our Proposed Approach

In this section we discuss the limitations of the proposed approach presented in this paper. The first one is that the extraction of the features is reliant upon existing static analysis tools (i.e., PDFMaLyzer, which in turn utilizes PDFiD). This means that the approach inherits the limitations of these tools. Hence, if a feature can be hidden from those tools, its extraction in our proposed system would be affected as well. However, the extended anomaly feature set is designed to counteract the tools' failure to some extent. A direction for future improvement is to make the underlying feature extraction tools more robust, or to extract a hybrid of complementary features that the system can use to make it resilient to such failures. Another limitation of our proposed approach is that it could be susceptible to obfuscation, whereby some of the features could be masked. A possible countermeasure for this is to perform content analysis rather than relying solely on the extraction of such features from structural keywords. The content analysis-based features could also provide a hybrid composite feature approach when combined with the structural and anomaly-based features.

7. Conclusions and Recommendation for Future Work

In this paper we presented a malicious PDF detection system based on ensemble learning with an enhanced feature set. The enhanced feature set consists of 6 new anomaly-based features which we have added to 29 structural features derived from existing PDF static analysis tools. In the first part of our experiments, testing five ensemble learning algorithms on the Evasive-PDFMal2022 dataset showed that the introduction of the new features did not diminish performance. The second part of our experiments, performed on the PDF reverse mimicry dataset, showed the robustness of the new features against content injection attacks designed to disguise malicious content by embedding it within benign PDF files. A comparison of our results with existing approaches, including Hidost, PJScan, PDFrate, Slayer Neo, and others, showed a significant improvement in detection rates with our proposed approach. The experiments conducted on the reverse mimicry dataset showed that the Random Committee ensemble learning model achieved 100% detection rates for embedded EXE and embedded JavaScript, and a 98% detection rate for embedded PDF, based on our enhanced feature set. The experiments also showed that data augmentation will not enhance the detection of adversarial samples unless accompanied by effective feature engineering, which our system incorporates through the new anomaly-based features. For future work, we recommend investigating how to improve the resilience of other types of existing PDF detection systems, e.g., those that utilize visualization approaches, against reverse mimicry attacks. Another recommendation for future work is to extend the system proposed in this paper with content-based features.

Author Contributions

Conceptualization, S.Y.Y.; Methodology, S.Y.Y. and A.B.; Software, S.Y.Y. and A.B.; Validation, A.B.; Formal analysis, A.B.; Resources, A.B.; Writing—original draft, S.Y.Y. and A.B.; Writing—review & editing, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original datasets used in this research work were taken from the public domain. The pre-processed datasets are available on request from the author.

Acknowledgments

This work is supported in part by the 2022 Cybersecurity research grant number PCC-Grant-202228, from the Cybersecurity Center at Prince Mohammad Bin Fahd University, Al-Khobar, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Singh, P.; Tapaswi, S.; Gupta, S. Malware detection in PDF and office documents: A survey. Inf. Secur. Glob. Perspect. 2020, 29, 134–153.

- Goud, N. Cyber Attack with Ransomware Hidden Inside PDF Documents. Available online: https://www.cybersecurity-insiders.com/cyber-attack-with-ransomware-hidden-inside-pdf-documents/ (accessed on 31 October 2022).

- Nath, H.V.; Mehtre, B. Ensemble learning for detection of malicious content embedded in pdf documents. In Proceedings of the 2015 IEEE International Conference on Signal Processing, Informatics, Communication and Energy Systems (SPICES), Kozhikode, India, 19–21 February 2015; pp. 1–5.

- Mimoso, M. MiniDuke Espionage Malware Hits Governments in Europe Using Adobe Exploits. Available online: https://threatpost.com/miniduke-espionage-malware-hits-governments-europe-using-adobe-exploits-022713/77569/ (accessed on 31 October 2022).

- Stevens, D. PDF Tools. Available online: https://blog.didierstevens.com/programs/pdf-tools/ (accessed on 25 September 2022).

- Stevens, D. Peepdf—PDF Analysis Tool. Available online: https://eternal-todo.com/tools/peepdf-pdf-analysis-tool (accessed on 25 September 2022).

- Bandla, K. PhoneyPDF: A Virtual PDF Analysis Framework. Available online: https://github.com/kbandla/phoneypdf (accessed on 8 November 2022).

- Gdelugre, G. PDF Walker: Frontend to Explore the Internals of a PDF Document with Origami. Available online: https://github.com/gdelugre/pdfwalker (accessed on 25 September 2022).

- Laskov, P.; Srndic, N. Static Detection of Malicious JavaScript-Bearing PDF Documents. In Proceedings of the 27th Annual Computer Security Applications Conference (ACSAC 2011), Orlando, FL, USA, 5–9 December 2011; pp. 373–382.

- Corona, I.; Maiorca, D.; Ariu, D.; Giacinto, G. Detection of malicious pdf-embedded javascript code through discriminant analysis of api references. In Proceedings of the 2014 Workshop on Artificial Intelligent and Security Workshop, Scottsdale, AZ, USA, 7 November 2014; pp. 47–57.

- Maiorca, D.; Ariu, D.; Corona, I. A pattern recognition system for malicious pdf files detection. In Machine Learning and Data Mining in Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2012; pp. 510–524.

- Srndic, N.; Laskov, P. Hidost: A Static Machine-learning-based Detector of Malicious Files. Eurasip J. Inf. Secur. 2016, 2016, 22.

- Smutz, C.; Stavrou, A. Malicious PDF detection using meta-data and structural features. In Proceedings of the 28th Annual Computer Security Applications Conference (ACSAC 2012), Orlando, FL, USA, 3–7 December 2012; pp. 239–248.

- Corum, A.; Jenkins, D.; Zheng, J. Robust PDF Malware Detection with Image Visualization and Processing Techniques. In Proceedings of the 2019 2nd International Conference on Data Intelligence and Security (ICDIS), South Padre Island, TX, USA, 28–30 June 2019; pp. 1–5.

- Kang, H.; Yuefei, Z.; Yubo, H.; Long, L.; Bin, L.; Wei, L. Detection of Malicious PDF Files Using a Two-Stage Machine Learning Algorithm. Chin. J. Electron. 2020, 28, 1165–1177.

- ISO 32000-1:2008; Document Management—Portable Document Format—Part 1: PDF 1.7. ISO: Geneva, Switzerland, 2008. Available online: https://www.iso.org/standard/51502.html (accessed on 30 October 2022).

- Maiorca, D.; Biggio, B. Digital Investigation of PDF Files: Unveiling Traces of Embedded Malware. IEEE Secur. Priv. Mag. Spec. Issue Digit. Forensics 2017, 17, 63–71.

- Khitan, S.J.; Hadi, A.; Atoum, J. PDF Forensic Analysis System using YARA. Int. J. Comput. Sci. Netw. Secur. 2017, 17, 77–85.

- Jeong, Y.; Woo, J.; Kang, A. Malware detection on byte streams of pdf files using convolutional neural networks. Secur. Commun. Netw. 2019, 2019, 8485365.

- Albahar, M.; Thanoon, M.; Alzilai, M.; Alrehily, A.; Alfaar, M.; Alghamdi, M.; Alassaf, N. Toward Robust Classifiers for PDF Malware Detection. Comput. Mater. Contin. 2021, 69.

- Bazzi, A.; Yoshikuni, O. Automatic Detection of Malicious PDF Files Using Dynamic Analysis. In Proceedings of the JSST 2013 International Conference on Simulation Technology, Tokyo, Japan, 11 September 2013; pp. 3–4.

- Falah, A.; Pan, L.; Huda, S.; Pokhrel, S.R.; Anwar, A. Improving Malicious PDF Classifier with Feature Engineering: A Data-Driven Approach. Future Gener. Comput. Syst. 2021, 115, 314–326.

- Jason, Z. MLPdf: An Effective Machine Learning Based Approach for PDF Malware Detection. arXiv 2018, arXiv:1808.06991.

- Jiang, J.; Song, N.; Yu, M.; Liu, C.; Huang, W. Detecting malicious pdf documents using semi-supervised machine learning. In Advances in Digital Forensics XVII. Digital Forensics 2021. IFIP Advances in Information and Communication Technology; Peterson, G., Shenoi, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2021; Volume 612.

- Torres, J.; Santos, S. Malicious PDF Documents Detection using Machine Learning Techniques—A Practical Approach with Cloud Computing Applications. In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP 2018), Funchal, Portugal, 22–24 January 2018; pp. 337–344.

- Abu Al-Haija, Q.; Odeh, A.; Qattous, H. PDF Malware Detection Based on Optimizable Decision Trees. Electronics 2022, 11, 3142.

- CIC. PDF Dataset: CIC-Evasive-PDFMal2022. Available online: https://www.unb.ca/cic/datasets/PDFMal-2022.html (accessed on 25 September 2022).

- Yerima, S.Y.; Bashar, A.; Latif, G. Malicious PDF detection Based on Machine Learning with Enhanced Feature Set. In Proceedings of the 14th International Conference on Computational Intelligence and Communication Networks, Al-Khobar, Saudi Arabia, 4–6 December 2022.

- Issakhani, M.; Victor, P.; Tekeoglu, A.; Lashkari, A.H. PDF Malware Detection based on Stacking Learning. In Proceedings of the 8th International Conference on Information Systems Security and Privacy (ICISSP 2022), Online, 9–11 February 2022; pp. 562–570.

- PRALAB. PDF Reverse Mimicry Dataset. Available online: https://pralab.diee.unica.it/en/pdf-reverse-mimicry/ (accessed on 31 October 2022).

- Lashkari, A.H. PDFMALyzer. Available online: https://github.com/ahlashkari/PDFMalLyzer (accessed on 25 September 2022).

- Ho, T.K. The Random Subspace Method for Constructing Decision Forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 832–844.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Freund, Y.; Schapire, R.E. Experiments with a new boosting algorithm. In Proceedings of the Thirteenth International Conference on Machine Learning, Bari, Italy, 3–6 July 1996; pp. 148–156.

- Wolpert, D.H. Stacked generalization. Neural Netw. 1992, 5, 241–259.

- Lundberg, S.M.; Lee, S.I. A Unified Approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).