An Effective YOLO-Based Proactive Blind Spot Warning System for Motorcycles

Abstract

1. Introduction

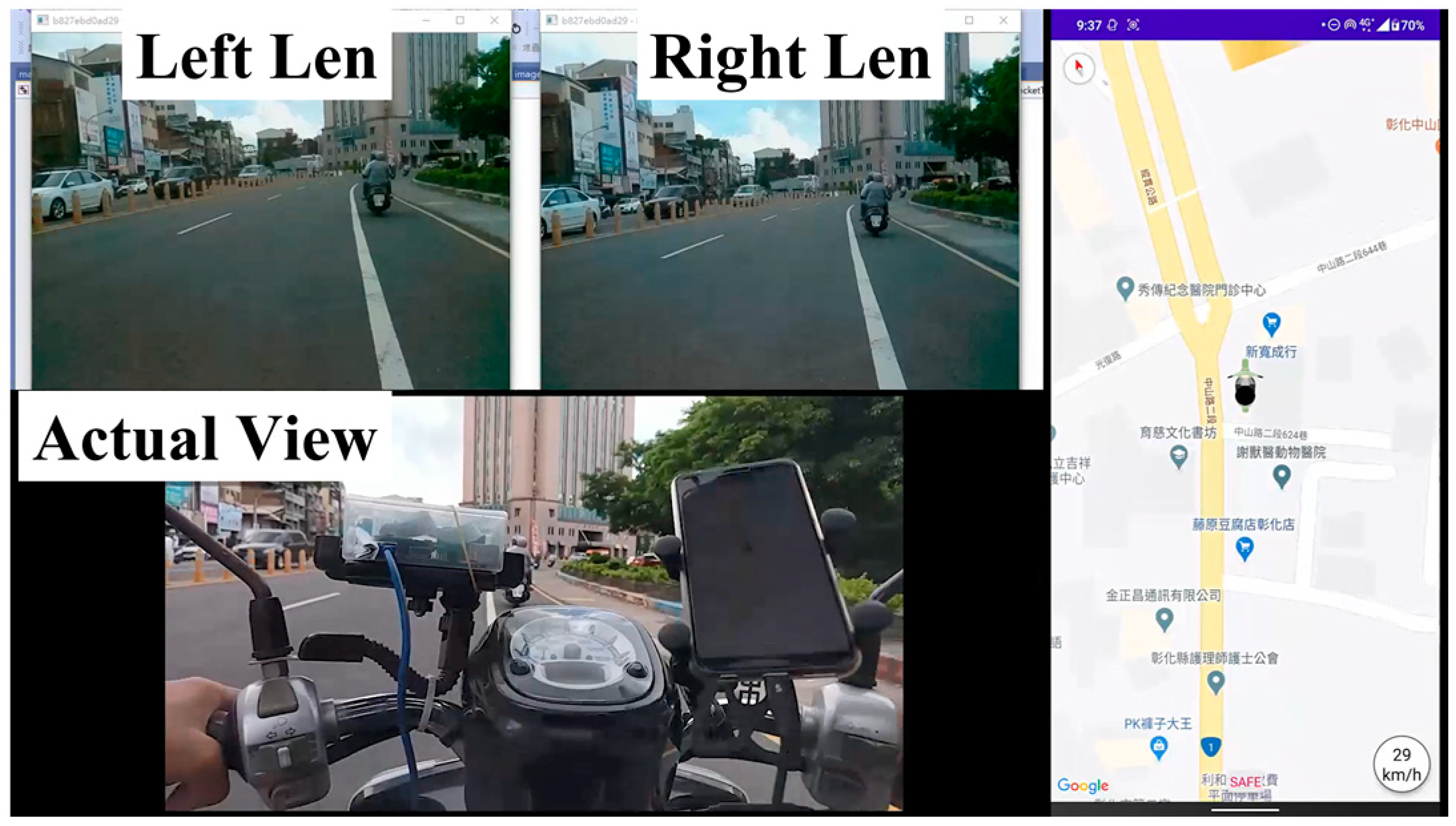

- By combining a dual-lens camera with artificial intelligence technology, the system estimates, in real time, the distance and relative position of the motorcycle with respect to a moving bus.

- Motorcycles equipped with the PBSW system receive an immediate warning when they enter the blind spot of the bus or the area of its inner wheel difference, which can substantially improve motorcyclist safety.

- The PBSW system has been implemented and tested under real-world conditions. Video clips and snapshots recorded during the real-world evaluation are presented in Section 4.3. In addition, the results for six performance metrics provide evidence of the system’s efficiency and reliability.

2. Related Work

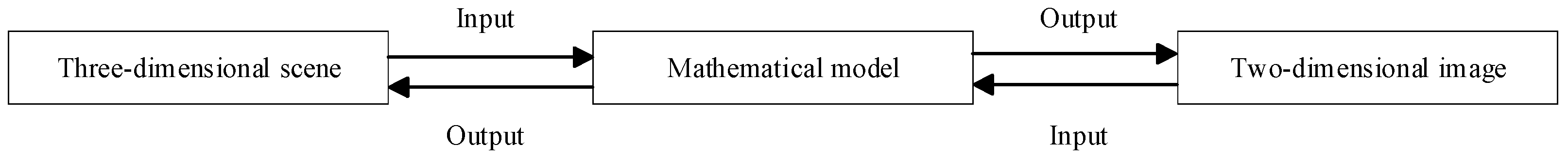

2.1. Camera Calibration and Correction

2.2. Image Recognition

2.3. Image Matching

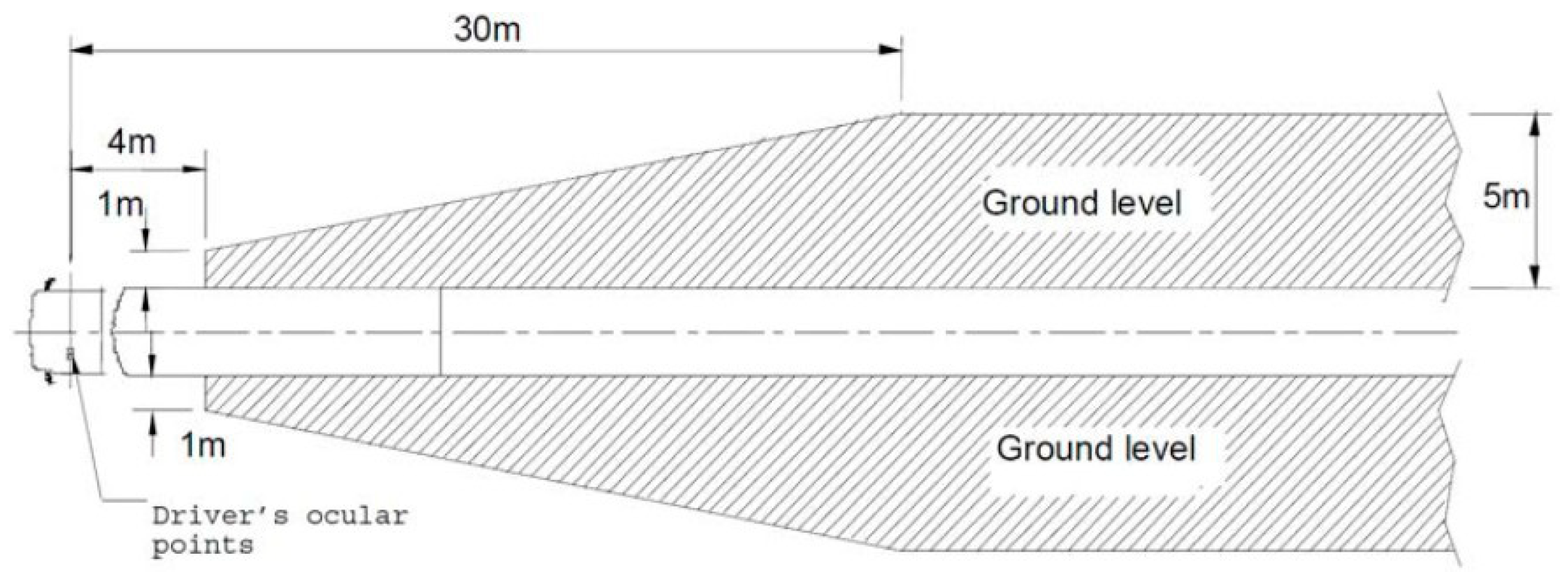

2.4. Regulations of Devices for Indirect Vision [35]

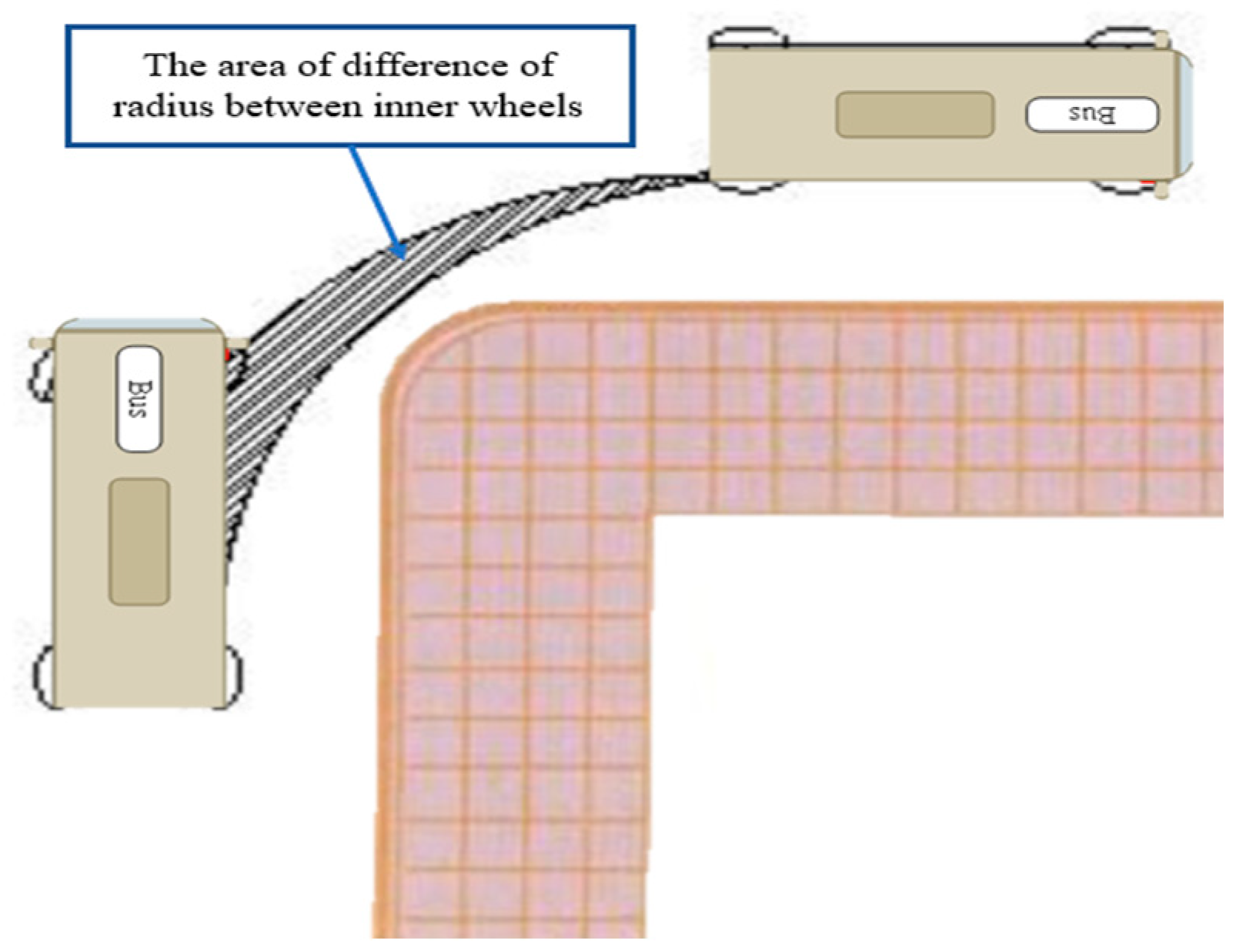

2.5. Inner Wheel Difference Calculation [5,36]

3. Design of the PBSW System

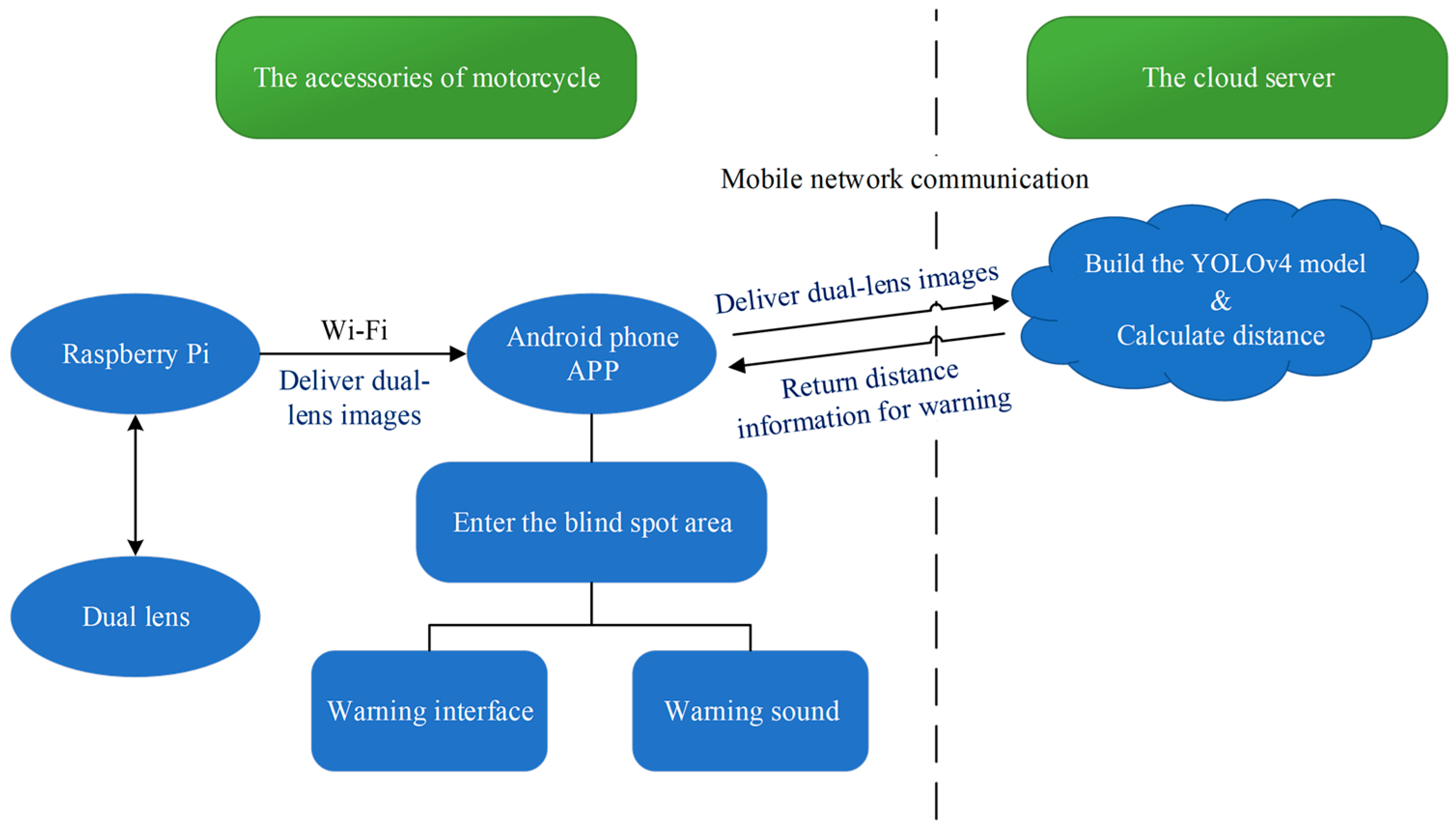

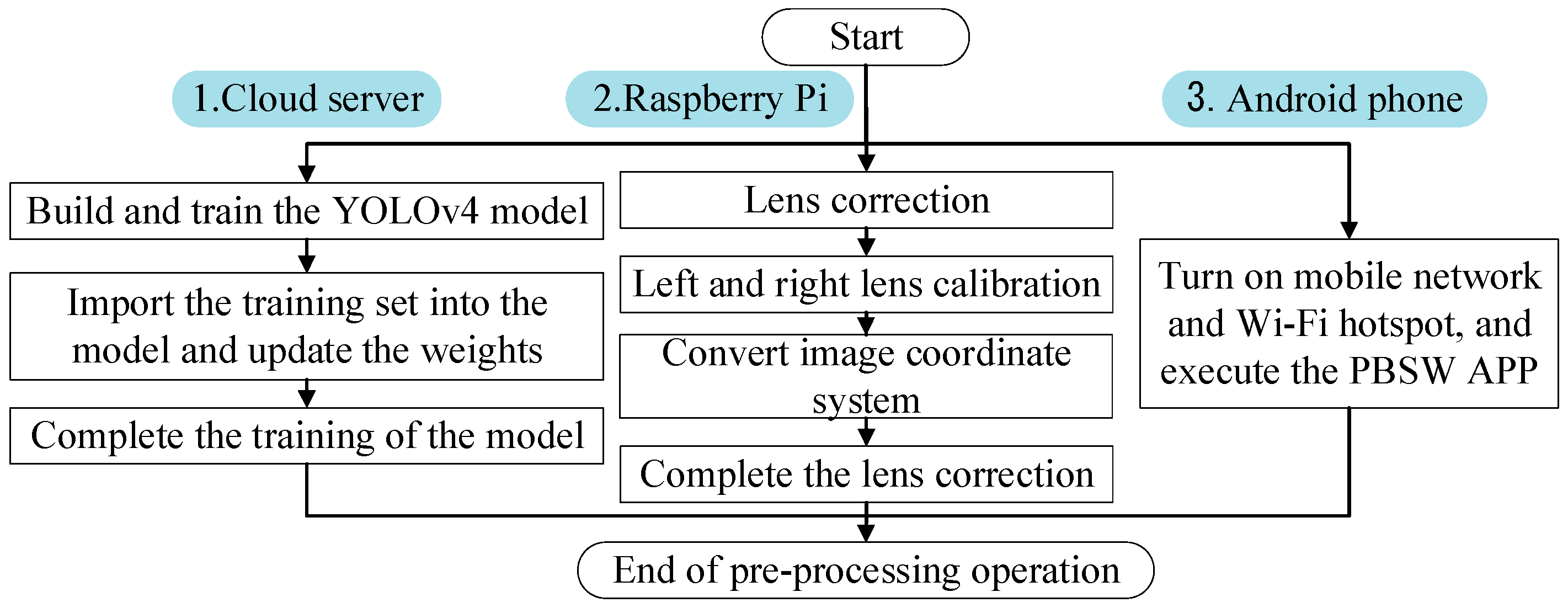

3.1. The Preprocessing Flow of PBSW

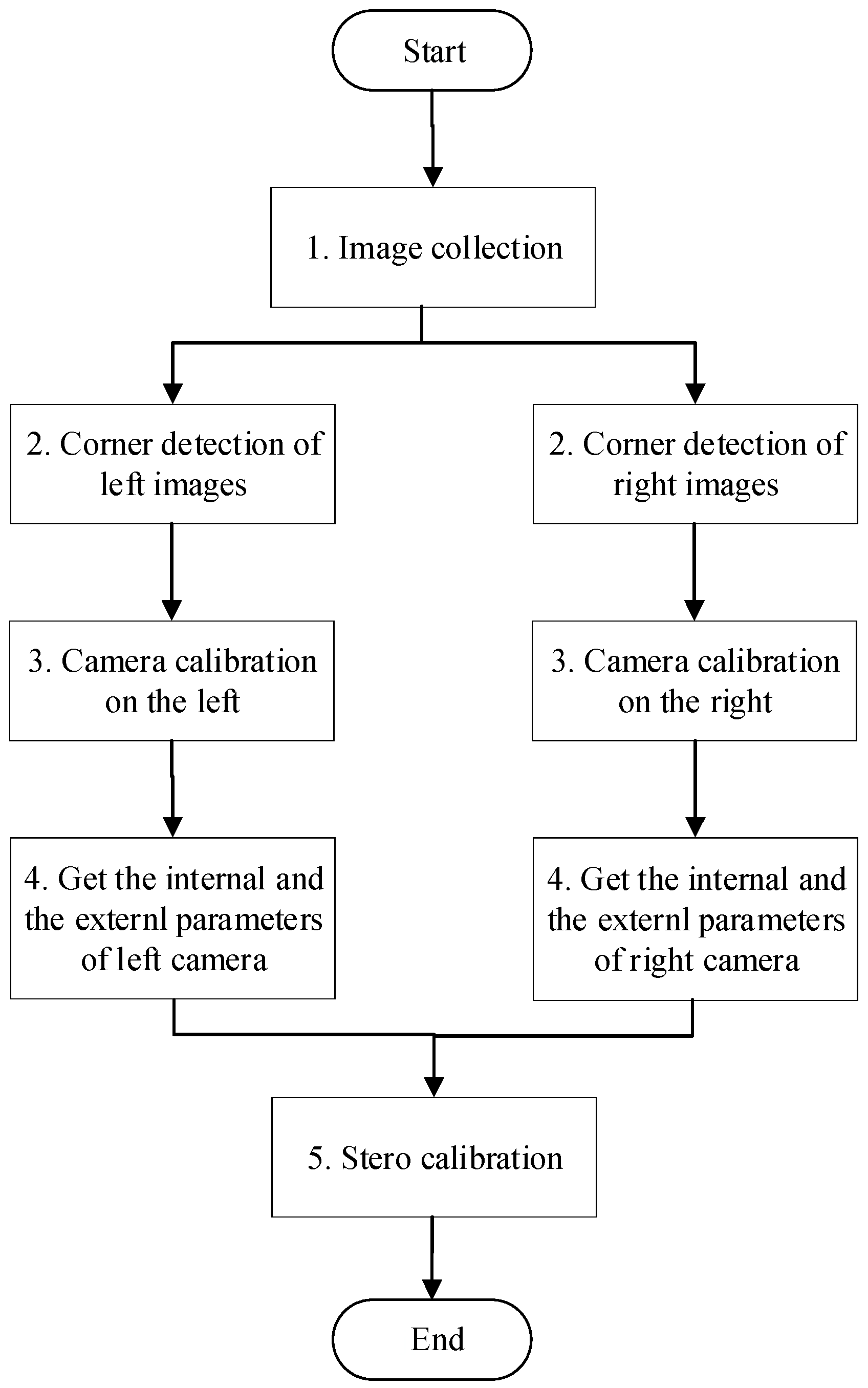

3.2. Camera Calibration Process

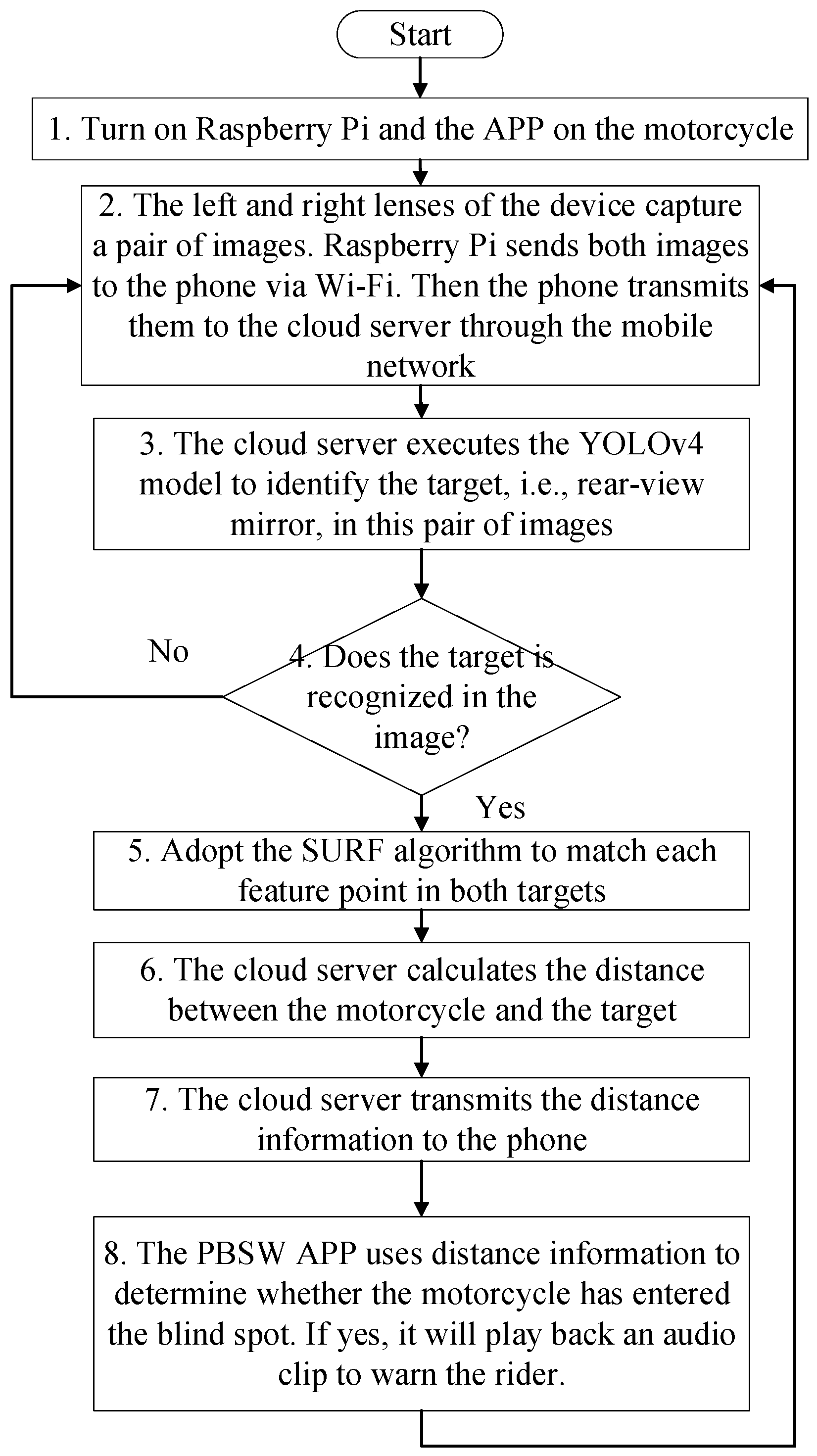

3.3. The Blind Spot Warning Process of PBSW

- Turn on the Raspberry Pi device on the motorcycle and open the PBSW app on the Android phone, which connects to the cloud server.

- The Raspberry Pi device captures a pair of images from the left and right lenses at the same time, sends them first to the Android phone via Wi-Fi, and, finally, to the cloud server through the mobile network.

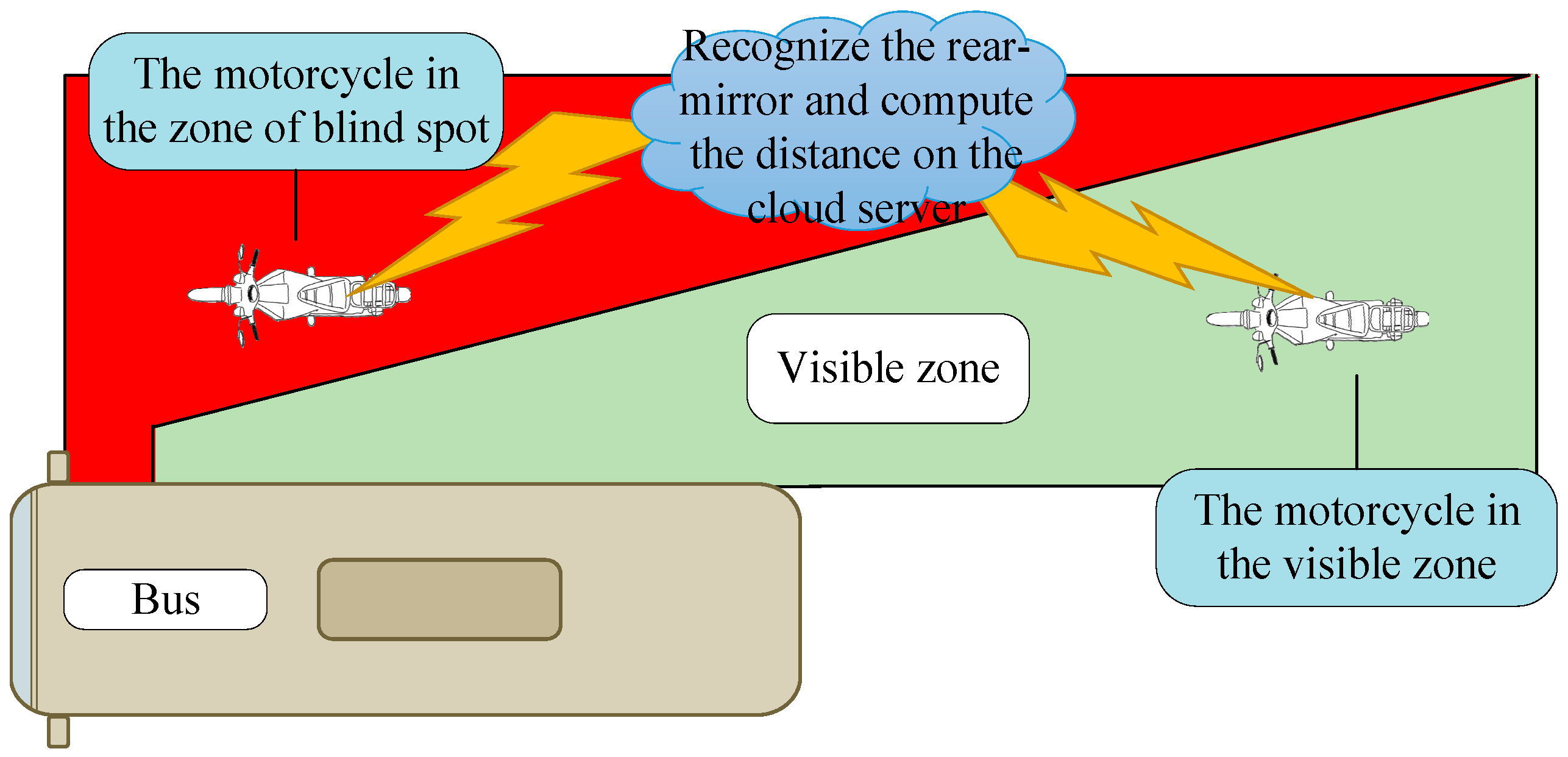

- The cloud server executes the YOLOv4 model to identify whether there are rear-view mirrors in this pair of images.

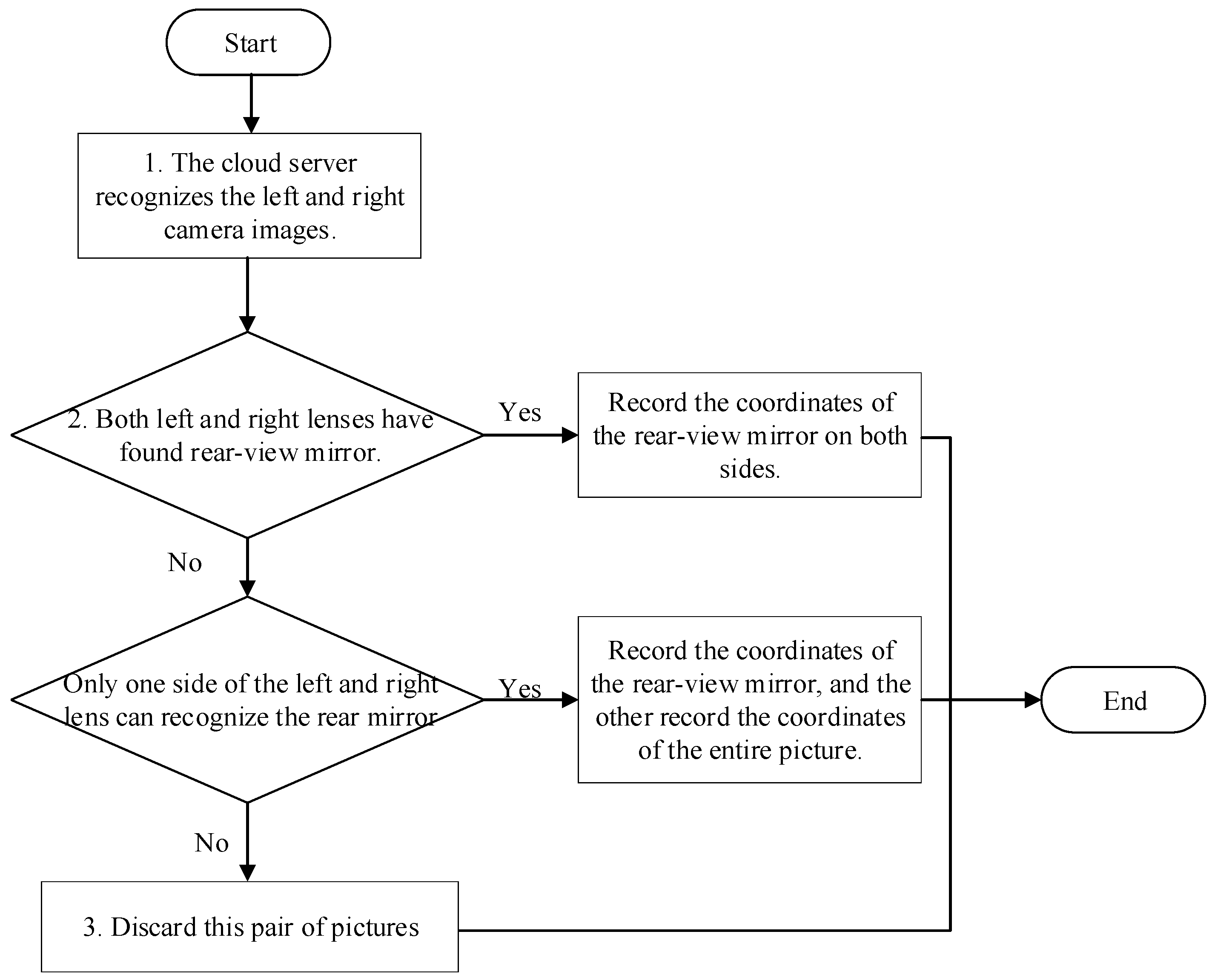

- The cloud server receives the pair of images from the mobile phone, runs the identification on them, and records the results.

- A decision strategy is chosen based on the identification results.

- Case 1: If both types of targets (the bus and its rear-view mirror) have been identified in both the left and right images, the coordinate information and class of each target are passed directly to the matching program.

- Case 2: Under the condition that buses in the images are recognized, if the rear-view mirror has been recognized in the image of one lens only, the coordinate information of the rear-view mirror and the entire image are sent to the matching program.

- Otherwise: If neither of the two cases above applies, this pair of images is discarded.
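The decision strategy above can be sketched as a small function. This is only an illustrative sketch: the `Detection` type, the class names `"bus"` and `"mirror"`, and the reading of Case 2 as "bus found in both images, mirror found in exactly one" are assumptions, not details taken from the system itself.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """One detected object (hypothetical structure for illustration)."""
    label: str                       # assumed class names: "bus" or "mirror"
    box: Tuple[int, int, int, int]   # (x, y, width, height) in pixels


def _labels(detections: List[Detection]) -> set:
    return {d.label for d in detections}


def choose_strategy(left: List[Detection], right: List[Detection]) -> str:
    """Return 'case1', 'case2', or 'discard' for one stereo image pair."""
    left_labels, right_labels = _labels(left), _labels(right)
    wanted = {"bus", "mirror"}

    # Case 1: both target types were found in both images.
    if wanted <= left_labels and wanted <= right_labels:
        return "case1"

    # Case 2 (assumed reading): the bus is seen in both images,
    # but the mirror is seen by exactly one lens.
    bus_in_both = "bus" in left_labels and "bus" in right_labels
    mirror_in_one = ("mirror" in left_labels) != ("mirror" in right_labels)
    if bus_in_both and mirror_in_one:
        return "case2"

    # Otherwise the pair is discarded.
    return "discard"
```

In Case 2 the caller would forward the mirror coordinates together with the whole image of the other lens, as described above.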

- 4. If the rear-view mirror has been recognized in the image, go to step 5. Otherwise, go back to step 2.

- 5.

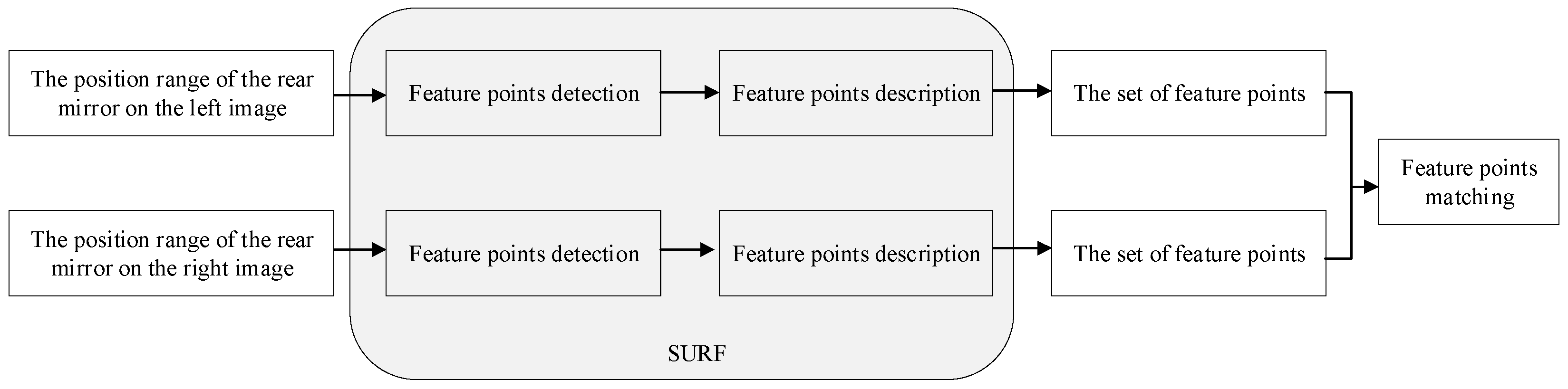

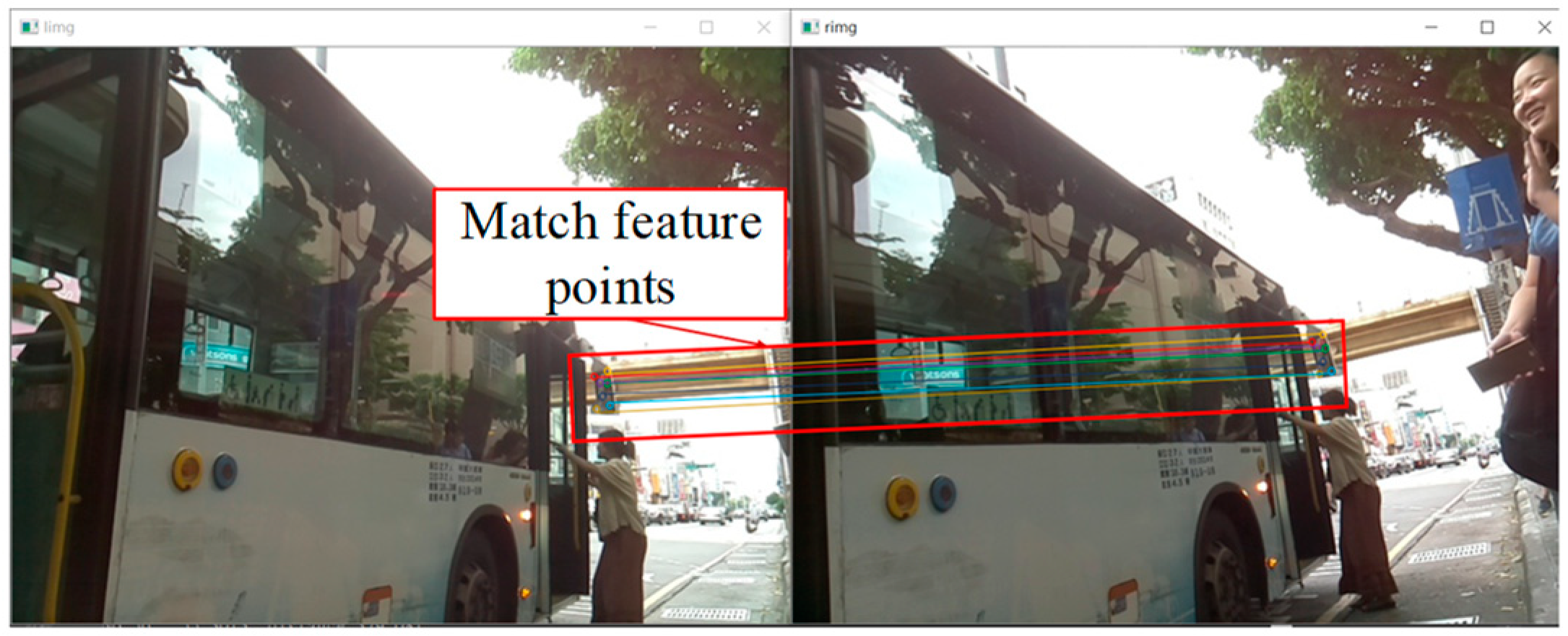

- Adopt the SURF algorithm to match each feature point within the coordinate positions of the rear-view mirrors in this pair of images.

- If the area of the rear-view mirror in the image is less than 25 pixels × 50 pixels, this area is enlarged by a factor of 2.5 before feature point detection is performed with a threshold value of 1000, as shown in Figure 11.

- Use the feature point sets of the left and right images to match them, as shown in Figure 12.
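The enlargement rule for small mirror regions can be illustrated with a minimal sketch. Two points are assumptions here: that "less than 25 pixels × 50 pixels" is a comparison of areas, and that the factor of 2.5 is applied to each side of the region. The SURF detection and matching themselves are not reproduced.

```python
# Size limit and scale factor come from the text; comparing by area and
# scaling each side are assumptions made for this sketch.
MIN_AREA_PX = 25 * 50
ENLARGE_FACTOR = 2.5


def needs_enlargement(width: int, height: int) -> bool:
    """True when the mirror region is smaller than the 25 x 50 pixel limit."""
    return width * height < MIN_AREA_PX


def enlarged_size(width: int, height: int) -> tuple:
    """Return the region size to use for feature point detection."""
    if not needs_enlargement(width, height):
        return width, height
    return round(width * ENLARGE_FACTOR), round(height * ENLARGE_FACTOR)
```

For example, a 20 × 40 pixel region would be resized to 50 × 100 pixels before detection, while a 30 × 60 pixel region would be used as is.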

- 6.

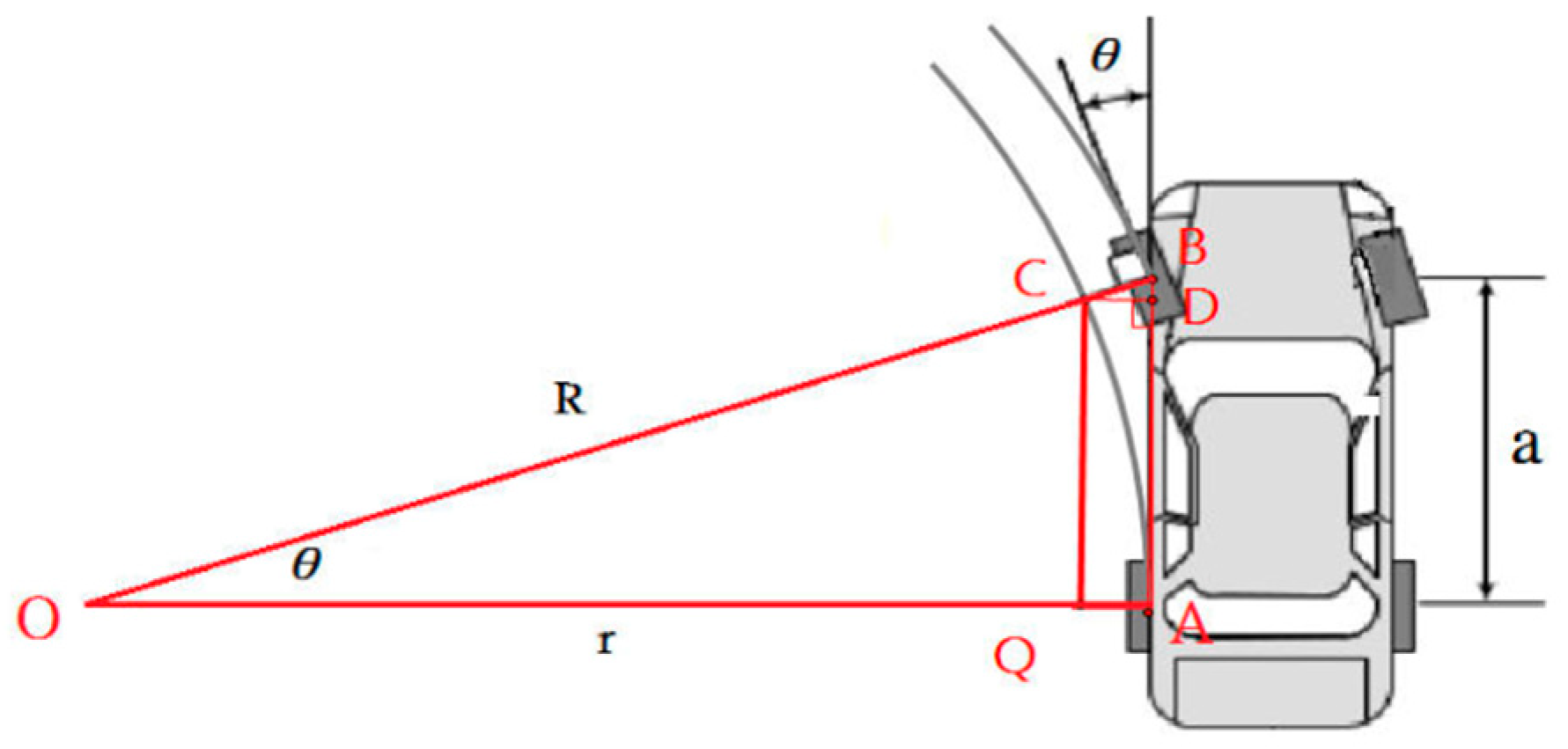

- According to the principle of lens imaging [37], which is shown in Figure 13, the distance between the lens and the rear-view mirror can be calculated using the world coordinates of this pair of images. In addition, edge detection is performed on the area within the recognized bus range. The detected edge lines can be used to determine the normal vector of the bus body surface to further calculate the relative angle between the bus and the motorcycle.

- Use the rear-view mirror of the bus as the target of the YOLOv4 model.

- In order to determine the target accurately, both lenses first use autofocus to capture the clearest target images, which yields the focal lengths f1 and f2 of the two lenses. Then, f1 and f2 are averaged to obtain f for the next step.

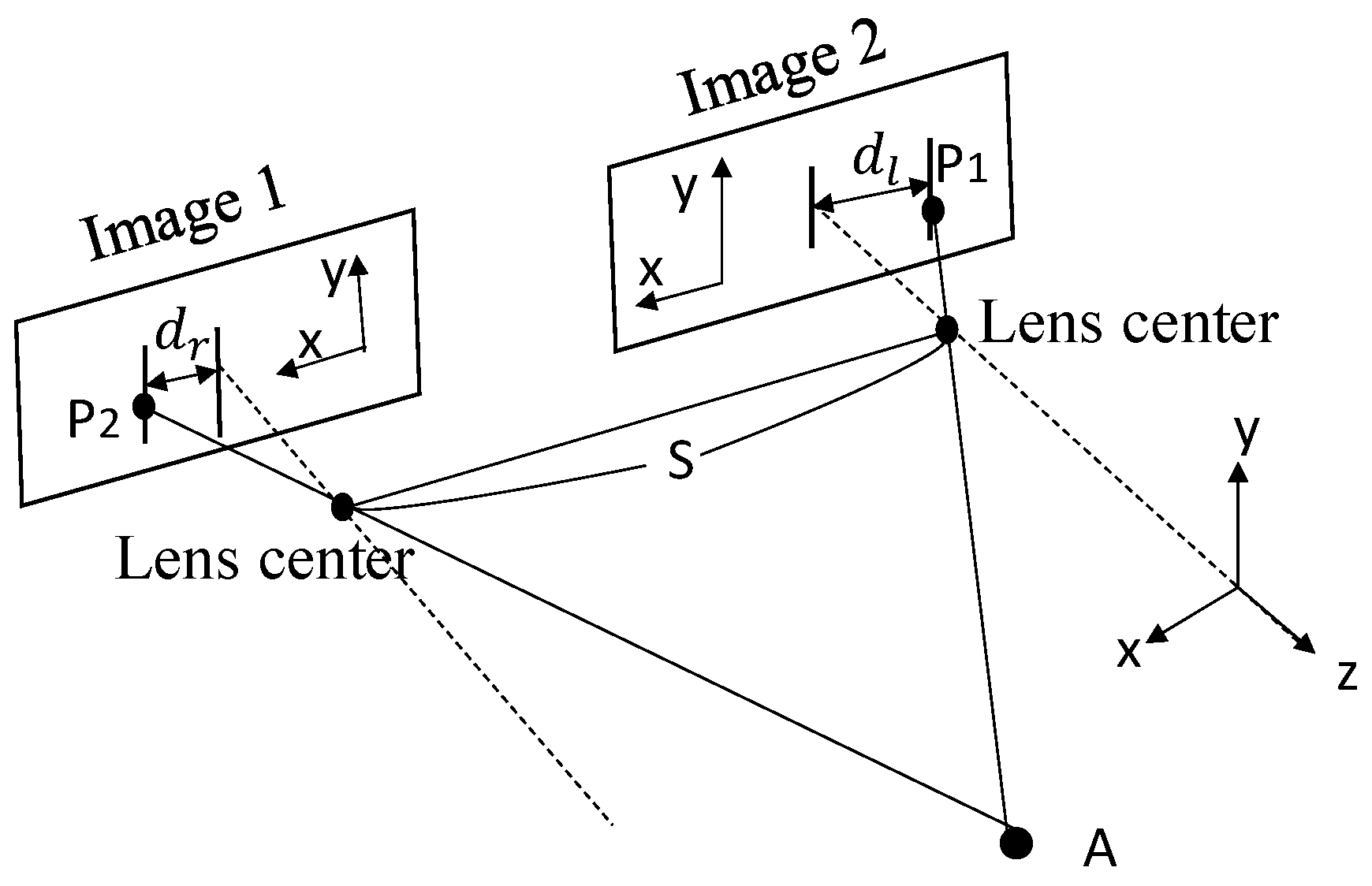

- Through the optical centers of the left and right lenses, target A is imaged at locations P1 and P2, respectively. To calculate the distance between the lenses and target A, the three-dimensional space is first projected onto the x-z plane. Based on the similar-triangle principle, this distance, in meters, can be calculated by Equation (2), where dl is the x-axis distance from P1 to the optical center of the left lens, dr is the x-axis distance from P2 to the optical center of the right lens, and S is the distance between the optical centers of the left and right lenses.

- Based on the imaging relationship for a single lens, the horizontal distance between the imaging position of the object and the image center is d. Hence, the horizontal distance between the optical center of a lens and the target can be obtained by Equation (3).
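The similar-triangle reasoning above can be made concrete with a short sketch. It uses the textbook stereo relation rather than a reproduction of Equations (2) and (3), and it assumes that the target lies between the two optical axes (so the disparity is dl + dr) and that f, dl, dr, and d share one unit (for example pixels) while S is given in meters. The function names are hypothetical.

```python
def average_focal_length(f1: float, f2: float) -> float:
    """Average the focal lengths of the left and right lenses."""
    return (f1 + f2) / 2.0


def depth_from_stereo(f: float, s: float, dl: float, dr: float) -> float:
    """Distance from the lens plane to the target, in the unit of s.

    Assumes the target lies between the two optical axes, so the image
    offsets dl and dr add up to the disparity.
    """
    disparity = dl + dr
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    return f * s / disparity


def horizontal_offset(depth: float, d: float, f: float) -> float:
    """Horizontal distance between a lens's optical center and the target."""
    return depth * d / f


f = average_focal_length(690.0, 710.0)                # 700.0
z = depth_from_stereo(f, s=0.1, dl=20.0, dr=15.0)     # about 2.0 m
x_left = horizontal_offset(z, 20.0, f)
x_right = horizontal_offset(z, 15.0, f)
```

Under these assumptions the two horizontal offsets add up to the baseline S, which is a quick consistency check on the geometry.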

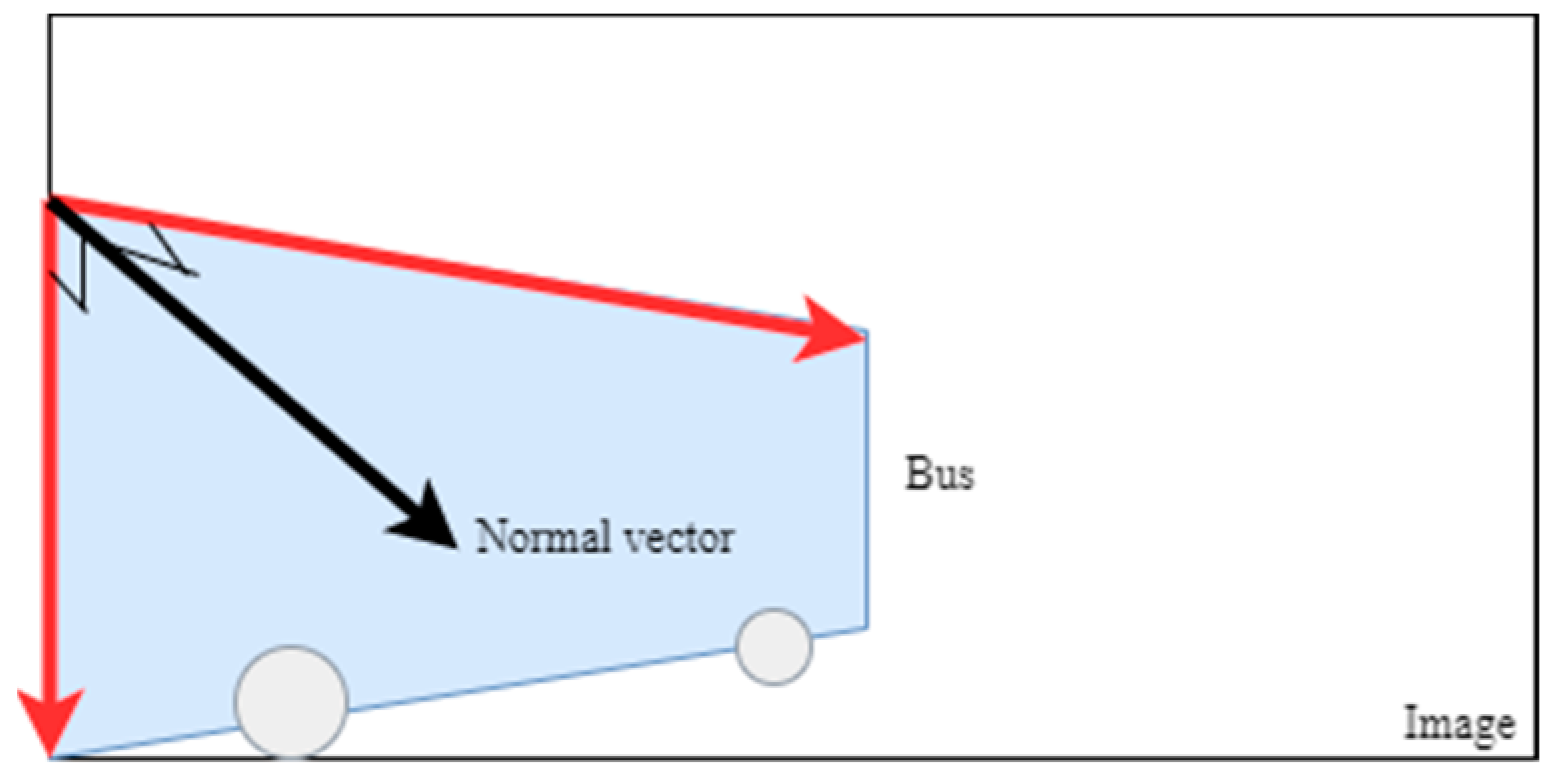

- Perform edge detection on the identified bus coordinate range and extract the direction vectors close to the four sides of the bus body.

- Use the cross product of these direction vectors to obtain the normal vector of the surface enclosed by the four sides, as shown in Figure 14.

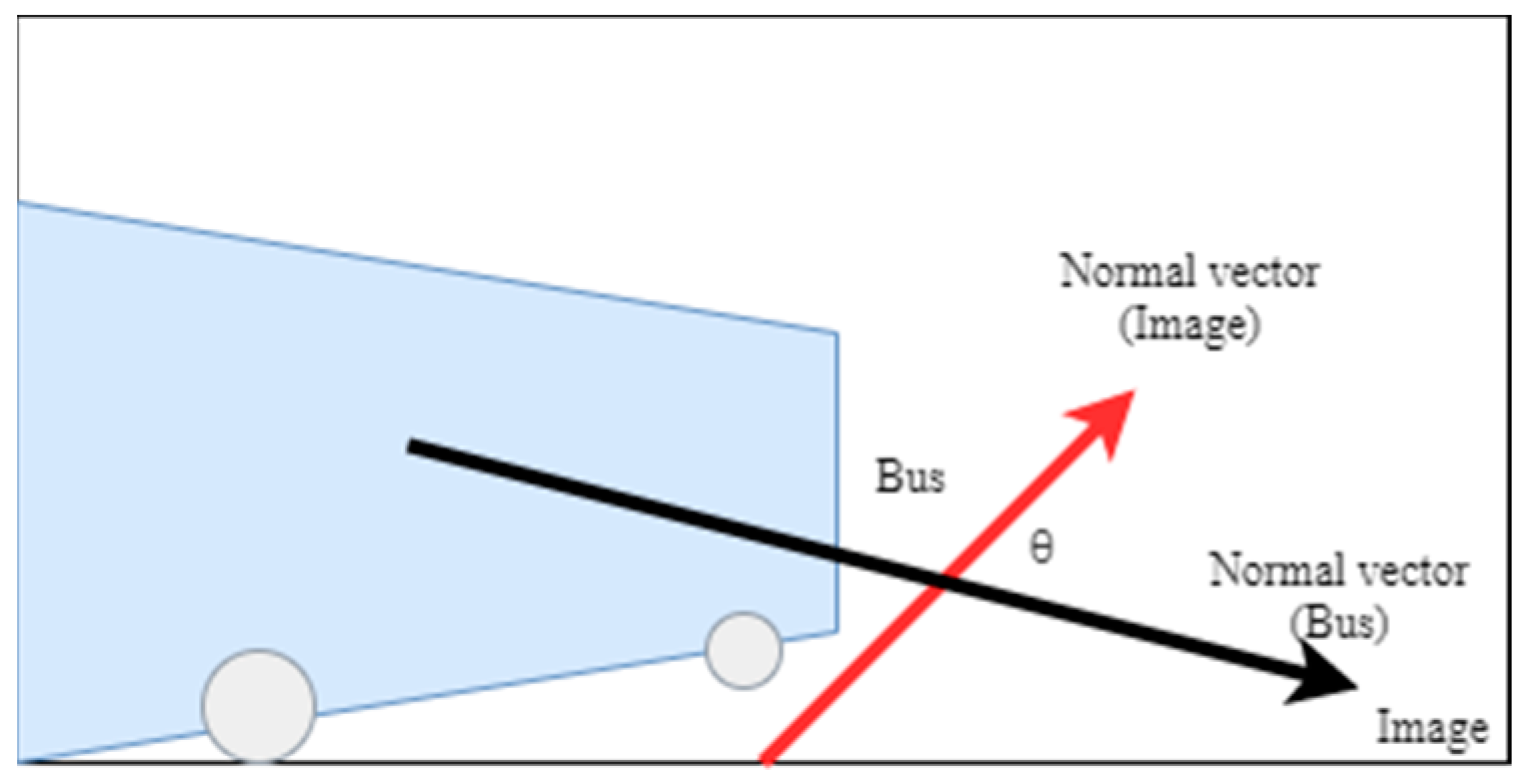

- Use the inner product of the normal vector of the calculated surface and that of the camera image to further derive the angle between the bus and the motorcycle, as shown in Figure 15.
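The vector steps above can be sketched in a few lines. This is an illustration only: it assumes the edge directions are already available as 3D vectors in the camera frame and that the camera's viewing direction is the z-axis, neither of which is specified here.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Inner (dot) product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def angle_between_deg(a, b):
    """Angle between two vectors, in degrees, from the inner product."""
    cos_value = dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))
    cos_value = max(-1.0, min(1.0, cos_value))  # guard against rounding
    return math.degrees(math.acos(cos_value))


# Assumed inputs: one horizontal and one vertical edge of the bus body,
# with the horizontal edge rotated 30 degrees about the vertical axis.
theta = math.radians(30.0)
horizontal_edge = (math.cos(theta), 0.0, math.sin(theta))
vertical_edge = (0.0, 1.0, 0.0)
camera_axis = (0.0, 0.0, 1.0)  # assumed viewing direction of the camera

surface_normal = cross(horizontal_edge, vertical_edge)
relative_angle = angle_between_deg(surface_normal, camera_axis)
```

With these inputs the relative angle comes out at about 30 degrees, matching the rotation applied to the horizontal edge.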

- 7. The cloud server transmits the relative angle and the two computed distances to the Android phone.

- 8.

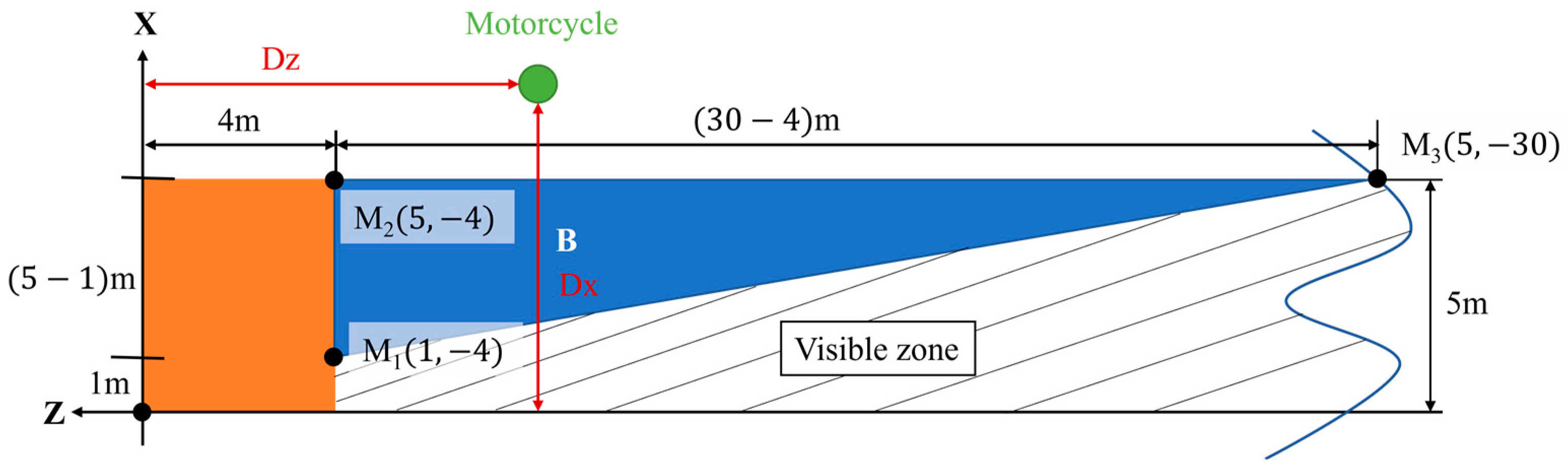

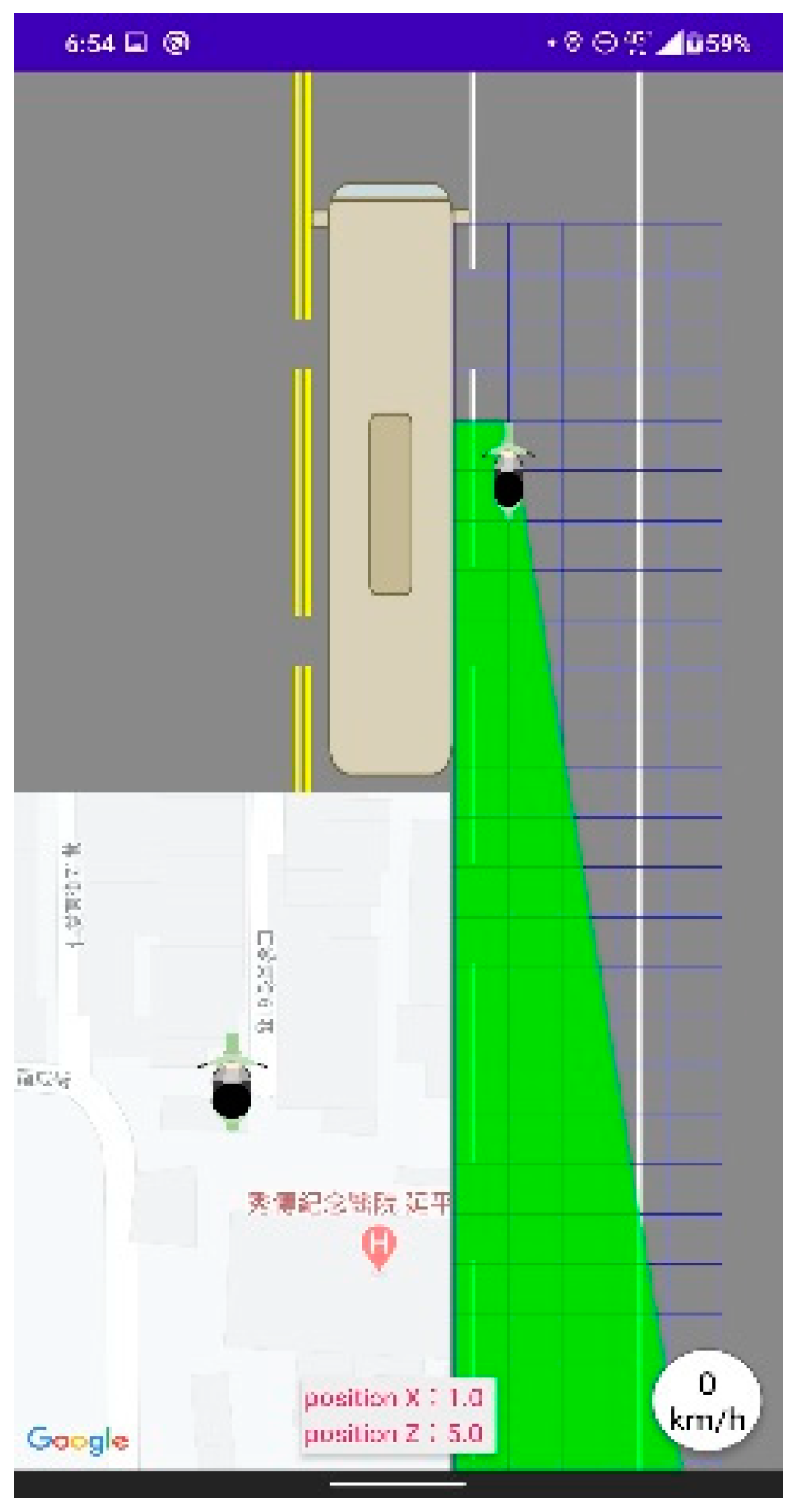

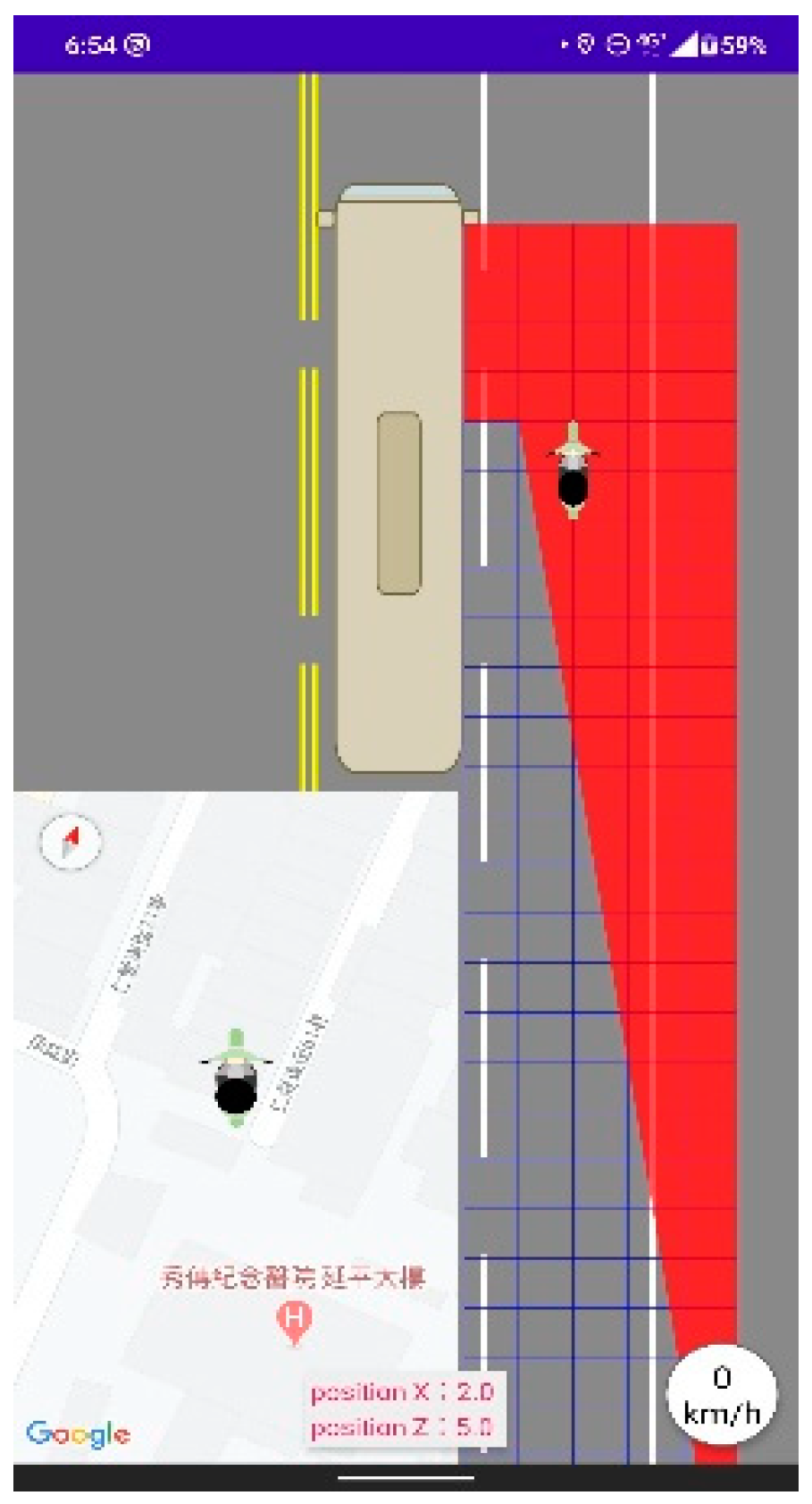

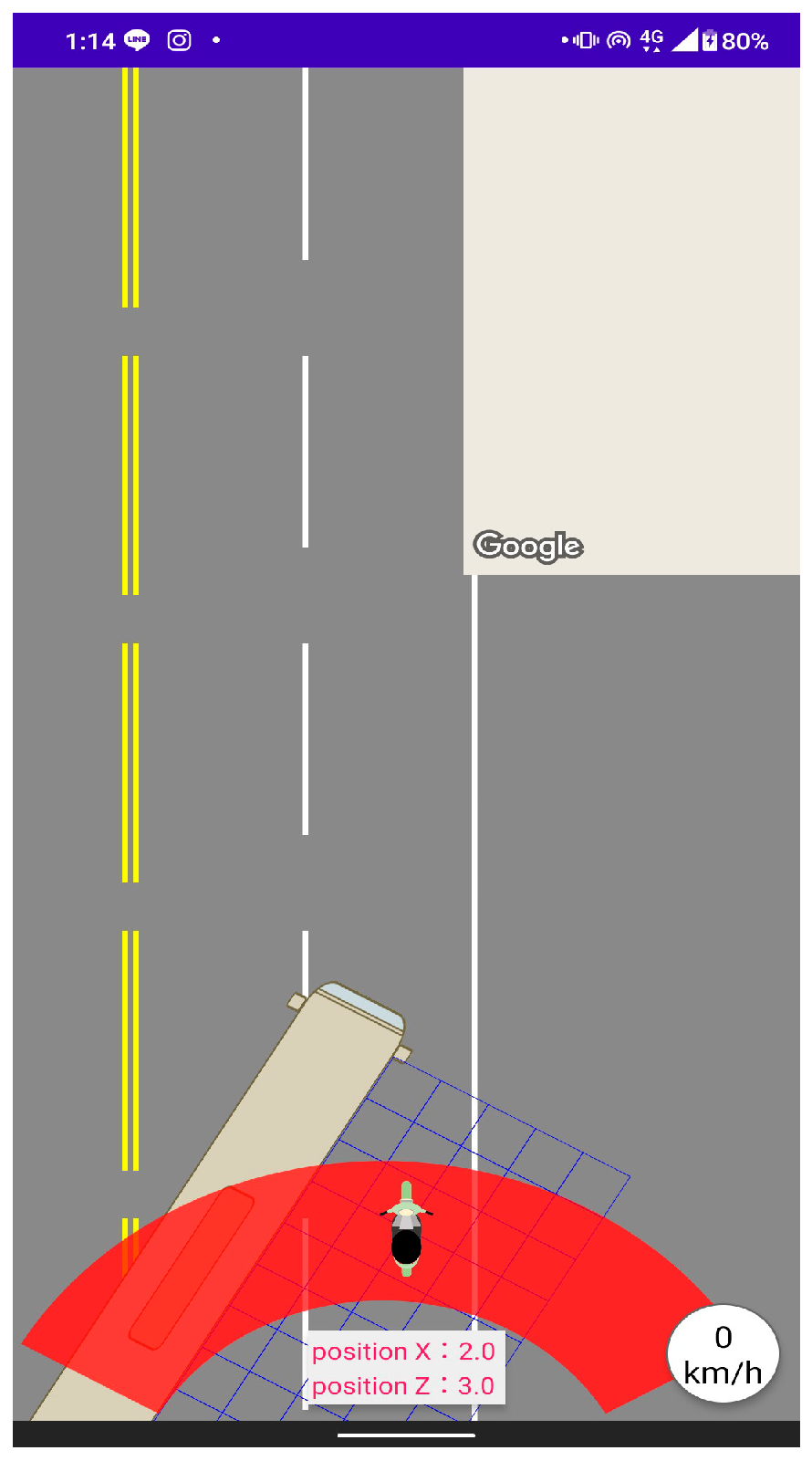

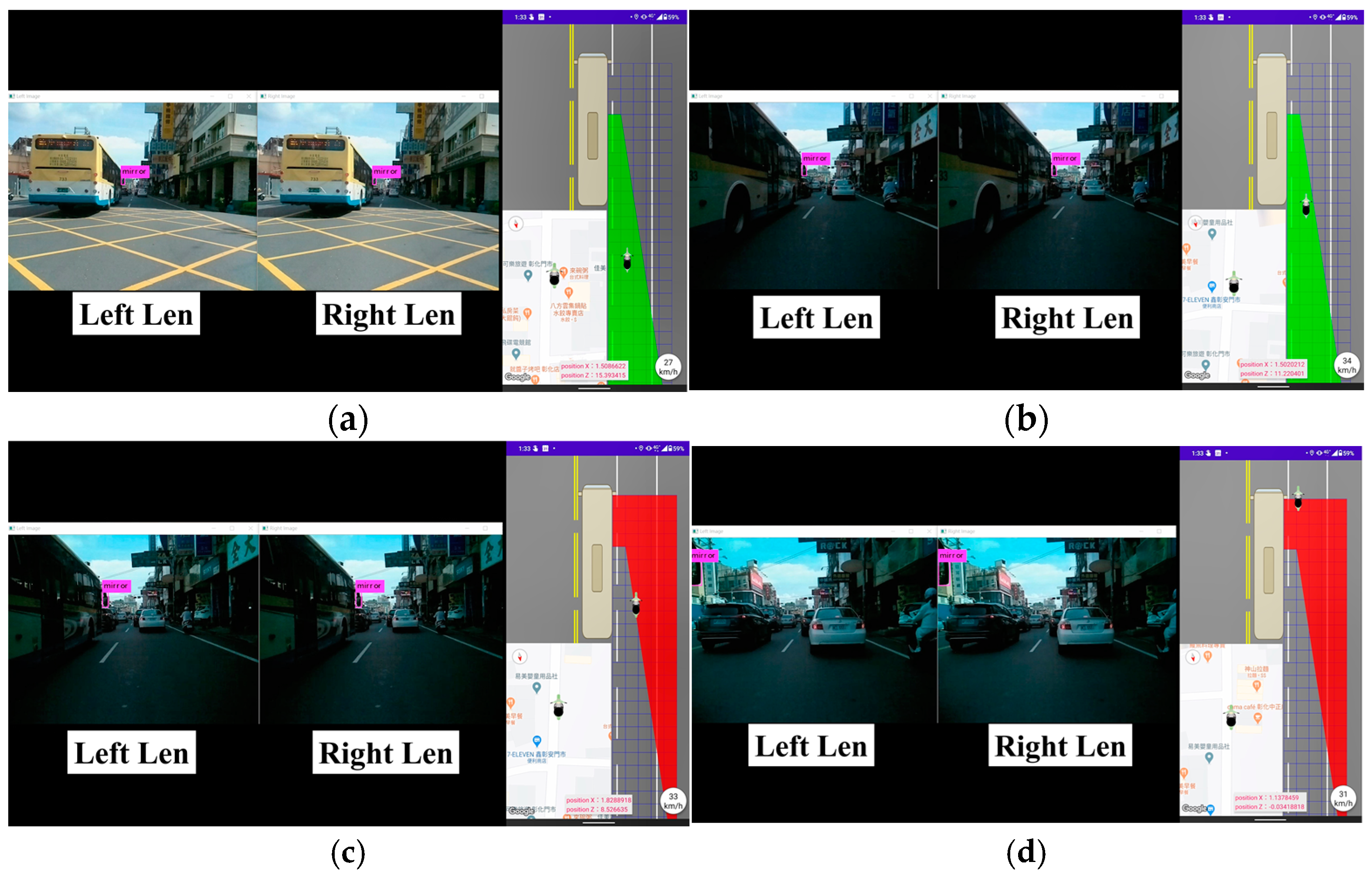

- As soon as the Android phone receives the relative angle and the two distances, the PBSW app running on the phone updates the relative position of the motorcycle and the bus on the screen. Using the angular and distance information, respectively, it then determines whether the bus is turning and whether the motorcycle has entered the blind spot of the bus. If either is the case, an audio clip is played immediately to warn the motorcycle rider of the approaching danger. Figure 16 illustrates how the two zones of the bus blind spot are delimited.

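- Zone A: this zone is an invisible rectangle beside the bus and behind the driver’s ocular points. It is confined by two conditions: the lateral distance from the bus lies between 0 and 5 m, and the longitudinal distance behind the driver’s ocular points lies between 0 and 4 m.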

- Zone B: this zone is an invisible triangle starting from 4 m behind the driver’s ocular points. It is defined by three vertices, i.e., M1(1, −4), M2(5, −4), and M3(5, −30), and applies when the longitudinal distance behind the ocular points is greater than 4 m.

- 9. In summary, Equation (4) formulates the rules, in terms of these two distances, that confine the complete blind spot of the bus.
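One way to express the two zone rules in code is sketched below. It is not a reproduction of Equation (4). The coordinate convention is an assumption: x is the lateral distance from the bus in meters, and the longitudinal distance behind the driver's ocular points is mapped to a negative y, matching the signs of the vertices M1, M2, and M3. The function names are hypothetical.

```python
# Triangle vertices of Zone B as given in the text (meters).
M1, M2, M3 = (1.0, -4.0), (5.0, -4.0), (5.0, -30.0)


def in_zone_a(lateral: float, behind: float) -> bool:
    """Rectangle beside the bus, up to 4 m behind the ocular points."""
    return 0.0 <= lateral <= 5.0 and 0.0 <= behind <= 4.0


def _side(p, a, b) -> float:
    """Signed area test: which side of line a-b the point p lies on."""
    return (p[0] - b[0]) * (a[1] - b[1]) - (a[0] - b[0]) * (p[1] - b[1])


def _in_triangle(p, a, b, c) -> bool:
    """True if p is inside or on the border of triangle a-b-c."""
    d1, d2, d3 = _side(p, a, b), _side(p, b, c), _side(p, c, a)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_negative and has_positive)


def in_zone_b(lateral: float, behind: float) -> bool:
    """Triangle M1-M2-M3, used only more than 4 m behind the ocular points."""
    return behind > 4.0 and _in_triangle((lateral, -behind), M1, M2, M3)


def in_blind_spot(lateral: float, behind: float) -> bool:
    """True if the motorcycle position falls in Zone A or Zone B."""
    return in_zone_a(lateral, behind) or in_zone_b(lateral, behind)
```

A position 4.5 m to the side and 10 m behind falls inside the triangle, whereas a position 1.2 m to the side and 20 m behind lies outside it under this convention.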

4. System Implementation and Performance Evaluations

4.1. Hardware and Software

4.2. The Android PBSW App

4.3. Performance Evaluations of this System

4.3.1. Real-World Evaluation of the Implemented System

4.3.2. Average Image Classification Time in the Cloud Server and Raspberry Pi

4.3.3. Average Image Transmission Rate, Image Processing Time, and Round-Trip Delay

4.3.4. YOLOv4 Classification Results of the PBSW System

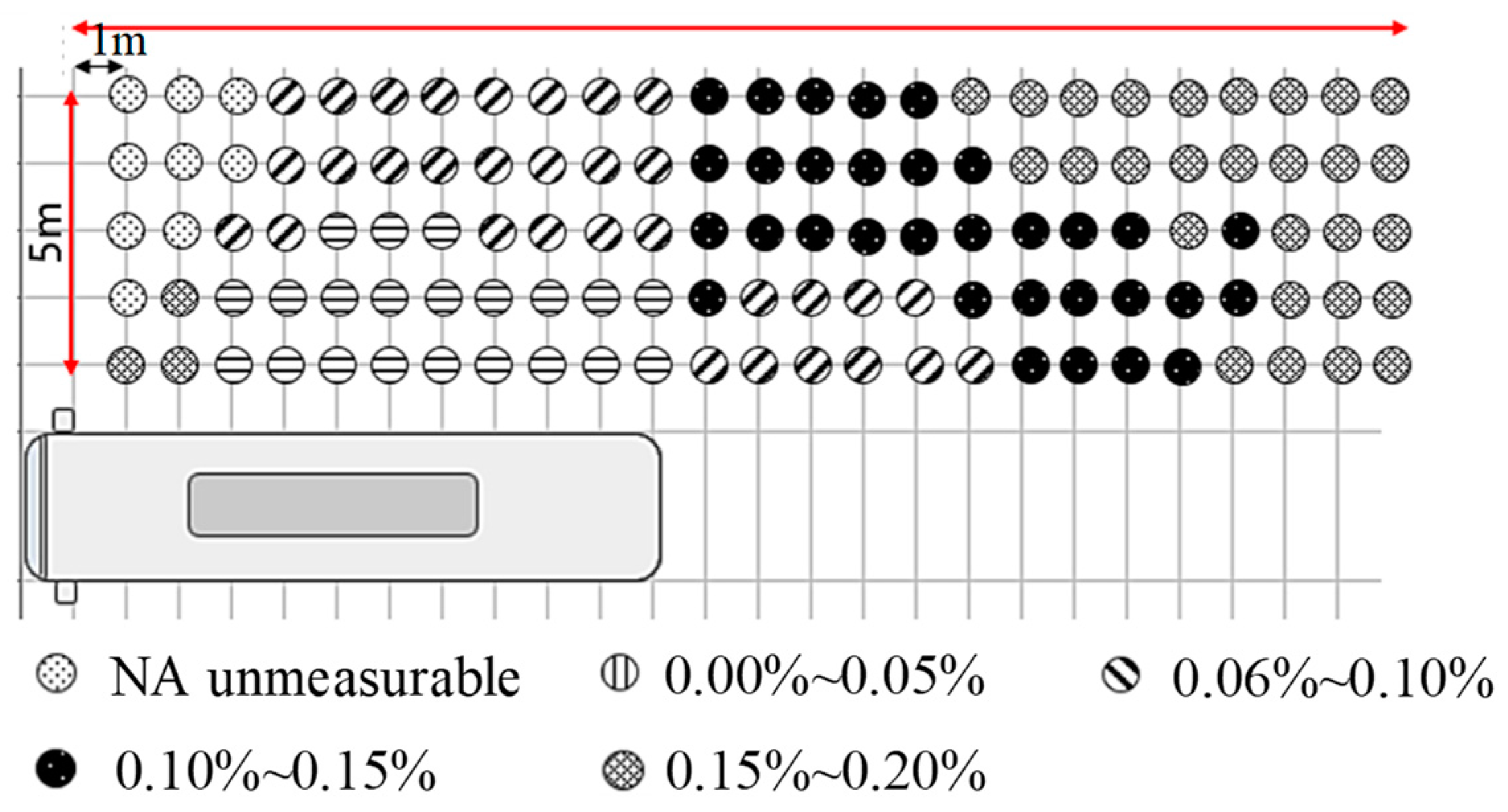

4.3.5. The Error Rate of the Estimated Distance

4.3.6. Discussions

- Section 4.3.1 presents the real-world evaluation of the implemented system and the results of six performance metrics in our test scenario, which indicate its potential to reduce accidents and enhance motorcyclist safety. However, road conditions vary widely, so substantial time is required to test this system thoroughly. We will continue collecting data to test the usability of the PBSW system across different scenarios.

- Section 4.3.3 discusses the average round-trip delay (RTT) measured between the Android phone and the cloud server in the real-world evaluation. The RTT includes the one-way transmission delay for the Android phone to send images to the cloud server, the YOLOv4 image classification and processing time in the cloud server, and the one-way transmission delay for the cloud server to send the results back to the Android phone. With an average RTT of 0.5 s and an average cloud classification time of 0.01 s, the average one-way transmission delay between the Android phone and the cloud server is about (0.5 − 0.01)/2 = 0.245 s. Please note that these RTTs were measured by the Android phone while it communicated with the cloud server through a stable 4G mobile network. If the 4G signal suffers from significant interference or is blocked by obstacles, the RTTs may fluctuate considerably.

- This system uses a Raspberry Pi 3B+ board as the main unit, connected to two USB cameras that capture road images at a resolution of 640 × 480 pixels per frame and seven frames per second (fps). The Android phone also creates a connectionless UDP socket to transmit the captured images to the cloud server through the mobile network for training the YOLOv4 model. Due to the load on the Android phone and the fluctuating transmission rate of the 4G mobile network, the average image transmission rate of the PBSW system is further limited to 4 fps, which becomes a performance bottleneck. Hence, the predicted motorcycle location is not continuous, as shown in the video clip recorded in the real-world evaluation. In the future, we will replace the Raspberry Pi 3B+ board with a more capable one, such as the Jetson Nano board [39], to raise the image capture rate, image transmission rate, and image inference speed accordingly.

- Section 4.3.4 presents the YOLOv4 classification results of the PBSW system, i.e., the precision, recall, and average precision (AP) obtained with TP = 392, FP = 24, and FN = 58, as shown in Table 3. Overall, the AP is 92.82% and the F1 score is 0.91 in this real-world evaluation. These results indicate that the PBSW system, which runs the YOLOv4 model in the cloud server, can accurately identify the rear-view mirror when the motorcycle moves close to the bus. As mentioned above, if a more capable board than the Raspberry Pi 3B+ is used on the motorcycle side, it may execute YOLOv4 or another advanced image recognition model locally to detect the bus’s rear-view mirror accurately, while avoiding the fluctuating RTTs incurred when images are transmitted to the cloud server through an unstable 4G mobile network.
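The precision, recall, and F1 score quoted above follow from the reported counts by the standard definitions. The snippet below is a worked check using only TP, FP, and FN from the text; the function names are illustrative.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are correct."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of actual targets that are detected."""
    return tp / (tp + fn)


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


tp, fp, fn = 392, 24, 58            # counts reported in the text
p = precision(tp, fp)               # rounds to 0.94
r = recall(tp, fn)                  # rounds to 0.87
f1_exact = f1_score(p, r)           # about 0.905
f1_from_rounded = f1_score(0.94, 0.87)
```

The number of targets, TP + FN, is 450, matching the table. The unrounded F1 score is about 0.905, which rounds to 0.91 as stated in the text; computing it from the rounded precision and recall instead gives about 0.904, which rounds to the 0.90 listed in the table.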

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ogitsu, T.; Mizoguchi, H. A study on driver training on advanced driver assistance systems by using a driving simulator. In Proceedings of the 2015 International Conference on Connected Vehicles and Expo (ICCVE), Shenzhen, China, 19–23 October 2015.

2. Jean-Claude, K.; de Souza, P.; Gruyer, D. Advanced RADAR sensors modeling for driving assistance systems testing. In Proceedings of the 2016 10th European Conference on Antennas and Propagation (EuCAP), Davos, Switzerland, 10–15 April 2016.

3. Sarala, S.M.; Sharath Yadav, D.H.; Ansari, A. Emotionally adaptive driver voice alert system for advanced driver assistance system (ADAS) applications. In Proceedings of the 2018 International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 13–14 December 2018.

4. Liu, G.; Zhou, M.; Wang, L.; Wang, H.; Guo, X. A blind spot detection and warning system based on millimeter wave radar for driver assistance. Optik 2017, 135, 353–365.

5. Zhang, R.; Liu, J.; Ma, L. A typical blind spot danger pre-warning method of heavy truck under turning right condition. In Proceedings of the 2015 Sixth International Conference on Intelligent Systems Design and Engineering Applications (ISDEA), Guiyang, China, 18–19 August 2015.

6. Zhou, H.; Shu, W. An early warning system based on motion history image for blind spot of oversize vehicle. In Proceedings of the 2011 International Conference on Electrical and Control Engineering, Yichang, China, 16–18 September 2011.

7. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

8. Chen, C.; Liu, B.; Wan, S.; Qiao, P.; Pei, Q. An edge traffic flow detection scheme based on deep learning in an intelligent transportation system. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1840–1852.

9. Liang, S.; Wu, H.; Zhen, L.; Hua, Q.; Garg, S.; Kaddoum, G.; Hassan, M.M.; Yu, K. Edge YOLO: Real-time intelligent object detection system based on edge-cloud cooperation in autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25345–25360.

10. Wang, C.-H.; Huang, K.-Y.; Yao, Y.; Chen, J.-C.; Shuai, H.-H.; Cheng, W.-H. Lightweight deep learning: An overview. IEEE Consum. Electron. Mag. 2022.

11. Appiah, N.; Bandaru, N. Obstacle detection using stereo vision for self-driving cars. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011.

12. Adu-Gyamfi, Y.O.; Asare, S.K.; Sharma, A.; Titus, T. Automated vehicle recognition with deep convolutional neural networks. Transp. Res. Rec. 2017, 2645, 113–122.

13. Wang, H.; Yu, Y.; Cai, Y.; Chen, L.; Chen, X. A vehicle recognition algorithm based on deep transfer learning with a multiple feature subspace distribution. Sensors 2018, 18, 4109.

14. Bai, T. Analysis on Two-stage Object Detection based on Convolutional Neural Networks. In Proceedings of the 2020 International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE), Bangkok, Thailand, 30 October–1 November 2020; pp. 321–325.

15. Zhang, Y.; Li, X.; Wang, F.; Wei, B.; Li, L. A comprehensive review of one-stage networks for object detection. In Proceedings of the 2021 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Xi’an, China, 17–19 August 2021; pp. 1–6.

16. Soviany, P.; Ionescu, R.T. Optimizing the trade-off between single-stage and two-stage deep object detectors using image difficulty prediction. In Proceedings of the 2018 20th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 20–23 September 2018; pp. 209–214.

17. Krishna, H.; Jawahar, C. Improving small object detection. In Proceedings of the 2017 4th IAPR Asian Conference on Pattern Recognition (ACPR), Nanjing, China, 26–29 November 2017; pp. 340–345.

18. Jiang, P.; Chen, Y.; Liu, B.; He, D.; Liang, C. Real-time detection of apple leaf diseases using deep learning approach based on improved convolutional neural networks. IEEE Access 2019, 7, 59069–59080.

19. Laroca, R.; Zanlorensi, L.A.; Gonçalves, G.R.; Todt, E.; Schwartz, W.R.; Menotti, D. An efficient and layout-independent automatic license plate recognition system based on the YOLO detector. arXiv 2019, arXiv:1909.01754.

20. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

21. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

22. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

23. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

24. Zhang, Y.; Yuan, B.; Zhang, J.; Li, Z.; Pang, C.; Dong, C. Lightweight PM-YOLO Network Model for Moving Object Recognition on the Distribution Network Side. In Proceedings of the 2022 2nd Asia-Pacific Conference on Communications Technology and Computer Science (ACCTCS), Shenyang, China, 25–27 February 2022; pp. 508–516.

25. Li, Y.; Lv, C. SS-YOLO: An object detection algorithm based on YOLOv3 and ShuffleNet. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; Volume 1, pp. 769–772.

26. Wu, L.; Zhang, L.; Zhou, Q. Printed circuit board quality detection method integrating lightweight network and dual attention mechanism. IEEE Access 2022, 10, 87617–87629.

27. Wang, Y.; Wang, Y.; Zhao, J. MGA-YOLO: A lightweight one-stage network for apple leaf disease detection. Front. Plant Sci. 2022, 13, 927424.

28. Zhang, J.; Jin, J.; Ma, Y.; Ren, P. Lightweight object detection algorithm based on YOLOv5 for unmanned surface vehicles. Front. Mar. Sci. 2023, 9, 1058401.

29. Li, Z.; Pang, C.; Dong, C.; Zeng, X. R-YOLOv5: A Lightweight Rotational Object Detection Algorithm for Real-Time Detection of Vehicles in Dense Scenes. IEEE Access 2023, 11, 61546–61559.

30. Cui, Y.; Yang, L.; Liu, D. Dynamic proposals for efficient object detection. arXiv 2022, arXiv:2207.05252.

31. Chen, G.; Choi, W.; Yu, X.; Han, T.; Chandraker, M. Learning efficient object detection models with knowledge distillation. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 1–10.

32. De Raeve, N.; De Schepper, M.; Verhaevert, J.; Van Torre, P.; Rogier, H. A bluetooth-low-energy-based detection and warning system for vulnerable road users in the blind spot of vehicles. Sensors 2020, 20, 2727.

33. Blondé, L.; Doyen, D.; Borel, T. 3D stereo rendering challenges and techniques. In Proceedings of the 2010 44th Annual Conference on Information Sciences and Systems (CISS), Princeton, NJ, USA, 17–19 March 2010.

34. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

35. Regulations, “Installation of Devices for Indirect Vision”, Motor Vehicle Driver Information Service, R46 04-S4, R81 00-S1. Available online: https://www.mvdis.gov.tw/webMvdisLaw/Download.aspx?type=Law&ID=32218 (accessed on 30 July 2023).

36. Zhang, Q.; Wei, Y.; Wang, K.; Liu, H.; Xu, Y.; Chen, Y. Design of arduino-based in-vehicle warning device for inner wheel difference. In Proceedings of the 2019 IEEE 2nd International Conference on Electronics Technology (ICET), Chengdu, China, 10–13 May 2019.

37. Fu, K.S.; Gonzalez, R.C.; Lee, C.S.G. Robotics: Control, Sensing, Vision, and Intelligence; McGraw-Hill: New York, NY, USA, 1987; pp. 313–328.

38. The Video Clip of the PBSW System. Available online: https://drive.google.com/file/d/1FsX5DZLE6-WQbgcSi7cBlt-BxFbdXyva/view?usp=drive_link (accessed on 20 June 2023).

39. Jetson Nano Developer Kit for AI and Robotics. Available online: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-nano/ (accessed on 20 June 2023).

| | Raspberry Pi | Cloud Server |
|---|---|---|
| Average Image Classification Time | 394.4 s | 0.01 s |

| Average Image Processing Time | Average Image Transmission Rate | Average Round-Trip Delay |
|---|---|---|
| 0.04 ms | 4 fps | 0.5 s |

| Target | Number of Targets | Precision | Recall | AP | F1 Score |
|---|---|---|---|---|---|
| Rear-view mirror | 450 | 0.94 | 0.87 | 92.82% | 0.90 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, I.-C.; Yen, C.-E.; Song, Y.-J.; Chen, W.-R.; Kuo, X.-M.; Liao, P.-H.; Kuo, C.; Huang, Y.-F. An Effective YOLO-Based Proactive Blind Spot Warning System for Motorcycles. Electronics 2023, 12, 3310. https://doi.org/10.3390/electronics12153310
