A Q-Learning-Based Load Balancing Method for Real-Time Task Processing in Edge-Cloud Networks

Abstract

1. Introduction

- We propose a reinforcement-learning-based load-balancing method between edge servers and cloud servers that reduces the average service time. Service time is the time from when a mobile device sends a task to a server until the processed result is returned to the device. In other words, the service time is the sum of the task processing time and the communication time.

- The proposed method uses Q-learning, a reinforcement learning technique, to respond flexibly to changes in network and server state. Moreover, to exploit the low communication delay of edge computing, the method also takes into account tasks that must be processed in real time.

- We evaluate the proposed method against several existing load-balancing methods through simulation and verify its superiority.
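The definition of service time given in the first contribution can be written out as a minimal sketch. The `TaskTiming` type and its field names are hypothetical, and splitting the communication time into an upload part and a download part is an assumption made here for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskTiming:
    """Timing of one task (hypothetical structure, not from the paper)."""
    upload_s: float      # device -> server transfer time
    processing_s: float  # execution time on the server
    download_s: float    # server -> device transfer time


def service_time(t: TaskTiming) -> float:
    # Service time = task processing time + communication time.
    # Communication time is assumed to be upload + download.
    return t.processing_s + t.upload_s + t.download_s


def average_service_time(timings: list) -> float:
    # The quantity the load-balancing method aims to reduce.
    return sum(service_time(t) for t in timings) / len(timings)
```

For example, a task with 0.1 s upload, 0.5 s processing, and 0.05 s download has a service time of about 0.65 s under these assumptions.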

2. Related Work

2.1. Load Balancing Method in Cloud-Edge-Client Communications

2.2. Load Balancing Method Using Reinforcement Learning

3. Proposed Load Balancing Method

3.1. Methodology Section

3.2. The Proposed Q-Learning Approach

3.2.1. State S

3.2.2. Action A

3.2.3. Reward r and R

3.2.4. The Update of Q Value

4. Simulation Results and Analysis

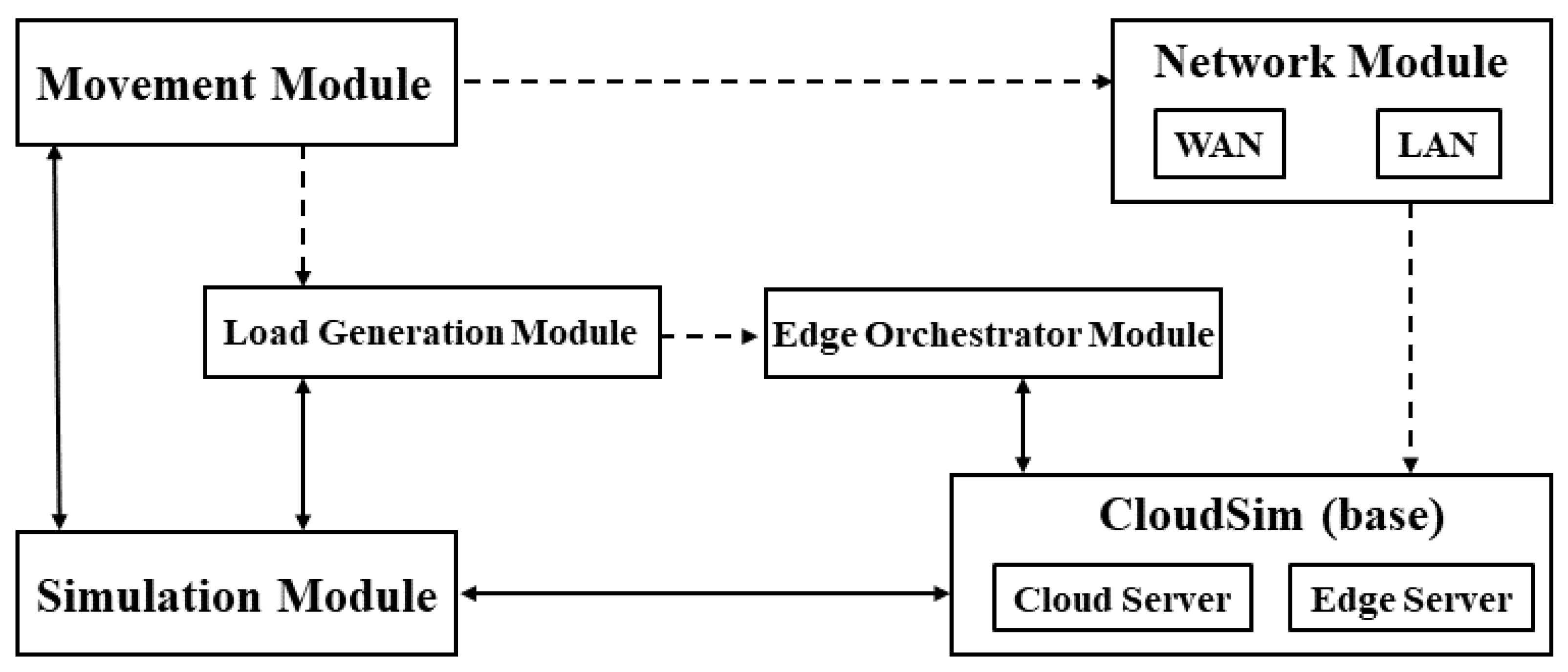

4.1. Simulation Tool and Setup

4.2. Result Analysis

1. Process tasks only on cloud servers (Cloud method).
2. Process tasks only on edge servers (Edge method).
3. Load balancing between edge and cloud servers based on probability (Probability method).
4. Load balancing between edge and cloud servers using Q-learning (Proposed method).
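The four compared methods differ only in how they choose a destination for each task. A minimal sketch of the four decision rules follows; the function names, the `q_table` dictionary layout, and the tie-breaking toward the edge server are assumptions for illustration, not the authors' implementation.

```python
import random

EDGE, CLOUD = 0, 1  # hypothetical action encoding


def cloud_policy(state, rng):
    # Cloud method: every task goes to the cloud server.
    return CLOUD


def edge_policy(state, rng):
    # Edge method: every task stays on the edge server.
    return EDGE


def probability_policy(state, rng, p_cloud=0.5):
    # Probability method: offload to the cloud with probability p_cloud.
    return CLOUD if rng.random() < p_cloud else EDGE


def q_policy(state, q_table):
    # Proposed method (greedy part only): pick the action with the
    # larger Q value; unseen states default to zeros, ties go to EDGE.
    values = q_table.get(state, [0.0, 0.0])
    return EDGE if values[EDGE] >= values[CLOUD] else CLOUD
```

A call such as `probability_policy(None, random.Random(0))` returns either `EDGE` or `CLOUD`; the state argument is ignored by the three baselines and only matters for the Q-learning rule.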

1. The user with the device moves while the task is being processed.
2. The task cannot be submitted due to network congestion.
3. The total service time exceeds a threshold.
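The three failure conditions listed above can be expressed as a small classifier. This is a sketch under assumptions: the `Failure` enum, the argument names, the order in which the conditions are checked, and the threshold value passed by the caller are all hypothetical.

```python
from enum import Enum
from typing import Optional


class Failure(Enum):
    MOBILITY = "user moved while the task was being processed"
    CONGESTION = "task could not be submitted (network congestion)"
    TIMEOUT = "total service time exceeded the threshold"


def classify_failure(submitted: bool,
                     moved_during_processing: bool,
                     service_time_s: float,
                     threshold_s: float) -> Optional[Failure]:
    """Return the failure cause, or None if the task succeeded."""
    if not submitted:
        return Failure.CONGESTION
    if moved_during_processing:
        return Failure.MOBILITY
    if service_time_s > threshold_s:
        return Failure.TIMEOUT
    return None
```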

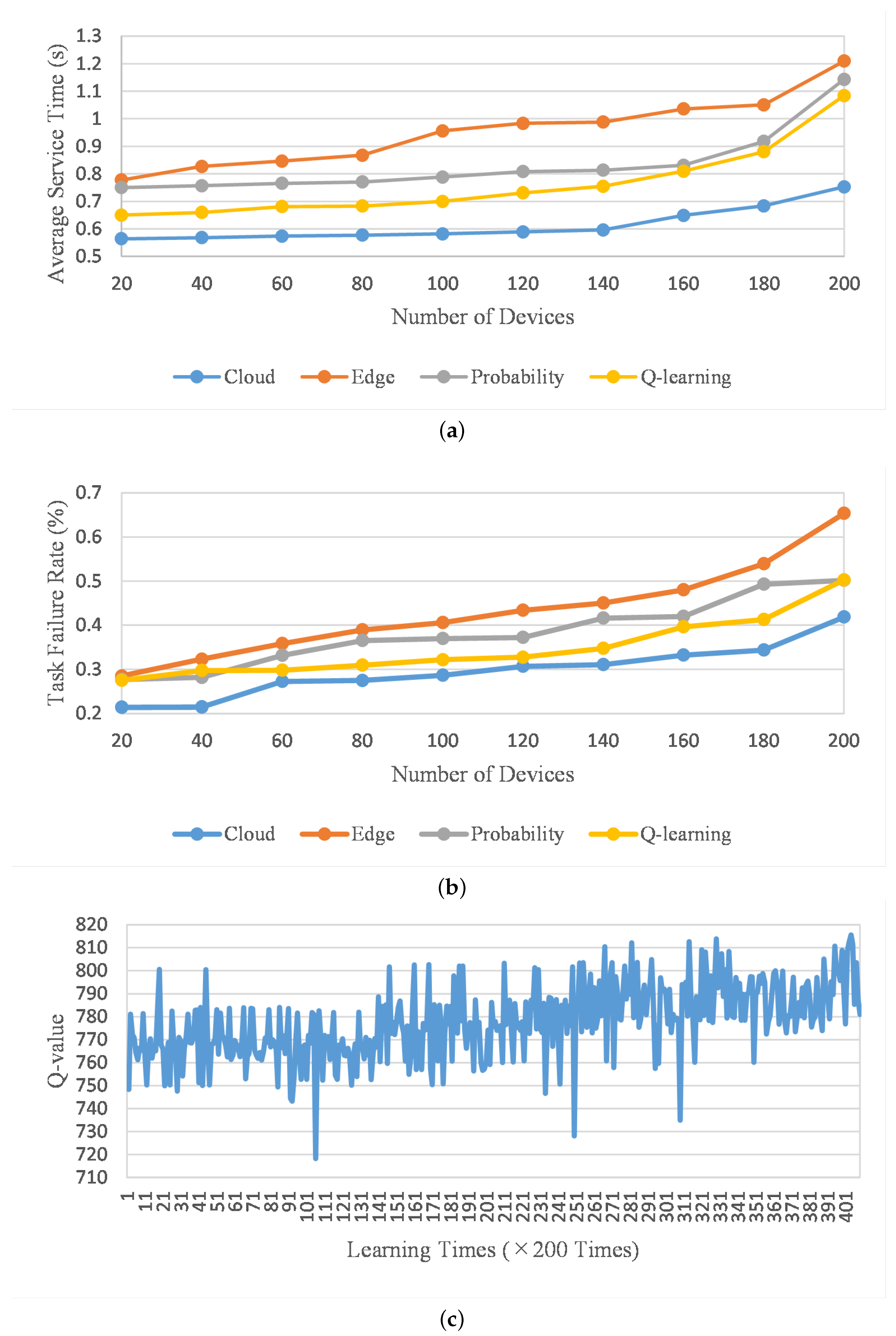

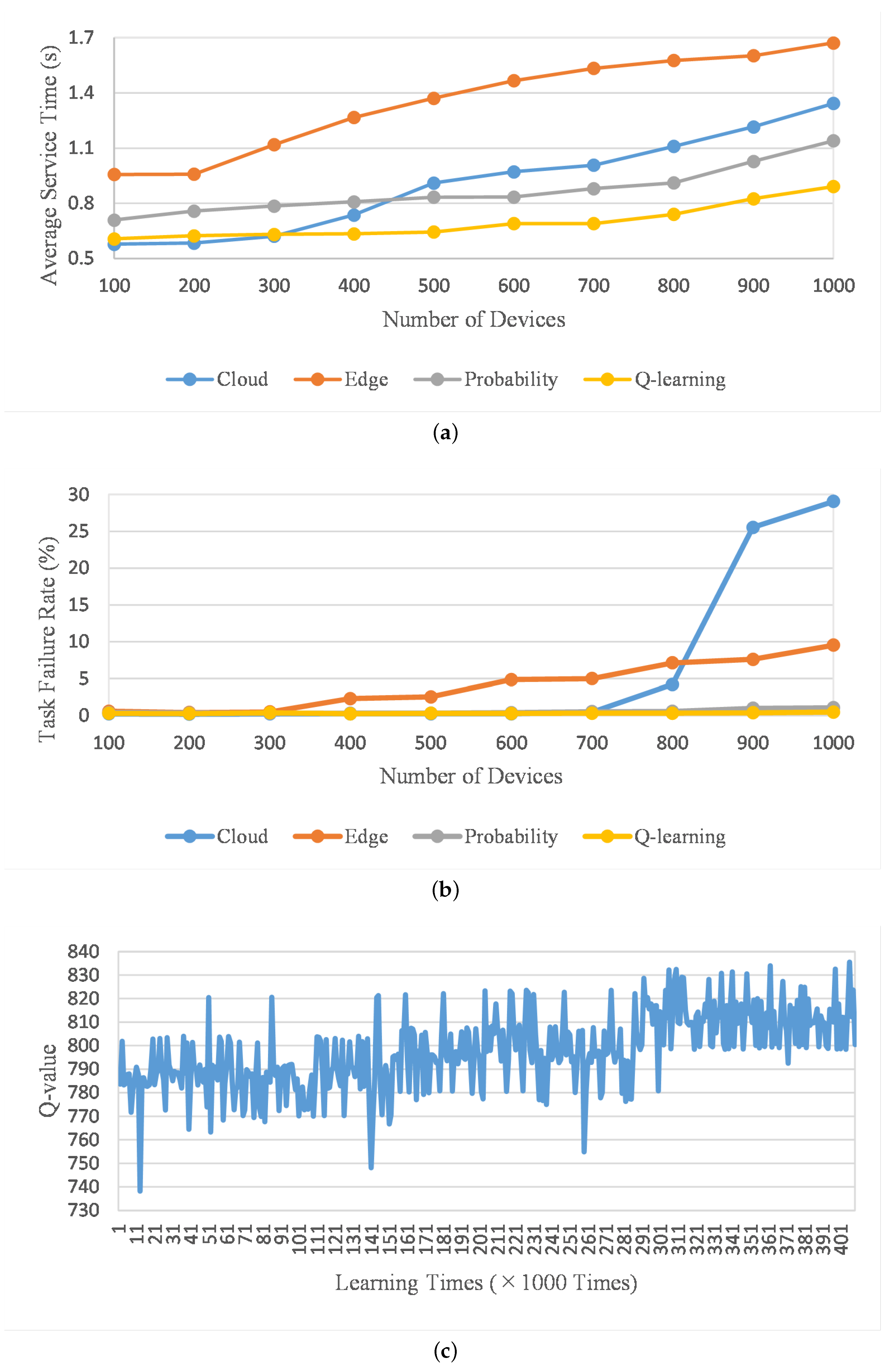

4.3. Evaluation with a Changing Number of Devices

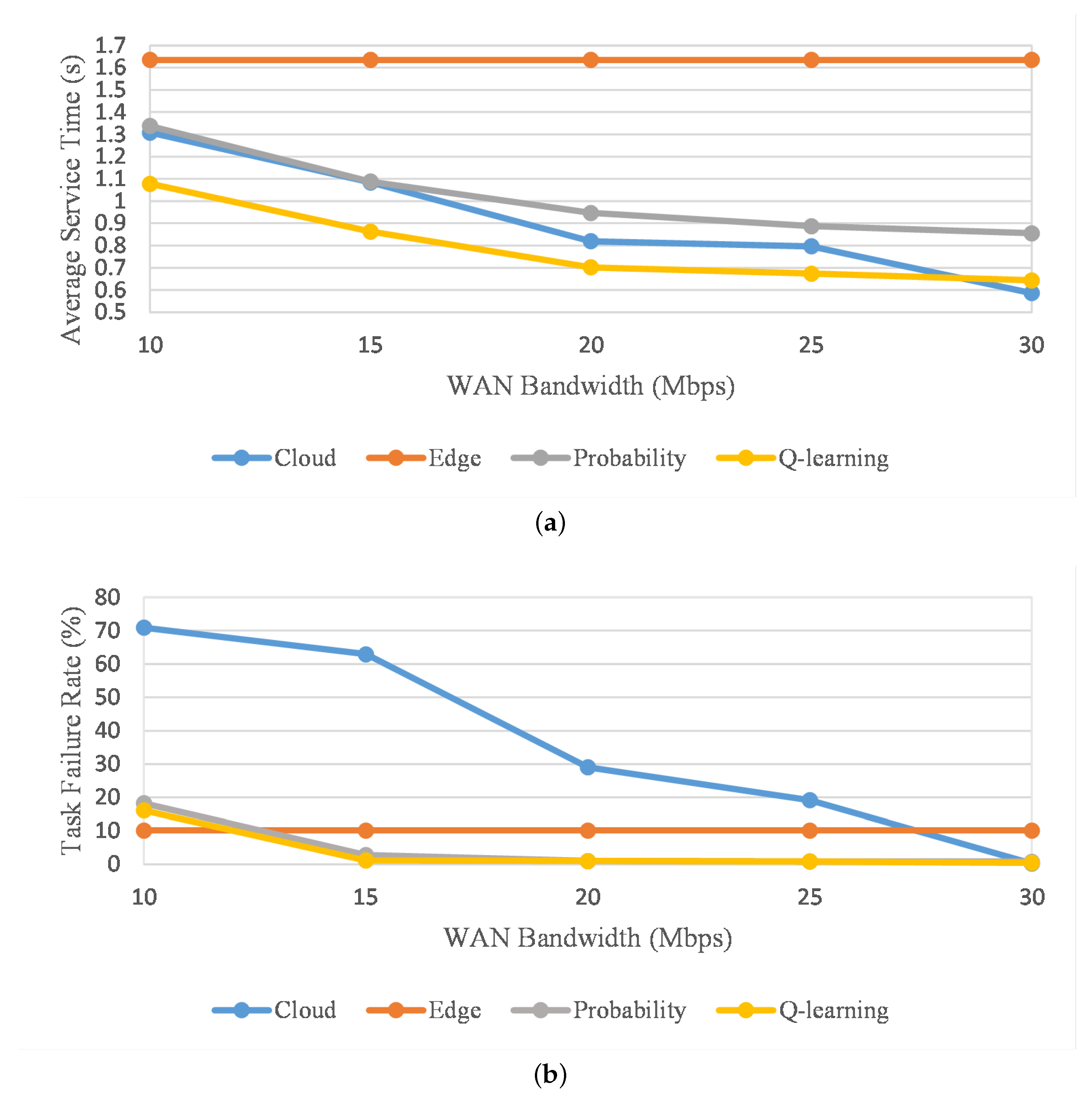

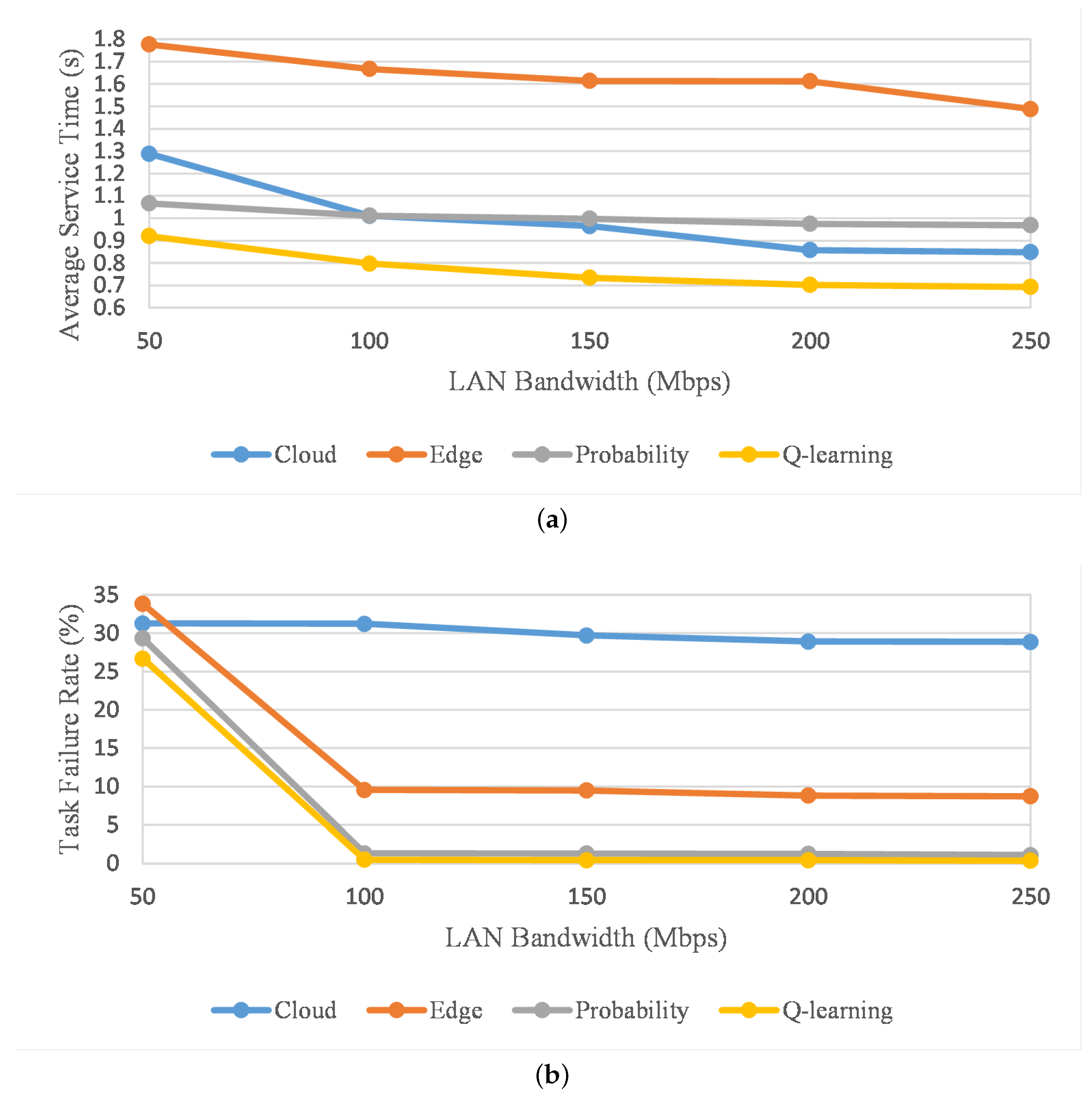

4.4. Evaluation in Bandwidth Change

4.5. Evaluation of Change in Communication Delay

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Element | Definition |
|---|---|
| State S | (1) CPU usage rate (edge server/cloud server); (2) task size; (3) delay sensitivity level (0 or 1) |
| Action A | (1) Task processed at the edge server; (2) task offloaded to the cloud server |
| Reward r | Service time (task processing time + communication delay) |
| Reward R | (1) Task processing failure: R(1) = 0; (2) task processing success (service time longer than average): R(2) = 10; (3) task processing success (service time less than average): R(3) = 100; (4) tasks that require real-time processing are processed by edge servers: R(4) = 50 |
| Value Q | |
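The state, action, and reward definitions above map naturally onto a tabular Q-learning agent. The sketch below makes several assumptions that the table does not settle: CPU usage and task size are discretised into bins of hypothetical width, R(4) is added as a bonus on top of R(2) or R(3) rather than replacing them, a service time exactly equal to the average is treated like the slower case, and the Q value is updated with the standard textbook rule Q(s,a) ← Q(s,a) + α(R + γ·max Q(s′,·) − Q(s,a)) with illustrative values of α and γ.

```python
from collections import defaultdict

EDGE, CLOUD = 0, 1  # the two actions in A


def encode_state(edge_cpu, cloud_cpu, task_size, delay_sensitive,
                 cpu_bins=10, size_bins=(500, 1000)):
    """Discretise S = (CPU usage edge/cloud in %, task size, sensitivity)."""
    def bucket(usage_percent):
        return min(int(usage_percent * cpu_bins // 100), cpu_bins - 1)
    size_level = sum(task_size > b for b in size_bins)  # bin edges are assumed
    return (bucket(edge_cpu), bucket(cloud_cpu), size_level, int(delay_sensitive))


def reward(success, service_time, avg_service_time, delay_sensitive, action):
    """Reward R following cases (1)-(4) in the table."""
    if not success:
        return 0.0                                            # R(1)
    r = 100.0 if service_time < avg_service_time else 10.0    # R(3) / R(2)
    if delay_sensitive and action == EDGE:
        r += 50.0                                             # R(4), assumed additive
    return r


class QAgent:
    def __init__(self, alpha=0.1, gamma=0.9):
        self.q = defaultdict(lambda: [0.0, 0.0])  # Q[s] = [Q(s,EDGE), Q(s,CLOUD)]
        self.alpha, self.gamma = alpha, gamma

    def update(self, s, a, r, s_next):
        # Standard Q-learning update (assumed; the Q row above is empty).
        best_next = max(self.q[s_next])
        self.q[s][a] += self.alpha * (r + self.gamma * best_next - self.q[s][a])
```

A typical step would encode the observed state, pick an action, wait for the task outcome, compute `reward(...)`, and then call `agent.update(s, a, r, s_next)`.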

| Parameter | Value |
|---|---|
| Number of servers | 1 |
| Number of VMs | 4 |
| Number of cores for VM | 4 |
| MIPS of VM | 10,000 |
| RAM of VM (KB) | 32,000 |
| Storage Capacity of VM (KB) | 1,000,000 |

| Parameter | Value |
|---|---|
| Number of servers | 14 |
| Microprocessor | x86 |
| OS | Linux |
| VMM of Host | Xen |
| Number of cores for Host | 8 |
| MIPS of Host | 4000 |
| RAM of Host | 8000 |
| Storage capacity of Host (KB) | 200,000 |
| Number of VMs | 2 |
| VMM of VM | Xen |
| Number of cores for VM | 2 |
| MIPS of VM | 1000 |
| RAM of VM (KB) | 2000 |
| Storage capacity of VM (KB) | 50,000 |

| Parameter | Value |
|---|---|
| Simulation time (min) | 30 |
| LAN bandwidth (Mbps) | 200 |
| WAN bandwidth (Mbps) | 20 |
| LAN delay (ms) | 1 |
| WAN delay (ms) | 100 |
| The interval of VM load check (s) | 0.1 |
| The interval of VM location check (s) | 0.1 |
| Minimum number of devices | 20 |
| Maximum number of devices | 200 |
| Device count size | 20 |
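To give a feel for what the LAN and WAN parameters imply, the following sketch estimates a one-way transfer time as transmission time plus propagation delay. It assumes 1 KB = 1000 bytes, that a single task has the full link bandwidth to itself, and that "Device count size" is the step between the minimum and maximum number of devices; none of these is stated in the table, and the function name is hypothetical.

```python
def transfer_time_s(size_kb, bandwidth_mbps, delay_ms):
    """One-way transfer time: transmission time + link delay (simplified)."""
    bits = size_kb * 1000 * 8
    return bits / (bandwidth_mbps * 1_000_000) + delay_ms / 1000


LAN = dict(bandwidth_mbps=200, delay_ms=1)    # values from the table above
WAN = dict(bandwidth_mbps=20, delay_ms=100)

# Device counts swept in the simulation, assuming a step of 20.
device_counts = list(range(20, 201, 20))

if __name__ == "__main__":
    upload_kb = 150  # one of the upload sizes listed in the application tables
    print("LAN upload (s):", transfer_time_s(upload_kb, **LAN))
    print("WAN upload (s):", transfer_time_s(upload_kb, **WAN))
```

Under these assumptions a 150 KB upload takes roughly 7 ms over the LAN and roughly 160 ms over the WAN, which illustrates why delay-sensitive tasks benefit from edge processing.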

| Parameter | Value |
|---|---|
| Application usage rate (%) | 20 |
| Probability of offloading to the cloud server (%) | 50 |
| Poisson inter-arrival time (s) | 5 |
| Delay sensitivity | 1 |
| Active period (s) | 45 |
| Idle period (s) | 15 |
| Upload data size (KB) | 150 |
| Download data size (KB) | 25 |
| Task size | 200 |
| Required number of cores | 1 |
| VM utilization on the edge server (%) | 5 |
| VM utilization on the cloud server (%) | 0.5 |

| Parameter | Value |
|---|---|
| Application usage rate (%) | 20 |
| Probability of offloading to the cloud server (%) | 50 |
| Poisson inter-arrival time (s) | 30 |
| Delay sensitivity | 1 |
| Active period (s) | 10 |
| Idle period (s) | 20 |
| Upload data size (KB) | 125 |
| Download data size (KB) | 20 |
| Task size | 400 |
| Required number of cores | 1 |
| VM utilization on the edge server (%) | 5 |
| VM utilization on the cloud server (%) | 0.5 |

| Parameter | Value |
|---|---|
| Application usage rate (%) | 30 |
| Probability of offloading to the cloud server (%) | 50 |
| Poisson inter-arrival time (s) | 60 |
| Delay sensitivity | 0 |
| Active period (s) | 60 |
| Idle period (s) | 60 |
| Upload data size (KB) | 2500 |
| Download data size (KB) | 250 |
| Task size | 3000 |
| Required number of cores | 1 |
| VM utilization on the edge server (%) | 20 |
| VM utilization on the cloud server (%) | 2 |

| Parameter | Value |
|---|---|
| Application usage rate (%) | 30 |
| Probability of offloading to the cloud server (%) | 50 |
| Poisson inter-arrival time (s) | 7 |
| Delay sensitivity | 0 |
| Active period (s) | 15 |
| Idle period (s) | 45 |
| Upload data size (KB) | 2000 |
| Download data size (KB) | 200 |
| Task size | 200 |
| Required number of cores | 1 |
| VM utilization on the edge server (%) | 10 |
| VM utilization on the cloud server (%) | 1 |
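The application tables above describe each workload by a Poisson inter-arrival time and alternating active and idle periods. One plausible reading, sketched below, is that a device generates tasks with exponentially distributed inter-arrival times during each active period and stays silent during each idle period. How the simulator actually combines these parameters is an assumption here, and `generate_arrivals` is a hypothetical helper.

```python
import random


def generate_arrivals(mean_interarrival_s, active_s, idle_s, sim_time_s, rng):
    """Return sorted task arrival times (s) for one device."""
    arrivals = []
    period_start = 0.0
    while period_start < sim_time_s:
        active_end = min(period_start + active_s, sim_time_s)
        # Exponential gaps with the given mean yield a Poisson arrival process.
        t = period_start + rng.expovariate(1.0 / mean_interarrival_s)
        while t < active_end:
            arrivals.append(t)
            t += rng.expovariate(1.0 / mean_interarrival_s)
        period_start += active_s + idle_s  # skip the idle period
    return arrivals


if __name__ == "__main__":
    # First application table: 5 s inter-arrival, 45 s active, 15 s idle,
    # over the 30-minute (1800 s) simulation time.
    times = generate_arrivals(5, 45, 15, 1800, random.Random(42))
    print(len(times), "tasks generated")
```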

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, Z.; Peng, C.; Yoshinaga, T.; Wu, C. A Q-Learning-Based Load Balancing Method for Real-Time Task Processing in Edge-Cloud Networks. Electronics 2023, 12, 3254. https://doi.org/10.3390/electronics12153254
