A Two-Stage Image Inpainting Technique for Old Photographs Based on Transfer Learning

Abstract

1. Introduction

- In this paper, a two-stage image inpainting network is constructed that decomposes inpainting into two collaborative subtasks: structure generation, and texture synthesis under structural constraints. A window cross aggregation-based Transformer module is embedded in the generator to capture long-range dependencies in the image. This overcomes the limitation that convolutional operations extract only local features and strengthens the model's ability to acquire long-range contextual information during inpainting.

- Transfer learning is applied to image inpainting. The improved two-stage image inpainting network proposed in this paper serves as the base network, and its generator is decoupled into a feature extractor and a classifier, namely the encoder and the decoder, respectively. A domain-invariant feature extractor is obtained by training a minimax game on source-domain and target-domain data. This feature extractor can then be combined with the original decoder to repair old photographs, enabling inpainting on a small-sample old photo dataset.

- Experiments show that the two-stage network constructed in this paper achieves better inpainting performance, and that inpainting old photos with transfer learning gives better results than inpainting them without it, which demonstrates the effectiveness of the method.
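
The minimax game between the feature extractor and a domain discriminator can be illustrated with the loss pair used in adversarial discriminative domain adaptation: the discriminator learns to label source-domain features 1 and target-domain features 0, while the target feature extractor is updated so that its features are labeled 1. This is a minimal sketch that assumes the discriminator outputs probabilities and that the formulation follows this standard inverted-label scheme; the exact losses used by the authors are not given here, and the function names are hypothetical.

```python
import math
from typing import Sequence

EPS = 1e-12  # keeps log() away from 0


def bce(prob: float, label: float) -> float:
    """Binary cross-entropy for a single predicted probability."""
    p = min(max(prob, EPS), 1.0 - EPS)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))


def discriminator_loss(d_source: Sequence[float], d_target: Sequence[float]) -> float:
    """Domain discriminator step: source features -> label 1, target features -> label 0."""
    losses = [bce(p, 1.0) for p in d_source] + [bce(p, 0.0) for p in d_target]
    return sum(losses) / len(losses)


def encoder_adversarial_loss(d_target: Sequence[float]) -> float:
    """Target feature-extractor step: push the discriminator to call target features 'source'."""
    return sum(bce(p, 1.0) for p in d_target) / len(d_target)
```

When the discriminator can no longer tell the domains apart and outputs 0.5 for every feature, both losses equal ln 2 (about 0.693), which is the point the two players are driven toward.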

2. Related Work

2.1. Image Inpainting Technique

2.2. Transfer Learning Method

3. Two-Stage Image Inpainting of Old Photos Based on Transfer Learning

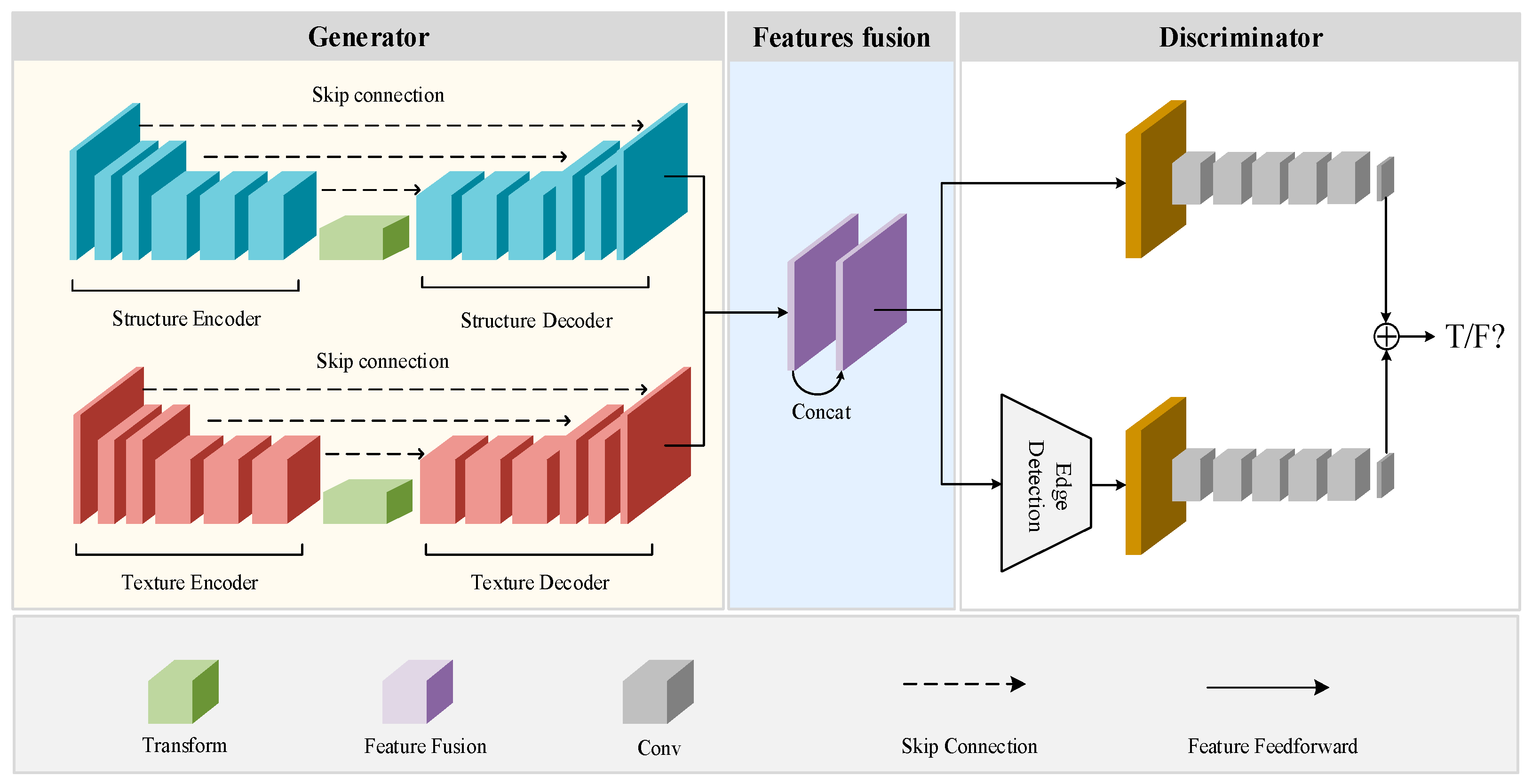

3.1. Two-Stage Image Inpainting Network

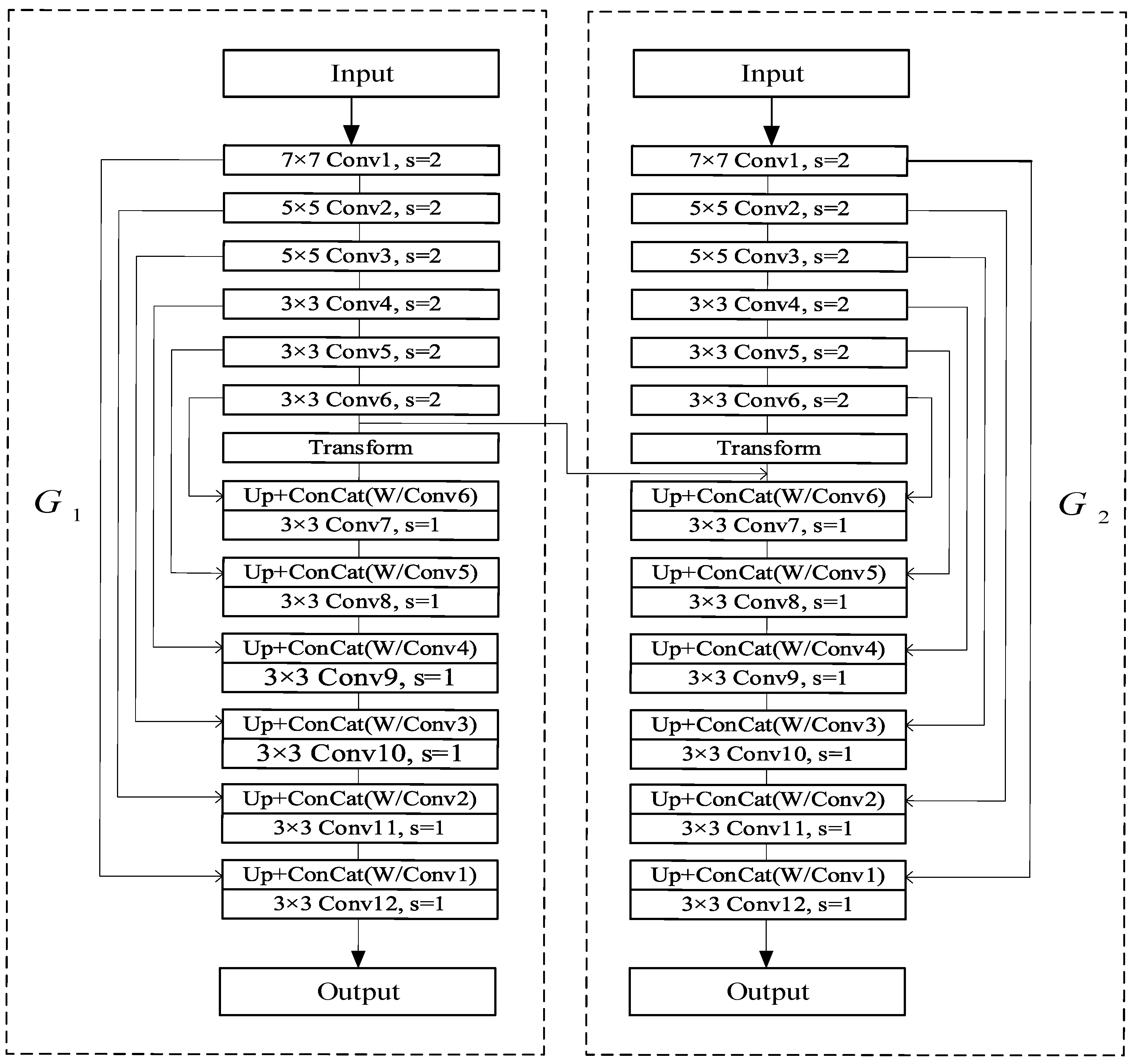

3.1.1. Generator Network

3.1.2. Dual Discriminators

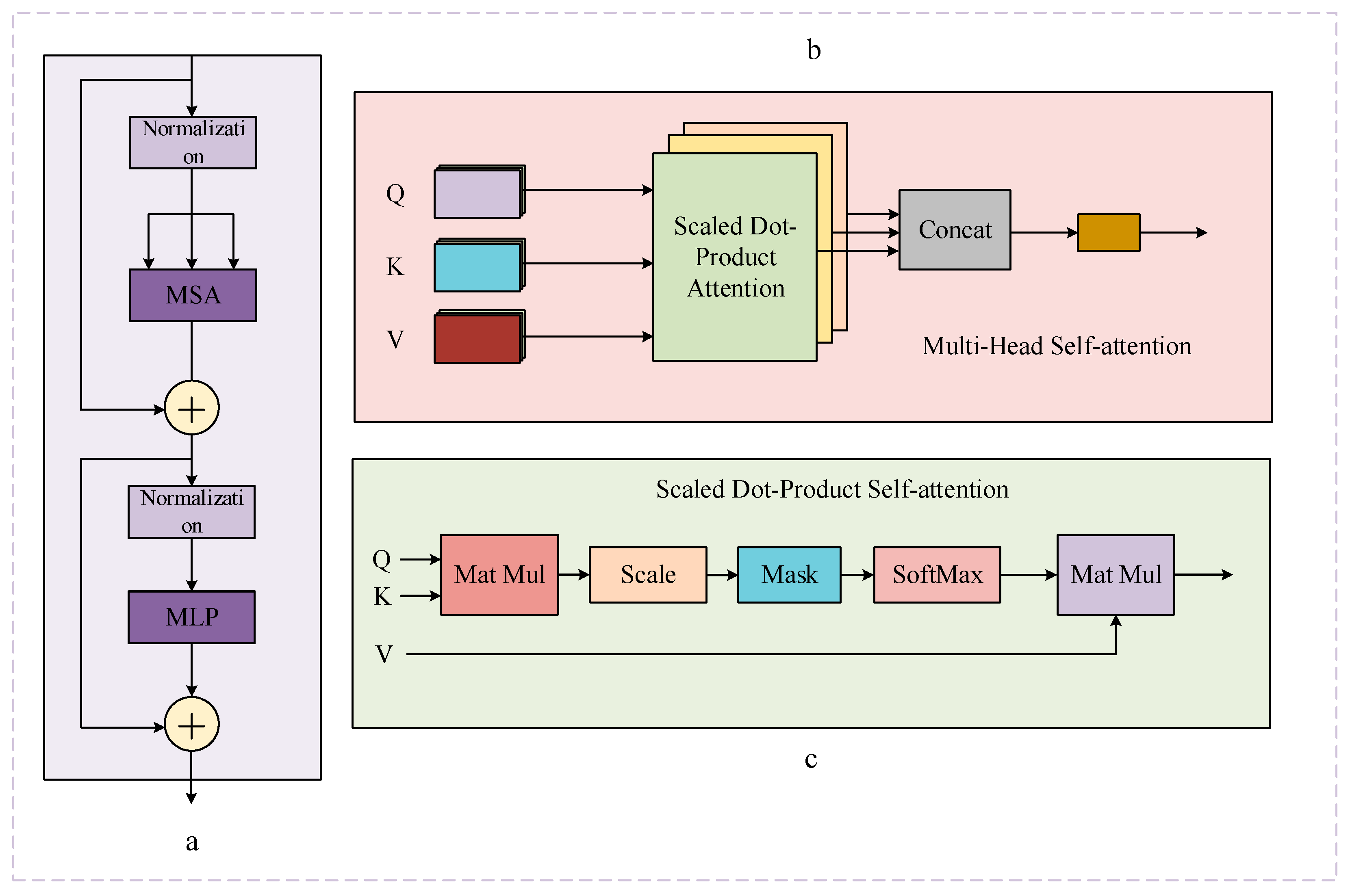

3.1.3. Aggregation Transform
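
Window-based Transformer modules compute self-attention inside local windows and then exchange information across windows. The first half of that idea can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the window cross aggregation module itself: the query, key, and value projections are identity maps, there is a single attention head, and the cross-window aggregation step is omitted. The names `window_attention` and `attend` are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Grid = List[List[Vector]]  # H x W grid of C-dimensional token vectors


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity Q/K/V projections (assumption)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    out: List[Vector] = []
    for q in tokens:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in tokens])
        # each output token is a convex combination of the tokens in its window
        out.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(dim)])
    return out


def window_attention(grid: Grid, ws: int) -> Grid:
    """Apply self-attention independently inside non-overlapping ws x ws windows."""
    h, w = len(grid), len(grid[0])
    if h % ws or w % ws:
        raise ValueError("H and W must be divisible by the window size")
    out: Grid = [[[] for _ in range(w)] for _ in range(h)]
    for top in range(0, h, ws):
        for left in range(0, w, ws):
            coords = [(i, j) for i in range(top, top + ws) for j in range(left, left + ws)]
            mixed = attend([grid[i][j] for i, j in coords])
            for (i, j), vec in zip(coords, mixed):
                out[i][j] = vec
    return out
```

Because each ws × ws window is processed independently, attention covers on the order of H·W·ws² token pairs instead of (H·W)² for global attention; a cross-window mechanism, such as the aggregation step this section names, is then needed to carry information between windows.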

3.1.4. The Joint Loss Function

- (1) Feature content loss

- (2) Reconstruction loss

- (3) Perceptual loss [39]

- (4) Style loss

- (5) Adversarial loss [42]
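
Several of these loss terms have standard forms in the inpainting literature. The sketch below assumes that the reconstruction loss is an L1 pixel loss, that the style loss compares Gram matrices of feature maps (as in [39]), and that the adversarial loss is the relativistic average variant described in [42]; the perceptual loss is the same `l1_loss` applied to features from a pretrained network. The feature content loss is not sketched because its definition is not given here. Feature maps are plain nested lists, and all function names are hypothetical.

```python
import math
from typing import List, Sequence

Features = List[List[float]]  # C channels, each a flattened list of N spatial values


def l1_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error (reconstruction loss; perceptual loss when applied to features)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def flatten(matrix: Features) -> List[float]:
    return [v for row in matrix for v in row]


def gram(feat: Features) -> Features:
    """Gram matrix G[i][j] = <F_i, F_j> / (C * N) of a C x N feature map."""
    c, n = len(feat), len(feat[0])
    return [[sum(a * b for a, b in zip(feat[i], feat[j])) / (c * n) for j in range(c)]
            for i in range(c)]


def style_loss(feat_pred: Features, feat_gt: Features) -> float:
    """L1 distance between Gram matrices of predicted and ground-truth features."""
    return l1_loss(flatten(gram(feat_pred)), flatten(gram(feat_gt)))


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x)) = -softplus(-x)."""
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def ragan_d_loss(c_real: Sequence[float], c_fake: Sequence[float]) -> float:
    """Relativistic average discriminator loss on raw critic scores C(x)."""
    mean_real = sum(c_real) / len(c_real)
    mean_fake = sum(c_fake) / len(c_fake)
    # real samples should score higher than the average fake sample
    loss_real = -sum(log_sigmoid(r - mean_fake) for r in c_real) / len(c_real)
    # fake samples should score lower than the average real sample;
    # log(1 - sigmoid(x)) is computed as log_sigmoid(-x)
    loss_fake = -sum(log_sigmoid(-(f - mean_real)) for f in c_fake) / len(c_fake)
    return loss_real + loss_fake


def ragan_g_loss(c_real: Sequence[float], c_fake: Sequence[float]) -> float:
    """Generator loss: the discriminator loss with the roles of real and fake swapped."""
    return ragan_d_loss(c_fake, c_real)
```

The joint loss is then a weighted sum of the individual terms; the weights are hyperparameters that are not given in this section.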

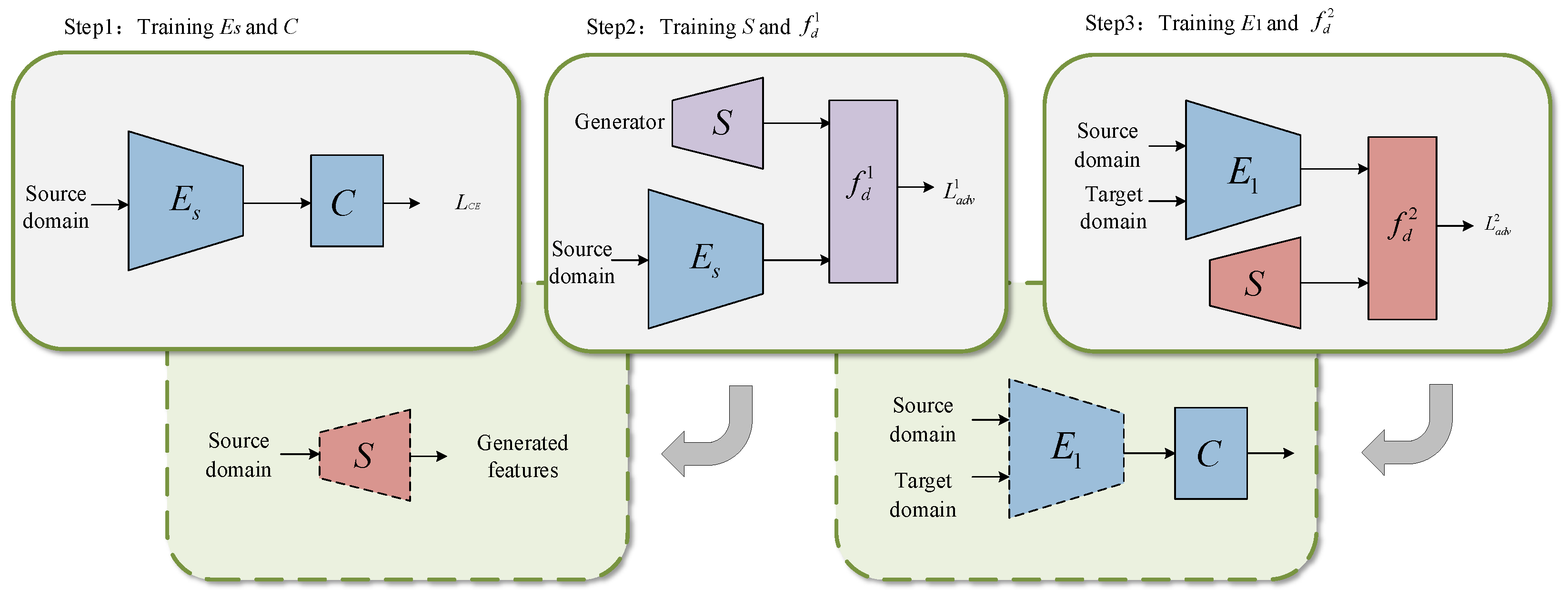

3.2. Training of the Model

4. Experimental Analysis

4.1. Analysis of Experimental Results of Inpainting Model

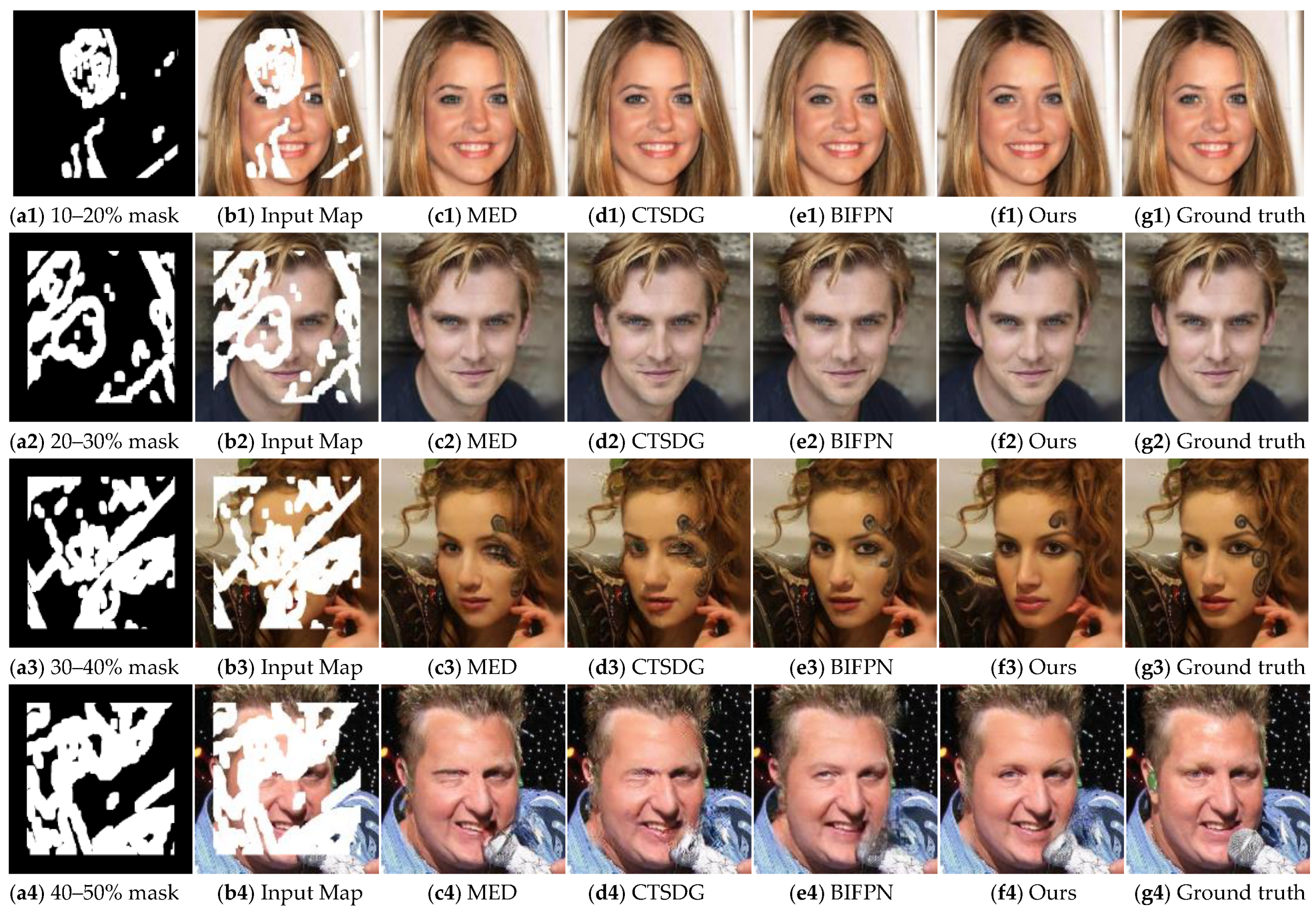

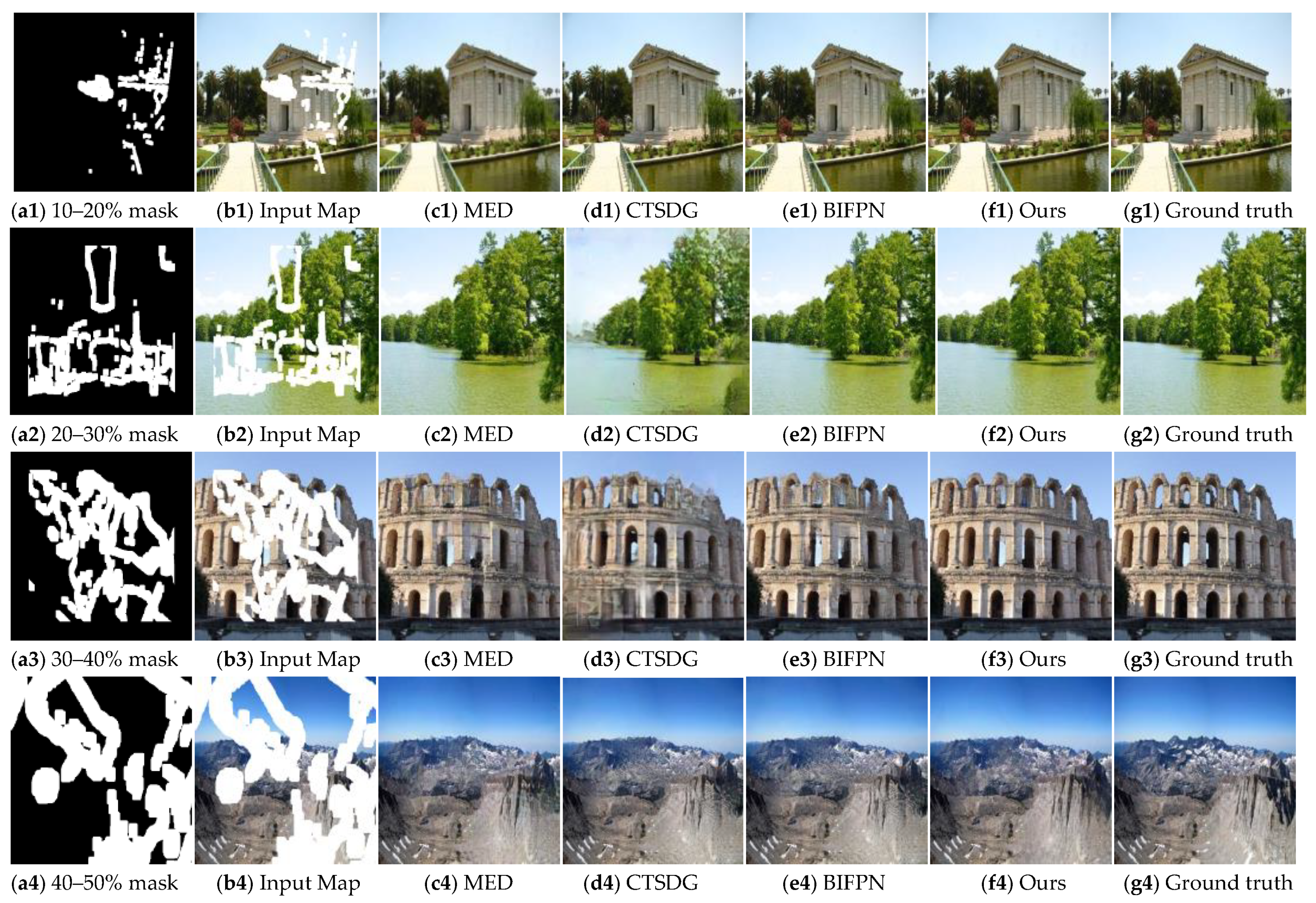

4.1.1. Qualitative Analysis

4.1.2. Quantitative Analysis

4.2. Analysis of Old Photo Inpainting Results with Transfer Learning

4.2.1. Experimental Content

4.2.2. Dataset Acquisition and Pre-Processing

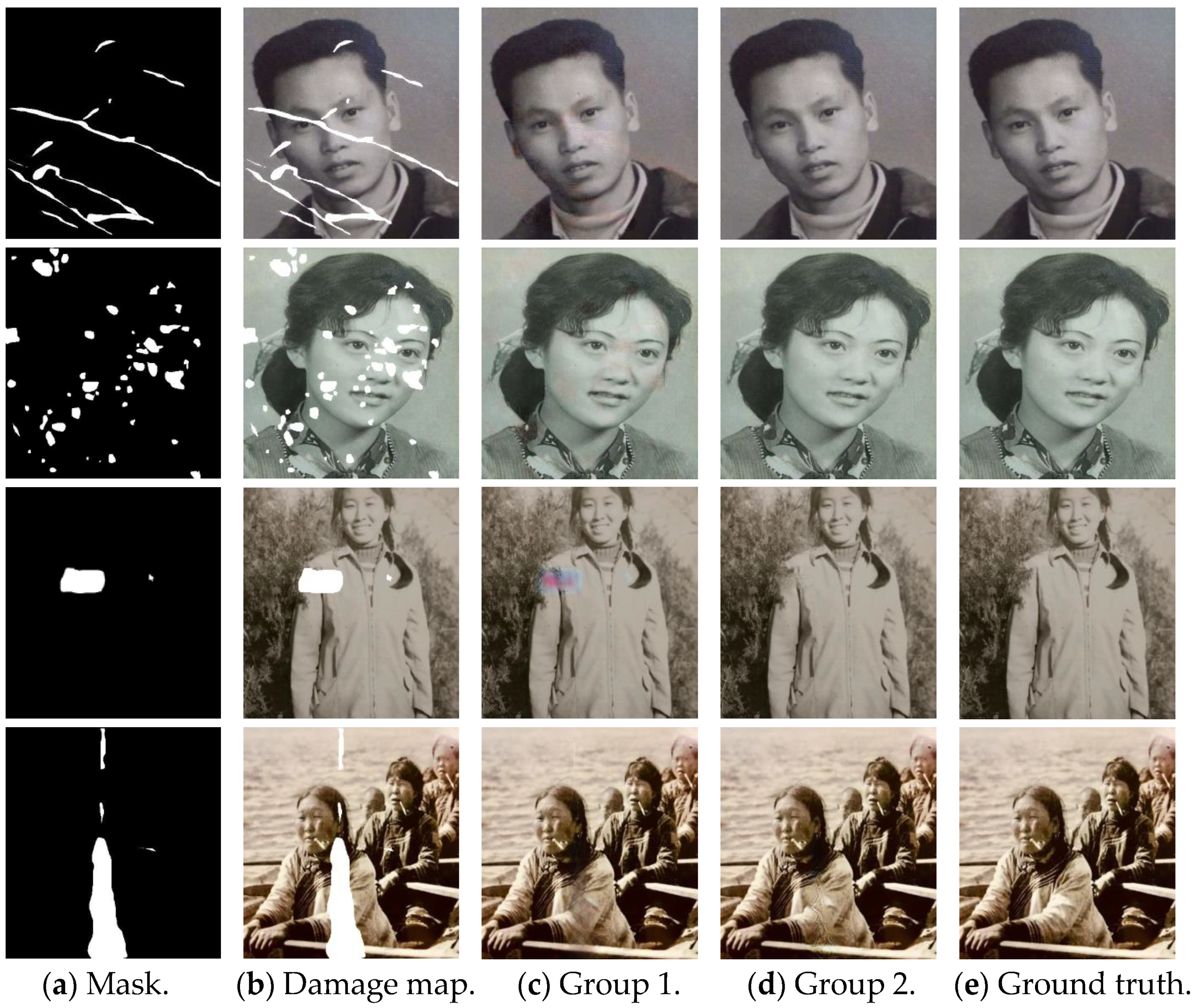

4.2.3. Analysis of Experimental Results

1. Subjective evaluation

2. Objective evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

References

- Criminisi, A.B.; Perez, P.; Toyama, K. Region Filling and Object Removal by Exemplar-Based Image Inpainting. IEEE Trans. Image Process. 2004, 13, 1200–1212.

- Li, L.; Chen, M.J.; Shi, H.D.; Duan, Z.X.; Xiong, X.Z. Multiscale Structure and Texture Feature Fusion for Image Inpainting. IEEE Access 2022, 10, 82668–82679.

- Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image Inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 23–28 July 2000; pp. 417–424.

- Levin, A.; Zomet, A.; Weiss, Y. Learning how to inpaint from global image statistics. In Proceedings of the ICCV, Nice, France, 13–16 October 2003; pp. 305–312.

- Li, L.; Chen, M.J.; Xiong, X.Z.; Yang, Z.W.; Zhang, J.S. A Continuous Nonlocal Total Variation Image Restoration Model. Radio Eng. 2021, 51, 864–869.

- Darabi, S.; Shechtman, E.; Barnes, C.; Goldman, D.B.; Sen, P. Image melding: Combining inconsistent images using patch-based synthesis. ACM Trans. Graph. 2012, 31, 4.

- Efros, A.A.; Freeman, W.T. Image quilting for texture synthesis and transfer. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, New York, NY, USA, 12–17 August 2001; pp. 341–346.

- Yeh, R.A.; Chen, C.; Lim, T.Y.; Schwing, A.G.; Hasegawa-Johnson, M.; Do, M.N. Semantic image inpainting with deep generative models. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 6882–6890.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the NIPS, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Yu, X. Research on Face Image Inpainting Method Based on Generative Adversarial Network. Master’s Thesis, Southwest University of Science and Technology, Mianyang, China, 2022.

- Pathak, D.; Krähenbühl, P.; Donahue, J.; Darrell, T.; Efros, A.A. Context Encoders: Feature Learning by Inpainting. In Proceedings of the CVPR, Las Vegas, NV, USA, 26 June–1 July 2016.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the NIPS, Montreal, QC, Canada, 8–13 December 2014.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the ECCV, Zurich, Switzerland, 6–12 September 2014.

- Azizpour, H.; Razavian, A.S.; Sullivan, J.; Maki, A.; Carlsson, S. Factors of transferability for a generic convnet representation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1790–1802.

- Zhao, M.; Kang, M.; Tang, B.; Pecht, M. Deep residual networks with dynamically weighted wavelet coefficients for fault diagnosis of planetary gearboxes. IEEE Trans. Ind. Electron. 2017, 65, 4290–4300.

- Nazeri, K.; Ng, E.; Joseph, T.; Qureshi, F.; Ebrahimi, M. EdgeConnect: Structure guided image inpainting using edge prediction. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019.

- Xiong, W.; Yu, J.; Lin, Z.; Yang, J.; Lu, X.; Barnes, C.; Luo, J. Foreground-aware image inpainting. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Ren, Y.; Yu, X.; Zhang, R.; Li, T.H.; Liu, S.; Li, G. StructureFlow: Image inpainting via structure-aware appearance flow. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Li, J.; He, F.; Zhang, L.; Du, B.; Tao, D. Progressive reconstruction of visual structure for image inpainting. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Yang, J.; Qi, Z.Q.; Shi, Y. Learning to incorporate structure knowledge for image inpainting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Liu, H.; Jiang, B.; Song, Y.; Huang, W.; Yang, C. Rethinking image inpainting via a mutual encoder-decoder with feature equalizations. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

- Guo, X.; Yang, H.; Huang, D. Image Inpainting via Conditional Texture and Structure Dual Generation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 14114–14123.

- Liu, H.; Wan, Z.; Huang, W.; Song, Y.; Han, X.; Liao, J. PD-GAN: Probabilistic diverse GAN for image inpainting. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9367–9376.

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. In Proceedings of the International Conference on Artificial Neural Networks, Barcelona, Spain, 5–7 October 2018; pp. 270–279.

- Ge, W.F.; Yu, Y.Z. Borrowing treasures from the wealthy: Deep transfer learning through selective joint fine-tuning. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 1086–1095.

- Cui, Y.; Song, Y.; Sun, C.; Howard, A.; Belongie, S. Large scale fine-grained categorization and domain-specific transfer learning. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4109–4118.

- Long, M.; Cao, Y.; Wang, J.; Jordan, M.I. Learning transferable features with deep adaptation networks. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 97–105.

- Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep domain confusion: Maximizing for domain invariance. arXiv 2014, arXiv:1412.3474.

- Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Schölkopf, B.; Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 2012, 13, 723–773.

- Haeusser, P.; Frerix, T.; Mordvintsev, A.; Cremers, D. Associative domain adaptation. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017.

- Chen, H.; Zhang, Z.; Deng, J.; Yin, X. A Novel Transfer-Learning Network for Image Inpainting. In Proceedings of the ICONIP, Sanur, Bali, Indonesia, 8–12 December 2021; pp. 20–27.

- Zhao, Y.M.; Zhang, Y.X.; Sun, Z.S. Unsupervised Transfer Learning for Generative Image Inpainting with Adversarial Edge Learning. In Proceedings of the 2022 5th International Conference on Sensors, Signal and Image Processing, Birmingham, UK, 28–30 October 2022; pp. 17–22.

- Li, L.; Chen, M.J.; Shi, H.D.; Liu, T.T.; Deng, Y.S. Research on Image Inpainting Algorithm Based on BIFPN-GAN Feature Fusion. Radio Eng. 2022, 52, 2141–2148.

- Yu, F.; Koltun, V. Multi-scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

- Lou, L.; Zang, S. Research on Edge Detection Method Based on Improved HED Network. J. Phys. Conf. Ser. 2020, 1607, 012068.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the NIPS, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the CVPR, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Jolicoeur-Martineau, A. The relativistic discriminator: A key element missing from standard GAN. In Proceedings of the ICLR, Vancouver, BC, Canada, 30 April–3 May 2018.

- Liu, G.; Reda, F.A.; Shih, K.J.; Wang, T.C.; Tao, A.; Catanzaro, B. Image Inpainting for Irregular Holes Using Partial Convolutions. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 85–100.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

| Layer Name | Convolution Kernel Size | Stride | Activation Function | Output Feature Maps |
|---|---|---|---|---|
| Convolutional layer 1 | 4 × 4 | 2 | LeakyReLU | 64 × 128 × 128 |
| Convolutional layer 2 | 4 × 4 | 2 | LeakyReLU | 128 × 64 × 64 |
| Convolutional layer 3 | 4 × 4 | 2 | LeakyReLU | 256 × 32 × 32 |
| Convolutional layer 4 | 4 × 4 | 1 | LeakyReLU | 512 × 16 × 16 |
| Convolutional layer 5 | 4 × 4 | 1 | LeakyReLU | 512 × 4 × 4 |
| Fully connected layer | - | - | Sigmoid | 512 × 1 × 1 |
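
The spatial sizes in this table follow the usual convolution output formula, floor((n + 2p − k) / s) + 1, for input size n, kernel k, stride s, and padding p. Assuming a 256 × 256 input and a padding of 1 (the padding is not listed in the table), the sketch below reproduces the 128, 64, and 32 sizes of convolutional layers 1–3; it checks only those three layers. The helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a 2-D convolution without dilation."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_sizes(size: int, layers: Sequence[Tuple[int, int, int]]) -> List[int]:
    """Propagate a square input size through (kernel, stride, padding) layers."""
    sizes = []
    for kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


# layers 1-3: 4 x 4 kernels, stride 2, assumed padding 1
print(trace_sizes(256, [(4, 2, 1)] * 3))
```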

| Metric | Mask Rate | MED | CTSDG | BIFPN | Ours |
|---|---|---|---|---|---|
| PSNR↑ | 10–20% | 28.75 | 32.67 | 32.11 | 32.03 |
| | 20–30% | 26.97 | 28.13 | 28.67 | 28.44 |
| | 30–40% | 23.67 | 25.32 | 25.81 | 26.43 |
| | 40–50% | 22.07 | 23.46 | 23.56 | 24.79 |
| SSIM↑ | 10–20% | 0.922 | 0.958 | 0.960 | 0.953 |
| | 20–30% | 0.904 | 0.917 | 0.924 | 0.931 |
| | 30–40% | 0.837 | 0.852 | 0.863 | 0.882 |
| | 40–50% | 0.811 | 0.826 | 0.833 | 0.841 |
| FID↓ | 10–20% | 5.63 | 2.61 | 2.67 | 2.95 |
| | 20–30% | 6.79 | 3.74 | 3.24 | 3.11 |
| | 30–40% | 8.64 | 5.35 | 5.02 | 4.78 |
| | 40–50% | 9.11 | 7.69 | 7.63 | 7.11 |
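
PSNR, reported in dB with higher values better, is 10·log10(MAX² / MSE). A minimal sketch, assuming 8-bit images flattened into equal-length pixel lists (MAX = 255); the exact evaluation settings, such as color space and data range, are not stated here, and `psnr` is a hypothetical helper.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```

For example, two images that differ by one intensity level at every pixel have MSE = 1 and a PSNR of about 48.13 dB.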

| Metric | Mask Rate | MED | CTSDG | BIFPN | Ours |
|---|---|---|---|---|---|
| PSNR ↑ | 10–20% | 28.05 | 30.54 | 31.09 | 31.86 |
| | 20–30% | 25.44 | 26.55 | 26.61 | 27.14 |
| | 30–40% | 22.89 | 23.73 | 24.17 | 25.71 |
| | 40–50% | 21.76 | 22.54 | 22.78 | 23.64 |
| SSIM ↑ | 10–20% | 0.924 | 0.929 | 0.934 | 0.926 |
| | 20–30% | 0.874 | 0.897 | 0.906 | 0.907 |
| | 30–40% | 0.846 | 0.856 | 0.862 | 0.873 |
| | 40–50% | 0.811 | 0.826 | 0.834 | 0.842 |
| FID ↓ | 10–20% | 5.71 | 4.11 | 3.88 | 3.16 |
| | 20–30% | 6.59 | 5.21 | 4.16 | 4.07 |
| | 30–40% | 9.14 | 7.68 | 7.11 | 6.89 |
| | 40–50% | 11.54 | 9.13 | 8.75 | 8.18 |
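
SSIM compares luminance, contrast, and structure. Standard implementations average SSIM over local Gaussian-weighted windows; the sketch below is a simplified single-window (global) version using the usual constants C1 = (0.01L)² and C2 = (0.03L)², where L is the data range. It assumes 8-bit images flattened to lists and uses population (1/N) statistics, so its values will not match windowed SSIM exactly; `global_ssim` is a hypothetical name.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 255.0) -> float:
    """SSIM over one window covering the whole image (simplified, not windowed SSIM)."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(x)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # luminance/contrast/structure terms combined into the standard two-factor form
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```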

| Dataset | Train Set | Validation Set | Test Set |
|---|---|---|---|
| Old photos | 252 | 32 | 32 |
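
The table gives only the subset sizes (252, 32, and 32 of 316 images). A reproducible way to obtain a split of these sizes is a seeded shuffle; this is an assumption for illustration, since how the images were assigned to subsets is not described here, and the function and file names below are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], n_val: int, n_test: int,
                  seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle once with a fixed seed, then carve off validation and test subsets."""
    if n_val + n_test > len(items):
        raise ValueError("not enough items for the requested split")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG, so global random state is untouched
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, val, test


files = [f"old_photo_{i:03d}.png" for i in range(316)]
train, val, test = split_dataset(files, n_val=32, n_test=32, seed=42)
```

With a fixed seed the same split is produced on every run, which keeps the validation and test images unseen during training across repeated experiments.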

| Methods | PSNR | SSIM | FID |
|---|---|---|---|
| Group 1 | 32.42 | 0.943 | 3.62 |
| Group 2 | 36.25 | 0.971 | 2.01 |
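
FID, where lower is better, measures the distance between Gaussian fits to deep features of real and generated images. Its standard definition uses the means μ_r, μ_g and covariances Σ_r, Σ_g of the real and generated feature sets; common implementations take these features from an Inception-v3 pooling layer, although the feature extractor used for these tables is not stated here.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```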

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, M.; Duan, Z.; Li, L.; Yi, S.; Cui, A. A Two-Stage Image Inpainting Technique for Old Photographs Based on Transfer Learning. Electronics 2023, 12, 3221. https://doi.org/10.3390/electronics12153221
