Constructing Maps for Autonomous Robotics: An Introductory Conceptual Overview

Abstract

1. Introduction

2. Building Maps—Core Concepts in SLAM

2.1. Problem Formulation, Concepts

2.1.1. Full SLAM

2.1.2. Filter SLAM

- This makes the problem more tractable for algorithms that take the form of a Bayes filter, which operate by repeatedly applying a state transition function followed by a measurement update. Note that probability distributions over hidden states x are referred to as beliefs in [8] and in works derived from it.

- Prior state estimates are marginalized out, and information about them is retained only in the beliefs over the landmarks and the current state. With non-linear state propagation and observation functions, this means that these functions cannot be re-linearized around improved estimates of past states, which contributes to drift.

- There is a strong distinction between odometry and measurement constraints: the former are used in the state propagation step and the latter in measurement updates.
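The predict/update cycle described in the points above can be sketched for the simplest possible case. The following is a minimal illustration, assuming a one-dimensional robot position with a Gaussian belief (a scalar Kalman filter). The names `Belief`, `predict`, and `update`, as well as all noise values, are hypothetical and are not taken from [8] or from any particular library. Odometry enters only in `predict`, measurements enter only in `update`, and only the current state is kept, so earlier states are implicitly marginalized out.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """Gaussian belief over a scalar state (hypothetical helper type)."""
    mean: float
    var: float


def predict(belief: Belief, odom: float, odom_var: float) -> Belief:
    """State propagation: shift the mean by the odometry increment
    and inflate the variance by the odometry noise."""
    return Belief(belief.mean + odom, belief.var + odom_var)


def update(belief: Belief, z: float, meas_var: float) -> Belief:
    """Measurement update: blend prediction and measurement,
    weighted by their relative uncertainty (the Kalman gain)."""
    gain = belief.var / (belief.var + meas_var)
    mean = belief.mean + gain * (z - belief.mean)
    var = (1.0 - gain) * belief.var
    return Belief(mean, var)


if __name__ == "__main__":
    b = Belief(mean=0.0, var=1.0)
    b = predict(b, odom=1.0, odom_var=1.0)   # mean 1.0, var 2.0
    b = update(b, z=2.0, meas_var=2.0)       # gain 0.5 -> mean 1.5, var 1.0
    print(b)
```

Because the filter overwrites its belief at every step, there is no stored history to revisit when a later measurement suggests that an earlier linearization point was poor. In this linear toy case nothing is lost, but with non-linear models that is the limitation noted in the second point.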

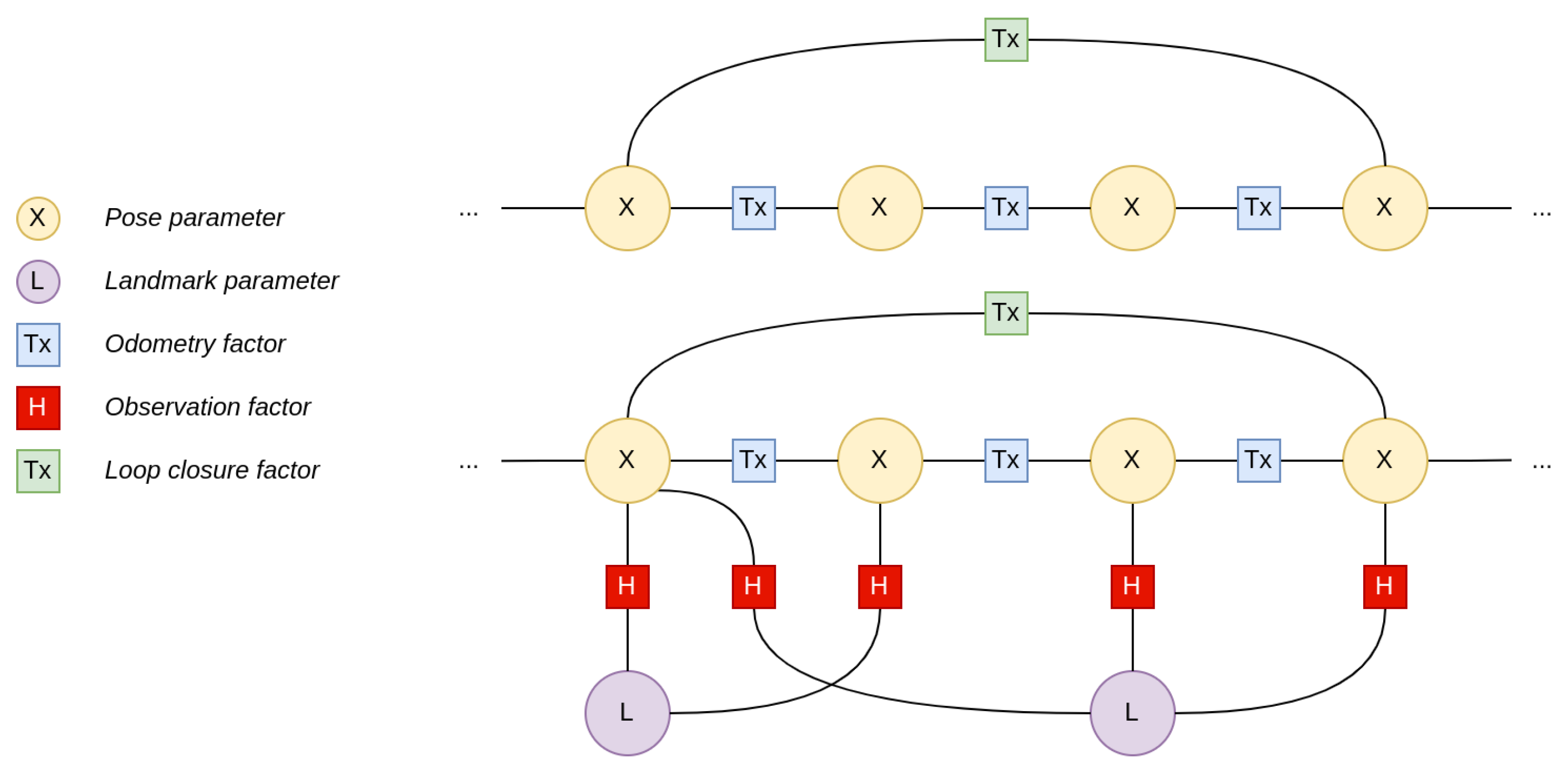

2.1.3. Smoothing SLAM and Factor Graphs

2.2. Representative Examples

2.3. Summary

3. Types of Maps—Metric, Topological, Semantic

3.1. Spatial Map Representations

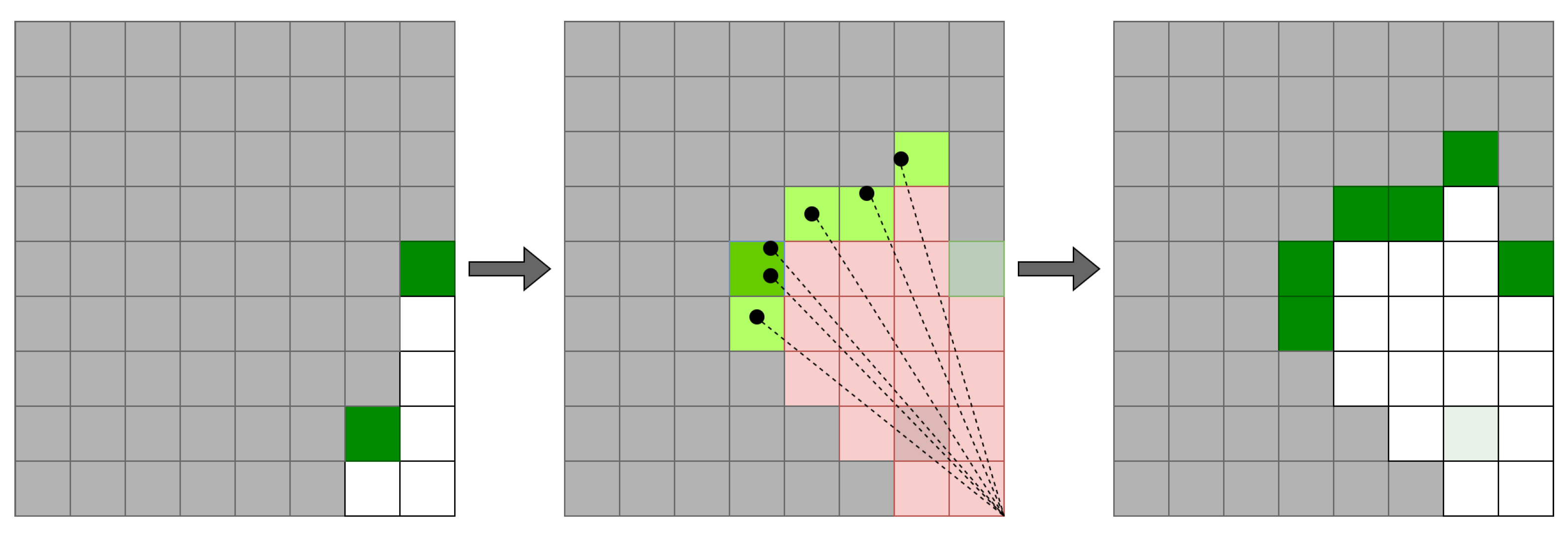

3.1.1. Occupancy Grid Maps

3.1.2. Surface Representations

3.1.3. Implicit Scene Models

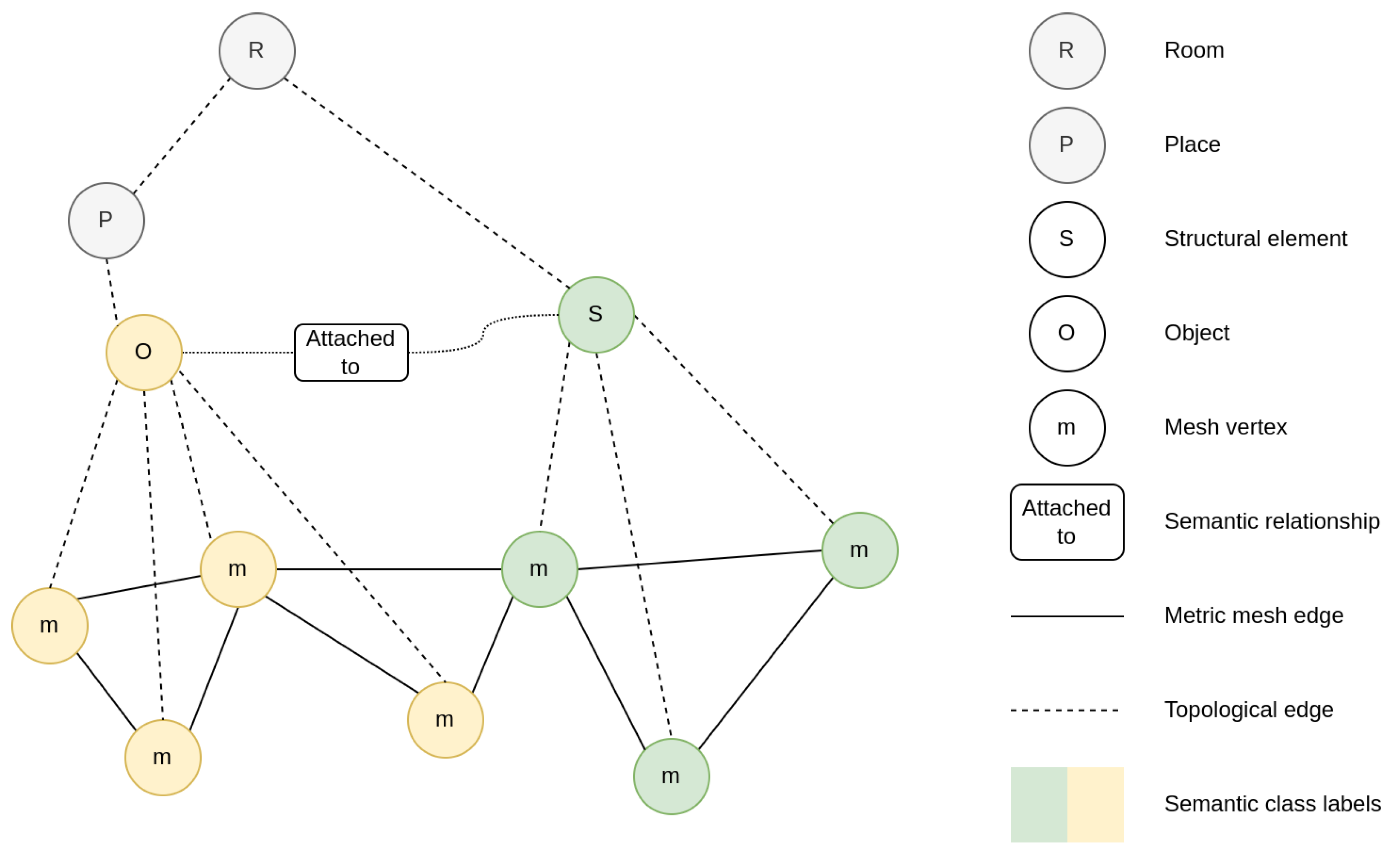

3.2. Scene Graphs and Topologies

3.3. Semantics

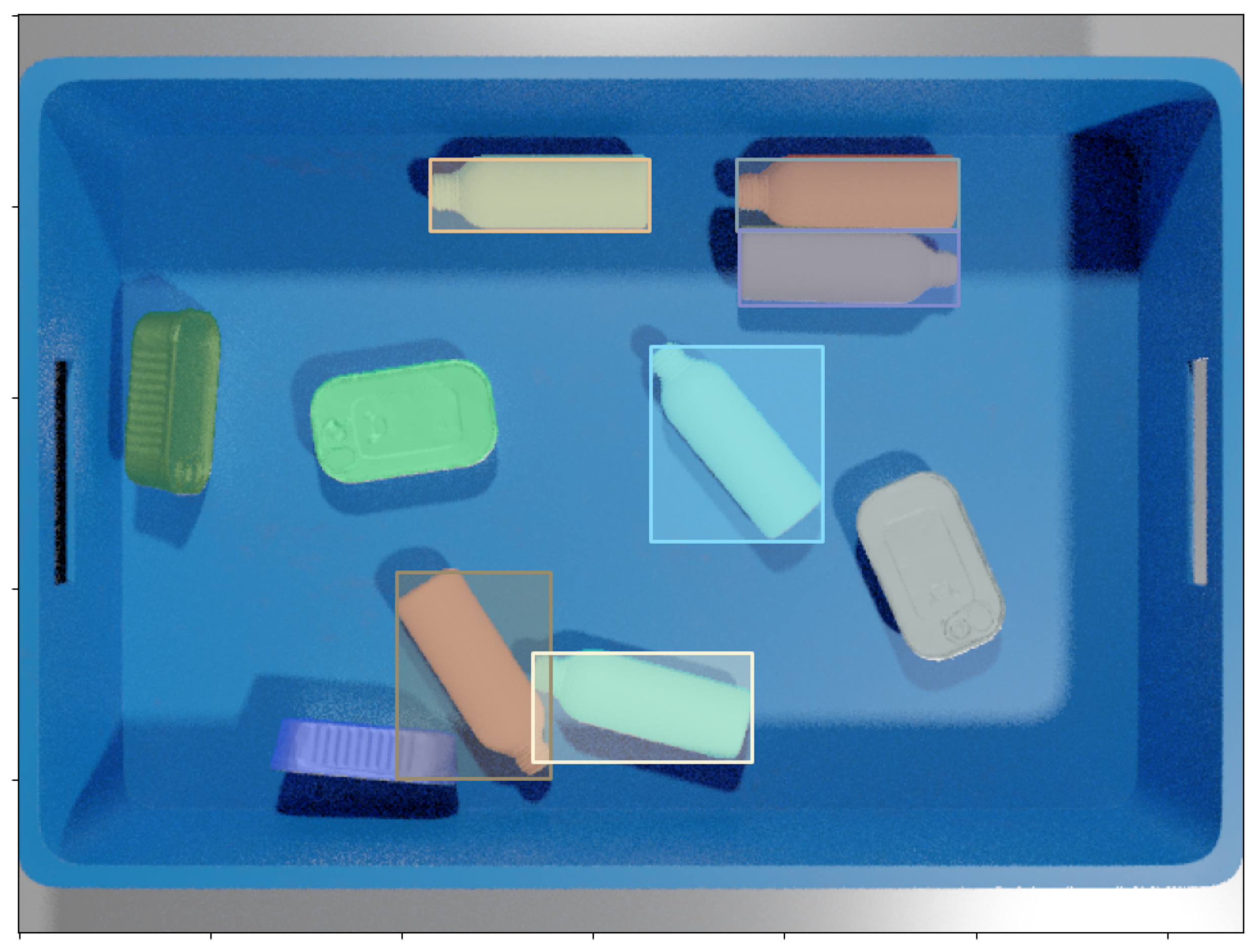

3.3.1. Object Detection

3.3.2. Image Segmentation—Semantic, Instance, Panoptic

3.3.3. Open-Set Semantics

3.3.4. Map Integration

3.4. Summary

4. Performance Evaluation

- KITTI [72]—stereo imagery, multi-line LiDAR, IMU tracks, collected over multi-kilometer outdoor tracks in a self-driving vehicle testbed; ground truth poses established with aid of GPS; also includes 3D object instance annotations.

- RGB-D SLAM benchmark from TUM [73]—RGB-D data of indoor observation sequences collected by custom rig; ground truth data from motion capture equipment; notable for establishing the Absolute Trajectory Error (ATE) metric.

- EuRoC [74]—a micro aerial vehicle (MAV) stereo, IMU dataset collected indoors; ground truth data established through laser tracking; provides a reference point cloud in some locations.

- TUM-VI [75]—another stereo-inertial dataset, featuring outdoor sequences, collected with a hand-held rig; ground truth data provided by motion capture equipment, meaning that for longer sequences this is only available at the start and end of the trajectory.
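Since the ATE metric recurs across these benchmarks, a rough sketch of how it is commonly computed may help: the estimated trajectory is rigidly aligned to the ground truth in a least-squares sense, and the root-mean-square of the remaining position differences is reported. The sketch below assumes both trajectories are already time-associated N×3 arrays of positions. The function names `align_rigid` and `ate_rmse` are hypothetical, and the alignment uses the SVD-based (Kabsch) closed form, which may differ in detail from the reference tooling of [73]. Scale estimation, which is often added for monocular systems, is omitted.

```python
import numpy as np


def align_rigid(est: np.ndarray, gt: np.ndarray):
    """Least-squares rotation R and translation t such that R @ est_i + t ~ gt_i.

    est, gt: (N, 3) arrays of time-associated positions.
    """
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    # 3x3 cross-covariance between the centered point sets
    H = (est - mu_e).T @ (gt - mu_g)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection: force det(R) = +1
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse(est: np.ndarray, gt: np.ndarray) -> float:
    """Root-mean-square translational error after rigid alignment."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t            # apply R, t to every row
    err = np.linalg.norm(aligned - gt, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))


if __name__ == "__main__":
    gt = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]], dtype=float)
    theta = 0.3
    Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
    # The same path expressed in a different frame should give (near) zero error
    est = gt @ Rz.T + np.array([0.5, -2.0, 1.0])
    print(ate_rmse(est, gt))
```

Because of the alignment step, ATE measures the global consistency of the trajectory's shape rather than the choice of world frame, so the scores are in the same units as the positions.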

5. Discussion

5.1. Domain-Specific Challenges

5.2. Robot Navigation without the Construction of Maps

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Arents, J.; Greitans, M. Smart Industrial Robot Control Trends, Challenges and Opportunities within Manufacturing. Appl. Sci. 2022, 12, 937.

- Majumdar, A.; Aggarwal, G.; Devnani, B.; Hoffman, J.; Batra, D. ZSON: Zero-Shot Object-Goal Navigation using Multimodal Goal Embeddings. arXiv 2022, arXiv:2206.12403.

- ROS Wiki: Movebase Global Planner. Available online: https://wiki.ros.org/global_planner (accessed on 29 June 2023).

- Kuipers, B. Modeling Spatial Knowledge. Cogn. Sci. 1978, 2, 129–153.

- Chatila, R.; Laumond, J.P. Position referencing and consistent world modeling for mobile robots. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation; IEEE: Piscataway, NJ, USA, 1985; Volume 2, pp. 138–145.

- Rosinol, A.; Gupta, A.; Abate, M.; Shi, J.; Carlone, L. 3D Dynamic Scene Graphs: Actionable Spatial Perception with Places, Objects, and Humans. arXiv 2020, arXiv:2002.06289.

- Wu, S.C.; Wald, J.; Tateno, K.; Navab, N.; Tombari, F. SceneGraphFusion: Incremental 3D Scene Graph Prediction from RGB-D Sequences. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7511–7521.

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005.

- Dellaert, F.; Kaess, M. Factor Graphs for Robot Perception. Found. Trends Robot. 2017, 6, 1–139.

- Alkendi, Y.; Seneviratne, L.; Zweiri, Y. State of the Art in Vision-Based Localization Techniques for Autonomous Navigation Systems. IEEE Access 2021, 9, 76847–76874.

- Huang, B.; Zhao, J.; Liu, J. A Survey of Simultaneous Localization and Mapping. arXiv 2019, arXiv:1909.05214.

- Garg, S.; Sunderhauf, N.; Dayoub, F.; Morrison, D.; Cosgun, A.; Carneiro, G.; Wu, Q.; Chin, T.J.; Reid, I.D.; Gould, S.; et al. Semantics for Robotic Mapping, Perception and Interaction: A Survey. arXiv 2021, arXiv:2101.00443.

- Osman, H.; Darwish, N.; Bayoumi, A. PlaceNet: A multi-scale semantic-aware model for visual loop closure detection. Eng. Appl. Artif. Intell. 2023, 119, 105797.

- Newcombe, R.A.; Davison, A.J. Live dense reconstruction with a single moving camera. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1498–1505.

- Jatavallabhula, K.M.; Kuwajerwala, A.; Gu, Q.; Omama, M.; Chen, T.; Li, S.; Iyer, G.; Saryazdi, S.; Keetha, N.V.; Tewari, A.K.; et al. ConceptFusion: Open-set Multimodal 3D Mapping. arXiv 2023, arXiv:2302.07241.

- Lu, G.; Yang, H.; Li, J.; Kuang, Z.; Yang, R. A Lightweight Real-Time 3D LiDAR SLAM for Autonomous Vehicles in Large-Scale Urban Environment. IEEE Access 2023, 11, 12594–12606.

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304.

- Yang, N.; Stumberg, L.v.; Wang, R.; Cremers, D. D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast Direct LiDAR-Inertial Odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Leutenegger, S.; Furgale, P.T.; Rabaud, V.; Chli, M.; Konolige, K.; Siegwart, R.Y. Keyframe-Based Visual-Inertial SLAM using Nonlinear Optimization. In Proceedings of the Robotics: Science and Systems, Berlin, Germany, 24–28 June 2013.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2020, 37, 1874–1890.

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004.

- Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972.

- Frey, B.J.; Kschischang, F.R.; Loeliger, H.A.; Wiberg, N. Factor graphs and algorithms. In Proceedings of the Annual Allerton Conference on Communication Control and Computing, Citeseer, Cambridge, UK, 29 September–1 October 1997; Volume 35, pp. 666–680.

- Fourie, D.; Leonard, J.; Kaess, M. A nonparametric belief solution to the Bayes tree. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 2189–2196.

- Dellaert, F.; Contributors. Borglab/Gtsam. Available online: https://zenodo.org/record/7582634 (accessed on 29 June 2023).

- Rosinol, A.; Abate, M.; Chang, Y.; Carlone, L. Kimera: An Open-Source Library for Real-Time Metric-Semantic Localization and Mapping. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1689–1696.

- Gálvez-López, D.; Tardós, J.D. Bags of Binary Words for Fast Place Recognition in Image Sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

- Dellaert, F. Factor Graphs: Exploiting Structure in Robotics. Annu. Rev. Control. Robot. Auton. Syst. 2021, 4, 141–166.

- Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. IMU Preintegration on Manifold for Efficient Visual-Inertial Maximum-a-Posteriori Estimation. In Proceedings of the Robotics: Science and Systems, Rome, Italy, 13–17 July 2015.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Schneider, T.; Dymczyk, M.; Fehr, M.; Egger, K.; Lynen, S.; Gilitschenski, I.; Siegwart, R. Maplab: An Open Framework for Research in Visual-Inertial Mapping and Localization. IEEE Robot. Autom. Lett. 2018, 3, 1418–1425.

- Keller, M.; Lefloch, D.; Lambers, M.; Izadi, S.; Weyrich, T.; Kolb, A. Real-Time 3D Reconstruction in Dynamic Scenes Using Point-Based Fusion. In Proceedings of the 2013 International Conference on 3D Vision, Seattle, WA, USA, 29 June–1 July 2013; pp. 1–8.

- Whelan, T.; Salas-Moreno, R.F.; Glocker, B.; Davison, A.J.; Leutenegger, S. ElasticFusion: Real-time dense SLAM and light source estimation. Int. J. Robot. Res. 2016, 35, 1697–1716.

- Sucar, E.; Liu, S.; Ortiz, J.; Davison, A.J. iMAP: Implicit Mapping and Positioning in Real-Time. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6209–6218.

- Klein, G.S.W.; Murray, D.W. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G.R. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–12 November 2011; pp. 2564–2571.

- Kaess, M.; Johannsson, H.; Roberts, R.; Ila, V.; Leonard, J.J.; Dellaert, F. iSAM2: Incremental smoothing and mapping using the Bayes tree. Int. J. Robot. Res. 2012, 31, 216–235.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. arXiv 2020, arXiv:2003.08934.

- Mazur, K.; Sucar, E.; Davison, A.J. Feature-Realistic Neural Fusion for Real-Time, Open Set Scene Understanding. arXiv 2022, arXiv:2210.03043.

- Kuipers, B. The Spatial Semantic Hierarchy. Artif. Intell. 2000, 119, 191–233.

- LaValle, S.M. Planning Algorithms; Cambridge University Press: Cambridge, UK, 2006.

- McCormac, J.; Clark, R.; Bloesch, M.; Davison, A.; Leutenegger, S. Fusion++: Volumetric Object-Level SLAM. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 32–41.

- Crespo, J.; Castillo, J.C.; Mozos, O.M.; Barber, R. Semantic Information for Robot Navigation: A Survey. Appl. Sci. 2020, 10, 497.

- Han, X.; Li, S.; Wang, X.; Zhou, W. Semantic Mapping for Mobile Robots in Indoor Scenes: A Survey. Information 2021, 12, 92.

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2320–2327.

- Curless, B.; Levoy, M. A volumetric method for building complex models from range images. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 4–9 August 1996.

- Zeng, M.; Zhao, F.; Zheng, J.; Liu, X. Octree-based fusion for realtime 3D reconstruction. Graph. Model. 2013, 75, 126–136.

- Siddiqui, Y.; Porzi, L.; Bulò, S.R.; Muller, N.; Nießner, M.; Dai, A.; Kontschieder, P. Panoptic Lifting for 3D Scene Understanding with Neural Fields. arXiv 2022, arXiv:2212.09802.

- Shafiullah, N.M.M.; Paxton, C.; Pinto, L.; Chintala, S.; Szlam, A.D. CLIP-Fields: Weakly Supervised Semantic Fields for Robotic Memory. arXiv 2022, arXiv:2210.05663.

- Zender, H.; Mozos, Ó.M.; Jensfelt, P.; Kruijff, G.J.M.; Burgard, W. Conceptual spatial representations for indoor mobile robots. Robot. Auton. Syst. 2008, 56, 493–502.

- Chang, D.S.; Cho, G.H.; Choi, Y.S. Ontology-based knowledge model for human–robot interactive services. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, Brno, Czech Republic, 30 March–3 April 2020.

- Sun, X.; Zhang, Y.; Chen, J. High-Level Smart Decision Making of a Robot Based on Ontology in a Search and Rescue Scenario. Future Internet 2019, 11, 230.

- Zhu, G.; Zhang, L.; Jiang, Y.; Dang, Y.; Hou, H.; Shen, P.; Feng, M.; Zhao, X.; Miao, Q.; Shah, S.A.A.; et al. Scene Graph Generation: A Comprehensive Survey. arXiv 2022, arXiv:2201.00443.

- Li, Q.; Nevalainen, P.; Peña Queralta, J.; Heikkonen, J.; Westerlund, T. Localization in Unstructured Environments: Towards Autonomous Robots in Forests with Delaunay Triangulation. Remote Sens. 2020, 12, 1870.

- Nie, F.; Zhang, W.; Wang, Y.; Shi, Y.; Huang, Q. A Forest 3-D Lidar SLAM System for Rubber-Tapping Robot Based on Trunk Center Atlas. IEEE/ASME Trans. Mechatronics 2022, 27, 2623–2633.

- Hughes, N.; Chang, Y.; Carlone, L. Hydra: A Real-time Spatial Perception System for 3D Scene Graph Construction and Optimization. In Proceedings of the Robotics: Science and Systems XVIII, New York, NY, USA, 27 June–1 July 2022.

- Tateno, K.; Tombari, F.; Navab, N. Real-time and scalable incremental segmentation on dense SLAM. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 4465–4472.

- Qi, C.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Galindo, C.; Saffiotti, A.; Coradeschi, S.; Buschka, P.; Fernandez-Madrigal, J.; Gonzalez, J. Multi-hierarchical semantic maps for mobile robotics. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 2278–2283.

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.; Davison, A.J. SLAM++: Simultaneous Localisation and Mapping at the Level of Objects. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1352–1359.

- Dong, J.; Fei, X.; Soatto, S. Visual-Inertial-Semantic Scene Representation for 3D Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3567–3577.

- McCormac, J.; Handa, A.; Davison, A.; Leutenegger, S. SemanticFusion: Dense 3D semantic mapping with convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4628–4635.

- Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Kirillov, A.; He, K.; Girshick, R.B.; Rother, C.; Dollár, P. Panoptic Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9396–9405.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 386–397.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872.

- Wang, X.; Kong, T.; Shen, C.; Jiang, Y.; Li, L. SOLO: Segmenting Objects by Locations. arXiv 2019, arXiv:1912.04488.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2012; pp. 573–580.

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

- Schubert, D.; Goll, T.; Demmel, N.; Usenko, V.C.; Stückler, J.; Cremers, D. The TUM VI Benchmark for Evaluating Visual-Inertial Odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2018; pp. 1680–1687.

- Guan, T.; Kothandaraman, D.; Chandra, R.; Manocha, D. GANav: Group-wise Attention Network for Classifying Navigable Regions in Unstructured Outdoor Environments. arXiv 2021, arXiv:2103.04233.

- Wigness, M.; Eum, S.; Rogers, J.G.; Han, D.; Kwon, H. A RUGD Dataset for Autonomous Navigation and Visual Perception in Unstructured Outdoor Environments. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), The Venetian Macao, Macau, 3–8 November 2019.

- Jiang, P.; Osteen, P.R.; Wigness, M.B.; Saripalli, S. RELLIS-3D Dataset: Data, Benchmarks and Analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1110–1116.

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.A.; Nießner, M. ScanNet: Richly-Annotated 3D Reconstructions of Indoor Scenes. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2432–2443.

- Wald, J.; Dhamo, H.; Navab, N.; Tombari, F. Learning 3D Semantic Scene Graphs From 3D Indoor Reconstructions. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3960–3969.

- Cremona, J.; Comelli, R.; Pire, T. Experimental evaluation of Visual-Inertial Odometry systems for arable farming. J. Field Robot. 2022, 39, 1123–1137.

- Shin, Y.S.; Kim, A. Sparse Depth Enhanced Direct Thermal-Infrared SLAM Beyond the Visible Spectrum. IEEE Robot. Autom. Lett. 2019, 4, 2918–2925.

- Badue, C.S.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.F.R.; Berriel, R.; Paixão, T.M.; Mutz, F.W.; et al. Self-Driving Cars: A Survey. arXiv 2019, arXiv:1901.04407.

- Ahn, M.; Brohan, A.; Brown, N.; Chebotar, Y.; Cortes, O.; David, B.; Finn, C.; Gopalakrishnan, K.; Hausman, K.; Herzog, A.; et al. Do As I Can, Not As I Say: Grounding Language in Robotic Affordances. In Proceedings of the Conference on Robot Learning, Auckland, New Zealand, 14–18 December 2022.

- Shah, D.; Osinski, B.; Ichter, B.; Levine, S. LM-Nav: Robotic Navigation with Large Pre-Trained Models of Language, Vision, and Action. In Proceedings of the Conference on Robot Learning, Auckland, New Zealand, 14–18 December 2022.

| System | Sensors ¹ | Tracking ² | Smoothing ³ | Note |
|---|---|---|---|---|
| ORB-SLAM (2017) [21] | V | Two-stage local BA | Frame descriptors for loop detection, PGO | |
| ORB-SLAM3 (2020) [22] | V, VI, S, SI | Two-stage local BA with optional IMU, stereo factors | Frame descriptors for loop detection, multiple maps, PGO | Extension of [21] |
| Google Cartographer (2016) [33] | 2L, 3L | Scan pose optimization with regard to local OGM | Geometric feature-based loop detection between local OGMs, PGO | |
| Maplab (2017) [34] | VI | ROVIO [17] EKF | None | Optimization performed offline through external tools |
| Keller et al. (2013) [35] | D | ICP with regard to a fixed surfel model | None for camera poses; depth point averaging | Basis for many other systems, e.g., [7,15,36] |
| iMAP (2021) [37] | D | Camera pose optimization with regard to NRF with frozen weights | Joint camera pose and NRF optimization | |

| System | Benchmark | Score |
|---|---|---|
| ORB-SLAM (2017) [21] | EuRoC V ³, ATE | 0.047 ¹ |
| ORB-SLAM3 (2020) [22] | EuRoC V ³, ATE | 0.041 ¹ |
| ORB-SLAM3 (2020) [22] | EuRoC VI ³, ATE | 0.043 |
| ROVIO (2015) ² [17] | EuRoC VI ³, ATE | 0.224 |
| ORB-SLAM3 (2020) [22] | EuRoC SI ³, ATE | 0.035 |
| Kimera (2020) [29] | EuRoC SI ³, ATE | 0.119 |
| ORB-SLAM3 (2020) [22] | TUM-VI outdoors5 ⁴,⁵, ATE | 8.95 |
| ROVIO (2015) ² [17] | TUM-VI outdoors5 ⁴,⁵, ATE | 54.32 |
| ORB-SLAM3 (2020) [22] | TUM-VI outdoors7 ⁴,⁶, ATE | 4.58 |
| ROVIO (2015) ² [17] | TUM-VI outdoors7 ⁴,⁶, ATE | 49.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Racinskis, P.; Arents, J.; Greitans, M. Constructing Maps for Autonomous Robotics: An Introductory Conceptual Overview. Electronics 2023, 12, 2925. https://doi.org/10.3390/electronics12132925
