Assessment of Visual Motor Integration via Hand-Drawn Imitation: A Pilot Study

Abstract

1. Introduction

- A VMI evaluation framework is proposed based on a computer-assisted hand-drawn imitation task.

- C-RQA is introduced for the first time to measure VMI from the complexity of hand–eye behaviour synchrony, filling the gap left by the absence of a computational framework for visual–motor behaviour.

- A pilot study of the proposed VMI evaluation tool was conducted, and the validity of the method was verified with statistical significance tests, yielding promising VMI indicators.

2. Experiment Design

2.1. Participants

2.2. Drawing Imitation Task

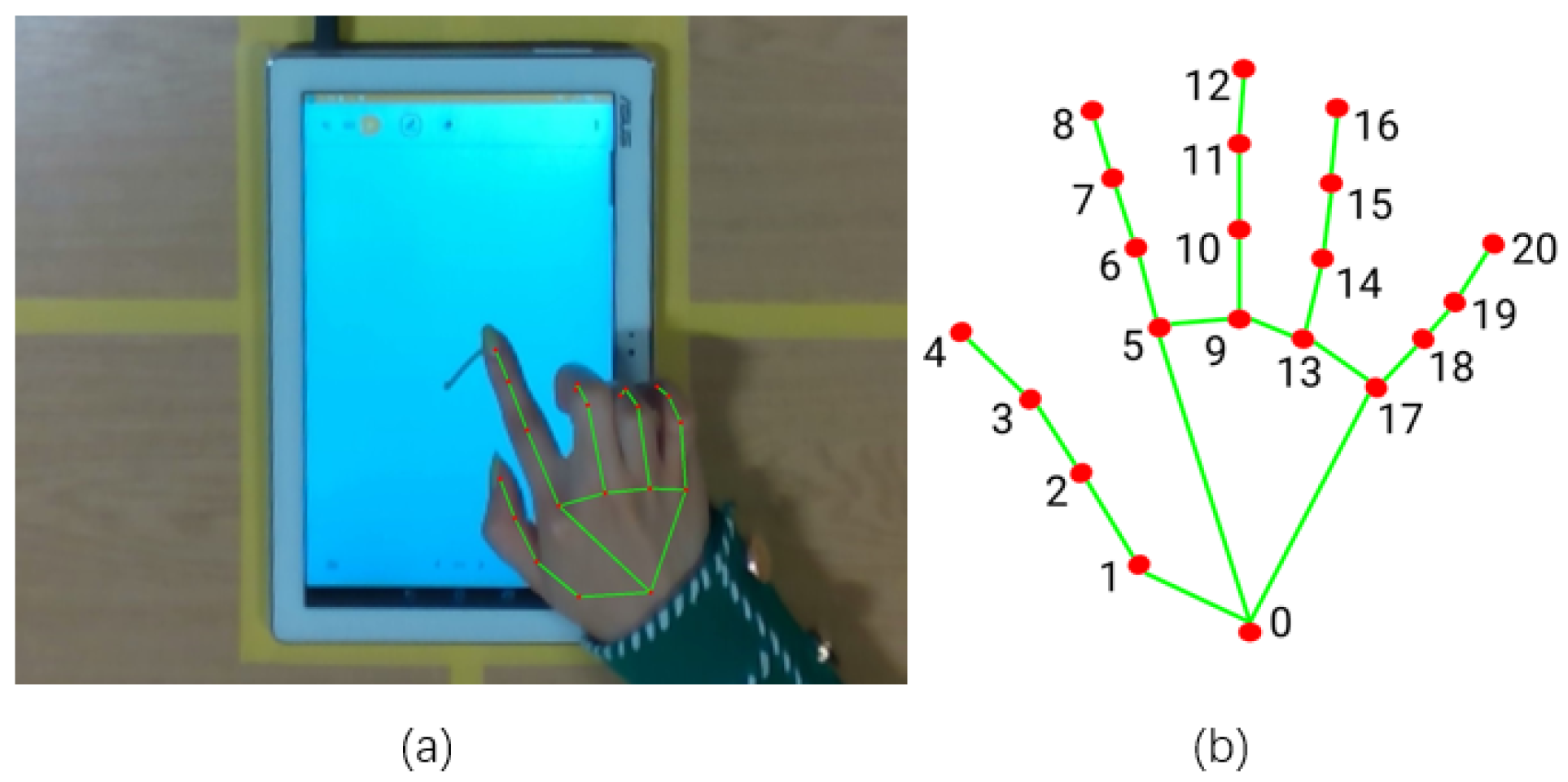

2.3. Experiment Setup

2.4. Procedures

3. Methodology

3.1. Complexity of Visual–Motor Synchrony

3.1.1. Categorisation of Visual–Motor Behaviour

| Algorithm 1 Categorical Gaze Behaviour Sequence. |
| --- |
| Input: : The gaze sequence in 2D pixel coordinates. |
| : The fixation sequence in 2D pixel coordinates. |
| : The reference hand-drawn trajectory in 2D pixel coordinates. |
| : The distance threshold for determining the AOI. |
| Output: G: Categorical gaze behaviour sequence |
| for ; ; do |
| if then |
| if then |
| G |
| else |
| G |
| end if |
| else |
| G |
| end if |
| end for |
| return G |
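The symbols in Algorithm 1 are not available in this text, so the following Python sketch is only one possible reading of it, not the authors' implementation. It assumes that each gaze sample comes with a flag saying whether it belongs to a detected fixation, and that a fixation counts as being on the AOI when its distance to the nearest point of the reference trajectory is within the threshold. The function name, the label strings, and the `is_fixation` input are hypothetical.

```python
import math

# Hypothetical category labels; the original label values are not given in the text.
FIX_ON_AOI, FIX_OFF_AOI, NO_FIXATION = "fix_on_aoi", "fix_off_aoi", "no_fixation"


def categorise_gaze(gaze, is_fixation, reference, aoi_threshold):
    """Label each gaze sample given as (x, y) in pixel coordinates.

    gaze          -- list of (x, y) gaze samples
    is_fixation   -- list of bools, True where the sample belongs to a fixation
                     (assumed input form)
    reference     -- list of (x, y) points on the reference trajectory
    aoi_threshold -- largest pixel distance at which a fixation counts as on the AOI
    """
    labels = []
    for point, fixated in zip(gaze, is_fixation):
        if fixated:
            # Distance from the fixation to the closest reference point.
            nearest = min(math.dist(point, ref) for ref in reference)
            if nearest <= aoi_threshold:
                labels.append(FIX_ON_AOI)
            else:
                labels.append(FIX_OFF_AOI)
        else:
            labels.append(NO_FIXATION)
    return labels


gaze = [(100, 100), (300, 300), (500, 120)]
flags = [True, True, False]
reference = [(95, 104), (110, 110)]
print(categorise_gaze(gaze, flags, reference, aoi_threshold=20))
```

The nested branching mirrors the two-level `if` structure of the listing: the outer test separates fixations from non-fixations, and the inner test separates fixations by their distance to the reference.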

| Algorithm 2 Categorical Hand Behaviour Sequence. |
| --- |
| Input: : The hand skeleton sequence in 2D pixel coordinates for the drawing finger. |
| : The maximum distance threshold. |
| : The minimum distance threshold. |
| Output: H: Categorical hand behaviour sequence |
| for ; ; do |
| if then |
| H |
| else if then |
| H |
| else |
| H |
| end if |
| end for |
| return H |
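The quantity that Algorithm 2 compares against its two thresholds is not recoverable from this text. The sketch below therefore makes an explicit assumption: it uses the frame-to-frame displacement of the drawing fingertip, labelling large steps as relocation, intermediate steps as drawing, and very small steps as a still hand. The function name, the label strings, and this mapping of branches to labels are all hypothetical.

```python
import math

# Hypothetical category labels; the original label values are not given in the text.
DRAWING, MOVING, STILL = "drawing", "moving", "still"


def categorise_hand(fingertip, d_max, d_min):
    """Label each step between consecutive fingertip positions.

    fingertip -- list of (x, y) pixel positions of the drawing finger
    d_max     -- maximum distance threshold (assumed: above this the hand is relocating)
    d_min     -- minimum distance threshold (assumed: below this the hand is still)

    Returns one label per consecutive pair, so the output has len(fingertip) - 1 items.
    """
    labels = []
    for prev, curr in zip(fingertip, fingertip[1:]):
        step = math.dist(prev, curr)  # displacement between two frames
        if step > d_max:
            labels.append(MOVING)
        elif step >= d_min:
            labels.append(DRAWING)
        else:
            labels.append(STILL)
    return labels


track = [(0, 0), (0, 1), (0, 6), (0, 36)]
print(categorise_hand(track, d_max=10, d_min=2))
```

Because the labels describe steps rather than frames, a caller who needs the gaze and hand sequences to have equal length would have to drop or pad one sample; how the original method aligns them is not stated here.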

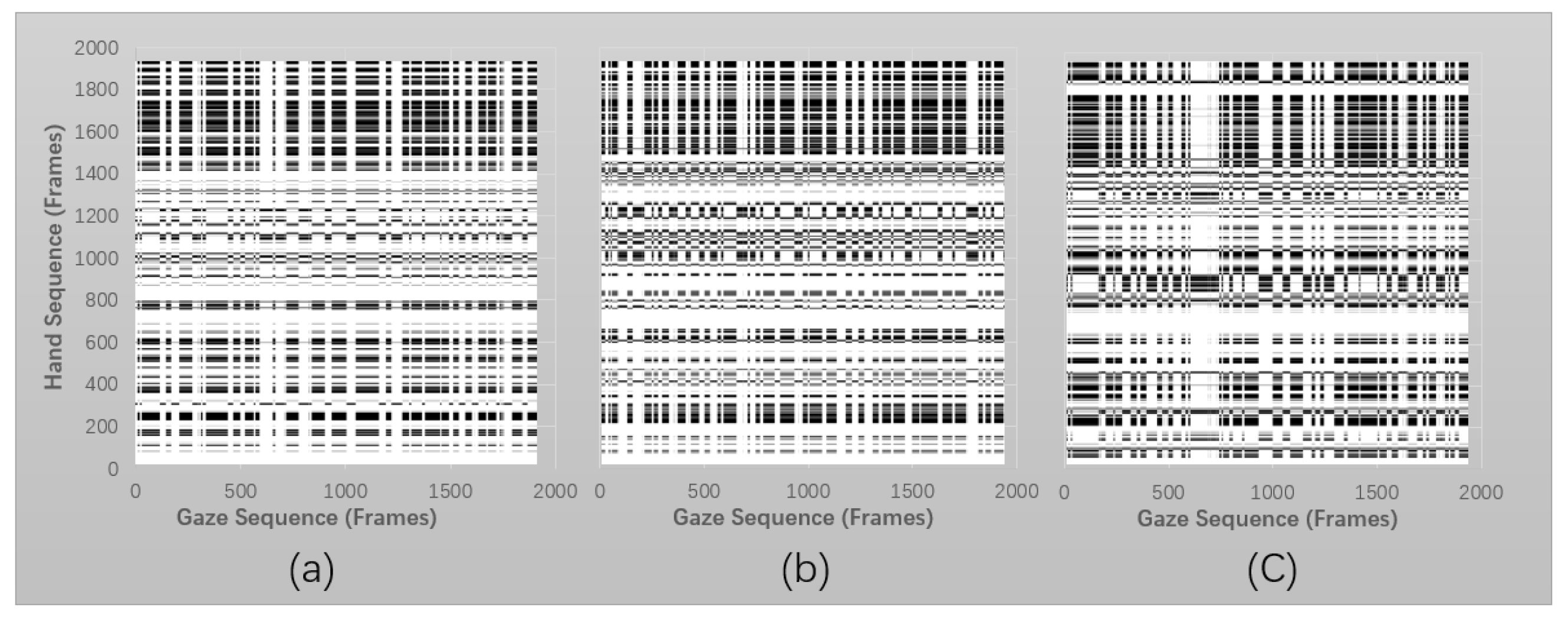

3.1.2. Cross Recurrent Plot

- Fixation on AOI with hand drawing: the typical visual–motor behaviour, in which the eye guides the hand movement while following the reference video.

- Hand moving with no fixation on screen: the state in which the hand and eye are both relocating (hand movement and saccade), indicating that the hand and gaze are preparing for the upcoming part of the task.
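To make the two recurrent states concrete, here is a minimal sketch of a categorical cross-recurrence matrix in plain Python. It assumes that a point (i, j) is recurrent when the gaze state at time i and the hand state at time j form one of the two pairs listed above; the label strings, the function names, and the use of the recurrence rate as a summary are illustrative assumptions, not the paper's implementation.

```python
# Assumed pairing of gaze and hand states that counts as a recurrence.
MATCHED_STATES = {
    ("fix_on_aoi", "drawing"),   # fixation on AOI with hand drawing
    ("no_fixation", "moving"),   # hand moving with no fixation on screen
}


def cross_recurrence_matrix(gaze_states, hand_states, matched=MATCHED_STATES):
    """Return a 0/1 matrix with one row per gaze sample and one column per hand sample."""
    return [
        [1 if (g, h) in matched else 0 for h in hand_states]
        for g in gaze_states
    ]


def recurrence_rate(matrix):
    """Fraction of recurrent points in the matrix (0.0 for an empty matrix)."""
    total = sum(len(row) for row in matrix)
    return sum(map(sum, matrix)) / total if total else 0.0


gaze = ["fix_on_aoi", "fix_on_aoi", "no_fixation"]
hand = ["drawing", "moving", "drawing"]
plot = cross_recurrence_matrix(gaze, hand)
for row in plot:
    print(row)
print(recurrence_rate(plot))
```

Entries on the main diagonal correspond to states occurring at the same time, while off-diagonal entries capture matches at a lag between gaze and hand.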

3.1.3. Visual–Motor Complexity Score

3.2. Engagement Score

- Gaze fixation ratio: the ratio of the time the participant’s gaze was fixed on the AOI (reference video) to the total time spent in the experiment.

- Hand-drawing ratio: the ratio of the time the participant’s hand was in the drawing state to the total time spent in the experiment.

- Hand-drawn similarity: the drawing performance score, estimated from drawing similarity, since VMI has been operationally defined as the ability to copy geometric shapes. Lewis et al. [42] showed that the log Hausdorff distance is moderately positively correlated with human judgements of visual dissimilarity. By definition, the Hausdorff distance measures how far two subsets of a metric space are from each other:

  $$d_H(A, B) = \max\left\{ \sup_{a \in A} \inf_{b \in B} d(a, b),\ \sup_{b \in B} \inf_{a \in A} d(a, b) \right\}$$

  where $d_H(A, B)$ is the Hausdorff distance between the two point sets $A$ and $B$, and $d(\cdot, \cdot)$ is the Euclidean distance function. The hand-drawn similarity is then defined as the log Hausdorff distance between the query trajectory and the reference trajectory. Trajectory coordinates are normalised to the same range on both axes.
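The three engagement measures can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: samples are taken at a uniform rate, so a time ratio equals a sample-count ratio; the logarithm is the natural logarithm; and identical trajectories (distance zero) map to negative infinity. All function names and label strings are hypothetical.

```python
import math


def state_ratio(states, target):
    """Fraction of samples in the target state; equals the time ratio
    only if sampling is uniform (assumption)."""
    return sum(s == target for s in states) / len(states) if states else 0.0


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two finite sets of (x, y) points."""
    def directed(p, q):
        # Largest distance from a point in p to its nearest neighbour in q.
        return max(min(math.dist(x, y) for y in q) for x in p)
    return max(directed(a, b), directed(b, a))


def log_hausdorff(query, reference):
    """Natural log of the Hausdorff distance (log base is an assumption)."""
    d = hausdorff(query, reference)
    return math.log(d) if d > 0 else float("-inf")


gaze_states = ["fix_on_aoi", "no_fixation", "fix_on_aoi", "fix_on_aoi"]
print(state_ratio(gaze_states, "fix_on_aoi"))

query = [(0.0, 0.0), (1.0, 0.0)]
reference = [(0.0, 0.0), (1.0, 1.0)]
print(hausdorff(query, reference), log_hausdorff(query, reference))
```

The brute-force search is quadratic in the number of points, which is acceptable for short trajectories. For longer ones, SciPy's `scipy.spatial.distance.directed_hausdorff` computes the directed distance, and the symmetric value is the larger of the two directions.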

3.3. Statistical Analysis

4. Result and Discussion

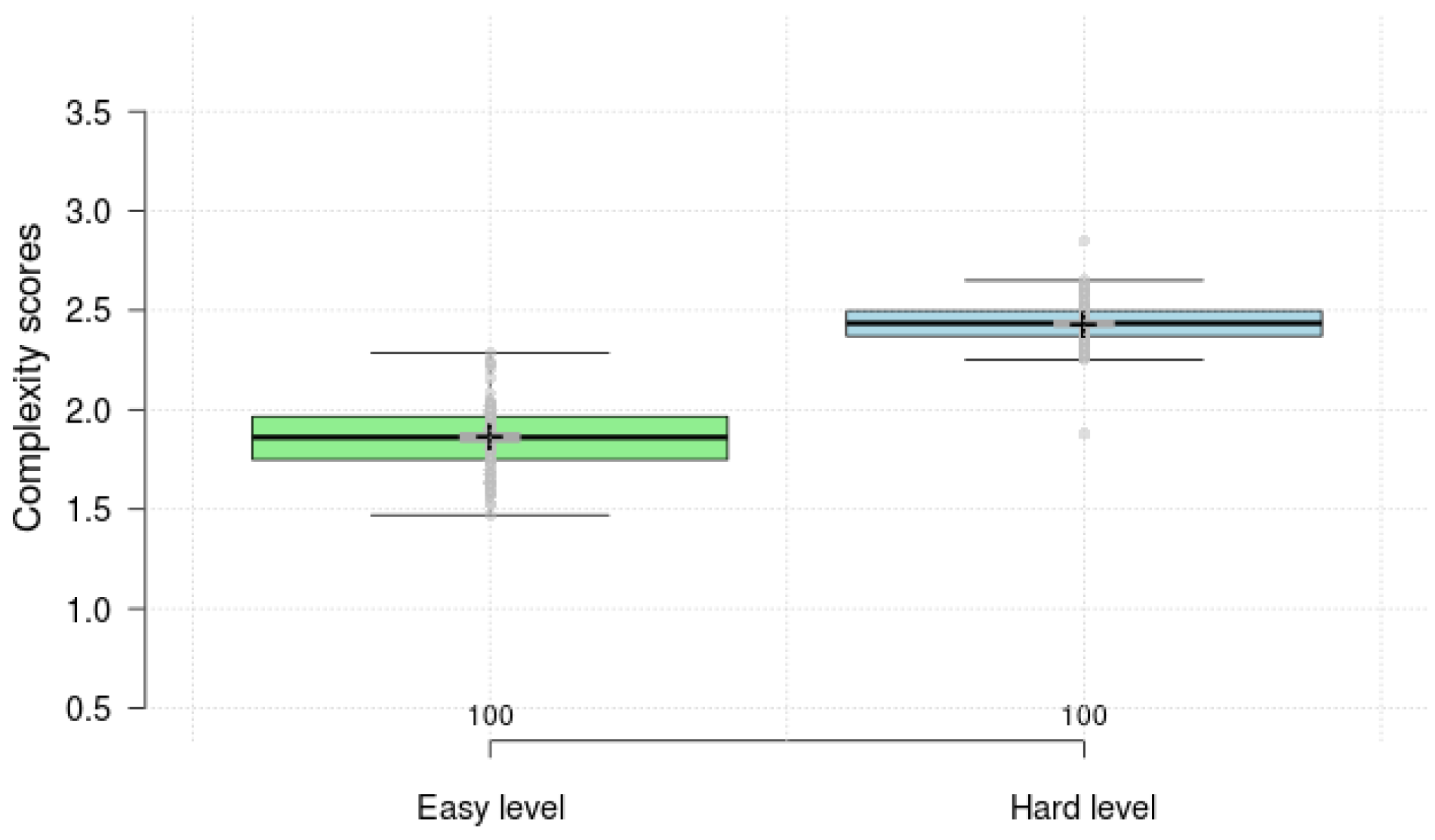

4.1. Result Comparison of Visual–Motor Complexity Score

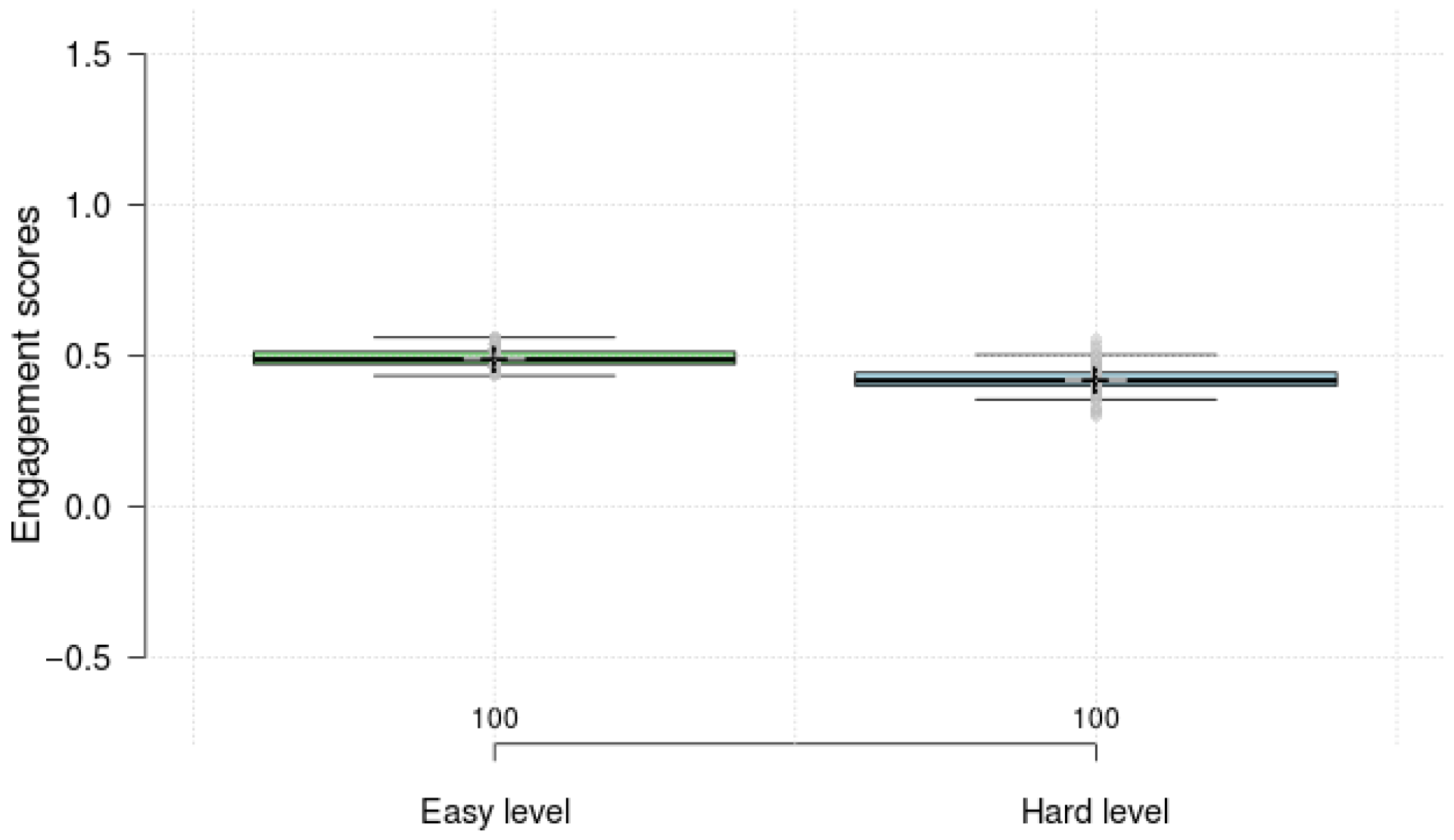

4.2. Result Comparison of Engagement Score

4.3. VMI Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kaiser, M.L.; Albaret, J.M.; Doudin, P.A. Relationship between visual-motor integration, eye-hand coordination, and quality of handwriting. J. Occup. Ther. Sch. Early Interv. 2009, 2, 87–95.

- Shin, S.; Crapse, T.B.; Mayo, J.P.; Sommer, M.A. Visuomotor Integration. Encycl. Neurosci. 2009, 4354–4359.

- Licari, M.K.; Alvares, G.A.; Varcin, K.; Evans, K.L.; Cleary, D.; Reid, S.L.; Glasson, E.J.; Bebbington, K.; Reynolds, J.E.; Wray, J.; et al. Prevalence of motor difficulties in autism spectrum disorder: Analysis of a population-based cohort. Autism Res. 2020, 13, 298–306.

- Bhat, A.N. Is motor impairment in autism spectrum disorder distinct from developmental coordination disorder? A report from the SPARK study. Phys. Ther. 2020, 100, 633–644.

- Lloyd, M.; MacDonald, M.; Lord, C. Motor skills of toddlers with autism spectrum disorders. Autism 2013, 17, 133–146.

- Patterson, J.W.; Armstrong, V.; Duku, E.; Richard, A.; Franchini, M.; Brian, J.; Zwaigenbaum, L.; Bryson, S.E.; Sacrey, L.A.R.; Roncadin, C.; et al. Early trajectories of motor skills in infant siblings of children with autism spectrum disorder. Autism Res. 2022, 15, 481–492.

- Chukoskie, L.; Townsend, J.; Westerfield, M. Motor skill in autism spectrum disorders: A subcortical view. Int. Rev. Neurobiol. 2013, 113, 207–249.

- Zakaria, N.K.; Tahir, N.M.; Jailani, R. Experimental Approach in Gait Analysis and Classification Methods for Autism spectrum Disorder: A Review. Int. J. 2020, 9, 3995–4005.

- Studenka, B.E.; Myers, K. Preliminary Evidence That Motor Planning Is Slower and More Difficult for Children with Autism Spectrum Disorder During Motor Cooperation. Mot. Control 2020, 24, 127–149.

- Iverson, J.M.; Shic, F.; Wall, C.A.; Chawarska, K.; Curtin, S.; Estes, A.; Gardner, J.M.; Hutman, T.; Landa, R.J.; Levin, A.R.; et al. Early motor abilities in infants at heightened versus low risk for ASD: A Baby Siblings Research Consortium (BSRC) study. J. Abnorm. Psychol. 2019, 128, 69–80.

- West, K.L. Infant motor development in autism spectrum disorder: A synthesis and meta-analysis. Child Dev. 2019, 90, 2053–2070.

- Lim, Y.H.; Licari, M.; Spittle, A.J.; Watkins, R.E.; Zwicker, J.G.; Downs, J.; Finlay-Jones, A. Early motor function of children with autism spectrum disorder: A systematic review. Pediatrics 2021, 147, e2020011270.

- Nebel, M.B.; Eloyan, A.; Nettles, C.A.; Sweeney, K.L.; Ament, K.; Ward, R.E.; Choe, A.S.; Barber, A.D.; Pekar, J.J.; Mostofsky, S.H. Intrinsic visual-motor synchrony correlates with social deficits in autism. Biol. Psychiatry 2016, 79, 633–641.

- Craig, F.; Lorenzo, A.; Lucarelli, E.; Russo, L.; Fanizza, I.; Trabacca, A. Motor competency and social communication skills in preschool children with autism spectrum disorder. Autism Res. 2018, 11, 893–902.

- Gandotra, A.; Kotyuk, E.; Szekely, A.; Kasos, K.; Csirmaz, L.; Cserjesi, R. Fundamental movement skills in children with autism spectrum disorder: A systematic review. Res. Autism Spectr. Disord. 2020, 78, 101632.

- Lidstone, D.E.; Mostofsky, S.H. Moving toward understanding autism: Visual-motor integration, imitation, and social skill development. Pediatr. Neurol. 2021, 122, 98–105.

- Lidstone, D.E.; Rochowiak, R.; Pacheco, C.; Tunçgenç, B.; Vidal, R.; Mostofsky, S.H. Automated and scalable Computerized Assessment of Motor Imitation (CAMI) in children with Autism Spectrum Disorder using a single 2D camera: A pilot study. Res. Autism Spectr. Disord. 2021, 87, 101840.

- Vabalas, A.; Gowen, E.; Poliakoff, E.; Casson, A.J. Applying machine learning to kinematic and eye movement features of a movement imitation task to predict autism diagnosis. Sci. Rep. 2020, 10, 8346.

- Carsone, B.; Green, K.; Torrence, W.; Henry, B. Systematic Review of Visual Motor Integration in Children with Developmental Disabilities. Occup. Ther. Int. 2021, 2021, 1801196.

- Mazzolini, D.; Mignone, P.; Pavan, P.; Vessio, G. An easy-to-explain decision support framework for forensic analysis of dynamic signatures. Forensic Sci. Int. Digit. Investig. 2021, 38, 301216.

- Whitten, P.; Wolff, F.; Papachristou, C. Explainable Neural Network Recognition of Handwritten Characters. In Proceedings of the 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–11 March 2023; pp. 176–182.

- Gozzi, N.; Malandri, L.; Mercorio, F.; Pedrocchi, A. XAI for myo-controlled prosthesis: Explaining EMG data for hand gesture classification. Knowl.-Based Syst. 2022, 240, 108053.

- Lukos, J.R.; Snider, J.; Hernandez, M.E.; Tunik, E.; Hillyard, S.; Poizner, H. Parkinson’s disease patients show impaired corrective grasp control and eye–hand coupling when reaching to grasp virtual objects. Neuroscience 2013, 254, 205–221.

- Ran, H.; Ning, X.; Li, W.; Hao, M.; Tiwari, P. 3D human pose and shape estimation via de-occlusion multi-task learning. Neurocomputing 2023, 548, 126284.

- Tian, S.; Li, W.; Ning, X.; Ran, H.; Qin, H.; Tiwari, P. Continuous Transfer of Neural Network Representational Similarity for Incremental Learning. Neurocomputing 2023, 545, 126300.

- Lee, K.; Junghans, B.M.; Ryan, M.; Khuu, S.; Suttle, C.M. Development of a novel approach to the assessment of eye–hand coordination. J. Neurosci. Methods 2014, 228, 50–56.

- Dong, X.; Ning, X.; Xu, J.; Yu, L.; Li, W.; Zhang, L. A Recognizable Expression Line Portrait Synthesis Method in Portrait Rendering Robot. IEEE Trans. Comput. Soc. Syst. 2023, 1–11.

- Casellato, C.; Gandolla, M.; Crippa, A.; Pedrocchi, A. Robotic set-up to quantify hand-eye behavior in motor execution and learning of children with autism spectrum disorder. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; pp. 953–958.

- Valevicius, A.M.; Boser, Q.A.; Lavoie, E.B.; Murgatroyd, G.S.; Pilarski, P.M.; Chapman, C.S.; Vette, A.H.; Hebert, J.S. Characterization of normative hand movements during two functional upper limb tasks. PLoS ONE 2018, 13, e0199549.

- Coco, M.I.; Dale, R. Cross-recurrence quantification analysis of categorical and continuous time series: An R package. Front. Psychol. 2014, 5, 510.

- López Pérez, D.; Leonardi, G.; Niedźwiecka, A.; Radkowska, A.; Rączaszek-Leonardi, J.; Tomalski, P. Combining recurrence analysis and automatic movement extraction from video recordings to study behavioral coupling in face-to-face parent-child interactions. Front. Psychol. 2017, 8, 2228.

- de Jonge-Hoekstra, L.; Van Der Steen, S.; Cox, R.F. Movers and shakers of cognition: Hand movements, speech, task properties, and variability. Acta Psychol. 2020, 211, 103187.

- Fusaroli, R.; Konvalinka, I.; Wallot, S. Analyzing social interactions: The promises and challenges of using cross recurrence quantification analysis. In Translational Recurrences; Springer: Berlin/Heidelberg, Germany, 2014; pp. 137–155.

- Villamor, M.M.; Rodrigo, M.; Mercedes, T. Gaze collaboration patterns of successful and unsuccessful programming pairs using cross-recurrence quantification analysis. Res. Pract. Technol. Enhanc. Learn. 2019, 14, 25.

- Liu, Q.; Wang, Q.; Li, X.; Gong, X.; Luo, X.; Yin, T.; Liu, J.; Yi, L. Social synchronization during joint attention in children with autism spectrum disorder. Autism Res. 2021, 14, 2120–2130.

- Zhang, D.; Toptan, C.M.; Zhao, S.; Zhang, G.; Liu, H. ASD Children Adaption Behaviour Assessment via Hand Movement Properties: A RoadMap. In Proceedings of the UK Workshop on Computational Intelligence, Virtual Event, 22–23 June 2021; pp. 475–480.

- Englund, J.A.; Decker, S.L.; Allen, R.A.; Roberts, A.M. Common cognitive deficits in children with attention-deficit/hyperactivity disorder and autism: Working memory and visual-motor integration. J. Psychoeduc. Assess. 2014, 32, 95–106.

- Green, R.R.; Bigler, E.D.; Froehlich, A.; Prigge, M.B.; Travers, B.G.; Cariello, A.N.; Anderson, J.S.; Zielinski, B.A.; Alexander, A.; Lange, N.; et al. Beery VMI performance in autism spectrum disorder. Child Neuropsychol. 2016, 22, 795–817.

- Rosenblum, S.; Ben-Simhon, H.A.; Meyer, S.; Gal, E. Predictors of handwriting performance among children with autism spectrum disorder. Res. Autism Spectr. Disord. 2019, 60, 16–24.

- Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.L.; Grundmann, M. Mediapipe hands: On-device real-time hand tracking. arXiv 2020, arXiv:2006.10214.

- Rawald, T.; Sips, M.; Marwan, N. PyRQA—Conducting recurrence quantification analysis on very long time series efficiently. Comput. Geosci. 2017, 104, 101–108.

- Lewis, M.; Balamurugan, A.; Zheng, B.; Lupyan, G. Characterizing Variability in Shared Meaning through Millions of Sketches. In Proceedings of the Annual Meeting of the Cognitive Science Society, Vienna, Austria, 26–29 July 2021; Volume 43. Available online: https://escholarship.org/uc/item/702482s5 (accessed on 6 November 2022).

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

- Allen, B.; Stacey, B.C.; Bar-Yam, Y. Multiscale information theory and the marginal utility of information. Entropy 2017, 19, 273.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, D.; Lu, B.; Guo, J.; He, Y.; Liu, H. Assessment of Visual Motor Integration via Hand-Drawn Imitation: A Pilot Study. Electronics 2023, 12, 2776. https://doi.org/10.3390/electronics12132776
