A Streaming Data Processing Architecture Based on Lookup Tables

Abstract

1. Introduction

2. Methodology

2.1. Stream Data Processing

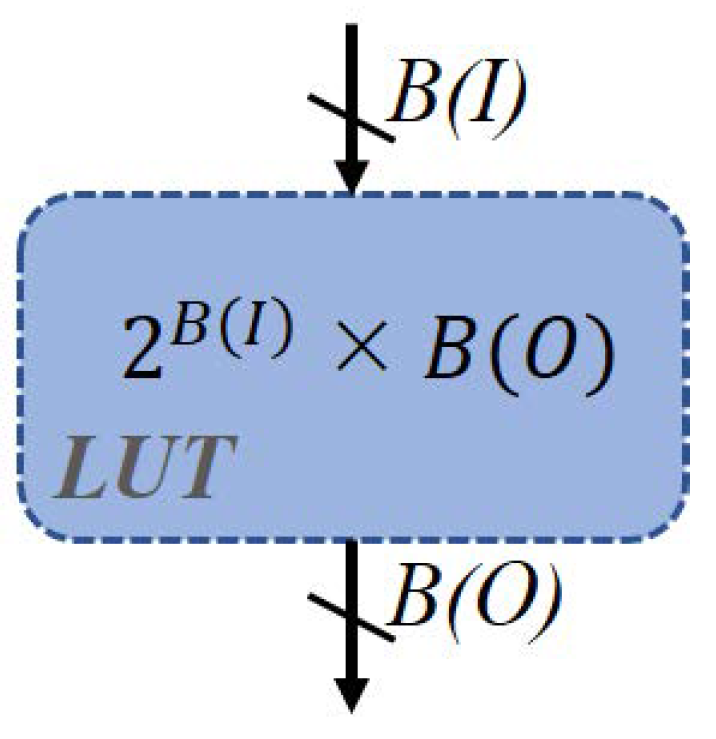

2.2. Lookup Table-Based Computing

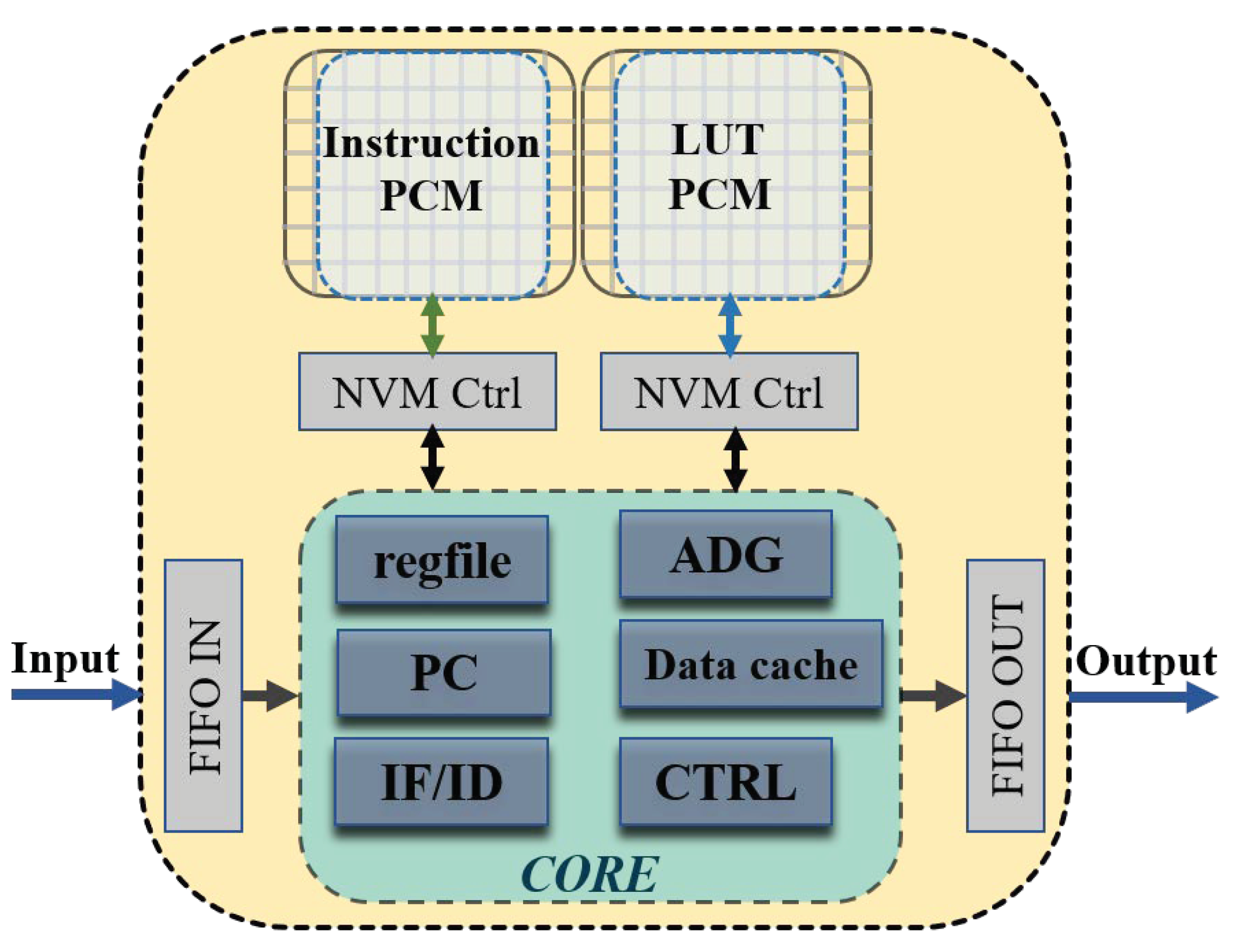

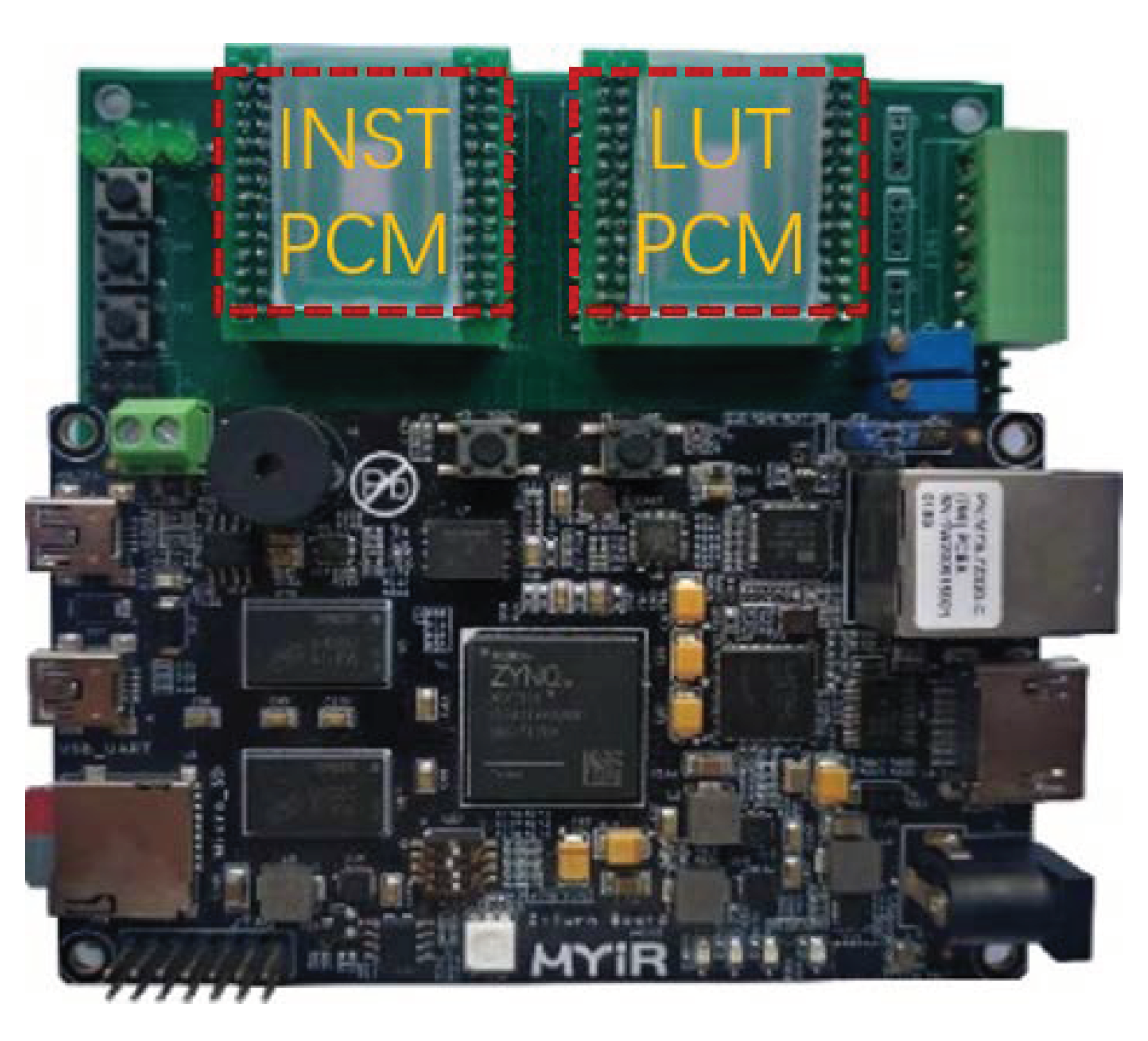
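The principle named in this subsection's heading is to precompute results once and then replace arithmetic with an indexed memory read. The sketch below is a generic, hedged illustration of that idea, not the authors' lookup unit. A 4-bit × 4-bit multiply is served from a 256-entry table indexed by the concatenated operands, and an 8-bit multiply is assembled from four such lookups. The decomposition is a common one in LUT-based designs. The names `MUL4_LUT`, `mul4` and `mul8` are hypothetical.

```python
# Hypothetical LUT multiplier sketch; not the paper's hardware.
NIBBLE = 4

# Precompute every 4-bit x 4-bit product once: index = (a << 4) | b.
MUL4_LUT = [(i >> NIBBLE) * (i & 0xF) for i in range(1 << (2 * NIBBLE))]


def mul4(a: int, b: int) -> int:
    """4-bit unsigned multiply done as a single table read (0 <= a, b < 16)."""
    return MUL4_LUT[(a << NIBBLE) | b]


def mul8(a: int, b: int) -> int:
    """8-bit unsigned multiply built from four 4-bit lookups plus shifts/adds."""
    a_hi, a_lo = a >> 4, a & 0xF
    b_hi, b_lo = b >> 4, b & 0xF
    # a*b = a_hi*b_hi*256 + (a_hi*b_lo + a_lo*b_hi)*16 + a_lo*b_lo
    return (
        (mul4(a_hi, b_hi) << 8)
        + ((mul4(a_hi, b_lo) + mul4(a_lo, b_hi)) << 4)
        + mul4(a_lo, b_lo)
    )
```

The 256-entry table stands in for a 4-bit multiplier. An 8-bit product then costs four lookups plus shifts and additions, which trades arithmetic logic for memory reads.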

3. System Hardware Architecture

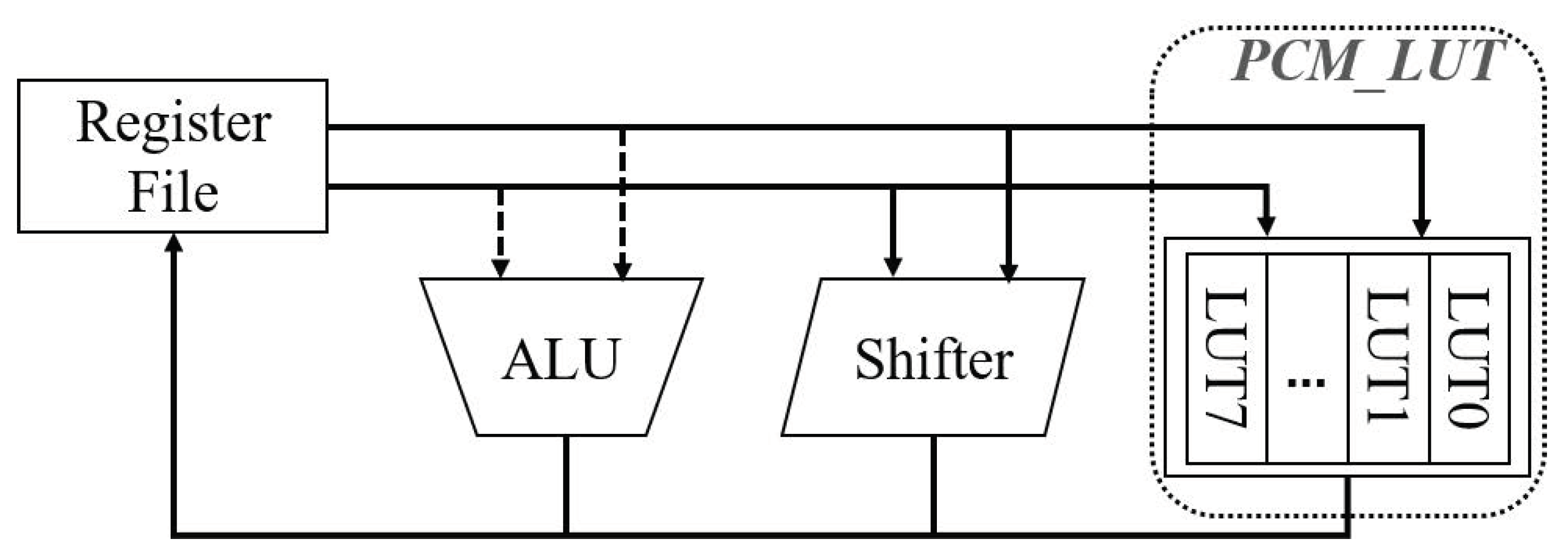

3.1. Hardware Architecture Design

3.2. Processor Instruction Set

3.2.1. RISC-V Instruction Set

3.2.2. Extended Lookup Instruction

3.2.3. Extended Data Transfer Instruction

3.3. FFT Processing Flow Example

Algorithm 1. Pseudocode of the FFT algorithm.

Algorithm 2. RISC-V assembly code of the FFT algorithm.

Algorithm 3. Assembly code of the FFT algorithm using the extended instructions.
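The sketch below is a hedged companion to Algorithm 1. It is a textbook iterative radix-2 decimation-in-time FFT (Cooley and Tukey). The twiddle factors W_N^k = exp(-2πjk/N) are precomputed into a table and then only read during the butterflies. This is not the authors' Algorithm 1 or their instruction sequence. It only shows where a lookup table naturally enters an FFT. The helper names `twiddle_table`, `bit_reverse` and `fft_lut` are hypothetical.

```python
import cmath


def twiddle_table(n: int) -> list[complex]:
    """Precompute W_N^k = exp(-2*pi*j*k/N) for k < N/2 (the lookup table)."""
    return [cmath.exp(-2j * cmath.pi * k / n) for k in range(n // 2)]


def bit_reverse(i: int, bits: int) -> int:
    """Reverse the lowest `bits` bits of i (input reordering for DIT)."""
    r = 0
    for _ in range(bits):
        r = (r << 1) | (i & 1)
        i >>= 1
    return r


def fft_lut(x: list[complex]) -> list[complex]:
    """Iterative radix-2 DIT FFT; twiddles are read from a table, not computed."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    bits = n.bit_length() - 1
    w = twiddle_table(n)
    a = [x[bit_reverse(i, bits)] for i in range(n)]
    size = 2
    while size <= n:
        half = size // 2
        stride = n // size  # W_size^k == W_N^(k * N/size): reuse the N-point table
        for start in range(0, n, size):
            for k in range(half):
                t = w[k * stride] * a[start + k + half]  # table read + multiply
                u = a[start + k]
                a[start + k] = u + t
                a[start + k + half] = u - t
        size *= 2
    return a
```

Each butterfly then costs one table read, one complex multiplication and two complex additions. Only N/2 twiddle entries need to be stored for an N-point transform.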

4. Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Near Zero Power RF and Sensor Operations [Tender Documents: T25615387]. 2015. Available online: https://govtribe.com/opportunity/federal-contract-opportunity/near-zero-power-rf-and-sensor-operations-darpabaa1514 (accessed on 1 May 2023).
2. Olsson, R.H.; Gordon, C.; Bogoslovov, R. Zero and Near Zero Power Intelligent Microsystems. J. Phys. Conf. Ser. 2019, 1407, 012042.
3. Kulshreshtha, T.; Dhar, A.S. CORDIC-Based High Throughput Sliding DFT Architecture with Reduced Error-Accumulation. Circuits Syst. Signal Process. 2018, 37, 5101–5126.
4. Deng, Q.; Zhang, Y.; Zhang, M.; Yang, J. LAcc: Exploiting Lookup Table-based Fast and Accurate Vector Multiplication in DRAM-based CNN Accelerator. In Proceedings of the 2019 56th ACM/IEEE Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.
5. Sutradhar, P.R.; Bavikadi, S.; Connolly, M.; Prajapati, S.; Indovina, M.A.; Dinakarrao, S.M.P.; Ganguly, A. Look-up-Table Based Processing-in-Memory Architecture with Programmable Precision-Scaling for Deep Learning Applications. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 263–275.
6. Peroni, D.; Imani, M.; Rosing, T. ALook: Adaptive Lookup for GPGPU Acceleration. In Proceedings of the 24th Asia and South Pacific Design Automation Conference (ASPDAC ’19), New York, NY, USA, 21–24 January 2019; pp. 739–746.
7. Ramanathan, A.K.; Kalsi, G.S.; Srinivasa, S.; Chandran, T.M.; Pillai, K.R.; Omer, O.J.; Narayanan, V.; Subramoney, S. Look-Up Table based Energy Efficient Processing in Cache Support for Neural Network Acceleration. In Proceedings of the 2020 53rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Athens, Greece, 17–21 October 2020; pp. 88–101.
8. Bavikadi, S.; Sutradhar, P.R.; Ganguly, A.; Dinakarrao, S.M.P. uPIM: Performance-aware Online Learning Capable Processing-in-Memory. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; pp. 1–4.
9. Sutradhar, P.R.; Connolly, M.; Bavikadi, S.; Pudukotai Dinakarrao, S.M.; Indovina, M.A.; Ganguly, A. pPIM: A Programmable Processor-in-Memory Architecture with Precision-Scaling for Deep Learning. IEEE Comput. Archit. Lett. 2020, 19, 118–121.
10. Mittal, S. A Survey of Techniques for Approximate Computing. ACM Comput. Surv. 2016, 48, 1–33.
11. Liu, W.; Lombardi, F.; Schulte, M. A Retrospective and Prospective View of Approximate Computing [Point of View]. Proc. IEEE 2020, 108, 394–399.
12. Tian, Y.; Zhang, Q.; Wang, T.; Xu, Q. Lookup table allocation for approximate computing with memory under quality constraints. In Proceedings of the 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 19–23 March 2018; pp. 153–158.
13. Cong, J.; Ercegovac, M.; Huang, M.; Li, S.; Xiao, B. Energy-efficient computing using adaptive table lookup based on nonvolatile memories. In Proceedings of the IEEE International Symposium on Low Power Electronics and Design (ISLPED), Boston, MA, USA, 1–3 August 2013; pp. 280–285.
14. Tian, Y.; Wang, T.; Zhang, Q.; Xu, Q. ApproxLUT: A novel approximate lookup table-based accelerator. In Proceedings of the Institute of Electrical and Electronics Engineers, Inc. (IEEE) Conference, Piscataway, NJ, USA, 21–26 July 2017; p. 438.
15. Pozidis, H.; Papandreou, N.; Stanisavljevic, M. Circuit and System-Level Aspects of Phase Change Memory. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 844–850.
16. Garofalakis, M.; Gehrke, J.; Rastogi, R. Data Stream Management: Processing High-Speed Data Streams, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2018.
17. Namiot, D.; Sneps-Sneppe, M.; Pauliks, R. On Data Stream Processing in IoT Applications. In Proceedings of the Internet of Things, Smart Spaces, and Next Generation Networks and Systems, St. Petersburg, Russia, 27–29 August 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; pp. 41–51.
18. Parhami, B. Tabular Computation. In Encyclopedia of Big Data Technologies; Sakr, S., Zomaya, A.Y., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 1667–1672.
19. Mach, S.; Schuiki, F.; Zaruba, F.; Benini, L. FPnew: An Open-Source Multiformat Floating-Point Unit Architecture for Energy-Proportional Transprecision Computing. IEEE Trans. Very Large Scale Integr. Syst. 2021, 29, 774–787.
20. Cooley, J.W.; Tukey, J.W. An Algorithm for the Machine Calculation of Complex Fourier Series. Math. Comput. 1965, 19, 297–301.
21. Beulet Paul, A.S.; Raju, S.; Janakiraman, R. Low power reconfigurable FP-FFT core with an array of folded DA butterflies. EURASIP J. Adv. Signal Process. 2014, 2014, 1–17.
22. Le Ba, N.; Kim, T.T.H. An Area Efficient 1024-Point Low Power Radix-2² FFT Processor With Feed-Forward Multiple Delay Commutators. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 3291–3299.
23. Seok, M.; Jeon, D.; Chakrabarti, C.; Blaauw, D.; Sylvester, D. A 0.27 V 30 MHz 17.7 nJ/transform 1024-pt complex FFT core with super-pipelining. In Proceedings of the 2011 IEEE International Solid-State Circuits Conference, San Francisco, CA, USA, 20–24 February 2011; pp. 342–344.
24. Yantır, H.E.; Guo, W.; Eltawil, A.M.; Kurdahi, F.J.; Salama, K.N. An Ultra-Area-Efficient 1024-Point In-Memory FFT Processor. Micromachines 2019, 10, 509.

| Bit width (bits) | Memory bit width (bits) | LUT size |
|---|---|---|
| 4 | 4 | 32 B |
| 8 | 8 | 512 B |
| 10 | 16 | 2 KB |
| 12 | 16 | 8 KB |
| 14 | 16 | 32 KB |
| 16 | 16 | 130 KB |
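The sizes in the table above are consistent with a table of 2^n entries of 2 bytes each for an n-bit input. That formula is inferred from the listed numbers and is not stated in the surviving text. A minimal sketch under that assumption follows. `lut_size_bytes` and its `entry_bytes` parameter are hypothetical.

```python
def lut_size_bytes(input_bits: int, entry_bytes: int = 2) -> int:
    """2**input_bits entries, each entry_bytes wide (entry width is an assumption)."""
    return (1 << input_bits) * entry_bytes


# Print the inferred size for each bit width listed in the table.
for bits in (4, 8, 10, 12, 14, 16):
    size = lut_size_bytes(bits)
    print(f"{bits:2d}-bit input: {size} B = {size / 1024:g} KiB")
```

For n = 16 the formula gives 131,072 B, which is 128 KiB or about 131 kB. The 130 KB in the last row is therefore presumably a rounded value. Each additional 2 input bits quadruples the table, which is why LUT size rather than logic dominates at wider bit widths.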

| Type | Category | Instructions |
|---|---|---|
| Basic instruction set | Logical operations | and, or, xor, andi, ori, xori |
| | Shifting | sll, srl, sra, slli, srli, srai |
| | Conditional branch | beq, bne, blt, bge, bltu, bgeu |
| | Unconditional branch | jal, jalr |
| Extended instruction set | Data transfer | pl.t, plu.t, plr.t, ps.t, psu.t, psr.t |
| | Table lookup | ptlu.x, plut.adg.x, plut.adgi.x, plutw.x |

| Time | Current | Voltage | Energy |
|---|---|---|---|
| 20 ns | 10 μA | 3.3 V | 0.66 nJ/KB |
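The four values can be read, based only on their units, as an access time, a current, a supply voltage and an energy per kilobyte. Under that interpretation they are mutually consistent. The product I·V·t gives the energy of one access, and 0.66 nJ/KB corresponds to 10^3 such accesses. That is one access per byte, with 1 KB taken as 10^3 B.

```latex
\begin{aligned}
E_{\mathrm{access}} &= I\,V\,t = (10\,\mu\mathrm{A})(3.3\,\mathrm{V})(20\,\mathrm{ns})
  = 6.6\times 10^{-13}\,\mathrm{J} = 0.66\,\mathrm{pJ},\\
E_{\mathrm{KB}} &= 10^{3}\times 0.66\,\mathrm{pJ} = 0.66\,\mathrm{nJ}.
\end{aligned}
```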

| | Ours | [24] | [23] | [22] | [21] | Zynq 7020 |
|---|---|---|---|---|---|---|
| FFT size (N) | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 |
| Word length | 8/Float | 12-bit | 16-bit | 32-bit | 32/Float | 32/Float |
| Frequency (MHz) | 50 | - | 30 | 600 | 50 | 50 |
| Throughput (MS/s) | 100 | 890 | 30 | 800 | 0.202 | 6.11 |
| Execution time (μs) | 122.88 | - | - | - | 206.44 | 40 |
| Power (mW) | 3.53 | 12 | 4.15 | 60.3 | 68 | 44 |
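A rough per-transform energy can be derived from the comparison table as E = P·t for the three designs that report both power and execution time: this work, [21] and Zynq 7020. The calculation assumes the listed power is the average over the entire run. The table does not state this, so the results are indicative only and are not figures reported by the authors. `DESIGNS` and `energy_per_fft_nj` are hypothetical names.

```python
# (power in mW, execution time in us) copied from the comparison table;
# columns without an execution time are omitted.
DESIGNS = {
    "Ours": (3.53, 122.88),
    "[21]": (68.0, 206.44),
    "Zynq 7020": (44.0, 40.0),
}


def energy_per_fft_nj(power_mw: float, time_us: float) -> float:
    """E = P * t; 1 mW * 1 us = 1e-3 W * 1e-6 s = 1e-9 J = 1 nJ."""
    return power_mw * time_us


for name, (p, t) in DESIGNS.items():
    print(f"{name}: {energy_per_fft_nj(p, t):.1f} nJ per 1024-point FFT")
```

Under that assumption the products come to roughly 434 nJ, 14,038 nJ and 1,760 nJ respectively.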

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuemaier, A.; Chen, X.; Qian, X.; Dai, W.; Li, S.; Song, Z. A Streaming Data Processing Architecture Based on Lookup Tables. Electronics 2023, 12, 2725. https://doi.org/10.3390/electronics12122725


Chicago/Turabian Style: Yuemaier, Aximu, Xiaogang Chen, Xingyu Qian, Weibang Dai, Shunfen Li, and Zhitang Song. 2023. "A Streaming Data Processing Architecture Based on Lookup Tables" Electronics 12, no. 12: 2725. https://doi.org/10.3390/electronics12122725
