Graph Convolution Network over Dependency Structure Improve Knowledge Base Question Answering

Abstract

1. Introduction

- To address the underutilization of relationships between words in a question, we propose a knowledge base question answering method that applies graph convolutional networks (GCNs) over the question's dependency structure. This lets the model pool information efficiently over arbitrary dependency structures and produce a more effective vector representation of the question sequence.

- To address incorrect relation selection during query graph generation, we analyze the dependency structure to establish relations between words, and use this structure to obtain a more effective question representation that in turn guides query graph ranking and action selection.

- On the WebQuestionsSP (WQSP) and ComplexQuestions (CQ) datasets, our method achieves the highest F1 among the compared methods (74.8 on WQSP and 44.2 on CQ), and it is more effective at ranking query graphs.
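The first two contributions can be illustrated with a minimal, dependency-free sketch: a question's dependency arcs become an adjacency matrix, stacked GCN layers (in the style of Kipf and Welling) aggregate each token's neighbours, max pooling yields a single question vector, and candidate query graphs are ranked by cosine similarity to that vector. Everything here is an assumption rather than the authors' implementation: treating arcs as undirected with self-loops, degree normalization, ReLU, max pooling and cosine scoring are illustrative choices; the function names and the hand-written parse of "who directed the movie titanic" are hypothetical; and the candidate query-graph vectors are random placeholders rather than real encodings.

```python
import math
import random

# Hypothetical helpers: a minimal sketch, not the authors' code.


def dependency_adjacency(heads):
    """Symmetric adjacency matrix with self-loops from 1-based head indices (0 = root)."""
    n = len(heads)
    adj = [[0.0] * n for _ in range(n)]
    for i, head in enumerate(heads):
        adj[i][i] = 1.0  # self-loop: each token keeps its own features
        if head > 0:
            # Treat each dependency arc as undirected (an assumption).
            adj[i][head - 1] = 1.0
            adj[head - 1][i] = 1.0
    return adj


def gcn_layer(adj, h, weight, bias):
    """One GCN layer: h_i' = ReLU(sum_j A_ij * (W h_j) / d_i + b), d_i = degree of token i."""
    n, d_out = len(h), len(bias)
    # Project every token vector with W (weight has shape d_out x d_in).
    wh = [[sum(w * x for w, x in zip(weight[k], h[j])) for k in range(d_out)]
          for j in range(n)]
    out = []
    for i in range(n):
        degree = sum(adj[i])  # always >= 1 thanks to the self-loop
        out.append([
            max(0.0, sum(adj[i][j] * wh[j][k] for j in range(n)) / degree + bias[k])
            for k in range(d_out)
        ])
    return out


def max_pool(h):
    """Collapse token vectors into one question vector by element-wise max."""
    return [max(column) for column in zip(*h)]


def encode_question(heads, embeddings, layers):
    """Stack GCN layers over the dependency graph, then pool to a single vector."""
    adj = dependency_adjacency(heads)
    h = embeddings
    for weight, bias in layers:
        h = gcn_layer(adj, h, weight, bias)
    return max_pool(h)


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank_query_graphs(question_vec, graph_vecs):
    """Return candidate indices sorted by descending similarity, plus the raw scores."""
    scores = [cosine(question_vec, g) for g in graph_vecs]
    order = sorted(range(len(graph_vecs)), key=lambda i: scores[i], reverse=True)
    return order, scores


if __name__ == "__main__":
    random.seed(0)
    tokens = ["who", "directed", "the", "movie", "titanic"]
    heads = [2, 0, 4, 2, 4]  # hand-written parse, for illustration only
    dim = 4
    embeddings = [[random.uniform(-1, 1) for _ in range(dim)] for _ in tokens]
    layers = [([[random.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)],
               [0.0] * dim) for _ in range(2)]
    question_vec = encode_question(heads, embeddings, layers)
    # Placeholder vectors standing in for encoded candidate query graphs.
    candidates = [[random.uniform(0, 1) for _ in range(dim)] for _ in range(3)]
    order, scores = rank_query_graphs(question_vec, candidates)
    print("ranking:", order, "scores:", [round(s, 3) for s in scores])
```

Because each GCN layer mixes information only between tokens that share a dependency arc, stacking k layers lets a token draw on words up to k arcs away, which is how the dependency structure, rather than surface word order, shapes the pooled question vector.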

2. Related Work

3. Method

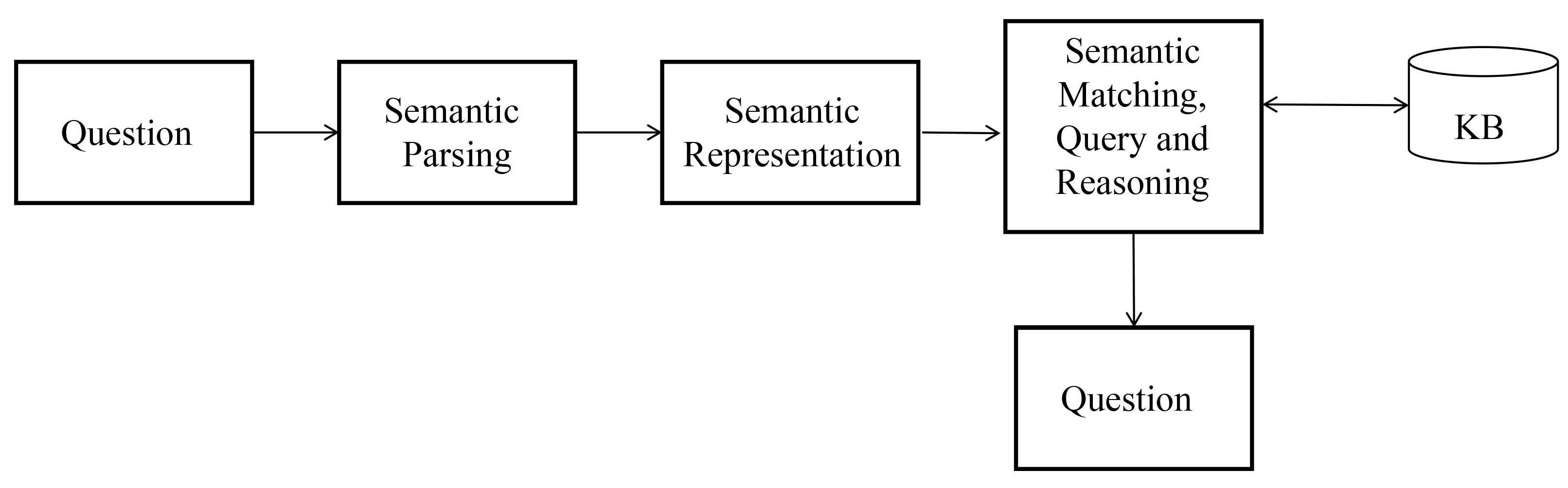

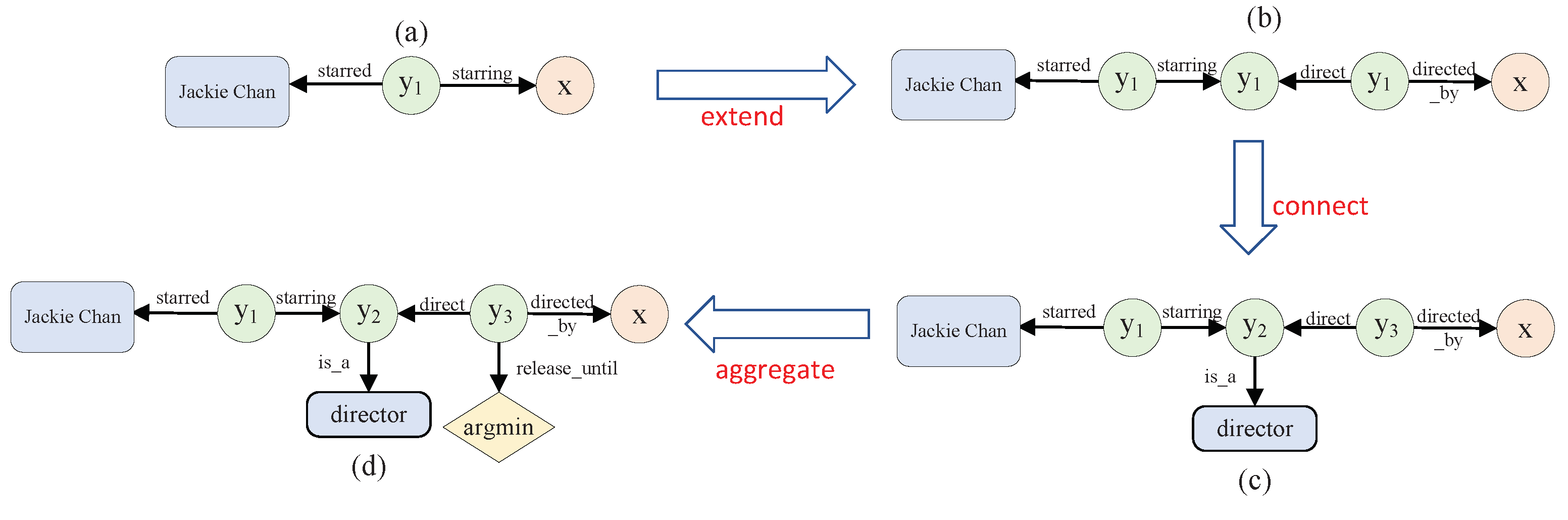

3.1. Query Graph Generation

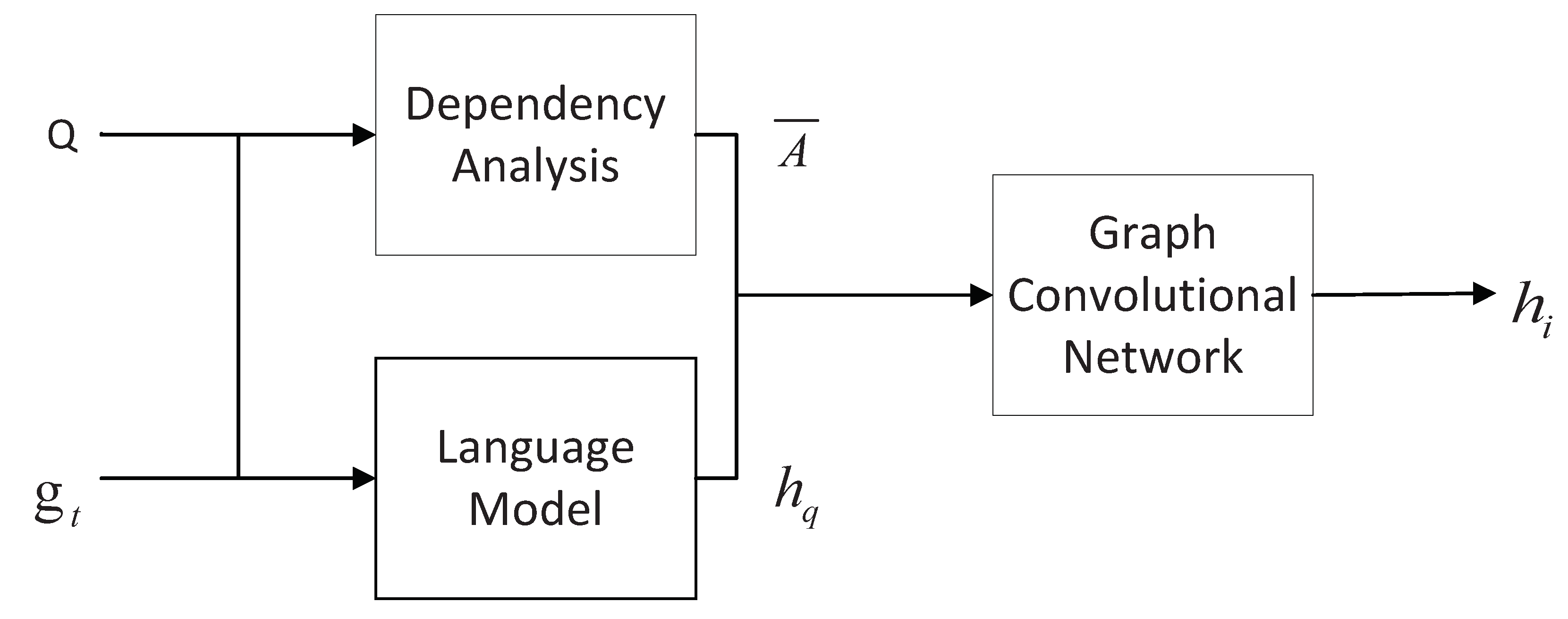

3.2. Dependency Structure of a Question Based on a GCN

3.3. Query Graph Ranking

3.4. Learning

4. Experiments

4.1. Datasets and Settings

4.2. Experimental Results and Comparison

4.3. Qualitative Analysis

4.4. Error Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Bordes, A.; Usunier, N.; Chopra, S.; Weston, J. Large-scale simple question answering with memory networks. arXiv 2015, arXiv:1506.02075.

2. Cai, Q.; Yates, A. Large-scale semantic parsing via schema matching and lexicon extension. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; pp. 423–433.

3. Krishnamurthy, J.; Mitchell, T.M. Weakly supervised training of semantic parsers. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Jeju Island, Republic of Korea, 12–14 July 2012; pp. 754–765.

4. Abujabal, A.; Yahya, M.; Riedewald, M.; Weikum, G. Automated template generation for question answering over knowledge graphs. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 1191–1200.

5. Hu, S.; Zou, L.; Yu, J.X.; Wang, H.; Zhao, D. Answering natural language questions by subgraph matching over knowledge graphs. IEEE Trans. Knowl. Data Eng. 2017, 30, 824–837.

6. Bao, J.; Duan, N.; Yan, Z.; Zhou, M.; Zhao, T. Constraint-based question answering with knowledge graph. In Proceedings of COLING, Osaka, Japan, 11–16 December 2016; pp. 2503–2514.

7. Luo, K.; Lin, F.; Luo, X.; Zhu, K.Q. Knowledge base question answering via encoding of complex query graphs. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 2185–2194.

8. Yih, W.-T.; Chang, M.-W.; He, X.; Gao, J. Semantic Parsing via Staged Query Graph Generation: Question Answering with Knowledge Base. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Beijing, China, 26–31 July 2015.

9. Chen, Z.-Y.; Chang, C.-H.; Chen, Y.-P.; Nayak, J.; Ku, L.-W. UHop: An unrestricted-hop relation extraction framework for knowledge-based question answering. arXiv 2019, arXiv:1904.01246.

10. Lan, Y.; Wang, S.; Jiang, J. Multi-hop knowledge base question answering with an iterative sequence matching model. In Proceedings of the IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; pp. 359–368.

11. Lan, Y.; Jiang, J. Query graph generation for answering multi-hop complex questions from knowledge bases. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020.

12. Miwa, M.; Bansal, M. End-to-End Relation Extraction using LSTMs on Sequences and Tree Structures. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016.

13. Xu, K.; Feng, Y.; Huang, S.; Zhao, D. Semantic Relation Classification via Convolutional Neural Networks with Simple Negative Sampling. Comput. Sci. 2015, 71, 941–949.

14. Djenouri, Y.; Srivastava, G.; Lin, J.C.-W. Fast and accurate convolution neural network for detecting manufacturing data. IEEE Trans. Ind. Inform. 2020, 17, 2947–2955.

15. Peng, H.; Chang, M.; Yih, W.T. Maximum margin reward networks for learning from explicit and implicit supervision. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 2368–2378.

16. Sorokin, D.; Gurevych, I. Modeling semantics with gated graph neural networks for knowledge base question answering. In Proceedings of the 27th International Conference on Computational Linguistics, Association for Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 3306–3317.

17. Iyyer, M.; Yih, W.-T.; Chang, M.-W. Search-based neural structured learning for sequential question answering. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1821–1831.

18. Krishnamurthy, J.; Dasigi, P.; Gardner, M. Neural semantic parsing with type constraints for semi-structured tables. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1516–1526.

19. Moqurrab, S.A.; Ayub, U.; Anjum, A.; Asghar, S.; Srivastava, G. An accurate deep learning model for clinical entity recognition from clinical notes. IEEE J. Biomed. Health Inform. 2021, 25, 3804–3811.

20. Wang, F.; Wu, W.; Li, Z.; Zhou, M. Named entity disambiguation for questions in community question answering. Knowl.-Based Syst. 2017, 126, 68–77.

21. Bast, H.; Haussmann, E. More accurate question answering on Freebase. In Proceedings of the 24th ACM International Conference on Information and Knowledge Management, Melbourne, Australia, 18–23 October 2015; pp. 1431–1440.

22. Chakraborty, N.; Lukovnikov, D.; Maheshwari, G.; Trivedi, P.; Lehmann, J.; Fischer, A. Introduction to neural network based approaches for question answering over knowledge graphs. arXiv 2019, arXiv:1907.09361.

23. Chen, H.-C.; Chen, Z.-Y.; Huang, S.-Y.; Ku, L.-W.; Chiu, Y.-S.; Yang, W.-J. Relation extraction in knowledge base question answering: From general-domain to the catering industry. In Proceedings of the International Conference on HCI in Business, Government, and Organizations, Las Vegas, NV, USA, 15 July 2018; pp. 26–41.

24. Yang, Z.; Garg, H.; Li, J.; Srivastava, G.; Cao, Z. Investigation of multiple heterogeneous relationships using a q-rung orthopair fuzzy multi-criteria decision algorithm. Neural Comput. Appl. 2021, 33, 10771–10786.

25. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2019, arXiv:1810.04805.

26. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

27. Marcheggiani, D.; Titov, I. Encoding Sentences with Graph Convolutional Networks for Semantic Role Labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1506–1515.

28. Das, R.; Dhuliawala, S.; Zaheer, M.; Vilnis, L.; Durugkar, I.; Krishnamurthy, A.; Smola, A.; McCallum, A. Go for a walk and arrive at the answer: Reasoning over paths in knowledge bases using reinforcement learning. arXiv 2018, arXiv:1711.05851.

29. Lan, Y.; Wang, S.; Jiang, J. Knowledge base question answering with topic units. In Proceedings of the International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 5046–5052.

30. Bhutani, N.; Suhara, Y.; Tan, W.-C.; Halevy, A.Y.; Jagadish, H.V. Open Information Extraction from Question-Answer Pairs. In Proceedings of the 17th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2019), Minneapolis, MN, USA, 3–5 June 2019; pp. 2294–2305.

31. Ansari, G.A.; Saha, A.; Kumar, V.; Bhambhani, M.; Sankaranarayanan, K.; Chakrabarti, S. Neural Program Induction for KBQA Without Gold Programs or Query Annotations. In Proceedings of the International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 4890–4896.

| | WQSP | CQ |
|---|---|---|
| Total QA pairs | 4737 | 2100 |
| Training set QA pairs | 3098 | 1300 |
| Test set QA pairs | 1639 | 800 |

| Method | WQSP (F1) | CQ (F1) |
|---|---|---|
| [8] | 69.0 | - |
| [6] | - | 40.9 |
| [7] | - | 42.8 |
| [29] | 67.9 | - |
| [9] | 68.5 | 35.3 |
| [30] | 60.3 | - |
| [31] | 72.6 | - |
| [11] | 74.0 | 43.3 |
| Our method | 74.8 | 44.2 |
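The F1 scores in the table above summarize answer quality over each test set. As a minimal sketch of one common way KBQA F1 is computed, the code below takes the per-question F1 between the predicted and gold answer sets and averages it over all test questions. The exact evaluation protocol behind these numbers is not stated here, so exact-match comparison of answer strings and a score of zero for empty predictions are assumptions, and `answer_f1` and `average_f1` are hypothetical names.

```python
def answer_f1(predicted, gold):
    """F1 between the predicted and gold answer sets of one question."""
    pred, gold = set(predicted), set(gold)
    overlap = len(pred & gold)
    if overlap == 0:
        return 0.0  # also covers empty predictions (assumed convention)
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def average_f1(predictions, golds):
    """Macro-average of per-question F1 over a test set, as a percentage."""
    scores = [answer_f1(p, g) for p, g in zip(predictions, golds)]
    return 100.0 * sum(scores) / len(scores)


# Hypothetical toy answers for three questions.
predictions = [{"James Cameron"}, {"a", "b"}, {"x"}]
golds = [{"James Cameron"}, {"a"}, {"y"}]
print(round(average_f1(predictions, golds), 2))  # → 55.56
```

Macro-averaging gives every question equal weight regardless of how many gold answers it has, so a partially correct answer set (the second toy question, F1 = 2/3) still contributes partial credit.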

| Method | CQ | WQSP |
|---|---|---|
| Lan et al. (2020) [11] | 0.715 | 0.640 |
| Our method | 0.730 | 0.670 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Zha, D.; Wang, L.; Mu, N.; Yang, C.; Wang, B.; Xu, F. Graph Convolution Network over Dependency Structure Improve Knowledge Base Question Answering. Electronics 2023, 12, 2675. https://doi.org/10.3390/electronics12122675


Chicago/Turabian Style: Zhang, Chenggong, Daren Zha, Lei Wang, Nan Mu, Chengwei Yang, Bin Wang, and Fuyong Xu. 2023. "Graph Convolution Network over Dependency Structure Improve Knowledge Base Question Answering." Electronics 12, no. 12: 2675. https://doi.org/10.3390/electronics12122675
