Medical Image Fusion Using SKWGF and SWF in Framelet Transform Domain

Abstract

1. Introduction

2. Preliminaries

2.1. Framelet Transform

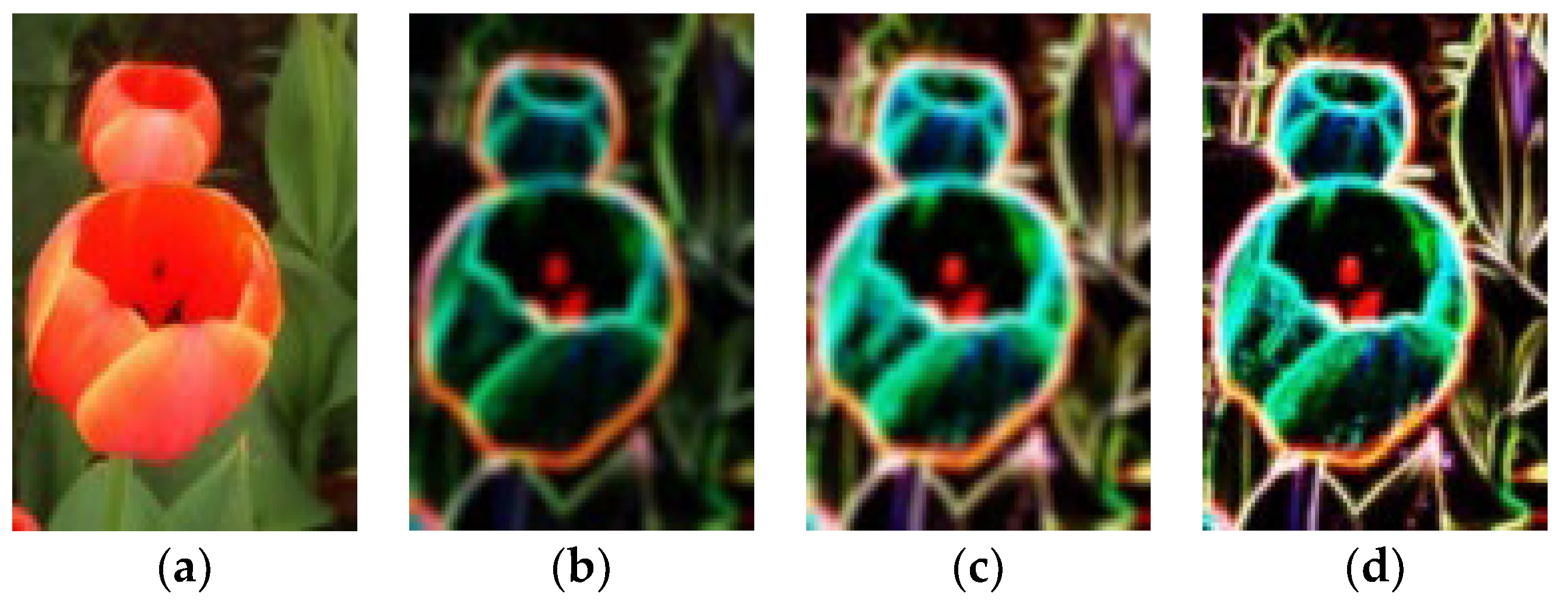
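This excerpt does not list the framelet filters, so the sketch below assumes the piecewise-linear B-spline framelet often used with the construction of Daubechies et al. (one low-pass filter `H0`, two high-pass filters `H1`, `H2`). It also assumes an undecimated transform with periodic boundaries. One decomposition level then yields nine subbands (one low-frequency, eight high-frequency). Reconstruction by the adjoint is exact because these filters satisfy |H0|² + |H1|² + |H2|² = 1. All names here are hypothetical, and the paper's actual filter bank and level count may differ.

```python
import numpy as np

# Assumed filter bank: piecewise-linear B-spline framelet, taps centred at index 1.
H0 = np.array([1.0, 2.0, 1.0]) / 4.0                   # low-pass
H1 = np.sqrt(2.0) / 4.0 * np.array([1.0, 0.0, -1.0])   # first-order high-pass
H2 = np.array([-1.0, 2.0, -1.0]) / 4.0                 # second-order high-pass
FILTERS = (H0, H1, H2)


def _filter1d(x, h, axis, adjoint=False):
    """Circular 1-D filtering along `axis`; adjoint=True applies the transpose."""
    c = len(h) // 2
    out = np.zeros(x.shape, dtype=float)
    for k, hk in enumerate(h):
        offset = k - c
        # forward: y[n] = sum_k h[k] x[n + offset]; adjoint: y[n] = sum_k h[k] x[n - offset]
        shift = offset if adjoint else -offset
        out += hk * np.roll(x, shift, axis=axis)
    return out


def framelet_decompose(img):
    """One-level undecimated 2-D framelet transform -> dict of 9 subbands."""
    img = np.asarray(img, dtype=float)
    bands = {}
    for i, hi in enumerate(FILTERS):
        rows = _filter1d(img, hi, axis=0)
        for j, hj in enumerate(FILTERS):
            bands[(i, j)] = _filter1d(rows, hj, axis=1)
    return bands  # bands[(0, 0)] is the low-frequency subband


def framelet_reconstruct(bands):
    """Inverse for a tight frame: apply the transposed filters and sum all subbands."""
    out = None
    for (i, j), coeffs in bands.items():
        col = _filter1d(coeffs, FILTERS[j], axis=1, adjoint=True)
        term = _filter1d(col, FILTERS[i], axis=0, adjoint=True)
        out = term if out is None else out + term
    return out
```

A fusion rule would operate on `bands[(0, 0)]` and the eight high-frequency subbands separately before calling `framelet_reconstruct`.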

2.2. Guided Filter
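For reference, a minimal sketch of the classic guided filter of He et al. (listed in the references). Each window fits a local linear model q = a·I + b. The coefficients a and b come from window means, variances and covariances. They are then averaged over all windows that cover a pixel. This is the unweighted original. The steering-kernel weighting that distinguishes SKWGF (cf. Sun et al. in the references) is deliberately omitted. The radius `r`, regulariser `eps` and helper `box_mean` are illustrative assumptions.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, with edge-replicated borders."""
    k = 2 * r + 1
    p = np.pad(np.asarray(img, dtype=float), r, mode="edge")
    s = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))  # summed-area table
    return (s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]) / (k * k)


def guided_filter(guide, src, r, eps):
    """Classic guided filter: local linear model q = a * guide + b in every window."""
    I = np.asarray(guide, dtype=float)
    p = np.asarray(src, dtype=float)
    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    var_I = box_mean(I * I, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)  # larger eps -> smaller a -> stronger smoothing
    b = mean_p - a * mean_I
    # average the (a, b) of every window that covers each pixel
    return box_mean(a, r) * I + box_mean(b, r)
```

With `eps` near zero a self-guided filter returns the input almost unchanged. With a large `eps` it approaches a double box blur, which is the usual edge-preservation versus smoothing trade-off.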

2.3. Side Window Filter

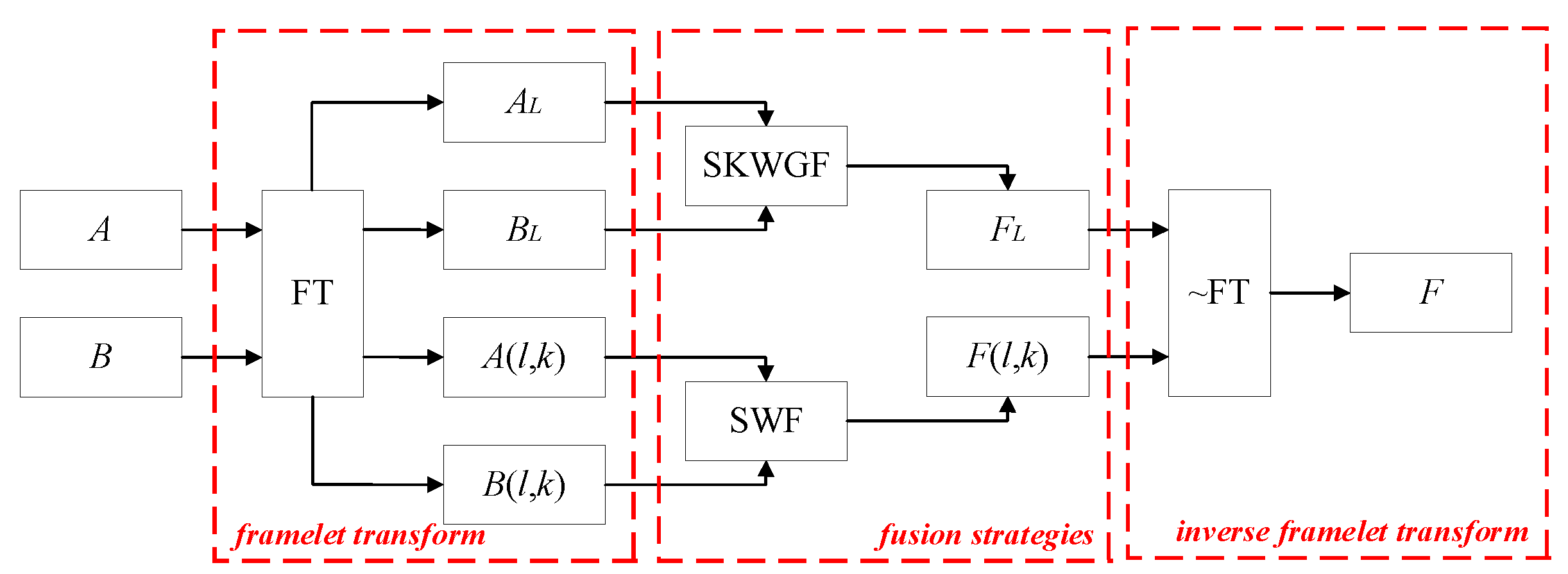
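A minimal sketch of side window filtering in the sense of Yin et al. (listed in the references), assuming a box kernel. Every pixel is filtered over eight side windows: left, right, up, down and the four quadrants. Each window has the pixel on its edge or corner instead of at its centre. The output keeps the window mean closest to the input pixel, so edges are not averaged across. Whether the paper uses a box kernel, this radius, or several iterations is not stated in this excerpt. `side_window_box_filter` is a hypothetical name.

```python
import numpy as np


def side_window_box_filter(img, r):
    """Box-kernel side window filter: average over 8 side windows per pixel and
    keep the mean whose value is closest to the original pixel."""
    x = np.asarray(img, dtype=float)
    H, W = x.shape
    p = np.pad(x, r, mode="edge")
    s = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))  # summed-area table

    def rect_mean(a0, a1, b0, b1):
        # mean over row offsets a0..a1 and column offsets b0..b1 around each pixel
        def corner(rr, cc):
            return s[r + rr:r + rr + H, r + cc:r + cc + W]
        total = corner(a1 + 1, b1 + 1) - corner(a0, b1 + 1) - corner(a1 + 1, b0) + corner(a0, b0)
        return total / ((a1 - a0 + 1) * (b1 - b0 + 1))

    windows = [(-r, r, -r, 0), (-r, r, 0, r),   # left, right
               (-r, 0, -r, r), (0, r, -r, r),   # up, down
               (-r, 0, -r, 0), (-r, 0, 0, r),   # north-west, north-east
               (0, r, -r, 0), (0, r, 0, r)]     # south-west, south-east
    means = np.stack([rect_mean(*w) for w in windows])
    best = np.argmin(np.abs(means - x), axis=0)
    return np.take_along_axis(means, best[None], axis=0)[0]
```

On a clean vertical step edge, every pixel has a side window lying entirely on its own side, so the step passes through unchanged. A centred box filter would blur it.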

3. Proposed Method

3.1. Overall Framework

3.2. Details of Proposed Method

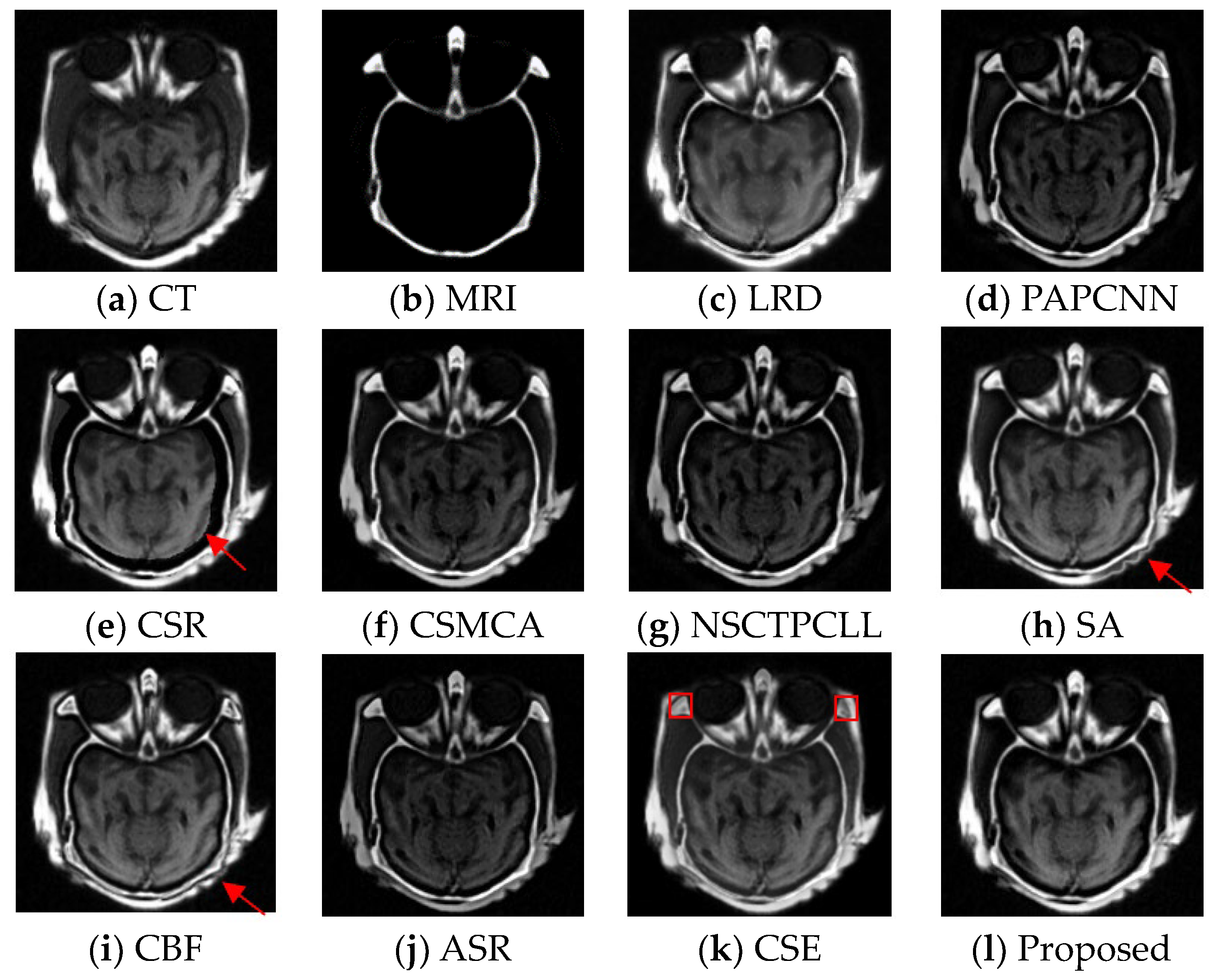

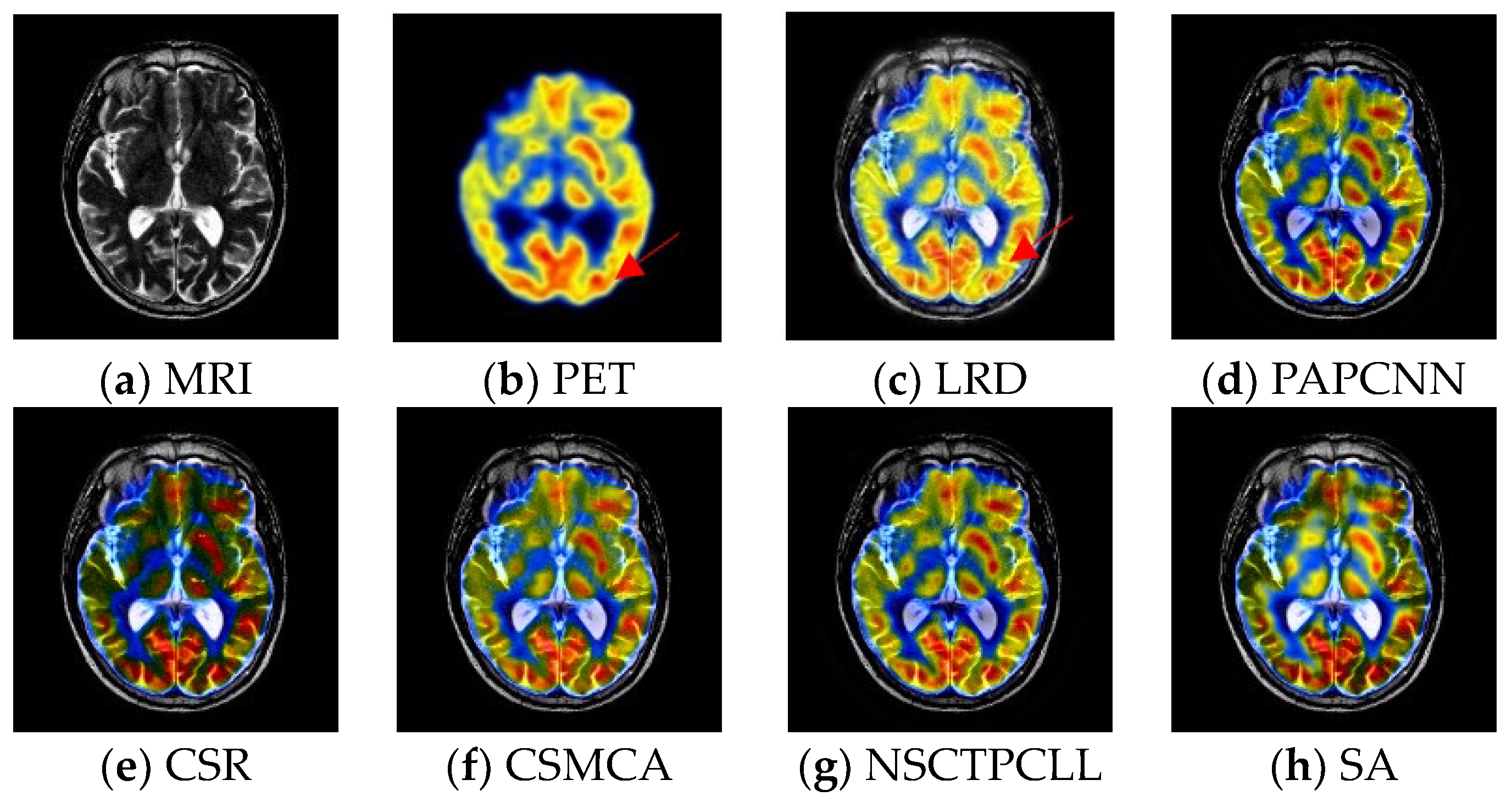

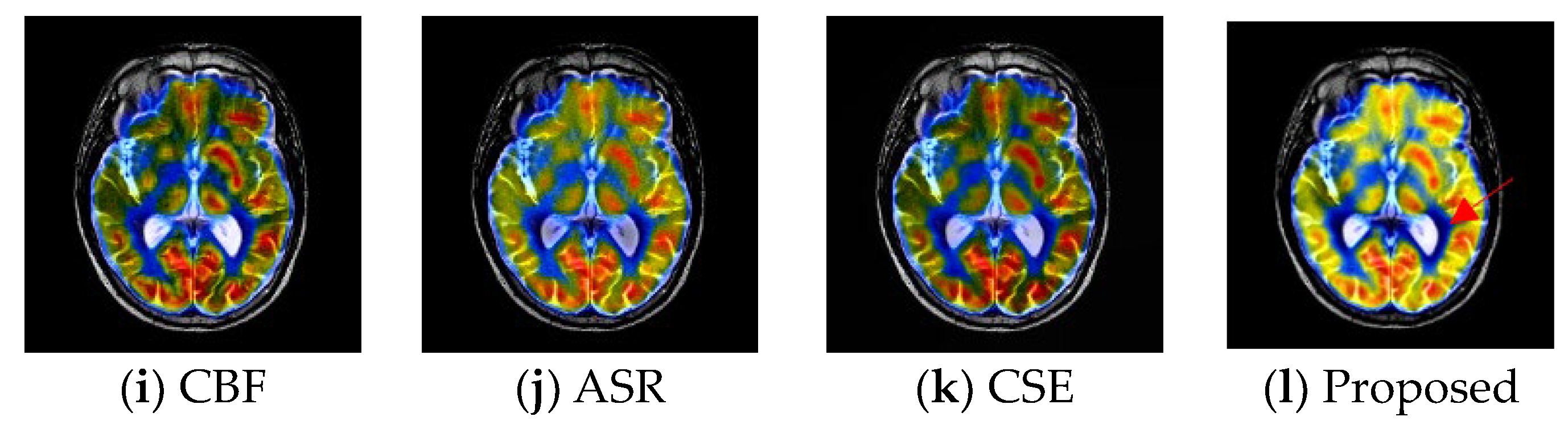

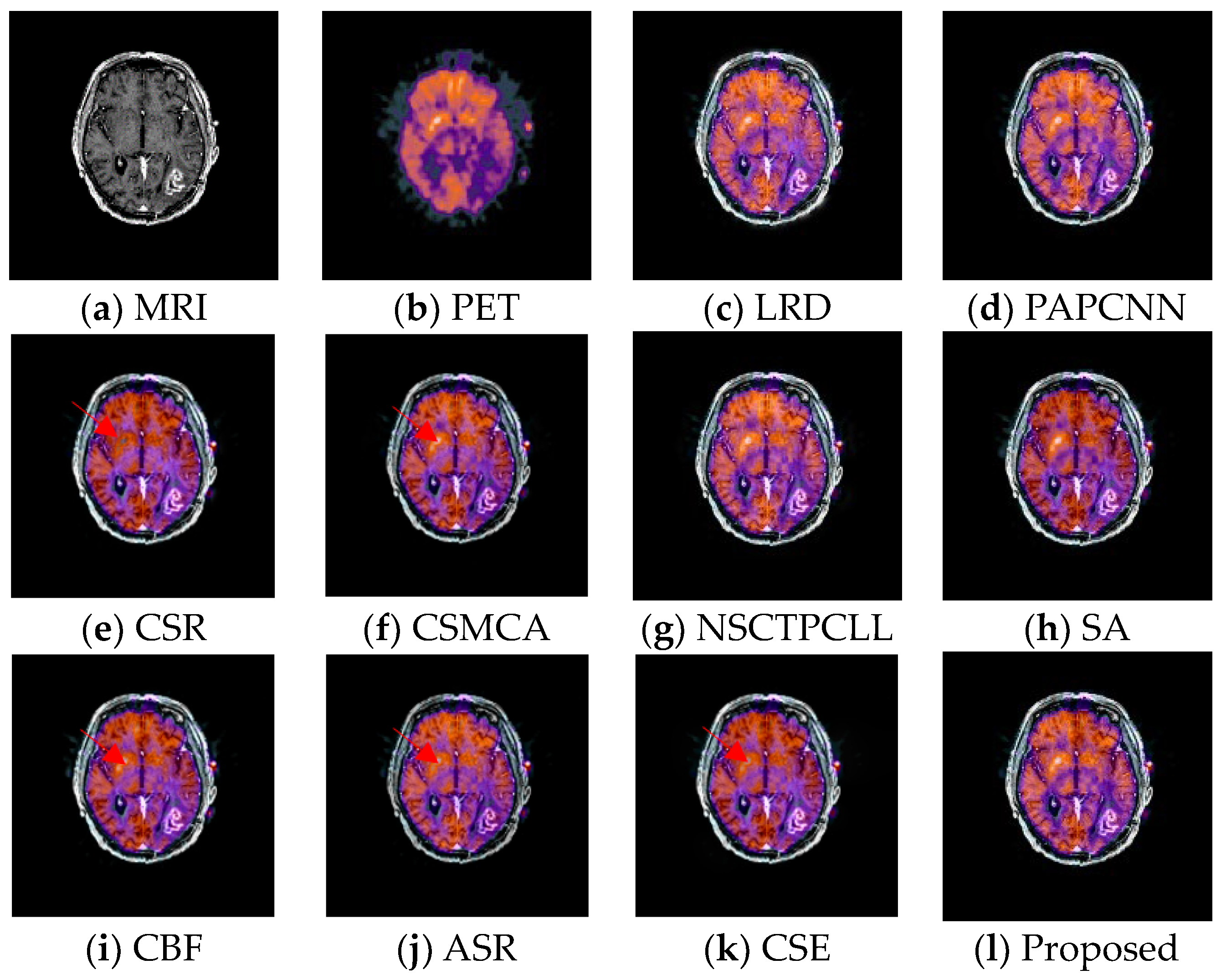

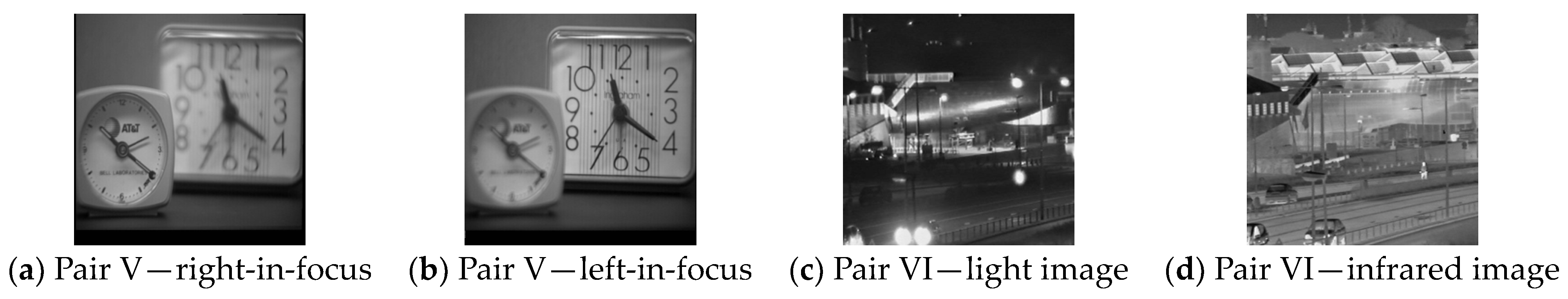

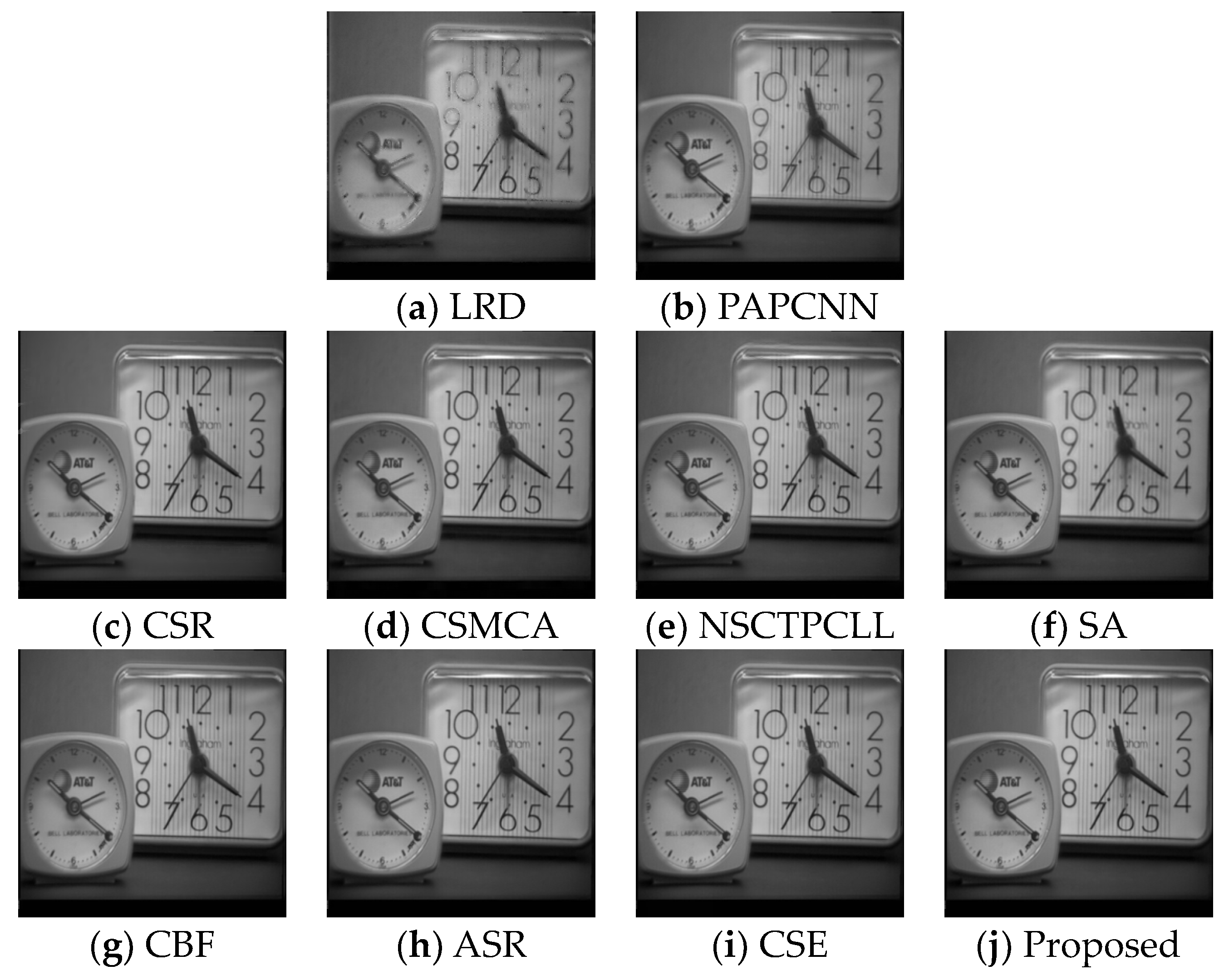

4. Experiment and Result Analysis

4.1. Data Sets

4.2. Objective Evaluation Metrics

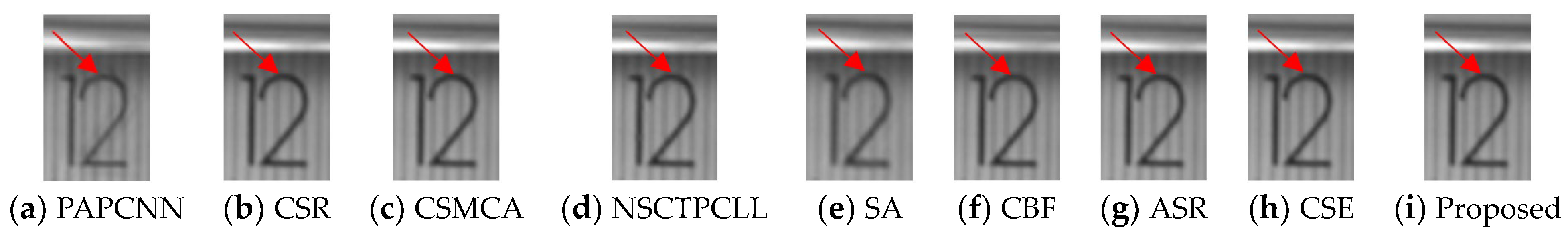
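The metric definitions appear here only as an image. As a hedged illustration, the sketch below computes two of the four tabulated metrics, where higher is better for both. SF uses the common row/column-difference form; normalisation conventions vary between papers. SCD follows the definition of Aslantas and Bendes (listed in the references): the fused image minus one source should correlate with the other source. FSIM and VIFF are considerably more involved and are not reproduced. Function names are hypothetical.

```python
import numpy as np


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from mean squared horizontal and vertical differences."""
    f = np.asarray(img, dtype=float)
    rf2 = np.mean(np.diff(f, axis=1) ** 2)  # row frequency: differences along each row
    cf2 = np.mean(np.diff(f, axis=0) ** 2)  # column frequency: differences down each column
    return float(np.sqrt(rf2 + cf2))


def scd(fused, src_a, src_b):
    """Sum of the correlations of differences between fused and source images."""
    f, a, b = (np.asarray(x, dtype=float).ravel() for x in (fused, src_a, src_b))
    d_a = f - b  # information the fused image took from source A
    d_b = f - a  # information the fused image took from source B
    return float(np.corrcoef(d_a, a)[0, 1] + np.corrcoef(d_b, b)[0, 1])
```

SCD is at most 2, and a fused image equal to the sum of the two sources reaches that bound.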

4.3. Contrast Methods

4.4. Experimental Results

4.5. More Ablation Experiments and Further Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, W.S.; Du, J.; Zhao, Z.M.; Long, J. Fusion of medical sensors using adaptive cloud model in local Laplacian pyramid domain. IEEE Trans. Biomed. Eng. 2019, 66, 1172–1183.

- Zhang, S.Q.; Li, X.F.; Zhu, R.; Zhang, X.; Wang, Z.; Zhang, S. Medical image fusion algorithm based on L0 gradient minimization for CT and MRI. Multimed. Tools Appl. 2021, 80, 21135–21164.

- Shahdoosti, H.R.; Ghassemian, H. Combining the spectral PCA and spatial PCA fusion methods by an optimal filter. Inf. Fusion 2016, 27, 150–160.

- Vijayarajan, R.; Muttan, S. Spatial weighted fuzzy c-means clustering based principal component averaging image fusion. Int. J. Tomogr. Simul. 2016, 29, 104–113.

- Juneja, S.; Anand, R. Contrast enhancement of an image by DWT-SVD and DCT-SVD. In Data Engineering and Intelligent Computing; Satapathy, S., Bhateja, V., Raju, K., Janakiramaiah, B., Eds.; Advances in Intelligent Systems and Computing; Springer: Singapore, 2017; p. 542.

- Li, X.X.; Guo, X.P.; Han, P.F.; Wang, X.; Li, H.; Luo, T. Laplacian re-decomposition for multimodal medical image fusion. IEEE Trans. Instrum. Meas. 2020, 69, 6880–6890.

- Naveenadevi, R.; Nirmala, S.; Babu, G.T. Fusion of CT-PET lungs Tumor images using dual tree complex wavelet transform. Res. J. Pharm. Biol. Chem. Sci. 2017, 8, 310–316.

- Chavan, S.S.; Mahajan, A.; Talbar, S.N.; Desai, S.; Thakur, M.; D’Cruz, A. Nonsubsampled rotated complex wavelet transform (NSRCxWT) for medical image fusion related to clinical aspects in neurocysticercosis. Comput. Biol. Med. 2017, 81, 64–78.

- Ganasala, P.; Prasad, A.D. Medical image fusion based on laws of texture energy measures in stationary wavelet transform domain. Int. J. Imaging Syst. Technol. 2020, 30, 544–557.

- Chao, Z.; Duan, X.G.; Jia, S.F.; Guo, X.; Liu, H.; Jia, F. Medical image fusion via discrete stationary wavelet transform and an enhanced radial basis function neural network. Appl. Soft Comput. 2022, 118, 108542.

- Da Cunha, A.L.; Zhou, J.; Do, M.N. The nonsubsampled contourlet transform: Theory, design, and applications. IEEE Trans. Image Process. 2006, 15, 3089–3101.

- Easley, G.; Labate, D.; Lim, W.Q. Sparse directional image representation using the discrete shearlet transforms. Appl. Comput. Harmon. Anal. 2008, 25, 25–46.

- Liu, X.B.; Mei, W.B.; Du, H.Q. Structure tensor and nonsubsampled shearlet transform based algorithm for CT and MRI image fusion. Neurocomputing 2017, 235, 131–139.

- Liu, X.B.; Mei, W.B.; Du, H.Q. Multi-modality medical image fusion based on image decomposition framework and nonsubsampled shearlet transform. Biomed. Signal Process. Control 2018, 40, 343–350.

- Yin, M.; Liu, X.N.; Liu, Y.; Chen, X. Medical image fusion with parameter-adaptive pulse coupled neural network in nonsubsampled shearlet transform domain. IEEE Trans. Instrum. Meas. 2019, 68, 49–64.

- Jose, J.; Gautam, N.; Tiwari, M.; Tiwari, T.; Suresh, A.; Sundararaj, V.; Rejeesh, M.R. An image quality enhancement scheme employing adolescent identity search algorithm in the NSST domain for multimodal medical image fusion. Biomed. Signal Process. Control 2021, 66, 102480.

- Li, H.; He, X.; Tao, D.; Tang, Y.; Wang, R. Joint medical image fusion, denoising and enhancement via discriminative low-rank sparse dictionaries learning. Pattern Recognit. 2018, 79, 130–146.

- Zhu, Z.Q.; Chai, Y.; Yin, H.P.; Li, Y.; Liu, Z. A novel dictionary learning approach for multi-modality medical image fusion. Neurocomputing 2016, 214, 471–482.

- Daniel, E. Optimum wavelet-based homomorphic medical image fusion using hybrid genetic-grey wolf optimization algorithm. IEEE Sens. J. 2018, 18, 6804–6811.

- Manchanda, M.; Sharma, R. A novel method of multimodal medical image fusion using fuzzy transform. J. Vis. Commun. Image Represent. 2016, 40, 197–217.

- Xu, X.Z.; Shan, D.; Wang, G.Y.; Jiang, X. Multimodal medical image fusion using PCNN optimized by the QPSO algorithm. Appl. Soft Comput. 2016, 46, 588–595.

- Liu, X.B.; Mei, W.B.; Du, H.Q. Multimodality medical image fusion algorithm based on gradient minimization smoothing filter and pulse coupled neural network. Biomed. Signal Process. Control 2016, 30, 140–148.

- Liu, Y.; Chen, X.; Ward, R.; Wang, Z.J. Image fusion with convolutional sparse representation. IEEE Signal Process. Lett. 2016, 23, 1882–1886.

- Liu, Y.; Chen, X.; Ward, R.; Wang, Z.J. Medical image fusion via convolutional sparsity based morphological component analysis. IEEE Signal Process. Lett. 2019, 26, 485–489.

- Li, J.; Peng, Y.X.; Song, M.H.; Liu, L. Image fusion based on guided filter and online robust dictionary learning. Infrared Phys. Technol. 2020, 105, 103171.

- Zhang, S.; Huang, F.Y.; Liu, B.Q.; Li, G.; Chen, Y.; Chen, Y.; Zhou, B.; Wu, D. A multi-modal image fusion framework based on guided filter and sparse representation. Opt. Laser Eng. 2021, 137, 106354.

- Li, X.S.; Zhou, F.Q.; Tan, H.S.; Zhang, W.; Zhao, C. Multimodal medical image fusion based on joint bilateral filter and local gradient energy. Inf. Sci. 2021, 569, 302–325.

- Zhu, Z.Q.; Zheng, M.Y.; Qi, G.Q.; Wang, D.; Xiang, Y. A phase congruency and local Laplacian energy based multi-modality medical image fusion method in NSCT domain. IEEE Access 2019, 7, 20811–20824.

- Zhu, R.; Li, X.F.; Zhang, X.L.; Wang, J. HID: The hybrid image decomposition model for MRI and CT fusion. IEEE J. Biomed. Health 2022, 26, 727–739.

- Ullah, H.; Ullah, B.; Wu, L.W.; Abdalla, F.Y.; Ren, G.; Zhao, Y. Multi-modality medical images fusion based on local-features fuzzy sets and novel sum-modified-Laplacian in non-subsampled shearlet transform domain. Biomed. Signal Process. Control 2020, 57, 101724.

- Cao, Y.; Ma, S.W.; Liu, J.J.; Liu, Y.; Zhang, X. Fusion of medical images based on salient features extraction by PSO optimized fuzzy logic in NSST domain. Biomed. Signal Process. Control 2021, 69, 102852.

- Ganasala, P.; Prasad, A.D. Contrast enhanced multi-sensor image fusion based on guided image filter and NSST. IEEE Sens. J. 2020, 20, 939–946.

- Daubechies, I.; Han, B.; Ron, A.; Shen, Z. Framelets: MRA-based constructions of wavelet frames. Appl. Comput. Harmon. Anal. 2003, 14, 1–46.

- He, K.M.; Sun, J.; Tang, X.O. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Yin, H.; Gong, Y.H.; Qiu, G.P. Side window filtering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; Volume 1, pp. 8758–8766.

- Sun, Z.G.; Han, B.; Li, J.; Zhang, J.; Gao, X. Weighted guided image filtering with steering kernel. IEEE Trans. Image Process. 2020, 29, 500–508.

- The Whole Brain Atlas. Available online: http://www.med.harvard.edu/AANLIB/home.htm (accessed on 16 March 2023).

- Han, Y.; Cai, Y.Z.; Cao, Y.; Xu, X. A new image fusion performance metric based on visual information fidelity. Inf. Fusion 2013, 14, 127–135.

- Zhang, L.; Zhang, L.; Mou, X.Q.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Aslantas, V.; Bendes, E. A new image quality metric for image fusion: The sum of the correlations of differences. AEU-Int. J. Electron. Commun. 2015, 69, 1890–1896.

- Zheng, Y.F.; Essock, E.A.; Hansen, B.C.; Haun, A.M. A new metric based on extended spatial frequency and its application to DWT based fusion algorithm. Inf. Fusion 2007, 8, 177–192.

- Li, W.; Xie, Y.G.; Zhou, H.L.; Han, Y.; Zhan, K. Structure-aware image fusion. Optik 2018, 172, 1–11.

- Kumar, B.K.S. Image fusion based on pixel significance using cross bilateral filter. Signal Image Video Process. 2015, 9, 1193–1204.

- Liu, Y.; Wang, Z.F. Simultaneous image fusion and denoising with adaptive sparse representation. IET Image Process. 2015, 9, 347–357.

- Sufyan, A.; Imran, M.; Shah, S.A.; Han, Y.; Zhan, K. A novel multimodality anatomical image fusion method based on contrast and structure extraction. Int. J. Imaging Syst. Technol. 2022, 32, 324–342.

| Pair | Metric | LRD | PAPCNN | CSR | CSMCA | NSCT PCLL | SA | CBF | ASR | CSE | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Pair I | SF | 26.8121 (9) | 29.9538 (3) | 34.6401 (1) | 28.0534 (7) | 30.0566 (2) | 28.1254 (6) | 29.7505 (4) | 27.0640 (8) | 25.1767 (10) | 29.3681 (5) |
| | FSIM | 0.9520 (2) | 0.9416 (9) | 0.9462 (6) | 0.9563 (1) | 0.9420 (8) | 0.9476 (4) | 0.9455 (7) | 0.9466 (5) | 0.9416 (9) | 0.9513 (3) |
| | SCD | 1.5737 (1) | 1.0319 (5) | 0.9752 (8) | 1.1536 (3) | 1.0360 (4) | 0.8739 (9) | 0.8270 (10) | 1.0059 (6) | 0.9889 (7) | 1.4117 (2) |
| | VIFF | 0.6033 (1) | 0.4146 (6) | 0.4240 (5) | 0.4417 (3) | 0.4251 (4) | 0.2539 (8) | 0.2238 (9) | 0.3353 (7) | 0.1860 (10) | 0.5555 (2) |
| | RANK | 13 (2nd) | 23 | 20 | 14 | 18 | 27 | 30 | 26 | 36 | 12 (1st) |
| Pair II | SF | 18.6469 (8) | 19.7244 (4) | 23.4363 (1) | 19.5172 (6) | 19.5219 (5) | 19.8635 (3) | 21.2294 (2) | 18.6255 (9) | 16.0243 (10) | 18.9910 (7) |
| | FSIM | 0.9317 (2) | 0.9295 (4) | 0.9252 (10) | 0.9300 (3) | 0.9292 (5) | 0.9269 (7) | 0.9253 (9) | 0.9266 (8) | 0.9347 (1) | 0.9285 (6) |
| | SCD | 1.7753 (3) | 1.6007 (9) | 1.6585 (6) | 1.7815 (1) | 1.6200 (8) | 1.6759 (5) | 1.6295 (7) | 1.5430 (10) | 1.7632 (4) | 1.7776 (2) |
| | VIFF | 0.5257 (3) | 0.4287 (8) | 0.5810 (2) | 0.5103 (4) | 0.4442 (7) | 0.4953 (5) | 0.4753 (6) | 0.3444 (10) | 0.4072 (9) | 0.5821 (1) |
| | RANK | 16 (2nd) | 25 | 19 | 14 (1st) | 25 | 20 | 24 | 37 | 24 | 16 (2nd) |
| Pair III | SF | 31.3911 (10) | 32.1327 (8) | 33.8108 (2) | 32.7217 (5) | 32.1942 (6) | 34.2373 (1) | 33.5904 (3) | 32.0246 (9) | 33.0751 (4) | 32.1373 (7) |
| | FSIM | 0.9005 (4) | 0.8946 (9) | 0.9088 (1) | 0.9026 (3) | 0.8943 (10) | 0.8970 (7) | 0.8989 (5) | 0.8969 (8) | 0.9046 (2) | 0.8983 (6) |
| | SCD | 1.7136 (1) | 1.3566 (5) | 0.4248 (10) | 1.4074 (3) | 1.3682 (4) | 0.9932 (9) | 1.0607 (8) | 1.3235 (6) | 1.0931 (7) | 1.6352 (2) |
| | VIFF | 0.4528 (2) | 0.4040 (4) | 0.2106 (10) | 0.2774 (5) | 0.4075 (3) | 0.2364 (9) | 0.2479 (7) | 0.2658 (6) | 0.2372 (8) | 0.5098 (1) |
| | RANK | 17 (2nd) | 26 | 23 | 16 (1st) | 23 | 26 | 23 | 29 | 21 | 16 (1st) |
| Pair IV | SF | 33.7943 (6) | 33.5398 (8) | 34.0098 (3) | 33.1408 (9) | 33.6981 (7) | 34.4743 (1) | 33.8475 (5) | 32.0853 (10) | 33.8869 (4) | 34.1113 (2) |
| | FSIM | 0.9806 (7) | 0.9813 (1) | 0.9812 (2) | 0.9807 (6) | 0.9800 (9) | 0.9809 (4) | 0.9804 (8) | 0.9809 (4) | 0.9787 (10) | 0.9810 (3) |
| | SCD | 1.5594 (1) | 1.5447 (2) | 0.6316 (10) | 0.9386 (6) | 1.4146 (3) | 0.7970 (9) | 0.9291 (7) | 0.9634 (5) | 0.8268 (8) | 1.2932 (4) |
| | VIFF | 0.7715 (2) | 0.7768 (1) | 0.7028 (6) | 0.5910 (9) | 0.7369 (4) | 0.7162 (5) | 0.7022 (7) | 0.5006 (10) | 0.6815 (8) | 0.7689 (3) |
| | RANK | 16 (2nd) | 12 (1st) | 21 | 30 | 23 | 19 | 27 | 29 | 30 | 12 (1st) |
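Every RANK entry in these tables is consistent with summing a method's per-metric ranks, where a lower total is better. Tied scores share the smaller rank; for example, PAPCNN and CSE both rank 9 for FSIM on Pair I. The sketch below recomputes that aggregation from the raw scores. The helper names are hypothetical, and the Pair I values are copied from the table. For Pair I it reproduces the tabulated totals (Proposed 12, LRD 13, CSMCA 14, and so on).

```python
import numpy as np

METHODS = ["LRD", "PAPCNN", "CSR", "CSMCA", "NSCT PCLL",
           "SA", "CBF", "ASR", "CSE", "Proposed"]


def competition_ranks(scores):
    """Rank 1 = highest score; tied scores share the better (smaller) rank."""
    s = np.asarray(scores, dtype=float)
    # rank of method i = 1 + number of methods with a strictly higher score
    return 1 + (s[None, :] > s[:, None]).sum(axis=1)


def rank_totals(metric_scores):
    """Sum each method's per-metric ranks; a lower total is better."""
    return sum(competition_ranks(v) for v in metric_scores.values())


# Pair I scores (higher is better for every metric)
PAIR_I = {
    "SF":   [26.8121, 29.9538, 34.6401, 28.0534, 30.0566, 28.1254, 29.7505, 27.0640, 25.1767, 29.3681],
    "FSIM": [0.9520, 0.9416, 0.9462, 0.9563, 0.9420, 0.9476, 0.9455, 0.9466, 0.9416, 0.9513],
    "SCD":  [1.5737, 1.0319, 0.9752, 1.1536, 1.0360, 0.8739, 0.8270, 1.0059, 0.9889, 1.4117],
    "VIFF": [0.6033, 0.4146, 0.4240, 0.4417, 0.4251, 0.2539, 0.2238, 0.3353, 0.1860, 0.5555],
}

totals = rank_totals(PAIR_I)
for name, total in sorted(zip(METHODS, totals), key=lambda t: t[1]):
    print(f"{name:10s} {total}")
```

A rank sum weights all four metrics equally and ignores how large the score gaps are, which is why methods with very different raw scores can tie (e.g., LRD and Proposed on Pair II).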

| Pair | Metric | LRD | PAPCNN | CSR | CSMCA | NSCT PCLL | SA | CBF | ASR | CSE | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Pair V | SF | 12.7985 (10) | 13.4532 (9) | 15.8582 (4) | 15.9873 (3) | 16.0194 (2) | 16.1320 (1) | 15.2466 (7) | 15.7746 (6) | 15.7830 (5) | 13.5003 (8) |
| | FSIM | 0.9896 (2) | 0.9885 (4) | 0.9869 (8) | 0.9865 (9) | 0.9859 (10) | 0.9872 (7) | 0.9890 (3) | 0.9884 (5) | 0.9880 (6) | 0.9908 (1) |
| | SCD | 0.1550 (10) | 0.2687 (9) | 0.4864 (2) | 0.4446 (6) | 0.4572 (5) | 0.4306 (7) | 0.3798 (8) | 0.4795 (3) | 0.4677 (4) | 0.5466 (1) |
| | VIFF | 0.7818 (10) | 0.8047 (9) | 0.9391 (4) | 0.9291 (5) | 0.9394 (3) | 0.9504 (1) | 0.9041 (8) | 0.9235 (6) | 0.9473 (2) | 0.9190 (7) |
| | RANK | 32 | 31 | 18 | 23 | 20 | 16 (1st) | 26 | 20 | 17 (2nd) | 17 (2nd) |
| Pair VI | SF | 20.2025 (7) | 20.6165 (6) | 23.7851 (1) | 20.8746 (5) | 21.5583 (3) | 20.9258 (4) | 22.2221 (2) | 19.9784 (8) | 18.9172 (10) | 19.4269 (9) |
| | FSIM | 0.9392 (7) | 0.9422 (3) | 0.9387 (9) | 0.9439 (2) | 0.9490 (1) | 0.9404 (6) | 0.9335 (10) | 0.9391 (8) | 0.9419 (4) | 0.9415 (5) |
| | SCD | 1.3405 (3) | 1.3159 (5) | 0.9424 (10) | 1.5042 (1) | 1.0946 (8) | 1.0309 (9) | 1.1058 (7) | 1.4101 (2) | 1.3217 (4) | 1.1503 (6) |
| | VIFF | 0.4514 (8) | 0.5622 (4) | 0.5071 (6) | 0.5910 (2) | 0.5908 (3) | 0.5461 (5) | 0.4161 (9) | 0.4134 (10) | 0.4881 (7) | 0.6608 (1) |
| | RANK | 25 | 18 | 26 | 10 (1st) | 15 (2nd) | 24 | 28 | 28 | 25 | 21 |

| LRD | PAPCNN | CSR | CSMCA | NSCT PCLL | SA | CBF | ASR | CSE | Proposed |
|---|---|---|---|---|---|---|---|---|---|
| 58.693 | 7.901 | 23.695 | 76.551 | 3.813 | 0.489 (1st) | 8.287 | 68.578 | 0.774 (2nd) | 2.458 (3rd) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kong, W.; Li, Y.; Lei, Y. Medical Image Fusion Using SKWGF and SWF in Framelet Transform Domain. Electronics 2023, 12, 2659. https://doi.org/10.3390/electronics12122659