Evaluation and Comparison of Lattice-Based Cryptosystems for a Secure Quantum Computing Era

Abstract

1. Introduction

- How much is the science of cryptography affected by quantum computers?

- Which cryptosystems are efficient and secure for the quantum era?

- Which are the best-known lattice-based cryptographic schemes and how do they function?

- How can we evaluate the NTRU, LWE, and GGH cryptosystems?

- What are their strengths and weaknesses?

2. The Evolution of Quantum Computing in Cryptography

2.1. Quantum Cryptography

2.2. Quantum Key Distribution

3. Cryptographic Schemes in Quantum Era

3.1. Code-Based Cryptosystems

3.2. Hash-Based Cryptosystems

3.3. Multivariate Cryptosystems

3.4. Lattice-Based Cryptosystems

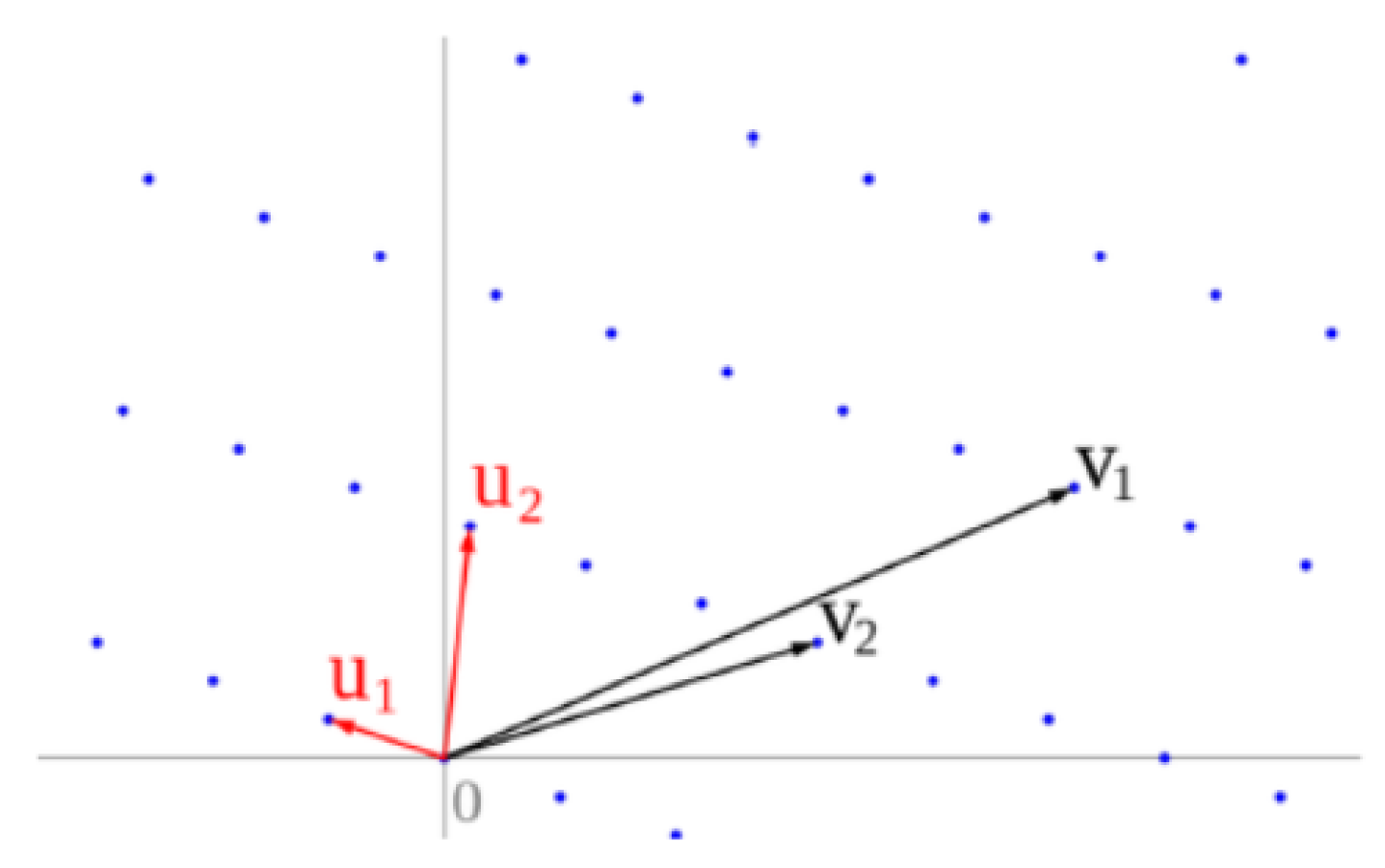

4. Lattices

4.1. Shortest Vector Problem (SVP)

4.2. Closest Vector Problem (CVP)

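| Algorithm 1 Babai’s Round-off Algorithm. |
| --- |
| Input: basis $B$ (basis vectors as columns), target vector $c$ |
| Output: approximate closest lattice point of $c$ in $L(B)$ |
| 1: procedure RoundOff($B$, $c$) |
| 2: Compute the inverse $B^{-1}$ of $B$ |
| 3: $v \leftarrow B \lfloor B^{-1} c \rceil$ |
| 4: return $v$ |
| 5: end procedure |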
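The round-off procedure can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `coordinates` and `babai_round_off`, the two example bases, and the target vector are all assumptions chosen for the demonstration. The target is expressed in the given basis with exact rational arithmetic, each coordinate is rounded to the nearest integer, and the result is mapped back to a lattice point.

```python
from fractions import Fraction

def coordinates(basis, c):
    """Return t with sum(t[i] * basis[i]) == c, via exact Gauss-Jordan elimination."""
    n = len(basis)
    # Augmented matrix [B | c], with the basis vectors as the columns of B.
    M = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(c[i])] for i in range(n)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [x / M[col][col] for x in M[col]]
        for r in range(n):
            if r != col:
                factor = M[r][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][n] for i in range(n)]

def babai_round_off(basis, c):
    """Round the coordinates of c in the given basis and return that lattice point."""
    n = len(basis)
    k = [round(t) for t in coordinates(basis, c)]
    return [sum(k[j] * basis[j][i] for j in range(n)) for i in range(n)]

# Two bases of the same lattice (illustrative values, not from the paper).
good = [[7, 0], [0, 5]]        # orthogonal basis
bad = [[14, 15], [21, 25]]     # nearly parallel basis
target = [8, 4]                # lattice point (7, 5) plus a small offset

print(babai_round_off(good, target))  # [7, 5]
print(babai_round_off(bad, target))   # [0, -5]
```

With the orthogonal basis the rounding recovers the nearby lattice point, while the nearly parallel basis of the same lattice returns a point that is far from the target. This difference between good and bad bases is the property that lattice-based schemes such as GGH rely on.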

4.3. Lattice Reduction

5. The NTRU Cryptosystem

5.1. Description

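- Alice randomly chooses two polynomials $f \in \mathcal{T}(d+1, d)$ and $g \in \mathcal{T}(d, d)$, i.e., ternary polynomials with the stated numbers of coefficients equal to $1$ and $-1$. These two polynomials are Alice’s private key.

- Alice computes the inverse polynomials $F_q = f^{-1}$ in $R_q$ and $F_p = f^{-1}$ in $R_p$, where $R_q = \mathbb{Z}_q[x]/(x^N - 1)$ and $R_p = \mathbb{Z}_p[x]/(x^N - 1)$.

- Alice computes $h = F_q \star g$ in $R_q$, and the polynomial $h$ is Alice’s public key. Alice’s private key is the pair $(f, F_p)$, and only with this key can she decrypt messages. Alternatively, she can store only $f$ and compute $F_p$ when she needs it. Alice publishes her key $h$.

- Bob wants to encrypt a message and chooses his plaintext $m \in R_p$. The plaintext $m$ is a polynomial with coefficients $m_i$ such that $-\frac{p}{2} < m_i \le \frac{p}{2}$.

- Bob chooses a random polynomial $r \in \mathcal{T}(d, d)$, which is called the ephemeral key, and computes $e \equiv p\, h \star r + m \pmod{q}$; this is the encrypted message that Bob sends to Alice.

- Alice computes $a \equiv f \star e \equiv p\, g \star r + f \star m \pmod{q}$.

- Alice chooses the coefficients of $a$ in the interval from $-\frac{q}{2}$ to $\frac{q}{2}$ (she center-lifts $a$ to an element of $R$).

- Alice computes $b \equiv F_p \star a \pmod{p}$ and recovers the message $m$: if the parameters have been chosen correctly, the polynomial $b$ equals the plaintext $m$.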
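The key generation, encryption and decryption steps above can be sketched as a toy Python program. This is an illustration under stated assumptions rather than a reference implementation: the parameters $(N, p, q, d) = (7, 3, 41, 2)$ are chosen only so that $q > (6d+1)p$ holds and are far too small to be secure, all function names are hypothetical, and the inversion routine assumes that both $p$ and $q$ are prime.

```python
import random

def trim(a):
    """Drop trailing zero coefficients in place (coefficients are lowest degree first)."""
    while a and a[-1] == 0:
        a.pop()
    return a

def conv(a, b, N, mod):
    """Product of a and b in Z_mod[x]/(x^N - 1), i.e. a cyclic convolution."""
    c = [0] * N
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            c[(i + j) % N] = (c[(i + j) % N] + ai * bj) % mod
    return c

def poly_divmod(a, b, p):
    """Quotient and remainder of a by b over GF(p); b must be trimmed and non-zero."""
    a = a[:]
    quo = [0] * max(len(a) - len(b) + 1, 1)
    lead_inv = pow(b[-1], -1, p)
    while len(a) >= len(b):
        shift = len(a) - len(b)
        coef = a[-1] * lead_inv % p
        quo[shift] = coef
        for i, bi in enumerate(b):
            a[shift + i] = (a[shift + i] - coef * bi) % p
        trim(a)
    return quo, a

def invert(f, N, p):
    """Inverse of f in Z_p[x]/(x^N - 1) for prime p (extended Euclid), or None."""
    r0 = [(-1) % p] + [0] * (N - 1) + [1]          # x^N - 1
    r1 = trim([c % p for c in f])
    t0, t1 = [0] * N, [1] + [0] * (N - 1)          # invariant: r_i = t_i * f
    while r1:
        quo, rem = poly_divmod(r0, r1, p)
        r0, r1 = r1, rem
        t0, t1 = t1, [(x - y) % p for x, y in zip(t0, conv(quo, t1, N, p))]
    if len(r0) != 1:                               # gcd is not a constant
        return None
    g = pow(r0[0], -1, p)
    return [c * g % p for c in t0]

def center(a, mod):
    """Center-lift coefficients to representatives around zero."""
    return [((x + mod // 2) % mod) - mod // 2 for x in a]

def ternary(N, ones, minus_ones, rng):
    coeffs = [1] * ones + [-1] * minus_ones + [0] * (N - ones - minus_ones)
    rng.shuffle(coeffs)
    return coeffs

def keygen(N, p, q, d, rng):
    while True:                                    # retry until f is invertible
        f = ternary(N, d + 1, d, rng)
        Fq, Fp = invert(f, N, q), invert(f, N, p)
        if Fq is not None and Fp is not None:
            break
    g = ternary(N, d, d, rng)
    return (f, Fp), conv(Fq, g, N, q)              # private (f, F_p), public h

def encrypt(m, h, N, p, q, d, rng):
    r = ternary(N, d, d, rng)                      # ephemeral key
    return [(p * x + mi) % q for x, mi in zip(conv(r, h, N, q), m)]

def decrypt(e, f, Fp, N, p, q):
    a = center(conv(f, e, N, q), q)
    return center(conv(Fp, a, N, p), p)

rng = random.Random(0)
N, p, q, d = 7, 3, 41, 2                           # toy parameters (assumption)
(f, Fp), h = keygen(N, p, q, d, rng)
m = [1, -1, 1, 1, 0, -1, 0]
assert decrypt(encrypt(m, h, N, p, q, d, rng), f, Fp, N, p, q) == m
```

Decryption succeeds because every coefficient of $p\, g \star r + f \star m$ stays below $q/2$ in absolute value for these parameters, so the center-lift in `decrypt` recovers it exactly before the reduction modulo $p$.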

5.2. Discrete Implementation

- Assume the trusted party chooses the parameters . As we can see, and are prime numbers, and the condition is satisfied as it is .

- Alice chooses the polynomialsThese polynomials, are the private key of Alice.

- Alice computes the inversesAlice can store as her private key.

- Alice computesand publishes her public key .

- Bob decides to encrypt the message and uses the ephemeral key .

- Bob computes and sends to Alice the encrypted messagethat is

- Alice receives the ciphertext and computes

- Therefore, Alice centerlifts modulo 61 to obtain

- She reduces modulo 3 and computesand recovers Bob’s message

5.3. Security

- Brute-Force Attack. In this type of attack, all possible values of the private key are tested until the correct one is found. Brute-force attacks are generally not practical for NTRU, as the size of the key space is very large [50].

- Key Recovery Attack. This type of attack exploits vulnerabilities in the key-generation process of NTRU. For example, if the random number generator used to create the private key is weak, an attacker may be able to recover the private key [51].

6. The LWE Cryptosystem

6.1. The Learning with Errors Problem

6.2. Description

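- Choose $q$, a prime number between $n^2$ and $2n^2$.

- Let $m = (1 + \varepsilon)(n + 1)\log q$ for some arbitrary constant $\varepsilon > 0$.

- The probability distribution $\chi$ is chosen to be $\bar{\Psi}_{\alpha(n)}$ for $\alpha(n) = o\left(1/(\sqrt{n}\log n)\right)$.

- Alice chooses $s \in \mathbb{Z}_q^n$ uniformly at random; $s$ is the private key.

- Alice generates a public key by choosing $m$ vectors $a_1, \dots, a_m \in \mathbb{Z}_q^n$ independently from the uniform distribution. She also chooses elements (error offsets) $e_1, \dots, e_m \in \mathbb{Z}_q$ independently according to $\chi$. The public key is $(a_i, b_i)_{i=1}^{m}$, where $b_i = \langle a_i, s \rangle + e_i$. In matrix form, the public key is the LWE sample $(A, b = As + e)$, where $s$ is the secret vector.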

- Bob, in order to encrypt a bit, chooses a random set $S$ uniformly among all $2^m$ subsets of $\{1, \dots, m\}$. The encryption is $\left(\sum_{i \in S} a_i, \sum_{i \in S} b_i\right)$ if the bit is 0 and $\left(\sum_{i \in S} a_i, \lfloor q/2 \rfloor + \sum_{i \in S} b_i\right)$ if the bit is 1. In matrix form, Bob can encrypt a bit m by calculating two LWE problems: one using A as the random public element, and one using b. Bob generates his own secret vectors and e and makes the LWE samples , . Bob has to add the message that he wants to encrypt to one of these samples, where is a random integer between 0 and q. The encrypted message of Bob consists of the two samples , .

- Alice wants to decrypt Bob’s ciphertext. The decryption of a pair $(a, b)$ is 0 if $b - \langle a, s \rangle$ is closer to 0 than to $\lfloor q/2 \rfloor$ modulo $q$. Otherwise, the decryption is 1. In matrix form, Alice first calculates . As long as is small enough, Alice recovers the message as .
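The subset-sum form of the scheme can be sketched in Python as follows. This is a toy illustration with assumed parameters ($n = 4$, $q = 23$, $m = 5$), and it replaces the error distribution $\chi$ with a uniform choice from $\{-1, 0, 1\}$, which is a simplification made only so that the accumulated error provably stays below $q/4$. The function names are hypothetical.

```python
import random

def keygen(n, m, q, rng):
    """Private key s and public key (A, b) with b_i = <a_i, s> + e_i mod q."""
    s = [rng.randrange(q) for _ in range(n)]
    A = [[rng.randrange(q) for _ in range(n)] for _ in range(m)]
    # Simplified error distribution (assumption): uniform on {-1, 0, 1}.
    e = [rng.choice([-1, 0, 1]) for _ in range(m)]
    b = [(sum(x * y for x, y in zip(row, s)) + ei) % q for row, ei in zip(A, e)]
    return s, (A, b)

def encrypt(bit, pk, q, rng):
    """Sum a uniformly random subset of the samples; add floor(q/2) for bit 1."""
    A, b = pk
    S = [i for i in range(len(A)) if rng.random() < 0.5]
    a_sum = [sum(A[i][j] for i in S) % q for j in range(len(A[0]))]
    b_sum = (sum(b[i] for i in S) + bit * (q // 2)) % q
    return a_sum, b_sum

def decrypt(ct, s, q):
    """Return 0 if b - <a, s> is closer to 0 than to floor(q/2) modulo q, else 1."""
    a, b = ct
    d = (b - sum(x * y for x, y in zip(a, s))) % q
    half = q // 2
    dist_to_zero = min(d, q - d)
    dist_to_half = min((d - half) % q, (half - d) % q)
    return 0 if dist_to_zero < dist_to_half else 1

rng = random.Random(0)
n, m, q = 4, 5, 23                      # toy parameters (assumption)
s, pk = keygen(n, m, q, rng)
for bit in (0, 1):
    assert decrypt(encrypt(bit, pk, q, rng), s, q) == bit
```

With at most five error terms of absolute value one, the quantity $b - \langle a, s \rangle$ differs from $0$ or $\lfloor q/2 \rfloor = 11$ by at most 5, so the two cases never overlap modulo 23 and decryption is always correct in this toy setting.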

6.3. Discrete Implementation

- Alice chooses the private key .

- Let so Alice generates the public key with the aid of three vectors and three elements (error terms). She chooses: and , and and and . Therefore, Alice’s public key is:

- Bob wants to encrypt 0 so he takes the subset . So, he computes

- Alice performs the decryption algorithm by computingand obviously the decryption is 0 since the output value is closer to 0 (in this case equal to 0) than to modulo 13.

6.4. Implementations and Variants

The RING-LWE Cryptosystem

- A secret $s$ of length $n$ is chosen, with integer entries modulo $q$, as an element of the ring $R_q = \mathbb{Z}_q[x]/(x^n + 1)$, where $n$ is a power of 2. This is the private key of the system.

- An element $a \in R_q$ is chosen, together with a random small element $e$ from the error distribution, and we compute $b = a \cdot s + e$. The public key of the system is the pair $(a, b)$.

- Let $m$ be the $n$-bit message that is meant for encryption.

- The message $m$ is considered an element of $R$, and its bits are used as the coefficients of a polynomial of degree less than $n$.

- The elements $e_1, e_2, r$ are generated from the error distribution.

- The element $u = a \cdot r + e_1$ is computed.

- The element $v = b \cdot r + e_2 + \lfloor q/2 \rfloor \cdot m$ is computed, and the pair $(u, v)$ is sent to the receiver.

- The second party receives the payload $(u, v)$ and computes $v - u \cdot s = (r \cdot e - s \cdot e_1 + e_2) + \lfloor q/2 \rfloor \cdot m$. Each coefficient is evaluated: if it is closer to $\lfloor q/2 \rfloor$ than to 0, the corresponding bit is recovered as 1, or else as 0.

The Ring-LWE cryptographic scheme is similar to the LWE cryptosystem that was proposed by Regev. Their difference is that the inner products are replaced with ring products, so the scheme works over a ring structure, which increases the efficiency of the operations.
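A compact Python sketch of this kind of Ring-LWE encryption is given below. It makes several assumptions that should be read as illustrative only: the ring is taken to be $\mathbb{Z}_q[x]/(x^n + 1)$ with $n = 8$ and $q = 257$, small elements are drawn uniformly from $\{-1, 0, 1\}$ instead of a proper error distribution, and the function names are hypothetical.

```python
import random

def ring_mul(a, b, n, q):
    """Product in Z_q[x]/(x^n + 1): a negacyclic convolution (x^n wraps to -1)."""
    c = [0] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            if k < n:
                c[k] = (c[k] + ai * bj) % q
            else:
                c[k - n] = (c[k - n] - ai * bj) % q
    return c

def small(n, rng):
    """Small ring element; uniform on {-1, 0, 1} is a simplifying assumption."""
    return [rng.choice([-1, 0, 1]) for _ in range(n)]

def keygen(n, q, rng):
    a = [rng.randrange(q) for _ in range(n)]
    s, e = small(n, rng), small(n, rng)
    b = [(x + y) % q for x, y in zip(ring_mul(a, s, n, q), e)]
    return s, (a, b)                      # private key s, public key (a, b)

def encrypt(bits, pk, n, q, rng):
    a, b = pk
    r, e1, e2 = small(n, rng), small(n, rng), small(n, rng)
    u = [(x + y) % q for x, y in zip(ring_mul(a, r, n, q), e1)]
    v = [(x + y + (q // 2) * m) % q
         for x, y, m in zip(ring_mul(b, r, n, q), e2, bits)]
    return u, v

def decrypt(ct, s, n, q):
    u, v = ct
    d = [(x - y) % q for x, y in zip(v, ring_mul(u, s, n, q))]
    # A coefficient near q/2 decodes to 1, a coefficient near 0 (or q) to 0.
    return [1 if q // 4 < x < 3 * q // 4 else 0 for x in d]

rng = random.Random(0)
n, q = 8, 257                             # toy parameters (assumption)
s, pk = keygen(n, q, rng)
message = [1, 0, 1, 1, 0, 0, 1, 0]
assert decrypt(encrypt(message, pk, n, q, rng), s, n, q) == message
```

For these parameters the noise term $r \cdot e - s \cdot e_1 + e_2$ has coefficients of absolute value at most $2n + 1 = 17$, well below $q/4$, so every bit decodes correctly. Each ring product replaces $n$ separate inner products of plain LWE, which is where the efficiency gain comes from.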

6.5. Security

- Dual Attack. This type of attack is based on the dual lattice and is most effective against LWE instances with small secrets. Hybrid dual attacks are therefore appropriate for sparse and small secrets: in a hybrid attack, one guesses part of the secret and performs a lattice attack on the remaining part [58]. The cost of attacking the remaining portion of the secret decreases because guessing reduces the problem’s size. Additionally, the lattice attack component can be reused for multiple guesses. When the lattice attack component is a primal attack or a dual attack, we call the result a hybrid primal attack or a hybrid dual attack, respectively, and the optimal attack is achieved when the cost of guessing is equal to the cost of the lattice attack.

- Sieving Attack. This type of attack relies on the idea of sieving, which aims to find linear combinations of the LWE samples that reveal information about the secret. Sieving attacks can be used to solve the LWE problem with fewer samples than its original complexity.

- Algebraic Attack. This type of attack is based on the idea of finding algebraic relations between the LWE samples that leak information about the secret. Algebraic attacks can also be suitable for solving the LWE problem with fewer samples than the original complexity.

- Side-Channel Attack. This type of attack exploits weaknesses in the implementation of the LWE-based scheme, for example through timing measurements. Side-channel attacks are generally easier to mount than attacks against the LWE problem itself, but they often require physical access to the device running the implementation.

- Attacks that use the BKW algorithm. This is a classic attack; it is considered to be sub-exponential and is most effective against small or small-structured LWE instances.

7. The GGH Cryptosystem

7.1. Description

- The key generation algorithm K generates a lattice L by choosing a basis matrix $V$ that is nearly orthogonal. An integer matrix $U$ with determinant $\pm 1$ is chosen, and the algorithm computes $W = UV$. Then, the algorithm outputs the encryption key $W$ and the decryption key $V$.

- The encryption algorithm E receives as input an encryption key and a plain message . It chooses a random vector and a random noise vector u. Then it computes , and encrypts the message . It outputs the ciphertext .

- The decryption algorithm D takes as input a decryption key and a ciphertext . It computes and and decrypts as . If algorithm outputs the symbol ⊥ the decryption fails and then D outputs ⊥, otherwise the algorithm outputs m.

- Alice chooses a set of linearly independent vectors $v_1, \dots, v_n \in \mathbb{Z}^n$, which form the rows of the matrix $V$. Alice checks her choice of vectors by calculating the Hadamard ratio of the matrix $V$ and verifying that it is not too small. This is Alice’s private key, and we let $L$ be the lattice generated by these vectors.

- Alice chooses a unimodular matrix $U$ with integer coefficients, i.e., one that satisfies $\det(U) = \pm 1$.

- Alice computes a bad basis $w_1, \dots, w_n$ for the lattice $L$, as the rows of $W = UV$, and this is Alice’s public key. Then, she publishes the key $W$.

- Bob encodes the plaintext that he wants to encrypt as a small vector $m$ (e.g., a binary vector). Then, he chooses a small random “noise” vector $r$, which acts as a random element; the coordinates of $r$ are chosen randomly between $-\delta$ and $\delta$, where $\delta$ is a fixed public parameter.

- Bob computes the vector $e = mW + r$ using Alice’s public key and sends the ciphertext $e$ to Alice.

- Alice, with the aid of Babai’s algorithm, uses the good basis $v_1, \dots, v_n$ to find a vector in $L$ that is close to $e$. This vector is $mW$, since the “noise” vector $r$ is small and since she uses a good basis. Then, she computes $(mW)W^{-1}$ and recovers $m$.
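The GGH workflow can be illustrated with a small two-dimensional Python sketch. All concrete values here (the private basis, the unimodular matrix, the message and the noise vector) are assumptions picked for the illustration, the function names are hypothetical, and a real instance would use a lattice of much higher dimension. Vectors are treated as rows, so a lattice point is written as $xB$.

```python
from fractions import Fraction
import math

def row_times(x, M):
    """Row vector times 2x2 matrix: x M."""
    return [sum(x[i] * M[i][j] for i in range(2)) for j in range(2)]

def mat_mul(A, B):
    return [row_times(row, B) for row in A]

def inverse2(M):
    """Exact inverse of a 2x2 integer matrix."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[Fraction(M[1][1], det), Fraction(-M[0][1], det)],
            [Fraction(-M[1][0], det), Fraction(M[0][0], det)]]

def hadamard_ratio(B):
    """(|det B| / (|b1| |b2|))^(1/2); values near 1 indicate a nearly orthogonal basis."""
    det = abs(B[0][0] * B[1][1] - B[0][1] * B[1][0])
    return (det / (math.hypot(*B[0]) * math.hypot(*B[1]))) ** 0.5

def babai(e, B):
    """Round-off: express e in basis B, round the coordinates, map back to the lattice."""
    k = [round(t) for t in row_times(e, inverse2(B))]
    return row_times(k, B)

def encrypt(m, W, r):
    return [x + y for x, y in zip(row_times(m, W), r)]

def decrypt(e, V, W):
    v = babai(e, V)                                  # closest lattice point via good basis
    return [int(x) for x in row_times(v, inverse2(W))]

V = [[7, 0], [0, 5]]          # private, orthogonal basis (illustrative)
U = [[2, 3], [3, 5]]          # unimodular: det = 2*5 - 3*3 = 1
W = mat_mul(U, V)             # public basis [[14, 15], [21, 25]]

m, r = [1, 1], [1, -1]        # message and small noise (illustrative)
e = encrypt(m, W, r)          # [36, 39]
print(decrypt(e, V, W))       # [1, 1]

# Rounding with the public basis alone gives the wrong coordinates here.
print([round(t) for t in row_times(e, inverse2(W))])  # [2, 0]
```

In this example the Hadamard ratio of `V` is exactly 1, while that of `W` is roughly 0.23, which matches the intuition that the public basis is much further from orthogonal than the private one even though both generate the same lattice.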

7.2. Discrete Implementation

- Alice chooses a private basis and which is a good basis since and are orthogonal vectors, e.g., it is . The rows of the matrix are Alice’s private key. The lattice L spanned by and has determinant and the Hadamard ratio of the basis is

- Alice chooses the unimodular matrix U that its determinant is equal to 1, such that with .

- Alice computes the matrix W, such that . Its rows are Alice’s bad basis and , since it is and these vectors are nearly parallel, so they are suitable for a public key.

- It is very important for the noise vector to be selected carefully and that it is not shifted where the nearest point is located. For Alice’s basis that generates the lattice L, is chosen that . So, the vector is chosen to be () with and .

- Bob wants to encrypt the message . The message can be seen as a linear combination of the basis , such as and the noise vector can be added.

- The corresponding ciphertext is and Bob sends it to Alice.

- Alice, using the private basis, applies Babai’s algorithm and finds the closest lattice point. So, she solves the equation and finds and . So, the closest lattice point is and this lattice vector is close to e.

- Alice realizes that Bob must have computed as a linear combination of the public basis vectors and then solving the linear combination again , she finds and and recovers the message .

7.3. Security

- Leak information and obtain the private key $V$ from the public key $W$. For this type of attack, a lattice basis reduction algorithm (such as LLL) is performed on the public key, the matrix $W$. It is possible that the output is a basis that is good enough to enable the effective solution of the necessary instances of the closest vector problem. It will be extremely difficult for this attack to succeed if the dimension of the lattice is sufficiently large.

- Assuming we have a small error vector $r$, try to extract information about the message from the ciphertext $e$. This type of attack uses the fact that, in the ciphertext $e = mW + r$, the error vector $r$ has small entries. An idea is to compute $eW^{-1} = m + rW^{-1}$ and try to deduce possible values for some entries of $rW^{-1}$. For example, if the j-th column of $W^{-1}$ has a particularly small norm, then one can deduce that the j-th entry of $rW^{-1}$ is always small and hence get an accurate estimate for the j-th entry of $m$. To defeat this attack, one should only use some low-order bits of some entries of $m$ to carry information, or use an appropriate randomized padding scheme.

- Try to solve the Closest Vector Problem for $e$ with respect to the lattice generated by $W$, for example, by applying Babai’s nearest plane algorithm or the embedding technique.

8. Evaluation, Comparison and Discussion

9. Lattice-Based Cryptographic Implementations and Future Research

- CRYSTALS-Kyber. This cryptographic scheme was selected by NIST for general encryption and is based on the module Learning with Errors problem. CRYSTALS-Kyber is similar to the Ring-LWE cryptographic scheme, but it is considered to be more secure and flexible. The communicating parties can use small encrypted keys and exchange them easily and at high speed.

- CRYSTALS-Dilithium. This algorithm is recommended for digital signatures and bases its security on the difficulty of lattice problems over module lattices. Like other digital signature schemes, the Dilithium signature scheme allows a sender to sign a message with their private key, and a recipient uses the sender’s public key to verify the signature. Dilithium has the smallest combined public key and signature size of any lattice-based signature scheme that only uses uniform sampling.

- FALCON. FALCON is a cryptographic protocol that is proposed for digital signatures. The FALCON cryptosystem is based on the theoretical framework of Gentry et al. [80]. It is a promising post-quantum algorithm, as it provides quick signature generation and verification. The FALCON cryptographic algorithm has strong advantages such as security, compactness, speed, scalability, and RAM economy.

- SPHINCS+. SPHINCS+ is the third digital signature algorithm that was selected by NIST. SPHINCS+ uses hash functions, and its signatures are considered to be somewhat larger and slower than those of FALCON and Dilithium. It is regarded as an improvement of the SPHINCS signature scheme, which was presented in 2015, as it reduces the size of the signature. One of the key advantages of SPHINCS+ over other signature schemes is that its resistance to quantum attacks depends only on the hardness of inverting a one-way function.

10. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Sabani, M.; Savvas, I.K.; Poulakis, D.; Makris, G. Quantum Key Distribution: Basic Protocols and Threats. In Proceedings of the 26th Pan-Hellenic Conference on Informatics (PCI 2022), Athens, Greece, 25–27 November 2022; ACM: New York, NY, USA, 2022; pp. 383–388.

- Nielsen, M.; Chuang, I. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2011.

- Sabani, M.; Savvas, I.K.; Poulakis, D.; Makris, G.; Butakova, M. The BB84 Quantum Key Protocol and Potential Risks. In Proceedings of the 8th International Congress on Information and Communication Technology (ICICT 2023), London, UK, 20–23 February 2023.

- Preskill, J. Quantum computing and the entanglement frontier. In Proceedings of the 25th Solvay Conference on Physics, Brussels, Belgium, 19–25 October 2011; Available online: https://arxiv.org/abs/1203.5813 (accessed on 26 March 2012).

- Poulakis, D. Cryptography, the Science of Secure Communication, 1st ed.; Ziti Publications: Thessaloniki, Greece, 2004.

- Shor, P.W. Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM J. Comput. 1997, 26, 1484–1509.

- Alkim, E.; Ducas, L.; Pöppelmann, T.; Schwabe, P. Post-Quantum Key Exchange—A New Hope. In Proceedings of the USENIX Security Symposium 2016, Austin, TX, USA, 10–12 August 2016; Available online: https://eprint.iacr.org/2015/1092.pdf (accessed on 11 November 2015).

- Bernstein, D.J.; Buchmann, J.; Brassard, G.; Vazirani, U. Post-Quantum Cryptography; Springer: Berlin/Heidelberg, Germany, 2009.

- Zheng, Z.; Tian, K.; Liu, F. Modern Cryptography Volume 2 a Classical Introduction to Informational and Mathematical Principle; Springer: Singapore, 2023.

- Silverman, J.H.; Pipher, J.; Hoffstein, J. An Introduction to Mathematical Cryptography, 1st ed.; Springer: New York, NY, USA, 2008.

- Galbraith, S. Mathematics of Public Key Cryptography; Cambridge University Press: Cambridge, UK, 2012.

- Kannan, R. Algorithmic Geometry of Numbers. In Annual Reviews of Computer Science; Annual Review Inc.: Palo Alto, CA, USA, 1987; pp. 231–267.

- Rivest, R.L.; Shamir, A.; Adleman, L. A Method for Obtaining Digital Signatures and Public-Key Cryptosystems. Commun. ACM 1978, 21, 120–126.

- Sabani, M.; Galanis, I.P.; Savvas, I.K.; Garani, G. Implementation of Shor’s Algorithm and Some Reliability Issues of Quantum Computing Devices. In Proceedings of the 25th Pan-Hellenic Conference on Informatics (PCI 2021), Volos, Greece, 26–28 November 2021; ACM: New York, NY, USA, 2021; pp. 296–392.

- Wiesner, S. Conjugate coding. Sigact News 1983, 15, 78–88.

- Van Assche, G. Quantum Cryptography and Secret-Key Distillation, 3rd ed.; Cambridge University Press: New York, NY, USA, 2006.

- Scherer, W. Mathematics of Quantum Computing, An Introduction; Springer: Berlin/Heidelberg, Germany, 2019.

- Bennett, C.H.; Brassard, G.; Ekert, A. Quantum cryptography. Sci. Am. 1992, 50–57. Available online: https://www.jstor.org/stable/e24939235 (accessed on 2 October 1992).

- Bennett, C.H.; Brassard, G.; Breidbart, S.; Wiesner, S. Quantum cryptography, or Unforgeable subway tokens. In Advances in Cryptology; Conference Paper; Springer Science + Business Media: New York, NY, USA, 1982; pp. 267–275.

- Bennett, C.H.; Brassard, G. Quantum Cryptography: Public Key Distribution and Coin Tossing. In Proceedings of the International Conference in Computer Systems and Signal Processing, Bangalore, India, 10–12 December 1984.

- Teklu, B. Continuous-variable entanglement dynamics in Lorenzian environment. Phys. Lett. A 2022, 432, 128022.

- Vasile, R.; Olivares, S.; Paris, M.G.A.; Maniscalco, S. Continuous variable quantum key distribution in non-Markovian channels. Phys. Rev. A 2011, 83, 042321.

- Teklu, B.; Bina, M.; Paris, M.G.A. Noisy propagation of Gaussian states in optical media with finite bandwidth. Sci. Rep. 2022, 12, 11646. Available online: https://www.nature.com/articles/s41598-022-15865-5 (accessed on 8 June 2023).

- Adnane, H.; Teklu, B.; Paris, M.G. Quantum phase communication assisted by non-deterministic noiseless amplifiers. J. Opt. Soc. Am. B 2019, 36, 2938–2945.

- Teklu, B.; Trapani, J.; Olivares, S.; Paris, M.G.A. Noisy quantum phase communication channels. Phys. Scr. 2015, 90, 074027.

- Trapani, J.; Teklu, B.; Olivares, S.; Paris, M.G.A. Quantum phase communication channels in the presence of static and dynamical phase diffusion. Phys. Rev. A 2015, 92, 012317.

- Diffie, W.; Hellman, M. New Directions in Cryptography. IEEE Trans. Inf. Theory 1976, 22, 644–654.

- Trappe, W.; Washington, L.C. Introduction to Cryptography with Coding Theory; Pearson Education: New York, NY, USA, 2006.

- McEliece, R. A public key cryptosystem based on algebraic coding theory. DSN Prog. Rep. 1978, 42–44, 114–116.

- Niederreiter, H. Knapsack-type cryptosystems and algebraic coding theory. Probl. Control Inf. Theory Probl. Upr. I Teor. Inf. 1986, 15, 159–166.

- Merkle, R. A certified digital signature. In Advances in Cryptology—CRYPTO’89, Proceedings of the CRYPTO ’89, 9th Annual International Cryptology Conference, Santa Barbara, California, USA, 20–24 August 1989; Springer: Berlin/Heidelberg, Germany, 1989; pp. 218–238.

- Bai, S.; Gong, Z.; Hu, L. Revisiting the Security of Full Domain Hash. In Proceedings of the 6th International Conference on Security, Privacy and Anonymity in Computation, Communication and Storage, Nanjing, China, 18–20 December 2013.

- Matsumoto, T.; Imai, H. Public quadratic polynomial-tuples for efficient signature verification and message encryption. Adv. Cryptol. Eur. Crypt’88 1988, 330, 419–453.

- Patarin, J. Hidden field equations and isomorphism of polynomials. In Proceedings of the EUROCRYPT ’96, Zaragoza, Spain, 12–16 May 1996.

- Nguyen, P.Q.; Stern, J. The two faces of Lattices in Cryptology. In Proceedings of the International Cryptography and Lattices Conference, Rhode, RI, USA, 29–30 March 2001; pp. 146–180.

- Micciancio, D.; Regev, O. Lattice-based cryptography. In Post-Quantum Cryptography; Springer: Berlin/Heidelberg, Germany, 2009.

- Lyubashevsky, V. A Decade of Lattice Cryptography. In Advances in Cryptology—EUROCRYPT 2015; Springer: Berlin/Heidelberg, Germany, 2015.

- Peikert, C. Lattice-Based Cryptography: A Primer. IACR Cryptol. ePrint Arch. 2016. Available online: https://eprint.iacr.org/2015/939.pdf (accessed on 17 February 2016).

- Micciancio, D. On the Hardness of the Shortest Vector Problem. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1998.

- Micciancio, D. The shortest vector problem is NP-hard to approximate within some constant. In Proceedings of the 39th FOCS IEEE, Palo Alto, CA, USA, 8–11 November 1998.

- Babai, L. On Lovasz’ lattice reduction and the nearest lattice point problem. Combinatorica 1986, 6, 1–13.

- Micciancio, D. The hardness of the closest vector problem with preprocessing. IEEE Trans. Inform. Theory 2001, 47, 1212–1215.

- Lenstra, A.K.; Lenstra, H.W., Jr.; Lovasz, L. Factoring polynomials with rational coefficients. Math. Ann. 1982, 261, 513–534.

- Hoffstein, J.; Pipher, J.; Silverman, J. NTRU: A ring-based public key cryptosystem. In Algorithmic Number Theory (Lecture Notes in Computer Science); Springer: New York, NY, USA, 1998; Volume 1423, pp. 267–288.

- Faugère, J.C.; Otmani, A.; Perret, L.; Tillich, J.P. On the Security of NTRU Encryption. In Advances in Cryptology—EUROCRYPT 2010; Springer: Berlin/Heidelberg, Germany, 2010.

- Lyubashevsky, V.; Peikert, C.; Regev, O. On Ideal Lattices and Learning with Errors over Rings. J. ACM 2013, 60, 43:1–43:35.

- Albrecht, M.; Ducas, L. Lattice Attacks on NTRU and LWE: A History of Refinements; Cambridge University Press: Cambridge, UK, 2021.

- Ashur, T.; Tromer, E. Key Recovery Attacks on NTRU and Schnorr Signatures with Partially Known Nonces. In Proceedings of the 38th Annual International Cryptology Conference, Santa Barbara, CA, USA, 19–23 August 2018.

- Coppersmith, D.; Shamir, A. Lattice attacks on NTRU. In Advances in Cryptology—EUROCRYPT’97; Springer: Berlin/Heidelberg, Germany, 1997.

- Buchmann, J.; Dahmen, E.; Vollmer, U. Cryptanalysis of the NTRU Signature Scheme. In Proceedings of the 6th IMA International Conference on Cryptography and Coding, Cirencester, UK, 17–19 December 1997.

- Singh, S.; Padhye, S. Cryptanalysis of NTRU with n public keys. IEEE. 2017. Available online: https://ieeexplore.ieee.org/document/7976980 (accessed on 13 July 2017).

- May, A.; Peikert, C. Lattice Reduction and NTRU. In Proceedings of the 46th Annual IEEE Symposium on Foundations of Computer Science, Pittsburgh, PA, USA, 23–25 October 2005.

- Buchmann, J.; Dahmen, E.; Hulsing, A. XMSS - A Practical Forward Secure Signature Scheme Based on Minimal Security Assumptions. In Post-Quantum Cryptography; Springer: Berlin/Heidelberg, Germany, 2011.

- Regev, O. On lattices, learning with errors, random linear codes, and cryptography. J. ACM 2009, 56, 1–40.

- Komano, Y.; Miyazaki, S. On the Hardness of Learning with Rounding over Small Modulus. In Proceedings of the 21st Annual International Conference on the Theory and Application of Cryptology and Information Security, Auckland, New Zealand, 29 November–3 December 2015.

- Regev, O. Learning with Errors over Rings. In Algorithmic Number Theory: 9th International Symposium, ANTS-IX, Nancy, France, 19–23 July 2010. Proceedings 9, 2010. Available online: https://link.springer.com/chapter/10.1007/978-3-642-14518-6_3 (accessed on 10 July 2010).

- Brakerski, Z.; Gentry, C.; Vaikuntanathan, V. New Constructions of Strongly Unforgeable Signatures Based on the Learning with Errors Problem. In Proceedings of the 48th Annual ACM Symposium on Theory of Computing, Cambridge, MA, USA, 19–21 June 2016.

- Bi, L.; Lu, X.; Luo, J.; Wang, K.; Zhang, Z. Hybrid Dual Attack on LWE with Arbitrary Secrets. Cryptol. ePrint Arch. 2022. Available online: https://eprint.iacr.org/2021/152 (accessed on 25 February 2021).

- Bos, J.; Costello, C.; Ducas, L.; Mironov, I.; Naehrig, M.; Nikolaenko, V.; Raghunathan, A.; Stebila, D. Frodo: Take off the ring! Practical, quantum-secure key exchange from LWE. In Proceedings of the CCS 2016, Vienna, Austria, 24–28 October 2016; Available online: https://eprint.iacr.org/2016/659.pdf (accessed on 28 June 2016).

- Chunsheng, G. Integer Version of Ring-LWE and its Applications. Cryptol. ePrint Arch. 2017. Available online: https://eprint.iacr.org/2017/641.pdf (accessed on 24 October 2019).

- Goldreich, O.; Goldwasser, S.; Halevi, S. Public-Key cryptosystems from lattice reduction problems. Crypto’97 1997, 10, 112–131.

- Micciancio, D. Lattice based cryptography: A global improvement. Technical report. Theory Cryptogr. Libr. 1999, 99-05. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=9591bda3813b0d09522eff2ba17c3665b530ebb9 (accessed on 4 March 1999).

- Micciancio, D. Improving Lattice Based Cryptosystems Using the Hermite Normal Form. In Cryptography and Lattices Conference; Springer: Berlin/Heidelberg, Germany, 2001.

- Nguyen, P.Q. Cryptanalysis of the Goldreich-Goldwasser-Halevi cryptosystem from crypto’97. In Annual International Cryptology Conference; Springer: Santa Barbara, CA, USA, 1999; pp. 288–304.

- Nguyen, P.Q.; Regev, O. Learning a parallelepiped: Cryptanalysis of GGH and NTRU signatures. J. Cryptol. 2009, 22, 139–160.

- Lee, M.S.; Hahn, S.G. Cryptanalysis of the GGH Cryptosystem. Math. Comput. Sci. 2010, 3, 201–208.

- Gu, C.; Yu, Z.; Jing, Z.; Shi, P.; Qian, J. Improvement of GGH Multilinear Map. In Proceedings of the IEEE Conference on P2P, Parallel, Grid, Cloud and Internet Computing (3PGCIC), Krakow, Poland, 4–6 November 2015; pp. 407–411.

- Minaud, B.; Fouque, P.A. Cryptanalysis of the New Multilinear Map over the Integers. IACR Cryptol. ePrint Arch. 2015, 941. Available online: https://eprint.iacr.org/2015/941 (accessed on 28 September 2015).

- Yoshino, M.; Kunihiro, N. Improving GGH Cryptosystem for Large Error Vector. In Proceedings of the International Symposium on Information Theory and Its Applications, Honolulu, HI, USA, 28–31 October 2012; pp. 416–420.

- Barros, C.; Schechter, L.M. GGH may not be dead after all. In Proceedings of the Congresso Nacional de Matemática Aplicada e Computacional, Sao Paolo, PR, Brazil, 8–12 September 2014.

- Brakerski, Z.; Gentry, C.; Halevi, S.; Lepoint, T.; Sahai, A.; Tibouchi, M. Cryptanalysis of the Quadratic Zero-Testing of GGH. IACR Cryptol. ePrint. Available online: https://eprint.iacr.org/2015/845 (accessed on 21 September 2015).

- Susilo, W.; Mu, Y. Information Security and Privacy; Springer: Berlin/Heidelberg, Germany, 2014; Volume 845.

- Bonte, C.; Iliashenko, I.; Park, J.; Pereira, H.V.; Smart, N. FINAL: Faster FHE Instantiated with NTRU and LWE. Cryptol. ePrint Arch. 2022. Available online: https://eprint.iacr.org/2022/074 (accessed on 20 January 2022).

- Bai, S.; Chen, Y.; Hu, L. Efficient Algorithms for LWE and LWR. In Proceedings of the 10th International Conference on Applied Cryptography and Network Security, Singapore, 26–29 June 2012.

- Brakerski, Z.; Langlois, A.; Regev, O.; Stehlé, D. Classical Hardness of Learning with Errors. In Proceedings of the 45th Annual ACM Symposium on Theory of Computing (STOC), Palo Alto, CA, USA, 2–4 June 2013; pp. 575–584.

- Lyubashevsky, V.; Micciancio, D. Generalized Compact Knapsacks Are Collision Resistant. In Proceedings of the 33rd International Colloquium on Automata, Languages and Programming, Venice, Italy, 10–14 July 2006; pp. 144–155.

- Takagi, T.; Kiyomoto, S. Improved Sieving Algorithms for Shortest Lattice Vector Problem and Its Applications to Security Analysis of LWE-based Cryptosystems. In Proceedings of the 23rd Annual International Conference on the Theory and Applications of Cryptographic Techniques, Lyon, France, 2–6 May 2004.

- Balbas, D. The Hardness of LWE and Ring-LWE: A Survey. Cryptol. ePrint Arch. 2021. Available online: https://eprint.iacr.org/2021/1358.pdf (accessed on 8 October 2021).

- Post-Quantum Cryptography. Available online: https://csrc.nist.gov/Projects/post-quantum-cryptography/selected-algorithms-2022 (accessed on 8 June 2023).

- Gentry, C.; Peikert, C.; Vaikuntanathan, V. Trapdoors for Hard Lattices and New Cryptographic Constructions. Cryptol. ePrint Arch. 2007. Available online: https://eprint.iacr.org/2007/432 (accessed on 24 November 2007).

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sabani, M.E.; Savvas, I.K.; Poulakis, D.; Garani, G.; Makris, G.C. Evaluation and Comparison of Lattice-Based Cryptosystems for a Secure Quantum Computing Era. Electronics 2023, 12, 2643. https://doi.org/10.3390/electronics12122643