Soft Segmentation and Reconstruction of Tree Crown from Laser Scanning Data

Abstract

1. Introduction

- (1) Construct an algorithm for segmenting and reconstructing tree crowns from laser scanning data that can be applied in forest inventory.

- (2) Propose a soft segmentation algorithm that makes the reconstructed tree crowns more natural and accurate.

- (3) Propose a fast reconstruction algorithm that combines down-sampling with kd-tree construction.
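Contribution (3) couples down-sampling with a kd-tree but does not say which down-sampling scheme is used. As an illustration only, the Python sketch below assumes voxel-grid down-sampling: every point that falls in the same cube of side `voxel_size` is replaced by that cube's centroid. The function name `voxel_downsample` is hypothetical and not taken from the paper.

```python
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all points that share a cubic voxel by their centroid.

    Assumption: voxel-grid centroid down-sampling; the paper does not
    specify its down-sampling scheme.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis (floor division also handles negatives)
        key = tuple(int(c // voxel_size) for c in p)
        buckets[key].append(p)
    # One representative point per occupied voxel
    return [
        tuple(sum(coords) / len(group) for coords in zip(*group))
        for group in buckets.values()
    ]

cloud = [(x * 0.25, y * 0.25, z * 0.25)
         for x in range(8) for y in range(8) for z in range(8)]
sparse = voxel_downsample(cloud, 1.0)
print(len(cloud), len(sparse))  # 512 8
```

A grid scheme like this runs in a single pass over the points and bounds the point density, so the later kd-tree build and its nearest-neighbor queries operate on far fewer points.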

2. Data and Methods

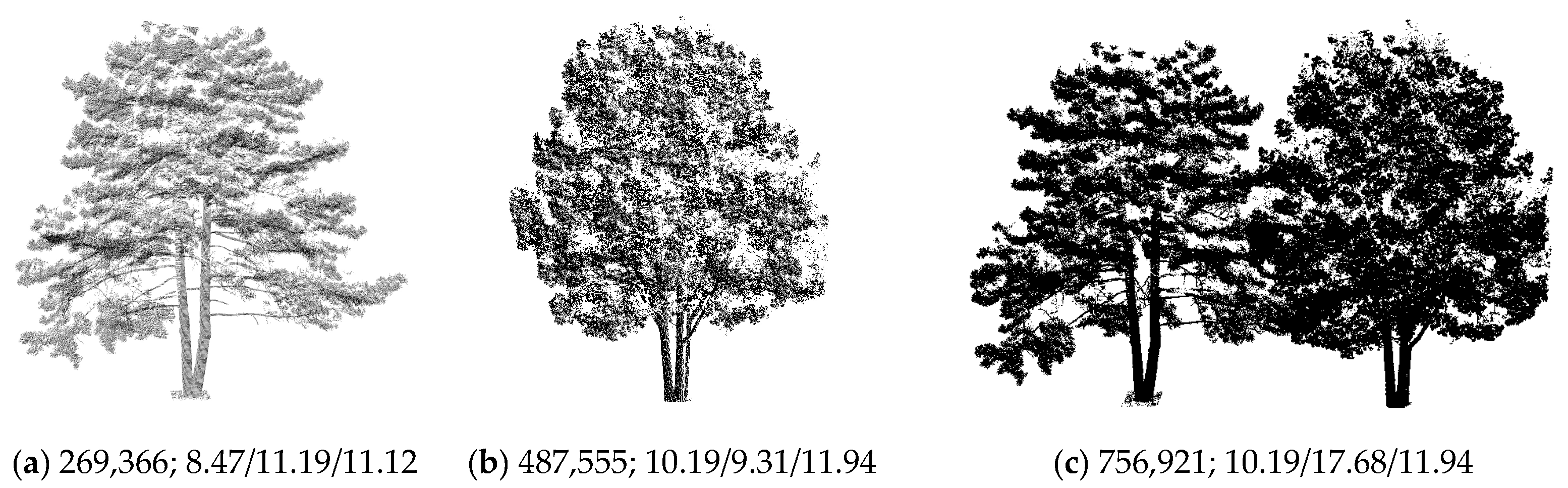

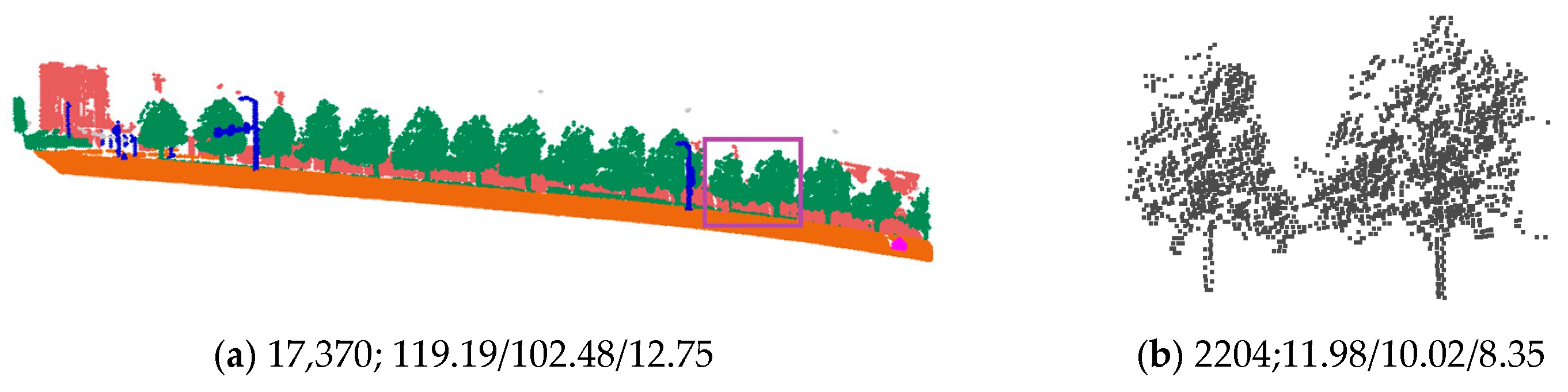

2.1. Data

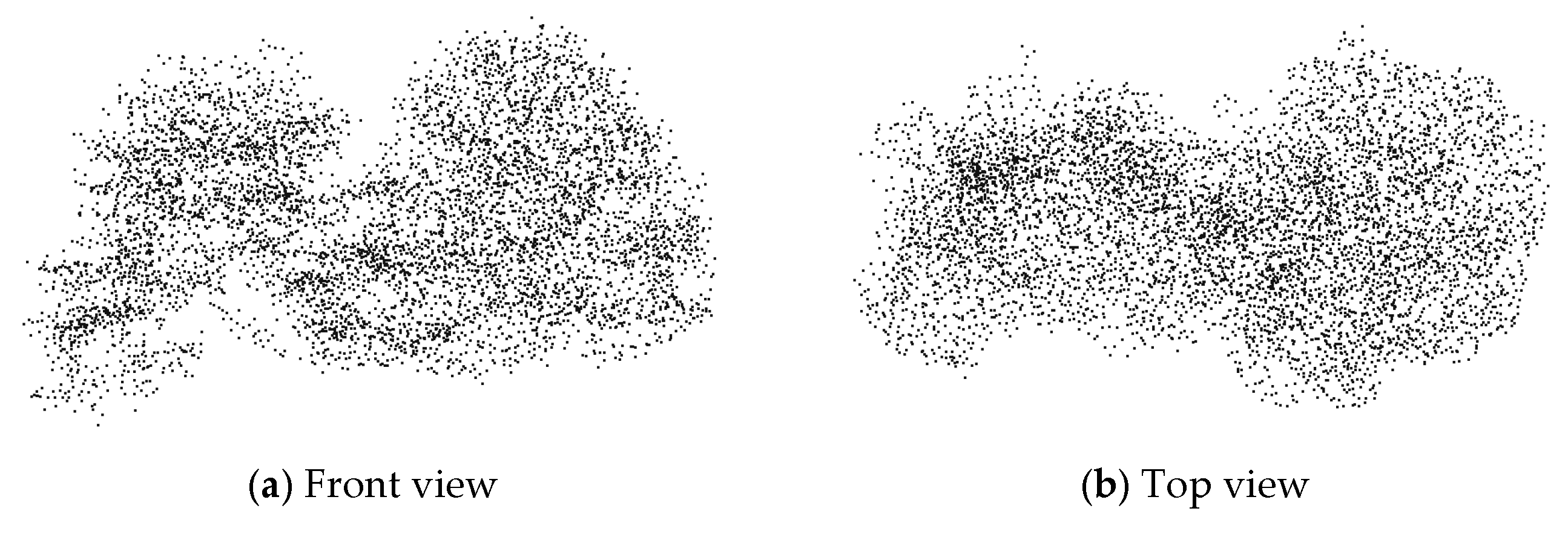

2.2. Soft Segmentation

2.2.1. Crown Points Extraction

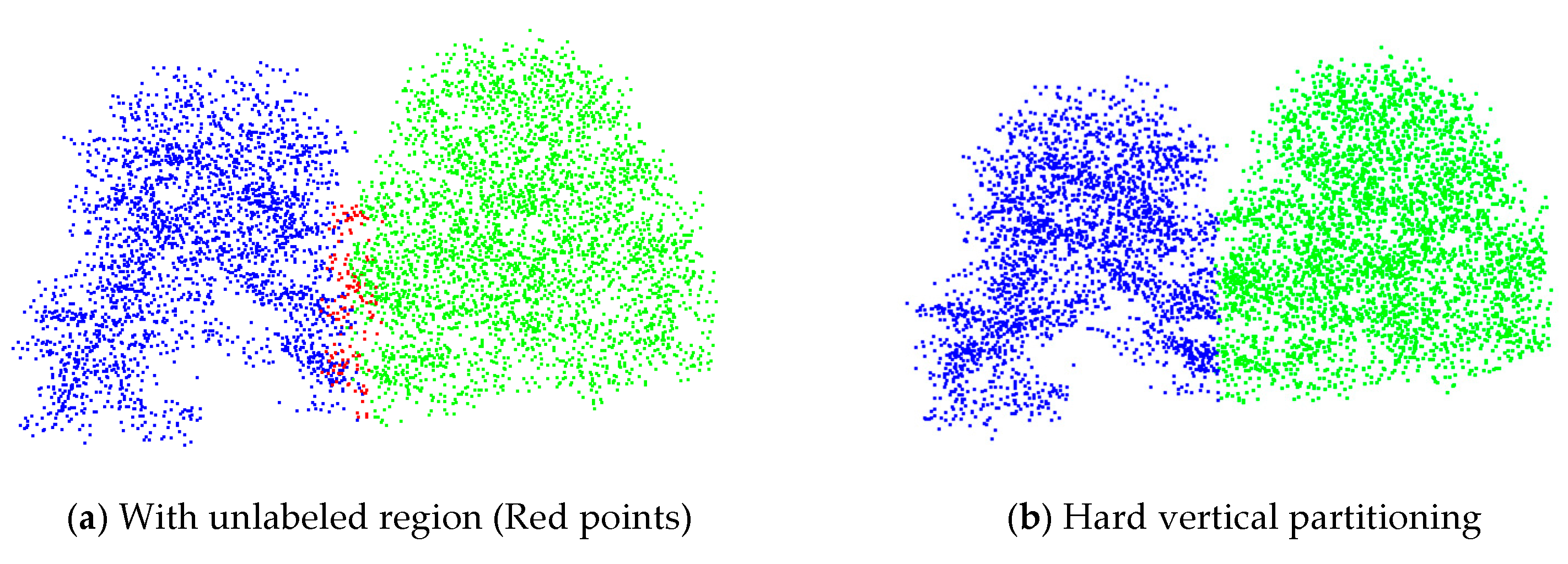

2.2.2. Vertical Partition

2.2.3. Crown Layers Partition

2.2.4. Layer Contour Extraction and Refinement

2.3. Reconstruction

2.3.1. Detecting Boundary Points of Bins

2.3.2. Building the Crown Surface

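| Algorithm 1: BCSwBPs. |
|---|
| Input: the set of crown boundary points and a ball radius α. Output: crown surface geometry. |
| S1: construct a kd-tree with all points in the set |
| S2: for each candidate triangle t do |
| S3: calculate the centers c1 and c2 of the two balls of radius α whose surfaces pass through the three vertices of t. Note that c1 and c2 lie on the line through the circumcenter of t perpendicular to its plane, one on each side. |
| S4: find the nearest neighbor of c1 |
| S5: find the nearest neighbor of c2 |
| S6: if the ball centered at c1 or the ball centered at c2 contains no point other than the vertices of t, then |
| S7: t is a boundary triangle; add it to the output |
| S8: end if |
| S9: end for |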
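The boundary-triangle test in Algorithm 1 (a kd-tree over the points, two ball centers per triangle, two nearest-neighbor queries) can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' implementation: candidate triangles are passed in as index triples because the section does not say how they are generated; the ball radius `alpha` and the empty-ball criterion follow the 3-D alpha-shape definition of Edelsbrunner and Mücke; and a small hand-written kd-tree stands in for whatever library the authors used. All function names are hypothetical.

```python
import math

# Small 3-D vector helpers on tuples
def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def add(u, v):
    return tuple(a + b for a, b in zip(u, v))

def scale(u, s):
    return tuple(a * s for a in u)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def build_kdtree(points, depth=0):
    """Static kd-tree as nested tuples (point, axis, left, right)."""
    if not points:
        return None
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return (pts[mid], axis,
            build_kdtree(pts[:mid], depth + 1),
            build_kdtree(pts[mid + 1:], depth + 1))

def nearest(node, q, best=None):
    """Return (point, distance) of the point in the tree closest to q."""
    if node is None:
        return best
    point, axis, left, right = node
    d = math.dist(point, q)
    if best is None or d < best[1]:
        best = (point, d)
    diff = q[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    best = nearest(near, q, best)
    if abs(diff) < best[1]:  # splitting plane is closer than the current best
        best = nearest(far, q, best)
    return best

def ball_centers(a, b, c, alpha):
    """Centers of the two radius-alpha balls whose surfaces pass through a, b, c."""
    ab, ac = sub(b, a), sub(c, a)
    n = cross(ab, ac)
    nn = dot(n, n)
    if nn == 0.0:  # collinear vertices: no unique circumcircle
        return None
    # Circumcenter of the triangle in 3-D
    num = add(scale(cross(n, ab), dot(ac, ac)), scale(cross(ac, n), dot(ab, ab)))
    cc = add(a, scale(num, 1.0 / (2.0 * nn)))
    r2 = dot(sub(a, cc), sub(a, cc))
    if r2 > alpha * alpha:  # circumradius exceeds alpha: no such ball exists
        return None
    offset = scale(n, math.sqrt(alpha * alpha - r2) / math.sqrt(nn))
    return add(cc, offset), sub(cc, offset)

def boundary_triangles(points, triangles, alpha, eps=1e-9):
    """Keep triangles (index triples) for which at least one alpha-ball is empty."""
    tree = build_kdtree(points)  # S1
    kept = []
    for i, j, k in triangles:  # S2
        centers = ball_centers(points[i], points[j], points[k], alpha)  # S3
        if centers is None:
            continue
        # S4-S6: the vertices lie exactly at distance alpha, so a ball is
        # empty when its nearest neighbor is not closer than alpha (up to eps).
        if any(nearest(tree, c)[1] >= alpha - eps for c in centers):
            kept.append((i, j, k))  # S7
    return kept

pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.5, 0.5, 0.7)]
tri = [(0, 1, 2)]
print(boundary_triangles(pts, tri, alpha=1.0))  # [(0, 1, 2)]
print(boundary_triangles(pts + [(0.5, 0.5, -0.7)], tri, alpha=1.0))  # []
```

In the first call the point above the triangle falls inside the upper ball, but the lower ball is empty, so the triangle is kept; adding a point below it occupies both balls and the triangle is discarded as interior.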

2.3.3. Estimating the Attributes

3. Results

3.1. Segmentation of the Tree Crown with Different Overlap Degrees

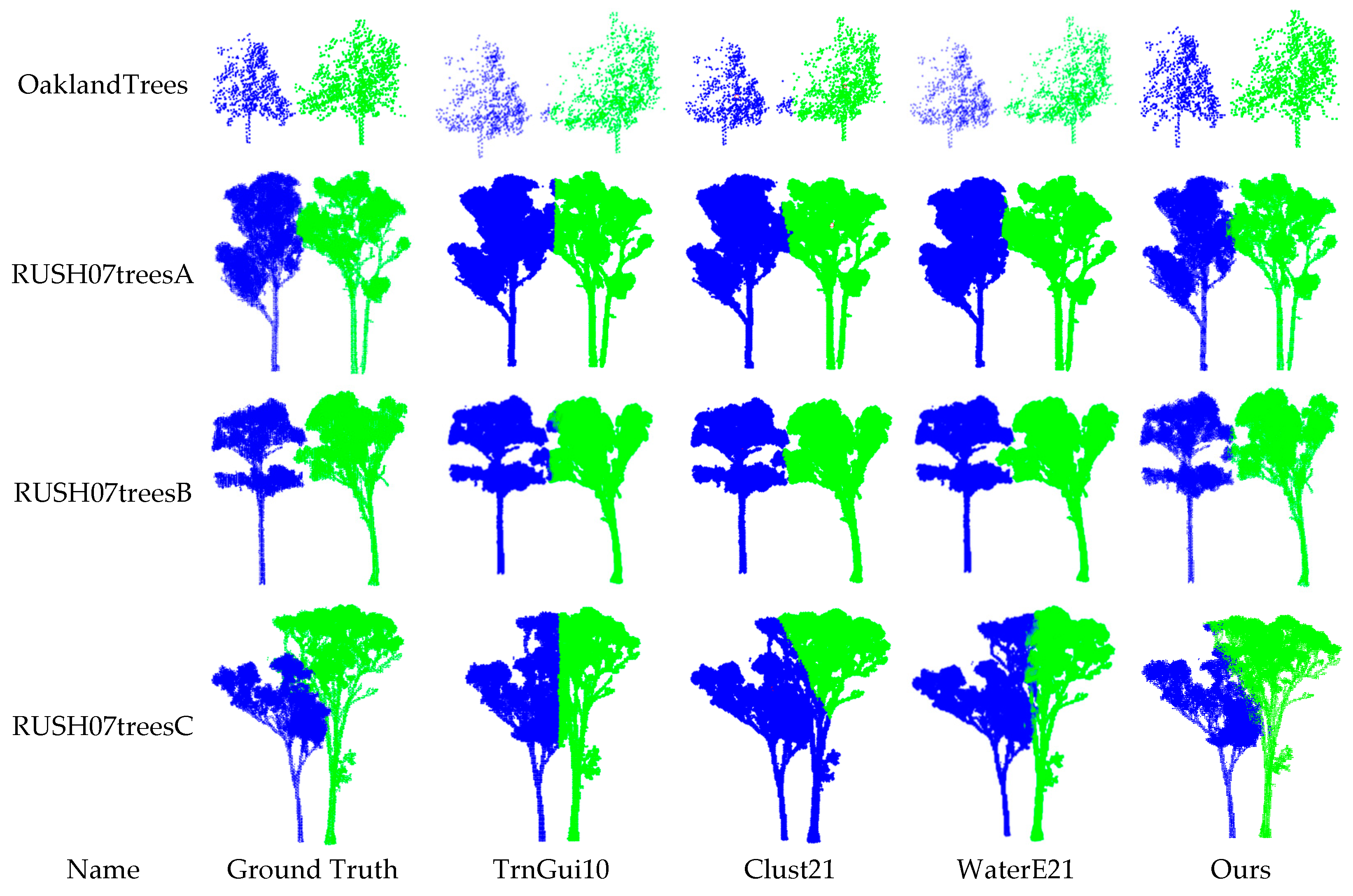

3.2. Segmentation and Comparison

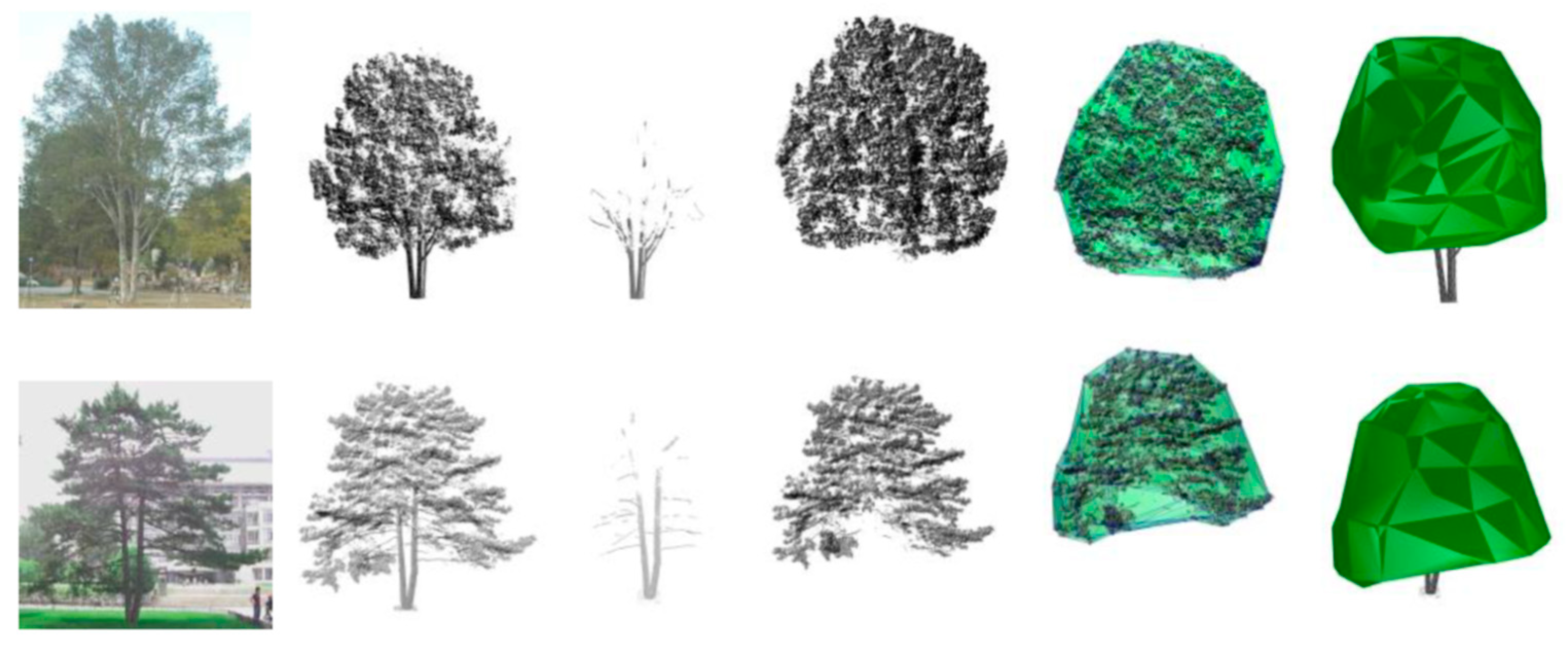

3.3. Reconstruction Results

3.4. Discussion

3.4.1. Time Efficiency with Down-Sampling

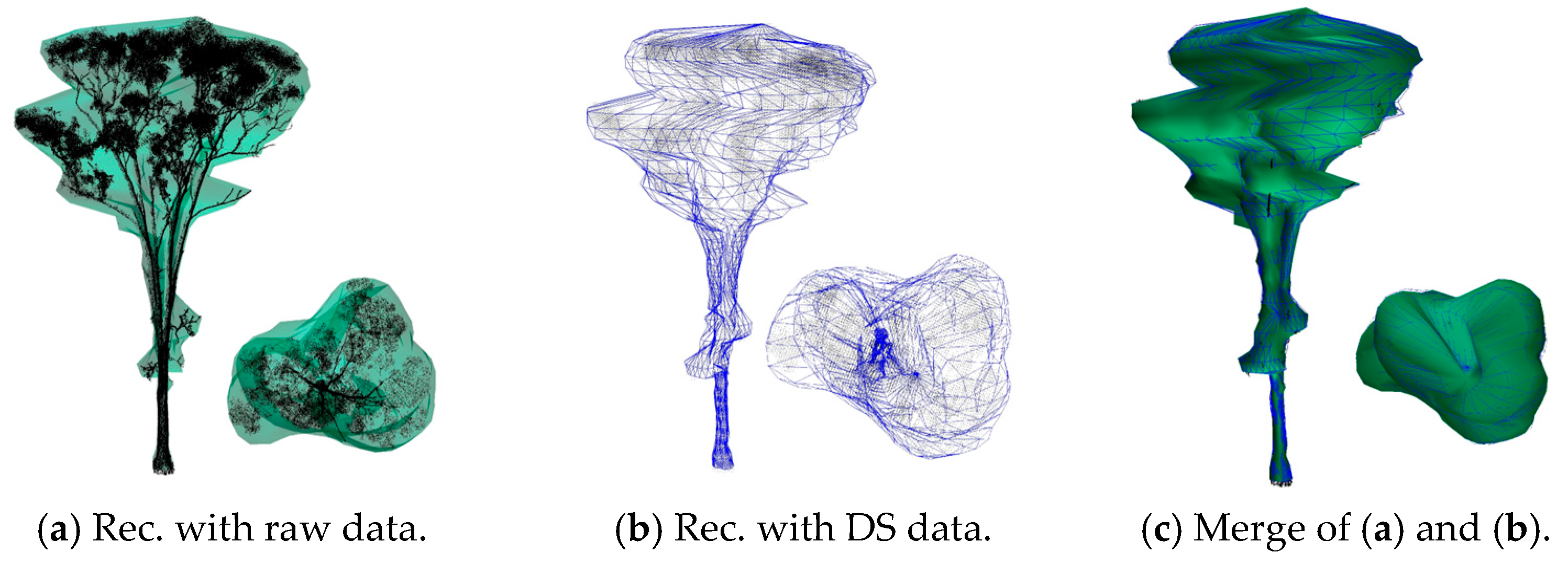

3.4.2. Error Caused by Down-Sampling

3.4.3. Visual Effect of the Reconstructed Crown

3.4.4. Segmentation Using the Deep Learning Method

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jakubowski, M.K.; Li, W.; Guo, Q.; Kelly, M. Delineating Individual Trees from LiDAR Data: A Comparison of Vector- and Raster-based Segmentation Approaches. Remote Sens. 2013, 5, 4163–4186.

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A New Method for Segmenting Individual Trees from the Lidar Point Cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

- Zhang, C.; Zhou, Y.; Qiu, F. Individual Tree Segmentation from LiDAR Point Clouds for Urban Forest Inventory. Remote Sens. 2015, 7, 7892–7913.

- Wang, J.; Lindenbergh, R.; Menenti, M. Scalable individual tree delineation in 3D point clouds. Photogramm. Rec. 2018, 33, 315–340.

- Yang, J.; Kang, Z.; Cheng, S.; Yang, Z.; Akwensi, P.H. An Individual Tree Segmentation Method Based on Watershed Algorithm and Three-Dimensional Spatial Distribution Analysis From Airborne LiDAR Point Clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1055–1067.

- Irlan, I.; Saleh, M.B.; Prasetyo, L.B.; Setiawan, Y. Evaluation of Tree Detection and Segmentation Algorithms in Peat Swamp Forest Based on LiDAR Point Clouds Data. J. Manaj. Hutan Trop. J. Trop. For. Manag. 2020, 26, 123–132.

- Ayrey, E.; Fraver, S.; Kershaw, J.A., Jr.; Kenefic, L.S.; Hayes, D.; Weiskittel, A.R.; Roth, B.E. Layer Stacking: A Novel Algorithm for Individual Forest Tree Segmentation from LiDAR Point Clouds. Can. J. Remote Sens. 2017, 43, 16–27.

- Chen, Q.; Wang, X.; Hang, M.; Li, J. Research on the improvement of single tree segmentation algorithm based on airborne LiDAR point cloud. Open Geosci. 2021, 13, 705–716.

- Bienert, A.; Georgi, L.; Kunz, M.; von Oheimb, G.; Maas, H.-G. Automatic extraction and measurement of individual trees from mobile laser scanning point clouds of forests. Ann. Bot. 2021, 128, 787–804.

- Liu, H.; Dong, P.; Wu, C.; Wang, P.; Fang, M. Individual tree identification using a new cluster-based approach with discrete-return airborne LiDAR data. Remote Sens. Environ. 2021, 258, 112382.

- Qin, Y.; Ferraz, A.; Mallet, C.; Iovan, C. Individual tree segmentation over large areas using airborne LiDAR point cloud and very high resolution optical imagery. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 800–803.

- Comesaña-Cebral, L.; Martínez-Sánchez, J.; Lorenzo, H.; Arias, P. Individual Tree Segmentation Method Based on Mobile Backpack LiDAR Point Clouds. Sensors 2021, 21, 6007.

- Lisiewicz, M.; Kamińska, A.; Kraszewski, B.; Stereńczak, K. Correcting the Results of CHM-Based Individual Tree Detection Algorithms to Improve Their Accuracy and Reliability. Remote Sens. 2022, 14, 1822.

- Huo, L.; Lindberg, E.; Holmgren, J. Towards low vegetation identification: A new method for tree crown segmentation from LiDAR data based on a symmetrical structure detection algorithm (SSD). Remote Sens. Environ. 2022, 270, 112857.

- Li, J.; Cheng, X.; Xiao, Z. A branch-trunk-constrained hierarchical clustering method for street trees individual extraction from mobile laser scanning point clouds. Measurement 2021, 189, 110440.

- Pires, R.D.P.; Olofsson, K.; Persson, H.J.; Lindberg, E.; Holmgren, J. Individual tree detection and estimation of stem attributes with mobile laser scanning along boreal forest roads. ISPRS J. Photogramm. Remote Sens. 2022, 187, 211–224.

- Braga, J.R.G.; Peripato, V.; Dalagnol, R.; Ferreira, M.P.; Tarabalka, Y.; Aragão, L.E.O.C.; Velho, H.F.d.C.; Shiguemori, E.H.; Wagner, F.H. Tree Crown Delineation Algorithm Based on a Convolutional Neural Network. Remote Sens. 2020, 12, 1288.

- Janoutová, R.; Homolová, L.; Novotný, J.; Navrátilová, B.; Pikl, M.; Malenovský, Z. Detailed reconstruction of trees from terrestrial laser scans for remote sensing and radiative transfer modelling applications. in silico Plants 2021, 3, diab026.

- Dai, M.; Li, H.; Zhang, X. Tree Modeling through Range Image Segmentation and 3D Shape Analysis. In Lecture Notes in Electrical Engineering (LNEE); Springer: Berlin/Heidelberg, Germany, 2010; Volume 67, pp. 413–422.

- Livny, Y.; Yan, F.; Olson, M.; Chen, B.; Zhang, H.; El-Sana, J. Automatic reconstruction of tree skeletal structures from point clouds. ACM Trans. Graph. 2010, 29, 151:1–151:8.

- Zhang, X.; Li, H.; Dai, M.; Ma, W.; Quan, L. Data-driven synthetic modeling of trees. IEEE Trans. Vis. Comput. Graph. 2014, 20, 1214–1226.

- Wang, Z.; Zhang, L.; Fang, T.; Mathiopoulos, P.T.; Qu, H.; Chen, D.; Wang, Y. A Structure-Aware Global Optimization Method for Reconstructing 3-D Tree Models From Terrestrial Laser Scanning Data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5653–5669.

- Moorthy, I.; Miller, J.R.; Berni, J.A.J.; Zarco-Tejada, P.; Hu, B.; Chen, J. Field characterization of olive (Olea europaea L.) tree crown architecture using terrestrial laser scanning data. Agric. For. Meteorol. 2011, 151, 204–214.

- Paris, C.; Kelbe, D.; van Aardt, J.; Bruzzone, L. A Novel Automatic Method for the Fusion of ALS and TLS LiDAR Data for Robust Assessment of Tree Crown Structure. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3679–3693.

- Alexander, C. Delineating tree crowns from airborne laser scanning point cloud data using Delaunay triangulation. Int. J. Remote Sens. 2009, 30, 3843–3848.

- Morsdorf, F.; Meier, E.; Kötz, B.; Itten, K.I.; Dobbertin, M.; Allgöwer, B. LIDAR-based geometric reconstruction of boreal type forest stands at single tree level for forest and wildland fire management. Remote Sens. Environ. 2004, 92, 353–362.

- Kato, A.; Schreuder, G.F.; Calhoun, D.; Schiess, P.; Stuetzle, W. Digital surface model of tree canopy structure from LiDAR data through implicit surface reconstruction. In Proceedings of the ASPRS 2007 Annual Conference, Tampa, FL, USA, 7–11 May 2007.

- Kato, A.; Moskal, L.M.; Schiess, P.; Swanson, M.E.; Calhoun, D.; Stuetzle, W. Capturing tree crown formation through implicit surface reconstruction using airborne lidar data. Remote Sens. Environ. 2009, 113, 1148–1162.

- Lin, Y.; Hyyppä, J. Multiecho-Recording Mobile Laser Scanning for Enhancing Individual Tree Crown Reconstruction. IEEE Trans. Geosci. Remote Sens. 2012, 50, 4323–4332.

- Zhu, C.; Zhang, X.; Hu, B.; Jaeger, M. Reconstruction of Tree Crown Shape from Scanned Data; Springer: Berlin/Heidelberg, Germany, 2008.

- Tang, S.; Dong, P.; Buckles, B.P. Three-dimensional surface reconstruction of tree canopy from lidar point clouds using a region-based level set method. Int. J. Remote Sens. 2012, 34, 1373–1385.

- Xie, D.; Wang, X.; Qi, J.; Chen, Y.; Mu, X.; Zhang, W.; Yan, G. Reconstruction of Single Tree with Leaves Based on Terrestrial LiDAR Point Cloud Data. Remote Sens. 2018, 10, 686.

- Kim, D.; Jo, K.; Lee, M.; Sunwoo, M. L-shape model switching-based precise motion tracking of moving vehicles using laser scanners. IEEE Trans. Intell. Transp. Syst. 2017, 19, 598–612.

- Ma, Q.; Su, Y.; Tao, S.; Guo, Q. Quantifying individual tree growth and tree competition using bi-temporal airborne laser scanning data: A case study in the Sierra Nevada Mountains, California. Int. J. Digit. Earth 2017, 11, 485–503.

- Yu, X.; Hyyppä, J.; Kaartinen, H.; Hyyppä, H.; Maltamo, M.; Rönnholm, P. Measuring the growth of individual trees using multi-temporal airborne laser scanning point clouds. In Proceedings of the ISPRS Workshop on “Laser Scanning 2005”, Enschede, The Netherlands, 12–14 September 2005; pp. 204–208.

- Aubry-Kientz, M.; Dutrieux, R.; Ferraz, A.; Saatchi, S.; Hamraz, H.; Williams, J.; Coomes, D.; Piboule, A.; Vincent, G. A Comparative Assessment of the Performance of Individual Tree Crowns Delineation Algorithms from ALS Data in Tropical Forests. Remote Sens. 2019, 11, 1086.

- Munoz, D.; Bagnell, J.A.; Vandapel, N.; Hebert, M. Contextual Classification with Functional Max-Margin Markov Networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.

- Calders, K. Terrestrial Laser Scans—Riegl VZ400, Individual Tree Point Clouds and Cylinder Models, Rushworth Forest; Version 1; Terrestrial Ecosystem Research Network: Indooroopilly, QLD, Australia, 2014.

- Fang, H.; Li, H. Counting of Plantation Trees Based on Line Detection of Point Cloud Data; Geomatics and Information Science of Wuhan University: Wuhan, China, 2022; Volume 7.

- Edelsbrunner, H.; Kirkpatrick, D.; Seidel, R. On the shape of a set of points in the plane. IEEE Trans. Inf. Theory 1983, 29, 551–559.

- Bernardini, F.; Bajaj, C. Sampling and Reconstructing Manifolds Using Alpha-Shapes. 1997. Available online: https://docs.lib.purdue.edu/cgi/viewcontent.cgi?article=2349&context=cstech (accessed on 12 April 2023).

- Edelsbrunner, H.; Mücke, E.P. Three-dimensional alpha shapes. ACM Trans. Graph. 1994, 13, 43–72.

- Arya, S.; Malamatos, T.; Mount, D.M. Space-time tradeoffs for approximate nearest neighbor searching. J. ACM 2009, 57, 1–54.

- Li, H.; Zhang, X.; Jaeger, M.; Constant, T. Segmentation of forest terrain laser scan data. In Proceedings of the 9th ACM SIGGRAPH Conference on Virtual-Reality Continuum and its Applications in Industry (VRCAI ’10), Association for Computing Machinery, New York, NY, USA, 12–13 December 2010; pp. 47–54.

- Yun, T.; Jiang, K.; Li, G.; Eichhorn, M.P.; Fan, J.; Liu, F.; Chen, B.; An, F.; Cao, L. Individual tree crown segmentation from airborne LiDAR data using a novel Gaussian filter and energy function minimization-based approach. Remote Sens. Environ. 2021, 256, 112307.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 1–10.

| SN | Class | HS.T1 | HS.T2 | HS.T1p | HS.T2p | SS.T1 | SS.T2 | SS.T1p | SS.T2p |
|---|---|---|---|---|---|---|---|---|---|
| Row1 | Tru.T1 | 3092 | 161 | 0.9505 | 0.0495 | 3078 | 175 | 0.9462 | 0.0538 |
| | Tru.T2 | 238 | 2685 | 0.0814 | 0.9186 | 227 | 2696 | 0.0777 | 0.9223 |
| Row2 | Tru.T1 | 3250 | 3 | 0.9991 | 0.0009 | 3242 | 11 | 0.9966 | 0.0034 |
| | Tru.T2 | 365 | 2558 | 0.1249 | 0.8751 | 341 | 2582 | 0.1167 | 0.8833 |
| Row3 | Tru.T1 | 3253 | 0 | 1 | 0 | 3251 | 2 | 0.9994 | 0.0006 |
| | Tru.T2 | 280 | 2643 | 0.0958 | 0.9042 | 270 | 2653 | 0.0924 | 0.9076 |
| Row4 | Tru.T1 | 3253 | 0 | 1 | 0 | 3251 | 2 | 0.9994 | 0.0006 |
| | Tru.T2 | 180 | 2743 | 0.0616 | 0.9384 | 162 | 2761 | 0.0554 | 0.9446 |
| Row5 | Tru.T1 | 3253 | 0 | 1 | 0 | 3253 | 0 | 1 | 0 |
| | Tru.T2 | 285 | 2638 | 0.0975 | 0.9025 | 279 | 2644 | 0.0954 | 0.9046 |
| Row6 | Tru.T1 | 3253 | 0 | 1 | 0 | 3253 | 0 | 1 | 0 |
| | Tru.T2 | 52 | 2871 | 0.0178 | 0.9822 | 42 | 2881 | 0.0144 | 0.9856 |
| Row7 | Tru.T1 | 3253 | 0 | 1 | 0 | 3253 | 0 | 1 | 0 |
| | Tru.T2 | 22 | 2901 | 0.0075 | 0.9925 | 18 | 2905 | 0.0062 | 0.9938 |
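Reading HS and SS as hard and soft segmentation, the `p` columns appear to be each true crown's point counts divided by its row total (for example, 3092 + 161 = 3253 points for Tru.T1 in Row1). The short Python check below assumes that reading; `row_proportions` is a hypothetical helper, and the check reproduces the Row1 proportions to four decimals.

```python
def row_proportions(counts):
    """Normalize one row of point counts to fractions, rounded as in the table."""
    total = sum(counts)
    return [round(c / total, 4) for c in counts]

# Row1, hard segmentation (HS) columns
print(row_proportions([3092, 161]))  # [0.9505, 0.0495]  (Tru.T1)
print(row_proportions([238, 2685]))  # [0.0814, 0.9186]  (Tru.T2)
```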

| Name | Pts.N | TrnGui10 (T1) | TrnGui10 (T2) | Clust21 (T1) | Clust21 (T2) | WaterE21 (T1) | WaterE21 (T2) | Ours (T1) | Ours (T2) |
|---|---|---|---|---|---|---|---|---|---|
| OaklandTrees | 1370 | 1351 | 19 | 1319 | 51 | 1370 | 0 | 1370 | 0 |
| | 834 | 0 | 834 | 0 | 834 | 0 | 834 | 0 | 834 |
| RUSH07TreesA | 33,424 | 27,505 | 5919 | 29,072 | 4352 | 33,278 | 146 | 30,812 | 2612 |
| | 43,286 | 0 | 43,286 | 0 | 43,286 | 76 | 43,210 | 23 | 43,263 |
| RUSH07TreesB | 33,849 | 32,592 | 1257 | 33,524 | 325 | 33,849 | 0 | 33,709 | 140 |
| | 27,065 | 0 | 27,065 | 1 | 27,064 | 7 | 27,058 | 48 | 27,017 |
| RUSH07TreesC | 34,053 | 23,255 | 10,798 | 25,063 | 8990 | 22,831 | 11,222 | 30,906 | 3147 |
| | 24,057 | 2106 | 21,951 | 0 | 24,057 | 105 | 23,952 | 1506 | 22,551 |
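In the comparison table, each method's two columns split a crown's Pts.N points between the first and the second tree (e.g., 27,505 + 5919 = 33,424 for TrnGui10 on RUSH07TreesA). One illustrative way to summarize a pair of rows is the fraction of all points assigned to their own tree. This metric is an assumption for illustration, not one reported in this section, and `assignment_accuracy` is a hypothetical helper; the Python sketch computes it for RUSH07TreesC.

```python
def assignment_accuracy(t1_row, t2_row):
    """Fraction of points assigned to their own tree.

    t1_row / t2_row: (points assigned to T1, points assigned to T2)
    for the true first and true second crown, respectively.
    """
    correct = t1_row[0] + t2_row[1]
    total = sum(t1_row) + sum(t2_row)
    return correct / total

# RUSH07TreesC
print(round(assignment_accuracy((30906, 3147), (1506, 22551)), 4))   # Ours: 0.9199
print(round(assignment_accuracy((23255, 10798), (2106, 21951)), 4))  # TrnGui10: 0.7779
```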

| SN | N.Pts | H.Tree | W.Tree | A.Sup | A.proj |
|---|---|---|---|---|---|
| Tree1 | 487,555 | 11.9 | 10.1 | 292.5 | 71.7 |
| Tree2 | 269,366 | 11.1 | 11.1 | 303.3 | 73.1 |

| Tree SN | N.Pts | H.Tree | W.Tree | A.Sup | Volume |
|---|---|---|---|---|---|
| 1 | 977 | 7.138 | 6.515 | 113.109 | 64.803 |
| 2 | 435 | 6.747 | 3.334 | 46.002 | 12.153 |
| 3 | 978 | 6.654 | 6.454 | 108.756 | 48.789 |
| 4 | 1527 | 8.745 | 7.293 | 176.111 | 123.144 |
| 5 | 1370 | 8.601 | 7.687 | 158.965 | 108.512 |
| 6 | 834 | 7.540 | 5.727 | 112.835 | 54.985 |
| 7 | 1267 | 10.064 | 7.283 | 185.166 | 121.502 |
| 8 | 1427 | 9.610 | 9.353 | 220.818 | 153.500 |
| 9 | 1319 | 9.003 | 8.101 | 176.291 | 115.188 |
| 10 | 1042 | 9.816 | 7.222 | 172.671 | 108.886 |
| 11 | 1083 | 9.157 | 8.454 | 168.591 | 109.401 |
| 12 | 1333 | 9.507 | 9.000 | 273.591 | 174.541 |
| 13 | 1024 | 8.858 | 7.363 | 182.162 | 98.426 |
| 14 | 777 | 7.880 | 5.909 | 112.269 | 49.672 |
| 15 | 677 | 8.055 | 4.576 | 96.327 | 37.486 |
| 16 | 834 | 7.437 | 6.111 | 130.311 | 65.083 |
| 17 | 122 | 4.265 | 1.394 | 13.499 | 1.379 |

| Method | DS | Roots Detect | Layer Bin Build | Vertical Partitioning | Contour Build | Segmentation Refine | Total Time |
|---|---|---|---|---|---|---|---|
| With DS | 0.068 | 0.3446 | 0.0189 | 0.0107 | 0.0119 | 0.0023 | 0.4564 |
| Without DS | 0 | 12.4051 | 0.2433 | 0.1549 | 0.2651 | 0.0215 | 13.0899 |
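The end-to-end speed-up from down-sampling is the ratio of the two Total Time values. The With DS total already includes the 0.068 spent on down-sampling itself (0.068 + 0.3446 + 0.0189 + 0.0107 + 0.0119 + 0.0023 = 0.4564), so that ratio is a fair overall comparison. The Python sketch below computes the total and per-stage ratios from the table; the time unit is whatever the table uses, since it is not stated here, and `speedups` is a hypothetical helper.

```python
# Stage timings copied from the table (time unit as in the table)
with_ds = {"Roots Detect": 0.3446, "Layer Bin Build": 0.0189,
           "Vertical Partitioning": 0.0107, "Contour Build": 0.0119,
           "Segmentation Refine": 0.0023, "Total Time": 0.4564}
without_ds = {"Roots Detect": 12.4051, "Layer Bin Build": 0.2433,
              "Vertical Partitioning": 0.1549, "Contour Build": 0.2651,
              "Segmentation Refine": 0.0215, "Total Time": 13.0899}

def speedups(fast, slow):
    """Ratio slow/fast, rounded to one decimal, for each stage timed in both runs."""
    return {stage: round(slow[stage] / fast[stage], 1)
            for stage in fast if stage in slow}

s = speedups(with_ds, without_ds)
print(s["Total Time"])    # 28.7
print(s["Roots Detect"])  # 36.0
```

The DS column is left out of the per-stage ratios because it is zero in the run without down-sampling.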

| Method | N.Pts | N.Polygon | H.Tree | W.Tree | A.Sup | Volume |
|---|---|---|---|---|---|---|
| With DS | 34,053 | 2700 | 23.176 | 13.333 | 545.831 | 532.967 |
| Without DS | 536,461 | 2700 | 23.318 | 13.412 | 549.142 | 525.064 |
| Error | −93.65% | 0.00% | −0.61% | −0.59% | −0.60% | 1.51% |
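The Error row is consistent with the relative deviation of the down-sampled result from the full-resolution result, Error = (With DS − Without DS) / Without DS × 100%; the positive Volume error (532.967 > 525.064) fixes the sign convention. The Python check below, a sketch based on that reading with a hypothetical helper `relative_error_pct`, reproduces every entry of the row.

```python
def relative_error_pct(with_ds, without_ds):
    """Relative deviation of the down-sampled value, in percent (2 decimals)."""
    return round((with_ds - without_ds) / without_ds * 100, 2)

# (With DS, Without DS) pairs copied from the table
rows = {
    "N.Pts": (34053, 536461),
    "N.Polygon": (2700, 2700),
    "H.Tree": (23.176, 23.318),
    "W.Tree": (13.333, 13.412),
    "A.Sup": (545.831, 549.142),
    "Volume": (532.967, 525.064),
}
for name, (w, wo) in rows.items():
    print(name, relative_error_pct(w, wo))
```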

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dai, M.; Li, G. Soft Segmentation and Reconstruction of Tree Crown from Laser Scanning Data. Electronics 2023, 12, 2300. https://doi.org/10.3390/electronics12102300
