1. Introduction

A new type of sensor called an event camera was recently developed based on the new biomimetic technologies proposed in the neuromorphic engineering domain [

1]. The event camera is bio-inspired by the human brain, as each pixel operates separately to mimic the biological neural system and performs simple tasks with small energy consumption. More exactly, in contrast to the conventional camera where all are operating simultaneously, the event camera sensor introduces a novel design where each pixel detects and reports independently only the changes (increased or deceased) of the incoming light intensity above a threshold or remains silent otherwise.

The event camera proposes a new

paradigm shift for capturing visual data, where only the dynamic information of the scene is captured using a sequence (stream) of events that are triggered asynchronously, without capturing the unnecessary static information represented by the background or skyline. This gives the event camera some very important properties of low energy consumption, low latency, high dynamic range (HDR), and high temporal resolution, as the asynchronous events can be triggered individually at the smallest timestamp distance of one microsecond (

s). Two types of sensors are now made available on the market: the dynamic vision sensor (DVS) [

2], which captures sequences of asynchronous events; and the dynamic and active-pixel vision sensor (DAVIS) [

3], which adds a second camera to the DVS sensor, an active pixel sensor (APS) for capturing greyscale or color (RGB) frames.

In the computer vision domain, event cameras are now widely used as the solutions designed to process the RGB and event modalities already reached state-of-the-art performance for many applications such as deblurring [

4], feature detection and tracking [

5,

6], optic flow estimation [

7], 3D estimation [

8], super-resolution [

9], interpolation [

10], visual odometry [

11], and many others. For a comprehensive literature review of event-based applications in computer vision, we recommend the work presented in [

12]. Since many of these upper-level applications are designed to process the event modality as an image rather than an asynchronous events sequence, the event data acquired by the DVS sensor is pre-processed, usually using an event-accumulation process to form a sequence of synchronous Event Frames (EFs), i.e., the EF contains a set of synchronous events having assigned the timestamp associated with the EF.

In general, an EF is represented using the ternary alphabet, , where for each pixel position , one of the following three possible symbols is assigned: to signal the position of an event with negative polarity (report a light intensity decrease), to signal the position of a non-event, and to signal the position of an event with positive polarity (report a light intensity increase). Please note that sometimes the representation of the first two symbols is swapped. Although is used to store the EF on the disk, one can note that in some cases, a ternary alphabet containing different symbols might be used for a better visual representation. E.g., is preferred for signaling the negative and positive event polarity; is preferred for having an 8-bit intensity-like representation and guarantees compatibility with traditional image and video codecs. In the DVS sensor representation, each event is stored using a package of 8 Bytes (B). While in the raw EF representation, each pixel contains a ternary symbol that is stored using 2 bits, i.e., an EF having a pixel resolution sensor is stored using a total of bits.

The event data captured by the event sensor contains different distortions (e.g., noise, latency), which must be corrected by the sensor or the event-processing chip, i.e., on low-power chips with limited memory before it can be consumed by upper-level applications. Since the raw EF representation reaches high bitrate levels, a more efficient fixed-length representation is required for low-bandwidth transmission of the event data from the event sensor to the event-processing chip. One can note that such a method must satisfy several constraints. The codec must operate in a group of pixels as other algorithms are employed to correct the event data using a limited chip memory, i.e., the codec must provide a fixed-length representation to provide random access to any group of pixels by allocating an equal number of bits to encode each group. Note that in data compression, large coding gains are achieved by allocating a variable-length representation to each group of pixels, as the coding gain depends on the amount of data stored inside each group. The codec must have a low complexity so it can be hardware written, i.e., only simple computations are accepted. The codec must provide an efficient memory representation to justify the costs of hardware writing the lossless codec. Hence, in this paper, we propose a novel low-complexity lossless compression framework of synchronous EFs based on fixed-length coding, which is suitable for integration into very-low-power (VLP) processing chips.

In the literature, the event data compression problem remains understudied. Only a few coding solutions are proposed to either encode asynchronous event sequences [

13,

14] or synchronous EF sequences [

15,

16]. In [

13], the authors propose to encode lossless asynchronous event sequences by exploiting the spatial and temporal characteristics of the event location information. The research was further extended in [

14]. In [

15], the Time Aggregation-based Lossless Video Encoding for Neuromorphic vision sensor data (TALVEN) algorithm is proposed, where an event-accumulation process is employed to generate EFs by concatenating the positive and negative polarity EF counts and form an EF sequence then compresses by the High-Efficiency Video Coding (HEVC) [

17] standard. Finally, in [

16], a lossy coding solution is proposed for the DAVIS sensor. One can note that these solutions are much too complex for hardware implementation in the event-processing chip with limited memory. These are performance–oriented compression solutions that can be integrated only in a system-on-a-chip (SoC) where enough computation power is available. Here, we propose a low-complexity–oriented lossless compression framework of EFs suitable for integration into low-power event signal processing (ESP) chips or VLP event-processing chips, i.e., inside the sensor, where the event data are constrained to have fixed-length representation.

In our prior work, we employ an event-accumulation process where each asynchronous event sequence is split into spatiotemporal neighborhoods of time interval

where each neighborhood generates an EF as follows: at each pixel position

, the polarity sum of the events triggered in the time interval

is computed so that

is set as the sum’s sign, see

Figure 1. In our prior work [

18], we proposed a context-based lossless image codec for encoding a stack of up to eight EFs using two data structures: an event map image (EMI) that stores the event spatial information; and the concatenated polarity vector (CPV) that stores the polarity information; a lossless codec suitable for integration into SoC. In our prior work [

19], we proposed a low-complexity lossless coding framework by adapting the run-length encoding scheme and Elias coding for EF coding, suitable for integration into low-cost ESP chips. While in our prior work [

20], we proposed a low-complexity lossless coding method for encoding the event data represented as asynchronous event sequences that employ only low-complexity coding techniques so that it is suitable for integration into low-cost ESP chips. Moreover, these solutions provide a variable-length representation, a strategy used by most state-of-the-art coding methods. In contrast, the goal of this work is to introduce a novel low-complexity lossless coding framework for fixed-length representation of EFs using multi-level look-up tables (LUTs) that is suitable for integration into VLP chips.

In summary, the novel contributions proposed in this paper are listed below.

- (1)

A novel lossless compression framework for encoding synchronous EFs, suitable for hardware implementation in VLP event-processing chips; see

Section 3.

- (2)

A novel ternary frame representationmusing group partitioning and symbol remapping; see

Section 3.1.

- (3)

A novel memory-efficient low-complexity coding scheme for lossless compression of sparse images using a novel fixed-length representation based on multi-level LUTs; see

Section 3.2.2.

- (4)

An improved fixed-length representation using a mask-LUT structure for encoding EFs using very-large groups of pixels; see

Section 3.2.3.

- (5)

A codec that provides random access (RA) to any group of pixels using a fixed-length representation; see

Section 3.3.

The remainder of this paper is organized as follows.

Section 2 presents a discussion on state-of-the-art methods developed for the new event modality.

Section 3 describes the proposed fixed-length coding framework.

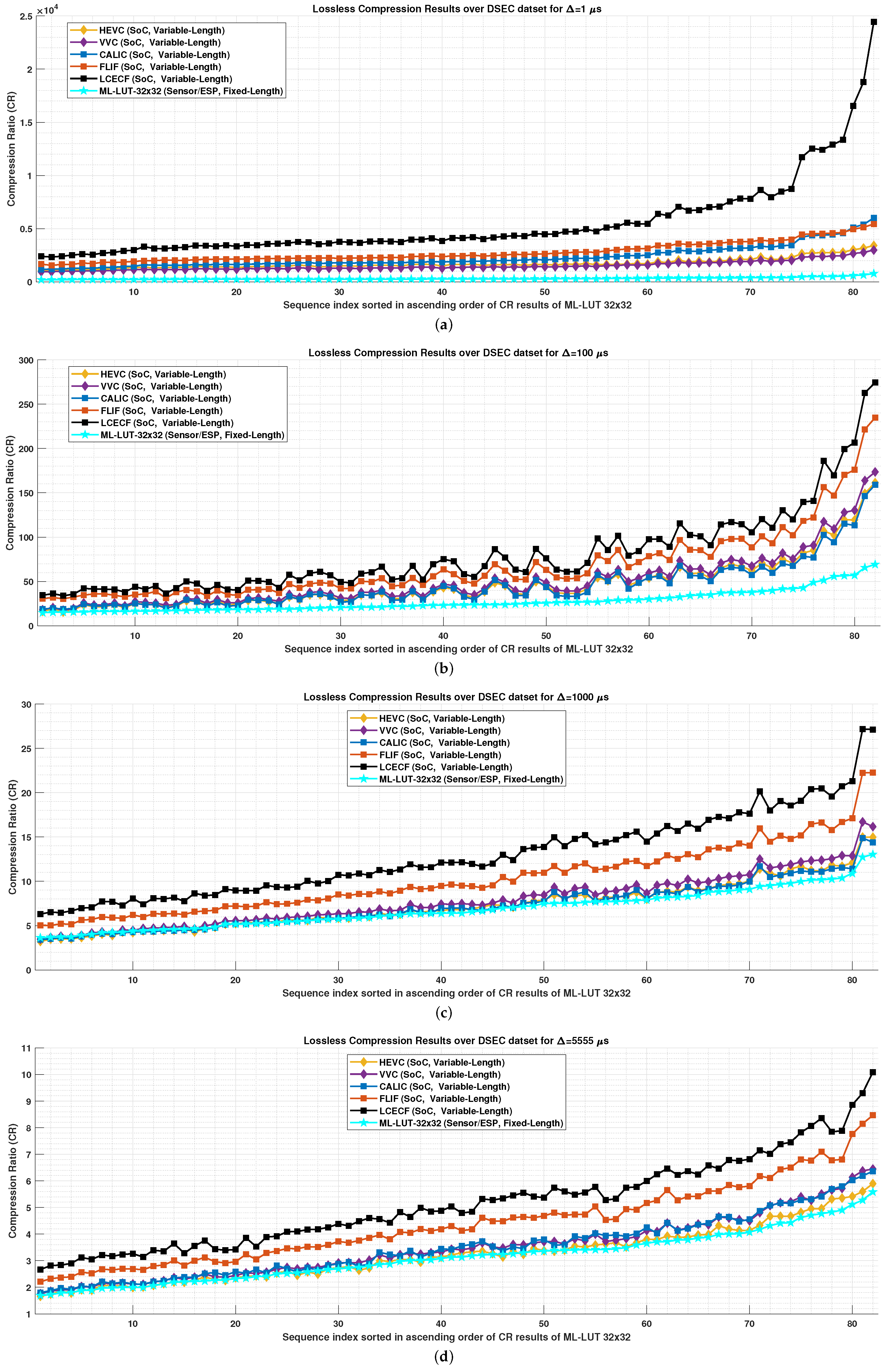

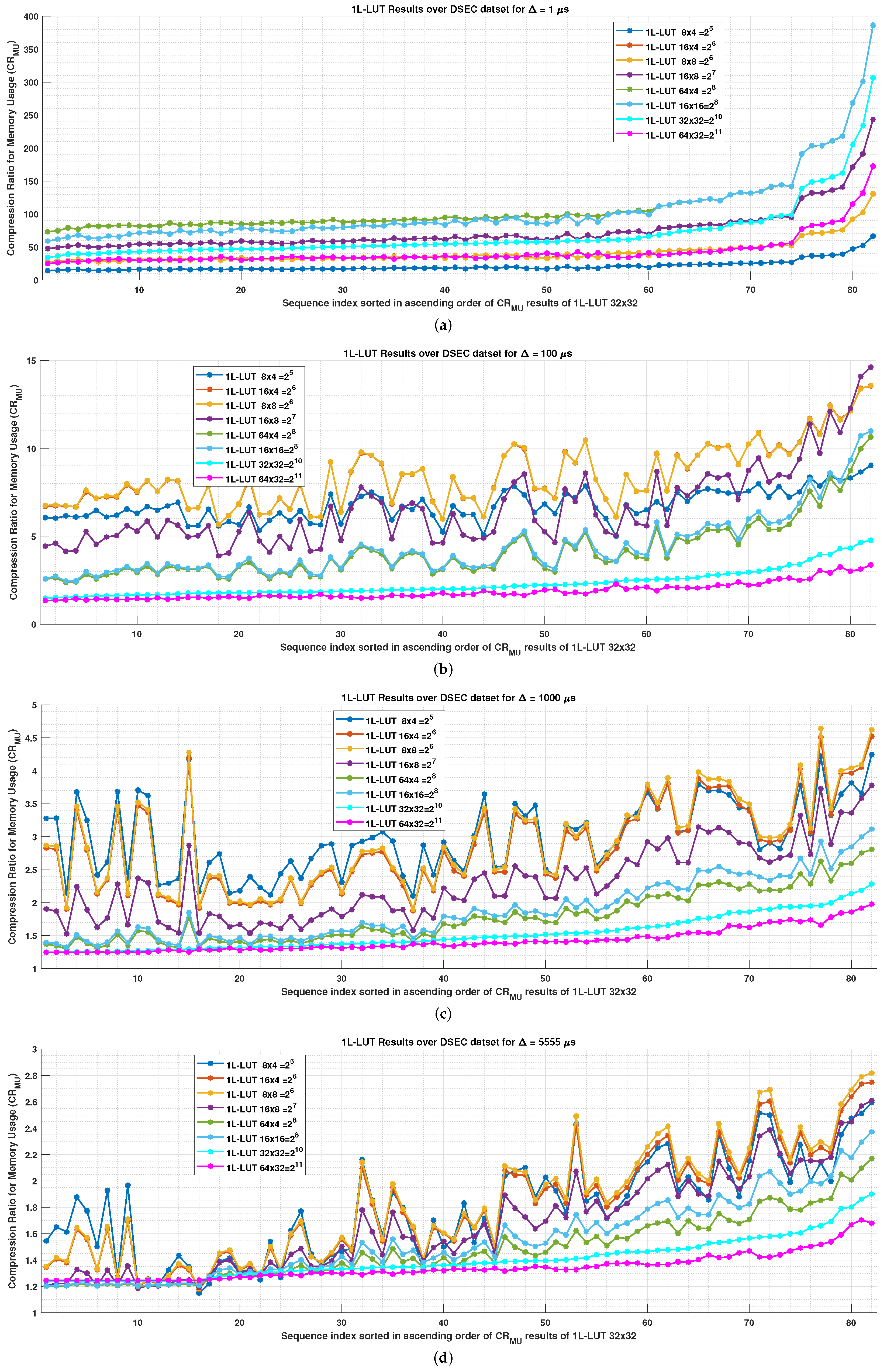

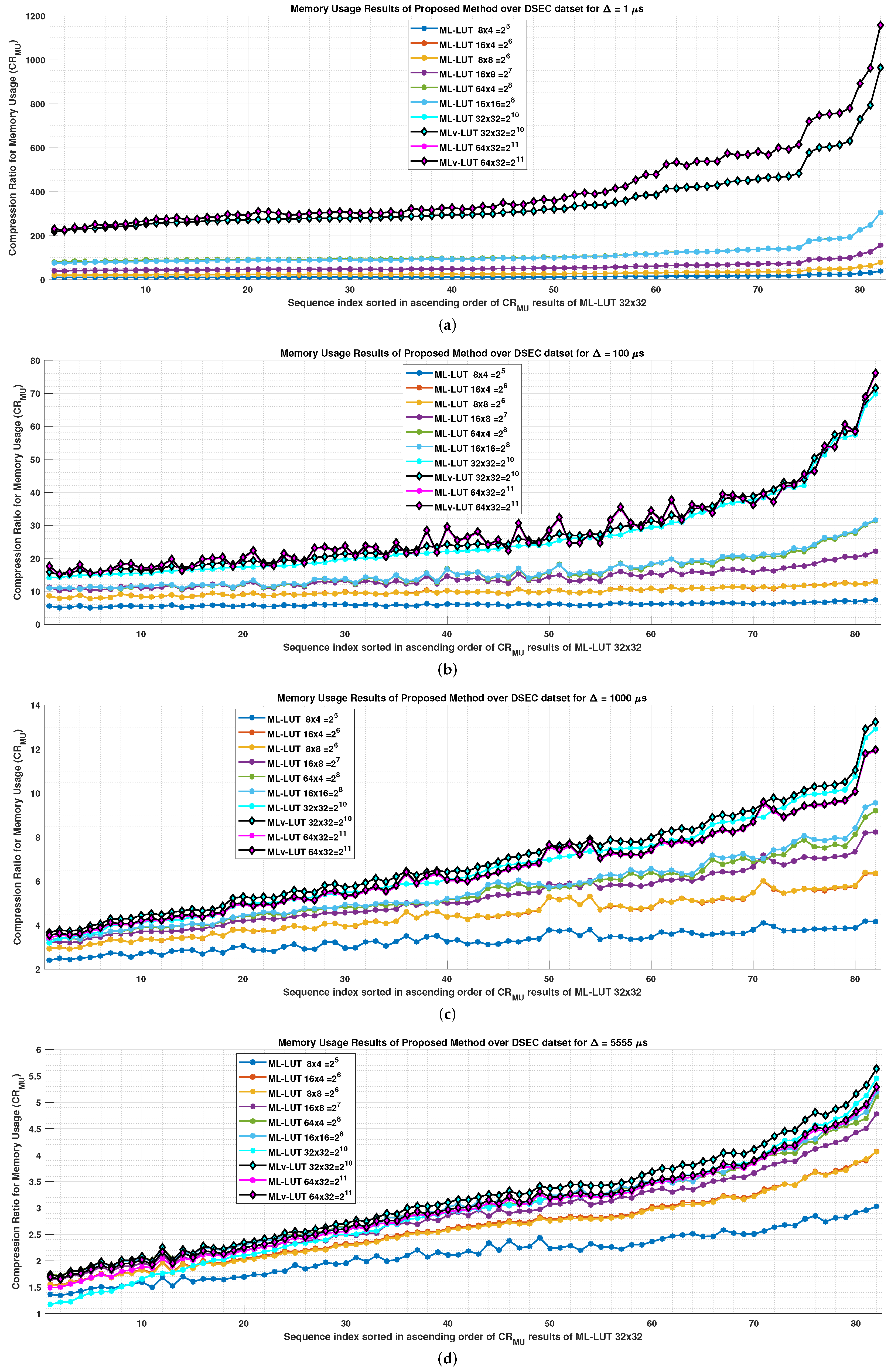

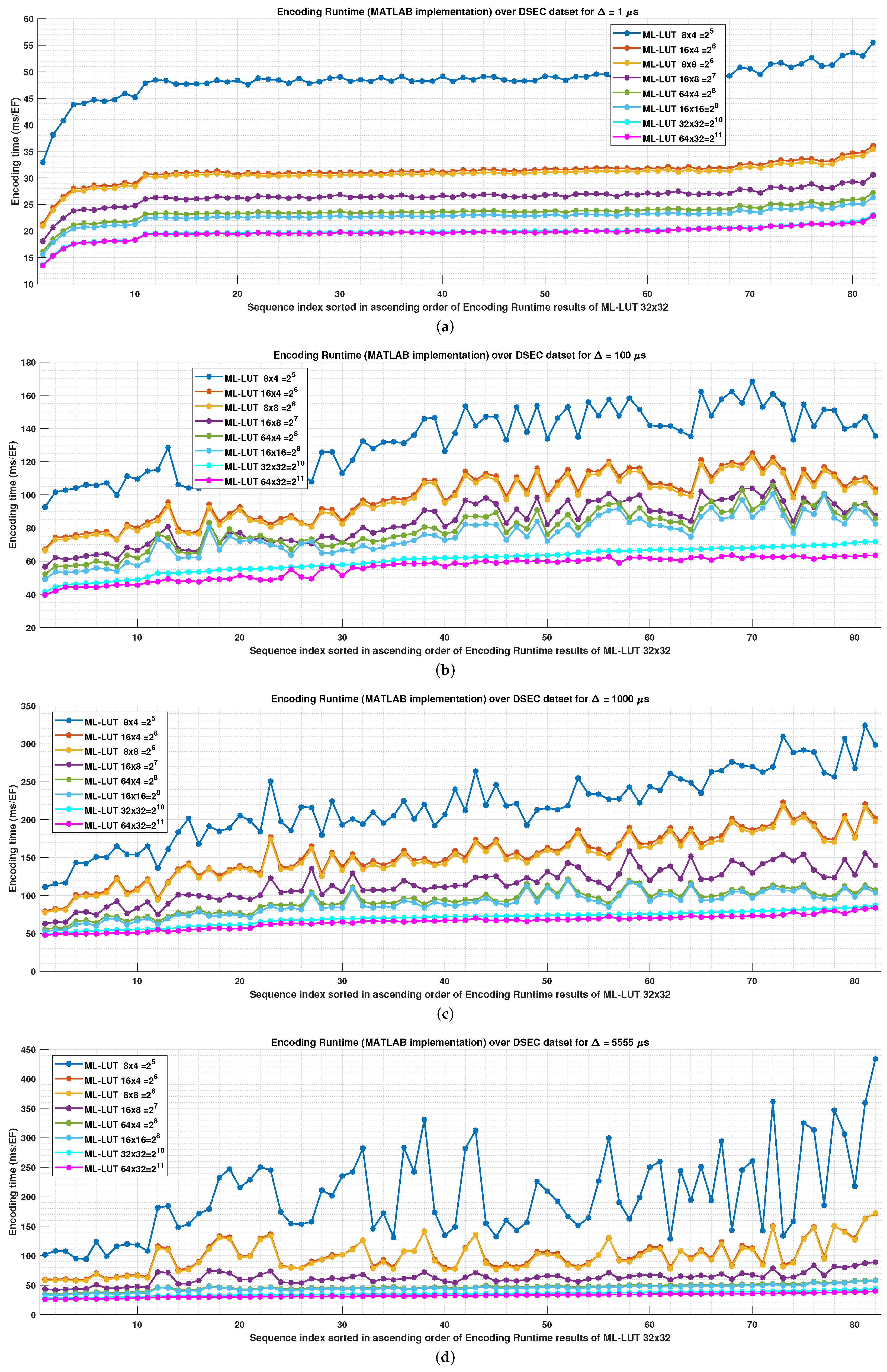

Section 4 presents the experimental evaluation of the proposed framework.

Section 5 concludes this work.

2. State-of-the-Art Methods

Let us consider an event sensor having a resolution of

pixels. When the sensor detects a change in the incoming light intensity that is above a set threshold, an asynchronous event, denoted

is triggered by encapsulating and transmitting 8 B of event data containing the following information:

(i) the event spatial information, denoted

where

and

corresponding to the pixel position

where the change was detected;

(ii) the event polarity information, denoted

which signals the

type of change using symbol

for a decrease and symbol

for an increase in the incoming light intensity; and

(iii) the event time information,

i.e., the timestamp

when the change was detected. Let us denote the asynchronous event sequence capture by a DVS sensor [

2] as

where

events were triggered over the time period

s.

One can note that the processing steps in event-based computer vision solutions are significantly different than in the case of conventional color cameras. In the event-signal processing framework, an important decision is represented by the selection of the event representation. is first extracted from the raw DVS data and then further transformed into the format interpretable by the following processing steps, which may differ from one application to another.

The section is organized as follows.

Section 2.1 analysis the event representations proposed in the computer vision domain.

Section 2.2 outlines the proposed state-of-the-art methods for lossless event data compression and analysis how to modify the traditional data compression codecs for lossless event data compression.

2.1. Event Data Representations in Computer Vision

The simplest event representation that gained a lot of popularity in recent years is spike processing, such as Spike Neural Networks (SNNs), which directly can process the asynchronous event sequences [

22]. Another representation used in Computer Vision is the “image-like" representation, denoted here EF, as most of the upper applications prefer to consume the event data as an image rather than an asynchronous event sequence. One can note that the event data can be stored as a sequence of generated EFs in a lossless manner (no information is lost) only by setting

with the smallest time-window size, i.e.,

s. Then the raw EF representation will require an impressive amount of space to store one second of captured event data, i.e.,

MB (around

GB). Note that the raw EF representation (2-bit per pixel) is more efficient than the raw sensor representation (8 B per event) only if more than

of the positions in the generated EF sequence signal event positions, i.e., a capturing event density that the DVS sensor cannot reach using current technology. Therefore,

is usually set using values in the ms time range so that the generated EFs have a much higher matrix filling percentage than

which results in losing some part of the raw captured information.

Different pre-processing strategies were proposed in the literature to quantize the event data and further process it in such a way that an upper-level application can achieve state-of-the-art performance. In [

23], the event polarity sum frames are computed by summing the polarity of events within a given time window for real-time visual-inertial odometry. In [

24], the count-based event-accumulation representation is proposed for steering prediction for self-driving cars, where the events within a temporal window are counted for each polarity type to generate two EFs. In [

25], the distance surface representation is proposed for pixel motion estimation, where the generated image contains the spatial distance to the active pixel. In [

26], the surface of active events representation is proposed for computing the visual flow, where the EF contains only the latest event triggered at each pixel position. In [

27,

28], the graph-based representation is proposed for object classification, where the events are transformed within a temporal window into a set of connected nodes. Although this representation is quite compact and efficient, the generated graphs can be computationally expensive. In [

29], the voxel grid representation is proposed. In [

30], the event spike tensor representation is proposed as a voxel grid variation. In [

31], the time-ordered recent events representation is proposed for a wide range of applications (denoising, reconstruction, classification, pose estimation), where a first-in-first-out buffer is used to retain the most recent events at each location, while the others are ignored.

2.2. Event Data Representations in Lossless Compression

In the lossless compression domain, two event data representations, asynchronous event sequences, and event-accumulation EFs, were studied. In [

13], the authors propose a solution for encoding the raw asynchronous event sequences by removing the redundancy of the spatial and temporal information using three strategies: adaptive macro-cube partitioning structure, the address-prior mode, and time-prior mode. An extension was proposed in [

14] by introducing an event sequence octree-based cube partition and a flexible inter-cube prediction method based on motion estimation and motion compensation. In [

32], the authors present a complex performance analysis of different lossless data compression algorithms employed to compete against the Spike Coding approach proposed in [

14]. The study shows that several state-of-the-art traditional data compression codecs, such as the Lempel–Ziv–Markov chain algorithm (LZMA) [

33] developed by Igor Pavlov and the dictionary-based codec Zeta Library (ZLIB) [

34], provide a good coding performance. One can note that the comparison with [

13] and [

14] is not possible as their codec/source code is not made publicly available, and the PKU-DVS dataset [

35] is partially made available only for academic research purpose.

The study in [

32] was extended in [

15] by introducing the TALVEN algorithm for encoding the count-accumulated EF sequences so that they can be further encoded by employing the HEVC codec [

17]. For encoding event-accumulation EFs, in our prior work [

18,

19], we proposed to compare the coding performance with that of prior and current video coding standards, HEVC [

17] and VVC [

36], and of well-known state-of-the-art lossless image codecs, such as Context Adaptive Lossless Image Codec (CALIC) [

37], and Free Lossless Image Format (FLIF) codec [

38]. Experiments show that an improved performance is achieved when encoding an intensity-like event representation obtained using a symbol remapping technique where a set of five (consecutive) EFs is merged into a combined EF that contains (8-bit) intensity-like values.

In conclusion, the current coding solutions of event data, either based on event codecs [

13,

14,

15] or traditional data/image/video codecs [

17,

33,

34,

36,

37,

38], provide competitive variable-length representations designed to optimize the codec’s performance. In contrast, in this work, we propose a fixed-length representation designed to achieve low complexity and fast RA to any group of pixels, suitable for integration into VLP chips.

3. Proposed Framework

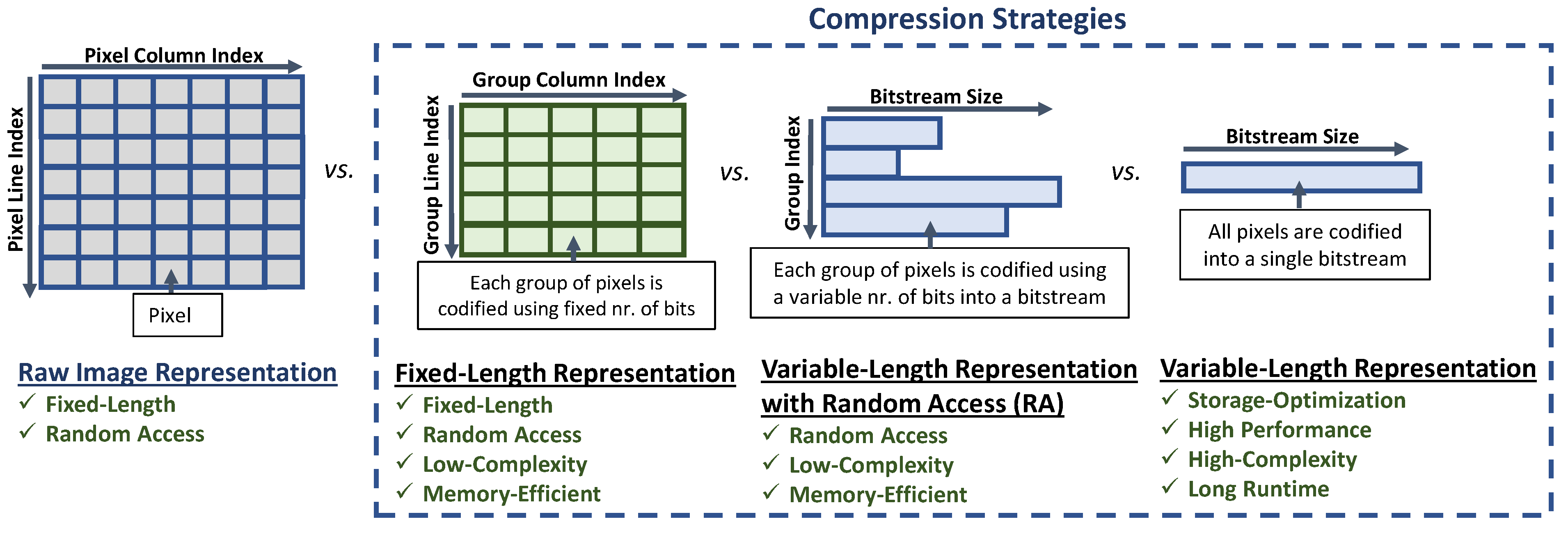

Figure 2 presents a comparison between the requirements imposed for different compression strategies. In a left-to-right order, the first representation is the raw image representation, where no compression strategy is employed as all pixels are represented uncompressed in memory using a fixed-length representation of the maximum number of bits required to store each symbol in the alphabet, and which provides random access to any pixel. The first compression strategy is the fixed-length representation, where on top of the fixed-length and random-access requirements, the codec must provide a more efficient representation of the image in the memory, i.e., to store the image using a reduced number of bits compared with raw representation. Moreover, such compression methods usually propose to encode together a group of pixels (e.g., a

pixel-block) and must have a very low computational complexity so that it can be directly hardware written in the sensors. The second compression strategy is the variable-length representation with random access, where the coding performance is further improved by removing the fixed-length representation of each group of pixels and where a header is introduced to store additional information needed to provide random access to each group of pixels. The last compression strategy is storage-optimized, i.e., it is designed to provide the highest coding performance possible without imposing any constraints on algorithmic complexity, runtime, or memory requirements.

One can note that the fixed-length representation requirement is a very hard constraint and does not allow the solution to achieve the best performance. The fixed-length and variable-length representations offer the possibility to apply any pre-processing algorithm to a group of pixels while still storing in memory the entire package of data. In general, variable-length representations, also known as performance-oriented codecs, are optimized to achieve the best coding performance without imposing any constraints on complexity, runtime, or having access to groups of pixels.

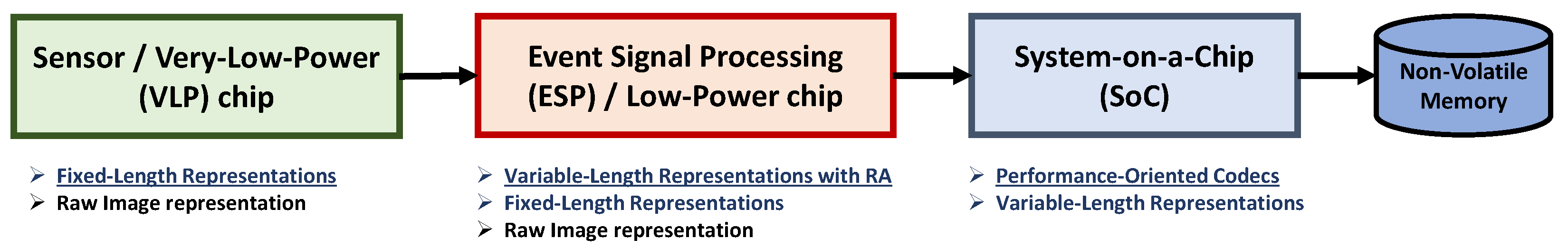

Figure 3 presents what type of compression strategies can be used for each type of chip. E.g., the VLP chips may incorporate a lossless compression method as long as its complexity is very low so that it is possible to be written on hardware, and each group of pixels can be encoded separately so that they can be packetized together. The ESP chip may incorporate a lossless compression method to reduce the amount of data to be transmitted. Such a chip contains a limited memory of a few MB, and the codec must be simple enough to be hardware written. While in general, the SoC chip may incorporate any compression method as long as the SoC specifications allow the coding method to be run on the SoC. Finally, the data are transmitted from the SoC chip to be stored on the non-volatile memory of a mobile phone (e.g., memory card) or a computer (e.g., hard disk drive).

In this work, we propose a fixed-length representation framework suitable for integration into VLP chips, which offers the possibility to store more efficiently in memory the input image compared with the raw image representation.

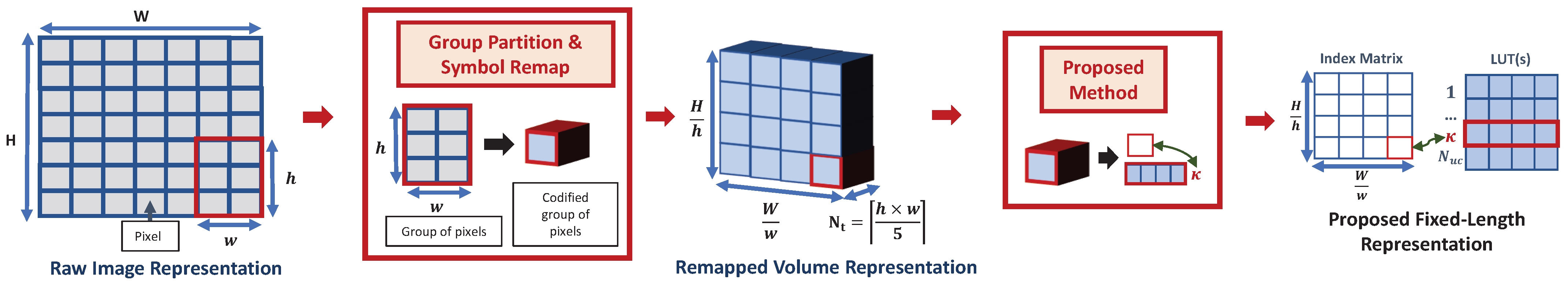

Figure 4 presents the proposed fixed-length representation framework for encoding the input raw image representation. The proposed strategy consists in first dividing the raw image into groups of pixels of

size, where each group is represented using a reduced set of (

) remapped symbols. The figure’s central part shows that the raw image can be represented as remapped volume having group-partition resolution and remapped-group-size depth. While the right part shows the proposed method is employed to store the remapped volume using two data structures: a matrix for storing using a fixed-length representation of an index and additional information and a set of one or more LUTs for storing the unique symbols combination used to remap the original raw data.

The section is organized as follows.

Section 3.1 presents the group partitioning and symbol remapping processes. The remapped volume is used to generate the proposed fixed-length representation consisting of an index matrix and a set of one or more LUTs.

Section 3.2 presents the proposed LUT-based Representations.

Section 3.3 presents the final algorithmic details.

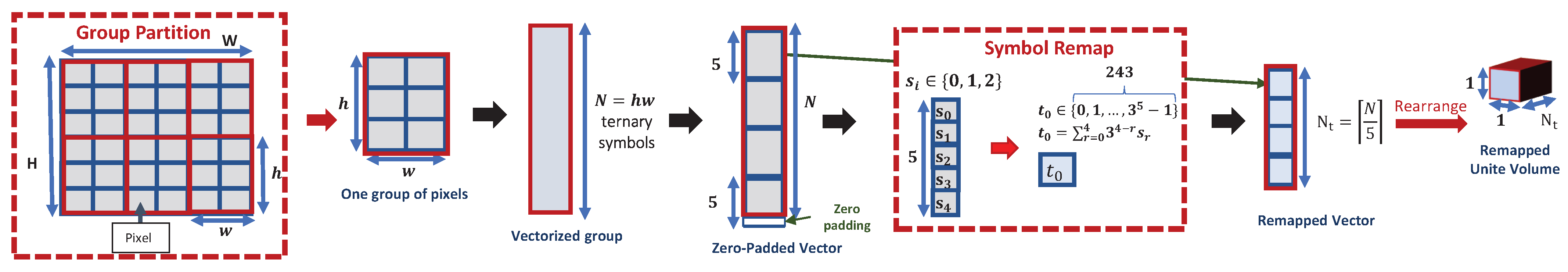

3.1. Group Partition and Symbol Remap

Let us denote

the input image of size

which stores using 2 bits a ternary symbol,

at each pixel position

In this work, we propose to partition

into

groups of pixels, where each group

of size

contains

ternary symbols. Firstly, each group of pixels

is vectorized as follows:

Next,

is then zero-padded up to the closest multiple-of-five length such that the zero-padded vector can be split into

subgroups of five ternary symbols, see

Figure 5. Let us denote the zero-padded group vector as

where

Each subgroup

k of five ternary symbols,

is then remapped into a symbol

t in the alphabet

(represented using 8 bits), by employing the following symbol remapping formula:

Figure 5 shows that

is remapped as follows:

Furthermore, can be further rearranged as a remapped unite volume of size

In conclusion, the proposed fixed-length representation first stores the input image

as a remapped volume

of size

as depicted in the middle part of

Figure 4. One can note that the input image

having a raw image representation of

bits, stored in memory using the proposed remapped volume representation,

using

i.e., the input memory size is reduced with a percentage of

A maximum memory reduction of

(or a compression ratio of

see

Section 4) is obtained when the pixel-group size,

is selected as a multiple-of-five number.

3.2. Proposed LUT-Based Representations

The proposed fixed-length representation is designed to encode each group

using an index value (represented on a specific number of bits) stored in the index matrix

The main idea is to find all unique combinations of

symbols found in any vector

and store them in memory using a novel LUT-based structure that continues to provide a fixed-length representation. Therefore, the remapped volume representation,

is represented using a fixed number of bits by the index matrix

and the LUT-based structure, see the right part of

Figure 4, where

contains all the necessary information to extract

from the LUT-based structure. Several fixed-length representation solutions based on different levels of LUT indexing are proposed. A variable-length representation solution for storing the LUT-based structure is also studied.

Section 3.2.1,

Section 3.2.2, and

Section 3.2.3 present the proposed fixed-length representation solutions based on a single-level LUT, double-level LUTs, and multi-level LUT structures, respectively.

Section 3.2.4 presents the variable-length representation solution for compressing the LUT-based structure.

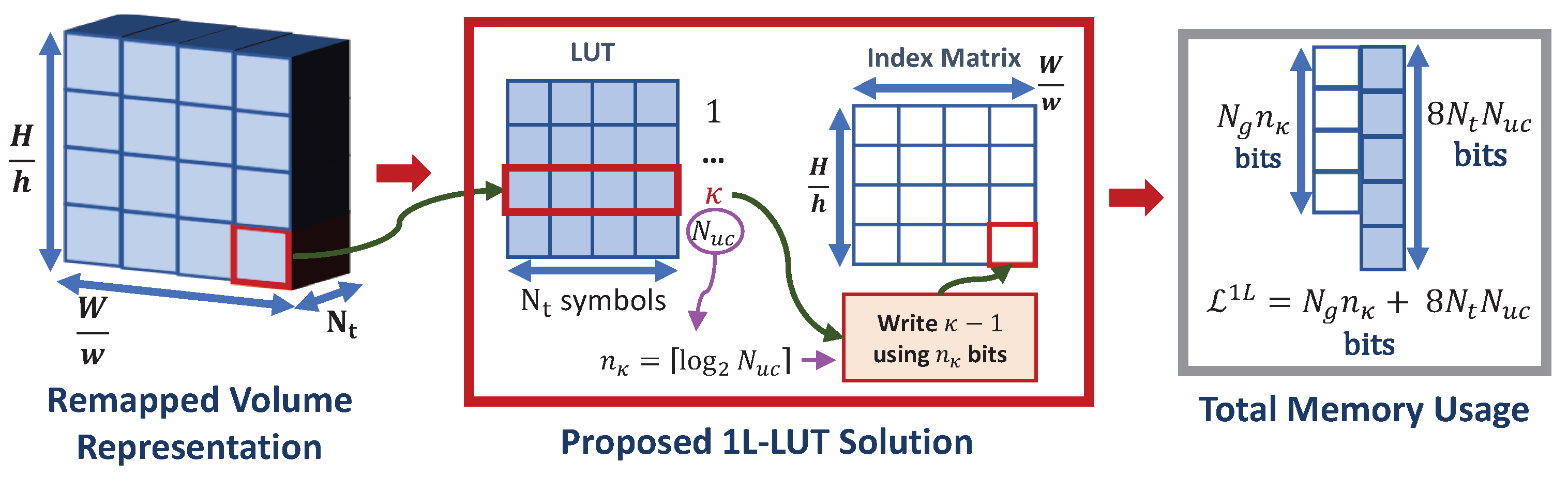

3.2.1. Single-Level LUT Solution

The proposed single-level LUT solution, called 1L-LUT, introduces a simple fixed-length representation by storing the

unique combinations of

symbols using a single LUT table structure, which requires

In such case,

stores an index

using

bits, where

is extracted from LUT as

The index matrix

is represented using

The number of bits needed to represent

using the 1L-LUT solution is computed as follows:

Figure 6 depicts the proposed 1L-LUT solution. One can note that the value

(instead of

) is written in

using

bits as

For example, if

then only two bits are needed to represent the index

instead of three (

).

depends on the group size,

as follows:

- (i)

If N is too small, then contains too much information, and is too large as too many unique combinations are stored by LUT;

- (ii)

If N is too large, then contains too less information, and each line in LUT contains too much information as is too large.

An optimal provides the smallest memory representation for the 1L-LUT solution, however, its coding performance remains limited.

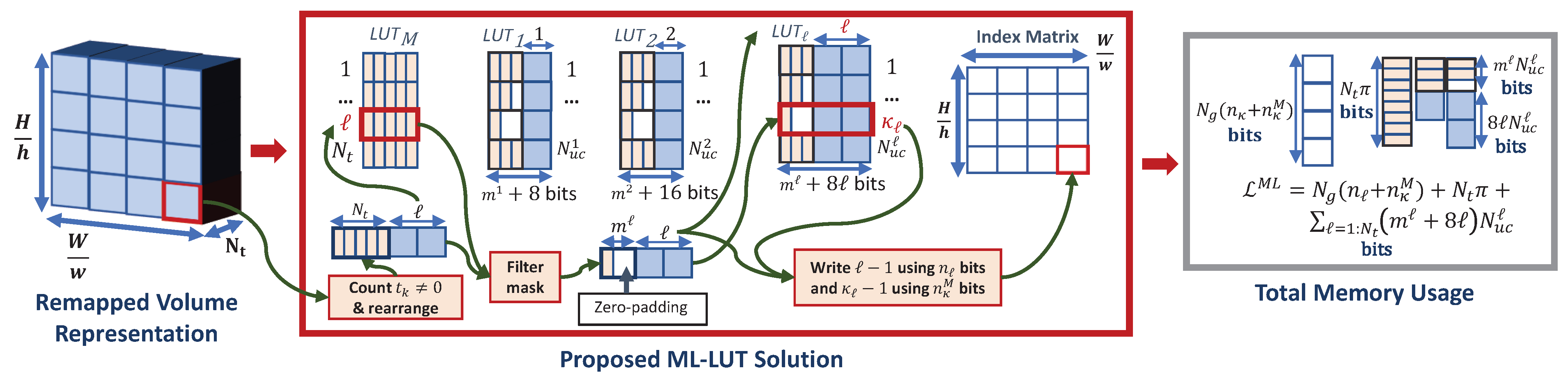

3.2.2. Double-Level LUT Solution

The proposed double-level LUT solution, called 2L-LUT, introduces a fixed-length representation where the

unique combinations of

symbols are further divided into a set of

LUTs,

, to achieve an improve compression performance and faster search of the unique combinations. The main idea is that

is first classified into

classes by counting the number of non-zero values as

2L-LUT propose to created

LUTs, where the

ℓ-th LUT, denoted LUT

stores

unique combinations of

symbols found in any vector

One can note that for

, no LUT is needed to be stored in memory as a single deterministic case can be found: all values are zero. In such case,

stores the LUT index,

using

bits, see Equation (

9), and the index in the LUT,

using

bits, see Equation (

10), i.e., using the maximum number of bits needed to represent the largest number of unique combinations in the set of LUTs. Hence,

stores

ℓ using the first

bits and

using the following

bits.

is extracted from the

ℓ-th LUT as

Therefore,

is stored by 2L-LUT using

bits, see Equation (

11).

The set of

LUTs,

, contains

entries. 2L-LUT makes use of the information that

(the

ℓ-th LUT) contains

ℓ non-zero symbols by introducing a mask of

binary symbols,

where the

ℓ-th mask symbol is set by checking if the corresponding symbol

is or not symbol

in the alphabet, see Equation (

12).

The

ℓ non-zero symbols are stored using

bits. Hence, 2L-LUT stores LUT

in memory using

bits, see Equation (

13), and

using

bits, see Equation (

14).

Figure 7 depicts the proposed 2L-LUT solution. Note that

can be computed only after populating the entire set of LUTs with unique combinations of

symbols. Therefore, the following bit-cleaner procedure,

BitCleaner(

), is employed to guarantee a single pass encoding of

BitCleaner() procedure:

- (i)

Compute as i.e., in the extreme case each group contains a unique combination of symbols.

- (e)

Employ 2L-LUT and write each value using bits instead of bits.

- (b1)

Compute

using Equation (

10) and

- (b2)

Remove the unnecessary bits, from the representation of each index value

In the encoding algorithm, one can note that step (i) is employed in the initialization stage, step (e) in the encoding stage; and steps (b1)–(b2) in the final processing stage of the final bitstream, generated for encoding the index matrix.

A similar procedure was developed to post-process the bitstream and remove the unnecessary bits used in the signaling of ℓ for the large group size case, where not all LUTs are used in the proposed LUT-based representation, i.e., only the first few LUTs are used as, for example, has the lowest probability to be used included in the LUT set. Therefore, the number of bits needed to represent ℓ is the number of bits needed to represent the index of the last LUT in the set for which The bit-cleaner procedure, BitCleaner(), is employed.

BitCleaner() procedure:

- (i)

Compute

- (e)

Employ 2L-LUT and write each value using bits.

- (b1)

Compute

- (b2)

Remove the extra bits, from the representation of each

Similarly, step (i) is employed in the initialization stage, (e) in the encoding stage; and steps (b1)–(b2) in the final processing stage of

3.2.3. Multi-Level LUT Solution

When the selected group size is very large, for example, for

the memory allocated to store the LUT-based structure of 2L-LUT contains a lot of redundancy. One can note that in such a case, the number of unique combinations in the set of LUTs is reduced, and the unique combinations are more populated with the symbol

the most frequent symbol in the alphabet. In this work, we propose to modify 2L-LUT for very large block sizes further and introduce the multi-level LUT solution called ML-LUT. ML-LUT introduces an extra LUT compared with 2L-LUT, called LUT mask and denoted

to store a mask associated with each LUT in

i.e., line

ℓ in

is associated with

Therefore, the position

k in line

is set by checking if all the unique combinations stored by

contain a symbol

on the

k-th position, i.e.,

see Equation (

15). Let us denote

as the number of lines in

and

as the number of bits required to store

computed using Equation (

16).

Figure 8 depicts the proposed ML-LUT solution. One can note that, by using

only for the positions

, we can employ Equation (

12) to generate the mask. Let us denote

the number of positions for which Equation (

12) is employed. Since

depends on the structure of the found unique combination, a variable-length representation would be required to store in memory the LUT-based structure of ML-LUT efficiently. However, to guarantee a fixed-length representation, we propose to store each updated mask using a fixed-length representation of

bits. Hence, Equation (

13) is updated as Equation (

17), and Equation (

14) is updated as Equation (

18).

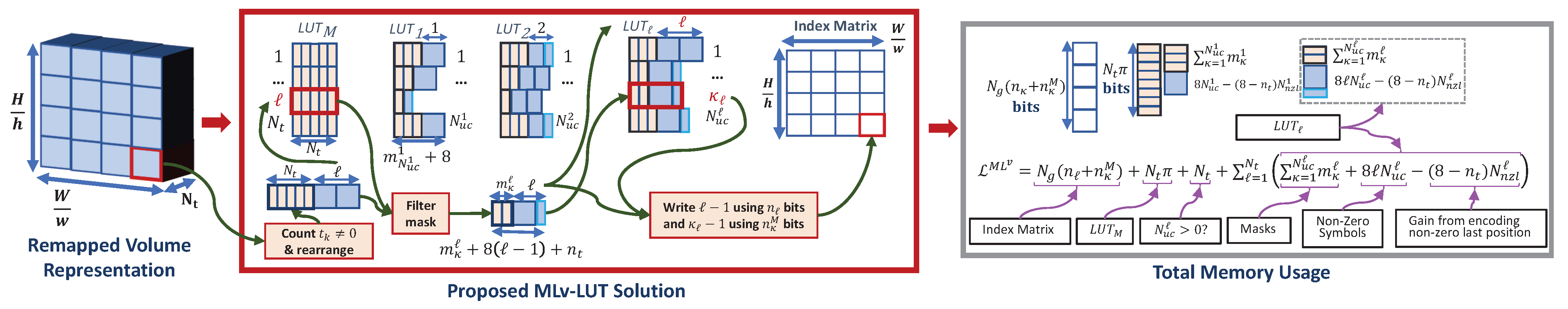

3.2.4. Variable-Length Solution

In this work, we also studied the possibility of relaxing the constraint of fixed-length representation for encoding the LUT-based structure and introducing a variable-length multi-level LUT solution called MLv-LUT, see

Figure 9. MLv-LUT simply modifies the fixed-length representation version, ML-LUT, by introducing two variable-length strategies for storing each

without where the zero-padding procedure is removed from the generation of the filtered mask and the last non-zero symbol

Firstly, instead of storing each filtered mask using a fixed number of bits,

we propose to store each filtered mask using the exact number of bits,

and avoid the zero-padding process. The cost of storing the filtered mask of

is computed by (

19).

Secondly, the last non-zero symbol

can be efficiently represented using less then 8 bits by removing the zero-padding process of

In such case, the last group of ternary symbols in

is

and has a length of

symbols, where

is computed by Equation (

20). We can now update Equation (

3) as Equation (

21) for remapping

If

then

and, instead of 8 bits, only

bits are needed to store

where

is computed using Equation (

22). Let us denote

, the number of unique combinations in

which contain a non-zero symbol on the last position. The gain obtained by employing such a strategy is computed by (

23). Due to the variable-length representation of the LUT-based structure, an additional cost of

bits, computed by (

24), is required to signal which LUTs are included in the LUT set, i.e., for which

Finally, MLv-LUT stores in memory

using

bits, where

is computed by (

25) using (

11), (

16), (

19), (

23), and (

24).

3.3. Algorithmic Details

The

encoder implementation of the proposed 2L-LUT/ML-LUT fixed-length representation is presented in Algorithm 1. The threshold

is used to select the appropriate solution based on the group size information. One can note that the index matrix is initially encoded as bitstream

using steps 5–22 in Algorithm 1. The proposed LUT-based representation is encoded as bitstream

using steps 23–39 in Algorithm 1. Bitstream

is finally updated using steps 40–41 in Algorithm 1 by removing the unnecessary bits based on the information provided by the proposed LUT-based representation. The output bitstream,

concatenates

and

in this order.

| Algorithm 1:

Encode using ML-LUT |

![Electronics 12 02302 i001 Electronics 12 02302 i001]() |

The pseudo-code provided by Algorithm 1 is used to encode the input image and store the data on the disk, while (

14) and (

18) compute the total memory needed to store in memory all the necessary information.

The decoder implementation of the proposed 2L-LUT/ML-LUT fixed-length representation is presented in Algorithm 2. Similarly, the threshold is used to select the appropriate solution based on the group size information. The proposed LUT-based representation is first decoded from the first part of using steps 7–24 in Algorithm 2. The index matrix, is decoded from the remaining part of using steps 26–29 in Algorithm 2, i.e., ℓ and are decode on steps 28 and 29 in Algorithm 2, respectively. Note that the inverse remap process consists in first representing each symbol in in base 3 to generate five ternary symbols and then selecting with the first ternary symbols. Finally, is generated using the proposed LUT-based representation and using steps 30–36 in Algorithm 2.

Algorithm 1 and Algorithm 2 are used to introduce the encoder and decoder of the proposed fixed-length ML-LUT method, respectively. In the case of the proposed variable-length MLv-LUT method, the two algorithms may be further modified using the algorithmic description presented in

Section 3.2.4, i.e., by modifying steps 33–34 in Algorithm 1 and steps 21–22 in Algorithm 2.

The size of

i.e., the compressed file size, is usually smaller than the total memory usage size computed by

or

In this work,

and

are computed to report the total memory usage of each proposed fixed-length method, while

is computed to report the optimal storage size of the proposed framework.

| Algorithm 2:

Decode using ML-LUT |

![Electronics 12 02302 i002 Electronics 12 02302 i002]() |