Syntheses of Dual-Artistic Media Effects Using a Generative Model with Spatial Control

Abstract

1. Introduction

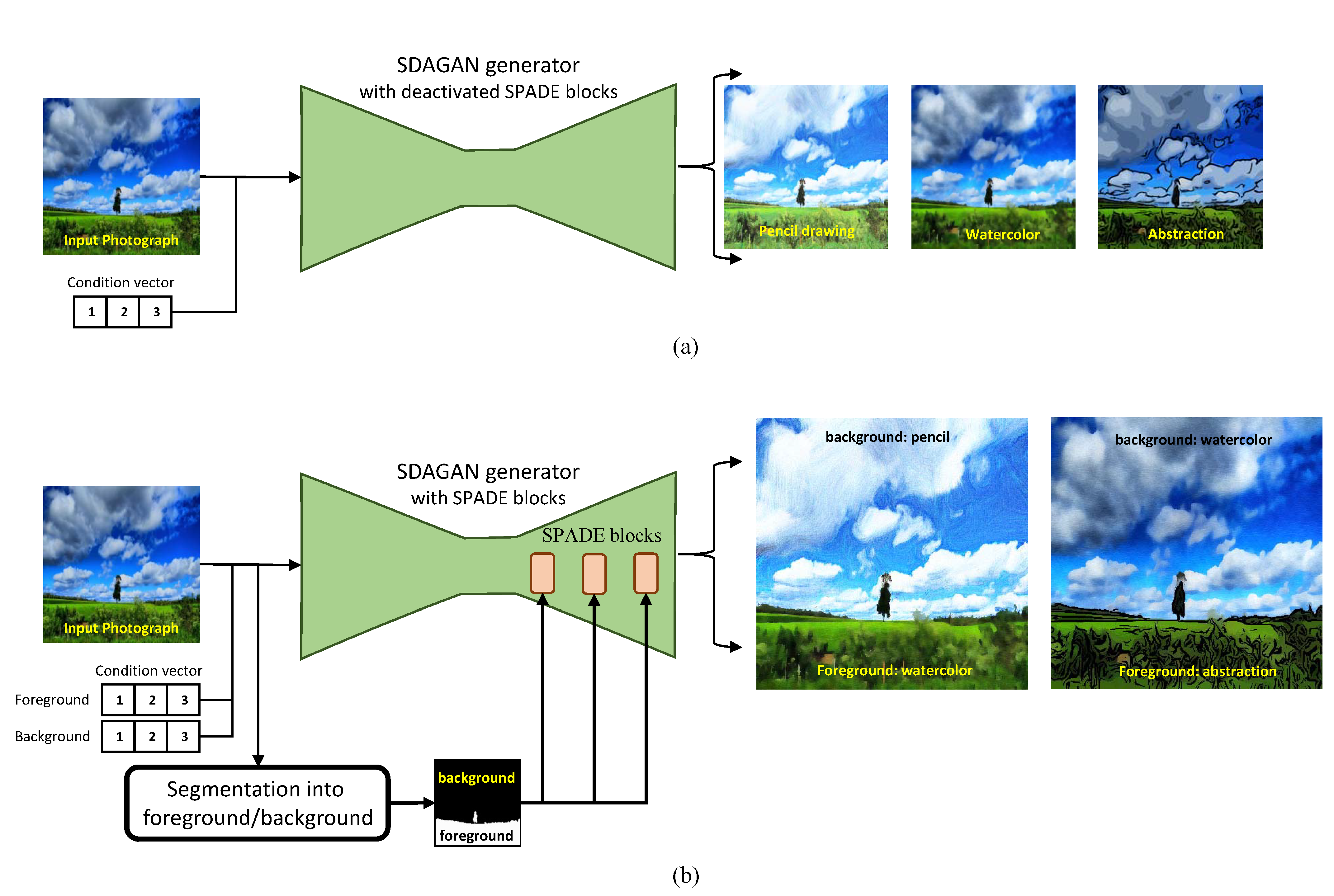

- We build a paired dataset of photographs and their matching artwork images by applying existing techniques that synthesize various artistic media effects. This approach lets us generate artwork images with very realistic artistic media effects. Using this dataset, we apply the pix2pix approach to translate a photograph into artwork images of various styles, including abstraction, watercolor and pencil drawing.

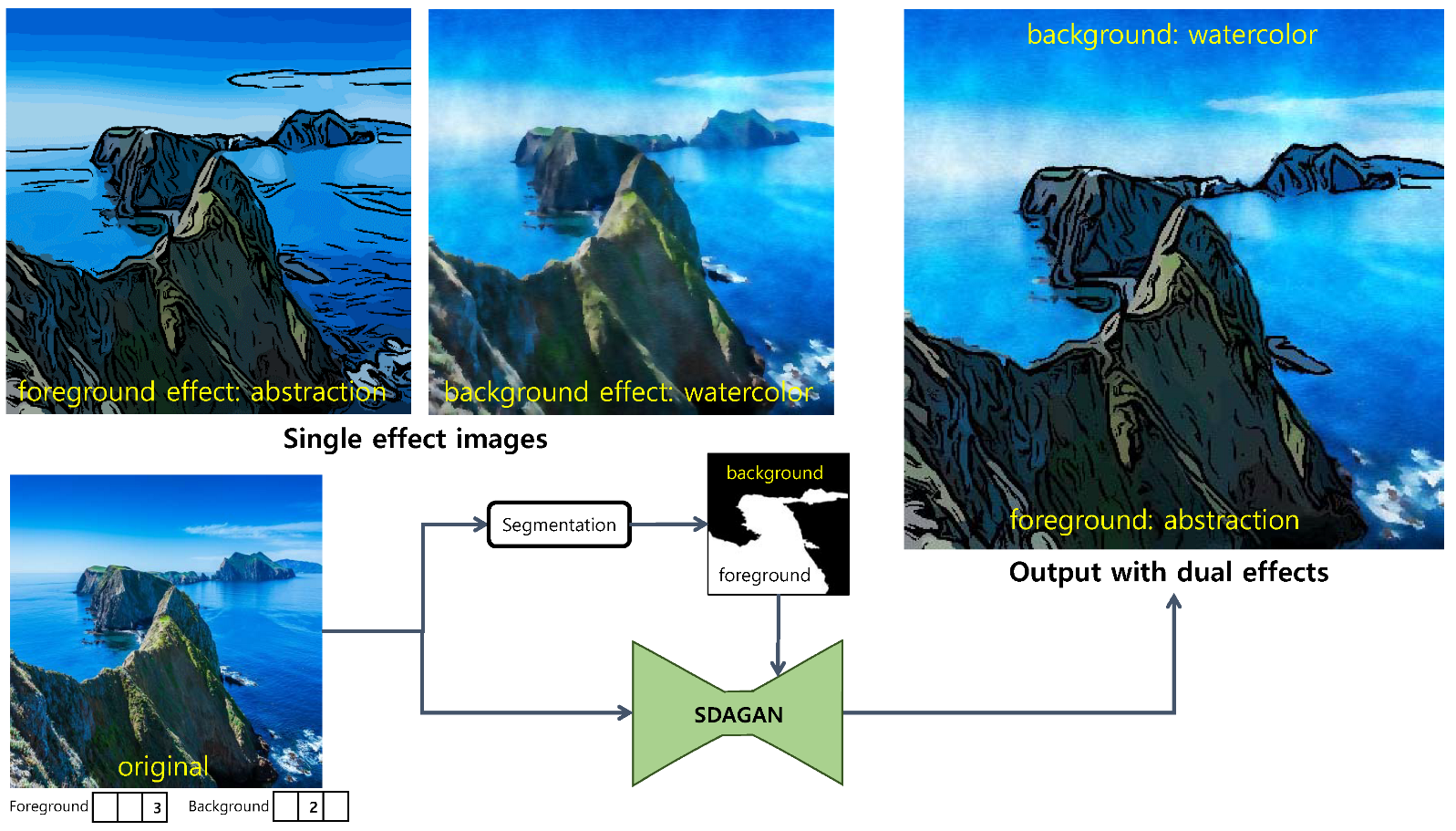

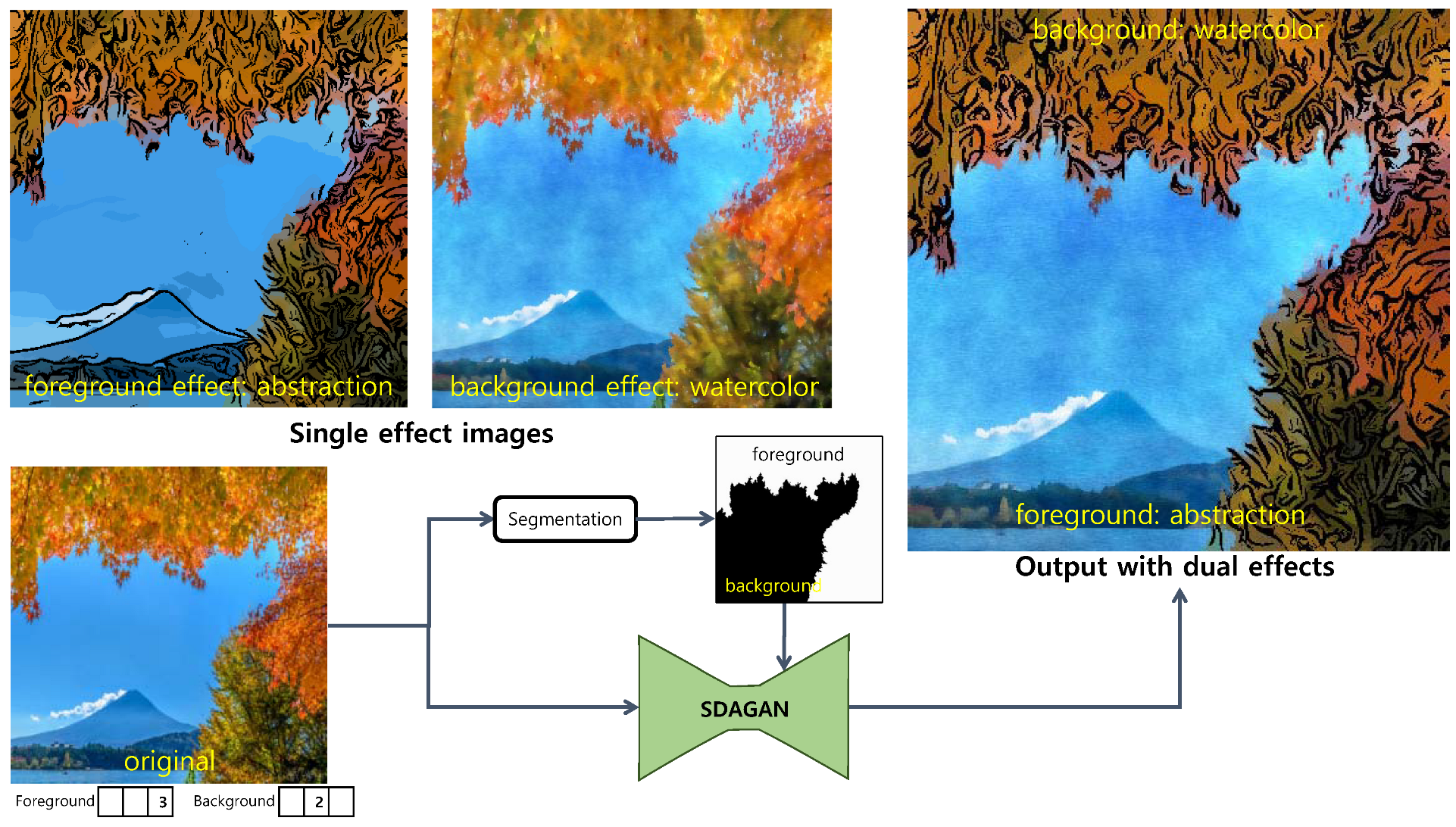

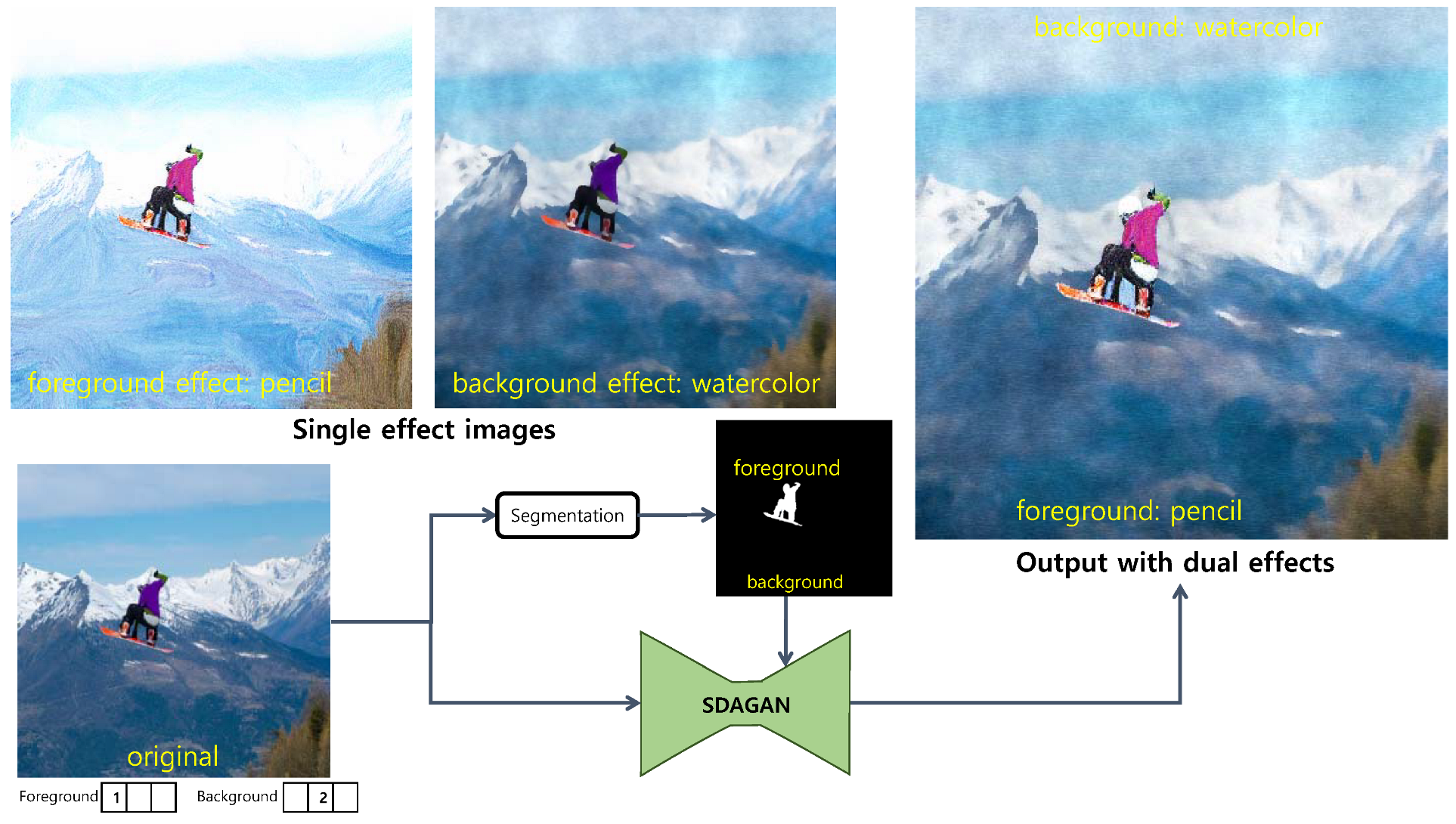

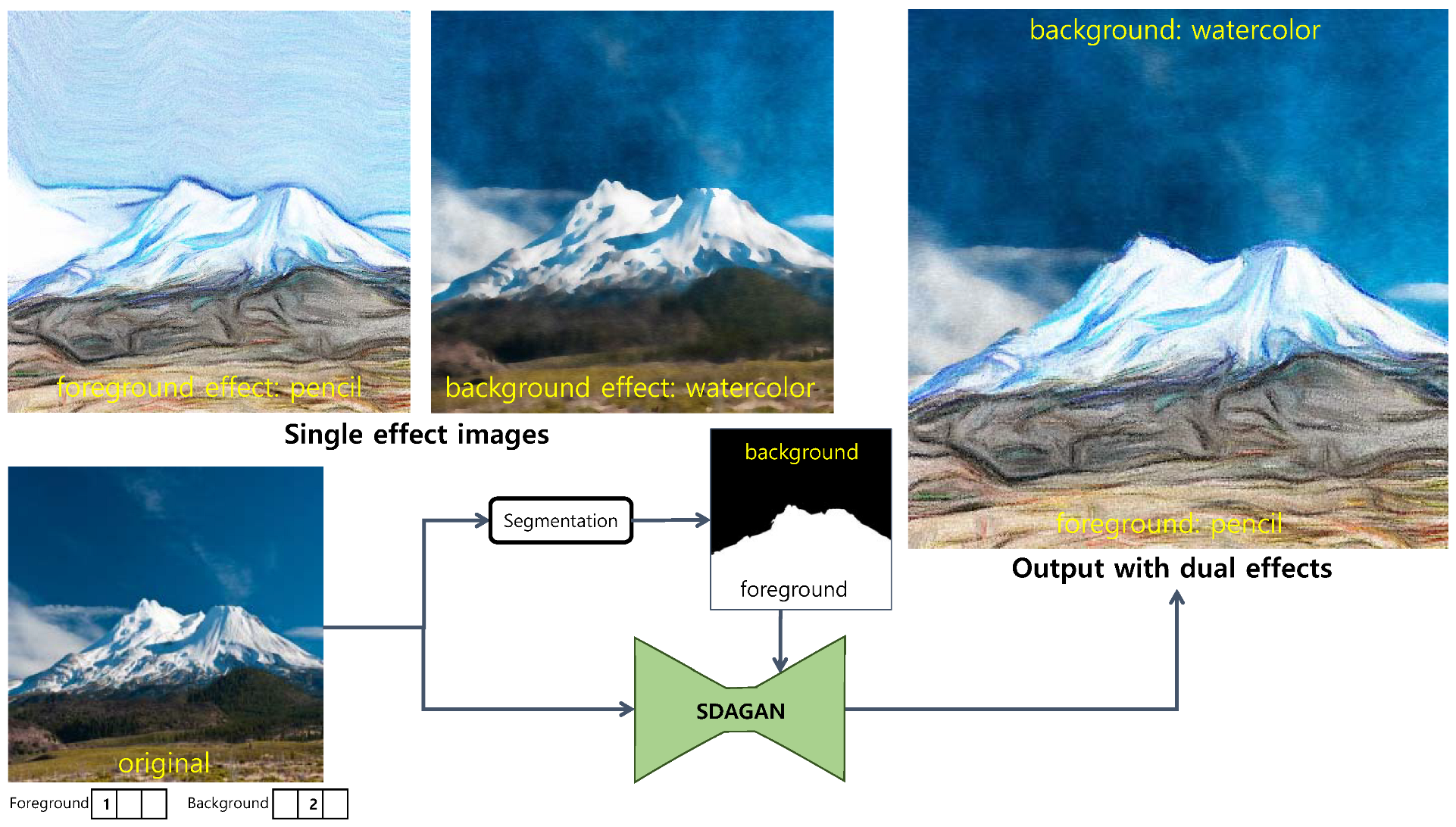

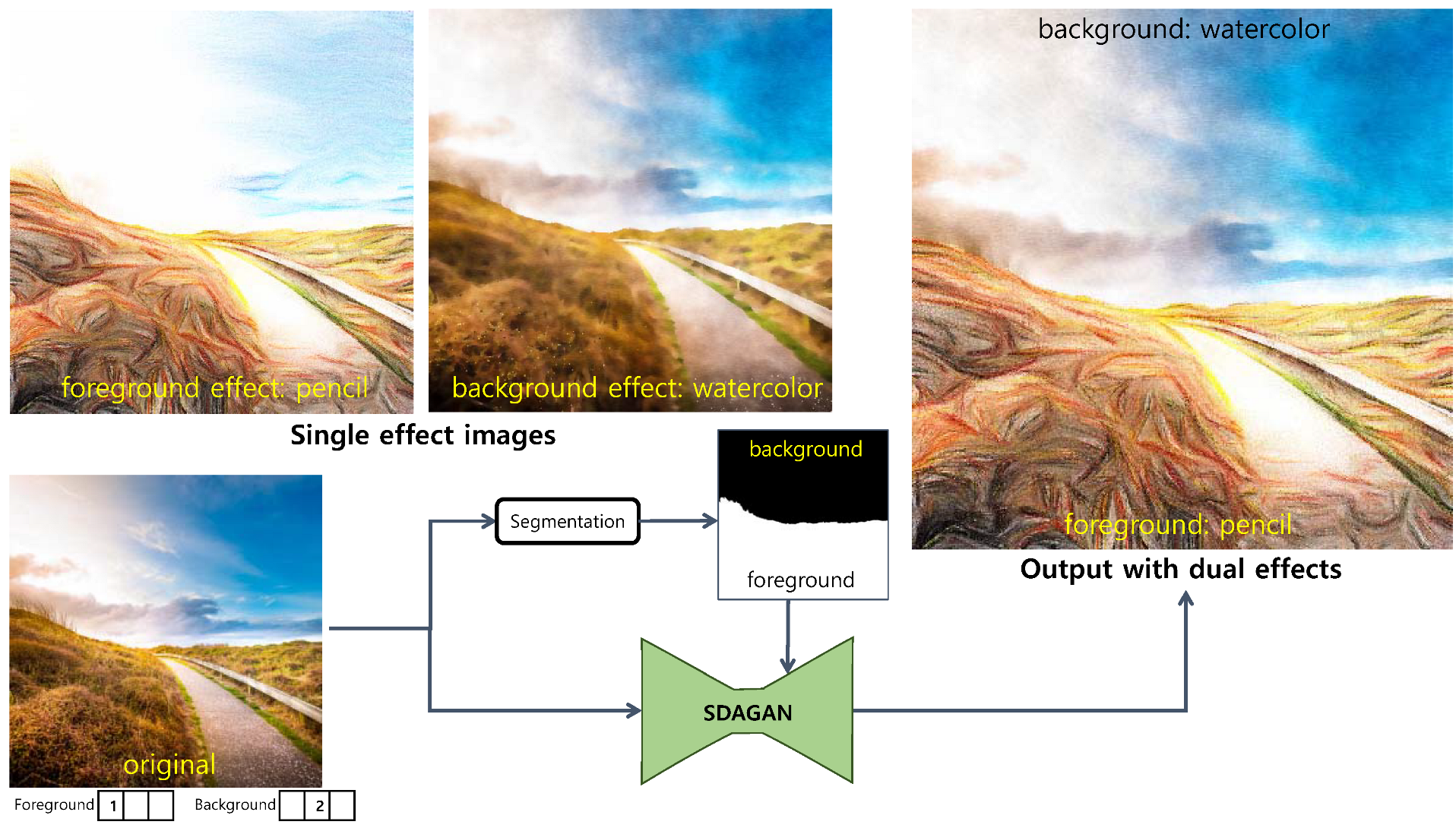

- We develop a framework that synthesizes dual artistic media effects using region information extracted by a semantic segmentation scheme. For this purpose, we employ the SPADE module introduced in GauGAN [7]. Our framework produces an artwork image whose foreground and background are depicted with different artistic media effects.
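The idea behind region-controlled modulation in the second contribution can be illustrated with a minimal sketch. It assumes a single feature channel and a binary foreground/background mask, and it uses fixed per-region scale and shift constants; in SPADE these values are produced by learned convolutions over the segmentation map. The function name `spade_like_modulation` and all numeric values are hypothetical and are not taken from the authors' implementation.

```python
from statistics import fmean, pstdev


def spade_like_modulation(feature, mask, gamma, beta, eps=1e-5):
    """Normalize one feature channel, then scale and shift it per region.

    feature: 2D list of floats (one channel of an activation map)
    mask:    2D list of ints with the same shape (0 = background, 1 = foreground)
    gamma, beta: dicts mapping a region label to a scale / shift value
                 (assumed constants; SPADE learns them from the mask)
    """
    # Parameter-free normalization over all spatial positions of the channel.
    flat = [v for row in feature for v in row]
    mu = fmean(flat)
    sigma = pstdev(flat, mu)

    # Spatially varying modulation: each pixel uses the parameters of its region.
    out = []
    for f_row, m_row in zip(feature, mask):
        out.append([
            gamma[m] * (v - mu) / (sigma + eps) + beta[m]
            for v, m in zip(f_row, m_row)
        ])
    return out


# Toy 2x2 activation map: top row is foreground, bottom row is background.
feature = [[1.0, 2.0], [3.0, 4.0]]
mask = [[1, 1], [0, 0]]
modulated = spade_like_modulation(
    feature, mask, gamma={0: 0.5, 1: 2.0}, beta={0: -1.0, 1: 1.0}
)
for row in modulated:
    print(row)
```

Because the scale and shift depend on the region label of each pixel, the same normalized activation is pushed toward different statistics in the foreground and in the background, which is what allows two media effects to coexist in one output.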

2. Related Work

2.1. Procedural Work

2.1.1. Abstraction and Line Drawing

2.1.2. Watercoloring

2.1.3. Pencil Drawing

2.2. Deep Learning-Based Work

2.3. Region-Based Work

2.4. Dual Style Transfer

3. Our Generative Model with Spatial Control

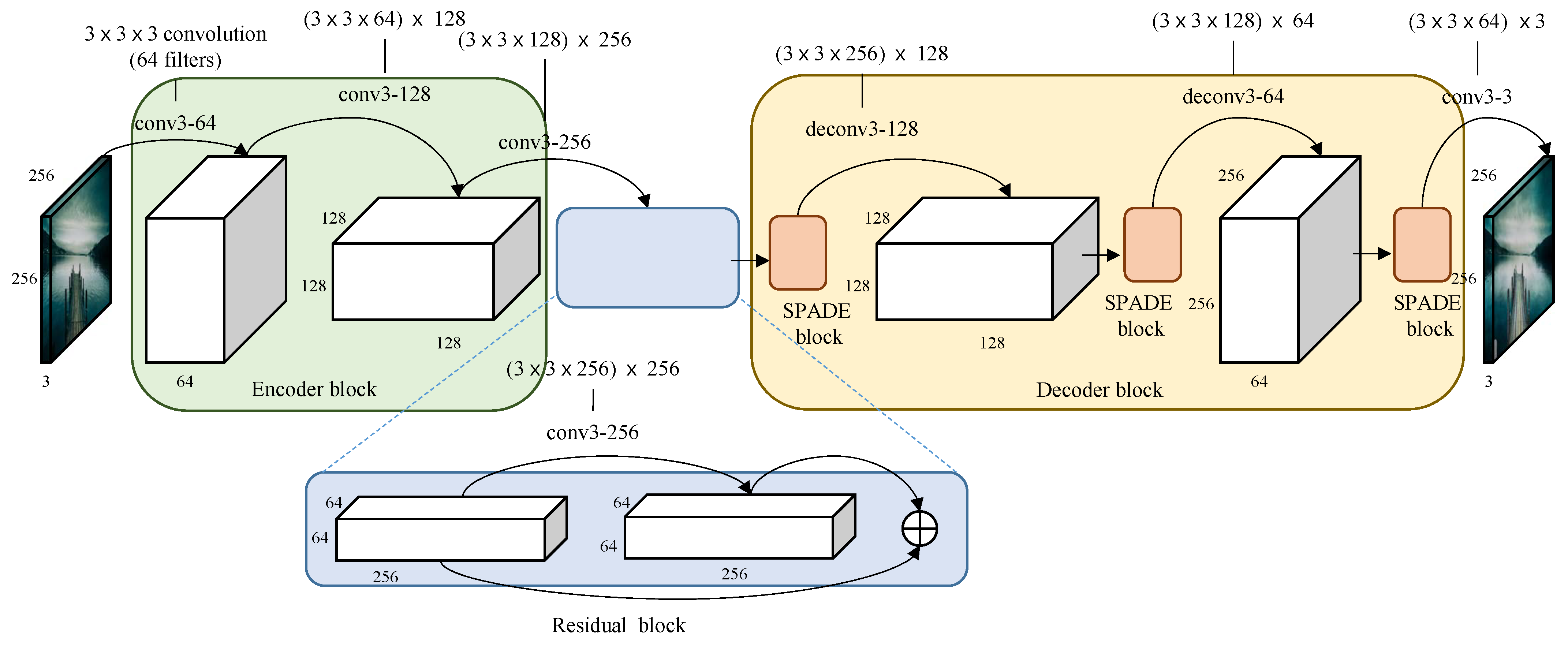

3.1. Generator

3.1.1. Encoder Block

3.1.2. Residual Block

3.1.3. SPADE Block

3.1.4. Decoder Block

3.2. Discriminator

3.3. Loss Function

4. Training

4.1. Generation of the Training Dataset

4.2. Foreground/Background Segmentation

5. Implementation and Results

5.1. Implementation

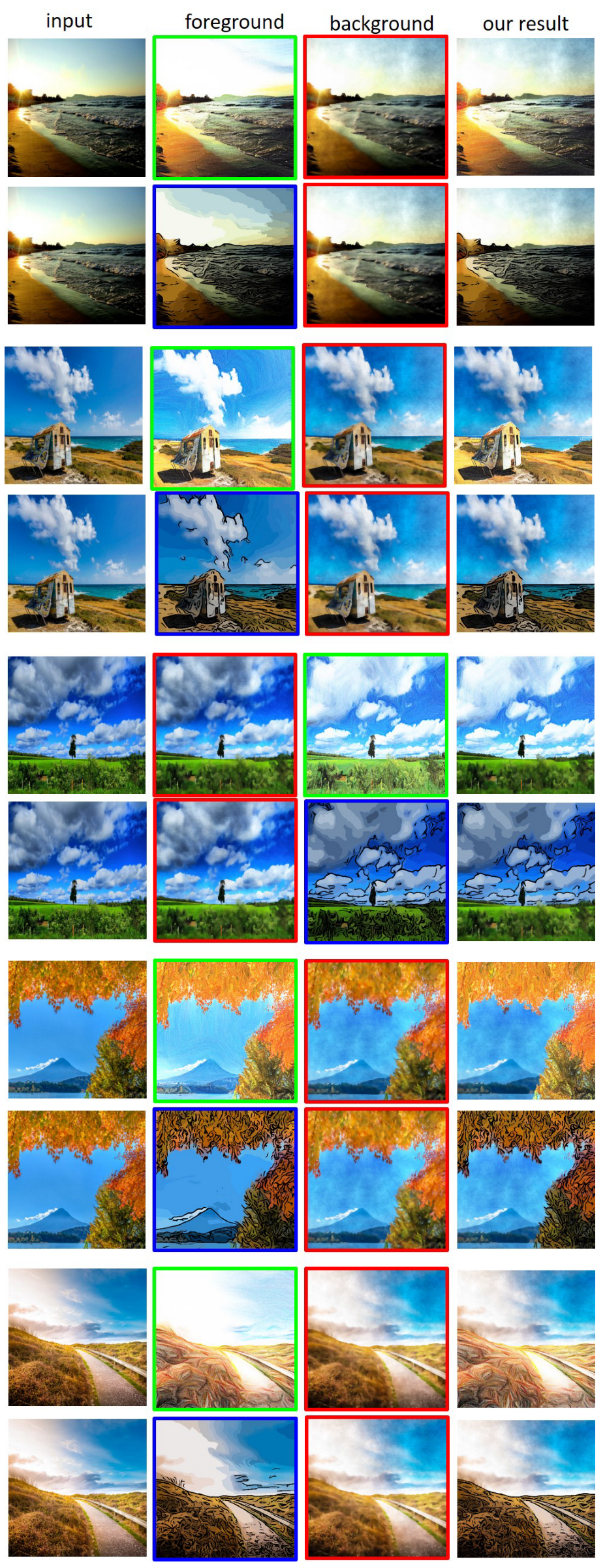

5.2. Results

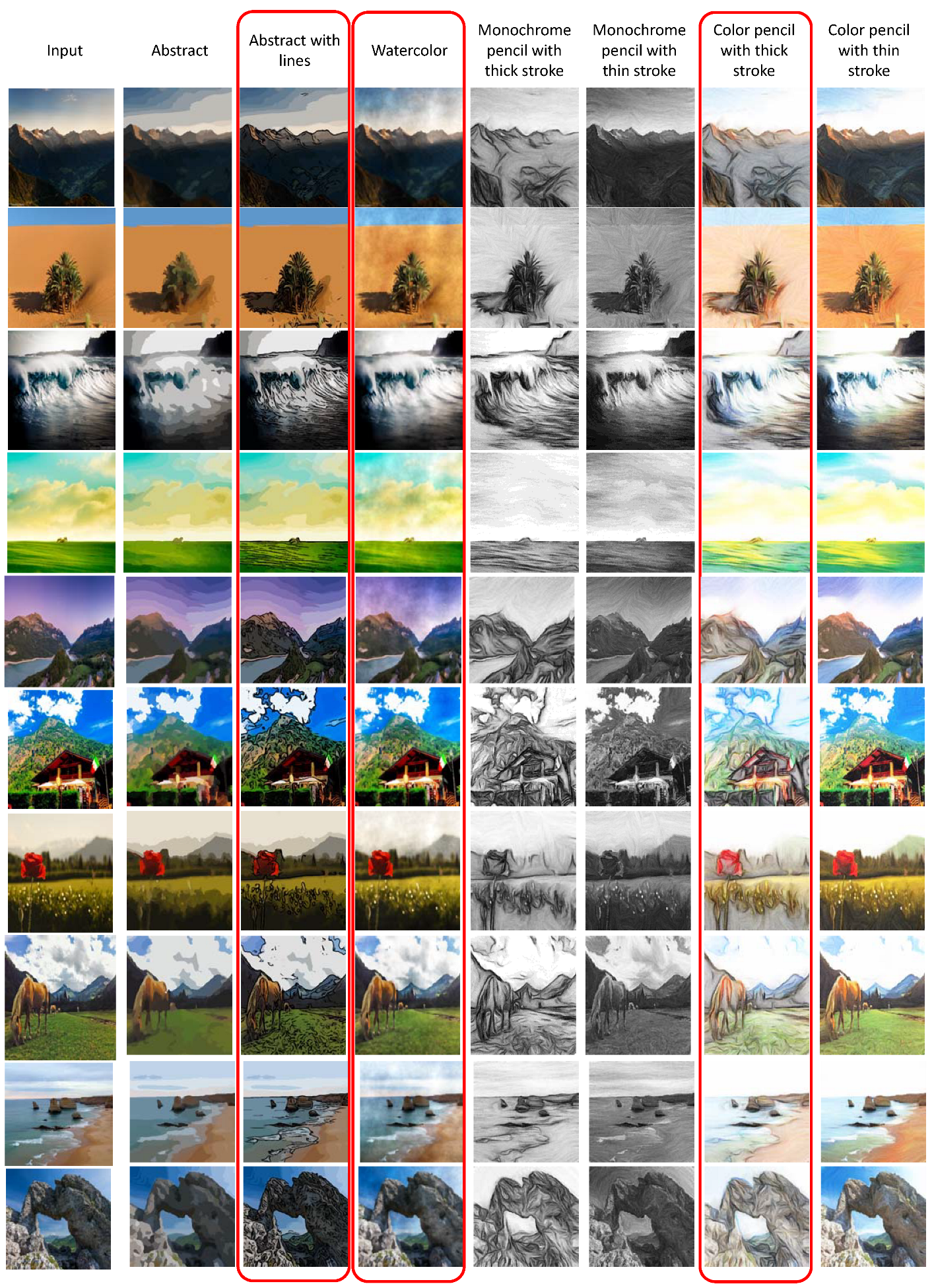

5.2.1. Synthesis of Single Artistic Media Effects

5.2.2. Synthesis of Dual Artistic Media Effects

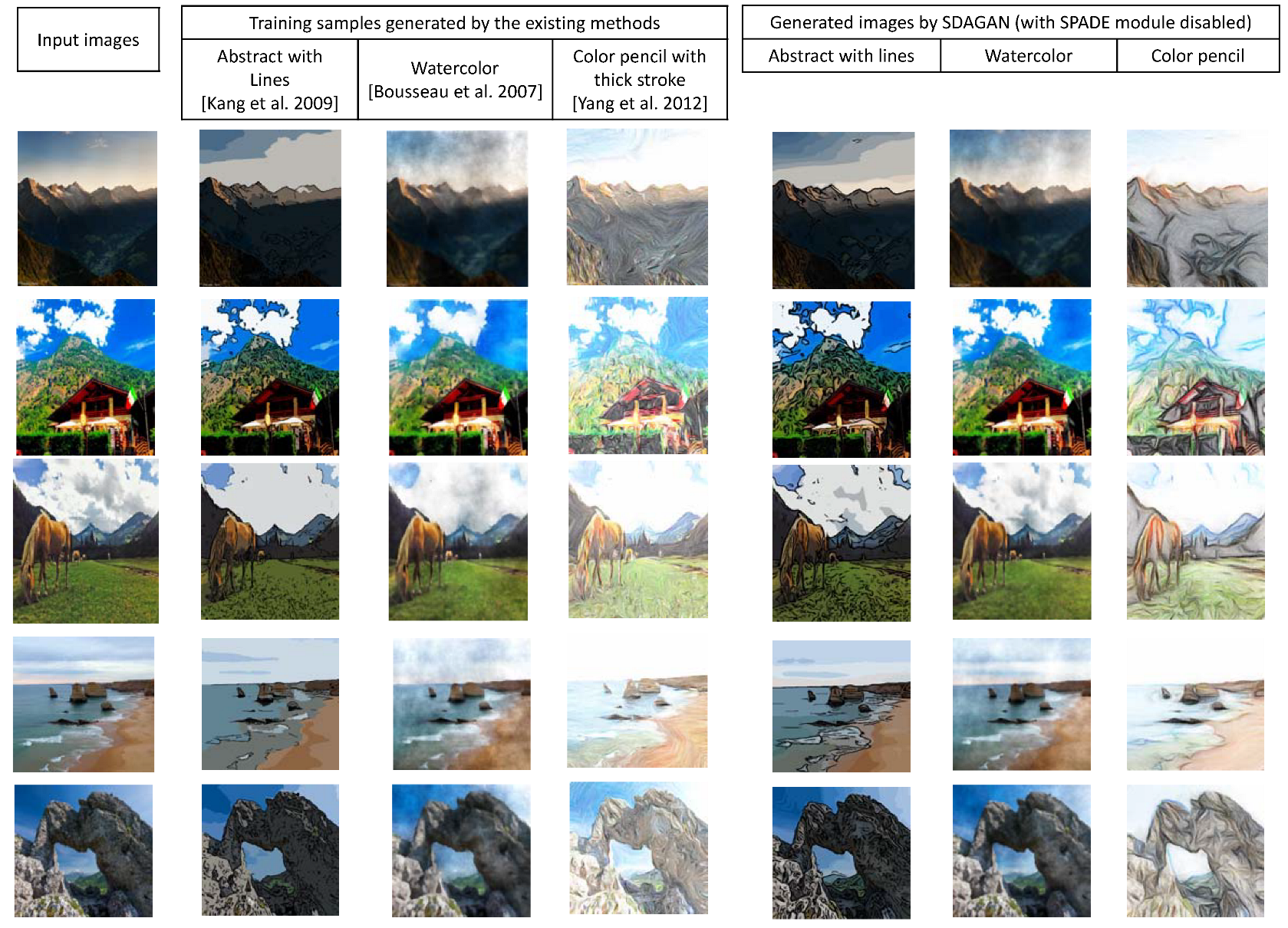

5.3. Comparison to Existing Studies

5.4. Evaluation

5.4.1. FID Evaluation

5.4.2. User Survey

- q1. Evaluate the stylization of each stylized image. Mark the degree of stylization on a five-point scale: 1 for least stylized, 2 for less stylized, 3 for medium, 4 for more stylized, 5 for maximally stylized.

- q2. Evaluate how much information from the input image is preserved in each stylized image. The information includes details of objects in the scene as well as tone and color. Mark the degree of preservation on a five-point scale: 1 for least preserved, 2 for less preserved, 3 for medium, 4 for somewhat preserved, 5 for maximally preserved.

5.5. Analysis

5.5.1. t-Test

5.5.2. Effect Size

5.6. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kyprianidis, J.; Kang, H. Image and video abstraction by coherence-enhancing filtering. Comput. Graph. Forum 2011, 30, 593–602.

2. Bousseau, A.; Neyret, F.; Thollot, J.; Salesin, D. Video watercolorization using bidirectional texture advection. ACM Trans. Graph. 2007, 26, 104:1–104:10.

3. Yang, H.; Kwon, Y.; Min, K. A stylized approach for pencil drawing from photographs. Comput. Graph. Forum 2012, 31, 1471–1480.

4. Gatys, L.; Ecker, A.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 2411–2423.

5. Isola, P.; Zhu, J.; Zhou, T.; Efros, A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

6. Zhu, J.; Park, T.; Isola, P.; Efros, A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

7. Park, T.; Liu, M.; Wang, T.; Zhu, J. Semantic image synthesis with spatially-adaptive normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 2337–2346.

8. Kang, H.; Lee, S.; Chui, C. Flow-based image abstraction. IEEE Trans. Vis. Comput. Graph. 2009, 15, 62–76.

9. Champandard, A. Semantic style transfer and turning two-bit doodles into fine artworks. arXiv 2016, arXiv:1603.01768.

10. Huang, X.; Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017; pp. 1501–1510.

11. Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; Yang, M. Universal style transfer via feature transforms. In Proceedings of the Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 385–395.

12. Castillo, C.; De, S.; Han, X.; Singh, B.; Yadav, A.; Goldstein, T. Son of Zorn’s lemma: Targeted style transfer using instance-aware semantic segmentation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing 2017, New Orleans, LA, USA, 5–9 March 2017; pp. 1348–1352.

13. DeCarlo, D.; Santella, A. Stylization and abstraction of photographs. In Proceedings of the ACM Computer Graphics and Interactive Techniques 2002, San Antonio, TX, USA, 21–26 July 2002; pp. 769–776.

14. Winnemoller, H.; Olsen, S.; Gooch, B. Real-time video abstraction. In Proceedings of the ACM Computer Graphics and Interactive Techniques 2006, Boston, MA, USA, 30 July–3 August 2006; pp. 1221–1226.

15. Kang, H.; Lee, S. Shape-simplifying image abstraction. Comput. Graph. Forum 2008, 27, 1773–1780.

16. Kyprianidis, J.; Kang, H.; Dollner, J. Image and video abstraction by anisotropic Kuwahara filtering. Comput. Graph. Forum 2009, 28, 1955–1963.

17. DeCarlo, D.; Finkelstein, A.; Rusinkiewicz, S.; Santella, A. Suggestive contours for conveying shape. ACM Trans. Graph. 2003, 22, 848–855.

18. Kang, H.; Lee, S.; Chui, C. Coherent line drawing. In Proceedings of the 5th Non-Photorealistic Animation and Rendering Symposium, San Diego, CA, USA, 4–5 August 2007; pp. 43–50.

19. Winnemoller, H.; Kyprianidis, J.; Olsen, S. XDoG: An eXtended difference-of-Gaussians compendium including advanced image stylization. Comput. Graph. 2012, 36, 740–753.

20. Curtis, C.; Anderson, S.; Seims, J.; Fleischer, K.; Salesin, D. Computer-generated watercolor. In Proceedings of the ACM Computer Graphics and Interactive Techniques 1997, Los Angeles, CA, USA, 3–8 August 1997; pp. 421–430.

21. Bousseau, A.; Kaplan, M.; Thollot, J.; Sillion, F. Interactive watercolor rendering with temporal coherence and abstraction. In Proceedings of the Non-Photorealistic Animation and Rendering Symposium, Annecy, France, 5–7 June 2006; pp. 141–149.

22. Kang, H.; Chui, C.; Chakraborty, U. A unified scheme for adaptive stroke-based rendering. Vis. Comput. 2006, 22, 814–824.

23. van Laerhoven, T.; Liesenborgs, J.; van Reeth, F. Real-time watercolor painting on a distributed paper model. In Proceedings of the Computer Graphics International 2004, Crete, Greece, 16–19 June 2004; pp. 640–643.

24. Sousa, M.; Buchanan, J. Computer-generated graphite pencil rendering of 3D polygonal models. Comput. Graph. Forum 1999, 18, 195–208.

25. Matsui, H.; Johan, H.; Nishita, T. Creating colored pencil style images by drawing strokes based on boundaries of regions. In Proceedings of the Computer Graphics International 2005, Stony Brook, NY, USA, 22–24 June 2005; pp. 148–155.

26. Murakami, K.; Tsuruno, R.; Genda, E. Multiple illuminated paper textures for drawing strokes. In Proceedings of the Computer Graphics International 2005, Stony Brook, NY, USA, 22–24 June 2005; pp. 156–161.

27. Kwon, Y.; Yang, H.; Min, K. Pencil rendering on 3D meshes using convolution. Comput. Graph. 2012, 36, 930–944.

28. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

29. Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. In Proceedings of the International Conference on Learning Representations 2016, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–16.

30. Chen, H.; Tak, U. Image Colored-Pencil-Style Transformation Based on Generative Adversarial Network. In Proceedings of the International Conference on Wavelet Analysis and Pattern Recognition, Adelaide, Australia, 2 December 2020; pp. 90–95.

31. Zhou, H.; Zhou, C.; Wang, X. Pencil Drawing Generation Algorithm Based on GMED. IEEE Access 2021, 9, 41275–41282.

32. Kim, J.; Kim, M.; Kang, H.; Lee, K. U-gat-it: Unsupervised generative attentional networks with adaptive layer-instance normalization for image-to-image translation. In Proceedings of the ICLR 2020, Online, 26 April–1 May 2020.

33. Platkevic, A.; Curtis, C.; Sykora, D. Fluidymation: Stylizing Animations Using Natural Dynamics of Artistic Media. Comput. Graph. Forum 2021, 40, 21–32.

34. Sochorova, S.; Jamriska, O. Practical pigment mixing for digital painting. ACM Trans. Graph. 2021, 40, 234.

35. Gatys, L.; Ecker, A.; Bethge, M.; Hertzmann, A.; Shechtman, E. Controlling perceptual factors in neural style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 3985–3993.

36. Kotovenko, D.; Sanakoyeu, A.; Lang, S.; Ommer, B. Content and Style Disentanglement for Artistic Style Transfer. In Proceedings of the ICCV 2019, Seoul, Korea, 27 October–2 November 2019; pp. 4422–4431.

37. Kotovenko, D.; Sanakoyeu, A.; Ma, P.; Lang, S.; Ommer, B. A Content Transformation Block For Image Style Transfer. In Proceedings of the CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; pp. 10032–10041.

38. Svoboda, J.; Anoosheh, A.; Osendorfer, C.; Masci, J. Two-Stage Peer-Regularized Feature Recombination for Arbitrary Image Style Transfer. In Proceedings of the CVPR 2020, Online, 14–19 June 2020; pp. 13816–13825.

39. Sanakoyeu, A.; Kotovenko, D.; Lang, S.; Ommer, B. A Style-Aware Content Loss for Real-time HD Style Transfer. In Proceedings of the ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 698–714.

40. Chen, H.; Zhao, L.; Wang, Z.; Zhang, H.; Zuo, Z.; Li, A.; Xing, W.; Lu, D. DualAst: Dual Style-Learning Networks for Artistic Style Transfer. In Proceedings of the CVPR 2021, Online, 19–25 June 2021; pp. 872–881.

41. Lim, L.; Keles, H. Foreground segmentation using a triplet convolutional neural network for multiscale feature encoding. Pattern Recognit. Lett. 2018, 112, 256–262.

42. Wang, X.; Juang, J.; Chan, S. Automatic foreground extraction from imperfect backgrounds using multi-agent consensus equilibrium. J. Vis. Commun. Image Represent. 2020, 72, 102907.

43. Tezcan, M.; Ishwar, P.; Konrad, J. BSUV-Net: A Fully-convolutional neural network for background subtraction of unseen videos. In Proceedings of the WACV 2020, Snowmass Village, CO, USA, 2–5 March 2020; pp. 2774–2783.

44. Bouwmans, T.; Javed, S.; Sultana, M.; Jung, S. Deep neural network concepts for background subtraction: A systematic review and comparative evaluation. Neural Netw. 2019, 117, 8–66.

45. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 325–341.

| Dataset | Style | Size | Total size | Resolution |
|---|---|---|---|---|
| training set | abstraction with lines | 1.1 K | 3.3 K | |
| training set | watercolor | 1.1 K | | |
| training set | color pencil with thick stroke | 1.1 K | | |
| validation set | abstraction with lines | 0.2 K | 0.6 K | |
| validation set | watercolor | 0.2 K | | |
| validation set | color pencil with thick stroke | 0.2 K | | |
| test set | abstraction with lines | 0.2 K | 0.6 K | |
| test set | watercolor | 0.2 K | | |
| test set | color pencil with thick stroke | 0.2 K | | |

| | Evaluation 1: FID | | | Evaluation 2: User survey | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | FID | | | Q1: Stylization | | | Q2: Preservation of Content | | |
| Case | F/G | B/G | Ours | F/G | B/G | Ours | F/G | B/G | Ours |
| 1 | 381.1 | 333.6 | 236.3 | 3.4 | 3.3 | 3.9 | 2.6 | 3.4 | 4.2 |
| 2 | 314.0 | 333.6 | 280.1 | 3.5 | 3.3 | 4.1 | 3.1 | 3.4 | 4.1 |
| 3 | 487.6 | 381.5 | 236.2 | 3.6 | 3.5 | 4.2 | 2.4 | 3.3 | 4.1 |
| 4 | 489.4 | 381.5 | 247.1 | 3.5 | 3.5 | 4.3 | 3.0 | 3.3 | 3.9 |
| 5 | 275.1 | 324.4 | 262.3 | 3.4 | 3.1 | 4.0 | 4.2 | 3.1 | 3.9 |
| 6 | 275.1 | 315.2 | 251.4 | 3.4 | 3.4 | 4.3 | 4.2 | 3.0 | 3.8 |
| 7 | 241.8 | 225.2 | 150.3 | 2.9 | 3.3 | 3.8 | 2.8 | 3.4 | 4.2 |
| 8 | 108.5 | 225.2 | 118.9 | 3.4 | 3.3 | 4.4 | 2.7 | 3.4 | 4.4 |
| 9 | 242.5 | 201.7 | 174.5 | 3.1 | 3.5 | 3.3 | 2.8 | 3.5 | 4.4 |
| 10 | 233.8 | 201.7 | 179.8 | 3.2 | 3.5 | 3.8 | 3.2 | 3.5 | 4.6 |
| avg | 312.09 | 292.36 | 213.69 | 3.34 | 3.37 | 4.01 | 3.10 | 3.33 | 4.16 |
| std | 106.97 | 71.73 | 53.77 | 0.21 | 0.13 | 0.33 | 0.63 | 0.16 | 0.25 |

| | | Evaluation 1: FID | Evaluation 2: User Survey | |
|---|---|---|---|---|
| | | FID | Q1: Stylization | Q2: Preservation of Content |
| p value | F/G and ours | 0.022 | 0.00033 | |
| p value | B/G and ours | 0.012 | | |
| Cohen’s d | F/G and ours | 1.64 | 3.43 | 3.14 |
| Cohen’s d | B/G and ours | 1.76 | 3.61 | 5.48 |
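As a rough illustration of how summary statistics of this kind can be derived, the sketch below takes the per-case FID values of the B/G and Ours columns from the evaluation table and computes the mean, the sample standard deviation, a two-sample t statistic and Cohen's d. The Welch form of the t statistic and the pooled-standard-deviation form of Cohen's d are assumptions: the source does not say which variants the authors used, so the last two values are not expected to reproduce the reported ones. The helper names `welch_t` and `cohens_d` are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

# Per-case FID values copied from the B/G and Ours columns of the evaluation table.
fid_bg = [333.6, 333.6, 381.5, 381.5, 324.4, 315.2, 225.2, 225.2, 201.7, 201.7]
fid_ours = [236.3, 280.1, 236.2, 247.1, 262.3, 251.4, 150.3, 118.9, 174.5, 179.8]


def welch_t(a, b):
    """t statistic for two independent samples with unequal variances (assumed variant)."""
    var_a = stdev(a) ** 2 / len(a)
    var_b = stdev(b) ** 2 / len(b)
    return (mean(a) - mean(b)) / sqrt(var_a + var_b)


def cohens_d(a, b):
    """Cohen's d using the pooled sample standard deviation (assumed variant)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var)


print("B/G  mean, std:", round(mean(fid_bg), 2), round(stdev(fid_bg), 2))
print("Ours mean, std:", round(mean(fid_ours), 2), round(stdev(fid_ours), 2))
print("t statistic:", round(welch_t(fid_bg, fid_ours), 3))
print("Cohen's d:", round(cohens_d(fid_bg, fid_ours), 3))
```

With these inputs, the mean and sample standard deviation round to the values in the avg and std rows for the B/G and Ours FID columns. A lower FID is better, so a positive t statistic and effect size here indicate that the dual-effect results score better than the background-only results.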

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, H.; Min, K. Syntheses of Dual-Artistic Media Effects Using a Generative Model with Spatial Control. Electronics 2022, 11, 1122. https://doi.org/10.3390/electronics11071122
