1. Introduction

Human–machine interaction (HMI) systems can greatly assist humans with a wide range of physical capabilities and ages to access and control machines [1,2]. Device control using hand gestures is highly intuitive for humans. Instead of learning keyboard entry combinations, a user makes natural hand gestures that indicate a command, such as turning a machine on or off. There are many use cases for HMI systems. A system could be programmed to recognize sign language using the patterns of light cast by each gesture. Security personnel may wish to control or communicate without making voice commands. The emerging consumer market for virtual reality and gaming systems is expected to further promote interest in gesture recognition systems. The proliferation of the Internet of Things (IoT) [3] has also increased the demand for HMI to enable control of devices used in work, leisure, health, communication and education. Purpose-built gesture recognition systems tend to be expensive and can accomplish only the task of recognition.

Gesture recognition methodologies can be broadly divided into those techniques that do and those that do not require physical contact with a sensing device [4]. Contactless methods enable controlling a device ‘anywhere, anytime’ but can require more sophisticated signal processing. A wrist-strapped inertial sensor is the most common contact-based method; it employs an accelerometer that detects acceleration forces in order to establish its spatial and temporal location. A capacitive sensor placed on each finger phalange was proposed in [5]. Changes in capacitance can be recorded through film deformation due to the movement of ligaments and muscles. The wrist-based sensors, however, have the problem that the user always has to put on a cumbersome device, which is not very practical. Contactless methods have been commonly based on radar or video image processing. Automotive communications have driven the development of mm-band radar that can also be used to detect human movement, gestures and signs of life using Doppler shift measurements. However, this technique is prone to clutter between the transmitter (Tx) and receiver (Rx), limiting the resolution. Many image-based techniques operate on static images of the hand for recognition, while techniques operating on the moving image are likely to be more accurate. While image-based techniques can provide good results, the cost and computational complexity need to be considered.

Meanwhile, interest in using light for control applications is gaining traction with the emergence of the Li-Fi standard planned for 2022 [6]. Visible light communication (VLC) systems will use existing lighting infrastructure to provide high-speed communications and are projected to become ubiquitous around the world with the IEEE 802.11bb [6] standard in 2022. Visible light has wavelengths extending from 400 nm for blue to 700 nm for red light and hence is part of the petahertz band ($10^{15}$ Hz), which is gaining interest in the research community [7,8,9]. The visible light band offers a very large available bandwidth in the non-ionizing part of the electromagnetic spectrum. Light is an ideal medium of communication in medical use cases [10,11] where rigorous electromagnetic compatibility rules apply. A VLC-based health monitoring and alerting system has been created for the blind [12]. VLC systems may be constructed at a low cost [13] by using ordinary room lighting light-emitting diodes (LEDs) and photodiodes (PDs) found in DVD players.

Our motivation for investigating light-based systems stems from exploiting existing infrastructure for a low-cost solution. Traditional gesture recognition systems often comprise a sensor either strapped to the wrist or placed in a glove, and are either wireless or use cumbersome trailing wires. The necessity of wearing a dedicated sensing device detracts from the desirability of this approach. Research on gesture control tends to focus on the more well-known neural networks such as the convolutional neural network (CNN) operating on video images or the long short-term memory (LSTM) neural network. However, complexity is one of the major issues with these techniques.

Proposed Solution

Our proposed solution uses the same hardware as a light communications system and hence the gesture recognition capability is a low-cost additional feature. The system learns to link finger motions with the pattern of light directly impinging on the PD and, by not relying on reflection, is unaffected by clutter or the characteristics of the subject’s skin. We use the probabilistic neural network (PNN) algorithm for classification, which has a much lower complexity than the CNN technique for video processing. We apply a novel pre-processing procedure that does not need to know the number of clusters in advance. Instead, the number of sampled light data points is reduced by grouping the periods of light activity and blocking them together. Despite its low complexity, the gesture recognition achieves an accuracy of up to 73% and has the benefit of being compatible with a light communications system.

The contributions of this work can be summarized as follows:

Compiled a literature review of the latest research in activity recognition.

Developed a practical sensing technique suitable for light communication systems.

Described a novel pre-processing methodology to convert the gestures into light sequences compatible with the PNN.

Demonstrated that the system can achieve good performance under normal operating conditions with limited computational complexity.

Demonstrated that the accuracy depends on the size of the PNN pre-processing array and the Gaussian spread function.

This paper is organized as follows: A literature survey on recent gesture activity systems is provided in Section 2. The proposed gesture recognition using a PNN for a light communications system is detailed in Section 3. In Section 4 the implementation and experiment setup are discussed, while Section 5 presents the performance results. A discussion of the limiting factors and areas for further work is provided in Section 6 and Section 7, respectively. A summary of the work is provided in Section 8.

2. Gesture Activity Recognition Survey

In this section we review the recent literature on gesture recognition using machine learning. In particular we focus the discussion on wireless, contact sensor, convolutional neural network, recurrent neural network, probabilistic neural network and other learning-based techniques. A comparative summary of key results is given in Table 1 at the end of this section.

2.1. Wireless-Based Techniques

Gesture recognition is closely related to human activity recognition (HAR) [14] and a focus of much research has been on indirect sensing through wireless signals. Wireless LAN signals have been shown to be correlated with human movement, which causes the signals to be reflected or obstructed, and accuracies over 90% have been reported using support vector machine (SVM) machine learning (ML) [15]. However, classifying tiny finger motions through wireless signals in a practical situation is currently challenging, and becomes even more difficult with many persons in the room.

2.2. Contact-Sensor-Based Techniques

In the past decade, improvements in material science have benefited the glove sensor approach, whereby flexible sensors are integrated seamlessly into comfortable nano-material gloves [16]. This approach can produce high accuracy as the finger directly touches the sensor. However, the approach with connected wires is still cumbersome and various issues such as cross-talk between closely positioned sensors have been cited. Although wireless (typically Bluetooth) can be incorporated, the increased latency makes it less suitable for certain applications, for example medical use cases. A physical activity identification system for wheelchair-bound patients based on wrist-band sensors was developed in [17] and HMI systems employing wearable sensors were proposed in [16].

2.3. CNN-Based Techniques

Alhamazani et al. [18] developed a C-based program for recognizing images using a Kinect depth camera. Images are parsed and pixels encoded by a one for movement and a zero for lack of movement. Challenges with video-based recognition systems include the difficulty of separating the foreground and background objects, the significant processing and memory requirements, and legal issues concerning privacy. Fusion of video and acceleration data was demonstrated for improved classification in crowded environments in [19]. Image classification using a CNN for gesture recognition achieved 97% accuracy in [20]. The authors in [21] employ a 2D CNN for recognizing Doppler features for hand movements. Eleven gestures could be identified with 92.4% accuracy for a single user. Training the CNN, however, is time-consuming and requires careful optimization and handling of convergence issues at each of several tens of layers. Therefore, some researchers have exploited pre-trained models such as AlexNet or VGG-16 and rely on transfer learning to apply the model to new images, such as hand gestures, that were not originally used for the network construction. Sahoo et al. [22] demonstrated the recognition of static sign language (ASL) using pre-trained (AlexNet [23], VGG-16 [24] and HUST-ASL) CNN models with a score-level fusion technique. The recognition accuracy of the proposed system ranged from 64.3 to 100% using the Massey University dataset. Employing the standard YOLO (You Only Look Once)-v3 and DarkNet-53 CNN, the authors in [25] achieved an average F1-score of 96.7% for static hand gestures. A gradient descent method achieved a lower average accuracy of 78.0% due to problems identifying moving images.

2.4. Recurrent Neural-Network-Based Techniques

A short-range millimeter wave radar achieved a classification accuracy of 98% using the LSTM algorithm [26]. A single photodiode was used to detect gestures in a system capable of visible light communication with an accuracy of up to 88% using the LSTM algorithm in [27]. However, the recurrent LSTM can have high computational complexity due to the feedback architecture. A 5-layer recurrent neural network derived from an LSTM and drop-out layer is employed in [28] to control a surgical robot based on hand gestures. Hand rotation is captured using depth cameras focused directly on the hand. It was shown that the recurrent neural network outperformed traditional machine learning techniques such as k-NN and SVM.

2.5. PNN-Based Techniques

Although less well known, there has recently been growing interest in applying the PNN to pattern identification in human activity and gesture recognition. Early work on classification using the PNN with sensors placed on each wrist and leg achieved an accuracy of 91.3% in [29]. A facial expression recognition system was proposed in [30] using 112 × 92 gray pixel images. The PNN classifier operated on feature-extracted data using principal component analysis (PCA) and kernel PCA. It was shown that a cross-validation type PNN produced better results (by a few percent), although the complexity order increases. Dance routines were identified with 91.7% accuracy using captured Kinect video images by extracting features following the estimation of angles and displacement distances of limbs during movement [31,32]. It is beneficial to reduce the complexity by using an efficient representation of the data with as short a code word as possible. In many systems a simple binary one–zero, such as for on–off or busy–idle, is sufficient. Hand-written character recognition was achieved by converting the text into a 16 × 16 pixel picture where black and white pixels were encoded as one and zero, respectively [33]. Partially camouflaged object tracking using a modified PNN was tackled in [34]. It was shown that a fuzzy-energy-based active contour was an effective method for the variable image intensity. Child emotion recognition was achieved by PNN classification of extracted features made through vector quantization and eigenvalue decomposition [35]. Note that for a practical system it is important to limit the complexity of not only the classifier stage but also the feature extraction stages. Luzanin et al. recorded hand gestures using a smart glove with five sensors for the four fingers and thumb [36]. They applied K-means and X-means pre-processing to find clusters (typically about 10–12) in a 2D space for each gesture and effectively reduce the number of data points for PNN processing. They then applied the PNN based on the output of each clustering predictor. The cluster pre-processing approach increases the system complexity, however, and for K-means to work well it is necessary to know an accurate number of clusters in advance. By using PCA to reduce the dimensionality and then applying the PNN, finger movements could be classified from forearm surface electromyography (EMG) with an average accuracy of 92.2% in [37]. The necessity of wearing special gloves or sensors is a severe drawback that reduces the practicality of the three approaches above. Ge et al. recorded gestures as a sequence of images using a webcam [38]. Dimensionality was reduced by computing the distances between neighboring points, and sequences were tracked considering an image’s relative position in a database. Accuracy ranged from 71.8% for 5 dimensions to 93.2% for 30 dimensions. However, this technique requires extensive pre-processing to remove the background, together with extraction and computations on each pixel and its neighboring points.

2.6. Other Machine-Learning-Based Techniques

Classification of reflected infrared waves was accomplished using a hybrid k-nearest neighbors (k-NN) and SVM technique in [39]. When the spacing was 20 cm, the average denoised accuracy was 85%, which decreased to 73% for a separation of 35 cm. After denoising, the authors employed k-NN and SVM classification and achieved 96% accuracy for 8 gestures with 5 different subjects. Reflected light is threshold-sensitive and exploiting it generally requires extra post-processing to eliminate artifacts caused by multipath reflections from surrounding clutter. This complicates the task of developing a feasible low-cost system, and these systems often have lower data rates. While image-processing-based solutions typically provide the maximum performance, they demand a significant amount of computational power, making them unsuitable for low-cost portable use cases. The non-parametric k-NN technique classifies gestures based on the Euclidean distances between samples and has modest complexity. An accuracy of 48% was attained using k-NN with a single PD and climbed to 83% when two PDs were used [40].

Parvathy et al. [41] employ the discrete wavelet transform (DWT) and a modified speed-up feature extraction method for obtaining rotation and scale-invariant descriptors. A bag-of-words technique is employed to develop the fixed dimension input vector which is needed for the support vector machine. The classification accuracy of two different gestures, ‘No’ and ‘grasp’, reached 98%. The number of PD detection chains, however, should be minimized for real-time operation with limited complexity. Control of a stereo music system using static gestures captured by video camera was achieved with 97.1% accuracy using a neural-network-based solution in [42], and television remote control using gesture recognition was demonstrated in [43].

Table 1. Summary of recent gesture recognition systems using machine learning.

| Algorithm | Sensor Type | Accuracy (%) | VLC Capable | Reference |
|---|---|---|---|---|
| CNN | Video camera | 97 | No | [20] |
| CNN | Video camera | 92.4 | No | [21] |
| CNN | Video camera | 64.3–100 | No | [22] |
| LSTM | Radar | 98 | No | [26] |
| LSTM | PD | 88 | Yes | [27] |
| PNN | Wrist/leg sensors | 91.3 | No | [29] |
| PNN | 5-sensor glove | 95 | No | [36] |
| PCA+PNN | EMG | 92.2 | No | [37] |
| PNN | Web-cam | 93.2 | No | [38] |
| SVM | PD | 73 | No | [39] |
| KNN | 1 × 1 (3 × 3) PD | 48 (99) | No | [40] |
| SVM | Video camera | 98 | No | [41] |
| Wavelet | RGB | 97.1 | No | [42] |
| PNN | PD | 73 | Yes | This work |

3. The Gesture Recognition System Using PNN Network

Gesture categorization is determined by an examination of the light pattern activity detected on each PD. Through training, the system develops an association between sequences and the activities. The identification process is conducted on light transitions detected on the PD, as depicted in Figure 1. The objective is to link the sequence of incident light to a specific gesture.

A hand typically moves at a speed of about 1 m/s or less. Because the space between fingers is small, periods of activity and rest will normally be less than about 10 ms; therefore, to detect these motions across a wide range of people, the system should sample the PD with an interval about an order of magnitude shorter (about 1 ms or less). The data rates of Li-Fi systems are of the order of hundreds of Mbit/s and hence these systems innately sample at a sufficiently high rate to capture the gestures. The slot time is also a compromise between the communications data rate, prediction accuracy and computing complexity.
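The sampling argument above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function names are hypothetical, the oversampling factor of 10 is our reading of "an order of magnitude", and the 4 mm finger spacing is the approximate figure quoted later in the implementation section.

```python
def event_duration_s(gap_m: float, speed_m_per_s: float) -> float:
    """Time for a gap of the given width to cross the light beam."""
    return gap_m / speed_m_per_s


def required_sampling_rate_hz(shortest_event_s: float,
                              oversampling: float = 10.0) -> float:
    """Sampling rate that places `oversampling` samples inside one event."""
    return oversampling / shortest_event_s


# A 4 mm finger gap moving at 1 m/s crosses the beam in about 4 ms,
# which is inside the "less than about 10 ms" bound used in the text.
gap_time = event_duration_s(gap_m=4e-3, speed_m_per_s=1.0)

# Sampling an order of magnitude faster than 10 ms events needs about 1 kHz.
min_rate = required_sampling_rate_hz(10e-3)

# Assumed Li-Fi symbol rate of 100 MHz, far above the gesture requirement.
margin = 100e6 / min_rate
```

Under these assumptions the communication link samples several orders of magnitude faster than the gesture dynamics require, which is why down-sampling is possible before classification.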

3.1. Lighting Model

Assume a channel model between a Tx (LED) and a Rx (PD) and consider only the line-of-sight (LOS) path. The channel impulse response of this LOS component is deterministic and given by Equation (1) [44].

$$h(t) = \frac{A_r}{d^2}\, I(\phi)\, g(\theta) \cos(\theta)\, \delta\!\left(t - \frac{d}{c}\right) \qquad (1)$$

where $A_r$ is the photodiode surface area, $\phi$ is the angle from the Tx to Rx, $\theta$ is the angle of incidence with respect to the axis normal to the receiver surface, d is the distance between Tx and Rx, c is the speed of light, $g(\theta)$ is the Rx optical gain function and $I(\phi)$ is the luminous intensity.

At the Rx, the received optical power can be expressed as Equation (2).

$$P_r = H(0)\, P_t \qquad (2)$$

where $H(0)$ is the channel DC gain, and $P_t$ is the emitted optical intensity.
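To give a feel for the LOS model, the following sketch evaluates a channel DC gain and the resulting received power. It is not taken from the paper: it assumes a Lambertian emitter of order `order` for the luminous intensity pattern and a constant optical gain, neither of which is specified in the text, and it ignores the focusing lenses used in the testbed. All function names and the numerical values in the example are hypothetical.

```python
import math


def lambertian_intensity(phi_rad: float, order: float = 1.0) -> float:
    """Normalized Lambertian radiation pattern (assumed emitter model)."""
    return (order + 1.0) / (2.0 * math.pi) * math.cos(phi_rad) ** order


def los_dc_gain(area_m2: float, d_m: float, phi_rad: float, theta_rad: float,
                optical_gain: float = 1.0, order: float = 1.0) -> float:
    """DC gain of the LOS path: area, inverse-square loss, Tx pattern, Rx gain."""
    if abs(theta_rad) >= math.pi / 2:
        return 0.0  # light arriving from behind the detector plane is not received
    return (area_m2 / d_m ** 2 * lambertian_intensity(phi_rad, order)
            * optical_gain * math.cos(theta_rad))


def received_power_w(p_tx_w: float, h0: float) -> float:
    """Received optical power as DC gain times emitted power."""
    return h0 * p_tx_w


# Hypothetical on-axis link: 1 mm^2 detector at 30 cm, 1 W emitted.
h0 = los_dc_gain(area_m2=1e-6, d_m=0.3, phi_rad=0.0, theta_rad=0.0)
p_rx = received_power_w(1.0, h0)
```

The inverse-square dependence on distance is the main practical point: doubling the Tx to Rx separation reduces the received power by a factor of four under this model.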

3.2. Probabilistic Neural Network (PNN)

The PNN features a feed-forward architecture with a limited-complexity radial-basis-function-based model that was proposed by Specht [45,46]. The PNN employs a linear Bayesian decision-making algorithm that can reproduce the outcomes of nonlinear learning algorithms while maintaining their high accuracy. The algorithm is robust to noisy samples, and outliers do not exert a strong influence on the selection. Training is fast as the weights are assigned directly based on the observed input probabilities. Moreover, new weights can be added in real time without affecting the existing weights; new probability functions are merely added. The PNN can learn time-series sequences by treating them as a pattern matching exercise and comprises a reduced-complexity forward pass only, with no back-propagation [47].

The PNN has been applied to a range of medical image classification problems such as brain tumor detection [48,49] and Alzheimer’s detection [50]. A classification of magnetoencephalography (MEG) signals using a PNN was made in [51]. Classification accuracies of 82.36% were achieved using the PNN, while this was reduced to 77.78% using the same classifier output but with a multilayer neural network. A fault recognition method for rotary machinery based on information entropy was used in [52]. The PNN has been successfully applied to predicting the upcoming number of idle slots in a wireless local area network system [53] and channel fading prediction for an automated guided vehicle system [54].

There are four processing layers in the PNN which are summarized in Figure 2. Neurons in the pattern layer compute the Euclidean distance between the input and training vector and shape the result with a Gaussian smoothing function. As the input vector becomes closer to the training vector, the output approaches one. Neurons in the summation layer sum the contributions for each classification, c, producing probabilities as expressed by

$$p_c(v) = \frac{1}{N_c} \sum_{j=1}^{N_c} \exp\left(-\frac{\lVert v - x_{c,j} \rVert^2}{2\sigma^2}\right)$$

where v is the input data, $x_{c,j}$ is the j-th training vector, $N_c$ is the number of training vectors and $\sigma$ represents the spread. $\lVert v - x_{c,j} \rVert$ represents the Euclidean distance between the input and the j-th training vector of class c. The Parzen approach is used to estimate the unknown probability density functions (PDFs) used to activate the neurons in the pattern layer [55]. Gaussian functions are a popular option for the PDF because they enable the use of a feed-forward classifier. The function with the maximum PDF is selected as the most likely class as

$$\hat{c} = \arg\max_{c}\; p_c(v)$$

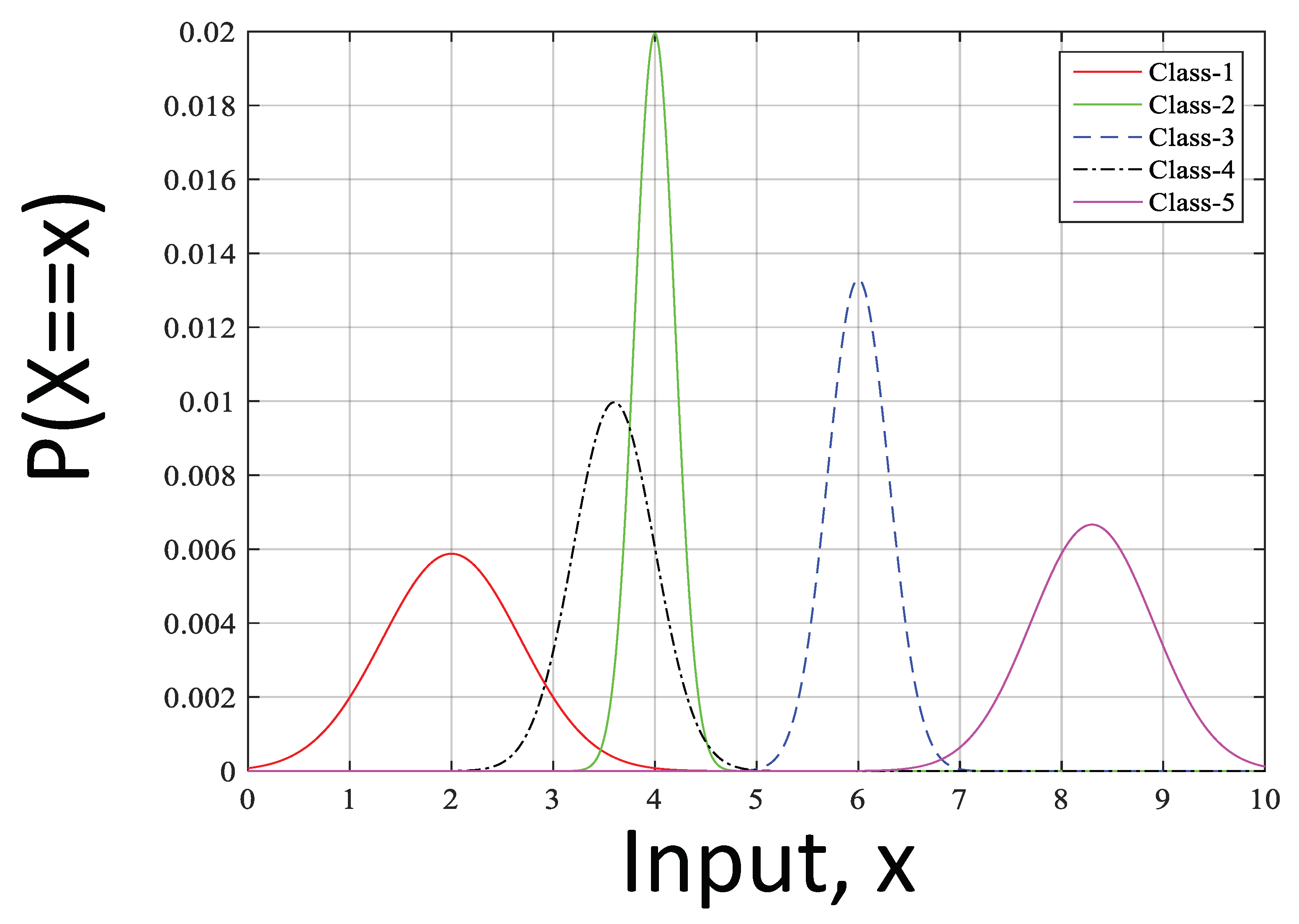

An example of Gaussian PDFs associated with five gesture classes is depicted in Figure 3, where class number two would be selected as the most likely.

PNNs have just one training parameter for smoothing the PDFs. The spread of the Gaussian function ranges from zero (local nodes connected) to one (all input nodes connected) and hence impacts both the accuracy and the complexity. The spread parameter $\sigma$ is the standard deviation of the underlying Gaussian distribution or Parzen window and is a determinant of the receptive width of the Gaussian window for the training set’s PDF [56]. If the spread is chosen poorly, the PNN produces inaccurate predictions. If the smoothing factor is too low, the approximation consists of spikes, resulting in poor generalization. On the other hand, if the deviation is too large then the features are smoothed out. As the distribution of input data is application specific, a usual method is to sweep this parameter to find an optimized range.
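The pattern, summation and decision layers described in this subsection can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions rather than the implementation used in this work: the Gaussian kernel is unnormalized, each class score is the mean kernel response over that class's training vectors, and the function names and toy data are hypothetical.

```python
import numpy as np


def pnn_class_scores(v, train_x, train_y, spread):
    """Return (classes, scores): one Parzen-window score per class."""
    if spread <= 0:
        raise ValueError("spread must be positive")
    v = np.asarray(v, dtype=float)
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y)
    # Pattern layer: squared Euclidean distance shaped by a Gaussian.
    sq_dist = np.sum((train_x - v) ** 2, axis=1)
    activations = np.exp(-sq_dist / (2.0 * spread ** 2))
    # Summation layer: average the activations belonging to each class.
    classes = np.unique(train_y)
    scores = np.array([activations[train_y == c].mean() for c in classes])
    return classes, scores


def pnn_predict(v, train_x, train_y, spread):
    """Decision layer: pick the class with the largest score."""
    classes, scores = pnn_class_scores(v, train_x, train_y, spread)
    return classes[np.argmax(scores)]


# Toy training set: two binary light patterns per gesture class.
train_x = [[0, 0, 1, 1], [0, 1, 1, 1], [1, 0, 1, 0], [1, 0, 0, 0]]
train_y = [1, 1, 2, 2]
predicted = pnn_predict([0, 0.9, 1, 1], train_x, train_y, spread=0.3)
```

Note that "training" here is only the storage of `train_x` and `train_y`, which reflects the fast training property mentioned earlier. A spread of exactly zero is excluded in this sketch because the kernel is undefined there.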

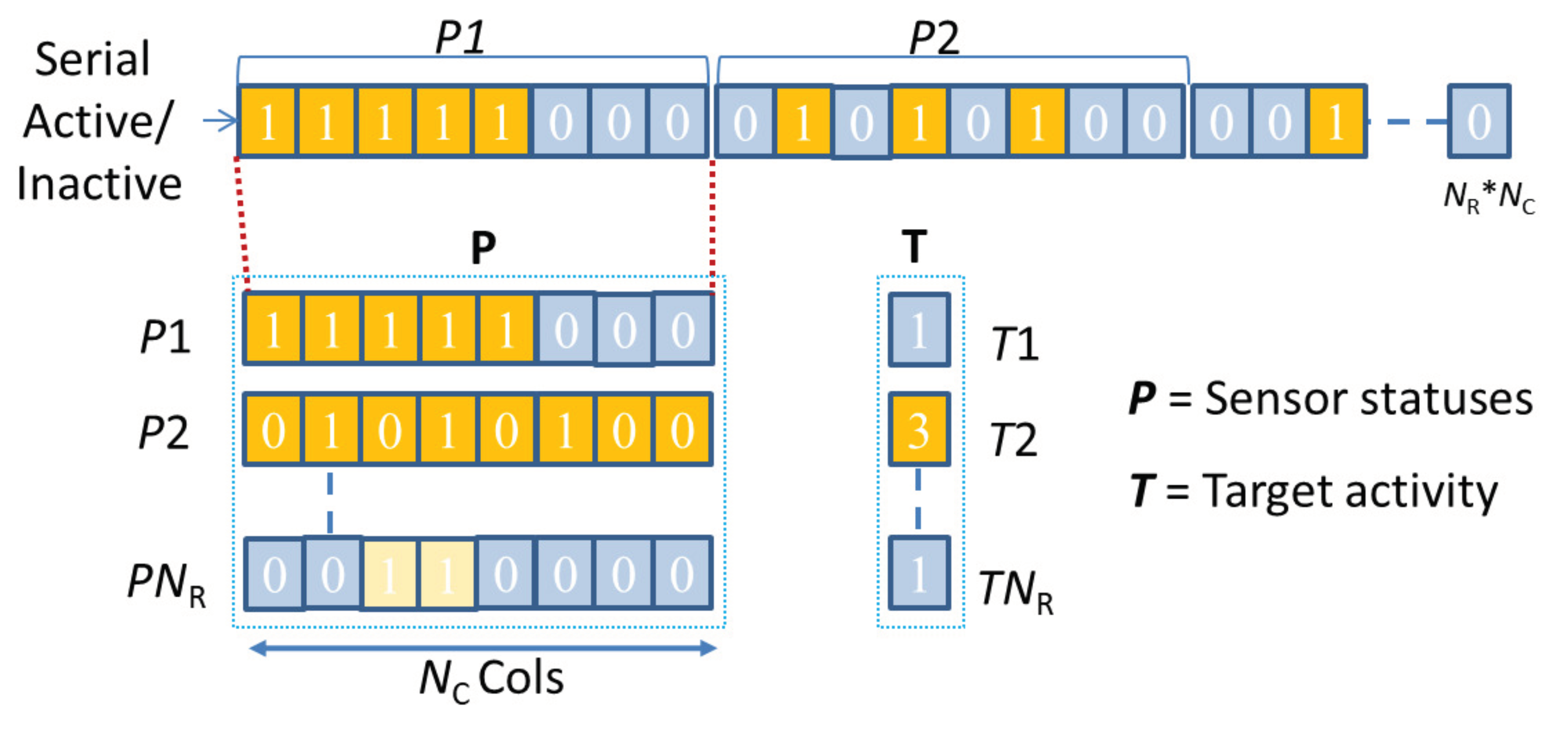

3.3. PNN Pre-Processing

Our technique involves pre-processing the Rx light signal into a form that is suitable for processing by the PNN. The continuous pre-processed light signal is converted to a digital signal using a hard-limiting operation. Sensed light on the PD is represented by a logic-one while absence of light is represented by a logic-zero. The length R × C digital signal is converted into a predictor matrix P of dimensions R (rows) × C (columns), and a target matrix T of dimensions R × 1, as shown in Figure 4. Each row of the P matrix contains a data sequence of sufficient duration to predict the action, while the contents of T are indices representing the respective gesture for each row during training.
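The conversion of a captured signal into the predictor and target matrices can be sketched as follows. This is a hedged illustration, not the authors' code: the threshold value, the handling of a trailing incomplete row (discarded here) and the names `hard_limit`, `build_predictor_and_target`, `n_cols` and `gesture_index` are all our assumptions.

```python
import numpy as np


def hard_limit(signal, threshold):
    """Map the analogue signal to logic-one (light) or logic-zero (no light)."""
    return (np.asarray(signal, dtype=float) > threshold).astype(np.uint8)


def build_predictor_and_target(bits, n_cols, gesture_index):
    """Block a bit sequence into rows of n_cols samples with a label per row."""
    bits = np.asarray(bits)
    n_rows = bits.size // n_cols          # any incomplete final row is dropped
    P = bits[: n_rows * n_cols].reshape(n_rows, n_cols)
    T = np.full((n_rows, 1), gesture_index, dtype=int)
    return P, T


# Synthetic 1000-sample capture alternating between dark and bright.
signal = np.tile([0.1, 0.9], 500)
bits = hard_limit(signal, threshold=0.5)
P, T = build_predictor_and_target(bits, n_cols=200, gesture_index=3)
# P has 5 rows of 200 samples; T holds the gesture index for each row.
```

Matrices built this way for several gestures can be stacked row-wise to form the complete training set.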

4. System Implementation

In this section the VLC transceiver and gesture waveform capture process are described.

4.1. VLC Transceiver

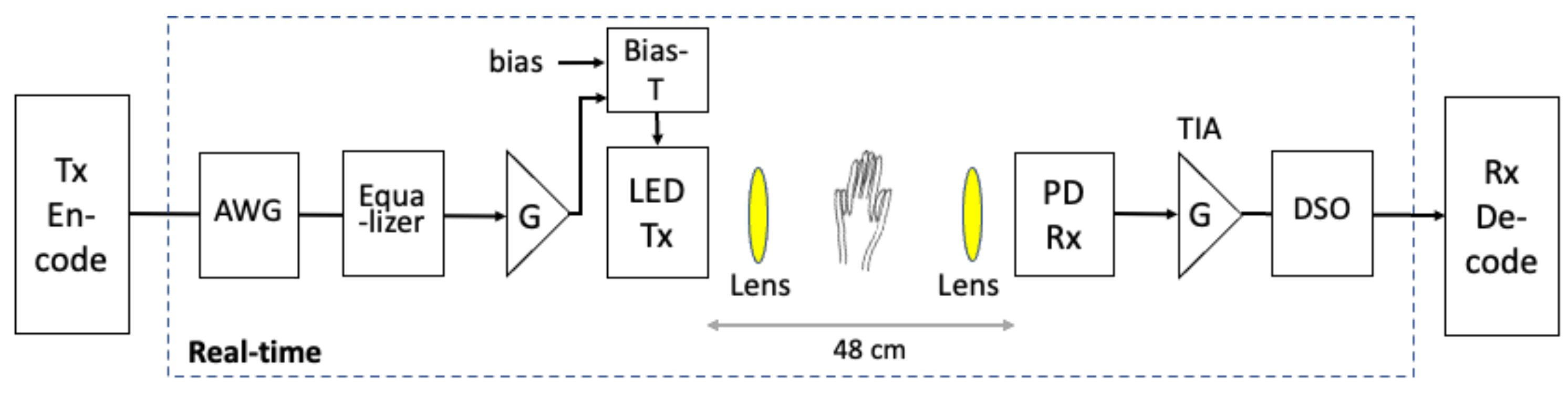

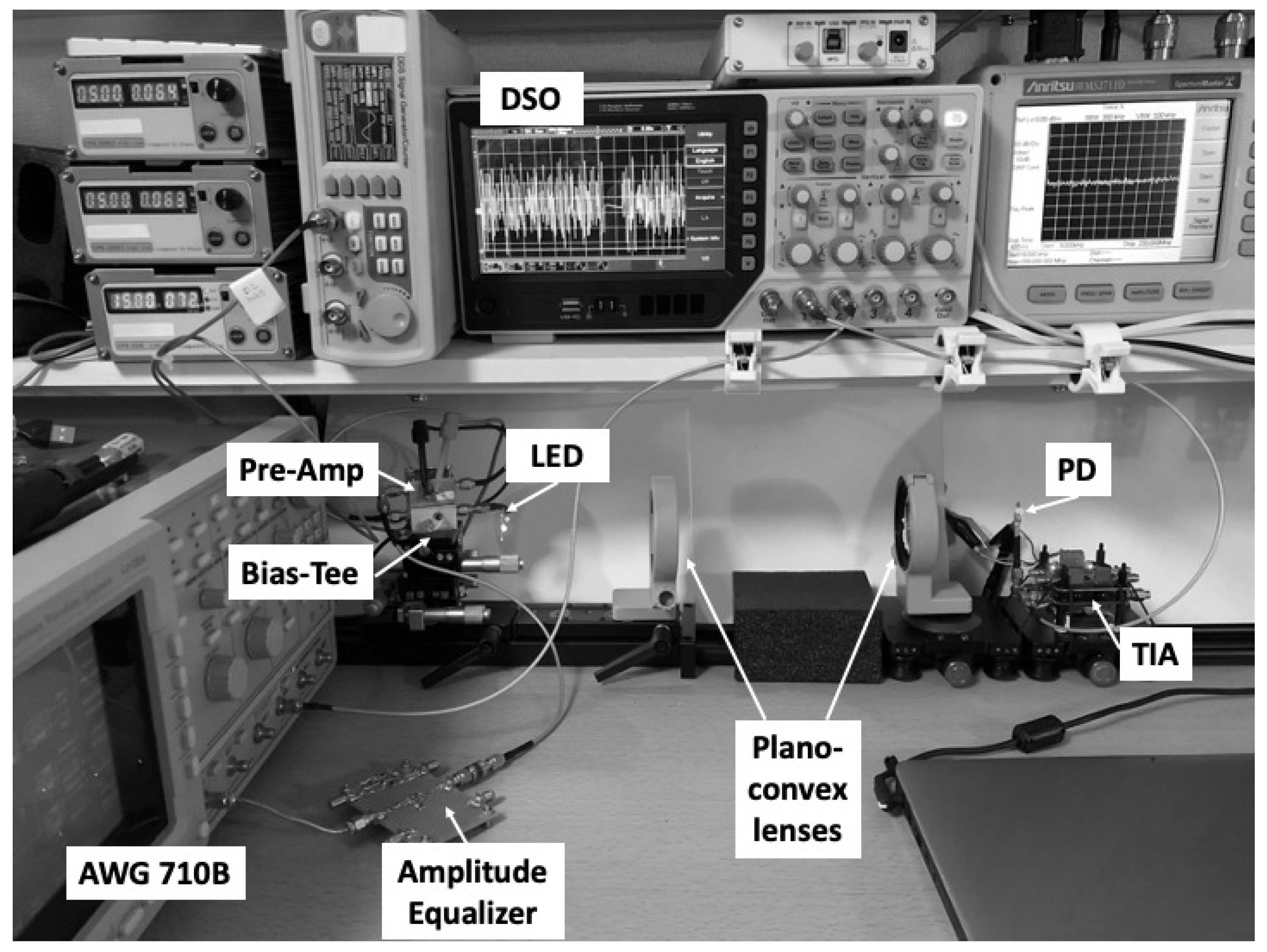

The VLC system utilizes a real-time arbitrary waveform generator (AWG) at the transmit side and a digital storage oscilloscope (DSO) at the receive side, as shown in Figure 5.

The Tx signal was produced in real time with amplitude 1.80 V at 100 kHz using a Tektronix AWG. An amplitude equalizer was inserted to compensate for the LED’s (Luxeon Rebel) limited frequency response. A 500 MHz 17-dB pre-amplifier boosted the equalized signal and a 4.2 V LED bias voltage was added using a ZFBT-4R2GW (6 GHz bandwidth) Bias-T circuit. To extend the communication distance, a plano-convex focusing lens with an aperture of 40 mm and focal length of 9 cm was installed on both the Tx and Rx sides. The separation of the two lenses was 30 cm. The focusing lens generates a narrow beam with an optimal focus in the area between the Tx LED and Rx PD, where the hand is situated, as seen in Figure 6. At the receiver, a low-cost Hamamatsu S10784 photodiode was utilized. The PD photocurrent was increased using a 200 MHz low-noise amplifier (LNA) circuit and the signal recovered using envelope detection. The DSO was configured to record a length of 3.2 Mpoints at a rate of 2 MSa/s in peak-sampling mode. The testbed was positioned at right angles to a window at a distance of 4.7 m from the center. The light entering the room was directed toward the VLC system device but an office privacy barrier prevented direct light from entering the PD. Data were captured in real time and processed offline using a computer. A categorization is counted as correct when the estimated gesture is identical to the real gesture.

4.2. Gesture Waveform Capture

As a proof of concept, the system was trained with the following five movements using an increasing number of fingers:

Reference Rx signal (absence of movement);

Motion up–down with 1 finger;

Motion up–down with 2 fingers;

Motion up–down with 3 fingers;

Motion up–down with 4 fingers.

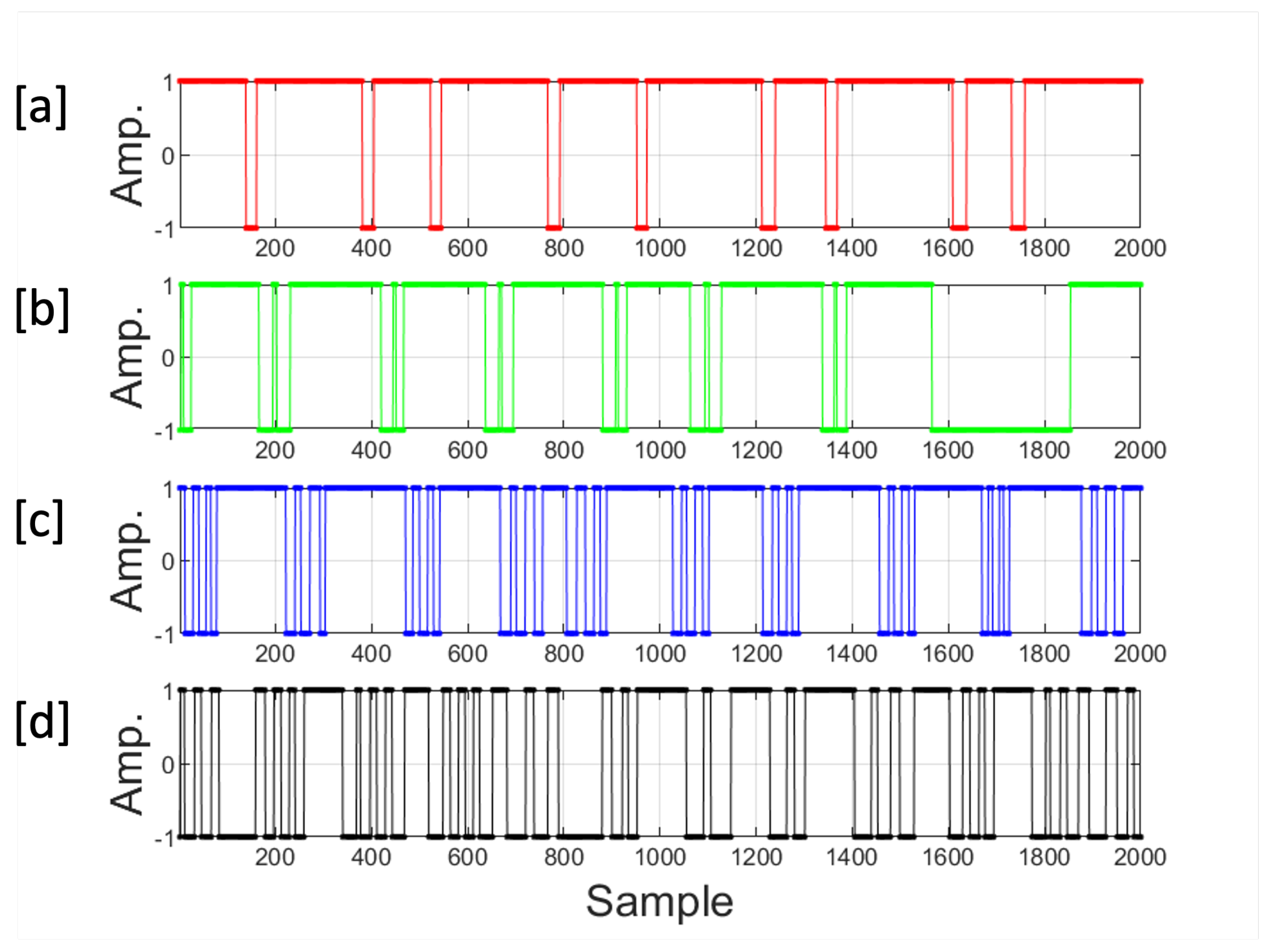

Over a two-second interval, the hand was moved up and down at a consistent pace, mimicking a natural hand motion. Due to the close proximity of each finger (about 4 mm), the sampling rate must be sufficiently fast to catch the similarly brief duration of light. Because the sampling rate of the Rx signal is greater than that of the modulated light signal, the Rx signal is first down-sampled. The modulation is eliminated by locating the signal maxima, and the resulting signal corresponding to the presence of 1–4 fingers is shown in Figure 7a–d. The small peaks at the start of each cycle are caused by the filtering response of the analogue and sample-and-hold circuits. As can be observed, the response decays rapidly and has no effect on the system’s functioning. When a person makes a gesture with two fingers, the angle between them, i.e., the opening, is generally slightly greater than when they make a gesture with four fingers. When the opening is smaller, there is an increased chance that the light will not be received by the detector, depending on the angle of hand inclination. The result is an increased probability that a four-finger gesture may be misclassified. In part, accuracy may decline as the number of fingers increases owing to the raw signal’s decreasing clarity. This loss is somewhat mitigated by the fact that classification performance increases when a waveform contains a greater number of unique characteristics.
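One simple way to remove the modulation by locating signal maxima while down-sampling is to keep the maximum of each block of samples spanning one modulation period. The sketch below follows that interpretation, which is our assumption rather than a documented detail of the testbed; with a 2 MSa/s capture and a 100 kHz modulation, one period spans 20 samples. The function name and the synthetic test signal are hypothetical.

```python
import numpy as np


def envelope_by_block_max(rx, samples_per_block):
    """Down-sample by keeping the maximum of each block of samples."""
    rx = np.asarray(rx, dtype=float)
    n_blocks = rx.size // samples_per_block   # trailing partial block is dropped
    blocks = rx[: n_blocks * samples_per_block].reshape(n_blocks, samples_per_block)
    return blocks.max(axis=1)


fs = 2e6        # DSO sampling rate (Sa/s)
f_mod = 100e3   # modulation frequency (Hz)
samples_per_period = int(round(fs / f_mod))   # 20 samples per period

# Synthetic capture: modulated light for 2000 samples, then blocked by a finger.
t = np.arange(4000) / fs
carrier = 0.5 * (1.0 + np.sin(2.0 * np.pi * f_mod * t))
gate = np.repeat([1.0, 0.0], 2000)
envelope = envelope_by_block_max(carrier * gate, samples_per_period)
# envelope has 200 points: high while light is received, zero while blocked.
```

The output rate equals the modulation frequency, so the gesture waveform is represented at 100 kSa/s in this example, still well above the rate needed to resolve finger transitions.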

4.3. Probabilistic Neural Network

The PNN computes the most likely gesture class with the highest probability in the presence of noise. Each row of the PNN matrix should cover the duration corresponding to at least one up–down movement of the hand. In this work, the number of columns was varied through {100, 200, 300, 400, 500} samples, and for training each activity was represented by eighty rows. The network was configured with Gaussian spread factor varying between 0 and 1 at intervals of 0.05. After training, the PNN was switched to evaluation mode, where a randomly selected sample from the non-training set was evaluated. The random selection process was repeated using a Monte Carlo simulation consisting of 3200 test segments for each measurement point.

4.4. Process Flow

Three phases of processing are involved in this method: signal conditioning, training and classification. During the signal conditioning stage, the captured waveform is normalized so that the maximum value for each gesture is one. During the training stage, each of the five repeated motions is captured over a two-second interval. One hundred waveforms of each gesture were captured for each of the four participants. Following that, the data were randomly partitioned into two sets: one for training and one for classification. During the classification stage, machine learning was used to categorize the motions. A realistic gesture recognition system must be capable of real-time operation, and a trade-off between computing complexity and accuracy may be made. We chose the PNN algorithm because it has a good performance-to-complexity ratio and is well-suited to the sequential nature of waveforms produced by hand gestures.

4.5. Signal Conditioning

The training and classification signals should represent the finger motion, and performance should be reasonably insensitive to ambient light levels. Any reflected light from an item near the PD should not provide a signal with a high amplitude that is indistinguishable from the motion without reflection. As a result, the signal should be normalized such that all signals have the same amplitude regardless of the level of ambient light. Natural light in the morning is different from artificial light in the evening, and it might change depending on whether the weather is overcast or sunny. The normalization process adjusts the signal in accordance with its minimum and maximum levels across the measurement period, and it can also be useful when data from numerous devices are integrated. The Rx signal is then constrained to the range −1 to +1 and processed using the PNN algorithm. Compensation for dropped samples can be made by extrapolation. The signal capture by the PD and subsequent sampling by the DSO was very reliable, however, and no samples were found to have been dropped.
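A min-max normalization is one straightforward way to realize the amplitude normalization described above. The sketch below maps each capture linearly onto the range −1 to +1 using its own minimum and maximum; the exact mapping used in the experiments is not specified, so this form and the function name are assumptions.

```python
import numpy as np


def normalize_to_unit_range(x):
    """Linearly map a capture so that its minimum is -1 and its maximum is +1."""
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        return np.zeros_like(x)   # flat capture: no motion information
    return 2.0 * (x - lo) / (hi - lo) - 1.0


# The same gesture seen under dim and bright ambient light (offset and gain differ).
gesture = np.array([0.2, 0.8, 0.2, 0.8, 0.5])
dim = normalize_to_unit_range(gesture)
bright = normalize_to_unit_range(5.0 * gesture + 3.0)
# dim and bright are identical after normalization.
```

Because the mapping removes both a constant offset and a positive gain, captures taken under different ambient light levels or with different devices become directly comparable.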

To prevent outliers adversely affecting the operation of a machine learning algorithm, they can be handled by a variety of techniques [57]. In Winsorization, extreme values are replaced by specified percentiles. The technique used here to locate outliers is to compute the z-score as

$$z = \frac{x - \mu}{\sigma}$$

where x is the received sample and $\mu$ and $\sigma$ are the mean and standard deviation, respectively. Outliers here are considered to be values that have z-scores beyond ±3. These samples are then set to the maximum value of ±3$\sigma$. In practice, values above 2$\sigma$ are rare and values above 3$\sigma$ are extremely rare.
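The z-score outlier handling can be illustrated with a short NumPy sketch. We interpret "set to the maximum value" as clipping each sample to within three standard deviations of the mean; this interpretation, the function name and the test data are assumptions.

```python
import numpy as np


def clip_outliers(x, z_max=3.0):
    """Clip samples whose z-score magnitude exceeds z_max."""
    x = np.asarray(x, dtype=float)
    mu, sigma = x.mean(), x.std()
    if sigma == 0:
        return x.copy()           # constant signal has no outliers
    return np.clip(x, mu - z_max * sigma, mu + z_max * sigma)


# Ninety-nine quiet samples and one spike.
samples = np.zeros(100)
samples[-1] = 100.0
cleaned = clip_outliers(samples)
# The spike is pulled down to mean + 3 * std; the other samples are unchanged.
```

The mean and standard deviation are computed from the capture itself, including the outlier, so a very large spike also inflates the clipping limit. A robust alternative would use the median, but that goes beyond what the text describes.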

The processed signal for the corresponding 1–4-finger gestures is shown in Figure 8a–d.

4.6. Training and Evaluation

As a proof of concept, data sets for four different hands were collected. The smallest hand was 16.3 cm (from extended little finger to thumb), while the biggest hand measured 21.4 cm. Two different measurement sessions were conducted to gather data on the four hands. A first session was used to gather data for the neural network algorithm’s training. A second validation session was performed to assess the trained network’s performance. The data were split 50:50 for training and verification.

5. Performance Evaluation

Two methods for detection were considered in this work and are described in this section. The performance using the PNN is compared with a reference method based on counting the period of time between light transitions.

5.1. Classification Using Probabilistic Neural Network

The average percentage of correct categorizations over all of the motions is tabulated in Table 2 and plotted in Figure 9. For each gesture, an average accuracy is calculated over all users and tests. In general it was observed that the performance improved with decreasing PNN column length. This can be explained by noting that the average duration of an up–down gesture is about 200 samples. When the column length was 100, important features of the motion could be missed during training; there is therefore a lower limit on the length between 100 and 200 samples. As the column size increases, on the other hand, the PNN can suffer from over-fitting, where too much detail is included during training and a match becomes increasingly hard to find. It can be seen that the performance also depends on the Gaussian spread or smoothing function. The spread is the standard deviation of the Gaussian distribution and is a determinant of the receptive width of the Gaussian function. It should be carefully selected, and this is usually achieved in the training phase through offline simulations. Setting the spread to 0.85 maximizes the accuracy, and the PNN correctly identifies the motion in up to 73% of cases for a column length of 200. An accuracy of 71% was achieved when the spread was 0.3, and this setting is preferred over a spread of 0.85 as the complexity is reduced. However, the performance fluctuates for spread values between 0.3 and 0.9, and hence an average value could be computed by processing results for two spreads (such as 0.3 and 0.4) as insurance against selecting the lower of the two performances.

5.2. Classification Using Signal Transition Boundaries

Here the categorization is based on counting the average number of light transitions sensed by the photodiode. The algorithm estimates the number of fingers in each motion cycle by computing the average number of low–high and high–low signal transitions. During training, the mid-point between the counts for n and n + 1 fingers is computed to set the boundary in the decision unit. A Monte Carlo simulation was set up in which training segments were used to set the decision boundaries, and an evaluation stage was carried out using an independent data set. The decision boundaries between gestures for {1–2, 2–3, 3–4} fingers, as computed during the training stage, were {19.9, 26.1, 34.7} transitions, respectively. For example, if the average number of transitions over 2 s were 23, then the system would predict that the gesture was two fingers. The average success rate over all gestures, users and trials per user was measured at 65%. In some segments the success rate was very high, but the algorithm is blind to other information, such as gesture shape, that may improve performance when a single transition is missed. The performance of this algorithm will clearly degrade if the gesture changes mid-way through the measurement period. A further issue with this technique is that the boundaries need to be pre-determined and will vary with each individual.
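The decision rule described above can be sketched as follows. The boundary values are those reported in the text; the function names, the fixed detection threshold and the class means passed to `midpoint_boundaries` are hypothetical and serve only to illustrate the idea.

```python
from bisect import bisect_right

# Decision boundaries reported in the text for {1-2, 2-3, 3-4} fingers
BOUNDARIES = [19.9, 26.1, 34.7]

def count_transitions(samples, threshold):
    """Count low-high and high-low crossings of a fixed threshold."""
    levels = [s > threshold for s in samples]
    return sum(1 for prev, cur in zip(levels, levels[1:]) if prev != cur)

def midpoint_boundaries(mean_counts):
    """Training step: place each boundary mid-way between adjacent class means."""
    return [(a + b) / 2.0 for a, b in zip(mean_counts, mean_counts[1:])]

def fingers_from_transitions(avg_transitions, boundaries=BOUNDARIES):
    """Map an average transition count to a finger count (1 to 4)."""
    return bisect_right(boundaries, avg_transitions) + 1

# Worked example from the text: 23 transitions lies between 19.9 and 26.1
print(fingers_from_transitions(23))  # prints 2
```

Because the rule uses only the transition count, two gestures with different shapes but similar counts are indistinguishable, which is the limitation noted above.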

5.3. Computational Complexity

In our proposal the hardware complexity is limited due to the efficient pre-processing of data into the P-matrix with limited dimensions. The hardware resource count for a real-time implementation of the PNN algorithm on a field-programmable gate array (FPGA) is proportional to the number of classes when implemented in parallel [

58]. Assuming the PNN matrix has dimensions of C = 200 columns and R = 200 rows, an estimate for 10 hand gestures is 84K configurable logic blocks (CLB), 110 DSP-blocks and 51 RAM memory blocks. The classes are implemented in parallel in order to reduce the latency; for 10 classes the training time would therefore be 7

s and execution time 20

s. Although the PNN exponential function can be a source of high run-time complexity [

59], the use of look-up tables can considerably reduce this requirement. Meanwhile, the competing RNN has feedback loops which enable it to solve problems related to time-series data. The computational complexity of a standard LSTM algorithm using the stochastic gradient descent optimization approach is of order $O(W)$ per time step, where $W$ is the total number of parameters. The learning time for a modest size network is dominated by the factor $n_c \times (4 \times n_c + n_o)$, where $n_c$ is the number of memory cells and $n_o$ is the number of output units [60].
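As an illustration of how a look-up table can replace run-time evaluation of the PNN exponential, the sketch below precomputes exp(-x) on a uniform grid and returns the nearest entry. The table size, argument range and function names are assumptions for illustration and do not describe the FPGA design in [58].

```python
import math

def build_exp_lut(max_arg=8.0, size=256):
    """Precompute exp(-x) on a uniform grid over [0, max_arg]."""
    step = max_arg / (size - 1)
    return [math.exp(-i * step) for i in range(size)], step

def exp_neg_lut(x, lut, step):
    """Approximate exp(-x) for x >= 0 by nearest-entry table look-up."""
    if x < 0:
        raise ValueError("x must be non-negative")
    index = int(round(x / step))
    if index >= len(lut):
        return 0.0  # beyond the table the kernel response is treated as zero
    return lut[index]

lut, step = build_exp_lut()
approx = exp_neg_lut(1.0, lut, step)
print(abs(approx - math.exp(-1.0)) < 0.01)  # prints True
```

A larger table reduces the approximation error at the cost of more memory, which is the usual trade-off when mapping such a function onto RAM blocks.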

6. Discussion

We have designed a baseline system that works well when the hand is positioned approximately halfway between the Tx and Rx. In our experiment, there would have been an error of up to a few centimeters between the optimum and actual hand positions. The received signal, however, has sufficient clarity for gesture recognition. The accuracy of hand positioning can be enhanced using additional processing and hardware as part of future work. There are several ways to enable the ideal positioning of the hand. A feedback signal, such as a beep, could nudge the user to move their hand towards the optimal position. Careful initial set-up of the Tx and Rx will also improve the quality of communication. For example, a chair can be positioned directly under a ceiling light or facing a VLC-embedded transmitter. The ceiling light lens could be automatically adjusted so that a portion of the light is also focused on the hand without affecting the main light beam. Different lenses at the Tx and Rx could be selected, enabling the hand to be identified at various points between the Tx and Rx. In this case, a calibration can be made periodically to find the position of the user relative to the Tx and Rx.

Machine learning is another powerful technique that can be used to manage and operate on non-linear data. As the hand moves away from the ideal focusing point, it becomes more defocused. For the ML algorithm, however, this merely adds uncertainty, and through calibration the network can still select the most likely gesture. It is also worth mentioning that the competing methods have disadvantages of their own. With the indirect lighting method, detection operates on shadows, which have a high degree of variability and opportunity for error, leading to false detections. As part of future work, a study of the accuracy versus offset from the center position can be conducted.

Humans are rarely able to gesture at a constant rate, and hence the captured signal may vary with each sweep. To compensate, the signal may be time-scaled as a function of the captured limb velocity, which could be estimated during calibration. Optimization of data rate and distance for the purposes of light communications as well as sensing is an area for future work. The light intensity within a room will be a function of the distance to the light source, and the amplitude normalization step should be sufficient to control the natural variations. However, if the hardware is relocated to a new site, then a calibration should be performed to ensure optimal operation. The key parameters, such as Tx LED amplitude, equalization coefficient and Tx and Rx amplifier gain, can be tuned during a start-up calibration or by extrapolating from a look-up table depending on the time and location.
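A minimal sketch of the two compensation steps mentioned above is given below. It assumes that time-scaling is done by linear interpolation to a fixed number of samples and that amplitude normalization is a min–max scaling; neither choice is stated in the text, and the function names are hypothetical.

```python
def resample_linear(samples, target_len):
    """Time-scale a sweep to target_len samples by linear interpolation."""
    if len(samples) < 2 or target_len < 2:
        raise ValueError("need at least two input and two output samples")
    scale = (len(samples) - 1) / (target_len - 1)
    out = []
    for i in range(target_len):
        pos = i * scale
        lo = min(int(pos), len(samples) - 2)  # keep lo + 1 inside the list
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[lo + 1] * frac)
    return out

def normalize_amplitude(samples):
    """Scale a sweep to the range [0, 1]; a flat sweep maps to all zeros."""
    lo, hi = min(samples), max(samples)
    if hi == lo:
        return [0.0 for _ in samples]
    return [(s - lo) / (hi - lo) for s in samples]

# A slow and a fast sweep of the same shape map to the same fixed-length vector
slow = [0.0, 1.0, 2.0, 3.0, 4.0]
fast = [0.0, 2.0, 4.0]
print(normalize_amplitude(resample_linear(fast, 5)) == normalize_amplitude(slow))
```

In practice the target length could be set from the gesture duration estimated during calibration, so that sweeps made at different speeds occupy the same number of samples before classification.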

7. Further Work

Several optimizations of the PNN algorithm can be explored. The Gaussian function can be replaced with a Laplacian one, which could potentially be more tolerant to outliers due to its sharper peak and fatter tails. The PNN spread factor can be optimized using an iterative updating method such as least squares. The performance of the PNN could be compared with that of other classification algorithms such as the SVM. Pre-processing the waveforms using wavelets could extract important features that would enable the PNN algorithm to perform even better. The number of recognizable motions can be expanded to include those used in conventional sign language. By extending the LED–photodiode distance, the system could be used for body-centric human activity identification. We would also like to determine whether there is any variation in performance due to the positioning of the testbed with respect to sunlight.
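The difference between the two kernel shapes can be seen in a short numerical sketch. The unnormalized kernel forms and the function names below are assumptions for illustration; the text does not specify the exact Laplacian parameterization that would be used.

```python
import math

def gaussian_kernel(distance, spread):
    """Unnormalized Gaussian response for a given distance."""
    return math.exp(-(distance ** 2) / (2.0 * spread ** 2))

def laplacian_kernel(distance, spread):
    """Unnormalized Laplacian response for a given distance."""
    return math.exp(-abs(distance) / spread)

spread = 0.3
near, far = 0.1 * spread, 3.0 * spread
# Near the center the Laplacian falls off faster (sharper peak)
print(laplacian_kernel(near, spread) < gaussian_kernel(near, spread))
# Far from the center the Laplacian retains more weight (fatter tail)
print(laplacian_kernel(far, spread) > gaussian_kernel(far, spread))
```

With the fatter tail, a training pattern that lies unusually far from the input still contributes a non-negligible response, which is the sense in which the Laplacian form may tolerate outliers better.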

8. Conclusions

This work described the design of a gesture recognition system for light communication systems using a novel pre-processing stage and a probabilistic neural network. The system is applicable to human–computer interaction for health-care, robot systems, commerce and the home. The hardware comprises a single low-cost LED at the Tx and a single PD at the Rx, and recognition is based on patterns of direct light blockage caused by finger movements. Categorization accuracy using the PNN exceeded that of a reference technique that recognizes gestures from decision boundaries on the number of detected light transitions. It was shown that the PNN algorithm can correctly categorize hand gestures on up to 73% of occasions. An accuracy of 71% was achieved when the Gaussian spread was 0.3, and this low value is preferred to limit the complexity. The performance is sensitive to the particular value of the spread. To avoid selecting a less optimal value, we proposed computing the performance for two values of the spread and taking the average.

This paper described, to the best of our knowledge, the first demonstration of hand gesture recognition using a working VLC system; a direct comparison of results is therefore difficult. The gesture recognition performance of 73% can nevertheless be compared with that of other recognition systems in the literature. Our system recognizes the moving hand using a simple low-cost photodiode, and thus it is expected that the performance would be lower than that of a system requiring significant image processing to recognize images collected by cameras, such as [42] in Table 1. Although the achieved performance is lower than that of reported systems requiring special wrist sensors [29] and gloves [36], our untethered solution is considered more practical. Our future work will concentrate on expanding the number of gestures and challenging the system to recognize people based on their gesture signatures.

Author Contributions

All authors contributed to the paper. Conceptualization & methodology, J.W., A.M. and R.T.; software, J.W.; validation, investigation, formal analysis, all authors; writing—original draft preparation, J.W., A.M. and R.T.; writing—review and editing, all authors; funding acquisition, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Kuwait Foundation for Advancement of Sciences (KFAS) under Grant #PR19-13NH-04.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

1. Gorecky, D.; Schmitt, M.; Loskyll, M.; Zühlke, D. Human–machine-interaction in the industry 4.0 era. In Proceedings of the 2014 12th IEEE International Conference on Industrial Informatics (INDIN), Porto Alegre, Brazil, 27–30 July 2014; pp. 289–294.

2. Mahmud, S.; Lin, X.; Kim, J.H. Interface for Human Machine Interaction for assistant devices: A review. In Proceedings of the 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2020; pp. 0768–0773.

3. Malik, P.K.; Sharma, R.; Singh, R.; Gehlot, A.; Satapathy, S.C.; Alnumay, W.S.; Nayak, J. Industrial Internet of Things and its applications in industry 4.0: State of the art. Comput. Commun. 2021, 166, 125–139.

4. Vyas, K.K.; Pareek, A.; Vyas, S. Gesture recognition and control. Int. J. Recent Innov. Trends Comput. Commun. 2013, 1, 575–581.

5. Wong, W.K.; Juwono, F.H.; Khoo, B.T.T. Multi-features capacitive hand gesture recognition sensor: A machine learning approach. IEEE Sens. J. 2021, 21, 8441–8450.

6. Popadić, M.; Kočan, E. LiFi Networks: Concept, Standardization Activities and Perspectives. In Proceedings of the 2021 25th International Conference on Information Technology (IT), Žabljak, Montenegro, 16–20 February 2021; pp. 1–4.

7. Xu, Z.; Liu, W.; Wang, Z.; Hanzo, L. Petahertz communication: Harmonizing optical spectra for wireless communications. Digit. Commun. Netw. 2021, 8, 605–614.

8. Arya, S.; Chung, Y.H. Multiuser Interference-Limited Petahertz Wireless Communications Over Málaga Fading Channels. IEEE Access 2020, 8, 137356–137369.

9. Garg, M.; Zhan, M.; Luu, T.T.; Lakhotia, H.; Klostermann, T.; Guggenmos, A.; Goulielmakis, E. Multi-petahertz electronic metrology. Nature 2016, 538, 359–363.

10. Ding, W.; Yang, F.; Yang, H.; Wang, J.; Wang, X.; Zhang, X.; Song, J. A hybrid power line and visible light communication system for indoor hospital applications. Comput. Ind. 2015, 68, 170–178.

11. Lim, K.; Lee, H.; Chung, W. Multichannel visible light communication with wavelength division for medical data transmission. J. Med. Imaging Health Inform. 2015, 5, 1947–1951.

12. Tan, Y.; Chung, W. Mobile health–monitoring system through visible light communication. Bio-Med. Mater. Eng. 2014, 24, 3529–3538.

13. Zhang, C.; Tabor, J.; Zhang, J.; Zhang, X. Extending mobile interaction through near-field visible light sensing. In Proceedings of the ACM International Conference on Mobile Computing and Networking, MobiCom’15, Paris, France, 7–11 September 2015; pp. 345–357.

14. Vrigkas, M.; Nikou, C.; Kakadiaris, I.A. A review of human activity recognition methods. Front. Robot. AI 2015, 2, 1–28.

15. Bhat, S.; Mehbodniya, A.; Alwakeel, A.; Webber, J.; Al-Begain, K. Human Recognition using Single-Input-Single-Output Channel Model and Support Vector Machines. Int. J. Adv. Comput. Sci. Appl. (IJACSA) 2021, 12, 811–823.

16. Yin, R.; Wang, D.; Zhao, S.; Lou, Z.; Shen, G. Wearable Sensors-Enabled Human–Machine Interaction Systems: From Design to Application. Adv. Funct. Mater. 2021, 31, 2008936.

17. Alhammad, N.; Al-Dossari, H. Dynamic Segmentation for Physical Activity Recognition Using a Single Wearable Sensor. Appl. Sci. 2021, 11, 2633.

18. Alhamazani, K.T.; Alshudukhi, J.; Aljaloud, S.; Abebaw, S. Hand Gesture of Recognition Pattern Analysis by Image Treatment Techniques. Comput. Math. Methods Med. 2022, 2022, 1905151.

19. Cabrera-Quiros, L.; Tax, D.M.; Hung, H. Gestures in-the-wild: Detecting conversational hand gestures in crowded scenes using a multimodal fusion of bags of video trajectories and body worn acceleration. IEEE Trans. Multimed. 2020, 22, 138–147.

20. Pinto, R.F.; Borges, C.D.; Almeida, A.; Paula, I.C. Static hand gesture recognition based on convolutional neural networks. Hindawi Wirel. Commun. Mob. Comput. 2019, 2019, 4167890.

21. Scherer, M.; Magno, M.; Erb, J.; Mayer, P.; Eggimann, M.; Benini, L. Tinyradarnn: Combining spatial and temporal convolutional neural networks for embedded gesture recognition with short range radars. IEEE Internet Things J. 2021, 8, 10336–10346.

22. Sahoo, J.P.; Prakash, A.J.; Pławiak, P.; Samantray, S. Real-Time Hand Gesture Recognition Using Fine-Tuned Convolutional Neural Network. Sensors 2022, 22, 706.

23. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

24. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

25. Mujahid, A.; Awan, M.J.; Yasin, A.; Mohammed, M.A.; Damaševičius, R.; Maskeliūnas, R.; Abdulkareem, K.H. Real-time hand gesture recognition based on deep learning YOLOv3 model. Appl. Sci. 2021, 11, 4164.

26. Choi, J.W.; Ryu, S.J.; Kim, J.H. Short-range radar based real-time hand gesture recognition using LSTM encoder. IEEE Access 2019, 7, 33610–33618.

27. Webber, J.; Mehbodniya, A.; Teng, R.; Arafa, A.; Alwakeel, A. Finger-Gesture Recognition for Visible Light Communication Systems Using Machine Learning. Appl. Sci. 2021, 11, 11582.

28. Qi, W.; Ovur, S.E.; Li, Z.; Marzullo, A.; Song, R. Multi-Sensor Guided Hand Gesture Recognition for a Teleoperated Robot Using a Recurrent Neural Network. IEEE Robot. Autom. Lett. 2021, 6, 6039–6045.

29. Wang, Z.; Jiang, M.; Hu, Y.; Li, H. An incremental learning method based on probabilistic neural networks and adjustable fuzzy clustering for human activity recognition by using wearable sensors. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 691–699.

30. Lotfi, A.; Benyettou, A. Cross-validation probabilistic neural network based face identification. J. Inf. Process. Syst. 2018, 14, 1075–1086.

31. Saha, S.; Lahiri, R.; Konar, A.; Banerjee, B.; Nagar, A.K. Human skeleton matching for e-learning of dance using a probabilistic neural network. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 1754–1761.

32. Konar, A.; Saha, S. Probabilistic Neural Network Based Dance Gesture Recognition. In Gesture Recognition; Springer: Cham, Switzerland, 2018; pp. 195–224.

33. Tang, Y.; Zhang, S.; Niu, L. Handwritten Arabic numerals recognition system using probabilistic neural networks. J. Phys. Conf. Ser. 2021, 1738, 012082.

34. Mondal, A.; Ghosh, S.; Ghosh, A. Partially camouflaged object tracking using modified probabilistic neural network and fuzzy energy based active contour. Int. J. Comput. Vis. 2017, 122, 116–148.

35. Mohanty, M.N.; Palo, H.K. Child emotion recognition using probabilistic neural network with effective features. Measurement 2020, 152, 107369.

36. Luzanin, O.; Plancak, M. Hand gesture recognition using low-budget data glove and cluster-trained probabilistic neural network. Assem. Autom. 2014, 34, 94–105.

37. Fu, J.; Xiong, L.; Song, X.; Yan, Z.; Xie, Y. Identification of finger movements from forearm surface emg using an augmented probabilistic neural network. In Proceedings of the IEEE/SICE International Symposium on System Integration (SII), Taipei, Taiwan, 11–14 December 2017; pp. 547–552.

38. Ge, S.S.; Yang, Y.; Lee, T.H. Hand gesture recognition and tracking based on distributed locally linear embedding. Image Vis. Comput. 2008, 26, 1607–1620.

39. Yu, L.; Abuella, H.; Islam, M.Z.; O’Hara, J.F.; Crick, C.; Ekin, S. Gesture recognition using reflected visible and infrared lightwave signals. IEEE Trans. Hum.-Mach. Syst. 2021, 51, 44–55.

40. Kaholokula, M.D.A. Reusing Ambient Light to Recognize Hand Gestures. Undergraduate Thesis, Dartmouth College, Hanover, NH, USA, 2016.

41. Parvathy, P.; Subramaniam, K.; Prasanna Venkatesan, G.K.D.; Karthikaikumar, P.; Varghese, J.; Jayasankar, T. Development of hand gesture recognition system using machine learning. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 6793–6800.

42. Srivastava, H.; Ojha, N.; Vaish, A. Stereo Music System Control using Vision based Static Hand Gesture Recognition. In Proceedings of the 2021 Asian Conference on Innovation in Technology (ASIANCON), Pune, India, 27–29 August 2021; pp. 1–5.

43. Vishwakarma, D.K.; Kapoor, R. An efficient interpretation of hand gestures to control smart interactive television. Int. J. Comput. Vis. Robot. 2021, 7, 454–471.

44. Kahn, J.M.; Barry, J.R. Wireless infrared communications. Proc. IEEE 1997, 85, 265–298.

45. Specht, D.F. Probabilistic Neural Networks. Neural Netw. 1990, 3, 109–118.

46. Kusy, M.; Kowalski, P.A. Weighted probabilistic neural network. Inf. Sci. 2018, 430, 65–76.

47. Mohebali, B.; Tahmassebi, A.; Meyer-Baese, A.; Gandomi, A.H. Probabilistic neural networks: A brief overview of theory, implementation, and application. In Handbook of Probabilistic Models; Elsevier: Amsterdam, The Netherlands, 2017; pp. 347–367.

48. Shree, N.V.; Kumar, T.N.R. Identification and classification of brain tumor MRI images with feature extraction using DWT and probabilistic neural network. Brain Inform. 2018, 5, 23–30.

49. Hasan, S.; Yousif, M.; Al-Talib, T.M. Brain tumor classification using Probabilistic Neural Network. J. Fundam. Appl. Sci. 2018, 10, 667–670.

50. Duraisamy, B.; Shanmugam, J.V.; Annamalai, J. Alzheimer disease detection from structural MR images using FCM based weighted probabilistic neural network. Brain Imaging Behav. 2019, 13, 87–110.

51. Cetin, O.; Temurtas, F. A comparative study on classification of magnetoencephalography signals using probabilistic neural network and multilayer neural network. Soft Comput. 2021, 25, 2267–2275.

52. Jiang, Q.; Shen, Y.; Li, H.; Xu, F. New fault recognition method for rotary machinery based on information entropy and a probabilistic neural network. Sensors 2018, 18, 337.

53. Webber, J.; Mehbodniya, A.; Hou, Y.; Yano, K.; Kumagai, T. Study on Idle Slot Availability Prediction for WLAN using a Probabilistic Neural Network. In Proceedings of the IEEE Asia Pacific Conference on Communications (APCC’17), Perth, WA, Australia, 11–13 December 2017; pp. 157–162.

54. Webber, J.; Suga, N.; Mehbodniya, A.; Yano, K.; Kumagai, T. Study on fading prediction for automated guided vehicle using probabilistic neural network. In Proceedings of the 23rd Asia-Pacific Microwave Conference (APMC), Kyoto, Japan, 6–9 November 2018; pp. 887–889.

55. Parzen, E. On estimation of a probability density function and mode. Ann. Math. Stat. 1962, 33, 1065–1076.

56. Babatunde, O.H.; Armstrong, L.; Leng, J.; Diepeveen, D. The Application Of Genetic Probabilistic Neural Networks and Cellular Neural Networks. Asian J. Comput. Inf. Syst. 2014, 2, 90–101.

57. Ghosh, D.; Vogt, A. Outliers: An evaluation of methodologies. In Proceedings of the Joint Statistical Meetings, San Diego, CA, USA, 28 July–2 August 2012.

58. Webber, J.; Mehbodniya, A.; Hou, Y.; Yano, K.; Usui, M.; Kumagai, T. Prediction scheme of WLAN idle status using probabilistic neural network and implementation on FPGA. IEICE Tech. Rep. 2018, 117, 117–124.

59. Savchenko, A. Probabilistic Neural Network with Complex Exponential Activation Functions in Image Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 651–660.

60. Sak, H.; Senior, A.; Beaufays, F. Long short-term memory based recurrent neural network architectures for large vocabulary speech recognition. arXiv 2014, arXiv:1402.1128.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).