Key Requirements for the Detection and Sharing of Behavioral Indicators of Compromise

Abstract

1. Introduction

- Analyzing the problems of specification, detection and sharing of behavioral indicators of compromise.

- Extracting the key features of cyber operations from advanced threat actors.

- Identifying and structuring the key requirements, from an intelligence perspective, for the detection and sharing of behavioral indicators of compromise.

- Identifying current efforts to fulfill those requirements and the failures of those efforts.

2. Background

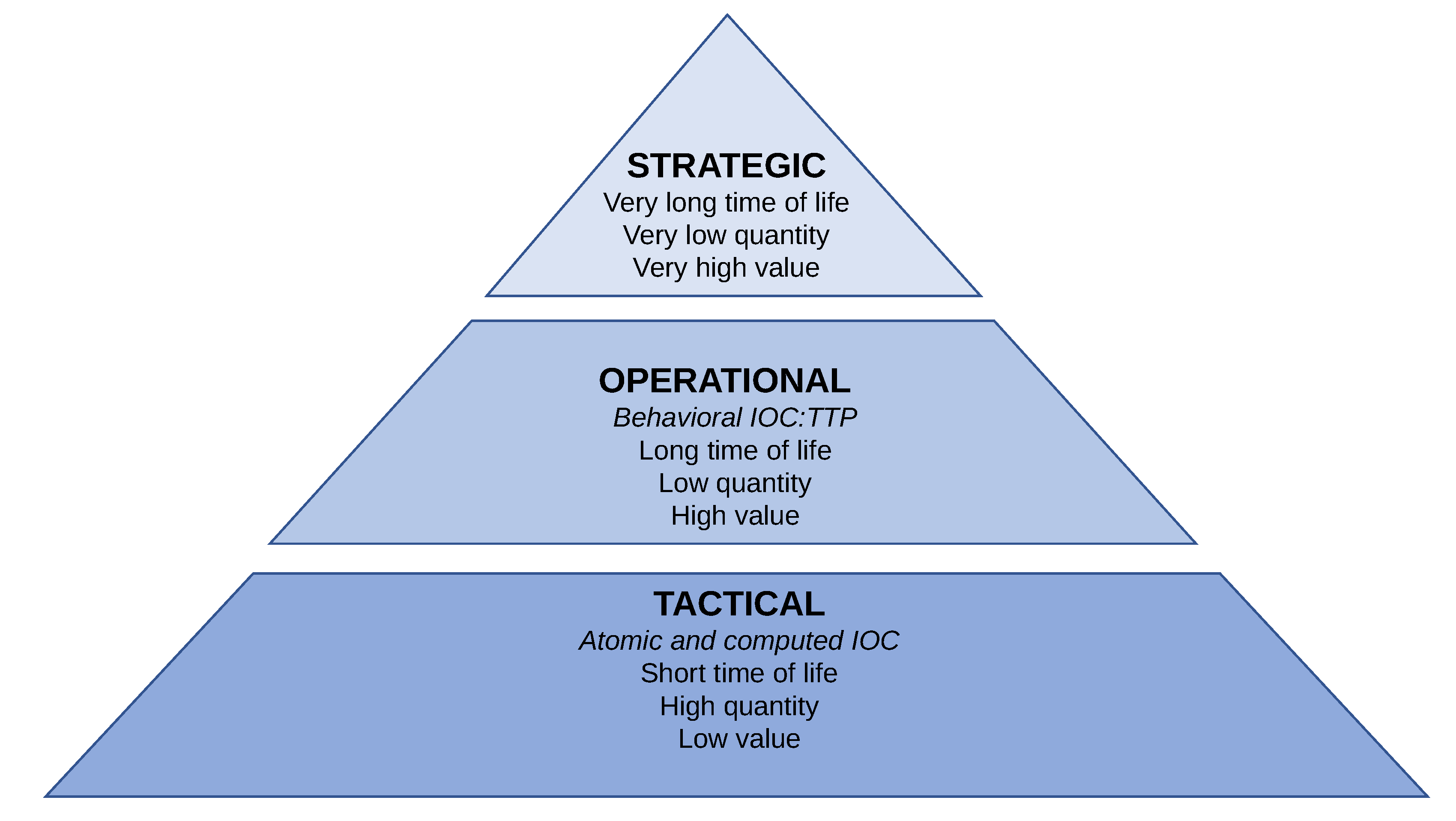

2.1. Indicators of Compromise

- Atomic. Atomic indicators are those which cannot be broken down into smaller parts and retain their meaning in the context of an intrusion. Examples of atomic indicators include IP addresses and domain names.

- Computed. Computed indicators are those which are derived from data involved in an incident. Examples of computed indicators include hash values and regular expressions.

- Behavioral. Behavioral indicators are collections of computed and atomic indicators, often subject to qualification by quantity and possibly combinatorial logic. An example of a complex behavioral indicator could be repeated social engineering attempts of a specific style via email against low-level employees to gain a foothold in the network, followed by unauthorized remote desktop connections to other computers on the network delivering specific malware [4]; a simpler example could be a document file creating an executable object. Such indicators are captured as tactics, techniques and procedures, representing the modus operandi of the attacker [6].
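The three indicator types can be contrasted in a short sketch. It is a minimal illustration under stated assumptions, not the authors' method. The `Event` record, the indicator values (a documentation-range IP address, an example domain and the hash of a dummy payload) and the list of document-handling processes are all hypothetical. The simpler behavioral example, "a document file creating an executable object", is approximated here as a document-handling process writing a file whose name ends in an executable extension.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical, simplified endpoint/network event."""
    host: str
    action: str        # e.g. "connect" or "file_create"
    actor: str         # name of the process performing the action
    target: str        # IP address, domain name or file path
    content: bytes = b""


# Atomic indicators: meaningful on their own (all values are illustrative).
ATOMIC_IPS = {"203.0.113.10"}
ATOMIC_DOMAINS = {"malicious.example"}

# Computed indicators: derived from incident data (a hash value and a regex).
KNOWN_SHA256 = {hashlib.sha256(b"dropper payload").hexdigest()}
EXECUTABLE_RE = re.compile(r"\.(exe|dll|scr)$", re.IGNORECASE)

# Assumed (hypothetical) list of processes that open document files.
DOCUMENT_HANDLERS = {"winword.exe", "excel.exe", "acrord32.exe"}


def atomic_match(ev: Event) -> bool:
    """Outbound connection to a known-bad IP address or domain."""
    return ev.action == "connect" and (
        ev.target in ATOMIC_IPS or ev.target in ATOMIC_DOMAINS
    )


def computed_match(ev: Event) -> bool:
    """Content whose SHA-256 digest is a known-bad hash."""
    return hashlib.sha256(ev.content).hexdigest() in KNOWN_SHA256


def behavioral_match(events: list[Event]) -> list[str]:
    """Behavioral indicator combining a condition on who acts (process name)
    with a computed one on what is written (regex): a document handler
    creating an executable. Returns the hosts where this was observed."""
    return [
        ev.host
        for ev in events
        if ev.action == "file_create"
        and ev.actor.lower() in DOCUMENT_HANDLERS
        and EXECUTABLE_RE.search(ev.target)
    ]
```

In this sketch the behavioral rule does not depend on any particular hash or address. It would therefore still fire if the dropped file or the attacker's infrastructure changed, whereas `atomic_match` and `computed_match` would not.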

2.2. Intelligence Cycle

- Direction. Determination of intelligence requirements, planning the collection effort, issuance of orders and requests to collection agencies and maintenance of continuous checking on the productivity of such agencies.

- Collection. The exploitation of sources by collection agencies and the delivery of the information obtained to the appropriate processing unit for use in the production of intelligence.

- Processing. The conversion of information into usable data suitable for analysis.

- Analysis. Tasks related to integration, evaluation or interpretation of information to turn it into intelligence: a contextualized, coherent whole.

- Dissemination. The timely conveyance of intelligence, in an appropriate form and by any suitable means, to those who need it.
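The five stages above are ordered, and the word "cycle" implies that the last stage leads back to the first. The following minimal sketch encodes that ordering. It assumes, as is conventional but not stated explicitly above, that dissemination feeds back into direction. The names `Stage`, `next_stage` and `walk` are hypothetical.

```python
from enum import Enum


class Stage(Enum):
    """Stages of the intelligence cycle, in the order listed above."""
    DIRECTION = 1
    COLLECTION = 2
    PROCESSING = 3
    ANALYSIS = 4
    DISSEMINATION = 5


_ORDER = list(Stage)  # iteration over an Enum follows definition order


def next_stage(stage: Stage) -> Stage:
    """Stage that follows `stage`; dissemination wraps around to direction."""
    return _ORDER[(_ORDER.index(stage) + 1) % len(_ORDER)]


def walk(start: Stage, steps: int) -> list[Stage]:
    """Return `steps` consecutive stages beginning at `start`."""
    if steps <= 0:
        return []
    stages = [start]
    for _ in range(steps - 1):
        stages.append(next_stage(stages[-1]))
    return stages
```

Section 5 organizes the key requirements by these same stages, using the term acquisition for collection.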

3. The Issue

3.1. Threat Specification

3.2. Real-World IOC

4. Approaches and Limitations


5. Key Requirements for Behavioral IOC Detection and Sharing

5.1. Acquisition

- Endpoint, including not only user endpoints but also servers, where processes are created, files are opened and threat activities are ultimately carried out; this source also includes the infrastructure that manages endpoints as a whole, such as Windows Active Directory.

- Network, including both packet payloads and flow records (NetFlow), where the threat actor's movements, both lateral and external, take place.

- Perimeter, where data is exchanged between the threat actor and its target, including network devices such as firewalls, data loss prevention systems and virtual private network servers.
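The three source types above produce records in very different shapes. The following minimal sketch brings them into one common event format so that regular events and alerts alike can later be related to each other. The record layouts and field names (`ts`, `hostname`, `src_ip`, `verdict`, and so on) are hypothetical and are not taken from any specific product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CommonEvent:
    """Hypothetical common format shared by all acquisition sources."""
    timestamp: datetime  # always timezone-aware (UTC)
    source: str          # "endpoint", "network" or "perimeter"
    entity: str          # hostname or IP address the event is attributed to
    action: str
    detail: str


def from_endpoint(rec: dict) -> CommonEvent:
    # Assumed layout: {"ts": epoch seconds, "hostname", "event", "image"}
    return CommonEvent(datetime.fromtimestamp(rec["ts"], tz=timezone.utc),
                       "endpoint", rec["hostname"], rec["event"], rec["image"])


def from_flow(rec: dict) -> CommonEvent:
    # Assumed flow record: {"start": epoch seconds, "src_ip", "dst_ip", "dst_port"}
    return CommonEvent(datetime.fromtimestamp(rec["start"], tz=timezone.utc),
                       "network", rec["src_ip"], "flow",
                       f"{rec['dst_ip']}:{rec['dst_port']}")


def from_firewall(rec: dict) -> CommonEvent:
    # Assumed firewall log: {"time": ISO 8601 string with a UTC offset, "src", "dst", "verdict"}
    return CommonEvent(datetime.fromisoformat(rec["time"]).astimezone(timezone.utc),
                       "perimeter", rec["src"], rec["verdict"], rec["dst"])


NORMALIZERS = {"endpoint": from_endpoint, "network": from_flow,
               "perimeter": from_firewall}


def ingest(raw: list[tuple[str, dict]]) -> list[CommonEvent]:
    """Normalize every record, regular events as well as alerts, into the
    common format and return them in chronological order."""
    return sorted((NORMALIZERS[src](rec) for src, rec in raw),
                  key=lambda e: e.timestamp)
```

Once every record shares the same fields and a UTC timestamp, events from the three sources can be stored together and related by entity and time.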

5.2. Processing

5.3. Analysis

5.4. Dissemination

5.5. A Practical Example

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Abu, S.; Selamat, S.R.; Yusof, R.; Ariffin, A. An Enhancement of Cyber Threat Intelligence Framework. J. Adv. Res. Dyn. Control. Syst. 2018, 10, 96–104.

- Rantos, K.; Spyros, A.; Papanikolaou, A.; Kritsas, A.; Ilioudis, C.; Katos, V. Interoperability challenges in the cybersecurity information sharing ecosystem. Computers 2020, 9, 18.

- Harrington, C. Sharing indicators of compromise: An overview of standards and formats. EMC Crit. Incid. Response Cent. 2013.

- Rid, T.; Buchanan, B. Attributing cyber attacks. J. Strateg. Stud. 2015, 38, 4–37.

- Niakanlahiji, A.; Safarnejad, L.; Harper, R.; Chu, B.T. IoCMiner: Automatic Extraction of Indicators of Compromise from Twitter. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 4747–4754.

- Skopik, F.; Filip, S. Design principles for national cyber security sensor networks: Lessons learned from small-scale demonstrators. In Proceedings of the 2019 International Conference on Cyber Security and Protection of Digital Services (Cyber Security), Oxford, UK, 3–4 June 2019; pp. 1–8.

- Cloppert, M. Security Intelligence: Attacking the Cyber Kill Chain; SANS Institute: Bethesda, MD, USA, 2009; Volume 26.

- Hutchins, E.M.; Cloppert, M.J.; Amin, R.M. Intelligence-driven computer network defense informed by analysis of adversary campaigns and intrusion kill chains. Lead. Issues Inf. Warf. Secur. Res. 2011, 1, 80.

- Brown, R.; Lee, R.M. The Evolution of Cyber Threat Intelligence (CTI): 2019 SANS CTI Survey; SANS Institute: Bethesda, MD, USA, 2019.

- Iqbal, Z.; Anwar, Z. Ontology Generation of Advanced Persistent Threats and their Automated Analysis. NUST J. Eng. Sci. 2016, 9, 68–75.

- Vakilinia, I.; Cheung, S.; Sengupta, S. Sharing susceptible passwords as cyber threat intelligence feed. In Proceedings of the MILCOM 2018-2018 IEEE Military Communications Conference (MILCOM), Los Angeles, CA, USA, 29–31 October 2018; pp. 1–6.

- Kazato, Y.; Nakagawa, Y.; Nakatani, Y. Improving Maliciousness Estimation of Indicator of Compromise Using Graph Convolutional Networks. In Proceedings of the 2020 IEEE 17th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 10–13 January 2020; pp. 1–7.

- Wu, Y.; Huang, C.; Zhang, X.; Zhou, H. GroupTracer: Automatic Attacker TTP Profile Extraction and Group Cluster in Internet of Things. Secur. Commun. Netw. 2020, 2020.

- NATO Standardization Office. NATO Glossary of Terms and Definitions (English and French); NATO Standardization Agency (NSA): Brussels, Belgium, 2018.

- Joint Chiefs of Staff. Joint Publication 2-0. Joint Intelligence. Technical Report. 2013. Available online: https://www.jcs.mil/Portals/36/Documents/Doctrine/pubs/jp2_0.pdf (accessed on 21 December 2021).

- Boury-Brisset, A.C.; Frini, A.; Lebrun, R. All-Source Information Management and Integration for Improved Collective Intelligence Production; Technical Report; Defence Research and Development Canada Valcartier: Quebec, QC, Canada, 2011.

- Clark, R.M.; Oleson, P.C. Cyber Intelligence. J. U.S. Intell. Stud. 2018, 24, 11–23.

- Richelson, J. The US Intelligence Community, 7th ed.; Routledge: England, UK, 2016.

- U.S. Army. Field Manual 2-0 Intelligence, The US Army; Headquarters Department of the Army: Washington, DC, USA, 2004.

- Danyliw, R.; Meijer, J.; Demchenko, Y. The Incident Object Description Exchange Format. RFC 5070. 2007. Available online: https://datatracker.ietf.org/doc/html/rfc5070 (accessed on 21 December 2021).

- Burger, E.W.; Goodman, M.D.; Kampanakis, P.; Zhu, K.A. Taxonomy model for cyber threat intelligence information exchange technologies. In Proceedings of the 2014 ACM Workshop on Information Sharing & Collaborative Security, Vienna, Austria, 23–25 October 2014; pp. 51–60.

- Cain, P.; Jevans, D. Extensions to the IODEF-Document Class for Reporting Phishing. RFC 5901. 2010. Available online: https://datatracker.ietf.org/doc/html/rfc5901 (accessed on 17 December 2021).

- Mavroeidis, V.; Bromander, S. Cyber Threat Intelligence Model: An Evaluation of Taxonomies, Sharing Standards, and Ontologies within Cyber Threat Intelligence. In Proceedings of the 2017 European Intelligence and Security Informatics Conference (EISIC), Athens, Greece, 11–13 September 2017; pp. 91–98.

- Barnum, S. Standardizing cyber threat intelligence information with the Structured Threat Information eXpression (STIX). MITRE Corp. 2012, 11, 1–22.

- Ramsdale, A.; Shiaeles, S.; Kolokotronis, N. A Comparative Analysis of Cyber-Threat Intelligence Sources, Formats and Languages. Electronics 2020, 9, 824.

- Mirza, Q.K.A.; Mohi-Ud-Din, G.; Awan, I. A cloud-based energy efficient system for enhancing the detection and prevention of modern malware. In Proceedings of the 2016 IEEE 30th International Conference on Advanced Information Networking and Applications (AINA), Crans-Montana, Switzerland, 23–25 March 2016; pp. 754–761.

- Abe, S.; Uchida, Y.; Hori, M.; Hiraoka, Y.; Horata, S. Cyber Threat Information Sharing System for Industrial Control System (ICS). In Proceedings of the 2018 57th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Nara, Japan, 11–14 September 2018; pp. 374–379.

- Troiano, E.; Soldatos, J.; Polyviou, A.; Polyviou, A.; Mamelli, A.; Drakoulis, D. Big Data Platform for Integrated Cyber and Physical Security of Critical Infrastructures for the Financial Sector: Critical Infrastructures as Cyber-Physical Systems. In Proceedings of the 11th International Conference on Management of Digital EcoSystems, Limassol, Cyprus, 12–14 November 2019; pp. 262–269.

- Ussath, M.; Jaeger, D.; Cheng, F.; Meinel, C. Pushing the limits of cyber threat intelligence: Extending STIX to support complex patterns. In Information Technology: New Generations; Springer: Berlin/Heidelberg, Germany, 2016; pp. 213–225.

- Tounsi, W. Cyber-Vigilance and Digital Trust: Cyber Security in the Era of Cloud Computing and IoT; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Sauerwein, C.; Sillaber, C.; Mussmann, A.; Breu, R. Threat intelligence sharing platforms: An exploratory study of software vendors and research perspectives. In Proceedings of the Wirtschaftsinformatik 2017 Proceedings (Track 8: Information Privacy and Information Security), St. Gallen, Switzerland, 8–15 February 2017; pp. 837–851.

- Gong, S.; Lee, C. Cyber Threat Intelligence Framework for Incident Response in an Energy Cloud Platform. Electronics 2021, 10, 239.

- Cyberwiser. Implementation of the Network and Information Security (NIS) Directive; Technical Report ETSI TR 103 456; European Telecommunications Standards Institute: Sophia Antipolis Cedex, France, 2017.

- Rhoades, D. Machine actionable indicators of compromise. In Proceedings of the 2014 International Carnahan Conference on Security Technology (ICCST), Rome, Italy, 13–16 October 2014; pp. 1–5.

- Gong, S.; Cho, J.; Lee, C. A Reliability Comparison Method for OSINT Validity Analysis. IEEE Trans. Ind. Informatics 2018, 14, 5428–5435.

- Tounsi, W.; Rais, H. A survey on technical threat intelligence in the age of sophisticated cyber attacks. Comput. Secur. 2018, 72, 212–233.

- Ghazi, Y.; Anwar, Z.; Mumtaz, R.; Saleem, S.; Tahir, A. A supervised machine learning based approach for automatically extracting high-level threat intelligence from unstructured sources. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 129–134.

- Bianco, D. The Pyramid of Pain. Technical Report. 2013. Available online: http://detect-respond.blogspot.com/2013/03/the-pyramid-of-pain.html (accessed on 13 November 2021).

- Park, C.; Chung, H.; Seo, K.; Lee, S. Research on the classification model of similarity malware using fuzzy hash. J. Korea Inst. Inf. Secur. Cryptol. 2012, 22, 1325–1336.

- French, D.; Casey, W. Fuzzy Hashing Techniques in Applied Malware Analysis. In Results of SEI Line-Funded Exploratory New Starts Projects; Software Engineering Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2012; pp. 2–19.

- Almohannadi, H.; Awan, I.; Al Hamar, J.; Cullen, A.; Disso, J.P.; Armitage, L. Cyber threat intelligence from honeypot data using elasticsearch. In Proceedings of the 2018 IEEE 32nd International Conference on Advanced Information Networking and Applications (AINA), Krakow, Poland, 16–18 May 2018; pp. 900–906.

- Kambara, Y.; Katayama, Y.; Oikawa, T.; Furukawa, K.; Torii, S.; Izu, T. Developing the Analysis Tool of Cyber-Attacks by Using CTI and Attributes of Organization. In Workshops of the International Conference on Advanced Information Networking and Applications; Springer: Berlin/Heidelberg, Germany, 2019; pp. 673–682.

- Jacob, G.; Debar, H.; Filiol, E. Behavioral detection of malware: From a survey towards an established taxonomy. J. Comput. Virol. 2008, 4, 251–266.

- Ligh, M.; Adair, S.; Hartstein, B.; Richard, M. Malware Analyst’s Cookbook and DVD: Tools and Techniques for Fighting Malicious Code; Wiley Publishing: Indianapolis, IN, USA, 2010.

- Beaucamps, P.; Gnaedig, I.; Marion, J.Y. Behavior abstraction in malware analysis. In International Conference on Runtime Verification; Springer: Berlin/Heidelberg, Germany, 2010; pp. 168–182.

- Husari, G.; Al-Shaer, E.; Ahmed, M.; Chu, B.; Niu, X. TTPDrill: Automatic and accurate extraction of threat actions from unstructured text of CTI sources. In Proceedings of the 33rd Annual Computer Security Applications Conference, New York, NY, USA, 4–8 December 2017; pp. 103–115.

- De Tender, P.; Rendon, D.; Erskine, S. Pro Azure Governance and Security. A Comprehensive Guide to Azure Policy, Blueprints, Security Center, and Sentinel; Springer: Berlin/Heidelberg, Germany, 2019.

- Bussa, T.; Litan, A.; Phillips, T. Market Guide for User and Entity Behavior Analytics; Technical Report; Gartner: Stamford, CT, USA, 2016.

- Gates, C.; Taylor, C. Challenging the anomaly detection paradigm: A provocative discussion. In Proceedings of the 2006 Workshop on New Security Paradigms, Schloss Dagstuhl, Germany, 19–22 September 2006; pp. 21–29.

- FireEye Threat Intelligence. APT28: A Window into Russia’s Cyber Espionage Operations; Technical Report. Available online: https://www.fireeye.com/content/dam/fireeye-www/global/en/current-threats/pdfs/rpt-apt28.pdf (accessed on 3 October 2021).

- Mwiki, H.; Dargahi, T.; Dehghantanha, A.; Choo, K.K.R. Analysis and triage of advanced hacking groups targeting western countries critical national infrastructure: APT28, RED October, and Regin. In Critical Infrastructure Security and Resilience; Springer: Berlin/Heidelberg, Germany, 2019; pp. 221–244.

- Utterback, K. An Analysis of the Cyber Threat Actors Targeting the United States and Its Allies. Ph.D. Thesis, Utica College, Utica, NY, USA, 2021.

- Shuya, M. Russian cyber aggression and the new Cold War. J. Strateg. Secur. 2018, 11, 1–18.

- Kotenko, I.; Chechulin, A. Attack modeling and security evaluation in SIEM systems. Int. Trans. Syst. Sci. Appl. 2012, 8, 129–147.

- Kotenko, I.; Chechulin, A. Common framework for attack modeling and security evaluation in SIEM systems. In Proceedings of the 2012 IEEE International Conference on Green Computing and Communications, Besancon, France, 20–23 November 2012.

- Miloslavskaya, N. Stream Data Analytics for Network Attacks’ Prediction. Procedia Comput. Sci. 2020, 169, 57–62.

- Ayoade, G.; Chandra, S.; Khan, L.; Hamlen, K.; Thuraisingham, B. Automated threat report classification over multi-source data. In Proceedings of the 2018 IEEE 4th International Conference on Collaboration and Internet Computing (CIC), Philadelphia, PA, USA, 18–20 October 2018.

- Mandiant Intelligence Center. APT1: Exposing One of China’s Cyber Espionage Units; Technical Report. 2014. Available online: https://www.mandiant.com/media/9941/download (accessed on 28 October 2021).

- Wagner, T.D. Cyber Threat Intelligence for “Things”. In Proceedings of the 2019 International Conference on Cyber Situational Awareness, Data Analytics and Assessment (Cyber SA), Oxford, UK, 3–4 June 2019; pp. 1–2.

- Strom, B.E.; Applebaum, A.; Miller, D.P.; Nickels, K.C.; Pennington, A.G.; Thomas, C.B. Mitre ATT&CK: Design and Philosophy; Technical Report; The MITRE Corporation. Available online: https://www.mitre.org/sites/default/files/publications/pr-18-0944-11-mitre-attack-design-and-philosophy.pdf (accessed on 5 December 2021).

- Wheelus, C.; Bou-Harb, E.; Zhu, X. Towards a big data architecture for facilitating cyber threat intelligence. In Proceedings of the 2016 8th IFIP International Conference on New Technologies, Mobility and Security (NTMS), Larnaca, Cyprus, 21–23 November 2016; pp. 1–5.

- Mtsweni, J.; Mutemwa, M.; Mkhonto, N. Development of a cyber-threat intelligence-sharing model from big data sources. J. Inf. Warf. 2016, 15, 56–68.

- Pennington, A.; Applebaum, A.; Nickels, K.; Schulz, T.; Strom, B.; Wunder, J. Getting Started with ATT&CK; Technical Report; The MITRE Corporation. Available online: https://www.mitre.org/publications/technical-papers/getting-started-with-attack (accessed on 5 December 2021).

- Preuveneers, D.; Joosen, W. Sharing Machine Learning Models as Indicators of Compromise for Cyber Threat Intelligence. J. Cybersecur. Priv. 2021, 1, 8.

- Ring, T. Threat intelligence: Why people do not share. Comput. Fraud. Secur. 2014, 2014, 5–9.

- Wagner, T.D.; Mahbub, K.; Palomar, E.; Abdallah, A.E. Cyber threat intelligence sharing: Survey and research directions. Comput. Secur. 2019, 87, 101589.

- Peng, K.; Li, M.; Huang, H.; Wang, C.; Wan, S.; Choo, K.K.R. Security Challenges and Opportunities for Smart Contracts in Internet of Things: A Survey. IEEE Internet Things J. 2021, 8, 12004–12020.

- Elmaghraby, A.S.; Losavio, M.M. Cyber security challenges in Smart Cities: Safety, security and privacy. J. Adv. Res. 2014, 5, 491–497.

- Malhotra, P.; Singh, Y.; Anand, P.; Bangotra, D.K.; Singh, P.K.; Hong, W.C. Internet of Things: Evolution, Concerns and Security Challenges. Sensors 2021, 21, 1809.

- Shaukat, K.; Alam, T.M.; Hameed, I.A.; Khan, W.A.; Abbas, N.; Luo, S. A Review on Security Challenges in Internet of Things (IoT). In Proceedings of the 2021 26th International Conference on Automation and Computing (ICAC), Portsmouth, UK, 2–4 September 2021; pp. 1–6.

- Latif, S.; Idrees, Z.; e Huma, Z.; Ahmad, J. Blockchain technology for the industrial Internet of Things: A comprehensive survey on security challenges, architectures, applications, and future research directions. Trans. Emerg. Telecommun. Technol. 2021, 32, e4337.

- Sullivan, S.; Brighente, A.; Kumar, S.; Conti, M. 5G Security Challenges and Solutions: A Review by OSI Layers. IEEE Access 2021, 9, 116294–116314.

- Navamani, T. A Review on Cryptocurrencies Security. J. Appl. Secur. Res. 2021, 1–21.

| Artifact | Autonomous System (AS) | Directory |
|---|---|---|
| Domain Name | Email Address | Email Message |
| File | IPv4 Address | IPv6 Address |
| MAC Address | Mutex | Network Traffic |
| Process | Software | URL |
| User Account | Windows Registry Key | X.509 Certificate |
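The object types in the table above appear to correspond to the STIX 2.1 Cyber-observable Object types. The code below is a hedged sketch of how such objects can be combined into a shareable behavioral indicator. It builds a STIX 2.1 Indicator as plain JSON using only the Python standard library, not the `stix2` package. The pattern is only an approximation. It states that an observation of a `WINWORD.EXE` process is followed within 60 seconds by an observation of a file whose name ends in `.exe`, but it does not state that the process created that file. The indicator name, the pattern and the 60-second window are illustrative assumptions.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(dt: datetime) -> str:
    """RFC 3339 UTC timestamp with millisecond precision and a trailing 'Z'."""
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def behavioral_indicator(name: str, pattern: str) -> dict:
    """Build a minimal STIX 2.1 Indicator object as a Python dict."""
    now = stix_timestamp(datetime.now(timezone.utc))
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "indicator_types": ["malicious-activity"],
        "pattern": pattern,
        "pattern_type": "stix",
        "valid_from": now,
    }


# Two observation expressions joined by FOLLOWEDBY; the WITHIN qualifier
# applies to the parenthesized sequence as a whole.
PATTERN = (
    "([process:image_ref.name = 'WINWORD.EXE'] "
    "FOLLOWEDBY [file:name LIKE '%.exe']) WITHIN 60 SECONDS"
)

if __name__ == "__main__":
    indicator = behavioral_indicator("Office document dropping an executable", PATTERN)
    print(json.dumps(indicator, indent=2))
```

Because the result is machine-readable JSON, any tool that implements STIX patterning can consume it, which is the kind of exportable format the dissemination stage calls for.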

| Feature | Description |
|---|---|
| Multiple targets | Advanced threat actors target a wide spectrum of victims, including sectors such as military, government, technology, energy and even non-profit organizations |
| Broad range of techniques | Advanced threat actors achieve their goals through a broad range of techniques. These techniques are usually stealthy, in order to go unnoticed, and a single threat actor can execute different techniques linked to the same tactic, even in a single operation against a particular target |
| Tailored tools and artifacts | Advanced threat actors can use multiple tools and artifacts in their operations. These range from purpose-built malware to legitimate system tools, and in many cases the threat actor is aware of the countermeasures deployed by the target and knows how to evade them |
| Potential indicators | Hostile activities leave traces in targeted systems and internal network traffic. In addition, the target's perimeter security must be monitored in order to detect connections to command and control or exfiltration servers |
| Compromises spread over time | Once a target is compromised, the compromise extends over time in most operations, giving the threat actor control of its target for months or years |

| IC Stage | Key Requirements |
|---|---|
| Acquisition | Acquire data from multiple, relevant sources |
| | Acquire not only alerts, but regular events |
| Processing | Central data repository where relationships can be established |
| | Common format for stored data |
| | Long-term retention |
| Analysis | Platform-agnostic implementation |
| | Full native coverage for all techniques |
| | Correlation of data from multiple sources |
| | Comparison of correlated data against a reference |
| Dissemination | Machine-readable and exportable format |
| | Standard query language among providers |
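The two analysis requirements "correlation of data from multiple sources" and "comparison of correlated data against a reference" can be illustrated with a minimal sketch. It assumes that observations have already been normalized and mapped to MITRE ATT&CK technique IDs, a step that is not shown. The `Observation` type, the reference sequence (phishing, then Remote Desktop Protocol, then exfiltration over the command and control channel) and the time window are illustrative assumptions, not part of the authors' proposal.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """Hypothetical normalized observation already mapped to an ATT&CK technique."""
    timestamp: float   # seconds since the epoch
    source: str        # "endpoint", "network" or "perimeter"
    entity: str        # host the observation is attributed to
    technique: str     # MITRE ATT&CK technique ID, e.g. "T1566"


def correlate(observations: list[Observation]) -> dict[str, list[Observation]]:
    """Merge observations from all sources into one time-ordered timeline per entity."""
    timelines: dict[str, list[Observation]] = defaultdict(list)
    for obs in sorted(observations, key=lambda o: o.timestamp):
        timelines[obs.entity].append(obs)
    return dict(timelines)


def matches_reference(timeline: list[Observation], reference: tuple[str, ...],
                      window: float) -> bool:
    """True if the reference techniques appear in order (gaps allowed) and the
    first and last matched observations lie at most `window` seconds apart."""
    if not reference:
        return False
    for i, first in enumerate(timeline):
        if first.technique != reference[0]:
            continue
        k, end = 1, first.timestamp
        # Greedy earliest match gives the shortest span for this starting point.
        for obs in timeline[i + 1:]:
            if k == len(reference):
                break
            if obs.technique == reference[k]:
                k, end = k + 1, obs.timestamp
        if k == len(reference) and end - first.timestamp <= window:
            return True
    return False


def detect(observations: list[Observation], reference: tuple[str, ...],
           window: float) -> list[str]:
    """Entities whose correlated timeline matches the reference sequence."""
    return sorted(entity for entity, timeline in correlate(observations).items()
                  if matches_reference(timeline, reference, window))


# Illustrative reference: Phishing, then Remote Desktop Protocol,
# then Exfiltration Over C2 Channel.
REFERENCE = ("T1566", "T1021.001", "T1041")
```

The sketch compares against an ordered sequence with a time window rather than a plain set of techniques. This reflects the view of behavioral indicators as combinations of events qualified by order and time, not single values.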

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Villalón-Huerta, A.; Ripoll-Ripoll, I.; Marco-Gisbert, H. Key Requirements for the Detection and Sharing of Behavioral Indicators of Compromise. Electronics 2022, 11, 416. https://doi.org/10.3390/electronics11030416
