Orientation Detection System Based on Edge-Orientation Selective Neurons

Abstract

1. Introduction

2. Methods

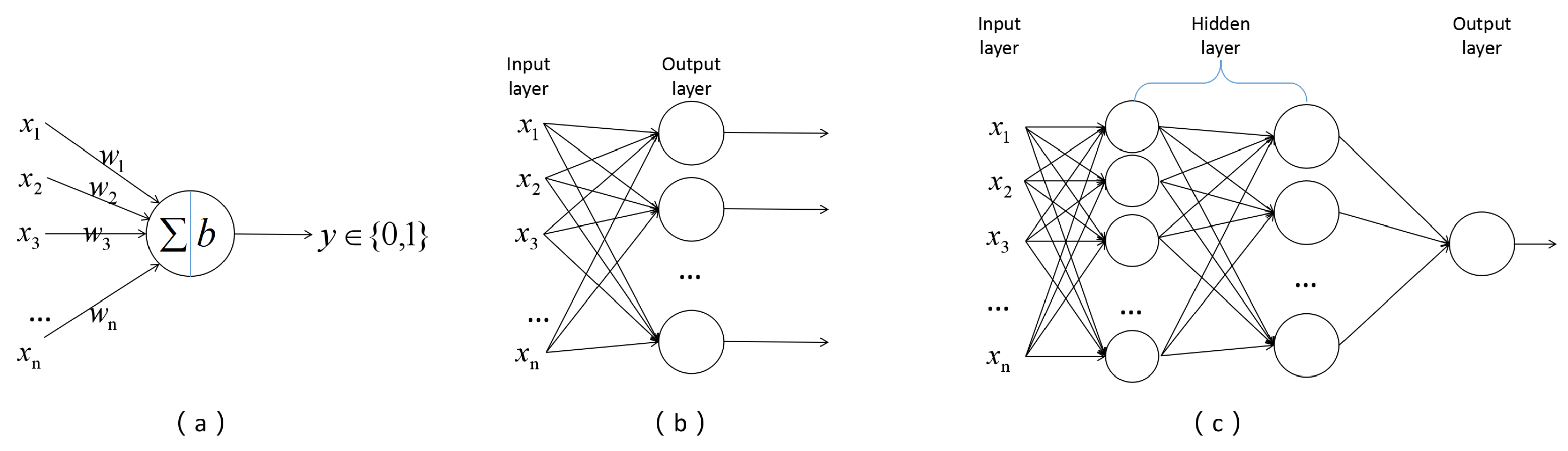

2.1. McCulloch-Pitts Neuron and Perceptron

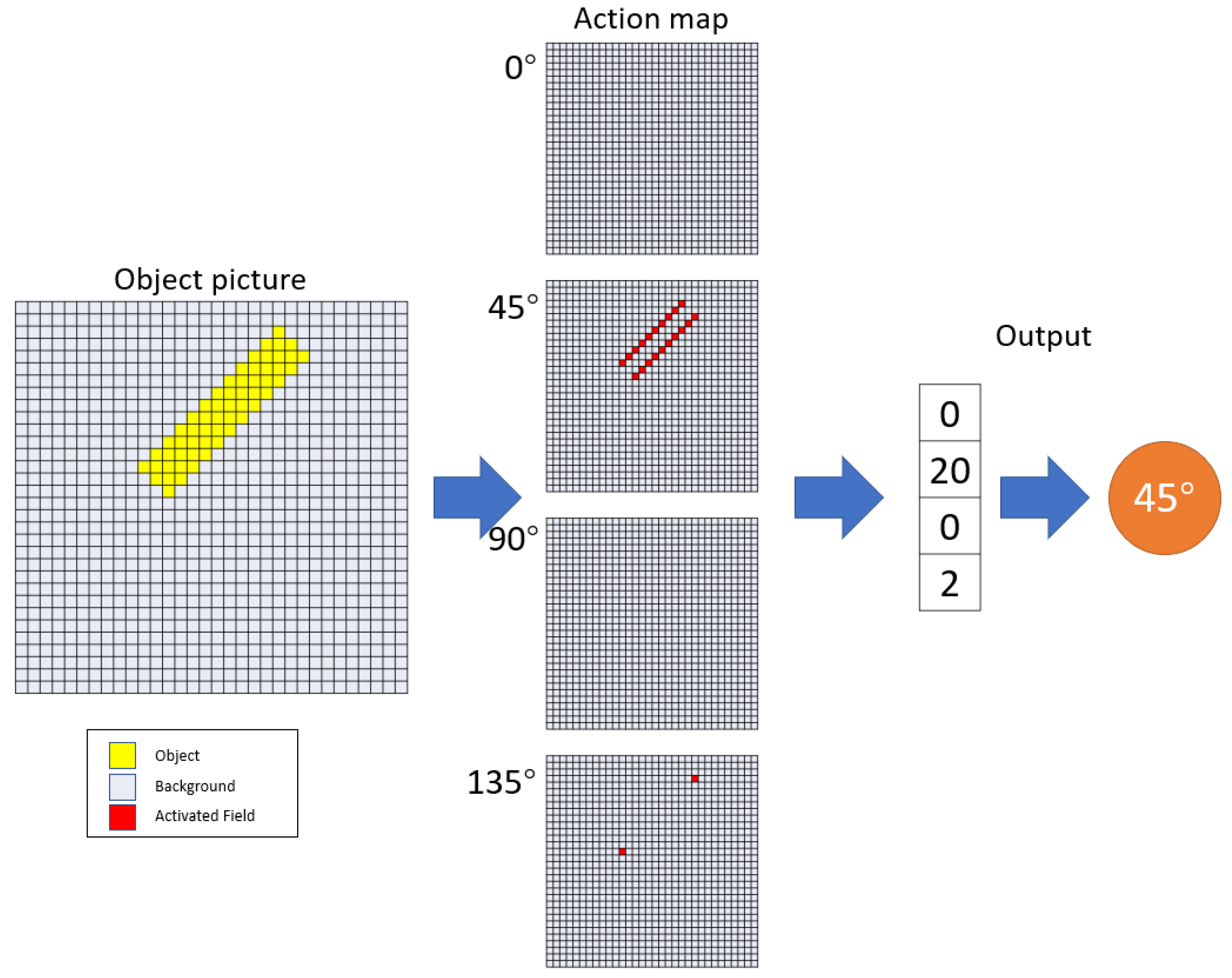
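The heading above names two classic models. The Python sketch below is a minimal illustration of both, not code from the paper. The first is a McCulloch-Pitts unit whose weights and threshold are set by hand. The second is Rosenblatt's perceptron rule, which learns its weights from labelled examples. The function names, the AND example, the learning rate and the epoch count are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def threshold_unit(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Step activation: output 1 if w . x + bias >= 0, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0


def mcculloch_pitts_and(x1: int, x2: int) -> int:
    """McCulloch-Pitts unit with hand-set weights (1, 1) and threshold 2: computes AND."""
    return threshold_unit([x1, x2], [1.0, 1.0], bias=-2.0)


def perceptron_fit(
    samples: List[Sequence[float]], labels: List[int], epochs: int = 10, lr: float = 1.0
) -> Tuple[List[float], float]:
    """Perceptron rule: shift each weight by lr * (target - prediction) * input."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - threshold_unit(x, weights, bias)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias
```

On the four AND examples, `perceptron_fit` converges within a few epochs because AND is linearly separable. The perceptron convergence theorem only guarantees convergence for linearly separable data; a single unit can never learn XOR, for example.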

2.2. Local Edge-Orientation Detection Neuron

2.3. Global Edge-Orientation Detection Neuron

- (1) Each local neuron receives nine inputs from the photoreceptors in its receptive field. Its weights are set according to the distribution of those inputs.
- (2) Within each local receptive field, four neurons are defined as edge-orientation-selective neurons. Each is tuned to a different orientation, so together they detect the orientation of an object's edges.
- (3) The outputs of the four selective neurons feed four ladders that act as a sum-pooling layer. Each ladder sums the effective outputs of the preceding step, that is, it counts how many neurons of its type were activated.
- (4) A specialized cell, playing the role of a complex cell in Hubel’s theory, compares the four counts and produces the final orientation detection result.
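The four steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation, and it rests on several assumptions. The input is a binary image, and the four preferred orientations are 0°, 45°, 90° and 135°. The input-dependent weights of step (1) are replaced by a fixed, hypothetical firing rule in `local_neuron`. The names `ORIENTATIONS`, `local_neuron` and `detect_orientation` are illustrative.

```python
from typing import Dict, List, Sequence, Tuple

Image = List[List[int]]   # binary image: 1 = object pixel, 0 = background
Offset = Tuple[int, int]  # (row, column) step inside the 3x3 receptive field

# Assumed geometry (hypothetical): for each preferred orientation, the two
# neighbours lying along it and the two lying across it. Rows grow downward,
# so 45 degrees runs from bottom-left to top-right.
ORIENTATIONS: Dict[int, Tuple[Sequence[Offset], Sequence[Offset]]] = {
    0: (((0, -1), (0, 1)), ((-1, 0), (1, 0))),
    45: (((-1, 1), (1, -1)), ((-1, -1), (1, 1))),
    90: (((-1, 0), (1, 0)), ((0, -1), (0, 1))),
    135: (((-1, -1), (1, 1)), ((-1, 1), (1, -1))),
}


def local_neuron(patch: Image, along: Sequence[Offset], across: Sequence[Offset]) -> int:
    """Steps (1)-(2): one edge-orientation-selective neuron on a 3x3 patch (9 inputs).

    Assumed rule: fire if the centre and both neighbours along the preferred
    orientation are object pixels and at least one neighbour across it is
    background, i.e. the centre sits on an edge running in that direction.
    """
    if patch[1][1] != 1:
        return 0
    on_line = all(patch[1 + dr][1 + dc] == 1 for dr, dc in along)
    at_edge = any(patch[1 + dr][1 + dc] == 0 for dr, dc in across)
    return int(on_line and at_edge)


def detect_orientation(img: Image) -> Tuple[int, Dict[int, int]]:
    """Steps (3)-(4): sum-pool activations per orientation, then pick the largest."""
    h, w = len(img), len(img[0])
    # Zero-pad by one pixel so border pixels also get a full 3x3 receptive field.
    padded = [[0] * (w + 2)] + [[0] + row + [0] for row in img] + [[0] * (w + 2)]
    counts = {angle: 0 for angle in ORIENTATIONS}  # one sum-pooling "ladder" each
    for r in range(h):
        for c in range(w):
            patch = [padded[r + i][c:c + 3] for i in range(3)]
            for angle, (along, across) in ORIENTATIONS.items():
                counts[angle] += local_neuron(patch, along, across)
    # Complex-cell-like comparison; ties go to the first angle in ORIENTATIONS.
    best = max(counts, key=counts.get)
    return best, counts
```

Consider a 7 × 9 image containing a horizontal bar three pixels tall and seven pixels wide. For this input, `detect_orientation` returns `(0, {0: 10, 45: 0, 90: 2, 135: 0})`. The top and bottom rows of the bar drive the 0° neurons, while only the two end pixels of the middle row drive the 90° neurons. Swapping in neurons whose weights depend on the input distribution, as step (1) describes, would change only `local_neuron`.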

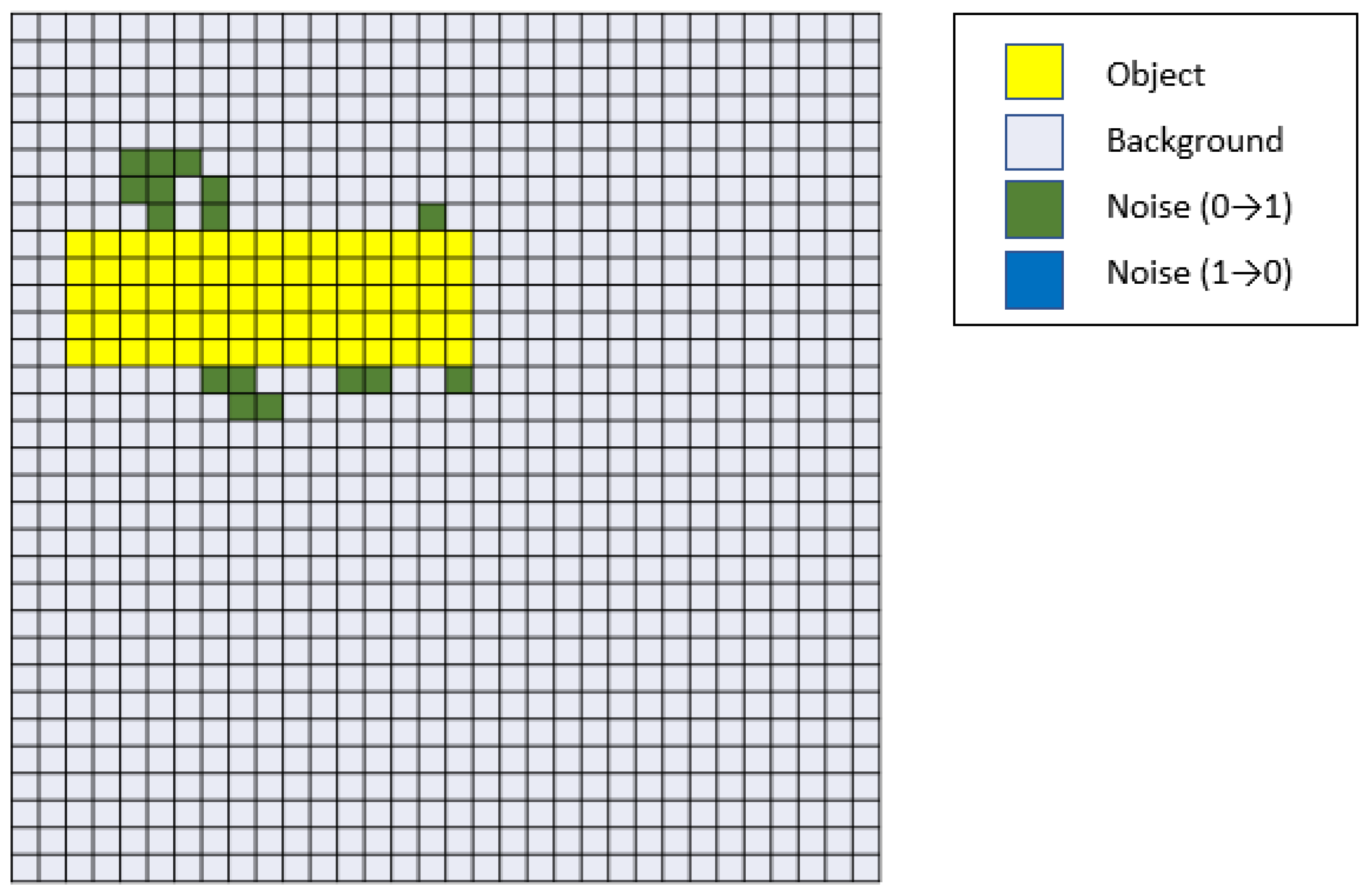

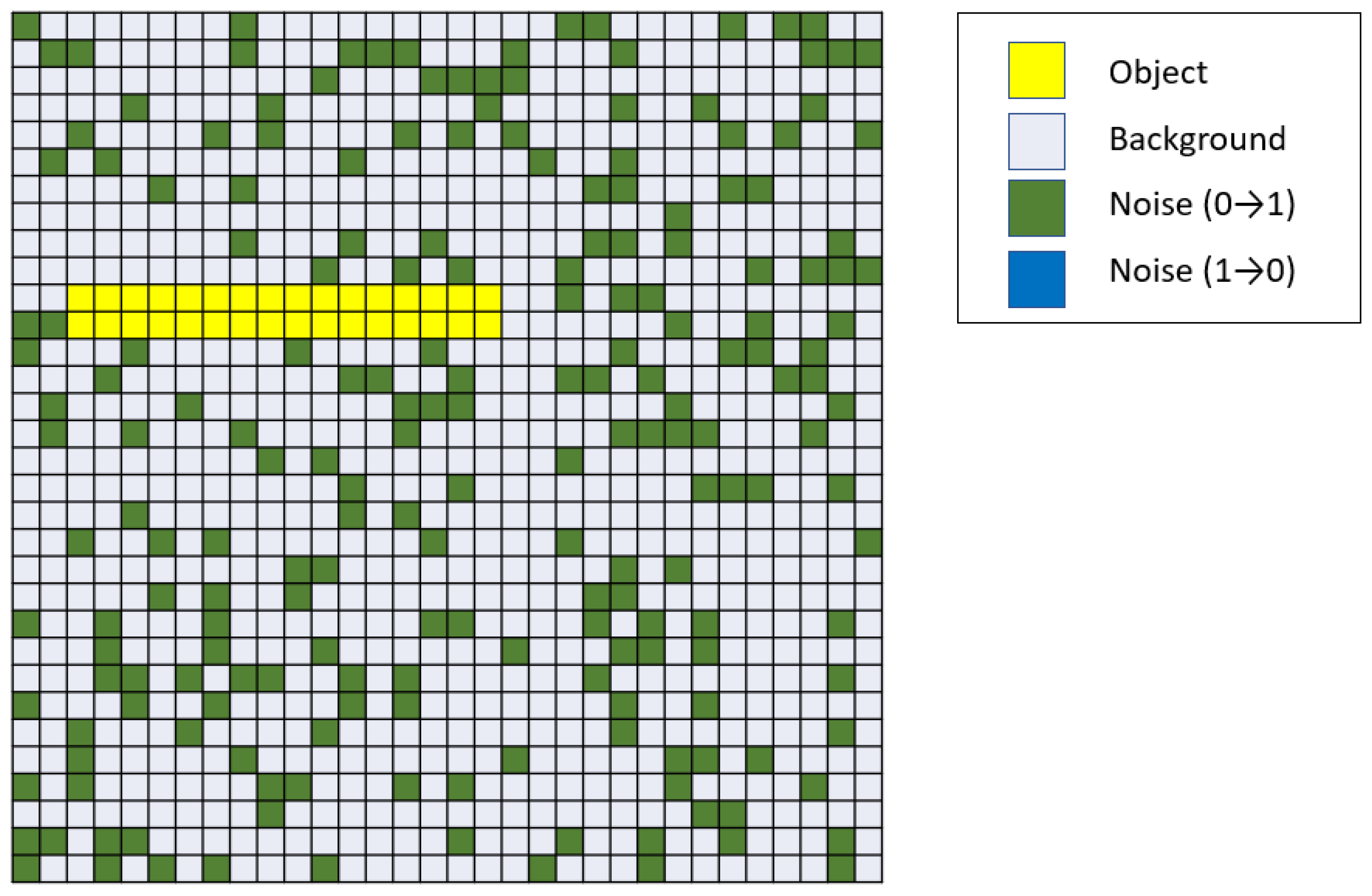

3. Simulation Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Todo, Y.; Tang, Z.; Todo, H.; Ji, J.; Yamashita, K. Neurons with multiplicative interactions of nonlinear synapses. Int. J. Neural Syst. 2019, 29, 1950012.

- Medina, J. Brain Rules: 12 Principles for Surviving and Thriving at Work, Home, and School; ReadHowYouWant: Sydney, Australia, 2011.

- Fiske, S.T.; Taylor, S.E. Social Cognition; McGraw-Hill Book Company: New York, NY, USA, 1991.

- Vanston, J.E.; Strother, L. Sex differences in the human visual system. J. Neurosci. Res. 2017, 95, 617–625.

- Namboodiri, V.M.K.; Huertas, M.A.; Monk, K.J.; Shouval, H.Z.; Shuler, M.G.H. Visually cued action timing in the primary visual cortex. Neuron 2015, 86, 319–330.

- Hubel, D.H.; Wiesel, T.N. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962, 160, 106.

- Hubel, D.H.; Wiesel, T.N. Shape and arrangement of columns in cat’s striate cortex. J. Physiol. 1963, 165, 559.

- Hubel, D.H.; Wiesel, T.N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 1959, 148, 574.

- Hubel, D.H. Exploration of the primary visual cortex, 1955–1978. Nature 1982, 299, 515–524.

- Hubel, D.H.; Wiesel, T.N. Receptive fields and functional architecture of monkey striate cortex. J. Physiol. 1968, 195, 215–243.

- Baylor, D.; Hodgkin, A.; Lamb, T. The electrical response of turtle cones to flashes and steps of light. J. Physiol. 1974, 242, 685–727.

- Vallerga, S.; Covacci, R.; Pottala, E. Artificial cone responses: A computer-driven hardware model. Vis. Res. 1980, 20, 453–457.

- Kwon, S.M.; Cho, S.W.; Kim, M.; Heo, J.S.; Kim, Y.H.; Park, S.K. Environment-adaptable artificial visual perception behaviors using a light-adjustable optoelectronic neuromorphic device array. Adv. Mater. 2019, 31, 1906433.

- Kadota, T.; Mizote, M.; Kadota, K. Synaptic spinules attendant on post-tetanic potentiation in cat sympathetic ganglion. Proc. Jpn. Acad. Ser. B 1996, 72, 48–51.

- Baxter, L.C.; Coggins, J.M. Supervised pixel classification using a feature space derived from an artificial visual system. In Proceedings of the Intelligent Robots and Computer Vision IX: Algorithms and Techniques, SPIE, Orlando, FL, USA, 2–4 April 1991; Volume 1381, pp. 459–469.

- Li, B.; Todo, Y.; Tang, Z. The Mechanism of Orientation Detection Based on Local Orientation-Selective Neuron. In Proceedings of the 2021 6th International Conference on Computational Intelligence and Applications (ICCIA), Xiamen, China, 11–13 June 2021; pp. 195–199.

- Francis, P.J.; Wills, B.J. Introduction to principal components analysis. arXiv 1999, arXiv:astro-ph/9905079.

- Veeser, S.; Cumming, D. Object Position and Orientation Detection System. U.S. Patent 9,536,163, 3 January 2017.

- Knutsson, H. Filtering and Reconstruction in Image Processing. Ph.D. Thesis, Linköping University Electronic Press, The Institute of Technology at Linköping University, Linköping, Sweden, 1982.

- Veeser, S.; Cumming, D. Learning orientation-estimation convolutional neural network for building detection in optical remote sensing image. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, Australia, 10–13 December 2018; pp. 1–8.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Burr, D.; Thompson, P. Motion psychophysics: 1985–2010. Vis. Res. 2011, 51, 1431–1456.

- Li, B.; Todo, Y.; Tang, Z. Artificial Visual System for Orientation Detection Based on Hubel–Wiesel Model. Brain Sci. 2022, 12, 470.

- Zhu, M.M.; Xu, Y.L.; Ma, H.Q. Edge Detection Based On the Characteristic of Primary Visual Cortex Cells. J. Phys. Conf. Ser. 2018, 960, 012052.

- Kandel, E.R.; Schwartz, J.H.; Jessell, T.M.; Siegelbaum, S.; Hudspeth, A.J.; Mack, S. Principles of Neural Science; McGraw-Hill: New York, NY, USA, 2000; Volume 4.

- McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 5, 115–133.

- Collobert, R.; Bengio, S. Links between perceptrons, MLPs and SVMs. In Proceedings of the Twenty-First International Conference on Machine Learning, Banff, AB, Canada, 4–8 July 2004; p. 23.

- Minsky, M.; Papert, S. Perceptrons: An Introduction to Computational Geometry; MIT Press: Cambridge, MA, USA, 1969.

- Rosenblatt, F. Principles of Neurodynamics. Perceptrons and the Theory of Brain Mechanisms; Technical Report; Cornell Aeronautical Lab Inc.: Buffalo, NY, USA, 1961.

- Antinucci, P.; Suleyman, O.; Monfries, C.; Hindges, R. Neural mechanisms generating orientation selectivity in the retina. Curr. Biol. 2016, 26, 1802–1815.

- Henning, M.; Ramos-Traslosheros, G.; Gür, B.; Silies, M. Populations of local direction–selective cells encode global motion patterns generated by self-motion. Sci. Adv. 2022, 8, eabi7112.

| Object Size | Correctly Detected | Number of Pictures | Accuracy |
|---|---|---|---|
| Small objects | 10,000 | 10,000 | 100.00% |
| Large objects | 10,000 | 10,000 | 100.00% |

| Object Size | Noise Size | EOAVS | CNN | EfN |
|---|---|---|---|---|
| Small size | 1 | 99.57% | 72.85% | 69.58% |
| | 2 | 88.75% | 66.00% | 67.83% |
| Large size | 1 | 100.00% | 100.00% | 100.00% |
| | 2 | 100.00% | 99.95% | 97.66% |
| | 4 | 99.70% | 99.40% | 94.11% |
| | 8 | 96.66% | 77.40% | 83.92% |
| | 16 | 76.23% | 59.60% | 73.23% |

| Object Size | Noise Size | EOAVS | CNN | EfN |
|---|---|---|---|---|
| Small size | 1 | 97.70% | 98.35% | 95.03% |
| | 2 | 93.32% | 86.05% | 88.47% |
| | 4 | 86.77% | 71.00% | 79.17% |
| | 8 | 76.08% | 49.85% | 59.53% |
| Large size | 1 | 100.00% | 100.00% | 100.00% |
| | 2 | 100.00% | 99.94% | 96.90% |
| | 4 | 99.28% | 99.20% | 88.80% |
| | 8 | 96.14% | 77.70% | 86.43% |
| | 16 | 89.83% | 61.60% | 82.85% |

| Object Size | Noise Percentage | EOAVS | CNN | EfN |
|---|---|---|---|---|
| Small size | 5% | 91.41% | 45.75% | 34.07% |
| | 10% | 77.05% | 33.65% | 29.80% |
| | 15% | 63.51% | 31.70% | 28.20% |
| | 20% | 52.34% | 37.95% | 24.20% |
| Large size | 5% | 99.87% | 48.95% | 40.63% |
| | 10% | 98.51% | 48.70% | 38.52% |
| | 15% | 93.58% | 48.40% | 37.97% |
| | 20% | 82.17% | 36.20% | 35.44% |

| Orientation Detection System | Device | Model | Duration |
|---|---|---|---|
| EOAVS | CPU | Intel(R) Xeon(R) CPU @ 2.20 GHz | 1 min 17 s |
| CNN | GPU | NVIDIA Tesla P100 | 5 min 3 s |
| EfN | GPU | NVIDIA Tesla P100 | 4 min 47 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, T.; Li, B.; Todo, Y. Orientation Detection System Based on Edge-Orientation Selective Neurons. Electronics 2022, 11, 3946. https://doi.org/10.3390/electronics11233946
