| Item | Variable | Group | Question (EN/DE) | Response options |
|---|---|---|---|---|
| AQ1 | V33 | A/E | Errors in an unsupervised system are always present. How easy was it to determine the error baseline?/Fehler sind in einem unüberwachten System immer präsent. Wie schwierig war es, den Fehlerbasiswert zu ermitteln? | Rate from 1 = not easy/nicht einfach zu ermitteln to 5 = very easy/sehr einfach zu ermitteln |
| AQ2 | V34 | A/E | How easy was it to distinguish between good, bad, and non-functional context models?/Wie einfach war es, zwischen guten, schlechten und nicht funktionierenden Modellen zu unterscheiden? | Rate from 1 = not easy/nicht einfach zu unterscheiden to 5 = very easy/sehr einfach zu unterscheiden |

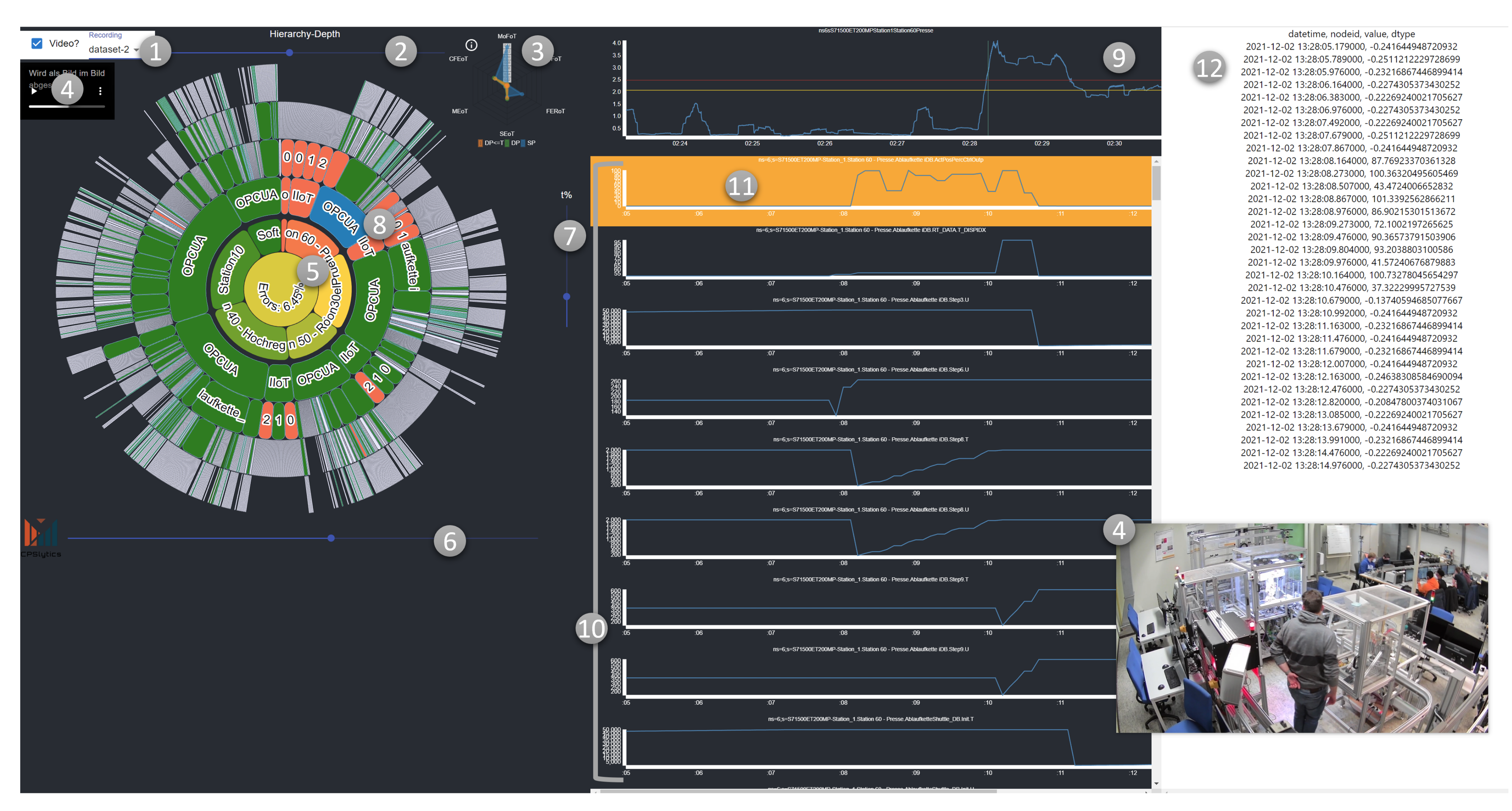

| Item | Variable | Group | Question (EN/DE) | Response options |
|---|---|---|---|---|
| AQ3 | V1 | A/E | Rate the complexity of the dashboard./Bewerte die Komplexität des Dashboards. | Rate from 1 = very complex/sehr komplex to 5 = no complexity at all/keinerlei Komplexität |
| | V3 | A/E | Why did you choose this rating? (optional, 1–2 sentences)/Warum bewerten Sie so? (optional, 1–2 Sätze) | free form |
| | V4 | A/E | What is hard to understand? (optional, 1–2 sentences)/Was ist schwer zu verstehen? (optional, 1–2 Sätze) | free form |
| | V5 | A/E | What is easy to understand? (optional, 1–2 sentences)/Was ist gut zu verstehen? (optional, 1–2 Sätze) | free form |

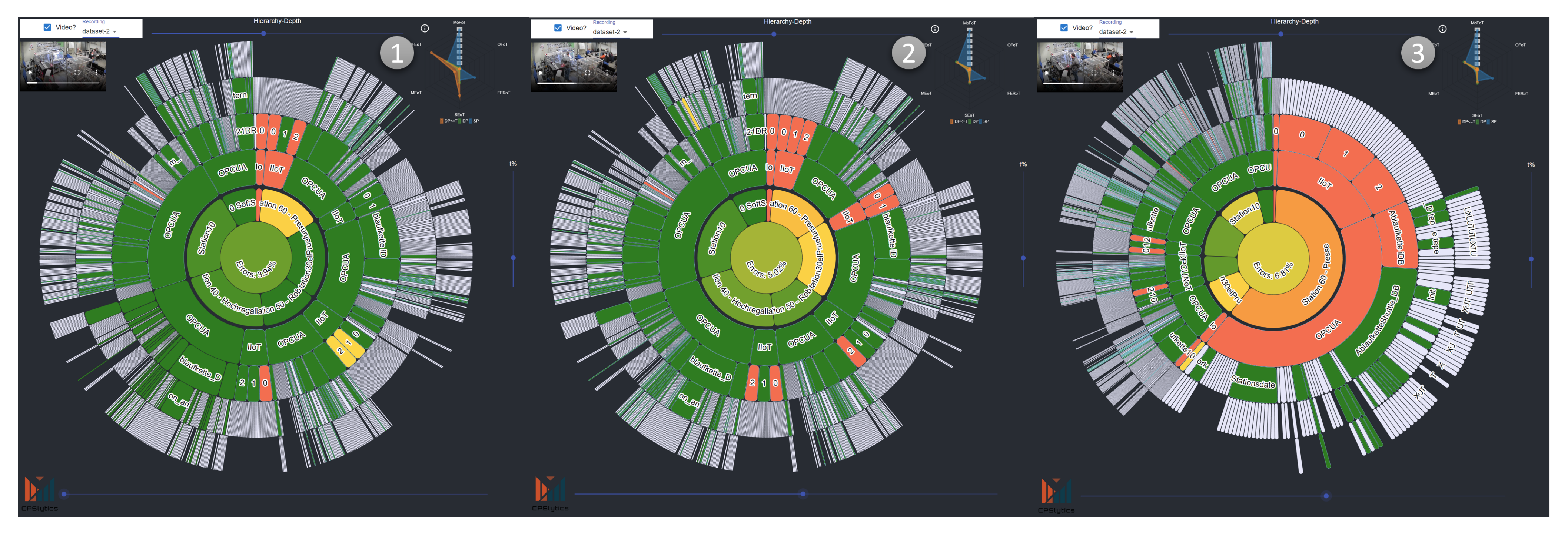

| Item | Variable | Group | Question (EN/DE) | Response options |
|---|---|---|---|---|
| AQ4 | V2 | A/E | Is the visual structure of the central visualization understandable?/Ist die visuelle Struktur der zentralen Visualisierung verständlich? | Rate from 1 = not understandable/nicht verständlich to 5 = fully understandable/vollkommen verständlich |

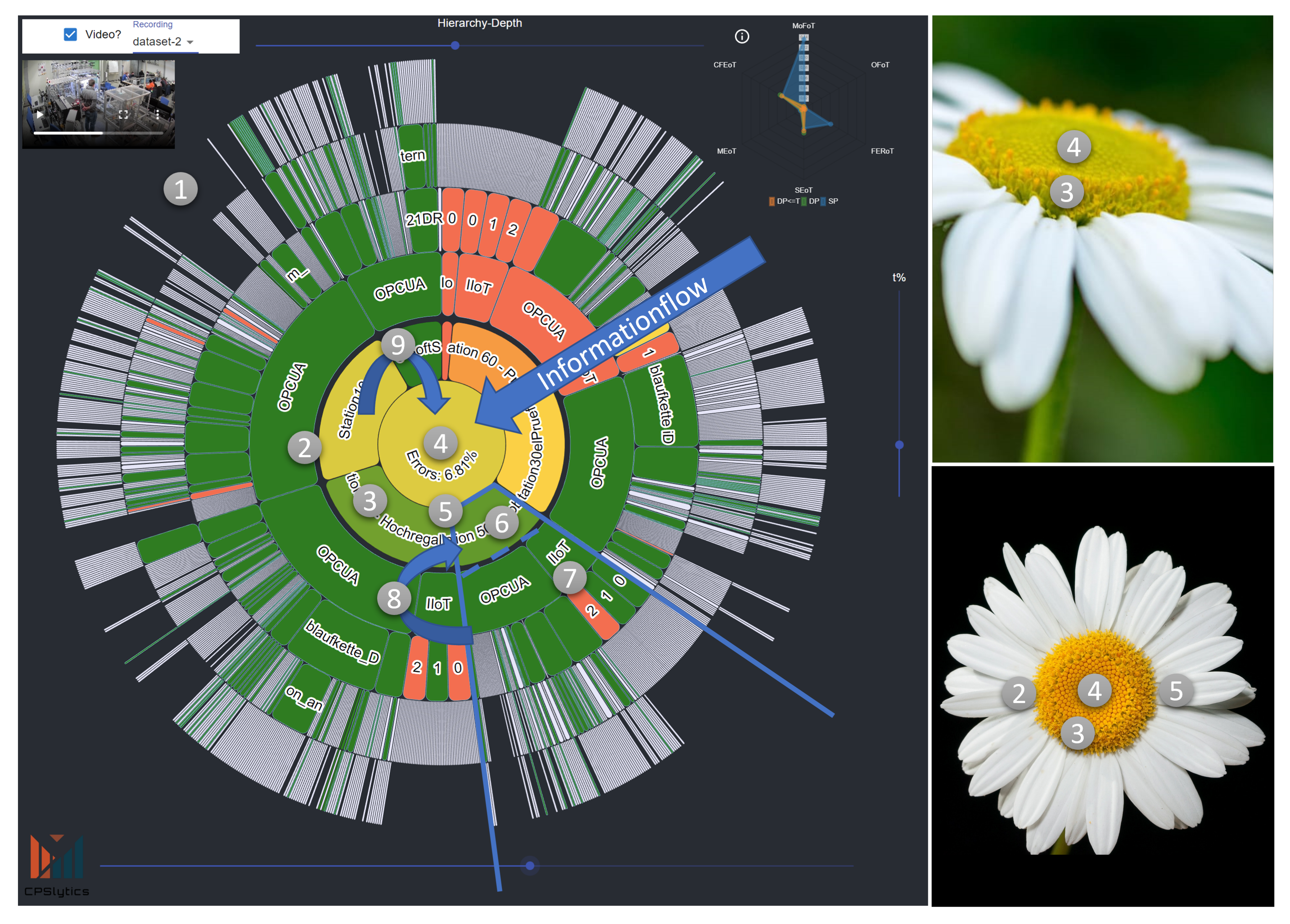

| Item | Variable | Group | Question (EN/DE) | Response options |
|---|---|---|---|---|
| | V86 | A/E | Can a connection be seen between the smart factory and the visualization? How and why? (optional, 1–2 sentences)/Ist es möglich, eine Verbindung zwischen der Visualisierung und dem Aufbau der smarten Fabrik zu erkennen? Wie und warum? (optional, 1–2 Sätze) | free form |
| AQ5 | V6 | A/E | Is the navigation throughout the dashboard clear?/Ist die Navigation durch das Dashboard klar? | Rate from 1 = not clear/unklar to 5 = fully clear/vollständig klar |
| AQ6 | V7 | A/E | After the first training, how well do you think you could find faults with the system?/Nach dem ersten Training: Wie gut würden Sie einschätzen, mit dem System Fehler zu finden? | Rate from 1 = I would not find any faults/Ich würde keine Fehler finden to 5 = I would definitely find faults/Ich würde garantiert Fehler finden |
| CQ1 | V9 | A/E | How visible are dependencies throughout a specific machinery context hierarchy?/Wie gut sind Abhängigkeiten durch die spezifische Maschinenkontext-Hierarchie sichtbar? | Rate from 1 = I cannot see any dependencies/Ich erkenne keine Abhängigkeiten to 5 = the dependencies are very clearly visible/die Abhängigkeiten sind sehr klar zu erkennen |
| CQ2 | V8 | A/E | How visible are inter-machinery dependencies throughout the context hierarchy?/Wie gut sind Inter-Maschinen-Abhängigkeiten durch die Kontext-Hierarchie sichtbar? | Rate from 1 = I cannot see any dependencies/Ich erkenne keine Abhängigkeiten to 5 = the dependencies are very clearly visible/die Abhängigkeiten sind sehr klar zu erkennen |
| CQ3 | V10 | A/E | How visible are dependencies across the whole production line throughout the context hierarchy?/Wie gut sind Abhängigkeiten in der Produktionslinie durch die Kontext-Hierarchie sichtbar? | Rate from 1 = I cannot see any dependencies/Ich erkenne keine Abhängigkeiten to 5 = the dependencies are very clearly visible/die Abhängigkeiten sind sehr klar zu erkennen |
| CQ4 | V11 | A/E | How understandable is the central information aggregation (traffic light) for the machinery contexts and the production line context?/Wie verständlich ist die zentrale Informationsaggregation (Ampel) für die Maschinenkontexte und den Produktionslinienkontext? | Rate from 1 = I do not understand the information aggregation/Ich verstehe die Informationsaggregation nicht to 5 = it is clear how the information aggregation works/Es ist vollkommen klar, wie die Informationsaggregation funktioniert |

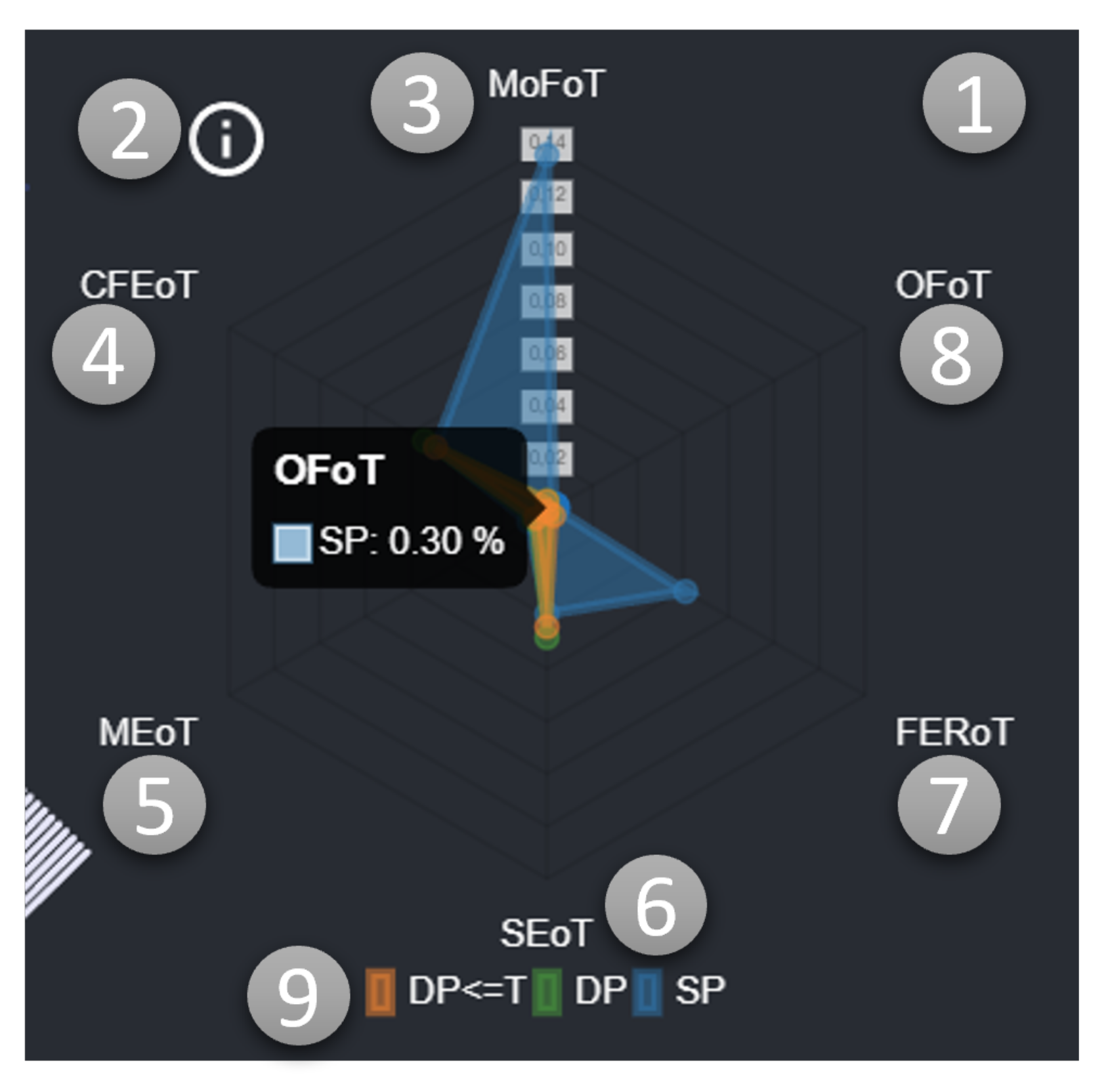

| Item | Variable | Group | Question (EN/DE) | Response options |
|---|---|---|---|---|
| CQ5 | V12 | A/E | How well is the model performance reflected by the central visualization?/Wie gut reflektiert die zentrale Visualisierung die Model Performance? | Rate from 1 = I cannot see a relationship between visualization and model performance/Ich kann keine Beziehung zwischen Visualisierung und Model Performance erkennen to 5 = the relationship is clear and understandable/Die Beziehung ist klar gegeben und ist verständlich |
| CQ6 | V13 | A/E | How well can the model performance be explained by the surveyed window values?/Wie gut ist die Model Performance durch die überwachten Fensterwerte erklärbar? | Rate from 1 = model performance cannot be explained/Die Model Performance kann nicht erklärt werden to 5 = model performance is fully explainable by the surveyed values/Die Model Performance ist vollständig erklärbar anhand der beobachteten Werte |
| DQ1 | V14 | A/E | Is the central information aggregation (traffic light) helpful for finding faults?/Ist die zentrale Informationsaggregation (Ampel) eine Hilfe beim Finden von Fehlern? | Rate from 1 = no, not at all/nicht im Geringsten to 5 = yes, it helps me find faults quickly/ja, es hat mir geholfen, Fehler schnell zu finden |
| DQ2 | V15 | A/E | Is the visualization of contexts and the context hierarchy helpful for finding faults?/Ist die Visualisierung der Kontexte und der Kontext-Hierarchie eine Hilfe beim Finden von Fehlern? | Rate from 1 = the visualization does not help/Die Visualisierung hilft nicht to 5 = the visualization definitely helps to find faults/Die Visualisierung hilft, Fehler zu finden |
| | V16 | A/E | What parts of the dashboard helped to identify the fault? (optional, 1–2 sentences)/Welche Teile des Dashboards haben geholfen, den Fehler zu identifizieren? (optional, 1–2 Sätze) | free form |
| DQ3 | V17 | A/E | How difficult was it to validate the fault using the model performance of one or more contexts?/Wie schwierig war es, den Fehler anhand der Model Performance von einem oder mehreren Kontexten zu validieren? | Rate from 1 = very difficult/sehr schwer to 5 = very easy/sehr einfach |
| | V25 | A/E | Multiple neural networks are used for detection. How did you find a valid, functional model? (optional, 2–3 sentences)/Mehrere neuronale Netzwerke werden zur Detektion benutzt. Wie haben Sie ein valides, funktionierendes Modell gefunden? (optional, 2–3 Sätze) | free form |
| DQ4 | V18 | A/E | Is the visualization of the real log values, together with the surveyed values and the model performance, helpful for validating the fault?/Ist die Visualisierung der realen Log-Daten, zusammen mit den überwachten Werten und der Model Performance, eine Hilfe, um den Fehler zu validieren? | Rate from 1 = no help/keine Hilfe to 5 = all three are equally helpful/alle drei sind gleichermaßen hilfreich |
| | V26 | A/E | What helped the most and why? (optional, 2–3 sentences)/Was hat dabei am meisten geholfen und warum? (optional, 2–3 Sätze) | free form |
| DQ5 | V19 | A/E | Has the transparency of the analysis (model performance, surveyed values, log values, metrics) increased your trust in the system's results?/Hat die Transparenz in der Analyse (Model Performance, überwachte Werte, Log-Daten, Metriken) das Vertrauen in das System erhöht? | Rate from 1 = it did not help me trust the system/Es war keine Hilfe, dem System zu vertrauen to 5 = it was very helpful for my level of trust/Es hat sehr geholfen für das Vertrauen |
| DQ6 | V20 | A/E | Has the transparency of the analysis (model performance, surveyed values, log values, metrics) helped to find the context fault?/Hat die Transparenz in der Analyse (Model Performance, überwachte Werte, Log-Daten, Metriken) geholfen, den Kontext-Fehler zu finden? | Rate from 1 = transparency did not help/Transparenz hat nicht geholfen to 5 = transparency helped a lot/Transparenz hat sehr geholfen |
| EQ1 | V21 | A/E | Has the transparency of the analysis (surveyed values, log values, metrics) helped to validate the model performance?/Hat die Transparenz in der Analyse (Model Performance, überwachte Werte, Log-Daten, Metriken) geholfen, die Model Performance zu validieren? | Rate from 1 = transparency did not help/Transparenz hat nicht geholfen to 5 = transparency helped a lot/Transparenz hat sehr geholfen |
| EQ2 | V22 | A/E | Has the visualization of the performance metrics (history, day, shift) helped to increase your trust in the displayed information?/Hat die Visualisierung der Performancemetriken (historisch, Tages- und Schicht-bezogen) geholfen, das Vertrauen in die dargestellten Informationen zu erhöhen? | Rate from 1 = visualization did not help/Die Visualisierung hat nicht geholfen to 5 = visualization helped a lot/Die Visualisierung hat sehr geholfen |
| | V28 | E | Why did you choose this rating? (optional, 1–2 sentences)/Warum bewerten Sie so? (optional, 1–2 Sätze) | free form |
| EQ3 | V23 | A/E | Suppose you had to make a guess: is the displayed information sufficient to prioritize the fault?/Wenn Sie raten müssten: Sind die dargestellten Informationen genug, um einen Fehler zu priorisieren? | Rate from 1 = the displayed information is not sufficient/Die dargestellte Information ist nicht genug to 5 = the displayed information is sufficient/Die dargestellte Information ist ausreichend |
| | V24 | A/E | What parts of the dashboard would help you prioritize the fault, and why? (optional, 2–3 sentences)/Welche Teile des Dashboards würden helfen, den Fehler zu priorisieren, und warum? (optional, 2–3 Sätze) | free form |
| EQ4 | V28 | A | After your first use: would you be able to find a fault chain, given enough time?/Nach der ersten Benutzung: Wäre es Ihnen möglich, mit genug Zeit eine Fehlerkette zu finden? | Rate from 1 = no, not at all/nein, nicht im Geringsten to 5 = I would definitely find a fault chain/Ich würde definitiv eine Fehlerkette finden |
| | V29 | A | If you had to make a guess, what parts of the dashboard would be helpful for finding a fault chain, and why? (optional, 2–3 sentences)/Welche Teile des Dashboards wären hilfreich, eine Fehlerkette zu finden, wenn Sie raten müssten, und warum? (optional, 2–3 Sätze) | free form |
| EQ5 | V48 | E | If you were able to identify a fault chain, how difficult was it to find?/Wenn Sie eine Fehlerkette identifizieren konnten, wie schwer war es, die Fehlerkette zu finden? | Rate from 1 = very difficult/sehr schwer to 5 = the identification was easy/die Identifikation war einfach |
| | V49 | E | Which fault chain was found? (optional, 2–3 sentences)/Welche Fehlerkette wurde gefunden? (optional, 2–3 Sätze) | free form |
| | V50 | E | How did you find and validate the fault chain? (optional, 2–3 sentences)/Wie haben Sie die Fehlerkette gefunden und validiert? (optional, 2–3 Sätze) | free form |
| | V51 | E | What parts of the dashboard helped to find fault chains, and why? (optional, 2–3 sentences)/Welche Teile des Dashboards waren eine Hilfe, um die Fehlerketten zu finden, und warum? (optional, 2–3 Sätze) | free form |
| RQ1 | V44 | A/E | Is a fault present in the dataset?/Ist ein Fehler im Datensatz? | yes/ja, no/nein, I cannot say/Kann ich nicht sagen |
| RQ2 | V27 | A/E | What fault is present in the dataset?/Was für ein Fehler ist im Datensatz? | Missing Parts/Fehlendes Bauteil, Must be something else/Muss etwas anderes sein, No fault present/Kein Fehler vorhanden |
| | V31 | A/E | If you had to guess, what is the contextual fault, and why? (1–2 sentences)/Wenn Sie raten müssten, was ist der vorhandene Kontext-Fehler? (1–2 Sätze) | free form |
| | V30 | A/E | What helped you identify the dataset? (1–2 sentences)/Was half Ihnen, den Datensatz zu identifizieren? (1–2 Sätze) | free form |
| EQ6 | V83 | A/E | How confident are you that you have identified the dataset correctly?/Wie sicher sind Sie, dass Sie den Datensatz richtig identifiziert haben? | Rate from 1 = low confidence/gar nicht sicher to 5 = high confidence/sehr sicher |
| | V45 | A/E | What parts of the dashboard helped you build trust in your identification? (1–2 sentences)/Welche Teile des Dashboards haben Ihnen geholfen, Vertrauen in Ihre Identifikation aufzubauen? (1–2 Sätze) | free form |
| FQ1 | V32 | A/E | What was the impact of the missing video information channel?/Was hatte der fehlende Video-Informationskanal für einen Einfluss? | Rate from 1 = very high impact/hatte einen sehr großen Einfluss to 5 = no impact; the dataset could be identified with the other information sources/Keinerlei Einfluss. Der Datensatz konnte mit den anderen Datenquellen identifiziert werden |
| BQ1 | V35 | A/E | Errors in an unsupervised system are always present. How easy was it to determine the error baseline?/Fehler sind in einem unüberwachten System immer präsent. Wie schwierig war es, den Fehlerbasiswert zu ermitteln? | Rate from 1 = not easy/nicht einfach zu ermitteln to 5 = very easy/sehr einfach zu ermitteln |
| BQ2 | V36 | A/E | How easy was it to distinguish between good, bad, and non-functional context models?/Wie einfach war es, zwischen guten, schlechten und nicht funktionierenden Modellen zu unterscheiden? | Rate from 1 = not easy/nicht einfach zu unterscheiden to 5 = very easy/sehr einfach zu unterscheiden |
| BQ3 | V87 | A/E | Rate the complexity of the dashboard./Bewerte die Komplexität des Dashboards. | Rate from 1 = very complex/sehr komplex to 5 = no complexity at all/Keinerlei Komplexität |
| | V88 | A/E | Why did you choose this rating? (optional, 1–2 sentences)/Warum bewerten Sie so? (optional, 1–2 Sätze) | free form |
| | V89 | A/E | What is hard to understand? (optional, 1–2 sentences)/Was ist schwer zu verstehen? (optional, 1–2 Sätze) | free form |
| | V90 | A/E | What is easy to understand? (optional, 1–2 sentences)/Was ist gut zu verstehen? (optional, 1–2 Sätze) | free form |
| BQ4 | V91 | A/E | Is the visual structure of the central visualization understandable?/Ist der visuelle Aufbau der zentralen Visualisierung verständlich? | Rate from 1 = not understandable/nicht verständlich to 5 = fully understandable/vollkommen verständlich |
| | V92 | A/E | Can a connection be seen between the smart factory and the visualization? How and why? (optional, 1–2 sentences)/Ist es möglich, eine Verbindung zwischen der Visualisierung und dem Aufbau der smarten Fabrik zu erkennen? Wie und warum? (optional, 1–2 Sätze) | free form |
| BQ5 | V93 | A/E | Is the navigation throughout the dashboard clear?/Ist die Navigation durch das Dashboard klar? | Rate from 1 = not clear/unklar to 5 = fully clear/vollständig klar |
| BQ6 | V94 | A/E | After the fault cases, how well do you think you could find faults with the system?/Nach den Fehlerfällen: Wie gut würden Sie einschätzen, mit dem System Fehler zu finden? | Rate from 1 = I would not find any faults/Ich würde keine Fehler finden to 5 = I would definitely find faults/Ich würde garantiert Fehler finden |
| FQ2 | V95 | A/E | How good is the visualization at helping to find faults?/Wie gut hilft die Visualisierung bei der Fehlerfindung? | Rate from 1 = very bad/sehr schlecht to 5 = very good/sehr gut |
| FQ3 | V96 | A/E | How good are the contexts at helping to find and classify faults?/Wie gut helfen Kontexte bei der Fehlerfindung und -klassifizierung? | Rate from 1 = very bad/sehr schlecht to 5 = very good/sehr gut |
| FQ4 | V97 | A/E | How would you rate the potential of context-aware fault diagnosis for finding the origin of a fault?/Wie würden Sie das Potenzial einschätzen, die Fehlerursache durch die kontextbezogene Fehlerdiagnose zu finden? | Rate from 1 = no potential/kein Potenzial to 5 = very good potential/sehr großes Potenzial |
| | V98 | A/E | What led you to this rating? What opportunities and problems do you see? (optional, 1–3 sentences)/Was veranlasst Sie zu dieser Bewertung? Welche Chancen und Probleme sehen Sie dabei? (optional, 2–3 Sätze) | free form |
| FQ5 | V99 | A/E | How would you rate the usefulness of the current prototypical implementation of the context-aware fault diagnosis?/Wie würden Sie die Nützlichkeit der aktuellen prototypischen Implementierung bewerten? | Rate from 1 = not useful/nicht nützlich to 5 = very useful/sehr nützlich |
| | V46 | A/E | What do you like the most about the current implementation and why? (optional, 1–3 sentences)/Was mögen Sie am meisten an der aktuellen Implementierung und warum? (optional, 1–3 Sätze) | free form |
| | V47 | A/E | If you were granted three wishes, what would you change in the current implementation? (optional, 1–3 sentences)/Wenn Sie drei Wünsche frei hätten, was würden Sie an der aktuellen Implementierung ändern? (optional, 1–3 Sätze) | free form |

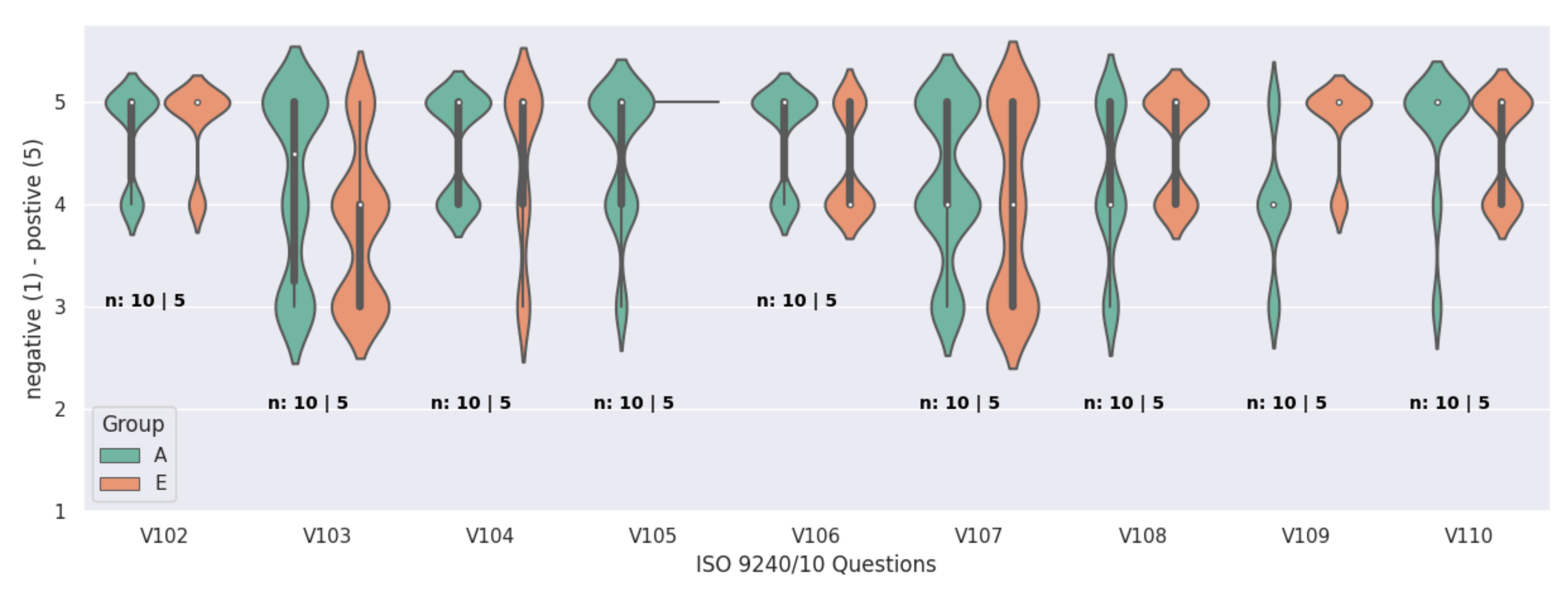

| Item | Variable | Group | Question (EN/DE) | Response options |
|---|---|---|---|---|
| | V102 | A/E | The functions implemented in the software support me in performing my work./Die implementierten Funktionen in der Software unterstützen mich dabei, meiner Arbeit nachzugehen. | Rate from 1 = no, not at all/nein, überhaupt nicht to 5 = yes, every feature is good to have/ja, es ist gut, jedes Feature zu haben |
| | V103 | A/E | Too many different steps need to be performed to deal with a given task./Es sind zu viele verschiedene Schritte nötig, um eine bestimmte Aufgabe zu lösen. | Rate from 1 = yes, there are too many steps/ja, es sind viel zu viele Schritte to 5 = no, the number of steps is right/nein, die Anzahl der Schritte ist genau richtig |
| | V104 | A/E | The software is well suited to the requirements of my work./Die Software entspricht den Anforderungen an meine Arbeit. | Rate from 1 = no, not at all/nein, überhaupt nicht to 5 = yes, the software suits the requirements/ja, die Software entspricht genau den Anforderungen |
| | V105 | A/E | The important commands required to perform my work are easy to find./Die wichtigen Befehle, um meine Arbeit zu erledigen, sind einfach zu finden. | Rate from 1 = no, not at all/nein, überhaupt nicht to 5 = yes, the commands are very easy to find/ja, die Befehle sind sehr einfach zu finden |
| | V106 | A/E | The presentation of the information on the screen supports me in performing my work./Die Präsentation der Informationen auf dem Bildschirm unterstützt mich, meine Arbeit zu erledigen. | Rate from 1 = no, not at all/nein, überhaupt nicht to 5 = yes, the presented information is supportive/ja, die dargestellten Informationen sind eine klare Unterstützung |
| | V107 | A/E | The possibilities for navigation within the software are adequate./Die Möglichkeiten der Navigation in der Software sind angemessen. | Rate from 1 = no, not at all/nein, überhaupt nicht to 5 = yes, totally adequate/ja, total angemessen |
| | V108 | A/E | When executing functions, I have the feeling that the results are predictable./Wenn ich Funktionen ausführe, habe ich das Gefühl, dass die Ergebnisse einschätzbar sind. | Rate from 1 = no, not at all/nein, überhaupt nicht to 5 = yes, the results are highly predictable/ja, die Ergebnisse sind stark vorhersehbar |
| | V109 | A/E | I needed a long time to learn how to use the software./Ich habe eine lange Zeit gebraucht, um zu lernen, wie die Software verwendet wird. | Rate from 1 = long time needed/lange Einarbeitung nötig to 5 = was quick to learn/war schnell zu erlernen |
| | V110 | A/E | It is easy for me to relearn how to use the software after a lengthy interruption./Es ist einfach für mich, die Software neu zu erlernen, wenn ich eine längere Pause einlegen müsste. | Rate from 1 = not an easy task/keine einfache Aufgabe to 5 = very easy to relearn the software/sehr einfach, die Software erneut zu erlernen |
| | V111 | A/E | I think I would like to use the system frequently./Ich denke, ich würde das System häufig nutzen. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V112 | A/E | I found the system unnecessarily complex./Ich finde das System unnötig komplex. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V113 | A/E | I thought the system was easy to use./Ich dachte, das System war einfach zu benutzen. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V114 | A/E | I think I would need the support of a technical person to be able to use this system./Ich denke, ich brauche die Unterstützung einer technischen Person, um das System nutzen zu können. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V115 | A/E | I found the various functions in this system were well integrated./Ich fand die verschiedenen Funktionen in das System gut integriert. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | A: V48/E: V29 | A/E | I thought there was too much inconsistency in this system./Ich dachte, da waren zu viele Inkonsistenzen in diesem System. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V116 | A/E | I would imagine that most people would learn to use this system very quickly./Ich würde mir vorstellen, die meisten Leute könnten das System sehr schnell erlernen. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V117 | A/E | I found the system very cumbersome to use./Ich fand es sehr mühselig, das System zu benutzen. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V118 | A/E | I felt very confident using the system./Ich fühlte mich sehr sicher in der Benutzung des Systems. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
| | V119 | A/E | I needed to learn a lot of things before I could get going with the system./Ich musste sehr viel lernen, bevor ich das System erstmalig nutzen konnte. | Rate from 1 = strongly disagree/stimme überhaupt nicht zu to 5 = strongly agree/stimme vollkommen zu |
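The ten statements starting at V111 (including the inconsistency item recorded as A: V48 / E: V29) follow the standard System Usability Scale (SUS) in wording and order, rated from 1 = strongly disagree to 5 = strongly agree. Assuming they are scored the usual SUS way, each odd, positively worded item contributes its rating minus 1, each even, negatively worded item contributes 5 minus its rating, and the sum is multiplied by 2.5 to give a score between 0 and 100. The following is a minimal sketch of that calculation; the function names and the dictionary record format are hypothetical and not part of the study material.

```python
from typing import List, Mapping, Sequence


def sus_item_order(group: str) -> List[str]:
    """Return the ten SUS variables in questionnaire order for group "A" or "E".

    Assumption (from the table): the inconsistency item is stored as V48 in
    group A and as V29 in group E; all other SUS items share one variable.
    """
    inconsistency = {"A": "V48", "E": "V29"}[group]
    return ["V111", "V112", "V113", "V114", "V115",
            inconsistency, "V116", "V117", "V118", "V119"]


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0 to 100) from ten 1-5 ratings given in SUS item order."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for position, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} at item {position} is outside 1-5")
        # Odd items are positively worded: contribution = rating - 1.
        # Even items are negatively worded: contribution = 5 - rating.
        total += (rating - 1) if position % 2 == 1 else (5 - rating)
    return total * 2.5


def sus_from_record(record: Mapping[str, int], group: str) -> float:
    """Score one participant's answers keyed by variable name (hypothetical format)."""
    return sus_score([record[var] for var in sus_item_order(group)])


if __name__ == "__main__":
    # Neutral answers (3 on every item) land on the scale midpoint.
    print(sus_score([3] * 10))  # 50.0
```

Because the inconsistency item is stored under a different variable in each group, `sus_item_order` looks it up per group instead of hard-coding a single list.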