1. Introduction

Since their introduction, convolutional neural networks have been extensively used in computer vision, with outstanding results in image classification [

1,

2,

3] and target recognition [

4,

5,

6]. Feature extraction is the most basic unit of a convolutional neural network. The fundamental unit then constructs a trainable multilayer network structure using a nonlinear activation function and downsampling. Among these component units, the activation function can retain the features extracted from the convolutional layer, remove redundant features, and map the feature data with a nonlinear function. Its presence is essential for the network to obtain nonlinearity [

7].

To further improve the performance of convolutional neural networks, an increasing number of activation functions have been proposed, which are usually divided into two categories: nonparametric activation functions and parametric activation functions. The commonly-used nonparametric activation functions are sigmoid [

8], tanh [

9,

10], and rectified linear unit (ReLU) [

11,

12]. Specifically, sigmoid and tanh are often referred to as saturated activation functions, but since saturated activation functions are prone to gradient disappearance and slow model learning, this has led to the birth of nonsaturated activation functions. ReLU is a classic nonsaturated option, whose simple but efficient nature has made it widely used in computer vision tasks and has shown superior performance by using the segmentation function. The positive part of the activation function is set as a constant function, and the negative part is set to zero. Thus, alleviating the gradient disappearance problem in neural networks. However, since ReLU makes the weight value of the input that is input into the hard saturated region to zero during training, which results in the problem of neuron death [

13], the actual output deviates from the desired output, thus affecting the performance of the neural network. The leaky rectified linear unit (LeakyReLU) [

14,

15,

16] proposed a solution to this problem by introducing a tunable parameter in the negative part of the ReLU, which assigns a nonzero slope to all negative values and makes the negative values participate in the computation of the neural network. The exponential linear unit (ELU) [

17,

18] function introduces the exponential operation into the negative part of the parametric activation function, which makes the mean value of the negative part of the data close to zero and the gradient closer to the natural gradient. This enhances the noise robustness of the network. The introduction of parameters in the activation function has become a mainstream research direction in order to evaluate the performance of activation functions and network models.

The above activation function treats the negative part and the positive part as two different parts for separate activation operations, severing the connection between the data. In this paper, the authors try to establish a direct connection between the two values by proposing transforming the negative features, which were initially forced to zero in ReLU, into positive features of lower importance to implement the feature extraction of the model and to construct an adaptive right-shifted activation function, which will address the problems of low accuracy of image classification in current image classification models by adjusting an adaptive parameter based on data, as well as a custom parameter to shift the ReLU activation function to the right and up. The overall change in the activation function is used to improve the convolutional neural network’s picture categorization performance. Furthermore, experiments show that using our proposed AOAF in a network model improves the average classification accuracy of four publicly available datasets.

The main contributions of this paper are summarized as follows: (1) This paper proposes a novel parametric activation function called AOAF. (2) A large number of experiments have demonstrated that AOAF achieves better average classification accuracy than other parametric and nonparametric activation functions in most image classification tasks.

The remainder of this paper is organized as follows.

Section 2 reviews a series of activation functions.

Section 3 introduces the proposed AOAF and its various parameters selected by the experiment. Then, experiments are introduced in

Section 4.

Section 5 presents conclusions.

2. Related Work

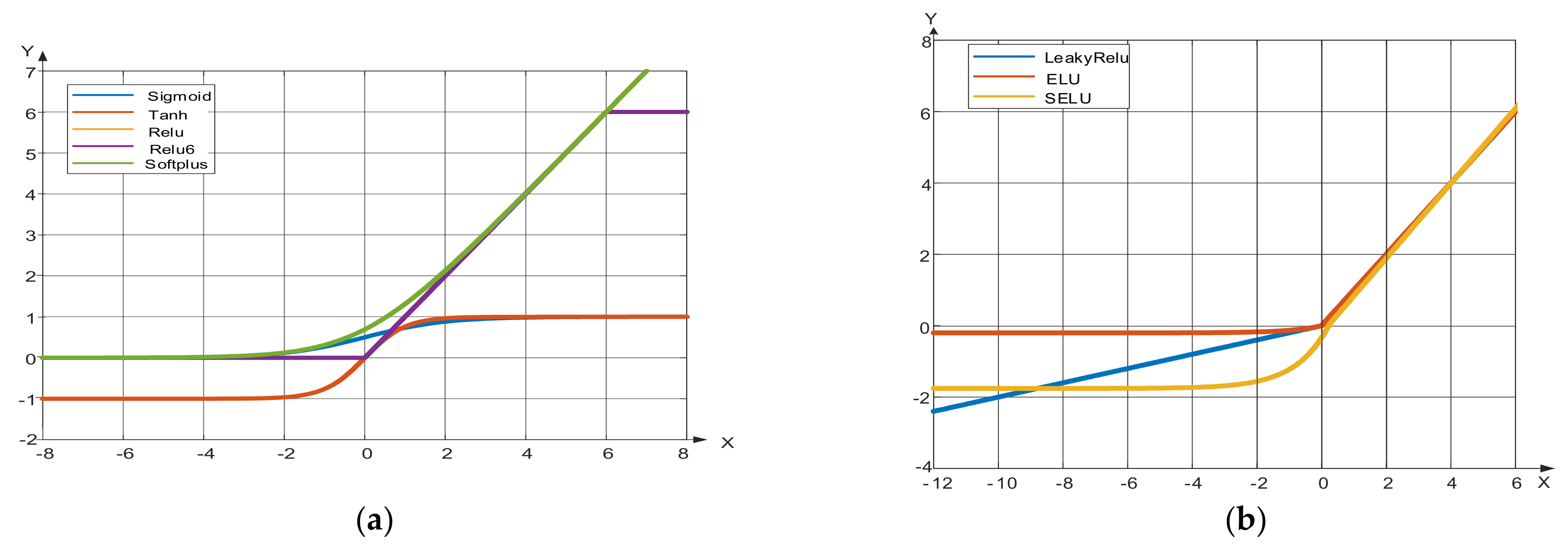

Sigmoid, tanh, Softplus [

19,

20], ReLU, and ReLU6 [

21] can be classified as nonparametric activation functions, and their function images are shown in

Figure 1a. By contrast, LeakyReLU, ELU, and SELU [

22,

23] can be regarded as parametric activation functions. These functions add different types of adjustable parameters to improve performance to varying extent, and their function images are shown in

Figure 1b.

The sigmoid function is described as Equation (1):

Sigmoid is a common S-shaped activation function, but its small gains on either side of the data easily cause the gradient to become zero when the input is very large or very small. Thus, it cannot complete the training of the network.

Tanh is described in Equation (2):

Tanh is a variant of sigmoid and an S-shaped activation function, which solves the problem of slowing down the convergence of sigmoid and improves the convergence speed. However, the same problem of gradient disappearance can occur.

ReLU is described in Equation (3):

ReLU is an unsaturated activation function consisting of a segmentation function that enhances the sparsity of the network by forcing negative inputs to zero, but it also leads to the problem of neuron death.

ReLU6 is described in Equation (4), which limits the maximum output value of ReLU to 6 and has been effective in some specific tasks. However, this approach still drives the negative half of the inputs zero, which means that it still suffers from neuron death.

Softplus can be seen as a smooth deformation of ReLU, lying in the continuous derivability of the definition domain and allowing the gradient to propagate throughout the definition domain. Softplus is described in Equation (5):

The parameterized activation function LeakyReLU makes the output range of the function change from negative infinity to positive infinity by giving an adjustable nonzero slope parameter to the negative part of ReLU. This means that LeakyReLU does not force the negative data to zero, but rather activates it by a function with a nonzero slope set with strong adaptability, whose function equation is shown in Equation (6). ELU also avoids making the output zero when the input is negative by introducing an exponential function with a parameter into the negative part of ReLU, which makes the activated mean close to zero. ELU makes the natural gradient closer to the unit natural gradient by reducing the effect of the bias offset. The SELU is improved by introducing a fixed parameter to the negative half-axis of the ELU and also introduces a fixed parameter to the ELU as a whole, as shown in Equation (8).

3. The Proposed Method

LeakyReLU, ELU, and SELU have improved ReLU by adding adjustable parameters [

24] and have achieved excellent results in improving the diagnostic accuracy of the network as well as alleviating the gradient disappearance problem. We believe that the reasons for the outstanding achievements of these abovementioned variants of ReLU are as follows:

- (1)

The introduction of adjustable parameters brings flexibility to the function;

- (2)

The characteristic of a lower bound without an upper bound alleviates the problem of gradient saturation to a certain extent;

- (3)

The utilization of the negative part.

Therefore, we introduce the idea of adaptive parameters as well as attention mechanisms based on ReLU and propose the adaptive offset activation function (AOAF), which transforms the original negative-valued features into positive-valued features of lower importance, in order to participate in the feature extraction of the model so that the proposed function still has a lower bound without an upper bound.

3.1. Right-Shifted Activation Function: AOAF

By mimicking how humans process things and information, the attention mechanism highlights important information by compressing it according to its importance and then amplifying the original information matrix. Combining the actual situation of ReLU and the idea of the attention mechanism, we shift the negative part of the input information upward to a smaller positive number, such that the negative part, which was forced to be zero in ReLU, now becomes non-important data compared to the positive part. This allows previously wasted data to be used in the subsequent operation of the network, which can avoid the missing information problem caused by discarding the negative information. The function is then shifted to the right after the upward shift, and the degree of the shift depends on the adaptive predefined parameters.

The formula for AOAF and its gradient function formula are shown in Equation (9) with Equation (10).

To combine all the features in the data tensor, we choose the mean values of the data tensor as the adaptive parameters, which are predefined.

3.2. Selection of Parameters in AOAF

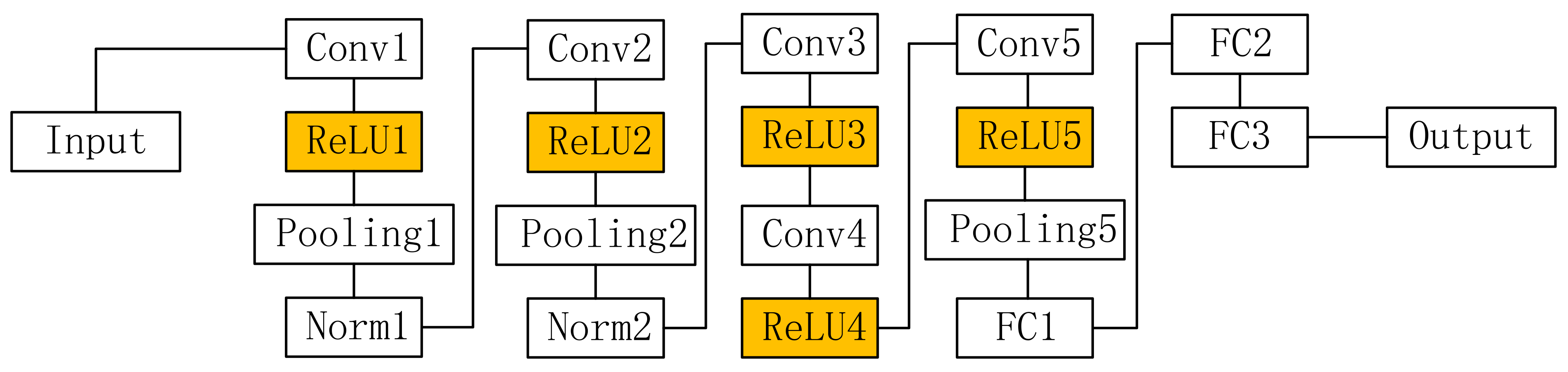

Ever since AlexNet [

25] was proposed in 2012, it has achieved remarkable success in computer vision applications. Its network structure is shown in

Figure 2 and contains eight layers—the first five being convolutional layers and the last three being fully connected layers. Due to the excellent performance of the AlexNet network in image classification and its ability to avoid overfitting during training, we used the AlexNet network on the Cifar100 dataset to determine the optimal adaptive parameters and the predefined parameters. The Cifar100 dataset contains 100 categories, each with 500 training images and 100 test images, for a total of 60,000 images.

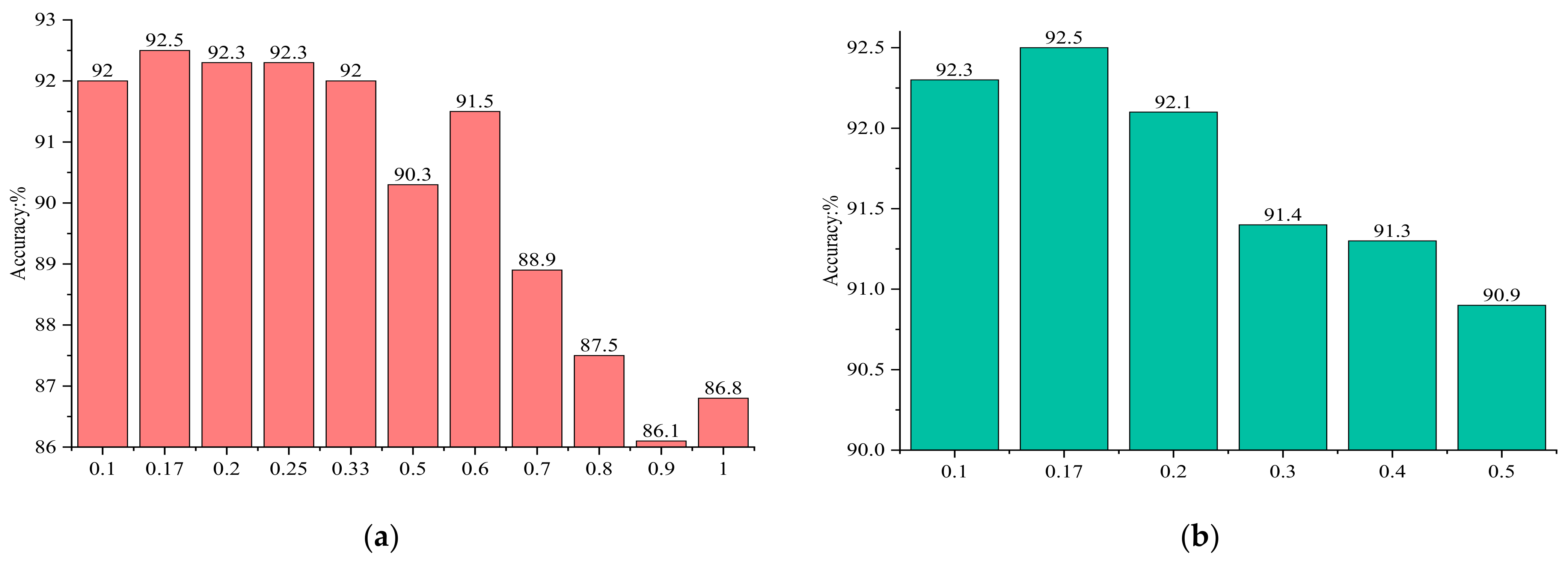

When

=

= a, AOAF is brought into AlexNet by setting different parameters a and comparing the experimental results. The experimental results are shown in

Figure 3a. When a = 0.17, the classification accuracy of the AlexNet network for the Cifar100 dataset reaches the highest value. When

= 0.17 and

= b, the AOAF is brought into AlexNet by setting different values b after the experiment and comparing the experimental results. The experimental results are shown in

Figure 3b. The classification accuracy of the AlexNet network for the Cifar100 dataset reaches the highest when b = 0.17. At this time,

= 0.17 and

= 0.17.

In order to further verify whether the above two parameters are the best parameters for AOAF, we conducted the same parameter selection experiments on Resnet, and the experimental results are shown in

Figure 4a,b, which further proved that AOAF has the best image classification accuracy when

= 0.17,

= 0.17. Therefore, the finalized AOAF function formula is as in Equation (11), and the gradient function formula is as in Equation (12). Its function image and the image of the derivative function are shown in

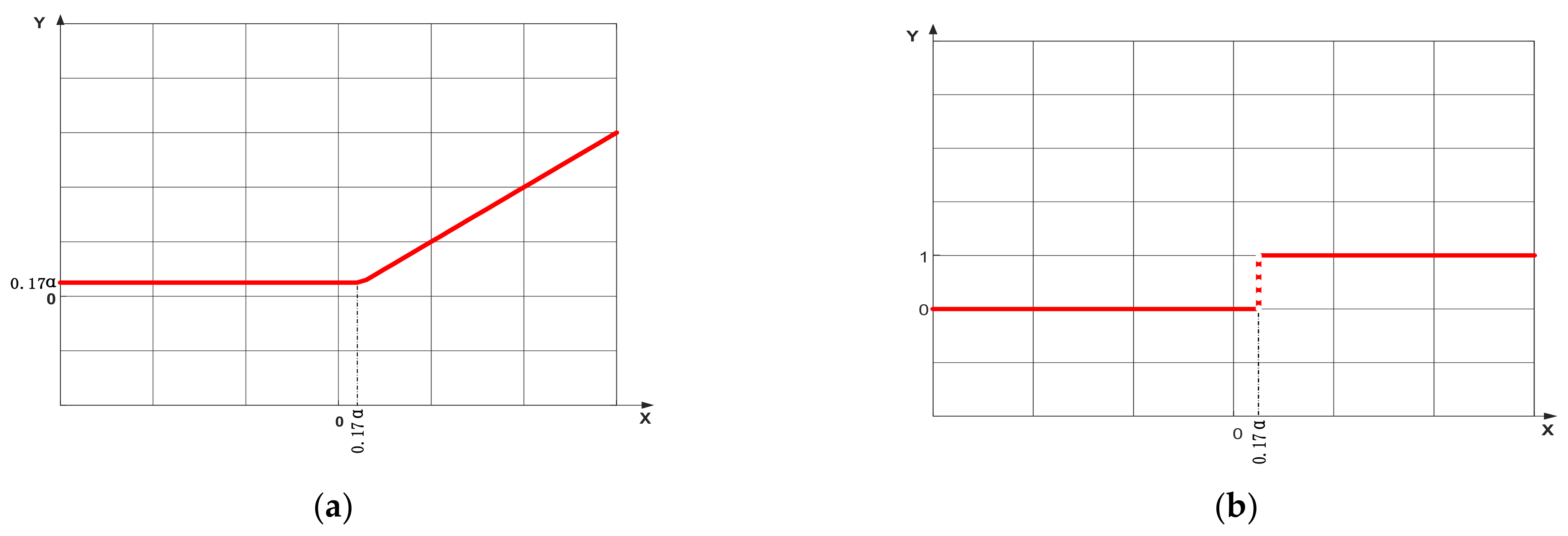

Figure 5.

Figure 5a shows the image of the function of AOAF: the segment function with

as the segment point, the left side of the segment point, consists of a horizontal straight line, at this time the input values less than 0.17α are uniformly transformed into 0.17α, the right side of the segment point consists of a slope line with slope 1, which also assumes that the input values greater than 0.17α will keep their original values.

Figure 5b shows the image of the derivative function of AOAF: the same segment function with 0.17α as the segment point, the segment function consists of two horizontal straight lines, the value less than 0.17α will be converted to 0 after the input, and the value greater than 0.17α will be converted to 1.

4. Experiment and Analysis

4.1. Experimental Settings

The authors conducted experiments using the PyTorch framework environment on a machine equipped with an NVIDIA Geforce3060 graphics card and tested the image classification accuracy of LeakyReLU, ELU, SELU, ReLU, ReLU6, tanh, Softplus, sigmoid, and the proposed AOAF on four different datasets, using different network models with the same parameter settings as in the common network framework. In terms of the optimizer, Adam is an excellent optimizer because it can dynamically and smoothly adjust the learning rate of each parameter and can quickly handle the sparse gradient problem of convex functions [

26]. In order to eliminate the influence of the optimizer performance on the experimental results of the activation function, the authors chose Adam as the optimizer for the model used in our experiments. Under the condition of ensuring the regular operation of the program, the authors pursue to make full use of the performance of the hardware by opening four threads at the same time when inputting images. Multi-threading represents that the model can be trained at a faster speed, and at the same time, each thread input 16 images at a time, i.e., num_workers = 4, batch_size = 16. One hundred epochs were conducted for each trial, and the final experimental result was taken as the highest of all results.

4.2. Networks and Datasets

In this paper, the authors try to validate the performance of the proposed function by using ShuffleNet [

27], MobileNet [

28], Regnet [

29], and GoogleNet [

30] on four different datasets: Cifar10, Cifar100, Fashion-MNIST, and Corel5k, respectively. Concretely, the Cifar10 dataset consists of 60,000 images, each image of size 32 ∗ 32, and the dataset contains a total of ten categories, with 50,000 images for training and 10,000 for testing. The Cifar100 dataset is similar to the Cifar10 dataset, but the Cifar100 dataset has 100 types, 50,000 images for training, and 10,000 for testing. The Fashion-MNIST dataset consists of 28 ∗ 28 grayscale images of 70,000 fashion products from 10 categories, with 7000 images per category. The training set has 60,000 images, and the test set has 10,000 images. Finally, the Corel5k dataset contains a total of Corel’s collection of 5000 images, containing a total of 50 categories, with each category consisting of 100 images of equal sizes, such as buses, dinosaurs, beaches, etc. The authors used the first 90 images of each topic as the training set and the rest as the test set to evaluate the proposed function’s performance.

4.3. Results and Analysis

The experimental results of ShuffleNet, MobileNet, Regnet, and GoogelNet on the four datasets are presented in

Table 1,

Table 2,

Table 3 and

Table 4, respectively. Authors use the classification accuracy of the network on the images as the evaluation criterion, and the columns in the table represent the experimental results of the same network using different activation functions on the same dataset. Authors highlight the values that achieved the highest classification accuracy in the same dataset by highlighting them in bold. The rows in the table represent the experimental results of the same network having the same activation function on different datasets. In addition, the average represents the average image classification accuracy of one activation function on four different datasets; the contrast represents the difference between the average classification accuracy of other activation functions relative to the proposed AOAF on four different datasets. A positive number indicates that the accuracy relative to AOAF rises, and a negative number means that the accuracy decreases relative to AOAF.

Rank indicates the ranking of various activation functions in terms of average accuracy among all activation functions.

The experimental results in

Table 1,

Table 2,

Table 3 and

Table 4 indicate that when MobileNet and RegNet use AOAF as the activation function, AOAF achieves higher average accuracy than other activation functions, with the highest classification accuracy on all datasets in MobileNet. In the comparison experiment using the RegNet network, the highest classification accuracy was also achieved on the Corel5k dataset. When using ShuffleNet for experiments, it achieved the second-best result, but it reached the highest classification accuracy on both the Cifar100 and Cifar10 datasets. Compared with the first three network models, AOAF only achieved the third-ranking on GoogleNet, which also illustrates the greater effectiveness of AOAF in improving the image classification ability of lightweight networks. In conclusion, the above experimental results show that AOAF achieves the best experimental results when tested on four datasets from four convolutional neural networks, and it is worth mentioning that AOAF performs even better in a lightweight network.

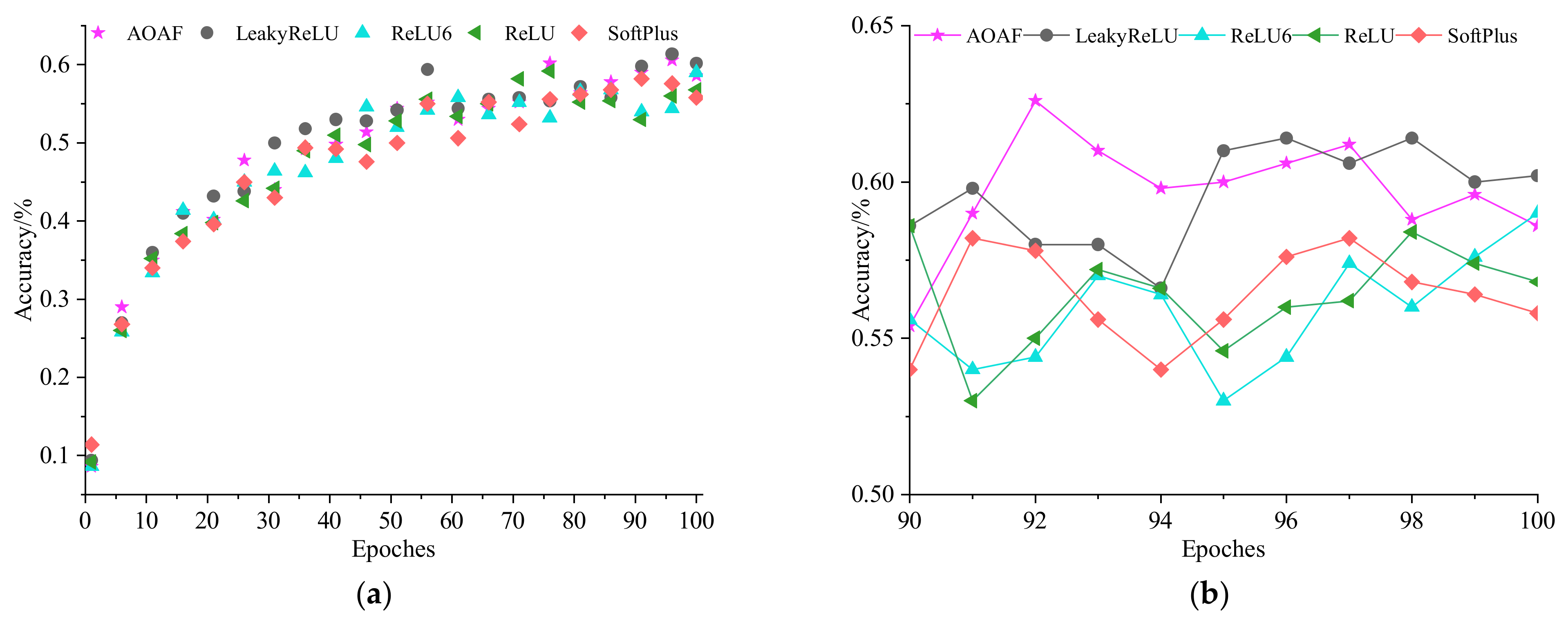

To show the performance of AOAF more clearly, the authors plot the experimental results of the experiments using MobileNet on various activation functions, as shown in

Figure 6,

Figure 7 and

Figure 8.

Figure 6a,

Figure 7a and

Figure 8a represent the classification accuracies on the Cifar100, Cifar10, and Corel5k datasets, respectively;

Figure 6b,

Figure 7b and

Figure 8b correspond to the enlarged plots of their experimental results in the last ten epochs, it is clear that compared with the other activation functions in the top five accuracy rankings, the proposed image classification accuracy of AOAF is in the first echelon.

5. Conclusions

In convolutional neural networks, the activation function used can significantly improve the performance of the model, and a suitable activation function can effectively improve the accuracy of the model for image classification. This paper proposed AOAF in the research to enhance the model’s image classification performance by introducing the concepts of attention and parameterization. Using the Alexnet and Resnet network on the Cifar100 dataset for controlled variable experiments, the authors included an adaptive parameter (for the mean of the input feature tensor) and two custom parameters in AOAF and investigated the optimal custom parameters. The proposed activation functions on Corel5k, Cifar100, Fashion- MNIST, and Cifar10 were tested using MobileNet, RegNet, ShuffleNet, and GoogleNet as experimental vehicles. In the following studies, the performance of AOAF was compared to seven common activation functions, which were reviewed and categorized. The experimental results demonstrate that AOAF achieves three first-ranked and one third-ranked image classification accuracies in the comparison experiments of four network models, respectively. Furthermore, the average classification accuracy of four neural network models using ReLU as an activation function on four image data sets is 74.33%, 85.33%, 83.98%, and 75.78%, respectively. Four neural network models equipped with AOAF achieved an average classification accuracy of 78.15%, 85.93%, 85.0%, and 80.58%, respectively. It can be seen that when AOAF is used as the activation function of the model, compared with ReLU as the activation function, average image classification accuracy of the model is improved by 3.82%, 0.6%, 1.02%, and 4.8%, respectively, showing that the AOAF proposed in this paper has superior performance in image classification tasks compared to existing parametric and nonparametric activation functions. Furthermore, the AOAF provides a new direction in the study of activation functions: adaptive offsets, by introducing the mean value of the input tensor into the activation function to achieve its flexibility, and by offsetting the function to introduce the idea of attention, so that the negative values can participate in the feature extraction process of convolutional neural networks as smaller positive values.

Author Contributions

Conceptualization, Y.J. and J.X.; methodology, Y.J. and J.X.; software, J.X. and D.Z.; validation, J.X. and D.Z.; formal analysis, J.X. and D.Z.; investigation, Y.J.; resources, Y.J.; data curation, J.X. and D.Z.; writing—original draft preparation, J.X.; writing—review and editing, Y.J. and J.X.; visualization, J.X. and D.Z.; supervision, Y.J.; project administration, Y.J.; funding acquisition, Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Research and Development Program of Anhui Province under Grant 202104g01020012 and the Research and Development Special Fund for Environmentally Friendly Materials and Occupational Health Research Institute of Anhui University of Science and Technology under Grant ALW2020YF18. The authors would like to thank the reviewers for their valuable suggestions and comments.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Rastegari, M.; Ordonez, V.; Redmon, J.; Redmon, J.; Farhadi, A. XNOR-NET: ImageNet Classification Using Binary Convolutional Neural Networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland; pp. 525–542. [Google Scholar]

- Tan, Y.; Tang, P.; Zhou, Y.; Luo, W.; Kang, Y.; Li, G. Photograph aesthetical evaluation and classification with deep convolutional neural networks. Neurocomputing 2017, 228, 165–175. [Google Scholar] [CrossRef]

- Zhu, Y.; Zhang, C.; Zhou, D.; Wang, X.; Bai, X.; Liu, W. Traffic sign detection and recognition using fully convolutional network guided proposals. Neurocomputing 2016, 214, 758–766. [Google Scholar] [CrossRef]

- Yang, T.; Long, X.; Sangaiah, A.K.; Zheng, Z.; Tong, C. Deep detection network for real-life traffic sign in vehicular networks. Comput. Netw. 2018, 136, 95–104. [Google Scholar] [CrossRef]

- Yu, J.; Zhang, B.; Kuang, Z.; Lin, D.; Fan, J. iPrivacy: Image privacy protection by identifying sensitive objects via deep multi-task learning. IEEE Trans. Inf. Forensics Secur. 2016, 12, 1005–1016. [Google Scholar] [CrossRef]

- Parhi, R.; Nowak, R.D. The Role of Neural Network Activation Functions. IEEE Signal Process. Lett. 2020, 27, 1779–1783. [Google Scholar] [CrossRef]

- Iliev, A.; Kyurkchiev, N.; Markov, S. On the Approximation of the step function by some sigmoid functions. Math. Comput. Simul. 2017, 133, 223–234. [Google Scholar] [CrossRef]

- Hamidoglu, A. On general form of the Tanh method and its application to nonlinear partial differential equations. Numer. Algebra Control Optim. 2016, 6, 175–181. [Google Scholar] [CrossRef][Green Version]

- Prashanth, D.S.; Mehta, R.V.K.; Ramana, K.; Bhaskar, V. Handwritten Devanagari Character Recognition Using Modified Lenet and Alexnet Convolution Neural Network. Wirel. Pers. Commun. Int. J. 2022, 122, 349–378. [Google Scholar] [CrossRef]

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 1–8. [Google Scholar]

- Yu, Y.B.; Adu, K.; Tashi, N.; Anokye, P.; Wang, X.; Ayidzoe, M.A. RMAF: Relu-Memristor-Like Activation Function for Deep Learning. IEEE Access 2020, 8, 72727–72741. [Google Scholar] [CrossRef]

- Zheng, Q.; Tan, D.; Wang, F. Improved Convolutional Neural Network Based on Fast Exponentially Linear Unit Activation Function. IEEE Access 2019, 7, 151359–151367. [Google Scholar] [CrossRef]

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013. [Google Scholar]

- Shi, J.; Ye, Y.; Liu, H.; Zhu, D.; Su, L.; Chen, Y.; Huang, Y.; Huang, J. Super-resolution reconstruction of pneumocystis carinii pneumonia images based on generative confrontation network. Comput. Methods Programs Biomed. 2022, 215, 106578. [Google Scholar] [CrossRef] [PubMed]

- Wei, X.; Liu, W.; Chen, L.; Ma, L.; Chen, H.; Zhuang, Y. FPGA-based hybrid-type implementation of quantized neural networks for remote sensing applications. Sensors 2019, 19, 924. [Google Scholar] [CrossRef] [PubMed]

- Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs). arXiv 2015, arXiv:1511.07289. [Google Scholar]

- Adem, K. Impact of activation functions and number of layers on detection of exudates using circular Hough transform and convolutional neural networks. Expert Syst. Appl. 2022, 203, 117583. [Google Scholar] [CrossRef]

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. J. Mach. Learn. Res. 2010, 9, 249–256. [Google Scholar]

- Swiderski, B.; Osowski, S.; Gwardys, G.; Kurek, J.; Slowinska, M.; Lugowska, I. Random CNN structure: Tool to increase generalization ability in deep learning. J. Image Video Process. 2022, 2022, 3. [Google Scholar] [CrossRef]

- Liu, G.; Xu, X.; Yu, X.; Wang, F. A New Method of Identifying Graphite Based on Neural Network. Wirel. Commun. Mob. Comput. 2021, 2021, 4716430. [Google Scholar] [CrossRef]

- Huang, Z.; Ng, T.; Liu, L.; Mason, H.; Zhuang, X.; Liu, D. SNDCNN: Self-Normalizing Deep CNNs with Scaled Exponential Linear Units for Speech Recognition. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020. [Google Scholar]

- Klambauer, G.; Unterthiner, T.; Mayr, A.; Hochreiter, S. Self-normalizing neural networks. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., et al., Eds.; MIT Press: Cambridge, MA, USA, 2017; Volume 2017. [Google Scholar]

- Qian, S.; Liu, H.; Liu, C.; Wu, S.; Wong, H.S. Adaptive activation functions in convolutional neural networks. Neurocomputing 2018, 272, 204–212. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. In Proceedings of the Conference on Neural Information Processing Systems NIPS, Lake Tahoe, NV, USA, 3–8 December 2012; Curran Associates Inc.: Red Hook, NY, USA. [Google Scholar] [CrossRef]

- Liang, K.; Zhao, H.J.; Song, W.Z. Research on Evaluation Method of Internal Combustion Engine Sound Quality Based on Convolution Neural Network. Chin. Intern. Combust. Engine Eng. 2019, 40, 67–75. [Google Scholar] [CrossRef]

- Ma, N.; Zhang, X.; Zheng, H.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design; Springer: Cham, Switzerland, 2018; Volume 11218, pp. 122–138. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.M.; Dollar, P. Designing Network Design Spaces; IEEE: New York, NY, USA, 2020; pp. 10425–10433. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2018; pp. 1–9. [Google Scholar]

| Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).