An Improved U-Net for Watermark Removal

Abstract

1. Introduction

- A serial architecture is used to gather more useful information and thereby improve watermark-removal performance.

- U-nets are stacked in a serial architecture to extract more salient hierarchical information, addressing the long-term dependency problem of deep CNNs in watermark removal.

- To make IWRU-net more adaptable to real-world use on mobile devices, a blind watermark-removal model is trained on randomly distributed watermarks of different types.
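The serial idea behind the first two contributions can be illustrated with a toy, pure-Python sketch. It is not the authors' IWRU-net. The hypothetical `u_stage` works on a 1-D signal and runs in four steps:

- it down-samples by averaging neighbouring pairs;
- it replaces the learned layers with a fixed scaling;
- it up-samples by repetition;
- it adds the result back to its input through a skip connection.

Real U-nets use learned convolutions and usually concatenate skip features rather than adding them. The hypothetical `serial` helper feeds each stage the previous stage's output.

```python
from typing import Callable, List

Signal = List[float]


def downsample(x: Signal) -> Signal:
    """Halve the length by averaging neighbouring pairs (assumes even length)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def upsample(x: Signal) -> Signal:
    """Double the length by nearest-neighbour repetition."""
    return [v for v in x for _ in range(2)]


def u_stage(x: Signal, weight: float = 0.5) -> Signal:
    """Toy U-shaped stage (hypothetical): encode, 'process', decode, skip-add."""
    coarse = downsample(x)                      # encoder: lower resolution
    processed = [weight * v for v in coarse]    # stand-in for learned layers
    restored = upsample(processed)              # decoder: back to input length
    return [a + b for a, b in zip(x, restored)]  # skip connection by addition


def serial(stages: List[Callable[[Signal], Signal]], x: Signal) -> Signal:
    """Apply stages one after another; each consumes the previous output."""
    for stage in stages:
        x = stage(x)
    return x


if __name__ == "__main__":
    print(serial([u_stage, u_stage], [1.0, 3.0, 2.0, 4.0]))
```

Lengthening the stage list deepens the cascade without changing any individual stage.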

2. Related Work

2.1. Deep CNNs for Watermark Removal

2.2. Cascaded Architectures for Image Applications

3. The Proposed Method

3.1. Network Architecture

3.2. Loss Function

3.3. Each U-Net Block

4. Experiments

4.1. Datasets

4.2. Experimental Settings

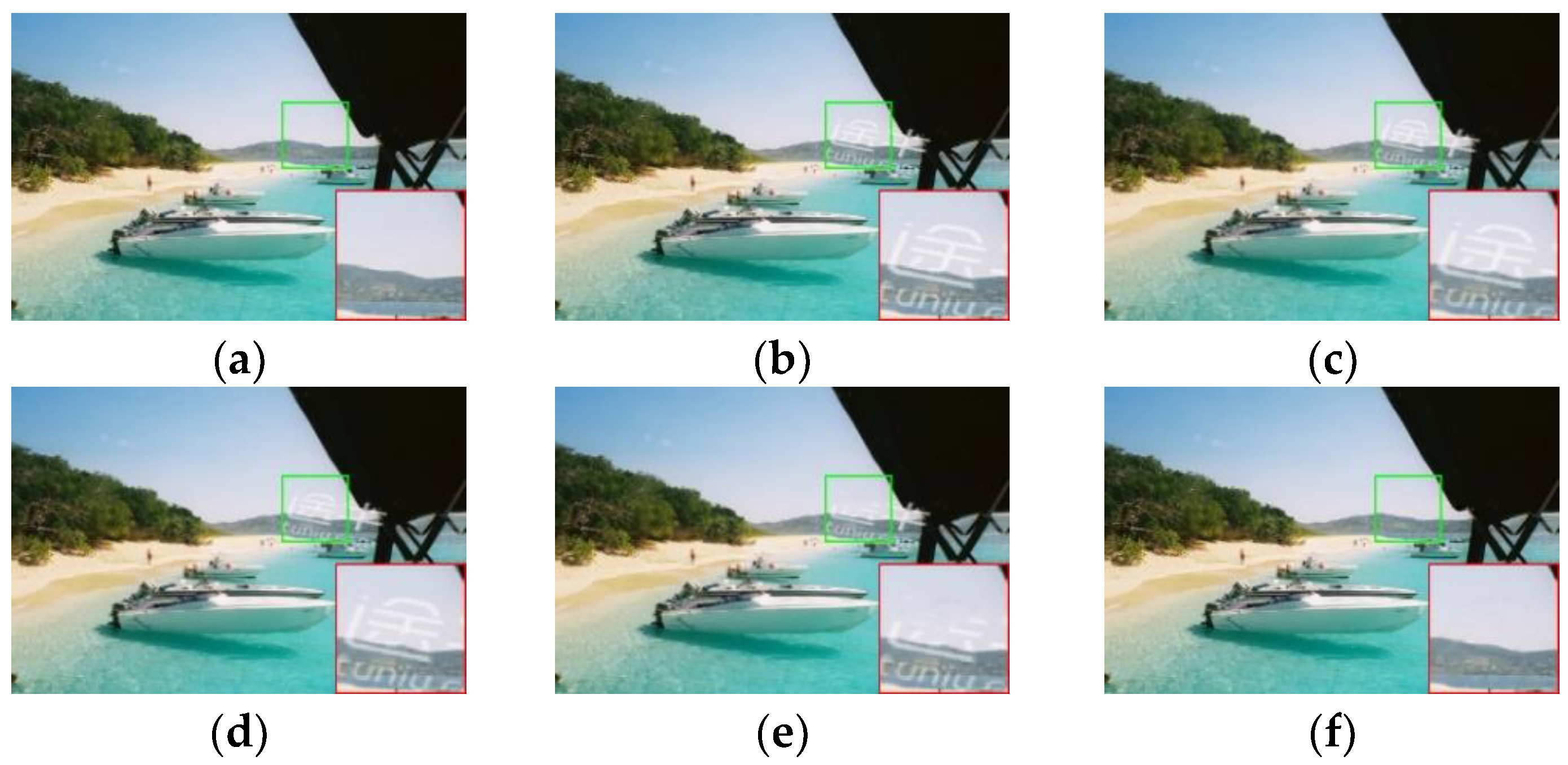

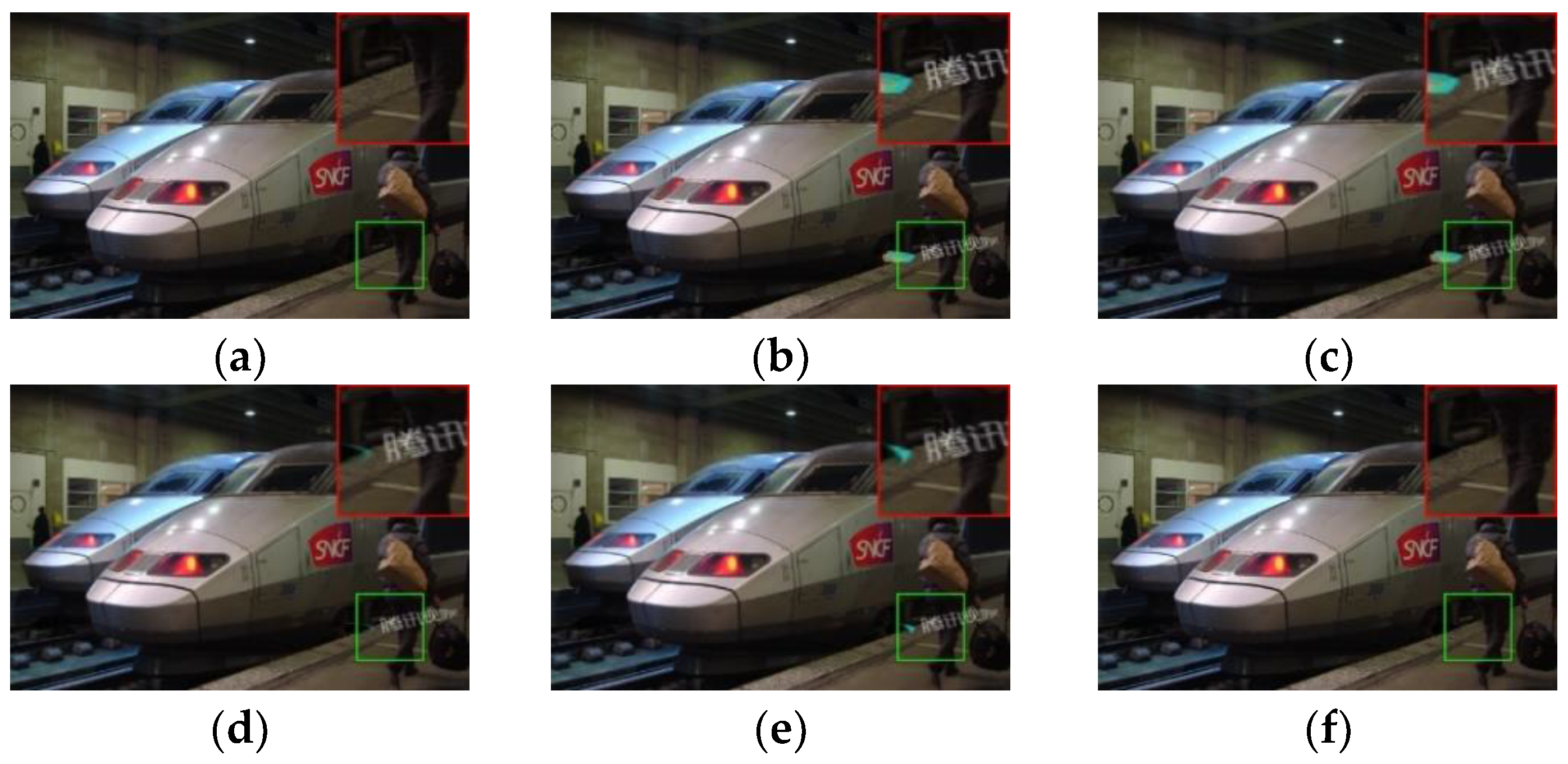

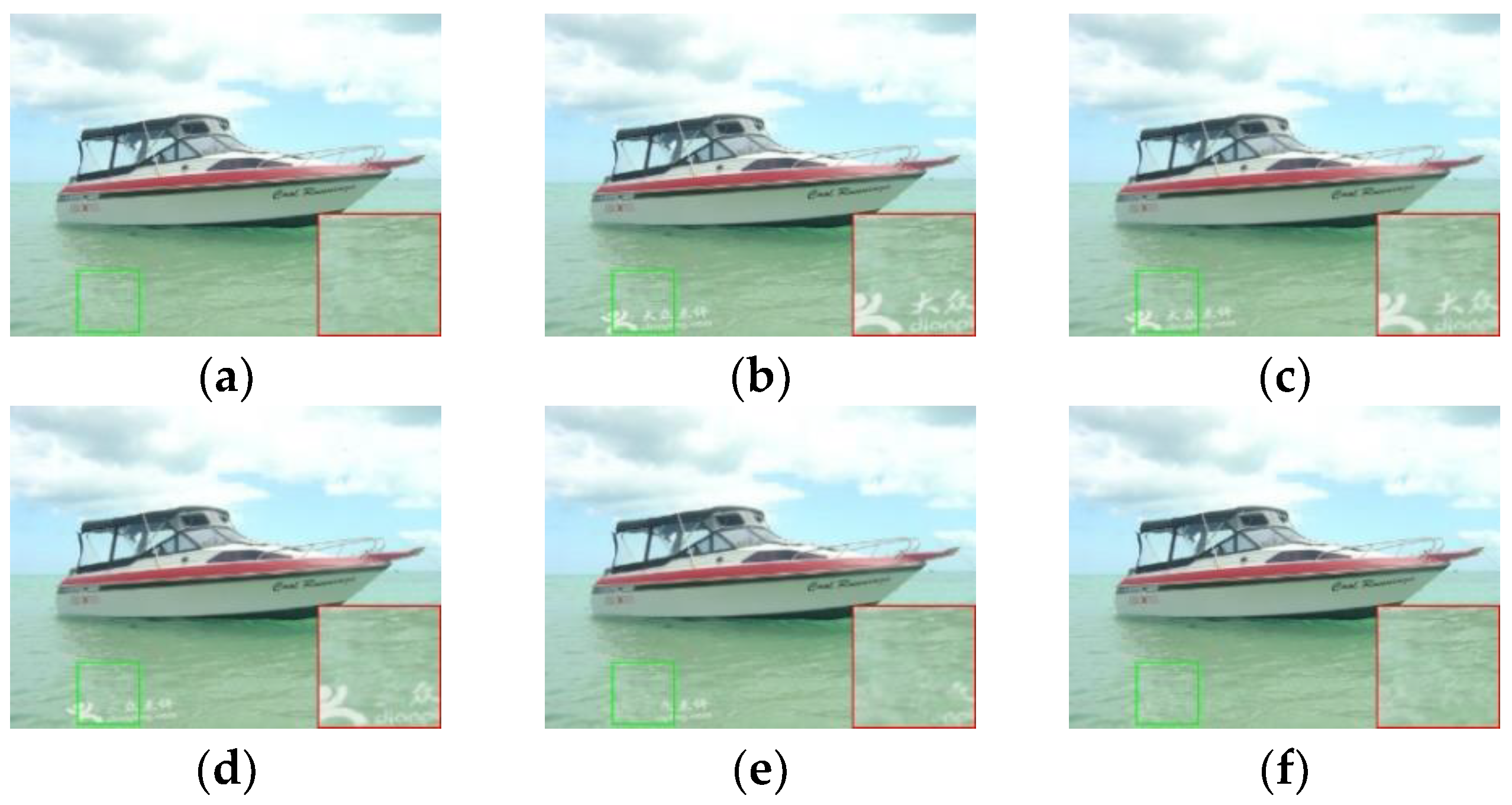

4.3. Experimental Analysis

4.4. Experimental Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Swanson, M.D.; Zhu, B.; Tewfik, A.H. Transparent robust image watermarking. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; IEEE: Piscataway, NJ, USA, 1996; Volume 3, pp. 211–214.

2. Wong, P.W. A public key watermark for image verification and authentication. In Proceedings of the 1998 International Conference on Image Processing, ICIP98 (Cat. No. 98CB36269), Chicago, IL, USA, 7 October 1998; IEEE: Piscataway, NJ, USA, 1998; Volume 1, pp. 455–459.

3. Park, J.; Tai, Y.W.; Kweon, I.S. Identigram/watermark removal using cross-channel correlation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 446–453.

4. Hsu, T.C.; Hsieh, W.S.; Chiang, J.Y.; Su, T. New watermark-removal method based on Eigen-image energy. IET Inf. Secur. 2011, 5, 43–50.

5. Boyle, R.D.; Hiary, H. Watermark location via back-lighting and recto removal. Int. J. Doc. Anal. Recognit. (IJDAR) 2009, 12, 33–46.

6. Yang, Y.; Sun, X.; Yang, H.; Li, C. Removable visible image watermarking algorithm in the discrete cosine transform domain. J. Electron. Imaging 2008, 17, 033008.

7. Makbol, N.M.; Khoo, B.E.; Rassem, T.H. Block-based discrete wavelet transform-singular value decomposition image watermarking scheme using human visual system characteristics. IET Image Process. 2016, 10, 34–52.

8. Ansari, I.A.; Pant, M. Multipurpose image watermarking in the domain of DWT based on SVD and ABC. Pattern Recognit. Lett. 2017, 94, 228–236.

9. Huynh-The, T.; Banos, O.; Lee, S.; Yoon, Y.; Le-Tien, T. Improving digital image watermarking by means of optimal channel selection. Expert Syst. Appl. 2016, 62, 177–189.

10. Fares, K.; Amine, K.; Salah, E. A robust blind color image watermarking based on Fourier transform domain. Optik 2020, 208, 164562.

11. Huang, Y.; Niu, B.; Guan, H.; Zhang, S. Enhancing image watermarking with adaptive embedding parameter and PSNR guarantee. IEEE Trans. Multimed. 2019, 21, 2447–2460.

12. Chen, X.; Wang, W.; Ding, Y.; Bender, C.; Jia, R.; Li, B.; Song, D.X. Leveraging unlabeled data for watermark removal of deep neural networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 1–6.

13. Sharma, S.S.; Chandrasekaran, V. A robust hybrid digital watermarking technique against a powerful CNN-based adversarial attack. Multimed. Tools Appl. 2020, 79, 32769–32790.

14. Haribabu, K.; Subrahmanyam, G.; Mishra, D. A robust digital image watermarking technique using auto encoder based convolutional neural networks. In Proceedings of the 2015 IEEE Workshop on Computational Intelligence: Theories, Applications and Future Directions (WCI), Kanpur, India, 14–17 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6.

15. Chen, X.; Wang, W.; Bender, C.; Ding, Y.; Jia, R.; Li, B.; Song, D.X. REFIT: A unified watermark removal framework for deep learning systems with limited data. In Proceedings of the 2021 ACM Asia Conference on Computer and Communications Security, Hong Kong, China, 7–11 June 2021; pp. 321–335.

16. Liu, Y.; Zhu, Z.; Bai, X. WDNet: Watermark-decomposition network for visible watermark removal. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 3685–3693.

17. Lu, J.; Ni, J.; Su, W.; Xie, H. Wavelet-based CNN for robust and high-capacity image watermarking. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

18. Cao, L.; Liang, Y.; Lv, W.; Park, K.; Miura, Y.; Shinomiya, Y.; Yoshida, S. Relating brain structure images to personality characteristics using 3D convolution neural network. CAAI Trans. Intell. Technol. 2021, 6, 338–346.

19. Jafarbigloo, S.K.; Danyali, H. Nuclear atypia grading in breast cancer histopathological images based on CNN feature extraction and LSTM classification. CAAI Trans. Intell. Technol. 2021, 6, 426–439.

20. Cheng, D.; Li, X.; Li, W.; Lu, C.; Li, F.; Zhao, H.; Zheng, W. Large-scale visible watermark detection and removal with deep convolutional networks. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; Springer: Cham, Switzerland, 2018; pp. 27–40.

21. Lee, J.E.; Seo, Y.H.; Kim, D.W. Convolutional neural network-based digital image watermarking adaptive to the resolution of image and watermark. Appl. Sci. 2020, 10, 6854.

22. Li, T.; Feng, B.; Li, G.; Li, X.; He, M.; Li, P. Visible watermark removal based on dual-input network. In Proceedings of the 2021 ACM International Conference on Intelligent Computing and its Emerging Applications, Jinan, China, 28–29 December 2021; pp. 46–52.

23. Meng, Z.; Morizumi, T.; Miyata, S.; Kinoshita, H. An improved design scheme for perceptual hashing based on CNN for digital watermarking. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1789–1794.

24. Le Merrer, E.; Perez, P.; Trédan, G. Adversarial frontier stitching for remote neural network watermarking. Neural Comput. Appl. 2020, 32, 9233–9244.

25. Ingaleshwar, S.; Dharwadkar, N.V. Water chaotic fruit fly optimization-based deep convolutional neural network for image watermarking using wavelet transform. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–25.

26. Li, Q.; Wang, X.; Ma, B.; Wang, X.; Wang, C.; Gao, S.; Shi, Y. Concealed attack for robust watermarking based on generative model and perceptual loss. In IEEE Transactions on Circuits and Systems for Video Technology; IEEE: Piscataway, NJ, USA, 2021.

27. Dhaya, R. Light weight CNN based robust image watermarking scheme for security. J. Inf. Technol. Digit. World 2021, 3, 118–132.

28. Chacko, A.; Chacko, S. Deep learning-based robust medical image watermarking exploiting DCT and Harris hawks optimization. Int. J. Intell. Syst. 2022, 37, 4810–4844.

29. Wang, C.; Hao, Q.; Xu, S.; Ma, B.; Xia, Z.; Li, Q.; Li, J.; Shi, Y.Q. RD-IWAN: Residual dense based imperceptible watermark attack network. In IEEE Transactions on Circuits and Systems for Video Technology; IEEE: Piscataway, NJ, USA, 2022.

30. Qin, H.; Yan, J.; Li, X.; Hu, X. Joint training of cascaded CNN for face detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3456–3465.

31. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

32. Schlemper, J.; Caballero, J.; Hajnal, J.V.; Price, A.N.; Rueckert, D. A deep cascade of convolutional neural networks for MR image reconstruction. In Proceedings of the International Conference on Information Processing in Medical Imaging, Boone, NC, USA, 25–30 June 2017; Springer: Cham, Switzerland, 2017; pp. 647–658.

33. Wu, D.; Kim, K.; Fakhri, G.E.; Li, Q. A cascaded convolutional neural network for X-ray low-dose CT image denoising. arXiv 2017, arXiv:1705.04267.

34. Li, C.; Guo, J.; Porikli, F.; Fu, H.; Pang, Y. A cascaded convolutional neural network for single image dehazing. IEEE Access 2018, 6, 24877–24887.

35. Yan, S.; Wu, C.; Wang, L.; Xu, F.; An, L.; Guo, K.; Liu, Y. DDRNet: Depth map denoising and refinement for consumer depth cameras using cascaded CNNs. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 151–167.

36. Zhao, S.; Dong, Y.; Chang, E.I.; Xu, Y. Recursive cascaded networks for unsupervised medical image registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10600–10610.

37. Hang, R.; Liu, Q.; Hong, D.; Ghamisi, P. Cascaded recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5384–5394.

38. Wu, J.; Ma, J.; Liang, F.; Dong, W.; Shi, G.; Lin, W. End-to-end blind image quality prediction with cascaded deep neural network. IEEE Trans. Image Process. 2020, 29, 7414–7426.

39. Tian, C.; Xu, Y.; Zuo, W.; Zhang, B.; Fei, L.; Lin, C. Coarse-to-fine CNN for image super-resolution. IEEE Trans. Multimed. 2020, 23, 1489–1502.

40. Lu, X.; Zhang, J.; Yang, D.; Xu, L.; Jia, F. Cascaded convolutional neural network-based hyperspectral image resolution enhancement via an auxiliary panchromatic image. IEEE Trans. Image Process. 2021, 30, 6815–6828.

41. Xue, F.; Tan, Z.; Zhu, Y.; Ma, Z.; Guo, G. Coarse-to-fine cascaded networks with smooth predicting for video facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 4 November 2022; pp. 2412–2418.

42. Tian, C.; Yuan, Y.; Zhang, S.; Lin, C.; Zuo, W.; Zhang, D. Image super-resolution with an enhanced group convolutional neural network. arXiv 2022, arXiv:2205.14548.

43. Tian, C.; Zhang, Y.; Zuo, W.; Lin, C.; Zhang, D.; Yuan, Y. A heterogeneous group CNN for image super-resolution. arXiv 2022, arXiv:2209.12406.

44. Bloomfield, P.; Steiger, W.L. Least Absolute Deviations: Theory, Applications, and Algorithms; Birkhäuser: Boston, MA, USA, 1983.

45. Pollard, D. Asymptotics for least absolute deviation regression estimators. Econom. Theory 1991, 7, 186–199.

46. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

47. Agarap, A.F. Deep learning using rectified linear units (ReLU). arXiv 2018, arXiv:1803.08375.

48. Murray, N.; Perronnin, F. Generalized max pooling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2473–2480.

49. Xu, J.; Li, Z.; Du, B.; Zhang, M.; Liu, J. Reluplex made more practical: Leaky ReLU. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–7.

50. Li, X.; Lu, C.; Cheng, D.; Li, W.; Cao, M.; Liu, B.; Ma, J.; Zheng, W. Towards photo-realistic visible watermark removal with conditional generative adversarial networks. In Proceedings of the International Conference on Image and Graphics, Beijing, China, 23–25 August 2019; Springer: Cham, Switzerland, 2019; pp. 345–356.

51. Liang, J.; Niu, L.; Guo, F.; Long, T.; Zhang, L. Visible watermark removal via self-calibrated localization and background refinement. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20 October 2021; pp. 4426–4434.

52. Cun, X.; Pun, C.M. Split then refine: Stacked attention-guided ResUNets for blind single image visible watermark removal. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 1184–1192.

53. Zhang, Q.; Xiao, J.; Tian, C.; Chun Wei Lin, J.; Zhang, S. A robust deformed convolutional neural network (CNN) for image denoising. CAAI Trans. Intell. Technol. 2022.

54. Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

55. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2366–2369.

56. Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

57. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

58. Tian, C.; Xu, Y.; Li, Z.; Zuo, W.; Fei, L.; Liu, H. Attention-guided CNN for image denoising. Neural Netw. 2020, 124, 117–129.

59. Setiadi, D.R.I.M. PSNR vs SSIM: Imperceptibility quality assessment for image steganography. Multimed. Tools Appl. 2021, 80, 8423–8444.

60. Dolbeau, R. Theoretical peak FLOPS per instruction set: A tutorial. J. Supercomput. 2018, 74, 1341–1377.

| Methods | PSNR (dB) |
|---|---|
| IWRU-net (ours) | 44.85 |
| IWRU-net with four up- and down-sampling operations in each U-net | 34.75 |
| IWRU-net without up- and down-sampling operations | 43.18 |
| A single U-net | 43.71 |
| IWRU-net with two extra residual operations | 36.77 |
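The ablation varies how many up- and down-sampling operations each U-net uses. As a purely arithmetic aside, the sketch below lists the feature-map size at every encoder level for two and for four halvings. It assumes each down-sampling step halves height and width exactly (for example 2 × 2 max pooling with stride 2). A few caveats apply:

- The 128 × 128 input is hypothetical.
- This text does not state how many levels IWRU-net itself uses.
- The sketch does not explain the lower PSNR of the four-operation variant.

```python
from typing import List, Tuple


def level_sizes(height: int, width: int, levels: int) -> List[Tuple[int, int]]:
    """Feature-map size (H, W) at encoder levels 0..levels.

    Assumes every down-sampling step halves height and width exactly
    (e.g. 2 x 2 max pooling with stride 2).
    """
    factor = 2 ** levels
    if height % factor or width % factor:
        raise ValueError(f"H and W must be divisible by {factor}")
    return [(height // 2 ** k, width // 2 ** k) for k in range(levels + 1)]


if __name__ == "__main__":
    for n in (2, 4):
        print(n, "halvings:", level_sizes(128, 128, n))
```

The divisibility check reflects a general property of such U-nets: the input side length must be a multiple of 2^levels for the decoder to return to the input size exactly.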

| Transparency Rate | PSNR (dB) |
|---|---|
| 100% | 45.67 |
| 50% | 41.32 |
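The introduction trains on randomly distributed watermarks, and this table varies watermark transparency. A minimal sketch of how one such training pair could be synthesized follows. It assumes the common blending model J = (1 − α)·I + α·W inside the watermark region, with α an opacity in [0, 1]. How the paper's "transparency rate" maps to α is not stated in this text. The function `add_watermark` and the grayscale list-of-lists image format are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Image = List[List[float]]  # hypothetical format: grayscale, values in [0, 1]


def add_watermark(
    image: Image,
    mark: Image,
    alpha: float,
    rng: Optional[random.Random] = None,
) -> Tuple[Image, Tuple[int, int]]:
    """Blend `mark` into a copy of `image` at a random top-left position.

    Inside the pasted region: out = (1 - alpha) * image + alpha * mark.
    Returns the watermarked image and the (row, col) position used.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    rng = rng or random.Random()
    h, w = len(image), len(image[0])
    mh, mw = len(mark), len(mark[0])
    if mh > h or mw > w:
        raise ValueError("watermark is larger than the image")
    top = rng.randint(0, h - mh)    # inclusive bounds keep the mark inside
    left = rng.randint(0, w - mw)
    out = [row[:] for row in image]  # the clean image stays as ground truth
    for i in range(mh):
        for j in range(mw):
            r, c = top + i, left + j
            out[r][c] = (1 - alpha) * image[r][c] + alpha * mark[i][j]
    return out, (top, left)
```

Calling it repeatedly with different marks, α values and seeds yields a training set of (watermarked, clean) pairs in which the watermark position is unknown to the model, which is what a blind setting requires.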

| Methods | PSNR (dB) | SSIM |
|---|---|---|
| DnCNN [31] | 42.95 | 0.9961 |
| FFDNet [56] | 38.48 | 0.9847 |
| Unet [57] | 43.71 | 0.9963 |
| IWRU-net (ours) | 44.85 | 0.9970 |
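The PSNR columns follow the standard definition PSNR = 10·log10(MAX² / MSE). Here MSE is the mean squared error between the restored image and the watermark-free ground truth, and MAX is the peak pixel value: 255 for 8-bit images, or 1 for images scaled to [0, 1]. Below is a minimal pure-Python sketch. The default peak of 255 and the list-of-lists image format are assumptions, since the evaluation code is not given here.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[Sequence[float]],
         restored: Sequence[Sequence[float]],
         peak: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(peak**2 / MSE) for two equally sized images."""
    if len(reference) != len(restored) or any(
            len(a) != len(b) for a, b in zip(reference, restored)):
        raise ValueError("images must have the same shape")
    sq_errors = [(a - b) ** 2
                 for ref_row, out_row in zip(reference, restored)
                 for a, b in zip(ref_row, out_row)]
    mse = sum(sq_errors) / len(sq_errors)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10 * math.log10(peak ** 2 / mse)
```

On an 8-bit scale, an MSE of exactly 1 corresponds to about 48.13 dB. Because of the logarithm, each further 3 dB gain roughly halves the remaining MSE.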

| Methods | PSNR (dB) | SSIM |
|---|---|---|
| DnCNN [31] | 44.67 | 0.9753 |
| FFDNet [56] | 37.54 | 0.9912 |
| Unet [57] | 45.35 | 0.9972 |
| RDDCNN [53] | 46.25 | 0.9971 |
| ADNet [58] | 46.47 | 0.9972 |
| IWRU-net (ours) | 46.52 | 0.9975 |
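SSIM compares luminance, contrast and structure:

SSIM(x, y) = ((2μxμy + C1)(2σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2))

It uses C1 = (0.01·L)² and C2 = (0.03·L)², where L is the peak value. The standard metric evaluates this formula on local windows (typically an 11 × 11 Gaussian window) and averages the results. The sketch below deliberately simplifies that: it treats the whole image as one window and uses population statistics, so its values will not match the table's.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], peak: float = 255.0) -> float:
    """SSIM over the whole (flattened) image as a single window.

    Simplification: standard SSIM averages this quantity over local windows.
    """
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and equally sized")
    n = len(x)
    c1 = (0.01 * peak) ** 2
    c2 = (0.03 * peak) ** 2
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```

Identical inputs give exactly 1, and anti-correlated structure drives the covariance term negative. This is why SSIM can rank methods differently from PSNR, as with DnCNN and FFDNet in the table.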

| Methods | Parameters | FLOPs |
|---|---|---|
| DnCNN [31] | 0.5594 M | 36.6582 G |
| FFDNet [56] | 0.4945 M | 8.1023 G |
| Unet [57] | 1.0120 M | 18.6813 G |
| RDDCNN [53] | 0.5591 M | 36.7060 G |
| ADNet [58] | 0.5215 M | 34.2393 G |
| IWRU-net (ours) | 2.0240 M | 37.3625 G |
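The complexity figures rest on simple bookkeeping. A k × k convolution with C_in input and C_out output channels has k²·C_in·C_out weights plus C_out biases. Applying it to an H × W output map costs k²·C_in·C_out·H·W multiply-accumulates (MACs).

This text does not state whether the table's FLOPs count MACs or separate multiplications and additions, nor the input size used. The sketch below therefore only shows the bookkeeping, and the `Conv2d` record and layer lists are hypothetical.

The table itself lists IWRU-net with exactly twice the parameters of the single U-net (2.0240 M vs. 1.0120 M) and about twice its FLOPs (37.3625 G vs. 18.6813 G). That ratio is consistent with chaining two U-nets of equal size, which the last test illustrates.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Conv2d:
    """Hypothetical description of one k x k convolution layer."""
    in_ch: int
    out_ch: int
    k: int = 3
    bias: bool = True


def conv_cost(layer: Conv2d, out_h: int, out_w: int) -> Tuple[int, int]:
    """Return (parameters, multiply-accumulates) for one convolution layer."""
    weights = layer.k * layer.k * layer.in_ch * layer.out_ch
    params = weights + (layer.out_ch if layer.bias else 0)
    macs = weights * out_h * out_w  # one MAC per weight per output pixel
    return params, macs


def total_cost(layers: Iterable[Tuple[Conv2d, int, int]]) -> Tuple[int, int]:
    """Sum (parameters, MACs) over (layer, out_h, out_w) triples."""
    p_sum = m_sum = 0
    for layer, h, w in layers:
        p, m = conv_cost(layer, h, w)
        p_sum += p
        m_sum += m
    return p_sum, m_sum
```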

| Methods | 256 × 256 | 512 × 512 | 1024 × 1024 |
|---|---|---|---|
| DnCNN [31] | 0.038228 | 0.154801 | 0.638453 |
| FFDNet [56] | 0.010732 | 0.037471 | 0.124227 |
| Unet [57] | 0.027889 | 0.097742 | 0.316260 |
| RDDCNN [53] | 0.057355 | 0.222245 | 1.559665 |
| ADNet [58] | 0.036286 | 0.147691 | 0.563838 |
| IWRU-net (ours) | 0.058375 | 0.199374 | 0.654419 |
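This text gives no time unit for the run-time table. Seconds are a plausible reading but an unconfirmed one. As a quick ratio check on the table's own numbers: going from 256 × 256 to 1024 × 1024 multiplies the pixel count by 16. The sketch computes how much each method's time grows over that range. The dictionary and helper names are illustrative.

```python
from typing import Dict, Sequence, Tuple

# Timings copied from the run-time table (unit not stated in the text).
RUN_TIMES: Dict[str, Tuple[float, float, float]] = {
    "DnCNN": (0.038228, 0.154801, 0.638453),
    "FFDNet": (0.010732, 0.037471, 0.124227),
    "Unet": (0.027889, 0.097742, 0.316260),
    "RDDCNN": (0.057355, 0.222245, 1.559665),
    "ADNet": (0.036286, 0.147691, 0.563838),
    "IWRU-net": (0.058375, 0.199374, 0.654419),
}
SIDES = (256, 512, 1024)


def growth(times: Sequence[float], sides: Sequence[int] = SIDES) -> Tuple[float, float]:
    """Return (time ratio, pixel ratio) between the largest and smallest input."""
    time_ratio = times[-1] / times[0]
    pixel_ratio = (sides[-1] / sides[0]) ** 2
    return time_ratio, pixel_ratio


if __name__ == "__main__":
    for name, times in RUN_TIMES.items():
        t, p = growth(times)
        print(f"{name:9s} time x{t:5.2f} for pixels x{p:.0f}")
```

By this measure, IWRU-net's time grows by about 11.2× for the 16× increase in pixels, while RDDCNN's grows by about 27×.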

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Fu, L.; Shi, B.; Sun, L.; Zeng, J.; Chen, D.; Zhao, H.; Tian, C. An Improved U-Net for Watermark Removal. Electronics 2022, 11, 3760. https://doi.org/10.3390/electronics11223760
