Abstract

This paper presents a novel feature descriptor, the principal component analysis (PCA)-based Advanced Local Octa-Directional Pattern (ALODP-PCA), for content-based image retrieval. Conventional approaches compare each pixel of an image with only certain neighboring pixels, which provides discrete image information. The descriptor proposed in this work utilizes the local intensity of pixels in all eight directions of a pixel's neighborhood. The local octa-directional pattern yields two patterns, magnitude and directional, and each is quantized into a 40-bin histogram. A joint histogram is created by concatenating the directional and magnitude histograms. The Manhattan distance is used to measure similarity between images. Moreover, to contain the computational cost, PCA is applied to reduce the dimensionality. The proposed methodology is tested on a subset of the Multi-PIE face dataset. The dataset contains almost 800,000 images of over 300 people. These images cover different poses and a wide range of facial expressions. Results were compared with state-of-the-art local patterns, namely, the local tri-directional pattern (LTriDP), local tetra directional pattern (LTetDP), and local ternary pattern (LTP). The proposed model outperforms these earlier methods in terms of precision, accuracy, and recall.

1. Introduction

Advances in information and communication technologies, machine learning, and artificial intelligence techniques have opened new horizons for new applications. With the immense growth of the internet and digital media, the domain of image processing has evolved rapidly in the past decade.

In image processing, shape, color, and texture play key roles in creating a feature vector [1,2]. A variety of descriptors have been proposed using different techniques, such as the gray level co-occurrence matrix (GLCM), HOG, LBP, and SIFT [3,4,5,6,7]. These descriptors are further used in combination with machine learning techniques (Random Forest, SVM) to extract features of images [8,9,10,11].

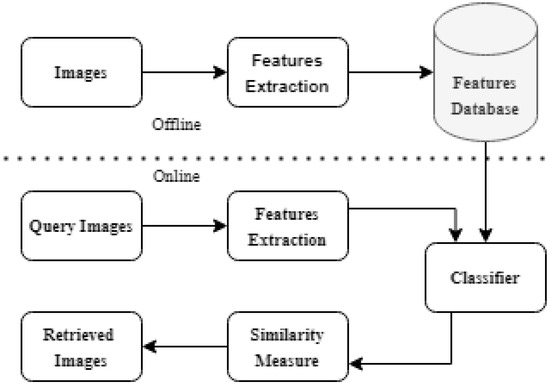

It is very important to have effective techniques that can search for the desired images in big databases. Several approaches have been used for this purpose, which utilize color, shape, and texture features. Content-based image retrieval (CBIR) is used to retrieve similar images from an image database. CBIR utilizes visual properties of images, i.e., shape, color, and texture, to locate the desired image in a huge database in minimal time. The process flow of generic CBIR is shown in Figure 1.

Figure 1.

Content-based image retrieval.

Among CBIR techniques, local descriptors, which extract local pixel information, are a vital methodology that is gaining attention in both industry and research. Among local descriptors, the local binary pattern (LBP) was the first to use local pixel information to extract image features. LBP has multiple extensions, such as the local ternary pattern (LTP), local tri-directional pattern (LTriDP), and local tetra directional pattern (LTetDP).

Some of these techniques use directions and magnitude to find the local neighborhood intensity patterns in four directions, whereas others take a hybrid approach, i.e., they are combined with other methodologies (GLCM, HOG) for image retrieval by constructing a histogram from the co-occurrence matrix. On the other hand, the same local neighborhood intensity patterns have been used in combination with GLCM to obtain more information about the correlation of pixels and their frequency instead of creating a histogram [7]. In some works, local neighborhood intensity patterns are used in combination with color patterns for content-based image retrieval. Nevertheless, local neighborhood pixel information has always been the key point of interest in content-based image retrieval. Most of the patterns proposed so far utilize uniform approaches to obtain information from neighboring pixels, and only a handful of approaches use direction-based pixel information.

Problem Statement and Contribution

The techniques described above have limitations. Some methods focus on color features and ignore texture features. In other cases, the focus is on creating histograms, ignoring the structural elements and their spatial correlation (SEC). Moreover, SEC does not fully account for all the structural element information. Some approaches use information from neighboring pixels, but they are not very successful because the increase in dimensionality and computational cost lengthens image retrieval time. Although local pixel information is used in many methods, some of them fail to pick up changes in local pixel information trends. Another disadvantage is the large dimension of the feature vector. To the best of our knowledge, no study has explored the impact of utilizing eight directions to compute the features and retrieve the image. Hence, there is a need for descriptors that utilize all eight directions of the first radius of a pixel so that the problems of poor accuracy and high dimensionality can be addressed. The main contributions of this work are as follows:

- We propose a novel approach that considers all surrounding pixels to obtain the complete pixel features.

- We propose a methodology to reduce the computation cost by applying dimension reduction using PCA.

- We provide a mechanism to compute direction and magnitude patterns at the edges of the image (at Radius 2), where pixel information is not available.

The proposed methodology is twofold. Initially, eight surrounding pixels (nearest to center pixels) are considered to attain the most relevant information, focusing on the first radius only. Afterwards, the magnitude of the vector is computed, resulting in a joint histogram. At this stage, PCA is introduced to keep the data normalized and attain maximum information. This approach generates a small but enriched feature vector, which has all the qualities of the surrounding eight pixels.

2. State of the Art

Early methods for texture classification include a range of approaches, the majority of which are filter-based [6]. Popular examples are the Gabor filter, which convolves 40 different kernels with an image to extract features [3,5], the wavelet transform utilized by [4,9], and the wavelet frames extracted by [10]. Haralick et al. [8] proposed the gray level co-occurrence matrix (GLCM). GLCM measures the spatial relationship between two different gray tones and is used both as a classification technique and to extract texture features of images.

The local binary pattern (LBP), on the other hand, uses local pixel information to extract texture features of the image. Several algorithms have been proposed to improve image quality, e.g., through noise reduction. Zheng et al. [12] and Zhu et al. [13] proposed approaches to dehaze images captured in cloudy and moist weather, which helps to extract robust features. The wavelet transform is a powerful tool for efficiently representing signals and images at multiple levels of detail, with many advantages in areas such as compression, level-of-detail display, progressive transmission, level-of-detail editing, filtering, modeling, fractals, and multifractals [14]. Ahmed et al. [15] proposed a nonparametric denoising method based on the contourlet transform with sharp frequency localization. Ouahabi et al. [16] proposed wavelet-based image denoising methods. The local ternary pattern (LTP) [11] uses only three directions of information on the pixel. LTP requires users to set the initial threshold value. Murala et al. [17] introduced a new pattern that utilizes local pixel information, named the local tetra pattern (LTrP). This pattern utilizes four directions of neighboring pixel information (0, 45, 90, and 135 degrees). According to Murala, four angles are sufficient to cover the whole pixel, as 0 degrees carries the same information as 180 degrees, 45 degrees the same as 225 degrees, 90 degrees the same as 270 degrees, and 135 degrees the same as 315 degrees. The reason for choosing only four angles was to reduce the computation cost. A local ternary pattern was introduced by [18] and used in biomedical images.

The BRINT descriptor proposed by Liu [19] is rotation invariant, noise tolerant, and computationally very simple. BRINT uses a technique similar to LBP in that it utilizes the eight surrounding pixels; the only difference is that the number of sampled pixels must be a multiple of eight. Vimina et al. and Mistry et al. [20,21] used LBP to extract texture features in combination with color features to create a multi-channel feature descriptor.

Noise-Resistant LBP (NR-LBP) [22] and Robust LBP (RLBP) [23] use the LBP technique with enhancements for noise reduction and efficiency, respectively. The local tetra directional pattern [17] focuses only on two directions: horizontal and vertical (0 and 90 degrees). The two-directional LBP showed better results than the existing LBP. Another enhanced version of LBP was proposed by [24], named the local tetra oppugnant pattern, which also uses the RGB color space in extracting neighbor pixel information.

Walia et al. [25] proposed the color difference histogram (CDH), which was used for color and texture features. Jain et al. [26] used the fuzzy C-means algorithm, the co-occurrence matrix, and the HSI hue algorithm to extract shape, texture, and color features, respectively. Feng et al. [27] used color and texture features to propose a global correlation descriptor (GCD) for efficient image retrieval.

Asif et al. [28] proposed a novel CBIR approach based on color vector quantization, which extracts features from edge orientation and color. Dhiman et al. [29] used GLCM and LBP for content-based image retrieval on the CORAL dataset. Suleman et al. [30] and Zeeshan et al. [31] used contextual techniques to find similarities in 1D and 2D signals. Sukhjeet et al. [32] used a hybrid approach that combines color space and a quaternion moment vector to create a unique feature vector. Sawat et al. [33] used a heuristic approach for real-time content-based image retrieval. Qin et al. [34] used the local gradient of images to extract the directional gradient feature vector and tested their approach on six different datasets.

State-of-the-art 2D and 3D face recognition methods and the most recent databases were analyzed in [35]. Adjabi et al. [36] analyzed different approaches and proposed a multi-block color binarized statistical approach for face recognition. Khaldi et al. [37] used an unsupervised deep learning approach for human ear recognition from the face. El et al. [38] used a CNN approach to detect pain from the human face. Mimouna et al. [39] proposed OLIMP, a heterogeneous multimodal dataset approach for advanced environment perception.

A local wavelet decomposition method was proposed by Dubey et al. [40] which produces output by comparing values with its center pixel. Giveki et al. [41] used SIFT in combination with a local directional pattern to produce the feature vector. Wu [42] introduced an enhanced version of LTP, which takes an image as input and creates two patterns called upper and lower binary patterns.

Ouahabi et al. proposed a new approach based on the discrete wavelet transform (DWT) for multifractal analysis [43]. The multifractal analysis technique was applied to ultrasound images [44]. Gupta et al. [45] used SIFT and SURF descriptors for face recognition and applied them to five different public datasets. Juan et al. [46] used an evolutionary technique to obtain texture features from a facial image dataset. The evolutionary technique finds several interest points in a face image. Shekhar et al. [47] used two new texture feature descriptors based on a zigzag approach, named the color zigzag binary pattern (CZZBP) and the color median block zigzag binary pattern (CMBZZBP). This zigzag texture feature descriptor uses the three RGB color components to extract features. Agarwal et al. [48] introduced a descriptor based on local pixel information named the Haar-like local ternary co-occurrence pattern (HLTCoP) for image retrieval. Al-Barhi et al. [49] used a PCA-based SIFT for a static face recognition system.

The local binary co-occurrence pattern [50] and local extrema co-occurrence pattern [51] were suggested by Verma; they compare each pixel of the image with its center-symmetric pixels. Verma considered only four directions: 0, 45, 90, and 135 degrees. This approach reduces the computation cost, but because only four pixels are considered, a lot of important information that could be used to create a good descriptor is left behind. Verma proposed another directional pattern named the local tri-directional pattern (LTriDP) [52], which considers only three directions.

Numerous approaches have been developed using neural networks (NNs). The CNN-based feature transformation (NFT) approach was introduced in [53]; it helps to reduce the redundancy in features before classification. Charles et al. [54] introduced a local mesh color texture pattern (LMCTP), which merges color and spatial features to create a local descriptor. Amy et al. [55] used a transfer learning approach to find TM logo similarity for image retrieval. Christy et al. [56] proposed a new methodology for CBIR, which extracts pixel information from an image based on its shape, attributes, and tag.

The multi-trend structure descriptor (MTSD) [57] compares the target pixel with its neighbors in four directions (0, 45, 90, and 135 degrees) and ignores the rest. MTSD uses a left-to-right, bottom-up approach for convolution and only a 3 × 3 kernel. MTSD uses a fixed threshold to compute the binary value. Bala et al. [58] proposed texton-based local texton XOR patterns (LTxXORP) and used them for CBIR. Their method extracts structural features using texton XOR. Banerjee et al. [59] introduced a local neighborhood intensity pattern (LNIP) for content-based image retrieval. One of the best features of this approach is illumination resistance. The color component approach was utilized again by Bhunia et al. [60], who combined color and texture information and used HSV (Hue and Saturation) for the color component. Manickam et al. [61] used a local directional technique (four directions only) named the extrema number pattern (LDIRENP) for content-based image retrieval. Li et al. [62] studied current developments in the field of CBIR.

Recently, a local tetra-directional pattern was introduced by Bedi et al. [63], in which they compared a pixel with the four most adjacent pixels (0°, 45°, 90°, and 135°).

Among all the descriptors described above, the most appropriate are the local tri-directional and tetra-directional patterns. These methods use a fixed set of directions and leave a very important chunk of image information behind, which compromises the accuracy of the feature descriptor. The proposed methodology tries to overcome this issue by utilizing the information of all the neighboring pixels. It also proposes a solution to reduce the computation cost.

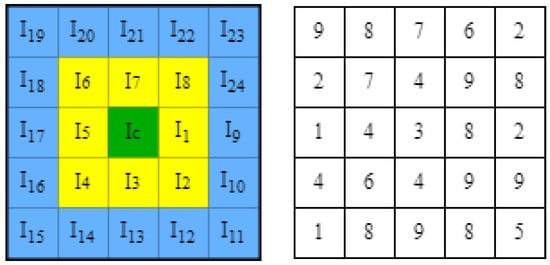

Each of the eight pixels is as important as the three angles used by [51] or the four angles used by [63]. For example, in Figure 2, in the tri-directional pattern for location I1, the value 8 is compared only with the pixels above and below it and with the central pixel, whereas higher-intensity pixels in the second radius, which could contribute to a stronger feature vector, are left behind. In the tetra-directional pattern, the second radius is not fully utilized either: at I1, the value 8 is compared only with the pixels at angles 0°, 45°, 90°, and 135°, which are 2, 8, 9, and 4, and the high-intensity pixels at angles 270° and 315° are again left behind. The second drawback is that Bedi [63] utilized the second radius but did not describe how to handle image edges, where the second radius is not available. The third disadvantage of both approaches is their reliance on the Euclidean distance, although the accuracy of the Manhattan distance has been demonstrated [64]. Recent state-of-the-art work did not use all the directions because of the high computation cost, and as a result it truncates important pixel information.

Figure 2.

Radius 1 and 2 Pixel Numbering.

3. Materials and Methods

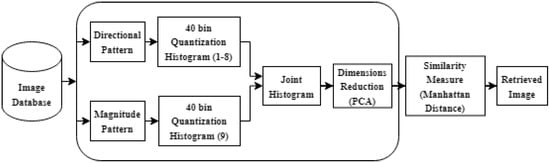

The proposed model is shown in Figure 3. The algorithm has three parts: in the first part, the directional pattern is created, and in the second part, the magnitude pattern is created. The histograms of both patterns are then joined to create a combined feature vector, which is passed to the third part, where PCA reduces the dimensionality.

Figure 3.

Proposed Framework of PCA-based Advanced Local Octa-Directional Pattern (ALODP-PCA).

3.1. Steps

- Directional pattern calculation: In step 1 of the proposed algorithm, the directional pattern is created. Details of the calculation are given in Section 3.2.1.

- Magnitude pattern calculation: In step 2 of the proposed algorithm, the magnitude pattern is created. Details of the calculation are given in Section 3.2.2.

- Quantization histogram creation: For each directional and magnitude pattern, a 40-bin quantization histogram is created.

- Joint histogram creation: In this step, all directional and magnitude histograms are combined to create a joint histogram.

- Dimension reduction: In this step, principal component analysis (PCA) is used to select the most important features from the image. Details are given in Section 3.2.3.

- Similarity measure: In the final step, the Manhattan distance is used as the similarity measure. Details are given in Section 3.2.3.

3.2. Advanced Local Octa-Directional Pattern

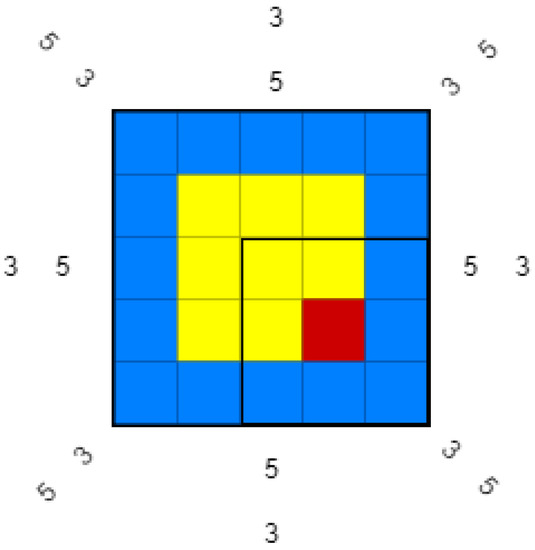

The advanced local octa-directional pattern (ALODP-PCA) not only utilizes all the surrounding pixel information but also reduces the computation cost. Instead of taking 3 directions [52] or 4 directions [63], ALODP-PCA utilizes all of the directions in the first radius. True information about any pixel is retained by its closest neighboring pixels. With the help of all surrounding pixel information, we can obtain the true value of the center pixel. Therefore, the proposed methodology considers the eight neighbor pixels closest to the center pixel (from the first radius) to obtain the direction pattern. To handle edges and corners, where the 8 surrounding pixels are not all available, the missing values are taken as 0 when computing the value of the center pixel. Figure 4 shows how and which pixels of radii 1 and 2 are involved in the octa-directional pattern.

Figure 4.

Radius 1 and 2 Pixel Involved in Octa Directional Calculation.
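The edge handling described above, where unavailable neighbors are taken as 0, can be sketched with zero padding. This is a minimal illustration under our own assumptions, not the authors' implementation: the helper names `pad_with_zeros` and `window_3x3` are hypothetical, and a padding width of 2 is chosen so that both radii are covered.

```python
import numpy as np

def pad_with_zeros(image: np.ndarray, radius: int = 2) -> np.ndarray:
    """Surround a 2-D grayscale image with `radius` rows/columns of zeros."""
    return np.pad(image, pad_width=radius, mode="constant", constant_values=0)

def window_3x3(padded: np.ndarray, row: int, col: int, radius: int = 2) -> np.ndarray:
    """Return the 3 x 3 window centred on pixel (row, col) of the original image."""
    r, c = row + radius, col + radius  # shift into padded coordinates
    return padded[r - 1:r + 2, c - 1:c + 2]

# Hypothetical 3 x 3 image; the top-left corner has only three real neighbours.
image = np.arange(1, 10).reshape(3, 3)
padded = pad_with_zeros(image)
corner = window_3x3(padded, 0, 0)
print(corner)  # missing neighbours appear as zeros
```

With padding in place, every pixel, including those at corners, has a full set of eight first-radius neighbors, so the same pattern computation can be applied uniformly.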

3.2.1. Direction Pattern

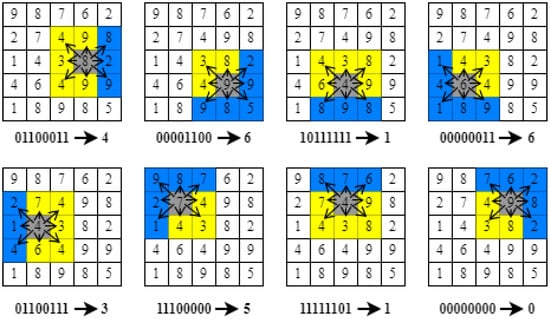

The center pixel is named Ic, which has 8 neighborhood pixels, I1, I2, …, I8, in the first radius and 16 pixels in the second radius. All the mathematical calculations are similar to those performed by Verma et al. [52]. The only difference is that Verma created 3 directional patterns, whereas we create eight directional patterns, and all the equations are modified accordingly. In the first step, the proposed methodology calculates the difference between each pixel and its center pixel. Figure 5 shows how the proposed methodology calculates the directional pattern. To illustrate how the proposed methodology works, a sample image of 5 × 5 is used. For each pixel location I1 to I8, directional differences are calculated by comparing the pixel with its surrounding eight pixels, as given below.

Figure 5.

Direction Calculation for First Radius Pixels.

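$$
\begin{aligned}
D_1 &= I_{i-1,\,j-1} - I_{i,j}, & D_2 &= I_{i-1,\,j} - I_{i,j},\\
D_3 &= I_{i-1,\,j+1} - I_{i,j}, & D_4 &= I_{i,\,j-1} - I_{i,j},\\
D_5 &= I_{i,\,j+1} - I_{i,j}, & D_6 &= I_{i+1,\,j-1} - I_{i,j},\\
D_7 &= I_{i+1,\,j} - I_{i,j}, & D_8 &= I_{i+1,\,j+1} - I_{i,j}.
\end{aligned}
$$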

After obtaining the eight differences, D1, D2, …, D8, a pattern value is calculated from them as given in Equation (1).

In Equation (1), the count of differences less than 0 is taken modulo the number of directions, which is 8 in our case. This gives the function value. Next, we calculate the pattern value for each neighborhood pixel using Equation (2).
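The eight differences and the count-modulo-8 step described above can be sketched as follows. This is an illustrative reading of the text, not the authors' code: the function names `octa_differences` and `function_value` are hypothetical, and zero padding is assumed for pixels whose neighbors fall outside the image.

```python
import numpy as np

# (row, col) offsets for D1..D8, in the order of the difference equations above.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def octa_differences(image: np.ndarray, row: int, col: int) -> np.ndarray:
    """Differences between the eight neighbours of (row, col) and the pixel itself."""
    # Signed integers avoid wrap-around on uint8 images; zero padding is an assumption.
    padded = np.pad(image.astype(np.int64), 1, mode="constant", constant_values=0)
    r, c = row + 1, col + 1
    centre = padded[r, c]
    return np.array([padded[r + dr, c + dc] - centre for dr, dc in OFFSETS])

def function_value(diffs: np.ndarray, n_directions: int = 8) -> int:
    """Count of negative differences, taken modulo the number of directions."""
    return int(np.count_nonzero(diffs < 0)) % n_directions

# Hypothetical 3 x 3 patch used only for illustration.
patch = np.array([[5, 9, 1],
                  [4, 6, 7],
                  [2, 8, 3]])
diffs = octa_differences(patch, 1, 1)
print(diffs, function_value(diffs))
```

Note that when all eight differences are negative, the modulo operation maps the count 8 back to 0, so the function value always lies between 0 and 7.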

Equations (3)–(5) show the conversion of each pattern value into binary. For example, for each value in Equation (3), the function S shown in Equation (3a) is applied, which transforms it into binary form. By the end of this step, there are 8 patterns, each made up of 8 binary values.

The proposed method (PM) produces 8 directional patterns, which are used to generate 8 histograms using Equation (6).

3.2.2. Magnitude Pattern

Murala et al. [17] have shown that, like the directional pattern, the magnitude of a pixel also plays a very important role in creating a feature vector. As with the directional pattern, the magnitude computation uses all eight directions. Figure 6 shows how the proposed methodology calculates the magnitude pattern. To illustrate how the proposed method works, a sample image of 5 × 5 is used. The magnitude at pixel locations I1 to I8 is calculated using Equation (7), where each pixel is compared with its surrounding eight pixels.

In Equation (7), the terms represent the pixels at the corresponding locations. Each magnitude value is compared with the central magnitude value: if the magnitude at a pixel is greater than the central magnitude, the binary value 1 is assigned to it; otherwise, it is 0, as shown in Equation (8).

Figure 6.

Magnitude Calculation in 5 × 5 Matrix for Radius 1 Pixels.
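The thresholding rule described for Equation (8) can be sketched as below. The magnitudes themselves are taken as given inputs, because the exact form of Equation (7) is not reproduced here. The function names and the bit-weighting used to turn the eight bits into a single code are our assumptions (an LBP-style encoding), not something the text specifies.

```python
import numpy as np

def magnitude_bits(neighbour_mags, centre_mag) -> np.ndarray:
    """Bit is 1 where a neighbour's magnitude exceeds the central magnitude, else 0."""
    return (np.asarray(neighbour_mags) > centre_mag).astype(np.uint8)

def bits_to_code(bits: np.ndarray) -> int:
    """Pack the bits into one integer (assumption: first neighbour is the lowest bit)."""
    weights = 1 << np.arange(len(bits))
    return int(np.dot(bits, weights))

# Hypothetical magnitudes for I1..I8 and for the centre pixel.
mags = [3.0, 7.5, 5.0, 9.1, 2.2, 5.0, 6.4, 1.0]
bits = magnitude_bits(mags, centre_mag=5.0)
code = bits_to_code(bits)
print(bits, code)
```

A neighbor whose magnitude equals the central magnitude receives 0 here, because the rule in the text requires the magnitude to be strictly greater.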

After obtaining the magnitude pattern, a histogram is generated from it. Then, all nine histograms (eight directional and one magnitude) are concatenated into a single joint histogram using Equation (9).
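The quantization and concatenation steps can be sketched as follows, assuming each pattern map holds 8-bit codes (0 to 255) that are binned uniformly into 40 bins. The uniform binning, the function names, and the random stand-in data are assumptions for illustration only.

```python
import numpy as np

N_BINS = 40  # bins per histogram, as stated in the text

def quantized_histogram(pattern_map: np.ndarray, n_bins: int = N_BINS,
                        n_codes: int = 256) -> np.ndarray:
    """40-bin histogram of pattern codes (assumes uniform bins over 0..n_codes-1)."""
    hist, _ = np.histogram(pattern_map, bins=n_bins, range=(0, n_codes))
    return hist.astype(np.float64)

def joint_histogram(directional_maps, magnitude_map) -> np.ndarray:
    """Concatenate the eight directional histograms and the magnitude histogram."""
    hists = [quantized_histogram(m) for m in directional_maps]
    hists.append(quantized_histogram(magnitude_map))
    return np.concatenate(hists)

# Random stand-ins for the eight directional pattern maps and one magnitude map.
rng = np.random.default_rng(0)
directional_maps = [rng.integers(0, 256, size=(32, 32)) for _ in range(8)]
magnitude_map = rng.integers(0, 256, size=(32, 32))
feature = joint_histogram(directional_maps, magnitude_map)
print(feature.shape)  # nine histograms of 40 bins each
```

Nine histograms of 40 bins give a 360-dimensional feature vector, which is the input to the dimension reduction step.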

To elaborate the working of ALODP, a sample image of 5 × 5 is used, as shown in Figure 6. The magnitude pattern is given below.

Here, Magc is greater than Magi; therefore, as per Equation (8a), Magi will be 0.

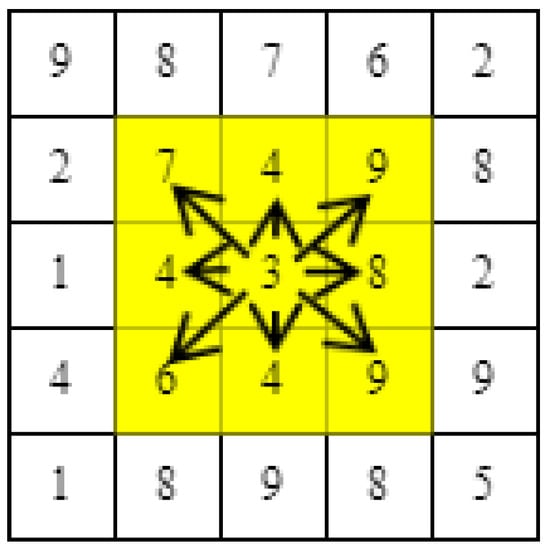

Figure 7 shows how the proposed methodology calculates the directional pattern for radius 2. For each pixel location, I1 to I8, directional differences are calculated by comparing the pixel with its surrounding eight pixels, as given below, and the value is taken as zero for pixels where no value is available, especially at the edges. The same methodology is used to calculate the magnitude at the edges, as depicted in Figure 7.

Figure 7.

Direction and Magnitude Calculation for Second Radius Pixels.

3.2.3. Dimension Reduction and Similarity Measure

Principal component analysis (PCA) is one of the popular techniques for reducing the dimensionality of data. PCA computes the eigenvalues and eigenvectors of the covariance matrix of the data to find the directions of greatest variance. The highest-ranked component in PCA is the most valuable one; for this reason, we find, utilize, and compare the results of the top 5, 10, and 15 principal components. To quantify the resemblance between images, we can look either for high similarity or for a small distance between two images; both give the same results. The Manhattan distance has been shown to work far better than other distance metrics [64].
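A minimal sketch of this step, assuming a database of 360-dimensional joint histograms, is given below. PCA is computed here through the singular value decomposition of the centered data, which yields the same principal directions as the eigenvectors of the covariance matrix. The function names and the random stand-in features are hypothetical.

```python
import numpy as np

def fit_pca(features: np.ndarray, n_components: int):
    """Return the feature mean and the top principal directions."""
    mean = features.mean(axis=0)
    centred = features - mean
    # Rows of vt are principal directions, sorted by decreasing variance.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return mean, vt[:n_components]

def project(features: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project feature vectors onto the retained principal components."""
    return (features - mean) @ components.T

def manhattan_rank(query: np.ndarray, database: np.ndarray):
    """Rank database rows by Manhattan (L1) distance to the query, nearest first."""
    distances = np.abs(database - query).sum(axis=1)
    return np.argsort(distances, kind="stable"), distances

# Random stand-in for 50 joint histograms of length 360.
rng = np.random.default_rng(0)
database = rng.random((50, 360))
mean, components = fit_pca(database, n_components=10)
reduced = project(database, mean, components)
order, distances = manhattan_rank(reduced[7], reduced)
print(order[:5])  # indices of the five nearest images
```

Changing `n_components` to 5, 10, or 15 reproduces the three settings compared in this work; a smaller distance corresponds to a higher similarity, so ranking by ascending distance is equivalent to ranking by descending similarity.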

3.3. Dataset Description

In this research, a subset of the Multi-PIE face dataset has been used. This dataset contains over 750,000 images of 337 people's faces taken from different angles and with different expressions. Another reason for choosing this dataset is that it contains images under 19 illumination conditions with different resolutions. The overall size of this dataset is 305 GB. Some of the images from the dataset are shown in Figure 8.

Figure 8.

CMU Multi-PIE Database Sample Images.

4. Results

To evaluate the capability of the proposed methodology, accuracy, precision, and recall, along with computational time, are used as performance metrics. The proposed method queries an image from the image database, which returns many images. Some are relevant and some are not. Therefore, the precision, recall, and accuracy are computed by Equations (10)–(12), respectively.

The proposed methodology is tested in two ways: one without the dimension reduction technique, and one with dimension reduction. Each set of time results is compared with state-of-the-art methodologies.
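The three metrics can be illustrated with the sketch below, which assumes the standard retrieval definitions: precision is the fraction of retrieved images that are relevant, recall is the fraction of relevant images that are retrieved, and accuracy is the fraction of database images classified correctly as retrieved or not retrieved. These definitions and the function name are assumptions, since Equations (10)–(12) are not reproduced here.

```python
def retrieval_metrics(retrieved, relevant, database_size):
    """Precision, recall, and accuracy for one query (standard definitions assumed)."""
    retrieved, relevant = set(retrieved), set(relevant)
    tp = len(retrieved & relevant)       # relevant images that were retrieved
    fp = len(retrieved - relevant)       # retrieved but not relevant
    fn = len(relevant - retrieved)       # relevant but missed
    tn = database_size - tp - fp - fn    # correctly left out
    precision = tp / len(retrieved) if retrieved else 0.0
    recall = tp / len(relevant) if relevant else 0.0
    accuracy = (tp + tn) / database_size
    return precision, recall, accuracy

# Hypothetical query: 5 images retrieved, 4 relevant images exist, database of 20.
precision, recall, accuracy = retrieval_metrics(
    retrieved=[1, 2, 3, 4, 5], relevant=[2, 3, 5, 8], database_size=20)
print(precision, recall, accuracy)
```

In this example, three of the five retrieved images are relevant and one relevant image is missed, so the three values differ, which shows why all of them are reported.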

4.1. Computation Time: Without Dimension Reduction Techniques

The proposed methodology is tested on the Multi-PIE dataset against state-of-the-art methodologies, such as LTriDP [42], LTetDP [14], and LTP [11], without dimension reduction. The execution time to retrieve 1000 images is 60 s for LTriDP, 68 s for LTetDP, and 55 s for LTP, as shown in Table 1. The results show that computation time increases with the proposed methodology because it utilizes all eight directions.

Table 1.

Comparison of computation time: without dimension reduction.

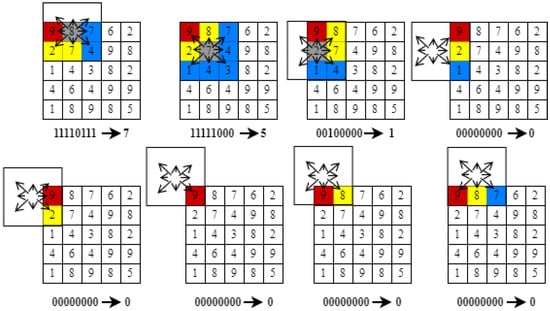

4.2. Computation Time: With Dimension Reduction Techniques

The proposed methodology is tested on the Multi-PIE dataset against state-of-the-art methodologies such as LTriDP [42], LTetDP [14], and LTP [11] with dimension reduction, and the results show a significant improvement over ALODP without dimension reduction. The performance measures show that ALODP outperforms the previously developed state-of-the-art methodologies. Table 2 presents a detailed comparison of the results obtained with dimension reduction using 5, 10, and 15 principal components, together with their execution times.

Table 2.

Comparison of computation time: with dimension reduction.

Execution time for the full dataset using the top 5, 10, and 15 principal components is 7.5, 8.75, and 9.58 h, respectively. Figure 9 shows the computation time comparison of LTriDP, LTetDP, and LTP with ALODP, which shows that the computation time of ALODP is the smallest.

Figure 9.

Computation time comparison of all methods on the Multi-PIE dataset.

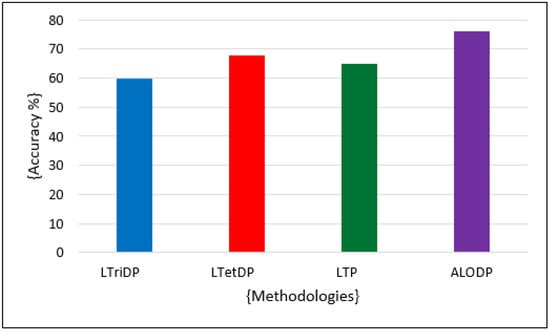

4.3. Comparative Analysis with Respect to Accuracy

Comparative analysis with respect to accuracy between ALODP and state-of-the-art methodologies shows that ALODP achieves 76% accuracy, compared with 60%, 68%, and 65% for LTriDP, LTetDP, and LTP, respectively. Accuracy results on the Multi-PIE dataset are given in Table 3.

Table 3.

Comparative analysis of accuracy.

Figure 10 shows the accuracy comparison of LTriDP, LTetDP, and LTP with ALODP, which shows that the accuracy of ALODP is the highest.

Figure 10.

Accuracy comparison of all methods on Multi-PIE dataset.

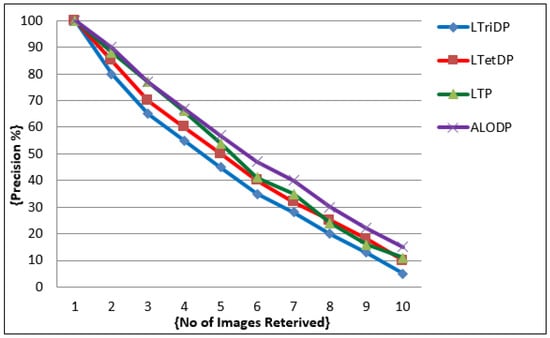

4.4. Comparative Analysis with Respect to Precision

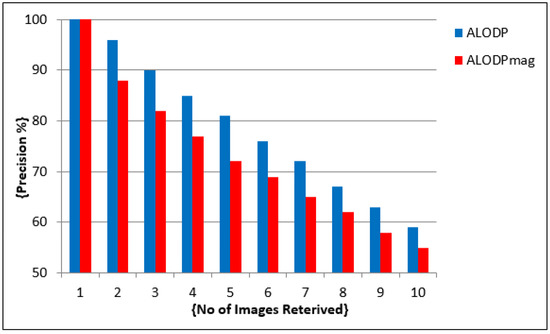

Figure 11 shows the precision results of ALODP with LTriDP [42], LTetDP [14], and LTP [11] obtained on the Multi-PIE face dataset. Figure 12 shows the comparison of ALODP and ALODPmag in terms of precision, which clearly shows that all eight directions play a bigger role in image retrieval, which was not considered in previous research.

Figure 11.

Precision comparison of all methods on the Multi-PIE dataset.

Figure 12.

Precision comparison of direction and magnitude feature on Multi-PIE dataset.

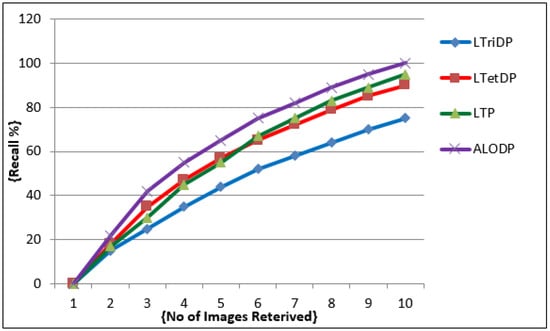

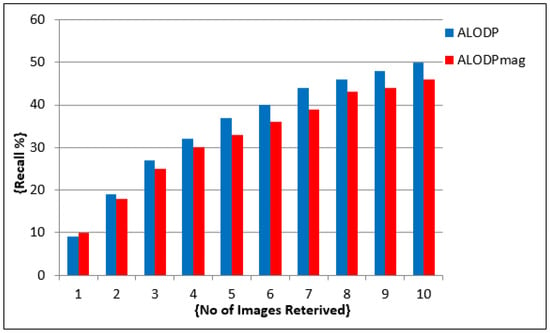

4.5. Comparative Analysis with Respect to Recall

This section presents the recall analysis of ALODP and other techniques on the Multi-PIE face dataset. Figure 13 shows that the mean recall for the top 10 retrievals is 96.02%, which demonstrates the effectiveness of the proposed method (PM) on the Multi-PIE dataset. This suggests that the PM can retrieve most of the relevant images correctly. Figure 14 shows the comparison of ALODP and ALODPmag in terms of recall, which shows that using all eight directions plays a bigger role in image retrieval, which was not considered in previous research.

Figure 13.

Recall comparison of all methods on Multi-PIE.

Figure 14.

Recall comparison of direction and magnitude feature on the Multi-PIE dataset.

Demonstration results of five query images are also shown in Figure 15. Regardless of which expression they have on their face, the system was able to locate the correct images.

Figure 15.

Multi-PIE Face Database Query Example.

Without PCA, the feature vector is long, which can slow performance. Using PCA to reduce the feature vector from 9 histograms of 40 bins each to just a few components (5, 10, and 15 in this work) retains the most informative features. As the number of principal components increased, the results declined, but only slowly. In all cases, the proposed methodology shows improved results compared with LTriDP, LTetDP, and LTP (Table 3).

5. Conclusions

This research proposes an improved version of the local directional pattern, the advanced local octa-directional pattern based on PCA. To capture all of a pixel's surrounding information, we compared the target pixel with its eight surrounding pixels to obtain the 8-directional patterns. In a similar way, the magnitude pattern also uses the eight surrounding pixels to obtain the magnitude vector. Because checking all the pixels is computationally expensive, PCA is used to minimize this overhead, which gives optimal performance. Previously designed approaches skipped part of the pixels' surrounding information; the proposed approach collects the information of all the surrounding pixels to create an enriched feature vector and discards only the components that carry little information. Moreover, the proposed methodology also describes how to handle pixels in the second radius or at the edge, which was not described previously. Extensive experiments showed that ALODP clearly outperforms the existing methods, LTriDP [52], LTetDP [17], and LTP [11], in terms of accuracy, precision, and recall.

Author Contributions

Conceptualization, M.Q. and D.M.; methodology, M.Q., D.M., G.A.; software, M.Q., A.B., S.J.H.; validation, M.Q., D.M. and A.B.; formal analysis, S.K., N.Z.J.; investigation, A.B., G.A.; resources, S.K., S.J.H.; data curation, G.A. and S.K.; writing—original draft preparation, M.Q.; writing—review and editing, M.Q., A.B., D.M., N.Z.J.; visualization, A.B., G.A.; supervision, D.M.; project administration, D.M. and G.A.; funding acquisition, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by Taif University Researchers Supporting Project number (TURSP-2020/10) Taif University, Taif, Saudi Arabia.

Data Availability Statement

In this research, the Multi-PIE face dataset has been used. It is a state-of-the-art, freely available dataset with over 750,000 images of 337 people's faces taken from different angles and with different expressions.

Acknowledgments

The authors would like to acknowledge Taif University Researchers Supporting Project number (TURSP-2020/10), Taif University, Taif, Saudi Arabia.

Conflicts of Interest

The authors declare that they have no conflict of interest to report regarding the present study.

References

1. Verma, M.; Raman, B. Local neighborhood difference pattern: A new feature descriptor for natural and texture image retrieval. Multimed. Tools Appl. 2017, 77, 11843–11866.

2. Yu, L.; Feng, L.; Wang, H.; Li, L.; Liu, Y.; Liu, S. Multi-trend binary code descriptor: A novel local texture feature descriptor for image retrieval. Signal Image Video Process. 2017, 12, 247–254.

3. Bovik, A.; Clark, M.; Geisler, W.S. Multichannel texture analysis using localized spatial filters. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 55–73.

4. Chang, T.; Kuo, C.-C. Texture analysis and classification with tree-structured wavelet transform. IEEE Trans. Image Process. 1993, 2, 429–441.

5. Manjunath, B.S.; Ma, W.Y. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.

6. Randen, T.; Husøy, J.H. Filtering for texture classification: A comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 291–310.

7. Naghashi, V. Co-occurrence of adjacent sparse local ternary patterns: A feature descriptor for texture and face image retrieval. Optik 2018, 157, 877–889.

8. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. Syst. 1973, 6, 610–621.

9. Laine, A.; Fan, J. Texture classification by wavelet packet signatures. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1186–1191.

10. Unser, M. Texture classification and segmentation using wavelet frames. IEEE Trans. Image Process. 1995, 4, 1549–1560.

11. Tan, X.; Triggs, W. Enhanced Local Texture Feature Sets for Face Recognition Under Difficult Lighting Conditions. IEEE Trans. Image Process. 2010, 19, 1635–1650.

12. Zheng, M.; Qi, G.; Zhu, Z.; Li, Y.; Wei, H.; Liu, Y. Image Dehazing by an Artificial Image Fusion Method Based on Adaptive Structure Decomposition. IEEE Sens. J. 2020, 20, 8062–8072.

13. Zhu, Z.; Wei, H.; Hu, G.; Li, Y.; Qi, G.; Mazur, N. A Novel Fast Single Image Dehazing Algorithm Based on Artificial Multiexposure Image Fusion. IEEE Trans. Instrum. Meas. 2020, 70, 1–23.

14. Ouahabi, A. Signal and Image Multiresolution Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2012.

15. Ahmed, S.S.; Messali, Z.; Ouahabi, A.; Trepout, S.; Messaoudi, C.; Marco, S. Nonparametric Denoising Methods Based on Contourlet Transform with Sharp Frequency Localization: Application to Low Exposure Time Electron Microscopy Images. Entropy 2015, 17, 3461–3478.

16. Ouahabi, A. A Review of Wavelet Denoising in Medical Imaging. In Proceedings of the 8th International Workshop on Systems, Signal Processing and Their Applications (IEEE/WoSSPA), Algiers, Algeria, 12–15 May 2013; pp. 19–26.

17. Murala, S.; Maheshwari, R.P.; Balasubramanian, R. Local Tetra Patterns: A New Feature Descriptor for Content-Based Image Retrieval. IEEE Trans. Image Process. 2012, 21, 2874–2886.

18. Murala, S.; Wu, Q.J. Local ternary co-occurrence patterns: A new feature descriptor for MRI and CT image retrieval. Neurocomputing 2013, 119, 399–412.

19. Liu, L.; Yang, B.; Fieguth, P.; Yang, Z.; Wei, Y. BRINT: A Binary Rotation Invariant and Noise Tolerant Texture Descriptor. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 255–259.

20. Vimina, E.R.; Divya, M.O. Maximal multi-channel local binary pattern with colour information for CBIR. Multimed. Tools Appl. 2020, 79, 25357–25377.

21. Mistry, Y.D. Textural and color descriptor fusion for efficient content-based image retrieval algorithm. Iran J. Comput. Sci. 2020, 3, 169–183.

22. Ren, J.; Jiang, X.; Yuan, J. Noise-Resistant Local Binary Pattern With an Embedded Error-Correction Mechanism. IEEE Trans. Image Process. 2013, 22, 4049–4060.

23. Zhao, Y.; Jia, W.; Hu, R.-X.; Min, H. Completed robust local binary pattern for texture classification. Neurocomputing 2013, 106, 68–76.

24. Jacob, I.J.; Srinivasagan, K.; Jayapriya, K. Local Oppugnant Color Texture Pattern for image retrieval system. Pattern Recognit. Lett. 2014, 42, 72–78.

25. Walia, E.; Pal, A. Fusion framework for effective color image retrieval. J. Vis. Commun. Image Represent. 2014, 25, 1335–1348.

26. Jain, Y.K.; Yadav, R. Content-based image retrieval approach using three features color, texture and shape. Int. J. Comput. Appl. 2014, 97, 1–8.

27. Feng, L.; Wu, J.; Liu, S.; Zhang, H. Global Correlation Descriptor: A novel image representation for image retrieval. J. Vis. Commun. Image Represent. 2015, 33, 104–114.

28. Asif, M.D.A.; Wang, J.; Gao, Y.; Zhou, J. Composite description based on color vector quantization and visual primary features for CBIR tasks. Multimed. Tools Appl. 2021, 80, 33409–33427.

29. Garg, M.; Dhiman, G. A novel content-based image retrieval approach for classification using GLCM features and texture fused LBP variants. Neural Comput. Appl. 2020, 33, 1311–1328.

30. Khan, S.; Ilyas, Q.M.; Anwar, W. Contextual Advertising Using Keyword Extraction through Collocation. In Proceedings of the 7th International Conference on Frontiers of Information Technology, Abbottabad, Pakistan, 16–18 December 2009; pp. 1–5.

31. Mubeen, Z.; Afzal, M.; Ali, Z.; Khan, S.; Imran, M. Detection of impostor and tampered segments in audio by using an intelligent system. Comput. Electr. Eng. 2021, 91, 107122.

32. Ranade, S.K.; Anand, S. Color face recognition using normalized-discriminant hybrid color space and quaternion moment vector features. Multimed. Tools Appl. 2021, 80, 10797–10820.

33. Sawat, D.D.; Santosh, K.C.; Hegadi, R.S. Optimization of Face Retrieval and Real Time Face Recognition Systems Using Heuristic Indexing. In Proceedings of the International Conference on Recent Trends in Image Processing and Pattern Recognition, Aurangabad, India, 3–4 January 2020; pp. 69–81.

34. Qian, J.; Yang, J.; Xu, Y.; Xie, J.; Lai, Z.; Zhang, B. Image decomposition based matrix regression with applications to robust face recognition. Pattern Recognit. 2020, 102, 107204.

35. Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, Present, and Future of Face Recognition: A Review. Electronics 2020, 9, 1188.

36. Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Jacques, S. Multi-Block Color-Binarized Statistical Images for Single-Sample Face Recognition. Sensors 2021, 21, 728.

37. Khaldi, Y.; Benzaoui, A.; Ouahabi, A.; Jacques, S.; Taleb-Ahmed, A. Ear Recognition Based on Deep Unsupervised Active Learning. IEEE Sens. J. 2021, 21, 20704–20713.

38. El Morabit, S.; Rivenq, A.; Zighem, M.-E.; Hadid, A.; Ouahabi, A.; Taleb-Ahmed, A. Automatic Pain Estimation from Facial Expressions: A Comparative Analysis Using Off-the-Shelf CNN Architectures. Electronics 2021, 10, 1926.

39. Mimouna, A.; Alouani, I.; Ben Khalifa, A.; El Hillali, Y.; Taleb-Ahmed, A.; Menhaj, A.; Ouahabi, A.; Ben Amara, N.E. OLIMP: A Heterogeneous Multimodal Dataset for Advanced Environment Perception. Electronics 2020, 9, 560.

40. Dubey, S.R.; Singh, S.K.; Singh, R.K. Local Wavelet Pattern: A New Feature Descriptor for Image Retrieval in Medical CT Databases. IEEE Trans. Image Process. 2015, 24, 5892–5903.

41. Giveki, D.; Soltanshahi, M.A.; Montazer, G.A. A new image feature descriptor for content based image retrieval using scale invariant feature transform and local derivative pattern. Optik 2017, 131, 242–254.

42. Wu, X.; Sun, J.; Fan, G.; Wang, Z. Improved Local Ternary Patterns for Automatic Target Recognition in Infrared Imagery. Sensors 2015, 15, 6399–6418.

43. Ouahabi, A. Multifractal Analysis for Texture Characterization: A New Approach Based on DWT. In Proceedings of the 10th International Conference on Information Science, Signal Processing and their Applications (ISSPA 2010), Kuala Lumpur, Malaysia, 10–13 May 2010; pp. 698–703.

44. Meriem, D.; Abdeldjalil, O.; Hadj, B.; Adrian, B.; Denis, K. Discrete Wavelet for Multifractal Texture Classification: Application to Medical Ultrasound Imaging. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 637–640.

45. Gupta, S.; Thakur, K.; Kumar, M. 2D-human face recognition using SIFT and SURF descriptors of face’s feature regions. Vis. Comput. 2020, 37, 447–456.

46. Villegas-Cortez, J.; Benavides-Alvarez, C.; Avilés-Cruz, C.; Román-Alonso, G.; de Vega, F.F.; Chávez, F.; Cordero-Sánchez, S. Interest points reduction using evolutionary algorithms and CBIR for face recognition. Vis. Comput. 2020, 37, 1883–1897.

47. Karanwal, S.; Diwakar, M. Two novel color local descriptors for face recognition. Optik 2020, 226, 166007.

48. Agarwal, M.; Singhal, A. Directional local co-occurrence patterns based on Haar-like filters. Multimed. Tools Appl. 2021, 1–15.

49. Al-Bahri, I.M.; Fageeri, S.O.; Said, A.M.; Sagayee, G.M.A. A Comparative Study between PCA and Sift Algorithm for Static Face Recognition. In Proceedings of the 2020 International Conference on Computer, Control, Electrical, and Electronics Engineering (ICCCEEE), Khartoum, Sudan, 21–23 September 2021; pp. 1–5.

50. Verma, M.; Raman, B. Center symmetric local binary co-occurrence pattern for texture, face and bio-medical image retrieval. J. Vis. Commun. Image Represent. 2015, 32, 224–236.

51. Verma, M.; Raman, B.; Murala, S. Local extrema co-occurrence pattern for color and texture image retrieval. Neurocomputing 2015, 165, 255–269.

52. Verma, M.; Raman, B. Local tri-directional patterns: A new texture feature descriptor for image retrieval. Digit. Signal Process. 2016, 51, 62–72.

53. Song, Y.; Li, Q.; Feng, D.; Zou, J.J.; Cai, W. Texture image classification with discriminative neural networks. Comput. Vis. Media 2016, 2, 367–377.

54. Charles, Y.R.; Ramraj, R. A novel local mesh color texture pattern for image retrieval system. AEU Int. J. Electron. Commun. 2016, 70, 225–233.

55. Trappey, A.J.; Trappey, C.V.; Shih, S. An intelligent content-based image retrieval methodology using transfer learning for digital IP protection. Adv. Eng. Inform. 2021, 48, 101291.

56. Christy, A.J.; Dhanalakshmi, K. Content-Based Image Recognition and Tagging by Deep Learning Methods. Wirel. Pers. Commun. 2021, 1–26.

57. Zhao, M.; Zhang, H.; Sun, J. A novel image retrieval method based on multi-trend structure descriptor. J. Vis. Commun. Image Represent. 2016, 38, 73–81.

58. Bala, A.; Kaur, T. Local texton XOR patterns: A new feature descriptor for content-based image retrieval. Eng. Sci. Technol. Int. J. 2016, 19, 101–112.

59. Banerjee, P.; Bhunia, A.K.; Bhattacharyya, A.; Roy, P.P.; Murala, S. Local Neighborhood Intensity Pattern: A new texture feature descriptor for image retrieval. Expert Syst. Appl. 2018, 113, 100–115.

60. Bhunia, A.K.; Bhattacharyya, A.; Banerjee, P.; Roy, P.P.; Murala, S. A novel feature descriptor for image retrieval by combining modified color histogram and diagonally symmetric co-occurrence texture pattern. Pattern Anal. Appl. 2019, 23, 703–723.

61. Manickam, A.; Soundrapandiyan, R.; Satapathy, S.C.; Samuel, R.D.J.; Krishnamoorthy, S.; Kiruthika, U.; Haldar, R. Local Directional Extrema Number Pattern: A New Feature Descriptor for Computed Tomography Image Retrieval. Arab. J. Sci. Eng. 2021, 1–23.

62. Li, X.; Yang, J.; Ma, J. Recent developments of content-based image retrieval (CBIR). Neurocomputing 2021, 452, 675–689.

63. Bedi, A.K.; Sunkaria, R.K. Local Tetra-Directional Pattern: A New Texture Descriptor for Content-Based Image Retrieval. Pattern Recognit. Image Anal. 2020, 30, 578–592.

64. Ponnmoli, K.; Selvamuthukumaran, D.S. Analysis of Face Recognition using Manhattan Distance Algorithm with Image Segmentation. Int. J. Comput. Sci. Mob. Comput. 2014, 3, 18–27.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).