Abstract

Trustworthy models of the equities market enable investors to make better-informed choices. A trading model can reduce the risks associated with investing and help traders choose companies that offer the highest dividends. However, because stock prices are highly correlated, batch processing approaches make stock market analysis more difficult. With the advent of global digitization, stock market prediction has entered a technologically advanced era, and artificial intelligence models have become very important as market capitalization continues to grow. The novelty of this study is the development of a robust time series model based on deep learning for forecasting future stock market values. The primary purpose of this study was to develop an intelligent framework capable of predicting the direction in which stock market prices will move, using financial time series as inputs. Artificial intelligence has become the backbone of many models that predict the direction of markets, and deep learning strategies in particular have been effective at forecasting market behavior. In this article, we propose a framework based on long short-term memory (LSTM) and a hybrid of a convolutional neural network with LSTM (CNN-LSTM) to predict the closing prices of Tesla, Inc. and Apple, Inc. These predictions were made using data collected over the past two years. The mean squared error (MSE), root mean squared error (RMSE), normalized root mean squared error (NRMSE), and Pearson’s correlation (R) were used to evaluate the deep learning stock prediction models. Of the two deep learning models, the CNN-LSTM model scored slightly better (Tesla: R-squared = 98.37%; Apple: R-squared = 99.48%). The CNN-LSTM model showed superior performance compared with the single LSTM model and existing systems in predicting stock market prices.

1. Introduction

A high quality of living can be achieved when nations prioritize the growth and development of their economies in order to maintain adequate levels of public spending. In today’s fast-paced economy, big businesses spring up to take advantage of untapped opportunities and adapt to the constant flux of the international marketplace [1,2]. The stock market is a marketplace where diverse assets may be bought and sold by participating investors on public, private, and mixed-ownership stock exchanges [3]. Shares of publicly traded corporations are traded on the open stock exchange, whereas shares of privately owned companies are traded on the private stock exchange. The mixed-ownership stock exchange is invested in businesses with common shares that can be traded on the public stock market only in limited circumstances. Mixed-ownership stock exchanges are established in countries such as the United Kingdom (London Stock Exchange) and the United States (New York Stock Exchange) [4,5,6,7,8,9].

Investors have been attempting to foresee fluctuations in share prices ever since stock capital markets were first established. At that time, however, the amount of data accessible to them was rather restricted, and the methods for processing these data were relatively straightforward. Since then, the amount of data made available to investors has substantially increased, and new methods of processing these data have been made available as well. Even with all the recent advances in technology and cutting-edge trading algorithms, it is still an exceedingly difficult challenge for the majority of academics and investors to accurately predict the movements of stock prices. Traditional models, which have been in use for decades and are typically based on fundamental analysis, technical analysis, and statistical methods (such as regression in [10]), are frequently unable to completely reflect the complexity of the problem that is currently being addressed.

The stock market serves as the backbone of every economy, and the primary objectives of any stock market investment are to earn a high return and reduce losses to a minimum [4]. Therefore, countries should strive to strengthen their stock markets, since doing so is associated with economic growth [11]. Since the stock market is a potential source of quick returns on investment, making profitable stock market predictions is a viable means to financial independence. Stock market behavior is non-linear, which makes it more difficult to forecast the stock prices of a particular firm in a certain market [12]. As a consequence, researchers and investors need to identify methods that can lead to more accurate outcomes and bigger earnings [13]. Long-established statistical models, such as the autoregressive integrated moving average (ARIMA), have been found to be inferior to machine learning models [14]. Additionally, research shows that deep learning models such as long short-term memory (LSTM) outperform machine learning models such as support vector regression (SVR) [15]. Artificial neural networks (ANNs) were also shown to be superior to support vector machines [16].

The advances made in machine learning and deep learning have created new opportunities for building stock price movement prediction models based on time-series data characterized by high cardinality, such as limit order book (LOB) data. These models are used to forecast how stock prices will change in the future. As a direct result, this field has attracted increasing attention from researchers over the past several years. The prediction performance of the proposed models is typically reported to be quite high. The authors of several state-of-the-art machine learning and deep learning models (e.g., [17,18]) have claimed an accuracy of more than 80%. From a more pragmatic point of view, these outcomes look too good to be repeatable in real-world stock trading.

It is important to keep in mind, however, that the stock market is a trading platform that is ultimately controlled by the forces of supply and demand. In this paper, deep learning models were developed to anticipate momentum, strength, and volatility indicators for the purpose of assisting investors in making judgments that would provide accuracy and safety against rapid volatility in opposite directions to a transaction. To find the strategy that is most accurate for predicting future price fluctuations, we carried out a comprehensive investigation.

2. Background of the Study

Predicting the values of currency exchanges and stock markets has been the subject of a large number of studies [19,20,21,22,23] in recent years. In [24], a generative adversarial network architecture is described, and LSTM is recommended for use as a generator. As a discriminator for the layout, multi-layer perceptron (MLP) was suggested. Multiple metrics have been utilized to make comparisons between GAN, LSTM, ANNs, and SVR. Across the board, the suggested generative adversarial network (GAN) model was shown to be the most effective. The use of big data would make it possible to innovate more quickly and with greater efficiency. Examples of financial innovations that have contributed to the development of the financial sector and the expansion of the economy include exchange-traded funds, venture capital, and equity funds [25,26,27,28,29,30].

Because of this issue, there is a need for intelligent systems that can retrieve real-time pricing information, which can improve investors’ ability to maximize their profits [28]. Decision support, modeling expertise, and automation of complex tasks have all benefited from the development of intelligent systems and artificial intelligence techniques in recent years. Some examples include artificial neural networks (ANNs), genetic algorithms, support vector machines, machine learning, probabilistic belief networks, and fuzzy logic [31,32]. Among these methods, ANNs are the most widely used in a variety of fields. Because they learn directly from the training data, ANNs are able to model complicated non-linear relationships between input factors and output variables. Researchers investigating the use of ANNs for a variety of decision support systems have found that one of the most appealing aspects of ANNs is their capacity to serve as models for a broad range of real-world systems. However, despite the growing popularity of ANNs, only limited success has been realized thus far, mostly because of the unpredictable behavior and complexity of the stock market [33]. Several researchers have investigated the use of artificial intelligence to make predictions on stock prices. For instance, previous reviews have analyzed approximately 100 studies that applied neural and neuro-fuzzy techniques to forecast stock markets, compared three popular artificial intelligence techniques applied in finance (expert systems, ANNs, and hybrid intelligent systems), and methodically analyzed stock market prediction techniques [34,35].

It was discovered that LSTM performs far better than other models, as it achieved a score of 0.0151, while LR came in second with a score of 13.872, and SVR performed poorly with a score of 34.623 [36,37]. Both a correlational and a causal model were proposed based on graph theory. The findings demonstrated that graph-based models are superior to conventional approaches, with a causation-based model achieving somewhat better outcomes than a correlation-based model. The recurrent neural network (RNN), LSTM, and gated recurrent unit (GRU) models are presented in their most fundamental forms in [38]. The GRU model performed the best, with an accuracy of 0.670 and a log loss of 0.629. This was followed by the LSTM model, which performed with an accuracy of 0.665 and a log loss of 0.629, and the RNN model, which performed with an accuracy of 0.625 and a log loss of 0.725. Nevertheless, once both LSTM and GRU were modified by the addition of a dropout layer, the GRU model did not exhibit any improvement while the LSTM model showed a minor improvement in its performance.

The LSTM model, which is an RNN architecture widely used in natural language processing, was proposed in [39] to forecast NIFTY 50 stock values. The findings indicated that the model’s performance improved with the addition of new parameters and epochs, and the model’s root mean squared error (RMSE) value was 0.00859 when it was run with 500 iterations using the high, low, open, and close parameter set. Four different types of deep learning models were used in [40]: multilayer perceptron (MLP), recurrent neural network (RNN), CNN, and LSTM. Each of these models was trained using data from Tata Motors. After training, the models were tested by predicting future stock values. The models were able to recognize the patterns of stock movement, even in other stock markets, and achieved good outcomes. This indicates that deep learning models are able to uncover the underlying dynamics, with CNN shown to be better than the other three models. The ARIMA model was part of that research as well, but it was unable to capture the underlying dynamics that occur between different time series.

The authors of [41] made their predictions about the stock market using a CNN, and a genetic algorithm (GA) was used to systematically optimize the parameters of the CNN. The findings demonstrated that the GA-CNN, a hybrid approach that combines GA and CNN, outperformed the comparison models. Convolutional neural networks (CNNs) were proposed by Sim et al. [42] as a method for predicting market values. The main objective of that research was to enhance the performance of CNNs when applied to stock market data. Wen et al. [43] took a fresh approach by applying the CNN algorithm to noisy temporal data based on common patterns. The findings showed that the technique is effective, exhibiting a 4–7% improvement in accuracy in comparison to more traditional signal processing methodologies.

Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) were used in the study by Rekha et al. [44] to analyze and compare the performance of the two methodologies when applied to stock market data. Lee et al. [45] used data from many countries to train and test a CNN-based model for predicting the global stock market. Baldo et al. [46] used an RNN model for forecasting financial markets.

The literature on artificial intelligence and soft computing techniques for stock market prediction has yet to produce an accurate predictive model, and the existing studies have several disadvantages. Firstly, the pre-processing of input data has not been carried out with precision. Secondly, the extant literature is, at best, partially successful in optimizing the parameter selections and model architectures. Thirdly, the existing studies used trained models to predict values but did not forecast future values to help investors. In contrast, the advantages of the proposed system are that it achieves lower prediction errors and uses preprocessing to enhance the performance of the prediction models.

3. Materials and Methods

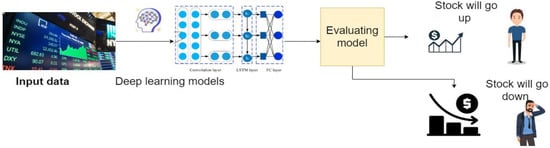

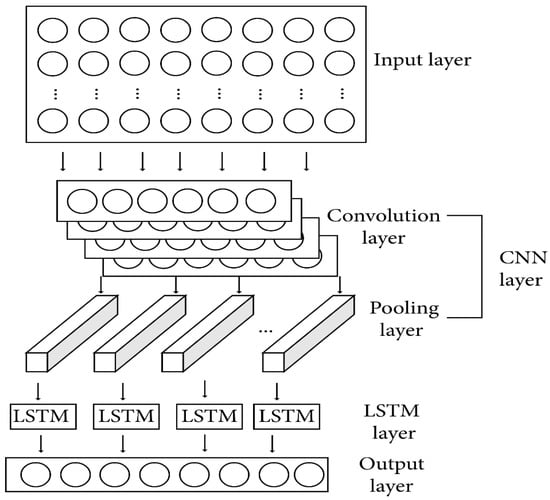

For the purposes of forecasting the future values of Tesla and Apple stocks, we used a deep learning approach layered on top of the LSTM and CNN models. The framework of the deep learning model for predicting stock market values is presented in Figure 1.

Figure 1.

The framework of the deep learning model used to forecast the prices of stock markets.

3.1. Dataset

3.1.1. Tesla, Inc. Data

Tesla, Inc. is an American company that specializes in electric vehicles and sustainable energy and has its headquarters in Palo Alto, CA, USA. The goods and services currently provided by Tesla include electric cars, battery energy storage from home to grid scale, solar panels, solar roof tiles, and other associated products and services. This dataset offers historical information regarding the shares of Tesla, Inc. (TSLA). The data are recorded daily, and prices are in USD. The dataset was collected for the period of 4 August 2014 to 17 August 2017.

3.1.2. Apple, Inc. Data

Apple, Inc. is a global technology firm that develops, manufactures, and markets a range of electronic products, including smartphones, personal computers, tablets, wearables, and accessories. The company’s stock is traded on the NASDAQ stock exchange under the ticker symbol AAPL. The iPhone, the Mac series of personal computers and laptops, the iPad, the Apple Watch, and Apple TV are some of the company’s most well-known and successful product lines. The iCloud cloud service and Apple’s digital streaming content services, such as Apple Music and Apple TV+, are examples of the company’s rapidly expanding services business, which also covers the company’s other revenue sources. This dataset offers historical information regarding the shares of Apple, Inc. The data are recorded daily, and prices are in USD. The dataset covers the period of 3 January 2010 to 28 February 2020. In total, 70% of the dataset was used as a training set, while the remaining 30% was set aside as a test set and was not presented to the model in any way during training. In addition to the test set, we set aside 30% of the training dataset as a validation split while the model was being trained. This helped the model to adjust its weights in a manner that generalizes better and prevented it from overfitting during training.
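The 70/30 split with a further 30% validation hold-out can be illustrated with a short sketch. The text does not state whether the split was chronological or random, so the chronological ordering below is an assumption (a common choice for time series), and the function name `chronological_split` and the placeholder data are hypothetical.

```python
def chronological_split(series, train_frac=0.7, val_frac=0.3):
    """Split a time-ordered sequence into fit, validation, and test parts.

    train_frac: share of all samples used for training (70% in the text).
    val_frac:   share of the training portion held out for validation (30%).
    Assumption: samples are kept in time order, oldest first.
    """
    n_train_total = round(len(series) * train_frac)
    n_val = round(n_train_total * val_frac)
    n_fit = n_train_total - n_val
    fit = series[:n_fit]                 # oldest samples: used for weight updates
    val = series[n_fit:n_train_total]    # later training samples: validation split
    test = series[n_train_total:]        # most recent samples: never seen in training
    return fit, val, test


# Placeholder for 1000 daily closing prices (not real market data).
prices = list(range(1000))
fit, val, test = chronological_split(prices)
print(len(fit), len(val), len(test))
```

Keeping the test block at the end of the series means that the model is always evaluated on dates later than those it was trained on.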

3.2. Normalization of Data

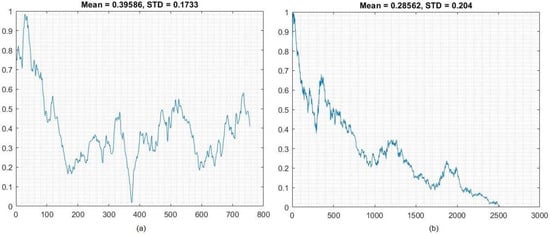

Normalization is a useful approach for scaling the data so that they fall into a particular interval when the amount of data being utilized is rather vast. Normalization improves the speed and accuracy of gradient descent. Min–max normalization is commonly used for scaling the data to a specific range by applying a linear transformation to the original data. The lowest and highest values of an attribute are denoted by $x_{\min}$ and $x_{\max}$, respectively. A value $x$ is mapped to a value $x'$ in the range $[new_{\min}, new_{\max}]$ as follows:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \left( new_{\max} - new_{\min} \right) + new_{\min}$$

where $x_{\max}$ and $x_{\min}$ represent the highest and lowest values of the original data, respectively, and $new_{\min}$ and $new_{\max}$ are the lowest and highest values of the target range. Figure 2 displays the normalization of the Tesla (mean = 0.3904) and Apple (mean = 0.2856) data.

Figure 2.

Normalization of (a) Tesla and (b) Apple data.
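As a minimal sketch of min–max normalization, the function below rescales a list of prices to a target range. The default range [0, 1], the function name `min_max_normalize`, and the sample prices are assumptions made for illustration only.

```python
def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so the smallest maps to new_min and the largest to new_max."""
    x_min, x_max = min(values), max(values)
    if x_max == x_min:
        raise ValueError("a constant series cannot be min-max scaled")
    return [
        (x - x_min) / (x_max - x_min) * (new_max - new_min) + new_min
        for x in values
    ]


# Made-up closing prices, used only to show the mapping.
closing = [10.0, 12.5, 15.0, 20.0]
scaled = min_max_normalize(closing)
print(scaled)
```

In practice, the minimum and maximum are often taken from the training portion only, so that no information from the test period leaks into the scaling; whether that was done here is not stated in the text.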

3.3. Prediction Models

3.3.1. Convolutional Neural Networks (CNNs)

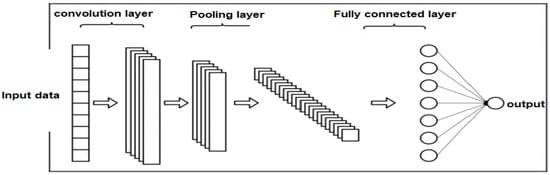

LeCun et al. [47] first presented the concept of CNNs in their 1995 article. The primary purpose of a CNN is to perform feature extraction to capture topology. In order to achieve this, filters are used on groups of input data. CNNs have found use in a broad range of domains, such as image processing, speech recognition, and time series analysis, thanks to their ability to capture both sequential and spatial input. The layers of a convolutional neural network (CNN) are as follows: input, convolution, pooling, and output.

- (a) Convolution layer

A convolutional layer applies filters to the data points in a neighborhood, and the result of the convolution operation is passed to the succeeding layer. The filter is itself a matrix, and the output matrix is generated by sliding the filter over the input matrix, multiplying the overlapping entries element-wise, and summing the products at each position. The primary characteristics of the filter are its weights and its shape. The weights are learned by the model during training, and the shape determines the region of the input covered by the filter. Figure 3 provides a visual representation of an example of the convolution technique.

Figure 3.

Structure of a CNN.
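For a price series, the convolution is one-dimensional. The following sketch shows the sliding multiply-and-sum operation for a single filter with no padding and a stride of one; both settings, the function name `conv1d_valid`, and the example filter are assumptions, since the layer configuration is not given in this section. As in most deep learning libraries, the filter is not flipped, so the operation is strictly a cross-correlation.

```python
def conv1d_valid(signal, kernel):
    """Slide the kernel over the signal; each output is the sum of element-wise products."""
    k = len(kernel)
    n_out = len(signal) - k + 1          # no padding, stride 1
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(n_out)
    ]


# A hand-picked two-tap filter that responds to day-to-day changes.
window = [1.0, 2.0, 4.0, 7.0, 11.0]
diff_filter = [-1.0, 1.0]
features = conv1d_valid(window, diff_filter)
print(features)
```

In a trained network the filter weights are learned rather than chosen by hand, and many filters are applied in parallel to produce several feature maps.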

- (b) Pooling layer

The pooling layer subsamples the data as an intermediate step. Pooling reduces the dimensions, which in turn lowers computation costs. After the convolutional layer produces its output, the pooling layer aggregates the data and outputs them according to the kind of pooling that was selected. The features extracted by the CNN are passed to dense (fully connected) layers. The following equation describes the relationship between the input and output of a perceptron in a multi-layer perceptron (MLP):

$$y_j = f\left( \sum_{i} w_{ij} x_i \right)$$

where $y_j$ refers to the output of the jth perceptron in a neural network, and $f$ refers to an activation function. The activation function takes as its input the sum of the inputs $x_i$ from the layer below, each multiplied by its associated weight $w_{ij}$.
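The two operations described above can be sketched in a few lines. Max pooling with non-overlapping windows and a tanh activation are assumptions chosen for illustration (the text only refers to "the kind of pooling that was selected"), and the names `max_pool1d` and `perceptron` are hypothetical.

```python
import math


def max_pool1d(features, pool_size=2):
    """Keep the largest value in each non-overlapping window of pool_size values."""
    return [
        max(features[i:i + pool_size])
        for i in range(0, len(features) - pool_size + 1, pool_size)
    ]


def perceptron(inputs, weights, activation=math.tanh):
    """Output of one unit: activation of the weighted sum of its inputs (no bias term)."""
    return activation(sum(w * x for w, x in zip(weights, inputs)))


pooled = max_pool1d([1.0, 3.0, 2.0, 5.0, 4.0, 0.0])
y = perceptron(pooled, weights=[0.5, -0.25, 0.0])
print(pooled, y)
```

Pooling halves the length of the feature vector in this example, which is the source of the lower computation cost mentioned in the text.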

- (c) Fully Connected Layer (FC)

The fully connected layer is the layer in a typical neural network that connects each of its neurons to every neuron in the preceding layer. The nodes of the final feature maps, whether produced by convolutional, ReLU, or pooling layers, are flattened into a vector and linked to the first fully connected layer. These layers hold most of the parameters of the CNN, and training them takes a significant amount of effort.

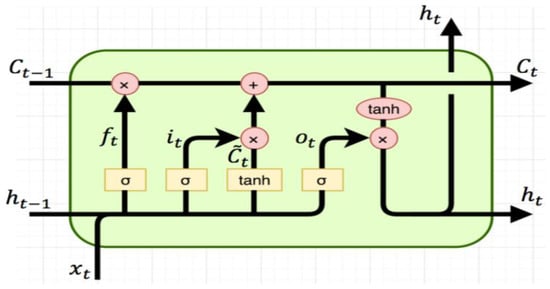

3.3.2. LSTM

The LSTM model, which is based on RNNs, is utilized in situations in which long-term dependencies play a substantial role in the learning process. LSTM adds a forget gate to the two primary gates (the input and output gates), allowing it to retain information about dependencies for a considerable amount of time. The forget gate teaches the model when it is acceptable to forget [42], which is a key benefit of using LSTM. The operation of the LSTM cell is shown in Figure 4. A cell, an input gate, an output gate, and a forget gate are the fundamental components that make up an LSTM unit. The cell can retain values over arbitrary time intervals, and the three gates regulate how information enters and leaves the cell. LSTM is particularly effective at recognizing patterns in time series with lags of unknown duration and generating predictions about such time series [48,49,50].

Figure 4.

LSTM model.

As shown in Figure 4, $C_{t-1}$ and $C_t$ represent the previous and current cell states, respectively, and $h_{t-1}$ and $h_t$ represent the outputs of the previous and current cells. $f_t$ is the forget gate, $i_t$ is the input gate, and $o_t$ is the output of a sigmoid gate. The cell state is carried from $C_{t-1}$ to $C_t$ along the line linking them. The $f_t$ layer decides what to remember [43], and its output is multiplied by $C_{t-1}$. Afterwards, the product of the sigmoid layer gate $i_t$ and the tanh layer gate is added to the cell state, and the output $h_t$ is created by a point-wise multiplication of $o_t$ and $\tanh(C_t)$.
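For reference, the gate operations of an LSTM cell are commonly written as follows. This is the standard textbook formulation rather than notation taken from this study; the weight matrices $W_f$, $W_i$, $W_C$, $W_o$, the biases $b_f$, $b_i$, $b_C$, $b_o$, the input $x_t$, and the candidate state $\tilde{C}_t$ are symbols introduced here for illustration, $\sigma$ is the sigmoid function, and $\odot$ denotes point-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh\left(C_t\right)
\end{aligned}
$$

The fourth line shows why the cell can retain information over long spans: when $f_t$ is close to 1 and $i_t$ is close to 0, the cell state is carried forward almost unchanged.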

Both CNN and LSTM include a variety of parameters that may be adjusted, such as the number of layers and the number of filters that are used in each layer. The primary indicator of the input data has to be selected so that the intended output may be achieved. For CNN, the size of each layer of filters is a highly crucial consideration. Figure 5 shows the CNN-LSTM structure. The parameters of the CNN-LSTM model are presented in Table 1.

Figure 5.

Structure of the CNN-LSTM model.

Table 1.

Deep learning parameters.

3.4. Evaluation Metrics

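The mean squared error (MSE), RMSE, NRMSE, and R-squared (R²) were used to evaluate the forecasting performance of the CNN-LSTM and LSTM models:

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}$$

$$\mathrm{NRMSE} = \frac{\mathrm{RMSE}}{\bar{y}}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}{\sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2}$$

where $y_i$ and $\hat{y}_i$ are the experimental and predicted values, respectively, $\bar{y}$ is the mean of the experimental values, and $n$ is the number of samples.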
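The four metrics can be computed with a few lines of code. The sketch below rests on two assumptions that this section does not confirm: NRMSE is taken as the RMSE divided by the mean of the experimental values, and R² is taken as the coefficient of determination. The function name `regression_metrics` and the sample values are hypothetical.

```python
import math


def regression_metrics(actual, predicted):
    """Return MSE, RMSE, NRMSE, and R2 for paired actual and predicted values."""
    n = len(actual)
    mean_actual = sum(actual) / n
    sse = sum((a - p) ** 2 for a, p in zip(actual, predicted))   # squared errors
    sst = sum((a - mean_actual) ** 2 for a in actual)            # spread of actual values
    mse = sse / n
    rmse = math.sqrt(mse)
    nrmse = rmse / mean_actual   # assumption: normalized by the mean of actual values
    r2 = 1.0 - sse / sst         # assumption: coefficient of determination
    return {"MSE": mse, "RMSE": rmse, "NRMSE": nrmse, "R2": r2}


# Made-up normalized prices, used only to exercise the function.
actual = [0.2, 0.4, 0.6, 0.8]
predicted = [0.25, 0.35, 0.65, 0.75]
scores = regression_metrics(actual, predicted)
print(scores)
```

Other normalizations of the RMSE (for example, by the range of the actual values) are also in common use, so reported NRMSE values are only comparable when the same convention is applied.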

4. Experiments

The MSE, RMSE, NRMSE, and R² values were determined by comparing the predicted stock closing price with the actual stock closing price throughout the tests. We then utilized the forecasted data to determine the range of the stock price change on the prediction day. These computations were performed in MATLAB using its deep learning and financial libraries.

4.1. Results

4.1.1. Deep Learning Results of Tesla Data

Table 2 displays the results of the LSTM and CNN-LSTM models used to forecast Tesla’s closing price during training. The results show the reliability and robustness of the deep learning model to predict the closing price based on the training. CNN-LSTM scored low for prediction errors according to the RMSE (0.001190) and NRMSE (0.030032) metrics.

Table 2.

Deep learning results for predicting the closing price of Tesla during the training phase.

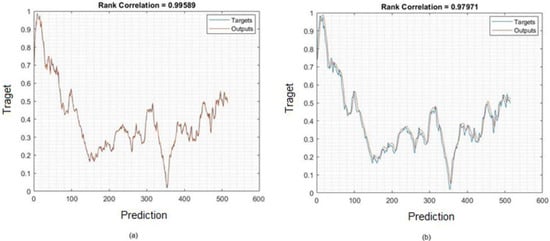

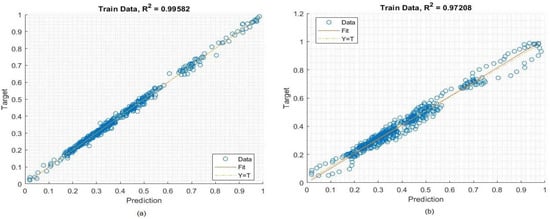

Figure 6 shows a close match between the forecasted values of Tesla’s stock price (shown on the Y-axis, together with the R percentage) and the actual values (shown on the X-axis) for the stock market dataset during the training phase. This is evidenced by the fact that there is no significant difference between the actual and predicted values. Because both CNN-LSTM and LSTM had high R percentages (99.58% and 97.97%, respectively) and very low MSE and RMSE values during the training phase, they were ready for further evaluation. These values signify that the system is prepared to meet the specified targets.

Figure 6.

Rank correlation of the deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Tesla stock market closing price during the training phase.
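The R percentage reported with these plots can be reproduced from paired actual and predicted values. The sketch below assumes that R is Pearson’s correlation coefficient, as named in the abstract, expressed as a percentage; the function name `pearson_r` and the sample values are hypothetical.

```python
import math


def pearson_r(actual, predicted):
    """Pearson's correlation coefficient between two equally long sequences."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    if var_a == 0 or var_p == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_a * var_p)


# Made-up normalized prices, used only to exercise the function.
actual = [0.10, 0.20, 0.30, 0.40]
predicted = [0.12, 0.19, 0.33, 0.41]
r_percent = 100.0 * pearson_r(actual, predicted)
print(round(r_percent, 2))
```

A high R only shows that the predictions rise and fall with the actual values; it does not by itself rule out a constant offset, which is why the error metrics are reported alongside it.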

Figure 7 illustrates the error histogram of the predicted values during the training stage. The error histogram shows the degree of discordance between the predicted and actual values. Because the errors indicate how the predicted values deviate from the target values, they can be negative. CNN-LSTM had a very low mean prediction error (0.00045589), whereas the mean error of the LSTM model was 0.01042.

Figure 7.

Histogram of the deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Tesla stock market closing price during the training phase.
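A minimal sketch of how such an error histogram and its mean error can be computed is given below. The sign convention (target minus output), the number of bins, and the function name `error_histogram` are assumptions; the section does not specify them.

```python
def error_histogram(actual, predicted, n_bins=5):
    """Return the mean signed error and the bin counts of the error histogram."""
    # Assumption: error = target - output, so errors can be negative.
    errors = [a - p for a, p in zip(actual, predicted)]
    mean_error = sum(errors) / len(errors)
    low, high = min(errors), max(errors)
    width = (high - low) / n_bins or 1.0   # avoid a zero width when all errors are equal
    counts = [0] * n_bins
    for e in errors:
        index = min(int((e - low) / width), n_bins - 1)   # largest error goes in the last bin
        counts[index] += 1
    return mean_error, counts


# Made-up normalized prices, used only to exercise the function.
actual = [0.50, 0.52, 0.55, 0.60]
predicted = [0.49, 0.53, 0.55, 0.58]
mean_error, counts = error_histogram(actual, predicted)
print(mean_error, counts)
```

Because positive and negative errors cancel in the mean, a mean error near zero indicates low bias rather than low overall error, which is why it is read together with the MSE and RMSE.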

After training, the remaining 30% of the data were used to validate the results of the deep learning models during the testing phase (Table 3). The testing phase is very important for estimating the strengths of the proposed deep learning models in predicting the closing price of Tesla. The CNN-LSTM model achieved the best results (MSE = 0.0001308; RMSE = 0.01143).

Table 3.

Deep learning results for predicting the closing price of Tesla during the testing phase.

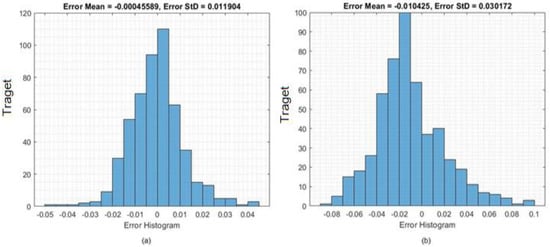

The performance of the deep learning models during the testing phase is shown in Figure 8. A higher R percentage was found for CNN-LSTM (99.26%) compared to LSTM (97.77%). We then investigated whether the closing prices of the prediction values were close to the actual values.

Figure 8.

Rank correlation of deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Tesla stock market closing price during the testing stage.

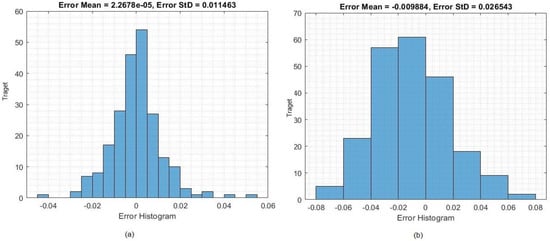

Figure 9 presents the deep learning models’ error histograms for predicting the Tesla stock market closing price during the testing phase. Error histograms were used to identify the differences between the actual and predicted data. The CNN-LSTM model scored a low mean error of 2.2678 × 10⁻⁵, 0.00544, and the mean error of the LSTM model was −0.00988.

Figure 9.

Histogram of deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Tesla stock market closing price during the testing phase.

4.1.2. Deep Learning Results of Apple Data

The deep learning models were then applied to predict the stock market closing price of Apple. The results of the deep learning models during the training phase (using 70% of the data) are presented in Table 4. The CNN-LSTM model achieved a lower prediction error (MSE = 5.7258 × 10⁻⁵).

Table 4.

Deep learning results for predicting the closing price of Apple during the training phase.

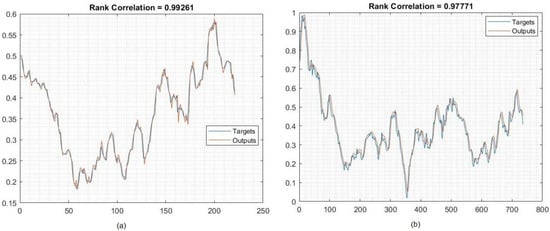

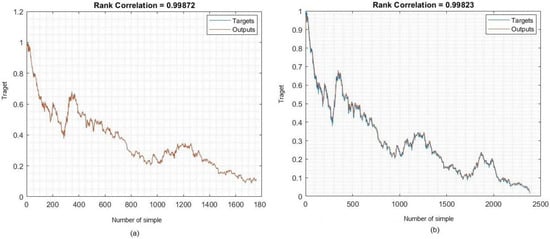

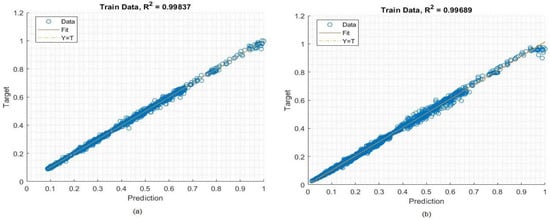

The performance of the CNN-LSTM and LSTM models is presented in Figure 10. According to the correlation metric evaluation, the CNN-LSTM model attained a higher R percentage (99.87%). This confirms that this deep learning model is appropriate for forecasting the future values of stock market closing prices.

Figure 10.

Rank correlation of deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Apple stock market closing price during the training phase.

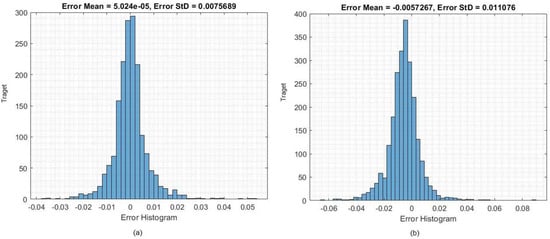

The error histogram is important for finding the mean error between the actual and predicted values. The histogram results of the deep learning approaches for predicting the Apple stock market closing price during the training phase are presented in Figure 11. The mean error of CNN-LSTM was 5.024 × 10⁻⁵, whereas the mean error of LSTM was 0.005726.

Figure 11.

Histogram of the deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Apple stock market closing price during the training phase.

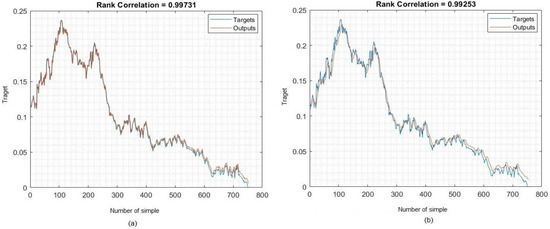

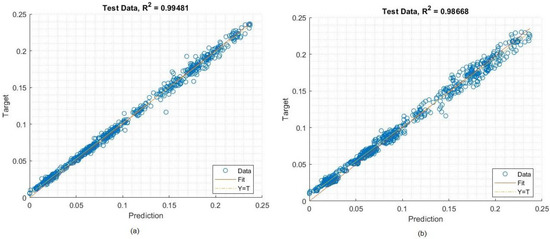

Table 5 and Figure 12 present the performance of the deep learning methods for forecasting the Apple stock market closing price during the testing phase. The MSE and RMSE of CNN-LSTM were lower than those of LSTM, and its R percentage (99.73%) was close to 100%. For LSTM, the R percentage was 99.25%, which is also close to 100%. Overall, CNN-LSTM had slightly better results than LSTM.

Table 5.

Deep learning results for predicting the closing price of Apple during the testing phase.

Figure 12.

Rank correlation of the deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Apple stock market closing price during the testing phase.

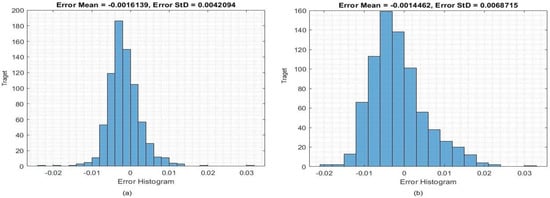

The histogram errors of the deep learning models during the testing phase are shown in Figure 13. The mean histogram error was 0.0016139 for CNN-LSTM and 0.00144 for LSTM.

Figure 13.

Histogram of the deep learning models (a) CNN-LSTM and (b) LSTM for predicting the Apple stock market closing price during the testing phase.

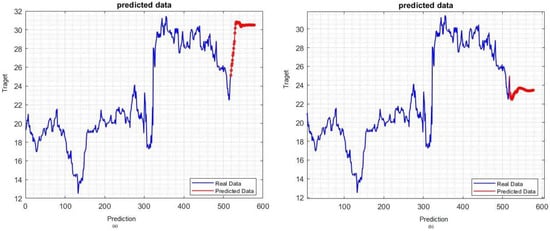

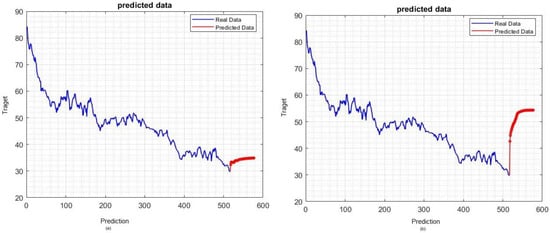

4.1.3. Forecasting Future Values of Tesla and Apple Data

In order to test the effectiveness of the CNN-LSTM and LSTM models, we forecasted future values from 17 August 2017 to 18 October 2017 for Tesla and from 28 February 2020 to 29 April 2020 for Apple (60-day periods). The forecasts were made to anticipate future fluctuations, and the error value was found to vary least on the day immediately after the prediction. Figure 14 presents the forecasts for Tesla obtained using the deep learning models: the reported closing price for 18 October 2017 was $25.14, the CNN-LSTM forecast for the last day, 18 October 2017, was $30.51, and the LSTM forecast for 18 October 2017 was $23.46. The forecasts for Apple obtained using the deep learning models are presented in Figure 15, where the value for 17 August 2017 is $29.86 and the forecasted values for 29 April 2020 for CNN-LSTM and LSTM are $34.88 and $54.34, respectively.

Figure 14.

Forecasting the future values of Tesla using (a) CNN-LSTM and (b) LSTM.

Figure 15.

Forecasting the future values of Apple using (a) CNN-LSTM and (b) LSTM.
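The section does not describe how the multi-day forecasts were generated. One common approach, shown here purely as an illustrative assumption, is recursive forecasting: a one-step model predicts the next value from the most recent window, and that prediction is appended to the inputs for the following step. The names `recursive_forecast` and `naive_model`, the window length, and the sample values are all hypothetical, and the averaging model is only a stand-in for a trained network.

```python
def recursive_forecast(history, predict_next, window=5, horizon=60):
    """Forecast `horizon` future steps by feeding each prediction back as an input."""
    values = list(history)
    forecasts = []
    for _ in range(horizon):
        next_value = predict_next(values[-window:])   # one-step-ahead prediction
        forecasts.append(next_value)
        values.append(next_value)                     # prediction becomes part of the input
    return forecasts


def naive_model(window_values):
    """Hypothetical stand-in for a trained model: predicts the average of the window."""
    return sum(window_values) / len(window_values)


# Made-up normalized closing prices, used only to exercise the loop.
recent = [0.30, 0.31, 0.33, 0.32, 0.34]
future = recursive_forecast(recent, naive_model, window=5, horizon=60)
print(len(future), future[0])
```

With this scheme, errors compound as the horizon grows because later predictions depend on earlier ones, which is consistent with the observation that the error varies least on the day immediately after the prediction.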

5. Discussion

The stock market is an extremely important topic in today’s society. Investors are able to purchase additional stocks with relative ease and stand to make large gains from dividends distributed as part of the company’s incentive scheme for shareholders. Through stock brokerages and electronic trading platforms, investors can also trade their own stocks with other traders on the stock market. On the stock market, traders want to acquire stocks with values that are forecasted to increase and sell stocks with values that are forecasted to fall. Therefore, before making a trading choice to buy or sell a stock, stock traders need to be able to accurately forecast the general behavior trends of the stock they are trading. The more correct their estimate is regarding how a stock will behave, the more profit they will make from that prediction. As a result, it is vital to design an autonomous algorithm that is able to precisely predict market movements in order to assist traders in maximizing their profits. However, the prediction of trends in the stock market is a difficult task due to a number of elements, including industry performance, company news and performance, investor attitudes, and economic variables.

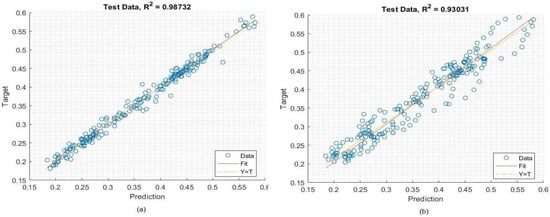

Therefore, in this paper, the deep learning algorithms LSTM and hybrid CNN-LSTM were tested for their ability to predict Tesla and Apple stock prices. The performance of these models during the training and testing phases for Tesla is presented in Figure 16 and Figure 17, respectively. CNN-LSTM scored higher than LSTM during both the training (CNN-LSTM: R² = 99.58%; LSTM: R² = 97.20%) and testing (CNN-LSTM: R² = 98.37%; LSTM: R² = 93.03%) phases. Therefore, CNN-LSTM achieved better accuracy compared to LSTM.

Figure 16.

Squared regression plot of the deep learning models (a) CNN-LSTM and (b) LSTM during the training phase for Tesla.

Figure 17.

Squared regression plot of the deep learning models (a) CNN-LSTM and (b) LSTM during the testing phase for Tesla.

As can be seen in Figure 18 and Figure 19, there is close agreement between the predicted and actual values for Apple. In addition, very high R2 values were reported during the training (CNN-LSTM: 99.83%; LSTM: 99.68%) and testing (CNN-LSTM: 99.48%; LSTM: 98.66%) phases. These figures demonstrate that the CNN-LSTM model was more accurate and reliable than LSTM for the Apple data.

Figure 18.

Squared regression plot of the deep learning models (a) CNN-LSTM and (b) LSTM during the training phase for Apple.

Figure 19.

Squared regression plot of the deep learning models (a) CNN-LSTM and (b) LSTM during the testing phase for Apple.
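For readers who wish to reproduce the kind of scores quoted for the training and testing phases, the evaluation measures named in this paper (MSE, RMSE, NRMSE, Pearson's R, and R2) can be sketched as follows. This is an illustrative sketch, not the authors' code: the function name `regression_metrics` and the toy data are hypothetical, NRMSE is assumed to be RMSE divided by the range of the observed values, and R2 is assumed to be the coefficient of determination.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Compute common regression scores for actual vs. predicted prices."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred

    mse = float(np.mean(residuals ** 2))
    rmse = float(np.sqrt(mse))
    # Assumption: normalize RMSE by the range of the observed values.
    nrmse = rmse / float(y_true.max() - y_true.min())
    # Pearson's correlation coefficient between actual and predicted values.
    r = float(np.corrcoef(y_true, y_pred)[0, 1])
    # Assumption: R2 is the coefficient of determination, 1 - SS_res / SS_tot.
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    r2 = 1.0 - ss_res / ss_tot
    return {"MSE": mse, "RMSE": rmse, "NRMSE": nrmse, "R": r, "R2": r2}


# Toy data for illustration only; these are not values from the study.
actual = [10.0, 11.0, 12.0, 13.0, 14.0]
predicted = [10.5, 10.5, 12.5, 12.5, 14.5]
for name, value in regression_metrics(actual, predicted).items():
    print(f"{name}: {value:.4f}")
```

Note that the coefficient of determination and the square of Pearson's R are generally different quantities for predictions that are not a least-squares fit, so a reported percentage should state which of the two it refers to.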

To assess the effectiveness of CNN-LSTM, we compared the results of the proposed deep learning model with those of [51], which also used Tesla and Apple data. The results of the CNN-LSTM model proposed in this study and of the models used in [51] are shown in Table 6. The proposed CNN-LSTM model is superior to the models of the other study according to the MSE metric.

Table 6.

Prediction results of the proposed CNN-LSTM system compared to the results of [51].

Accurate prediction of future stock prices has long been a goal of most economies and individuals because of its potential advantages. Those who are interested in exploring stock market prediction can also benefit from learning how to forecast fluctuations in stock prices. Artificial intelligence gives researchers access to predictions that are more precise than before, and this precision is expected to increase over time as both technological capabilities and algorithms improve.

Thanks to the development of sophisticated trading algorithms based on artificial intelligence, researchers are now able to make market forecasts using non-traditional textual data gleaned from social networks. In addition, we recommend that researchers investigate the connection between the use of social media and the performance of the stock market. The economic climate created by the news media, or the direct observation of stock market sentiment, can be used to make decisions regarding the stock market.

6. Conclusions

The behavior of the stock market is unpredictable and depends on a variety of variables, including the state of the global economy, the geopolitical environment, company performance, investor expectations, and financial reports. A firm’s profits are an important factor that should be considered when assessing gains or losses in the stock price. Additionally, it can be difficult for an investor to forecast how the market will behave. Because the stock market is such an integral component of the economy as a whole, an increasing number of investors are focusing their attention on strategies that will allow them to maximize their returns while mitigating the negative effects of certain risks. The direction of the stock market is influenced by a wide variety of variables, and the information that is pertinent to this topic often takes the shape of a time series. The objective of this study was to predict the daily closing price of a stock using an LSTM model and a hybrid CNN-LSTM model, with Tesla and Apple stock market data used for this task. The CNN-LSTM model performed better than the LSTM model. According to the findings of the experiments, the CNN-LSTM and LSTM stock prediction models were superior to the existing models in terms of their ability to make accurate forecasts. The deep learning models were used to predict the closing price of a stock on the next trading day, which is a valuable reference for investments. Because investors want to forecast the closing price and trend of a stock over the next time period, more in-depth studies on the changes in stocks are needed.
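To make the next-day prediction task concrete, the closing-price series can be framed as a supervised learning problem in which a fixed number of past closes is used to predict the following close. The sketch below is illustrative only: the function name `make_windows` and the window length are hypothetical and are not taken from this study.

```python
import numpy as np


def make_windows(close_prices, window=10):
    """Split a 1-D closing-price series into (past window, next close) pairs.

    The window length is a hypothetical choice for illustration.
    """
    prices = np.asarray(close_prices, dtype=float)
    if prices.ndim != 1 or len(prices) <= window:
        raise ValueError("need a 1-D series longer than the window")
    # Each row holds `window` consecutive closes.
    X = np.stack([prices[i:i + window] for i in range(len(prices) - window)])
    # The target is the close on the day immediately after each window.
    y = prices[window:]
    # Add a trailing feature axis: (samples, time steps, features).
    return X[..., np.newaxis], y


# Toy series for illustration only; these are not stock prices from the study.
prices = np.arange(1.0, 16.0)
X, y = make_windows(prices, window=5)
print(X.shape, y.shape)
```

The three-dimensional input shape (samples, time steps, features) is the layout typically expected by recurrent and one-dimensional convolutional layers in common deep learning libraries.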

A limitation of this study is that we did not use the sentiment information that was derived from the investigation of the financial markets.

In future work, we will use an advanced approach to deep learning or a hybrid model that uses both stock price indexes and sentiment news analysis in order to improve the results of the proposed system.

Author Contributions

T.H.H.A. and A.A. resources, T.H.H.A. data curation, A.A. and A.A.; writing—original draft preparation, T.H.H.A. and A.A. writing—review and editing, A.A. visualization, T.H.H.A. and A.A. supervision, T.H.H.A.; project administration, T.H.H.A. and A.A. funding acquisition, T.H.H.A. and A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by The Saudi Investment Bank Chair for Investment Awareness Studies, the Deanship of Scientific Research, The vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Al Ahsa, Saudi Arabia [Grant No. 144].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study are available at https://www.kaggle.com/code/faressayah/stock-market-analysis-prediction-using-lstm/data (accessed on 25 August 2022).

Acknowledgments

This work was supported by The Saudi Investment Bank Chair for Investment Awareness Studies, the Deanship of Scientific Research, The vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Al Ahsa, Saudi Arabia [Grant No. 144].

Conflicts of Interest

The authors declare no conflict of interest.

References

- Al-qaness, M.A.; Ewees, A.A.; Fan, H.; Abualigah, L.; Abd Elaziz, M. Boosted ANFIS model using augmented marine predator algorithm with mutation operators for wind power forecasting. Appl. Energy 2022, 314, 118851.

- Danandeh Mehr, A.; Rikhtehgar Ghiasi, A.; Yaseen, Z.M.; Sorman, A.U.; Abualigah, L. A novel intelligent deep learning predictive model for meteorological drought forecasting. J. Ambient. Intell. Humaniz. Comput. 2022, 24, 1–5.

- Mexmonov, S. Stages of Development of the Stock Market of Uzbekistan. Архив Научных Исследований 2020, 24, 6661–6668.

- Nti, I.K.; Adekoya, A.F.; Weyori, B.A. A systematic review of fundamental and technical analysis of stock market predictions. Artif. Intell. Rev. 2019, 53, 3007–3057.

- Sengupta, A.; Sena, V. Impact of open innovation on industries and firms—A dynamic complex systems view. Technol. Forecast. Soc. Chang. 2020, 159, 120199.

- Terwiesch, C.; Xu, Y. Innovation Contests, Open Innovation, and Multiagent Problem Solving. Manag. Sci. 2008, 54, 1529–1543.

- Blohm, I.; Riedl, C.; Leimeister, J.M.; Krcmar, H. Idea evaluation mechanisms for collective intelligence in open innovation communities: Do traders outperform raters? In Proceedings of the 32nd International Conference on Information Systems, Cavtat, Croatia, 21–24 June 2010.

- Del Giudice, M.; Carayannis, E.G.; Palacios-Marqués, D.; Soto-Acosta, P.; Meissner, D. The human dimension of open innovation. Manag. Decis. 2018, 56, 1159–1166.

- Daradkeh, M. The Influence of Sentiment Orientation in Open Innovation Communities: Empirical Evidence from a Business Analytics Community. J. Inf. Knowl. Manag. 2021, 20, 2150031.

- Chang, P.C.; Liu, C.H. A TSK type fuzzy rule based system for stock price prediction. Expert Syst. Appl. 2008, 34, 135–144.

- Bahadur, S.G.C. Stock market and economic development: A causality test. J. Nepal. Bus. Stud. 2006, 3, 36–44.

- Bharathi, S.; Geetha, A.; Sathiynarayanan, R. Sentiment Analysis of Twitter and RSS News Feeds and Its Impact on Stock Market Prediction. Int. J. Intell. Eng. Syst. 2017, 10, 68–77.

- Sharma, A.; Bhuriya, D.; Singh, U. Survey of stock market prediction using machine learning approach. In Proceedings of the 2017 International Conference of Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 20–22 April 2017; pp. 506–509.

- Nassar, L.; Okwuchi, I.E.; Saad, M.; Karray, F.; Ponnambalam, K. Deep learning based approach for fresh produce market price prediction. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

- Bathla, G. Stock Price prediction using LSTM and SVR. In Proceedings of the 2020 Sixth International Conference on Parallel, Distributed and Grid Computing (PDGC), Waknaghat, India, 6–8 November 2020; pp. 211–214.

- Kara, Y.; Boyacioglu, M.A.; Baykan, Ö.K. Predicting direction of stock price index movement using artificial neural networks and support vector machines: The sample of the Istanbul Stock Exchange. Expert Syst. Appl. 2011, 38, 5311–5319.

- Zhang, Z.; Zohren, S.; Roberts, S. Deeplob: Deep convolutional neural networks for limit order books. IEEE Trans. Signal Process. 2019, 67, 3001–3012.

- Wallbridge, J. Transformers for limit order books. arXiv 2020, arXiv:2003.00130.

- Watanabe, C.; Shin, J.; Heikkinen, J.; Tarasyev, A. Optimal dynamics of functionality development in open innovation. IFAC Proc. Vol. 2009, 42, 173–178.

- Jeong, H.; Shin, K.; Kim, E.; Kim, S. Does Open Innovation Enhance a Large Firm’s Financial Sustainability? A Case of the Korean Food Industry. J. Open Innov. Technol. Mark. Complex. 2020, 6, 101.

- Le, T.; Hoque, A.; Hassan, K. An Open Innovation Intraday Implied Volatility for Pricing Australian Dollar Options. J. Open Innov. Technol. Mark. Complex. 2021, 7, 23.

- Wu, B.; Gong, C. Impact of open innovation communities on enterprise innovation performance: A system dynamics perspective. Sustainability 2019, 11, 4794.

- Arias-Pérez, J.; Coronado-Medina, A.; Perdomo-Charry, G. Big data analytics capability as a mediator in the impact of open innovation on firm performance. J. Strategy Manag. 2021, 15, 1–15.

- Zhang, K.; Zhong, G.; Dong, J.; Wang, S.; Wang, Y. Stock Market Prediction Based on Generative Adversarial Network. Procedia Comput. Sci. 2019, 147, 400–406.

- Chesbrough, H.W. Open Innovation: The New Imperative for Creating and Profiting from Technology; Harvard Business Press: Boston, MA, USA, 2003.

- Moretti, F.; Biancardi, D. Inbound open innovation and firm performance. J. Innov. Knowl. 2020, 5, 1–19.

- Kiran, G.M. Stock Price prediction with LSTM Based Deep Learning Techniques. Int. J. Adv. Sci. Innov. 2021, 2, 18–21.

- Bhatti, S.H.; Santoro, G.; Sarwar, A.; Pellicelli, A.C. Internal and external antecedents of open innovation adoption in IT organisations: Insights from an emerging market. J. Knowl. Manag. 2021, 25, 1726–1744.

- Yang, C.H.; Shyu, J.Z. A symbiosis dynamic analysis for collaborative R&D in open innovation. Int. J. Comput. Sci. Eng. 2010, 5, 74.

- Esfahanipour, A.; Aghamiri, W. Adapted neuro-fuzzy inference system on indirect approach TSK fuzzy rule base for stock market analysis. Expert Syst. Appl. 2010, 37, 4742–4748.

- Chen, Y.; Dong, X.; Zhao, Y. Stock Index Modeling Using EDA Based Local Linear Wavelet Neural Network. In Proceedings of the 2005 International Conference on Neural Networks Brain, ICNNB’05, Beijing, China, 13–15 October 2005; Volume 3, pp. 1646–1650.

- Sharma, G.D.; Yadav, A.; Chopra, R. Artificial intelligence and effective governance: A review, critique and research agenda. Sustain. Futures 2020, 2, 100004.

- Baba, N.; Suto, H. Utilization of artificial neural networks and the TD-learning method for constructing intelligent decision support systems. Eur. J. Oper. Res. 2000, 122, 501–508.

- Atsalakis, G.S.; Valavanis, K.P. Surveying stock market forecasting techniques—Part II: Soft computing methods. Expert Syst. Appl. 2009, 36, 5932–5941.

- Gandhmal, D.P.; Kumar, K. Systematic analysis and review of stock market prediction techniques. Comput. Sci. Rev. 2019, 34, 100190.

- Patil, P.; Wu, C.S.M.; Potika, K.; Orang, M. Stock market prediction using ensemble of graph theory, machine learning and deep learning models. In Proceedings of the 3rd International Conference on Software Engineering and Information Management, Sydney, Australia, 12–15 January 2020; pp. 85–92.

- Rana, M.; Uddin, M.M.; Hoque, M.M. Effects of activation functions and optimizers on stock price prediction using LSTM recurrent networks. In Proceedings of the 2019 3rd International Conference on Computer Science and Artificial Intelligence, Normal, IL, USA, 6–8 December 2019; pp. 354–358.

- Di Persio, L.; Honchar, O. Recurrent neural networks approach to the financial forecast of Google assets. Int. J. Math. Comput. Simul. 2017, 11, 7–13.

- Roondiwala, M.; Patel, H.; Varma, S. Predicting stock prices using LSTM. Int. J. Sci. Res. 2017, 6, 1754–1756.

- Hiransha, M.; Gopalakrishnan, E.A.; Menon, V.K.; Soman, K.P. NSE stock market prediction using deep-learning models. Procedia Comput. Sci. 2018, 132, 1351–1362.

- Chung, H.; Shin, K.-S. Genetic algorithm-optimized multi-channel convolutional neural network for stock market prediction. Neural Comput. Appl. 2020, 2, 7897–7914.

- Sim, H.S.; Kim, H.I.; Ahn, J.J. Is deep learning for image recognition applicable to stock market prediction? Complexity 2019, 2019, 4324878.

- Wen, M.; Li, P.; Zhang, L.; Chen, Y. Stock Market Trend Prediction Using High-Order Information of Time Series. IEEE Access 2019, 7, 28299–28308.

- Rekha, G.; Sravanthi, D.B.; Ramasubbareddy, S.; Govinda, K. Prediction of Stock Market Using Neural Network Strategies. J. Comput. Theor. Nanosci. 2019, 16, 2333–2336.

- Lee, J.; Kim, R.; Koh, Y.; Kang, J. Global stock market prediction based on stock chart images using deep Q-network. IEEE Access 2019, 7, 167260–167277.

- Baldo, A.; Cuzzocrea, A.; Fadda, E.; Bringas, P.G. Financial Forecasting via Deep-Learning and Machine-Learning Tools over Two-Dimensional Objects Transformed from Time Series. In Proceedings of the Hybrid Artificial Intelligent Systems: 16th International Conference, HAIS 2021, Bilbao, Spain, 22–24 September 2021; pp. 550–563.

- LeCun, Y.; Bengio, Y. Convolutional Networks for Images, Speech, and Time Series. In The Handbook of Brain Theory and Neural Networks; MIT Press: Cambridge, MA, USA, 1995; Volume 3361, p. 1995.

- Ammer, M.A.; Aldhyani, T.H.H. Deep Learning Algorithm to Predict Cryptocurrency Fluctuation Prices: Increasing Investment Awareness. Electronics 2022, 11, 2349.

- Aldhyani, T.H.H.; Alkahtani, H. A Bidirectional Long Short-Term Memory Model Algorithm for Predicting COVID-19 in Gulf Countries. Life 2021, 11, 1118.

- Aldhyani, T.H.H.; Alkahtani, H. Artificial Intelligence Algorithm-Based Economic Denial of Sustainability Attack Detection Systems: Cloud Computing Environments. Sensors 2022, 22, 4685.

- Alkhatib, K.; Khazaleh, H.; Alkhazaleh, H.A.; Alsoud, A.R.; Abualigah, L. A New Stock Price Forecasting Method Using Active Deep Learning Approach. J. Open Innov. Technol. Mark. Complex. 2022, 8, 96.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).