Improvement of LSTM-Based Forecasting with NARX Model through Use of an Evolutionary Algorithm

Abstract

1. Introduction and Literature Review

2. The NARX Model and Performance Metrics

- T+ is the number of true positive cases: Y(t + 1) − Y(t) ≥ 0 and Ŷ(t + 1) − Ŷ(t) ≥ 0;

- F+ is the number of false positive cases: Y(t + 1) − Y(t) < 0 and Ŷ(t + 1) − Ŷ(t) ≥ 0;

- T− is the number of true negative cases: Y(t + 1) − Y(t) < 0 and Ŷ(t + 1) − Ŷ(t) < 0;

- F− is the number of false negative cases: Y(t + 1) − Y(t) ≥ 0 and Ŷ(t + 1) − Ŷ(t) < 0.
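The four counts defined above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' implementation: it assumes that the first difference in each definition is taken on the actual series and the second on the forecast series, and the function names `direction_counts` and `f1_score` are hypothetical. The F1 value uses the standard definition 2·T+ / (2·T+ + F+ + F−).

```python
def direction_counts(actual, predicted):
    """Count (T+, F+, T-, F-) over consecutive steps of two equal-length series.

    Assumption: `actual` plays the role of Y and `predicted` the role of the
    forecast; a step counts as "positive" when the difference is >= 0.
    """
    if len(actual) != len(predicted):
        raise ValueError("series must have the same length")
    tp = fp = tn = fn = 0
    for t in range(len(actual) - 1):
        real_up = actual[t + 1] - actual[t] >= 0
        pred_up = predicted[t + 1] - predicted[t] >= 0
        if real_up and pred_up:
            tp += 1          # true positive: both series move up (or stay flat)
        elif not real_up and pred_up:
            fp += 1          # false positive: forecast up, actual down
        elif not real_up and not pred_up:
            tn += 1          # true negative: both series move down
        else:
            fn += 1          # false negative: forecast down, actual up
    return tp, fp, tn, fn


def f1_score(tp, fp, fn):
    """Standard F1 from confusion counts; returns 0.0 when undefined."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Toy series chosen only for illustration.
counts = direction_counts([1, 2, 1, 3, 2], [1, 2, 3, 4, 3])
score = f1_score(counts[0], counts[1], counts[3])
```

On the toy series, the forecast misses the single downward move at the second step, which yields one false positive and no false negatives.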

3. Preprocessing

3.1. Scaling

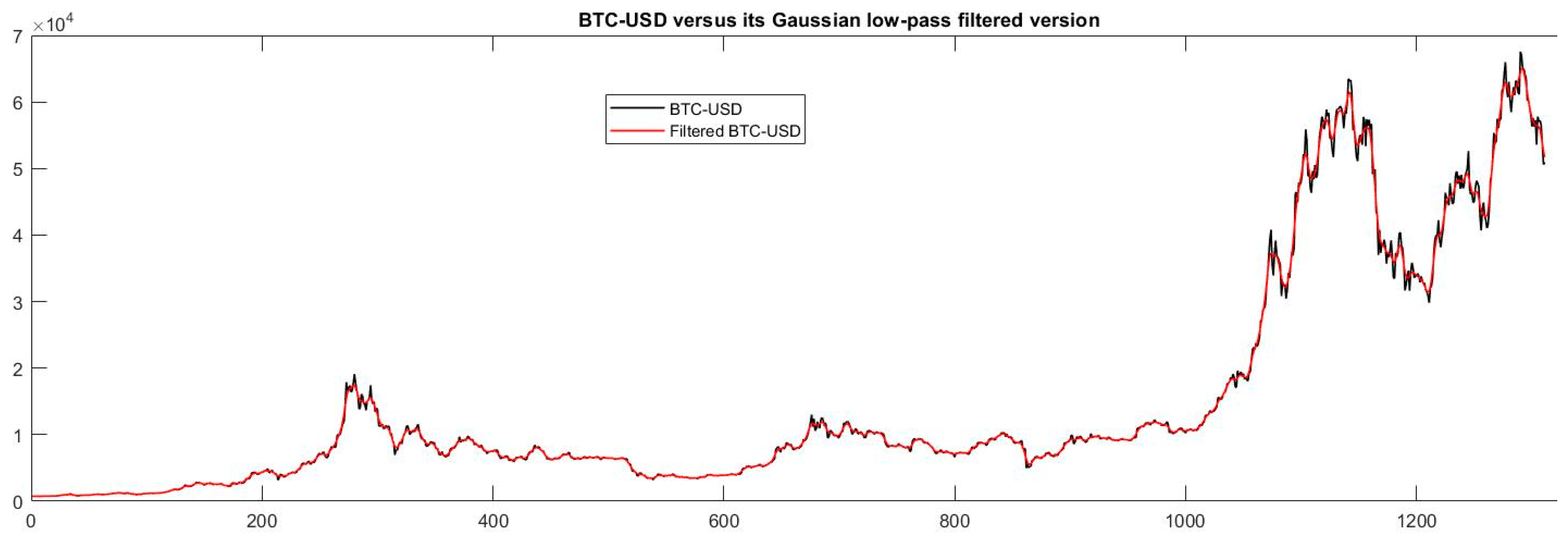

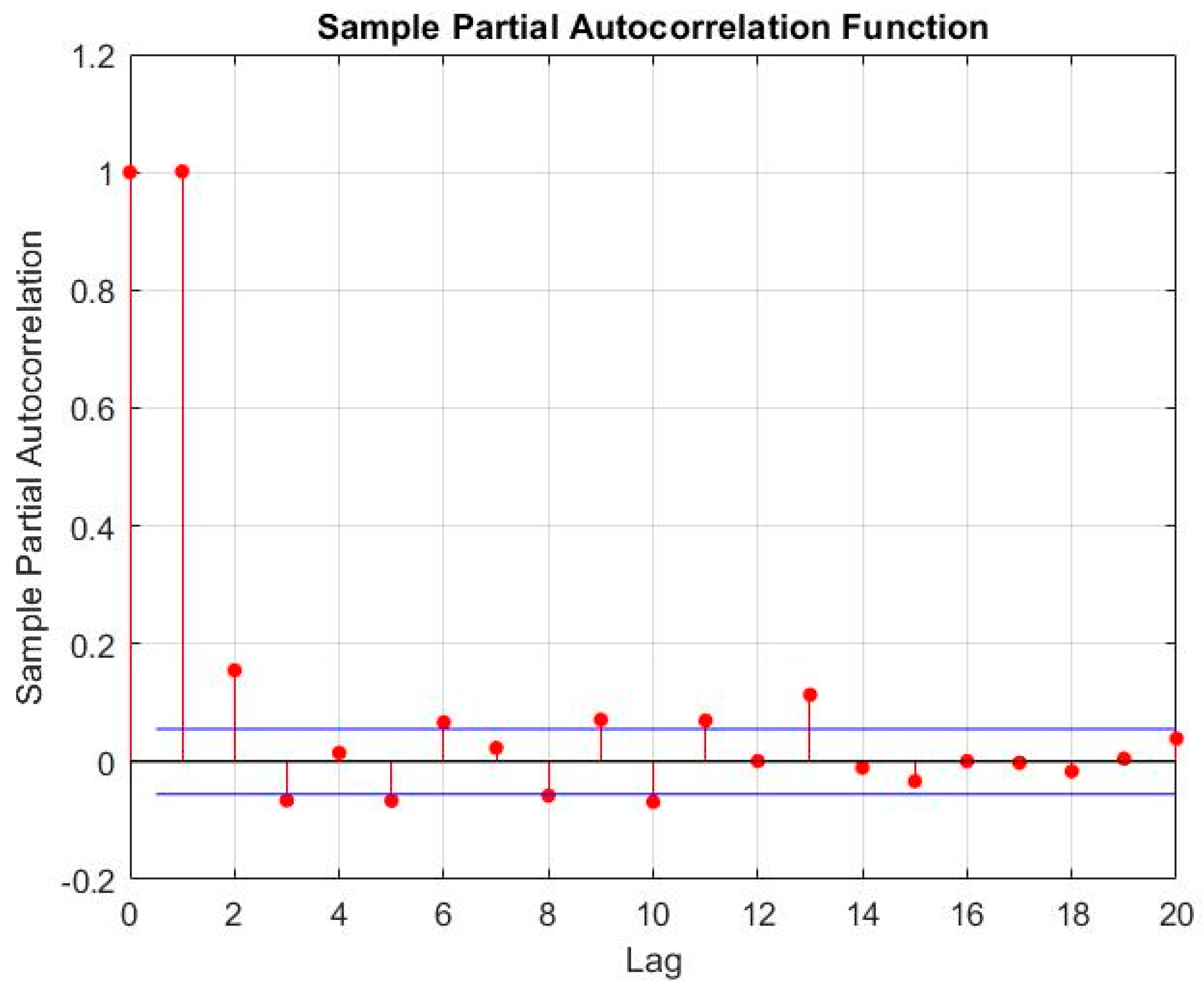

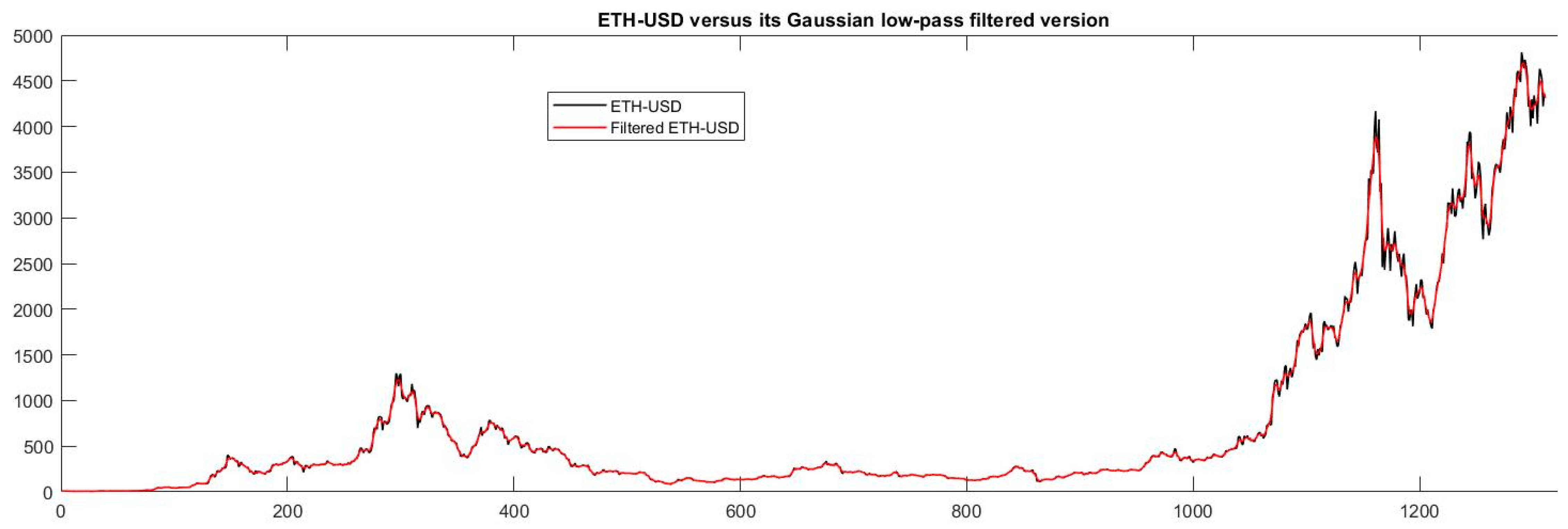

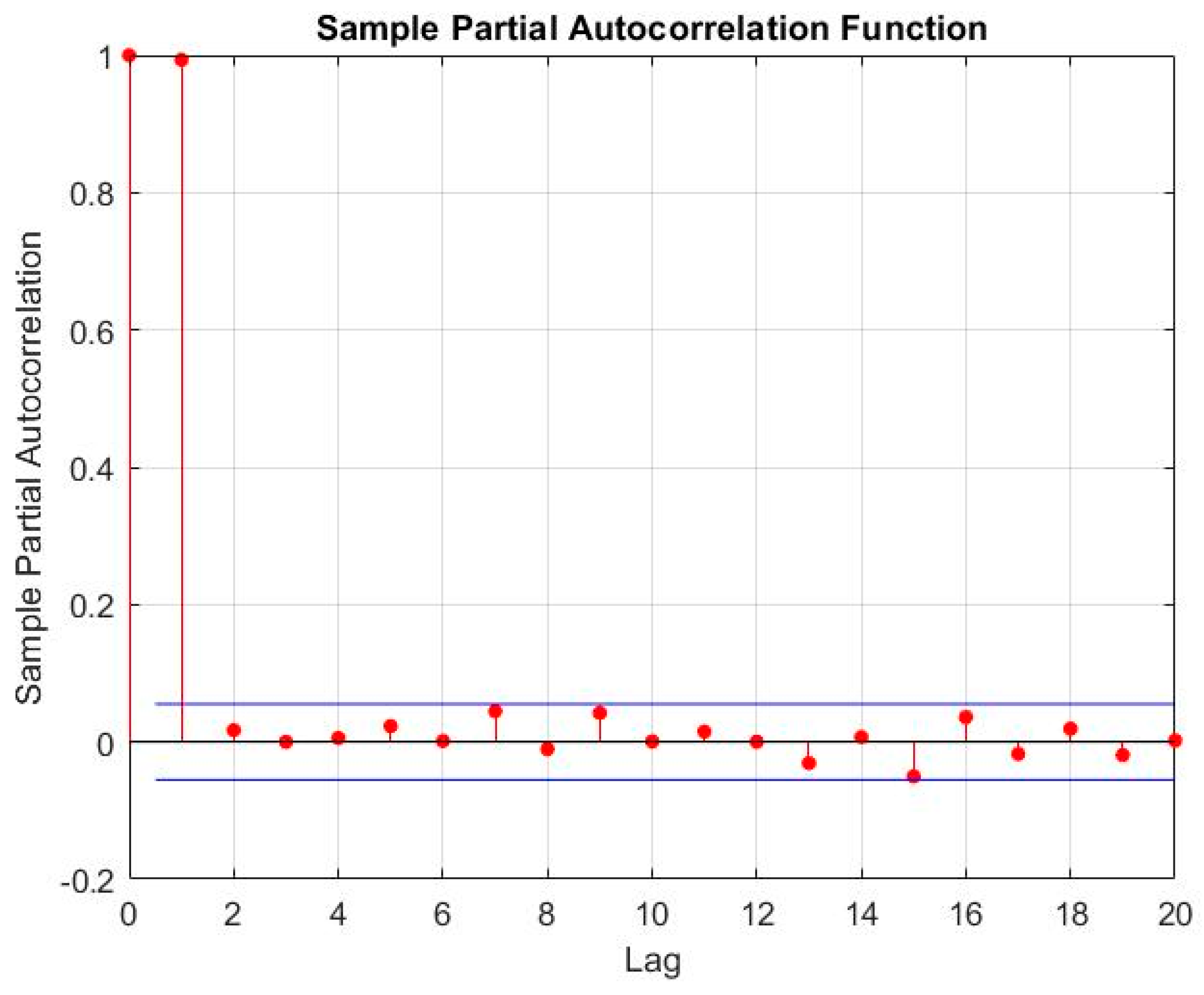

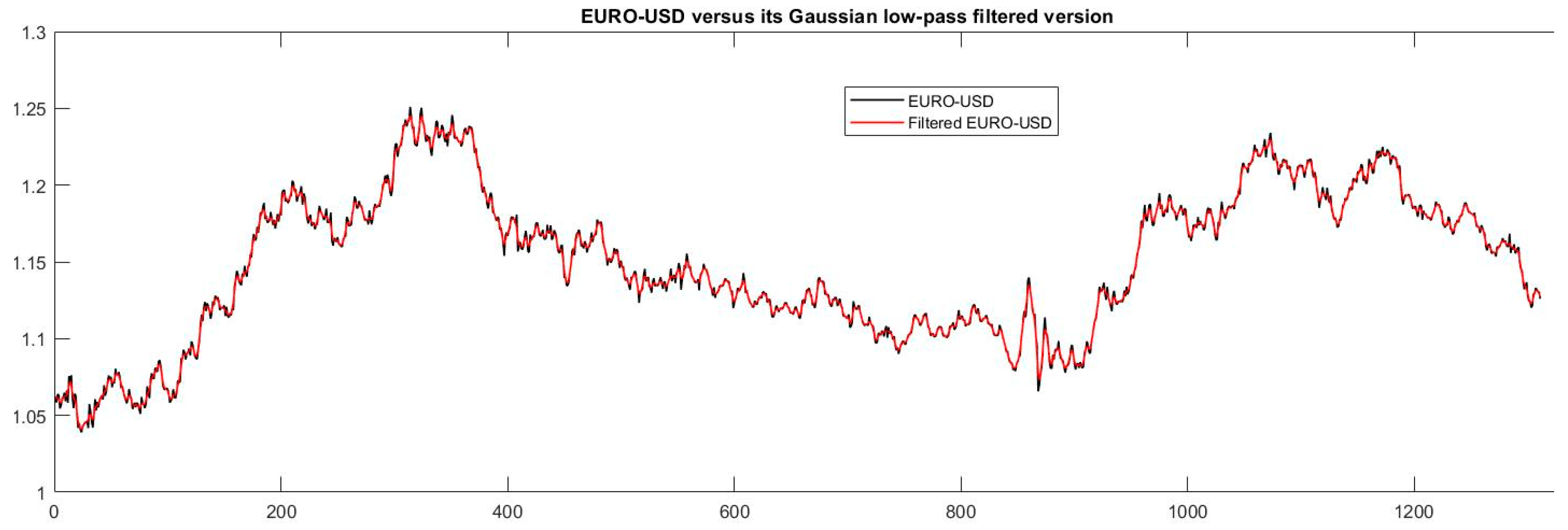

3.2. Data Smoothing

4. Evolutionary Search in LSTMs Space

4.1. Two-Membered Evolution Strategies

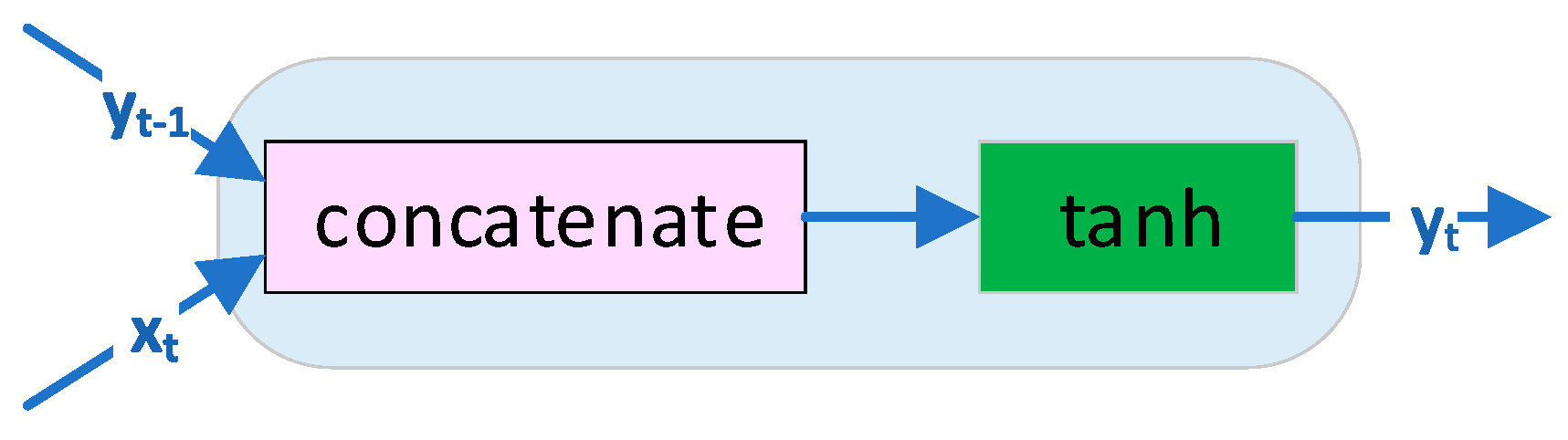

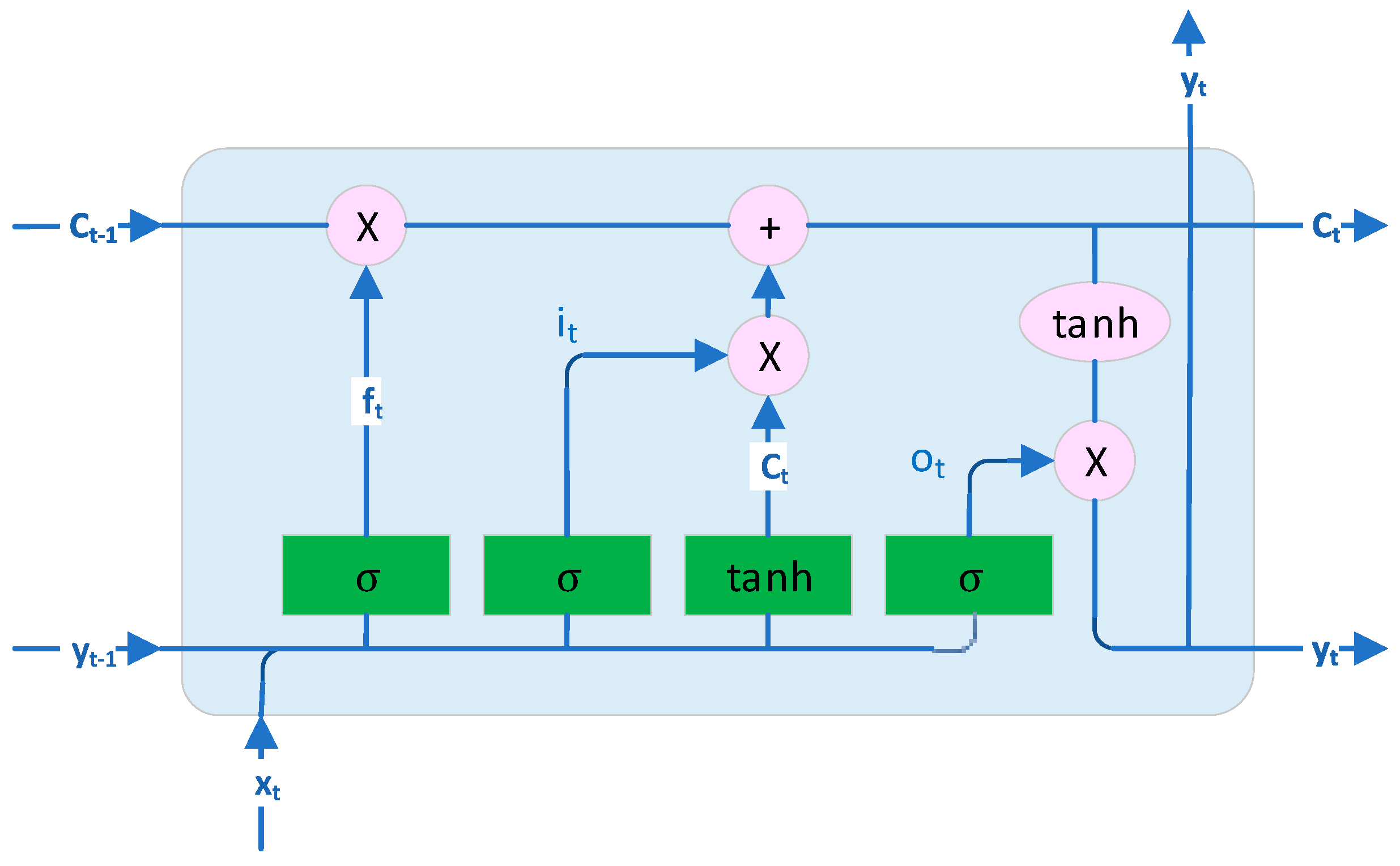

4.2. LSTM

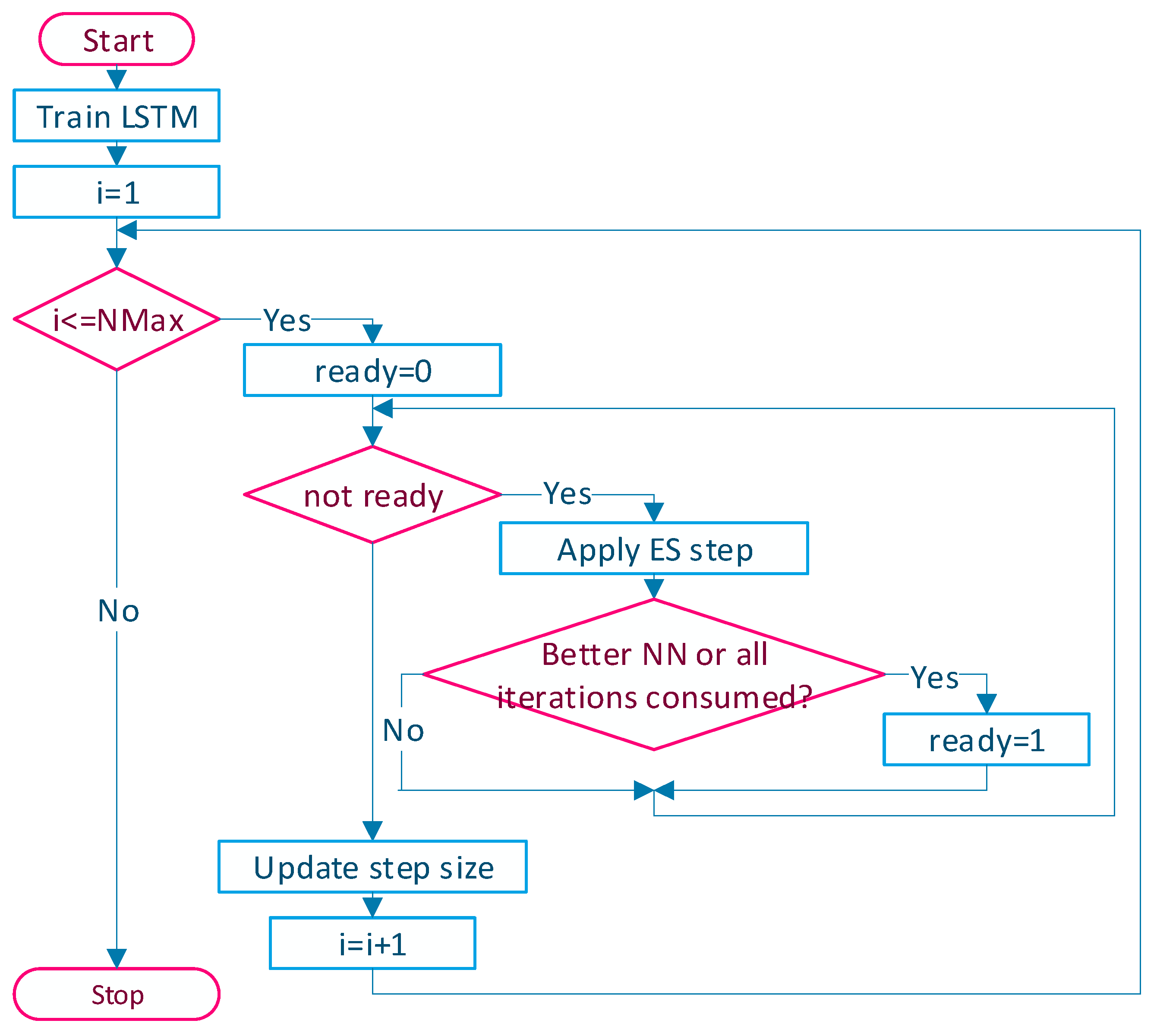

4.3. ES-LSTM Method

| Algorithm 1: |

| Inputs. , , ct, NMax, NAtt, , and |

| Step 1. Train the LSTM neural network using and obtain |

| Step 2. Compute , and using and (3), (4) and (9). |

| Step 3. for i =1..NMax |

| 3.1. ok ← 0; nr_a ← 0; |

| 3.2. while not ok |

| 3.2.1. Compute . |

| 3.2.2. nr_a ← nr_a + 1 |

| 3.2.3. Compute , and using and (3), (4) and (9). |

| 3.2.4. if and ok ← 1 |

| 3.2.5. if nr_a = NAtt |

| ok ← 1; |

| 3.3. if nr_a< |

| else if nr_a |

| Outputs: LSTM corresponding to . |
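The control flow of Algorithm 1 (an outer loop of NMax generations, each allowing at most NAtt mutation attempts before giving up) can be sketched as a generic two-membered evolution strategy. This is a minimal sketch under stated assumptions, not the authors' method: the candidate is a plain list of floats rather than LSTM weights, the fitness function is a toy stand-in for the metrics computed in Steps 2 and 3.2.3, and the step-size update is a hypothetical rule, since the update in Step 3.3 is not recoverable from the text. The names `one_plus_one_es`, `grow` and `shrink` are illustrative.

```python
import random


def one_plus_one_es(fitness, parent, sigma, n_max, n_att,
                    grow=1.1, shrink=0.9, seed=0):
    """Generic (1+1)-ES maximising `fitness`, with a cap on attempts per generation.

    Assumptions: Gaussian mutation with a single step size `sigma`, and a
    hypothetical step-size rule (widen after a success, narrow after a failure).
    """
    rng = random.Random(seed)
    best = list(parent)
    best_fit = fitness(best)
    for _ in range(n_max):                      # Step 3: for i = 1..NMax
        improved = False                        # plays the role of `ok`
        attempts = 0                            # plays the role of `nr_a`
        while not improved and attempts < n_att:
            # Mutate every component of the current parent.
            child = [w + rng.gauss(0.0, sigma) for w in best]
            attempts += 1
            child_fit = fitness(child)
            if child_fit > best_fit:            # keep the child only if it is better
                best, best_fit = child, child_fit
                improved = True
        # Hypothetical adaptation of the mutation step size.
        sigma *= grow if improved else shrink
    return best, best_fit, sigma


def toy_fitness(w):
    """Toy objective with its maximum at the origin (illustration only)."""
    return -sum(x * x for x in w)


start = [3.0, -2.0]
best, best_fit, final_sigma = one_plus_one_es(toy_fitness, start, 0.5, 50, 5)
```

Because a child replaces the parent only when its fitness is strictly higher, the returned fitness can never be worse than that of the starting point, which mirrors the role of the initial trained network as the first parent.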

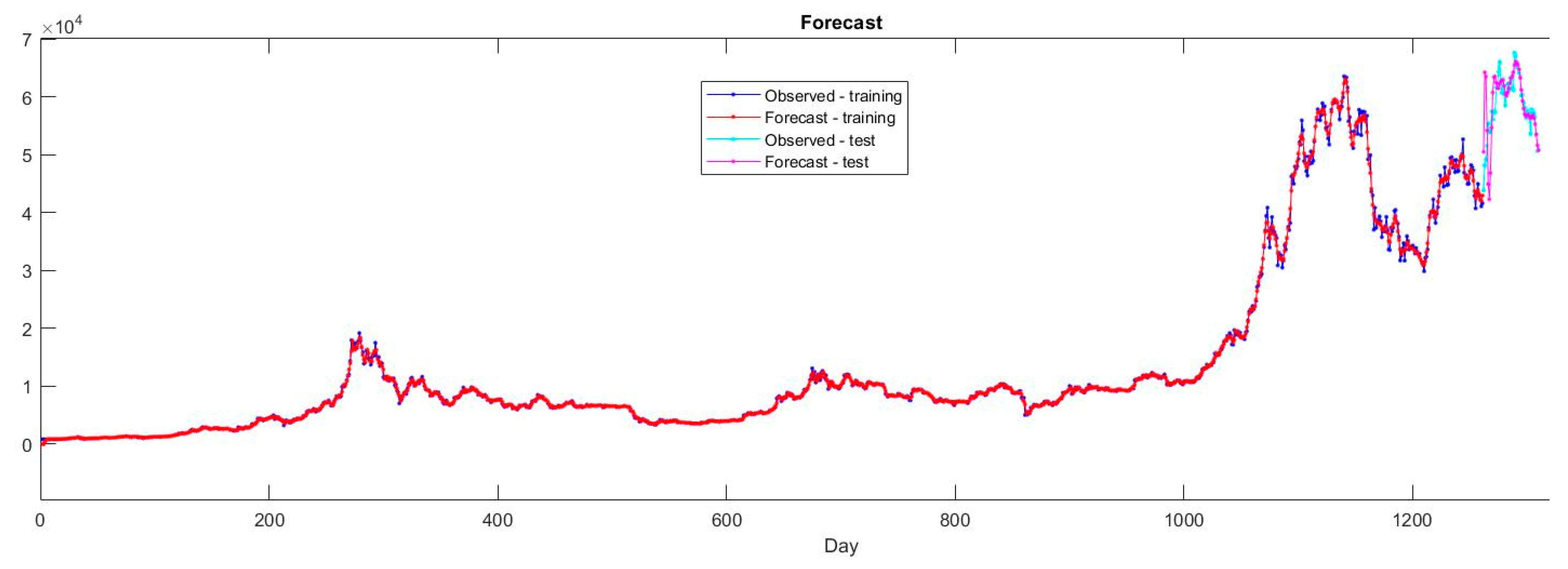

5. Experiments

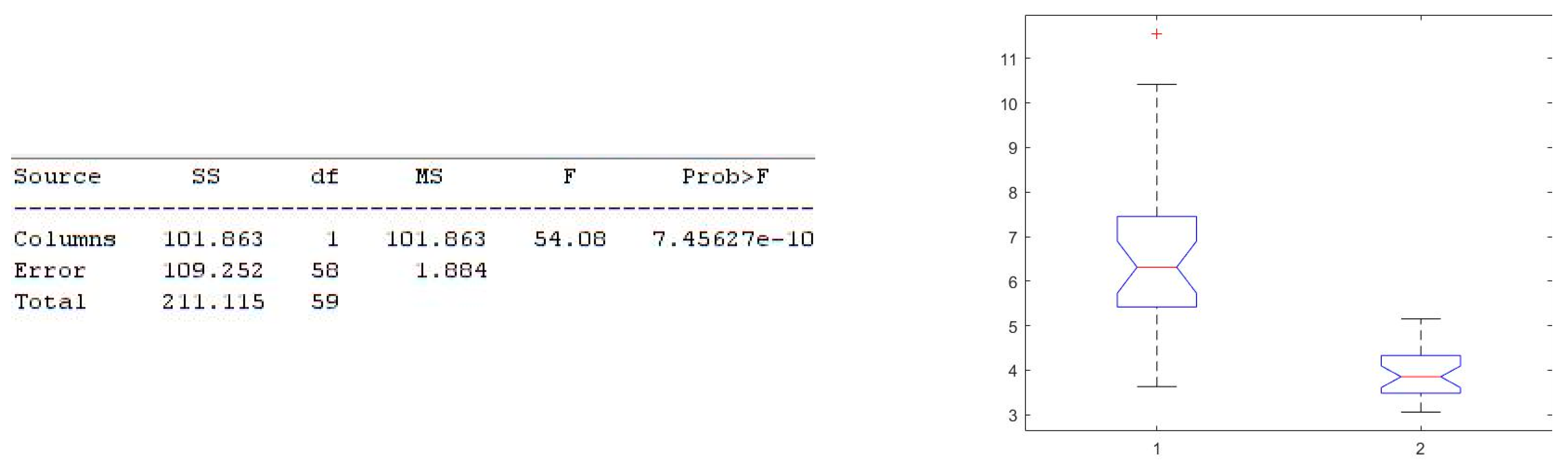

- 1.

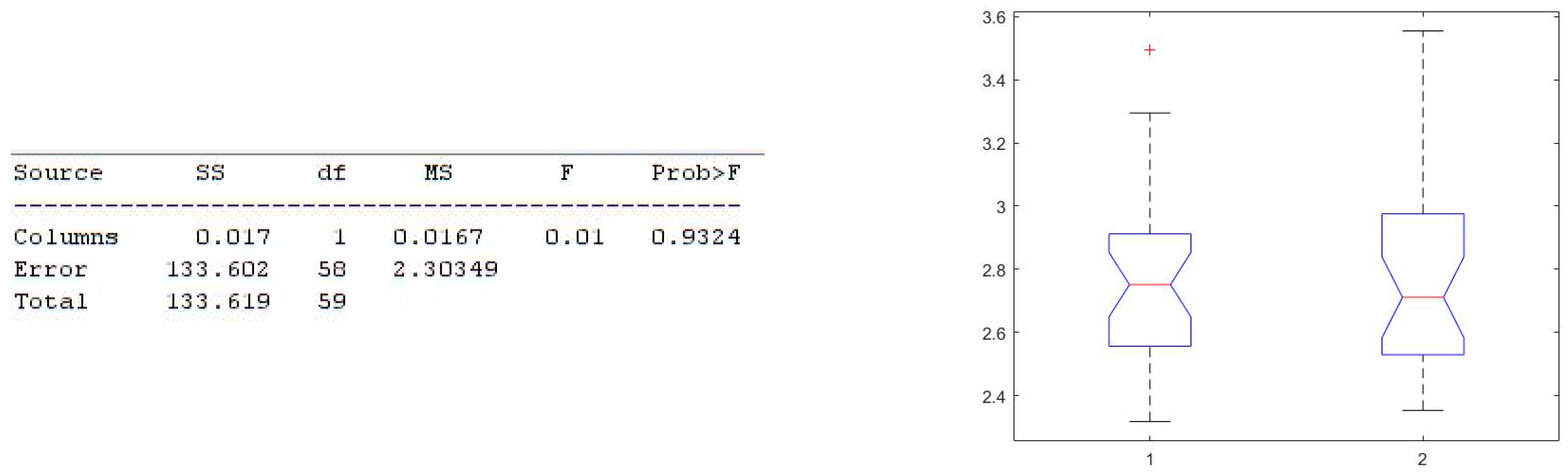

- MAPE indicator. The result indicates a similarity between the MAPE values.

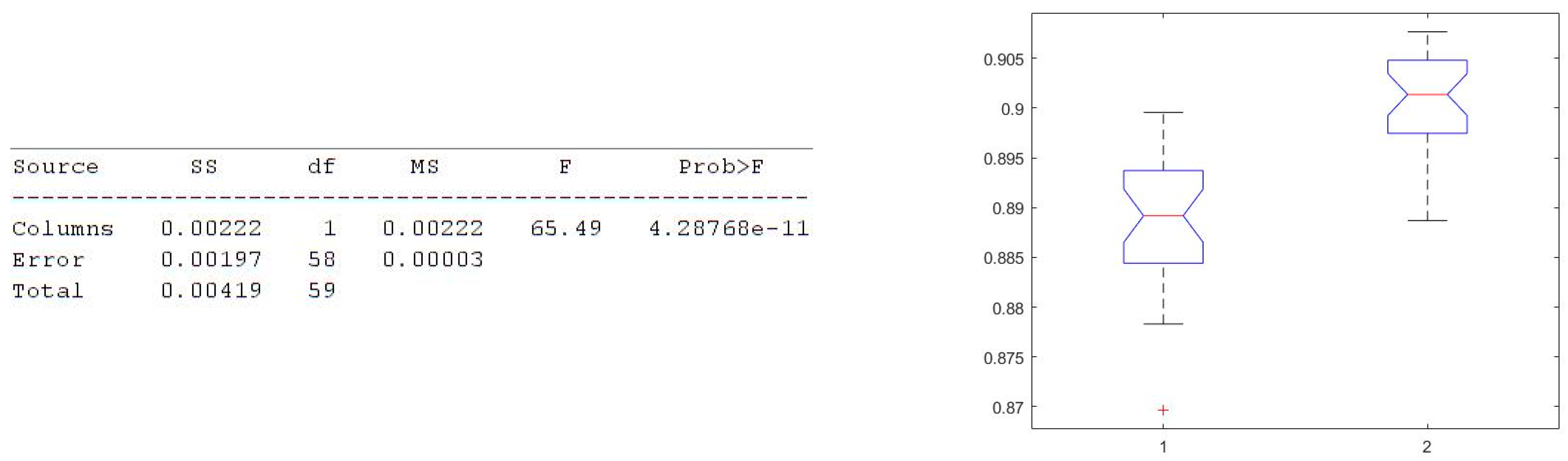

- 2.

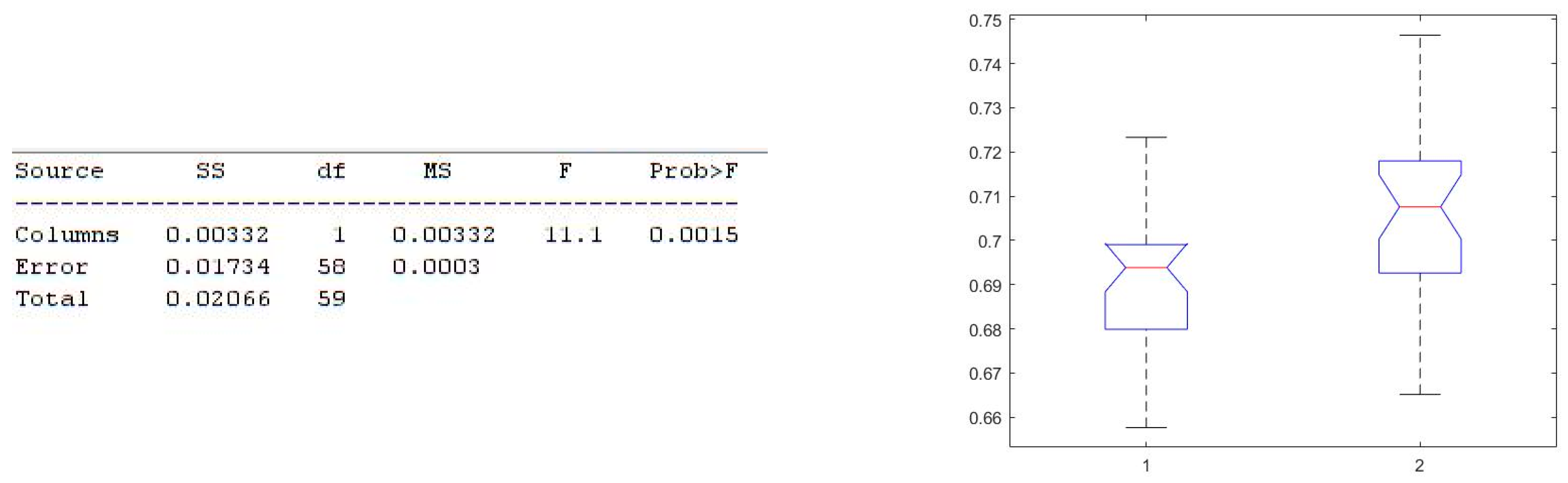

- F1 indicator. The result indicates a dissimilarity between the F1 values, the one corresponding to the proposed method being better.

- 3.

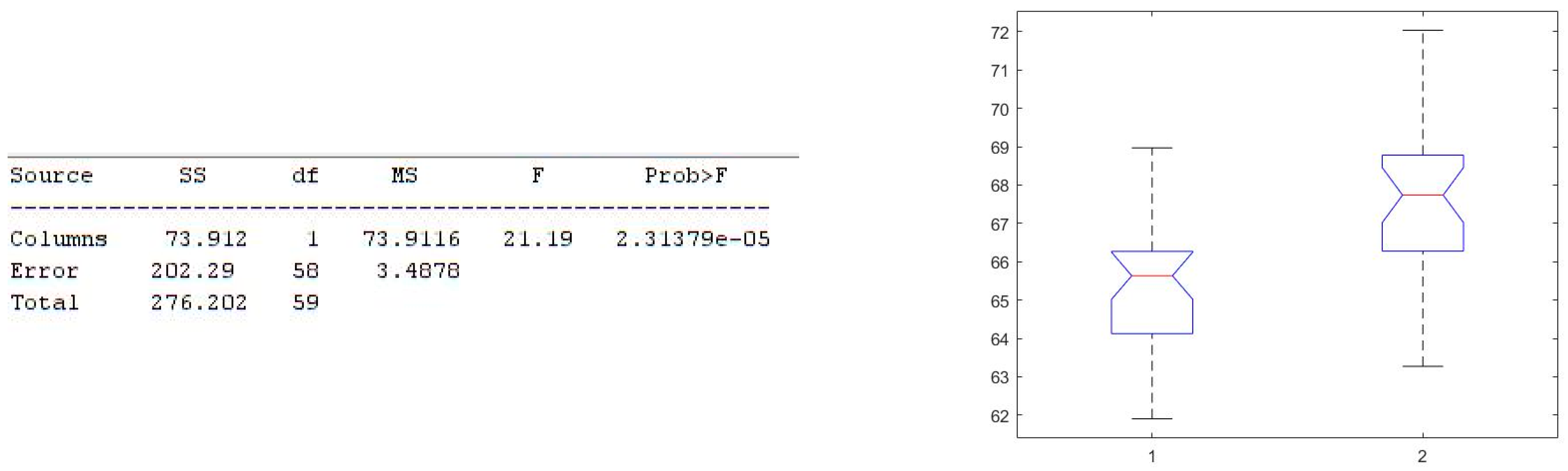

- POCID indicator. The result indicates a dissimilarity between the POCID values, the one corresponding to the proposed method being significantly better.

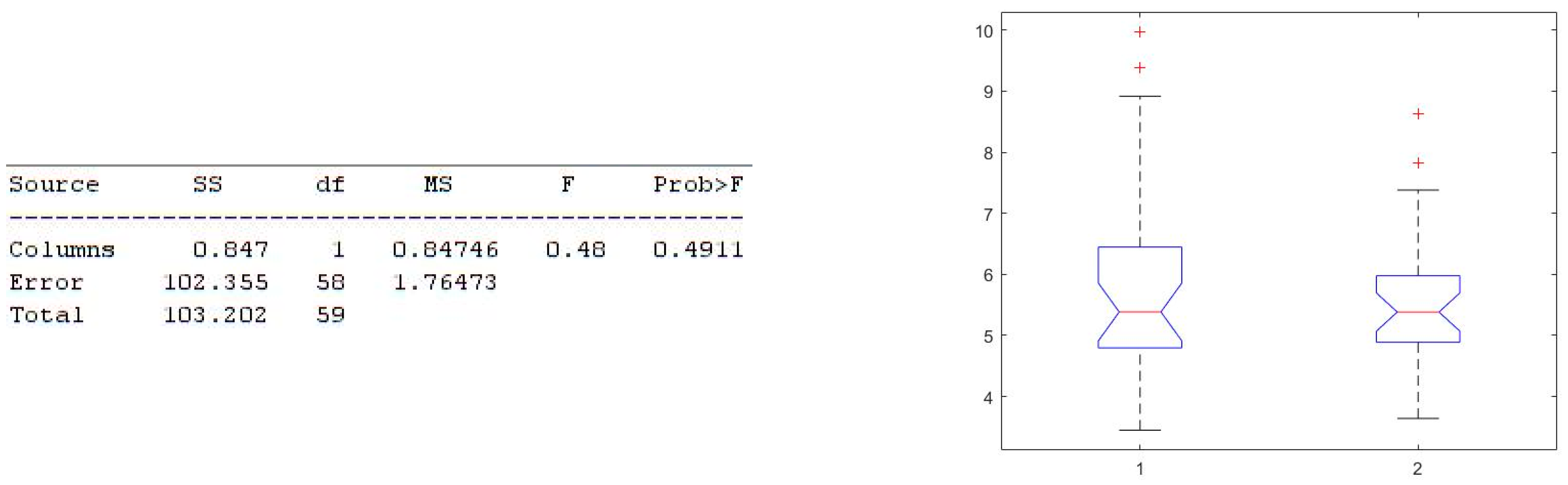

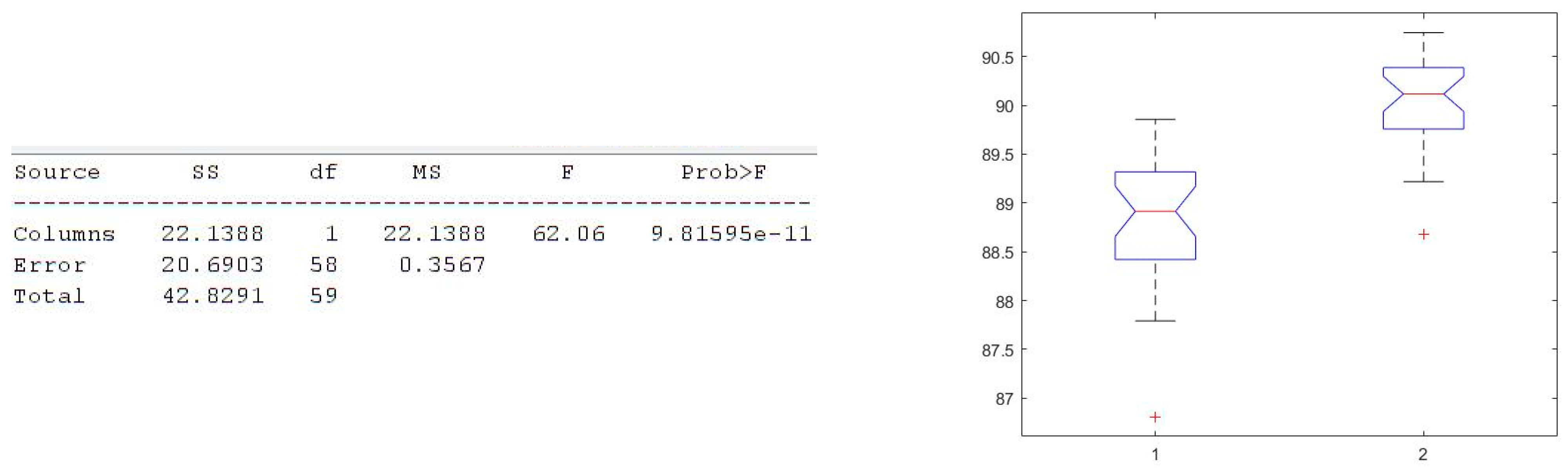

1. MAPE indicator. The result indicates similar MAPE values.

2. F1 indicator. The result indicates that the F1 values differ, with the proposed method scoring significantly better.

3. POCID indicator. The result indicates that the POCID values differ, with the proposed method scoring significantly better.

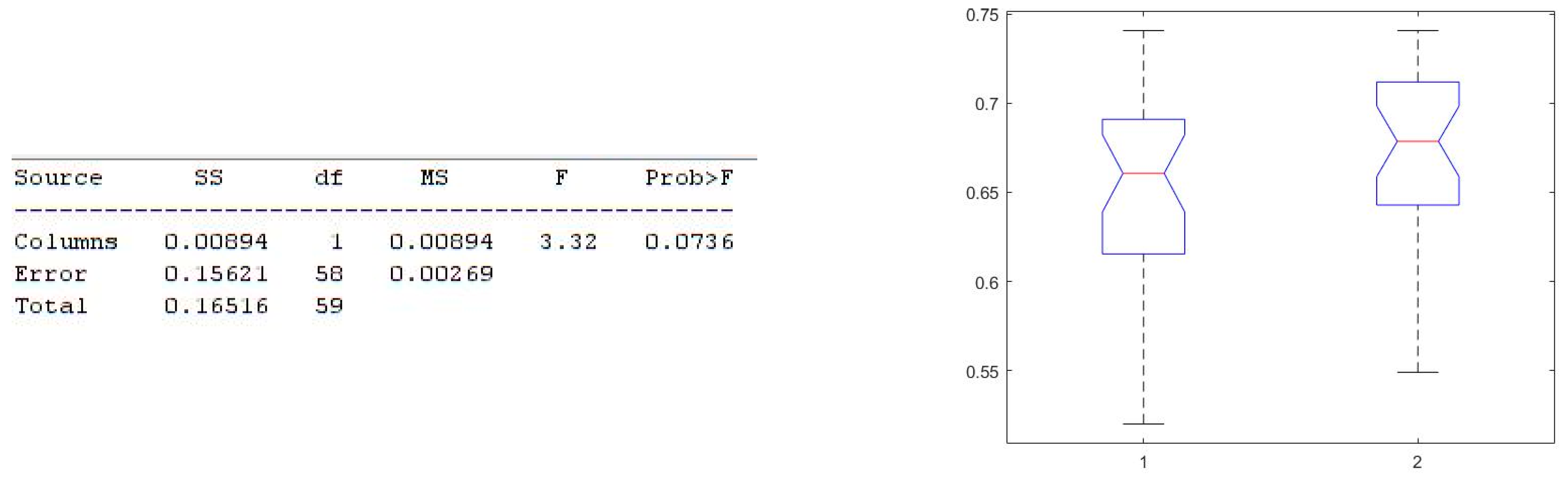

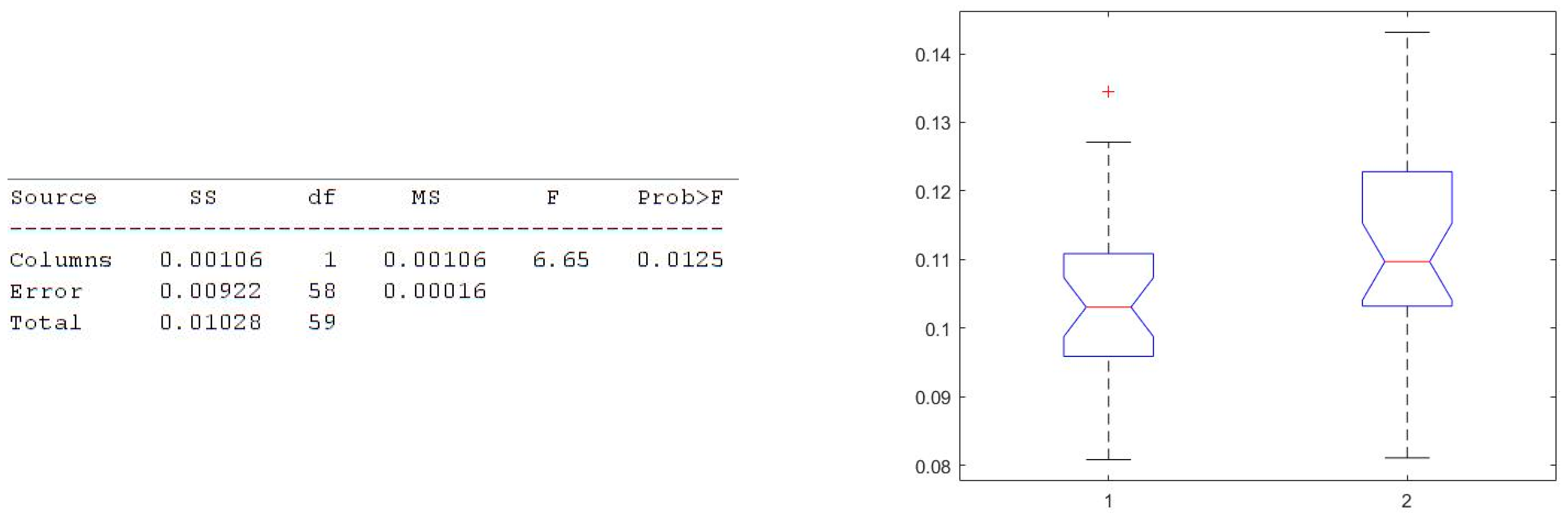

1. MAPE indicator. The result indicates that the MAPE values differ, with the proposed method scoring far better.

2. F1 indicator. The result indicates that the F1 values differ, with the proposed method scoring far better.

3. POCID indicator. The result indicates that the POCID values differ, with the proposed method scoring significantly better.

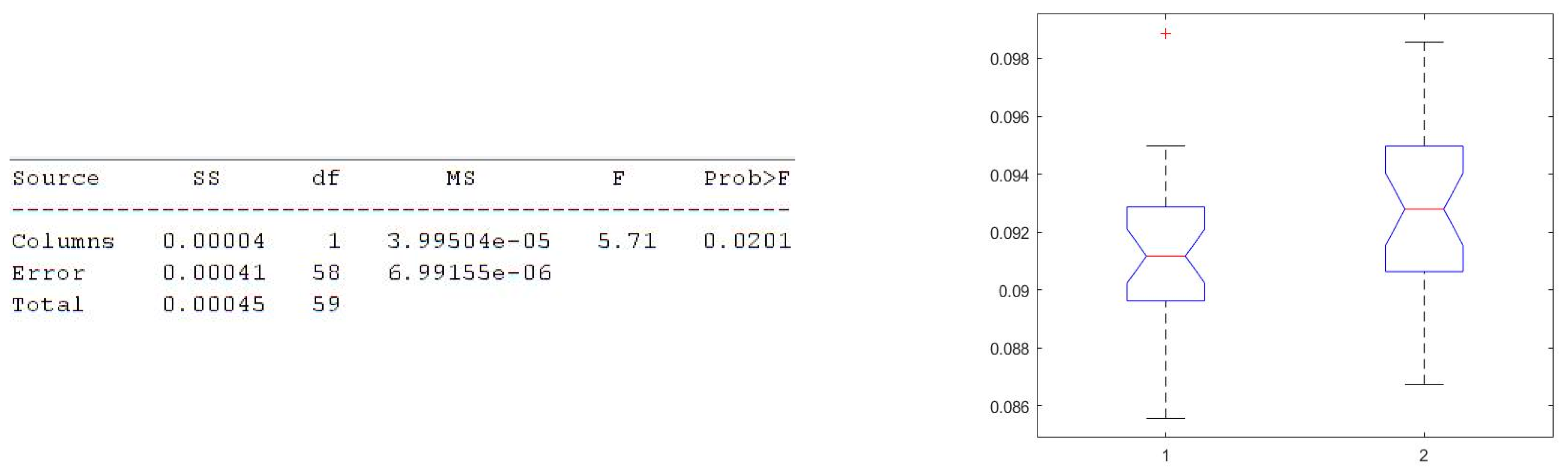

- 1.

- MAPE indicator. The result indicates a similarity between the MAPE values.

- 2.

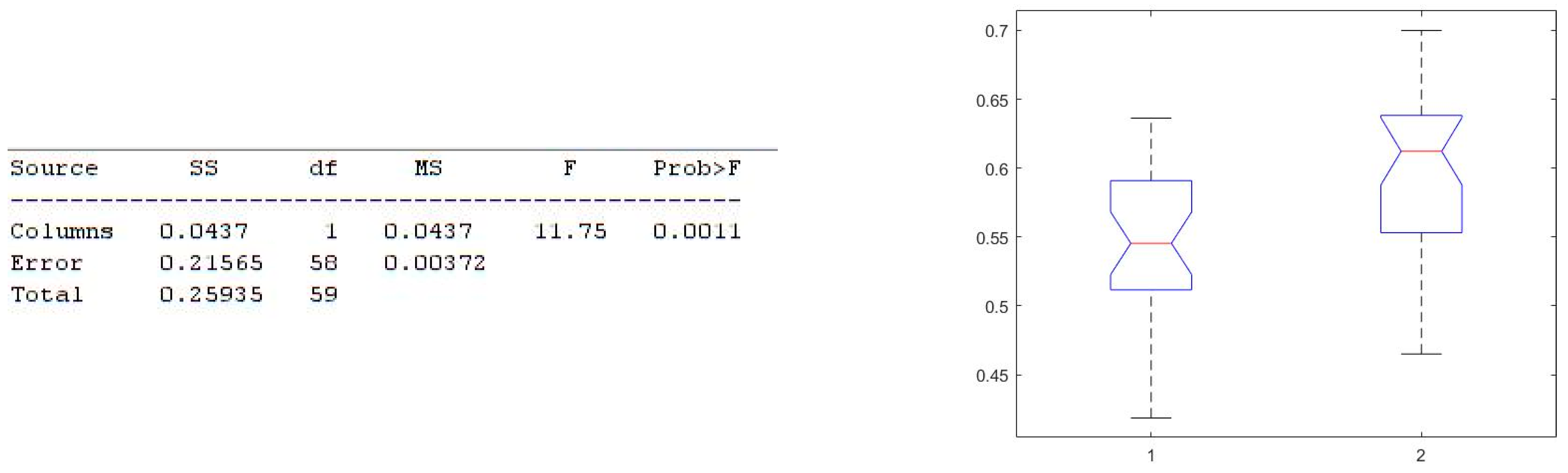

- F1 indicator. The result indicates a dissimilarity between the F1 values, and the one corresponding to the proposed method is slightly better.

- 3.

- POCID indicator. The result indicates a relative similarity between the POCID values, but the one corresponding to the proposed method is slightly better.

1. MAPE indicator. The result indicates relatively similar MAPE values.

2. F1 indicator. The result indicates that the F1 values differ, with the proposed method scoring significantly better.

3. POCID indicator. The result indicates that the POCID values differ, with the proposed method scoring significantly better.

1. MAPE indicator. The result indicates similar MAPE values.

2. F1 indicator. The result indicates that the F1 values differ, with the proposed method scoring better.

3. POCID indicator. The result indicates that the POCID values differ, with the proposed method scoring better.

6. Conclusions and Outlooks

Author Contributions

Funding

Conflicts of Interest


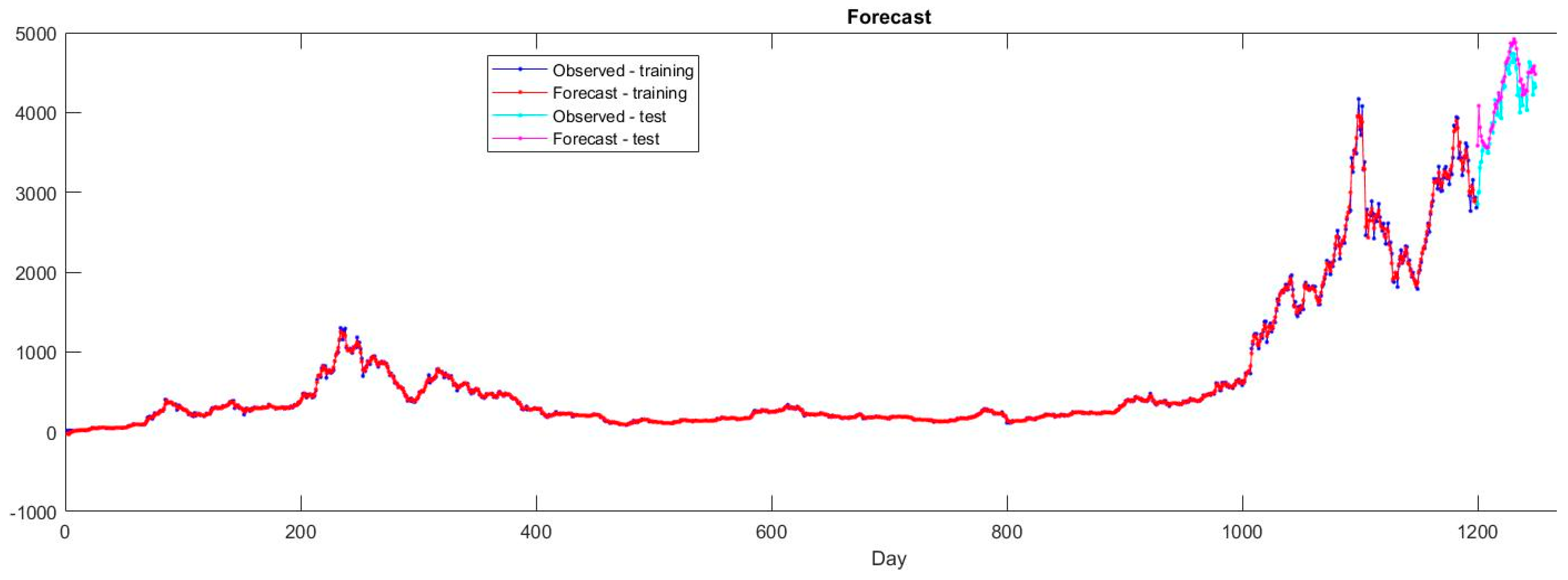

| RMSE | MAPE | F1 | POCID | |

|---|---|---|---|---|

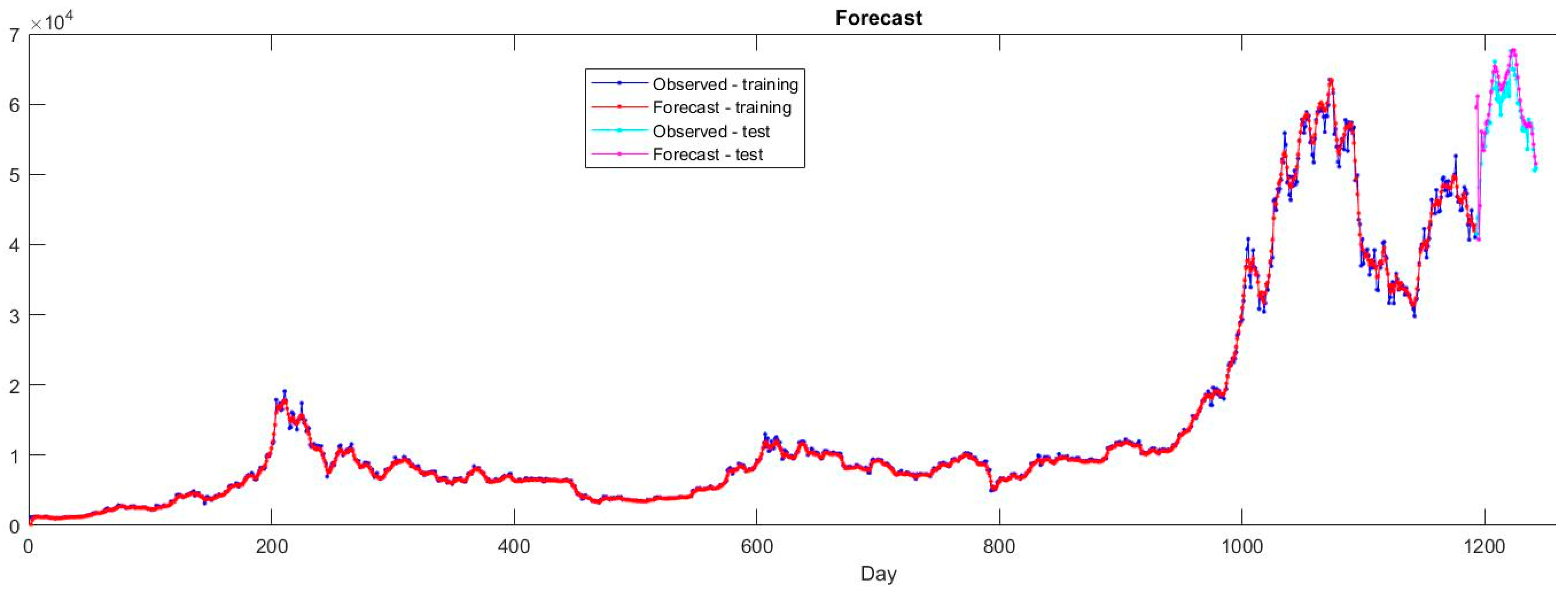

| LSTM—training | 508.18 | 2.77 | 0.690 | 65.36 |

| ES-LSTM—training | 525.27 | 2.77 | 0.705 | 67.58 |

| LSTM—test | 3906.25 | 4.423 | 0.541 | 57.77 |

| ES-LSTM—test | 3935.64 | 4.327 | 0.601 | 62.99 |

| RMSE | MAPE | F1 | POCID | |

|---|---|---|---|---|

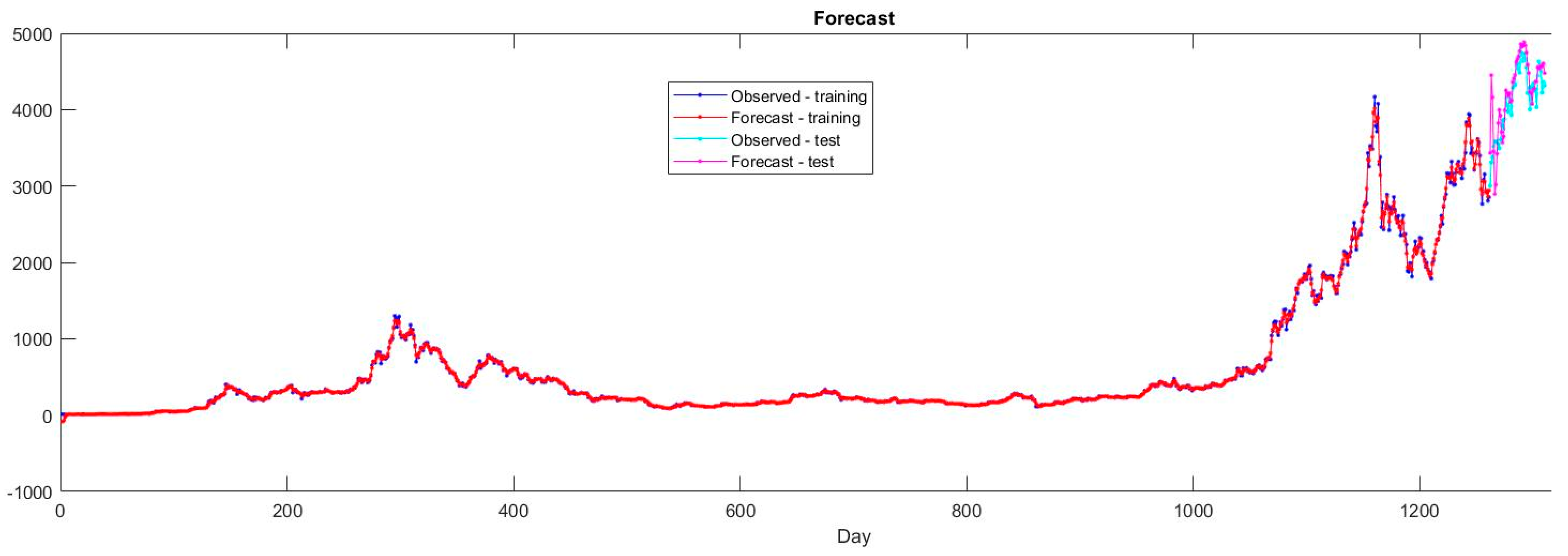

| LSTM—training | 28.17 | 6.54 | 0.693 | 67.51 |

| ES-LSTM—training | 30.23 | 3.93 | 0.714 | 69.84 |

| LSTM—test | 315.89 | 5.73 | 0.648 | 61.59 |

| ES-LSTM—test | 304.46 | 5.49 | 0.673 | 63.33 |

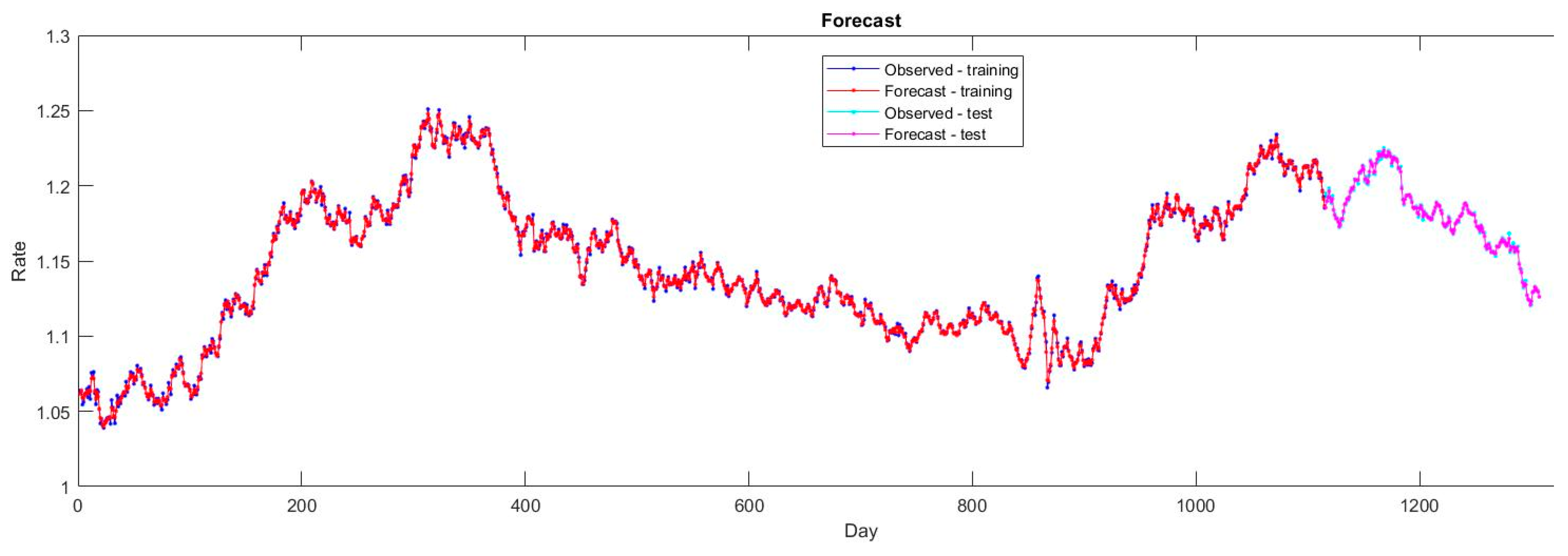

| Method (data) | RMSE | MAPE | F1 | POCID |
|---|---|---|---|---|
| LSTM (training) | 0.0014 | 0.092 | 0.888 | 88.82 |
| ES-LSTM (training) | 0.0014 | 0.093 | 0.901 | 90.03 |
| LSTM (test) | 0.0030 | 0.103 | 0.836 | 86.16 |
| ES-LSTM (test) | 0.0037 | 0.111 | 0.856 | 87.51 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cocianu, C.L.; Uscatu, C.R.; Avramescu, M. Improvement of LSTM-Based Forecasting with NARX Model through Use of an Evolutionary Algorithm. Electronics 2022, 11, 2935. https://doi.org/10.3390/electronics11182935
