RGB-Based Triple-Dual-Path Recurrent Network for Underwater Image Dehazing

Abstract

1. Introduction

Contribution

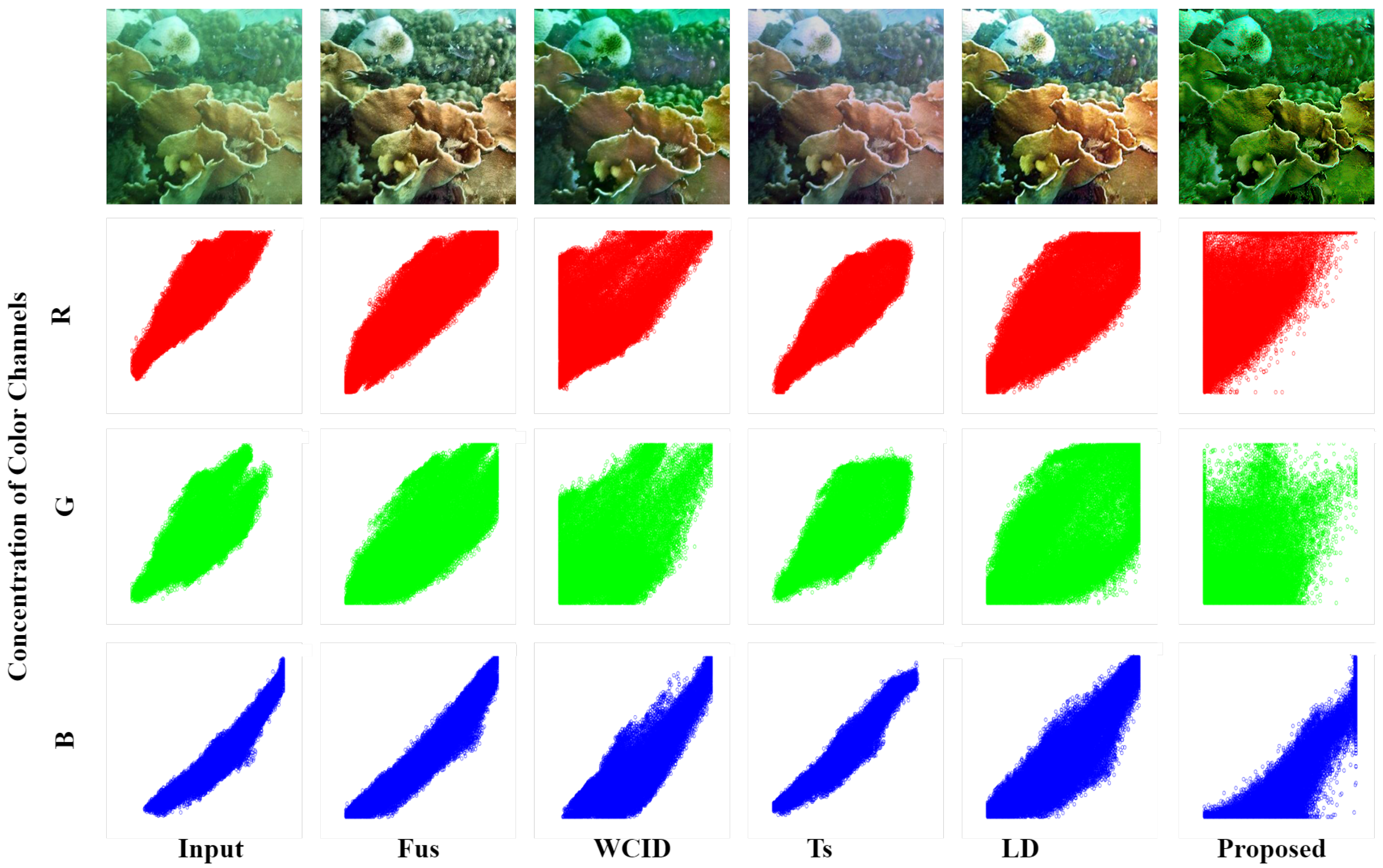

- The input image is decomposed by RGB color channel and by feature, and each color channel is further split into two units by grouping similar pixels with k-means clustering, which is described in detail in [24,25]. Grouping by similarity makes similar pixels easier to identify and adapt to and, by extension, removes weakly correlated pixels, leaving only those with a higher correlation.

- The triple-dual structure and its parallel interactions allow a comprehensive comparison, so even minor features, i.e., pixels with the weakest correlations, are taken into account. This improves the perceived visual quality of the final image.

- The softmax-weighted fusion used in the proposed structure also preserves color, which explains why the colors of the proposed result closely match those of the input. The fusion weights are learned adaptively from the confidence levels of each pixel's contribution in each color channel over the successive fusion steps.
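The two mechanisms named in the contributions, the per-channel split into two units and the softmax-weighted fusion, can be illustrated with a minimal sketch. It is not the network described in this paper: the function names (`two_means_1d`, `decompose_rgb`, `softmax_fuse`) are hypothetical, clustering on scalar pixel intensity is an assumption, and the confidence scores are passed in by hand, whereas the paper learns them adaptively.

```python
import math
from typing import Dict, List, Sequence, Tuple

Split = Tuple[List[int], Tuple[float, float]]


def two_means_1d(values: Sequence[float], iters: int = 50) -> Split:
    """k-means with k=2 on scalar intensities (Lloyd's algorithm).

    Returns one label per value (0 = darker unit, 1 = brighter unit)
    and the two centroids. Clustering on raw intensity is an assumption
    made for this sketch.
    """
    lo, hi = float(min(values)), float(max(values))
    if lo == hi:  # flat channel: every pixel lands in the same unit
        return [0] * len(values), (lo, hi)

    def assign(a: float, b: float) -> List[int]:
        return [0 if abs(v - a) <= abs(v - b) else 1 for v in values]

    for _ in range(iters):
        labels = assign(lo, hi)
        low = [v for v, k in zip(values, labels) if k == 0]
        high = [v for v, k in zip(values, labels) if k == 1]
        new_lo, new_hi = sum(low) / len(low), sum(high) / len(high)
        if (new_lo, new_hi) == (lo, hi):  # centroids stopped moving
            break
        lo, hi = new_lo, new_hi
    return assign(lo, hi), (lo, hi)


def decompose_rgb(pixels: Sequence[Tuple[float, float, float]]) -> Dict[str, Split]:
    """Split each of the three color channels into two units (3 x 2 paths)."""
    return {
        name: two_means_1d([p[idx] for p in pixels])
        for idx, name in enumerate("RGB")
    }


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: non-negative weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_fuse(candidates: Sequence[float], scores: Sequence[float]) -> float:
    """Fuse candidate values for one pixel using softmax weights.

    `scores` stands in for learned confidence levels (an assumption here).
    """
    return sum(w * c for w, c in zip(softmax(scores), candidates))
```

Because the softmax weights are non-negative and sum to one, the fused value is a convex combination of the candidates and cannot leave their range, which is one plausible reading of why such a fusion tends to keep output colors close to the input.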

2. Proposed Methodology

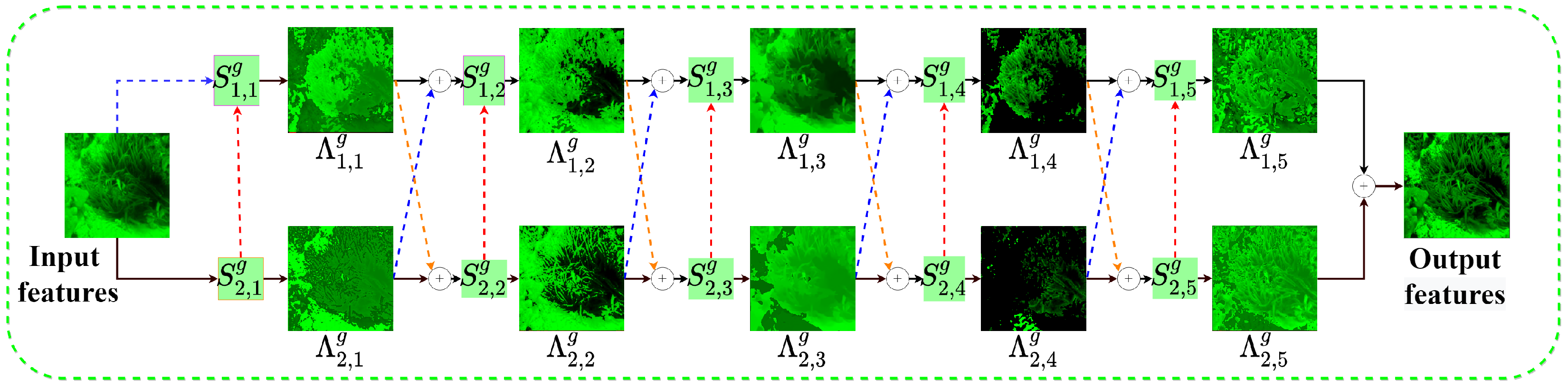

2.1. Triple-Dual-Path Recurrent Network

2.1.1. Network Architecture

2.1.2. Triple-Dual-Path Block

2.1.3. Interaction Functions within the Dual Blocks

3. Experimental Results

3.1. Dataset

3.2. Comparison Methods

3.3. Objective Evaluation of the Proposed Images’ Visual Quality

3.4. Subjective Assessment

Analysis of Underwater Image Overall Quality

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Alenezi, F.; Armghan, A.; Mohanty, S.N.; Jhaveri, R.H.; Tiwari, P. Block-greedy and cnn based underwater image dehazing for novel depth estimation and optimal ambient light. Water 2021, 13, 3470.

- Park, E.; Sim, J.Y. Underwater image restoration using geodesic color distance and complete image formation model. IEEE Access 2020, 8, 157918–157930.

- Zhu, Z.; Luo, Y.; Wei, H.; Li, Y.; Qi, G.; Mazur, N.; Li, Y.; Li, P. Atmospheric light estimation based remote sensing image dehazing. Remote Sens. 2021, 13, 2432.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

- Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated context aggregation network for image dehazing and deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1375–1383.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- Xiong, J.; Zhuang, P.; Zhang, Y. An Efficient Underwater Image Enhancement Model With Extensive Beer-Lambert Law. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Online, 25–28 October 2020; pp. 893–897.

- Wang, Y.; Yu, X.; An, D.; Wei, Y. Underwater image enhancement and marine snow removal for fishery based on integrated dual-channel neural network. Comput. Electron. Agric. 2021, 186, 106182.

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

- Fu, X.; Huang, Y.; Zeng, D.; Zhang, X.P.; Ding, X. A fusion-based enhancing approach for single sandstorm image. In Proceedings of the 2014 IEEE 16th International Workshop on Multimedia Signal Processing (MMSP), Jakarta, Indonesia, 22–24 September 2014; pp. 1–5.

- Zhang, Y.; Sun, X.; Dong, J.; Chen, C.; Lv, Q. GPNet: Gated pyramid network for semantic segmentation. Pattern Recognit. 2021, 115, 107940.

- Gedamu, K.; Ji, Y.; Yang, Y.; Gao, L.; Shen, H.T. Arbitrary-view human action recognition via novel-view action generation. Pattern Recognit. 2021, 118, 108043.

- Liang, Z.; Wang, Y.; Ding, X.; Mi, Z.; Fu, X. Single underwater image enhancement by attenuation map guided color correction and detail preserved dehazing. Neurocomputing 2021, 425, 160–172.

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Guo, Y.; Li, H.; Zhuang, P. Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J. Ocean. Eng. 2019, 45, 862–870.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Zhang, X.; Jiang, R.; Wang, T.; Luo, W. Single image dehazing via dual-path recurrent network. IEEE Trans. Image Process. 2021, 30, 5211–5222.

- Liu, S.; Pan, J.; Yang, M.H. Learning recursive filters for low-level vision via a hybrid neural network. In Proceedings of the 2016 European Conference on Computer Vision, Amsterdam, The Netherlands, 11–16 October 2016; pp. 560–576.

- Zhao, C.; Sun, L.; Purkait, P.; Duckett, T.; Stolkin, R. Dense rgb-d semantic mapping with pixel-voxel neural network. Sensors 2018, 18, 3099.

- Burney, S.A.; Tariq, H. K-means cluster analysis for image segmentation. Int. J. Comput. Appl. 2014, 96.

- Dehariya, V.K.; Shrivastava, S.K.; Jain, R. Clustering of image data set using k-means and fuzzy k-means algorithms. In Proceedings of the 2010 International Conference on Computational Intelligence and Communication Networks, Bhopal, India, 26–28 November 2010; pp. 386–391.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Alenezi, F.; Santosh, K. Geometric Regularized Hopfield Neural Network for Medical Image Enhancement. Int. J. Biomed. Imaging 2021, 2021, 6664569.

- Alenezi, F.S.; Ganesan, S. Geometric-Pixel Guided Single-Pass Convolution Neural Network With Graph Cut for Image Dehazing. IEEE Access 2021, 9, 29380–29391.

- Deng, X.; Wang, H.; Liu, X. Underwater image enhancement based on removing light source color and dehazing. IEEE Access 2019, 7, 114297–114309.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2822–2837.

- Siqueira, M.G.; Diniz, P.S. Digital filters. In The Electrical Engineering Handbook; Elsevier: Amsterdam, The Netherlands, 2005; pp. 839–860.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 2017, 27, 379–393.

- Chiang, J.Y.; Chen, Y.C. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2011, 21, 1756–1769.

- Fu, X.; Fan, Z.; Ling, M.; Huang, Y.; Ding, X. Two-step approach for single underwater image enhancement. In Proceedings of the 2017 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Xiamen, China, 6–9 November 2017; pp. 789–794.

- Iqbal, M.; Riaz, M.M.; Ali, S.S.; Ghafoor, A.; Ahmad, A. Underwater Image Enhancement Using Laplace Decomposition. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Berman, D.; Treibitz, T.; Avidan, S. Diving into haze-lines: Color restoration of underwater images. In Proceedings of the 2017 British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017; pp. 1–50.

- Fu, X.; Cao, X. Underwater image enhancement with global–local networks and compressed-histogram equalization. Signal Process. Image Commun. 2020, 86, 115892.

- Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In Proceedings of the 2018 Pacific Rim Conference on Multimedia, Hefei, China, 21–22 September 2018; pp. 678–688.

- Liu, X.; Gao, Z.; Chen, B.M. MLFcGAN: Multilevel feature fusion-based conditional GAN for underwater image color correction. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1488–1492.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Qi, Q.; Zhang, Y.; Tian, F.; Wu, Q.J.; Li, K.; Luan, X.; Song, D. Underwater image co-enhancement with correlation feature matching and joint learning. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1133–1147.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Fu, Z.; Fu, X.; Huang, Y.; Ding, X. Twice mixing: A rank learning based quality assessment approach for underwater image enhancement. Signal Process. Image Commun. 2022, 102, 116622.

| Method | Reference | Year | Method | Reference | Year |
|---|---|---|---|---|---|
| Ancuti | [11] | 2012 | Guo | [36] | 2016 |
| Dark channel prior (DCP) | [4] | 2010 | Berman | [37] | 2017 |
| Histogram distribution prior (HP) | [36] | 2016 | Cosman | [8] | 2017 |
| Water-Net | [26] | 2019 | Zhuang | [19] | 2019 |
| Fus | [32] | 2017 | gl | [38] | 2020 |
| WCID | [33] | 2011 | CBF | [32] | 2017 |
| Ts | [34] | 2017 | ULAP | [39] | 2018 |
| LD | [35] | 2020 | UWCNN | [20] | 2020 |
| MLFcGAN | [40] | 2019 | FUnIEGAN | [41] | 2020 |
| waterNet | [26] | 2019 | UICoE-Net | [42] | 2021 |

| Technique | NIQE | UIQM | UCIQE |
|---|---|---|---|
| Input | 6.3007 | 1.0773 | 30.6619 |
| Ancuti [11] | 4.704 | 1.2584 | 31.4635 |
| DCP [4] | 5.9239 | 1.1049 | 32.0602 |
| HP [36] | 4.2751 | 1.5625 | 35.1167 |
| Water-Net [26] | 6.6270 | 1.0714 | 26.7070 |
| Proposed | 4.0614 | 1.6016 | 35.7874 |

| Technique | NIQE | UIQM | UCIQE |
|---|---|---|---|
| Input | 3.4554 | 1.0952 | 26.7702 |
| Fus [32] | 7.5204 | 1.5673 | 34.9629 |
| WCID [33] | 6.7322 | 1.7766 | 29.1488 |
| Ts [34] | 3.5524 | 1.1900 | 26.4551 |
| LD [35] | 3.5483 | 1.4806 | 31.6199 |
| Proposed | 3.9341 | 1.9499 | 32.9188 |

| Technique | mAP | NIQE | UIQM | UCIQE |
|---|---|---|---|---|
| Input | 0.1795 | 7.9761 | 1.4175 | 32.9212 |
| Ancuti [11] | 0.1891 | 8.4061 | 1.5278 | 33.1459 |
| Guo [36] | 0.2669 | 7.2922 | 1.5350 | 33.3579 |
| Berman [37] | 0.3394 | 7.4781 | 1.5617 | 33.4739 |
| Cosman [8] | 0.4095 | 7.1248 | 1.5371 | 34.1637 |
| Zhuang [19] | 0.4181 | 7.5798 | 1.5399 | 32.9392 |
| gl [38] | 0.4841 | 8.1367 | 1.5220 | 32.6404 |
| Proposed | 0.5017 | 9.2712 | 1.8174 | 35.9634 |

| Technique | NIQE | UIQM | UCIQE |
|---|---|---|---|
| Input | 5.4243 | 1.4116 | 32.6663 |
| Ancuti [11] | 5.9633 | 1.4541 | 37.1339 |
| Guo [36] | 5.6586 | 1.5828 | 33.8156 |
| Berman [37] | 5.4780 | 1.5612 | 33.3881 |
| Cosman [8] | 6.0975 | 1.4827 | 34.4037 |
| Zhuang [19] | 6.6109 | 1.4549 | 33.0792 |
| gl [38] | 5.9403 | 1.4536 | 34.5999 |
| Proposed | 6.2905 | 2.0299 | 37.1459 |

| Technique | NIQE | UIQM | UCIQE |
|---|---|---|---|
| Input | 5.6203 | 1.6118 | 31.1119 |
| CBF [32] | 7.9360 | 1.6938 | 31.0506 |
| ULAP [39] | 11.8648 | 1.8419 | 33.7829 |
| UWCNN [20] | 6.9041 | 1.6054 | 30.4853 |
| MLFcGAN [40] | 4.9413 | 1.4881 | 33.0000 |
| FUnIEGAN [41] | 7.6138 | 1.7062 | 32.1150 |
| waterNet [26] | 6.9541 | 1.6953 | 31.3519 |
| UICoE-Net [42] | 4.7577 | 1.5692 | 31.2444 |
| Proposed | 7.0530 | 2.1111 | 35.2936 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Alenezi, F. RGB-Based Triple-Dual-Path Recurrent Network for Underwater Image Dehazing. Electronics 2022, 11, 2894. https://doi.org/10.3390/electronics11182894