Abstract

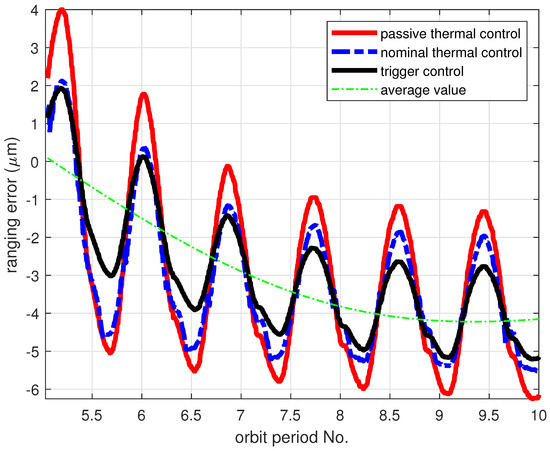

Micron-level K-band microwave ranging in space relies on the stability of the on-board payload thermal control; however, the large number of thermal sensors and heating devices around the deployed instruments consumes the precious internal communication resources of the central computer. A further problem is that the payload thermal protection environment can deteriorate gradually over years of operation. In this paper, a new trigger-based thermal control system design is proposed that reduces the spaceborne communication burden, accounts for actuator saturation, and guarantees stable temperature fluctuations of microwave payloads in space missions. The controller combines a nominal PID inner loop with constant sampling and a trigger-based outer loop under heating device saturation constraints. Moreover, an iterative model-free reinforcement learning process is adopted to estimate the thermal dynamic modeling uncertainty online. Extensive experiments in a laboratory environment verify the performance of the proposed trigger thermal control, which yields smaller temperature fluctuations than the nominal control and clearly improves system communication efficiency. The online learning algorithm is also tested under deliberately imposed thermal conditions that deviate from the original system, and the results quickly converge to normal once the thermal disturbance is removed. Finally, the ranging accuracy of the whole system is tested: the trigger-based control strategy improves ranging performance by 25% (RMS), about 2.2 µm, compared with the nominal control method.

1. Introduction

K-band microwave ranging (MWR) technology can provide micron-level ranging measurements between spacecraft, with potential applications in Earth elevation surveying, gravity field detection, and other space missions [1,2]. The ranging accuracy (time delay) of the MWR system is mainly affected by the payload thermal conditions in space. A state-of-the-art payload thermal controller must therefore keep temperature fluctuations small during orbiting; however, the spacecraft thermal control system is a large-scale system involving hundreds or even thousands of temperature sensors and patch heaters around the instruments, which increases the communication load on the on-board central computer as space missions become more diversified [3,4,5]. At the same time, the thermal control system itself can deteriorate and drift away from its original dynamic model during multi-year space missions, so adaptive approaches should be adopted to deal with this situation. To address these problems, this paper proposes a new thermal control strategy based on triggered sampling and a model-free learning process.

Event-triggered control (ETC), in contrast to traditional time-triggered control with a fixed sampling period, updates the samples with a variable period [6,7,8]. Designers can impose thresholds on performance indexes according to actual needs, and the control signals are transmitted and updated inside the system only when the states exceed these thresholds [9,10]. Zhang [11] embedded ETC into a linear system model to address predictive control problems, updating the predictive sampling step through a fixed-threshold event-triggering mechanism. In [12], ETC design for multivariable linear industrial processes with time delay and quantization error is studied; the controller parameters are calculated by linear matrix inequalities (LMIs), and a Lyapunov-based proof of closed-loop asymptotic stability is provided. Azimi [13] proposed an ETC design that accounts for transmission delay and packet loss in chemical processes and can track the system set points under signal transmission constraints. For linear chemical control processes whose model parameters vary across working environments, Li [14] described the uncertain system using Markov random theory and designed an event-triggered sliding mode controller with finite-time convergence by combining homogeneous theory with an event-triggered mechanism. The thermal control system considered in this paper includes a saturated actuator and is therefore nonlinear, and scholars have also studied the application of ETC to nonlinear systems. References [15,16] analyzed nonlinear strict-feedback systems and uncertain strict-feedback nonlinear systems using adaptive control theory.
The results show that a control system with an event-triggering mechanism can improve signal transmission efficiency while also reducing energy consumption and cost. Abhinav [17] designed an adaptive event-triggered sliding mode controller by combining sliding mode control with ETC, and the results show good regulation performance in the presence of external disturbances and model uncertainty. Moreover, unknown nonlinear characteristics of the dynamic model can be approximated by advanced control methods such as fuzzy control [18], neural networks [19,20,21], and adaptive dynamic programming (ADP) [22]. References [23,24] considered the ADP triggering problem with a saturated actuator. Seuret [25] adopted the linear quadratic optimal control method. Reference [26] provided a stability analysis of the system with disturbance, and Reference [27] used inequality techniques to analyze the triggered system.

A thermal system with an ETC design relies on temperature sensors as state-sampling hardware, and it can diverge from the tracking trajectory if the measurements malfunction. Self-triggered control (STC) has been proposed in recent years [28,29,30,31,32]. The principle of STC is to predict the next triggering time from previously received data and the system dynamics. Compared with ETC, STC reduces the number of feedback transmissions, effectively reduces the on-board data transmission burden, and improves control efficiency. Wang [33] provided self-triggering conditions for general linear systems based on the Lyapunov method. Almeida [34] studied self-triggered state feedback for linear systems with bounded disturbances to ensure asymptotic stability. The application of STC to networked control systems is proposed in [35].

A triggered control strategy may improve on-board communication efficiency most of the time, provided that the system model remains accurate; however, because the observation platform of a long-term space mission is critical and the thermodynamics of the spacecraft payload gradually deteriorate over time, the real system will clearly deviate from the originally designed model. As a typical intelligent agent in space with sensing and actuation functions, the spacecraft platform should detect malfunctions and recalibrate itself on orbit, and methods such as optimal control and dynamic programming should be adopted to handle this model uncertainty.

Recently, efficient approximation techniques known as approximate dynamic programming (ApDP) or reinforcement learning (RL) have been proposed to solve the problem described above, including value-based RL (Q-learning methods) [36,37], policy-based RL (policy gradient methods) [38,39,40], and combined value-policy RL (actor-critic methods) [41]. For systems with disturbed dynamics, LQR has been widely used for learning-based controller design [42,43,44]. Lee [45] developed a Q-learning framework for LQR control based on an alternative optimization formulation of the problem, and then used it to design a model-free Q-learning algorithm based on primal-dual updates. Policy gradient methods compute, in an end-to-end manner, the gradient of the agent's cumulative reward with respect to the policy parameters under the current policy, and the policy eventually converges to the optimal one [38]. Actor-critic methods include two parts: the actor interacts with the environment and selects actions based on the policy function, while the critic, based on the value function, evaluates the actor and guides its next action. In the actor-critic algorithm, the policy function and the value function must be approximated independently [46]. Basically, the critic estimates the optimal state value, and the actor uses it to iteratively update the parameters of the policy function and select actions, thereby obtaining the immediate reward and moving to the next state. The critic then uses the reward and the new state to update the parameters of the value function.

According to the authors' literature review, the application of RL to spacecraft thermodynamic systems has rarely been reported. Lee [47] introduced an RL-based model-free predictive control structure for chiller plants. Qiu [48] provided an optimal operation solution for chillers by combining the RL technique with expert knowledge, aiming to balance power consumption and indoor comfort. Inspired by this literature, this paper focuses on a trigger-based MWR payload thermal control system design with online learning iteration, targeting micron-level precise ranging performance in space missions [49,50]. The proposed approach has the following noteworthy features:

- Feasible triggered thermal control design with a clear reduction of the communication burden;

- No original thermodynamic information is required when the system model is disturbed and uncertain;

- Suitable for real autonomous management of space platforms with long-term mission life;

- Thermal control strategies can be selected from nominal control, triggered control, and the model-free learning process, according to the orbiting period.

The rest of the paper is organized as follows: Section 2 presents the thermal system modeling for the micron-level K-band ranging system and the design of an efficient triggered feedback controller under actuator saturation. Section 3 presents the model-free learning method for the case of thermal system uncertainty. Finally, Section 4 provides experimental results in a laboratory environment that demonstrate the effectiveness of the proposed method.

2. Thermal System Design for Precise Ranging System

2.1. Thermal Structure of Deployed Satellite

The main sources of heat for a satellite orbiting in space include sunlight, thruster ignition, and the power consumption of on-board electric devices. Satellite temperature fluctuations have the following characteristics. On the one hand, the temperature changes periodically, both daily and seasonally, as the Earth rotates and revolves around the sun. On the other hand, on-board electric devices also cause temperature changes due to changes in working status, failures, or other uncertain factors, and these effects grow as the performance of the on-board thermal insulation layer degrades with satellite aging. To achieve proper heat transfer and conversion and to keep temperatures within the normal working range, thermal control technology combining specially designed active and passive approaches should be adopted.

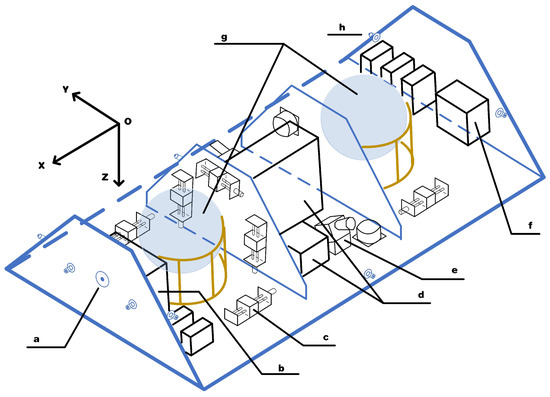

The structure of the whole satellite is shown in Figure 1, in which “b” indicates the installed position of the MWR system. The MWR system consists of four parts, namely the K-band transceiving antenna, waveguide switch network, signal process unit, and ultra-stable crystal oscillator (USO), which together constitute the micron-level microwave ranging system.

Figure 1.

Structure of the satellite: (a) K-band antenna phase center; (b) K-band microwave ranging system payload; (c) centroid adjustment; (d) accelerometer for gravity field detection; (e) star sensor; (f) power supply; (g) fuel tank; (h) thruster.

It can be seen from Figure 1 that the whole ranging system is installed on the +X and +Z panels of the satellite. A three-level thermal protection structure is designed to prevent the payload from being affected by space radiation during operation. First, the platform outer shield, made of bare carbon fiber reinforced plastic (CFRP) with a 10-layer multi-layer insulation (MLI) pack, blocks heat leaking in from the outer solar panels. Second, the payload cabin, made of CFRP with a single layer of Kapton foil, minimizes radiative heat from the inner platform environment to the MWR payload enclosure. Moreover, thermal insulation made of low-conductivity material between the satellite platform and the payload reduces the effect of temperature variations at the structural interface, and internal thermal conduction balancing is applied within the deployed cabins to compensate for temperature differences caused by the changing on-orbit illumination direction on the satellite surface. Third, active thermal control with dedicated heaters and condensation heat pipes is used for critical payloads, mainly the four parts of the MWR system, which have more stringent temperature limits than the rest of the spacecraft. The temperatures of the MWR equipment are monitored by sensors and maintained within the desired limits by several patch heaters and phase-change heat pipes that are controlled by the spacecraft central computer during science operations.

According to extensive data from previous tests, the ranging error of the MWR equipment mainly originates in the microwave measurement signal chain and includes: (1) temperature fluctuations of the USO, which reduce the clock frequency stability; (2) thermal deformation of the horn antenna, which may change the antenna phase center; and (3) temperature changes of the phase-locked frequency doubling equipment, microwave network, quadrature mixer, intermediate frequency amplifier, and low-pass filter, which may induce errors in the ranging measurement. It is therefore necessary to improve the accuracy and stability of temperature control for these devices to within ±0.15 K/orbit. In addition, the digital signal processing unit of the MWR requires high-precision temperature control within ±0.1 K/orbit, since its circuit board carries the A/D converter for the low-pass filtered signal, the FPGA, and DSP components, which are highly sensitive to temperature fluctuations.

2.2. Payload Thermal Dynamic Modeling and Nominal PID Control

The temperature of the deployed payloads is directly related to the surrounding environment of the satellite platform and to the internal thermal exchange among the payloads. With a proper structural design featuring three levels of thermal protection, the payloads can perform well within bounded temperature fluctuations inside the cabin. In this paper, we consider the thermal coupling between the payload components; the thermal analysis adopts the node network method to establish the thermal balance equation of any node on the satellite as follows [51]:

where the subscript i denotes the thermal nodes, denotes the specific heat capacity of the payload metal alloy block, denotes the block mass, denotes the transient temperature, is the target temperature, denotes the average heat transfer coefficient of each node, is the area of each heat patch/pipe surface, and is the heating/cooling power of each node around the payload. Thermal control is realized by several heating and condensation patch nodes with an adiabatic section around each payload component, which provide heating and cooling according to the control commands. Electric heating is used when the temperature is below the target, and a state-of-the-art micro-electro-mechanical system (MEMS)-based pulse width modulation (PWM) high-speed on-off valve regulates the liquid flow inside the phase-change pipe for cooling during high-temperature stages.
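The node balance equation itself is not reproduced in this text. As a rough illustration of how such a lumped node behaves, the following Python sketch integrates one node under an assumed form, c·m·dT/dt = h·A·(T_env − T) + Q, where the environment temperature `T_env` and every parameter value are hypothetical and are not taken from Table 1.

```python
import numpy as np


def simulate_node(T0, T_env, Q, c=900.0, m=2.0, h=5.0, A=0.1, dt=1.0, steps=36000):
    """Forward-Euler integration of one lumped thermal node.

    Assumed model (illustrative, not the paper's balance equation):
        c * m * dT/dt = h * A * (T_env - T) + Q
    c [J/(kg K)], m [kg], h [W/(m^2 K)], A [m^2], Q [W], dt [s].
    """
    T = np.empty(steps + 1)
    T[0] = T0
    for k in range(steps):
        dTdt = (h * A * (T_env - T[k]) + Q) / (c * m)
        T[k + 1] = T[k] + dt * dTdt
    return T


# Hypothetical case: block starts at ambient 20 degC, 1 W heater switched on.
trace = simulate_node(T0=20.0, T_env=20.0, Q=1.0)
print(round(trace[-1], 3))  # close to 20 + Q/(h*A) = 22 degC
```

With these assumed values the node time constant c·m/(h·A) is 3600 s, so the 36000 s run covers about ten time constants and the temperature settles near the steady-state rise Q/(h·A) = 2 K.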

For fast and stable internal temperature control of the payloads during space missions, especially during precise ranging stages, two closed-loop active thermal controllers are designed: one is a high-power electric heating/cooling controller using PID control with temperature sensors patched around the payloads; the other is a precise low-power heating controller using optimal control with triggering and saturation constraints.

A typical PID control algorithm is used as a nominal scheme given as [52,53,54]:

where are the proportional, integral, and derivative coefficients, respectively; is the sampling time, is the difference between the measured temperature and the target temperature, and is the heating/cooling power for active thermal control.
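Because the explicit PID formula is missing here, the following Python sketch shows a generic discrete positional PID with sampling time `Ts`, closed around a hypothetical first-order plant. The class name, the plant model, and the gain values are illustrative assumptions; the sign convention is flipped relative to the text (error = target minus measured) so that a positive output means heating.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PositionalPID:
    """Discrete positional PID (generic textbook form, assumed here).

    Sign convention (assumption): e = target - measured, so a positive output
    means heating power. The text defines the error as measured minus target,
    which only flips the sign of the gains.
    """
    kp: float
    ki: float
    kd: float
    Ts: float                           # sampling time [s]
    integral: float = 0.0               # running sum of e * Ts
    prev_error: Optional[float] = None

    def step(self, target: float, measured: float) -> float:
        e = target - measured
        self.integral += e * self.Ts
        # No derivative term on the very first sample.
        de = 0.0 if self.prev_error is None else (e - self.prev_error) / self.Ts
        self.prev_error = e
        return self.kp * e + self.ki * self.integral + self.kd * de


def run_closed_loop(pid, target, T0, T_env, tau=600.0, gain=2.0, steps=20000):
    """Hypothetical plant tau * dT/dt = (T_env - T) + gain * u, stepped at pid.Ts."""
    T = T0
    for _ in range(steps):
        u = pid.step(target, T)
        T += pid.Ts * ((T_env - T) + gain * u) / tau
    return T


final_T = run_closed_loop(PositionalPID(kp=2.0, ki=0.01, kd=0.0, Ts=1.0),
                          target=25.0, T0=20.0, T_env=18.0)
```

The integral term is what removes the steady-state offset caused by the colder environment; with proportional action alone the node would settle below the 25 °C target.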

2.3. Trigger-Based Precise Optimal Thermal Control with Saturation Constraint

The thermal dynamic model and PID algorithm above provide nominal active thermal control during the orbiting period. To support the high-precision microwave ranging system during the science observation phase, several heating/cooling nodes and measurement sensors are patched around each payload component to fully realize thermal control and temperature monitoring.

Here, we want the thermal control system to track the desired temperatures during the space mission with a minimal control burden. First, consider the nominal states as , where the subscripts denote the four MWR components: the K-band antenna, waveguide switch network, microwave signal process unit, and USO. Define the new states as the difference between the current states and , namely, with . Similarly, we define the heating/cooling control variable with as the deviation between the actual and the nominal thermal control input. Given a nominal temperature state sequence, the time series of the nominal control input can be obtained directly from the PID algorithm; therefore, once the control input of the deviation dynamics is solved, the actual temperature tracking control is obtained as the sum of and .

Precise thermal control is realized through several accurately calibrated patch heaters and coolers with limited power consumption. Here, we model the deviation thermal system as a saturated linear dynamic equation of the form:

where is the state matrix and is the control matrix; denotes the feedback gain matrix obtained through optimal control. Here, we assume that all states are observable and that the system is controllable. Because of unmodeled dynamics and external thermal disturbances during the on-orbit mission, and are both perturbed matrices.

Note that we define the saturation control as , where is the i-th control input signal and is the maximum amplitude of the i-th control actuator, i.e., the heating/cooling power. The time tag is generated by the event trigger: the control signal is updated at time and held constant during the time period . This greatly saves signal transmission bandwidth and reduces the communication burden of the whole on-board system.
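The saturation and hold behavior described above can be sketched in Python as follows. The helper names (`sat`, `held_command`, `total_input`) are hypothetical, and `total_input` assumes, following the deviation split in this section, that saturation acts on the deviation input while the nominal PID input is added unchanged.

```python
import numpy as np


def sat(u, u_max):
    """Element-wise saturation sat(u_i) = sign(u_i) * min(|u_i|, u_max_i)."""
    u = np.asarray(u, dtype=float)
    u_max = np.asarray(u_max, dtype=float)
    return np.clip(u, -u_max, u_max)


def held_command(t, trigger_times, commands):
    """Zero-order hold: return the command sent at the latest trigger time <= t.

    trigger_times must be sorted ascending; commands[k] is transmitted at
    trigger_times[k] and held until the next trigger.
    """
    k = int(np.searchsorted(trigger_times, t, side="right")) - 1
    if k < 0:
        raise ValueError("t precedes the first trigger")
    return commands[k]


def total_input(u_nominal, v_deviation, v_max):
    """Actual command = nominal PID input + saturated deviation input (assumed split)."""
    return np.asarray(u_nominal, dtype=float) + sat(v_deviation, v_max)
```

Between two triggers, `held_command` returns the same value regardless of how often it is queried, which is the property that saves transmission bandwidth.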

Next, we briefly introduce the optimal control used in this paper. The tracking performance index, which accounts for the energy cost, is written as:

where is the solution of the Riccati equation at time , , . Suppose the matrix pairs are controllable and observable. The best performance corresponds to the minimal value of J, and the final objective of the control system is to find the optimal value under dynamic modeling disturbances:

The finite horizon optimal control problem can be solved as follows: define the Hamiltonian function

With proper derivation, the following HJB equation can be obtained [55]:

Then, we have the optimal control of

where is given by the Riccati equation

Theorem 1.

Proof of Theorem 1.

The proof can be found in [55] and is omitted here. □
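To make the Riccati-based gain concrete, the following Python sketch computes an LQR feedback gain with SciPy. It solves the steady-state (infinite-horizon) algebraic Riccati equation, a simplification of the finite-horizon, time-varying problem stated above, and the two-node matrices are hypothetical rather than the identified MWR model.

```python
import numpy as np
from scipy.linalg import solve_continuous_are


def lqr_gain(A, B, Q, R):
    """Infinite-horizon LQR: solve A^T P + P A - P B R^{-1} B^T P + Q = 0
    and return K = R^{-1} B^T P, so that u = -K x."""
    P = solve_continuous_are(A, B, Q, R)
    K = np.linalg.solve(R, B.T @ P)
    return K, P


# Hypothetical two-node deviation model (weakly coupled, open-loop stable).
A = np.array([[-0.02, 0.01],
              [0.01, -0.03]])
B = np.eye(2)
Q = np.eye(2)
R = 0.5 * np.eye(2)
K, P = lqr_gain(A, B, Q, R)
closed_loop_poles = np.linalg.eigvals(A - B @ K)
```

`np.linalg.solve(R, ...)` is used instead of forming the explicit inverse of R, which is the numerically preferred way to evaluate R⁻¹BᵀP.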

2.4. Trigger Condition Analysis

We define , i.e., the difference between the state at the previous triggering time and the current state. The sampled signal that is fed back to the controller must satisfy the selected trigger condition. Here, we design the trigger mechanism as:

where , , and are given positive constants, is a positive definite matrix, denotes the triggering time of event k, and is the signal transmission period since . Equation (10) can be interpreted as follows: suppose the first trigger occurs at time during real system operation; after that, no trigger occurs, even if condition is satisfied, while the control signal is saturated, i.e., condition . This greatly improves the utilization of the system's communication resources.

Let and ; then the event-trigger condition becomes
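The exact thresholds of Equations (10) and (11) are not reproduced here, so the following Python sketch uses an assumed mixed rule, ‖x(t_k) − x(t)‖² > σ‖x(t)‖² + ε, with hypothetical values `sigma` and `eps`, a hypothetical model, and a hypothetical gain. It simulates the saturated loop with a held control and counts how many samples are actually transmitted.

```python
import numpy as np


def simulate_event_triggered(A, B, K, x0, u_max, sigma=0.05, eps=1e-6,
                             dt=0.1, steps=5000):
    """Euler simulation of x' = A x + B sat(-K x(t_k)) with an assumed
    trigger rule ||x(t_k) - x(t)||^2 > sigma * ||x(t)||^2 + eps.

    Returns the final state and the step indices at which a trigger fired
    (i.e., when a new sample was transmitted to the controller).
    """
    x = np.asarray(x0, dtype=float).copy()
    x_k = x.copy()                            # last transmitted state
    u = np.clip(-K @ x_k, -u_max, u_max)      # held (saturated) control
    triggers = [0]
    for k in range(1, steps + 1):
        x = x + dt * (A @ x + B @ u)
        e = x_k - x                           # error since the last trigger
        if e @ e > sigma * (x @ x) + eps:
            x_k = x.copy()
            u = np.clip(-K @ x_k, -u_max, u_max)
            triggers.append(k)
    return x, triggers


# Hypothetical model and gain (not the identified MWR model of Section 4).
A = np.array([[-0.02, 0.01], [0.01, -0.03]])
B = np.eye(2)
K = 0.5 * np.eye(2)
x_final, trig = simulate_event_triggered(A, B, K, x0=[1.0, -0.5], u_max=0.2)
print(len(trig), "transmissions instead of", 5000, "periodic samples")
```

The relative term σ‖x‖² lets the sampling interval stretch when the state is large and slowly varying, while the small constant ε prevents an unbounded number of triggers as the state approaches zero.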

The event-trigger transmission condition is realized by hardware sampling and trigger-condition checking. The self-trigger, in turn, is implemented through previous signals and state predictions. We derive the self-trigger conditions as follows: consider time ; using the system model of Equation (3), we have

The analytical solution of Equation (12) is given as

With proper derivation, we have

Let , and . According to Equation (11), we can obtain the self-trigger time-tag of through

Equation (15) is derived from the event-trigger condition of Equation (11) and yields a shorter triggering period. Finally, we obtain the self-trigger condition

The self-trigger time tag denotes the sampling point determined according to the saturation function, and the feedback of the system states in depends on . Moreover, the triggering period depends on matrix , namely:

(1) if , then we have , and

(2) if , then we have

and

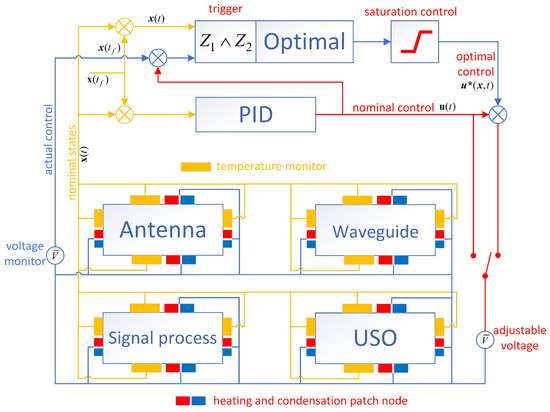

Finally, the schematic diagram of the proposed trigger-based thermal control system is shown in Figure 2.

Figure 2.

Structure of the trigger-based thermal control system.
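As a sketch of the self-trigger idea in Section 2.4, the following Python code propagates the model from the last sample with the held input and returns the first time at which the assumed event rule (the same hypothetical σ and ε rule as an event-trigger check) would fire. The exact zero-order-hold discretization uses the augmented matrix exponential. This is a numerical stand-in for the analytical time-tag formula, not a reproduction of it, and the matrices are hypothetical.

```python
import numpy as np
from scipy.linalg import expm


def discretize(A, B, dt):
    """Exact zero-order-hold discretization via the augmented matrix exponential
    expm([[A, B], [0, 0]] * dt) = [[Phi, Gamma], [0, I]]."""
    n, m = B.shape
    M = np.zeros((n + m, n + m))
    M[:n, :n] = A
    M[:n, n:] = B
    F = expm(M * dt)
    return F[:n, :n], F[:n, n:]


def predict_next_trigger(A, B, K, x_k, u_max, sigma=0.05, eps=1e-6,
                         dt=0.1, t_max=60.0):
    """Self-trigger sketch: predict, from x_k and the model alone, the first grid
    time at which ||x_k - x(t)||^2 > sigma * ||x(t)||^2 + eps would hold.
    Falls back to t_max if no violation is predicted within the horizon."""
    x_k = np.asarray(x_k, dtype=float)
    u = np.clip(-K @ x_k, -u_max, u_max)      # input held until the next sample
    Phi, Gamma = discretize(A, B, dt)
    x = x_k.copy()
    for i in range(1, int(round(t_max / dt)) + 1):
        x = Phi @ x + Gamma @ u
        e = x_k - x
        if e @ e > sigma * (x @ x) + eps:
            return i * dt
    return t_max


A = np.array([[-0.02, 0.01], [0.01, -0.03]])
B = np.eye(2)
K = 0.5 * np.eye(2)
t_next = predict_next_trigger(A, B, K, x_k=[1.0, -0.5], u_max=0.2)
```

No sensor reading is needed between samples: the controller schedules its next wake-up at `t_next`, which is why a self-trigger depends on model accuracy.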

2.5. Stability Analysis of Trigger Control

For a given open-loop stable system, global stability of the whole system can be guaranteed by selecting appropriate event-triggering conditions when the actuator is saturated. Here, we analyze the trigger conditions that guarantee global input-to-state stability of the system. First, considering the system model in Equation (3), we introduce Lemma 1 and Lemma 2:

Lemma 1

([26]). (Input-to-state stability) For a system of the form

where f: is continuous in t and locally Lipschitz in . Let be a continuously differentiable function that satisfies

where are functions; then system (20) is input-to-state stable.

Lemma 2

([26]). For any , if belongs to the linear region , then

for any positive definite matrix , where is the dead-zone nonlinearity arising from the saturation control of Equation (3).

Then, the conditions for global input-to-state stability of the triggered system are given in Theorem 2:

Theorem 2.

For system (3) with the trigger condition , if there exists that satisfies , then the event-triggered system (3) is globally input-to-state stable with , .

Proof of Theorem 2.

Construct the Lyapunov function , where , from the Riccati equation of Equation (9); clearly, the function satisfies the condition of Lemma 2. If inequality holds, then matrix is Hurwitz, and the derivative of the Lyapunov function is

According to the trigger condition , we have ; by Lemma 1, system (3) is globally input-to-state stable. □
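The proof relies on a Hurwitz closed-loop matrix and a quadratic Lyapunov function. The following Python sketch checks both numerically for a hypothetical closed-loop matrix. It covers only the linear, unsaturated part and does not verify the trigger inequality of Theorem 2.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov


def is_hurwitz(M):
    """True if every eigenvalue of M has a strictly negative real part."""
    return bool(np.all(np.linalg.eigvals(M).real < 0))


def lyapunov_certificate(A_cl, Qm):
    """Solve A_cl^T P + P A_cl = -Qm. For Hurwitz A_cl and Qm > 0 the solution
    P is positive definite, so V(x) = x^T P x decreases along x' = A_cl x."""
    # SciPy solves a X + X a^H = q, so pass a = A_cl^T and q = -Qm.
    P = solve_continuous_lyapunov(A_cl.T, -Qm)
    P = 0.5 * (P + P.T)                       # remove round-off asymmetry
    return P, bool(np.all(np.linalg.eigvalsh(P) > 0))


# Hypothetical closed-loop matrix A - B K for a two-node example.
A_cl = np.array([[-0.52, 0.01], [0.01, -0.53]])
P, pos_def = lyapunov_certificate(A_cl, np.eye(2))
```

A positive definite solution P for a positive definite right-hand side is the standard Lyapunov certificate that A_cl is Hurwitz; an unstable matrix would fail the eigenvalue test.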

3. Model-Free Reinforcement Learning Formulation

3.1. Reinforcement Learning Structure

The triggered optimal control design performed stably during the tests described in Section 4; however, the real thermodynamic system will gradually deteriorate during a long-term space mission and clearly deviate from the originally designed model, so a proper online estimation/update process should be adopted. Vamvoudakis [37] provided a learning-based approach that handles an uncertain dynamic environment through an online adaptive mechanism. Similarly, here we use a value-based Q-learning algorithm to find an optimal action-selection policy from the thermal actuator and temperature sensor information under dynamic disturbances. The learning algorithm has an actor/critic structure, in which the actor selects control policies to improve the value and the critic assesses the actor's decisions.

3.2. Reinforcement Learning Structure

Combining the optimal value function of Equation (5) and the Hamiltonian function, we obtain the Q function as

where is the action value function.

We define the generalized state and rewrite the Q function as:

with

By using the stationarity condition , we obtain the optimal control for the model-free system as
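For a linear model with quadratic cost, the Q function is a quadratic form in the generalized state z = [x; u], and the optimal control can be read off its kernel. The following Python sketch builds that kernel under the assumption V(x) = xᵀPx, so that Q(x, u) = V(x) + xᵀQx·x + uᵀRu + 2xᵀP(Ax + Bu), which is the standard continuous-time LQR form and not necessarily the exact expression of this section. It then extracts the gain using only the kernel. The matrices are hypothetical.

```python
import numpy as np
from scipy.linalg import solve_continuous_are


def q_kernel(A, B, Qx, R, P):
    """Kernel H of Q(x, u) = z^T H z with z = [x; u].

    Assumes V(x) = x^T P x and cost rate x^T Qx x + u^T R u, so that
    Q(x, u) = V(x) + x^T Qx x + u^T R u + 2 x^T P (A x + B u).
    """
    Hxx = P + Qx + P @ A + A.T @ P
    Hxu = P @ B
    return np.block([[Hxx, Hxu], [Hxu.T, R]])


def gain_from_kernel(H, n):
    """Stationarity dQ/du = 0 gives u* = -Huu^{-1} Hux x; only H is needed."""
    Huu, Hux = H[n:, n:], H[n:, :n]
    return np.linalg.solve(Huu, Hux)


# Hypothetical single-heater, two-node example.
A = np.array([[-0.02, 0.01], [0.01, -0.03]])
B = np.array([[1.0], [0.5]])
Qx, R = np.eye(2), np.array([[0.5]])
P = solve_continuous_are(A, B, Qx, R)
H = q_kernel(A, B, Qx, R, P)
K_from_H = gain_from_kernel(H, n=2)
```

Here A and B are used only to build a reference kernel; in the model-free setting, H is learned from data, and `gain_from_kernel` still recovers the same gain R⁻¹BᵀP without any model information.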

3.3. Critic/Actor Structure

In this paper, the critic/actor structure is used to solve the problem of online learning with a disturbed model. We use the critic approximator for the Q function and the actor approximator for the triggered optimal control. The critic of the Q function is given as:

where vech(·) denotes the half-vectorization operation with , and for off-diagonal elements. ⊗ denotes the Kronecker product.

Rewrite

with ; then can be regarded as the ideal weight of the quadratic polynomial that approximates . In practice, the ideal weight is unknown; using its estimate , we obtain the critic approximator as
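The half-vectorization trick turns the quadratic form zᵀHz into a linear regression on a quadratic basis. The following Python sketch uses hypothetical helper names and one possible ordering convention (upper triangle, row-major). It keeps the diagonal entries as they are and doubles the off-diagonal ones so that the weighted basis reproduces zᵀHz exactly.

```python
import numpy as np


def quad_basis(z):
    """phi(z): products z_i * z_j for i <= j (upper-triangular, row-major order)."""
    z = np.asarray(z, dtype=float)
    i, j = np.triu_indices(z.size)
    return z[i] * z[j]


def kernel_to_weights(H):
    """Critic weights W with W @ quad_basis(z) == z^T H z:
    H_ii for diagonal entries and 2 * H_ij for off-diagonal ones."""
    H = 0.5 * (H + H.T)
    i, j = np.triu_indices(H.shape[0])
    return np.where(i == j, 1.0, 2.0) * H[i, j]


def weights_to_kernel(W, n):
    """Inverse of kernel_to_weights: rebuild the symmetric kernel from W."""
    W = np.asarray(W, dtype=float)
    i, j = np.triu_indices(n)
    U = np.zeros((n, n))
    U[i, j] = np.where(i == j, W, W / 2.0)
    return U + np.triu(U, 1).T
```

For a three-dimensional z, the basis has n(n+1)/2 = 6 entries instead of the 9 of the full Kronecker product z ⊗ z, because the symmetric duplicates are merged.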

The actor approximator is

with weight estimation .

To determine the tuning laws of and , proper critic/actor approximation errors must be defined. Here, we divide the time sequence into short intervals with fixed step ; then, using the integral reinforcement learning method, we have the following:

The critic approximation error is defined such that the critic weights converge to the ideal value when the critic error converges to zero:

where and denote the states/control signals from the observer. Similarly, we define the actor approximation error as

where can be obtained from weights .

After defining the critic/actor approximation errors, the next step is to find a learning algorithm that drives to zero by updating the weight matrices.

3.4. Learning Process

First, define the approximation error as

and the gradient descent method is used to update the weight matrices so that they converge to the ideal values. The gradients are obtained from the directional derivatives of the critic/actor approximation errors with respect to the weight matrices . Similar to [37], using the chain rule and normalization, we obtain:

where , , are constant gains that determine the convergence rate, and the gradient descent algorithm of (38) guarantees convergence.
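The normalized gradient idea behind the critic tuning law can be illustrated with a discrete-time Python sketch. It assumes an integral Bellman error of the form e = Wᵀ·Δφ + ρ, where Δφ = φ(z(t)) − φ(z(t − T)) and ρ is the running cost integrated over the interval. Synthetic, randomly exciting regressors stand in for measured data, and the step gain `alpha` is a hypothetical choice.

```python
import numpy as np


def critic_step(W, dphi, rho, alpha):
    """One step of a normalized gradient critic update (discrete-time sketch).

    Assumed integral Bellman error: e = W @ dphi + rho. The step descends
    e^2 / 2 with the normalization (1 + dphi^T dphi)^2.
    """
    e = W @ dphi + rho
    W_new = W - alpha * dphi / (1.0 + dphi @ dphi) ** 2 * e
    return W_new, e


def demo(seed=0, n_w=6, iters=2000, alpha=2.0):
    """Synthetic check: with exciting regressors and rho consistent with a
    hidden W_star, the estimate converges to W_star."""
    rng = np.random.default_rng(seed)
    W_star = rng.normal(size=n_w)          # hidden "ideal" weights (synthetic)
    W = np.zeros(n_w)
    for _ in range(iters):
        dphi = rng.normal(size=n_w)        # random, hence persistently exciting
        rho = -W_star @ dphi               # Bellman error is zero at W_star
        W, _ = critic_step(W, dphi, rho, alpha)
    return W, W_star


W_hat, W_star = demo()
```

The normalization bounds the effective step along Δφ by `alpha`/4, so the update stays stable even for large regressors; this is the role of the normalization mentioned above.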

Next, we define the weight estimation errors , , and obtain the estimation error dynamics

Similarly, we obtain the actor weight estimation error dynamic

where are the matrix elements in Equation (27). The stability of the learning process is analyzed in Lemma 3:

Lemma 3.

According to the critic approximator tuning law of Equation (38) for any given control input, the critic error dynamics converge exponentially to the equilibrium point with

where , , and the signal Δ should be persistently exciting (PE) within interval , i.e., , with and
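The persistent excitation requirement can be checked numerically on recorded regressors. The following Python sketch approximates the window integral of ΔΔᵀ with a Riemann sum and reports its smallest eigenvalue, which must stay above some β > 0. The two test signals are hypothetical.

```python
import numpy as np


def pe_level(samples, dt):
    """Smallest eigenvalue of the excitation Gram matrix, i.e. the window
    integral of Delta(tau) Delta(tau)^T approximated by a Riemann sum.
    A value bounded away from zero (>= beta) indicates persistent excitation."""
    S = np.asarray(samples, dtype=float)   # shape (num_samples, dim)
    G = S.T @ S * dt
    return float(np.linalg.eigvalsh(G)[0])


dt = 0.01
t = np.arange(0.0, 10.0, dt)
# Hypothetical regressors: a multi-frequency signal versus a fixed direction.
exciting = np.column_stack([np.sin(t), np.cos(t), np.sin(2.3 * t)])
constant = np.column_stack([np.ones_like(t), 2.0 * np.ones_like(t), np.zeros_like(t)])
```

The constant signal spans a single direction, so its Gram matrix is rank one and the PE level is zero; this is why a probing signal is normally added to the control during learning.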

The stability proof of this learning method can be found in [37] and is omitted here.

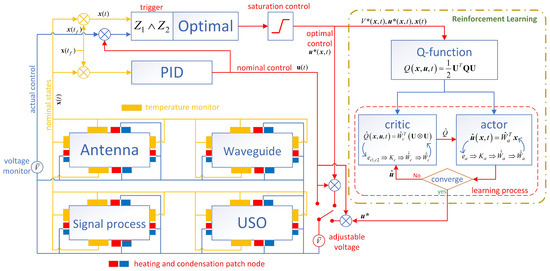

The learning process must compute the weight matrices of Equation (38) iteratively over time, which increases the controller complexity compared with traditional learning [56]; however, no dynamic information is needed when the system deviates from the nominal one, which makes the approach suitable for space use. Finally, the overall structure of the proposed event-trigger control system with a learning process is shown in Figure 3.

Figure 3.

Structure of the triggered thermal control system with learning process.

4. Experiment Test and Simulation

4.1. Laboratory Experiment Environment

The proposed thermal control system was extensively tested in a ground laboratory environment before launch. The relevant metal materials and heating parameters of the MWR thermal test are given in Table 1.

Table 1.

Metal materials and heating/cooling parameters of MWR thermal test.

The parameters of the nominal PID control in Equation (2) are , , , and the sampling time is . Based on extensive experiments, the thermal dynamic model is:

Detailed information of the submatrix can be found in Appendix A.

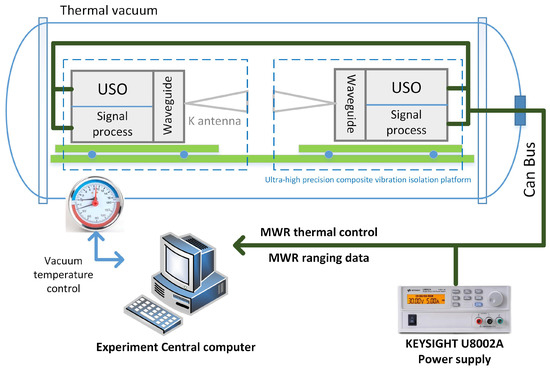

The overall thermal control experiment system for the MWR time-delay test was designed to provide a precise, highly stable temperature control environment with a wide output range, together with a vibration isolation environment with a vibration amplitude of less than 1 µm. The schematic diagram of the whole test system is given in Figure 4.

Figure 4.

Schematic diagram of the MWR thermal control system in laboratory environment.

The whole thermal control system includes precisely controllable temperature and humidity thermal vacuum equipment, an ultra-high-precision composite vibration isolation platform, the MWR payload, an MWR data sampling and processing system, and a power supply, as shown in Figure 4. MWR microwave ranging systems A and B were placed on the vibration isolation platform with a thermal insulation structure, which reduced the influence of the isolation platform temperature on the measured MWR equipment. The data acquisition and processing system, power supply, and other equipment were placed on the laboratory desktop to prevent other heat sources and vibration from affecting the tested payload.

4.2. Performance of Passive and Nominal Thermal Control

To simulate the on-orbit thermal conditions inside the satellite compartment, the baseline follow-on formation mission is defined as follows: chief spacecraft orbit altitude, 500 km; inclination, 89.2 deg; argument of perigee, 0 deg; RAAN, 0 deg; true anomaly, 0 deg. The deputy spacecraft flies in-line with the chief spacecraft at an in-track distance of about 180 km [57].
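Because the thermal results below are plotted per orbit, it helps to know the orbit period implied by the 500 km altitude. The following Python sketch assumes a circular orbit (the eccentricity is not stated) and uses standard values for Earth's gravitational parameter and equatorial radius. It also converts the 180 km in-track separation into an approximate time lag.

```python
import math

MU_EARTH_KM3_S2 = 398600.4418   # Earth's gravitational parameter
R_EARTH_KM = 6378.137           # WGS-84 equatorial radius


def circular_orbit_period_s(altitude_km):
    """Kepler period T = 2*pi*sqrt(a^3/mu) for an assumed circular orbit."""
    a = R_EARTH_KM + altitude_km
    return 2.0 * math.pi * math.sqrt(a ** 3 / MU_EARTH_KM3_S2)


def along_track_lag_s(separation_km, altitude_km):
    """Time for the deputy to reach the chief's position: separation / orbital speed."""
    v = math.sqrt(MU_EARTH_KM3_S2 / (R_EARTH_KM + altitude_km))
    return separation_km / v


period = circular_orbit_period_s(500.0)      # roughly 94.6 min
lag = along_track_lag_s(180.0, 500.0)        # roughly 24 s
```

Under this assumption, one "orbit" on the time axes of Figures 5 to 7 corresponds to roughly 95 minutes, or about 15 orbits per day.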

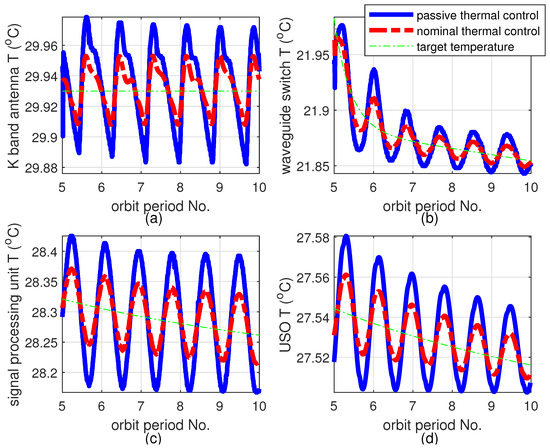

The bold blue lines in Figure 5 show the temperatures of the ranging payloads measured inside the thermal vacuum equipment without active control. There are four sensors around each payload, and Figure 5 shows the average temperature of those four sensors for each payload. For clarity, only the thermal states after convergence, from the fifth to the tenth orbit, are shown. The results represent the thermal conditions of the ranging payloads inside the satellite during on-orbit formation flight and mainly exhibit periodic fluctuations.

Figure 5.

The thermal states of K-band MWR payloads during test. (a) K-band antenna; (b) waveguide switch network; (c) signal process unit; (d) USO.

The passive thermal protection performed stably over the simulated on-orbit mission time, with fluctuations of about ±0.15 °C after convergence, which verifies our model for practical use in a real space mission; however, more precise ranging performance requires active thermal control to further reduce temperature fluctuations. The thermal experiment was therefore repeated with the same parameters. The green lines in Figure 5 are the target temperatures of each payload, obtained through online curve fitting, and the bold dotted red lines show the results of the nominal PID thermal control introduced in Section 2.2. Compared with passive thermal control, the temperature fluctuation decreased to about ±0.1 °C.

4.3. Performance of Trigger Control

This section describes the tests of the proposed trigger control. Here, we set the parameters of , which define the triggering threshold on the measured states. Saturation control follows the limits in Table 1 for each payload, as in Equation (11).
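To make the triggering idea concrete, the sketch below compares a fixed-period PI heater loop with an event-triggered one on a hypothetical first-order thermal model with heater saturation. It assumes a common mixed relative/absolute triggering rule, |e(t) - e(t_k)| ≥ σ|e(t)| + ε, which is not necessarily the paper's condition. The heat capacity, conductance, heater limit, PI gains, σ, ε, and the sinusoidal orbital heat sink are illustrative values, not those of Table 1 or Equation (11). The controller only sees the last transmitted error, and integration is frozen while the heater is saturated (conditional-integration anti-windup).

```python
import math


def simulate(event_triggered, sigma=0.05, eps=0.005, fixed_period_s=10.0,
             t_end_s=6 * 3600.0, dt_s=1.0):
    """Heater loop with saturation; returns (transmissions, worst |error| after 1 h)."""
    cap, cond, p_max = 2000.0, 0.5, 5.0    # hypothetical J/K, W/K, heater limit in W
    t_ref, kp, ki = 20.0, 20.0, 0.02       # hypothetical set point [°C] and PI gains
    temp, integ = 18.0, 0.0
    e_held = temp - t_ref                  # last error transmitted to the controller
    sends, worst, next_fixed, t = 1, 0.0, fixed_period_s, 0.0
    while t < t_end_s:
        e = temp - t_ref
        if event_triggered:
            # mixed relative/absolute threshold; eps > 0 keeps inter-event times positive
            send = abs(e - e_held) >= sigma * abs(e) + eps
        else:
            send = t >= next_fixed
            if send:
                next_fixed += fixed_period_s
        if send:
            e_held = e
            sends += 1
        u = -(kp * e_held + ki * integ)    # PI law acting on the held error
        power = min(max(u, 0.0), p_max)    # heater saturation: power in [0, p_max]
        if 0.0 < u < p_max:                # conditional integration (anti-windup)
            integ += e_held * dt_s
        t_env = 15.0 + 3.0 * math.sin(2 * math.pi * t / 5677.0)  # hypothetical orbital sink
        temp += dt_s * (-cond * (temp - t_env) + power) / cap     # explicit Euler step
        if t > 3600.0:
            worst = max(worst, abs(e))
        t += dt_s
    return sends, worst


for mode in (False, True):
    n, worst = simulate(event_triggered=mode)
    print(f"{'event' if mode else 'fixed'}: {n} transmissions, max |e| {worst:.3f} K")
```

With these illustrative values, the event-triggered loop should transmit far fewer samples than the 10 s fixed-period loop while keeping a comparable error. The exact counts depend on the assumed parameters.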

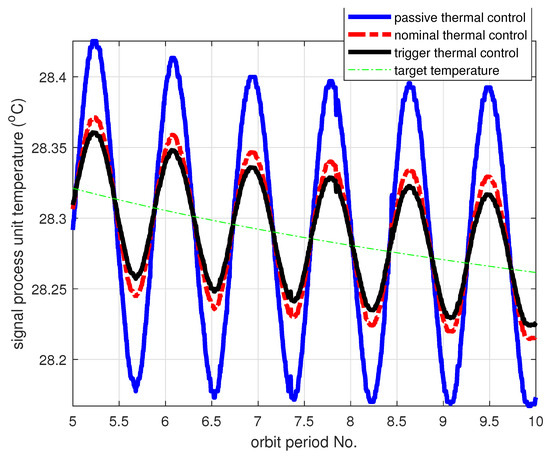

Figure 6 shows the experimental results of self-triggered control for the signal process unit. Self-triggered control (bold solid black line) yields smaller temperature fluctuations than the nominal control. We attribute this improvement to the optimal control law, which minimizes the deviation of the nominal thermal trajectory from the target trajectory. The other payloads achieved thermal control performance similar to that of the signal process unit; their results are omitted for brevity.

Figure 6.

Comparison of different thermal control algorithms for the signal process unit.

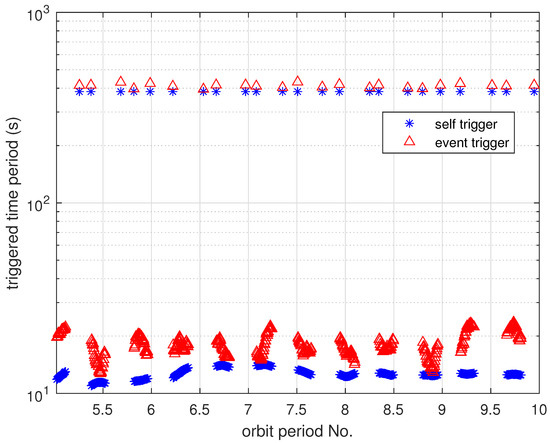

Figure 7 reveals the internal behavior of the triggered controllers by showing the triggered sampling periods of the signal process unit. Unlike nominal PID control, which uses a fixed sampling period, the triggered control designs adapt the sampling period to the perturbations of the error state. Moreover, during the unsaturated actuator stages of our experiments, the event-triggered scheme (red triangles) reduced the sampling frequency more than the self-triggered scheme (blue stars). The sampling periods were about 60–120 s for event-triggered control versus 20–35 s for self-triggered control. This is because the event-triggered scheme evaluates its triggering condition from external thermal sensor measurements, whereas the self-triggered scheme relies on model-predicted states, which led to more frequent sampling. The upper part of Figure 7 shows that trigger points occur at the beginning and end of the control-saturation stages and at the local temperature minima and maxima.

Figure 7.

Sample period vs. orbit number for self-/event-triggered control.
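Writing out the prediction that model-based self-triggering relies on shows one way it can end up sampling more often. Consider a hypothetical first-order model C dT/dt = -G(T - T_env) + P, with the heater power P held constant and T_env assumed constant over the prediction horizon. The temperature then relaxes exponentially toward T_env + P/G, so the time at which the predicted deviation from the last sample reaches a threshold δ has a closed form. The function name, model, and parameter values are assumptions for illustration, not the paper's self-triggering law. In practice, any mismatch between such a model and the real plant must be covered by a smaller δ or a safety margin, which shortens the predicted intervals. This is one plausible reading of the shorter self-triggered periods in Figure 7.

```python
import math


def next_self_trigger_s(temp_c, heater_w, t_env_c, cap=2000.0, cond=0.5,
                        delta=0.01, t_min_s=1.0, t_max_s=600.0):
    """Predict the next sampling interval from a hypothetical first-order model.

    With heater power held, C dT/dt = -G (T - T_env) + P relaxes toward
    T_inf = T_env + P / G, so |T(t) - T_k| = |T_inf - T_k| (1 - exp(-t / tau)),
    with tau = C / G. The next sample is due when this predicted deviation hits delta.
    """
    tau = cap / cond
    t_inf = t_env_c + heater_w / cond
    gap = abs(t_inf - temp_c)
    if gap <= delta:                       # predicted deviation never reaches delta
        return t_max_s
    dt = -tau * math.log(1.0 - delta / gap)
    return min(max(dt, t_min_s), t_max_s)  # clamp to assumed communication limits


print(next_self_trigger_s(20.0, 3.0, 15.0))  # model predicts drift toward 21 °C
print(next_self_trigger_s(20.0, 2.5, 15.0))  # balanced: waits the maximum interval
```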

4.4. Performance of Learning Process

The thermal dynamics of the system may drift away from the original design model over a multi-year space mission. Here, we apply the proposed online learning method to the thermal system with the following parameters: positive semidefinite matrix and positive definite matrix

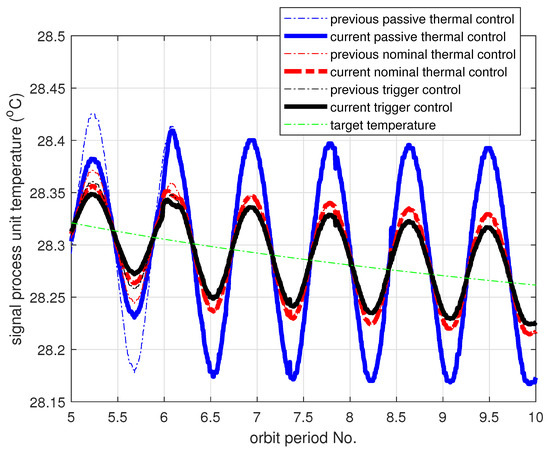

the actor/critic approximator constant gain , in Equations (39) and (40). In addition, to emulate thermal-model uncertainty in space, a 0.5 kg aluminum alloy block was attached closely to the signal process unit until the end of the sixth orbit period. During orbits No. 0–6, exploration noise was added to the nominal control input to ensure persistent excitation and state exploration.
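The paper's actor/critic update laws (Equations (39) and (40)) are not reproduced here. As a stand-in illustration of the same idea, the sketch below learns an optimal feedback gain from measured data, with exploration noise supplying persistent excitation. It swaps in a different, simpler technique: least-squares Q-learning policy iteration on a hypothetical scalar discrete-time thermal model x' = a x + b u. The scalar weights q ≥ 0 and r > 0 play the role of the positive semidefinite and positive definite weighting matrices. The plant coefficients are used only to generate data and, separately, for a model-based Riccati check. All numbers are hypothetical.

```python
import random


def solve3(m, v):
    """Solve the 3x3 system m @ s = v (Gaussian elimination, partial pivoting)."""
    aug = [list(row) + [v[i]] for i, row in enumerate(m)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, 3):
            f = aug[r][col] / aug[col][col]
            for c in range(col, 4):
                aug[r][c] -= f * aug[col][c]
    s = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        s[r] = (aug[r][3] - sum(aug[r][c] * s[c] for c in range(r + 1, 3))) / aug[r][r]
    return s


def phi(x, u):
    """Quadratic basis: Q(x, u) = h11 x^2 + 2 h12 x u + h22 u^2 = theta . phi."""
    return [x * x, 2.0 * x * u, u * u]


def learn_gain(a, b, q=1.0, r=0.1, iters=8, steps=200, noise=1.0, seed=0):
    """Model-free policy iteration; (a, b) are used ONLY to simulate the plant."""
    rng = random.Random(seed)
    k, x = 0.0, 1.0  # k = 0 is stabilizing because |a| < 1 (assumed)
    for _ in range(iters):
        ata = [[0.0] * 3 for _ in range(3)]
        atc = [0.0] * 3
        for _ in range(steps):
            u = -k * x + noise * rng.gauss(0.0, 1.0)  # exploration noise for PE
            x_next = a * x + b * u                     # measured plant response
            cost = q * x * x + r * u * u
            # Bellman equation of the current policy: Q(x, u) - Q(x', -k x') = cost
            row = [p - pn for p, pn in zip(phi(x, u), phi(x_next, -k * x_next))]
            for i in range(3):
                atc[i] += row[i] * cost
                for j in range(3):
                    ata[i][j] += row[i] * row[j]
            x = x_next
        _, h12, h22 = solve3(ata, atc)  # least-squares policy evaluation
        k = h12 / h22                   # policy improvement: u = -(h12 / h22) x
    return k


def riccati_gain(a, b, q=1.0, r=0.1, iters=5000):
    """Model-based check: iterate the scalar discrete-time Riccati equation."""
    p = q
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    return a * b * p / (r + b * b * p)


for a, b in ((0.95, 0.05), (0.97, 0.03)):  # hypothetical: nominal vs. extra thermal mass
    print(f"a={a}, b={b}: learned k={learn_gain(a, b):.4f}, Riccati k={riccati_gain(a, b):.4f}")
```

Because this toy plant is deterministic, the least-squares Bellman fit is exact whenever the exploration noise makes the regressor rows linearly independent. Without the noise (u = -kx only), every row becomes a multiple of [1, -2k, k²] and the normal equations are singular. This is the role persistent excitation plays.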

Figure 8 shows the performance of the thermal control system with the learning process for the signal process unit. Results from the previous experiments (Figure 6) are shown as thin lines, and those from the current experiments as bold lines. The thermal system gradually recovered to its normal state from the beginning of orbit No. 6, as the aluminum alloy block was separated from the payload. The nominal and trigger-controlled trajectories (bold red and black lines, respectively) then tracked back toward their targets. These results illustrate that the proposed learning algorithm can adapt when the system model is perturbed by unknown dynamics and can converge to stable states. Moreover, the results suggest that the internal thermal condition can be improved by attaching a large metal alloy block to the payload.

Figure 8.

Comparison of different thermal control algorithms with learning processes for the signal process unit.
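A simple frequency-response argument is consistent with the last observation about the metal block. Consider a hypothetical lumped first-order payload, C dT/dt = -G T + Q₀ sin(ωt). Its steady-state temperature amplitude is Q₀/√(G² + (ωC)²), so extra heat capacity attenuates the orbit-periodic fluctuation. The sketch below assumes a specific heat of roughly 900 J/(kg·K) for the aluminum alloy, so the 0.5 kg block adds about 450 J/K. It also assumes a period of about 5677 s and an invented payload heat capacity and conductance. It illustrates the trend only and is not a model of the tested hardware.

```python
import math


def periodic_amplitude_k(heat_amp_w, cap_j_per_k, cond_w_per_k, period_s):
    """Steady-state temperature amplitude of C dT/dt = -G T + Q0 sin(w t)."""
    w = 2.0 * math.pi / period_s
    return heat_amp_w / math.hypot(cond_w_per_k, w * cap_j_per_k)  # |1 / (G + j w C)|


PERIOD_S = 5677.0        # assumed ~500 km circular orbit
payload_cap = 2000.0     # hypothetical payload heat capacity, J/K
block_cap = 0.5 * 900.0  # 0.5 kg aluminum alloy at an assumed ~900 J/(kg K)
for cap in (payload_cap, payload_cap + block_cap):
    amp = periodic_amplitude_k(1.0, cap, 0.5, PERIOD_S)  # hypothetical G = 0.5 W/K
    print(f"C = {cap:.0f} J/K -> ±{amp:.3f} K per watt of orbital heat-load swing")
```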

4.5. Time-Delay Performance of MWR Ranging System

The time-delay (ranging error) fluctuation of the microwave ranging system is closely related to the on-orbit thermal stability of each payload. The active microwave payloads used in the experiment were first tested individually on a precisely controlled constant-temperature, constant-humidity clean platform. These tests yielded time-delay coefficients (TDC, the time-delay change per degree Celsius) of 40 µm/K and 48 µm/K for the K-band antenna and waveguide switch network, and 19 µm/K and 25 µm/K for the signal process unit and USO, respectively [57]. Moreover, the time-delay variation of the whole K-band ranging system can be kept below 5 µm if payload temperature fluctuations are held within about 0.1 °C.
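The simplest reading of these coefficients is that each payload's temperature deviation contributes a proportional, signed time-delay change, and that the contributions add without cross-coupling. The paper does not state how the contributions combine, so the linear sum below is an assumption. The temperature deviations are hypothetical.

```python
# Time-delay coefficients from the text, in µm per kelvin.
TDC_UM_PER_K = {
    "k_band_antenna": 40.0,
    "waveguide_switch_network": 48.0,
    "signal_process_unit": 19.0,
    "uso": 25.0,
}


def ranging_delay_um(temp_dev_k):
    """Assumed linear TDC model: sum of coefficient * temperature deviation."""
    return sum(TDC_UM_PER_K[name] * dev for name, dev in temp_dev_k.items())


# Hypothetical instantaneous deviations from each payload's target temperature, in K.
example = {
    "k_band_antenna": 0.03,
    "waveguide_switch_network": -0.02,
    "signal_process_unit": 0.05,
    "uso": 0.01,
}
print(f"{ranging_delay_um(example):.2f} µm")
```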

The ranging error obtained with the proposed trigger-based thermal control is shown as the black line in Figure 9. After convergence, the thermal control system performed stably under the online optimal triggered control structure. In orbit No. 10, the peak-to-peak (max–min) ranging error stays below approximately 6 µm with passive thermal control and below 3.5 µm with nominal thermal control. The trigger-based control strategy yields a 25% (RMS) accuracy improvement over the nominal control method, about 2.2 µm in the test.

Figure 9.

The micron-level ranging error during different thermal control processes.
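The figures of merit quoted above (peak-to-peak range and RMS improvement) can be computed as follows. This sketch rests on stated assumptions: the RMS is taken about the series mean, treating a constant range bias as calibrated out, which the text does not specify. The two series are synthetic, not the data of Figure 9.

```python
import math


def rms_about_mean(xs):
    """RMS of deviations from the mean (assumption: constant bias removed)."""
    m = sum(xs) / len(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


def peak_to_peak(xs):
    """Max minus min of the series."""
    return max(xs) - min(xs)


def rms_improvement(baseline, candidate):
    """Fractional RMS reduction of `candidate` relative to `baseline`."""
    return 1.0 - rms_about_mean(candidate) / rms_about_mean(baseline)


# Hypothetical ranging-error series in µm (not the measured data of Figure 9).
nominal = [1.0, -1.0] * 50
trigger = [0.75, -0.75] * 50
print(f"p-p {peak_to_peak(nominal):.2f} -> {peak_to_peak(trigger):.2f} µm, "
      f"RMS improvement {rms_improvement(nominal, trigger):.0%}")
```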

5. Discussion

To improve the autonomy and accuracy of MWR ranging in real space missions, this paper proposed a trigger-based payload thermal control system with an online learning process. Each part of the structure can be selected through an option switch according to actual needs. Typically, the nominal controller provides coarse control in a new thermal environment. The system can then switch to the trigger-based controller during the mission, minimizing the communication resources required from the spacecraft platform. RL-based control is suited to long-term space missions, particularly when thermodynamic conditions deteriorate. The cost is increased computational complexity, introduced by the nominal control, the trigger-based control (trigger-condition computation), and the RL-based control (actor/critic approximation iterations). Laboratory experiments verified the proposed trigger-based thermal control system, demonstrating reduced communication and improved temperature stability for practical use. The effectiveness of the learning process was also validated under thermal dynamic modeling uncertainty. Finally, the ranging accuracy was tested for the whole payload system: the trigger-based control strategy yields a 25% (RMS) performance improvement over the nominal control method, about 2.2 µm.

Author Contributions

Conceptualization, X.W. and H.Z.; methodology, X.W. and H.Z.; software, X.W. and N.W.; validation, X.W., X.L., D.W. and X.Z.; formal analysis, Q.S. and Z.Z.; investigation, X.W., X.L. and Z.Z.; resources, X.W., S.W. and C.D.; data curation, X.W. and X.L.; writing—original draft preparation, X.W.; writing—review and editing, X.W. and Q.S.; visualization, X.W.; supervision, D.W., S.W. and C.D.; project administration, X.W., D.W. and C.D.; funding acquisition, X.W., D.W. and C.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shanghai Nature Science Fund under contract No. 19ZR1426800; Shanghai Jiao Tong University Global Strategic Partnership Fund (2019 SJTU-UoT), WF610561702; National Key R&D Program of China, No. 2020YFC2200800; Natural Science Foundation of China, No. U20B2054, No. U20B2056.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| RMS | Root mean square |
| MWR | Microwave ranging |
| ETC | Event-triggered control |
| STC | Self-triggered control |
| LMI | Linear matrix inequality |
| ADP | Adaptive dynamic programming |
| ApDP | Approximate dynamic programming |
| RL | Reinforcement learning |
| USO | Ultra-stable crystal oscillator |
| CFRP | Carbon fiber reinforced plastic |
| MLI | Multi-layer insulation |
| A/D converter | Analog-to-digital converter |
| FPGA | Field-programmable gate array |
| DSP | Digital signal processing |
| MEMS | Micro-electro-mechanical system |
| PWM | Pulse-width modulation |
| PID | Proportional–integral–derivative |
| HJB function | Hamilton–Jacobi–Bellman function |
| PE | Persistently exciting |
| RAAN | Right ascension of the ascending node |
| TDC | Time-delay coefficient (time delay per degree Celsius) |

Symbols

Crucial symbols in trigger-based control include:

| Description | Symbol |
|---|---|
| saturation control | |
| positive constants for triggering error | |
| the triggered time of event k | |
| signal transmitting period since | |
| function of | |
| trigger period of | |
| function | |

Crucial symbols in learning-based process include:

| Description | Symbol |
|---|---|
| estimate and estimation error of the critic approximation weight | |
| estimate and estimation error of the actor approximation weight | |
| critic approximation error | |
| actor approximation error | |
| critic/actor approximation error function | |
| user-defined function of generalized states | |
| constant gain of convergence rate | |
| constant of the exponential convergence function | |

Appendix A

References

1. Landerer, F.W.; Flechtner, F.M.; Save, H.; Webb, F.H.; Bandikova, T.; Bertiger, W.I.; Bettadpur, S.V.; Byun, S.H.; Dahle, C.; Dobslaw, H.; et al. Extending the global mass change data record: GRACE Follow-On instrument and science data performance. Geophys. Res. Lett. 2020, 47, e2020GL088306.
2. Bryant, R.; Moran, M.S.; McElroy, S.A.; Holifield, C.; Thome, K.J.; Miura, T.; Biggar, S.F. Data continuity of Earth Observing 1 (EO-1) Advanced Land Imager (ALI) and Landsat TM and ETM+. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1204–1214.
3. Totani, T.; Ogawa, H.; Inoue, R.; Das, T.K.; Wakita, M.; Nagata, H. Thermal design procedure for micro- and nanosatellite pointing to earth. J. Thermophys. Heat Transf. 2014, 28, 524–533.
4. Reiss, P.; Hager, P.; Bewick, C. New methodologies for the thermal modeling of CubeSats. In Proceedings of the 26th Annual AIAA/USU Conference on Small Satellites, Logan, UT, USA, 13–16 August 2012; pp. 1–12.
5. Jiang, X.; Han, Q.L.; Liu, S.; Xue, A. A New H∞ Stabilization Criterion for Networked Control Systems. IEEE Trans. Autom. Control 2008, 53, 1025–1032.
6. Åström, K.J.; Bernhardsson, B.M. Comparison of Riemann and Lebesgue sampling for first order stochastic systems. In Proceedings of the 41st IEEE Conference on Decision and Control, Las Vegas, NV, USA, 10–13 December 2002; Volume 2, pp. 2011–2016.
7. Pan, H.; Chang, X.; Zhang, D. Event-triggered adaptive control for uncertain constrained nonlinear systems with its application. IEEE Trans. Ind. Inform. 2019, 16, 3818–3827.
8. Liu, W.; Huang, J. Event-triggered global robust output regulation for a class of nonlinear systems. IEEE Trans. Autom. Control 2017, 62, 5923–5930.
9. Xing, L.; Wen, C.; Liu, Z.; Su, H.; Cai, J. Event-Triggered Output Feedback Control for a Class of Uncertain Nonlinear Systems. IEEE Trans. Autom. Control 2018, 64, 290–297.
10. Wang, R.; Si, C.; Ma, H.; Hao, C. Global event-triggered inner-outer loop stabilization of under-actuated surface vessels. Ocean Eng. 2020, 218, 108228.
11. Zhang, J.; Liu, S.; Liu, J.F. Economic model predictive control with triggered evaluations: State and output feedback. J. Process Control 2014, 24, 1197–1206.
12. Shahid, M.I.; Ling, Q. Event-triggered distributed dynamic output-feedback dissipative control of multi-weighted and multi-delayed large-scale systems. ISA Trans. 2020, 96, 116–131.
13. Azimi, M.M.; Afzalian, A.A.; Ghaderi, R. Decentralized stabilization of a class of large scale networked control systems based on modified event-triggered scheme. Int. J. Dyn. Control 2021, 9, 149–159.
14. Li, F.; Cao, X.; Zhou, C.; Yang, C. Event-triggered asynchronous sliding mode control of CSTR based on Markov Model. J. Frankl. Inst. 2021, 358, 4688–4704.
15. Wang, W.; Tong, S. Distributed adaptive fuzzy event-triggered containment control of nonlinear strict-feedback systems. IEEE Trans. Cybern. 2019, 50, 3973–3983.
16. Su, X.; Liu, Z.; Lai, G.; Zhang, Y.; Chen, C.P. Event-triggered adaptive fuzzy control for uncertain strict-feedback nonlinear systems with guaranteed transient performance. IEEE Trans. Fuzzy Syst. 2019, 27, 2327–2337.
17. Sinha, A.; Mishra, R.K. Control of a nonlinear continuous stirred tank reactor via event triggered sliding modes. Chem. Eng. Sci. 2018, 187, 52–59.
18. Tang, X.T.; Deng, L. Multi-step output feedback predictive control for uncertain discrete-time T-S fuzzy system via event-triggered scheme. Automatica 2019, 107, 362–370.
19. Li, S.; Ahn, C.K.; Guo, J.; Xiang, Z. Neural-Network Approximation-Based Adaptive Periodic Event-Triggered Output-Feedback Control of Switched Nonlinear Systems. IEEE Trans. Cybern. 2020, 51, 4011–4020.
20. Liu, D.; Yang, G.H. Neural Network-Based Event-Triggered MFAC for Nonlinear Discrete-Time Processes. Neurocomputing 2018, 272, 356–364.
21. Xing, X.; Liu, J. Event-triggered neural network control for a class of uncertain nonlinear systems with input quantization. Neurocomputing 2021, 440, 240–250.
22. Yang, X.; Wei, Q.L. Adaptive Critic Designs for Optimal Event-Driven Control of a CSTR System. IEEE Trans. Ind. Inform. 2020, 17, 484–493.
23. Yang, X.; He, H. Event-Driven H∞-Constrained Control Using Adaptive Critic Learning. IEEE Trans. Cybern. 2020, 51, 4860–4872.
24. Yang, X.; Zhu, Y.; Dong, N.; Wei, Q.L. Decentralized Event-Driven Constrained Control Using Adaptive Critic Designs. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–15, Early Access.
25. Seuret, A.; Prieur, C.; Tarbouriech, S.; Zaccarian, L. Event-triggered control with LQ optimality guarantees for saturated linear systems. IFAC Proc. Vol. 2013, 46, 341–346.
26. Tarbouriech, S.; Garcia, G.; da Silva, J.M.G., Jr.; Queinnec, I. Stability and Stabilization of Linear Systems with Saturating Actuators; Springer Science & Business Media: Berlin, Germany, 2011.
27. Wu, W.; Reimann, S.; Liu, S. Event-triggered control for linear systems subject to actuator saturation. IFAC Proc. Vol. 2014, 47, 9492–9497.
28. Årzén, K.E. A simple event-based PID controller. IFAC Proc. Vol. 1999, 32, 8687–8692.
29. Heemels, W.P.; Gorter, R.J.; Van Zijl, A.; Van den Bosch, P.P.; Weiland, S.; Hendrix, W.H.; Vonder, M.R. Asynchronous measurement and control: A case study on motor synchronization. Control Eng. Pract. 1999, 7, 1467–1482.
30. Velasco, M.; Fuertes, J.; Marti, P. The self triggered task model for real-time control systems. In Proceedings of the Work-in-Progress Session of the 24th IEEE Real-Time Systems Symposium (RTSS03), Cancun, Mexico, 3–5 December 2003; Volume 384, pp. 67–70.
31. Heemels, W.; Johansson, K.H.; Tabuada, P. An introduction to event-triggered and self-triggered control. In Proceedings of the 2012 IEEE 51st IEEE Conference on Decision and Control (CDC), Maui, HI, USA, 10–13 December 2012; pp. 3270–3285.
32. Yi, X.; Liu, K.; Dimarogonas, D.V.; Johansson, K.H. Dynamic event-triggered and self-triggered control for multi-agent systems. IEEE Trans. Autom. Control 2018, 64, 3300–3307.
33. Wang, X.; Lemmon, M.D. Self-Triggered Feedback Control Systems with Finite-Gain L2 Stability. IEEE Trans. Autom. Control 2009, 54, 452–467.
34. Almeida, J.; Silvestre, C.; Pascoal, A.M. Self-triggered state-feedback control of linear plants under bounded disturbances. Int. J. Robust Nonlinear Control 2015, 25, 1230–1246.
35. Peng, C.; Han, Q.L. On designing a novel self-triggered sampling scheme for networked control systems with data losses and communication delays. IEEE Trans. Ind. Electron. 2015, 63, 1239–1248.
36. Buşoniu, L.; de Bruin, T.; Tolić, D.; Kober, J.; Palunko, I. Reinforcement learning for control: Performance, stability, and deep approximators. Annu. Rev. Control 2018, 46, 8–28.
37. Vamvoudakis, K.G. Q-learning for continuous-time linear systems: A model-free infinite horizon optimal control approach. Syst. Control Lett. 2017, 100, 14–20.
38. Fortunato, M.; Azar, M.G.; Piot, B.; Menick, J.; Osband, I.; Graves, A.; Mnih, V.; Munos, R.; Hassabis, D.; Pietquin, O.; et al. Noisy networks for exploration. arXiv 2017, arXiv:1706.10295.
39. Asadi, K.; Littman, M.L. An alternative softmax operator for reinforcement learning. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; PMLR 2017; pp. 243–252.
40. Engel, Y.; Mannor, S.; Meir, R. Reinforcement learning with Gaussian processes. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 201–208.
41. Sutton, R.S.; McAllester, D.; Singh, S.; Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 2000, 12, 1057–1063.
42. Jha, S.K.; Roy, S.B.; Bhasin, S. Direct adaptive optimal control for uncertain continuous-time LTI systems without persistence of excitation. IEEE Trans. Circuits Syst. II Express Briefs 2018, 65, 1993–1997.
43. Tu, S.; Recht, B. Least-squares temporal difference learning for the linear quadratic regulator. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; PMLR 2018; pp. 5005–5014.
44. Umenberger, J.; Schön, T.B. Learning convex bounds for linear quadratic control policy synthesis. Adv. Neural Inf. Process. Syst. 2018, 31. Available online: https://proceedings.neurips.cc/paper/2018/hash/f610a13de080fb8df6cf972fc01ad93f-Abstract.html (accessed on 3 July 2022).
45. Lee, D.; Hu, J. Primal-dual Q-learning framework for LQR design. IEEE Trans. Autom. Control 2018, 64, 3756–3763.
46. Konda, V.R.; Tsitsiklis, J.N. On actor-critic algorithms. SIAM J. Control Optim. 2003, 42, 1143–1166.
47. Lee, D.; Lin, C.J.; Lai, C.W.; Huang, T. Smart-valve-assisted model-free predictive control system for chiller plants. Energy Build. 2021, 234, 110708.
48. Qiu, S.; Li, Z.; Fan, D.; He, R.; Dai, X.; Li, Z. Chilled water temperature resetting using model-free reinforcement learning: Engineering application. Energy Build. 2022, 255, 111694.
49. Wang, X.; Gong, D.; Jiang, Y.; Mo, Q.; Kang, Z.; Shen, Q.; Wu, S.; Wang, D. A Submillimeter-Level Relative Navigation Technology for Spacecraft Formation Flying in Highly Elliptical Orbit. Sensors 2020, 20, 6524.
50. Wang, X.; Wu, S.; Gong, D.; Shen, Q.; Wang, D.; Damaren, C. Evaluation of Precise Microwave Ranging Technology for Low Earth Orbit Formation Missions with Beidou Time-Synchronize Receiver. Sensors 2021, 21, 4883.
51. Min, G. Satellite Thermal Control Technology; China Astronautics Press: Beijing, China, 1991; Volume 249. (In Chinese)
52. Choi, M. Thermal assessment of Swift instrument module thermal control system and mini heater controllers after 5+ years in flight. In Proceedings of the 40th International Conference on Environmental Systems, Barcelona, Spain, 11–15 July 2010; AIAA 2010-6003.
53. Choi, M. Thermal Evaluation of NASA/Goddard Heater Controllers on Swift BAT, Optical Bench and ACS. In Proceedings of the 3rd International Energy Conversion Engineering Conference, San Francisco, CA, USA, 15–18 August 2005; AIAA 2005-5607.
54. Granger, J.; Franklin, B.; Michalik, M.; Yates, P.; Peterson, E.; Borders, J. Fault-Tolerant, Multiple-Zone Temperature Control; NASA Tech Briefs: New York, NY, USA, 1 September 2008; No. NPO-45230.
55. Lewis, F.L.; Syrmos, V. Optimal Control; Wiley: New York, NY, USA, 1995.
56. Bradtke, S.J.; Barto, A.G. Linear least-squares algorithms for temporal difference learning. Mach. Learn. 1996, 22, 33–57.
57. Jiao, Z.; Wang, D.; Liu, X.; Ren, S.; Yang, S.; Zhong, X. Test and research on time delay stability of micron microwave ranging system. Space Electron. Technol. 2021, 18, 58–63. (In Chinese)

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).