Application of Deep Neural Network to the Reconstruction of Two-Phase Material Imaging by Capacitively Coupled Electrical Resistance Tomography

Abstract

1. Introduction

2. Methods

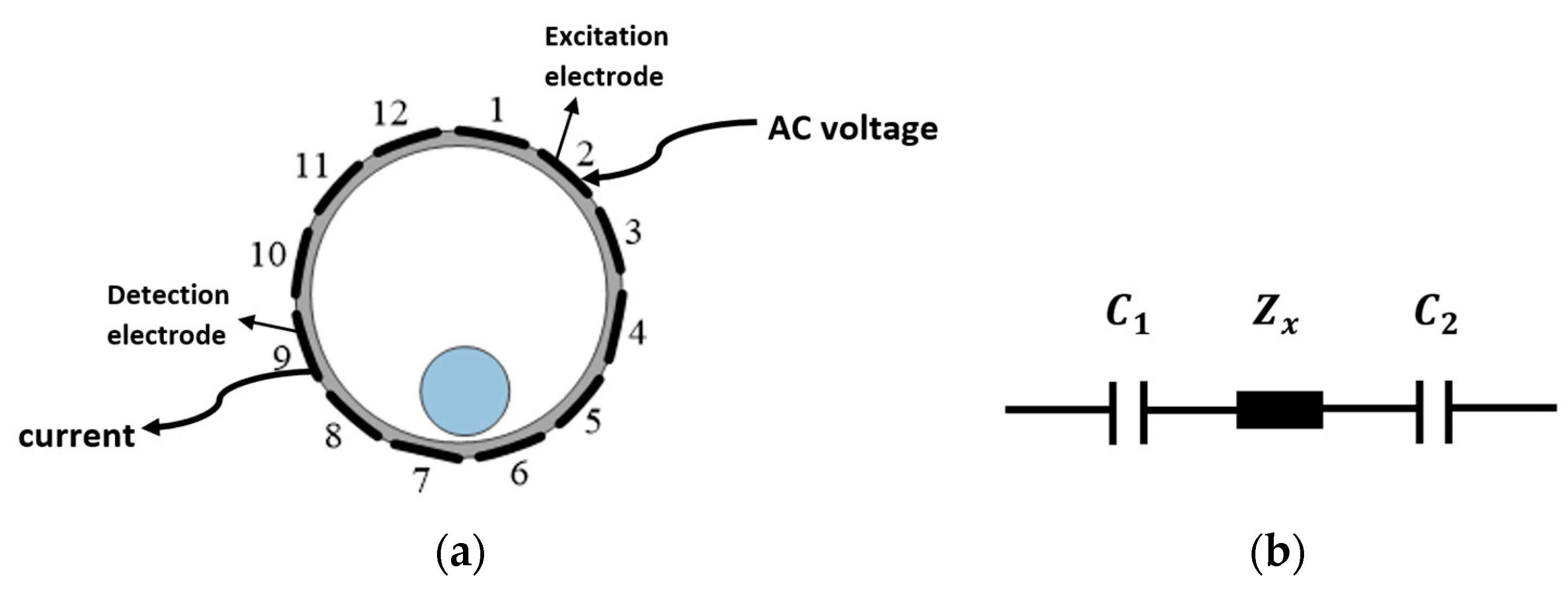

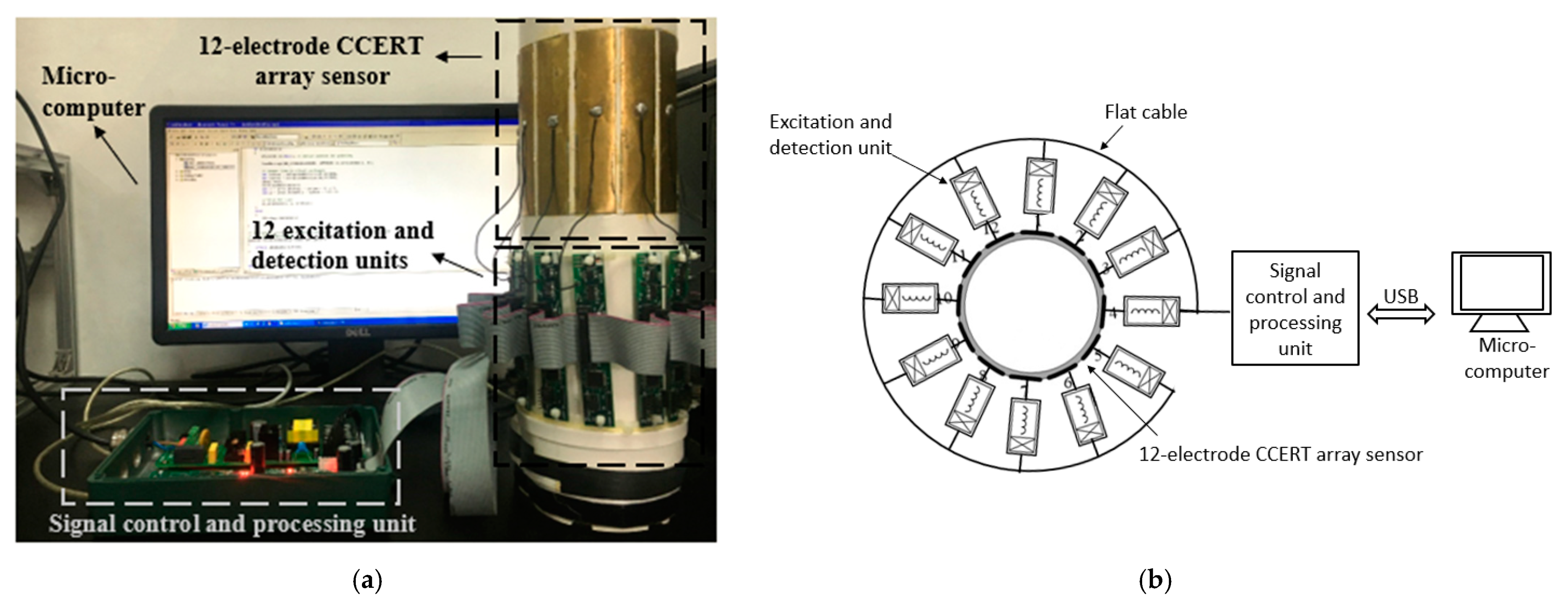

2.1. System Configuration and Data Acquisition Principle

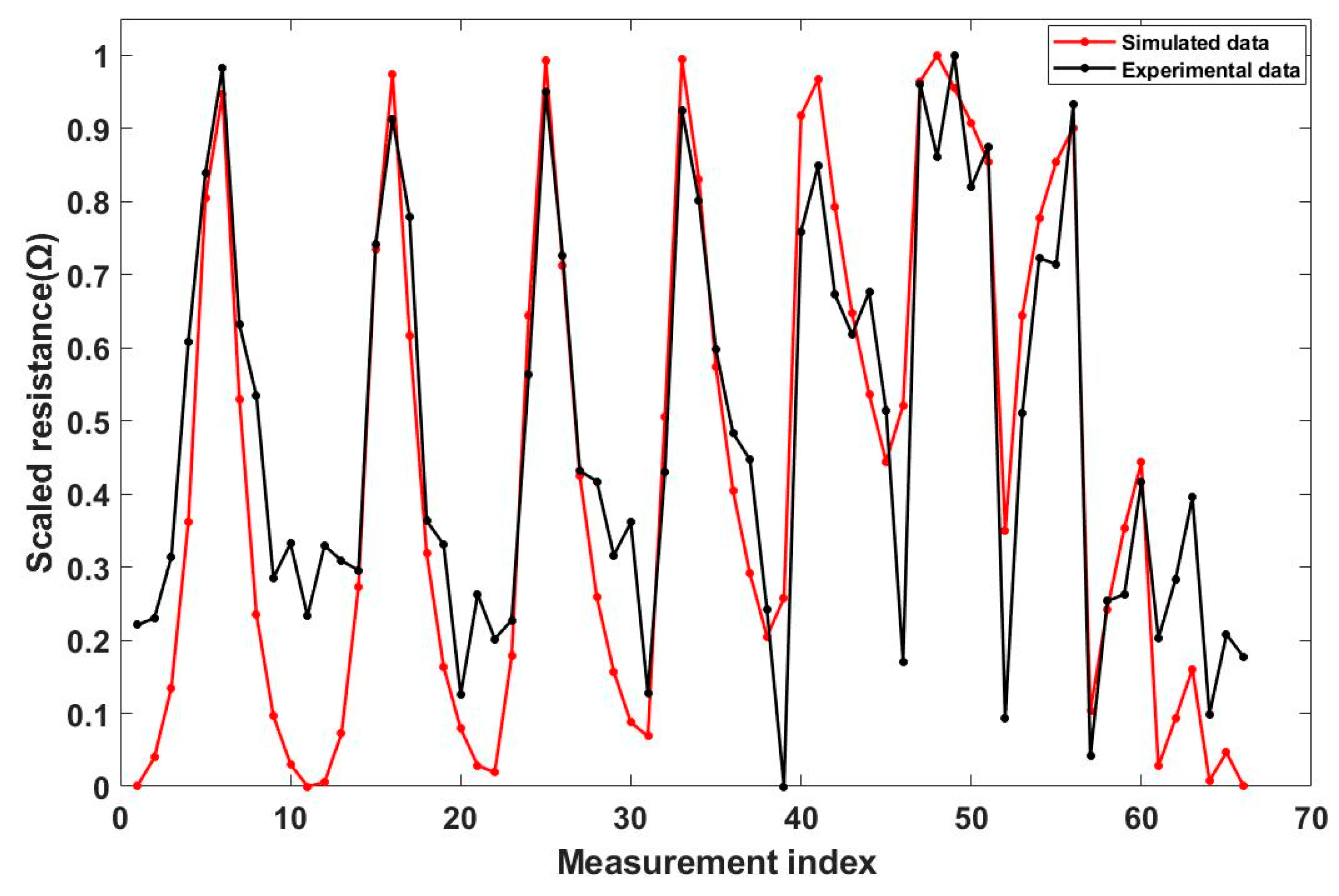

2.2. Conventional Forward Modeling and Image Reconstruction Algorithm of CCERT

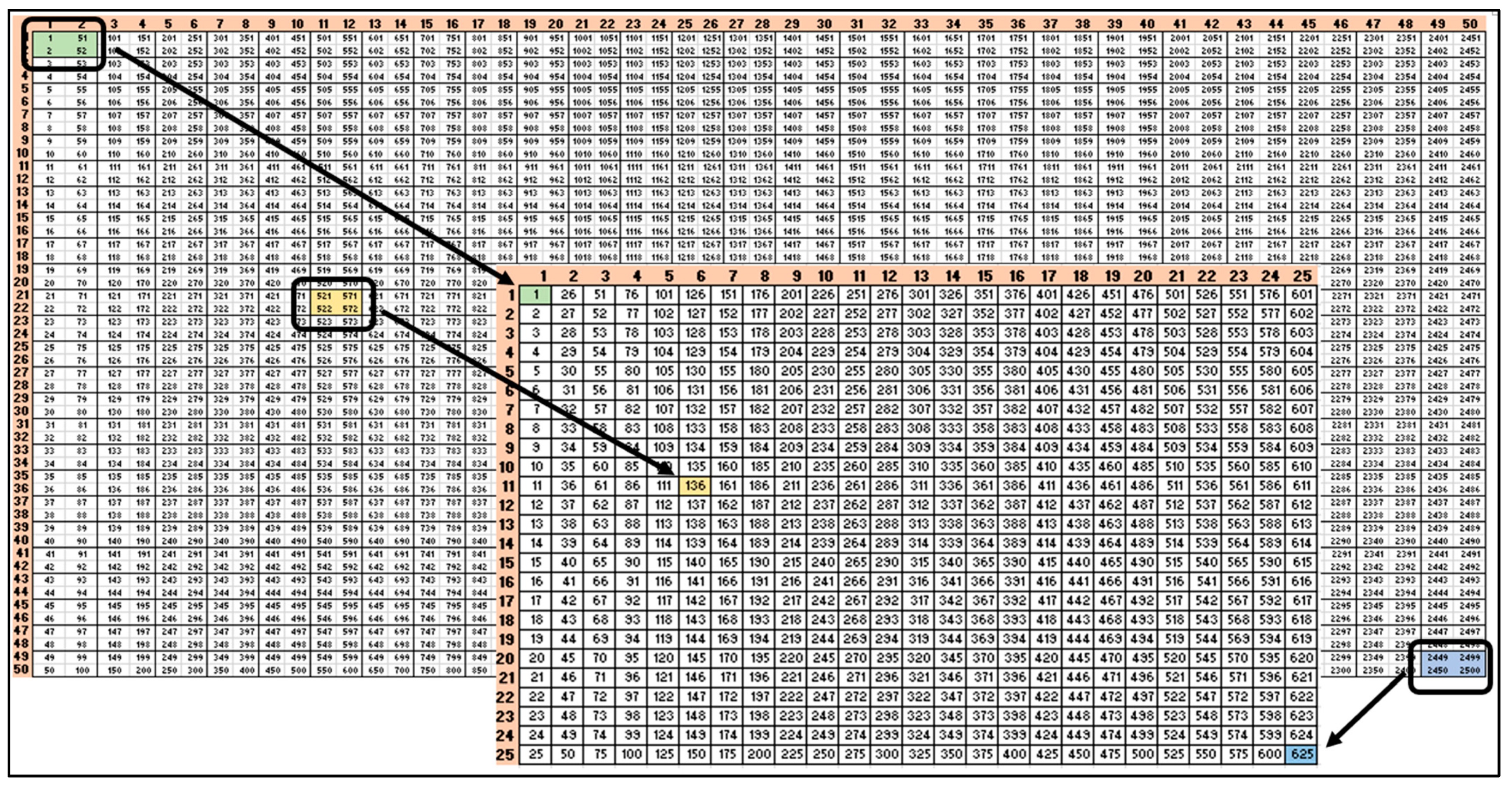

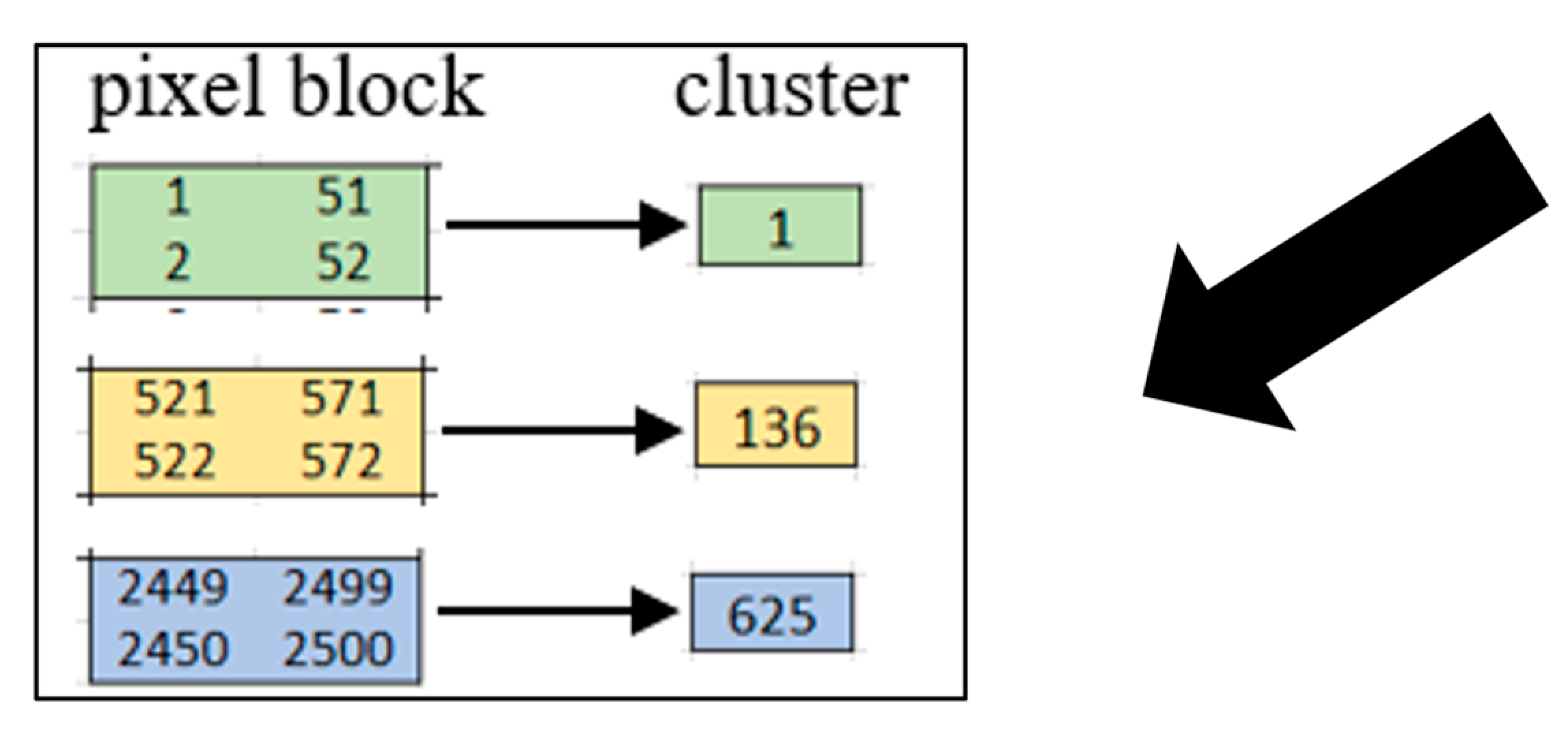

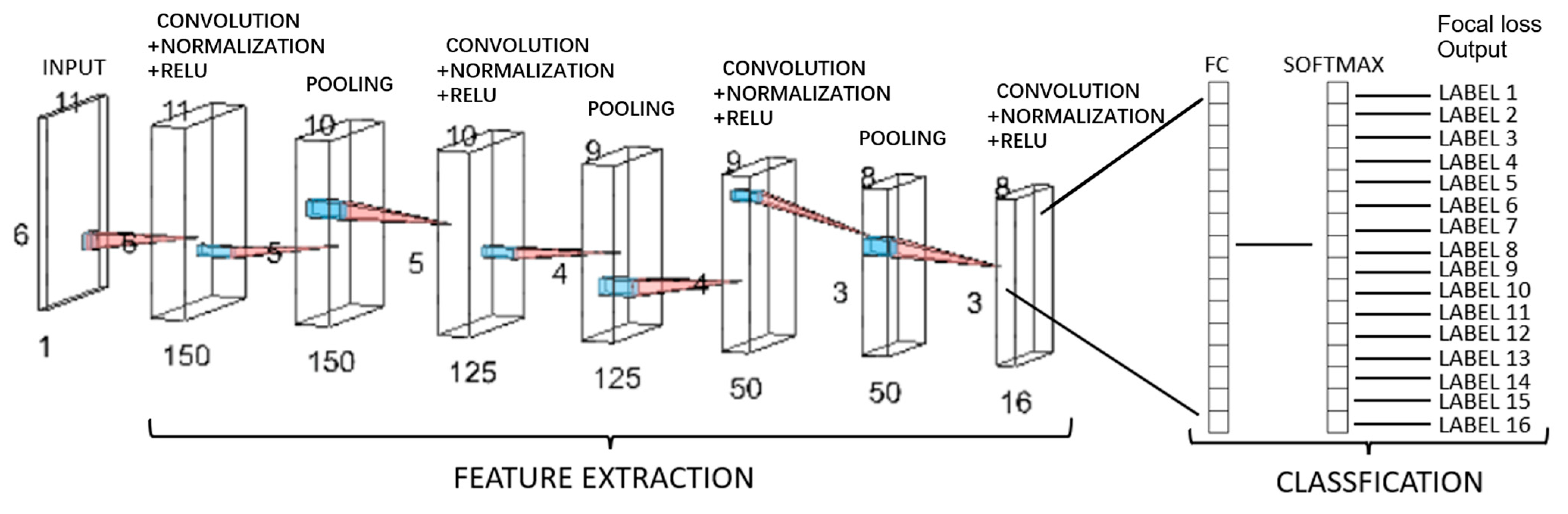

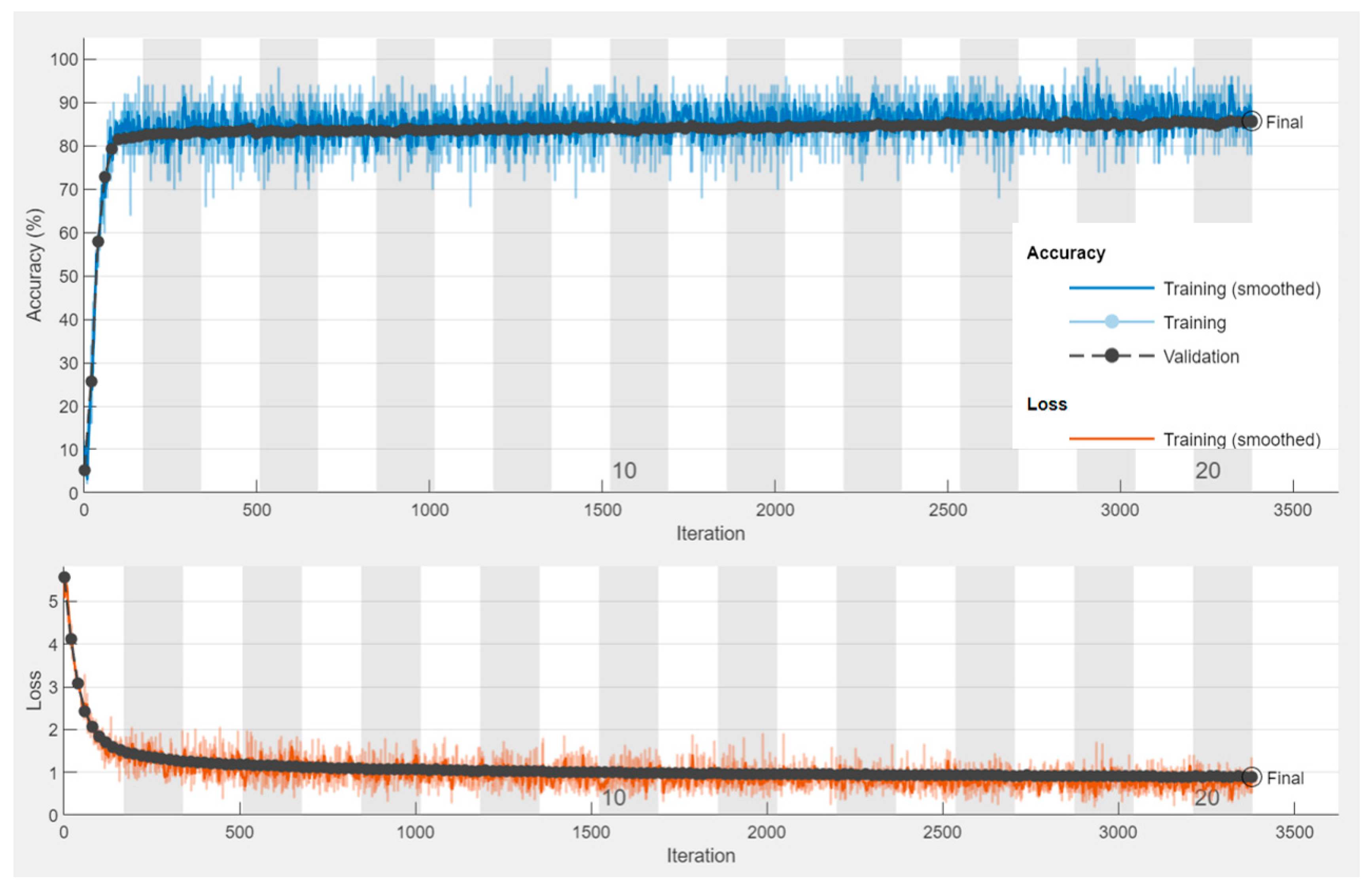

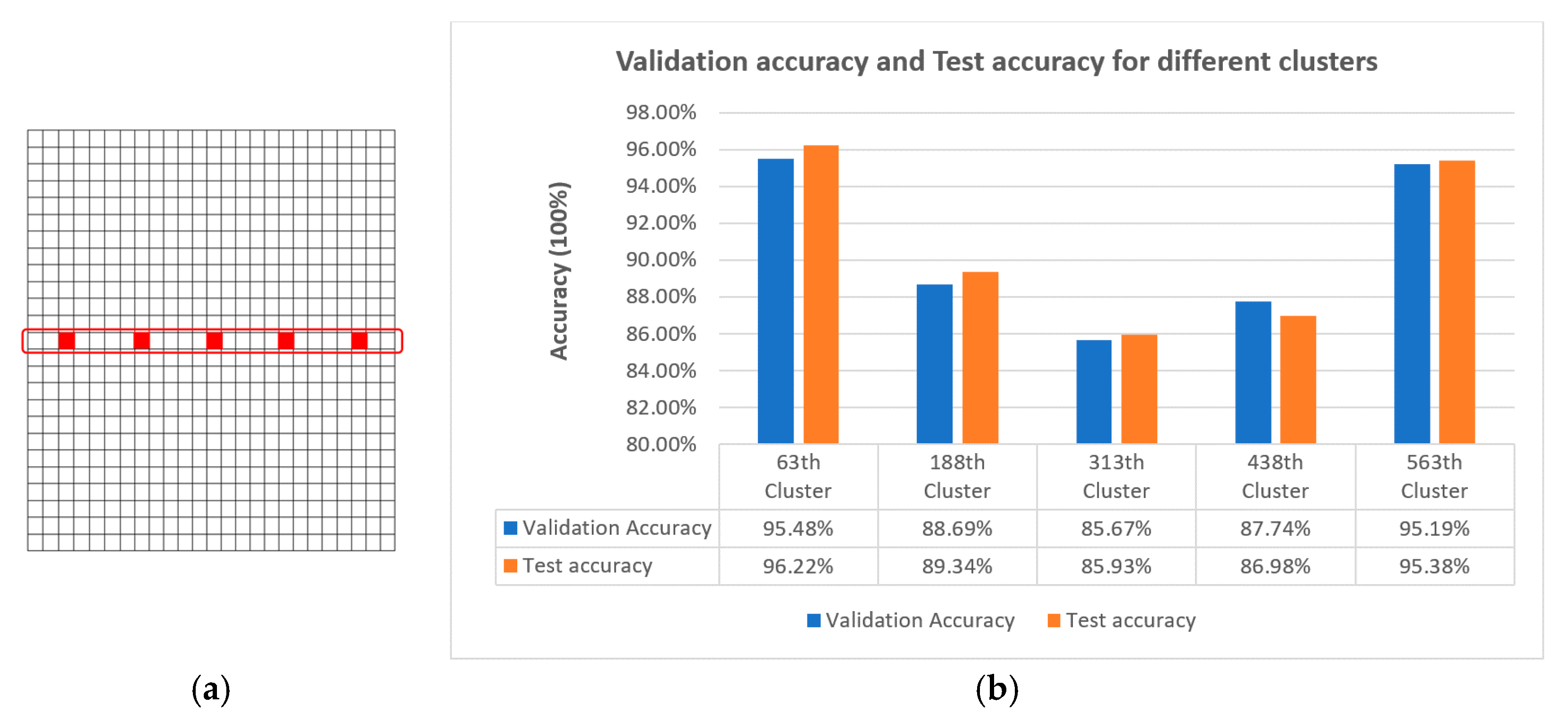

2.3. CNN-Based Image Reconstruction CCERT

3. Results

3.1. Simulation Reconstruction Results

3.2. Experimental Reconstruction Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Label Number | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Binary Matrix |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Pixel Pattern |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
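The binary matrices and pixel patterns of the table above did not survive extraction. As a rough sketch only, assuming the 16 labels enumerate the 16 possible 2×2 binary pixel blocks (an assumption, since the surviving text does not say so), a binary image could be turned into a grid of labels as follows. The function name `block_labels` and the bit ordering (row by row, label = 4-bit value + 1) are hypothetical and may not match the ordering used in the paper.

```python
from typing import List


def block_labels(image: List[List[int]]) -> List[List[int]]:
    """Map each non-overlapping 2x2 block of a binary image to a label in 1..16.

    Assumption: the block is read row by row as a 4-bit number and the
    label is that number plus one. The paper's actual ordering is unknown.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image height and width must both be even")
    labels = []
    for r in range(0, rows, 2):
        row_labels = []
        for c in range(0, cols, 2):
            bits = (image[r][c], image[r][c + 1],
                    image[r + 1][c], image[r + 1][c + 1])
            code = bits[0] * 8 + bits[1] * 4 + bits[2] * 2 + bits[3]
            row_labels.append(code + 1)  # shift 0..15 to 1..16
        labels.append(row_labels)
    return labels


example = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(block_labels(example))
```

Under this assumed ordering, an all-zero block maps to label 1 and an all-one block to label 16.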

| Class / Case | With a Single 14-Pixel Length Inclusion | With a Single 16-Pixel Length Inclusion | With 14- and 16-Pixel Length Inclusions | With 16-, 14-, and 12-Pixel Length Inclusions |
|---|---|---|---|---|
| 1 | 45 | 34 | 82 | 102 |
| 2 | 0 | 1 | 0 | 3 |
| 3 | 0 | 1 | 0 | 3 |
| 4 | 0 | 1 | 0 | 3 |
| 5 | 0 | 1 | 0 | 3 |
| 6 | 0 | 0 | 0 | 0 |
| 7 | 0 | 0 | 0 | 0 |
| 8 | 3 | 3 | 5 | 3 |
| 9 | 3 | 3 | 5 | 3 |
| 10 | 3 | 0 | 5 | 3 |
| 11 | 3 | 0 | 5 | 3 |
| 12 | 1 | 1 | 1 | 4 |
| 13 | 1 | 1 | 1 | 4 |
| 14 | 1 | 1 | 1 | 4 |
| 15 | 1 | 1 | 1 | 4 |
| 16 | 564 | 577 | 519 | 483 |
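The counts in the table above are heavily skewed towards classes 1 and 16. The short sketch below copies the tabulated counts and computes the per-class fractions for each case, which makes the imbalance explicit. The dictionary keys and the helper name `class_fractions` are illustrative choices, not names from the paper.

```python
from typing import Dict, List

# Per-class counts (classes 1..16) copied from the table, one list per case.
CLASS_COUNTS: Dict[str, List[int]] = {
    "single 14-pixel": [45, 0, 0, 0, 0, 0, 0, 3, 3, 3, 3, 1, 1, 1, 1, 564],
    "single 16-pixel": [34, 1, 1, 1, 1, 0, 0, 3, 3, 0, 0, 1, 1, 1, 1, 577],
    "14- and 16-pixel": [82, 0, 0, 0, 0, 0, 0, 5, 5, 5, 5, 1, 1, 1, 1, 519],
    "16-, 14-, 12-pixel": [102, 3, 3, 3, 3, 0, 0, 3, 3, 3, 3, 4, 4, 4, 4, 483],
}


def class_fractions(counts: List[int]) -> List[float]:
    """Return the share of each class in one case."""
    total = sum(counts)
    return [c / total for c in counts]


for case, counts in CLASS_COUNTS.items():
    fractions = class_fractions(counts)
    # Classes are 1-based in the table, so class 16 is index 15.
    print(f"{case}: total={sum(counts)}, "
          f"class 1={fractions[0]:.3f}, class 16={fractions[15]:.3f}")
```

Each column sums to 625 labels, and class 16 alone accounts for roughly 77% to 92% of them, depending on the case.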

| Layer | Name and Type | Operation | Activations | Learnable |
|---|---|---|---|---|
| 1 | Imageinput (Image Input) | images with ‘zerocenter’ normalization |  | - |
| 2 | conv_1 (Convolution) | 150 convolutions with stride [1 1] and padding ‘same’ |  | Weights, Bias |
| 3 | batchnorm_1 (Batch Normalization) | Batch normalization with 150 channels |  | Offset, Scale |
| 4 | relu_1 (ReLU) | ReLU |  | - |
| 5 | maxpool_1 (Max Pooling) | max pooling with stride [1 1] and padding [0 0 0 0] |  | - |
| 6 | conv_2 (Convolution) | 125 convolutions with stride [1 1] and padding ‘same’ |  | Weights, Bias |
| 7 | batchnorm_2 (Batch Normalization) | Batch normalization with 125 channels |  | Offset, Scale |
| 8 | relu_2 (ReLU) | ReLU |  | - |
| 9 | maxpool_2 (Max Pooling) | max pooling with stride [1 1] and padding [0 0 0 0] |  | - |
| 10 | conv_3 (Convolution) | 50 convolutions with stride [1 1] and padding ‘same’ |  | Weights, Bias |
| 11 | batchnorm_3 (Batch Normalization) | Batch normalization with 50 channels |  | Offset, Scale |
| 12 | relu_3 (ReLU) | ReLU |  | - |
| 13 | maxpool_3 (Max Pooling) | max pooling with stride [1 1] and padding [0 0 0 0] |  | - |
| 14 | conv_4 (Convolution) | 16 convolutions with stride [1 1] and padding ‘same’ |  | Weights, Bias |
| 15 | batchnorm_4 (Batch Normalization) | Batch normalization with 16 channels |  | Offset, Scale |
| 16 | relu_4 (ReLU) | ReLU |  | - |
| 17 | fc (Fully Connected) | 16 fully connected layer |  | Weights 16 Bias |
| 18 | softmax (Softmax) | Softmax |  | - |
| 19 | focallossoutput (Focal Loss Layer) | Focal loss layer | - | - |
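The network in the table above ends in a softmax followed by a focal loss layer. As a minimal sketch of what such a loss computes for one sample, the code below uses the standard focal loss form, FL = -alpha * (1 - p_t)^gamma * log(p_t), where p_t is the softmax probability of the true class. The values alpha = 0.25 and gamma = 2.0 are assumptions taken from common practice; the surviving text does not state the parameters used, and the function names are illustrative.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of class scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(probs: Sequence[float], target_index: int,
               alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Focal loss for one sample given class probabilities.

    alpha and gamma are assumed defaults, not values from the paper.
    With alpha = 1 and gamma = 0 this reduces to plain cross-entropy.
    """
    # Clamp to avoid log(0) for a vanishing true-class probability.
    p_t = min(max(probs[target_index], 1e-12), 1.0)
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


# A confident correct prediction contributes far less than a poor one,
# which down-weights the abundant, easily classified background class.
easy = focal_loss([0.9, 0.1], target_index=0)
hard = focal_loss([0.1, 0.9], target_index=0)
print(easy < hard)
```

The down-weighting of easy samples is the usual motivation for focal loss on strongly imbalanced label sets.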

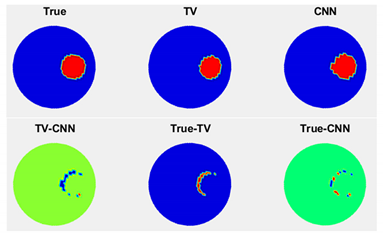

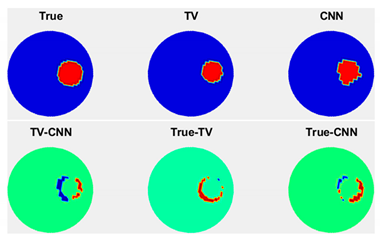

| Case | Image Reconstruction Illustrations | Evaluation Metrics | ||

|---|---|---|---|---|

| 1 |  | SSIM | CNN | 0.9011 |

| TV | 0.8544 | |||

| TV-CNN | 0.9215 | |||

| MSE | CNN | 0.0140 | ||

| TV | 0.0240 | |||

| TV-CNN | 0.0100 | |||

| PSNR | CNN | 18.5387 | ||

| TV | 16.1979 | |||

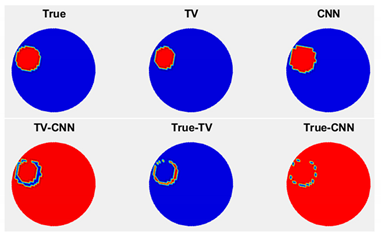

| TV-CNN | 20.0000 | |||

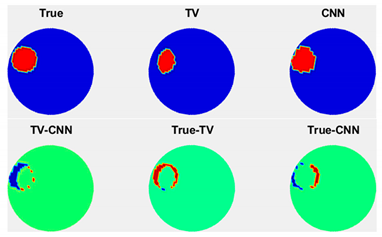

| 2 |  | SSIM | CNN | 0.9150 |

| TV | 0.8425 | |||

| TV-CNN | 0.8863 | |||

| MSE | CNN | 0.0124 | ||

| TV | 0.0296 | |||

| TV-CNN | 0.0180 | |||

| PSNR | CNN | 19.0658 | ||

| TV | 15.2871 | |||

| TV-CNN | 17.4473 | |||

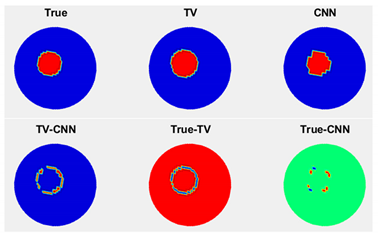

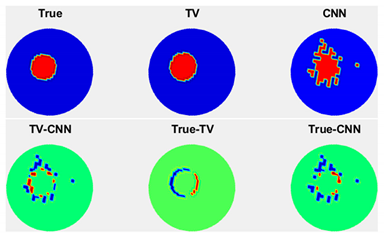

| 3 |  | SSIM | CNN | 0.9043 |

| TV | 0.9842 | |||

| TV-CNN | 0.9131 | |||

| MSE | CNN | 0.0132 | ||

| TV | 0.0020 | |||

| TV-CNN | 0.0120 | |||

| PSNR | CNN | 18.7943 | ||

| TV | 26.9897 | |||

| TV-CNN | 19.2082 | |||

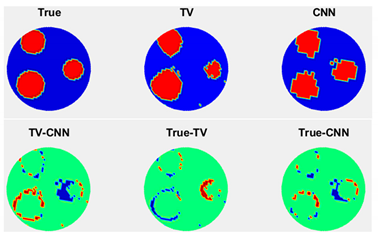

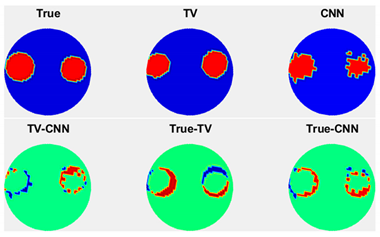

| 4 |  | SSIM | CNN | 0.9502 |

| TV | 0.9270 | |||

| TV-CNN | 0.9170 | |||

| MSE | CNN | 0.0064 | ||

| TV | 0.0116 | |||

| TV-CNN | 0.0132 | |||

| PSNR | CNN | 21.9382 | ||

| TV | 19.3554 | |||

| TV-CNN | 18.7943 | |||

| 5 |  | SSIM | CNN | 0.9288 |

| TV | 0.8886 | |||

| TV-CNN | 0.8576 | |||

| MSE | CNN | 0.0092 | ||

| TV | 0.0184 | |||

| TV-CNN | 0.0276 | |||

| PSNR | CNN | 20.3621 | ||

| TV | 17.3518 | |||

| TV-CNN | 15.5909 | |||

| 6 |  | SSIM | CNN | 0.9538 |

| TV | 0.8735 | |||

| TV-CNN | 0.8668 | |||

| MSE | CNN | 0.0060 | ||

| TV | 0.0168 | |||

| TV-CNN | 0.0196 | |||

| PSNR | CNN | 22.2185 | ||

| TV | 17.7469 | |||

| TV-CNN | 17.0774 | |||

| 7 |  | SSIM | CNN | 0.7713 |

| TV | 0.6736 | |||

| TV-CNN | 0.6740 | |||

| MSE | CNN | 0.0388 | ||

| TV | 0.0568 | |||

| TV-CNN | 0.0660 | |||

| PSNR | CNN | 14.1117 | ||

| TV | 12.4565 | |||

| TV-CNN | 11.8046 | |||

| 8 |  | SSIM | CNN | 0.7660 |

| TV | 0.6240 | |||

| TV-CNN | 0.6574 | |||

| MSE | CNN | 0.0356 | ||

| TV | 0.0704 | |||

| TV-CNN | 0.0724 | |||

| PSNR | CNN | 14.4855 | ||

| TV | 11.5243 | |||

| TV-CNN | 11.4026 | |||

| 9 |  | SSIM | CNN | 0.7016 |

| TV | 0.5970 | |||

| TV-CNN | 0.4947 | |||

| MSE | CNN | 0.0472 | ||

| TV | 0.0604 | |||

| TV-CNN | 0.0868 | |||

| PSNR | CNN | 13.2606 | ||

| TV | 12.1896 | |||

| TV-CNN | 10.6148 | |||
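The PSNR entries in the metrics table above appear consistent with PSNR = 10·log10(1/MSE), that is, with a peak pixel value of 1. For example, an MSE of 0.0140 gives approximately 18.5387 dB and an MSE of 0.0240 gives approximately 16.1979 dB. The sketch below shows both metrics under that assumption; the function names and the `peak` parameter are illustrative, and SSIM is omitted because its window settings are not given in the surviving text.

```python
import math
from typing import List


def mse(reference: List[List[float]], estimate: List[List[float]]) -> float:
    """Mean squared error between two images of identical shape."""
    total = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        for ref_px, est_px in zip(ref_row, est_row):
            total += (ref_px - est_px) ** 2
            count += 1
    return total / count


def psnr(mse_value: float, peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; peak=1.0 is an assumed pixel range."""
    if mse_value <= 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse_value)


# Reproduce two tabulated PSNR values from their MSE values.
for tabulated_mse in (0.0140, 0.0240):
    print(f"MSE={tabulated_mse:.4f} -> PSNR={psnr(tabulated_mse):.4f} dB")
```

For binary images, the MSE is simply the fraction of mismatched pixels, so a lower MSE and a higher PSNR carry the same ranking of the three methods.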

| Case | Image Reconstruction Illustrations | Evaluation Metrics | ||

|---|---|---|---|---|

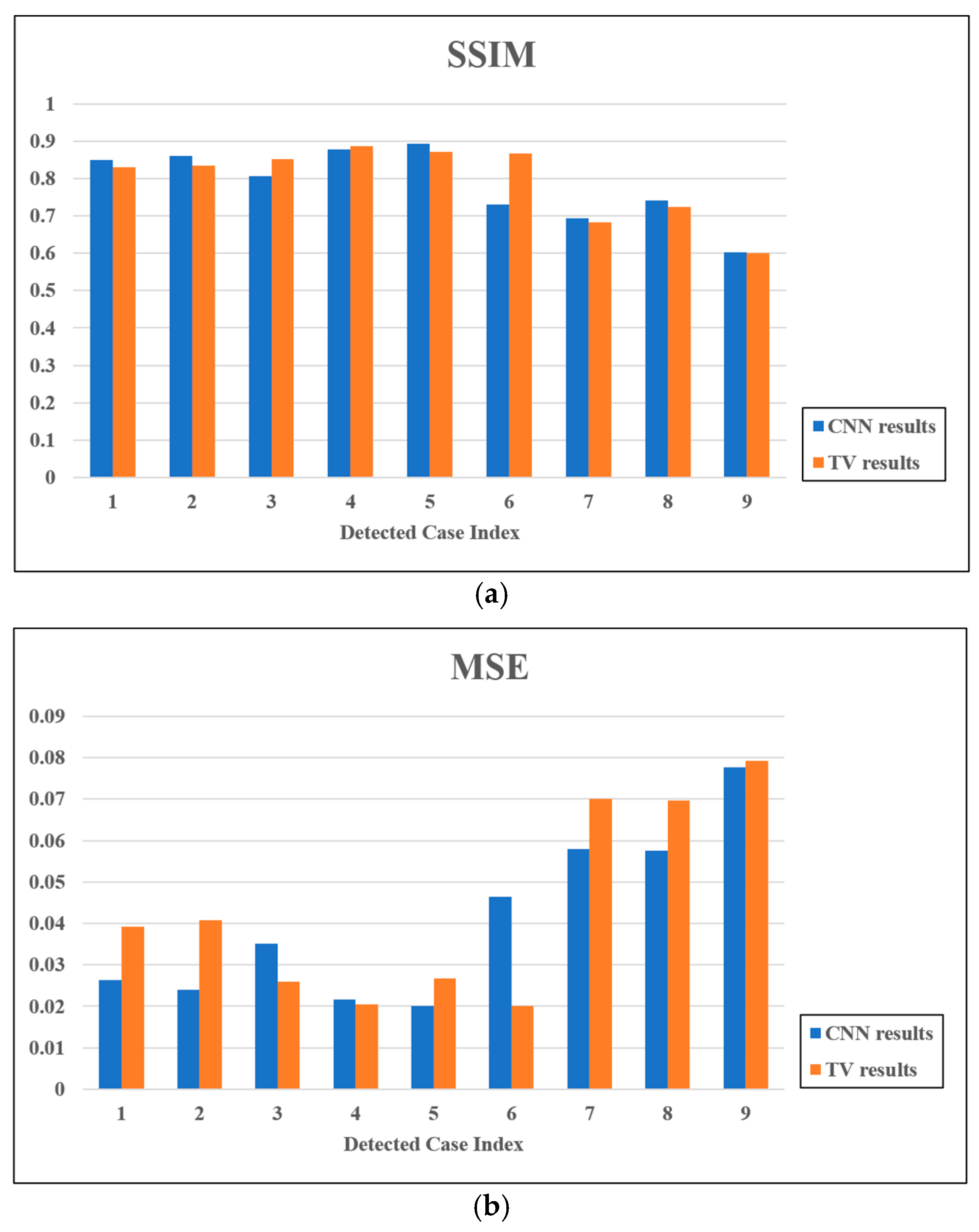

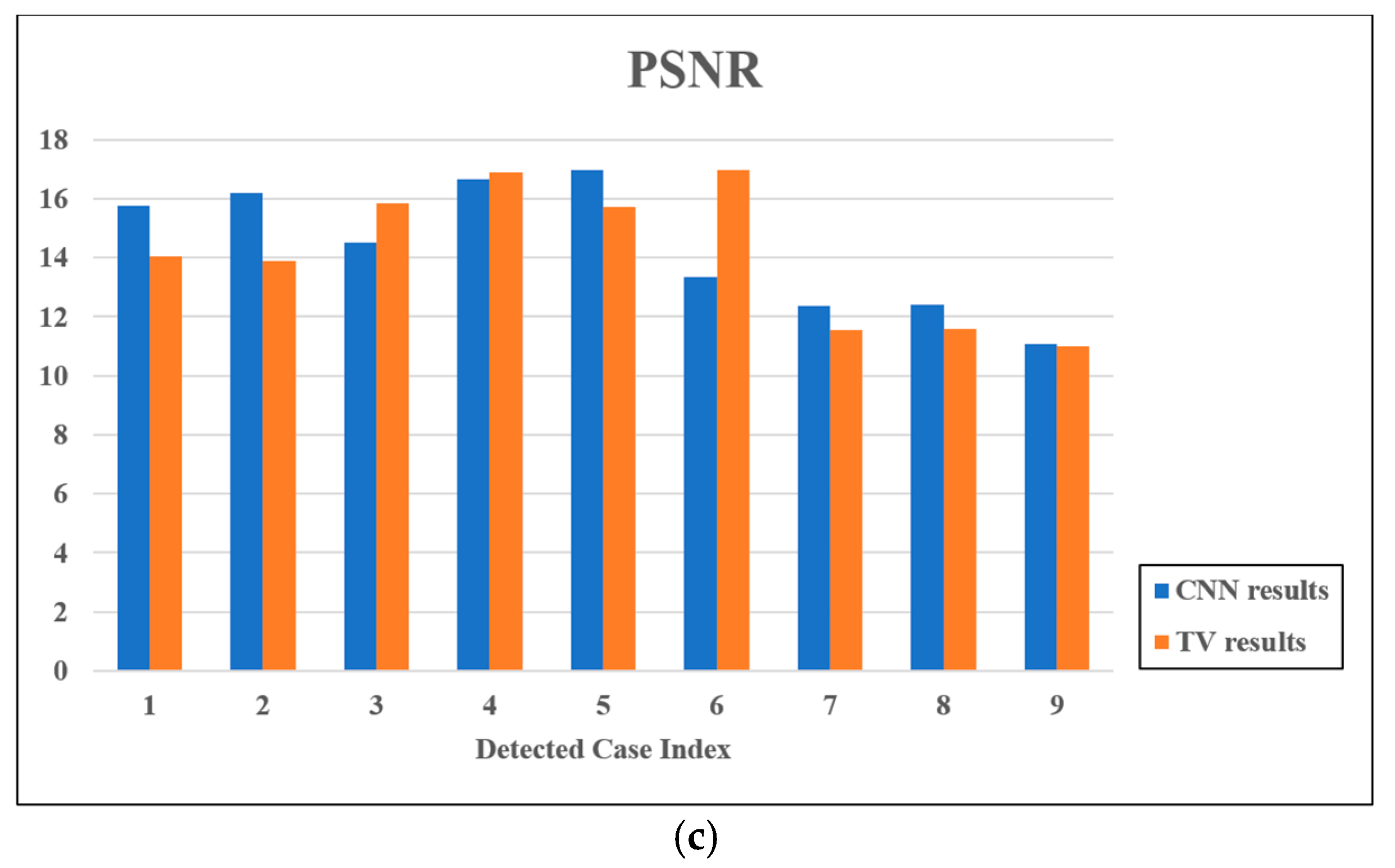

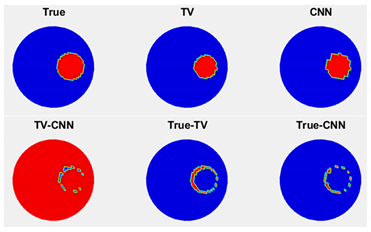

1 |  | SSIM | CNN | 0.8509 |

| TV | 0.8300 | |||

| TV-CNN | 0.8224 | |||

| MSE | CNN | 0.0264 | ||

| TV | 0.0392 | |||

| TV-CNN | 0.0400 | |||

| PSNR | CNN | 15.7840 | ||

| TV | 14.0671 | |||

| TV-CNN | 13.9794 | |||

2 |  | SSIM | CNN | 0.8599 |

| TV | 0.8357 | |||

| TV-CNN | 0.8729 | |||

| MSE | CNN | 0.0240 | ||

| TV | 0.0408 | |||

| TV-CNN | 0.0320 | |||

| PSNR | CNN | 16.1979 | ||

| TV | 13.8934 | |||

| TV-CNN | 14.9485 | |||

3 |  | SSIM | CNN | 0.8071 |

| TV | 0.8531 | |||

| TV-CNN | 0.8226 | |||

| MSE | CNN | 0.0352 | ||

| TV | 0.0260 | |||

| TV-CNN | 0.0268 | |||

| PSNR | CNN | 14.5346 | ||

| TV | 15.8503 | |||

| TV-CNN | 15.7187 | |||

4 |  | SSIM | CNN | 0.8778 |

| TV | 0.8879 | |||

| TV-CNN | 0.8581 | |||

| MSE | CNN | 0.0216 | ||

| TV | 0.0204 | |||

| TV-CNN | 0.0268 | |||

| PSNR | CNN | 16.6555 | ||

| TV | 16.9037 | |||

| TV-CNN | 15.7187 | |||

5 |  | SSIM | CNN | 0.8937 |

| TV | 0.8708 | |||

| TV-CNN | 0.9000 | |||

| MSE | CNN | 0.0200 | ||

| TV | 0.0268 | |||

| TV-CNN | 0.0284 | |||

| PSNR | CNN | 16.9897 | ||

| TV | 15.7187 | |||

| TV-CNN | 15.4668 | |||

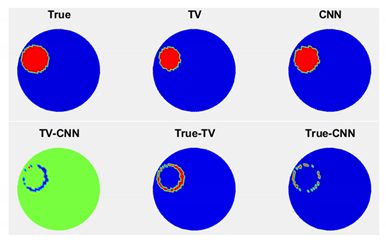

6 |  | SSIM | CNN | 0.7317 |

| TV | 0.8679 | |||

| TV-CNN | 0.7244 | |||

| MSE | CNN | 0.0464 | ||

| TV | 0.0200 | |||

| TV-CNN | 0.0464 | |||

| PSNR | CNN | 13.3348 | ||

| TV | 16.9897 | |||

| TV-CNN | 13.3348 | |||

7 |  | SSIM | CNN | 0.6939 |

| TV | 0.6830 | |||

| TV-CNN | 0.7469 | |||

| MSE | CNN | 0.0580 | ||

| TV | 0.0700 | |||

| TV-CNN | 0.0472 | |||

| PSNR | CNN | 12.3657 | ||

| TV | 11.5490 | |||

| TV-CNN | 13.2606 | |||

8 |  | SSIM | CNN | 0.7415 |

| TV | 0.7236 | |||

| TV-CNN | 0.7440 | |||

| MSE | CNN | 0.0576 | ||

| TV | 0.0696 | |||

| TV-CNN | 0.0496 | |||

| PSNR | CNN | 12.3958 | ||

| TV | 11.5739 | |||

| TV-CNN | 13.0452 | |||

9 |  | SSIM | CNN | 0.6037 |

| TV | 0.6000 | |||

| TV-CNN | 0.6500 | |||

| MSE | CNN | 0.0776 | ||

| TV | 0.0792 | |||

| TV-CNN | 0.0664 | |||

| PSNR | CNN | 11.1014 | ||

| TV | 11.0127 | |||

| TV-CNN | 11.7783 | |||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Z.; Ma, G.; Jiang, Y.; Wang, B.; Soleimani, M. Application of Deep Neural Network to the Reconstruction of Two-Phase Material Imaging by Capacitively Coupled Electrical Resistance Tomography. Electronics 2021, 10, 1058. https://doi.org/10.3390/electronics10091058
