3D Sound Coding Color for the Visually Impaired

Abstract

1. Introduction

2. Background and Related Works

2.1. Review of Tactile and Sound Coding Color

2.2. Review of HRTF Systems

2.3. Review of the Sound Representations of Colors

3. Binaural Audio Coding Colors with Spatial Color Wheel

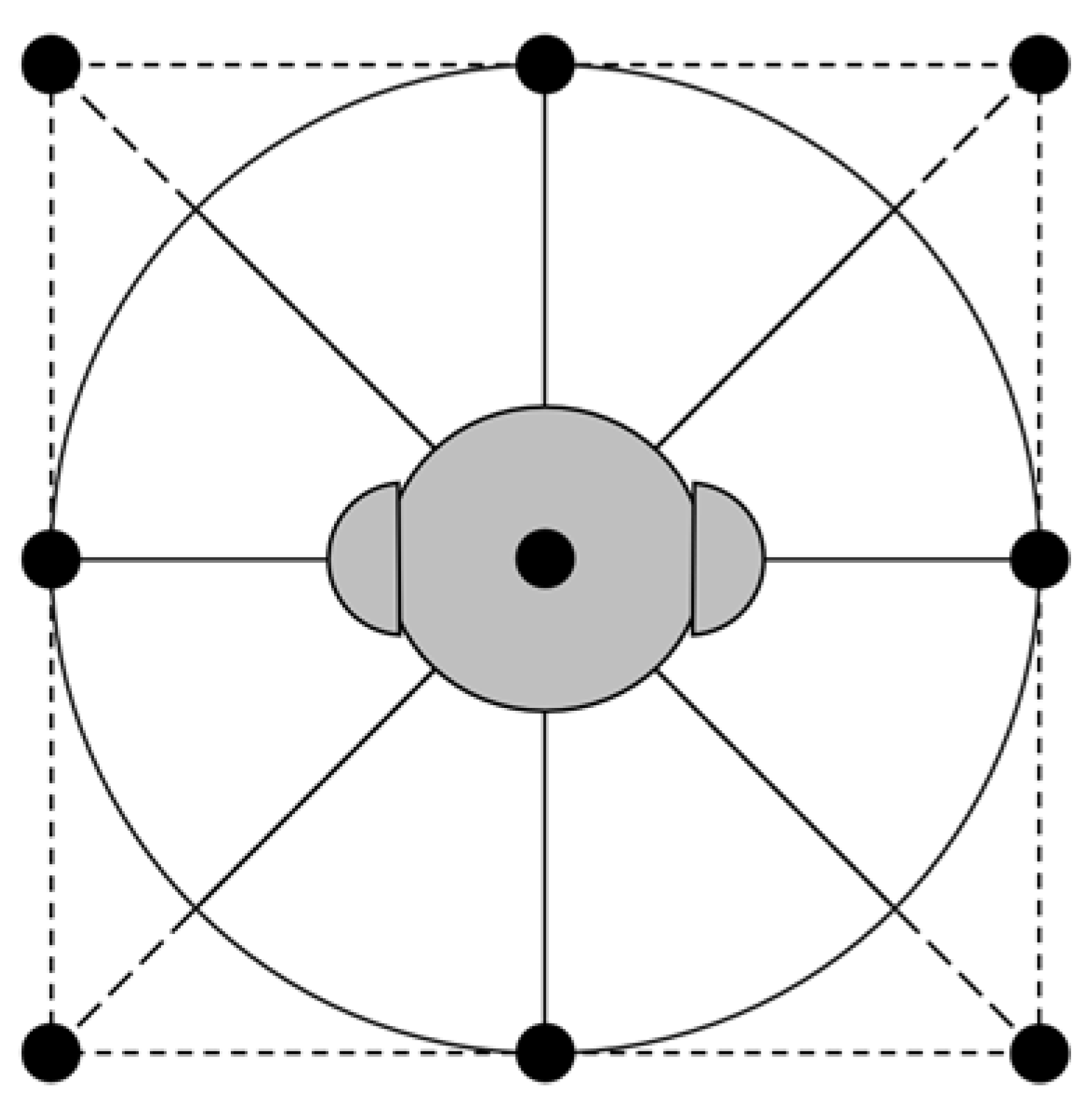

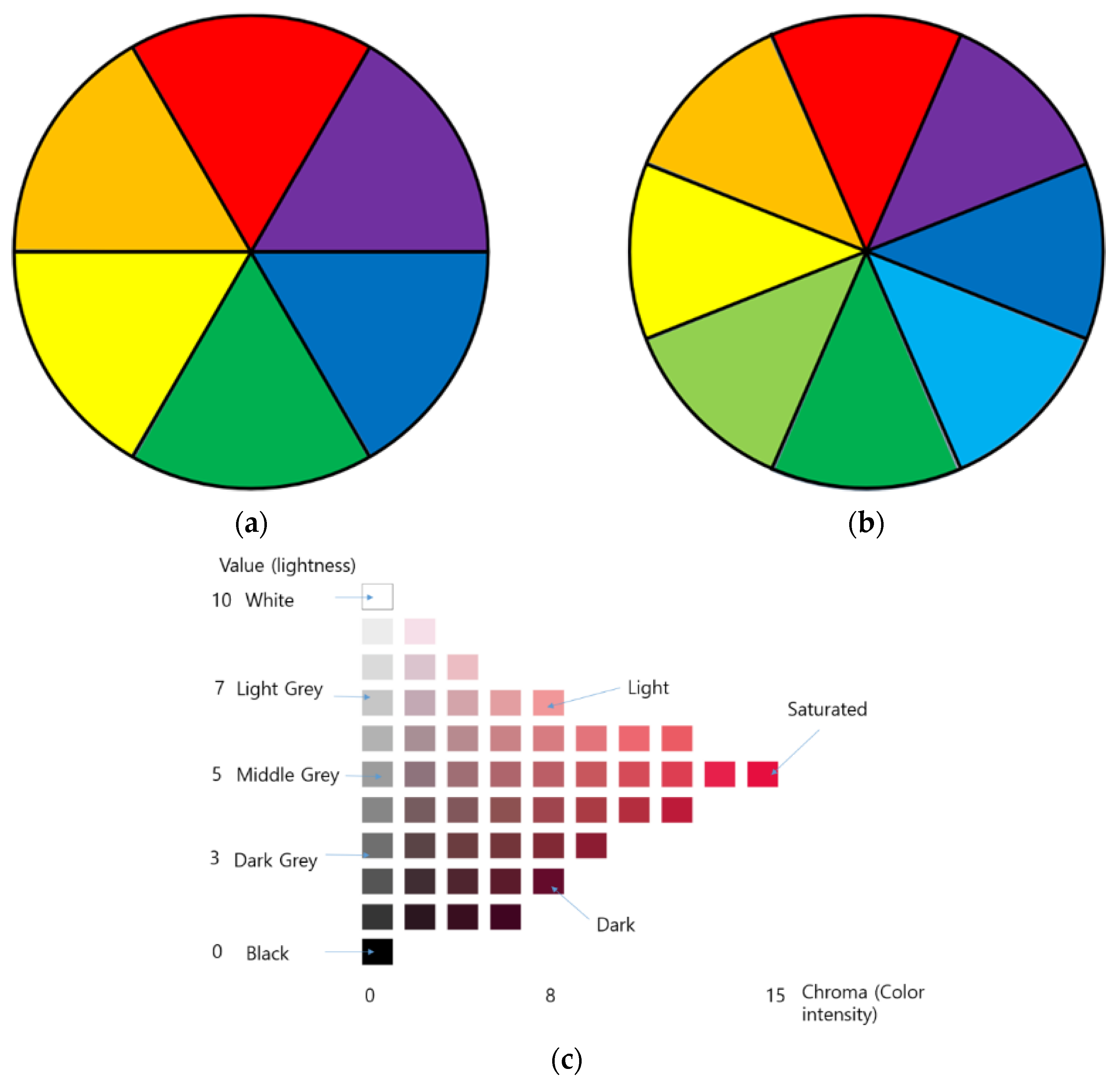

3.1. Spatial Sound Representations of Colors

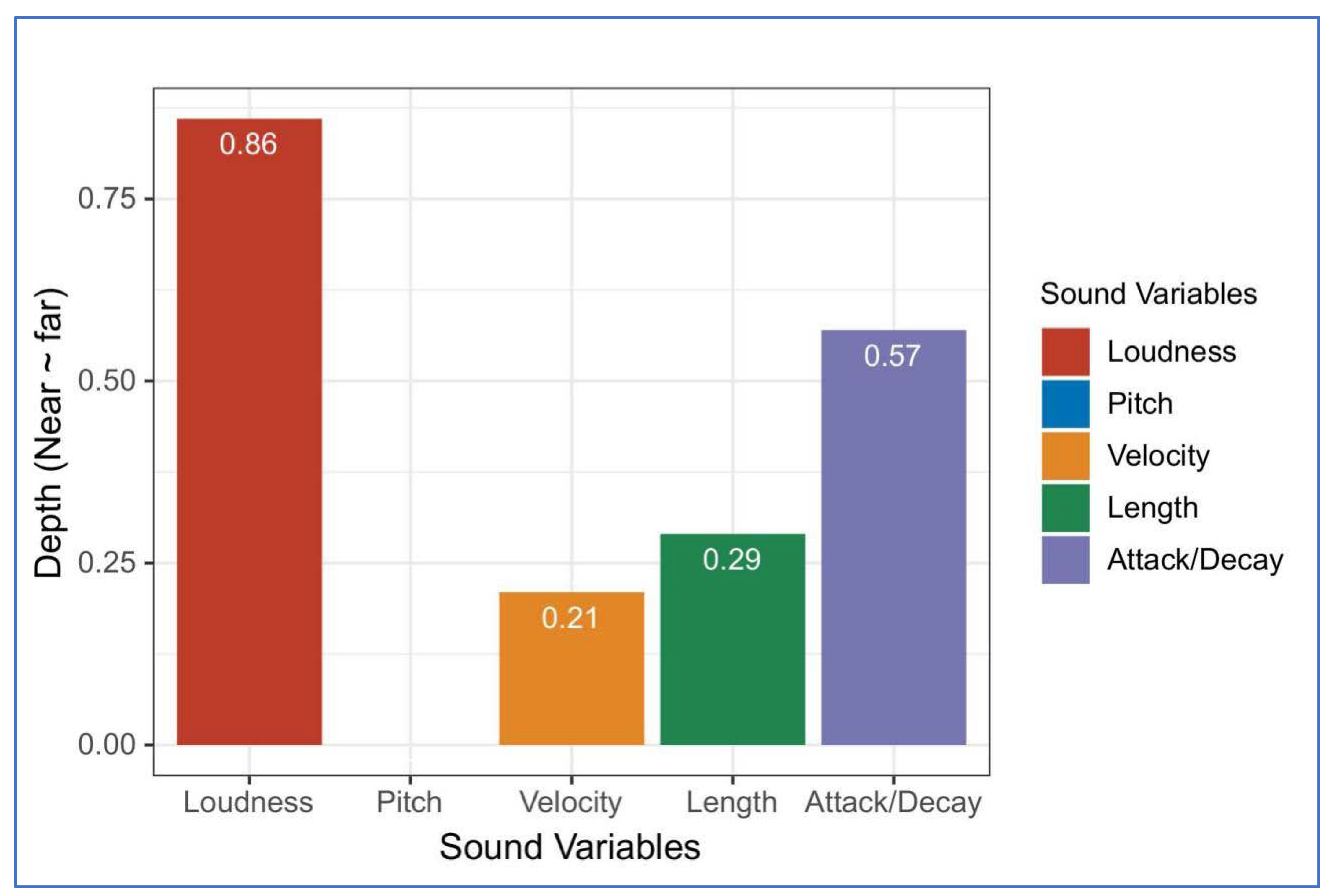

3.2. Sound Representations of Depth

3.2.1. Matching Test

Sound Stimuli

Semantic Stimuli

Experiment Participants and Results

3.2.2. Sound Representations of Color and Depth

3.3. Prototyping Process

4. User Test and Results

4.1. Participants

4.2. Identification Tests

4.3. Workload Assessment

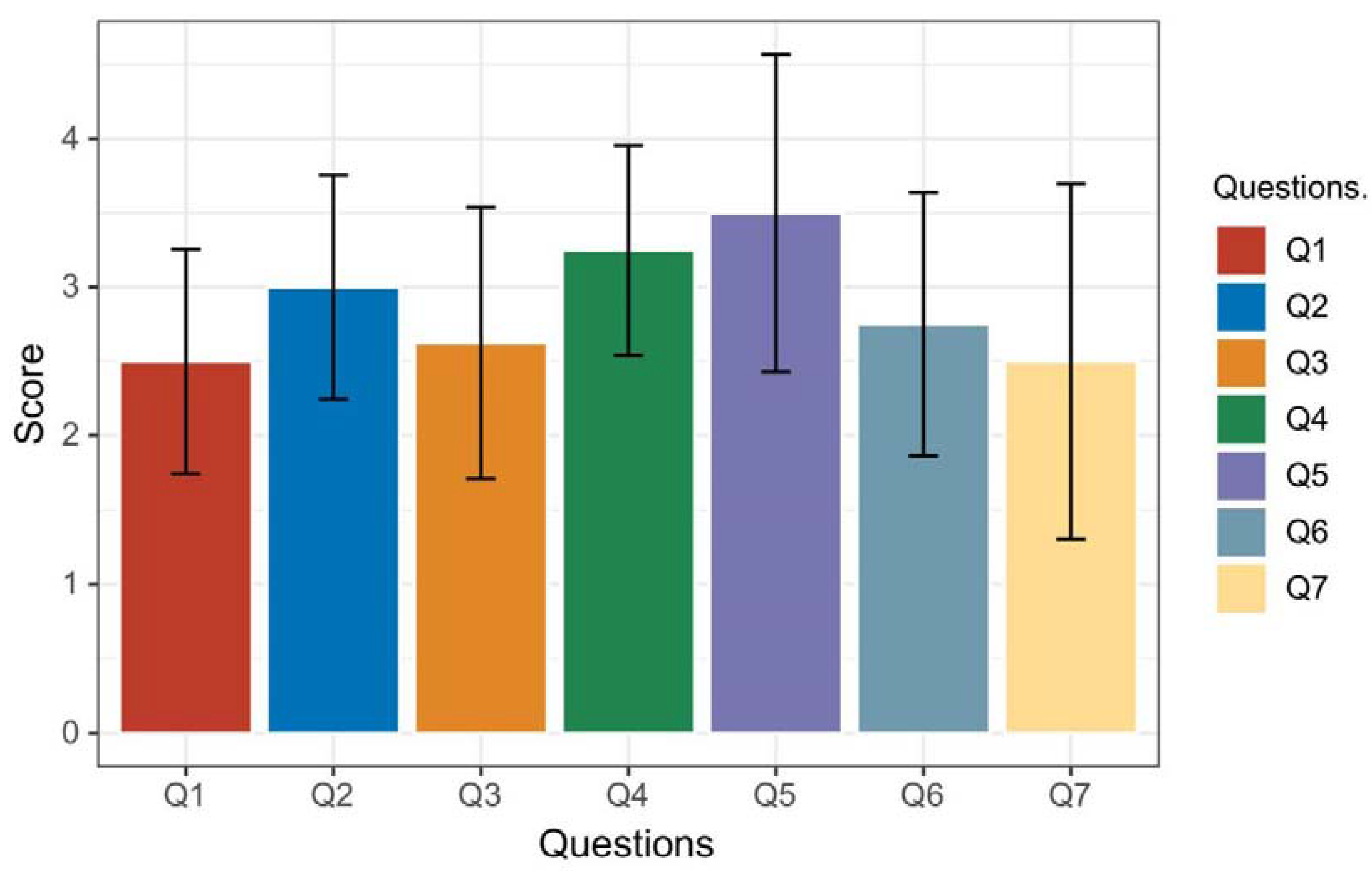

4.4. User Experience Test

- (1) I think that I would like to use this system frequently;

- (2) I found the complexity in this system appropriate;

- (3) I thought the system was easy to use;

- (4) I found that the various functions in this system were well integrated;

- (5) I thought that there was consistency in this system;

- (6) I would imagine that most people would learn to use this system very quickly;

- (7) I think this system was light to use.
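As an illustration of how responses to the seven statements above could be aggregated, the following is a minimal sketch. It assumes each statement is rated on a 1 to 5 agreement scale and that all seven are positively worded; the rating scale, the function name `normalized_usability_score`, and the 0 to 100 normalization are assumptions for illustration and are not taken from this study.

```python
def normalized_usability_score(ratings, scale_max=5):
    """Map seven positively worded ratings (1..scale_max) onto a 0-100 score.

    Hypothetical helper: the scale and the normalization are assumptions,
    not the scoring rule used by the authors.
    """
    if len(ratings) != 7:
        raise ValueError("expected one rating per statement (7 in total)")
    if any(not 1 <= r <= scale_max for r in ratings):
        raise ValueError("each rating must lie between 1 and scale_max")
    # Shift each rating so the lowest answer contributes 0 points.
    earned = sum(r - 1 for r in ratings)
    possible = len(ratings) * (scale_max - 1)
    return 100 * earned / possible


if __name__ == "__main__":
    # Example answers from one hypothetical participant.
    print(normalized_usability_score([5, 4, 4, 5, 4, 3, 4]))
```

Because all statements point in the same direction, no item needs to be reverse-scored, unlike the standard ten-item System Usability Scale.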

5. Discussion

- (1) This study presented color, lightness, and depth information simultaneously using 3D sound and voice modulation;

- (2) The virtual color wheel with 3D sound helps the user understand color composition;

- (3) Our method can be combined with tactile tools to support multiple facets of art enjoyment.

- (1) The method relies on many sound variations, which makes it more complex than single-variable methods and requires a basic level of hearing ability. In addition, the quality of the headphones directly affects how well the effect is perceived;

- (2) The existing, publicly available HRTF methods still have drawbacks: the results may be affected when the listener differs too much from the selected HRTF specimen. This study simplified the design in this respect, but some limitations remain;

- (3) The focus on function and the lack of emotional content may suit people with acquired visual impairment, whereas people with congenital visual impairment may find it harder to relate to color perception.

- (1) The audibility and accuracy of the sound can be improved. Finding a more widely applicable HRTF conversion method, or exploring individually customized HRTFs, would improve sound accuracy. A better way to create sound accurately would also greatly improve the user experience;

- (2) While implementing complex functions, a simplified solution is needed to make the system easier to use. One option is to reduce the content being expressed and thereby the number of sound variables. Another is to have the mobile app play single-variable audio, with different forms of touch selecting the corresponding variable;

- (3) No large-scale tests using mobile applications were conducted in this work. However, feedback on previous mobile applications suggests that a mobile application format will greatly increase the usability of the sound code developed in this paper.

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Global Data on Visual Impairment 2010. Available online: https://www.who.int/blindness/publications/globaldata/en/ (accessed on 6 April 2021).

- Coates, C. Best Practice in Making Museums More Accessible to Visually Impaired Visitors. 2019. Available online: https://www.museumnext.com/article/making-museums-accessible-to-visually-impaired-visitors/ (accessed on 30 November 2020).

- Accessible Guides at The British Museum. Available online: https://www.britishmuseum.org/visit/audio-guide (accessed on 26 January 2021).

- Audio Guides by The Metropolitan Museum of Art. Available online: https://www.metmuseum.org/visit/audio-guide (accessed on 26 January 2021).

- Art inSight and MoMA Audio by Museum of Modern Art. Available online: https://www.moma.org/visit/accessibility/index (accessed on 26 January 2021).

- Samantha Silverberg. A New Way to See: Looking at Museums through the Eyes of The Blind. 2019. Available online: https://www.pressreleasepoint.com/new-way-see-looking-museums-through-eyes-blind (accessed on 30 November 2020).

- Vaz, R.; Freitas, D.; Coelho, A. Blind and Visually Impaired Visitors’ Experiences in Museums: Increasing Accessibility through Assistive Technologies. Int. J. Incl. Mus. 2020, 13, 57–80.

- Jadyn, L. Multisensory Met: The Development of Multisensory Art Exhibits. Available online: http://www.fondazionemarch.org/multisensory-met-the-development-of-multisensory-art-exhibits.php (accessed on 30 November 2020).

- Brule, E.; Bailly, G.; Brock, A.; Valentin, F.; Denis, G.; Jouffrais, C. MapSense: Multi-Sensory Interactive Maps for Children Living with Visual Impairments. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 445–457.

- Hayhoe, S. Blind Visitor Experiences at Art Museums; Rowman & Littlefield: Lanham, MD, USA, 2017.

- San Diego Museum of Art Talking Tactile Exhibit Panels. Available online: http://touchgraphics.com/portfolio/sdma-exhibit-panel/ (accessed on 23 April 2021).

- Dobbelstein, D.; Herrdum, S.; Rukzio, E. Inscent: A Wearable Olfactory Display as an Amplification for Mobile Notifications. In Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 11–15 September 2017.

- Anagnostakis, G.; Antoniou, M.; Kardamitsi, E.; Sachinidis, T.; Koutsabasis, P.; Stavrakis, M.; Vosinakis, S.; Zissis, D. Accessible Museum Collections for the Visually Impaired: Combining Tactile Exploration, Audio Descriptions and Mobile Gestures. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, Florence, Italy, 6–9 September 2016; pp. 1021–1025.

- Reichinger, A.; Fuhrmann, A.; Maierhofer, S.; Purgathofer, W. A Concept for Reuseable Interactive Tactile Reliefs. In Computers Helping People with Special Needs; Miesenberger, K., Bühler, C., Penaz, P., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 108–115.

- Cavazos Quero, L.; Bartolomé, L.C.; Cho, J.D. Accessible Visual Artworks for Blind and Visually Impaired People: Comparing a Multimodal Approach with Tactile Graphics. Electronics 2021, 10, 297.

- Hammershoi, D.; Møller, H. Methods for binaural recording and reproduction. Acta Acust. United Acust. 2002, 88, 303–311.

- Ranjan, R.; Gan, W.S. Natural listening over headphones in augmented reality using adaptive filtering techniques. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 23, 1988–2002.

- Lessard, N.; Paré, M.; Lepore, F.; Lassonde, M. Early-blind human subjects localize sound sources better than sighted subjects. Nature 1998, 395, 278–280.

- Dong, M.; Wang, H.; Guo, R. Towards understanding the differences of using 3D auditory feedback in virtual environments between people with and without visual impairments. In Proceedings of the 2017 IEEE 3rd VR Workshop on Sonic Interactions for Virtual Environments (SIVE), Los Angeles, CA, USA, 19 March 2017.

- Taras, C.; Ertl, T. Interaction with Colored Graphical Representations on Braille Devices. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, San Diego, CA, USA, 19–24 July 2009; pp. 164–173.

- Ramsamy-Iranah, S.; Rosunee, S.; Kistamah, N. Application of assistive tactile symbols in a ’Tactile book’ on color and shapes for children with visual impairments. Int. J. Arts Sci. 2017, 10, 575–590.

- Shin, J.; Cho, J.; Lee, S. Please Touch Color: Tactile-Color Texture Design for the Visually Impaired. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

- Cho, J.D.; Quero, L.C.; Bartolomé, J.I.; Lee, D.W.; Oh, U.; Lee, I. Tactile colour pictogram to improve artwork appreciation of people with visual impairments. Color Res. Appl. 2021, 46, 103–116.

- Kandinsky, V. Concerning the Spiritual in Art; Dover Publications: Mineola, NY, USA, 1977.

- Donnell-Kotrozo, C. Intersensory perception of music: Color me trombone. Music Educ. J. 1978, 65, 32–37.

- Deville, B.; Bologna, G.; Vinckenbosch, M.; Pun, T. See color: Seeing colours with an orchestra. In Human Machine Interaction; Springer: Berlin/Heidelberg, Germany, 2009; pp. 251–279.

- Cho, J.D.; Jeong, J.; Kim, J.H.; Lee, H. Sound Coding Color to Improve Artwork Appreciation by People with Visual Impairments. Electronics 2020, 9, 1981.

- Jabbar, M.S.; Lee, C.H.; Cho, J.D. ColorWatch: Color Perceptual Spatial Tactile Interface for People with Visual Impairments. Electronics 2021, 10, 596.

- Blauert, J. Spatial Hearing: The Psychophysics of Human Sound Localization; MIT Press: Cambridge, MA, USA, 1997.

- Murphy, D.; Neff, F. Spatial sound for computer games and virtual reality. In Game Sound Technology and Player Interaction: Concepts and Developments; IGI Global: Hershey, PA, USA, 2011; pp. 287–312.

- Jenny, C.; Reuter, C. Usability of individualized head-related transfer functions in virtual reality: Empirical study with perceptual attributes in sagittal plane sound localization. JMIR Serious Games 2020, 8, e17576.

- Larsen, C.H.; Lauritsen, D.S.; Larsen, J.J.; Pilgaard, M.; Madsen, J.B. Differences in human audio localization performance between a HRTF-and a non-HRTF audio system. In Proceedings of the 8th Audio Mostly Conference, 8 September 2013; Association for Computing Machinery: New York, NY, USA, 2013; Article 5, pp. 1–8.

- Mendonça, C.; Campos, G.; Dias, P.; Vieira, J.; Ferreira, J.P.; Santos, J.A. On the improvement of localization accuracy with non-individualized HRTF-based sounds. J. Audio Eng. Soc. 2012, 60, 821–830.

- Desloge, J.; Rabinowitz, W.; Zurek, P. Microphone-array hearing aids with binaural output. I. Fixed-processing systems. IEEE Trans. Speech Audio Process. 1997, 5, 529–542.

- Jeub, M.; Vary, P. Binaural dereverberation based on a dual-channel Wiener filter with optimized noise field coherence. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010.

- Drossos, K.; Mimilakis, S.; Floros, A.; Kanellopoulos, N. Stereo goes mobile: Spatial enhancement for short-distance loudspeaker setups. In Proceedings of the 2012 Eighth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Piraeus-Athens, Greece, 18–20 July 2012; pp. 432–435.

- Drossos, K.; Floros, A.; Giannakoulopoulos, A.; Kanellopoulos, N. Investigating the impact of sound angular position on the listener affective state. IEEE Trans. Affect. Comput. 2015, 6, 27–42.

- Li, B.; Zhang, X.; Muñoz, J.P.; Xiao, J.; Rong, X.; Tian, Y. Assisting blind people to avoid obstacles: An wearable obstacle stereo feedback system based on 3D detection. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 2307–2311.

- Drossos, K.; Zormpas, N.; Giannakopoulos, G.; Floros, A. Accessible games for blind children, empowered by binaural sound. In Proceedings of the 8th ACM International Conference on Pervasive Technologies Related to Assistive Environments, Corfu, Greece, 1–3 July 2015; pp. 1–8.

- Gardner, B.; Martin, K. HRTF Measurements of a KEMAR Dummy-Head Microphone; Vol. 280. Vision and Modeling Group, Media Laboratory, Massachusetts Institute of Technology. 1994. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.39.9751&rep=rep1&type=pdf (accessed on 10 February 2021).

- Newton, I. Opticks, or, a Treatise of the Reflections, Refractions, Inflections & Colours of Light; Courier Corporation: Chelmsford, MA, USA, 1952.

- Maryon, E. MARCOTONE: The Science of Tone-Color; Birchard Hayes & Company: Boston, MA, USA, 1924.

- Peacock, K. Synesthetic perception: Alexander Scriabin’s color hearing. Music Percept. 1985, 2, 483–505.

- Lavignac, A. Music and Musicians; Henry Holt and Company: New York, NY, USA, 1903.

- Anikin, A.; Johansson, N. Implicit associations between individual properties of color and sound. Atten. Percept. Psychophys. 2019, 81, 764–777.

- Hamilton-Fletcher, G.; Witzel, C.; Reby, D.; Ward, J. Sound properties associated with equiluminant colors. Multisens. Res. 2017, 30, 337–362.

- Giannakis, K. Sound Mosaics: A Graphical User Interface for Sound Synthesis Based on Audio-Visual Associations. Ph.D. Thesis, Middlesex University, London, UK, 2001.

- Jonas, C.; Spiller, M.J.; Hibbard, P. Summation of visual attributes in auditory–visual crossmodal correspondences. Psychon. Bull. Rev. 2017, 24, 1104–1112.

- Cogan, R.D. Sonic Design: The Nature of Sound and Music; Prentice Hall: Upper Saddle River, NJ, USA, 1976.

- Itten, J. The Art of Color: The Subjective Experience and Objective Rationale of Color; Wiley: New York, NY, USA, 1974.

- Algazi, V.R.; Duda, R.O.; Thompson, D.M.; Avendano, C. The CIPIC HRTF database. In Proceedings of the 2001 IEEE Workshop on the Applications of Signal Processing to Audio and Acoustics (Cat. No. 01TH8575), New Paltz, NY, USA, 21–24 October 2001.

- Listen HRTF Database. Available online: http://recherche.ircam.fr/equipes/salles/listen/index.html (accessed on 10 February 2021).

- HRTF Measurements of a KEMAR Dummy-Head Microphone. Available online: https://sound.media.mit.edu/resources/KEMAR.html (accessed on 10 February 2021).

- Bomhardt, R.; Klein, M.D.L.F.; Fels, J. A high-resolution head-related transfer function and three-dimensional ear model database. Proc. Meet. Acoust. 2016, 29, 050002.

- Berzborn, M.; Bomhardt, R.; Klein, J.; Richter, J.G.; Vorländer, M. The ITA-Toolbox: An open source MATLAB toolbox for acoustic measurements and signal processing. In Proceedings of the 43rd Annual German Congress on Acoustics, Kiel, Germany, 6 May 2017; Volume 6, pp. 222–225.

- Krygier, J.B. Sound and geographic visualization. In Visualization in Modern Cartography; MacEachren, A.M., Taylor, D.R.F., Eds.; Pergamon: Oxford, UK, 1994; pp. 149–166.

- Osgood, C.E.; Suci, G.J.; Tannenbaum, P.H. The Measurement of Meaning; University of Illinois: Urbana, IL, USA, 1957; Volume 12.

- NASA Task Load Index. Available online: https://humansystems.arc.nasa.gov/groups/tlx/index.php (accessed on 15 March 2021).

- System Usability Scale. Available online: https://www.questionpro.com/blog/system-usability-scale (accessed on 15 March 2021).

- Nielsen, J.; Landauer, T.K. A mathematical model of the finding of usability problems. In Proceedings of the INTERACT’93 and CHI’93 Conference on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 1993; pp. 206–213.

- Lamontagne, C.; Sénécal, S.; Fredette, M.; Chen, S.L.; Pourchon, R.; Gaumont, Y.; Léger, P.M. User Test: How Many Users Are Needed to Find the Psychophysiological Pain Points in a Journey Map? In Human Interaction and Emerging Technologies; Springer: Cham, Switzerland, 2019; pp. 136–142.

- Greenwald, A.G.; Nosek, B.A.; Banaji, M.R. Understanding and using the implicit association test: I. An improved scoring algorithm. J. Personal. Soc. Psychol. 2003, 85, 197.

- Schifferstein, H.N. Comparing mental imagery across the sensory modalities. Imagin. Cogn. Personal. 2009, 28, 371–388.

- Brang, D.; Ramachandran, V.S. How do crossmodal correspondences and multisensory processes relate to synesthesia? In Multisensory Perception; Elsevier: Amsterdam, The Netherlands, 2020; pp. 259–281.

- Taggart, E. Synesthesia Artists Who Paint Their Multi-Sensory Experience. 2019. Available online: https://mymodernmet.com/synesthesia-art/ (accessed on 23 April 2021).

- Marks, L.E. The Unity of the Senses: Interrelationships among the Modalities; Series in Cognition and Perception; Academic Press: New York, NY, USA, 1978.

- Cho, J.D. A Study of Multi-Sensory Experience and Color Recognition in Visual Arts Appreciation of People with Visual Impairment. Electronics 2021, 10, 470.

| Developer (Sense Used) | Basic Patterns (Concepts) | # of Colors Presented |

|---|---|---|

| Taras et al. 2009 [20] (Touch) | Dots (Braille) | 23 (6 Hues + 2 levels of lightness for each hue + 5 levels of achromatic colors) |

| Ramsamy-Iranah et al. 2016 [21] (Touch) | Polygons (Children’s Knowledge) | 14 (6 Hues + 5 other colors + 3 levels of achromatic colors) |

| Shin et al. 2019 [22] (Touch) | Lines (Orientation, Grating) The first eight colors are expressed in eight different angles of directionality by dividing a rainbow-shaped semicircle at intervals of 20 degrees | 90 (8 hues + 4 levels of lightness, and 5 levels of saturation for each hue + 10 levels of brown and achromatic colors) |

| Cho et al. 2020 [23] (Touch) | Dots, lines, and curves (pictograms) | Simplified: 29 (6 hues + 2 levels of lightness, and 2 levels of saturation for each hue + 5 levels of achromatic colors); Extended: 53 (12 hues + 2 levels of lightness, and 2 levels of saturation for each hue + 5 levels of achromatic colors) |

| Cho et al. 2020 [27] (Hear) | Classical music melodies played on different instruments | 23 (6 Hues + 2 levels of lightness for each hue + 5 levels of achromatic colors) |

| Jabbar et al. [28] (Touch) | Embossed surface pattern by color wheel | Simplified: 24 (6 hues + 3 levels of lightness for each hue + 6 levels of achromatic colors); Extended: 32 (8 hues + 3 levels of lightness for each hue + 8 levels of achromatic colors) |

| This paper: 6-color wheel (Hear) | Spatial sound representation using binaural recording in virtual environment | 21 (6 hues + 3 levels of lightness for each hue + 3 levels of achromatic colors) |

| This paper: 8-color wheel (Hear) | Spatial sound representation using binaural recording in virtual environment | 27 (8 hues + 3 levels of lightness for each hue + 3 levels of achromatic colors) |

| Azimuth/Pitch | −3 | 0 | 3 |

|---|---|---|---|

| 0° | 1. Dark red | 2. Saturated red | 3. Light red |

| 0°–90° | 4. Dark orange | 5. Saturated orange | 6. Light orange |

| 90° | 7. Dark yellow | 8. Saturated yellow | 9. Light yellow |

| 120°–240° | 10. Dark green | 11. Saturated green | 12. Light green |

| 270° | 13. Dark blue | 14. Saturated blue | 15. Light blue |

| 360°–270° | 16. Dark violet | 17. Saturated violet | 18. Light violet |

| 360°–0° | 19. Black | 20. Gray | 21. White |

| Azimuth/Pitch | −3 | 0 | 3 |

|---|---|---|---|

| 0° | 1. Dark red | 2. Saturated red | 3. Light red |

| 0°–90° | 4. Dark orange | 5. Saturated orange | 6. Light orange |

| 90° | 7. Dark yellow | 8. Saturated yellow | 9. Light yellow |

| 90°–180° | 10. Dark yellow-green | 11. Saturated yellow-green | 12. Light yellow-green |

| 180° | 13. Dark green | 14. Saturated green | 15. Light green |

| 270°–180° | 16. Dark blue-green | 17. Saturated blue-green | 18. Light blue-green |

| 270° | 19. Dark blue | 20. Saturated blue | 21. Light blue |

| 360°–270° | 22. Dark violet | 23. Saturated violet | 24. Light violet |

| 360°–0° | 25. Black | 26. Gray | 27. White |
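The numbering in the two azimuth/pitch tables above follows a regular pattern: three lightness levels per hue, followed by three achromatic entries. The following minimal sketch reproduces that numbering and the pitch column value for either wheel. The function and constant names are illustrative assumptions, and the unit of the pitch values (−3, 0, 3) is not stated in the tables, so the sketch treats them as opaque column labels.

```python
# Hue order as listed in the 6-color and 8-color wheel tables.
HUES_6 = ["red", "orange", "yellow", "green", "blue", "violet"]
HUES_8 = ["red", "orange", "yellow", "yellow-green",
          "green", "blue-green", "blue", "violet"]
ACHROMATIC = ["black", "gray", "white"]

# Pitch column headers paired with the lightness level found in each column.
LIGHTNESS_LEVELS = ["dark", "saturated", "light"]
PITCH_VALUES = [-3, 0, 3]


def color_code(hue, lightness, hues):
    """Return (table number, pitch column value) for a chromatic color."""
    level = LIGHTNESS_LEVELS.index(lightness)
    number = 3 * hues.index(hue) + level + 1
    return number, PITCH_VALUES[level]


def achromatic_code(name, hues):
    """Return (table number, pitch column value) for black, gray, or white."""
    level = ACHROMATIC.index(name)
    number = 3 * len(hues) + level + 1
    return number, PITCH_VALUES[level]


def palette_size(hues):
    """Total number of coded colors: 3 levels per hue plus 3 achromatic."""
    return 3 * len(hues) + len(ACHROMATIC)


if __name__ == "__main__":
    print(color_code("blue", "saturated", HUES_6))
    print(achromatic_code("white", HUES_8))
    print(palette_size(HUES_6), palette_size(HUES_8))
```

The azimuth is deliberately left out of the sketch because several rows give a range (for example 0°–90°) rather than a single angle, and the exact angle used within each range is not recoverable from the tables alone.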

| Sound Variables | Introduction |

|---|---|

| Location | The location of a sound in a two- or three-dimensional space. |

| Loudness | The magnitude of a sound. |

| Pitch | The highness or lowness (frequency) of a sound. |

| Register | The relative location of a pitch in a given range of pitches. |

| Timbre | The general prevailing quality or characteristic of a sound. |

| Duration | The length of time a sound is (or is not) heard. |

| Rate of change | The relationship between the duration of sound and silence over time. |

| Order | The sequence of sounds over time. |

| Attack/Decay | The time it takes a sound to reach its maximum/minimum. |

| Evaluation | Potency | Activity |

|---|---|---|

| Bright~Dark | Strong~Weak | Fast/Agile~Slow/Dull |

| Clear~Cloudy | Hard~Soft | Noisy~Quiet |

| Joyful~Depressed | Rough~Smooth | Extroverted~Introverted |

| Calm~Tense | Pointed (Kiki)~Round (Bouba) Sharp~Dull | Centrifugal~Centripetal Dilated~Constricted |

| Comfortable~Anxious | Far~Near | Passionate~Depressed |

| Warm~Cool | High~Low (e.g., high-pitch~low-pitch) | Active~Inactive |

| Number | Sound Attributes |

|---|---|

| 1 | Fast/Agile~Slow/Dull |

| 2 | Strong~Weak |

| 3 | Warm~Cool |

| 4 | Tense~Calm |

| 5 | Active~Inactive |

| 6 | Noisy~Quiet |

| 7 | Clear~Cloudy |

| 8 | Pointed (Kiki)~Round (Bouba) Sharp~Dull |

| 9 | Dilated~Constricted (Centripetal~Centrifugal) |

| 10 | High~Low (e.g., high-pitch~low-pitch) |

| 11 | Near~Far |

| Sound Attributes | Loudness (Small Sound~Loud Sound) | Pitch (Low Sound~High Sound) | Velocity (Fast Sound~Slow Sound) | Length (Short Sound~Long Sound) | Attack/Decay (Decay~Attack) |

|---|---|---|---|---|---|

| Fast/Agile~Slow/Dull | −0.29 | −0.71 | 1.43 | 0.29 | 0 |

| Strong~Weak | −1.71 | −0.14 | 0.43 | 0 | −0.71 |

| Warm~Cool | −0.14 | −0.14 | −0.71 | −0.57 | −0.14 |

| Tense~Calm | −0.57 | −0.57 | 1 | 1.29 | 0 |

| Active~Inactive | −0.86 | −1.14 | 1.14 | 0.14 | −1 |

| Noisy~Quiet | −1.14 | −0.29 | 0.57 | 0.14 | −0.57 |

| Clear~Cloudy | 0 | −0.57 | 0.29 | 0.14 | −0.71 |

| Pointed (Kiki)~Round (Bouba) Sharp~Dull | 0 | 0.43 | −0.43 | −0.57 | −0.29 |

| Dilated~Constricted (Centripetal~Centrifugal) | 0 | 0.14 | 0.71 | 0.86 | −0.57 |

| High~Low (e.g., high-pitch~low-pitch) | −0.57 | −1.43 | 0.14 | −0.14 | −0.71 |

| Near~Far | −1.71 | 0 | 0.43 | 0.57 | −1.14 |
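One way to read the matching scores in the table above is to ask, for each semantic pair, which sound variable received the score with the largest magnitude. The sketch below does this for the tabulated values. It assumes that a larger absolute score indicates a stronger association and makes no claim about the direction or scale of the ratings, which are not stated in the table; the names `SCORES` and `strongest_variable` are illustrative.

```python
SOUND_VARIABLES = ["Loudness", "Pitch", "Velocity", "Length", "Attack/Decay"]

# Scores copied from the table, one row per semantic pair.
SCORES = {
    "Fast/Agile~Slow/Dull": [-0.29, -0.71, 1.43, 0.29, 0],
    "Strong~Weak": [-1.71, -0.14, 0.43, 0, -0.71],
    "Warm~Cool": [-0.14, -0.14, -0.71, -0.57, -0.14],
    "Tense~Calm": [-0.57, -0.57, 1, 1.29, 0],
    "Active~Inactive": [-0.86, -1.14, 1.14, 0.14, -1],
    "Noisy~Quiet": [-1.14, -0.29, 0.57, 0.14, -0.57],
    "Clear~Cloudy": [0, -0.57, 0.29, 0.14, -0.71],
    "Pointed~Round": [0, 0.43, -0.43, -0.57, -0.29],
    "Dilated~Constricted": [0, 0.14, 0.71, 0.86, -0.57],
    "High~Low": [-0.57, -1.43, 0.14, -0.14, -0.71],
    "Near~Far": [-1.71, 0, 0.43, 0.57, -1.14],
}


def strongest_variable(pair):
    """Return (sound variable, score) with the largest absolute score.

    Ties (e.g. Active~Inactive: Pitch and Velocity) resolve to the
    leftmost column, which is an arbitrary choice of this sketch.
    """
    row = SCORES[pair]
    best = max(range(len(row)), key=lambda i: abs(row[i]))
    return SOUND_VARIABLES[best], row[best]


if __name__ == "__main__":
    for pair in SCORES:
        print(pair, strongest_variable(pair))
```

Under this reading, the Near~Far pair is most strongly tied to loudness, with attack/decay second, while the High~Low pair is most strongly tied to pitch.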

| Colors Sound | Color Dimensions (Left: 6-Color Wheel; Right: 8-Color Wheel) | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Red | Orange | Yellow | Yellow-Green | Green | Blue-Green | Blue | Violet | Gray | ||||||||||

| Red | 5 | 4 | 1 | |||||||||||||||

| Orange | 5 | 4 | 1 | |||||||||||||||

| Yellow | 5 | 5 | ||||||||||||||||

| Yellow-green | 1 | 4 | ||||||||||||||||

| Green | 1 | 5 | 4 | |||||||||||||||

| Blue-green | 5 | |||||||||||||||||

| Blue | 5 | 5 | ||||||||||||||||

| Violet | 5 | 5 | ||||||||||||||||

| Gray | 1 | 5 | 4 | |||||||||||||||

| Average correct answers (%) | 100 | 80 | 100 | 80 | 100 | 100 | 80 | 100 | 80 | 100 | 100 | 100 | 100 | 100 | 100 | 80 | ||

| Total (%) | 100 | 86.67 | ||||||||||||||||

| Sound (Color + Lightness) | Color: Red | Color: Yellow | Color: Blue | Lightness: Dark | Lightness: Saturated | Lightness: Light |
|---|---|---|---|---|---|---|
| Red—Dark | 10 | | | 10 | | |
| Red—Saturated | 10 | | | | 10 | |
| Red—Light | 10 | | | | | 10 |
| Yellow—Dark | | 10 | | 10 | | |
| Yellow—Saturated | | 10 | | | 10 | |
| Yellow—Light | | 10 | | | | 10 |
| Blue—Dark | | | 10 | 10 | | |
| Blue—Saturated | | | 10 | | 10 | |
| Blue—Light | | | 10 | | | 10 |
| Average correct answers (%) | 100 | 100 | 100 | 100 | 100 | 100 |
| Total (%) | 100 (color) | | | 100 (lightness) | | |

| Color + Lightness + Depth Colors Sound | Color Dimensions—Color | Color Dimensions—Lightness | Color Dimensions—Depth | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Red | Yellow | Blue | Dark | Saturated | Light | Near | Mid | Far | |

| Red—Dark—Near | 9 | 1 | 10 | 9 | 1 | ||||

| Red—Dark—Mid | 8 | 2 | 10 | 9 | 1 | ||||

| Red—Dark—Far | 8 | 2 | 10 | 10 | |||||

| Red—Saturated—Near | 10 | 10 | 10 | ||||||

| Red—Saturated—Mid | 10 | 10 | 10 | ||||||

| Red—Saturated—Far | 9 | 1 | 10 | 10 | |||||

| Red—Light—Near | 10 | 1 | 9 | 10 | |||||

| Red—Light—Mid | 9 | 1 | 10 | 10 | |||||

| Red—Light—Far | 9 | 1 | 10 | 10 | |||||

| Yellow—Dark—Near | 10 | 10 | 10 | ||||||

| Yellow—Dark—Mid | 10 | 10 | 1 | 8 | 1 | ||||

| Yellow—Dark—Far | 1 | 9 | 10 | 10 | |||||

| Yellow—Saturated—Near | 10 | 10 | 9 | 1 | |||||

| Yellow—Saturated—Mid | 10 | 10 | 10 | ||||||

| Yellow—Saturated—Far | 10 | 10 | 10 | ||||||

| Yellow—Light—Near | 10 | 10 | 10 | ||||||

| Yellow—Light—Mid | 1 | 9 | 10 | 10 | |||||

| Yellow—Light—Far | 10 | 10 | 1 | 1 | 8 | ||||

| Blue—Dark—Near | 10 | 10 | 10 | ||||||

| Blue—Dark—Mid | 10 | 10 | 1 | 8 | 1 | ||||

| Blue—Dark—Far | 10 | 10 | 10 | ||||||

| Blue—Saturated—Near | 10 | 10 | 10 | ||||||

| Blue—Saturated—Mid | 10 | 10 | 10 | ||||||

| Blue—Saturated—Far | 10 | 10 | 10 | ||||||

| Blue—Light—Near | 10 | 10 | 9 | 1 | |||||

| Blue—Light—Mid | 10 | 10 | 10 | ||||||

| Blue—Light—Far | 2 | 8 | 1 | 9 | 1 | 9 | |||

| Average correct answers (%) | 91.11 | 97.78 | 97.78 | 100 | 100 | 97.78 | 96.67 | 94.44 | 96.67 |

| Total (%) | 95.56 | 99.26 | 95.93 | ||||||
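The color percentages in the table above can be checked from the per-sound counts. The sketch below assumes that each sound was judged 10 times (each row's color counts sum to 10) and that, in each row, the count under the matching color column is the number of correct answers; the lists were read off the flattened rows on that assumption, and the variable names are illustrative.

```python
TRIALS_PER_SOUND = 10

# Correct color identifications per sound, in table row order
# (Dark/Saturated/Light crossed with Near/Mid/Far for each hue).
CORRECT_COLOR = {
    "Red": [9, 8, 8, 10, 10, 9, 10, 9, 9],
    "Yellow": [10, 10, 9, 10, 10, 10, 10, 9, 10],
    "Blue": [10, 10, 10, 10, 10, 10, 10, 10, 8],
}


def accuracy_percent(correct_counts, trials=TRIALS_PER_SOUND):
    """Percentage of correct answers over all sounds of one hue."""
    return 100 * sum(correct_counts) / (trials * len(correct_counts))


per_hue = {hue: accuracy_percent(c) for hue, c in CORRECT_COLOR.items()}
# The three hues have equal trial counts, so the plain mean is the total.
color_total = sum(per_hue.values()) / len(per_hue)

if __name__ == "__main__":
    for hue, value in per_hue.items():
        print(hue, round(value, 2))
    print("Total", round(color_total, 2))
```

Under these assumptions the sketch reproduces the reported 91.11, 97.78, and 97.78 for red, yellow, and blue, and the color total of 95.56.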

| Dimension | Measure | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 | S9 | S10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Wheel (total tests) | | 6-color (43) | 6-color (43) | 6-color (43) | 6-color (43) | 6-color (43) | 8-color (45) | 8-color (45) | 8-color (45) | 8-color (45) | 8-color (45) |
| Color | Correct answers | 40 | 43 | 43 | 37 | 42 | 45 | 45 | 45 | 41 | 42 |
| Color | Rate (%) | 93.02 | 100 | 100 | 86.05 | 97.67 | 100 | 100 | 100 | 95.35 | 97.67 |
| Lightness | Correct answers | 36 | 36 | 36 | 34 | 36 | 36 | 36 | 36 | 36 | 36 |
| Lightness | Rate (%) | 100 | 100 | 100 | 95.35 | 100 | 100 | 100 | 100 | 100 | 100 |
| Depth | Correct answers | 23 | 27 | 27 | 20 | 27 | 27 | 27 | 27 | 27 | 27 |
| Depth | Rate (%) | 90.70 | 100 | 100 | 83.72 | 100 | 100 | 100 | 100 | 100 | 100 |
| All dimensions | Rate (%) | 94.57 | 100 | 100 | 88.37 | 99.22 | 100 | 100 | 100 | 98.45 | 99.22 |
| Total rate (%), 6-color wheel (S1–S5) | 96.43 | | | | | | | | | | |
| Total rate (%), 8-color wheel (S6–S10) | 99.53 | | | | | | | | | | |
| Total rate (%), all participants | 97.98 | | | | | | | | | | |
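The summary rates in the participant table can be reproduced from the per-dimension rates. The sketch below assumes that each participant's overall rate is the unweighted mean of the color, lightness, and depth rates, and that each total is the unweighted mean over the participants in that group; this reading matches the reported figures but is inferred from the numbers rather than stated in the table. Variable names are illustrative.

```python
# (color, lightness, depth) identification rates in percent per participant.
RATES = {
    "S1": (93.02, 100.0, 90.70),
    "S2": (100.0, 100.0, 100.0),
    "S3": (100.0, 100.0, 100.0),
    "S4": (86.05, 95.35, 83.72),
    "S5": (97.67, 100.0, 100.0),
    "S6": (100.0, 100.0, 100.0),
    "S7": (100.0, 100.0, 100.0),
    "S8": (100.0, 100.0, 100.0),
    "S9": (95.35, 100.0, 100.0),
    "S10": (97.67, 100.0, 100.0),
}
SIX_WHEEL = ["S1", "S2", "S3", "S4", "S5"]
EIGHT_WHEEL = ["S6", "S7", "S8", "S9", "S10"]


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Overall rate per participant: mean of the three dimension rates.
overall = {name: mean(rates) for name, rates in RATES.items()}


def group_rate(participants):
    """Mean overall rate across the given participants."""
    return mean(overall[name] for name in participants)


if __name__ == "__main__":
    print(round(group_rate(SIX_WHEEL), 2))
    print(round(group_rate(EIGHT_WHEEL), 2))
    print(round(group_rate(SIX_WHEEL + EIGHT_WHEEL), 2))
```

With these assumptions the sketch yields 96.43 for the 6-color wheel group, 99.53 for the 8-color wheel group, and 97.98 overall, in agreement with the table.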

| Positive User Feedback | Negative User Feedback |

|---|---|

| I do not think it’s too complicated. Once you get used to it, it’s easy to use. | It takes a while to get used to it at first and requires frequent viewing of the photos. |

| The distinction between color, brightness, and depth is very clear. | In some cases, sound confusion can occur. |

| It’s very easy to use with just a good headset. | The sounds used in the experiment were too monotonous. The experience should be better with the prototype. |

| Expressing all three characteristics at the same time allows you to convey information efficiently. | For congenitally visually impaired people, there is a lack of experience with color. Therefore, for them, this method may not make much sense. |

| It’s interesting to feel the depth with the sound. | There is no difficulty in distinguishing, but it was a little difficult to distinguish when hearing fatigue occurred. |

| Conflicting User Feedback | Conflict Resolution (Future Work) |

|---|---|

| It takes a while to get used to it at first and requires frequent viewing of the photos. | The unfamiliarity of first-time use may take some time for the user to adapt. Therefore, it is necessary to provide a concise learning tutorial along with the mobile app. |

| In some cases, sound confusion can occur. | The sound on the right side of the HRTF sample may be slightly louder than the sound on the left side, which can make the right-side sound resemble the front sound when reverberation is present. It also cannot be ruled out that, for new users, adding a depth variable to the voice modulation makes the color harder to recognize. To address this, the ratio and settings of the volume and reverberation variables within the depth variable will first be adjusted to reduce the effect of depth on the other variables. Second, individual sounds that are particularly similar will be adjusted accordingly. |

| The sounds used in the experiment were too monotonous. The experience should be better with the prototype. | Developing the mobile application is the right direction. The final version will be completed and tested with the mobile app once the audio has been improved. Additionally, the study will add more artworks for practical application. |

| For congenitally visually impaired people, there is a lack of experience with color. Therefore, for them, this method may not make much sense. | Congenitally blind people understand colors through physical and abstract associations. Color audition refers to the experience of perceiving color in a sound [27]. In the future, the study will focus not only on functionality but also on emotional content. Adding expressive sounds such as music to connect colors with emotions will make the color expression more vivid. |

| There is no difficulty in distinguishing, but it was a little difficult to distinguish when hearing fatigue occurred. | An option to switch between presenting multiple variables simultaneously and presenting a single variable will be added to reduce auditory fatigue. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, Y.; Lee, C.-H.; Cho, J.D. 3D Sound Coding Color for the Visually Impaired. Electronics 2021, 10, 1037. https://doi.org/10.3390/electronics10091037
