CoLL-IoT: A Collaborative Intruder Detection System for Internet of Things Devices

Abstract

1. Introduction

- Present CoLL-IoT, a collaborative system that detects malicious activities targeting IoT devices.

- Implement different machine learning algorithms to achieve the best results in terms of time and space complexity.

- Evaluate the proposed system on the UNSW-NB15 dataset [8], which was recently generated from real traffic data.

- Deploy and execute CoLL-IoT on a low-powered device, where it detects most malicious activities with a low Type II error rate.

- Achieve a better detection rate than existing tools on the same benchmark dataset.

2. Background

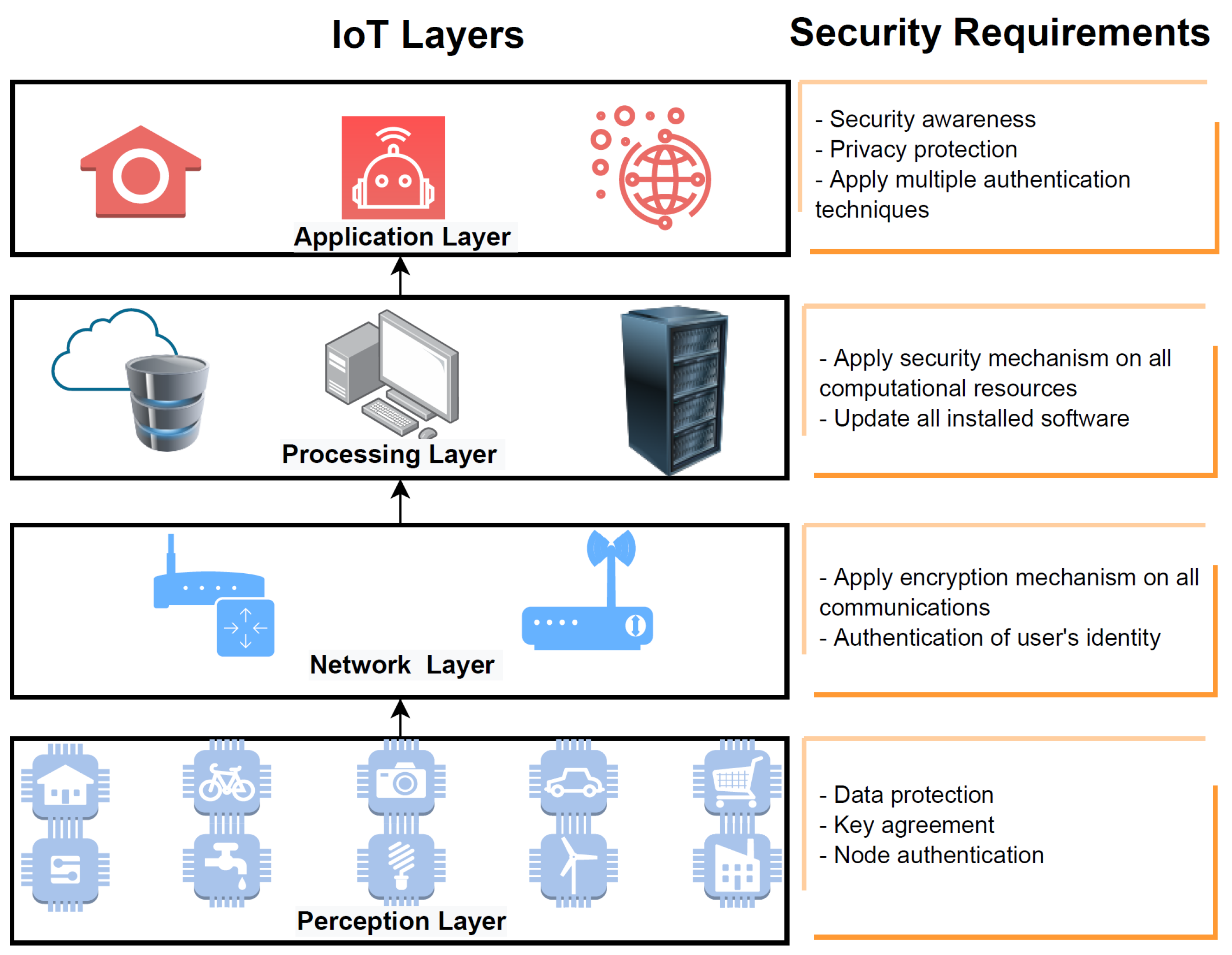

2.1. Internet of Things (IoT)

2.1.1. Perception Layer

2.1.2. Network Layer

2.1.3. Processing Layer

2.1.4. Application Layer

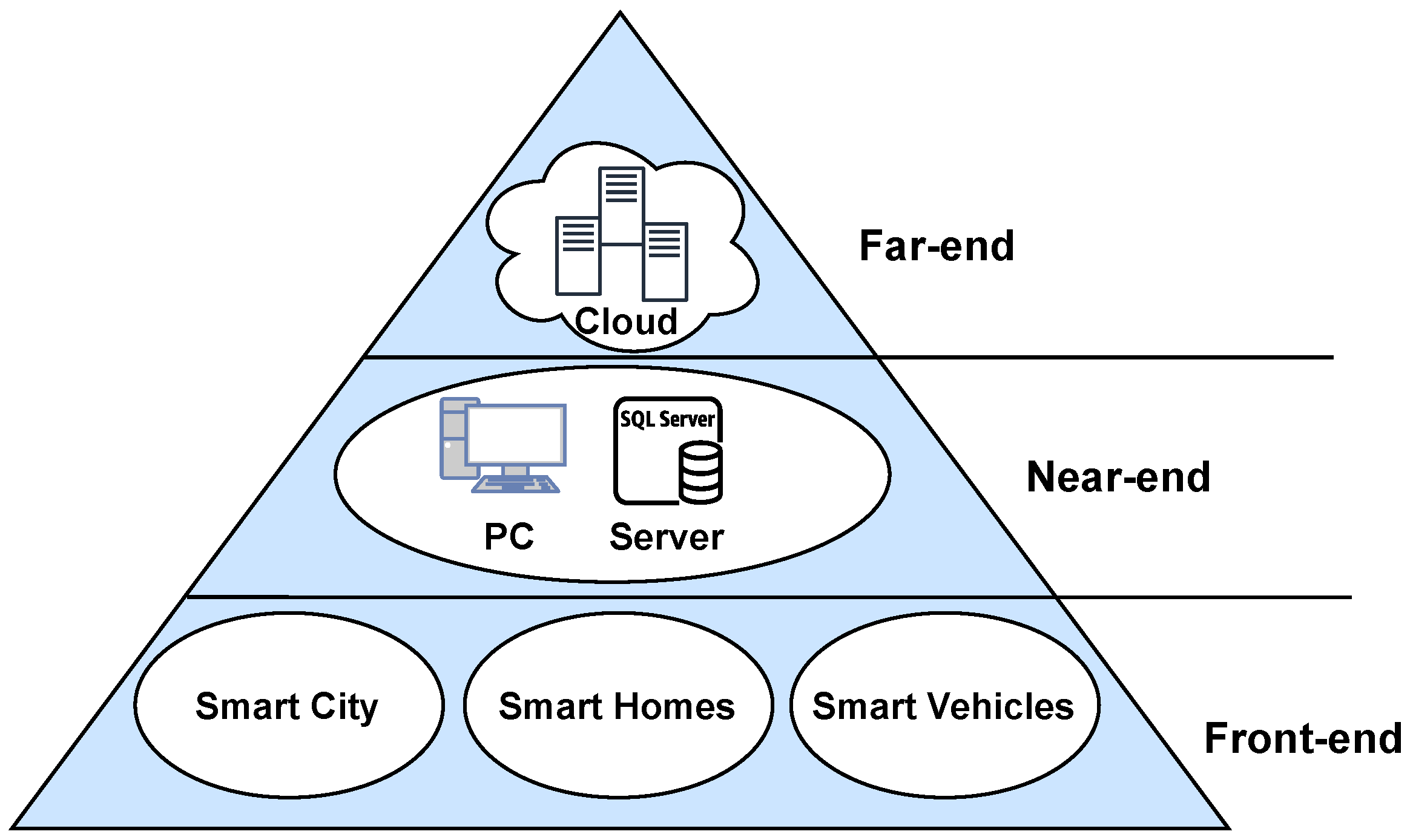

2.2. Edge Computing

2.2.1. Front-End

2.2.2. Near-End

2.2.3. Far-End

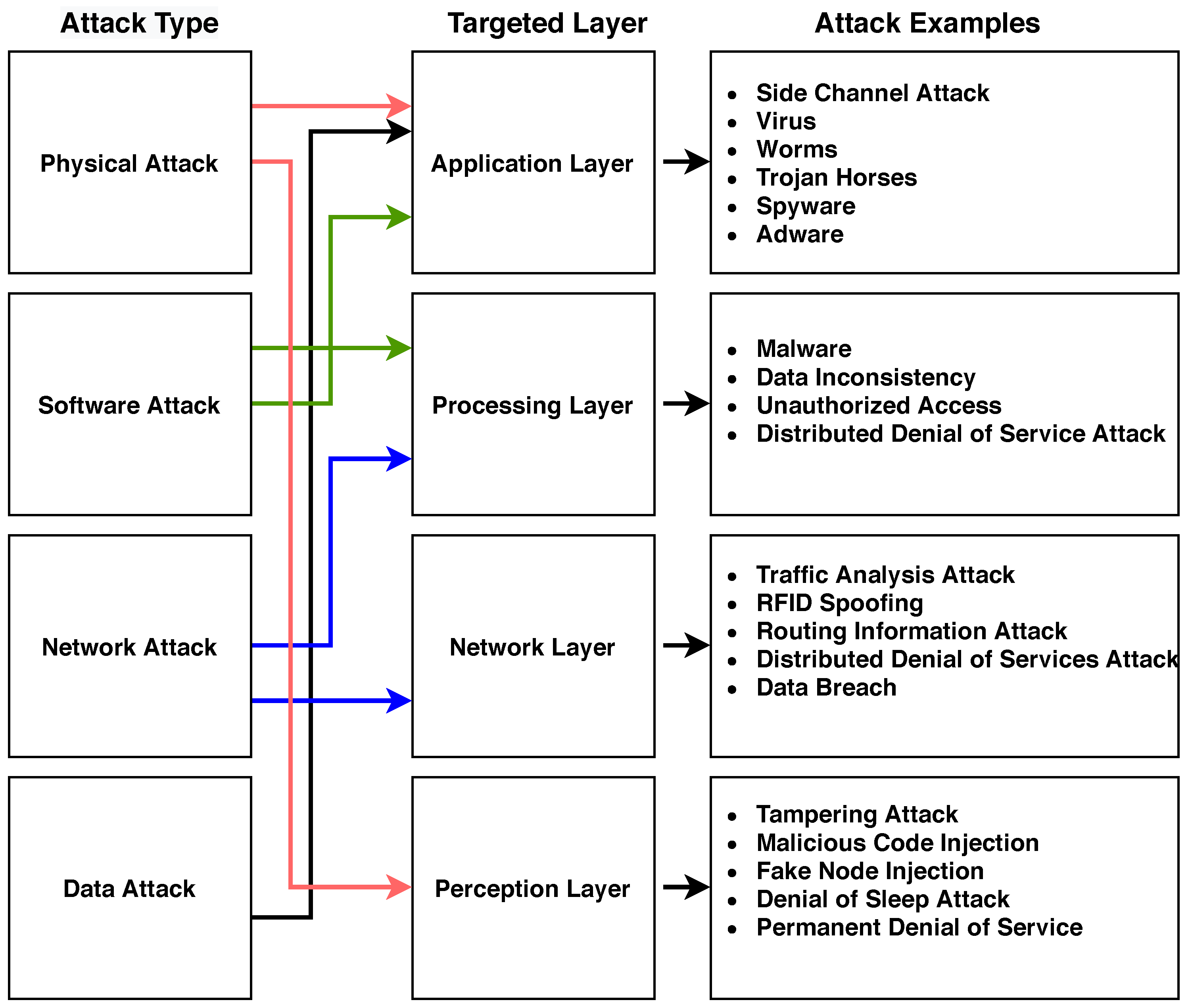

2.3. IoT Attacks

3. Related Work

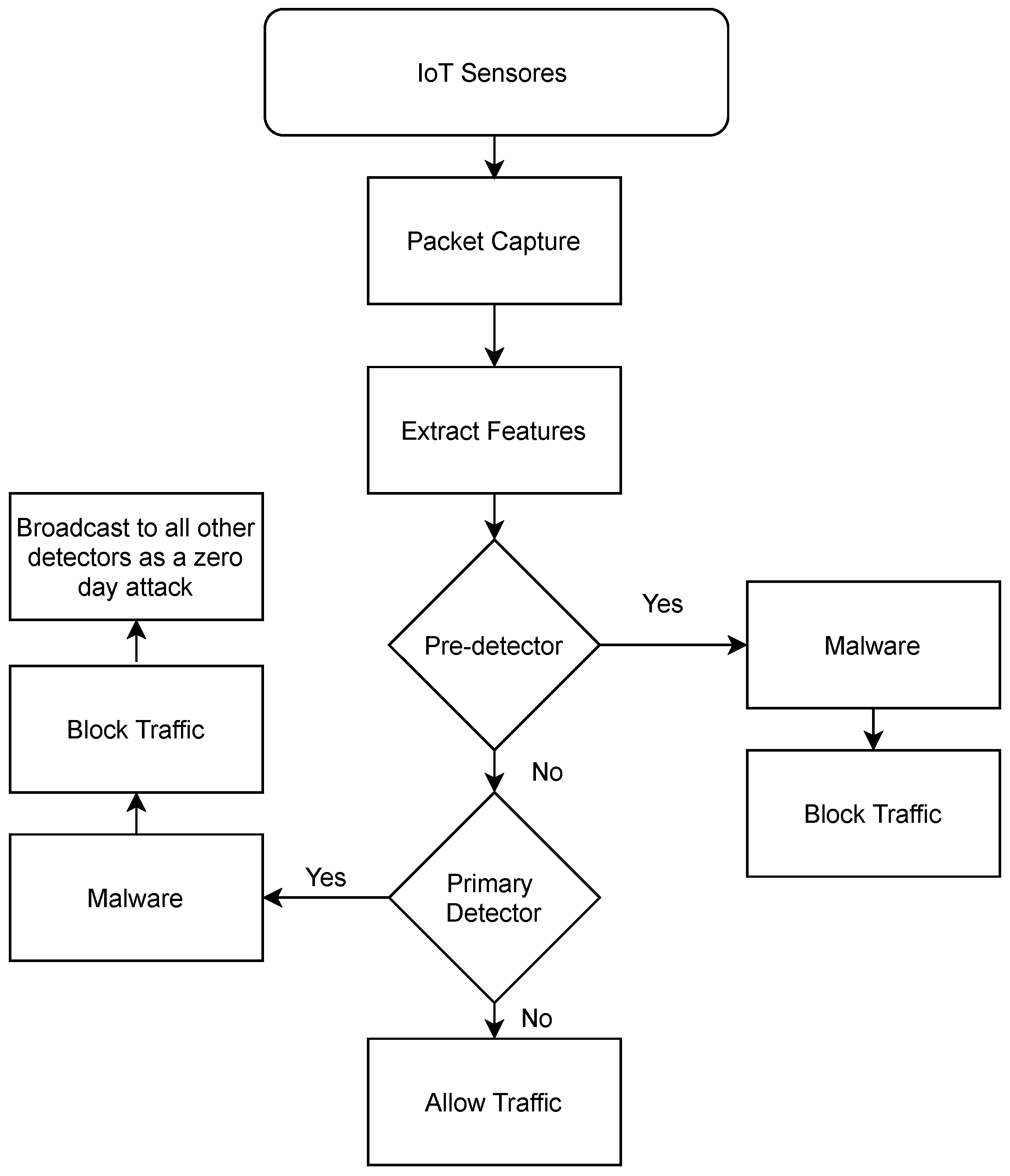

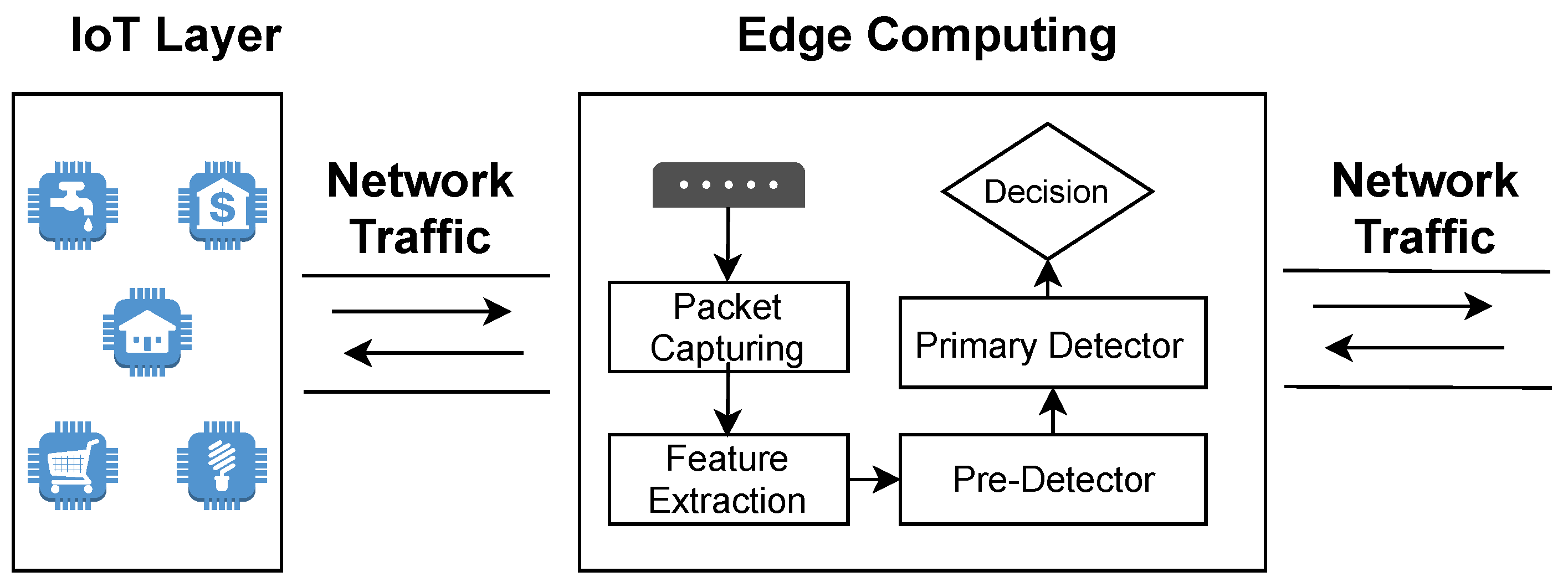

4. Proposed Method

Algorithm 1. CoLL-IoT Detection Procedures

Input: NT: captured network traffic

Output: Result: 0 (normal) or 1 (malicious)

4.1. Machine Learning

4.1.1. K-Nearest Neighbors (K-NN)

4.1.2. Random Forests

4.1.3. Logistic Regression (LR)

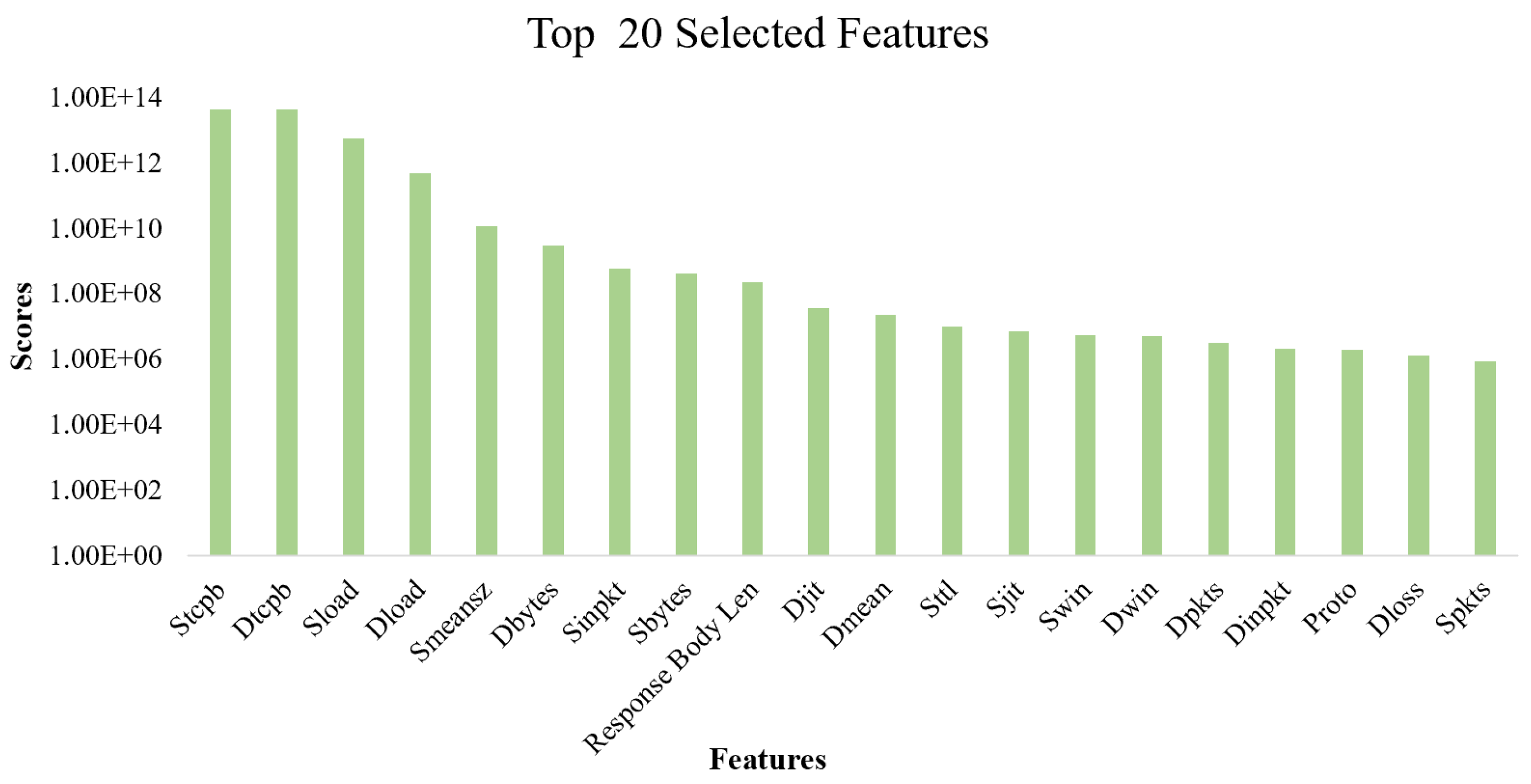

4.2. Feature Selection

4.3. Dataset

4.4. Evaluation Metrics

- Accuracy: the ratio of correctly classified samples to the total number of samples. Accuracy was calculated using Equation (3):

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \quad (3)$$

  where TP (true positive) means that the model correctly classifies a malicious sample as malicious; TN (true negative) means that the model correctly classifies a benign sample as benign; FP (false positive) means that the model incorrectly classifies a benign sample as malicious; and FN (false negative) means that the model incorrectly classifies a malicious sample as benign.

- Type I Error or FP Rate: the ratio of benign samples that are classified incorrectly to the total number of benign samples. This was calculated using Equation (4):

  $$\text{Type I Error} = \frac{FP}{FP + TN} \quad (4)$$

- Type II Error or FN Rate: the ratio of malicious samples that are classified incorrectly to the total number of malicious samples. This was calculated using Equation (5):

  $$\text{Type II Error} = \frac{FN}{FN + TP} \quad (5)$$

- F1-Score: the harmonic mean of precision and recall, which indicates how discriminative the model is. It was calculated using Equation (6):

  $$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \quad (6)$$

  where precision is the ratio of correctly classified malicious samples to all samples classified as malicious, $TP / (TP + FP)$, and recall is the ratio of correctly classified malicious samples to the total number of malicious samples, $TP / (TP + FN)$.
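The metrics defined above can be computed directly from the four confusion-matrix counts. The following is a minimal Python sketch, not the author's implementation: the function name `detection_metrics`, the helper `_ratio`, and the choice to return 0.0 for an empty denominator are assumptions made for illustration.

```python
from typing import Dict


def _ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero (an assumption)."""
    return numerator / denominator if denominator else 0.0


def detection_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute the evaluation metrics from confusion-matrix counts.

    tp: malicious samples classified as malicious
    tn: benign samples classified as benign
    fp: benign samples classified as malicious
    fn: malicious samples classified as benign
    """
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "type_i_error": _ratio(fp, fp + tn),   # FP rate
        "type_ii_error": _ratio(fn, fn + tp),  # FN rate
        "precision": precision,
        "recall": recall,
        "f1_score": _ratio(2 * precision * recall, precision + recall),
    }


if __name__ == "__main__":
    # Counts taken from the K-NN column of the results table.
    for name, value in detection_metrics(30856, 70253, 2770, 1326).items():
        print(f"{name}: {value:.4f}")
```

With the K-NN counts from the results table, this sketch gives an accuracy of roughly 96.11% and an F1-Score of roughly 93.78%.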

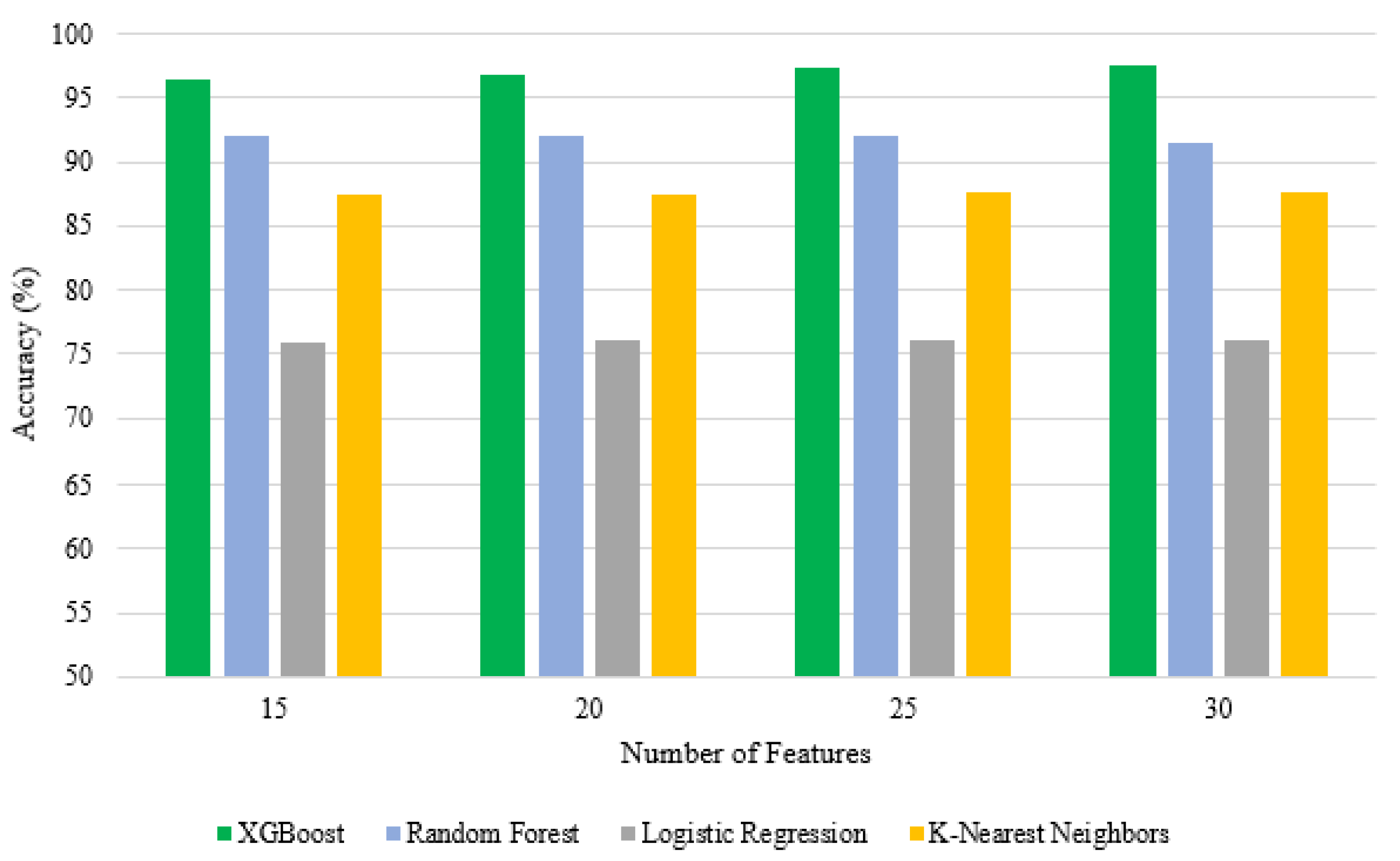

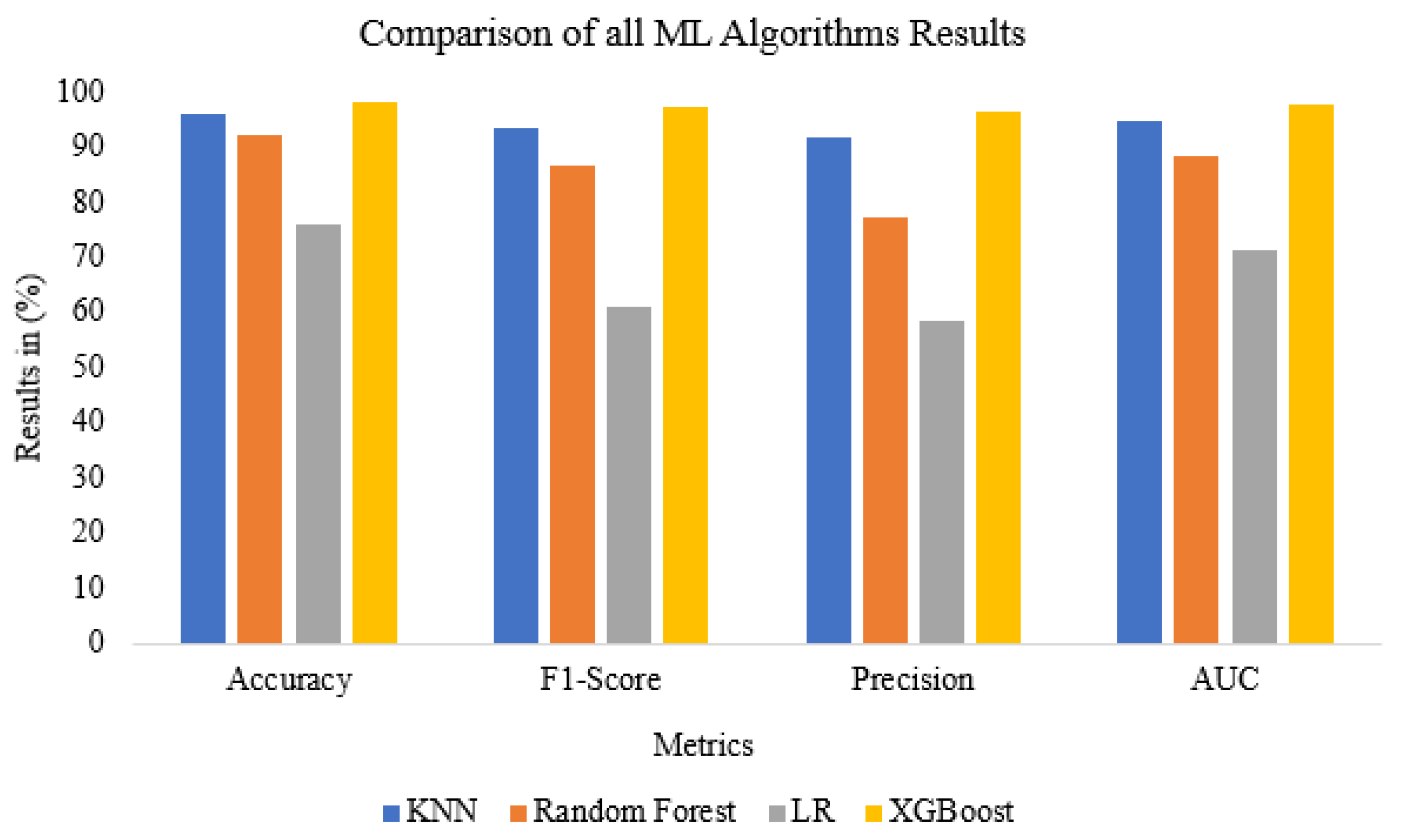

5. Results and Discussion

5.1. Results

5.2. Discussion

5.3. Hardware Resource Utilization

6. Conclusions

Funding

Conflicts of Interest

References

1. Rose, K.; Eldridge, S.; Chapin, L. The internet of things: An overview. Internet Soc. (ISOC) 2015, 80, 1–50.

2. Sengupta, J.; Ruj, S.; Bit, S.D. A Comprehensive survey on attacks, security issues and blockchain solutions for IoT and IIoT. J. Netw. Comput. Appl. 2020, 149, 102481.

3. Chen, M.; Miao, Y.; Humar, I. OPNET IoT Simulation; Springer Nature: Singapore, 2019.

4. Hassan, N.; Gillani, S.; Ahmed, E.; Yaqoob, I.; Imran, M. The Role of Edge Computing in Internet of Things. IEEE Commun. Mag. 2018, 56, 110–115.

5. Eskandari, M.; Janjua, Z.H.; Vecchio, M.; Antonelli, F. Passban IDS: An intelligent anomaly-based intrusion detection system for IoT edge devices. IEEE Internet Things J. 2020, 7, 6882–6897.

6. Alrashdi, I.; Alqazzaz, A.; Aloufi, E.; Alharthi, R.; Zohdy, M.; Ming, H. AD-IoT: Anomaly Detection of IoT Cyberattacks in Smart City Using Machine Learning. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 305–310.

7. Parker, L.R.; Yoo, P.D.; Asyhari, T.A.; Chermak, L.; Jhi, Y.; Taha, K. Demise: Interpretable deep extraction and mutual information selection techniques for IoT intrusion detection. In Proceedings of the 14th International Conference on Availability, Reliability and Security, Canterbury, UK, 26–29 August 2019; pp. 1–10.

8. Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

9. Yu, W.; Liang, F.; He, X.; Hatcher, W.G.; Lu, C.; Lin, J.; Yang, X. A Survey on the Edge Computing for the Internet of Things. IEEE Access 2018, 6, 6900–6919.

10. Satyanarayanan, M.; Bahl, P.; Caceres, R.; Davies, N. The case for VM-based cloudlets in mobile computing. IEEE Pervasive Comput. 2009, 8, 14–23.

11. Nishio, T.; Shinkuma, R.; Takahashi, T.; Mandayam, N.B. Service-oriented heterogeneous resource sharing for optimizing service latency in mobile cloud. In Proceedings of the First International Workshop on Mobile Cloud Computing & Networking, Bangalore, India, 29 July–1 August 2013; pp. 19–26.

12. Griffin, D.; Rio, M.; Simoens, P.; Smet, P.; Vandeputte, F.; Vermoesen, L.; Bursztynowski, D.; Schamel, F. Service oriented networking. In Proceedings of the 2014 European Conference on Networks and Communications (EuCNC), Bologna, Italy, 23–26 June 2014; pp. 1–5.

13. Kasongo, S.M.; Sun, Y. Performance Analysis of Intrusion Detection Systems Using a Feature Selection Method on the UNSW-NB15 Dataset. J. Big Data 2020, 7, 1–20.

14. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

15. Moustafa, N.; Slay, J. The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 data set and the comparison with the KDD99 data set. Inf. Secur. J. Glob. Perspect. 2016, 25, 18–31.

16. Brugger, T. KDD Cup’99 dataset (Network Intrusion) considered harmful. KDnuggets Newsl. 2007, 7, 15.

17. Papamartzivanos, D.; Mármol, F.G.; Kambourakis, G. Dendron: Genetic trees driven rule induction for network intrusion detection systems. Future Gener. Comput. Syst. 2018, 79, 558–574.

18. Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

19. Kolias, C.; Kambourakis, G.; Stavrou, A.; Gritzalis, S. Intrusion Detection in 802.11 Networks: Empirical Evaluation of Threats and a Public Dataset. IEEE Commun. Surv. Tutor. 2016, 18, 184–208.

20. Zhou, Y.; Cheng, G.; Jiang, S.; Dai, M. Building an efficient intrusion detection system based on feature selection and ensemble classifier. Comput. Netw. 2020, 174, 107247.

21. Rahman, M.A.; Asyhari, A.T.; Leong, L.; Satrya, G.; Tao, M.H.; Zolkipli, M. Scalable machine learning-based intrusion detection system for IoT-enabled smart cities. Sustain. Cities Soc. 2020, 61, 102324.

22. Anthi, E.; Williams, L.; Słowińska, M.; Theodorakopoulos, G.; Burnap, P. A Supervised Intrusion Detection System for Smart Home IoT Devices. IEEE Internet Things J. 2019, 6, 9042–9053.

23. Nguyen, T.D.; Marchal, S.; Miettinen, M.; Fereidooni, H.; Asokan, N.; Sadeghi, A. DÏoT: A Federated Self-learning Anomaly Detection System for IoT. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 756–767.

24. Jiang, J.C.; Kantarci, B.; Oktug, S.; Soyata, T. Federated Learning in Smart City Sensing: Challenges and Opportunities. Sensors 2020, 20, 6230.

25. Lyu, L.; Yu, H.; Yang, Q. Threats to federated learning: A survey. arXiv 2020, arXiv:2003.02133.

26. Catak, F.O.; Mustacoglu, A.F. Distributed denial of service attack detection using autoencoder and deep neural networks. J. Intell. Fuzzy Syst. 2019, 37, 3969–3979.

27. Asaithambi, S. The VirusTotal Homepage. Available online: https://www.virustotal.com/gui/ (accessed on 24 March 2021).

28. Asaithambi, S. Why, How and When to Apply Feature Selection. Available online: https://www.shorturl.at/qzEI6 (accessed on 21 September 2019).

29. Cen, L.; Gates, C.; Si, L.; Li, N. A probabilistic discriminative model for android malware detection with decompiled source code. IEEE Trans. Dependable Secur. Comput. 2015, 12, 400–412.

30. Kotsiantis, S. Supervised Machine Learning: A Review of Classification Techniques. In Proceedings of the 2007 Conference on Emerging Artificial Intelligence Applications in Computer Engineering: Real Word AI Systems with Applications in eHealth, HCI, Information Retrieval and Pervasive Technologies; IOS Press: Amsterdam, The Netherlands, 2007; pp. 3–24.

31. Chandra, B.; Gupta, M. An efficient statistical feature selection approach for classification of gene expression data. J. Biomed. Inform. 2011, 44, 529–535.

32. Scikit-Learn Machine Learning in Python. Available online: https://scikit-learn.org/stable/ (accessed on 4 March 2021).

33. Kumar, V.; Sinha, D.; Das, A.K.; Pandey, S.C.; Goswami, R.T. An integrated rule based intrusion detection system: Analysis on UNSW-NB15 data set and the real time online dataset. Clust. Comput. 2020, 23, 1397–1418.

34. Meftah, S.; Rachidi, T.; Assem, N. Network based intrusion detection using the UNSW-NB15 dataset. Int. J. Comput. Digit. Syst. 2019, 8, 478–487.

35. Godard, S. sar: Collect, Report, or Save System Activity Information. Available online: https://linux.die.net/man/1/sar (accessed on 6 February 2021).

36. Damasevicius, R.; Venckauskas, A.; Grigaliunas, S.; Toldinas, J.; Morkevicius, N.; Aleliunas, T.; Smuikys, P. LITNET-2020: An annotated real-world network flow dataset for network intrusion detection. Electronics 2020, 9, 800.

| Metrics | Front-End | Near-End | Far-End |
|---|---|---|---|
| Deployment | WiFi access points, cellular base station, or radio access network | Routers, access points, or mobile nodes | Cloud data centers or local data centers |
| Network | WiFi, LTE, or any other technologies | Multiple wireless access points including WiFi, 3G, or LTE | Enterprise networks and WiFi hotspots |
| Example | Cloudlet [10] | Mobile Cloud [11] | FUSION [12] |

| Tool | Approach | Datasets | Algorithm | Limitations |
|---|---|---|---|---|
| [6] | ML | UNSW-NB15 | XGBoost | High latency as traffic is analyzed in the fog layer |
| [13] | ML | UNSW-NB15 | XGBoost | Low detection rate |
| [15] | ML | UNSW-NB15, KDD99 | DT | Low detection rate |
| [17] | ML | KDD99, UNSW-NB15, NSL-KDD | DT | Low detection rate |
| [7] | ML | AWID | LR | Time consuming |
| [20] | ML | KDD99 | RF | Does not consider typical network attacks |
| [22] | ML | NA | J48 | Considers only five types of attacks |
| [23] | FL | NA | GRU | Vulnerable to poisoning and inference attacks |
| [26] | ANN | UNSW-NB15 | Autoencoder | High error rate if features are independent of each other |

| Feature | Description | Feature | Description |
|---|---|---|---|
| dmeansz | The mean size of raw packets transmitted by the destination | swin | The frame value of TCP at the source |
| proto | Protocol of the transaction | dwin | The frame value of TCP at the destination |
| sbytes | The size from the source to the destination | stcpb | The base sequence number of source TCP |
| dbytes | The size from the destination to the source | dtcpb | The base sequence number of destination TCP |
| dloss | Number of packets dropped or delegated | smeansz | The mean size of raw packets transmitted by the source |
| sload | Source bits/sec. | response body len | The size of the response by the server |
| dload | Destination bits/sec. | sjit | Source jitter (ms) |
| spkts | Number of packets at the source | djit | Destination jitter (ms) |
| dpkts | Number of packets at the destination | sintpkt | Arrival time between source layers (ms) |
| dintpkt | Arrival time between destination layers (ms) | sttl | Source-to-destination time-to-live value |
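To illustrate how the selected features listed in the table above might be applied to a captured flow, the following minimal Python sketch projects a flow record onto the 20 selected features in a fixed order. It is an assumption-laden illustration rather than the paper's code: the record is assumed to be a dictionary keyed by feature name, `to_feature_vector` and `SELECTED_FEATURES` are hypothetical names, and the key `response_body_len` is a hypothetical spelling of the "response body len" feature.

```python
from typing import List, Mapping

# Feature names as listed in the feature table; the exact dictionary keys
# (in particular "response_body_len") are assumptions for this sketch.
SELECTED_FEATURES: List[str] = [
    "dmeansz", "proto", "sbytes", "dbytes", "dloss",
    "sload", "dload", "spkts", "dpkts", "dintpkt",
    "swin", "dwin", "stcpb", "dtcpb", "smeansz",
    "response_body_len", "sjit", "djit", "sintpkt", "sttl",
]


def to_feature_vector(record: Mapping[str, object]) -> List[object]:
    """Keep only the selected features of a flow record, in a fixed order.

    Raises KeyError if the record lacks any selected feature, so that an
    incomplete record is never silently passed to a classifier.
    """
    missing = [name for name in SELECTED_FEATURES if name not in record]
    if missing:
        raise KeyError(f"record is missing features: {missing}")
    return [record[name] for name in SELECTED_FEATURES]
```

Fixing the feature order in one place keeps training and detection consistent, since most classifiers identify features by position rather than by name.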

| Attack | Number of Records |
|---|---|
| DoS | 1167 |
| Fuzzers | 5051 |
| Backdoors | 534 |
| Exploits | 5409 |
| Analysis | 526 |
| Generic | 7522 |
| Worms | 24 |
| Shellcode | 223 |
| Reconnaissance | 1759 |

| Classifier | Best Parameters |
|---|---|
| K-NN | metric_params = None, n_jobs = 8, n_neighbors = 5, p = 2, weights = 'uniform' |
| Random Forests | n_estimators = 8, max_depth = None, max_features = 'auto', max_leaf_nodes = None |
| LR | intercept_scaling = 1, max_iter = 100 |
| XGBoost | colsample_bytree = 1, learning_rate = 0.1, max_depth = 3, n_estimators = 100, n_jobs = 1 |

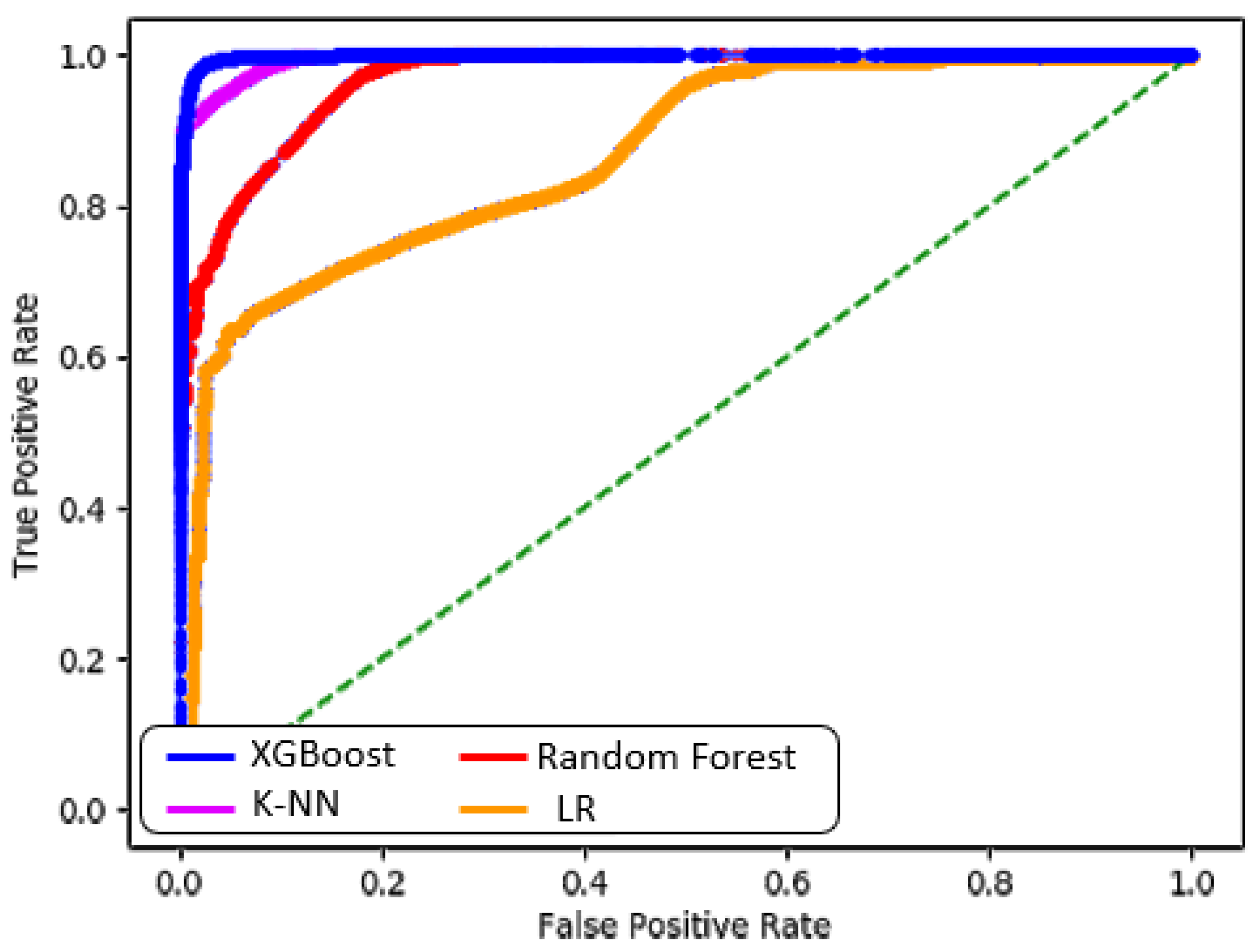

| Metrics | K-NN | Random Forests | LR | XGBoost |
|---|---|---|---|---|
| True Positives | 30,856 | 25,985 | 19,655 | 32,413 |
| True Negatives | 70,253 | 71,188 | 60,517 | 71,053 |
| False Positives | 2770 | 7641 | 13,971 | 1213 |
| False Negatives | 1326 | 391 | 11,062 | 526 |
| Sensitivity (%) | 95.88 | 98.52 | 63.99 | 98.40 |
| Specificity (%) | 96.21 | 90.31 | 81.24 | 98.32 |
| Precision (%) | 91.76 | 77.28 | 58.45 | 96.39 |
| Type I Error (%) | 3.76 | 9.69 | 18.76 | 3.61 |
| Type II Error (%) | 4.12 | 1.48 | 36.01 | 1.60 |
| Accuracy (%) | 96.11 | 92.37 | 76.21 | 98.35 |
| F1-Score (%) | 93.78 | 86.61 | 61.09 | 97.39 |
| AUC (%) | 94.95 | 88.36 | 71.49 | 97.82 |
| Time Complexity | | | | |
| Space Complexity | | | | |

| Existing Tools | Accuracy (%) | F1-Score (%) | Precision (%) | Type I Error (%) |
|---|---|---|---|---|
| Kasongo and Sun [13] | 90.85 | 88.45 | 80.33 | NA |
| Moustafa and Slay [15] | 85.56 | NA | NA | 15.78 |
| Kumar et al. [33] | 84.83 | NA | NA | 2.01 |
| Papamartzivanos et al. [17] | 84.33 | 48.81 | NA | 2.61 |
| Meftah et al. [34] | 82.11 | NA | NA | NA |
| Catak and Mustacoglu [26] | 97.44 | 89.85 | 89.24 | NA |
| CoLL-IoT | 98.35 | 97.39 | 96.39 | 3.61 |

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alshahrani, H.M. CoLL-IoT: A Collaborative Intruder Detection System for Internet of Things Devices. Electronics 2021, 10, 848. https://doi.org/10.3390/electronics10070848
