Data-Driven Construction of User Utility Functions from Radio Connection Traces in LTE

Abstract

1. Introduction

2. Characterization of Quality of Experience

3. Trace Collection Process

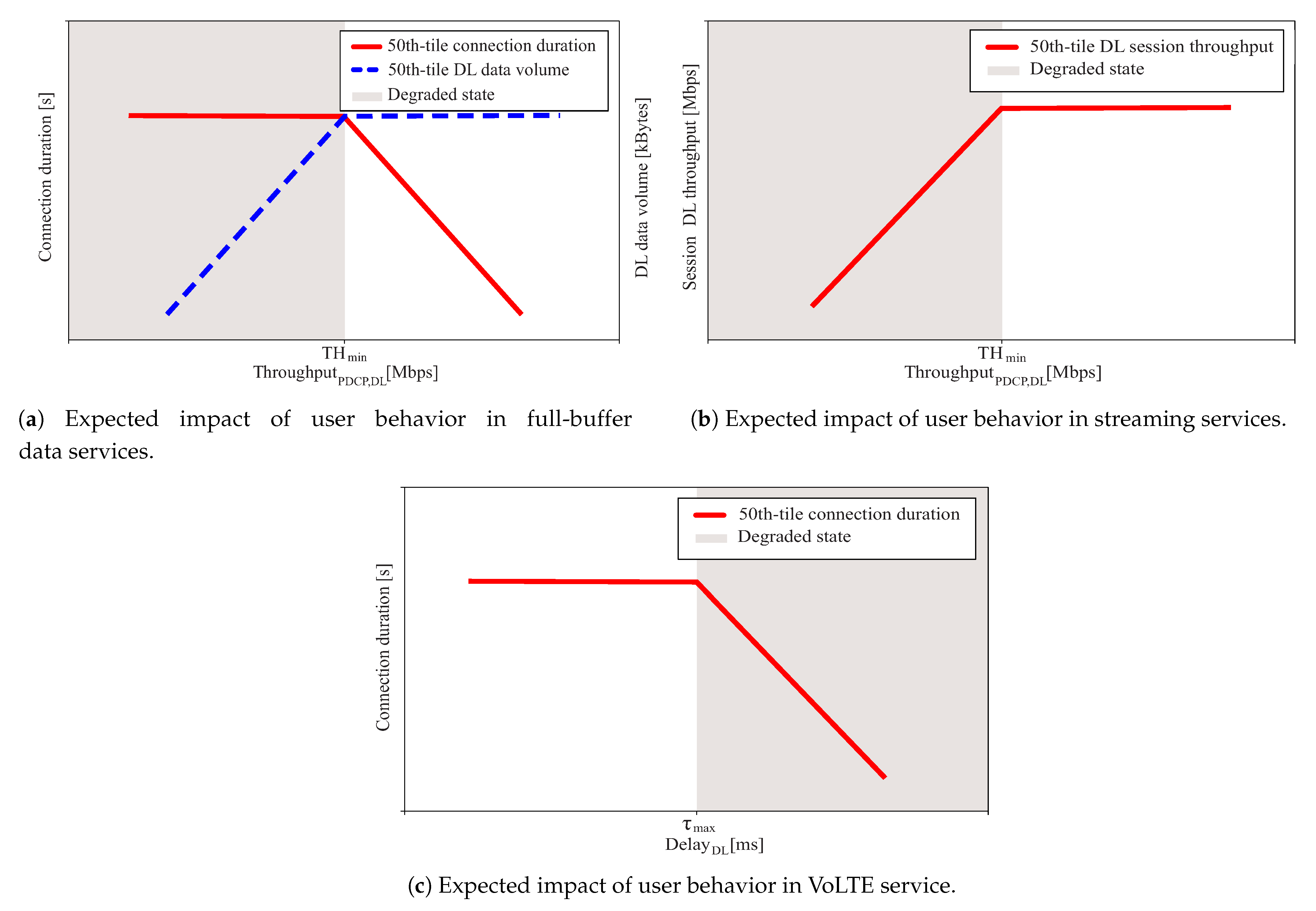

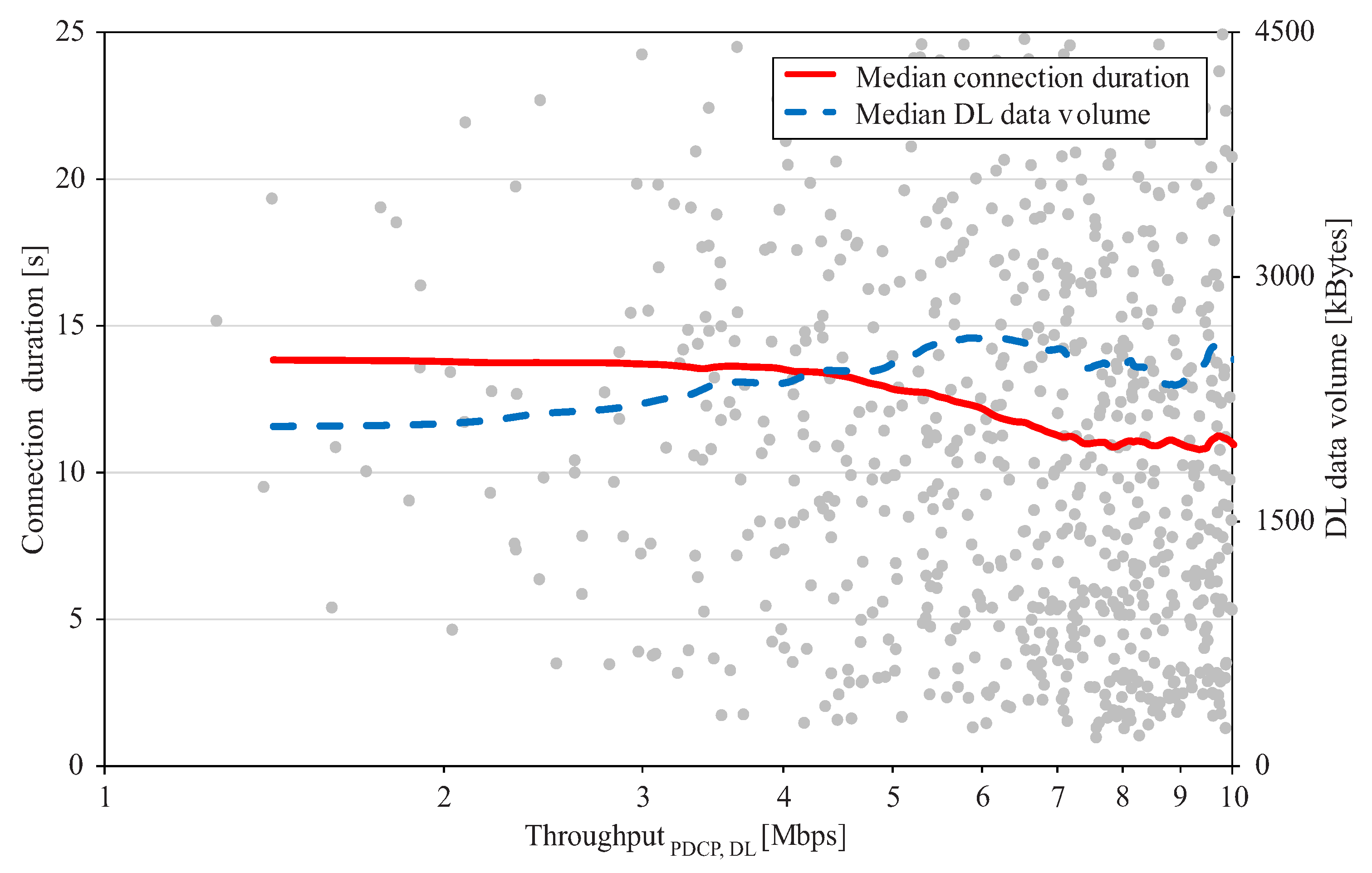

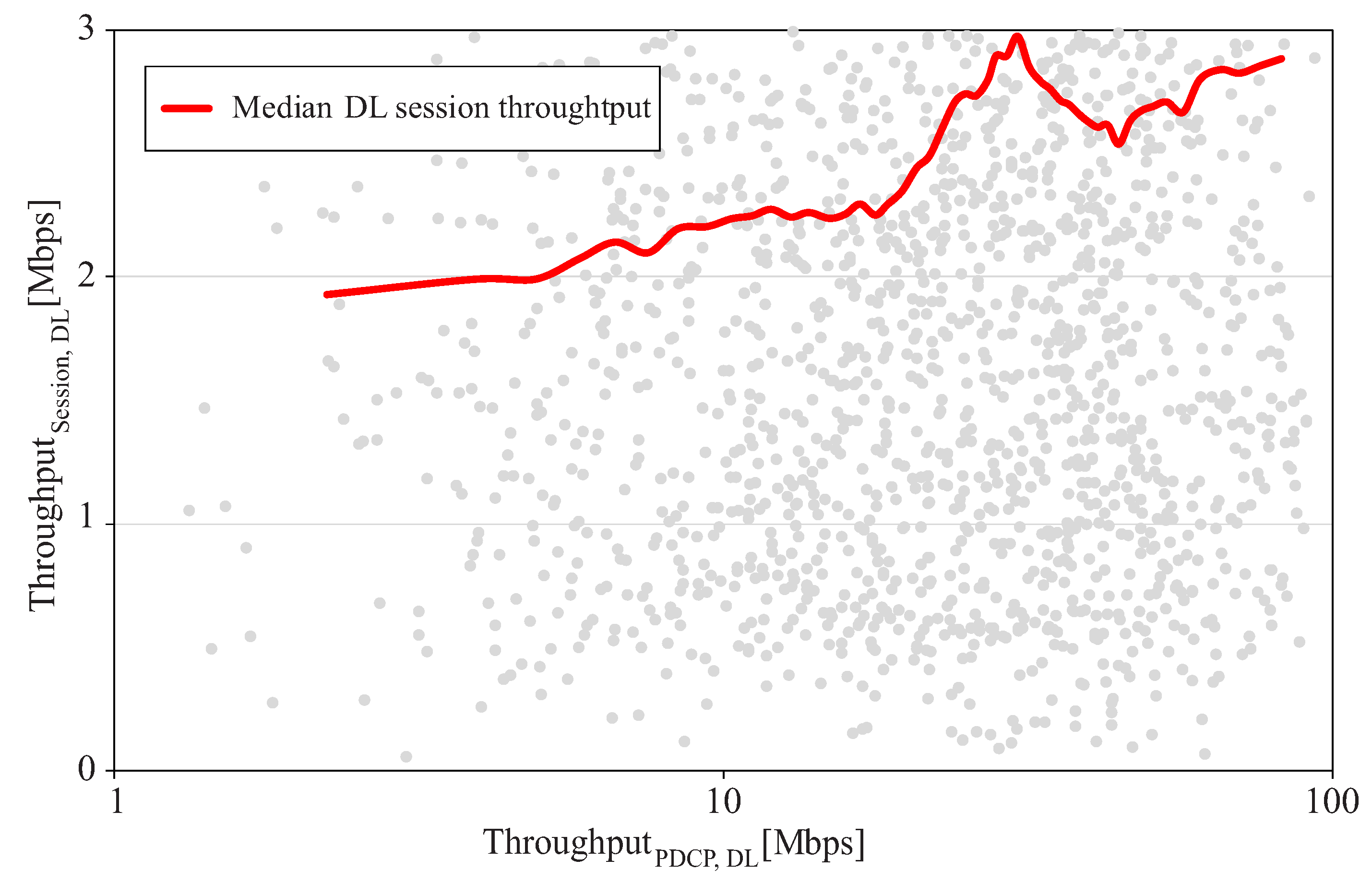

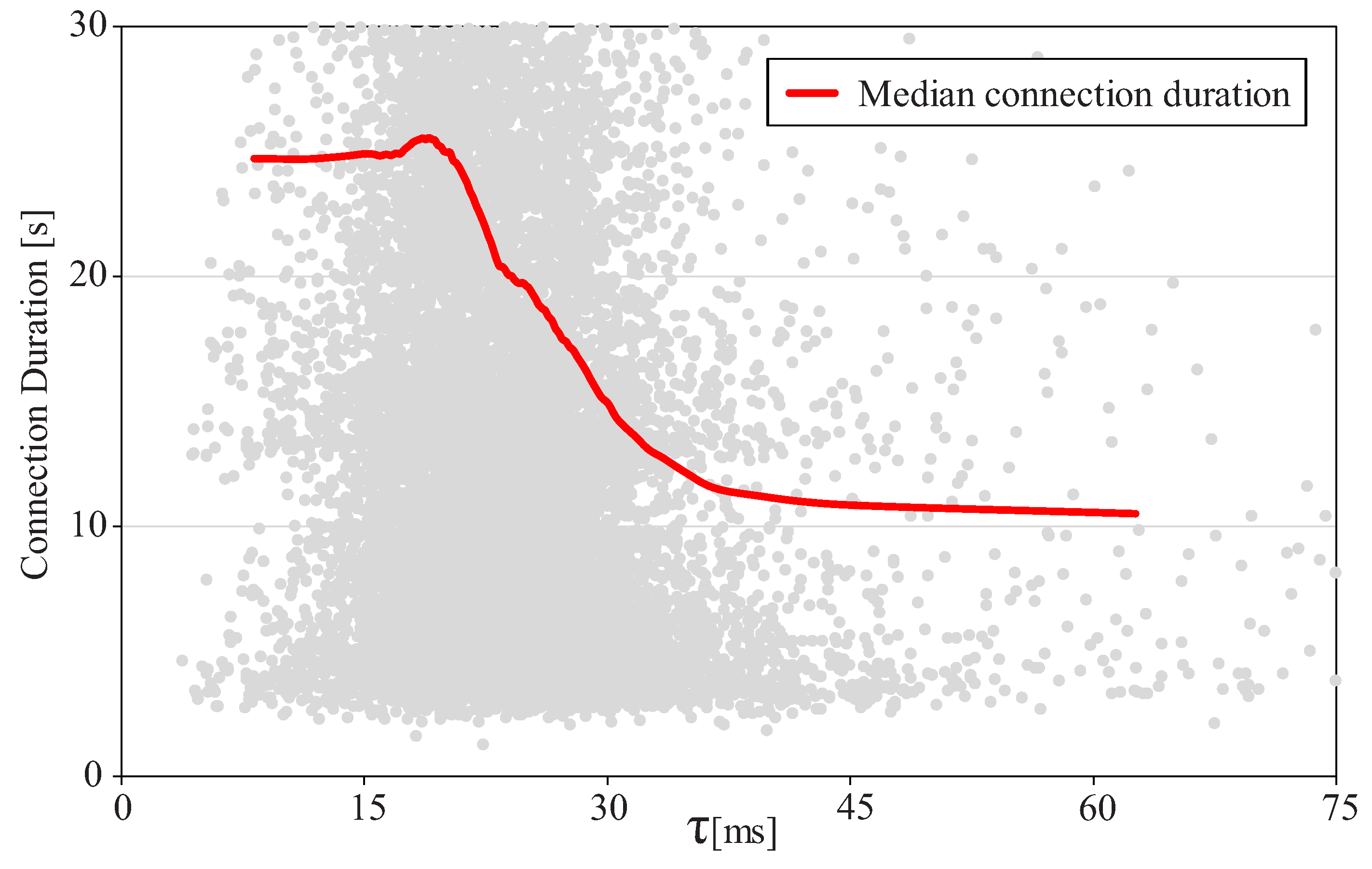

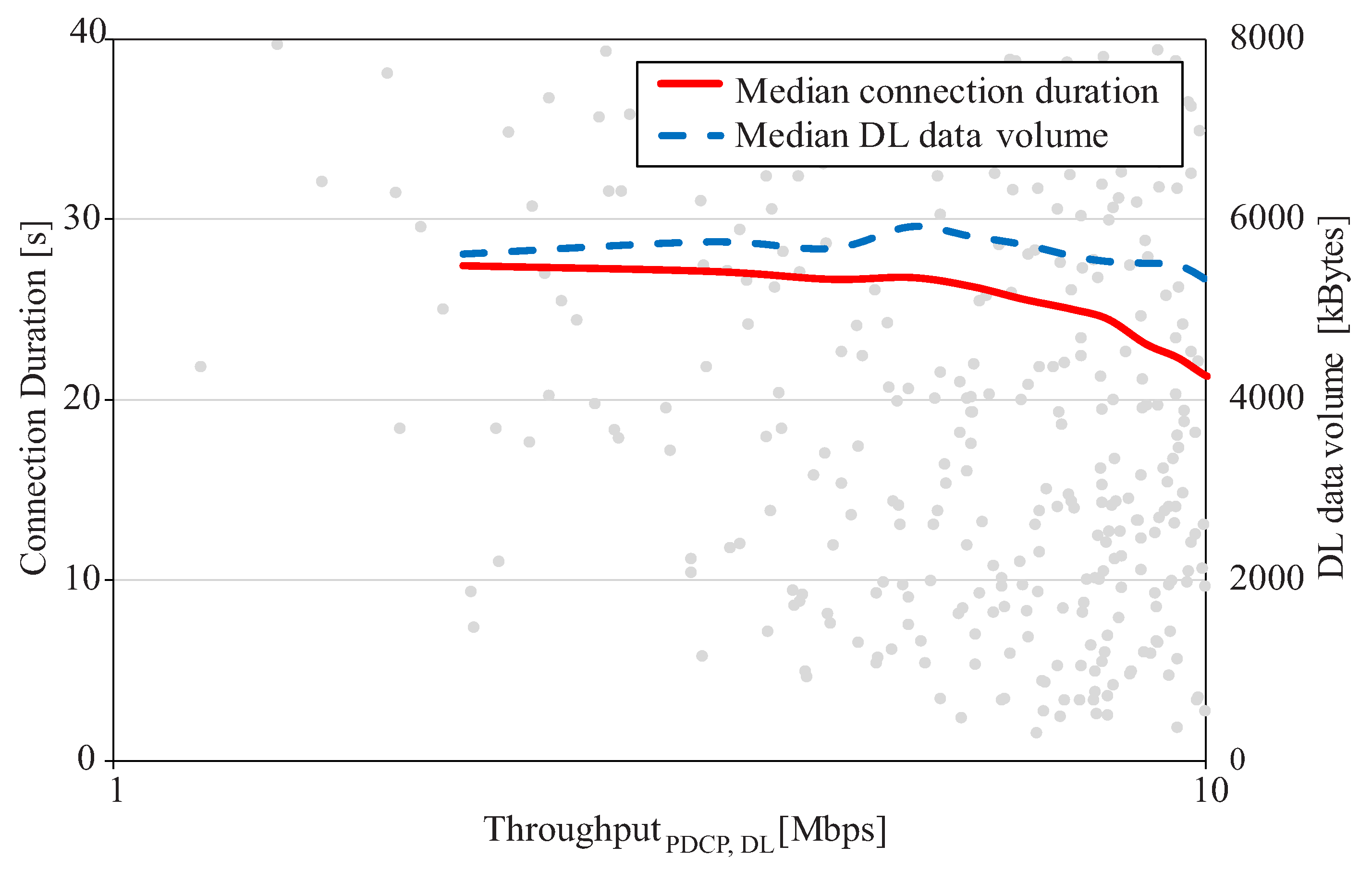

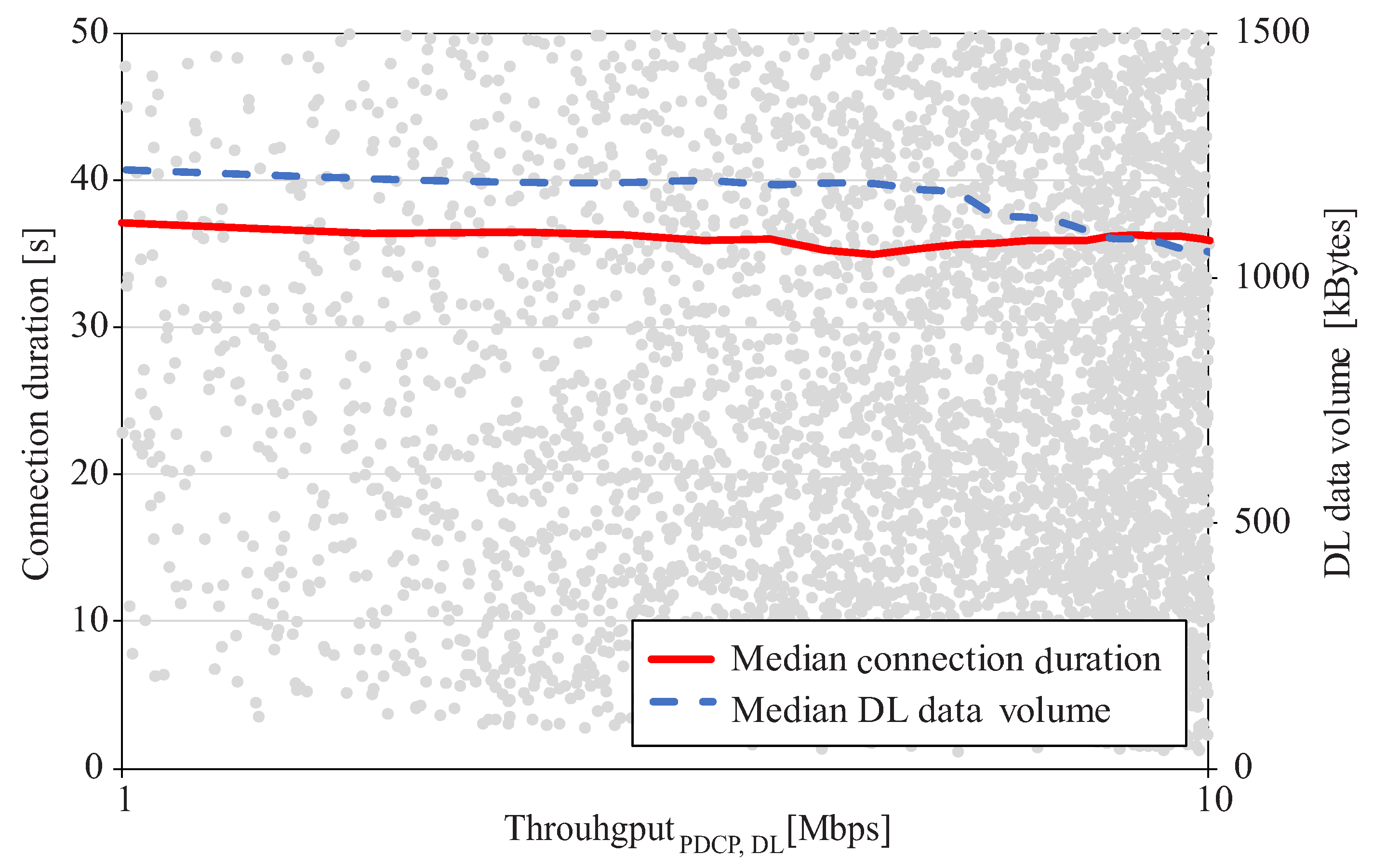

4. Estimation of QoS Thresholds on a Per-Service Basis

4.1. Step 1: Classification of Connection Traces

- The RRC connection time;

- The total DL traffic volume at the Packet Data Convergence Protocol (PDCP) level;

- The UL traffic volume ratio [%];

- The ratio of the DL traffic volume transmitted in the last TTIs;

- The DL activity ratio, computed as the ratio between the number of active TTIs and the effective duration of the connection;

- The session DL throughput (in bps).
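The per-connection features listed above can be illustrated with a minimal Python sketch. It rests on several assumptions that should be read as such: the `ConnectionTrace` record and its field names are hypothetical (they are not fields of any real trace format), and the exact formulas for the UL traffic volume ratio, the last-TTI ratio and the session DL throughput are assumed here (UL share of total volume, last-TTI share of DL volume, and DL bits over effective duration, respectively). Only the 1 ms LTE TTI duration is a standard value.

```python
from dataclasses import dataclass

TTI_S = 1e-3  # LTE TTI duration: 1 ms


@dataclass
class ConnectionTrace:
    """Hypothetical per-connection record; field names are illustrative only."""
    rrc_time_s: float          # RRC connection time [s]
    dl_bytes: float            # total DL volume at PDCP level [bytes]
    ul_bytes: float            # total UL volume at PDCP level [bytes]
    dl_last_tti_bytes: float   # DL volume transmitted in the last TTIs [bytes]
    active_dl_ttis: int        # number of TTIs with DL data scheduled
    effective_ttis: int        # effective connection duration [TTIs]


def _safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is not positive."""
    return num / den if den > 0 else 0.0


def connection_features(t: ConnectionTrace) -> dict:
    """Compute the six classification features for one connection.

    ASSUMED formulas (illustrative, not taken from the paper):
      UL ratio [%]        = 100 * UL / (UL + DL)
      last-TTI ratio [%]  = 100 * DL_last_TTIs / DL
      DL activity ratio   = active DL TTIs / effective duration in TTIs
      session DL thr. [bps] = DL bits / effective duration in seconds
    """
    return {
        "rrc_time_s": t.rrc_time_s,
        "dl_volume_bytes": t.dl_bytes,
        "ul_ratio_pct": 100.0 * _safe_div(t.ul_bytes, t.ul_bytes + t.dl_bytes),
        "dl_last_tti_ratio_pct": 100.0 * _safe_div(t.dl_last_tti_bytes, t.dl_bytes),
        "dl_activity_ratio": _safe_div(t.active_dl_ttis, t.effective_ttis),
        "session_dl_throughput_bps": _safe_div(8.0 * t.dl_bytes, t.effective_ttis * TTI_S),
    }


# Usage with made-up numbers (not measured data)
example = ConnectionTrace(rrc_time_s=10.0, dl_bytes=1_000_000, ul_bytes=250_000,
                          dl_last_tti_bytes=50_000, active_dl_ttis=400,
                          effective_ttis=2_000)
feats = connection_features(example)
```

Returning 0.0 for connections with no traffic or zero effective duration is one possible convention chosen for the sketch; how such degenerate connections are actually handled before classification is not specified here.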

4.2. Step 2: Estimation of Minimum QoS Thresholds

5. Performance Assessment

5.1. Analysis Set-Up

5.2. Results

5.3. Implementation Issues

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BDA | Big Data Analytics |
| CDR | Charging Data Record |
| CEM | Customer Experience Management |
| CM | Configuration Management |
| CRM | Customer Relationship Management |
| CTF | Configuration Trace File |
| CTR | Cell Traffic Recording |
| DL | Downlink |
| DTF | Data Trace File |
| eNB | evolved Node B |
| IMSI | International Mobile Subscriber Identity |
| IoT | Internet of Things |
| KPI | Key Performance Indicator |
| LTE | Long Term Evolution |
| MAC | Medium Access Control |
| ML | Machine Learning |
| MOS | Mean Opinion Score |
| OSS | Operations Support System |
| PDCP | Packet Data Convergence Protocol |
| PM | Performance Management |
| QCI | QoS Class Identifier |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| RLC | Radio Link Control |
| ROP | Reporting Output Period |
| RRC | Radio Resource Control |
| TTI | Transmission Time Interval |
| UE | User Equipment |
| UETR | User Equipment Traffic Recording |
| UL | Uplink |
| VoIP | Voice over IP |
| VoLTE | Voice over LTE |



© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


García, A.J.; Gijón, C.; Toril, M.; Luna-Ramírez, S. Data-Driven Construction of User Utility Functions from Radio Connection Traces in LTE. Electronics 2021, 10, 829. https://doi.org/10.3390/electronics10070829
