A Survey on Deep Learning Based Approaches for Scene Understanding in Autonomous Driving

Abstract

1. Introduction

2. Basic CNN Models

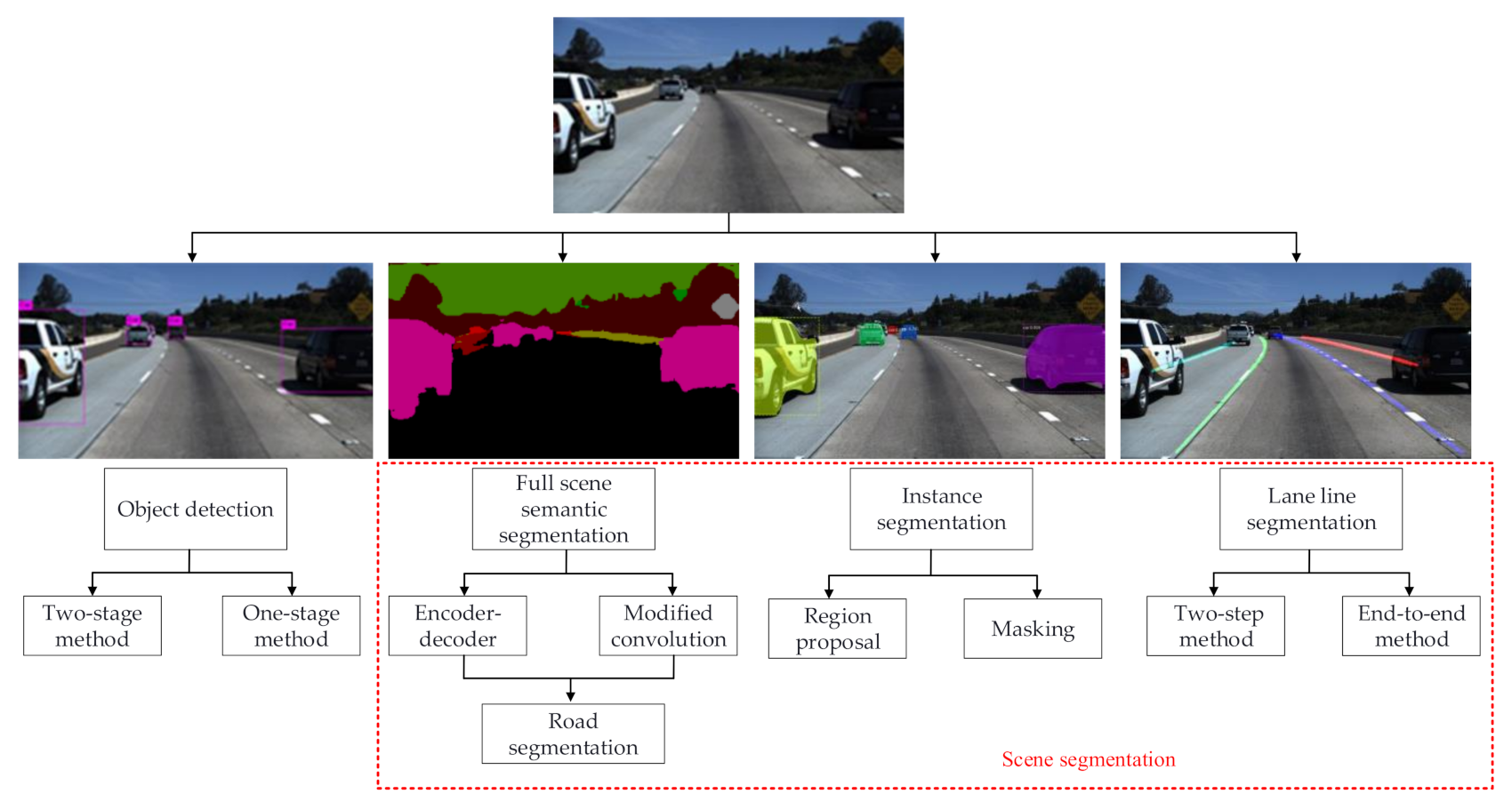

3. Scene Understanding in Autonomous Driving

3.1. Object Detection

3.1.1. Two-Stage Method

3.1.2. One-Stage Method

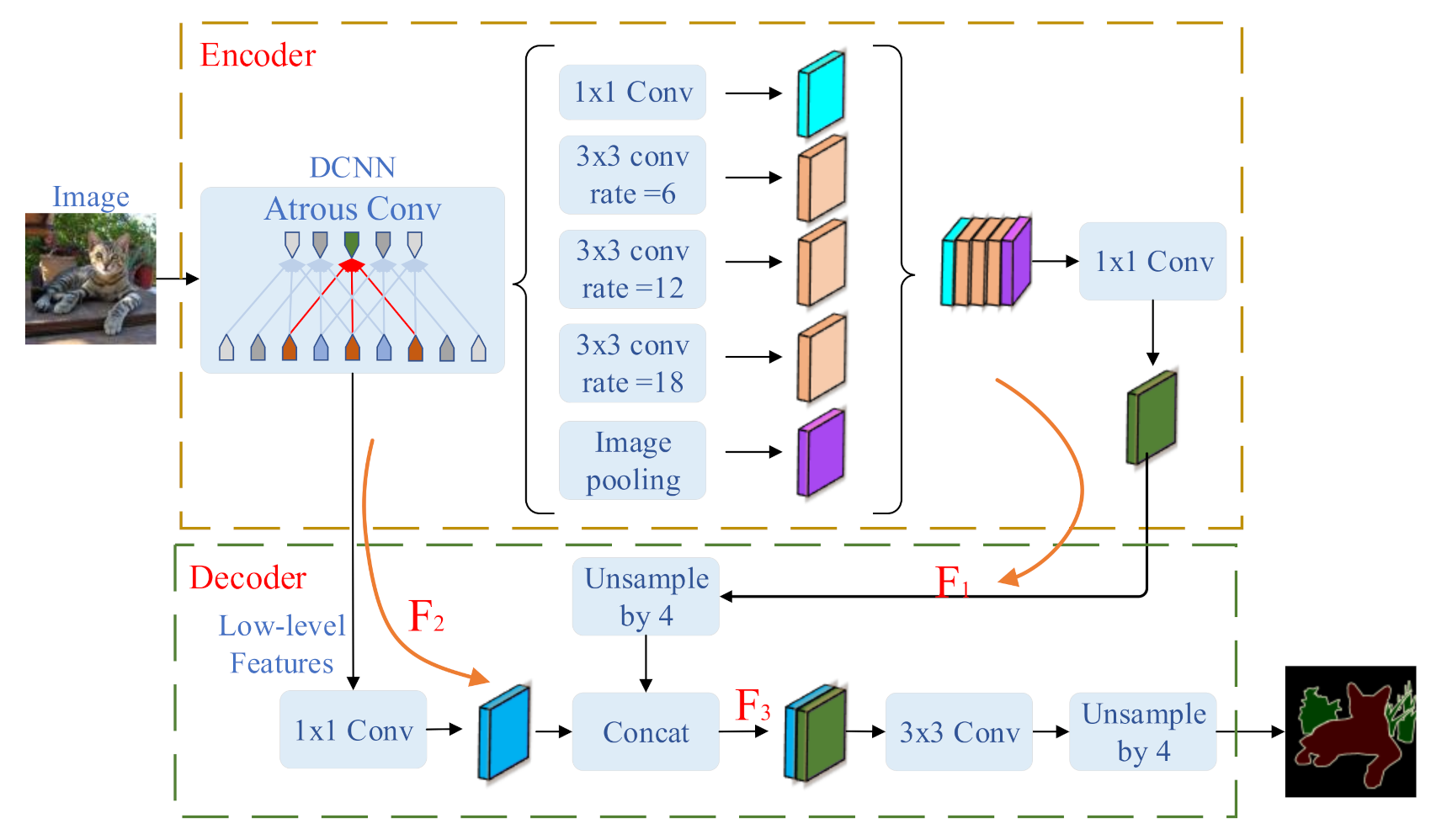

3.2. Full-Scene Semantic Segmentation

3.2.1. Encoder–Decoder Structure Models

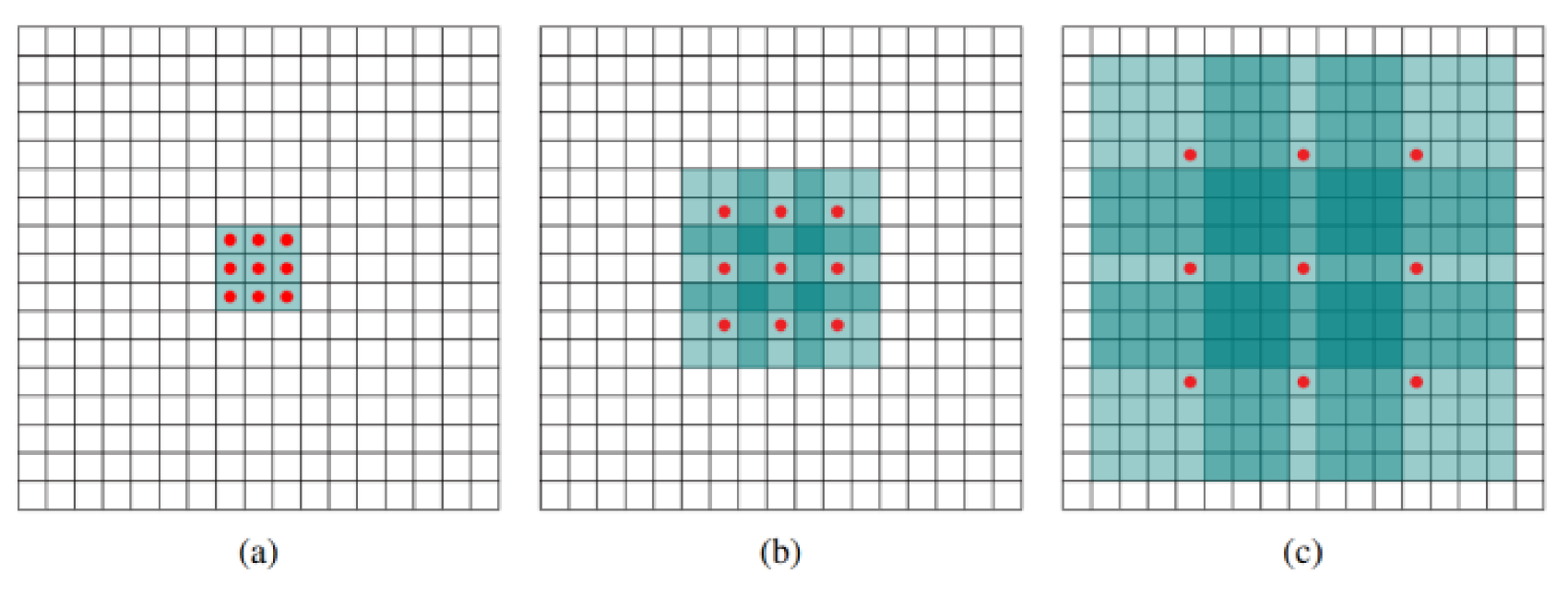

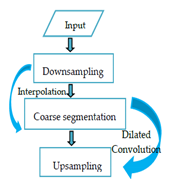

3.2.2. Modified Convolution Structure Models

3.2.3. Road Segmentation

3.3. Instance Segmentation

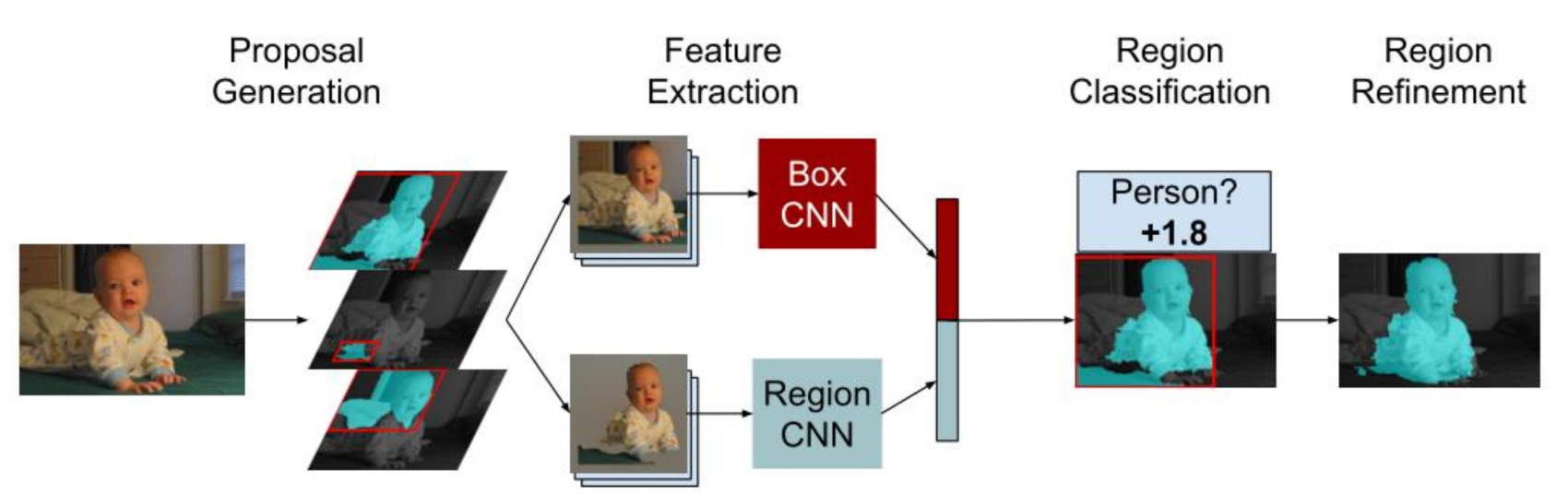

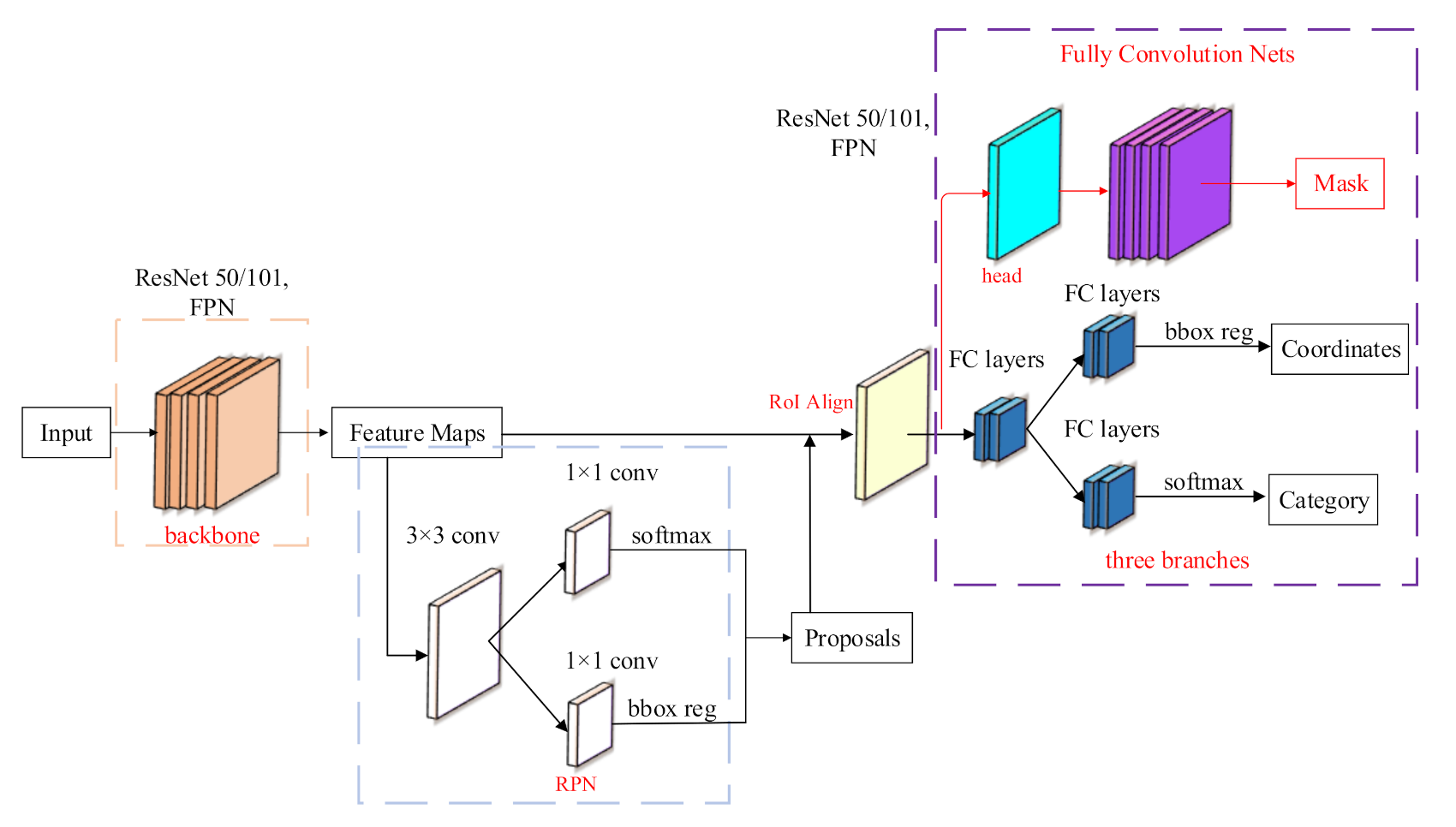

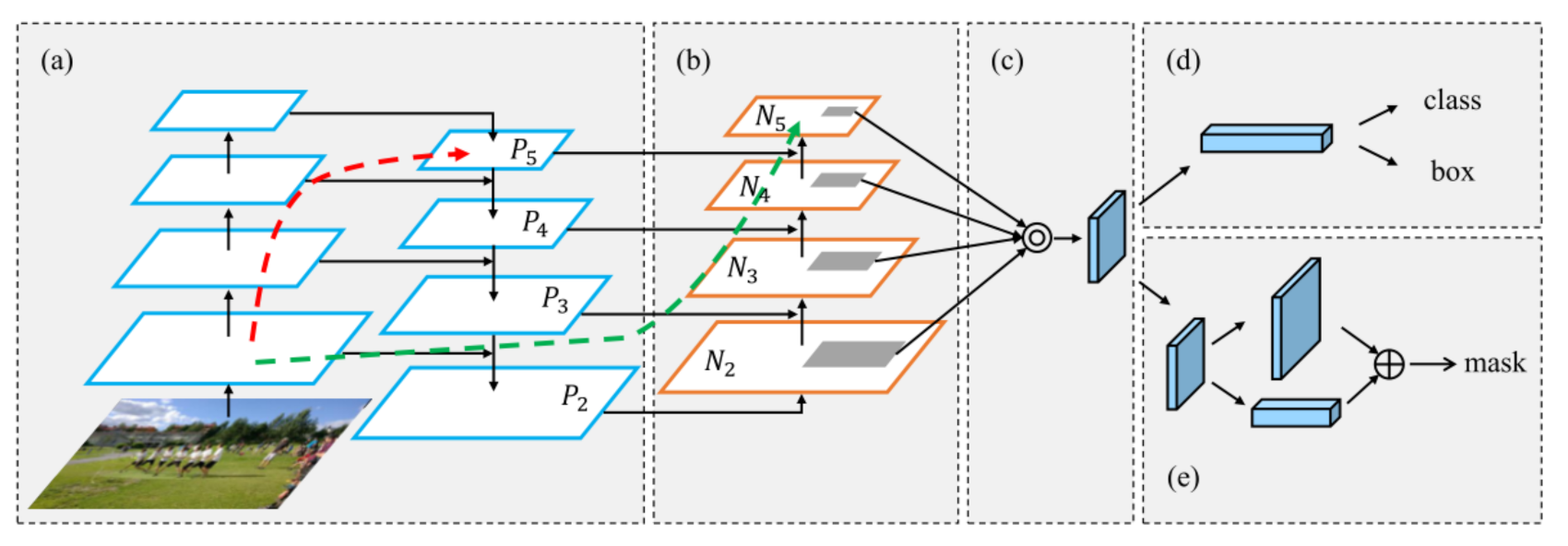

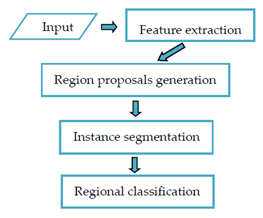

3.3.1. Region Proposal-Based Method

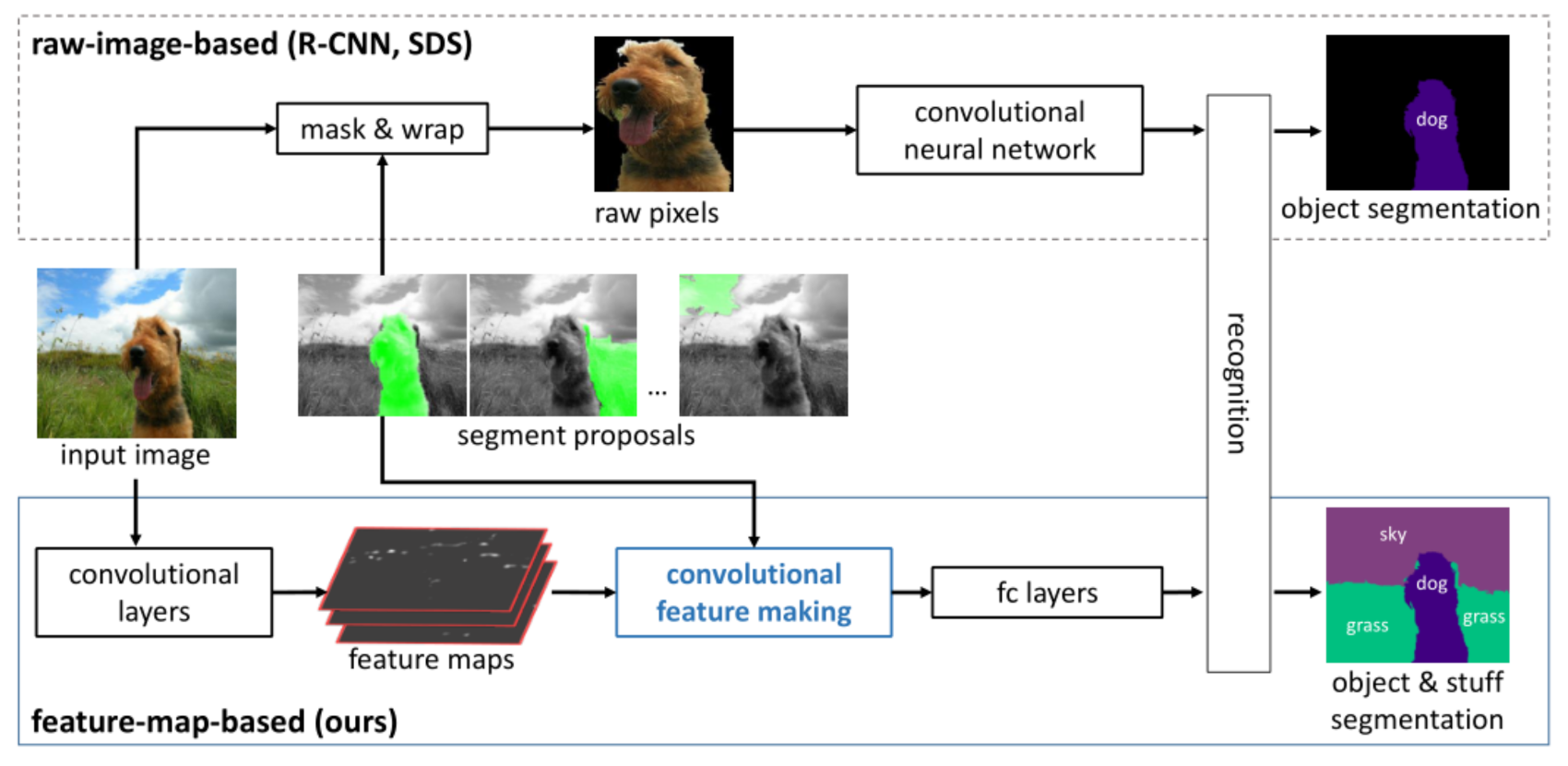

3.3.2. Masking-Based Method

3.4. Lane Line Segmentation

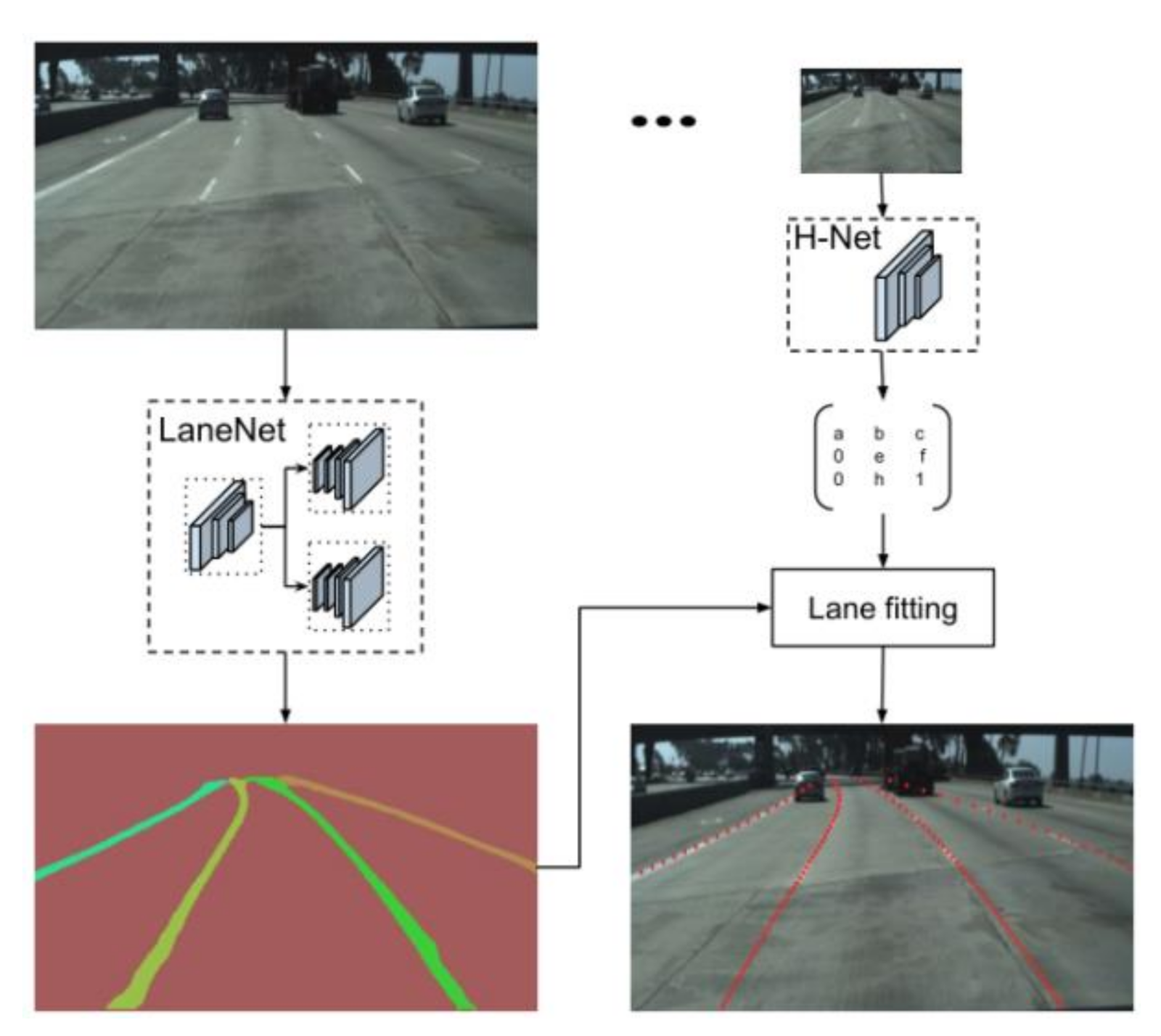

3.4.1. Two-Step Method

3.4.2. End-to-End Method

4. Datasets and Evaluation Criteria

4.1. Datasets

- CamVid [97], the Cambridge-driving Labeled Video Database, was the first video collection with semantic labels. It provides 701 labeled images covering 32 semantic categories. Most of the videos were recorded with a fixed-position camera, which partly met the need for experimental data. However, compared with datasets released in recent years, it has fewer labels and less complete annotation;

- The KITTI dataset [63] was jointly created by the Karlsruhe Institute of Technology in Germany and the Toyota Technological Institute at Chicago. It is one of the most widely used datasets in the field of autonomous driving and covers object detection, semantic segmentation, and object tracking, among other tasks. It consists of 389 pairs of stereo images and optical flow maps, a 39.2 km visual odometry sequence, 400 pixel-level segmented maps, and 15,000 traffic scene images labeled with bounding boxes;

- The Cityscapes dataset [62] comprises a large, diverse set of stereo video sequences recorded in the streets of 50 different cities. It defines 30 visual classes for annotation, which are grouped into eight categories. Of these images, 5000 have high-quality, pixel-level annotations, and 20,000 additional images have coarse annotations. Researchers increasingly use Cityscapes to evaluate algorithm performance in the field of autonomous driving;

- The Mapillary Vistas dataset [98], or Mapillary, is a large-scale, street-level image dataset released in 2017. It has a total of 25,000 high-resolution color images annotated with 66 categories, 37 of which carry instance-specific labels. Objects are annotated densely and finely with polygons. The images come from all over the world and were captured under various conditions, including different weather, seasons, and times of day;

- BDD100K [99] is a large-scale, highly diverse self-driving dataset released by UC Berkeley in 2019. It includes a total of 100,000 videos of complex scenes under different weather conditions and times of day, each about 40 seconds long. It is divided into 10 categories with about 1.84 million calibration frames. It also provides 100,000 images of real driving scenes, both high-definition and blurred, covering different weather, scenes, and times of day; these are split into 70,000 training images, 20,000 test images, and 10,000 validation images;

- The ApolloScape open dataset [100], or ApolloScape, was published by Baidu in 2018 as a semantic segmentation dataset specifically for autonomous driving scenarios. In data volume, annotation accuracy, and scene complexity, it exceeds datasets such as KITTI, Cityscapes, and BDD100K. Its labeling is much larger and richer, including holistic semantic dense point clouds for each site, stereo, per-pixel semantic labeling, lane mark labeling, instance segmentation, 3D car instances, and highly accurate locations for every frame in driving videos from multiple sites, cities, and times of day;

- The TuSimple lane dataset [86] originated in June 2018, when TuSimple held a lane detection competition based on camera image data and later released part of the data. The dataset consists of 3626 images. The annotations do not distinguish between lane line categories. Each lane line is represented as a sequence of point coordinates rather than as a lane line region;

- The Caltech dataset [101] is a dataset on lane lines published by the California Institute of Technology in 2008. It includes 1225 road pictures taken at different locations during the day;

- The CULane dataset [85] is a large-scale, challenging dataset for academic research on traffic lane detection, collected by the Chinese University of Hong Kong in 2017. It consists of more than 55 hours of video collected by six different vehicles, from which 133,235 frames were extracted. These are split into 88,880 training images, 9675 validation images, and 34,680 test images.

Currently, KITTI is the mainstream dataset for the object detection task, and many strong models are continually compared on it. Cityscapes is the mainstream dataset for the semantic segmentation task; it provides fine, easy-to-use pixel-level annotations for each category in the scene. The TuSimple lane dataset targets the lane line segmentation task with accurate, fine annotation.
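The TuSimple entry in the list above notes that each lane line is stored as a sequence of point coordinates rather than as a region. The following is a minimal sketch of what reading such a record might look like. The field names (`lanes`, `h_samples`, `raw_file`), the negative value used to mark a missing point, and the sample values are assumptions made for illustration; they are not taken from the survey.

```python
import json

# Hypothetical annotation record. The field names and the -2 "no point"
# marker are assumptions for illustration, not confirmed by the text.
SAMPLE_LINE = json.dumps({
    "lanes": [[-2, 632, 625, 617], [719, 734, -2, 764]],  # x per sampled row
    "h_samples": [240, 250, 260, 270],                    # shared y rows
    "raw_file": "clips/example/20.jpg",
})


def lanes_to_points(record):
    """Return one list of (x, y) points per lane, dropping missing samples."""
    lanes = []
    for xs in record["lanes"]:
        # Pair each x with the y of its row; negative x means "no lane here".
        points = [(x, y) for x, y in zip(xs, record["h_samples"]) if x >= 0]
        if points:
            lanes.append(points)
    return lanes


record = json.loads(SAMPLE_LINE)
lane_points = lanes_to_points(record)
for index, points in enumerate(lane_points):
    print(f"lane {index}: {points}")
```

A point-sequence representation like this is compact, but a segmentation model that predicts lane regions would first need the points rasterized into a mask, for example by drawing thick polylines through them.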

4.2. Evaluation Criteria

4.2.1. Evaluation Criteria for Object Detection and Semantic Segmentation

4.2.2. Evaluation Criteria for Lane Detection

5. Performance Comparison

5.1. Comparison of the Full-Scene Segmentation Algorithms

5.2. Comparison of the Instance Segmentation Algorithms

6. Concluding Remarks

6.1. 3D Segmentation

6.2. Panoptic Segmentation

6.3. Multitasking Joint Model

6.4. Tracking and Behavior Analysis

Author Contributions

Funding

Conflicts of Interest

References

- Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer vision for autonomous vehicles: Problems, datasets and state-of-the-art. Found. Trends Comput. Graph. Vis. 2017, 12, 1–3.

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot 2020, 37, 362–386.

- Long, S.; He, X.; Yao, C. Scene text detection and recognition: The deep learning era. Int. J. Comput. Vis. 2021, 129, 161–184.

- Neven, D.; De Brabandere, B.; Georgoulis, S.; Proesmans, M. Towards End-to-End Lane Detection: An Instance Segmentation Approach. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 286–291.

- Lecun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, CA, USA, 3–6 December 2012.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Network for Large-Scale Image Recognition. Available online: https://arxiv.org/abs/1409.1556 (accessed on 4 September 2014).

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 424–437.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.-A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Huang, G.; Liu, Z.; Van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Zoph, B.; Vasudevan, V.-K.; Shlens, J.; Le, Q.-V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-excitation networks. IEEE T. Pattern Anal. 2017, 32, 99–113.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 20–23 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Network for Visual Recognition. IEEE T. Pattern. Anal. 2015, 37, 1904–1916.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Conference and Workshop on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1137–1149.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.M.; Dollar, P. Focal loss for dense object detection. IEEE Trans. Pattern Ana. Mach. Intell. 2020, 42, 318–327.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. Available online: https://arxiv.org/abs/1804.02767 (accessed on 8 April 2018).

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9627–9636.

- Yang, Y.; Deng, H. GC-YOLOv3: You Only Look Once with Global Context Block. Electronics 2020, 9, 1235.

- Chen, Y.; Han, C.; Wang, N.; Zhang, Z. Revisiting Feature Alignment for One-stage Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019.

- Uijlings, J.; Sande, K.-E.; Gevers, T. Selective Search for Object Recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Badrinarayanan, V.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015; pp. 3019–3037.

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. Enet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 518–534.

- Wu, H.; Zhang, J.; Huang, K.; Liang, K. FastFCN: Rethinking Dilated Convolution in the Backbone for Semantic Segmentation. Available online: https://arxiv.org/abs/1903.11816 (accessed on 28 March 2019).

- Khan, A.; Ilyas, T.; Umraiz, M.; Mannan, Z.-I.; Kim, H. CED-Net: Crops and Weeds Segmentation for Smart Farming Using a Small Cascaded Encoder-Decoder Architecture. Electronics 2020, 9, 1602.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Oliveira, G.-L.; Burgard, W.; Brox, T. Efficient deep models for monocular road segmentation. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4885–4891.

- Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR-Camera Fusion for Road Detection Using Fully Convolutional Neural Network. Robot. Auton. Syst. 2019, 111, 125–131.

- Munozbulnes, J.; Fernandez, C.-I.; Parra, I.; Fernandezllorca, D.; Sotelo, M.-A. Deep fully convolutional network with random data augmentation for enhanced generalization in road detection. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 366–371.

- Luc, P.; Couprie, C.; Chintala, S.; Verbeek, J. Semantic Segmentation using Adversarial Network. Available online: https://arxiv.org/abs/1412.7062 (accessed on 22 December 2014).

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. Available online: https://arxiv.org/abs/1511.07122 (accessed on 23 November 2015).

- Chen, L.-C.; Papandreou, G.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. Available online: https://arxiv.org/abs/1706.05587 (accessed on 17 June 2017).

- Zheng, S. Conditional Random Fields as Recurrent Neural Network. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1529–1537.

- Yu, F.; Koltun, V.; Funkhouser, T. Dilated Residual Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 636–644.

- Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X. Understanding Convolution for Semantic Segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, CA, USA, 12–15 May 2018; pp. 1451–1460.

- Ladicky, L.; Russell, C.; Kohli, P.; Torr, P. Associative hierarchical CRFs for object class image segmentation. In Proceedings of the 2009 IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 739–746.

- Shotton, J.; Winn, J.; Rother, C.; Criminisi, A. TextonBoost for Image Understanding: Multi-Class Object Recognition and Segmentation by Jointly Modeling Texture, Layout, and Context. Int. J. Comput. Vis. 2009, 81, 2–23.

- Farabet, C.; Couprie, C.; Najman, L.; Lecun, Y. Learning Hierarchical Features for Scene Labeling. IEEE T. Pattern Anal. 2013, 35, 1915–1929.

- Gupta, S.; Girshick, R.; Arbelaez, P.; Malik, J. Learning Rich Features from RGB-D Images for Object Detection and Segmentation. In European Conference on Computer Vision; Springer: Zurich, Switzerland, 2014; pp. 345–360.

- Lin, G.; Milan, A.; Shen, C.; Reid, I. RefineNet: Multi-path Refinement Network for High-Resolution Semantic Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5168–5177.

- Ren, G.; Dai, T.; Barmpoutis, P.; Stathaki, T. Salient Object Detection Combining a Self-Attention Module and a Feature Pyramid Network. Electronics 2020, 9, 1702.

- Krahenbuhl, P.; Koltun, V. Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Granada, Spain, 12–15 December 2011; pp. 109–117.

- Cordts, M. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3213–3223.

- Geiger, A.; Lenz, P.; Stiller, C. Vision meets robotics: The KITTI dataset. Int. J. Rob. Res 2013, 32, 1231–1237.

- Hariharan, B.; Arbelaez, P.; Girshick, R.; Malik, J. Simultaneous Detection and Segmentation. In European Conference on Computer Vision; Springer: Zurich, Switzerland, 2014; pp. 297–312.

- Hariharan, B.; Arbelaez, P.; Girshick, R.; Malik, J. Hypercolumns for object segmentation and fine-grained localization. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 447–456.

- Dai, J.; He, K.; Sun, J. Instance-Aware Semantic Segmentation via Multi-task Network Cascades. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3150–3158.

- Dai, J.; He, K.; Li, Y.; Ren, S.; Sun, J. Instance-Sensitive Fully Convolutional Network. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 534–549.

- Li, Y.; Qi, H.; Dai, J.; Ji, X.; Wei, Y. Fully Convolutional Instance-Aware Semantic Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4438–4446.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2980–2988.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Dai, J.; He, K.; Sun, J. Convolutional feature masking for joint object and stuff segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3992–4000.

- Pinheiro, P.-O.; Collobert, R.; Dollar, P. Learning to segment object candidates. In NIPS’15: Proceedings of the 28th International Conference on Neural Information Processing Systems; Association for Computing Machinery (ACM): New York, NY, USA, 2018; pp. 1990–1998.

- Pinheiro, P.-O.; Lin, T.; Collobert, R.; Dollar, P. Learning to Refine Object Segments. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 75–91.

- Zagoruyko, S. A MultiPath Network for Object Detection. IEEE Conf. Compute. Vision. Pattern Recognit. 2016, 214–223.

- Hu, R.; Dollar, P.; He, K.; Darrell, T.; Girshick, R. Learning to Segment Every Thing. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4233–4241.

- Lee, Y.; Park, J. CenterMask: Real-Time Anchor-Free Instance Segmentation. Available online: https://arxiv.org/abs/1911.06667 (accessed on 15 November 2019).

- Arbelaez, P.; Pont-Tuset, J.; Barron, J.; Marques, F.; Malik, J. Multiscale Combinatorial Grouping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 328–335.

- Chiu, K.; Lin, S. Lane detection using color-based segmentation. In Proceedings of the 2005 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 6–8 June 2005; pp. 706–711.

- Lopez, A.-M.; Serrat, J.; Canero, C.; Lumbreras, F. Robust lane markings detection and road geometry computation. INT J. Auto. Tech-Kor. 2010, 11, 395–407.

- Liu, G.; Worgotter, F.; Markelic, I. Combining Statistical Hough Transform and Particle Filter for robust lane detection and tracking. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium (IV), San Diego, CA, USA, 21–24 June 2010; pp. 993–997.

- Danescu, R.; Nedevschi, S. Probabilistic Lane Tracking in Difficult Road Scenarios Using Stereovision. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 10, 272–282.

- Romera, E.; Alvarez, J.-M.; Bergasa, L.-M.; Arroyo, R. Efficient ConvNet for real-time semantic segmentation. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 21–26 June 2017; pp. 1789–1794.

- Luc, P.; Couprie, C.; Chintala, S.; Verbeek, J. Semantic Segmentation using Adversarial Network. Proc. Adv. Neural Inf. Process. Syst. 2016, 4, 216–228.

- Lee, S.; Kim, J.; Yoon, J.S.; Shin, S.; Bailo, O.; Kim, N.; Lee, T.-H.; Hong, S.H.; Han, S.-H.; Kweon, I.S. VPGNet: Vanishing Point Guide Network for Lane and Road Marking Detection and Recognition. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1965–1973.

- Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial As Deep: Spatial CNN for Traffic Scene Understanding. In Proceedings of the National Center of Artificial Intelligence-NCAI, Islamabad, Pakistan; 2018; pp. 7276–7283.

- The TuSimple lane challenge. Available online: http://benchmark.tusimple.ai/ (accessed on 4 July 2018).

- De Brabandere, B.; Neven, D. Semantic Instance Segmentation with a Discriminative Loss Function. Available online: https://arxiv.org/abs/1708.02551 (accessed on 8 August 2017).

- Wang, Z.; Ren, W.; Qiu, Q. LaneNet: Real-Time Lane Detection Network for Autonomous Driving. Available online: https://arxiv.org/abs/1807.01726 (accessed on 4 July 2018).

- Ghafoorian, M.; Nugteren, C.; Baka, N.; Booij, O. EL-GAN: Embedding Loss Driven Generative Adversarial Network for Lane Detection. Available online: https://arxiv.org/abs/1806.05525 (accessed on 5 July 2018).

- Ko, Y.; Jun, J.; Ko, D. Key Points Estimation and Point Instance Segmentation Approach for Lane Detection. Available online: https://arxiv.org/abs/2002.06604 (accessed on 16 February 2020).

- Jung, J.; Bae, S.-H. Real-time road lane detection in Urban areas using LiDAR data. Electronics 2018, 7, 325–337.

- Naver Map. Available online: https://map.naver.com (accessed on 1 July 2018).

- Schlosser, J.; Chow, C.-K.; Kira, Z. Fusing LIDAR and images for pedestrian detection using convolutional neural network. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2198–2205.

- Van Gansbeke, W.; De Brabandere, B.; Neven, D.; Proesmans, M.; Van Gool, L. End-to-end Lane Detection through Differentiable Least-Squares Fitting. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 4213–4225.

- Garnett, N.; Cohen, R.; Peer, T.; Lahav, R.; Levi, D. 3D-LaneNet: End-to-End 3D Multiple Lane Detection. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2921–2930.

- Qin, Z.; Wang, H.; Li, X. Ultra Fast Structure-aware Deep Lane Detection. In European Conference on Computer Vision; Springer: Glasgow, UK, 2020; pp. 276–291.

- Brostow, G.-J.; Shotton, J.; Fauqueur, J.; Cipolla, R. Segmentation and Recognition Using Structure from Motion Point Clouds. In European Conference on Computer Vision; Springer: Verlag, Germany, 2018; pp. 534–549.

- Neuhold, G.; Ollmann, T.; Bulo, S.-R. The Mapillary Vistas Dataset for Semantic Understanding of Street Scenes. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5000–5009.

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. arXiv 2019, arXiv:1805.04687.

- Huang, X.; Cheng, X.; Geng, Q. The ApolloScape Dataset for Autonomous Driving. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 954–960.

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

- Turpin, A.; Scholer, F. User performance versus precision measures for simple search tasks. In Proceedings of the 29th annual international ACM SIGIR conference on Research and development in information retrieval, Seattle, WA, USA, 6–11 August 2006; pp. 11–18.

- Kirillov, A.; He, K.; Girshick, R. Panoptic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9404–9413.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Kato, H.; Ushiku, Y.; Harada, T. Neural 3d mesh renderer. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3907–3916.

- Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, U.J. Multimodal vehicle detection: Fusing 3D-LIDAR and color camera data. Pattern. Recogn. Lett. 2017, 115, 20–29.

- Ji, S.; Xu, W.; Yang, M. 3D Convolutional Neural Network for Human Action Recognition. IEEE T. Pattern. Anal. 2013, 35, 221–231.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 21–23 June 2018; pp. 4490–4499.

- Chen, Y.; Liu, S.; Shen, X.; Jia, J. Fast Point R-CNN. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 9775–9784.

- Charles, R.-Q.; Su, H.; Kaichun, M.; Guibas, L.-J. Volumetric and Multi-view CNNs for Object Classification on 3D Data. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5648–5656.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Wang, Z.; Jia, K. Frustum ConvNet: Sliding Frustums to Aggregate Local Point-Wise Features for Amodal 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6399–6408.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.-E.; Bronstein, M.-M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graphic 2019, 38, 146–159.

- Landrieu, L.; Simonovsky, M. Large-Scale Point Cloud Semantic Segmentation with Superpoint Graphs. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4558–4567. [Google Scholar]

- Yang, T.-J.; Collins, M.D.; Zhu, Y.; Hwang, Y.-Y.; Liu, T.; Zhang, X.; Sze, V.; Papendreou, G.; Chen, L.-C. DeeperLab: Single-Shot Image Parser. Available online: https://arxiv.org/abs/1902.05093 (accessed on 11 February 2019).

- De Geus, D.; Meletis, P. Panoptic segmentation with a joint semantic and instance segmentation network. Available online: https://arxiv.org/abs/1809.02110 (accessed on 9 September 2018).

- Kirillov, A.; Girshick, R.; He, K.; Dollar, P. Panoptic Feature Pyramid Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6399–6408. [Google Scholar]

- Teichmann, M.; Weber, M.; Zollner, M.; Cipolla, R.; Urtasun, R. MultiNet: Real-time Joint Semantic Reasoning for Autonomous Driving. In Proceedings of the 2018 Intelligent Vehicles Symposium, Changshu, Suzhou, China, 26–30 June 2018; pp. 1013–1020. [Google Scholar]

- Chen, L.; Yang, Z.; Ma, J.; Luo, Z. Driving Scene Perception Network: Real-Time Joint Detection, Depth Estimation and Semantic Segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, CA, USA, 12–15 March 2018; pp. 1283–1291. [Google Scholar]

- Saleh, K.; Hossny, M.; Nahavandi, S. Intent Prediction of Pedestrians via Motion Trajectories Using Stacked Recurrent Neural Network. IEEE T. Intell. Transp. 2018, 3, 414–424. [Google Scholar] [CrossRef]

- Zhang, J.; Xu, Y.; Ni, B.; Duan, Z. Geometric Constrained Joint Lane Segmentation and Lane Boundary Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 502–518. [Google Scholar]

- Riveiro, M.; Lebram, M.; Elmer, M. Anomaly Detection for Road Traffic: A Visual Analytics Framework. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2260–2270. [Google Scholar] [CrossRef]

| Classical Methods | Year | Background | Algorithm Characteristics | Contributions |
|---|---|---|---|---|
| LeNet [5] | 1998 | Classical CNN originally proposed for character recognition | 5-layer CNN; simple architecture, fewer parameters | The basic structure of the modern CNN |
| AlexNet [6] | 2012 | Winner of ILSVRC 2012 | CNN using ReLU and Dropout to reduce overfitting | Triggered the current research boom in deep learning |
| VGGNet [7] | 2014 | Winner of the localization task in ILSVRC 2014 | Repeatedly stacking convolutional and pooling layers | Studied the relationship between CNN depth and model performance |
| GoogLeNet [9] | 2014 | Winner of the classification task in ILSVRC 2014 | Inception V1 module | Efficient use of 1 × 1, 3 × 3, and 5 × 5 convolutions. The efficiency reached the human level, and the design subsequently developed into V2 [13], V3 [14], V4 [15], and Xception [16]. |
| ResNet [10] | 2015 | Winner of ILSVRC 2015 | Residual unit | Learns the difference between the input and output. ResNeXt [11] was subsequently proposed by combining it with Inception. |
| DenseNet [17] | 2016 | Proposed by Gao et al. | DenseBlock | Enables feature reuse |
| NASNet [18] | 2017 | Proposed by Google | ResNet + Inception | Combination of previous network structures |
| ShuffleNet [12] | 2017 | Proposed by Zhang et al. | Channel shuffle | Alleviates information blocking between channel groups |
| SENet [19] | 2017 | Winner of ILSVRC 2017 | Squeeze-and-excitation module | Studied the relationship between feature channels |

| Method Category | Typical Work | Characteristics | Advantages and Disadvantages | Basic Framework |
|---|---|---|---|---|
| Two-stage | R-CNN [20], SPPNet [21], Fast R-CNN [22], Faster R-CNN [23], Cascade R-CNN [24], FPN [25] |  | Advantages: high detection accuracy. Disadvantages: multistage pipeline training; slow object detection |  |
| One-stage | YOLO-V1 [26], SSD [27], RetinaNet [28], YOLO-V2 [29], YOLO-V3 [30], FCOS [31], GC-YOLOv3 [32], AlignDet [33], CenterNet [34] |  | Advantages: fast object detection. Disadvantages: relatively low detection accuracy |  |

| Method Category | Typical Work | Characteristics | Core Technology and Functions | Basic Framework | Road Segmentation |
|---|---|---|---|---|---|
| Encoder–Decoder | FCN [36], SegNet [37], U-Net [38], ENet [39], PSPNet [40], Deeplab-V3+ [41], Fast FCN [42], CED-Net [43], DANet [44] | End-to-end dense pixel output. The pyramid pooling module can ensure global information integrity |  |  | Up-Conv-Poly [45], LidCamNet [46], DEEP-DIG [47] |
| Modified Convolution | Deeplab-V1 [48], Dilated convolution [49], Deeplab-V2 [50], Deeplab-V3 [51], CRFasRNN [52], DRN [53], HDC [54], Deeplab-V3+ [41] | Ensures that local information correlates through modified convolution |  |  |  |

| Method Category | Typical Work | Characteristics | Advantages and Disadvantages | Basic Framework |
|---|---|---|---|---|
| Region Proposals | SDS [64], HyperColumns [65], MNC [66], ISFCN [67], FCIS [68], Mask RCNN [69], PANet [70] | Common detection methods such as R-CNN [20], SSD [27], R-FCN [24], FPN [25], and so on. Pixel classification in identified regions | Advantages: Disadvantages: |  |
| Masking | CFM [71], DeepMask [72], SharpMask [73], MultipathNet [74], Mask-X RCNN [75], CenterMask [76] |  | Advantages: The refining module optimizes the rough segmentation masks and can process the hidden information in various sizes and background pictures. Disadvantages: Low positioning accuracy |  |

| Method Category | Typical Work | Dataset | Algorithm Characteristics | Core Technology and Function | |

|---|---|---|---|---|---|

| Core Technology | Function | ||||

| Two-step Method | VPGNet [84] | VPGNet Dataset [84] | Road marking detection is guided by a vanishing point under adverse weather conditions. | Inducing grid-level annotation | Vanishing point prediction task |

| SCNN [85] | CULane [85] |

| Slice-by-slice convolutions | Messages between pixels can pass across rows and columns in a layer | |

| Cubic spline curve | Trajectory prediction | ||||

| LaneNet+ H-Net [4] | TuSimple Lane Dataset [86] | Turning the lane line detection problem into an instance segmentation problem | E-Net [39] | Segmentation network | |

| DLF [87] | Clustering the lane embedding | ||||

| H-Net [4] | Learning projection matrix | ||||

| LaneNet [88] | 5000 road images | Lane edge proposal and lane line localization | Lane proposal and localization network | Detection and localization | |

| EL-GAN [89] | TuSimple Lane Dataset [86] | Discriminating based on learned embedding of both the labels and the prediction | GAN [83] | Segmentation network | |

| DenseNet [17] | Feature extraction | ||||

| PINet [90] | TuSimple Lane Dataset [86] | Casting a clustering problem for the generated points as a point cloud instance segmentation problem | Lane Instance Point Network | Exact points of the lanes | |

| Reference [91] | Approximately 69,000 points | A practical real-time working prototype for road lane detection using LiDAR data | 3D LiDAR sensor | Scan 3D point clouds | |

| Reference [92] | Naver Map [92] | Road lane detection using LiDAR data | a 3D LiDAR sensor | Categorizing the points of the drivable region | |

| FusionLane [93] | 14000 road images | A lane marking semantic segmentation method based on LIDAR and camera fusion | Deeplab-V3+ [41] | Lane line segmentation | |

| LIDAR–camera fusion | Conversion into LIDAR point clouds | ||||

| End-to-End Method | Reference [94] | TuSimple Lane Dataset [86] | Directly regressing the lane parameters | Differentiable least squares fitting | Estimating the curvature parameters of the lane lines |

| ERFNet [74] | Lane line segmentation | ||||

| 3D-LaneNet [95] | Synthetic-3D- lanes Dataset [95] | Directly predicting the 3D layout of lanes in a road scene from a single image | Anchor-based lane representation | Replacing common heuristics | |

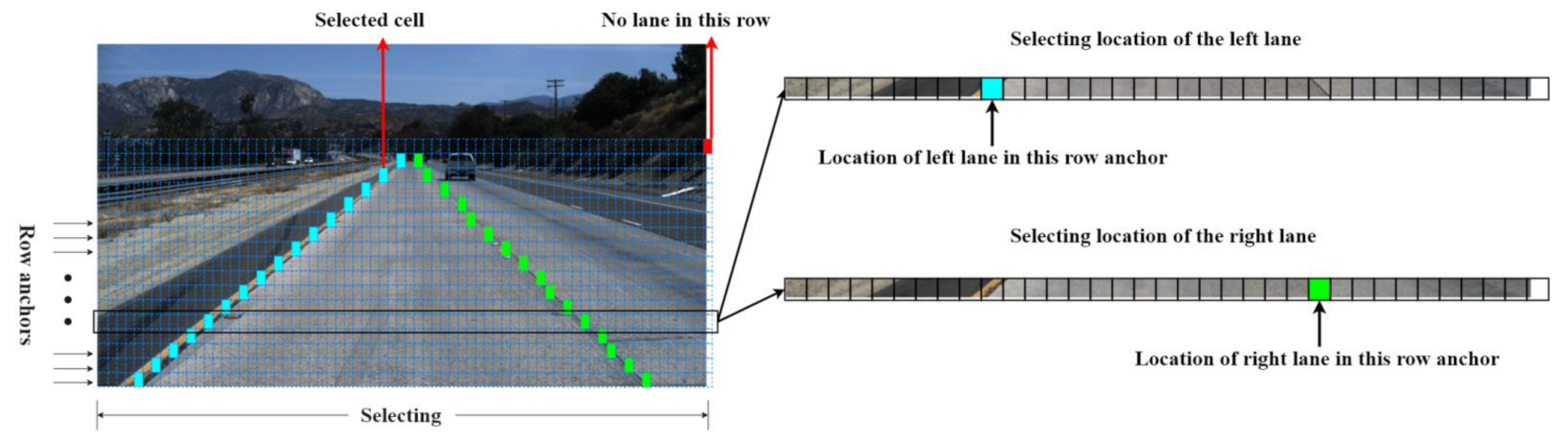

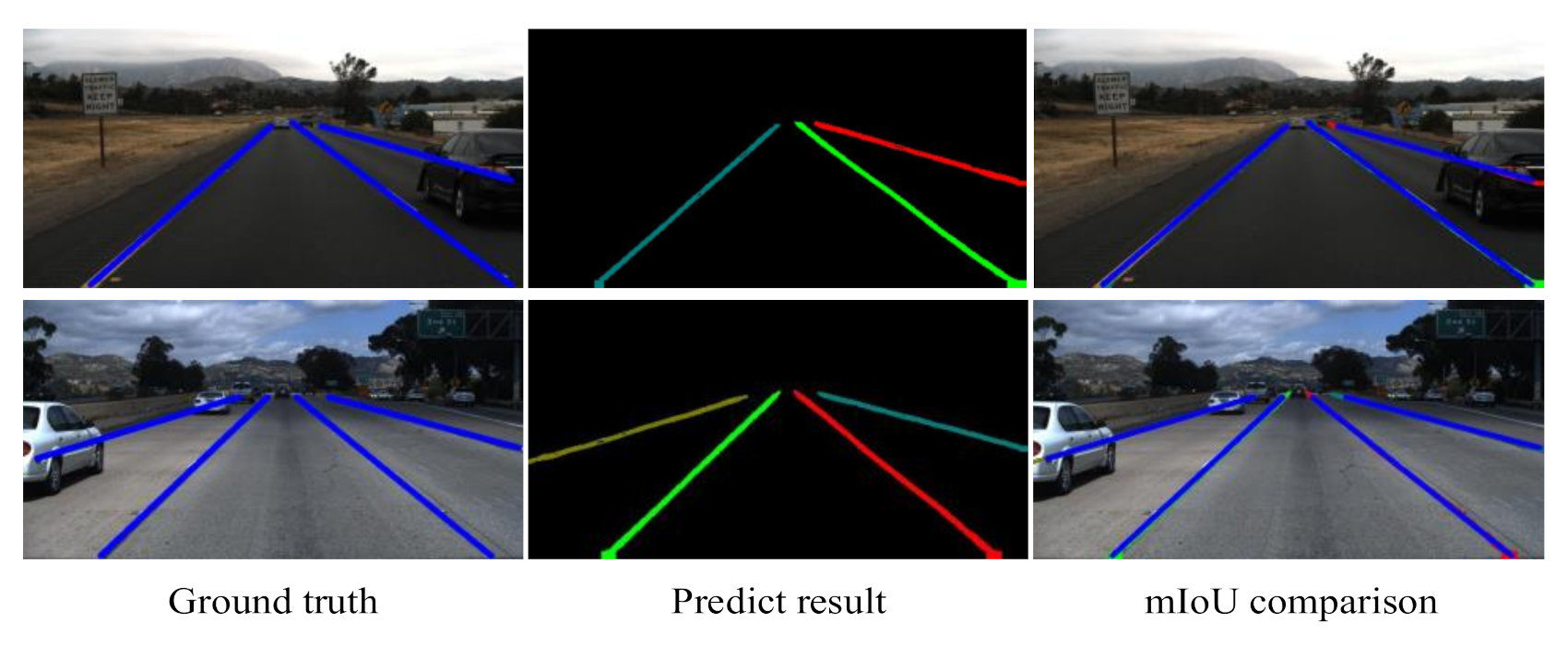

| Reference [96] | TuSimple Lane Dataset [86] | Treating the process of lane detection as a row-based selection problem | Structural loss | Utilizing prior information of lanes | |

| Dataset | Image Size | Diversity | Annotation: 3D | Annotation: 2D | Annotation: Video | Annotation: Lane |
|---|---|---|---|---|---|---|
| CamVid [97] | 960 × 720 | Daytime | No | Pixel: 710 | √ | 2D/2 classes |
| KITTI [63] | 1242 × 375 | Daytime | 80k 3D box | Pixel: 400 | - | No |
| Cityscapes [62] | 2048 × 1024 | Daytime | No | Pixel: 25k | - | No |
| Mapillary [98] | 1920 × 1080 | Various weather, day and night | No | Pixel: 25k | - | 2D/2 classes |
| BDD100K [99] | 1280 × 720 | Various weather, four regions in the US | No | Pixel: 100k | √ | 2D/2 classes |
| ApolloScape [100] | 3384 × 2710 | Various weather, four regions in China | 3D semantic point; 70K 3D fitted cars | Pixel: 143k | √ | 3D/2D video, 35 classes |
| TuSimple Lane [86] | 1280 × 720 | Various weather | No | Pixel: 3626 | √ | 2D/2 classes |
| Caltech Lanes [101] | 640 × 480 | Daytime | No | Pixel: 1225 | - | 2D/2 classes |
| CULane [85] | 640 × 590 | Various weather | No | Pixel: 133k | √ | 2D/2 classes |

| Method Category | Typical Work | Year | Core Technology | mIoU (%) | Speed (fps) |
|---|---|---|---|---|---|
| Encoder–Decoder | FCN [36] | 2014 | VGG + Skip Connections | 65.3 | 2 |
| Encoder–Decoder | SegNet [37] | 2015 | FCN + Deconvolution Upsampling | 57 | 16.7 |
| Encoder–Decoder | ENet [39] | 2016 | FCN + Dilated Residual | 58.3 | 76.9 |
| Encoder–Decoder | PSPNet [40] | 2017 | ResNet + Pyramid Pooling Module | 78.4 | 0.45 |
| Encoder–Decoder | Deeplab-V3+ [41] | 2018 | Xception + ASPP | 82.1 | N/A |
| Encoder–Decoder | FastFCN [42] | 2019 | ResNet | 80.9 | 7.5 |
| Encoder–Decoder | DANet [44] | 2019 | Dual Attention Network | 81.5 | N/A |
| Modified Convolution | Deeplab-V1 [48] | 2014 | ResNet + Dilated Convolution | 63.1 | 0.25 |
| Modified Convolution | CRFasRNN [52] | 2015 | ResNet + CRF + RNN | 68.2 | 1.4 |
| Modified Convolution | Deeplab-V2 [50] | 2016 | ResNet + ASPP | 70.4 | 0.25 |
| Modified Convolution | DRN [53] | 2017 | ResNet + Dilated Residual | 70.9 | 0.4 |
| Modified Convolution | Deeplab-V3 [51] | 2017 | ResNet + ASPP | 81.3 | N/A |
| Modified Convolution | HDC [54] | 2017 | ResNet + DUC + HDC | 80.1 | 1.1 |
| Modified Convolution | Deeplab-V3+ [41] | 2018 | Xception + ASPP | 82.1 | N/A |
| Modified Convolution | FastFCN [42] | 2019 | ResNet | 80.9 | 7.5 |
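As a reminder of what the mIoU column in the table above measures, the following is a minimal sketch of the usual definition: per-class intersection over union, TP / (TP + FP + FN), averaged over classes. The function names, the toy labels, and the choice to skip classes that appear in neither the ground truth nor the prediction are assumptions made for illustration; individual benchmarks may handle ignored labels and absent classes differently.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count pixels: rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def mean_iou(cm):
    """Average the per-class IoU = TP / (TP + FP + FN).

    Assumption: classes absent from both ground truth and prediction are skipped.
    """
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # pixels of class c predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp   # other pixels predicted as class c
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example: six flattened pixels, three hypothetical classes (0 = road, 1 = car, 2 = sky).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
miou = mean_iou(cm)
print(f"mIoU = {100 * miou:.1f}%")
```

Accumulating one confusion matrix over the whole test set before averaging, rather than averaging per-image scores, is a common convention, though that choice is also an assumption here.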

| Method Category | Typical Work | Year | Core Technology | Datasets | Evaluation Criteria | Accuracy (%) |
|---|---|---|---|---|---|---|
| Region proposal-based method | HyperColumns [65] | 2015 | SDS + SVM | VOC2012 [104] | mAP | 60.0 |
| Region proposal-based method | MNC [66] | 2015 | RPN + ROI | VOC2012 [104] | mAP | 63.5 |
| Region proposal-based method | ISFCN [67] | 2016 | Position-sensitive score map | MS COCO [105] | AR | 39.2 |
| Region proposal-based method | FCIS [68] | 2017 | Position-sensitive inside and outside score maps | MS COCO [105] | AP | 29.2 |
| Region proposal-based method | Mask RCNN [69] | 2017 | ResNeXt + FPN + ROI Align | MS COCO [105] | AP | 35.7 |
| Region proposal-based method | PANet [70] | 2018 | FPN + AF Pooling + FC Fusion | MS COCO [105] | AP | 41.4 |
| Masking-based method | DeepMask [72] | 2015 | Top-down refinement module | MS COCO [105] | AR | 33.1 |
| Masking-based method | SharpMask [73] | 2016 | Refine segmentation mask | MS COCO [105] | AR | 66.4 |
| Masking-based method | MultipathNet [74] | 2016 | Fast R-CNN + DeepMask | MS COCO [105] | mPA | 45.4 |
| Masking-based method | CenterMask [76] | 2019 | FCOS + SAG-Mask | MS COCO [105] | AP | 53.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Guo, Z.; Huang, Y.; Hu, X.; Wei, H.; Zhao, B. A Survey on Deep Learning Based Approaches for Scene Understanding in Autonomous Driving. Electronics 2021, 10, 471. https://doi.org/10.3390/electronics10040471
