Robust Active Shape Model via Hierarchical Feature Extraction with SFS-Optimized Convolution Neural Network for Invariant Human Age Classification

Abstract

1. Introduction

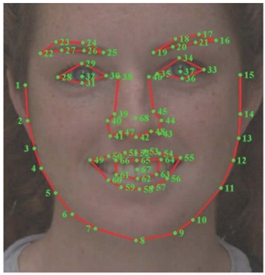

- Texture feature vectors alone are not sufficient for accurate age classification. To obtain accurate and robust results, we specify 35 facial landmark points.

- We map a multi-perspective-view Active Shape Model (ASM) onto the face to improve age classification accuracy.

- Our salient texture and landmark-localization feature vectors achieve higher accuracy than other state-of-the-art techniques.

- The optimal feature subset is selected with the Sequential Forward Selection (SFS) algorithm, together with a CNN classifier for human age classification.

2. Related Work

2.1. Age Classification via Classical Machine Learning Algorithms

2.2. Age Classification via Classical Deep Learning Algorithms

3. Materials and Methods

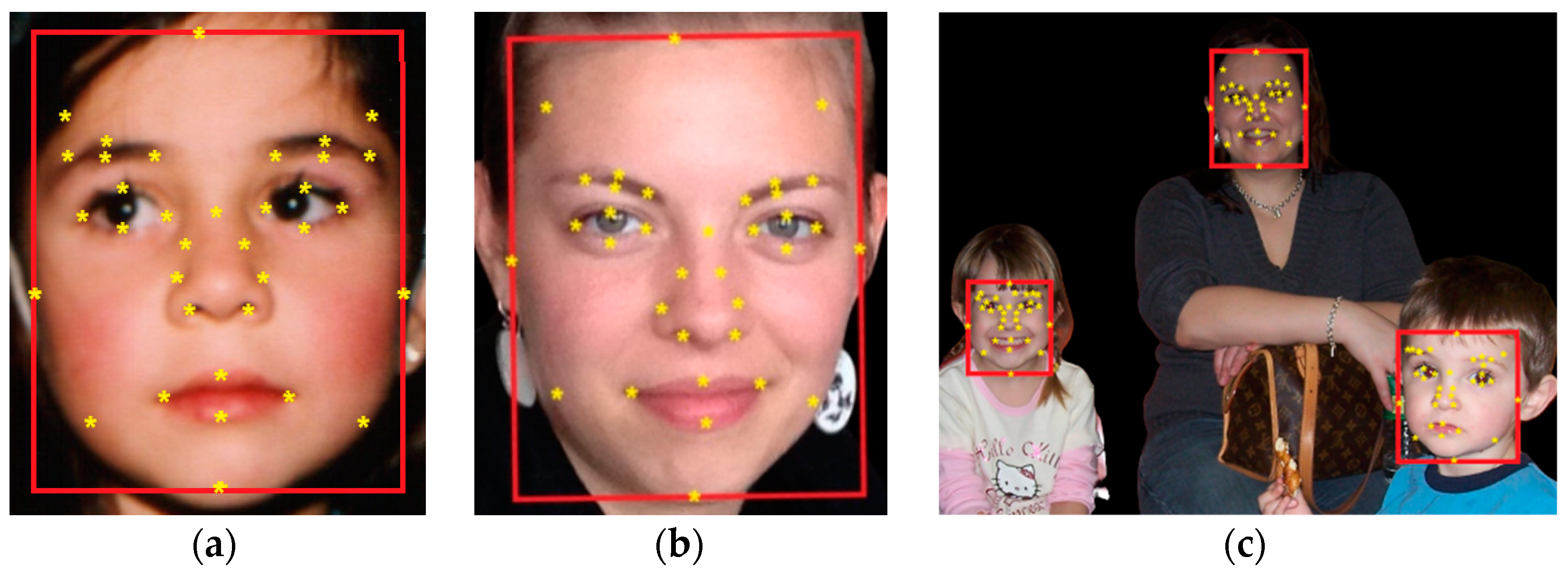

3.1. Pre-Processing and Face Detection

3.2. Landmark Localization

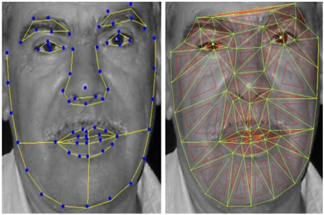

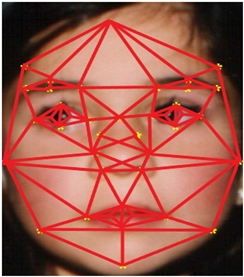

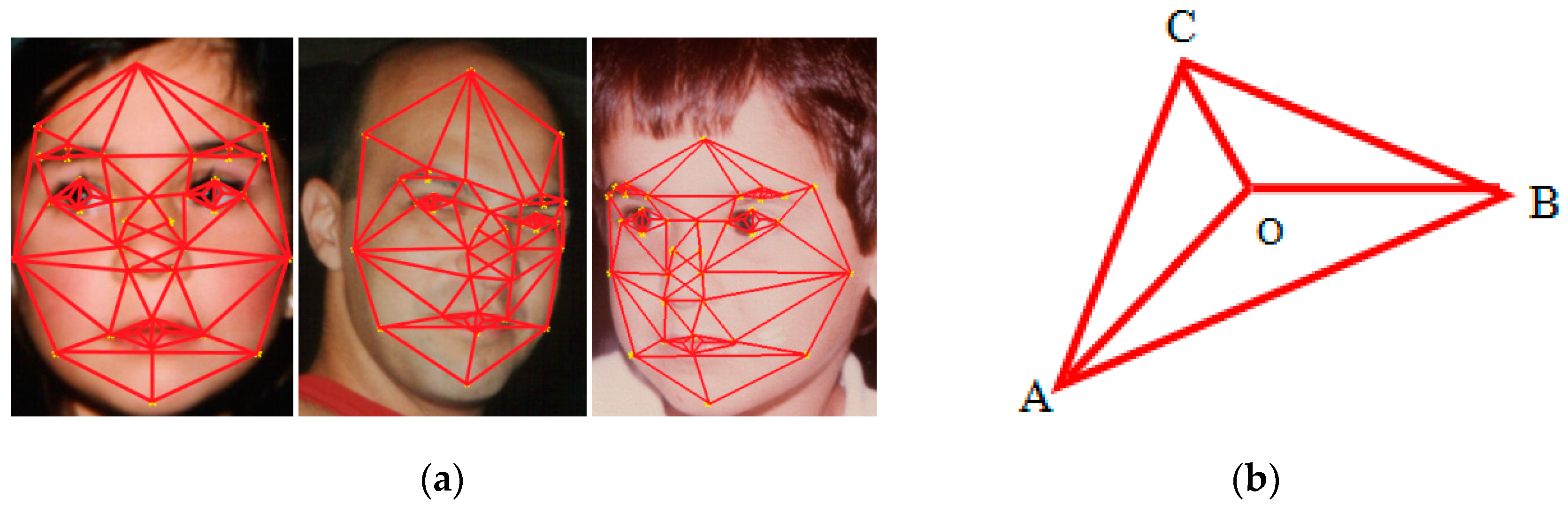

3.3. Active Shape Model

| Algorithm 1. Active Shape Model |
|---|
| 1: Input: Y: positions of the 35 landmark points on the face, Q = {qi, i = 0, 1, 2, …, n−1}; |
| 2: Output: Triangular mesh of Q: TM(Q); |
| 3: begin |
| 4: Find three points (q1, q2, q3) outside Q that form a triangle enclosing it; |
| 5: TM(Q) := [q1, q2, q3]; |
| 6: /* initialize TM(Q) to a large triangle */ |
| 7: Compute a random permutation q0, q1, q2, …, qn−1 of Q; |
| 8: for r = 0 to n−1 do |
| 9: begin |
| 10: /* insert qr into TM(Q) */ |
| 11: Locate the triangle qiqjqk ∈ TM(Q) containing qr; |
| 12: if qr lies in the interior of qiqjqk then |
| 13: begin |
| 14: Add edges from qr to the vertices of qiqjqk, subdividing it into three smaller triangles; |
| 15: Localize_edge(qr, qiqj, TM(Q)); |
| 16: Localize_edge(qr, qjqk, TM(Q)); |
| 17: Localize_edge(qr, qkqi, TM(Q)); |
| 18: end; |
| 19: end; |
| 20: Remove q1, q2, q3 and all incident triangles and edges from TM(Q); |
| 21: end; |
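The steps of Algorithm 1 (an enclosing triangle, a random insertion order, point location, subdivision, and edge repair through the three Localize_edge calls, followed by removal of the enclosing vertices) follow the randomized incremental construction of a Delaunay triangulation. The Python sketch below uses Bowyer-Watson, a different incremental technique that re-triangulates the cavity of triangles whose circumcircles contain the new point instead of flipping edges; for points in general position it produces the same Delaunay triangulation. The function names, the size factor of the enclosing triangle and the fixed shuffle seed are illustrative assumptions, not details from the paper.

```python
import random

def circumcircle(a, b, c):
    """Return (center_x, center_y, radius_squared) of the circle through points a, b, c."""
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return ux, uy, (ax - ux) ** 2 + (ay - uy) ** 2

def delaunay(points, seed=0):
    """Bowyer-Watson Delaunay triangulation; returns sorted index triples into `points`."""
    pts = list(points)
    n = len(pts)
    if n < 3:
        return []
    # Enclosing triangle, playing the role of q1, q2, q3 in Algorithm 1.
    # The factor 20 is an assumption; nearly collinear hulls may need a larger one.
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    mx, my = (min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2
    span = 20 * max(max(xs) - min(xs), max(ys) - min(ys), 1.0)
    pts += [(mx - span, my - span), (mx + span, my - span), (mx, my + span)]
    triangles = [(n, n + 1, n + 2)]
    order = list(range(n))
    random.Random(seed).shuffle(order)  # random insertion order (step 7)
    for r in order:
        px, py = pts[r]
        # Triangles whose circumcircle strictly contains the new point become invalid.
        bad = []
        for t in triangles:
            ux, uy, r2 = circumcircle(pts[t[0]], pts[t[1]], pts[t[2]])
            if (px - ux) ** 2 + (py - uy) ** 2 < r2:
                bad.append(t)
        # Cavity boundary = edges used by exactly one invalid triangle.
        edge_count = {}
        for t in bad:
            for e in ((t[0], t[1]), (t[1], t[2]), (t[2], t[0])):
                key = tuple(sorted(e))
                edge_count[key] = edge_count.get(key, 0) + 1
        triangles = [t for t in triangles if t not in bad]
        # Re-triangulate the cavity by connecting each boundary edge to the new point.
        for (i, j), count in edge_count.items():
            if count == 1:
                triangles.append((i, j, r))
    # Step 20: discard every triangle that uses an enclosing vertex.
    return sorted(tuple(sorted(t)) for t in triangles if max(t) < n)
```

The sketch scans every triangle on each insertion, so it is quadratic and assumes no three landmarks are collinear and no four are cocircular. For production use, `scipy.spatial.Delaunay` (built on Qhull) computes the same triangulation.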

3.4. Feature Extraction Using Image Representation
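Algorithm 2 lists heat maps among the extracted features, but the formula in its step 28 did not survive extraction. One common construction, assumed here rather than taken from the paper, renders each landmark as a 2D Gaussian and keeps the per-pixel maximum. The function name `landmark_heatmap` and the default `sigma` are hypothetical.

```python
import math

def landmark_heatmap(landmarks, height, width, sigma=2.0):
    """Render (x, y) landmarks as a height x width map of Gaussian responses.

    Assumption: each pixel stores the maximum response over all landmarks,
    so every landmark location peaks at exactly 1.0.
    """
    heat = [[0.0] * width for _ in range(height)]
    for (x, y) in landmarks:
        for row in range(height):
            for col in range(width):
                d2 = (col - x) ** 2 + (row - y) ** 2
                value = math.exp(-d2 / (2.0 * sigma ** 2))
                if value > heat[row][col]:
                    heat[row][col] = value
    return heat
```

A smaller `sigma` gives sharper, more localized peaks; a larger one spreads each landmark's influence over neighboring pixels.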

3.5. Feature Extraction Using Aging Pattern
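Steps 29 to 31 of Algorithm 2 describe wrinkle detection as measuring how wrinkles vary across facial regions and accumulating a score, but the formula itself was lost. The sketch below assumes that wrinkles show up as strong intensity gradients: in each region it counts the pixels whose central-difference gradient magnitude exceeds a threshold, then sums the per-region densities. The threshold, the rectangular regions and all function names are hypothetical.

```python
def gradient_magnitude(img, row, col):
    """Central-difference gradient magnitude at an interior pixel of a 2D grayscale list."""
    gx = (img[row][col + 1] - img[row][col - 1]) / 2.0
    gy = (img[row + 1][col] - img[row - 1][col]) / 2.0
    return (gx * gx + gy * gy) ** 0.5

def region_wrinkle_density(img, top, left, bottom, right, threshold=20.0):
    """Fraction of interior pixels in [top, bottom) x [left, right) above the gradient threshold."""
    rows = range(max(top, 1), min(bottom, len(img) - 1))
    cols = range(max(left, 1), min(right, len(img[0]) - 1))
    total = len(rows) * len(cols)
    if total == 0:
        return 0.0
    strong = sum(1 for r in rows for c in cols if gradient_magnitude(img, r, c) > threshold)
    return strong / total

def wrinkle_score(img, regions, threshold=20.0):
    """Per-region densities plus their accumulated sum; regions maps name -> (top, left, bottom, right)."""
    per_region = {
        name: region_wrinkle_density(img, *box, threshold=threshold)
        for name, box in regions.items()
    }
    return per_region, sum(per_region.values())
```

In practice the regions would be placed relative to the detected landmarks and the image would usually be smoothed first; both choices are left open here.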

| Algorithm 2. Feature Extraction |

| 1: Input: Y: Position of 35 landmark points on face; |

| 2: Output: Generated Features; |

| 3: /* Active Shape Model*/ |

| 4: /* Anthropometric features*/ |

| 5: /* Carnio facial development*/ |

| 6: /* Interior angle formulation*/ |

| 7: /* Heat Maps*/ |

| 8: /*Wrinkle detection*/ |

| 9: Active Shape Model |

| 10: /*Polygon meshes andperpendicular bisection of triangles are evaluated*/ |

| 11: TM(Q):=[q1,q2,q3]; |

| 12: Localize_edge(qr,qiqj,TM(Q)); |

| 13: Localize_edge(qr,qjqk,TM(Q)); |

| 14: Localize_edge(qr,qkqi,TM(Q)); |

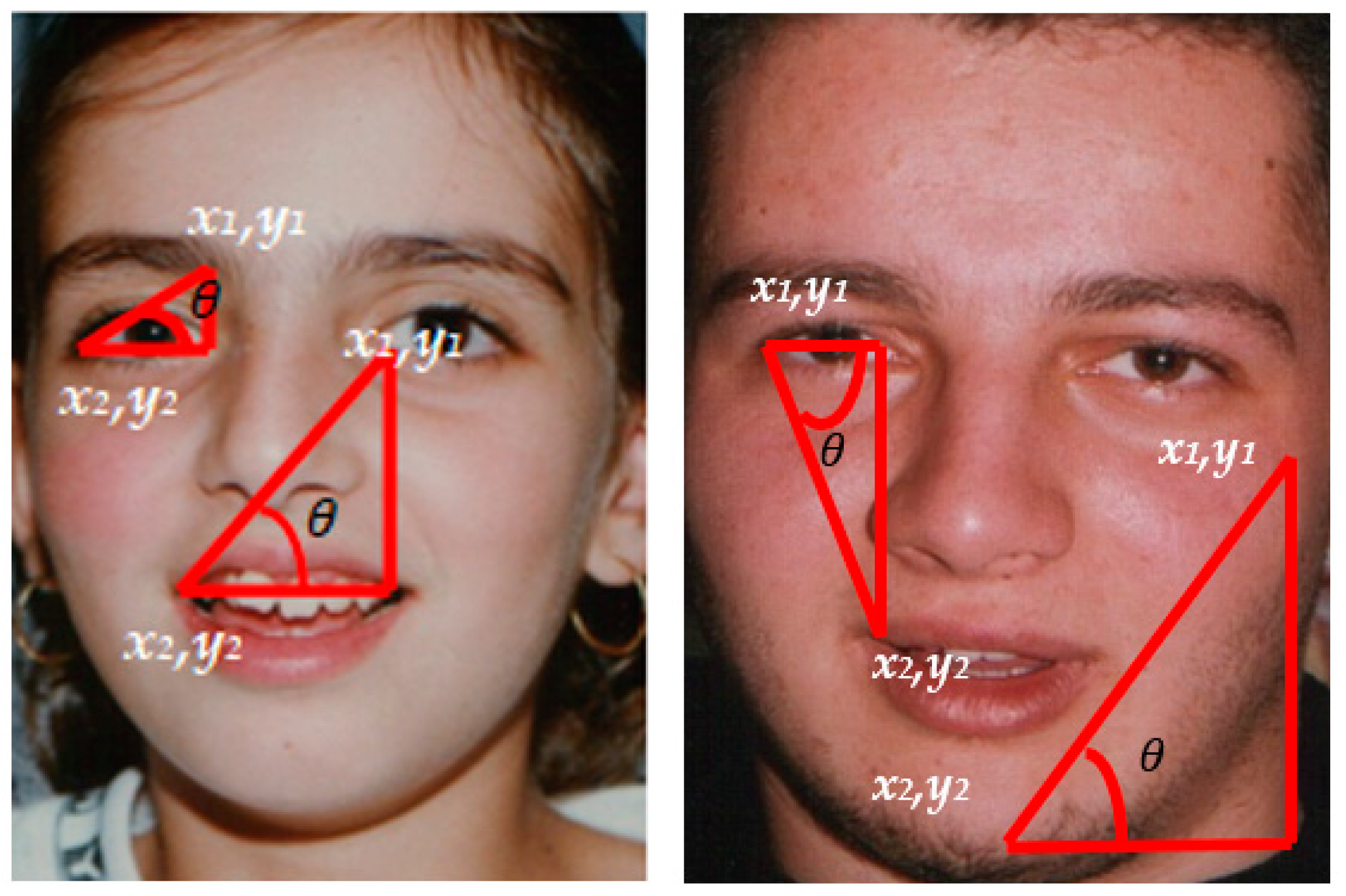

| 15: Anthropometric features: |

| 16: /* Distancebetweendifferent anatomical named features and angle of inclination is calculated*/ |

| 17: |

| 18: ; |

| 19: Carnio-facial development: |

| 20: /* Finding the variation on the growth of the face by measuring the radius and circumference from infancy to adulthood*/ |

| 21: ; |

| 22: Interior angle formulation |

| 23: /* Finding the variation in the interior angles formed on face by using the face mask. */ |

| 24: ; |

| 25: ; |

| 26: ; |

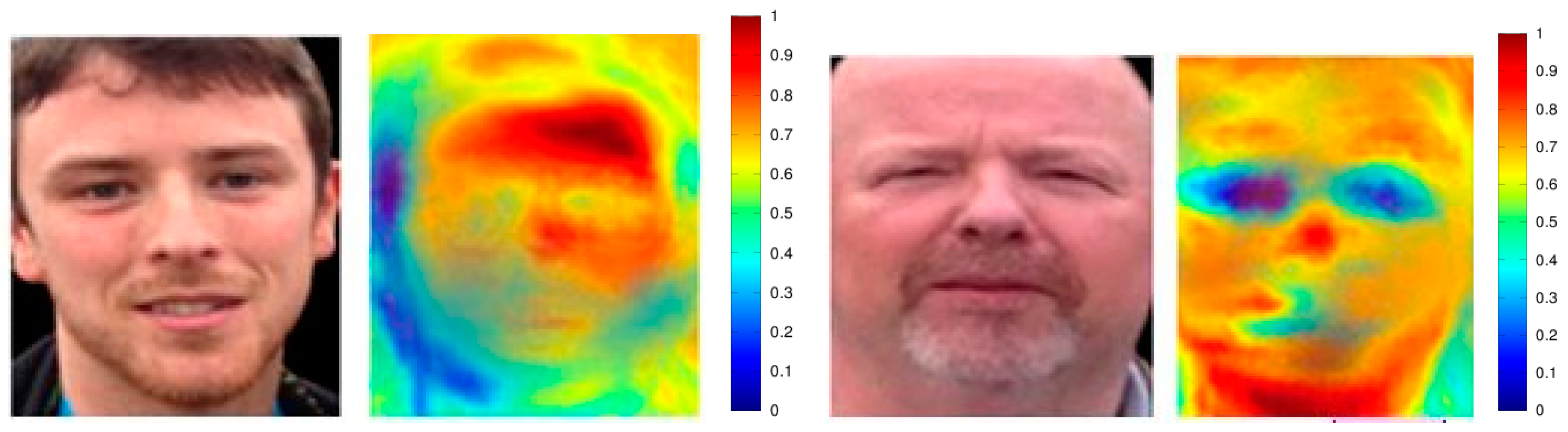

| 27: Heat Map |

| 28: ; |

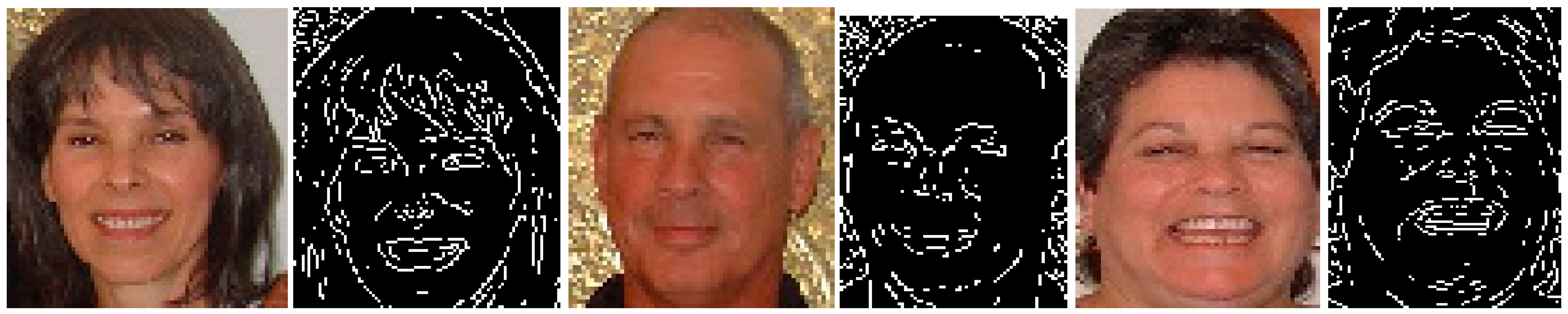

| 29: Wrinkle detection |

| 30: /* Finding the variation of wrinkles over different facial features and finding the accumulative score of the wrinkles detected */ |

| 31: ; |

| 32: Augment all features extracted; |

| 33: ; |

| 34: Project A on Sequential Forward Selection (SFS); |

| 35: ; |

| 36: Project Z features on CNN; |

| 37: Return Q; |

| 38: end; |
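Steps 15 to 26 of Algorithm 2 compute landmark geometry (distances and angles of inclination, the radius and circumference used to track craniofacial growth, and the interior angles of the face mask), but their formulas were lost in extraction. The sketch below shows standard Euclidean versions of these measurements under that reading. Taking the radius as the mean centroid-to-landmark distance and the circumference as the perimeter of the closed outline polygon are assumptions, and the function names are hypothetical.

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) landmarks."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def inclination(p, q):
    """Angle of the segment p -> q in degrees from the x-axis (image y grows downward)."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))

def interior_angles(a, b, c):
    """Interior angles (degrees) at a, b and c of triangle abc, via the law of cosines."""
    ab, bc, ca = distance(a, b), distance(b, c), distance(c, a)

    def angle(opposite, s1, s2):
        cos_val = (s1 * s1 + s2 * s2 - opposite * opposite) / (2 * s1 * s2)
        return math.degrees(math.acos(max(-1.0, min(1.0, cos_val))))  # clamp rounding error

    return angle(bc, ab, ca), angle(ca, ab, bc), angle(ab, bc, ca)

def radius_and_circumference(outline):
    """Mean centroid-to-landmark distance and perimeter of the closed outline polygon."""
    cx = sum(p[0] for p in outline) / len(outline)
    cy = sum(p[1] for p in outline) / len(outline)
    radius = sum(distance((cx, cy), p) for p in outline) / len(outline)
    perimeter = sum(distance(outline[i], outline[(i + 1) % len(outline)]) for i in range(len(outline)))
    return radius, perimeter
```

Applied to each triangle of TM(Q), `interior_angles` yields three angles per triangle, which can be compared across age groups.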

3.6. Feature Selection Using Sequential Forward Selection (SFS)
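Sequential Forward Selection starts from an empty feature set and, at each step, adds the single feature whose inclusion gives the best value of an evaluation criterion. In the paper that criterion is tied to the CNN classifier; in the sketch below `score` is any callable (for example, validation accuracy of a classifier trained on the candidate subset), and stopping at a fixed subset size `k` is an assumption.

```python
from typing import Callable, List, Sequence

def sequential_forward_selection(
    n_features: int, score: Callable[[Sequence[int]], float], k: int
) -> List[int]:
    """Greedy SFS: grow the subset one feature at a time, always adding the best candidate."""
    selected: List[int] = []
    remaining = set(range(n_features))
    while remaining and len(selected) < k:
        # Evaluate each candidate added to the current subset; ties go to the lowest index.
        best = max(sorted(remaining), key=lambda f: score(selected + [f]))
        selected.append(best)
        remaining.remove(best)
    return selected

# Toy criterion: each feature contributes a fixed weight (a stand-in for validation accuracy).
weights = [0.1, 0.9, 0.5, 0.3]
print(sequential_forward_selection(4, lambda s: sum(weights[i] for i in s), 3))  # [1, 2, 3]
```

scikit-learn provides the same greedy procedure as `sklearn.feature_selection.SequentialFeatureSelector` with `direction='forward'`, which scores candidate subsets by cross-validation.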

3.7. Age Estimation Modeling

4. Experimental Results

4.1. Dataset Descriptions

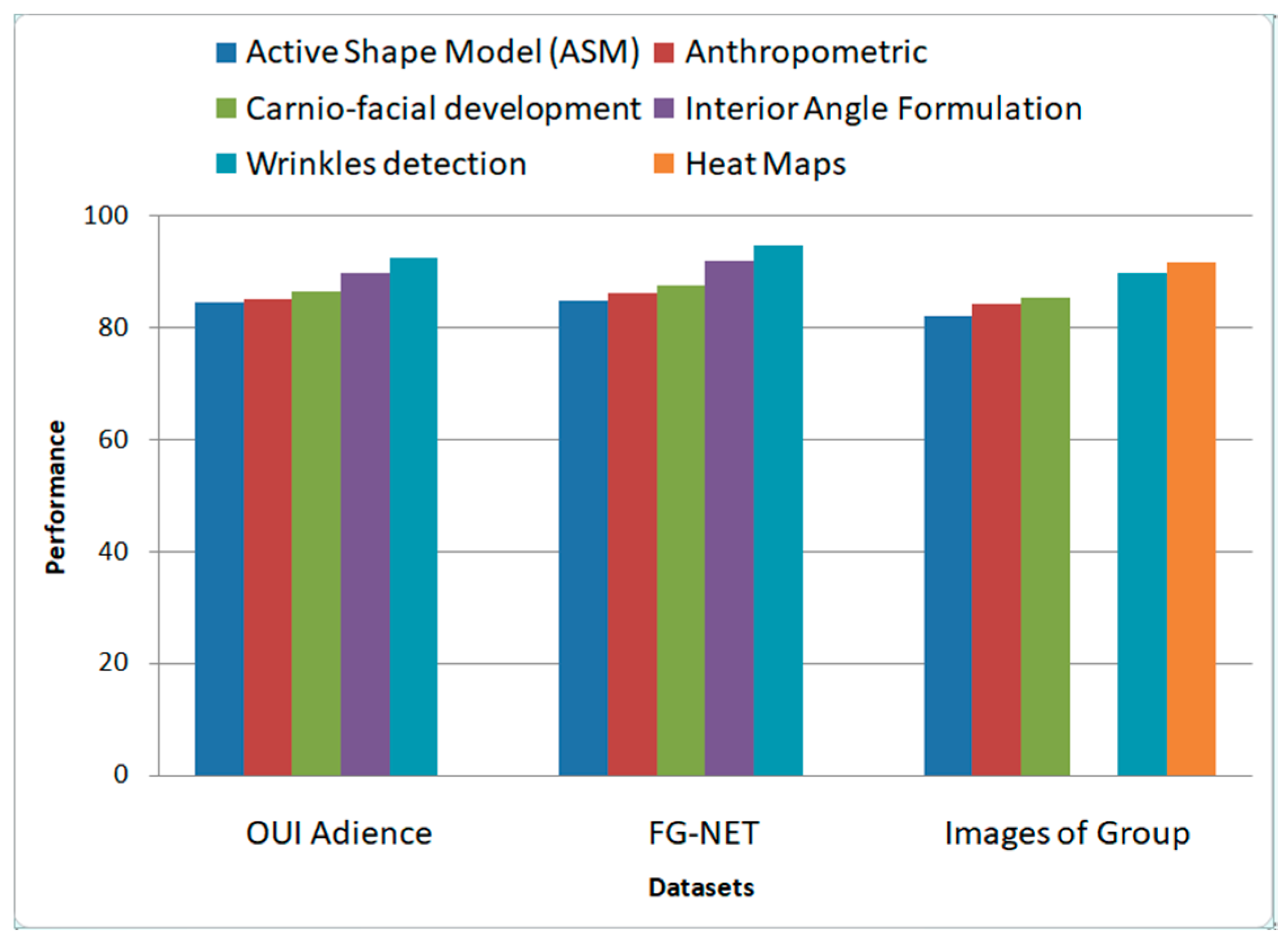

4.2. Experiment I: Experimental Results Obtained Using the Proposed Model and the Other Three Competing Approaches over Benchmark Datasets

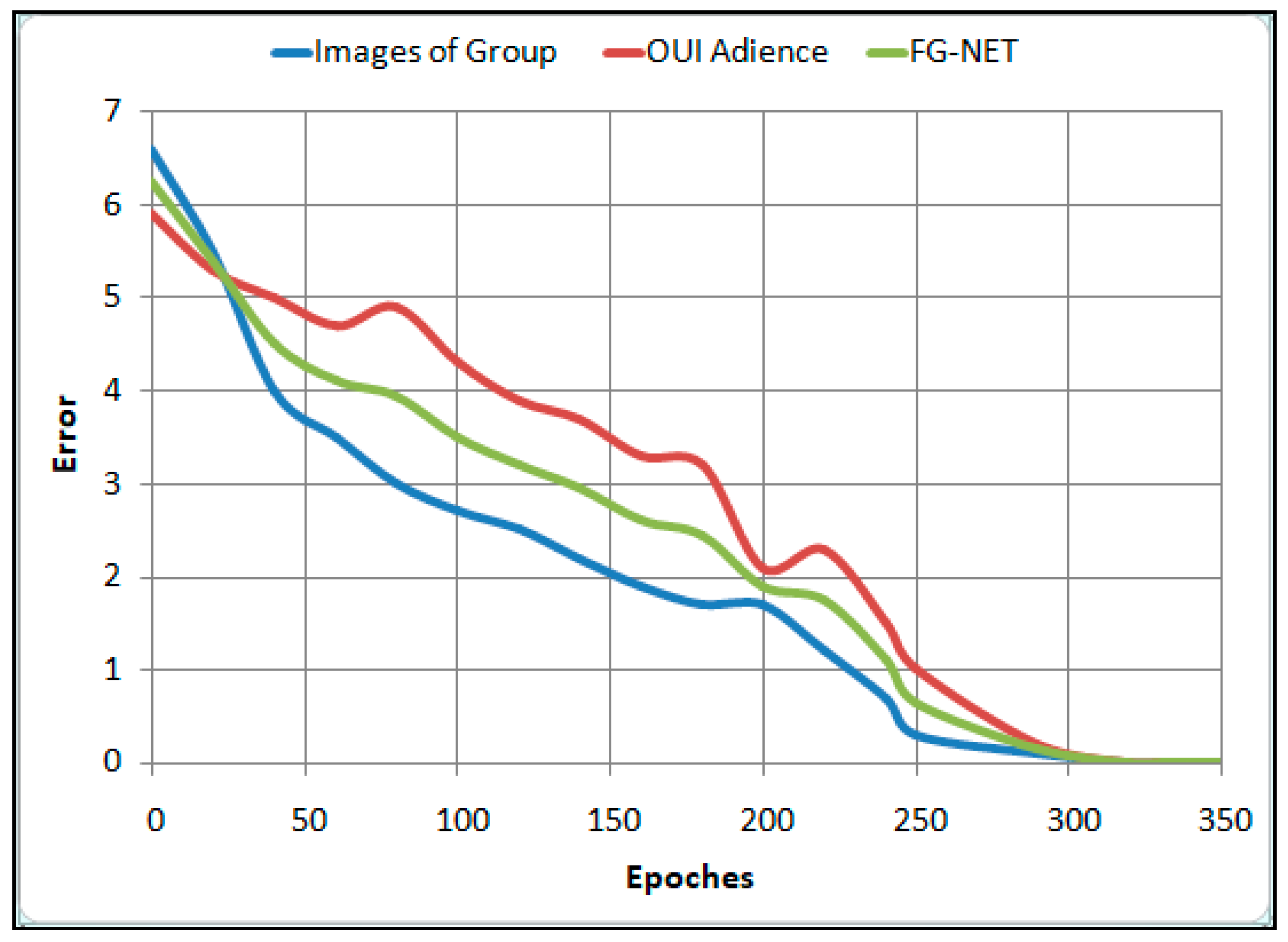

4.3. Experiment II: Error Resilience of the Proposed Active Shape Model Compared with Other Well-Known Face Masking Techniques

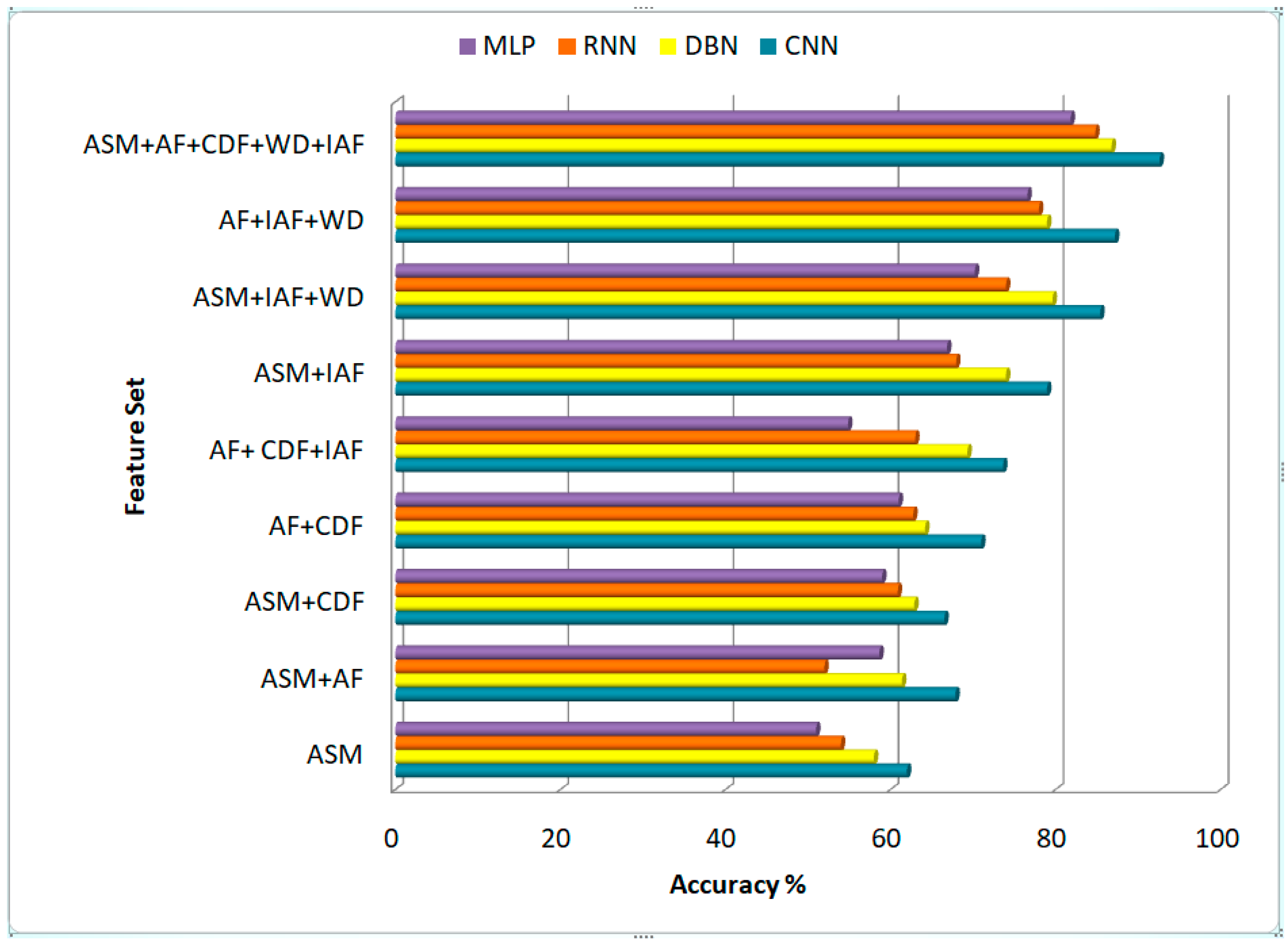

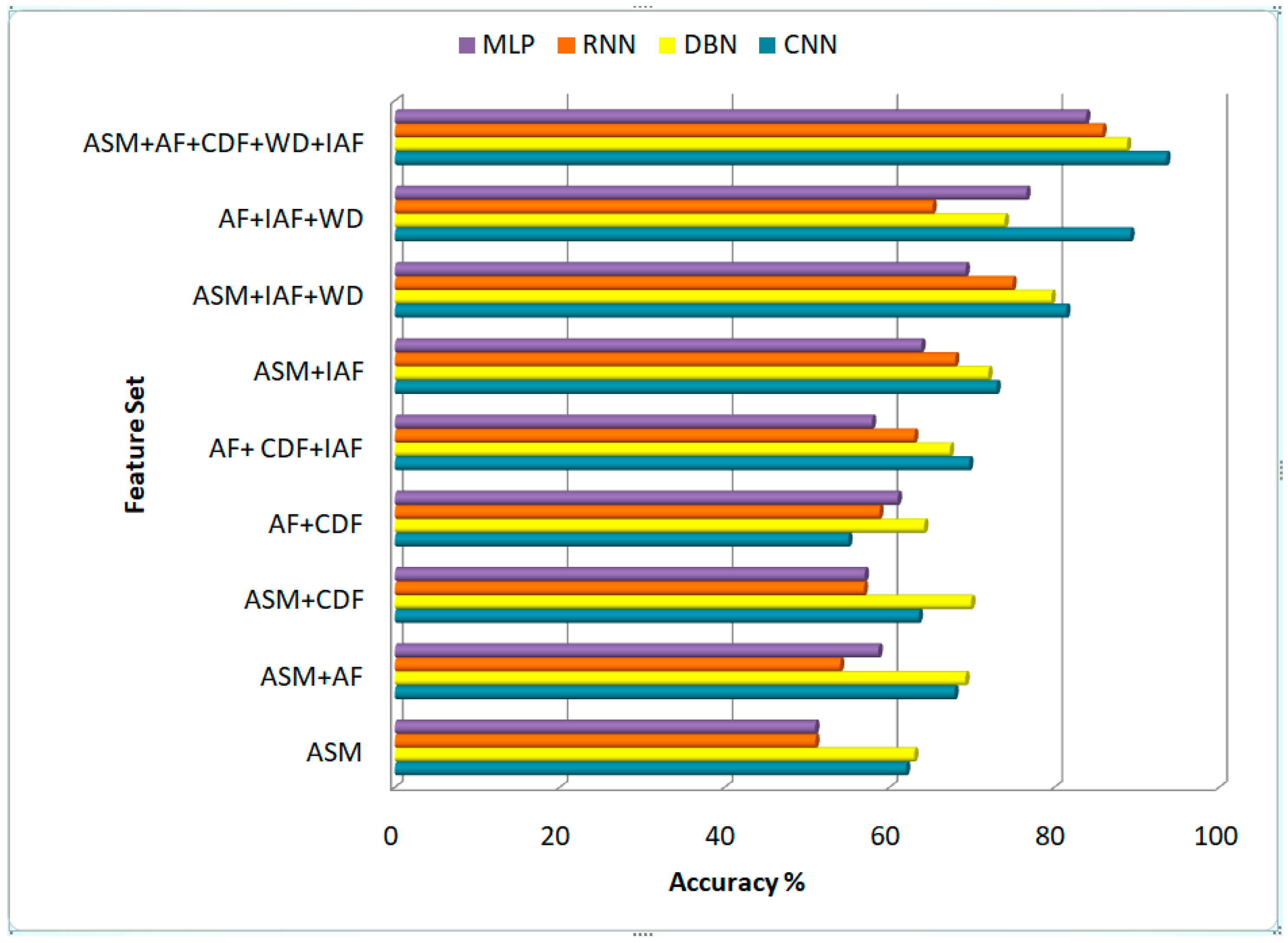

4.4. Experiment III: Comparison of Age Classification Performance Using CNN on Different Feature Sets

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


The Images of Groups Dataset

| Age | CNN Precision | CNN Recall | CNN F1 | DBN Precision | DBN Recall | DBN F1 | RNN Precision | RNN Recall | RNN F1 | MLP Precision | MLP Recall | MLP F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0–2 | 0.93 | 0.94 | 0.93 | 0.89 | 0.81 | 0.84 | 0.78 | 0.78 | 0.78 | 0.89 | 0.80 | 0.84 |
| 3–7 | 0.94 | 0.92 | 0.93 | 0.75 | 0.75 | 0.75 | 0.70 | 0.70 | 0.70 | 0.75 | 0.76 | 0.75 |
| 8–12 | 0.94 | 0.96 | 0.95 | 0.79 | 0.70 | 0.74 | 0.65 | 0.77 | 0.70 | 0.69 | 0.75 | 0.72 |
| 13–19 | 0.91 | 0.91 | 0.90 | 0.90 | 0.88 | 0.90 | 0.80 | 0.61 | 0.69 | 0.90 | 0.81 | 0.85 |
| 20–26 | 0.91 | 0.90 | 0.91 | 0.76 | 0.76 | 0.76 | 0.50 | 0.75 | 0.60 | 0.76 | 0.76 | 0.76 |
| 37–65 | 0.94 | 0.87 | 0.90 | 0.67 | 0.82 | 0.74 | 0.53 | 0.72 | 0.54 | 0.57 | 0.62 | 0.59 |
| 66+ | 0.89 | 0.97 | 0.93 | 0.80 | 0.80 | 0.80 | 0.75 | 0.69 | 0.71 | 0.80 | 0.80 | 0.80 |

OUI Adience Dataset

| Age | CNN Precision | CNN Recall | CNN F1 | DBN Precision | DBN Recall | DBN F1 | RNN Precision | RNN Recall | RNN F1 | MLP Precision | MLP Recall | MLP F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0–2 | 0.95 | 0.96 | 0.95 | 0.88 | 0.88 | 0.88 | 0.68 | 0.58 | 0.63 | 0.72 | 0.72 | 0.72 |
| 4–6 | 0.93 | 0.93 | 0.93 | 0.75 | 0.75 | 0.75 | 0.70 | 0.70 | 0.70 | 0.67 | 0.82 | 0.74 |
| 8–13 | 0.92 | 0.93 | 0.92 | 0.79 | 0.79 | 0.79 | 0.72 | 0.77 | 0.74 | 0.69 | 0.75 | 0.72 |
| 15–20 | 0.92 | 0.94 | 0.93 | 0.90 | 0.88 | 0.90 | 0.80 | 0.61 | 0.69 | 0.79 | 0.70 | 0.74 |
| 25–32 | 0.95 | 0.95 | 0.95 | 0.72 | 0.72 | 0.72 | 0.60 | 0.67 | 0.60 | 0.90 | 0.88 | 0.90 |
| 38–43 | 0.94 | 0.89 | 0.91 | 0.67 | 0.82 | 0.74 | 0.53 | 0.72 | 0.54 | 0.76 | 0.76 | 0.76 |
| 48–53 | 0.92 | 0.88 | 0.90 | 0.85 | 0.85 | 0.85 | 0.75 | 0.69 | 0.71 | 0.78 | 0.78 | 0.78 |
| 60+ | 0.90 | 1.00 | 0.97 | 0.78 | 0.74 | 0.76 | 0.69 | 0.90 | 0.81 | 0.88 | 0.68 | 0.58 |

OUI Adience Dataset

| Age | CNN Precision | CNN Recall | CNN F1 | DBN Precision | DBN Recall | DBN F1 | RNN Precision | RNN Recall | RNN F1 | MLP Precision | MLP Recall | MLP F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0–13 | 0.59 | 0.96 | 0.73 | 0.89 | 0.81 | 0.84 | 0.78 | 0.78 | 0.78 | 0.67 | 0.82 | 0.74 |
| 14–21 | 0.96 | 0.58 | 0.73 | 0.67 | 0.82 | 0.74 | 0.70 | 0.70 | 0.70 | 0.69 | 0.75 | 0.72 |
| 22–39 | 0.96 | 0.96 | 0.96 | 0.85 | 0.85 | 0.85 | 0.75 | 0.75 | 0.75 | 0.69 | 0.75 | 0.72 |
| 40–69 | 0.96 | 0.98 | 0.97 | 0.90 | 0.88 | 0.90 | 0.79 | 0.79 | 0.79 | 0.90 | 0.81 | 0.85 |
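The F1 measure reported in these tables is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). The short Python check below recomputes it for the CNN entry of the 0–13 class (P = 0.59, R = 0.96); the function name is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

print(round(f1_score(0.59, 0.96), 2))  # 0.73
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a class with high recall but low precision (or the reverse) cannot reach a high F1.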


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rizwan, S.A.; Jalal, A.; Gochoo, M.; Kim, K. Robust Active Shape Model via Hierarchical Feature Extraction with SFS-Optimized Convolution Neural Network for Invariant Human Age Classification. Electronics 2021, 10, 465. https://doi.org/10.3390/electronics10040465
