Automatic Code Generation of Safety Mechanisms in Model-Driven Development

Abstract

1. Introduction

- An integration of safety mechanisms within the application model. This supports the development of safety-critical systems by offering features like semi-formal methods (UML) and automatic code generation (the MDD generation process). Both semi-formal methods and automatic code generation are highly recommended by the safety standard IEC 61508 [7]. Thus, our approach helps developers to comply with this safety standard and others derived from IEC 61508, e.g., ISO 26262 [6].

- Due to the automatic generation of the safety mechanisms, developers require less knowledge about their implementation details. This is important because knowledge about safety is only a very minor topic in current computer science and software engineering curricula [13,14]. Automating the implementation of safety mechanisms means fewer opportunities for developers to make implementation mistakes due to insufficient knowledge in the safety domain. Thus, our approach may contribute to improving the overall safety of the system.

2. Background

2.1. Safety Mechanisms

- The calculation of a Cyclic Redundancy Check (CRC) checksum for the attribute when it is modified, which is then verified when the attribute is accessed (CRC check).

- The triplication of the attribute and a comparison of whether these replicas are the same when the attribute is accessed, i.e., Triple Modular Redundancy (TMR).

- A numeric range check that is performed when the attribute is accessed (Range check).

- A time-based check that determines whether an attribute that is accessed has been modified within a certain time frame (Update check).
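
The two memory-protection mechanisms in this list (CRC check and TMR) can be illustrated with a minimal C++ sketch. The class names `CrcAttribute` and `TmrAttribute`, the CRC-8 polynomial 0x07, the `...ForTest` hooks, and the use of `std::optional` to signal a detected error are assumptions made for illustration only; they are not the code generated by the approach described in this article. The TMR variant returns the majority value when at least two replicas agree, which is one common reading of TMR.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>
#include <type_traits>

// Bitwise CRC-8 (polynomial 0x07, initial value 0x00), chosen only for brevity.
inline std::uint8_t crc8(const std::uint8_t* data, std::size_t len) {
    std::uint8_t crc = 0x00;
    for (std::size_t i = 0; i < len; ++i) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; ++bit) {
            crc = (crc & 0x80) ? static_cast<std::uint8_t>((crc << 1) ^ 0x07)
                               : static_cast<std::uint8_t>(crc << 1);
        }
    }
    return crc;
}

// CRC check: the checksum is stored on modification and verified on access.
template <typename T>
class CrcAttribute {
    static_assert(std::is_trivially_copyable<T>::value, "T must be trivially copyable");
public:
    explicit CrcAttribute(T initial) { set(initial); }

    void set(T v) {
        value_ = v;
        crc_ = checksum(value_);
    }

    // Returns the value, or std::nullopt if the stored checksum no longer matches.
    std::optional<T> get() const {
        if (checksum(value_) != crc_) return std::nullopt;
        return value_;
    }

    // Hypothetical test hook: simulates memory corruption that bypasses set().
    void corruptForTest(T v) { value_ = v; }

private:
    static std::uint8_t checksum(const T& v) {
        std::uint8_t bytes[sizeof(T)];
        std::memcpy(bytes, &v, sizeof(T));
        return crc8(bytes, sizeof(T));
    }
    T value_{};
    std::uint8_t crc_{};
};

// TMR: three replicas are written together and compared on access.
template <typename T>
class TmrAttribute {
public:
    explicit TmrAttribute(T initial) : replicas_{initial, initial, initial} {}

    void set(T v) { replicas_[0] = replicas_[1] = replicas_[2] = v; }

    // Returns the value held by at least two replicas, or std::nullopt if all differ.
    std::optional<T> get() const {
        if (replicas_[0] == replicas_[1] || replicas_[0] == replicas_[2]) return replicas_[0];
        if (replicas_[1] == replicas_[2]) return replicas_[1];
        return std::nullopt;
    }

    // Hypothetical test hook: overwrites a single replica.
    void corruptReplicaForTest(std::size_t index, T v) { replicas_[index] = v; }

private:
    T replicas_[3];
};
```

In this sketch a single corrupted replica is masked by the other two, whereas the CRC variant only detects the corruption and leaves the reaction to the caller.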

2.2. Terminology: Software- and Hardware-Implemented Safety Mechanisms

2.3. Automatic Code Generation from UML Models

3. Related Work

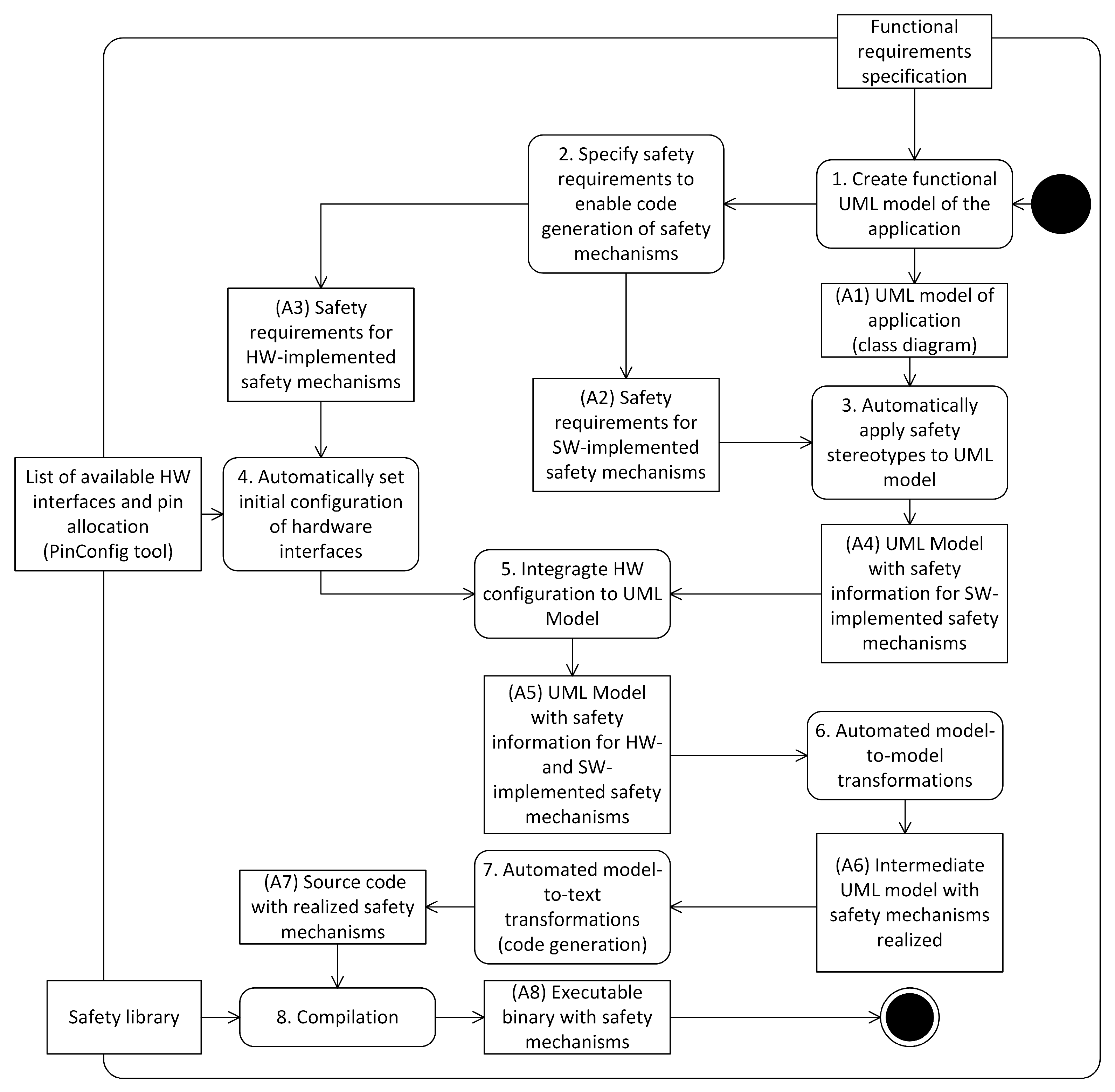

4. Workflow and Application Example

4.1. Developer Workflow

4.2. Applying the Workflow to an Application Example

4.2.1. Application Example

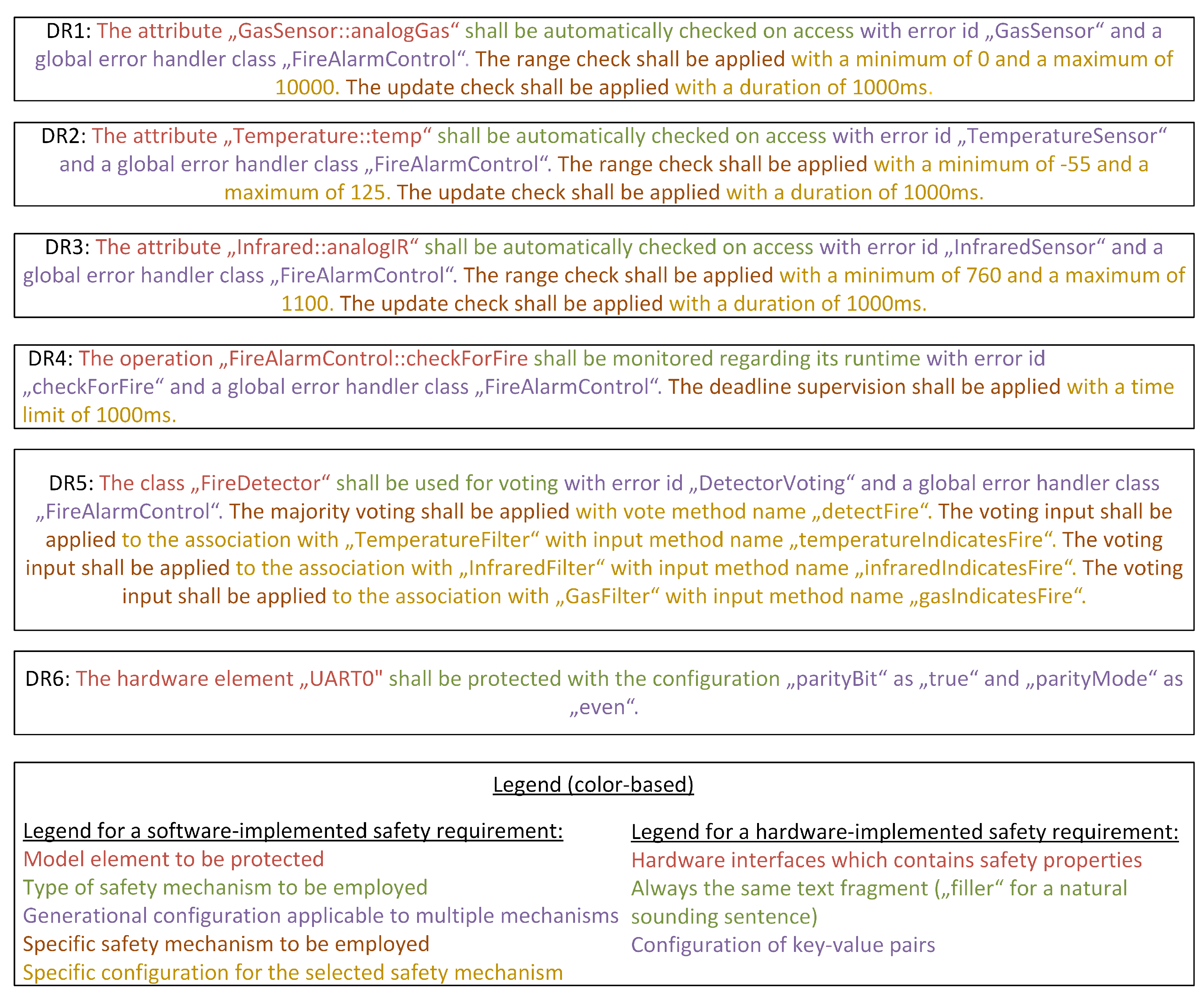

4.2.2. Example Requirements for the Application Example

- HR1: The output of the CO sensor shall be within its specified value range. Additionally, the CO sensor shall measure new values at least every second.

- HR2: The output of the temperature sensor shall be within its specified value range. Additionally, the temperature sensor shall measure new values at least every second.

- HR3: The output of the infrared sensor shall be within its specified value range. Additionally, the infrared sensor shall measure new values at least every second.

- HR4: The system shall check for a fire at least every second.

- HR5: The output of the sensors shall be compared in a voting process that determines the presence of a fire.

- HR6: The communication of the UART with the external hardware module sending an alarm SMS shall be protected with error-detecting codes.

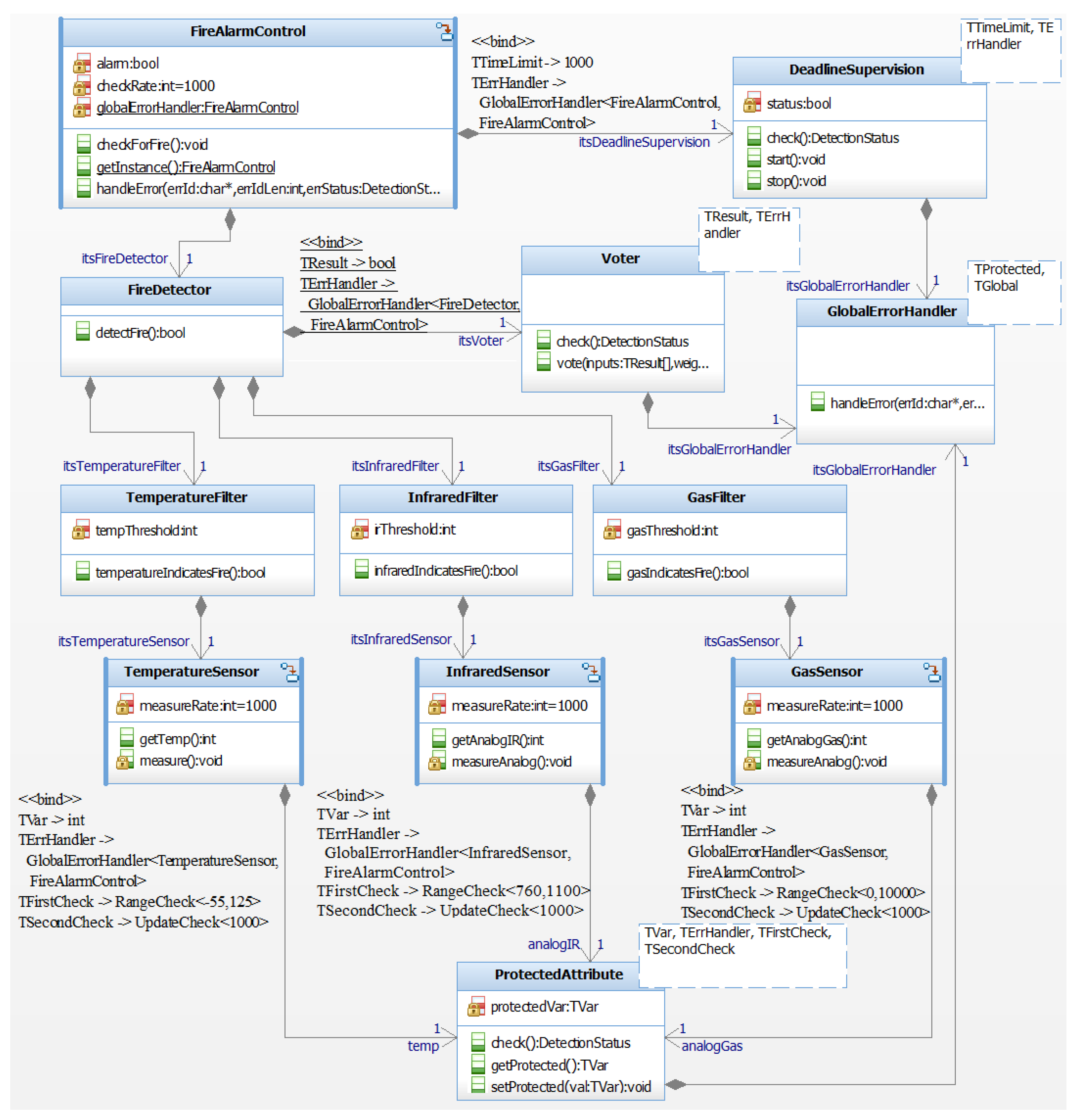

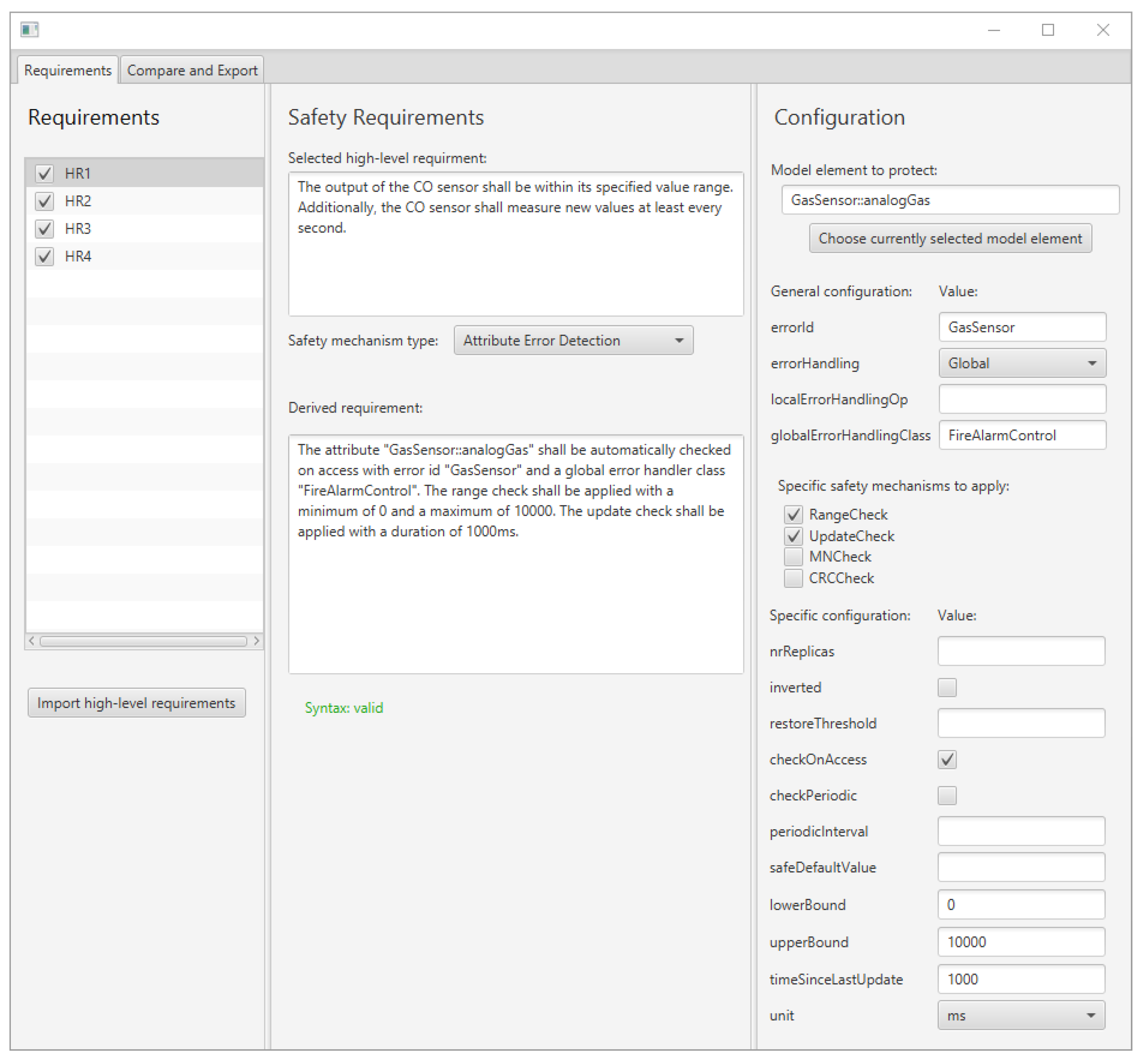

4.2.3. Automatically Generating Software-Implemented Safety Mechanisms in the Application Example

- Requirements DR1, DR2 and DR3 lead to the application of the RangeCheck and UpdateCheck stereotype to the attribute that represents the measured sensor value in the GasSensor, TemperatureSensor, and InfraredSensor classes.

- Requirement DR4 leads to the application of the DeadlineSupervision stereotype to the operation checkForFire() in the class FireAlarmControl.

- Requirement DR5 leads to the application of the MajorityVoter stereotype to the class FireDetector. Furthermore, the VotingInput stereotypes are applied to the association between FireDetector and the classes TemperatureFilter, InfraredFilter, and GasFilter.

- The attributes with the RangeCheck and UpdateCheck stereotypes are replaced by instances of the class ProtectedAttribute, which performs the specified checks whenever the attribute is accessed, i.e., the operation getProtected() is called.

- An instance of DeadlineSupervision has been added to the class FireAlarmControl. The method checkForFire(), to which the DeadlineSupervision stereotype is applied in Figure 4, has been automatically modified to start the monitoring process at its beginning and to stop and evaluate the monitoring at its end.

- An instance of the class Voter has been added to the class FireDetector. Its vote() method performs majority voting, which has been indicated by the MajorityVoter stereotype applied to FireDetector in Figure 4. The method detectFire() has been automatically modified to pass the necessary inputs to vote() and return the value upon which the voting process agreed.
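
The last two modifications can be sketched in C++ as follows. This is a simplified illustration, not the generated code: the member names `supervision_`, `voter_`, and `deadlineMet_`, the use of plain `bool` inputs in place of the associated filter classes, and the `std::optional` return type are assumptions. Only the class names `DeadlineSupervision`, `Voter`, `FireDetector`, and `FireAlarmControl` and the operations `checkForFire()`, `detectFire()`, and `vote()` are taken from the text.

```cpp
#include <array>
#include <chrono>
#include <cstddef>
#include <optional>

// Measures the runtime between start() and stopAndEvaluate().
class DeadlineSupervision {
public:
    using Clock = std::chrono::steady_clock;
    explicit DeadlineSupervision(std::chrono::milliseconds deadline) : deadline_(deadline) {}

    void start() { begin_ = Clock::now(); }

    // Returns true if the time elapsed since start() is within the deadline.
    bool stopAndEvaluate() const { return Clock::now() - begin_ <= deadline_; }

private:
    std::chrono::milliseconds deadline_;
    Clock::time_point begin_{};
};

// Majority voter over N boolean inputs.
template <std::size_t N>
class Voter {
public:
    // Returns the value held by more than half of the inputs, or std::nullopt on a tie.
    std::optional<bool> vote(const std::array<bool, N>& inputs) const {
        std::size_t yes = 0;
        for (bool input : inputs) {
            if (input) ++yes;
        }
        if (2 * yes > N) return true;
        if (2 * (N - yes) > N) return false;
        return std::nullopt;
    }
};

class FireDetector {
public:
    // Passes the inputs to vote() and returns the value the voting process agreed on.
    std::optional<bool> detectFire(bool temperature, bool infrared, bool gas) const {
        const std::array<bool, 3> inputs{temperature, infrared, gas};
        return voter_.vote(inputs);
    }

private:
    Voter<3> voter_;  // instance added by the transformation (name assumed)
};

class FireAlarmControl {
public:
    explicit FireAlarmControl(std::chrono::milliseconds deadline) : supervision_(deadline) {}

    std::optional<bool> checkForFire(bool temperature, bool infrared, bool gas) {
        supervision_.start();                            // added at the beginning
        const auto fire = detector_.detectFire(temperature, infrared, gas);
        deadlineMet_ = supervision_.stopAndEvaluate();   // added at the end
        return fire;
    }

    bool deadlineMet() const { return deadlineMet_; }

private:
    DeadlineSupervision supervision_;  // instance added by the transformation (name assumed)
    FireDetector detector_;
    bool deadlineMet_ = true;
};
```

With three inputs a majority always exists; the empty result only occurs for an even number of inputs, which is why the voter is written generically here.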

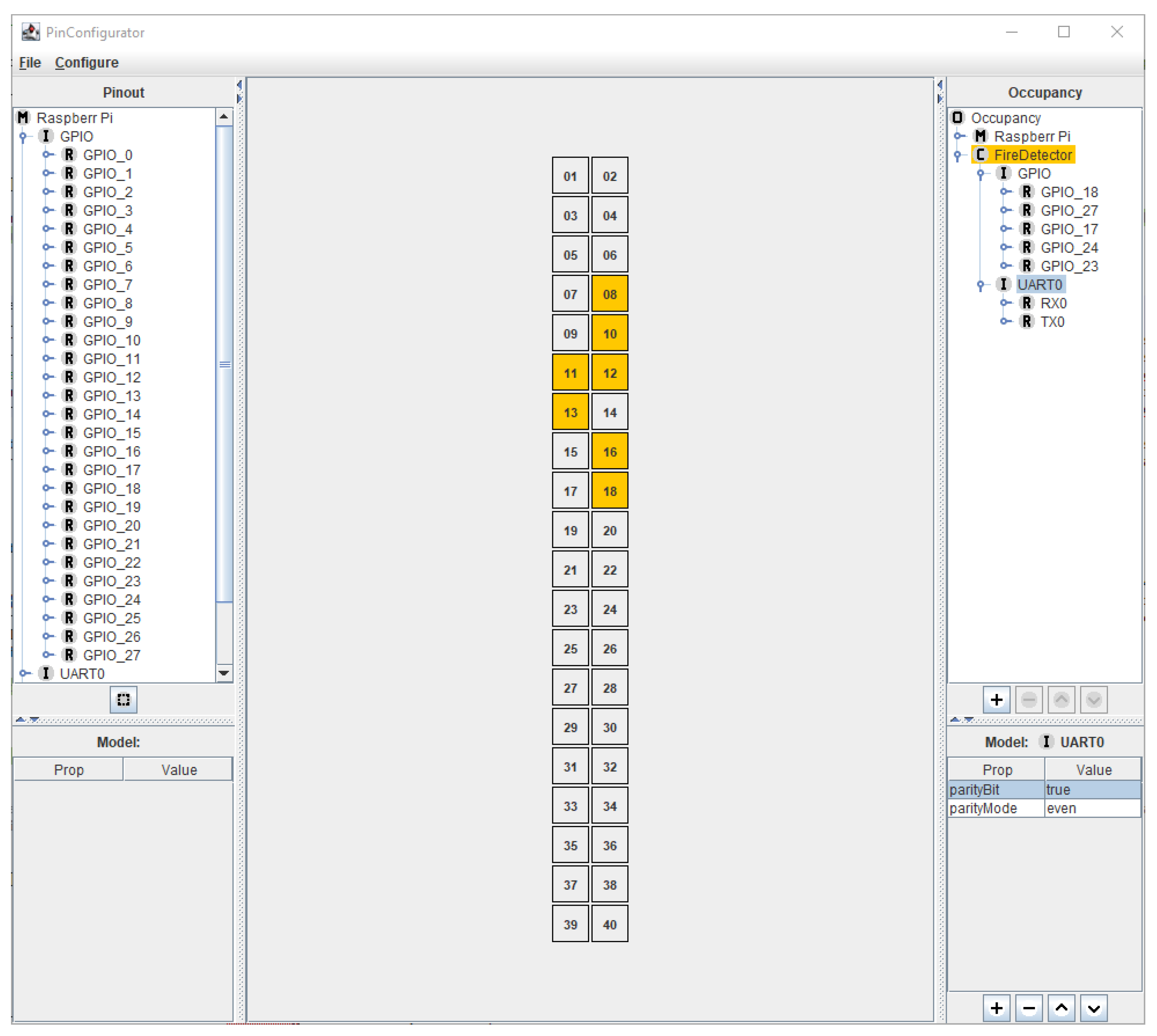

4.2.4. Automatically Configuring Hardware-Implemented Safety Mechanisms in the Application Example

| pinMode(2, INPUT); |

5. Specifying Safety Requirements to Enable Automatic Code Generation

5.1. Sentence Templates for Specifying Safety Mechanisms

- Both software- and hardware-implemented safety mechanisms have to be expressible with the sentence templates. For example, the high-level requirements HR1–HR5 presented in Section 4.2.2 refer to software-implemented safety mechanisms, while HR6 refers to a hardware-implemented safety mechanism.

- The type of model element that should be protected has to be expressible with the sentence template. For example, the first sentence of HR1 refers to specific values measured by the sensor, i.e., an attribute in the model. Requirement HR4, in contrast, refers to an action, i.e., checking for fire. This corresponds to a method within the model.

- The specific type of safety mechanism has to be expressible with the sentence templates. For example, HR6 only refers to “error-detecting codes”. However, “error-detecting codes” refers to a category of safety mechanisms with distinct realizations, e.g., whether a Cyclic Redundancy Check (CRC), a Hamming code or a parity bit is used.

- The configuration of safety mechanisms has to be expressible with the sentence templates. For example, for HR1 it is not sufficient to only specify which element should be protected regarding the sensor’s output range. Instead, the upper and lower limit of this range also have to be specified. Another example is HR6. Even a specific error-detecting code, e.g., CRC, may have multiple configuration options, e.g., the number of bits used for the code.

5.1.1. Distinction between Hardware- and Software-Implemented Safety Mechanisms

| req: swReq | hwReq |

5.1.2. Sentence Templates for Hardware-Implemented Safety Mechanisms

| The hardware element <Name of the hardware interface to be protected> shall be protected with the configuration "<key>" as "<value>". |

| hwReq: 'The hardware element ' hwId ' shall be protected with the configuration ' hwConfig '.'; hwId: QSTRING; hwConfig: hwConfigEntry ((',' | 'and') hwConfig)*; hwConfigEntry: QSTRING 'as' QSTRING; |
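
To make the template more concrete, the following C++ sketch parses one sentence that follows the hardware template into a key/value configuration. It is not the ANTLR-based parser referenced in this article: it uses `std::regex` instead, assumes that `QSTRING` denotes a double-quoted string, and does not validate the `,` and `and` separators between entries. The names `HwRequirement` and `parseHwRequirement` are hypothetical.

```cpp
#include <map>
#include <optional>
#include <regex>
#include <string>

// Parsed form of one hardware requirement sentence.
struct HwRequirement {
    std::string hwId;                           // name of the hardware interface
    std::map<std::string, std::string> config;  // "<key>" as "<value>" entries
};

// Returns the parsed requirement, or std::nullopt if the sentence does not match the template.
inline std::optional<HwRequirement> parseHwRequirement(const std::string& sentence) {
    static const std::regex whole(
        R"re(^The hardware element "([^"]+)" shall be protected with the configuration (.+)\.$)re");
    static const std::regex entry(R"re("([^"]+)"\s+as\s+"([^"]+)")re");

    std::smatch match;
    if (!std::regex_match(sentence, match, whole)) return std::nullopt;

    HwRequirement req;
    req.hwId = match[1].str();

    // Collect every "<key>" as "<value>" pair from the configuration part.
    const std::string configPart = match[2].str();
    for (std::sregex_iterator it(configPart.begin(), configPart.end(), entry), end; it != end; ++it) {
        req.config[(*it)[1].str()] = (*it)[2].str();
    }
    if (req.config.empty()) return std::nullopt;
    return req;
}
```

For example, the made-up sentence `The hardware element "UART" shall be protected with the configuration "parity" as "even" and "stop bits" as "2".` would yield the identifier `UART` and two configuration entries; the keys and values shown here are illustrative and not taken from the article.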

5.1.3. Sentence Templates for Software-Implemented Safety Mechanisms

| The <model element to be protected> <general safety mechanism type> <general configuration>. <specific safety mechanism to be applied> <specific configuration>. |

| swReq: introReq (addReq)*; introReq: 'The ' location ' shall be ' introHow ' with ' swSharedConfig '.'; addReq: 'The ' type ' shall be applied ' ('to the ' location)? ' with ' swConfig '.'; location: locationType SPACE locationPath; locationType: 'class' | 'attribute' | 'operation' | 'association with'; locationPath: QSTRING; introHow: 'automatically checked on access' | 'periodically checked every ' TIME_UNIT | 'used for voting' | 'monitored regarding its runtime'; swSharedConfig: swSharedConfigEntry ((',' | 'and') swSharedConfig)*; type: TYPE_CHECKS | TYPE_VOTING | TYPE_TIMING_MONITORING; swConfig: swConfigEntry ((',' | 'and') swConfig)*; |

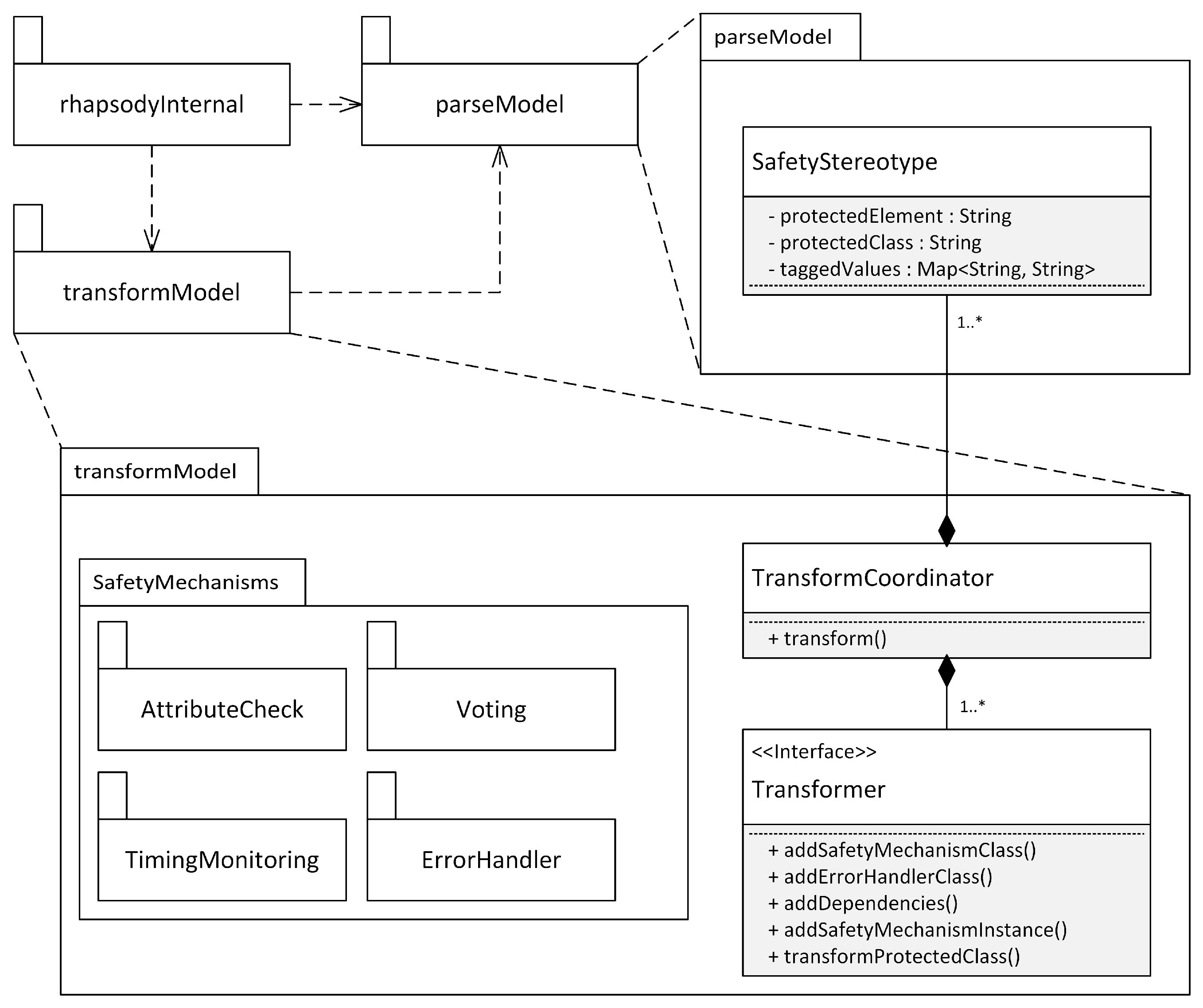

5.2. Prototype Implementation

6. Model-Driven Code Generation of Software-Implemented Safety Mechanisms

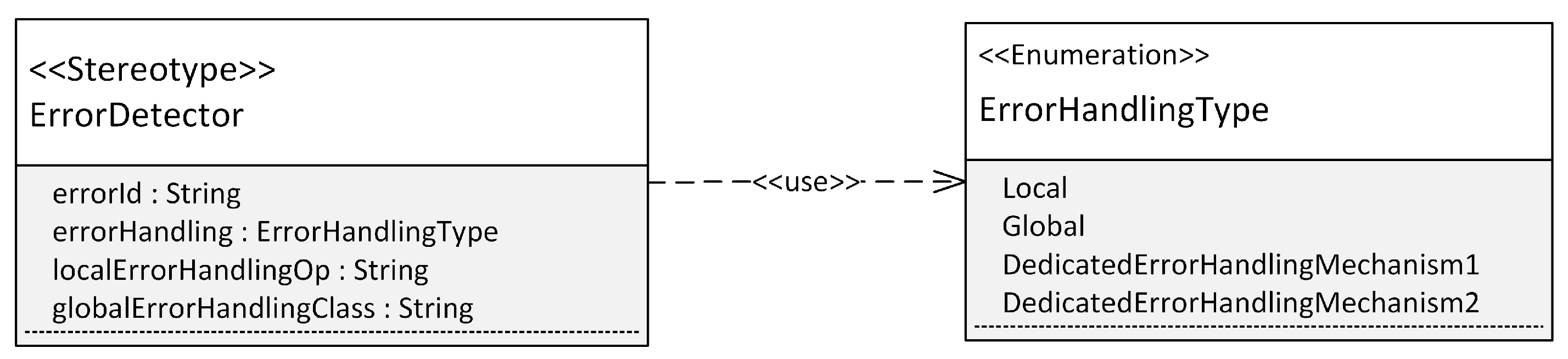

6.1. Automatically Applying Safety Stereotypes to the Application Model

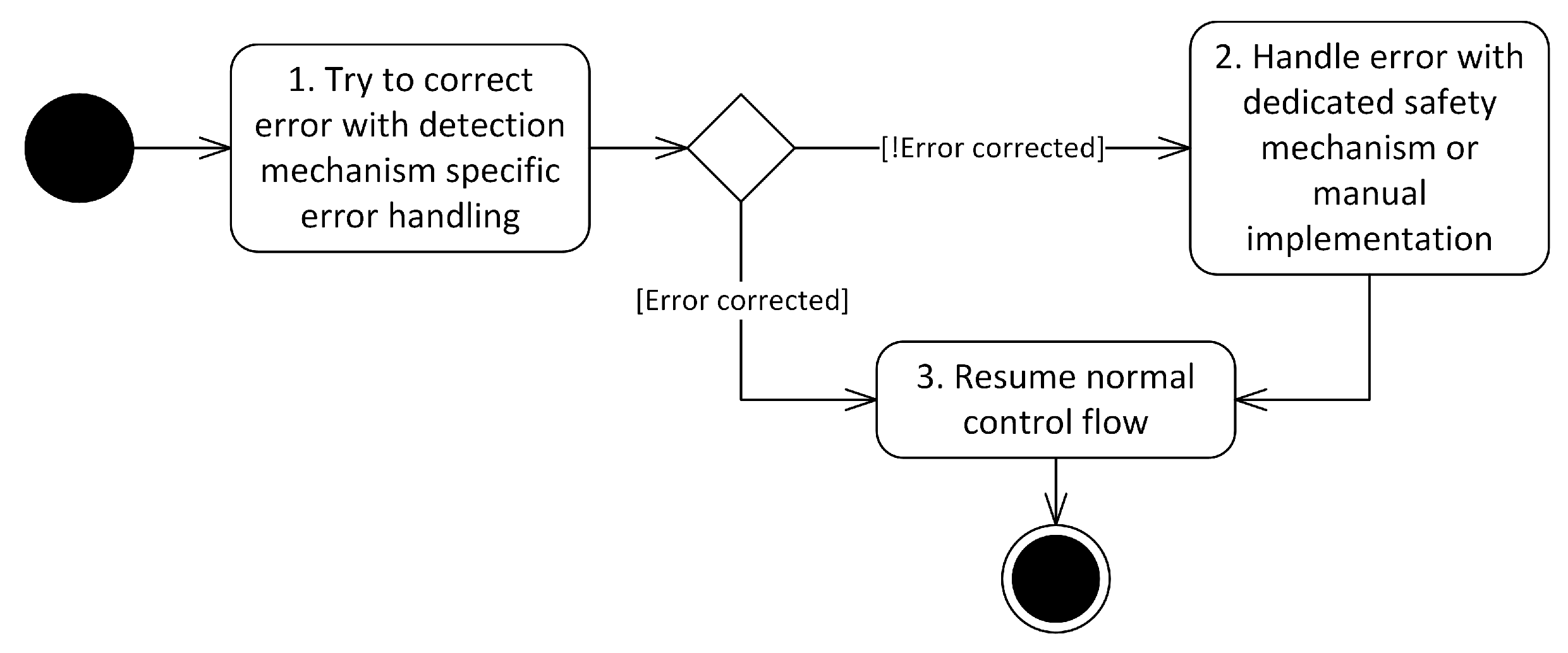

6.2. Error Detection and Error Handling: Runtime Behavior

- EH1: Error handling executed as part of the error detection mechanism. Some error detection mechanisms, e.g., CRC, are capable of handling errors without any extra safety mechanism. In the case of CRC, this would be the correction of the malformed bits. This type of error handling may be generated alongside the generation of error detection mechanisms.

- EH2: Error handling executed by a dedicated error handling safety mechanism. Some safety mechanisms that focus on error handling strategies may be generated automatically, e.g., graceful degradation [16].

- EH3: Error handling that is manually implemented. In some cases, error handling may require application-specific knowledge. This prevents full automatic code generation. For example, before an application exhibits fail-stop behavior, application-specific shutdown procedures may have to be triggered. As these may vary for different applications, some amount of manually implemented code is unavoidable. The approach described in this article aims to support such manually implemented error handling by automatically generating the infrastructure around the actual error handling. Developers only have to implement the error handling method. The code for the invocation of this method, i.e., when an error has been detected, is generated automatically. This article presents two types of manually implemented error handling. The first type functions at a local scope, i.e., a manually implemented operation inside the class in which an error is detected. The second type functions at a global scope via a global singleton and may therefore affect larger parts of the application. This error handling at the global scope is realized in the application example described in Section 4.2.1, where the code for the handleError() method inside the class FireAlarmControl had to be written manually. However, the invocation of this method at the right moment, i.e., after an error has been detected, has been automatically generated.
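
The split between manually written and generated code for global-scope error handling (EH3) can be sketched as follows. The sketch is an assumption about how such infrastructure might look, not the generated code itself: the singleton name `GlobalErrorHandler`, the `ErrorKind` enumeration, the callback registration, and the sensor limits are all hypothetical. Only `handleError()` and `FireAlarmControl` are names used in the text.

```cpp
#include <functional>
#include <utility>

// Hypothetical error categories reported by the error detection mechanisms.
enum class ErrorKind { RangeViolation, MissedUpdate, MissedDeadline };

// Global singleton that forwards detected errors to the application.
class GlobalErrorHandler {
public:
    static GlobalErrorHandler& instance() {
        static GlobalErrorHandler handler;
        return handler;
    }
    void setCallback(std::function<void(ErrorKind)> callback) { callback_ = std::move(callback); }
    void report(ErrorKind kind) const {
        if (callback_) callback_(kind);
    }

private:
    GlobalErrorHandler() = default;
    std::function<void(ErrorKind)> callback_;
};

class FireAlarmControl {
public:
    FireAlarmControl() {
        // Infrastructure (would be generated): route global errors to handleError().
        GlobalErrorHandler::instance().setCallback([this](ErrorKind kind) { handleError(kind); });
    }
    ~FireAlarmControl() { GlobalErrorHandler::instance().setCallback(nullptr); }
    FireAlarmControl(const FireAlarmControl&) = delete;
    FireAlarmControl& operator=(const FireAlarmControl&) = delete;

    // Manually implemented, application-specific reaction (placeholder body).
    void handleError(ErrorKind kind) {
        lastError_ = kind;
        ++errorCount_;
    }

    int errorCount() const { return errorCount_; }
    ErrorKind lastError() const { return lastError_; }

private:
    int errorCount_ = 0;
    ErrorKind lastError_ = ErrorKind::RangeViolation;
};

class TemperatureSensor {
public:
    void setValue(int value) { value_ = value; }

    int getValue() const {
        if (value_ < kMin || value_ > kMax) {
            // Invocation (would be generated): report the detected error.
            GlobalErrorHandler::instance().report(ErrorKind::RangeViolation);
        }
        return value_;
    }

private:
    static constexpr int kMin = -40;  // assumed limits, not from the article
    static constexpr int kMax = 125;
    int value_ = 20;
};
```

For local-scope handling, the generated invocation would instead call a manually implemented operation of the class in which the error was detected, so no singleton would be involved.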

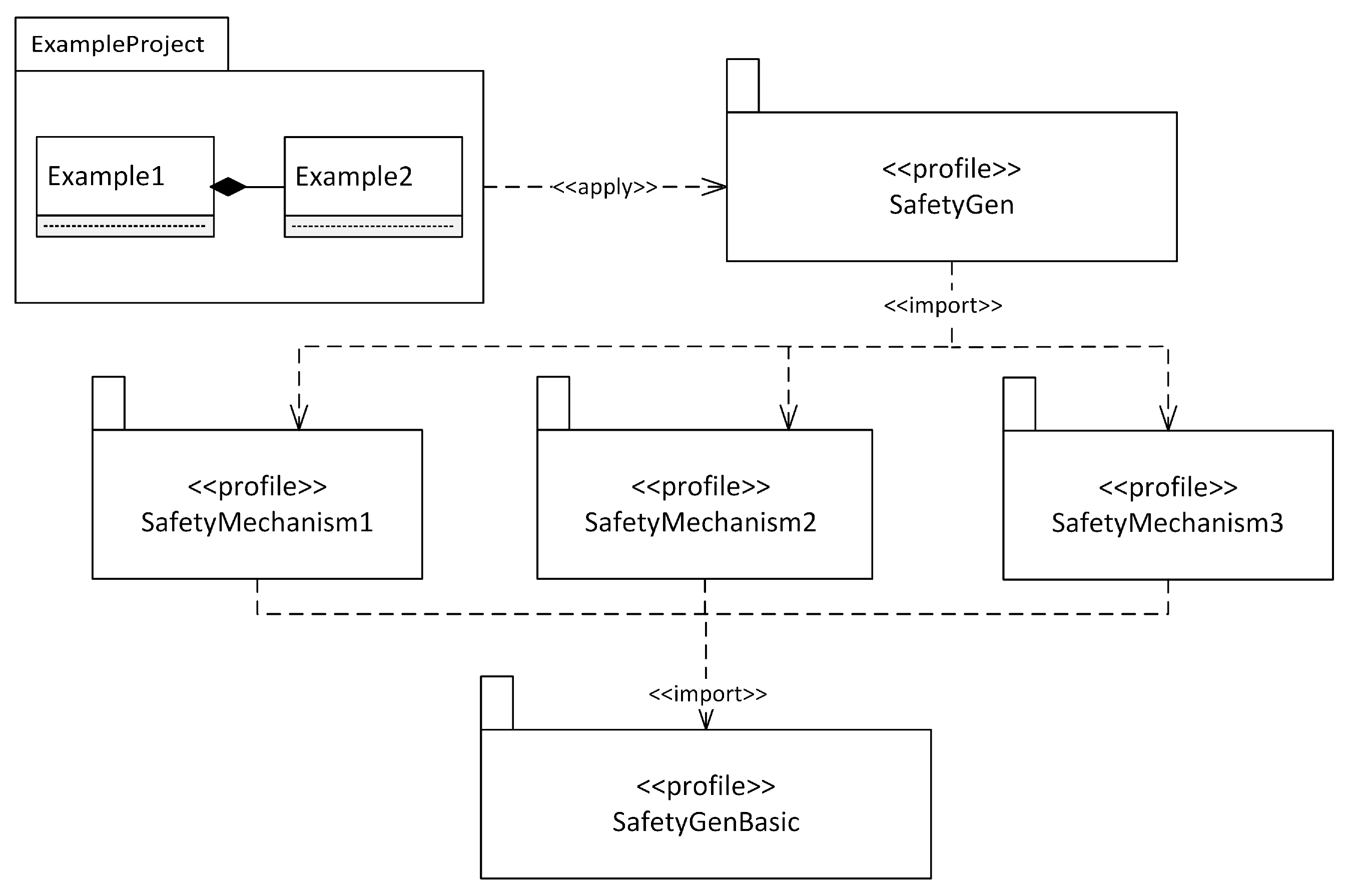

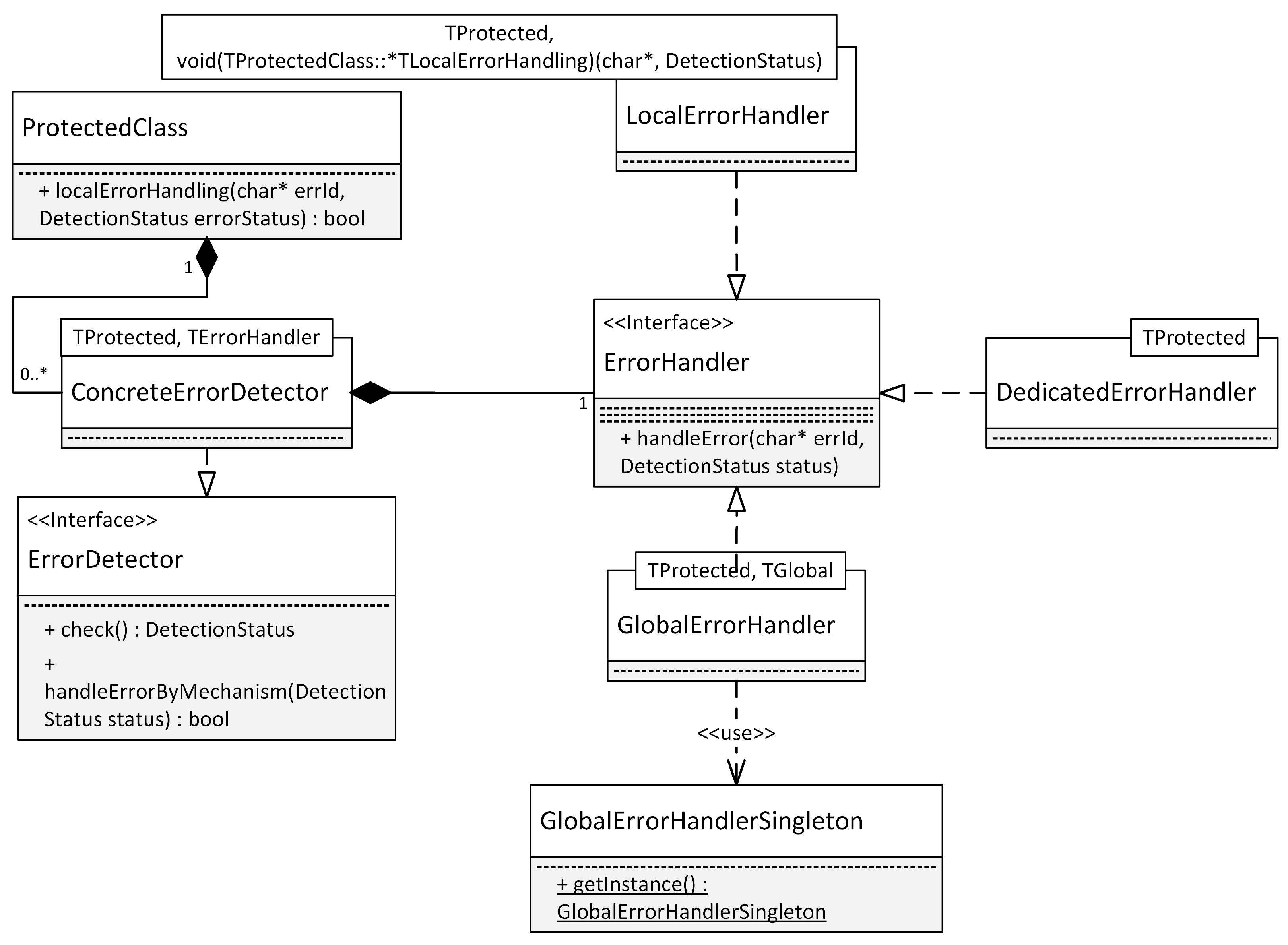

6.3. Model Representation

6.4. Software Architecture

6.5. Model Transformations

- The addition of a class that represents the safety mechanism modeled by the stereotype (cf. ConcreteErrorDetector in Figure 11).

- The addition of a class that represents the error handler used by the safety mechanism (cf. ErrorHandler and its interface realizations in Figure 11).

- The addition of the necessary dependencies to the class that should be protected (cf. ProtectedClass in Figure 11). This includes any dependencies to classes created by the model transformations, e.g., the two classes mentioned above. The class that should be protected may be directly marked with a stereotype, e.g., class FireDetector in Figure 4. Alternatively, the protected class is the class that contains the model element with a safety stereotype. For example, in Figure 4, the sensor classes are classes that should be protected. The reason for this is that they each contain an attribute with at least one safety stereotype, i.e., RangeCheck and UpdateCheck.

- The addition of an instance to the class that should be protected. This instance realizes the safety mechanism. In terms of Figure 11, an instance of ConcreteErrorDetector is added to ProtectedClass. ConcreteErrorDetector uses template parameters to reflect the configuration options of the tagged values of the safety stereotypes. Thus, these template parameters also have to be set accordingly.

- Additional changes to the protected class may be necessary, depending on the generated safety mechanism. For example, consider the getter methods of the sensors in the application example shown in Figure 11. These have to be modified to no longer directly return the value of an attribute. Instead, they have to call the getProtected() method of the corresponding ProtectedAttribute instance, which performs the error detection checks before returning the value of the attribute.
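
The effect of these transformations on a sensor class can be sketched in C++ as a before/after pair. This is a simplified assumption of the result, not the output of the actual transformations: the template parameters `Min`, `Max`, and `MaxAgeMs`, the limits -40 and 125, the class name `TemperatureSensorBefore`, and the use of `std::optional` instead of an error handler are hypothetical. `ProtectedAttribute` and `getProtected()` are the names used in the text, and the 1000 ms update window mirrors the "at least every second" wording of HR2.

```cpp
#include <chrono>
#include <optional>

// Range check and update check, configured via template parameters
// (standing in for the tagged values of the stereotypes).
template <int Min, int Max, long MaxAgeMs>
class ProtectedAttribute {
public:
    using Clock = std::chrono::steady_clock;

    void set(int value) {
        value_ = value;
        lastUpdate_ = Clock::now();
        written_ = true;
    }

    // Performs the checks before returning the value; std::nullopt signals a detected error.
    std::optional<int> getProtected() const {
        if (!written_) return std::nullopt;                     // never updated
        if (value_ < Min || value_ > Max) return std::nullopt;  // range check
        if (Clock::now() - lastUpdate_ > std::chrono::milliseconds(MaxAgeMs)) {
            return std::nullopt;                                // update check
        }
        return value_;
    }

private:
    int value_ = 0;
    bool written_ = false;
    Clock::time_point lastUpdate_{};
};

// Before the transformation: the getter returns the attribute directly.
class TemperatureSensorBefore {
public:
    void setTemperature(int t) { temperature_ = t; }
    int getTemperature() const { return temperature_; }

private:
    int temperature_ = 0;
};

// After the transformation: the attribute is replaced and the getter delegates.
class TemperatureSensor {
public:
    void setTemperature(int t) { temperature_.set(t); }
    std::optional<int> getTemperature() const { return temperature_.getProtected(); }

private:
    ProtectedAttribute<-40, 125, 1000> temperature_;
};
```

Encoding the limits as template parameters keeps the configuration out of runtime state, which fits the description of template parameters reflecting the tagged values of the stereotypes.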

6.6. Prototype Framework

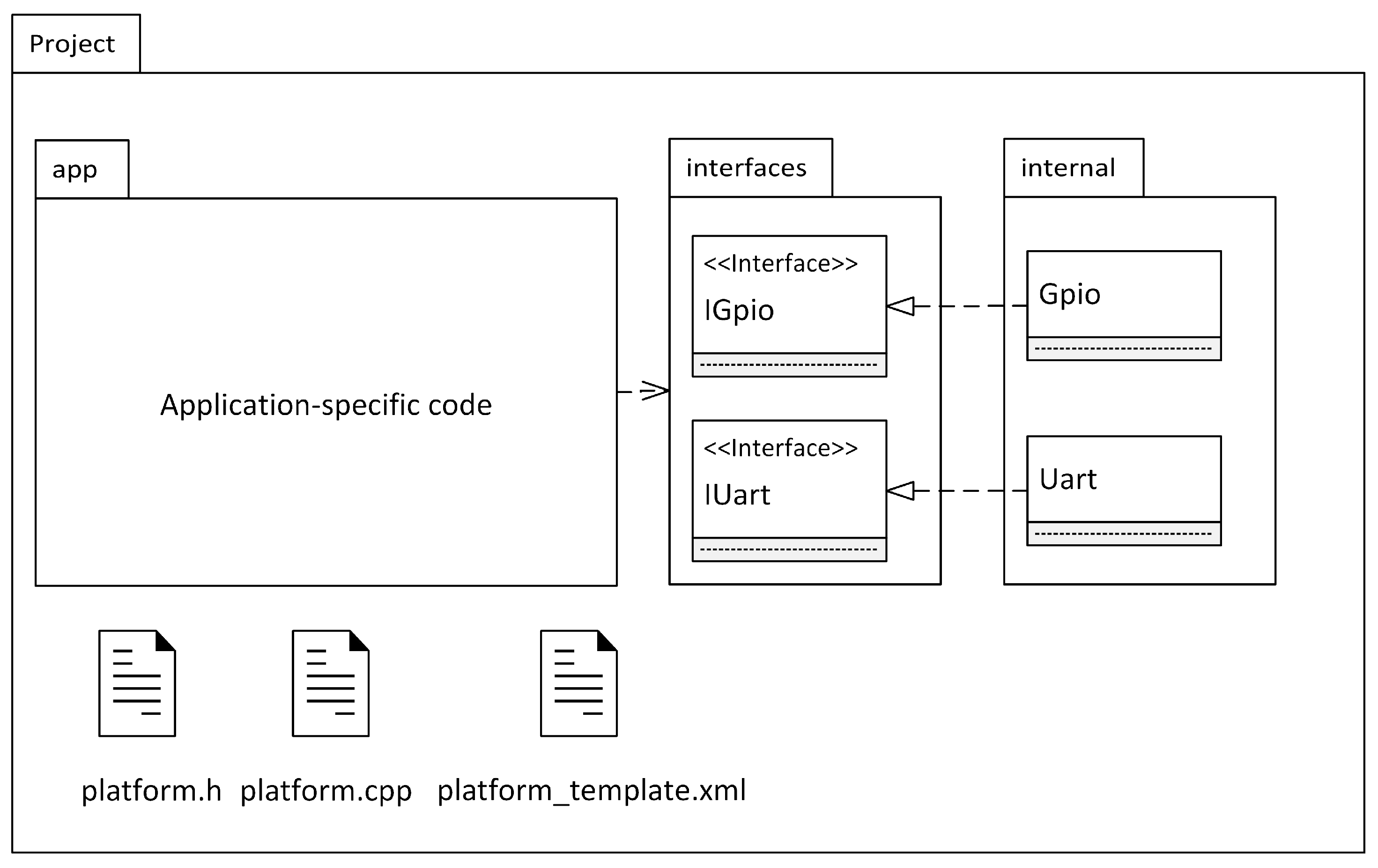

7. Automatic Code Generation of the Initial Configuration of Hardware-Implemented Safety Mechanisms

7.1. Automatically Configuring Hardware Interfaces in the PinConfig Tool from Requirements

7.2. Code Generation of the Initial Configuration of Hardware Interfaces

8. Evaluation

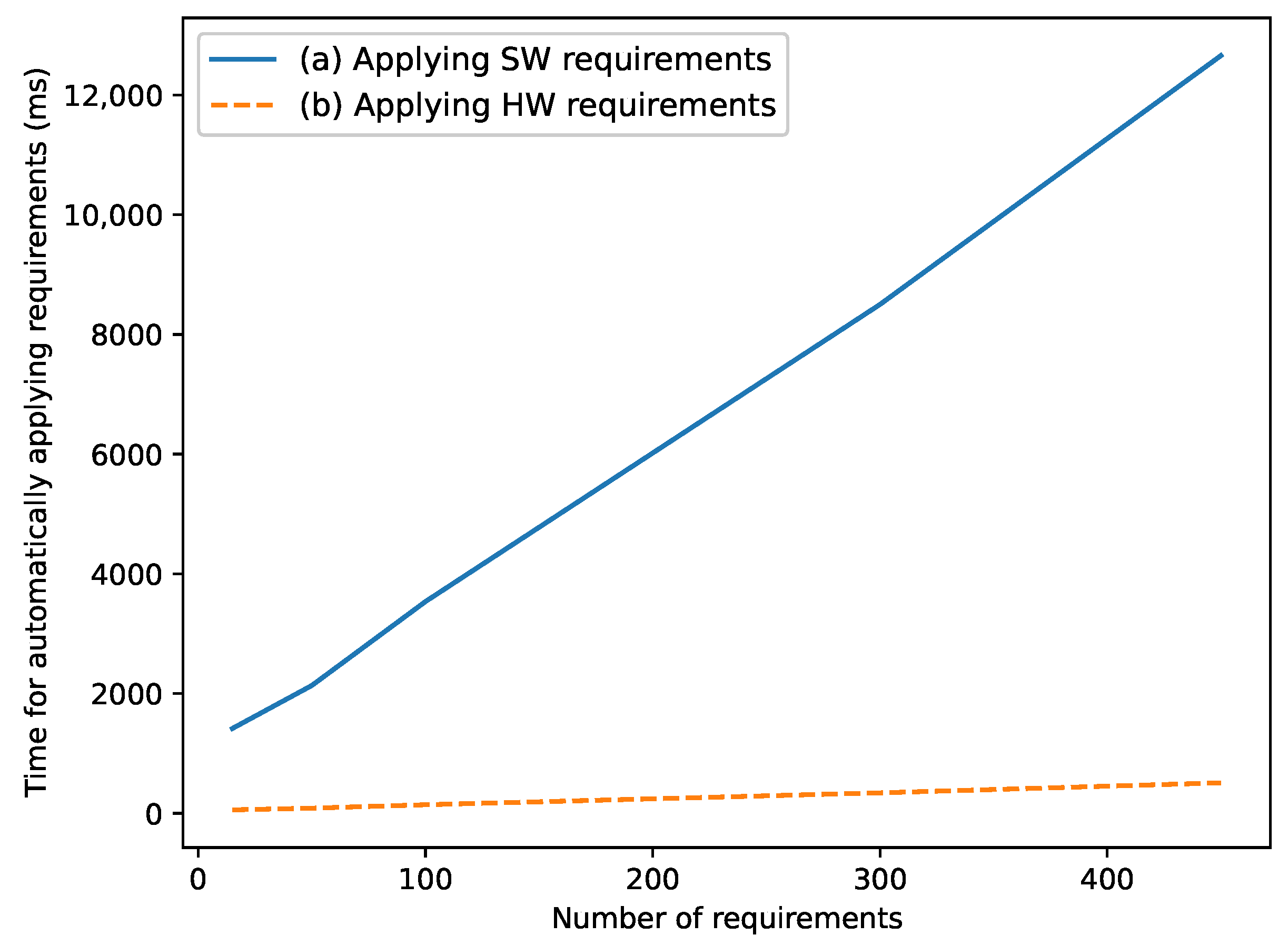

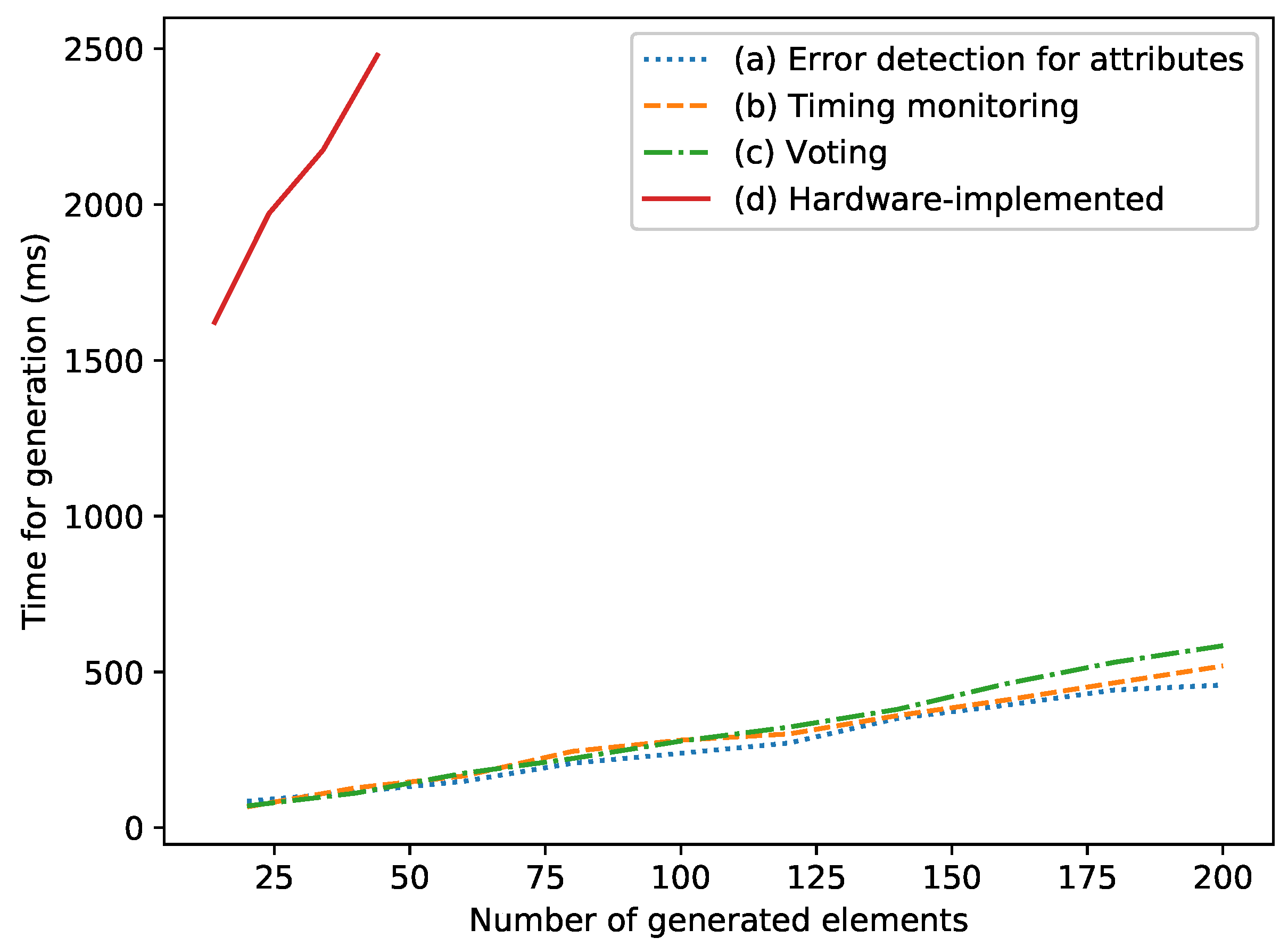

8.1. Scalability of Model Transformations

8.1.1. Runtime of Requirements Parsing

8.1.2. Runtime of Model Transformations

8.2. Setup for Evaluating the Overhead of the Generated Code at Target-Level

8.3. Evaluation of the Memory Overhead at Target-Level

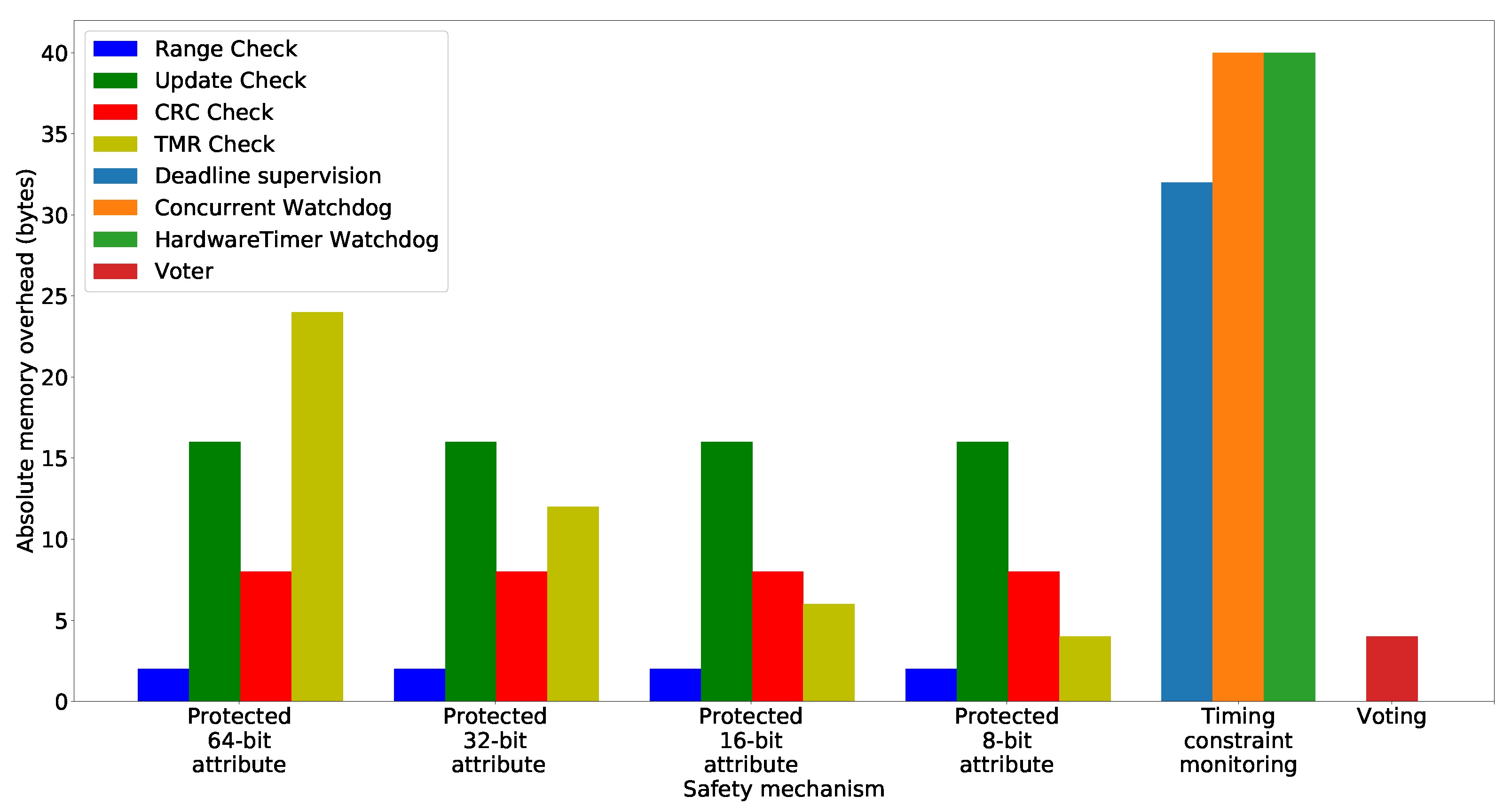

8.3.1. Results: Absolute Memory Overhead

8.3.2. Results: Relative Memory Usage

8.3.3. Discussion of the Memory Overhead

8.4. Evaluation of the Runtime Overhead

8.4.1. Results: Absolute Runtime Overhead

8.4.2. Results: Relative Runtime Overhead

8.4.3. Discussion of the Runtime Overhead

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Storey, N. Safety-Critical Computer Systems; Addison-Wesley: Harlow, UK, 1996.

- Johnston, P.; Harris, R. The Boeing 737 MAX Saga: Lessons for Software Organizations. Softw. Qual. Prof. Mag. 2019, 21, 4–12.

- Neumann, P.G. Computer Related Risks; ACM Press/Addison-Wesley Publishing Co.: New York, NY, USA, 1995.

- International Electrotechnical Commission. Medical Device Software—Software Life-Cycle Processes: IEC 62304; International Electrotechnical Commission: Geneva, Switzerland, 2011.

- Radio Technical Commission for Aeronautics; European Organization for Civil Aviation Equipment. Software Considerations in Airborne Systems and Equipment Certification: DO-178; Radio Technical Commission for Aeronautics: Washington, DC, USA, 2006.

- International Organization for Standardization. ISO 26262 Road Vehicles—Functional Safety, 2nd ed.; ISO: Geneva, Switzerland, 2018.

- International Electrotechnical Commission. IEC 61508 Edition 2.0. Functional Safety for Electrical/Electronic/Programmable Electronic Safety-Related Systems; International Electrotechnical Commission: Geneva, Switzerland, 2010.

- IBM. Rational Rhapsody Developer. 2021. Available online: https://www.ibm.com/us-en/marketplace/uml-tools (accessed on 12 October 2021).

- The Eclipse Foundation. Eclipse Papyrus Modeling Environment. 2021. Available online: https://www.eclipse.org/papyrus (accessed on 12 October 2021).

- Huning, L.; Osterkamp, T.; Schaarschmidt, M.; Pulvermüller, E. Seamless Integration of Hardware Interfaces in UML-based MDSE Tools. In Proceedings of the 16th International Conference on Software Technologies, ICSOFT 2021, Online Streaming, 6–8 July 2021; Fill, H., van Sinderen, M., Maciaszek, L.A., Eds.; 2021; pp. 233–244.

- Bunse, C.; Gross, H.G.; Peper, C. Applying a Model-based Approach for Embedded System Development. In Proceedings of the 33rd EUROMICRO Conference on Software Engineering and Advanced Applications (EUROMICRO 2007), Lübeck, Germany, 28–31 August 2007; pp. 121–128.

- Karsai, G.; Sztipanovits, J.; Ledeczi, A.; Bapty, T. Model-integrated development of embedded software. Proc. IEEE 2003, 91, 145–164.

- Hatcliff, J.; Wassyng, A.; Kelly, T.; Comar, C.; Jones, P. Certifiably Safe Software-dependent Systems: Challenges and Directions. In Proceedings of the Conference on The Future of Software Engineering (FOSE 2014), Hyderabad, India, 31 May–7 June 2014; ACM: New York, NY, USA, 2014; pp. 182–200.

- Cleland-Huang, J.; Rahimi, M. A Case Study: Injecting Safety-Critical Thinking into Graduate Software Engineering Projects. In Proceedings of the 2017 IEEE/ACM 39th International Conference on Software Engineering: Software Engineering Education and Training Track (ICSE-SEET), Buenos Aires, Argentina, 20–28 May 2017; pp. 67–76.

- Huning, L.; Iyenghar, P.; Pulvermueller, E. UML Specification and Transformation of Safety Features for Memory Protection. In Proceedings of the 14th International Conference on Evaluation of Novel Approaches to Software Engineering, Heraklion, Greece, 4–5 May 2019; pp. 281–288.

- Huning, L.; Iyenghar, P.; Pulvermueller, E. A UML Profile for Automatic Code Generation of Optimistic Graceful Degradation Features at the Application Level. In Proceedings of the 8th International Conference on Model-Driven Engineering and Software Development—Volume 1: MODELSWARD, Valletta, Malta, 25–27 February 2020; pp. 336–343.

- Huning, L.; Iyenghar, P.; Pulvermueller, E. A Workflow for Automatically Generating Application-level Safety Mechanisms from UML Stereotype Model Representations. In Proceedings of the 15th International Conference on Evaluation of Novel Approaches to Software Engineering—Volume 1: ENASE, Online Streaming, 5–6 May 2020; pp. 216–228.

- Huning, L.; Iyenghar, P.; Pulvermüller, E. A Workflow for Automatic Code Generation of Safety Mechanisms via Model-Driven Development. In Evaluation of Novel Approaches to Software Engineering; Ali, R., Kaindl, H., Maciaszek, L.A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 420–443.

- Huning, L.; Iyenghar, P.; Pulvermueller, E. UML-based Model-Driven Code Generation of Error Detection Mechanisms. In Proceedings of the 15th International Conference on Software Engineering Advances, Porto, Portugal, 18–22 October 2020; pp. 98–105.

- Jouault, F.; Allilaire, F.; Bezivin, J.; Kurtev, I. ATL: A model transformation tool. Sci. Comput. Program. 2008, 72, 31–39.

- Borchert, C.; Schirmeier, H.; Spinczyk, O. Generative Software-based Memory Error Detection and Correction for Operating System Data Structures. In Proceedings of the 2013 43rd Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Washington, DC, USA, 24–27 June 2013; pp. 1–12.

- Trindade, R.; Bulwahn, L.; Ainhauser, C. Automatically Generated Safety Mechanisms from Semi-Formal Software Safety Requirements. In Computer Safety, Reliability, and Security; Bondavalli, A., Di Giandomenico, F., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 278–293.

- Hu, T.; Bertolotti, I.C.; Navet, N. Towards seamless integration of N-version programming in model-based design. In Proceedings of the 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Limassol, Cyprus, 12–15 September 2017; pp. 1–8.

- Mader, R.; Grießnig, G.; Armengaud, E.; Leitner, A.; Kreiner, C.; Bourrouilh, Q.; Steger, C.; Weiß, R. A Bridge from System to Software Development for Safety-Critical Automotive Embedded Systems. In Proceedings of the 2012 38th Euromicro Conference on Software Engineering and Advanced Applications, Izmir, Turkey, 5–8 September 2012; pp. 75–79.

- Pezzé, M.; Wuttke, J. Model-driven generation of runtime checks for system properties. Int. J. Softw. Tools Technol. Transf. 2016, 18, 1–19.

- Wang, K.; Shen, W. Runtime Checking of UML Association-Related Constraints. In Proceedings of the 5th International Workshop on Dynamic Analysis, Minneapolis, MN, USA, 20–26 May 2007; p. 3.

- Hein, C.; Ritter, T.; Wagner, M. System monitoring using constraint checking as part of model based system management. In Models in Software Engineering; Springer Berlin Heidelberg: Berlin/Heidelberg, Germany, 2008; pp. 206–211.

- Richters, M.; Gogolla, M. Aspect-Oriented Monitoring of UML and OCL Constraints. Available online: https://www.researchgate.net/publication/2908480_Aspect-Oriented_Monitoring_of_UML_and_OCL_Constraints (accessed on 12 October 2021).

- Rosenblum, D.S. A Practical Approach to Programming with Assertions. IEEE Trans. Softw. Eng. 1995, 21, 19–31.

- Voas, J.M.; Miller, K.W. Putting assertions in their place. In Proceedings of the 1994 IEEE International Symposium on Software Reliability Engineering, Monterey, CA, USA, 6–9 November 1994; pp. 152–157.

- Tanzi, T.J.; Textoris, R.; Apvrille, L. Safety properties modelling. In Proceedings of the 2014 7th International Conference on Human System Interactions (HSI), Lisbon, Portugal, 16–18 June 2014; pp. 198–202.

- Beckers, K.; Côté, I.; Frese, T.; Hatebur, D.; Heisel, M. Systematic Derivation of Functional Safety Requirements for Automotive Systems. In Computer Safety, Reliability, and Security; Bondavalli, A., Di Giandomenico, F., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 65–80.

- Yakymets, N.; Perin, M.; Lanusse, A. Model-driven multi-level safety analysis of critical systems. In Proceedings of the 9th Annual IEEE International Systems Conference, Vancouver, BC, Canada, 13–16 April 2015; pp. 570–577.

- Kan, S.; Huang, Z. Detecting safety-related components in statecharts through traceability and model slicing. Softw. Pract. Exp. 2018, 48, 428–448.

- Elektrobit. EB Tresos Safety. 2021. Available online: https://www.elektrobit.com/products/ecu/eb-tresos/functional-safety (accessed on 12 October 2021).

- Vector. PrEEVision. 2021. Available online: https://www.vector.com/int/en/products/products-a-z/software/preevision/ (accessed on 12 October 2021).

- SAFEADAPT EU-Project (2013–2017). Safe Adaptive Software for Fully Electric Vehicles. 2017. Available online: www.safeadapt.eu (accessed on 12 October 2021).

- Penha, D.; Weiss, G.; Stante, A. Pattern-Based Approach for Designing Fail-Operational Safety-Critical Embedded Systems. In Proceedings of the 2015 IEEE 13th International Conference on Embedded and Ubiquitous Computing, Porto, Portugal, 21–23 October 2015; pp. 52–59.

- Weiss, G.; Schleiss, P.; Drabek, C. Towards Flexible and Dependable E/E-Architectures for Future Vehicles. In Proceedings of the 4th International Workshop on Critical Automotive Applications: Robustness & Safety (CARS 2016), Göteborg, Sweden, 6 September 2016.

- Ruiz, A.; Juez, G.; Schleiss, P.; Weiss, G. A safe generic adaptation mechanism for smart cars. In Proceedings of the 2015 IEEE 26th International Symposium on Software Reliability Engineering (ISSRE), Gaithersburg, MD, USA, 2–5 November 2015; pp. 161–171.

- SAFURE EU-Project (2015–2018). Safety and Security by Design for Interconnected Mixed-Critical Cyber-Physical Systems. 2018. Available online: https://cordis.europa.eu/project/id/644080 (accessed on 12 October 2021).

- Moestl, M.; Thiele, D.; Ernst, R. Invited: Towards fail-operational Ethernet based in-vehicle networks. In Proceedings of the 2016 53rd ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 5–9 June 2016; pp. 1–6.

- Thiele, D.; Ernst, R.; Diemer, J. Formal Worst-Case Timing Analysis of Ethernet TSN’s Time-Aware and Peristaltic Shapers. In Proceedings of the IEEE Vehicular Networking Conference (VNC), Kyoto, Japan, 16–18 December 2016; pp. 251–258.

- Thiele, D.; Ernst, R. Formal analysis based evaluation of software defined networking for time-sensitive Ethernet. In Proceedings of the 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 5–9 June 2016; pp. 31–36.

- Fernandez, G.; Abella, J.; Quinones, E.; Fossati, L.; Zulianello, M.; Vardanega, T.; Cazorla, F.J. Seeking Time-Composable Partitions of Tasks for COTS Multicore Processors. In Proceedings of the 2015 IEEE 18th International Symposium on Real-Time Distributed Computing, Auckland, New Zealand, 13–17 April 2015; pp. 208–217.

- Girbal, S.; Jean, X.; Le Rhun, J.; Pérez, D.G.; Gatti, M. Deterministic platform software for hard real-time systems using multi-core COTS. In Proceedings of the 2015 IEEE/AIAA 34th Digital Avionics Systems Conference (DASC), Prague, Czech Republic, 13–17 September 2015; pp. 8D4-1–8D4-15.

- Fernandez, G.; Jalle, J.; Abella, J.; Quinones, E.; Vardanega, T.; Cazorla, F.J. Computing Safe Contention Bounds for Multicore Resources with Round-Robin and FIFO Arbitration. IEEE Trans. Comput. 2016.

- Antonino, P.O.; Keuler, T.; Nakagawa, E.Y. Towards an approach to represent safety patterns. In Proceedings of the Seventh International Conference on Software Engineering Advances, Lisbon, Portugal, 18–23 November 2012; pp. 228–237.

- Subasi, O.; Unsal, O.; Labarta, J.; Yalcin, G.; Cristal, A. CRC-Based Memory Reliability for Task-Parallel HPC Applications. In Proceedings of the 2016 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Chicago, IL, USA, 23–27 May 2016; pp. 1101–1112.

- Pattabiraman, K.; Grover, V.; Zorn, B.G. Samurai: Protecting Critical Data in Unsafe Languages. In Proceedings of the 3rd ACM SIGOPS/EuroSys European Conference on Computer Systems 2008, Glasgow, UK, 1–4 April 2008; pp. 219–232.

- Chen, D.; Messer, A.; Bernadat, P.; Fu, G.; Dimitrijevic, Z.; Lie, D.J.F.; Mannaru, D.; Riska, A.; Milojicic, D. JVM Susceptibility to Memory Errors. In Proceedings of the 2001 Symposium on Java™ Virtual Machine Research and Technology Symposium, Monterey, CA, USA, 23–24 April 2001; pp. 67–78.

- Arora, A.; Kulkarni, S. Detectors and Correctors: A Theory of Fault-Tolerance Components. In Proceedings of the 18th International Conference on Distributed Computing Systems ICDCS’98, Amsterdam, The Netherlands, 26–29 May 1998; pp. 436–443.

- Arora, A.; Kulkarni, S. Component Based Design of Multitolerant Systems. IEEE Trans. Softw. Eng. 1998, 24, 63–78.

- Lin, Y.; Kulkarni, S.; Jhumka, A. Automation of fault-tolerant graceful degradation. Distrib. Comput. 2019, 32, 1–25.

- Chen, J.; Kulkarni, S. MR4UM: A Framework for Adding Fault Tolerance to UML State Diagrams. Theor. Comput. Sci. 2013, 496, 17–33.

- Noyer, A.; Iyenghar, P.; Engelhardt, J.; Pulvermueller, E.; Bikker, G. A model-based framework encompassing a complete workflow from specification until validation of timing requirements in embedded software systems. Softw. Qual. J. 2016, 25, 671–701.

- Iyenghar, P.; Wessels, S.; Noyer, A.; Pulvermueller, E. Model-based tool support for energy-aware scheduling. In Proceedings of the Forum on Specification and Design Languages, Bremen, Germany, 14–16 September 2016.

- Iyenghar, P.; Pulvermueller, E. A Model-Driven Workflow for Energy-Aware Scheduling Analysis of IoT-Enabled Use Cases. IEEE Internet Things J. 2018, 5, 4914–4925.

- O’Shea, D.; Ortin, F.; Geary, K. A virtualized test automation framework: A DellEMC case study of test automation practice. Softw. Pract. Exp. 2018, 49, 329–337.

- Nooraei Abadeh, M.; Ajoudanian, S. A model-driven framework to enhance the consistency of logical integrity constraints: Introducing integrity regression testing. Softw. Pract. Exp. 2019, 49, 274–300.

- Uzun, B.; Tekinerdogan, B. Architecture conformance analysis using model-based testing: A case study approach. Softw. Pract. Exp. 2019, 49, 423–448.

- Robinson, R.M.; Anderson, K.J. SIL Rating Fire Protection Equipment. In Proceedings of the 8th Australian Workshop on Safety Critical Systems and Software—Volume 33, Canberra, Australia, 1 October 2003; pp. 89–97.

- Kim, S.; Kim, Y. A case study on an evaluation procedure of hardware SIL for fire detection system. Int. J. Appl. Eng. Res. 2017, 12, 359–364.

- Parr, T. ANTLR. 2021. Available online: https://www.antlr.org/index.html (accessed on 12 October 2021).

- Armoush, A. Design Patterns for Safety-Critical Embedded Systems. Ph.D. Thesis, RWTH Aachen University, Aachen, Germany, 2010.

- Kolovos, D.; Rose, L.; Garcia-Dominguez, A.; Paige, R. The Epsilon Book. Available online: https://www.eclipse.org/epsilon/doc/book/ (accessed on 12 October 2021).

- Iyenghar, P.; Huning, L.; Pulvermueller, E. Early Synthesis of Timing Models in AUTOSAR-based Automotive Embedded Software Systems. In Proceedings of the 8th International Conference on Model-Driven Engineering and Software Development—Volume 1: MODELSWARD, Valletta, Malta, 25–27 February 2020; pp. 26–38.

- Iyenghar, P.; Huning, L.; Pulvermueller, E. Automated End-to-End Timing Analysis of AUTOSAR-based Causal Event Chains. In Proceedings of the 15th International Conference on Evaluation of Novel Approaches to Software Engineering—Volume 1: ENASE, Online Streaming, 5–6 May 2020; pp. 477–489.

- Iyenghar, P.; Huning, L.; Pulvermueller, E. Model-Based Timing Analysis of Automotive Use Case Developed in UML. In Evaluation of Novel Approaches to Software Engineering; Ali, R., Kaindl, H., Maciaszek, L.A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 360–385.

- Borchert, C.; Schirmeier, H.; Spinczyk, O. Generic soft-error detection and correction for concurrent data structures. IEEE Trans. Dependable Secur. Comput. 2017, 14, 22–36.

- Object Management Group. OMG Systems Modeling Language Version 1.6; Technical Report; Object Management Group: Needham, MA, USA, 2019.

| Safety Mechanism | Time (s) | Safety Mechanism | Time (s) |
|---|---|---|---|
| Range Check (access) | | Range Check (modify) | |
| Update Check (access) | | Update Check (modify) | |
| CRC Check (access) | | CRC Check (modify) | |
| TMR Check (access) | | TMR Check (modify) | |
| Deadline Supervision | | Majority Voting | |
| Concurrent Watchdog | | Median Voting | |
| HWTimer Watchdog | | Average Voting | |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huning, L.; Pulvermueller, E. Automatic Code Generation of Safety Mechanisms in Model-Driven Development. Electronics 2021, 10, 3150. https://doi.org/10.3390/electronics10243150
