Abstract

Medical imaging is considered one of the most important advances in the history of medicine and has become an essential part of the diagnosis and treatment of patients. Earlier prediction and treatment have been driving the acquisition of higher image resolutions as well as the fusion of different modalities, raising the need for sophisticated hardware and software systems for medical image registration, storage, analysis, and processing. In this scenario, and given the new clinical pipelines and the huge clinical burden of hospitals, these systems are often required to provide both highly accurate and real-time processing of large amounts of imaging data. Additionally, lowering the price of each part of the imaging equipment, as well as of its development and implementation, and increasing its lifespan are crucial to minimize the cost and lead to more accessible healthcare. This paper focuses on the evolution and the application of different hardware architectures (namely, CPU, GPU, DSP, FPGA, and ASIC) in medical imaging, presenting various specific examples and discussing different options depending on the specific application. The main purpose is to provide a general introduction to hardware acceleration techniques for medical imaging researchers and developers who need to accelerate their implementations.

1. Introduction

Medical imaging is a set of techniques and methods that noninvasively acquire a variety of images of the body’s internal aspects by means of several effects and interactions with different tissues, revolutionizing modern diagnostic imaging, radiology, and nuclear medicine [1,2]. Currently, there is a wide range of imaging modalities, e.g., ultrasound (US), conventional radiology, arthroscopy, computed tomography (CT), magnetic resonance imaging (MR), bone scintigraphy, positron emission tomography (PET), and combined technologies such as PET/MR, that provide complementary information, and it is the radiologist who determines the most appropriate examination in a specific clinical situation [3]. For example, CT images provide information on the densities of different tissues; MR images can provide anatomical, functional, perfusion or diffusion information depending on the different sequences used; and PET images provide metabolic information, perfusion or receptor occupation and binding by detecting the concentration of radiotracers within the body.

Medical imaging applications are very demanding systems. Earlier prediction and treatment have been driving the acquisition of higher image resolutions as well as the fusion of different modalities, raising the need for sophisticated software/hardware systems for medical image registration, storage, analysis, and processing [4,5]. These applications demand complex computations and real-time processing of images whose number, size, resolution, and bit depth tend to increase as the technology evolves. In this scenario, and given the new clinical pipelines and the huge clinical burden of hospitals, these systems are often required to provide both highly accurate and real-time processing of large amounts of imaging data. This is crucial because processing time is directly related to patient discomfort and generally higher clinical costs, hence the importance of achieving faster and better imaging studies. Additionally, lowering the price of each part of the imaging equipment, as well as of its development and implementation, and increasing its lifespan are crucial to provide more affordable and accessible healthcare in general [6,7]. Given these circumstances, the development and implementation of novel hardware architectures have been an essential requirement when dealing with imaging technologies and real-time image processing in healthcare applications.

In fact, the most common and flexible platform to code or develop on is probably the personal computer or PC. As such, many applications are initially developed and refined for Central Processing Units (CPUs). While their computing capabilities might not match up to more specialized platforms, CPUs must not be underestimated. Their computational performance has increased drastically since the dawning of this technology, either by increasing their clock frequency or by increasing the number of cores. Currently, most CPUs achieve a compromise between the number of cores and clock frequency, allowing different combinations of performance and power-draw to be achieved. This trend has also enabled multi-processing using threads.

In this respect, the first option usually considered to reduce computation time is hardware with high computational power. Although the processing power of CPUs in PCs continues to increase, a CPU may not be the best choice for every application. This is particularly relevant when designing a system that requires both low power consumption and high performance.

In the literature, the most discussed hardware accelerators for computer vision and image processing algorithms can be grouped into Graphics Processing Units (GPUs), Digital Signal Processors (DSPs), and Field Programmable Gate Arrays (FPGAs) [8]. In this review, Application Specific Integrated Circuits (ASICs) are also included, as they have been widely used for the implementation of certain medical imaging equipment parts.

Despite being a more specialized technology, GPU platforms fit well in the medical imaging niche, offering a way to speed up certain computational tasks and algorithms (compared to CPUs) while still maintaining a certain amount of flexibility. As such, GPUs are often used when CPUs fail to meet the needs of a specific application but a more specialized platform cannot be used either.

While usually not the fastest platform, DSPs specialize in digital signal processing, and as such, are the best performers in that field. They barely offer any flexibility, so it is common to use them combined with other hardware solutions in complex designs where fast digital signal processing is needed, yet it is not the only kind of processing performed.

FPGAs are integrated circuits whose interconnect and hardware functionality can both be reconfigured after fabrication using hardware description languages (HDLs). This type of device is based on an array of logic blocks, such as lookup tables (LUTs), flip-flops and logic gates, connected through programmable interconnects along with input/output ports. Therefore, FPGAs allow custom hardware design with the flexibility to make modifications to correct design errors or introduce improvements. Thus, FPGAs are recommended when the system specifications demand high performance, usually with real-time processing. Indeed, they are often used to avoid the complexity and lower flexibility of ASIC design (described next).

ASICs are devices made specifically to fulfill the required functionality; thus, as their name suggests, they are integrated circuits tailored for specific applications. Consequently, ASICs provide smaller form factors, higher performance, and lower power consumption, since they are manufactured to custom design specifications. Furthermore, their implementation offers a significantly lower cost per unit for very high-volume designs. However, designing an ASIC is a long, complex, and high-risk endeavor that requires dedicating many resources.

Nowadays, modern hardware architectures have evolved to provide competitive clock rates for use in high-capacity imaging equipment. In addition, all architectures have benefited from increased logic density, as well as other features such as integration of dedicated memory and high-speed serial input/output. As such, all modern hardware architectures may offer competitive solutions in the image processing space depending on system requirements and flexibility.

Several reviews on hardware accelerators can be found in the literature. However, none of these reviews offers a general overview of all the hardware technologies in the field of medical imaging. While the most comprehensive reviews analyze the different hardware technologies [9,10,11], they do so in the field of artificial intelligence and/or deep learning algorithms in general.

On the other hand, those reviews focused on medical image processing generally do not cover all the hardware possibilities but only one of them. Different surveys can be found focused on the use of GPU for medical imaging in general [12,13], but also more specifically on medical image reconstruction [14], segmentation [15] or registration [16,17]. Similar results appear related to the use of FPGA, both in general medical image processing [18] and in specific applications [19]. A specific review on photon counting ASICs for spectroscopic X-ray imaging, with emphasis on the CT medical imaging application, is presented in [20]. To the best of our knowledge, there are no specific reviews on DSP technologies focused on medical image processing.

The present paper offers an introduction to the different hardware accelerators and their applications in medical imaging, aimed at researchers and developers in the field of medical imaging who are interested in using different hardware architectures to speed up their implementations. In the next section, a brief presentation of the evolution of each technology is offered, followed by several examples of its application in medical imaging. In the discussion, the technologies are compared in depth, highlighting strengths and drawbacks, and general guidelines for selecting the proper technology for every need are presented.

2. Evolution of Hardware Architectures and Their Applications in Real-Time Medical Imaging

In this section, the evolution of the different architectures (CPUs, GPUs, DSPs, FPGAs and ASICs) and their use and performance in medical imaging through several specific examples are explained.

2.1. Central Processing Units (CPUs)

2.1.1. Evolution of CPU Architectures

Consumer CPU architectures have traditionally improved their performance by increasing the clock frequency and, since the 1990s, by adding parallel capabilities. In the Intel x86 family, Single Instruction Multiple Data (SIMD) support was introduced under the name MultiMedia eXtensions (MMX) in 1996 for the release of the Intel Pentium MMX processor [21]. MMX comprised eight 64-bit wide registers for integer data and an instruction set of 57 operations. It targeted the early multimedia needs, mainly those of the video game industry and of imagery driven by the emergence of the Internet. A single instruction could operate on 64 bits of packed integer data (such as eight independent bytes or two 32-bit words) simultaneously, saving loop iterations in integer data processing (such as images) [22].
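To make the packed-integer idea concrete, the following minimal Python sketch emulates in software what a single packed byte addition does: eight 8-bit pixels are packed into one 64-bit word and added lane by lane with wraparound. The function names (`pack_u8`, `unpack_u8`, `packed_add_u8`) and the pixel values are illustrative assumptions, not part of any Intel API; the bit-masking trick only imitates what the hardware performs in one instruction.

```python
LOW7 = 0x7F7F7F7F7F7F7F7F   # lower 7 bits of every byte lane
HIGH1 = 0x8080808080808080  # top bit of every byte lane


def pack_u8(values):
    """Pack eight 8-bit integers into one 64-bit word (little-endian lanes)."""
    if len(values) != 8:
        raise ValueError("exactly eight byte values are required")
    return int.from_bytes(bytes(values), "little")


def unpack_u8(word):
    """Split a 64-bit word back into its eight byte lanes."""
    return list(word.to_bytes(8, "little"))


def packed_add_u8(a, b):
    """Add eight byte lanes at once with per-lane wraparound (modulo 256)."""
    # Add the low 7 bits of each lane; this sum cannot carry into the next lane.
    low_sum = (a & LOW7) + (b & LOW7)
    # Fold the top bits back in with XOR so no carry crosses a lane boundary.
    return low_sum ^ ((a ^ b) & HIGH1)


# Hypothetical row of eight pixels and a per-pixel brightness offset.
pixels = [10, 20, 30, 40, 50, 60, 70, 250]
offset = [5, 5, 5, 5, 5, 5, 5, 10]
result = unpack_u8(packed_add_u8(pack_u8(pixels), pack_u8(offset)))
print(result)
```

One call to `packed_add_u8` replaces eight scalar additions, which is the loop-iteration saving described above; the last lane wraps around because 250 + 10 exceeds the 8-bit range.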

With the 3D video game industry in mind, in 1998, AMD released an extension for supporting floating-point operations on the MMX registers called 3DNow! technology [23]. In 1999, Intel released the Pentium III processor with new 128-bit floating-point registers for 4 single precision 32-bit floating-point data called SSE (Streaming SIMD Extensions) and an instruction set of 70 operations. After several updates of the SSE instruction set for packing different integer and floating-point data configurations in the 128-bit registers, Intel launched the Advanced Vector eXtensions (AVX) in 2011 [24]. AVX registers double the 128-bit wide SSE registers to 256-bits, adding extra updates to the instruction set, which were again renewed in 2014 with the AVX2 SIMD technology. Initially for the Intel Xeon Phi HPC platform, an update of 256 to 512-bit wide registers was released with the AVX-512 extensions [25].
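The effect of widening the registers can be estimated with simple arithmetic. The sketch below, under the assumption of 32-bit single-precision pixels and a hypothetical image row of 512 pixels, computes how many values fit in one register and how many vector iterations are needed to traverse the row. It ignores alignment, memory bandwidth and instruction latency, so it gives an upper bound on the benefit rather than a measured speedup.

```python
# Register widths in bits, as given in the text.
REGISTER_BITS = {"SSE": 128, "AVX": 256, "AVX-512": 512}


def lanes(register_bits, element_bits):
    """Number of elements of the given width that fit in one register."""
    return register_bits // element_bits


def vector_iterations(n_elements, register_bits, element_bits):
    """Loop iterations needed when each iteration handles one full register."""
    per_iteration = lanes(register_bits, element_bits)
    return -(-n_elements // per_iteration)  # ceiling division


ROW_PIXELS = 512   # hypothetical row length
FLOAT_BITS = 32    # single-precision pixels (assumption)

summary = {
    name: (lanes(bits, FLOAT_BITS), vector_iterations(ROW_PIXELS, bits, FLOAT_BITS))
    for name, bits in REGISTER_BITS.items()
}
for name, (n_lanes, n_iter) in summary.items():
    print(f"{name}: {n_lanes} floats per register, {n_iter} iterations per row")
```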

All these SIMD facilities were added for parallel processing and were especially suited for image processing tasks. An optimized compiler configuration would try to automate the use of these instructions and registers, though sometimes a good data layout is needed to ease the automatic vectorization.

For many years, Moore’s law and Dennard scaling delivered greater performance: roughly every two years, more transistors could be packed per chip, and they could run faster with less power consumption [26]. However, physical limits have been reached, and the free speedup obtained with each new computer generation has slowed down considerably [27,28]. Over the past two decades, CPUs have evolved from single-core architectures (only one processor on the die to process instructions) to multicore architectures combining several independent cores on a single die. Nowadays, multicore architectures have become very popular, and desktop CPUs as well as high-end computing machines improve their computational performance by means of parallel resources.

On the other hand, as stated by Amdahl’s Law, the performance of parallel computing is limited by its serial components [29,30]. Although multicore CPUs offer outstanding instruction execution speed with reduced power consumption, optimizing performance of individual processors and then incorporating them by interconnection between processors and access to shared resources on a single die is a non-trivial task [31,32].
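Amdahl’s Law is commonly written as S(N) = 1 / ((1 - p) + p / N), where p is the fraction of the run time that can be parallelized and N is the number of cores. The following short sketch evaluates this formula; the parallel fraction of 0.9 is a hypothetical value chosen only for illustration and is not taken from any of the cited studies.

```python
def amdahl_speedup(parallel_fraction, n_cores):
    """Theoretical speedup on n_cores when parallel_fraction of the work scales."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_cores < 1:
        raise ValueError("n_cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_cores)


def speedup_limit(parallel_fraction):
    """Upper bound on the speedup as the number of cores grows without limit."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0.0 else 1.0 / serial_fraction


P = 0.9  # hypothetical: 90% of the run time is parallelizable
for cores in (1, 2, 4, 8, 16, 64):
    print(cores, round(amdahl_speedup(P, cores), 2))
print("limit:", round(speedup_limit(P), 2))
```

With 10% serial work, eight cores yield a speedup below 5 and no number of cores can exceed a factor of 10, which illustrates why the serial component dominates at high core counts.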

In this scenario, concurrency primitives such as mutexes, locks, and monitors can be employed to synchronize computing threads and avoid race conditions in shared memory problems. However, their use becomes extremely difficult when scaling from two to more computing threads. This situation led to the development of solutions ranging from the low-level POSIX Threads (Pthreads) [33] to higher level open proposals such as the Open Multi-Processing specification (OpenMP) [34], or proprietary solutions such as Intel Threading Building Blocks (TBB) [35]. Moreover, simultaneous multithreading technologies such as 2-way Intel Hyper-threading or 4-way IBM BlueGene can also extend the parallel capabilities of a desktop processor. Based on this, if an average quad-core (four cores) processor is being used, it is possible to run 4, 6 or up to 8 threads that would perform optimally (with gains up to 30% [36]). Likewise, if a hexa-core (six cores), octa-core (eight cores) or deca-core (ten cores) processor is being used, 12, 16 or 20 threads, respectively, could be run with good performance. Currently, it is even possible to find processors with up to 128 cores (ARM-based Ampere Altra Max, single-threaded cores) or with 64 cores and 128 threads (AMD Ryzen Threadripper). In this context, OpenMP is an example of how to parallelize the computation successfully, efficiently, and easily, thereby speeding up CPU-based implementations.
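As a minimal sketch of the synchronization problem described above, the following Python example computes an image histogram with several threads. Each thread accumulates a private histogram and merges it into the shared one while holding a lock, which is similar in spirit to a reduction in OpenMP. The function name `parallel_histogram` and the synthetic image are assumptions made for illustration. Note also that CPython threads do not speed up CPU-bound loops because of the global interpreter lock, so this shows the locking pattern only, not a performance gain.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def parallel_histogram(image_rows, n_bins=256, n_threads=4):
    """Histogram of integer pixel values, merged safely from several threads."""
    histogram = [0] * n_bins
    lock = threading.Lock()

    def worker(rows):
        # Private accumulation needs no synchronization.
        local = [0] * n_bins
        for row in rows:
            for value in row:
                local[value] += 1
        # Only the merge touches shared state, so only the merge is locked.
        with lock:
            for i, count in enumerate(local):
                histogram[i] += count

    # Interleaved split: thread t receives rows t, t + n_threads, ...
    chunks = [image_rows[t::n_threads] for t in range(n_threads)]
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        list(pool.map(worker, chunks))  # list() re-raises worker exceptions
    return histogram


# Synthetic 48 x 64 image with 8-bit values (hypothetical data).
image = [[(r * 7 + c * 3) % 256 for c in range(64)] for r in range(48)]
hist = parallel_histogram(image)
print(sum(hist))
```

Keeping the locked region as small as possible is the design choice that matters here: locking every single increment would serialize the whole computation.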

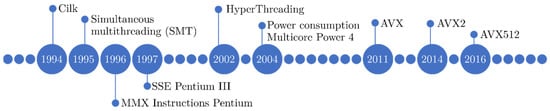

Figure 1 shows the most significant milestones over time on CPUs that have contributed significantly to CPU-based hardware acceleration for image processing.

Figure 1. Most significant milestones over time on CPU-based hardware acceleration for image processing.

2.1.2. CPU Architectures in Medical Imaging

Medical imaging has historically been tied to CPU computing, as it has been the most accessible and flexible architecture on which to prototype and deploy algorithms. In this sense, several studies have examined the effect of multicore architectures and parallel processing in several medical imaging applications.

Membarth et al. performed a comprehensive assessment of different frameworks for multicore architectures, compared with single-core implementations, in an X-ray image registration problem [37]. They compared OpenMP, Cilk++, TBB, RapidMind and OpenCL as parallelization frameworks for multicore processors and provided usability, performance and overhead estimations, showing a speedup of ~6 times for an octa-core processor and up to ~18 times when using 24 cores compared to a sequential single-core implementation. In addition, the study performed by S. Ekström demonstrated how combining a GPU with a CPU can accelerate the image registration process by splitting the tasks between them instead of using only a GPU or only a CPU [38]. The matching algorithm ran on the GPU while the registration optimization was computed on the CPU. The performance of the method was evaluated on brain images, where it sped up the process by a factor of four and eight compared to the GPU-only and the advanced normalization tools (ANTs) implementations, respectively.

Kegel et al. showed how the use of multicore architectures improved the computational performance of the list-mode Ordered Subset Expectation Maximization (OSEM) algorithm for 3D PET image reconstruction [39]. In this study, the authors compared the use of Pthreads, OpenMP and TBB, demonstrating a speedup of parallel implementations for a single subset iteration of up to approximately five times on a dual quad-core processor.

Kalamkar et al. showed the speedup obtained by using multicore systems to improve the performance of the Non-Uniform Fast Fourier Transform (NUFFT) in iterative 3D non-Cartesian MRI reconstruction [40]. The high performance of their implementation relied on an efficient SIMD utilization rate and high parallel efficiency, demonstrating a speedup of more than four times on a 12-core processor compared to the best implementation at that time.

Saxena et al. compared the use of multicores in a method for kidney segmentation from abdominal images [41]. The authors showed how some segmentation tasks could be more efficient when each core is responsible for an individual region, demonstrating a speedup of ~2–4 times on a quad-core processor. Moreover, several groups have proven the efficiency and acceleration of fuzzy c-means and fuzzy c-means based segmentations when they are implemented on hybrid CPU-GPU designs. This way, promising results have been obtained in different parts of the body, such as brain and breast [42,43].

CPUs are accessible hardware architectures. Hence, the use of CPUs is much more widespread in the clinic than that of GPUs due to their lower complexity and cost. For that reason, Vaze et al. designed a CNN and implemented it on a CPU system for real-time ultrasound segmentation [44]. They reduced the computation time by a factor of 9 and the memory requirements by a factor of 420 compared to the traditional U-net method. Thus, images were processed at 30 fps, enabling real-time applications suitable for ultrasound imaging in the clinical environment.

All these publications confirm that the use of multicore architectures increases the CPU performance up to a point where serial component restrictions limit the performance growth, even lowering it. The combination of GPU with CPU designs also reduces the computation time in medical image processing. Moreover, the accessibility of CPUs compared to other architectures makes them convenient for implementations in the clinic, even for deep learning applications.

2.2. Graphics Processing Units (GPUs)

2.2.1. Evolution of GPU Architectures

At the same time as CPUs were evolving into more efficient architectures, GPUs evolved dramatically to allow tackling problems other than computer graphics and video games. The GPU architecture has fundamental differences in comparison with the CPU architecture. First, GPUs possess more Arithmetic Logic Units (ALUs), making it possible to compute more operations in parallel, but at the cost of less sophisticated flow control [45]. Second, GPUs are equipped with small caches, designed to maximize bandwidth rather than to reduce latency [46].

To use this hardware, algorithms must be adapted to this new paradigm. Two parts in this CPU–GPU computing ecosystem can be distinguished: host and device. The host is the CPU that controls the device computation, whereas the device is the graphics hardware that processes the data. The instructions executed on the device to accomplish a specific procedure are encoded in kernels.
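The host/device/kernel split can be illustrated without a GPU. The sketch below is a CPU-side emulation in plain Python, not GPU code: `scale_kernel` plays the role of a kernel that describes the work of one device thread, and `launch` plays the role of the host launching that kernel over a grid of blocks and threads. All names, the grid dimensions and the gain value are assumptions made for illustration; on real hardware the threads would run concurrently rather than in a loop.

```python
def scale_kernel(thread_id, n_threads, src, dst, gain):
    """Work done by ONE emulated device thread: a strided loop over the pixels."""
    for i in range(thread_id, len(src), n_threads):
        # Scale the pixel and clamp it to the 8-bit range.
        dst[i] = min(255, int(src[i] * gain))


def launch(kernel, n_blocks, threads_per_block, *args):
    """Host-side launch: invoke the kernel once per emulated device thread."""
    n_threads = n_blocks * threads_per_block
    for block in range(n_blocks):
        for thread in range(threads_per_block):
            thread_id = block * threads_per_block + thread
            kernel(thread_id, n_threads, *args)


# Host code: prepare the input, allocate the output, launch, read back.
host_pixels = [0, 50, 100, 150, 200, 250]
device_out = [0] * len(host_pixels)
launch(scale_kernel, 2, 2, host_pixels, device_out, 1.5)
print(device_out)
```

Because every output element is written by exactly one thread, the kernel needs no synchronization, which is the property that makes per-pixel image operations map so naturally onto GPUs.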

The first generation of GPUs was designed as simple peripherals to display information on monitors. The second generation then incorporated dedicated memory and processors to render effects; these devices were configurable but not programmable. At the same time, 3D libraries such as OpenGL [47] and DirectX [48] were released. The third generation came with two specific graphics processors, allowing the implementation of two stages, vertex shaders and fragment shaders, through a rendering pipeline [49]. In the next generation, a unified device architecture was created by merging these two kinds of processors into a single scalar processor.

Programming the GPU for general purpose applications using this graphics context was called GPGPU (general-purpose computation on GPUs). Although a wide range of functions had been added, the programming was still complicated. There was an effort in the software direction to create a model to better manipulate the resources and let the programmers map more problems without describing them in terms of graphics (GPU Computing).

Since then, there has been a constant evolution both in hardware and in software. There are currently several GPU manufacturers: AMD/ATI, Intel, and NVIDIA, to name the most relevant ones. AMD stream computing technology came to the market a long time after NVIDIA introduced CUDA (originally Compute Unified Device Architecture) [50]. As a result, NVIDIA has far more applications available for CUDA than AMD/ATI (HIP programming language) does for its competing stream technology [51].

Each generation includes more processors for computation, increasing parallelism and scalability, for example, from the NVIDIA Tesla C870 (2007), built on a 90 nm process with 681 million transistors, to the Tesla A100 (2020), which includes 54.2 billion transistors on a 7 nm process [52]. These graphics cards deliver 0.345 and 19.5 TFLOPS, respectively. Furthermore, there has also been an effort to reduce development time by using a unified memory space (Unified Virtual Addressing in NVIDIA or Heterogeneous Unified Memory Access for AMD). Global memory in the graphics card (DRAM technology) is conceptually organized into a sequence of byte segments, and the card is connected to the host through a high-speed I/O bus slot, typically PCI-Express and, in current high-performance systems, NVLink for NVIDIA [53]. Large differences in memory bandwidth can be seen, from 76.80 GB/s for the Tesla C870 (a PCIe 1.0 x16 card) to 1555.80 GB/s for the Tesla A100 (which supports third-generation NVLink). The memory cache system has been improved, imitating the evolution in CPUs, and in the new graphics cards, the complexity of the caches allows unaligned memory access patterns with smaller penalties [54].
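The figures quoted above can be turned into growth factors with a few lines of arithmetic. The sketch below uses only the numbers given in the text and assumes that the two bandwidth values refer to on-board memory bandwidth; the ratio of bandwidth to peak throughput (bytes available per floating-point operation) is a derived quantity added here for illustration.

```python
# Values as quoted in the text (bandwidth assumed to be memory bandwidth).
c870 = {"year": 2007, "transistors": 681e6, "tflops": 0.345, "bandwidth_gb_s": 76.80}
a100 = {"year": 2020, "transistors": 54.2e9, "tflops": 19.5, "bandwidth_gb_s": 1555.80}


def growth(key):
    """Factor by which a quantity grew from the Tesla C870 to the Tesla A100."""
    return a100[key] / c870[key]


def bytes_per_flop(gpu):
    """Memory bytes deliverable per floating-point operation at peak rates."""
    return gpu["bandwidth_gb_s"] / (gpu["tflops"] * 1000.0)


for key in ("transistors", "tflops", "bandwidth_gb_s"):
    print(f"{key}: x{growth(key):.1f}")
print(f"bytes/FLOP: {bytes_per_flop(c870):.3f} -> {bytes_per_flop(a100):.3f}")
```

Peak arithmetic throughput grew by a larger factor than bandwidth, so fewer bytes are available per operation on the newer card. This is one reason why data reuse through caches, registers and shared memory matters so much in GPU implementations.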

This evolution has also taken place on the software side: exploiting spatial locality with efficient representations, e.g., new ways to store the sparse matrix in sparse matrix-vector multiplication [55]; highlighting the value of low occupancy for specific problems by using instruction-level parallelism [56]; creating more sophisticated libraries of parallel primitives such as reduction and sort, for example, Thrust [57]; and providing low-level primitives that ease the coding of collaborative algorithms on the GPU side [58].
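As an illustration of why the storage format matters for sparse matrix-vector multiplication, the sketch below uses the compressed sparse row (CSR) layout, one widely used format; it is an assumption that CSR is representative of the formats studied in the cited work. Only the nonzero values are stored, together with their column indices and the offset at which each row starts, so the multiplication touches no zero entries. The function names are illustrative.

```python
def dense_to_csr(matrix):
    """Convert a dense row-major matrix into CSR arrays (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        # row_ptr[r + 1] marks where row r ends in the values array.
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = A @ x using only the stored nonzeros of A."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y


A = [
    [4, 0, 0, 1],
    [0, 3, 0, 0],
    [0, 0, 0, 0],
    [2, 0, 5, 0],
]
x = [1, 2, 3, 4]
values, col_idx, row_ptr = dense_to_csr(A)
y = csr_matvec(values, col_idx, row_ptr, x)
print(values, col_idx, row_ptr, y)
```

In a GPU version, each row (or group of rows) would typically be assigned to a thread or a group of threads; rows of very different lengths then cause load imbalance, which is what motivates alternative storage formats.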

The development of highly optimized libraries for computation has increased every year and helped to tackle new problems in a more productive way. Apart from libraries for Fourier transform (FFT), basic linear algebra (BLAS) or random number generation (rand), to name a few, there also exists the possibility of integrating Matlab with native kernels, which allows reducing the time of the computation for those expensive functions [59].

As has happened in other fields of computer science, there has been a common effort to standardize the GPU Computing model. Although the OpenCL standard was released in 2008 [60], it is still a work in progress. OpenCL promotes multi-platform adoption rather than being bound to a single vendor, and it is a collaboration among software vendors, computer system designers (including designers of mobile platforms) and microprocessor (embedded, accelerator, CPU and GPU) manufacturers. AMD’s ROCm (Radeon Open Compute) is an open software platform that provides a heterogeneous ecosystem for computing on GPUs with portability and flexibility [61]. Furthermore, there are also some libraries that alleviate the difficulties of programming parallel algorithms on GPUs. For example, OpenACC offers a transparent, directive-based way to parallelize code, similar to OpenMP, which is very convenient for a large research community [62]. With the emergence of machine learning approaches, the use of GPUs for training and inference has increased. This is the reason why there are also many machine learning frameworks that make the most of GPUs, e.g., Caffe [63], TensorFlow [64] or PyTorch [65].

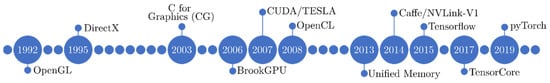

Figure 2 shows the most significant milestones over time on different technologies (libraries, GPUs, frameworks, etc.) that have contributed significantly to the development of applications requiring GPU-based hardware acceleration for image processing.

Figure 2. Most significant milestones over time on GPU-based hardware acceleration for image processing.

2.2.2. GPU Architectures in Medical Imaging

Many research fields have progressed by adopting GPU-based acceleration techniques for parallel programming, with very significant results. In this scenario, medical imaging has been one of the most demanding fields for GPU computation, with GPUs found in nearly all imaging modalities, bringing high computation capabilities to the edge equipment in several applications [12,13,15]. Here, a few examples are provided in which GPUs played a crucial role in achieving faster computations and enabling real-time implementations.

In 2010, Johnson et al. proposed a GPU-based implementation of the iterative decomposition of water and fat with echo asymmetry and least-squares estimation (IDEAL) scheme. They estimated the fat and water parameters and compared Brent’s method with golden section search for optimizing the unknown MR field inhomogeneity parameter (psi) in the IDEAL equations. They stated that their algorithm was more robust to fat-water ambiguities thanks to a modified planar extrapolation of psi. Their experiments showed that the fat-water reconstruction time could be reduced by a factor of 11.6 on a GPU compared to a CPU-based reconstruction [14,66].
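For readers unfamiliar with golden section search, one of the two one-dimensional optimizers mentioned above, the following is a generic textbook sketch. It is not the authors' implementation: the cost function `toy_cost`, its minimum location and the search interval are hypothetical stand-ins for the residual that would be minimized with respect to psi.

```python
import math

INV_PHI = (math.sqrt(5.0) - 1.0) / 2.0  # reciprocal of the golden ratio, about 0.618


def golden_section_minimize(f, lo, hi, tol=1e-6):
    """Locate the minimum of a unimodal function f on the interval [lo, hi]."""
    a, b = lo, hi
    c = b - INV_PHI * (b - a)
    d = a + INV_PHI * (b - a)
    fc, fd = f(c), f(d)
    while (b - a) > tol:
        if fc < fd:
            # Minimum lies in [a, d]; the old c becomes the new d.
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:
            # Minimum lies in [c, b]; the old d becomes the new c.
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2.0


def toy_cost(psi):
    """Hypothetical smooth residual with a single minimum at psi = 37.5."""
    return (psi - 37.5) ** 2 + 2.0


psi_hat = golden_section_minimize(toy_cost, -100.0, 100.0)
print(round(psi_hat, 4))
```

Each iteration reuses one of the two previous function evaluations, so only one new evaluation is needed per step. Since such a search is independent for every voxel, many searches can run in parallel, which suits a GPU well.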

Herraiz et al. presented a GPU-based implementation of the OSEM iterative reconstruction algorithm for 3D PET image reconstruction [67]. In this study, the authors compared a GPU implementation using CUDA against a single-core CPU implementation, demonstrating a speedup factor of up to 72 times on an NVIDIA Tesla C1060 compared to an Intel Core i7.

Alcaín et al. proposed a very fast implementation of an algorithm for modality propagation/synthesis based on a groupwise patch-based approach and a multi-atlas dictionary [68,69]. They proposed and implemented an accelerated version of the patch-based modality propagation algorithm to compare the benefits of using multicore CPUs and CUDA-based GPU models, using both a global memory and a shared memory version. The evaluation of these GPU-based implementations demonstrated how the use of these techniques gained up to 15.9 times of speedup against a multicore CPU solution and up to about 75 times against a single-core CPU solution.

Punithakumar et al. compared an implementation of an image registration algorithm on the GPU versus the CPU. The goal of their research was to develop computationally efficient approaches for deformable image registration to find the point correspondence between image slices with the delineated cardiac right ventricle. The proposed approach offered a computational performance improvement of about 19 times compared to the CPU implementation while maintaining the same level of segmentation accuracy [70].

Florimbi et al. developed a multi-GPU support system that provides accurate brain cancer delimitation based on hyperspectral imaging constrained to providing a real-time response to avoid prolonging the surgery [71]. Their most efficient implementation showed the ability to classify images in less than three seconds.

Torti et al. presented a parallel pipeline for skin cancer detection that exploits hyperspectral imaging [72]. They showed how adopting multicore and many-core technologies, such as OpenMP and CUDA paradigms, and combining them led to a significant reduction in computational times, showing that a hybrid parallel approach can classify hyperspectral images in less than 1 s.

Zachariadis et al. introduced a novel implementation of B-spline interpolation (BSI) on GPUs to accelerate the computation of the deformation field in non-rigid image registration algorithms for Image Guided Surgery. Their implementation of BSI on GPUs minimizes the data that must be moved between memory and processing cores during input mesh loading and takes advantage of the GPU’s large on-chip register file to reuse input values. They succeeded in reducing computational complexity and increasing accuracy. They evaluated the method on liver deformation caused by pneumoperitoneum, i.e., inflation of the abdomen. They improved BSI performance by an average of 6.5 times and interpolation accuracy by 2 times compared to three state-of-the-art GPU implementations. Through preclinical validation, they demonstrated that their optimized interpolation accelerates a non-rigid image registration algorithm, which is based on the free-form deformation (FFD) method, by up to 34%. Thus, the study showed that the proposed parallelization scheme achieves significant performance and accuracy gains by making effective use of the GPU resources, and that the method improves the performance of real medical image registration applications used in practice [73].
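The core computation in B-spline based free-form deformation can be sketched in one dimension: the displacement at a point is a weighted sum of the four nearest control-point values, with weights given by the uniform cubic B-spline basis. The code below is a generic sketch under that standard formulation, not the optimized GPU scheme of the cited study; the function names, the lattice layout and the sample values are illustrative assumptions.

```python
import math


def cubic_bspline_weights(u):
    """Four uniform cubic B-spline basis values for a local coordinate 0 <= u < 1."""
    return (
        (1.0 - u) ** 3 / 6.0,
        (3.0 * u**3 - 6.0 * u**2 + 4.0) / 6.0,
        (-3.0 * u**3 + 3.0 * u**2 + 3.0 * u + 1.0) / 6.0,
        u**3 / 6.0,
    )


def ffd_displacement_1d(x, control, spacing):
    """Displacement at position x from a 1D lattice of control-point values.

    control[j] is assumed to sit at position (j - 1) * spacing, i.e. the
    lattice is padded with one extra control point on the left.
    """
    cell = math.floor(x / spacing)   # index of the lattice cell containing x
    u = x / spacing - cell           # local coordinate inside that cell
    weights = cubic_bspline_weights(u)
    return sum(w * control[cell + offset] for offset, w in enumerate(weights))


# Hypothetical lattice: eight control points spaced 10 units apart.
control_points = [0.0, 0.5, 1.5, 2.0, 1.0, 0.0, -0.5, 0.0]
print(ffd_displacement_1d(23.7, control_points, 10.0))
```

In 3D, the same weights are applied along each axis, so every voxel combines 4 x 4 x 4 = 64 control points. Neighbouring voxels in the same lattice cell share those 64 values, which is the kind of reuse that keeping inputs in on-chip registers can exploit.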

Milshteyn et al. proposed a fast, patient-specific workflow for online specific absorption rate supervision using a fast electromagnetic (EM) solver [74]. The MARIE® package used in their approach solves the EM fields in the patient and was accelerated on an NVIDIA Tesla P100 GPU; the EM simulations required 290.3 ± 67.3 s (mean ± standard deviation).

Another example of 3D image registration is proposed by Brunn et al. [75], who presented an implementation of a mixed-precision Gauss–Newton–Krylov solver for diffeomorphic two-image registration. The work extended the publicly available CLAIRE library to GPU architectures. Their algorithms significantly reduced the execution time of the two main computational kernels of CLAIRE: derivative computation and sparse data interpolation. First, they implemented highly optimized, mixed-precision GPU kernels for the evaluation of sparse data interpolation; second, they replaced the first-order derivatives based on the fast Fourier transform (FFT) with optimized eighth-order finite differences; and finally, they compared them with state-of-the-art CPU and GPU implementations. They showed that it is possible to register 256³ clinical images in less than 6 s on a single NVIDIA Tesla V100. This is a speedup of more than 20 times over the current version of CLAIRE and more than 30 times over existing GPU implementations.
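To give an idea of what an eighth-order finite-difference derivative looks like, the sketch below applies the standard eighth-order central-difference stencil to a periodic 1D signal. It is a generic illustration and not code from CLAIRE; the periodic boundary treatment is an assumption (chosen because an FFT-based derivative also assumes periodicity), and the sine test signal is synthetic.

```python
import math

# Standard eighth-order central-difference coefficients for offsets 1 to 4.
COEFFS = (4.0 / 5.0, -1.0 / 5.0, 4.0 / 105.0, -1.0 / 280.0)


def derivative_fd8_periodic(samples, spacing):
    """First derivative of a periodic signal using a 9-point central stencil."""
    n = len(samples)
    out = []
    for i in range(n):
        acc = 0.0
        for k, c in enumerate(COEFFS, start=1):
            # Antisymmetric stencil: neighbours at +k and -k, wrapped periodically.
            acc += c * (samples[(i + k) % n] - samples[(i - k) % n])
        out.append(acc / spacing)
    return out


n = 64
h = 2.0 * math.pi / n
signal = [math.sin(i * h) for i in range(n)]
approx = derivative_fd8_periodic(signal, h)
max_error = max(abs(a - math.cos(i * h)) for i, a in enumerate(approx))
print(max_error)
```

Unlike an FFT, the stencil only reads a fixed number of neighbouring samples per output value, so it needs no global communication. This locality is what makes finite differences attractive on GPUs.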

With the development and improvement of modern approaches such as deep learning (DL) in medical imaging research, more efficient methods that were not previously feasible are being developed thanks to the evolution of GPUs. In this sense, training a DL model takes hours or even days, depending on its complexity and the amount of training data; however, presenting a new input to the already trained model provides results in seconds, which has led to a decrease in acquisition and processing time for several approaches.

Martinez-Girones et al. recently reported that training a DL model for head and neck MR-based pseudo-CT synthesis took around 90 h, whereas reconstructing the whole pseudo-CT volume took around 1 min on an NVIDIA RTX 2080 Ti [76]. These results highlight an affordable way to generate images of a specific modality from a different one in real-time.

These works show how GPU architectures have enabled promising results in medical image acquisition, processing and enhancement over the past ten years, owing to their short processing times and the variety of their applications. As a result, GPUs are powering the next generation of medical imaging algorithms, with a significant impact on modalities such as CT, MRI, PET, SPECT, and US and, consequently, opening the way to innovative medical imaging applications.

2.3. Digital Signal Processors (DSPs)

2.3.1. Evolution of DSPs Architectures

While theories concerning digital signal processing date back as far as the 1960s, it was during the late 1970s that the Speak and Spell™ from Texas Instruments first proved that DSPs could operate in real time and be cost-effective [77,78]. DSPs are a class of microprocessors whose architectures have been optimized for numeric processing operations and algorithms, which makes them faster, cheaper, and more energy efficient than general-purpose microprocessors while still being reprogrammable.

DSPs are designed to measure, filter and/or compress continuous signals. They can obtain data and execute instructions simultaneously with low latency and power consumption. As a result, these devices typically do not require a demanding power system or cooling system, making them suitable for use in portable devices. Furthermore, this characteristic makes them less risky—in terms of their re-programmability and lifespan—and more cost-effective than ASICs for small productions [79,80]. Their evolution has been motivated by the custom algorithms they execute, as every feature is designed to increase the performance of the heavy computations carried out by the processor.

The main architectural improvements made to DSP processors over the years mostly concern the parallelization of processes, better memory access, and fewer clock cycles for the most common operation, multiply and accumulate (MAC) [8,81]. DSPs are specifically designed for digital signal processing; in image analysis in particular, filtering is one of the most common computations, and it relies heavily on MAC operations.

In this way, DSP processors evolved to achieve a single clock cycle MAC, first introduced in 1982 by Texas Instruments. This feature is so important that modern DSPs have at least one dedicated MAC unit, and the number of MACs per second has become a measuring unit in the field. Despite these efforts, the MAC block still lies in most DSP critical paths to this day, impacting both their overall speed and power draw [82].
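
To make the role of the MAC concrete, the following minimal sketch writes a direct-form FIR filter as an explicit loop of multiply-and-accumulate steps and counts them. The function name `fir_filter`, the three-tap averaging coefficients and the input samples are illustrative assumptions; on a DSP, each pass through the inner loop body would map to one hardware MAC.

```python
def fir_filter(samples, coefficients):
    """Direct-form FIR filter; returns (output, number of MACs executed)."""
    output = []
    mac_count = 0
    for n in range(len(samples)):
        acc = 0.0
        for k, coeff in enumerate(coefficients):
            if n - k >= 0:  # samples before the start are treated as zero
                acc += coeff * samples[n - k]  # one multiply-accumulate
                mac_count += 1
        output.append(acc)
    return output, mac_count


# Illustrative three-tap moving average applied to a short signal.
smoothed, macs = fir_filter([0.0, 3.0, 6.0, 3.0, 0.0], [1 / 3, 1 / 3, 1 / 3])
```

For an N-tap filter, the MAC count grows as roughly N per output sample, which is why the cost of a single MAC dominates filtering throughput.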

To be able to perform such specific operations as fast as possible, the architectures implement several execution units to parallelize the operations. Multicore chips have been critical since the early 1990s, when Texas Instruments launched the TMS320C40, the first multicore DSP capable of parallel processing [83]. As stated above, DSP processors excel at MAC-intensive operations. Due to this, adding multiple cores sharply increases system performance through parallelization. At the same time, adding multiple-core architecture is the simplest way to achieve the above-mentioned parallelization [8,78]. These platforms also have at least three data buses for each computational unit so, with the same instruction, and in the same clock cycle, the data sample and the filter coefficient can be fetched, and the MAC result can be stored in the memory [84].

Each manufacturer offers different families of DSPs for general and specialized use. Typically, the general-purpose ones support filtering, correlation, convolution, and FFT operations [85], whereas the specialized ones are oriented to a narrower use, such as audio or video processing. For example, Texas Instruments has designed various DSP families with different ranges of processing power and functionalities: ultra-low-power DSPs (cheap but low performance), optimized power DSPs (portable and mobile devices), and Open Multimedia Application Platforms (OMAP) [86,87,88,89]. Other processors are Digital Media Processors (DMPs), which are designed for multimedia applications such as image and video capture and processing. DMPs can perform more complex tasks at the expense of higher power consumption, e.g., through hardware image and video codecs (MPEG, H.264 and JPEG) and hardware accelerators for video processing [90]. There are also multicore DSPs optimized for computationally complex tasks and high-performance computing (HPC) [91], which can execute tasks in parallel. The maximum computational performance of a multicore DSP is obtained when the task can be fully parallelized so that the threads run on different cores simultaneously. However, this is often not feasible in practice, and advanced parallel programming techniques are required to optimize the computational speed of these DSPs [8].
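
The effect of incomplete parallelization can be estimated with Amdahl's law, a standard model that is not part of the works cited here. In the minimal sketch below, the function name `amdahl_speedup`, the 90% parallel fraction and the eight-core count are illustrative assumptions, not measurements of any particular DSP.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup when only part of a task can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Illustrative comparison on a hypothetical eight-core device.
fully_parallel = amdahl_speedup(1.0, 8)   # every core is kept busy
mostly_parallel = amdahl_speedup(0.9, 8)  # 10% of the work stays serial
```

Under these assumptions, leaving 10% of the work serial reduces the ideal eightfold speedup to less than fivefold, which is one way to see why careful parallel programming matters on multicore DSPs.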

Another factor to consider is the type of arithmetic calculation support (fixed point or floating-point operation). Floating-point operational support in DSPs makes algorithm implementation easier and increases precision compared to fixed-point units. In contrast, fixed point units can perform operations with fewer bits and higher speed.
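
The trade-off can be illustrated with the Q15 format, a common 16-bit fixed-point representation of values in [-1, 1). The sketch below is a minimal illustration in which the helper names (`to_q15`, `q15_mul`, `from_q15`) and the operands are assumptions; it shows that a fixed-point product needs only integer operations but carries a small quantization error.

```python
Q15_SCALE = 1 << 15  # 2**15 = 32768 steps per unit


def to_q15(value):
    """Quantize a float to a Q15 integer, saturating to the 16-bit range."""
    scaled = int(round(value * Q15_SCALE))
    return max(-Q15_SCALE, min(Q15_SCALE - 1, scaled))


def q15_mul(a, b):
    """Multiply two Q15 integers and shift the product back to Q15."""
    return (a * b) >> 15


def from_q15(value):
    """Convert a Q15 integer back to a float."""
    return value / Q15_SCALE


# Illustrative operands: compare the fixed-point and floating-point products.
a, b = 0.3, 0.25
fixed_product = from_q15(q15_mul(to_q15(a), to_q15(b)))
error = abs(fixed_product - a * b)
```

The error stays small for well-scaled data, but the programmer has to manage scaling and saturation explicitly, which is part of what makes floating-point implementations easier to write.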

Current multicore DSP systems can combine DSP cores with other types of cores, such as GPU or microcontroller cores. These are known as “heterogeneous” platforms. The most common type of heterogeneous platform combines CPU cores (ARM) and DSP cores. The former handle user interaction, protocol processing and control of the platform, while the latter handle compute-intensive tasks [78,92]. Furthermore, since a single MAC operation requires two memory reads to obtain the data, plus the multiplication, the addition and the writing of the result to memory in the same clock cycle, special long instructions have been developed, and DSP processors have their own specialized instruction sets. For better memory access, they also incorporate direct memory access (DMA).

Some known manufacturers have developed a subgroup of processors known as Crossover MCUs (‘crossover’ embedded processors), which guarantee high performance (>400 MHz multicore CPU) and low consumption. These devices combine the best of the microcontroller world with high-performance DSPs that are ideal for machine learning and artificial intelligence applications. The price is affordable compared to other hardware platforms [93]. For instance, NXP offers an i.MX RT family based on a Cortex-M7/M33, supporting RTOS and providing 2D graphics support, a camera interface, and high-performance audio support [93]. These devices are application processors built with an MCU core, architected to deliver high performance and functional capabilities of applications processors but with the ease-of-use and real-time low-power operation of traditional MCUs. Further, crossover processors are designed to reduce system cost by eliminating the need for flash, external DDR memory, and power management ICs. Some of these elements appear on general embedded designs for mass-market applications such as metering, medical equipment, and IoT gateways.

As a result, modern DSP systems have a small power draw compared to other solutions, while also excelling at handling digital signal processing. Multicore solutions also offer the ease-of-use attributes associated with microcontrollers, one of the drawbacks traditional DSP solutions have suffered from.

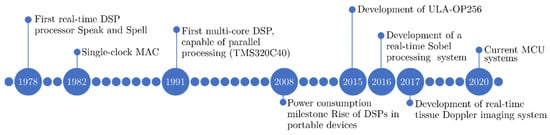

Figure 3 shows the most significant milestones in the development of imaging applications that rely on DSP-based hardware acceleration.

Figure 3. Most significant milestones over time on DSP-based hardware acceleration for image processing.

2.3.2. DSPs in Medical Imaging

Several methods needed in medical imaging depend on digital signal processing, such as convolution, the discrete Fourier transform (DFT), the fast Fourier transform (FFT), finite impulse response (FIR) and infinite impulse response (IIR) filters, recursive and non-recursive digital filters, random signal theory, adaptive filters, upsampling and downsampling, etc. [94].

The rising performance of DSPs has allowed them to undertake intensive real-time tasks, which, coupled with their inherent proficiency in arithmetic operations, has made them a desirable platform for implementing certain medical imaging algorithms.

Recursive and non-recursive digital filters are mainly used to pass signals of interest and block unwanted signals, i.e., noise. In general, low-pass, high-pass, band-pass and band-reject filters are implemented for this purpose. These filters are commonly implemented on DSPs in biomedical engineering fields such as MRI, ultrasound, CT, X-ray or PET imaging, as well as in the analysis and processing of signals derived from these modalities or of genetic signals [94].
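
A recursive filter differs from a non-recursive one in that each output also depends on previous outputs. The following minimal sketch of a one-pole recursive low-pass filter illustrates this feedback; the function name `one_pole_lowpass`, the smoothing factor `alpha = 0.2` and the step input are assumptions chosen for illustration.

```python
def one_pole_lowpass(samples, alpha):
    """Recursive low-pass filter: y[n] = alpha * x[n] + (1 - alpha) * y[n-1]."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    output = []
    previous = 0.0  # initial filter state y[-1]
    for x in samples:
        previous = alpha * x + (1.0 - alpha) * previous  # feedback term
        output.append(previous)
    return output


# Illustrative step input: the output rises smoothly toward the input level.
step_response = one_pole_lowpass([1.0] * 50, alpha=0.2)
```

A single stored state and two multiplications per sample are enough here, whereas a non-recursive filter with a comparable response would need many more taps.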

Berg et al. [95] proposed an optimization-based image registration algorithm using a least-squares data term and implemented it on an embedded distributed multicore digital signal processor (DSP) architecture. All relevant parts were optimized, ranging from mathematics, algorithmics, and data transfer to hardware architecture and electronic components. They evaluated the performance using histological slices of cancer tissue.

Chandrashekara and Sreedevi implemented contrast-limited adaptive histogram equalization on a TMS320C6713T DSP processor to improve contrast in brain magnetic resonance images [96]. They showed promising results for several image quality metrics and subjective visualization.

DSPs have often been used together with another accelerator. Liang et al. described a digital magnetic resonance imaging spectrometer based on a DSP along with an FPGA [97]. The DSP was utilized as the pulse programmer on which a pulse sequence is executed as a subroutine.

Ali et al.’s white paper and Pailoor et al.’s application report described portable ultrasound imaging equipment with emphasis on the signal processing strategies [98,99]. They reported how DSPs can often be complemented by a reduced instruction set computer (RISC) processor. In this way, the DSP can perform the more demanding algorithmic and real-time control tasks while still ensuring low energy consumption, and the RISC processor handles the tasks the DSP core is less suited for, making up for its downsides without adding another device.

Along these lines, Boni et al. described the 256-channel ULtrasound Advanced Open Platform (ULA-OP 256), intended to deliver high performance in a small size [100]. This research scanner integrates several homogeneous multicore DSP and FPGA devices, where the DSPs handle real-time operations (e.g., demodulation, filtering) as well as arithmetic operations. The ULA-OP 256 was successfully used in several subsequent real-time image processing applications, such as those by Ricci et al. [101], who performed real-time blood velocity vector measurement over a 2-D region; Ramalli et al. [102], who presented a real-time implementation of the tissue Doppler imaging modality; and Tortoli et al. [103], who compared vector Doppler methods with conventional spectral Doppler approaches and achieved real-time estimations.

Arulkumar et al. proposed an ALU-based FIR filter for biomedical image filtering [104]. The ALU supports accumulation, subtraction, shifting, multiplication and filtering, and the FIR filter is designed to filter retinal images. This allows DSPs to be used for improved visualization in the medical field. They concluded that the ALU-based FIR filter design is efficient in terms of static and dynamic power, delay, area utilization, MSE and PSNR.

On another note, Beddad and Hachemi showed encouraging performance results when implementing several medical image algorithms (such as Fuzzy C-Means or Level sets) for brain tumor detection in MR images [105].

All these works confirm that DSPs are especially attractive when designing specialized devices, particularly as their low power draw makes them exceptionally well-suited for portable devices. Additionally, there are many tools available for programming DSPs in C/C++, and the available libraries include common general-purpose algorithms for computer vision. In general, the development time for a simple task on a single-core DSP is relatively short; nevertheless, developing optimized code with parallel programming techniques for multicore DSPs is hard and requires advanced programming skills. To help programmers, free libraries of optimized basic functions have been developed, including DSPLIB and MSP-DSPLIB (functions for digital signal processing tasks such as FFT and convolution), as well as IMGLIB and MATHLIB (basic math operations and basic practical calculations).

2.4. Field Programmable Gate Arrays (FPGAs)

2.4.1. Evolution of FPGA Architectures

FPGAs were introduced in 1985; however, a variety of Programmable Logic Devices (PLDs) appeared earlier [106,107]. The first PLDs were just Programmable Read-Only Memories (PROMs) that, rather than being used as computer memories, were used to implement simple logical functions. These devices integrate on a single chip a non-programmable memory decoder consisting of AND gates and a programmable array consisting of OR gates. By using the ROM address inputs as the independent variables of a Boolean function and the memory outputs, interfaced to the programmable array, as the result of that function, it is possible to build arbitrary logic circuits from the input signals. However, in some cases, they may be larger and slower than dedicated logic circuits, and only part of their capacity is used, which makes inefficient use of the device.

New kinds of PLDs appeared later in the 1970s to reduce the size of the PROMs or increase their configurability, such as the Programmable Logic Array (PLA) and Programmable Array Logic (PAL) [108]. These allow programming the AND gate array or the memory decoder, and PALs also allow programming the output logic, reducing the number of components. PROMs, PLAs and PALs are classified as Simple PLDs (SPLDs).

In the 1980s, more advanced PLDs appeared, which were called Complex PLDs (CPLDs) [109]. The architecture of a CPLD consists of several SPLD blocks surrounded by a programmable interconnection matrix. This allows the development of larger and more complex logic circuits, reducing cost and size while increasing design reliability. Although the internal structure of a CPLD varies from vendor to vendor, the general structure is characterized by the following blocks: SPLD blocks, such as PROM, PLA or PAL; input and output (I/O) blocks that connect the I/O to the SPLDs; and the programmable interconnect matrix responsible for interconnecting the inputs and outputs of the SPLDs, as well as the I/O blocks.

The hierarchical structure of CPLDs, combining registered SPLDs with a programmable interconnect matrix, produced a big leap forward in the capabilities of programmable logic devices. Nevertheless, CPLDs were not yet suitable for complex circuits that require many flip-flops. At that time, there was still a big gap between the circuits that could be designed using PLDs and those built as ASICs, leading to the development of FPGAs.

The most common FPGA architecture is the island-style architecture, in which the logic blocks are surrounded by a grid of routing interconnect, such as connection boxes or switch boxes. The smallest logic unit is the Configurable Logic Block, which consists of a look-up table (LUT), a flip-flop and a multiplexer [107]. A LUT is composed of SRAM cells that store the function outputs and a multiplexer that selects the output value according to the inputs, so it can implement any Boolean function of its inputs.
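
The behavior of a LUT can be modeled in a few lines of software. In the minimal sketch below, the class name `LookUpTable` and the three-input majority function are illustrative assumptions: the list of stored bits plays the role of the SRAM cells, and indexing that list by the input bits plays the role of the multiplexer.

```python
class LookUpTable:
    """Software model of a k-input LUT holding 2**k configuration bits."""

    def __init__(self, num_inputs, function):
        self.num_inputs = num_inputs
        # "Configuration": store the function output for every input
        # combination, as the SRAM cells of a real LUT would.
        self.cells = [
            int(bool(function(*self._bits(address))))
            for address in range(2 ** num_inputs)
        ]

    def _bits(self, address):
        """Split an address into input bits (input 0 is the lowest bit)."""
        return tuple((address >> i) & 1 for i in range(self.num_inputs))

    def evaluate(self, *inputs):
        """The inputs select one stored cell, as the multiplexer would."""
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        address = sum((bit & 1) << i for i, bit in enumerate(inputs))
        return self.cells[address]


# Illustrative configuration: a three-input majority vote.
majority = LookUpTable(3, lambda a, b, c: (a & b) | (a & c) | (b & c))
```

Reconfiguring the device amounts to rewriting `cells`; the lookup path stays the same whatever function is stored, which is why any Boolean function of the inputs fits in the same hardware.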

FPGAs accomplish the goal of implementing complex digital circuits on a single chip, which was previously only achievable using ASICs. At the same time, their high configurability allows for fast design and modification times. However, this flexibility comes at a cost, since most of the FPGA area is dedicated to the programmable routing interconnections, which implies not only much higher use of silicon but also higher power consumption and slower speeds than ASICs [110].

On the other hand, flexibility is the main advantage of FPGAs over ASICs: if the design has to be modified because of design errors or improvements, the FPGA can simply be reprogrammed, whereas a custom digital circuit would need to be redesigned from scratch [111]. In addition, since FPGAs can nowadays be reprogrammed on-site within seconds, their design costs are significantly lower than those of ASICs. Thus, ASIC devices are only economical when high production volumes are needed or when the ASIC's performance cannot be matched by an FPGA [112].

FPGAs have evolved from a homogeneous structure to a more heterogeneous architecture with a wide range of specific blocks. Nowadays, FPGAs contain embedded memory blocks (block RAM), multipliers (DSP), and even embedded processor cores, in which case they are known as System-on-Chip FPGAs (SoC FPGAs) [113]. In addition, current multiprocessor system-on-chip (MPSoC) systems also include real-time processors, GPUs or AI modules [114,115] on the same die. As these devices grow more and more complex, high-level synthesis tools provide further abstraction by transforming algorithm descriptions written in C, C++ or SystemC into register-transfer level code, which speeds up the hardware design process on the FPGA.

Semiconductor manufacturers are exploring alternative architectures, including specific accelerators for vector processing (DSPs, GPUs) and programmable logic (FPGA). Xilinx has introduced in recent years a new heterogeneous architecture called Adaptive Compute Acceleration Platform (ACAP), which is expected to be a solution for the current applications [116,117].

ACAP is a hybrid platform that integrates an FPGA together with a programmable processor and software-programmable accelerator engines (DSP and GPU) prepared for vector computation. In addition, a network-on-chip (NoC) interconnects all the parts, which makes it possible to establish an efficient data exchange channel. This new architecture supports acceleration for Artificial Intelligence and Machine Learning applications, as well as automotive driver-assistance systems and 5G wireless communications, among others. It can therefore be an alternative for future medical image processing applications, since it includes all the architectures described in this article (DSPs, GPUs, FPGA and CPU) and manufacturers are tending to integrate more elements on the same chip.

FPGAs are now widely used in the implementation of image processing applications, especially real-time applications in which latency and power consumption are important design parameters. For instance, an FPGA built into a smart camera can do much of the processing of the captured image, allowing the camera to deliver a stream of processed output data rather than a sequence of raw images.

Unfortunately, porting an algorithm to an FPGA can produce disappointing results, because many image processing algorithms have been optimized for execution on a serial processor and for a specific image dimension. In general, the algorithm may need to be transformed to efficiently exploit the parallelism and resources available in the FPGA.

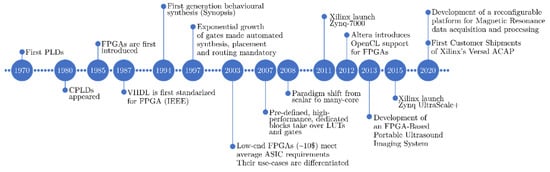

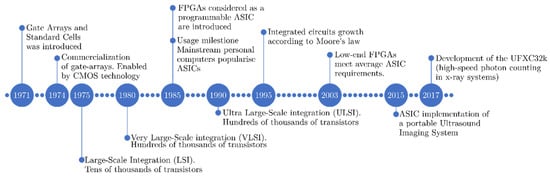

Figure 4 presents the most significant milestones in the development of imaging applications using FPGA-based acceleration systems.

Figure 4. Most significant milestones over time on FPGA-based hardware acceleration for image processing.

2.4.2. FPGA in Medical Imaging

The evolution of FPGAs has motivated an increase in the use of these devices, whose architecture allows the development of hardware solutions optimized for complex tasks, such as 3D MRI image segmentation [118], 3D discrete wavelet transform [119], tomographic image reconstruction [18,120], or PET/MRI systems [121,122]. The developed solutions can perform intensive computation tasks with parallel processing, are dynamically reprogrammable, and have a low cost, all while meeting the hard real-time requirements associated with medical imaging.

Moreover, their reduced size and single-chip capabilities make them an excellent platform for implementing portable testing devices [123] or even surgical assisting imaging devices [124]. Configurable hardware solutions offer a compromise between the flexibility of software and the high processing speed of ASICs, at a lower cost than DSPs, and, as reconfigurable devices, they allow new algorithms to be implemented. Furthermore, parallelism and pipelining techniques become possible, increasing FPGA performance even at slower clock rates. This makes FPGAs capable of performing more than 33 million operations per second [125], which is the rate needed to apply a single operation to real-time 768 × 576 color video at 25 frames per second.
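
The figure of 33 million operations per second can be reproduced with a short calculation. The minimal sketch below assumes three color samples per pixel, an assumption that is consistent with "color video" but is not stated explicitly in the text; the function name `ops_per_second` is also illustrative.

```python
def ops_per_second(width, height, channels, frames_per_second, ops_per_sample=1):
    """Operations per second needed to touch every sample of a video stream."""
    return width * height * channels * frames_per_second * ops_per_sample


# 768 x 576 video, three color channels (assumed), 25 frames per second.
required = ops_per_second(768, 576, 3, 25)
```

Under this assumption the result is 33,177,600 operations per second, in line with the quoted figure.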

FPGAs have been used to perform registration of medical images as well. Specific processes, such as affine transformations or mutual information calculations, can be accelerated during real-time image registration by implementing the algorithms in FPGAs [126,127]. More recently, Mondal and Banerjee proposed an efficient and complete hardware-based image registration [128]. They used angular and radial projections without converting the image into polar coordinates, adaptively adjusting the number of samples according to the angle and the radial length in parallel. Moreover, they sped up the process by avoiding the calculation of geometric transformations in each iteration, which also reduces the amount of hardware resources required. Hence, they presented a high-speed hardware architecture able to perform the whole image registration process.
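
As an illustration of the mutual information calculation mentioned above, the following minimal software sketch computes it from a joint histogram of two equally sized images. It is not the hardware design of the cited works: the function name `mutual_information`, the flat-list image representation and the four-pixel test images are assumptions made for illustration.

```python
import math
from collections import Counter


def mutual_information(image_a, image_b):
    """Mutual information in bits between two flat lists of intensities."""
    if len(image_a) != len(image_b) or not image_a:
        raise ValueError("images must be non-empty and of equal size")
    n = len(image_a)
    joint = Counter(zip(image_a, image_b))  # joint histogram
    marginal_a = Counter(image_a)           # histogram of the first image
    marginal_b = Counter(image_b)           # histogram of the second image
    total = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p_ab / (p_a * p_b), written with raw counts.
        ratio = count * n / (marginal_a[a] * marginal_b[b])
        total += p_ab * math.log2(ratio)
    return total


# Illustrative four-pixel images.
fixed = [0, 0, 1, 1]
aligned = [5, 5, 9, 9]      # intensities follow the structure of `fixed`
misaligned = [5, 9, 5, 9]   # intensities unrelated to `fixed`
mi_aligned = mutual_information(fixed, aligned)
mi_misaligned = mutual_information(fixed, misaligned)
```

A registration loop would evaluate this measure repeatedly for candidate transformations and keep the one with the highest value, which is why the histogram accumulation is an attractive target for hardware acceleration.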

Gebhardt et al. proposed an FPGA-based system to address the electromagnetic interference between the PET detectors and the MRI radio frequency (RF) subsystem in multimodal PET-MRI systems, which deteriorates the signal-to-noise ratio (SNR) [129]. The methods presented employed an FPGA to shift the clock frequency and phase of the digital silicon photomultipliers (dSiPM) used in PET modules. A reduction in RF interference was achieved based on the principles of coupling and decoupling of the EM field from the RF receive coils. For this purpose, the clock frequencies were modified, and the clock phase relationships of the digital circuits were changed.

Zhou et al. solved the problem of detecting nuclei in high-resolution histological images by implementing a generalized Gaussian Laplacian algorithm on FPGA. The results demonstrated a significant improvement in processing time without loss of detection accuracy [130].

In addition, real-time image processing can be used for immediate normal/abnormal classification of MR images, lowering false-negative rates and increasing tumor detection by double reading the images [131]. FPGAs are well suited to implementing functions and operations such as convolutions, or even neural networks for machine learning and DL, thanks to parallelism and pipelining, areas in which they can outperform CPUs and GPUs [132]. Several proposals have been presented for MR image reconstruction. Li and Wyrwicz designed a single FPGA chip with a 2D Fast Fourier Transform (FFT) core to reconstruct multi-slice images without the need for additional hardware components [133]. More recently, different groups have presented FPGA designs intended to accelerate MRI reconstruction through sensitivity encoding (SENSE) [134,135]. Inam et al. obtained results comparable to those of conventional CPU-based implementations but with lower computational time [135]. Furthermore, FPGAs have been used for other MRI applications, such as spectrometry. Liang et al. used a DSP along with an FPGA-based design to build a digital magnetic resonance imaging spectrometer [136]. The FPGA oversaw the gradient control, RF generation and RF receiving.

Regarding other imaging modalities, innovative FPGA-based applications have been developed for CT. Choi et al. presented an FPGA design with several block RAMs to make 3D CT reconstruction faster and reduce radiation exposure [137]. Likewise, FPGAs can be used to accelerate data acquisition of ultrafast X-ray CT in real time, as Windisch et al. demonstrated [138]. In this way, real-time control of the scanning process, which is one of the main limitations of this modality, can be enhanced. In addition, Goel et al. proposed a new method to speed up the COVID-19 diagnostic process. For that purpose, they developed a deep convolutional neural network to classify infected patients from CT images [139]. They tested different hardware-based implementations, including multicore CPU, many-core GPU, and FPGA. They obtained satisfactory results with the different platforms, demonstrating competitive network training and inference performance with the FPGA.

Many PET and PET/MR scanners also include FPGA systems to decode the signals arriving at photomultipliers [140]. Several groups have implemented FPGA-only digitizers for signal digitization, processing and communication. The latest research has built these highly integrated data acquisition (DAQ) boards using single-ended memory interface (SeMI) input receivers instead of low-voltage differential signaling (LVDS) input receivers [141,142]. Furthermore, different machine learning algorithms have been implemented on FPGAs for photon position and arrival time estimation, scatter correction, attenuation correction and noise reduction [140].

FPGAs have also been introduced to speed up the acquisition of US images and to reduce the computational cost. Assef et al. designed an envelope detector for US imaging using a Hilbert transform finite impulse response filter on an FPGA [143]. The results showed high efficiency for real-time envelope detection with a cost reduction of up to 75%. In addition, the implementation of an FPGA system allowed Wu et al. to build an intravascular US device able to perform photoacoustic and ultrasound imaging with forward- and side-viewing capability (in contrast to conventional, forward-looking-only intravascular US) [144]. The system offered high speed, low cost, flexible programmability and compactness, making the device suitable for both research and clinical environments.

Hence, the scope of FPGA applications in the medical imaging field is becoming increasingly broad. FPGAs allow specific processes to be designed separately and executed in parallel, speeding up acquisition and processing. Moreover, recent developments have proven their capability to combine tasks in order to complete whole procedures such as image registration. Therefore, FPGAs offer a broad range of possibilities to improve current imaging techniques.

2.5. Application Specific Integrated Circuits (ASICs)

2.5.1. Evolution of ASICs

The beginnings of ASICs could be traced back at least 20 years before the development of masked read-only memory (ROM). In the early 1970s, the concept of Gate Arrays and Standard Cells was introduced, with the first ASICs using diode-transistor logic and transistor-transistor logic technology. On the other hand, complementary metal oxide semiconductor (CMOS) technology enabled the commercialization of gate arrays, with the first CMOS gate arrays being developed in 1974. However, ASICs acquired a prominent place in the integrated circuit market worldwide during the 1980s, when the first low-end personal computers became commercially available using circuits based on gate-array circuits. The evolution of ASIC designs and technology can be characterized by the continuous growth and development of various ASIC design styles, which can be classified into four groups: standard-cell designs, gate-array or semi-custom design, structured design and full-custom design [145,146,147].

The standard cell design was the first method that allowed enhancing this technology since, until then, to design an ASIC it was necessary to choose a manufacturer and use the design tools that it provided. However, manufacturers implemented standard cells that were functional blocks with known electrical characteristics, which could easily be represented in the developed tools. Standard Cell-based design is the use of these functional blocks to achieve very high gate densities while achieving good electrical performance. Standard Cells produce a design density with comparatively lower cost and can also integrate Intellectual Property (IP) and SRAM cores in an effective way, unlike gate arrays.

The gate-array design is a method where the transistors and the other active elements are predefined, and the wafers containing these elements are kept in stock prior to metallization, while the design process defines the interconnection of the elements in the final device. Fixed costs are significantly lower, and the production cycles are shorter. It should be noted that minimal propagation delays can be achieved with this method, compared to commercially available FPGA-based solutions.

In the structured design of ASICs [148], both the manufacturing cycle and the design cycle are reduced compared to cell-based ASICs, owing to the existence of predefined metal layers, which reduces the fabrication time, and a pre-characterization of the silicon, which reduces the design time. Structured ASICs have lower fixed costs than standard-cell or full-custom chips; the predefined metallization is used mainly to reduce the cost of the mask set and also to shorten the development cycle. In addition, the tools used for structured ASICs can substantially simplify the design, since the tool does not have to perform all the functions required for cell-based ASICs. Moreover, this approach enables the use of IP cores, which are common for certain applications or industry segments, instead of designing these cores from scratch.

Finally, the full-custom ASIC includes custom logic cells, as well as the customization of all mask layers, making it very expensive to design and manufacture. An example of a full-custom ASIC is a microprocessor, where many hours of design time are invested to maximize the performance of the microprocessor area. This approach makes it possible to include analog circuits or optimized memory cells in the same device, yielding more compact designs at the cost of a long design time.

Since feature sizes of ASICs are shrinking and design tools have improved over the years, the overall complexity and functionality of an ASIC has grown from 5000 logic gates to more than 100 million. Current ASICs often include complete microprocessors, memory blocks, as well as other large building blocks. For this reason, ASICs are sometimes called system-on-chip (SoC) since the current trend is to integrate all or most of the modules that make up a computer into a single integrated circuit [149].

Figure 5 presents the most significant milestones in the development of imaging applications using ASIC-based hardware acceleration systems.

Figure 5. Most significant milestones over time on ASIC-based hardware acceleration for image processing.

2.5.2. ASICs in Medical Imaging

Despite the recent technological advances in CPUs, GPUs, FPGAs and DSPs, ASICs are still used for a few highly specific and challenging processing tasks. ASICs have traditionally been used to support the high computational and data rate requirements of medical X-ray spectroscopy, CT, MRI, ultrasound, and PET imaging systems, with a focus on the front-end receiver electronics. In this sense, several studies have proposed dedicated architectures to solve specific problems with very strict real-time constraints.

Kmon et al. presented an ultra-fast 32k-channel chip for an X-ray hybrid pixel detector, which was designed to obtain a high count-rate performance [150]. Their dedicated, fast front-end circuit could be bump-bonded to a silicon pixel detector, allowing single-photon counting readout for hybrid semiconductor detectors in low-energy X-ray imaging systems. Another proposal in this field is described by Sundberg et al. [151], who use a previously evaluated photon-counting ASIC architecture [152] and propose adjusting the shaping time to counteract the increased noise that results from decreasing the power consumption.

In clinical and preclinical nuclear imaging applications, there is a rising demand for high-resolution room-temperature solid-state detectors (RTSDs), especially CZT and CdTe detectors. As the demand for SPECT systems with higher spatial resolution, energy resolution and sensitivity continues to rise, there is an increasing need to develop high-performance small-pixel CZT and CdTe detectors and the corresponding high-speed readout electronics. The multi-channel readout circuitry reported in [153] is based on the pre-existing High Energy X-ray Imaging Technology (HEXITEC) ASIC [154]. Pushkar et al. offered a different solution to the same problem, although few details of their SPECT ASIC are given [155].

Kang et al. described a novel SoC solution for a portable US imaging system that was compact and had low power consumption [156]. Their solution comprised all the signal processing elements, including the transmit and dynamic receive beamformer modules, mid- and back-end processors, and color Doppler processors, in addition to an efficient architecture for hardware-based imaging methods, including dynamic delay computation, multi-beamforming, and coded excitation and compression. Kim et al. presented an ultrasound transceiver ASIC directly integrated with an array of 12 × 80 piezoelectric transducer elements to enable next-generation ultrasound probes for 3D carotid artery imaging. It is a second-generation ASIC that employs an improved switch design to minimize the clock feedthrough and charge-injection effects of high-voltage metal–oxide–semiconductor field-effect transistors, which caused imaging artifacts in the first-generation ASIC [157]. Rothberg et al. described the design of the first ultrasound-on-chip to be cleared by the FDA for 13 indications, comprising a two-dimensional array of silicon-based microelectromechanical systems ultrasonic sensors directly integrated into complementary metal–oxide–semiconductor-based control and processing electronics to enable an inexpensive whole-body imaging probe [158]. The beamformers of ultrasound imaging systems are conventionally implemented using FPGAs. However, the minimum delay time provided by an FPGA chip is about 2 ns, which is not suitable for high-frequency US (HFUS) imaging. As a result, an ASIC is needed to provide an appropriate delay time to excite the array transducer elements in an HFUS array system. Sheng et al. proposed a high-resolution, all-digital transmit-beamforming integrated circuit for high-frequency ultrasound imaging systems that is both better suited to portable ultrasound systems and lower in cost [159].
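To illustrate why a delay step of about 2 ns can be too coarse for HFUS, the following minimal sketch computes transmit focusing delays for a linear array and quantizes them to a given hardware step. The array geometry, the 50 MHz center frequency, the speed of sound, and the 0.25 ns comparison step are hypothetical values chosen for illustration; they are not taken from the cited works.

```python
import math

SPEED_OF_SOUND = 1540.0  # m/s, commonly used soft-tissue value (assumption)


def focus_delays(n_elements, pitch_m, focal_depth_m, c=SPEED_OF_SOUND):
    """Per-element transmit delays (s) focusing a linear array on its central axis."""
    center = (n_elements - 1) / 2.0
    paths = [math.hypot((i - center) * pitch_m, focal_depth_m)
             for i in range(n_elements)]
    longest = max(paths)
    # Outer elements have the longest path, so they fire first (zero delay).
    return [(longest - p) / c for p in paths]


def quantize(delays, step_s):
    """Round each delay to the nearest multiple of the hardware delay step."""
    return [round(d / step_s) * step_s for d in delays]


def max_phase_error_deg(delays, step_s, frequency_hz):
    """Worst-case phase error (degrees) introduced by delay quantization."""
    worst = max(abs(q - d) for q, d in zip(quantize(delays, step_s), delays))
    return 360.0 * frequency_hz * worst


# Hypothetical 64-element array, 50 um pitch, 5 mm focus, 50 MHz center frequency.
delays = focus_delays(n_elements=64, pitch_m=50e-6, focal_depth_m=5e-3)
coarse = max_phase_error_deg(delays, step_s=2e-9, frequency_hz=50e6)
fine = max_phase_error_deg(delays, step_s=0.25e-9, frequency_hz=50e6)
print(f"2 ns step: up to {coarse:.1f} deg, 0.25 ns step: up to {fine:.1f} deg")
```

Because rounding to the nearest step is off by at most half a step, the phase error is bounded by 180 · f · step degrees. At the assumed 50 MHz (a 20 ns period), a 2 ns step allows errors of up to 18 degrees per element, whereas a 0.25 ns step keeps them below 2.25 degrees.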

Cela et al. reported a compact detector module, including a FlexToT ASIC designed for time-of-flight (TOF) PET [160]. Novel TOF sensors allow for an additional level of detail in PET imaging, as they measure the actual time difference between the detection of the two photons released during an annihilation event (in contrast to conventional detectors, which only measure the direction and attenuation of photons). Their module provided a fast, low-power front-end readout for silicon photomultiplier (SiPM) arrays in scintillator-based PET detectors. An evolution of this ASIC, named HRFlexToT, was presented in 2021. It offers a linear Time-over-Threshold (ToT) response with an extended dynamic range for energy measurement, low power consumption, and an excellent timing response [161]. Nemallapudi et al. [162] described the use of STiC, a four-channel ASIC dedicated to fast timing discrimination in PET using silicon photomultipliers [163], for range verification in proton therapy.
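The value of the measured time difference can be made concrete with the standard TOF relation Δx = c · Δt / 2, which places the annihilation point along the line of response (LOR). The sketch below is a minimal illustration of that relation; the function names, the detector separation, and the 200 ps timing resolution are hypothetical and do not describe the cited ASICs.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_offset_m(t1_s, t2_s):
    """Offset of the annihilation point from the LOR midpoint.

    Positive values point towards detector 1, which then fires first.
    """
    return SPEED_OF_LIGHT * (t2_s - t1_s) / 2.0


def localization_fwhm_m(timing_fwhm_s):
    """Position uncertainty along the LOR for a given coincidence timing resolution."""
    return SPEED_OF_LIGHT * timing_fwhm_s / 2.0


# Hypothetical event: detectors 0.8 m apart, annihilation 5 cm from the
# midpoint on the detector-1 side.
separation_m, true_offset_m = 0.8, 0.05
t1 = (separation_m / 2 - true_offset_m) / SPEED_OF_LIGHT
t2 = (separation_m / 2 + true_offset_m) / SPEED_OF_LIGHT
print(f"recovered offset: {tof_offset_m(t1, t2) * 100:.2f} cm")
print(f"200 ps resolution -> {localization_fwhm_m(200e-12) * 100:.2f} cm along the LOR")
```

Under these assumptions, a timing resolution of 200 ps confines the event to roughly 3 cm along the LOR, which is why fast and precise front-end timing is central to TOF PET readout.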

All these works confirm that ASICs are still needed for specific and more challenging processing tasks, and they serve as examples of very specific tasks where other hardware architectures could fail to meet the strict real-time constraints.

3. Discussion

Earlier prediction and treatment have been driving the acquisition of higher image resolutions as well as the fusion of different modalities. On the other hand, with the demand for healthcare services increasing, providers require medical imaging equipment that acquires images faster and improves image quality. In this context, the rising need for sophisticated software/hardware systems for medical image acquisition and storage, as well as for novel and nearly real-time medical image analysis tools, has led to a change of paradigm when choosing the most efficient hardware architectures while keeping healthcare affordable and accessible.

3.1. Hardware Architectures Comparison

Today, manufacturing technologies are evolving rapidly, and competition between manufacturers has brought better development tools, which make it possible to create solutions equipped with the latest technological advances in a reasonable time. However, each hardware accelerator is designed to be efficient for certain algorithms or implementations but not for others. In fact, choosing a hardware accelerator is often a compromise between several factors, including computing power, speed, development time, power consumption, and price. This makes choosing a suitable hardware accelerator for a specific application relatively difficult.

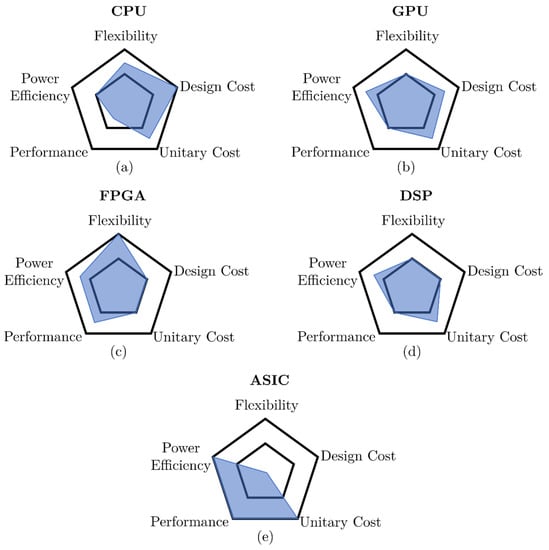

One of the main challenges lies in finding a suitable solution for an application that requires the use of hardware accelerators. It is difficult to define a single indicator that makes it easy to choose one hardware accelerator over the others. A more realistic analysis indicates that processing speed alone is not a sufficient criterion, especially when the candidate accelerators belong to different families. Other factors must be considered, ranging from objective ones such as power consumption and price to more subjective ones related to the skill of the programmer and the design tools available. Figure 6 shows how each of the five hardware architectures discussed in this review excels in different parameters, so aspiring designers should consider the strengths of each one before choosing a platform to develop their prototype and, eventually, their final application. In this sense, the following definitions of the parameters shown in Figure 6 are considered for comparison purposes:

Figure 6.

Summary of the comparison between the five hardware architectures discussed in this paper, based on different parameters to be considered when implementing image processing for medical imaging applications. In detail, (a) Central Processing Units (CPUs) are probably the most widespread technology, as every PC is equipped with one of them. Their availability, flexibility and ease of use make them the perfect prototyping candidates when deploying algorithms or developing code. (b) Graphics Processing Units (GPUs) offer more raw power than a CPU while being less flexible. (c) Digital Signal Processors (DSPs) are cheap and easy to use but do not perform well outside their niche. (d) Field Programmable Gate Arrays (FPGAs) offer a unique combination of flexibility and high performance, as they can be programmed to perform similarly to a given highly specialized platform. (e) Application Specific Integrated Circuits (ASICs) are strongly geared towards high performance and a low unitary cost at high production volumes, so that the low variable costs may offset the high fixed costs.

- Flexibility: Ability to adapt the platform to different scenarios and use cases while keeping performance high enough to be a competitive option (higher is better).

- Design cost: Economic cost of the resources needed to produce and program the device. This includes ease of coding (higher rating means lower requirements).

- Unitary cost: Cost per manufactured unit. This cost does not include coding or implementation expenses and is influenced by common manufacturing runs (higher rating means lower cost).

- Performance: Ability to complete a given (appropriate) task (higher is better).

- Power efficiency: Power required to operate (higher rating means lower power consumption).
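One possible way to combine the five parameters above for a given application is a weighted score, where the weights express how much each parameter matters for that application. The sketch below is only an illustration under stated assumptions: the 1 to 5 rating scale, the weights, and the two candidates (`platform_a`, `platform_b`) are hypothetical placeholders and do not reproduce the ratings of Figure 6.

```python
CRITERIA = ("flexibility", "design_cost", "unitary_cost",
            "performance", "power_efficiency")


def weighted_score(ratings, weights):
    """Weighted mean of the ratings (higher is better for every criterion)."""
    total_weight = sum(weights[c] for c in CRITERIA)
    if total_weight <= 0:
        raise ValueError("at least one criterion needs a positive weight")
    return sum(ratings[c] * weights[c] for c in CRITERIA) / total_weight


def rank(candidates, weights):
    """Candidate names sorted from best to worst weighted score."""
    return sorted(candidates,
                  key=lambda name: weighted_score(candidates[name], weights),
                  reverse=True)


# Placeholder ratings on a 1-5 scale (hypothetical, not read from Figure 6).
candidates = {
    "platform_a": {"flexibility": 5, "design_cost": 5, "unitary_cost": 4,
                   "performance": 2, "power_efficiency": 2},
    "platform_b": {"flexibility": 1, "design_cost": 1, "unitary_cost": 3,
                   "performance": 5, "power_efficiency": 5},
}

prototyping = dict.fromkeys(CRITERIA, 1)  # all criteria equally important
embedded = {**prototyping, "performance": 3, "power_efficiency": 3}

print(rank(candidates, prototyping))  # ['platform_a', 'platform_b']
print(rank(candidates, embedded))     # ['platform_b', 'platform_a']
```

The same candidates rank differently once performance and power efficiency are weighted more heavily, which reflects that the preferred platform depends on the requirements of the application. Such a score does not capture subjective factors such as programmer skill or tool availability.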

CPUs have typically been chosen as the most common platform to code or develop on due to their inherent flexibility and the ease of programming new methods. Additionally, their computational performance has increased considerably in the past decades (Figure 6a).

GPUs offer a more dedicated technology that improves certain computational tasks and algorithms while maintaining a certain amount of flexibility (Figure 6b). However, the development and implementation of algorithms on GPU architectures was very complex until the development of a unified architecture, which enabled better load balancing at the expense of more complex hardware. Furthermore, the use of GPUs by a broader public has driven the development of new functionalities as well as the creation of programming standards and highly optimized frameworks such as OpenACC, Caffe, TensorFlow or PyTorch.

DSPs specialize, as mentioned earlier, in digital signal processing, and as such, are the best performers in that field. Nevertheless, they barely offer any flexibility, so they are often used together with other architectures in composite designs where fast digital signal processing is desired, yet it is not the only kind of processing performed (Figure 6c).

FPGAs have lower performance, higher power consumption and a higher unitary cost than ASICs, but they offer higher flexibility, lower design effort and a shorter time to market. Moreover, since FPGA design times are considerably shorter than those of dedicated ASICs, FPGAs are also used to prototype ASIC designs. Compared to CPUs and GPUs, FPGA performance can be higher, but at the cost of a higher design effort (Figure 6d). In addition, it is possible to simulate functionality in the early stages of the design and validation process [106], including the hardware–software interactions and communication with other devices. These features make FPGA-based devices ideal for a wide range of applications, from prototyping to aerospace and defense [164,165]. In general, FPGAs are used in communications, video, and image processing because of their parallelism, which allows them to meet real-time requirements.

ASICs provide smaller form factors, higher performance, and lower power consumption, since they are tailored to a specific application and implemented based on custom design specifications. However, ASICs have a long time to market, as mask layout and manufacturing steps are needed, with the subsequent risks of lost revenue due to being late to market, limited implementation flexibility and future obsolescence. In summary, ASICs should only be considered by experienced engineers developing high-volume, application-specific devices, especially as they only pay off in very well tested systems/subsystems where a possible change or update does not make much sense and, above all, security is not compromised (Figure 6e).
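The point that ASICs should only be considered for high-volume devices can be illustrated with a simple break-even calculation between an option with high fixed costs and low per-unit costs and an option with the opposite profile. The following is a minimal sketch under a linear cost model; all cost figures are hypothetical and serve only to show the arithmetic.

```python
import math


def total_cost(fixed_cost, unit_cost, volume):
    """Linear cost model: one-off fixed costs plus a constant cost per unit."""
    return fixed_cost + unit_cost * volume


def break_even_volume(fixed_a, unit_a, fixed_b, unit_b):
    """Smallest volume at which option A costs no more than option B.

    Returns None when A never catches up with B.
    """
    if fixed_a <= fixed_b and unit_a <= unit_b:
        return 0  # A is never more expensive
    if unit_a >= unit_b:
        return None  # A starts more expensive and never catches up
    return math.ceil((fixed_a - fixed_b) / (unit_b - unit_a))


# Hypothetical figures (illustrative currency units, not from the source).
ASIC_FIXED, ASIC_UNIT = 2_000_000, 10
FPGA_FIXED, FPGA_UNIT = 100_000, 200

volume = break_even_volume(ASIC_FIXED, ASIC_UNIT, FPGA_FIXED, FPGA_UNIT)
print(f"break-even at {volume} units")  # break-even at 10000 units
```

With these assumed figures, the ASIC only becomes the cheaper option beyond 10,000 units; below that volume, its fixed costs dominate the total cost.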

Table 1 provides a summary comparison chart for all five hardware architectures discussed in this review, including a brief overview of the architecture, the specific processing characteristics, programming capabilities, supported peripherals, strengths, weaknesses, and most common medical applications.

Table 1.

Summary comparison chart for CPU, GPU, DSP, FPGA and ASIC hardware architectures.

Although each hardware architecture has been analyzed separately, nowadays it is difficult to find any of the five types of devices discussed in this paper working independently of the others in image processing pipelines. An example of this is GPUs, which also need memory and usually work together with a CPU that manages data transactions. Moreover, there is frequently no optimal solution for a specific application, but rather a well-suited compromise between different requirements. It is also worth bearing in mind how these options overlap in many shared applications. As shown in Table 1, developers can often base their designs on several or all of the options, either alone or, often, in combination.

On the other hand, a recent trend of using FPGAs as partially reconfigurable, highly specialized circuits has put them in the spotlight. MPSoC devices are characterized by integrating different elements on the same chip. Although they have less computational power than CPUs or GPUs, they are a very flexible solution when combined with the programmable logic of an FPGA. In addition, current microprocessors integrate SIMD units, making these devices more commonly used for real-time image processing. These platforms tend to use the FPGA fabric to speed up algorithm implementations while leveraging their onboard CPU to perform general tasks or run software applications. Their advantages include the ability to provide high-speed customizable solutions due to the parallelism they allow in the design. Having programmable logic in the same device makes it possible to design hardware accelerators that combine the advantages of FPGAs with those of multicore systems.

Semiconductor device manufacturing requires increasingly complex techniques to allow the integration of a greater number of transistors, functional blocks, memories and buses on the same chip, yielding devices with higher computational performance [166]. This increases the cost of most CPUs, GPUs, MPSoCs or FPGAs but also allows higher performance and the ability to parallelize and speed up algorithms, as well as greater flexibility in the different fields of application.