Abstract

Perspective correction of images is an important preprocessing task in computer vision applications; it resolves distortions caused by, for example, the shooting angle. This paper proposes a hardware implementation of perspective transformation based on central projection, which is simpler than the homography transformation method. In particular, it does not need to solve complex equations, so no software assistance is required. The design can be flexibly configured with different degrees of parallelism to meet different speed requirements. Implemented on the Xilinx Zynq-7000 platform, the design requires 2893 Look-up Tables (LUTs) when the parallelism is one and can process 640 × 480 video at 20 Hz in real time. When the parallelism is eight, it can process 157 Hz video and requires 11,223 LUTs. These results show that the proposed design can meet the needs of practical applications.

1. Introduction

With the development of science and technology, the applications of images and videos in daily life are more and more extensive. However, due to the shooting angle and other issues, there are often deviations between the captured image and the real image, including perspective distortion [1] and barrel distortion [2]. Barrel distortion can be corrected by adding optical lenses [3] or using mathematical algorithms [4]. The perspective distortion is greatly affected by the shooting angle and shooting distance, and the degree of distortion of the captured picture is uncertain; thus, mathematical algorithms are often used to compensate. This paper mainly discusses the perspective transformation of images.

The perspective transformation of images has a wide range of application scenarios. In [1,5], it is used for license plate recognition: the license plate image with perspective distortion is corrected into a rectangle, which increases recognition accuracy. In [6,7,8,9,10,11,12,13,14], perspective transformation is applied to projectors to correct the distortion of the projected image caused by poor projector placement. In [15], it is applied to architectural engineering imaging measurement, which helps to better record the important features and functions of a building. In addition, perspective transformation can be used to correct various types of text images; for example, Refs. [16,17] correct Chinese and English documents, respectively. There are also some hardware designs: Ref. [18] presents an Application Specific Integrated Circuit (ASIC) design that addresses the optical distortion and perspective distortion present in a microscopic system, and Refs. [19,20,21] are implemented on Field Programmable Gate Arrays (FPGAs) to generate the Bird’s-Eye View (BEV), correct the input video from two cameras, and detect obstacles, respectively.

The transformation of an image includes two parts: coordinate transformation and image interpolation. Coordinate transformation usually uses either homography transformation or linear average stretching. Linear average stretching corrects the image according to the difference between representative points of the image before and after the transformation, and its variable range is limited. In comparison, the homography transformation is more widely used. Among the papers mentioned above, Refs. [9,18] use linear average stretching, and [1,5,6,7,8,10,11,12,13,14,15,16,17,19,20,21] use homography transformation. However, the homography transformation relies on a transformation matrix that contains eight unknowns, and solving for it is very complicated. Most of the existing schemes implement the homography transformation purely in software [1,5,6,7,8,10,11,12,13,14,15,16,17]. A small number of papers implement the homography transformation in hardware [19,20,21], but they all require software assistance to calculate the transformation matrix. This paper proposes a hardware design of perspective transformation based on central projection. It requires neither the calculation of a transformation matrix nor software-assisted calculation, and the hardware can independently perform various basic transformations of the image.

The coordinates of the pixel points in the perspective view obtained by coordinate transformation are not necessarily integers, so an interpolation algorithm is required to calculate the pixel value at such a point. Common interpolation algorithms in image processing include nearest neighbor interpolation [22], linear interpolation [23], bilinear interpolation [24], bicubic interpolation [25] and spline interpolation [26]. Nearest neighbor interpolation is the simplest: it directly uses the pixel value of the integer point closest to the current point as the pixel value of the current point. Linear interpolation is widely used in mathematical calculations and computer graphics and makes full use of the information of other nearby pixels. It can recover a more accurate image, but it requires a division operation, and it needs more resources and time than nearest neighbor interpolation. Both bilinear interpolation and bicubic interpolation are developed on the basis of linear interpolation and are suitable for image processing with high precision requirements. The images obtained with these two algorithms are clearer, but the calculation is more complicated and less suited to hardware implementation. Spline interpolation can produce a finer picture than bilinear and bicubic interpolation in some specific cases, but it is also computationally complex. The hardware structure proposed in this paper is compatible with any of the above-mentioned interpolation algorithms, but to keep resource occupation low and maintain real-time performance, this paper only discusses and designs nearest neighbor interpolation.

In more detail, this paper has made the following contributions:

- The transformation method of the central projection has been improved, and two variable parameters have been added, so that it can achieve a similar effect to the homography transformation.

- The hardware design of perspective transformation based on the central projection transformation is proposed. Compared with the most used homography transformation, it is simpler, and the hardware does all the calculations.

- It has excellent compatibility. According to actual application scenarios, the calculation speed and required resources can be flexibly adjusted by increasing or decreasing the number of pixel calculation modules. When the degree of parallelism is one, it requires 2893 Look-up Tables (LUTs) and can process 640 × 480 video at 20 Hz; when the degree of parallelism is eight, it requires 11,223 LUTs and can process 157 Hz video streams.

- The design of this paper is practical; it can complete many image transformations, such as scaling, translation, tilt correction, BEV and rotation. The specific implementation method of each transformation is given.

The rest of the paper is organized as follows: Section 2 describes the perspective transformation method based on central projection and nearest neighbor interpolation, the specific hardware design is described in Section 3, the results and discussions are in Section 4, and the conclusion is in Section 5.

2. Perspective Transformation and Interpolation Algorithm

To realize the correction of the image more easily, this paper directly performs the perspective transformation of the image according to the geometric relationship of the image before and after the central projection [27,28].

2.1. Perspective Transformation Based on Central Projection

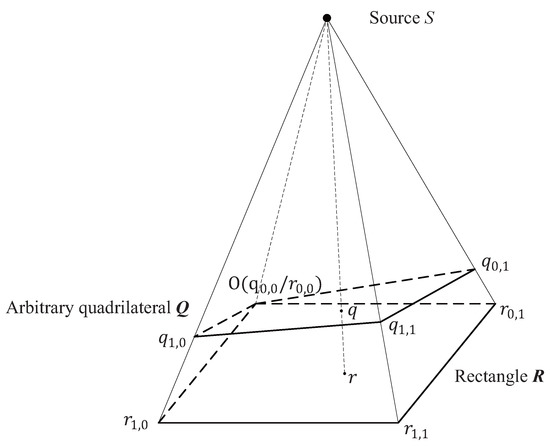

Assuming that there is a light source S and an arbitrary quadrilateral , a rectangle is obtained under the projection of the light source S. A schematic diagram is shown in Figure 1.

Figure 1.

Schematic diagram of the central projection.

It can be observed that each point in the mapped image can find its corresponding point in the original image . In order to calculate the value of the pixel in the transformed image, the best way is to find the mapping relationship from to .

To simplify the calculation, it is assumed that the four vertices , , and of are represented in the three-dimensional coordinate system as , , and , respectively. Since the concern is the coefficient of the linear combination of vectors and at any point r in the quadrilateral , such a representation does not affect the final result. Any point r in the quadrilateral can be expressed as

where and are the coefficients of the linear combination of vectors, independent of the coordinate system.

Similarly, any point q in the original quadrilateral can be expressed as

where and are the coefficients of the linear combination of vectors.

The four vertices in the original image are coplanar, so satisfy

where and are the coefficients of the linear combination of vectors, and the values of both are constants for a given quadrilateral.

In addition, r can also be expressed as

where t is the multiplication coefficient.

Thus, according to the relationship between the four vertices of the image before and after transformation, four equations can be obtained,

where , , and are multiplication coefficients.

Since and coincide at point O, . Multiply the left and right sides of Equation (5) by the normal vector of the plane and solve it to obtain

Because , and are linearly independent, their coefficients are all 0, so

Knowing the pixel coordinates in the transformed image, the corresponding pixel coordinates in the original image can be calculated from Equation (10). Combined with a reasonable interpolation algorithm, we can restore the transformed image from the original image.

The central projection transformation can be used to correct the distorted image into a rectangle or to perform geometric transformations such as the rotation of the original image, in order to preserve the background outside the distorted subject in the original image and adjust the size of the transformed image. On the one hand, and in Equation (10) can take negative values, which makes the possible pixel values of image and extend outward, and the transformed image can retain the background. On the other hand, parameters and are introduced to control the size of the transformed image , and the values of and need to be less than . Assuming that the width of the transformed image is W, the height is H, and the position of the pixel in the image is , then the formula for calculating of this point is

2.2. Nearest Neighbor Interpolation

The nearest neighbor interpolation is the simplest interpolation algorithm. This method is used to search for the nearest integer pixel point to the current pixel point, and directly use the pixel value of this point as the current point pixel value. The advantage of this method is that the hardware implementation is simple.
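As a software illustration of how backward mapping and nearest neighbor interpolation fit together, the following minimal sketch fills each pixel of the transformed image from the closest pixel of the original image. The function name `warp_nearest`, the caller-supplied `mapping` callable (standing in for the coordinate transformation of Section 2.1) and the `fill` value for out-of-range positions are assumptions made for this example only; they are not part of the proposed hardware.

```python
import math
from typing import Callable, List, Tuple

Image = List[List[int]]  # row-major grid of pixel values


def warp_nearest(
    src: Image,
    out_w: int,
    out_h: int,
    mapping: Callable[[int, int], Tuple[float, float]],
    fill: int = 0,
) -> Image:
    """Backward-map every output pixel and sample the nearest source pixel.

    `mapping(u, v)` is a hypothetical stand-in for the coordinate
    transformation: it returns the (x, y) position in the original image
    that corresponds to pixel (u, v) of the transformed image.
    """
    src_h, src_w = len(src), len(src[0])
    out = [[fill] * out_w for _ in range(out_h)]
    for v in range(out_h):
        for u in range(out_w):
            x, y = mapping(u, v)
            # Round half up to the nearest integer pixel position.
            xn = math.floor(x + 0.5)
            yn = math.floor(y + 0.5)
            # Positions outside the original image keep the fill value.
            if 0 <= xn < src_w and 0 <= yn < src_h:
                out[v][u] = src[yn][xn]
    return out
```

For instance, with a 2 × 2 source image and the mapping `lambda u, v: (u * 0.4, v * 0.4)`, a 4 × 4 output repeats each source pixel in a 2 × 2 block.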

3. The Hardware Implementation

The structure proposed in this paper is suitable for images of different sizes, and only needs to change , , H and W in Equation (11) for images of different sizes. Next, an image with the size of in format will be used as an example to introduce the specific design.

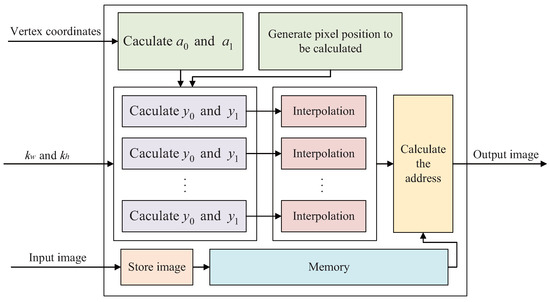

The overall hardware structure is shown in Figure 2. The design is described in Verilog HDL, adopts fixed-point arithmetic, and retains nine decimal places during the calculation. First, the pixels of the original image are stored in memory in order from left to right and top to bottom; the memory can be Block Random Access Memory (BRAM), Double Data Rate (DDR) memory or other memory. Second, according to the input coordinates of the four vertices, and are calculated by Equation (3); the vertex coordinates can be the vertices of the distorted subject or manually input coordinates. Third, the parallelism is determined. In this paper, parallelism refers to the number of groups of and calculation modules and interpolation modules, and a parallelism of n means that n groups participate in the computation at the same time. As the parallelism increases, the throughput of the design increases and the required hardware resources also increase; each doubling of the parallelism roughly doubles the throughput. This allows the design to meet the needs of different application scenarios. To complete the calculation correctly, at least one group is required, and the number of groups cannot exceed the number of clock cycles required by the and calculation modules and the interpolation module. This is because the addresses of two adjacent valid pixel points must be generated at least one clock cycle apart. Fourth, the pixel positions in the transformed image are sequentially assigned to the parallel and calculation modules; Equation (11) is used to obtain and , and then Equation (10) is used to obtain and . Finally, an interpolation algorithm is used to calculate the coordinates of the pixel closest to the point, and the pixel value is then read from memory.
In the specific hardware design, we also use some techniques to reduce the hardware cost [29,30], including optimizing the calculation process to reduce the number of adders and multipliers and multiplexing the dividers, which require large resources; the specific optimizations are introduced in detail below.

Figure 2.

The overall hardware structure of perspective transformation.

3.1. Calculate and

The values of and are determined by Equation (3), assuming that the direction is the x axis, and the direction is the y axis. The coordinates of the four points , , and are expressed as , , and respectively, then

Let , , , , , , then it can be solved by Equation (12)

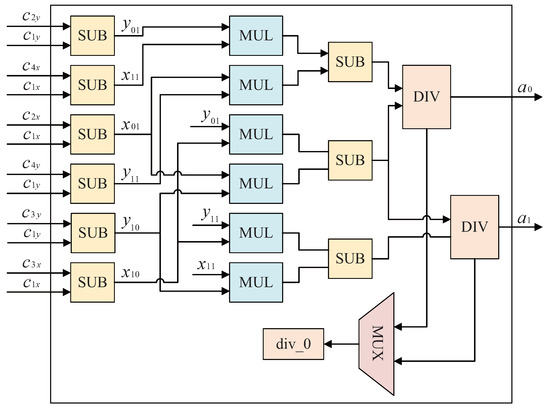

From Equations (12) and (13), directly calculating and from four vertex coordinate values requires 8 multipliers, 10 subtractors and 2 dividers. In order to reduce the hardware cost, on the one hand, the calculation process can be optimized. It is noted that the denominators of the two formulas in Equation (13) are the same, so two multipliers and one subtractor can be reduced. On the other hand, the values of and only need to be calculated once when performing a perspective transformation on an image, and the latency has little impact on throughput. Therefore, the method of time division multiplexing is used to calculate the two divisions separately and share a divider. The optimized design is shown in Figure 3, which only needs six multipliers, nine subtractors and one divider to complete the calculation of and .

Figure 3.

Hardware structure for calculating and .
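The operation count of the optimized structure (six multipliers, nine subtractors and one shared divider) is what Cramer's rule gives for a 2 × 2 linear system with a shared denominator. The sketch below is a software illustration under that assumption: it supposes that the coplanarity condition reduces to expressing one vertex offset as a linear combination of two edge vectors. The vertex ordering, the names `solve_coefficients`, `a` and `b`, and the use of exact fractions instead of fixed-point hardware arithmetic are all choices made for this example.

```python
from fractions import Fraction
from typing import Tuple

Point = Tuple[int, int]


def solve_coefficients(
    p0: Point, p1: Point, p2: Point, p3: Point
) -> Tuple[Fraction, Fraction]:
    """Solve p3 - p0 = a * (p1 - p0) + b * (p2 - p0) by Cramer's rule.

    The vertex roles are an assumption of this sketch, not taken from
    the paper's equations.
    """
    # Six coordinate differences: six subtractions.
    a11, a21 = p1[0] - p0[0], p1[1] - p0[1]
    a12, a22 = p2[0] - p0[0], p2[1] - p0[1]
    b1, b2 = p3[0] - p0[0], p3[1] - p0[1]

    # Shared denominator, computed once: two multiplications, one subtraction.
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("edge vectors are collinear; no unique solution")

    # Two numerators: four multiplications, two subtractions.
    num_a = b1 * a22 - a12 * b2
    num_b = a11 * b2 - b1 * a21

    # Two divisions by the same denominator; in hardware these could be
    # issued one after the other on a single time-multiplexed divider.
    return Fraction(num_a, det), Fraction(num_b, det)
```

In total the sketch uses six multiplications and nine subtractions, and both quotients share one denominator.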

3.2. Generate the Pixel Position to Be Calculated

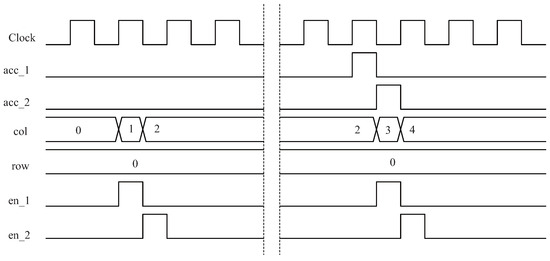

This module is used to generate the x and y axis coordinates of the pixel to be calculated and the enable signal, and complete the scheduled work. Taking the parallel calculation of two groups of and calculation modules and interpolation modules as an example, the timing diagram is shown in Figure 4.

Figure 4.

Timing diagram when there are two sets of and calculation modules and interpolation modules.

In Figure 4, and are inputs; they are the output enable signals of the interpolation modules and are active high, indicating that an interpolation operation is completed. and are outputs, representing the column and row information of the pixel, respectively. and are outputs, which represent the input enable signals of the first and second and calculation modules, respectively. At the beginning, and are pulled high one after another, and the two and calculation modules start to work in succession. After the two modules complete the calculation of their pixel points in turn, and are pulled high, the values of and increase, and a new round of calculation is enabled; this repeats until the complete image is calculated.
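The scheduling behavior described above can be approximated in software as a round-robin assignment of raster-order pixel positions to the parallel groups. The sketch below is a simplified model: the name `schedule_pixels` is hypothetical, and the enable/done handshake of the timing diagram is not modeled, only the order in which positions are handed out.

```python
from typing import Iterator, Tuple


def schedule_pixels(
    width: int, height: int, parallelism: int
) -> Iterator[Tuple[int, int, int]]:
    """Yield (group, column, row) in raster order, round-robin over groups.

    Simplified model: each pixel position goes to the next group in turn;
    the real module waits for the completion signals of the groups.
    """
    if parallelism < 1:
        raise ValueError("at least one calculation group is required")
    index = 0
    for row in range(height):
        for col in range(width):
            yield index % parallelism, col, row
            index += 1
```

With two groups, consecutive pixels alternate between group 0 and group 1, which mirrors the two enable signals being raised one after the other.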

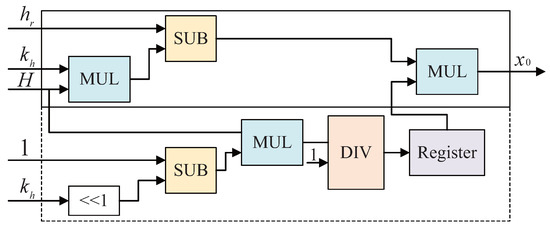

3.3. Calculate and

To calculate the values of and , first obtain and from Equation (11), and then calculate Equation (10). The hardware structure for calculating is shown in Figure 5, and the same structure can also be used to calculate . According to Equation (11), a division operation is required for each calculation of and , and the delay of the divider is very high. For a certain and , the denominator is a fixed value, so in the first operation, the denominator is calculated and its reciprocal is stored in the register. Then, the subsequent calculations can change the division operation into a multiplication operation, which is represented by a dotted line.

Figure 5.

Hardware structure for calculating ; the dashed part is run only once.
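The idea of replacing a repeated division by one stored reciprocal and a multiplication can be sketched as follows. This is an illustration only: the class name `ReciprocalScaler`, the integer fixed-point format and the default of 24 fractional bits are assumptions of the example, not the exact arithmetic of the proposed circuit.

```python
class ReciprocalScaler:
    """Divide many numerators by one fixed denominator via a cached reciprocal."""

    def __init__(self, denominator: int, frac_bits: int = 24) -> None:
        if denominator <= 0:
            raise ValueError("denominator must be positive")
        self.frac_bits = frac_bits
        # The only true division: performed once and stored, like the
        # part of the structure that runs only in the first operation.
        self.reciprocal = (1 << frac_bits) // denominator

    def scale(self, numerator: int) -> int:
        """Return numerator / denominator as a fixed-point integer.

        The result has `frac_bits` fractional bits; every call after
        construction costs one multiplication and no division.
        """
        return numerator * self.reciprocal

    def to_float(self, fixed: int) -> float:
        """Convert a fixed-point result back to a float for inspection."""
        return fixed / (1 << self.frac_bits)
```

Because the reciprocal is truncated, the result carries a small error that shrinks as `frac_bits` grows; this is the usual trade-off between divider latency and bit width.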

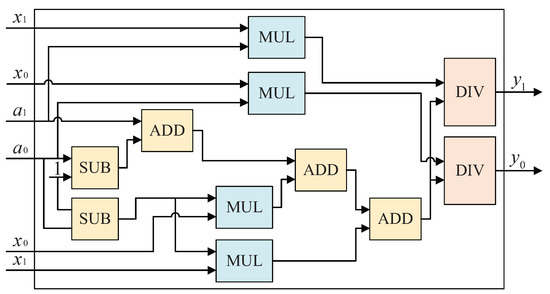

The hardware structure for calculating and is shown in Figure 6. It is worth noting that the bit width of the divider used in Figure 6 is the same as that of the divider used in Figure 5, and both used the same time division multiplexing technique as in Figure 3. and share a divider, and and share a divider; thus, the structures shown in Figure 5 and Figure 6 use a total of two dividers. The values of and are calculated from Equation (10). To increase the operating frequency, avoid executing too much combinational logic in a single clock cycle when calculating the denominator of Equation (10). Therefore, when calculating and , two additions are distributed over two clock cycles, and one addition operation is performed in each clock cycle.

Figure 6.

Hardware structure for calculating and .

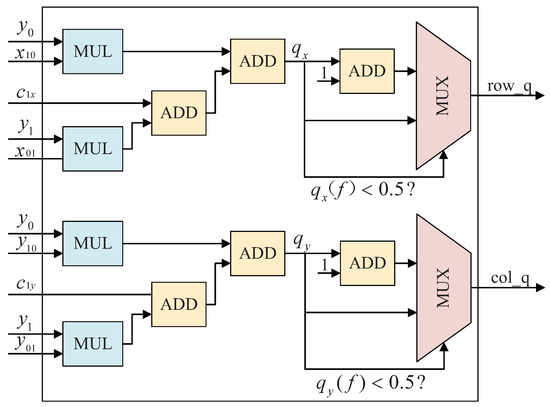

3.4. Interpolation

After obtaining the values of and , it is necessary to use Equation (2) to calculate the real coordinates of the pixel in the original image, and then use the interpolation algorithm to obtain the pixel value; a simple and efficient nearest neighbor interpolation is used in this paper. Let the coordinates of the corresponding point q in the original image be , by Equation (2)

The hardware structure is shown in Figure 7. First, use Equation (14) to calculate and in parallel. Note at this step that the values of , , and have been calculated in the structure shown in Figure 3, as , , and , respectively. Therefore, the register can be used for buffering, and it is input as a known value when calculating Equation (14), which can reduce four subtractions. The coordinates at this time are not necessarily integers. It is necessary to use an interpolation algorithm to replace its value with the value of the nearest pixel. The value of the pixel on which side it should take is determined according to rounding, so the position of the nearest pixel is related to the value of the fractional part of and . Take out the fractional part of and compare it with . If it is greater than or equal to , the x-axis coordinate of the nearest pixel is the integer part of , otherwise it is the integer part of . The calculation method of the y-axis coordinate is the same.

Figure 7.

Calculate the coordinates of the nearest pixel using an interpolation algorithm. represents the fractional part of , represents the fractional part of , and represent the x-axis and y-axis coordinates of the closest pixel, respectively.
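The rounding step can be illustrated with fixed-point integers. The sketch assumes nine fractional bits (one reading of the nine retained decimal places) and a threshold of one half, as implied by rounding; `FRAC_BITS`, `to_fixed` and `nearest_index` are hypothetical names for this example.

```python
FRAC_BITS = 9                      # assumed number of fractional bits
HALF = 1 << (FRAC_BITS - 1)        # fixed-point representation of 0.5
FRAC_MASK = (1 << FRAC_BITS) - 1   # mask that selects the fractional part


def to_fixed(value: float) -> int:
    """Convert a real coordinate to fixed point (helper for the example)."""
    return int(round(value * (1 << FRAC_BITS)))


def nearest_index(coord_fixed: int) -> int:
    """Return the integer coordinate nearest to a fixed-point coordinate.

    The fractional part is compared with one half: if it is greater than
    or equal to one half, the integer part is incremented; otherwise the
    integer part is used unchanged.
    """
    integer_part = coord_fixed >> FRAC_BITS
    fraction = coord_fixed & FRAC_MASK
    return integer_part + 1 if fraction >= HALF else integer_part
```

Only a comparison and an optional increment are needed, which is why this interpolation is cheap in hardware.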

3.5. Calculate the Address

To take out the value of the nearest pixel, it is also necessary to calculate its address in the memory. For the original image with a size of ,

then, the pixel value of the address is taken out from the memory, and this value is the pixel value of the transformed image.
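Since the original image is stored from left to right and top to bottom, the address follows the usual row-major rule. A minimal sketch, assuming one memory word per pixel and a hypothetical `base` offset for the start of the image buffer:

```python
def pixel_address(x_n: int, y_n: int, width: int, base: int = 0) -> int:
    """Row-major address of pixel (x_n, y_n) in an image `width` pixels wide.

    Assumes one memory word per pixel; `base` is the (hypothetical)
    address of the first pixel of the image.
    """
    if not 0 <= x_n < width:
        raise ValueError("x coordinate outside the image row")
    if y_n < 0:
        raise ValueError("y coordinate must be non-negative")
    return base + y_n * width + x_n
```

For a 640 × 480 image, the first pixel of the second row is at address 640 and the last pixel of the image is at address 307,199.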

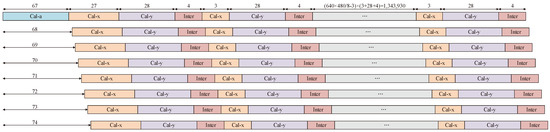

3.6. Timing Diagram of the Overall Structure

Timing diagrams are a good way to show how each module works and its time dependencies [31,32]. Among the above modules, the structure shown in Figure 3 is executed only once per image, so its delay has little effect on the overall calculation speed. To ensure accuracy, the divider of this module is 32 bits wide, and the overall delay is 67 cycles. The dividers in Figure 5 and Figure 6 are 24 bits wide; the delay of the structure in Figure 5 is 27 cycles in the first calculation and 3 cycles in subsequent calculations, and the delay of the structure in Figure 6 is 28 cycles. The structure shown in Figure 7 has a delay of four cycles. Taking a parallelism of eight as an example, the timing diagram for calculating an image in this design is shown in Figure 8.

Figure 8.

Timing diagram when parallelism is eight. Among them, Cal-a represents calculating and using the structure in Figure 3, Cal-x represents calculating and using the structure in Figure 5, Cal-y represents calculating and using the structure in Figure 6, and Inter represents the interpolation algorithm using the structure in Figure 7.

4. Results and Discussion

The proposed design was packaged as an Intellectual Property (IP) core for testing. On the one hand, the functional verification is carried out, and the achievable functions are introduced and verified using the FPGA development board. On the other hand, the performance of the design of this paper is compared with the existing scheme, including hardware resources and processing speed.

4.1. Functional Verification

First, the hardware circuit is simulated to verify its functional correctness. The original image is converted into a binary file and input to the hardware circuit. After the simulation is completed, the output binary file is converted back into an image and stitched together with the original image to demonstrate the features of the design. Then, the FPGA development board is used to verify the circuit and to demonstrate its practicability under real conditions.

4.1.1. Features

Depending on the input, the design of this paper can perform different functions, including scaling, translation, tilt correction, rotation and BEV.

- (1)

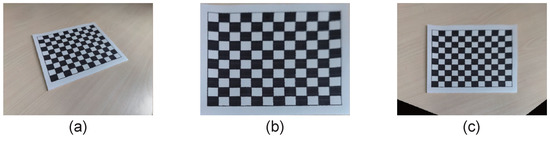

- Scaling

Adjust the value of and to change the size of the transformed image. When their value is greater than 0, it is reduced, and when it is less than 0, it is enlarged. Generally, , otherwise it will cause the picture ratio to be out of balance. The zoom in and zoom out of the picture are shown in Figure 9.

Figure 9.

Scaling of the image. (a) is the original image, (b) is the enlarged image, set , (c) is the reduced image, and set .

- (2)

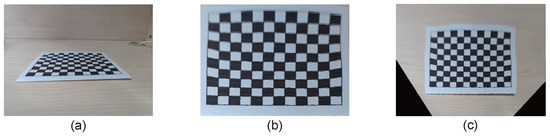

- Translation

The translation of the image can be achieved by adjusting the coordinates of the four vertices of the original image. Because the original image and the transformed image are relative to each other, if the x axis coordinates of the four vertex coordinates of the original image are increased at the same time, the transformed image will move upward, and if it is reduced, the image will move downward. As the y coordinate increases, the image moves to the left, otherwise it moves to the right. Set , first increase the x axis coordinates of the four vertices of the original image by 50, and then decrease the y axis coordinates by 100. The transformed images are shown in Figure 10.

Figure 10.

Translation of the image. (a) is the original image, (b) is the transformed image obtained by increasing the x axis coordinate of the original image vertex by 50 and (c) is the transformed image obtained by reducing the y axis coordinate by 100 on the basis of (b).

- (3)

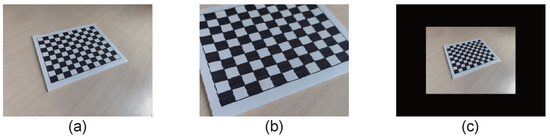

- Tilt correction

Due to the shooting angle, the black and white grid in the original image is distorted. Input the coordinates of the four vertices of the distorted object in turn, and select the appropriate and values to correct them. Set and , respectively, and the converted images are shown in Figure 11. It can be observed that when , the corrected image has only the target subject and no background, which loses some image elements and does not conform to human habits. After adding and , the corrected image can be adjusted more flexibly.

Figure 11.

Correction of the image. (a) is the original image, (b) is the corrected image when and (c) is the corrected image when .

- (4)

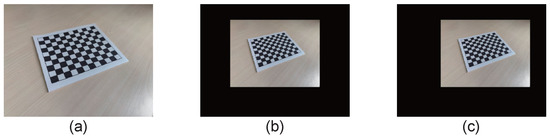

- BEV

The method of generating BEV is the same as that of tilt correction. It is necessary to input the coordinates of the four vertices of the target to be transformed and select the appropriate values of and . The results of BEV are shown in Figure 12.

Figure 12.

BEV of the image. (a) is the original image, (b) is the BEV when and (c) is the BEV when .

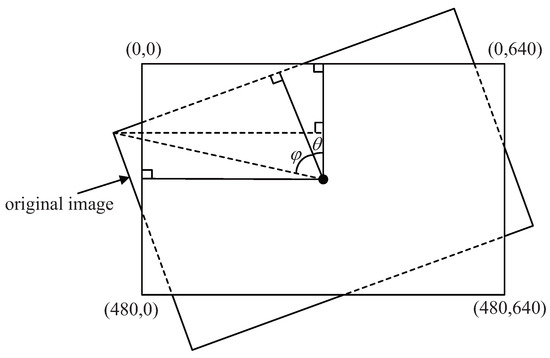

(5) Rotation

The design also implements image rotation by entering specific vertex coordinates of the original image. The size of the image is , so the vertex coordinates of the rotated image are , , and . Assuming that the angle of image rotation is , the schematic diagram of rotation is shown in Figure 13, where .

Figure 13.

Schematic diagram of image rotation.

Then, the formula for calculating the coordinates of the original image can be obtained,

In order to test the design proposed in this paper, an external test circuit is built to implement Equation (16); it uses a Look-up Table that stores all possible data in Read Only Memory (ROM). The precision of the rotation angle is set to 1° and its value range to [−179,180], so a total of 360 × 8 = 2880 data need to be stored. These data are stored in ROM as 12-bit signed numbers, which requires 54K BRAMs on the FPGA. The eight data corresponding to each angle are stored in the ROM from top to bottom in the order of Equation (16). The address of the first datum is

where is the angle value to be rotated, and the addresses of other data can be obtained by successively accumulating.

Set the rotation angles to 30° and −135°, respectively, , and input the coordinates obtained in the Look-up Table into the design of this paper. The results are shown in Figure 14.

Figure 14.

Rotation of the image. (a) is the original image, (b) is the image rotated by 30° and (c) is the image rotated by −135°.
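The look-up table used in the rotation test can be modeled in software. The sketch below is hedged in several ways: it assumes a 640 × 480 image, rotation about the image center, a particular corner order, and eight consecutive words per angle starting at angle −179; the names `rotated_corners`, `build_rom` and `base_address` are hypothetical and the formulas are not copied from the paper's equations.

```python
import math
from typing import List

WIDTH, HEIGHT = 640, 480           # assumed image size
ANGLE_MIN, ANGLE_MAX = -179, 180   # 1 degree steps, as in the test setup
WORDS_PER_ANGLE = 8                # four vertices, two coordinates each


def rotated_corners(angle_deg: int) -> List[int]:
    """Return [x0, y0, x1, y1, x2, y2, x3, y3] for the rotated corners.

    Assumption of this sketch: the corners rotate about the image center.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = (WIDTH - 1) / 2, (HEIGHT - 1) / 2
    corners = [(0, 0), (WIDTH - 1, 0), (0, HEIGHT - 1), (WIDTH - 1, HEIGHT - 1)]
    words: List[int] = []
    for x, y in corners:
        dx, dy = x - cx, y - cy
        words.append(round(cx + dx * cos_t - dy * sin_t))
        words.append(round(cy + dx * sin_t + dy * cos_t))
    return words


def build_rom() -> List[int]:
    """Concatenate the eight words of every angle, lowest angle first."""
    rom: List[int] = []
    for angle in range(ANGLE_MIN, ANGLE_MAX + 1):
        rom.extend(rotated_corners(angle))
    return rom


def base_address(angle_deg: int) -> int:
    """Address of the first word for an angle; the other seven follow it."""
    if not ANGLE_MIN <= angle_deg <= ANGLE_MAX:
        raise ValueError("angle outside the stored range")
    return (angle_deg - ANGLE_MIN) * WORDS_PER_ANGLE
```

Under these assumptions the table holds 360 × 8 words, and every word fits in a 12-bit signed number because the rotated corners stay within a few hundred pixels of the image.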

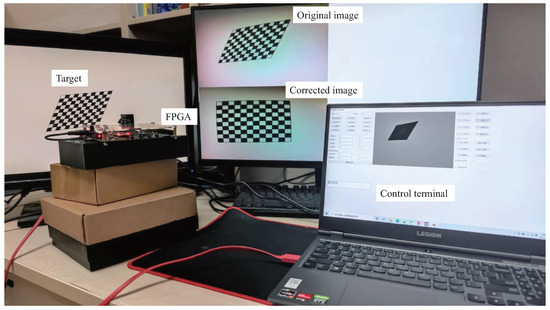

4.1.2. Verify on FPGA

The design of this paper is verified on the FPGA, as shown in Figure 15. In this test environment, the real-time video can be processed, the OV5640 camera is used to capture the image, the DDR is used to cache the image, and the original video and the processed video can be displayed on the screen through the High Definition Multimedia Interface (HDMI) at the same time. In addition, the system is connected with the notebook computer through the Universal Asynchronous Receiver/Transmitter (UART), and the whole system can be controlled by the control terminal running on the notebook computer. The experimental results demonstrate that the design can correct images with perspective distortion in real time.

Figure 15.

Test environment for the proposed design.

4.2. Analysis of Performance

The proposed design has greater flexibility. The parallel operation of multiple and calculation modules can be realized by simply modifying the pixel scheduling module. With the increase in the degree of parallelism, the calculation speed increases obviously, and the required resources increase at the same time. We analyzed the performance of 1-way parallel and 8-way parallel, called and , respectively, and compared with the existing schemes, as shown in Table 1.

In [18], the input image is divided into grids, and the image is then corrected within each grid. A hardware design scheme is also given, which can resolve optical distortion and perspective distortion to a certain extent. The homography transformation method is used in [19,20,21]; it is realized by matrix multiplication, and different transformation matrices realize different perspective transformations. The result obtained by this method matches the observation habits of the human eye, but obtaining the transformation matrix requires solving a system of linear equations in eight unknowns, which is extremely difficult to do in hardware. Thus, these designs all solve for the transformation matrix in software first, and then use hardware to calculate the corrected image. Specifically, Ref. [19] supports video stream input in the Video Graphics Array (VGA) format and generates the required BEV. In [20], a Full High Definition (FHD) video stream at 30 frames per second is supported; the parameters are calculated by software and then passed to the hardware module, which corrects the pictures captured by two cameras. The design in [21] generates a BEV that is used for obstacle detection. Compared with the existing designs, the design of this paper uses the central projection to realize the perspective transformation, which also matches the habits of the human eye, and does not introduce complex calculations; thus, the overall required resources are few and no software calculation is required. At the same time, the structure can flexibly choose the degree of parallelism to achieve different computing speeds: with 1-way parallelism it can process VGA video at 20 frames per second, and with 8-way parallelism it can process VGA video at 157 frames per second. The required resources are also smaller than those of [18]. The hardware resources required at the same speed are basically the same as those of [19,20,21], but all calculations are implemented entirely in hardware, which is a great advantage.

Table 1.

Comparison of the performance of different hardware implementations.

| Design | [18] | [19] | [20] | [21] | This work (parallelism 1) | This work (parallelism 8) |
|---|---|---|---|---|---|---|
| Function | OD¹ and PT² | BEV | PT | BEV/PT | BEV/PT | BEV/PT |
| WSRCP³ | No | Yes | Yes | Yes | No | No |
| Platform | ASIC | Zynq-7000 | Stratix III | Virtex 6 | Zynq-7000 | Zynq-7000 |
| LUTs | 30,000 | 2280 | 6987 | 2983 | 2893 | 11,223 |
| Registers | -⁴ | - | 7922 | 5684 | 1376 | 5821 |
| BRAM | - | 4 | - | 0 | 0 | 0 |
| DSP | - | 37 | 128 | 9 | 8 | 64 |
| Video Resolution | HD⁵ (FHD⁶) | VGA⁷ | FHD | VGA | VGA | VGA |
| Maximum frequency | 140 MHz | 100 MHz | 74.25 MHz | - | 215.8 MHz | 212.2 MHz |
| Maximum frame rate | 60 Hz | - | 30 Hz | 30 Hz | 20 Hz | 157 Hz |

¹ OD: Optical Distortion. ² PT: Perspective Transformation. ³ WSRCP: Whether Software is Required to Calculate Parameters. ⁴ -: Not given in the paper. ⁵ HD: High Definition, the resolution is 1366 × 768. ⁶ FHD: the resolution is 1920 × 1080. ⁷ VGA: the resolution is 640 × 480.
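The reported frame rates can be cross-checked with a rough estimate. The sketch assumes that each calculation group needs 3 + 28 + 4 = 35 clock cycles per pixel (the steady-state delays given in Section 3.6), that groups work fully in parallel, and that the one-time setup and memory access overhead are negligible; `estimated_frame_rate` is a hypothetical helper, not a measurement method used in the paper.

```python
def estimated_frame_rate(
    clock_hz: float,
    parallelism: int,
    width: int = 640,
    height: int = 480,
    cycles_per_pixel: int = 3 + 28 + 4,
) -> float:
    """Rough frames per second for a given clock and degree of parallelism.

    Assumes every group finishes one pixel every `cycles_per_pixel` cycles
    and ignores the one-time per-image setup.
    """
    pixels_per_second = clock_hz * parallelism / cycles_per_pixel
    return pixels_per_second / (width * height)


# Maximum frequencies taken from Table 1.
rate_1 = estimated_frame_rate(215.8e6, 1)   # roughly 20 frames per second
rate_8 = estimated_frame_rate(212.2e6, 8)   # roughly 157 frames per second
```

Under these assumptions the estimate agrees with the 20 Hz and 157 Hz figures in Table 1, which suggests that throughput scales almost linearly with the parallelism, apart from the slightly lower maximum frequency of the 8-way design.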

5. Conclusions

This paper presents a hardware design for perspective transformation that can be used to process images in real time. First, a perspective transformation method based on central projection is given and optimized for hardware implementation. Then, its hardware structure is proposed, with several measures taken to minimize resource consumption and reduce latency. To adapt to more situations, the hardware structure is flexible and configurable, supporting different degrees of parallelism to improve the processing speed. Finally, the features of the design are presented and compared with existing hardware designs for perspective correction. The design has an obvious advantage in computational complexity because it does not require solving complex equations, and it can perform operations such as scaling, translation, tilt correction, BEV, and rotation of the image. In addition, the design can process the images captured by the camera in real time: with a parallelism of one and eight, it can process 640 × 480 video at 20 Hz and 157 Hz, respectively.

Future work will complete the ASIC design, continue to optimize resources and speed, and apply the design to computer vision processing systems.

Author Contributions

Conceptualization, R.J. and C.X.; methodology, Z.L.; software, Z.L.; validation, W.W., C.X. and R.J.; formal analysis, C.X.; investigation, W.W.; resources, W.W.; data curation, C.X.; writing—original draft preparation, Z.L.; writing—review and editing, R.J.; visualization, Z.L.; supervision, R.J.; project administration, W.W.; funding acquisition, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Chongqing Natural Science Foundation under Grant cstc2021jcyj-msxmX1090.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| LUTs | Look-up Tables |
| ASIC | Application Specific Integrated Circuit |
| FPGA | Field Programmable Gate Array |
| BEV | Bird’s-Eye View |
| BRAM | Block Random Access Memory |
| DDR | Double Data Rate |
| IP | Intellectual Property |
| ROM | Read Only Memory |
| HDMI | High Definition Multimedia Interface |
| UART | Universal Asynchronous Receiver/Transmitter |
| VGA | Video Graphics Array |
| FHD | Full High Definition |
| OD | Optical Distortion |
| PT | Perspective Transformation |
| WSRCP | Whether Software is Required to Calculate Parameters |
| HD | High Definition |

References

- Dick, K.; Tanner, J.B.; Green, J.R. To Keystone or Not to Keystone, that is the Correction. In Proceedings of the 2021 18th Conference on Robots and Vision (CRV), Burnaby, BC, Canada, 26–28 May 2021; pp. 142–150.

- Kim, T.H. An Efficient Barrel Distortion Correction Processor for Bayer Pattern Images. IEEE Access 2018, 6, 28239–28248.

- Park, J.; Byun, S.C.; Lee, B.U. Lens distortion correction using ideal image coordinates. IEEE Trans. Consum. Electron. 2009, 55, 987–991.

- Xu, Y.; Zhou, Q.; Gong, L.; Zhu, M.; Ding, X.; Teng, R.K. High-speed simultaneous image distortion correction transformations for a multicamera cylindrical panorama real-time video system using FPGA. IEEE Trans. Circuits Syst. Video Technol. 2013, 24, 1061–1069.

- Yang, S.J.; Ho, C.C.; Chen, J.Y.; Chang, C.Y. Practical homography-based perspective correction method for license plate recognition. In Proceedings of the 2012 International Conference on Information Security and Intelligent Control, Yunlin, Taiwan, 14–16 August 2012; pp. 198–201.

- Chae, S.H.; Yoon, S.I.; Yun, H.K. A Novel Keystone Correction Method Using Camera-Based Touch Interface for Ultra Short Throw Projector. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–12 January 2021; pp. 1–3.

- Kim, J.; Hwang, Y.; Choi, B. Automatic keystone correction using a single camera. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyangi, Korea, 28–30 October 2015; pp. 576–577.

- Zhang, B.L.; Ding, W.Q.; Zhang, S.J.; Shi, H.S. Realization of Automatic Keystone Correction for Smart mini Projector Projection Screen. In Applied Mechanics and Materials; Trans Tech Publications: Zurich, Switzerland, 2014; Volume 519, pp. 504–509.

- Ye, Y. A New Keystone Correction Algorithm of the Projector. In Proceedings of the 2014 International Conference on Management of e-Commerce and e-Government, Shanghai, China, 31 October–2 November 2014; pp. 206–210.

- Li, Z.; Wong, K.H.; Gong, Y.; Chang, M.Y. An effective method for movable projector keystone correction. IEEE Trans. Multimed. 2010, 13, 155–160.

- Jagannathan, L.; Jawahar, C. Perspective correction methods for camera based document analysis. In Proceedings of the First International Workshop on Camera-Based Document Analysis and Recognition, Seoul, Korea, 29 August 2005; pp. 148–154.

- Li, B.; Sezan, I. Automatic keystone correction for smart projectors with embedded camera. In Proceedings of the 2004 International Conference on Image Processing (ICIP’04), Singapore, 24–27 October 2004; Volume 4, pp. 2829–2832.

- Winzker, M.; Rabeler, U. Electronic distortion correction for multiple image layers. J. Soc. Inf. Disp. 2003, 11, 309–316.

- Sukthankar, R.; Mullin, M.D. Automatic keystone correction for camera-assisted presentation interfaces. In International Conference on Multimodal Interfaces; Springer: Berlin/Heidelberg, Germany, 2000; pp. 607–614.

- Soycan, A.; Soycan, M. Perspective correction of building facade images for architectural applications. Eng. Sci. Technol. Int. J. 2019, 22, 697–705.

- Zhang, W.; Li, X.; Ma, X. Perspective correction method for Chinese document images. In Proceedings of the 2008 International Symposium on Intelligent Information Technology Application Workshops, Shanghai, China, 21–22 December 2008; pp. 467–470.

- Miao, L.; Peng, S. Perspective rectification of document images based on morphology. In Proceedings of the 2006 International Conference on Computational Intelligence and Security, Guangzhou, China, 3–6 November 2006; Volume 2, pp. 1805–1808.

- Eo, S.W.; Lee, J.G.; Kim, M.S.; Ko, Y.C. ASIC design for real-time one-shot correction of optical aberrations and perspective distortion in microdisplay systems. IEEE Access 2018, 6, 19478–19490.

- Bilal, M. Resource-efficient FPGA implementation of perspective transformation for bird’s eye view generation using high-level synthesis framework. IET Circuits Devices Syst. 2019, 13, 756–762.

- Hübert, H.; Stabernack, B.; Zilly, F. Architecture of a low latency image rectification engine for stereoscopic 3-D HDTV processing. IEEE Trans. Circuits Syst. Video Technol. 2012, 23, 813–822.

- Botero, D.; Piat, J.; Chalimbaud, P.; Devy, M.; Boizard, J.L. FPGA implementation of mono and stereo inverse perspective mapping for obstacle detection. In Proceedings of the 2012 Conference on Design and Architectures for Signal and Image Processing, Karlsruhe, Germany, 23–25 October 2012; pp. 1–8.

- Rukundo, O.; Cao, H. Nearest neighbor value interpolation. Int. J. Adv. Comput. Sci. Appl. 2012, 3, 25–30.

- Blu, T.; Thévenaz, P.; Unser, M. Linear interpolation revitalized. IEEE Trans. Image Process. 2004, 13, 710–719.

- Mastyło, M. Bilinear interpolation theorems and applications. J. Funct. Anal. 2013, 265, 185–207.

- Huang, Z.; Cao, L. Bicubic interpolation and extrapolation iteration method for high resolution digital holographic reconstruction. Opt. Lasers Eng. 2020, 130, 106090.

- Behjat, H.; Doğan, Z.; Van De Ville, D.; Sörnmo, L. Domain-informed spline interpolation. IEEE Trans. Signal Process. 2019, 67, 3909–3921.

- Eberly, D. Perspective Mappings. Available online: https://www.geometrictools.com/Documentation/PerspectiveMappings.pdf (accessed on 1 April 2019).

- Barreto, J.P. A unifying geometric representation for central projection systems. Comput. Vis. Image Underst. 2006, 103, 208–217.

- Chen, M.; Zhang, Y.; Lu, C. Efficient architecture of variable size HEVC 2D-DCT for FPGA platforms. Aeu-Int. J. Electron. Commun. 2017, 73, 1–8.

- Li, Z.; Wang, W.; Jiang, R.; Ren, S.; Wang, X.; Xue, C. Hardware Acceleration of MUSIC Algorithm for Sparse Arrays and Uniform Linear Arrays. IEEE Trans. Circuits Syst. Regul. Pap. 2022, 1–14.

- Zhang, Y.; Lu, C. Efficient algorithm adaptations and fully parallel hardware architecture of H.265/HEVC intra encoder. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 3415–3429.

- Pastuszak, G.; Abramowski, A. Algorithm and architecture design of the H.265/HEVC intra encoder. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 210–222.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).