Abstract

Satellite instruments monitor the Earth’s surface day and night, and, as a result, the volume of Earth observation (EO) data is increasing dramatically. Machine Learning (ML) techniques are routinely employed to analyze and process these big EO data, and one well-known ML technique is the Support Vector Machine (SVM). Training an SVM poses a quadratic programming problem, and quantum computers, including quantum annealers (QA) as well as gate-based quantum computers, promise to solve an SVM more efficiently than a conventional computer. Training the SVM on a quantum computer or on a conventional computer is referred to as a quantum SVM (qSVM) or a classical SVM (cSVM) application, respectively. However, current quantum computers cannot tackle many practical EO problems with a qSVM because they have very few input qubits. Hence, we assembled a coreset (“core of a dataset”) of given EO data for training a weighted SVM on a small quantum computer, a D-Wave quantum annealer with around 5000 input quantum bits. The coreset is a small, representative, weighted subset of an original dataset. Its usefulness can be assessed by training the proposed weighted SVM on the coreset with a small quantum computer instead of on the original dataset. As data, we use synthetic data, Iris data, a Hyperspectral Image (HSI) of Indian Pine, and a Polarimetric Synthetic Aperture Radar (PolSAR) image of San Francisco. We measured the closeness between an original dataset and its coreset with a Kullback–Leibler (KL) divergence test. We also trained a weighted SVM on our coreset data with both a D-Wave quantum annealer (D-Wave QA) and a conventional computer. Our findings show that the coreset approximates the original dataset with a very small KL divergence (smaller is better). In a few of our experiments, the weighted qSVM even outperforms the weighted cSVM on the coresets.
As a by-product, we also report the KL divergence values that demonstrate the closeness between our original data (i.e., our synthetic data, Iris data, hyperspectral image, and PolSAR image) and the assembled coresets.

1. Introduction

Remotely sensed images are used for EO and are acquired by aircraft or satellite platforms. The images acquired from satellites are available in digital format and are characterized by their number of spectral bands, radiometric resolution, spatial resolution, etc. A typical EO dataset is massive and, compared with conventional non-satellite images, hard to classify by using ML techniques [1,2]. In principle, ML techniques are a set of methods for recognizing and classifying common patterns in a labeled or unlabeled dataset [3,4]. However, they are computationally expensive, and training them on massive data can become intractable. Recently, several studies proposed to use only a coreset (“core of a dataset”) of an original dataset for training ML methods and for tackling intractable posterior distributions via Bayesian inference [5,6,7], and even for training a Support Vector Machine (SVM) [8]. The coreset is a small, representative, weighted subset of an original dataset, and ML methods trained on the coreset yield results that are competitive with those trained on the original dataset. The concept of a coreset opens a new paradigm for training ML models on small quantum computers [9,10]. This matters because the quantum computers currently offered by D-Wave Systems (D-Wave QA) and by IBM Quantum Experience (a gate-based quantum computer) comprise very few quantum bits (qubits) (https://cloud.dwavesys.com/leap, https://quantum-computing.ibm.com/, accessed on 30 August 2021). In particular, quantum computers promise to solve some intractable problems in ML [11,12,13]. They also promise to train an SVM better or faster than a conventional computer when the input data volume is very small (a “core of a dataset”) [14,15]. Training ML methods on a quantum computer or by exploiting quantum information is called Quantum Machine Learning (QML) [16,17,18]. Finding the solution of an SVM on a quantum computer is termed a quantum SVM (qSVM), and otherwise a classical SVM (cSVM).

This work uses a D-Wave QA for training a weighted SVM, since the D-Wave QA promises to solve quadratic optimization problems; our method can also easily be adapted and extended to a gate-based quantum computer. The D-Wave QA has a very small number of input qubits (around 5000), connected according to a specific Pegasus topology [19], and it is designed solely for solving Quadratic Unconstrained Binary Optimization (QUBO) problems [12,20]. For practical EO data, there is a benchmark and a demonstration example for training an SVM with binary quantum classifiers on a D-Wave QA [21,22]. There, the SVM, a quadratic programming problem, is posed as a QUBO problem. A further challenge is to embed the variables of a given SVM problem into the Pegasus topology (i.e., to satisfy the connectivity constraint of the qubits). To overcome this constraint of a D-Wave QA, the authors of [21] applied a k-fold approach to their EO data, so that the number of variables in each SVM satisfies the qubit connectivity constraint of a D-Wave QA.

In this article, we construct the coreset of an original dataset via sparse variational inference [6]. We then use this coreset, instead of the original massive data, to train the weighted SVM, posed as a QUBO problem, on a D-Wave QA, and we benchmark our classification results against the weighted cSVM. As datasets, we use synthetic data and Iris data, together with two real-world EO datasets: a Hyperspectral Image (HSI) of Indian Pine, and a Polarimetric Synthetic Aperture Radar (PolSAR) image of San Francisco characterized by its Stokes parameters [23].

Our paper is structured as follows: In Section 2, we present our datasets, and we construct the coresets of our datasets in Section 3. We introduce a weighted cSVM, and construct a weighted qSVM for our experiments in Section 4. Then, we train the weighted qSVM on our coresets by using a D-Wave QA and demonstrate our results with respect to the weighted cSVM in Section 5. Finally, we draw some conclusions in Section 6.

2. Our Datasets

We use four different datasets, namely synthetic data, Iris data, an Indian Pine HSI, and a PolSAR image of San Francisco characterized by its Stokes parameters [23,24]. The first two sets illustrate the concept of a coreset and the implementation of a weighted SVM on these coresets with a D-Wave QA. In particular, we use the coresets of the first two sets to tune the internal parameters of the D-Wave QA (called annealing parameters), because the solutions generated by the D-Wave QA depend on them. The last two sets serve as real-world EO data. We construct their coresets and train the weighted qSVM on these coresets once the annealing parameters have been set (see Figure 1 and Figure 2). In the following sections, the term “weighted qSVM” means “a weighted SVM posed as a QUBO problem and trained on a D-Wave QA”.

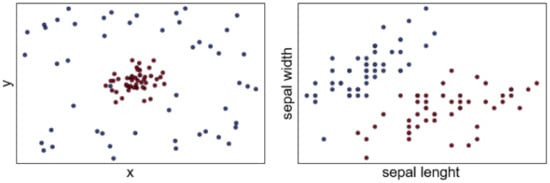

Figure 1.

Synthetic data with two classes, and Iris data with two classes (Iris Setosa and Iris Versicolour) characterized by two features (sepal length, sepal width).

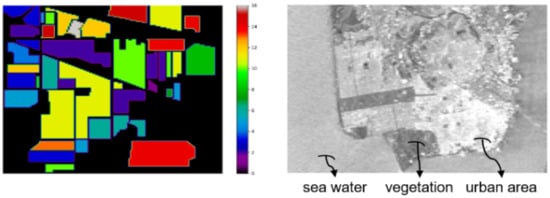

Figure 2.

Indian Pine HSI with 16 classes {1: Alfalfa, 2: Corn-notill, 3: Corn-mintill, 4: Corn, 5: Grass-Pasture, 6: Grass-Trees, 7: Grass-Pasture-mowed, 8: Hay-windrowed, 9: Oats, 10: Soybean-notill, 11: Soybean-mintill, 12: Soybean-clean, 13: Wheat, 14: Woods, 15: Building-Grass-Drives, 16: Stones-Steel-Towers}, and PolSAR image of San Francisco with three classes.

2.1. Synthetic Data

We generated synthetic data with two classes according to

where if , and if . is linearly spaced in for each class, and are samples drawn from a normal distribution with . We replicate the synthetic data already used for training an SVM on a D-Wave QA in [25]. The resulting data points are shown in Figure 1 (Left) and in Table 1.

Table 1.

The two classes of Synthetic and Iris data.

2.2. Iris Data

Iris data consist of three classes (Iris Setosa, Iris Versicolour, and Iris Virginica), each of which has four features, namely sepal length, sepal width, petal length, and petal width. We consider a two-class dataset {Iris Setosa, Iris Versicolour} with a size of 100 data points, and each class is characterized by two features (sepal length, sepal width) shown in Figure 1 (Right) and Table 1.
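One way to extract the two-class Iris subset described above is to use the copy of the Iris data bundled with scikit-learn. The sketch below is not necessarily how the subset was built for this paper. Mapping the labels to {−1, +1} is our assumption, chosen to match the usual SVM label convention.

```python
import numpy as np
from sklearn.datasets import load_iris

# Load the three-class Iris data: 150 samples, 4 features.
iris = load_iris()
X_all, y_all = iris.data, iris.target

# Keep Iris Setosa (target 0) and Iris Versicolour (target 1).
mask = y_all < 2
# Columns 0 and 1 hold sepal length and sepal width (in cm).
X = X_all[mask][:, :2]
# Assumption: relabel to {-1, +1}, the label convention of the SVM formulation.
y = np.where(y_all[mask] == 0, -1, 1)

print(X.shape, np.bincount(y_all[mask]))  # (100, 2) [50 50]
```

The result has 100 points with 50 per class, which matches the size stated in the text.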

2.3. Indian Pine HSI

An Indian Pine HSI obtained by the AVIRIS sensor comprises 16 classes; each class is characterized by 200 spectral bands (see Figure 2 (Left)). For simplicity, we use only two classes (see Table 2), and each class is characterized by two features instead of 200 spectral bands by exploiting Principal Component Analysis (PCA) [22].
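The following is a minimal sketch of reducing 200 spectral bands to two features with PCA. It uses a singular value decomposition (SVD) of the mean-centered pixel matrix. The pixel matrix is a hypothetical random stand-in for the labeled Indian Pine pixels, and the exact PCA settings of [22] are not stated here.

```python
import numpy as np

def pca_two_features(pixels: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Project (n_pixels, n_bands) spectra onto the leading principal components."""
    centered = pixels - pixels.mean(axis=0)
    # Rows of vt are the principal axes, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

rng = np.random.default_rng(0)
# Hypothetical stand-in for labeled Indian Pine pixels: 500 spectra with 200 bands.
spectra = rng.normal(size=(500, 200))
features = pca_two_features(spectra)
print(features.shape)  # (500, 2)
```

The first output column carries the largest variance, so the two retained features are the directions along which the spectra vary most.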

Table 2.

The two classes of the Indian Pine HSI; represents {Alfalfa, Corn-notill}, represents {Corn-notill, Corn-mintill}, etc.

2.4. PolSAR Image of San Francisco

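Each pixel of our PolSAR image is characterized by a scattering matrix

$$S = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix}, \tag{2}$$

where H and V denote horizontal and vertical polarization. The first index of $S_{pq}$ represents the polarization state of the incident polarized beam, and its second index represents the polarization state of the beam reflected by the targets. The off-diagonal elements of S are equal ($S_{HV} = S_{VH}$), since our PolSAR image of San Francisco is a fully polarimetric image acquired by a monostatic radar [26,27].

The incident/reflected polarized beam can be represented by its complex amplitude in a polarization basis $\{\hat{\mathbf{h}}, \hat{\mathbf{v}}\}$ by

$$\mathbf{E} = E_H\,\hat{\mathbf{h}} + E_V\,\hat{\mathbf{v}}. \tag{3}$$

The complex amplitude vector can be expressed by a so-called Jones vector

$$\mathbf{E} = \begin{bmatrix} E_H \\ E_V \end{bmatrix} = \begin{bmatrix} |E_H|\,e^{i\phi_H} \\ |E_V|\,e^{i\phi_V} \end{bmatrix}, \tag{4}$$

where $\phi_H$ and $\phi_V$ are the phases of the polarization states. Furthermore, the scattering matrix S expressed in (2) is a mapping such that

$$\mathbf{E}^{r} = S\,\mathbf{E}^{i}, \tag{5}$$

where $\mathbf{E}^{i}$ and $\mathbf{E}^{r}$ are the incident and the reflected Jones vector, respectively. In matrix form, using $S_{HV} = S_{VH}$, (5) can be re-expressed as

$$\begin{bmatrix} E^{r}_{H} \\ E^{r}_{V} \end{bmatrix} = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{HV} & S_{VV} \end{bmatrix} \begin{bmatrix} E^{i}_{H} \\ E^{i}_{V} \end{bmatrix}. \tag{6}$$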

The intensity of the reflected Jones vector is defined by

where stands for spatial averaging with a window size pixels, and for conjugation. Furthermore, we can re-express this intensity by

where , , and are called Stokes vectors. We normalize these Stokes vectors according to

and the normalized , , and are called Stokes parameters [23].
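The chain from scattering matrix to normalized Stokes parameters can be sketched in NumPy as below. Several parts are assumptions rather than the paper's settings:

- the per-pixel scattering matrices and the incident Jones vector are random or hypothetical;
- the averaging window of 3 × 3 pixels is arbitrary, because the window size used in the paper is not preserved in this text;
- the components follow the common H/V-basis definitions g0 = ⟨|E_H|²⟩ + ⟨|E_V|²⟩, g1 = ⟨|E_H|²⟩ − ⟨|E_V|²⟩, g2 = 2 Re⟨E_H E_V*⟩ and g3 = −2 Im⟨E_H E_V*⟩. The sign of g3 differs between references.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def reflected_field(S, e_inc):
    """E^r = S E^i for every pixel; S has shape (rows, cols, 2, 2), e_inc has shape (2,)."""
    e_ref = np.einsum("...ij,j->...i", S, e_inc)
    return e_ref[..., 0], e_ref[..., 1]          # E^r_H and E^r_V images

def box_average(img, window=3):
    """Spatial average <.> over a window x window neighbourhood (odd window, edge padding)."""
    pad = window // 2
    padded = np.pad(img, pad, mode="edge")
    return sliding_window_view(padded, (window, window)).mean(axis=(-2, -1))

def normalized_stokes(e_h, e_v, window=3):
    """Return (g1, g2, g3) / g0 computed from spatially averaged field products."""
    hh = box_average(np.abs(e_h) ** 2, window)
    vv = box_average(np.abs(e_v) ** 2, window)
    hv = box_average(e_h * np.conj(e_v), window)
    g0 = hh + vv
    g1 = hh - vv
    g2 = 2.0 * hv.real
    g3 = -2.0 * hv.imag      # sign convention of g3 differs between references
    return g1 / g0, g2 / g0, g3 / g0

rng = np.random.default_rng(0)
rows, cols = 6, 8
# Hypothetical per-pixel scattering matrices with S_HV = S_VH (monostatic case).
S = rng.normal(size=(rows, cols, 2, 2)) + 1j * rng.normal(size=(rows, cols, 2, 2))
S[..., 1, 0] = S[..., 0, 1]
e_inc = np.array([1.0, 1.0]) / np.sqrt(2.0)     # hypothetical incident Jones vector
s1, s2, s3 = normalized_stokes(*reflected_field(S, e_inc), window=3)
```

Without averaging (window = 1), every pixel is fully polarized and s1² + s2² + s3² = 1. Spatial averaging can only lower this sum, which is why the normalized parameters lie inside the unit sphere.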

In this study, we use two two-class subsets of our PolSAR image of San Francisco, namely {urban area, sea water} and {vegetation, sea water}, shown in Figure 2 (Right) and in Table 3. Each class is characterized by its Stokes parameters defined in (9).

Table 3.

The two classes of our PolSAR image.

3. Coresets of Our Datasets

In Bayesian inference, the posterior density of parameters $\theta$ given $N$ data points $\mathbf{x}_n$ with labels $y_n$ is written as

$$\pi(\theta) = \frac{1}{Z} \exp\left( \sum_{n=1}^{N} f_n(\theta) \right) \pi_0(\theta), \tag{10}$$

where Z is a partition function, $f_n(\theta)$ is a potential function of the n-th labeled data point, and $\pi_0(\theta)$ is a prior. For massive data, the estimation of the posterior distribution is intractable. Hence, in practice, a Markov Chain Monte Carlo (MCMC) method is widely used to obtain samples from the posterior expressed by (10) [28].

To reduce the computational time of an MCMC method, the authors of [5,6,7] proposed to run the MCMC method on a small, weighted subset (i.e., a coreset) of the massive data. They derived a sparse vector of nonnegative weights w such that only $M \ll N$ of its entries are non-zero, where M is the size of the coreset. Furthermore, the authors proposed to approximate the posterior by a weighted posterior distribution and to run the MCMC method on this approximate distribution:

$$\pi_w(\theta) = \frac{1}{Z(w)} \exp\left( \sum_{n=1}^{N} w_n f_n(\theta) \right) \pi_0(\theta). \tag{11}$$

We denote the full posterior of the original massive dataset by $\pi_1$, i.e., (11) with all weights equal to one. More importantly, the weighted posterior becomes tractable, since only the M coreset points with non-zero weights contribute to the sum in (11).
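The role of the weights can be seen in a small sketch of the unnormalized log of the weighted posterior, $\sum_n w_n f_n(\theta) + \log \pi_0(\theta)$. The sketch makes three assumptions. First, as an illustrative model it uses Bayesian logistic regression, with potentials $f_n(\theta) = \log \sigma(y_n \mathbf{x}_n^\top \theta)$ and an isotropic Gaussian prior; the text does not state which model the coresets were built for. Second, the sparse weights come from uniform random subsampling scaled by N/M, not from sparse variational inference. Third, the data are random.

```python
import numpy as np

def log_potentials(theta, X, y):
    """f_n(theta) = log sigmoid(y_n x_n^T theta) for labels y_n in {-1, +1}."""
    margins = y * (X @ theta)
    return -np.logaddexp(0.0, -margins)   # numerically stable log-sigmoid

def unnormalized_log_posterior(theta, X, y, w, prior_var=1.0):
    """sum_n w_n f_n(theta) + log pi_0(theta), dropping the constant log Z(w)."""
    log_prior = -0.5 * theta @ theta / prior_var   # isotropic Gaussian prior, up to a constant
    return w @ log_potentials(theta, X, y) + log_prior

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 2))
y = np.where(X @ np.array([1.5, -0.5]) > 0, 1, -1)
theta = np.array([1.0, 0.0])

full = unnormalized_log_posterior(theta, X, y, np.ones(len(y)))   # pi_1: all weights equal one
w = np.zeros(len(y))
idx = rng.choice(len(y), size=20, replace=False)
w[idx] = len(y) / 20                # hypothetical sparse weights with M = 20 non-zeros
coreset = unnormalized_log_posterior(theta, X, y, w)
```

Each evaluation of the weighted version touches only the 20 points with non-zero weight. This is the property that makes MCMC on (11) cheaper than on (10).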

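For assembling the coresets of our datasets presented in Table 1, Table 2 and Table 3, we use the sparse variational inference algorithm proposed in [6]. It finds the sparse vector of nonnegative weights w and thereby approximates the posterior distribution (11). Here, the sparse vector of nonnegative weights w is found by solving the sparse variational inference problem

$$w^\star = \arg\min_{w \in \mathbb{R}^N} D_{\mathrm{KL}}\left(\pi_w \,\|\, \pi_1\right) \quad \text{s.t.} \quad w \geq 0, \quad \|w\|_0 \leq M, \tag{12}$$

where $w^\star$ is the optimal sparse weight vector, and $D_{\mathrm{KL}}$ is the Kullback–Leibler (KL) divergence, which measures the difference between two distributions (smaller is better):

$$D_{\mathrm{KL}}\left(\pi_w \,\|\, \pi_1\right) = \int \pi_w(\theta) \log \frac{\pi_w(\theta)}{\pi_1(\theta)} \, d\theta. \tag{13}$$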

Moreover, a small KL divergence implies the following: estimating the parameters θ with (11) on a coreset yields results similar to those obtained with (10) on the original massive dataset.

We derived the optimal sparse weight vector $w^\star$ and the coreset of each dataset such that

$$\mathcal{D} = \{(\mathbf{x}_n, y_n)\}_{n=1}^{N} \;\longrightarrow\; \mathcal{C} = \{(\mathbf{x}_n, y_n, w^\star_n) : w^\star_n > 0\}, \quad |\mathcal{C}| = M \ll N, \tag{14}$$

where $\mathcal{D}$ represents an original dataset, while $\mathcal{C}$ is our newly assembled coreset. In addition, we computed the similarity between our datasets and the corresponding coresets by using their KL divergences (see Table 4). Our results show that our coresets are very small compared with our original datasets. The KL divergences between the original datasets and our coresets are also comparatively small in most instances.
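The KL divergence in (13) compares posteriors, and the Table 4 values come from the paper's own pipeline. As a simplified stand-in, the sketch below keeps the points with non-zero weight as the coreset. It then fits a Gaussian to the coreset using weighted moments and another to the full data using uniform weights, and evaluates the closed-form KL divergence between the two Gaussians in the same direction as (13). This Gaussian surrogate, the random data, and the uniform N/M weights are all our assumptions, not the procedure behind Table 4.

```python
import numpy as np

def extract_coreset(X, y, w):
    """Keep only points with non-zero weight: C = {(x_n, y_n, w_n) : w_n > 0}."""
    keep = w > 0
    return X[keep], y[keep], w[keep]

def weighted_moments(X, w):
    """Weighted mean and (biased) covariance of the rows of X."""
    p = w / w.sum()
    mu = p @ X
    centered = X - mu
    return mu, (centered * p[:, None]).T @ centered

def gaussian_kl(mu0, cov0, mu1, cov1):
    """KL( N(mu0, cov0) || N(mu1, cov1) ) in nats."""
    d = mu0.shape[0]
    cov1_inv = np.linalg.inv(cov1)
    diff = mu1 - mu0
    _, logdet0 = np.linalg.slogdet(cov0)
    _, logdet1 = np.linalg.slogdet(cov1)
    return 0.5 * (np.trace(cov1_inv @ cov0) + diff @ cov1_inv @ diff - d + logdet1 - logdet0)

rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 2))
y = np.where(X[:, 0] > 0, 1, -1)
w = np.zeros(len(X))
w[rng.choice(len(X), size=50, replace=False)] = len(X) / 50   # hypothetical sparse weights
Xc, yc, wc = extract_coreset(X, y, w)

# Direction as in (13): coreset approximation first, full data second.
kl = gaussian_kl(*weighted_moments(Xc, wc), *weighted_moments(X, np.ones(len(X))))
print(len(Xc), kl)
```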

4. Weighted Classical and Quantum SVMs on Our Coresets

4.1. Weighted Classical SVMs

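In the previous section, we assembled the coresets of our original datasets, shown in Table 4, as

$$\mathcal{C} = \{(\mathbf{x}_m, y_m, w_m)\}_{m=1}^{M}, \quad y_m \in \{-1, +1\}. \tag{15}$$

To train a weighted SVM on our coresets represented via (15) on a conventional computer, we express the weighted SVM as

$$\min_{\alpha} \; \frac{1}{2} \sum_{n=1}^{M} \sum_{m=1}^{M} \alpha_n \alpha_m y_n y_m k(\mathbf{x}_n, \mathbf{x}_m) - \sum_{n=1}^{M} \alpha_n \quad \text{s.t.} \quad 0 \leq \alpha_n \leq w_n C, \quad \sum_{n=1}^{M} \alpha_n y_n = 0, \tag{16}$$

where C is a regularization parameter, and $k(\cdot, \cdot)$ is the kernel function of the SVM [28]. This formulation of the SVM is called a weighted cSVM [29], or sometimes a kernel-based weighted cSVM. A point $\mathbf{x}_n$ with $\alpha_n > 0$ is called a support vector.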
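The weighted dual can be evaluated and checked with a short NumPy sketch. We assume that (16) is the standard weighted dual, in which the coreset weight $w_n$ scales the upper bound of each multiplier ($0 \leq \alpha_n \leq w_n C$), as in weighted SVMs such as [29]. The kernel matrix and the multipliers in the usage lines are hypothetical numbers.

```python
import numpy as np

def dual_objective(alpha, y, K):
    """0.5 * sum_nm alpha_n alpha_m y_n y_m K_nm - sum_n alpha_n (to be minimized)."""
    ay = alpha * y
    return 0.5 * ay @ K @ ay - alpha.sum()

def is_feasible(alpha, y, w, C, tol=1e-9):
    """Box constraint 0 <= alpha_n <= w_n * C and equality constraint sum_n alpha_n y_n = 0."""
    in_box = np.all(alpha >= -tol) and np.all(alpha <= w * C + tol)
    return bool(in_box and abs(alpha @ y) <= tol)

y = np.array([-1.0, 1.0])
K = np.eye(2)                      # hypothetical kernel matrix
w = np.array([1.0, 1.0])           # hypothetical coreset weights
alpha = np.array([0.5, 0.5])
print(dual_objective(alpha, y, K), is_feasible(alpha, y, w, C=1.0))
```

Raising a single weight $w_n$ loosens only that point's bound. A heavily weighted coreset point can therefore carry a larger multiplier, standing in for the many original points it represents.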

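After training the weighted cSVM, the class label of a given test point $\mathbf{x}$ is obtained from the decision function

$$f(\mathbf{x}) = \operatorname{sign}\left( \sum_{n=1}^{M} \alpha_n y_n k(\mathbf{x}_n, \mathbf{x}) + b \right), \tag{17}$$

where $\operatorname{sign}(z) = +1$ if $z > 0$, $-1$ if $z < 0$, and $0$ otherwise. The decision boundary is the optimal hyperplane formed by the data points $\mathbf{x}$ such that $\sum_{n} \alpha_n y_n k(\mathbf{x}_n, \mathbf{x}) + b = 0$ [28]. The bias b is expressed following [25]: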

The kernel-based weighted cSVM is a powerful technique. The kernel function maps non-separable features to higher-dimensional separable features, and the weighted constraints make the decision boundary less sensitive to outliers [25,29]. Furthermore, the choice of the kernel function has a large impact on the decision boundary. Common kernel functions are linear, polynomial, Matérn, and radial basis function (rbf) kernels [28]. A widely used kernel is the rbf kernel, defined by

$$k(\mathbf{x}_n, \mathbf{x}_m) = \exp\left( -\gamma \, \|\mathbf{x}_n - \mathbf{x}_m\|^2 \right), \tag{19}$$

where $\gamma > 0$ is a parameter.
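A vectorized rbf kernel and the decision function of (17) fit in a few lines of NumPy. We assume the parameterization $\exp(-\gamma\|\mathbf{x}_n - \mathbf{x}_m\|^2)$, which is also scikit-learn's convention. The multipliers and the bias in the usage lines are hypothetical numbers, not trained values.

```python
import numpy as np

def rbf_kernel(XA, XB, gamma):
    """K[i, j] = exp(-gamma * ||XA[i] - XB[j]||^2), computed without Python loops."""
    sq_dists = (
        np.sum(XA**2, axis=1)[:, None]
        + np.sum(XB**2, axis=1)[None, :]
        - 2.0 * XA @ XB.T
    )
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))

def decide(X_test, X_train, y_train, alpha, b, gamma):
    """Labels sign(sum_n alpha_n y_n k(x_n, x) + b) for each row x of X_test."""
    scores = rbf_kernel(X_test, X_train, gamma) @ (alpha * y_train) + b
    return np.sign(scores).astype(int)

X_train = np.array([[-1.0, 0.0], [1.0, 0.0]])
y_train = np.array([-1, 1])
alpha = np.array([1.0, 1.0])       # hypothetical multipliers
X_test = np.array([[-2.0, 0.0], [0.0, 3.0], [2.0, 0.0]])
labels = decide(X_test, X_train, y_train, alpha, b=0.0, gamma=0.5)
```

The middle test point is equidistant from both training points, so it lies exactly on the decision boundary and receives the label 0.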

4.2. Weighted Quantum SVMs

A weighted quantum SVM (weighted qSVM) is the weighted cSVM given in (16), trained on a D-Wave QA. The D-Wave QA is a quantum annealer with a specific Pegasus topology for the interaction of its qubits, and it is specially designed to solve a QUBO problem:

$$E(\mathbf{a}) = \sum_{i \leq j} a_i Q_{ij} a_j, \quad a_i \in \{0, 1\}, \tag{20}$$

where $a_i$ are called logical variables, and the matrix Q contains the bias terms (diagonal entries) and the interaction strengths (off-diagonal entries) of the logical variables [19]. The physical variables are the two-state qubits residing at the nodes of the Pegasus graph; the QUBO objective is also called the problem energy. The D-Wave QA anneals (evolves) from an initial energy to its final energy (the problem energy) according to

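$$H(s) = \mathcal{A}(s)\, H_I + \mathcal{B}(s)\, H_P, \qquad s = t/T, \tag{21}$$

where $H_I$ is an initial energy, $H_P$ is the problem energy, $\mathcal{A}(s)$ and $\mathcal{B}(s)$ are the annealing functions of the device, T is the annealing time in microseconds, and s is an annealing parameter in the range [0, 1].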
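The interpolation in (21) can be illustrated with a single qubit. Here the annealing functions are assumed to be linear, 1 − s and s. Real D-Wave devices use hardware-specific, nonlinear annealing functions, so this toy only shows how the energy spectrum moves from that of the initial Hamiltonian to that of the problem Hamiltonian. The annealing time T is a hypothetical value.

```python
import numpy as np

SIGMA_X = np.array([[0.0, 1.0], [1.0, 0.0]])
SIGMA_Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def hamiltonian(t, T, h=1.0):
    """Toy single-qubit H(s) = (1 - s) H_I + s H_P with s = t / T (linear schedule assumed)."""
    s = t / T
    H_initial = -SIGMA_X        # transverse field; ground state is (|0> + |1>) / sqrt(2)
    H_problem = h * SIGMA_Z     # problem energy of a single logical variable
    return (1.0 - s) * H_initial + s * H_problem

T = 20.0  # hypothetical annealing time in microseconds
for t in (0.0, 10.0, 20.0):
    print(t, np.linalg.eigvalsh(hamiltonian(t, T)))
```

Halfway through the anneal, the gap between the two levels shrinks from 2 to √2. Annealing more slowly helps the system stay in the ground state when such gaps become small.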

Furthermore, to train the weighted qSVM on our coresets with a D-Wave QA, we pose the weighted cSVM with the rbf kernel, expressed by (16) and (19), as a QUBO problem. Here, we follow the formulation of [25] for posing the SVM as a QUBO problem.

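The variables $\alpha_n$ of the weighted cSVM are continuous real numbers, whereas the variables of a QUBO problem are binary. Hence, we approximate each variable of the weighted cSVM by a base-B binary encoding

$$\alpha_n = \sum_{k=0}^{K-1} B^k a_{Kn+k}, \quad a_{Kn+k} \in \{0, 1\}, \tag{22}$$

where K is the number of binary variables (bits) per $\alpha_n$, and B is the base. We insert this encoding into the weighted cSVM given in (16). We also formulate the second constraint of (16) as a squared penalty term with a Lagrange multiplier ξ, which yields the QUBO problem

$$E = \frac{1}{2} \sum_{n,m} \sum_{k,j=0}^{K-1} a_{Kn+k}\, a_{Km+j}\, B^{k+j} y_n y_m \left( k(\mathbf{x}_n, \mathbf{x}_m) + \xi \right) - \sum_{n} \sum_{k=0}^{K-1} B^k a_{Kn+k}. \tag{24}$$

Note that the first constraint of (16) is satisfied automatically, since the encoding given in (22) is always nonnegative. Hence, the maximum value of each $\alpha_n$ is given by

$$\alpha_n^{\max} = \sum_{k=0}^{K-1} B^k = \frac{B^K - 1}{B - 1}.$$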

For training the weighted qSVM, we concentrated on four hyperparameters: the parameter γ of the rbf kernel expressed by (19), the number of binary bits K, the base B, and the Lagrange multiplier ξ given in (24). Thus, we used the hyperparameters (γ, K, B, ξ). For our applications, we set these hyperparameters to as proposed by [25], since these settings make the weighted qSVM generate results competitive with those of the weighted cSVM. For setting the bias b defined in (18), we used the weighted cSVM.
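The following sketch builds the QUBO matrix for a tiny problem, following the SVM-to-QUBO construction of Willsch et al. [25] on which the text says the formulation is based. Several parts are assumptions:

- the text does not spell out where the coreset weights $w_n$ enter the weighted qSVM, so they are omitted here;
- the hyperparameter values are hypothetical, not those used in the paper;
- an exhaustive search over all bit strings stands in for the D-Wave QA, which is only feasible at this toy size.

```python
import itertools
import numpy as np

def build_svm_qubo(kernel_matrix, y, B=2, num_bits=2, xi=1.0):
    """Symmetric Q with a^T Q a = 0.5*sum_nm alpha_n alpha_m y_n y_m (k_nm + xi) - sum_n alpha_n."""
    powers = float(B) ** np.arange(num_bits)            # B**k for k = 0..num_bits-1
    # Block (n, m) of the quadratic part: 0.5 * y_n y_m (k_nm + xi) * outer(powers, powers).
    Q = 0.5 * np.kron(np.outer(y, y) * (kernel_matrix + xi), np.outer(powers, powers))
    # The linear term -sum_n alpha_n goes on the diagonal because a_i**2 = a_i for binaries.
    Q -= np.diag(np.tile(powers, len(y)))
    return Q

def decode(a, num_bits=2, B=2):
    """alpha_n = sum_k B**k * a[num_bits * n + k]."""
    powers = float(B) ** np.arange(num_bits)
    return a.reshape(-1, num_bits) @ powers

X = np.array([[-1.0, 0.0], [1.0, 0.0]])
y = np.array([-1.0, 1.0])
kernel_matrix = np.exp(-0.5 * ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1))  # rbf, gamma = 0.5
Q = build_svm_qubo(kernel_matrix, y, B=2, num_bits=2, xi=1.0)

# Exhaustive search over all 2**4 bit strings replaces the annealer for this toy size.
best = min((np.array(bits) for bits in itertools.product((0, 1), repeat=Q.shape[0])),
           key=lambda a: a @ Q @ a)
alpha = decode(best)
```

With B = 2 and K = 2, each multiplier can take the values 0 to 3, in line with the maximum value $\sum_k B^k$. Larger K or B gives a finer or wider range of multipliers, but each extra bit adds one logical variable per data point that must be embedded on the annealer.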

In the next section, we train the weighted cSVM given in (16) and the weighted qSVM expressed by (24) on our coresets (see Table 4). In addition, we demonstrate how to program a D-Wave QA to obtain a good solution of (24). The solutions yielded by a D-Wave QA depend strongly on the embedding of the logical variables into their corresponding physical variables and on the annealing parameters (annealing time, number of reads, and chain strength) [30].

5. Our Experiments

In our experiments, we trained our weighted cSVM and weighted qSVM models on the coresets and tested them on the original datasets (see Table 4). In addition, we set the hyperparameters of our weighted qSVM to , and we used the Python module scikit-learn [31] for training the weighted cSVM (and thereby for setting the bias).
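One plausible way to train a weighted cSVM with scikit-learn is to pass the coreset weights as `sample_weight` to `SVC.fit`, which rescales C per sample. This matches a box constraint of the form $0 \leq \alpha_n \leq w_n C$. The text does not state that `sample_weight` was used, so treat this as an assumption. The coreset below is a hypothetical stand-in drawn from two Gaussian blobs.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(1)
# Hypothetical coreset: 20 weighted points from two Gaussian blobs.
X = np.vstack([rng.normal(-1.0, 0.5, size=(10, 2)), rng.normal(1.0, 0.5, size=(10, 2))])
y = np.array([-1] * 10 + [1] * 10)
w = rng.uniform(0.5, 3.0, size=20)   # stand-in for the coreset weights w_n

C = 1.0
clf = SVC(C=C, kernel="rbf", gamma=1.0)
# sample_weight rescales C per sample: the box constraint becomes 0 <= alpha_n <= w_n * C.
clf.fit(X, y, sample_weight=w)

# dual_coef_ stores y_n * alpha_n for the support vectors; intercept_ holds the bias b.
alpha_sv = np.abs(clf.dual_coef_.ravel())
print(clf.predict([[-1.0, -1.0], [1.0, 1.0]]), clf.intercept_)
```

The fitted `intercept_` is one way to obtain a bias for the classical model, in line with using the weighted cSVM for setting the bias.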

To define the annealing parameters (annealing time, number of reads, and chain strength) of the D-Wave QA, we first ran quantum experiments on the synthetic two-class data and the Iris data. Then, using these annealing parameters, we evaluated our proposed method on real-world EO data (the Indian Pine HSI and the PolSAR image of San Francisco). That is, we trained the weighted qSVM on the coresets of our EO data instead of on the massive original EO data, because the D-Wave QA is a small quantum computer with only a few qubits.

5.1. Synthetic Two-Class Data and Iris Data

For training the weighted qSVM expressed by (24), we first experimented on the coresets of the synthetic two-class data and the Iris data shown in Table 4. The aim was to optimize the annealing parameters (annealing time, number of reads, and chain strength) of the D-Wave QA. In addition, we benchmarked the classification results of the weighted qSVM against those of the weighted cSVM. This had the advantage that we could easily tune the annealing parameters and visualize the results in both the quantum and the classical setting.

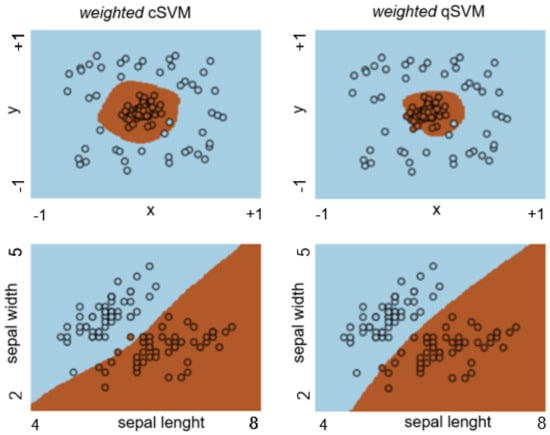

Figure 3 and Table 5 show our results for the classification of the synthetic two-class data and the Iris data. Our results demonstrate that the weighted qSVM performs well in comparison with the weighted cSVM on both coresets (and often better for the Iris data).

Figure 3.

Top: synthetic two-class data; Bottom: Iris data. Visual results of our experiments generated by the weighted cSVM given in (16) and the weighted qSVM expressed by (24). The visual results indicate that our weighted qSVM generalizes the decision boundary of a given dataset better than its weighted cSVM counterpart.

Table 5.

The classification accuracy of the weighted quantum SVM (qacc) and of the weighted classical SVM (cacc) on our coresets.

To obtain these good solutions generated by our weighted qSVM, we set the annealing parameters of the D-Wave QA as follows:

- Annealing time: We controlled the annealing time via an anneal schedule, which is defined by a series of four pairs (t, s) of time t and annealing parameter s as in (21). We set the annealing schedule accordingly:

- Number of reads: 10,000

- Chain strength: 50.
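Settings like these are typically collected in one place before a job is submitted. With D-Wave's Ocean SDK, such settings are usually passed as keyword arguments (for example `num_reads`, `chain_strength` and `anneal_schedule`) to a composed sampler's `sample_qubo` call. Consult the Ocean documentation for the exact solver limits. The helper below is hypothetical and performs only a few basic sanity checks on a forward anneal schedule given as [time in µs, s] pairs; it is not the full set of solver rules. The four-point schedule with a pause is illustrative only, since the schedule actually used is not preserved in this text.

```python
def check_forward_schedule(schedule):
    """Basic sanity checks for a forward anneal schedule given as [[t_us, s], ...]."""
    if len(schedule) < 2:
        return False
    times = [t for t, _ in schedule]
    values = [s for _, s in schedule]
    starts_ok = times[0] == 0.0 and values[0] == 0.0      # begin at t = 0 with s = 0
    ends_ok = values[-1] == 1.0                           # finish at s = 1
    increasing = all(t1 > t0 for t0, t1 in zip(times, times[1:]))
    in_range = all(0.0 <= s <= 1.0 for s in values)
    return starts_ok and ends_ok and increasing and in_range

# Hypothetical four-point schedule with a pause at s = 0.4 (not the paper's values).
schedule = [[0.0, 0.0], [10.0, 0.4], [30.0, 0.4], [40.0, 1.0]]
params = {"anneal_schedule": schedule, "num_reads": 10_000, "chain_strength": 50}
print(check_forward_schedule(schedule))  # True
```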

5.2. Indian Pine HSI and PolSAR Image of San Francisco

As real-world EO data, we used the coresets of the Indian Pine HSI and of the PolSAR image of San Francisco for training the weighted qSVM, with the annealing parameters of the D-Wave QA set as described above. We first ran a number of quantum experiments on our coresets. Table 5 shows the classification accuracy of our weighted qSVM in comparison with that of the weighted cSVM.

Our results demonstrate that the coresets obtained via sparse variational inference are small and representative subsets of our original datasets, as confirmed by their KL divergences shown in Table 4. In addition, our weighted qSVM achieves a classification accuracy comparable to that of the weighted cSVM, and in some instances it outperforms the weighted cSVM. Furthermore, exploiting the coresets reduced the computational time for training the weighted qSVM. It also reduced the computational time of the MCMC method for inferring the parameters of the posterior distribution, as proved theoretically and demonstrated experimentally in [5,6].

6. Discussion and Conclusions

Quantum algorithms (e.g., Grover’s search algorithm) are designed to process data on quantum computers, and some are known to achieve a quantum advantage over their conventional counterparts. Motivated by such advantages, quantum computers based on quantum information science are being built to solve some problems (or run some algorithms) more efficiently than a conventional computer. However, currently available quantum computers (D-Wave quantum annealers and gate-based quantum computers) have very few input quantum bits (qubits). A very specific type of quantum computer is the D-Wave quantum annealer (QA). It is designed to solve Quadratic Unconstrained Binary Optimization (QUBO) problems, which belong to the family of quadratic programming problems, potentially better than conventional methods.

For Earth observation, images obtained from aircraft or satellite platforms are massive and heterogeneous, which makes training ML models on them with a conventional computer hard. As datasets, we used synthetic data and Iris data, together with real-world EO data: a Hyperspectral Image (HSI) of Indian Pine and a Polarimetric Synthetic Aperture Radar (PolSAR) image of San Francisco. One of the well-known methods in ML is the Support Vector Machine (SVM), which poses a quadratic programming problem. A global minimum of such a problem can be found by a classical method. However, its quadratic form also allows us to use a D-Wave QA to find the solution of an SVM, potentially better than a conventional computer. Thus, we can pose an SVM as a QUBO problem, and we call this SVM-to-QUBO transformation a quantum SVM (qSVM). Then, we can train the qSVM on our real-world EO data by using a D-Wave QA. However, the EO data are massive and the D-Wave QA has very few qubits. As a result, the number of logical variables of the qSVM far exceeds the number of physical qubits available on the D-Wave QA.

Therefore, in our paper, we employed the coreset (“core of a dataset”) concept via sparse variational inference, where the coreset is a very small and representative weighted subset of the original dataset. We assembled the coresets of the synthetic data and the Iris data shown in Table 4. On these coresets, we trained a weighted qSVM posed as a QUBO problem in order to set the annealing parameters of the D-Wave QA. We then presented the visual results and the classification accuracy for the synthetic and Iris data in Figure 3 and in Table 5, respectively, in contrast to those of the weighted cSVM. Our results show that the weighted qSVM is competitive with the weighted cSVM, and for the Iris data even better.

Finally, we assembled the coresets of our real-world EO data (an HSI of Indian Pine and a PolSAR image of San Francisco). We demonstrated the similarity between the real-world EO data and their coresets by analyzing their KL divergence. The KL divergence test indicated that our coresets are valid, small, and representative weighted subsets of our real-world EO data (see Table 4). Then, we trained the weighted qSVM on our coresets with a D-Wave QA whose annealing parameters had already been set on the synthetic and Iris data. Table 5 shows that our weighted qSVM generates classification results that are competitive with those of the weighted cSVM. In some instances, our weighted qSVM even outperforms the weighted cSVM.

As ongoing and future work, we intend to develop a novel method for assembling coresets with balanced labels via sparse variational inference, since currently available techniques generate unbalanced labels. Furthermore, we plan to design hybrid quantum-classical methods for different real-world EO problems. These hybrid methods will reduce the dimensionality of remotely sensed images (in the spatial dimension) by using our established methods. They will also reduce the size of our training/test data by using coresets with balanced labels, so that these small datasets can be processed on a small quantum computer.

Author Contributions

Conceptualization, S.O.; methodology, S.O.; software, S.O.; investigation, S.O.; resources, M.D.; data curation, S.O.; writing—original draft preparation, S.O.; writing—review and editing, S.O.; supervision, M.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research has no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The hyperspectral image of Indian Pine is available online: https://aviris.jpl.nasa.gov/, accessed on 30 August 2021. The code for coreset construction via sparse variational inference is available at https://github.com/trevorcampbell/bayesian-coresets, accessed on 30 August 2021. The code for a quantum Support Vector Machine is available at https://gitlab.jsc.fz-juelich.de/cavallaro1/quantum_svm_algorithms, accessed on 30 August 2021.

Acknowledgments

We would like to greatly acknowledge Gottfried Schwarz (DLR, Oberpfaffenhofen) for his valuable comments and contributions for enhancing the quality of our paper. In addition, the authors gratefully acknowledge the Juelich Supercomputing Centre (https://www.fzjuelich.de/ias/jsc, accessed on 30 August 2021) for funding this project by providing computing time through the Juelich UNified Infrastructure for Quantum computing (JUNIQ) on a D-Wave quantum annealer.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Richards, J. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 2013.

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.S. Remote Sensing Image Scene Classification Meets Deep Learning: Challenges, Methods, Benchmarks, and Opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3735–3756.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Marmanis, D.; Datcu, M.; Esch, T.; Stilla, U. Deep Learning Earth Observation Classification Using ImageNet Pretrained Networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 105–109.

- Manousakas, D.; Xu, Z.; Mascolo, C.; Campbell, T. Bayesian Pseudocoresets. In Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.F., Lin, H., Eds.; Curran Associates, Inc.: New York, NY, USA, 2020; Volume 33, pp. 14950–14960.

- Campbell, T.; Beronov, B. Sparse Variational Inference: Bayesian Coresets from Scratch. In Advances in Neural Information Processing Systems; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2019; Volume 32.

- Campbell, T.; Broderick, T. Bayesian Coreset Construction via Greedy Iterative Geodesic Ascent. arXiv 2018, arXiv:1802.01737.

- Tukan, M.; Baykal, C.; Feldman, D.; Rus, D. On Coresets for Support Vector Machines. arXiv 2020, arXiv:2002.06469.

- Harrow, A.W. Small quantum computers and large classical data sets. arXiv 2020, arXiv:2004.00026.

- Tomesh, T.; Gokhale, P.; Anschuetz, E.R.; Chong, F.T. Coreset Clustering on Small Quantum Computers. Electronics 2021, 10, 1690.

- Preskill, J. Quantum Computing in the NISQ era and beyond. Quantum 2018, 2, 79.

- Denchev, V.S.; Boixo, S.; Isakov, S.V.; Ding, N.; Babbush, R.; Smelyanskiy, V.; Martinis, J.; Neven, H. What is the Computational Value of Finite-Range Tunneling? Phys. Rev. X 2016, 6, 031015.

- Dunjko, V.; Briegel, H.J. Machine learning & artificial intelligence in the quantum domain: A review of recent progress. Rep. Prog. Phys. 2018, 81, 074001.

- Rebentrost, P.; Mohseni, M.; Lloyd, S. Quantum Support Vector Machine for Big Data Classification. Phys. Rev. Lett. 2014, 113, 130503.

- Huang, H.Y.; Broughton, M.; Mohseni, M.; Babbush, R.; Boixo, S.; Neven, H.; McClean, J.R. Power of data in quantum machine learning. Nat. Commun. 2021, 12, 2631.

- Biamonte, J.; Wittek, P.; Pancotti, N.; Rebentrost, P.; Wiebe, N.; Lloyd, S. Quantum machine learning. Nature 2017, 549, 195–202.

- Otgonbaatar, S.; Datcu, M. Natural Embedding of the Stokes Parameters of Polarimetric Synthetic Aperture Radar Images in a Gate-Based Quantum Computer. IEEE Trans. Geosci. Remote Sens. 2021, 1–8.

- Otgonbaatar, S.; Datcu, M. Classification of Remote Sensing Images with Parameterized Quantum Gates. IEEE Geosci. Remote Sens. Lett. 2021, 1–5.

- Boothby, K.; Bunyk, P.; Raymond, J.; Roy, A. Next-Generation Topology of D-Wave Quantum Processors. arXiv 2020, arXiv:2003.00133.

- Farhi, E.; Goldstone, J.; Gutmann, S.; Sipser, M. Quantum Computation by Adiabatic Evolution. arXiv 2000, arXiv:quant-ph/0001106.

- Cavallaro, G.; Willsch, D.; Willsch, M.; Michielsen, K.; Riedel, M. Approaching Remote Sensing Image Classification with Ensembles of Support Vector Machines on the D-Wave Quantum Annealer. In Proceedings of the IGARSS 2020–2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1973–1976.

- Otgonbaatar, S.; Datcu, M. A Quantum Annealer for Subset Feature Selection and the Classification of Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7057–7065.

- Shang, F.; Hirose, A. Quaternion Neural-Network-Based PolSAR Land Classification in Poincare-Sphere-Parameter Space. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5693–5703.

- Cloude, S.R.; Papathanassiou, K.P. Polarimetric SAR interferometry. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1551–1565.

- Willsch, D.; Willsch, M.; De Raedt, H.; Michielsen, K. Support vector machines on the D-Wave quantum annealer. Comput. Phys. Commun. 2020, 248, 107006.

- Lee, J.S.; Boerner, W.M.; Schuler, D.; Ainsworth, T.; Hajnsek, I.; Papathanassiou, K.; Lüneburg, E. A Review of Polarimetric SAR Algorithms and their Applications. J. Photogramm. Remote. Sens. China 2004, 9, 31–80.

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K. A Tutorial on Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Mag. (GRSM) 2013, 1, 6–43.

- Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support Vector Machine Versus Random Forest for Remote Sensing Image Classification: A Meta-Analysis and Systematic Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325.

- Yang, X.; Song, Q.; Cao, A. Weighted support vector machine for data classification. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; Volume 2, pp. 859–864.

- Otgonbaatar, S.; Datcu, M. Quantum annealer for network flow minimization in InSAR images. In Proceedings of the EUSAR 2021: 13th European Conference on Synthetic Aperture Radar, Online. 29 March–1 April 2021; pp. 1–4.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).