Parallel Multiphysics Simulation of Package Systems Using an Efficient Domain Decomposition Method

Abstract

1. Introduction

2. Mathematical Implementation

2.1. Boundary Value Problem of Electromagnetic Field

2.2. Electromagnetic–Thermal Stress Coupling

3. DDM Solver and Parallel Implementation

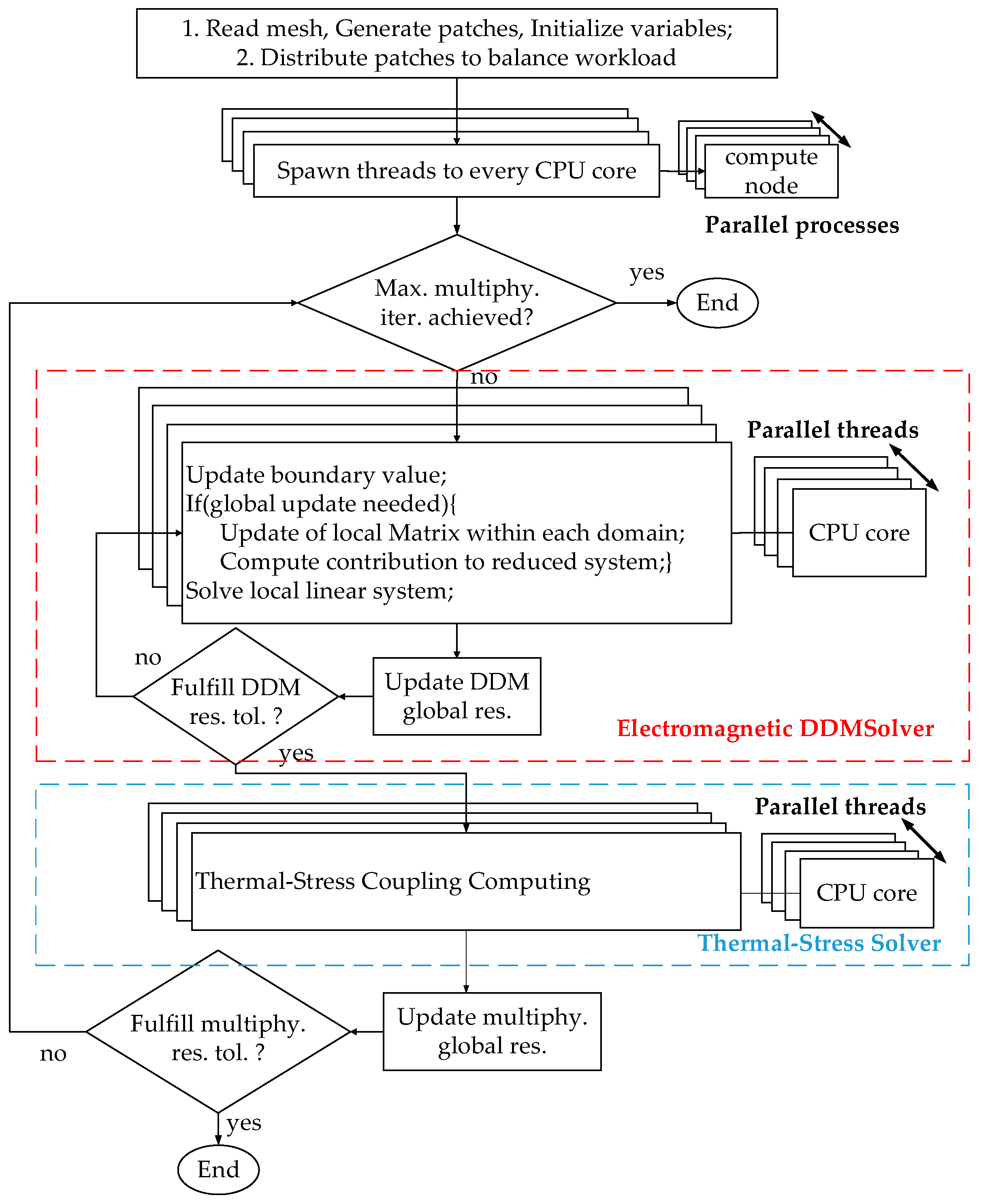

3.1. DDM Solver and Multiphysics Simulations

| Algorithm 1. Multiphysics loops based on the domain decomposition method (DDM) |
|---|
| Initial electromagnetic guess; set p = 0 |
| Do |
| Assemble linear system |
| Solve |
| Update boundary values |
| Compute thermal stress |
| Update material properties and grid coordinates |
| Update: p = p + 1 |
| While multiphysics loops not converged |

p: multiphysics loop counter; n: sub-domain number.
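The outer loop of Algorithm 1 is a fixed-point (Picard-type) iteration between the field solve and the thermal-stress update. The sketch below illustrates only that loop structure, under loudly stated assumptions: the electromagnetic "assemble and solve" step is replaced by the Joule power of a single lumped conductor, the thermal solve by a lumped thermal resistance, the material-property update by a linear temperature coefficient of resistivity, and the grid-coordinate update by linear thermal expansion of the conductor length. All names and parameter values (`solve_em`, `solve_thermal`, `R_TH`, and so on) are hypothetical and are not taken from the paper's FEM/DDM solver; the convergence test on temperature is likewise an assumption.

```python
from dataclasses import dataclass

# Hypothetical toy parameters (assumptions, not values from the paper).
T0 = 300.0        # reference/ambient temperature, K
RHO0 = 1.7e-8     # resistivity at T0, ohm*m (roughly copper)
ALPHA = 3.9e-3    # temperature coefficient of resistivity, 1/K
BETA = 17e-6      # linear thermal expansion coefficient, 1/K
AREA = 1e-8       # conductor cross-section, m^2
R_TH = 10.0       # lumped thermal resistance to ambient, K/W
CURRENT = 2.0     # drive current, A


@dataclass
class State:
    temperature: float  # K
    length: float       # m; stands in for the "grid coordinates"
    resistivity: float  # ohm*m; stands in for the "material properties"


def solve_em(s: State) -> float:
    """Stand-in for 'assemble and solve': Joule power dissipated in the conductor, W."""
    resistance = s.resistivity * s.length / AREA
    return CURRENT ** 2 * resistance


def solve_thermal(power: float) -> float:
    """Stand-in for the thermal solve: steady temperature behind a lumped thermal resistance."""
    return T0 + R_TH * power


def multiphysics_loop(length0: float, tol: float = 1e-6, max_loops: int = 100):
    """Fixed-point iteration mirroring the do-while structure of Algorithm 1."""
    s = State(temperature=T0, length=length0, resistivity=RHO0)  # initial guess, p = 0
    p = 0
    while True:
        power = solve_em(s)                  # electromagnetic step
        t_new = solve_thermal(power)         # thermal step
        converged = abs(t_new - s.temperature) < tol
        # Update material properties and grid coordinates from the new temperature.
        s = State(
            temperature=t_new,
            length=length0 * (1.0 + BETA * (t_new - T0)),
            resistivity=RHO0 * (1.0 + ALPHA * (t_new - T0)),
        )
        p += 1                               # p = p + 1
        if converged or p >= max_loops:      # while not converged
            return s, p


state, loops = multiphysics_loop(length0=0.5)
print(f"T = {state.temperature:.2f} K after {loops} loops")
```

With these toy values the loop settles near 339.2 K. It converges quickly because the feedback gain, roughly I²·R₀·(α + β)·R_th ≈ 0.13, is well below one; strongly temperature-sensitive materials or poor heat sinking push that gain toward one and slow down, or break, a plain fixed-point iteration.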

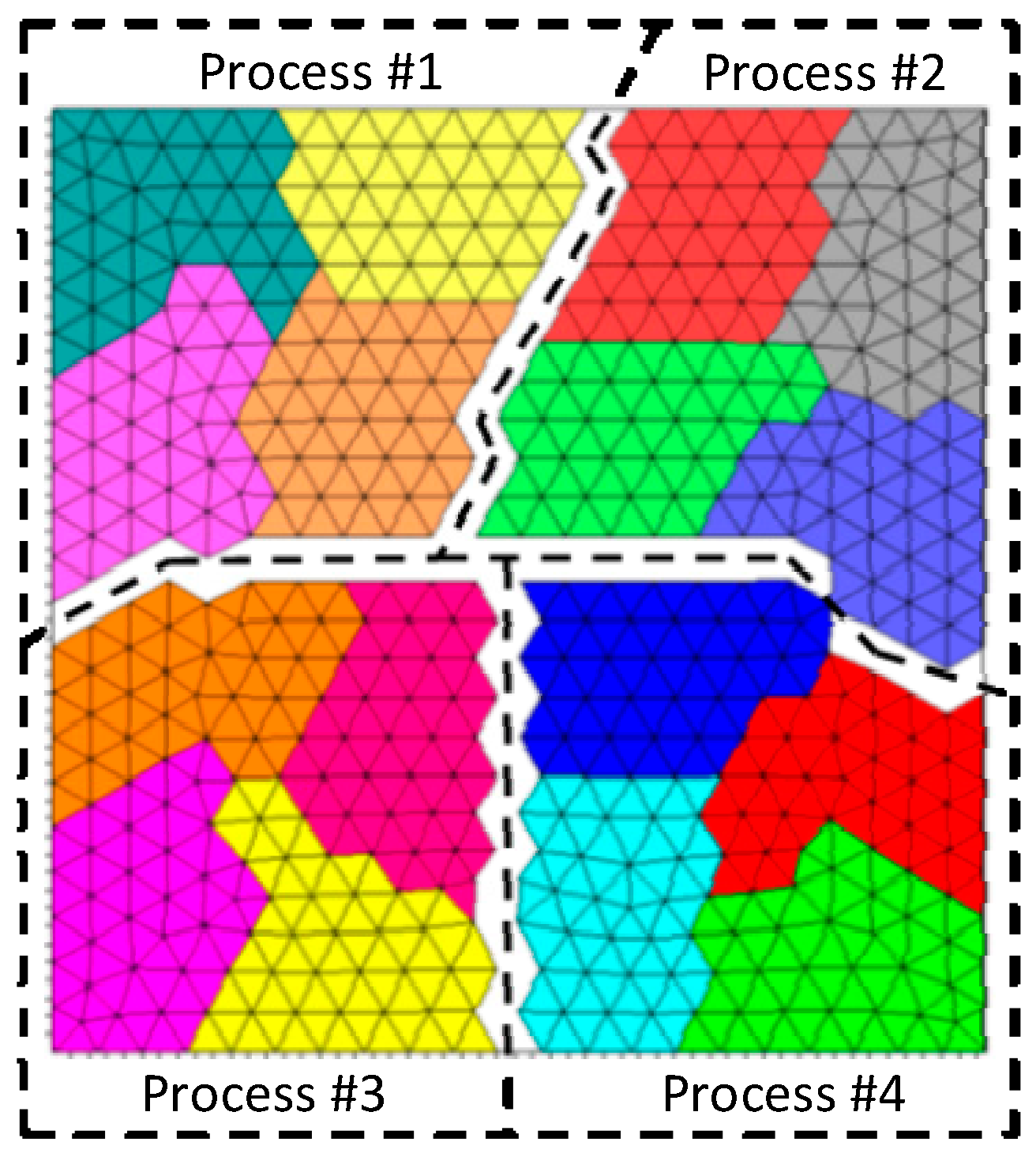
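Inside each multiphysics loop, Algorithm 1 assembles and solves per-sub-domain systems and then updates the boundary values exchanged between neighboring sub-domains. The paper applies this to the electromagnetic field; the sketch below shows only the generic pattern, using a swapped-in textbook method: additive (Jacobi-type) Schwarz iteration with Dirichlet interface exchange for the 1-D Poisson problem −u'' = 1 on (0, 1) with u(0) = u(1) = 0, split into two overlapping sub-domains. The grid size, overlap, and function names are illustrative assumptions; the paper's actual transmission conditions and interface treatment are not reproduced here.

```python
import numpy as np


def solve_subdomain(lo, hi, h, f, g_left, g_right):
    """Assemble and solve -u'' = f on grid nodes lo..hi with Dirichlet values at both ends."""
    n = hi - lo - 1  # interior unknowns of this sub-domain
    A = (np.diag(2.0 * np.ones(n))
         - np.diag(np.ones(n - 1), 1)
         - np.diag(np.ones(n - 1), -1)) / h**2
    b = f[lo + 1:hi].copy()
    b[0] += g_left / h**2    # boundary value received from the neighbor (or physical BC)
    b[-1] += g_right / h**2
    return np.linalg.solve(A, b)


def additive_schwarz(m=40, b1=24, a2=16, tol=1e-10, max_iter=500):
    """Sub-domain 1 covers nodes 0..b1, sub-domain 2 covers nodes a2..m (overlap a2..b1)."""
    h = 1.0 / m
    x = np.linspace(0.0, 1.0, m + 1)
    f = np.ones(m + 1)        # source term f(x) = 1
    u1 = np.zeros(m + 1)      # iterate owned by sub-domain 1
    u2 = np.zeros(m + 1)      # iterate owned by sub-domain 2
    for it in range(1, max_iter + 1):
        # Both solves read only the previous iterate, so they could run in parallel.
        new1 = solve_subdomain(0, b1, h, f, 0.0, u2[b1])
        new2 = solve_subdomain(a2, m, h, f, u1[a2], 0.0)
        change = max(np.max(np.abs(new1 - u1[1:b1])),
                     np.max(np.abs(new2 - u2[a2 + 1:m])))
        u1[1:b1] = new1       # update boundary values seen by the neighbor
        u2[a2 + 1:m] = new2
        if change < tol:
            break
    # Glue the global solution at the middle of the overlap.
    mid = (a2 + b1) // 2
    u = np.concatenate([u1[:mid], u2[mid:]])
    return x, u, it


x, u, iters = additive_schwarz()
print(f"{iters} iterations, max error {np.max(np.abs(u - x * (1 - x) / 2)):.1e}")
```

Because each sub-domain solve reads only the previous iterate, the two solves are independent and could be distributed across processes, which is the property a parallel DDM exploits. For this source term the second-order central difference is exact, so the converged nodal values match x(1 − x)/2 to round-off.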

3.2. Parallel Infrastructure and Parallel Algorithm

4. Numerical Examples

4.1. Numerical Validation

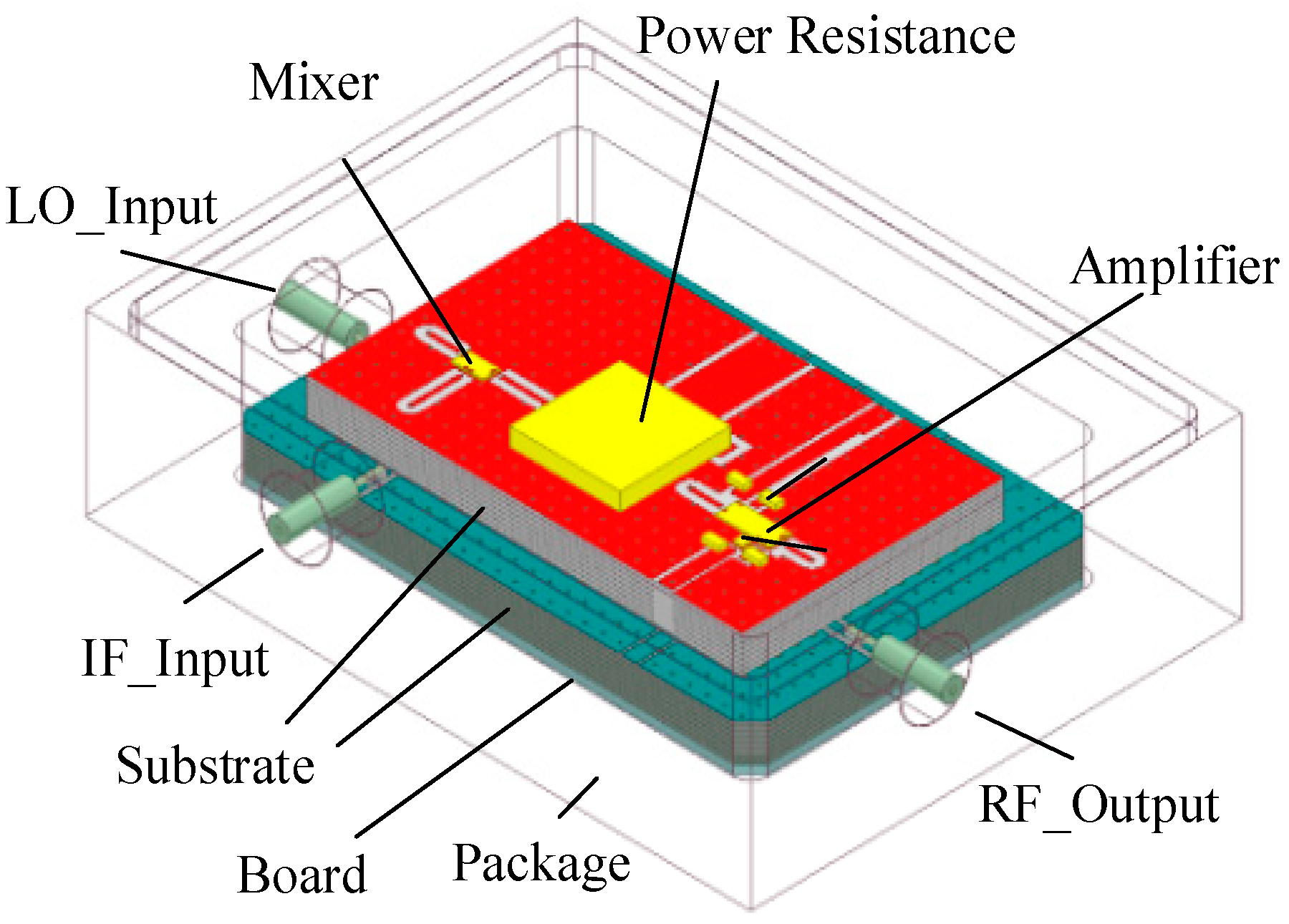

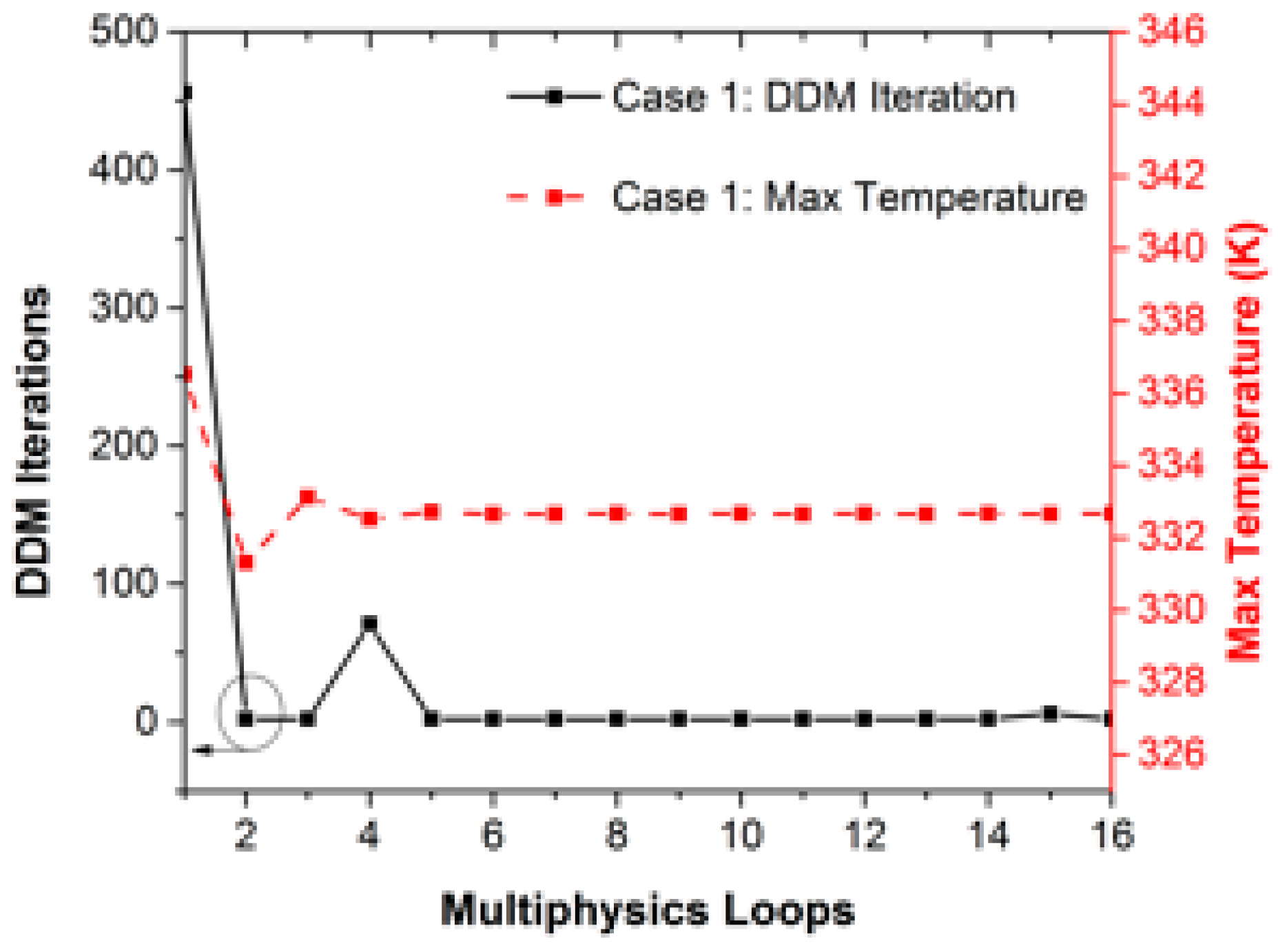

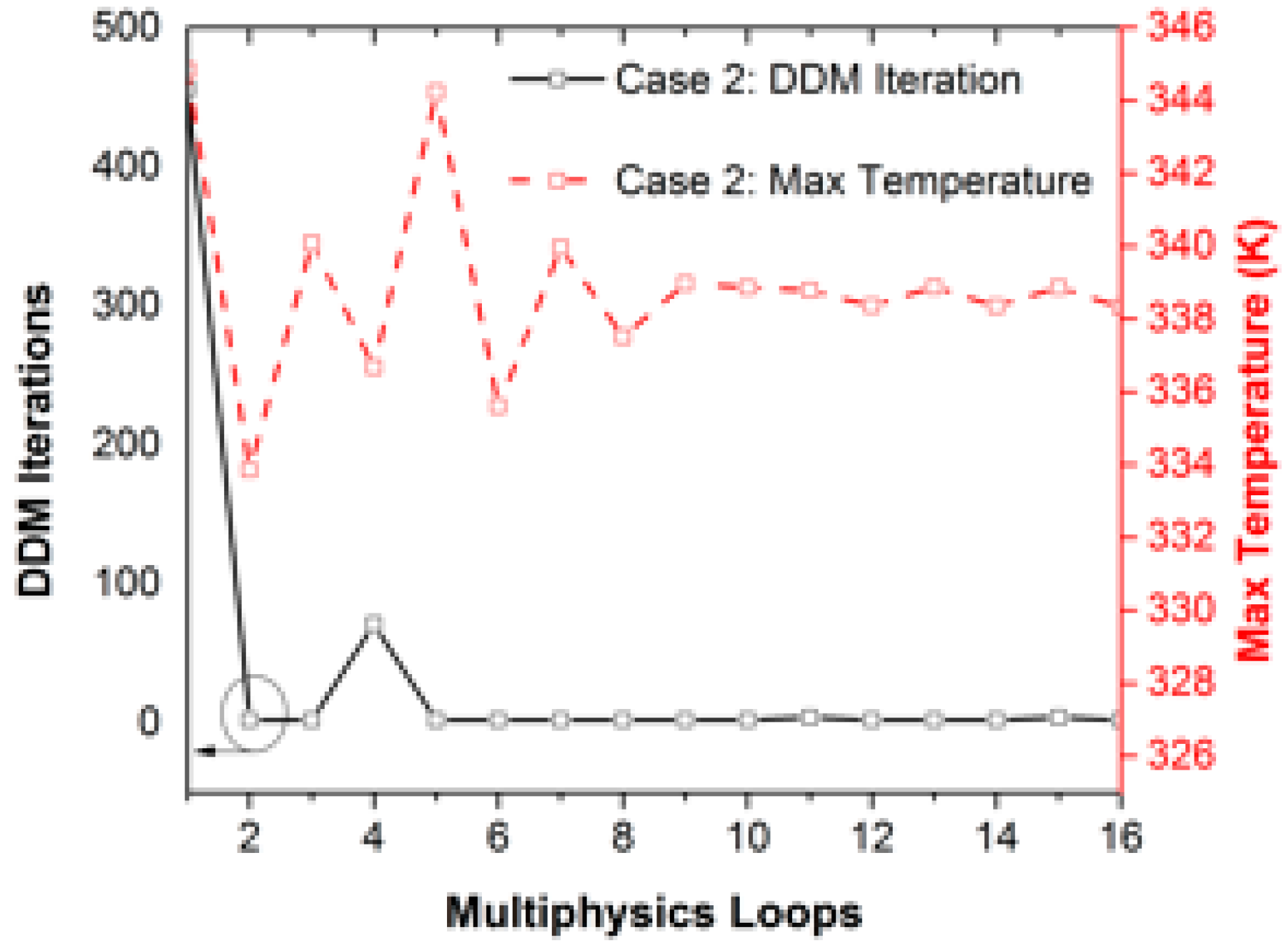

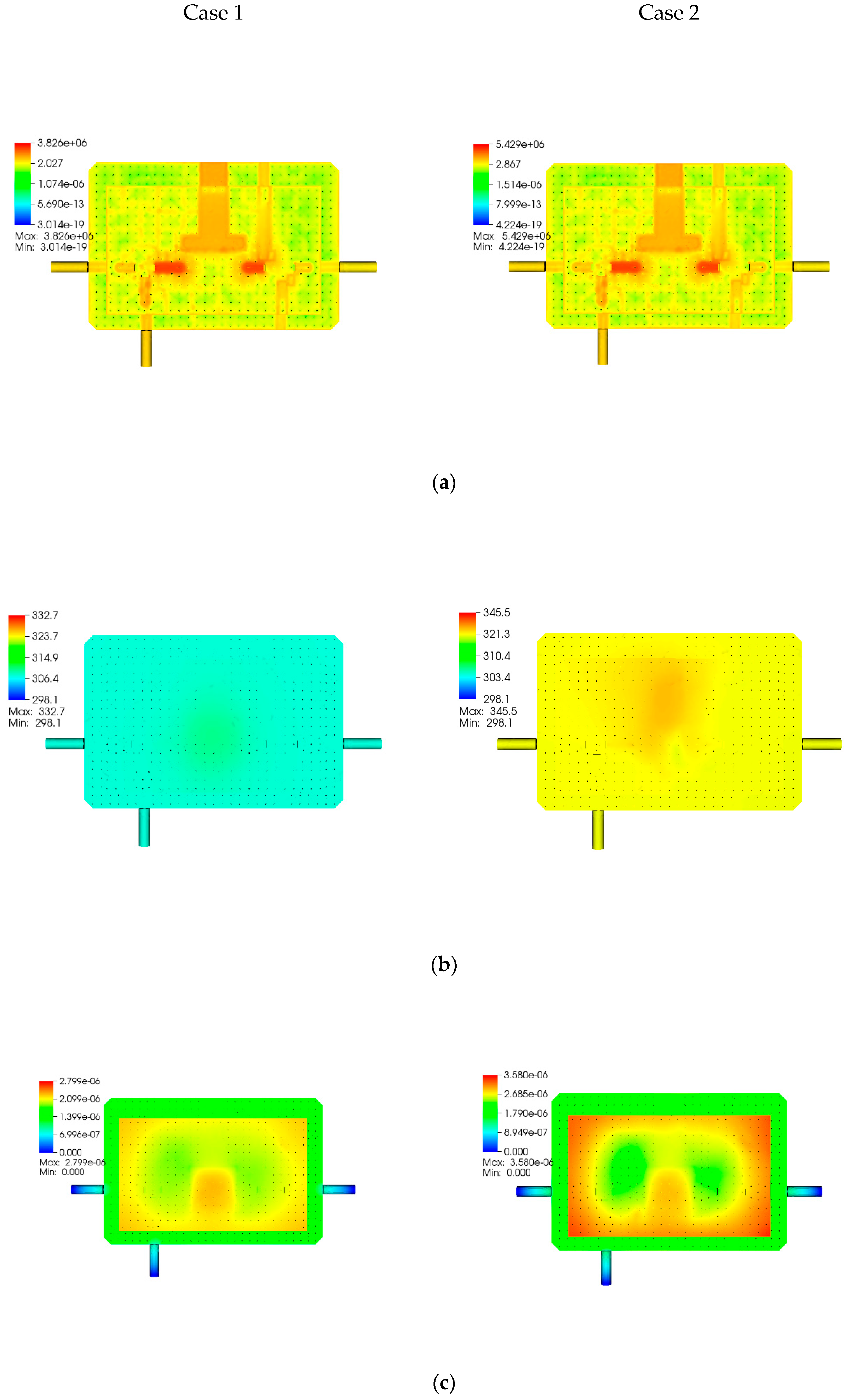

4.2. Multiphysics Simulation of Real-Life System-In-Package

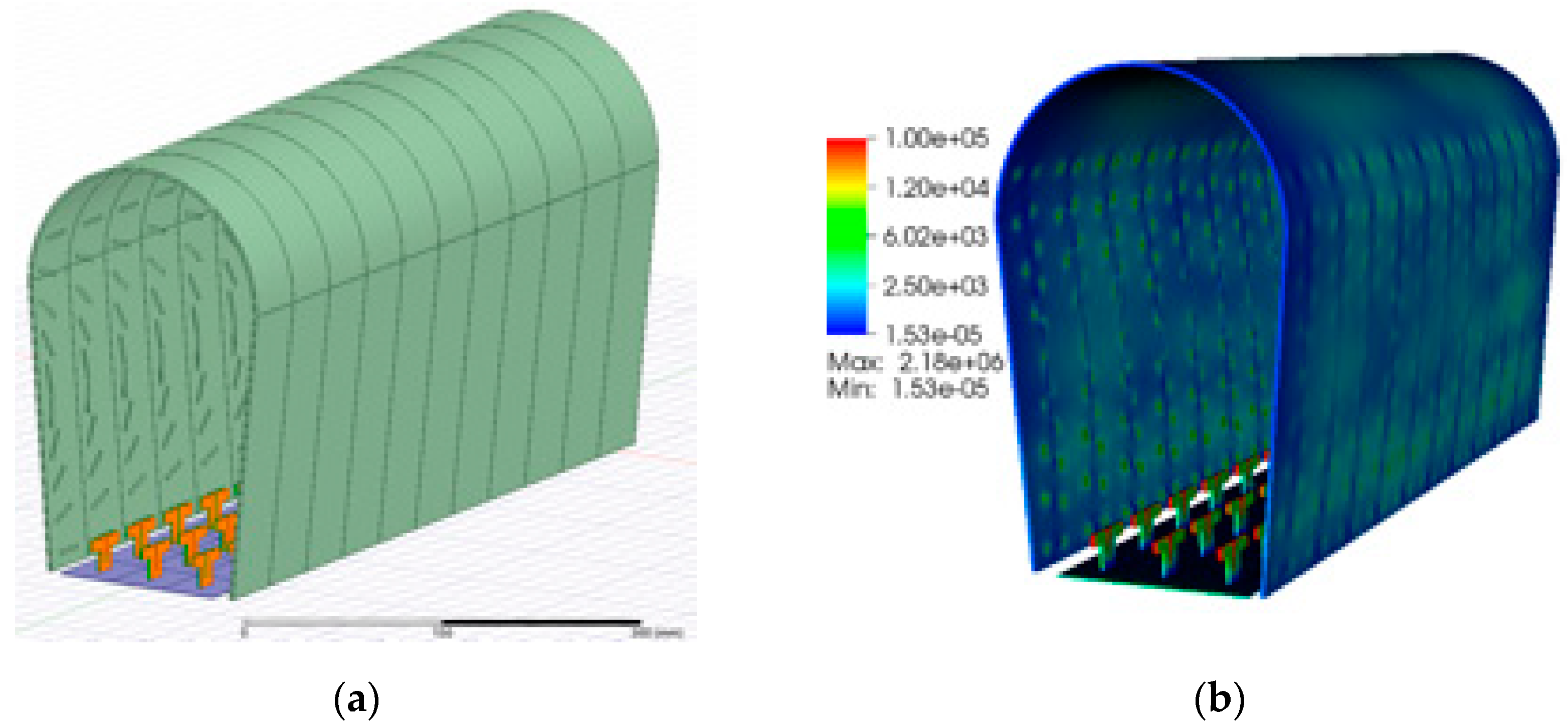

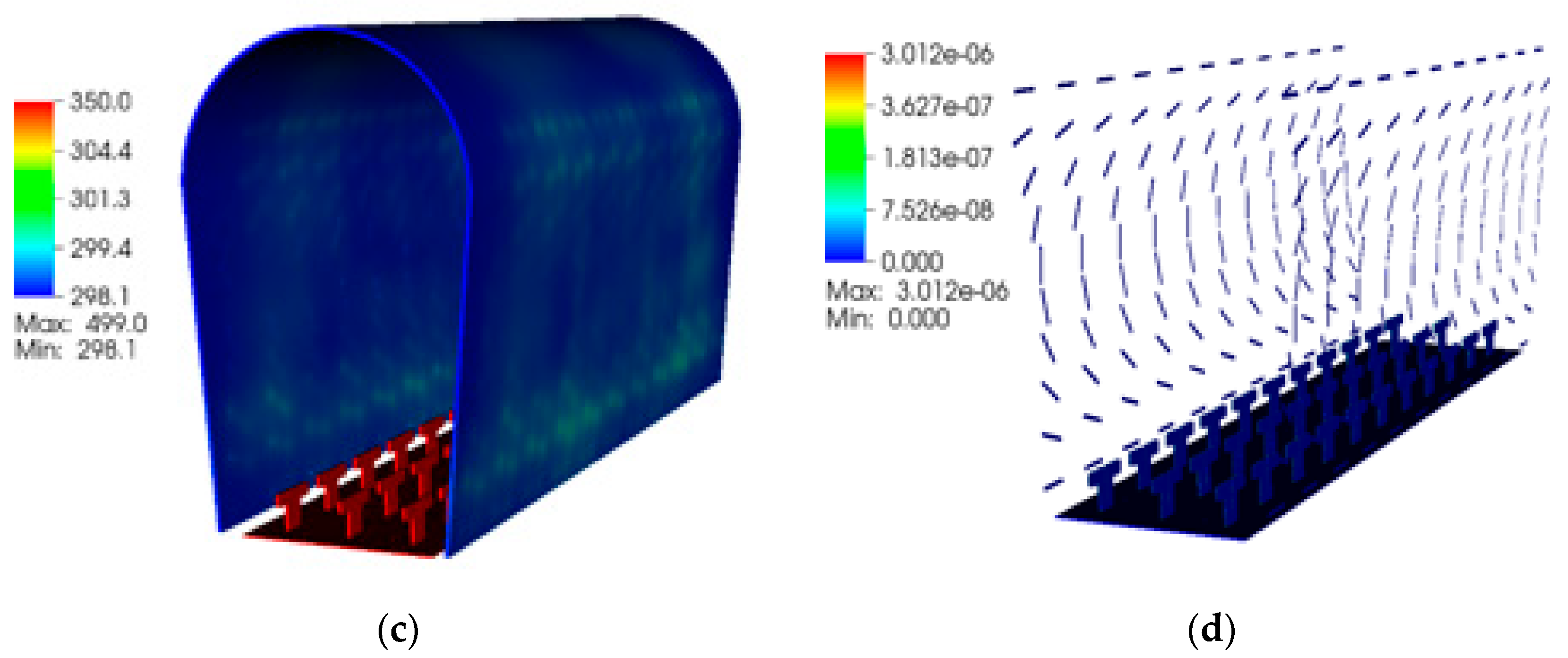

4.3. Multiphysics Simulation of Antenna Array and Radome

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Carson, F.; Kim, Y.C.; Yoon, I.S. 3-D stacked package technology and trends. Proc. IEEE 2009, 97, 31–42.

- Meindl, J.D. Beyond Moore’s Law: The interconnect era. IEEE Comput. Sci. Eng. 2003, 5, 20–24.

- Lu, J.-Q. 3-D hyperintegration and packaging technologies for micronano systems. Proc. IEEE 2009, 97, 18–30.

- Wane, S.; Kuo, A.-Y.; Santos, P.D. Dynamic power and signal integrity analysis for chip-package-board co-design and co-simulation. In Proceedings of the 2009 European Microwave Integrated Circuits Conference (EuMIC), Rome, Italy, 28–29 September 2009.

- Ong, C.-J.; Wu, B.P.; Tsang, L.; Gu, X. Full-wave solver for microstrip trace and through-hole via in layered media. IEEE Trans. Adv. Packag. 2008, 31, 292–302.

- Huang, C.-C.; Lai, K.L.; Tsang, L.; Gu, X.; Ong, C.-J. Transmission and scattering on interconnects with via structures. Microw. Opt. Technol. Lett. 2005, 46, 446–452.

- Interconnect Application Note: Z-pack HM-Zd PWB Footprint Optimization for Routing. Report, 20GC015-1, Rev. B. July 2003. Available online: http://agata.pd.infn.it/LLP_Carrier/New_ATCA_Carrier_web/Assemby_Docs/footprint_optimization.pdf (accessed on 1 December 2020).

- Blair, C.; Ruiz, S.L.; Morales, M. 5G, a multiphysics simulations vision from antenna element design to system link analysis. In Proceedings of the IEEE ICEAA, Granada, Spain, 9–13 September 2019.

- Baptista, E.; Buisman, K.; Vaz, J.C.; Fager, C. Analysis of thermal coupling effects in integrated MIMO transmitters. In Proceedings of the IEEE MTT-S IMS, Honolulu, HI, USA, 1–4 June 2017.

- Jin, J.-M. The Finite Element Method in Electromagnetics, 3rd ed.; Wiley: New York, NY, USA, 2014.

- Peng, Z.; Shao, Y.; Gao, H.W.; Wang, S.; Lin, S. High-Fidelity, High-Performance Computational Algorithms for Intrasystem Electromagnetic Interference Analysis of IC and Electronics. IEEE Trans. Compon. Packag. Manuf. Technol. 2017, 7, 653–668.

- Yan, J.; Jiao, D. Fast explicit and unconditionally stable FDTD method for electromagnetic analysis. IEEE Trans. Microw. Theory Tech. 2017, 65, 2698–2710.

- Dolean, V.; Gander, M.J.; Lanteri, S.; Lee, J.-F.; Peng, Z. Effective transmission conditions for domain decomposition methods applied to the time-harmonic curl-curl Maxwell’s equations. J. Comput. Phys. 2015, 280, 232–247.

- Wang, W.J.; Xu, R.; Li, H.Y.; Liu, Y.; Guo, X.-Y.; Xu, Y.; Li, H.; Zhou, H.; Yin, W.-Y. Massively parallel simulation of large-scale electromagnetic problems using one high-performance computing scheme and domain decomposition method. IEEE Trans. Electromagn. Compat. 2017, 59, 1523–1531.

- Xue, M.F.; Jin, J.M. A hybrid conformal/nonconformal domain decomposition method for multi-region electromagnetic modeling. IEEE Trans. Antennas Propag. 2014, 62, 2009–2021.

- Zhou, B.D.; Jiao, D. Direct finite-element solver of linear complexity for large-scale 3-D electromagnetic analysis and circuit extraction. IEEE Trans. Microw. Theory Tech. 2015, 63, 3066–3080.

- Shao, Y.; Peng, Z.; Lee, J.-F. Signal Integrity Analysis of High-Speed Interconnects by Using Nonconformal Domain Decomposition Method. IEEE Trans. Compon. Packag. Manuf. Technol. 2012, 2, 122–130.

- Shao, Y.; Peng, Z.; Lee, J.-F. Thermal Analysis of High-Power Integrated Circuits and Packages Using Nonconformal Domain Decomposition Method. IEEE Trans. Compon. Packag. Manuf. Technol. 2013, 3, 1321–1331.

- Wang, W.J.; Zhao, Z.G.; Zhou, H.J. Multi-Physics Simulation of Antenna Arrays Using High-Performance Program JEMS-FD. In Proceedings of the 2019 IEEE International Conference on Computational Electromagnetics (ICCEM), Shanghai, China, 20–22 March 2019; pp. 1–3.

- Staten, M.L.; Owen, S.J.; Shontz, S.M.; Salinger, A.G.; Coffey, T.S. A comparison of mesh morphing methods for 3D shape optimization. In Proceedings of the 20th International Meshing Roundtable, Paris, France, 23–26 October 2011; Springer: Berlin, Germany, 2011; pp. 293–310.

- Wang, W.; Vouvakis, M.N. Mesh morphing strategies for robust geometric parameter model reduction. In Proceedings of the 2012 IEEE International Symposium on Antennas and Propagation, Chicago, IL, USA, 8–14 July 2012; pp. 1–2.

- Lamecki, A. A mesh deformation technique based on solid mechanics for parametric analysis of high-frequency devices with 3-D FEM. IEEE Trans. Microw. Theory Tech. 2016, 64, 3400–3408.

- Shao, Y.; Peng, Z.; Lee, J.-F. Full-wave real-life 3-D package signal integrity analysis using nonconformal domain decomposition method. IEEE Trans. Microw. Theory Tech. 2011, 59, 230–241.

- Lu, J.Q.; Chen, Y.P.; Li, D.W.; Lee, J.-F. An Embedded Domain Decomposition Method for Electromagnetic Modeling and Design. IEEE Trans. Antennas Propag. 2019, 67, 309–323.

- Liu, Q.K.; Zhao, W.B.; Cheng, J.; Mo, Z.; Zhang, A.; Liu, J. A programming framework for large scale numerical simulations on unstructured mesh. In Proceedings of the 2nd IEEE International Conference on High Performance Smart Computing, New York, NY, USA, 9–10 April 2016.

- PETSc. Available online: https://www.mcs.anl.gov/petsc/ (accessed on 24 December 2020).

- Amestoy, P.R.; Buttari, A.; L’Excellent, J.Y.; Mary, T. Performance and scalability of the block low-rank multifrontal factorization on multicore architectures. ACM Trans. Math. Softw. 2019, 45, 1–26.

- HYPRE: Scalable Linear Solvers and Multigrid Methods. Available online: https://computing.llnl.gov/projects/hypre-scalable-linear-solvers-multigrid-methods (accessed on 24 December 2020).

| Operating Frequency | Input Power | Highest Temperature | Highest Temperature (ANSYS) |
|---|---|---|---|
| 3.0 GHz | 50 W | 318.2 K | 319.0 K |
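For the 3.0 GHz, 50 W validation case compared against ANSYS, the agreement can be expressed as a relative difference in peak temperature. The symbols below are introduced here for illustration only (T_max for the first "Highest Temperature" value, T_max^ANSYS for the ANSYS value) and are not the paper's notation:

```latex
\varepsilon_T = \frac{\lvert T_{\max} - T_{\max}^{\mathrm{ANSYS}} \rvert}{T_{\max}^{\mathrm{ANSYS}}}
             = \frac{\lvert 318.2 - 319.0 \rvert}{319.0}
             = \frac{0.8}{319.0} \approx 2.5 \times 10^{-3} \; (0.25\%)
```

This figure is relative to absolute temperature. Measured against the temperature rise above ambient the relative difference would be larger, but the ambient temperature is not given in the table, so that ratio cannot be computed from these values alone.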

| Case | Number of CPU Cores | Input Power (W) | Highest Temperature (K) | S11 (dB) | Time (s) |
|---|---|---|---|---|---|
| Case 1 | 140 | 10.0 | 332.7 | −13.78 | 47,652 |
| Case 2 | 140 | 50.0 | 345.3 | −12.83 | 47,643 |
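Two quantities in the Case 1 and Case 2 rows are easier to compare in linear terms. The short script below converts S11 from decibels to the reflection-coefficient magnitude, |S11| = 10^(S11/20), and to the reflected power fraction, |S11|² = 10^(S11/10), and computes the average increase in peak temperature per added watt of input power. The function names are illustrative; the numbers are copied from the two table rows.

```python
# Values copied from the Case 1 and Case 2 rows (140 CPU cores in both cases).
cases = {
    "Case 1": {"power_w": 10.0, "t_max_k": 332.7, "s11_db": -13.78},
    "Case 2": {"power_w": 50.0, "t_max_k": 345.3, "s11_db": -12.83},
}


def s11_linear(s11_db: float) -> float:
    """Magnitude of the reflection coefficient from S11 in dB."""
    return 10.0 ** (s11_db / 20.0)


def reflected_power_fraction(s11_db: float) -> float:
    """Fraction of incident power reflected at the port, |S11|^2."""
    return 10.0 ** (s11_db / 10.0)


for name, c in cases.items():
    print(f"{name}: |S11| = {s11_linear(c['s11_db']):.3f}, "
          f"reflected power = {100 * reflected_power_fraction(c['s11_db']):.1f}%")

c1, c2 = cases["Case 1"], cases["Case 2"]
# Average slope over the 10-50 W interval, not a constant thermal resistance.
dT_per_w = (c2["t_max_k"] - c1["t_max_k"]) / (c2["power_w"] - c1["power_w"])
print(f"Average peak-temperature increase: {dT_per_w:.3f} K/W")
```

On these numbers the reflected power grows from roughly 4.2% to 5.2% of the incident power as the input power rises from 10 W to 50 W, and the peak temperature rises by about 0.315 K per added watt on average. That slope is only an interval average: with temperature-dependent material properties, the peak temperature need not scale linearly with input power.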

| Number of CPU Cores | Input Power (W) | Highest Temperature (K) | S11 (dB) | Time (s) |

|---|---|---|---|---|

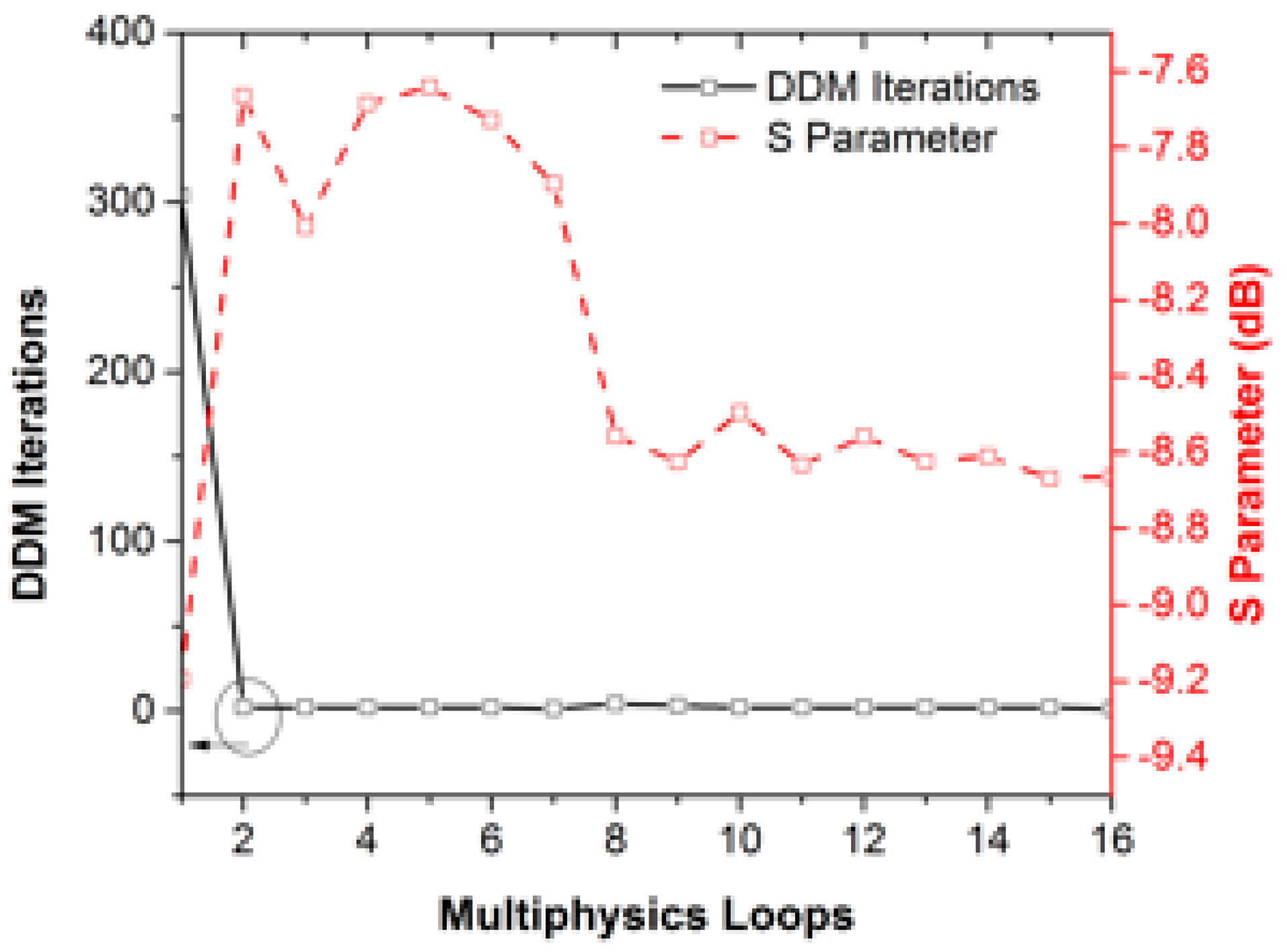

| 192 | 100.0 | 499.0 | −8.66 | 64,965 |
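The time columns report wall-clock seconds on different core counts, so one simple way to compare computational cost across the three reported runs is total core-hours, cores × seconds / 3600. The sketch below does that arithmetic with the tabulated values; the run labels are illustrative. Because the runs use different input powers (and possibly different models), this is a cost comparison, not a parallel-scaling study.

```python
# (label, CPU cores, wall-clock seconds) copied from the timing tables.
RUNS = [
    ("Case 1", 140, 47_652),
    ("Case 2", 140, 47_643),
    ("192-core run", 192, 64_965),
]


def wall_clock_hours(seconds: float) -> float:
    """Convert wall-clock seconds to hours."""
    return seconds / 3600.0


def core_hours(cores: int, seconds: float) -> float:
    """Allocated CPU time: number of cores times wall-clock hours."""
    return cores * wall_clock_hours(seconds)


for label, cores, seconds in RUNS:
    print(f"{label}: {wall_clock_hours(seconds):.1f} h wall clock, "
          f"{core_hours(cores, seconds):.0f} core-hours")
```

Case 1 and Case 2 each consume roughly 1,853 core-hours (about 13.2 h of wall-clock time), and the 192-core run roughly 3,465 core-hours (about 18.0 h).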

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, W.; Liu, Y.; Zhao, Z.; Zhou, H. Parallel Multiphysics Simulation of Package Systems Using an Efficient Domain Decomposition Method. Electronics 2021, 10, 158. https://doi.org/10.3390/electronics10020158


Chicago/Turabian Style: Wang, Weijie, Yannan Liu, Zhenguo Zhao, and Haijing Zhou. 2021. "Parallel Multiphysics Simulation of Package Systems Using an Efficient Domain Decomposition Method" Electronics 10, no. 2: 158. https://doi.org/10.3390/electronics10020158
