Abstract

Recent research on single-image super-resolution (SISR) using deep convolutional neural networks has made a breakthrough and achieved tremendous performance. Despite this significant progress, many convolutional neural networks (CNNs) are of limited use in practical applications because of their heavy computational cost. This paper proposes a multi-path network for SISR, called the multi-path deep CNN with residual inception network for single image super-resolution. In detail, a residual (ResNet) block combined with an Inception block forms the backbone of the entire network architecture. In addition, we remove the batch normalization layer from the residual network (ResNet) block and the max-pooling layer from the Inception block to further reduce the number of parameters and to prevent over-fitting during training. Moreover, the conventional rectified linear unit (ReLU) is replaced with the Leaky ReLU activation function to speed up training. Specifically, we propose a novel upscale module with two types of upscaling layers, which adopts three paths to upscale the features by jointly using deconvolution and upsampling layers, instead of using a single deconvolution layer or upsampling layer alone. Extensive image super-resolution (SR) experiments on five publicly available test datasets show that the proposed model not only attains higher peak signal-to-noise ratio/structural similarity index measure (PSNR/SSIM) scores but also enables faster and more efficient computation than existing image SR methods. For instance, on the Set5 dataset with the challenging upscale factor of 8×, our method improves the overall PSNR by 1.88 dB over the baseline bicubic method and reduces the number of parameters by 62% compared with the deeply-recursive convolutional network (DRCN) method.

1. Introduction

Image super-resolution plays a vital role in image processing and computer vision applications because high-quality, or high-resolution (HR), images have a higher pixel density and contain more detailed information. This detailed information is used in various computer vision and image processing tasks, such as image restoration [1], security surveillance [2], closed-circuit television surveillance [3], security systems [4], object recognition [5], object detection [6], satellite imaging [7], remote sensing imagery [8,9,10], autonomous driverless cars [11], medical imaging [12,13,14], and atmospheric monitoring [15].

Single image super-resolution (SISR) reconstructs a visually pleasing high-quality, or high-resolution (HR), output image with rich and clear texture details from a low-quality or degraded input image.

Traditional SISR methods can be divided into three categories: interpolation-based [16,17,18,19], reconstruction-based [20,21,22], and learning-based methods [23,24,25,26,27,28,29,30,31]. Interpolation-based approaches are very simple to implement; however, their resulting HR images are prone to blurriness, especially at large upscale factors. Furthermore, interpolation-based approaches have limited applications and suffer from low accuracy. These approaches include bicubic [19], bilinear, and nearest-neighbor interpolation. The reconstruction-based method was specially designed in [32] and introduces prior knowledge to reduce the solution space. Such algorithms can recover sharp edge details, but their quality decreases rapidly as the enlargement factor increases. Learning-based (or example-based) approaches try to learn mappings from millions of co-occurring low-resolution (LR) and HR example images and then use these learned mappings to reconstruct the desired HR output images. A variety of learning-based approaches have been proposed, including regression-based approaches [33,34,35,36,37,38,39,40,41,42] and sparse coding-based approaches [43,44,45,46,47,48,49,50,51].
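Nearest-neighbor interpolation shows why these methods are so simple: each LR pixel is copied into an s × s block of the output, with no learned parameters. The plain-Python sketch below is illustrative only; the function name and the list-of-rows grayscale image format are assumptions. Bilinear and bicubic interpolation replace the copy step with a weighted average of neighboring pixels, but remain fixed, non-learned filters.

```python
def nearest_neighbor_upscale(image, scale):
    """Upscale a 2-D grayscale image (list of rows) by an integer factor.

    Each LR pixel is copied into a scale x scale block, which is why
    nearest-neighbor results look blocky at large factors.
    """
    if scale < 1:
        raise ValueError("scale must be a positive integer")
    out = []
    for row in image:
        # Repeat every pixel horizontally, then repeat the widened row vertically.
        wide = [pixel for pixel in row for _ in range(scale)]
        out.extend(list(wide) for _ in range(scale))
    return out


lr = [[10, 20],
      [30, 40]]
for r in nearest_neighbor_upscale(lr, 2):
    print(r)
# [10, 10, 20, 20]
# [10, 10, 20, 20]
# [30, 30, 40, 40]
# [30, 30, 40, 40]
```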

Recently, deep convolutional neural network-based approaches [23,24,25,26,27,52] have made remarkable contributions and significantly advanced image super-resolution (SR), because of their superior feature representation capability. The first successful deep learning-based architecture for the SISR problem, a shallow network of three convolutional neural network (CNN) layers in which the first two layers are followed by rectified linear units (ReLU), was presented by Dong et al. as the super-resolution convolutional neural network (SRCNN) [23]. The first CNN layer extracts patches from the input image and builds feature maps from them. The second layer performs non-linear mapping, transforming these feature maps into high-dimensional feature vectors. The final layer aggregates the feature maps to reconstruct the HR output image. To improve the efficiency and speed of SRCNN [23], the same authors proposed a faster version, FSRCNN [24] (accelerating the super-resolution convolutional neural network). To increase computational efficiency, Shi et al. introduced the efficient sub-pixel convolutional neural network (ESPCN) [25]. Inspired by the architecture of the visual geometry group network (VGG-net), Kim et al. [26] were the first to design a skip connection-based network, known as very deep super-resolution (VDSR), which uses small 3 × 3 kernels and addresses the vanishing gradient problem in wider and deeper network architectures.
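The three-layer SRCNN is small enough to count its parameters by hand. The sketch below assumes the 9-1-5 setting reported for SRCNN (kernel sizes 9, 1, and 5 with 64 and 32 filters, on a single luminance channel); these values are not stated in this section and are used only for illustration.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Number of learnable parameters in a k x k convolution layer."""
    return k * k * c_in * c_out + (c_out if bias else 0)


# Assumed SRCNN 9-1-5 configuration: (kernel size, input channels, output channels).
SRCNN_LAYERS = [
    (9, 1, 64),   # patch extraction and representation
    (1, 64, 32),  # non-linear mapping
    (5, 32, 1),   # reconstruction
]

weights = sum(conv_params(k, ci, co, bias=False) for k, ci, co in SRCNN_LAYERS)
total = sum(conv_params(k, ci, co) for k, ci, co in SRCNN_LAYERS)
print(weights, total)  # 8032 8129
```

The count stays in the thousands because only the first layer uses a large kernel and the network has just three layers; deeper models such as VDSR grow the count mainly through depth.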

To deal with the multi-scale image super-resolution problem, Lai et al. introduced a pyramid-based network architecture, the deep Laplacian pyramid super-resolution network (LapSRN) [28]. This architecture uses three sub-networks, which progressively predict intermediate images up to a scale factor of 8×. Three basic types of layers make up the whole framework: convolution layers, leaky ReLU [53] layers, and deconvolution layers. Ahn et al. introduced a cascading mechanism for local and global feature extraction from multiple layers, known as the cascading residual network (CARN) [54]. Inspired by CARN [54], Zhang et al. introduced the residual channel attention network (RCAN) [31].

Although deep learning-based image super-resolution research has improved greatly in recent years, capturing high-resolution images remains a great challenge in some cases, such as video security cameras (security surveillance) and human-computer interaction. The ResNet architecture introduced by He et al. [55] has achieved extraordinary performance in recent years because of its ability to avoid the vanishing-gradient problem during training. However, ResNet still faces challenges arising from the batch normalization (BN) layer followed by the ReLU activation function. BN consumes more training time because it requires two passes through the input data: the first to calculate the batch statistics and the second to normalize its output. Additionally, the BN layer increases computational cost and memory consumption. Fan et al. [56] suggested that BN is not suitable for image super-resolution tasks. Inception blocks are borrowed from GoogLeNet, the winner of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014, whose main objective was to achieve high accuracy with low computational cost [57]. The Inception block still has a drawback in its max-pooling layer, which keeps only the maximum pixel value and discards the other values of the feature maps. To address these drawbacks, we propose a multi-path deep CNN with residual inception network for single image super-resolution, named MCISIR, which uses the ResNet block without the BN layer and the Inception block without the max-pooling layer to speed up feature extraction and reduce the computational complexity of the model. Extensive quantitative and qualitative evaluations on five benchmark datasets show that our proposed model obtains better perceptual quality while reducing the computational cost of the network during training.
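The two passes of batch normalization can be made concrete with a minimal training-mode sketch over a 1-D list of activations. This is an illustration, not the paper's code; real BN layers work per channel over a mini-batch and also track running statistics for inference, which are omitted here.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization of a 1-D list of activations.

    Pass 1 reads the whole batch to accumulate its mean and variance;
    pass 2 reads it again to normalize, scale, and shift each value.
    """
    n = len(batch)
    # Pass 1: accumulate sum and sum of squares for the batch statistics.
    total, total_sq = 0.0, 0.0
    for x in batch:
        total += x
        total_sq += x * x
    mean = total / n
    var = total_sq / n - mean * mean
    # Pass 2: normalize every value with those statistics.
    inv_std = 1.0 / math.sqrt(var + eps)
    return [gamma * (x - mean) * inv_std + beta for x in batch]


out = batch_norm([1.0, 2.0, 3.0, 4.0])
print([round(v, 3) for v in out])  # [-1.342, -0.447, 0.447, 1.342]
```

The second pass cannot start until the first has seen every element, which is the extra cost that removing BN avoids.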

In summary, we establish a novel multi-path deep CNN with residual inception network for single image super-resolution, which achieves noticeable performance in terms of the number of parameters, PSNR/SSIM, speed, and accuracy.
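PSNR, the first of the two quality scores, compares a reconstruction with its ground truth through the mean squared error. A minimal sketch for 8-bit grayscale images stored as lists of rows; evaluation details that vary between SR papers (for example, scoring only the luminance channel or cropping image borders) are not modeled, and SSIM is omitted.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-size 2-D images."""
    if len(reference) != len(estimate) or any(
        len(a) != len(b) for a, b in zip(reference, estimate)
    ):
        raise ValueError("images must have the same shape")
    squared_error, count = 0.0, 0
    for ref_row, est_row in zip(reference, estimate):
        for r, e in zip(ref_row, est_row):
            squared_error += (r - e) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


ref = [[100, 100], [100, 100]]
est = [[101, 99], [100, 100]]
print(round(psnr(ref, est), 2))  # 51.14
```

Because PSNR is logarithmic in the MSE, a gain such as the 1.88 dB reported over bicubic corresponds to a multiplicative reduction in error.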

The main contributions of our proposed method can be summarized as follows:

- Inspired by the ResNet and Inception network architectures, we propose a multi-path deep CNN with residual and inception network for SISR, with two upsampling layers to reconstruct the desired HR output images;

- We introduce a new multipath scheme to effectively boost the feature representation of the HR image. The multipath scheme uses two types of layers, a deconvolution layer and an upsampling layer, to reconstruct high-quality HR image features;

- Conventional deep CNN methods use a batch normalization layer and a max-pooling layer followed by the ReLU activation function; our approach removes both the batch normalization and max-pooling layers to reduce the computational burden of the model, and replaces the conventional ReLU activation function with the leaky ReLU activation function to efficiently avoid the vanishing gradient problem during training.

2. Related Work

Single image super-resolution is a key technique for estimating the mapping between low-resolution and high-resolution images. Recently, image super-resolution (SR) has attracted remarkable attention from the research community. The main goal of image super-resolution is to reconstruct a high-quality, or high-resolution, output image with better perceptual quality and refined details from a given low-quality or low-resolution input image. Image super-resolution is also referred to as upscaling, upsampling, interpolation, enlargement, or zooming. Moreover, image super-resolution plays a dynamic role in digital image processing, machine learning, and computer vision applications, such as security surveillance videos for face recognition [58], object detection and segmentation in different scenes [59], especially for small objects [60], astronomical images [61], medical imaging [14], forensics [62], and remote sensing images [63].

2.1. Deep Learning-Based Image SR

The rapid development of deep convolutional neural networks has led to a breakthrough, and researchers have introduced various image super-resolution methods based on them. The pioneering deep learning work on image SR is SRCNN, presented by Dong et al. [23]. The SRCNN [23] architecture consists of three convolutional layers: a feature extraction layer, a non-linear mapping layer, and a reconstruction layer. SRCNN [23] takes a bicubic-upsampled version of the image as input, which introduces new noise into the model and adds extra computational cost. To address this issue and improve both the speed and the perceptual quality of the reconstructed image, the same authors proposed the fast super-resolution convolutional neural network (FSRCNN) [24]. The FSRCNN [24] architecture is very simple and consists of a feature extraction layer, a shrinking layer, non-linear mapping layers, an expanding layer, and a deconvolution layer. FSRCNN [24] does not use any interpolation as a pre-processing step. Shi et al. proposed a fast super-resolution approach that can process images and videos in real time, known as the efficient sub-pixel convolutional neural network (ESPCN) [25]. Conventional image SR approaches upscale the LR image to the HR size with bicubic interpolation as a pre-processing step and learn the super-resolution model in HR space, which decreases computational efficiency. ESPCN [25] instead extracts features in LR space and uses a sub-pixel convolution layer at the final stage to reconstruct the HR image. ESPCN provides competitive results compared with earlier approaches. SRCNN, FSRCNN, and ESPCN are all relatively shallow network architectures.
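The sub-pixel convolution layer ends with a periodic shuffle: a convolution in LR space produces r² channels, which are then rearranged into one map that is r times larger in each direction. A minimal single-output-channel sketch in plain Python; the channel ordering is an assumption matching the convention of common framework implementations, and the function name is illustrative.

```python
def pixel_shuffle(channels, r):
    """Rearrange r*r low-resolution maps (each H x W) into one rH x rW map.

    Output pixel (y, x) is taken from channel (y % r) * r + (x % r)
    at low-resolution position (y // r, x // r).
    """
    if len(channels) != r * r:
        raise ValueError("expected r * r input channels")
    h, w = len(channels[0]), len(channels[0][0])
    return [
        [channels[(y % r) * r + (x % r)][y // r][x // r] for x in range(w * r)]
        for y in range(h * r)
    ]


# Four 1 x 1 LR maps become one 2 x 2 HR patch.
print(pixel_shuffle([[[1]], [[2]], [[3]], [[4]]], 2))  # [[1, 2], [3, 4]]
```

All learned computation happens before the shuffle, on the small LR grid; the shuffle itself only moves values.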

Following the VGG-net architecture, Kim et al. [26] proposed VDSR, which uses a fixed 3 × 3 kernel in all 20 CNN layers and enlarges the receptive field by increasing the network depth. VDSR uses global residual learning to ease the training of the network. Although VDSR [26] has achieved great success, it extracts only single-scale features and ignores the information contained in features at different scales. The deeply-recursive convolutional network (DRCN) [27] applies a deep CNN recursively, sharing parameters across the depth of the network. The pyramid-based deep Laplacian pyramid super-resolution network (LapSRN) [28] uses three sub-networks that progressively predict the image up to an enlargement factor of 8×. LapSRN uses three types of layers: convolution layers, leaky ReLU [53] layers, and deconvolution layers. The deep recursive residual network (DRRN) [64] recursively builds two residual blocks and handles the pre-processing problem caused by interpolation. Zhang et al. [42] introduced a feed-forward denoising convolutional neural network, known as DnCNN, which is similar to the SRCNN architecture and stacks convolutional layers, each followed by batch normalization and ReLU layers. Although the model reported favorable results, its performance depends on the accuracy of noise estimation, and it is computationally expensive because of the batch normalization layer after every CNN layer.
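How depth enlarges the receptive field can be checked with the standard recurrence for stacked convolutions. A minimal sketch assuming a plain stack of identical stride-1 layers without pooling, as in VDSR's 20 layers of 3 × 3 kernels; the function name is illustrative.

```python
def receptive_field(num_layers, kernel_size=3, stride=1):
    """Receptive field of a plain stack of identical conv layers (no pooling)."""
    rf, jump = 1, 1
    for _ in range(num_layers):
        # Each layer widens the field by (k - 1) input steps of the current size.
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf


print(receptive_field(20))  # 41, so each output pixel sees a 41 x 41 input region
```

With stride 1, every extra 3 × 3 layer adds two pixels, so depth is the only lever for context in such a network.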

2.2. Residual Skip Connection Based Image SR

Lim et al. introduced deeper and wider network architectures known as the enhanced deep SR network (EDSR) [29] and the multi-scale deep SR network (MDSR) [29]. These deep SISR networks improve performance simply by stacking many blocks. Ahn et al. proposed a lightweight architecture known as the cascading residual network (CARN) [54]. The basic building block of CARN [54] is a cascading residual block, in which the output of each intermediate layer is passed on to the subsequent CNN layers.

Ledig et al. [65] proposed the super-resolution residual network (SRResNet), a deep residual network for image super-resolution built from 16 residual blocks. They adopted the generator of the super-resolution generative adversarial network (SRGAN) as the model structure and used residual connections between layers. Musunuri et al. [66] introduced a deep residual dense network for single image super-resolution, abbreviated as DRDN. The architecture combines residual and dense blocks with skip connections. The authors also evaluate quality with additional metrics, such as the perception-based image quality evaluator (PIQE) and the universal image quality index (UIQI).
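UIQI combines correlation, mean (luminance) similarity, and contrast similarity into one score in [-1, 1]. A minimal sketch of the Wang–Bovik formula Q = 4·σxy·μx·μy / ((σx² + σy²)(μx² + μy²)) computed over a single global window; the original index is usually computed in sliding windows and averaged, which this sketch omits. PIQE, a no-reference metric, is not shown.

```python
def uiqi(x, y):
    """Universal image quality index over one global window.

    x, y: equal-length flat lists of pixel values. Returns a value in [-1, 1];
    1 means the two signals are identical.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length signals with at least 2 values")
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    denom = (vx + vy) * (mx ** 2 + my ** 2)
    if denom == 0:
        raise ValueError("index undefined for constant or all-zero signals")
    return 4 * cxy * mx * my / denom


print(round(uiqi([1, 2, 3, 4], [2, 3, 4, 5]), 3))  # a mean shift lowers the score below 1
```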

2.3. Multi-Branch Based Image SR

In contrast to linear or single-path architectures with skip connections, multi-branch image SR architectures obtain different features at multiple scales. The resulting multi-path or multi-scale information is then combined to reconstruct the HR image. The cascaded multi-scale cross network (CMSC) is composed of three stages: a feature extraction stage, a cascaded subnet stage, and a reconstruction network stage. Ren et al. proposed the context-wise network fusion (CNF) model [67], which combines SRCNN networks with different numbers of layers. The output of each SRCNN is passed through a single convolution layer, and the outputs are finally fused by a sum-pooling operation.

The information distillation network (IDN), proposed by Hui et al. [68], uses three blocks: a feature extraction block, multiple stacked information distillation blocks, and a reconstruction block. Inspired by GoogLeNet [57], Muhammad et al. [69] presented an inception-based multi-path approach to reconstruct the HR image. In this approach, the authors used ResNet blocks and replaced standard convolution with asymmetric convolution to reduce the computational complexity of the model. In recent years, attention mechanism-based models have achieved attractive performance in various tasks, such as image reconstruction [2], natural language processing, and image super-resolution [23,24,25,26,27]. Following the CARN [54] architecture, Zhang et al. proposed the residual channel attention network (RCAN) [31]. In this framework, the authors used a residual in residual (RIR) structure, which consists of several residual groups with long and short skip connections.
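The saving from asymmetric convolution follows from counting weights: a k × k kernel is replaced by a 1 × k kernel followed by a k × 1 kernel, which together still cover a k × k region. A minimal sketch assuming 64 input and output channels and the same channel count between the two asymmetric layers; these sizes are illustrative and are not taken from [69].

```python
def conv_weights(kh, kw, c_in, c_out):
    """Weights (no bias) of a kh x kw convolution."""
    return kh * kw * c_in * c_out


def asymmetric_weights(k, c_in, c_out):
    """A 1 x k conv followed by a k x 1 conv, keeping c_out channels between them."""
    return conv_weights(1, k, c_in, c_out) + conv_weights(k, 1, c_out, c_out)


c = 64
print(conv_weights(3, 3, c, c), asymmetric_weights(3, c, c))  # 36864 24576
```

The ratio is 2k / k², so the saving grows with kernel size: one third for 3 × 3 and over half for 7 × 7.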

Anwar et al. designed a densely residual Laplacian attention network (DRLN) for image super-resolution [70]. More recently, Zha et al. proposed a lightweight dense connected approach with attention for single image super-resolution (LDCASR) [71] to remove redundant and useless information in dense network architectures. Furthermore, the authors used a recursive dense group built from dense attention blocks to extract detailed features for reconstructing the HR image. DenseNet-based architectures have also contributed to image super-resolution, especially SRDenseNet [72], whose authors claim that skip connections mitigate the vanishing gradient problem and boost training performance.

MemNet, a persistent memory network for image SR, was proposed by Tai et al. [73]. Like SRCNN, the MemNet architecture is divided into three stages. The first stage extracts feature information from the original input image. The second stage stacks memory blocks connected in series. The final stage is a recursive stage similar to a ResNet-type architecture. MemNet uses the mean squared error (MSE) as its loss function and six memory blocks in total. Xin et al. used a recurrent neural network-type architecture to propose a deep recurrent fusion network for SISR with large factors, known as DRFN [74]. It consists of three parts: joint feature extraction and upsampling, recurrent mapping of the image in the high-resolution feature space, and multi-level fusion reconstruction. For training, DRFN used the same training dataset as VDSR [26], with data augmentation by rotation and flipping. The iterative kernel correction (IKC) method for single image super-resolution, proposed by Gu et al. [75], consists of a super-resolution model, a predictor model, and a corrector model. In this approach, the authors used principal component analysis to reduce the dimensionality of the kernel. Jin et al. proposed a multi-level feature fusion recursive network, abbreviated as MFFRnet [76], for single image super-resolution without pre-processing the image to any scale. The MFFRnet [76] architecture relies on four basic building blocks: coarse feature extraction, recursive feature extraction, multi-level feature fusion, and reconstruction. Liu et al. [77] presented HCNN, which stacks different shallow network architectures. In this architecture, three functional networks are used for edge extraction, edge reinforcement, and image reconstruction. The edge extraction branch consists of 11 CNN layers with 32 kernels of size 3 × 3. The edge reinforcement network uses 5 CNN layers with 32 kernels of size 3 × 3. The final branch, image reconstruction, has 20 CNN layers with 64 kernels of size 3 × 3.
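Rotation-and-flip augmentation of the kind used for DRFN can be sketched as the eight variants produced by four 90° rotations, each with and without a horizontal flip. The exact augmentation settings of [74] are not given here; this common 8-variant scheme is an assumption, and the helper names are illustrative. For SR training pairs, the same transform must be applied to the LR patch and its HR counterpart.

```python
def rotate90(image):
    """Rotate a 2-D list clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]


def hflip(image):
    """Mirror a 2-D list left to right."""
    return [row[::-1] for row in image]


def dihedral_augment(image):
    """Return the 8 rotated/flipped variants of an image (4 rotations x 2 flips)."""
    variants = []
    current = image
    for _ in range(4):
        variants.append(current)
        variants.append(hflip(current))
        current = rotate90(current)
    return variants


for v in dihedral_augment([[1, 2], [3, 4]]):
    print(v)
```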

Lin et al. proposed a fast and accurate image SR method known as split-concate-residual super-resolution (SCRSR) [78]. In this approach, the authors used 58 layers to increase the receptive field significantly, because a larger receptive field captures more image detail. The overall architecture is divided into four parts: an input CNN layer, a downsampling sub-network, an upsampling sub-network, and an output CNN layer. Qiu et al. proposed the multiple improved residual network, abbreviated as MIRN [79], for single image super-resolution. They designed multiple improved residual blocks, eight in total, together with upsampling blocks. MIRN [79] is trained with the stochastic gradient descent (SGD) algorithm with an adjustable learning rate. Inspired by these methods, especially ResNet block-based architectures, we remove the BN layers from the ResNet architecture and replace the ReLU activation function with the Leaky ReLU activation function, which can reduce training time and avoid the vanishing-gradient problem during training. Additionally, we remove the max-pooling layers from the inception block to efficiently extract high-level features and improve both the reconstruction and the visual quality of the HR image.

3. Proposed Method

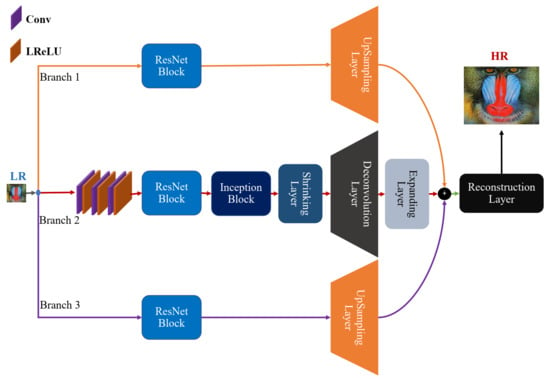

In this section, we describe the motivation and design methodology of the proposed model architecture. Earlier deep learning-based models rely on a single or linear path and stack CNN layers one after another to create deeper networks. Traditional ResNet and Inception blocks increase the computational cost and reduce the perceptual quality of the reconstructed SR image. A single-path architecture is simple to design, but it discards useful information, such as image edges and other high-frequency content. Additionally, batch normalization and max-pooling layers are not the best option for image super-resolution. To solve these problems, we propose a three-branch network architecture (Branch1, Branch2, and Branch3) to enhance the feature information, named the multi-path deep CNN with residual inception network for single image super-resolution (MCISIR), as shown in Figure 1.

Figure 1.

The proposed network architecture of our method with three parallel paths or branches.

3.1. Architecture Overview

The main purpose of single image super-resolution is to predict the HR image $I^{HR}$ from the corresponding LR image $I^{LR}$. Suppose $I^{LR}$ is the low-resolution image and $s$ is the upsampling factor used to reconstruct the HR image $I^{HR}$. The HR and LR images form a pair with $C$ color channels, represented as tensors of size $sH \times sW \times C$ and $H \times W \times C$, respectively. To reconstruct the HR output image, we use a multi-path deep CNN with residual and inception network for single image super-resolution to learn the mapping between the LR and HR images.

The overall network architecture is presented in Figure 1. Branch 1 (HR) and Branch 3 (HR) use only the ResNet block with an upsampling layer. Branch 2 (HR) uses three basic CNN layers to extract the initial low-level features. These low-level features are fed to the ResNet blocks, followed by the Inception block. For upscaling, shrinking and expanding layers are placed before and after the deconvolution layer to further reduce the number of model parameters. In our proposed architecture, we remove the batch normalization layer from the ResNet block to reduce graphics processing unit (GPU) memory consumption, replace the ReLU activation function with leaky ReLU to avoid the vanishing gradient problem, and remove the max-pooling layer from the inception block for better HR image reconstruction. Finally, the outputs of the three branches are concatenated and passed through a reconstruction layer to generate the HR output image.

3.2. Feature Extraction

Following the principle in [80], we use three CNN layers with 3 × 3 kernels and 64 channels, each followed by leaky ReLU [53], to extract the feature maps of the main branch (Branch2). The feature maps of these three CNN layers pass through the ResNet and Inception blocks to generate multi-scale hierarchical features.
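The parameter budget of this three-layer feature extractor can be counted directly. The number of input channels is not specified in this section, so the sketch below shows both an RGB input (3 channels) and a luminance-only input (1 channel) as assumptions; Leaky ReLU has no learnable parameters and adds nothing to the count.

```python
def conv_params(k, c_in, c_out):
    """Weights plus biases of a k x k convolution layer."""
    return k * k * c_in * c_out + c_out


def feature_extractor_params(in_channels, width=64, depth=3, k=3):
    """Parameters of `depth` stacked k x k conv layers with `width` filters each."""
    total = conv_params(k, in_channels, width)       # first layer reads the image
    total += (depth - 1) * conv_params(k, width, width)  # remaining 64 -> 64 layers
    return total


print(feature_extractor_params(3))  # 75648 (assumed RGB input)
print(feature_extractor_params(1))  # 74496 (assumed luminance-only input)
```

Almost all of the parameters sit in the two 64-to-64 layers, so the choice of input format barely changes the total.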

3.3. Residual Learning Paths

Earlier approaches used global residual learning paths with a single CNN layer with a kernel of 5 × 5 or larger to extract low-level features. A single CNN layer with such a large kernel is not suitable for low-level feature extraction and increases the computational cost of the model. To overcome this problem, we use small 3 × 3 kernels followed by upsampling and deconvolution layers to upscale the LR image. This upsampling strategy improves both the accuracy and the computational efficiency of the model in terms of the number of parameters.
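One standard way to see the benefit of small kernels is the VGG-style comparison: two stacked stride-1 3 × 3 layers cover the same 5 × 5 region as one 5 × 5 layer, with fewer weights and an extra non-linearity in between. A minimal sketch assuming 64 channels in and out of every layer; the channel count is illustrative.

```python
def stacked_weights(k, layers, channels):
    """Weights (no bias) of `layers` stacked k x k convs with a fixed channel count."""
    return layers * k * k * channels * channels


c = 64
one_5x5 = stacked_weights(5, 1, c)
two_3x3 = stacked_weights(3, 2, c)
print(one_5x5, two_3x3)  # 102400 73728
```

In general, two 3 × 3 layers need 18C² weights against 25C² for one 5 × 5 layer, roughly a 28% saving.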

3.3.1. ResNet Block

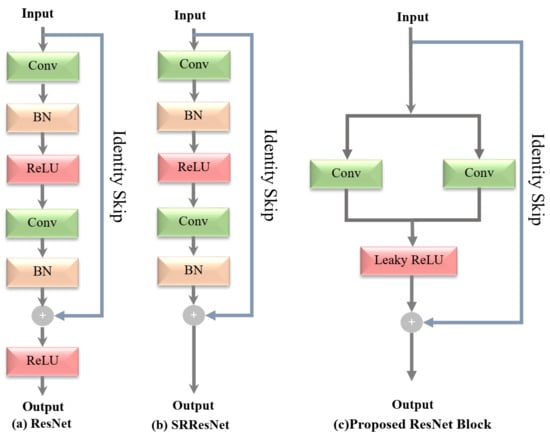

Residual learning [55] is an effective way to increase computational efficiency and ease training. He et al. [55] proposed the ResNet architecture with residual learning for image classification. In [26], Kim et al. proposed a global skip connection to predict the residual image. In Figure 2, we compare the building blocks of the original ResNet [55], SRResNet [65], and our proposed ResNet block. The original ResNet block, shown in Figure 2a, uses two convolution layers with batch normalization and applies a ReLU activation after the element-wise addition.

Figure 2.

Comparison of the residual blocks in ResNet, SRResNet, and our proposed ResNet block.

The SRResNet [65] block is a modified version of the original ResNet block that removes the ReLU activation after the element-wise addition, as shown in Figure 2b. For better performance and numerical stability of SR training, we propose a new ResNet block design that removes both BN layers, as proposed by Nah et al. [81], to provide a clean path, because the BN layer is not suitable for the SR task and consumes more memory. Furthermore, in the proposed block, the input is split into two branches that pass through two convolution layers in parallel. The sum of the two convolution outputs is followed by one shared Leaky ReLU activation, as shown in Figure 2c. Leaky ReLU [53] gives a better response than ReLU because it keeps a small, fixed, non-zero slope for negative inputs instead of setting them to zero, so units keep receiving gradients during training; the variant with a learnable slope is the parametric ReLU (PReLU).
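The data flow of the block in Figure 2c can be sketched on a single-channel feature map in plain Python. This is a hypothetical illustration, not the authors' implementation: the text does not state where the identity skip is added, so adding it after the Leaky ReLU is an assumption, as is the negative slope of 0.2; both branches are assumed to see the same input.

```python
def leaky_relu(x, negative_slope=0.2):
    """Leaky ReLU with a fixed (not learned) slope for negative inputs."""
    return x if x >= 0 else negative_slope * x


def conv2d_same(image, kernel):
    """Single-channel 2-D cross-correlation with zero 'same' padding (odd kernel)."""
    h, w, k = len(image), len(image[0]), len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    yy, xx = y + i - pad, x + j - pad
                    if 0 <= yy < h and 0 <= xx < w:  # zero padding outside
                        acc += kernel[i][j] * image[yy][xx]
            out[y][x] = acc
    return out


def proposed_res_block(x, kernel_a, kernel_b, negative_slope=0.2):
    """Hypothetical sketch: two parallel convs (no BN), element-wise sum,
    one shared Leaky ReLU, then an identity skip (placement assumed)."""
    a = conv2d_same(x, kernel_a)
    b = conv2d_same(x, kernel_b)
    h, w = len(x), len(x[0])
    return [
        [x[y][c] + leaky_relu(a[y][c] + b[y][c], negative_slope) for c in range(w)]
        for y in range(h)
    ]


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
print(proposed_res_block([[1.0, -1.0], [2.0, 0.0]], identity, identity))
```

With identity kernels, each output equals x + LeakyReLU(2x), which makes the effect of the non-zero negative slope easy to see.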

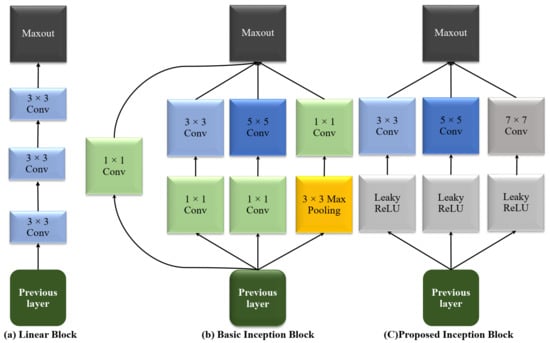

3.3.2. Inception Block

GoogLeNet won the ILSVRC 2014 competition, and its main objective was to achieve high accuracy with reduced computational cost [57]. It introduced the inception block, which incorporates multi-scale convolutional transformations using the split, transform, and merge idea. The block consists of parallel convolutional branches with different kernel sizes, whose outputs are concatenated, which widens the network and fuses the multi-scale information.

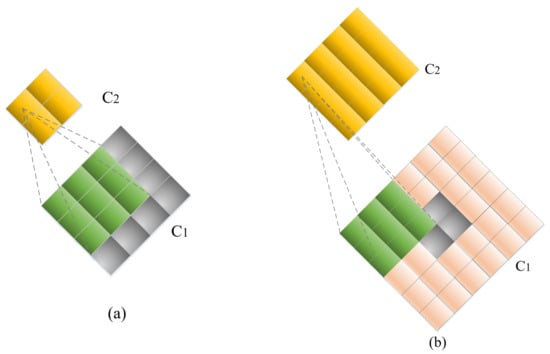

In image SR, most earlier approaches used a single kernel size to extract features for reconstructing the HR image. However, a single kernel size cannot restore the information completely. Our proposed block is inspired by the GoogLeNet [57] architecture and extracts feature information with different kernel sizes to capture better content and structure information from the image. The inception block of our proposed architecture does not contain a max-pooling layer, because max-pooling reduces the network's ability to learn detailed information, which makes it unsuitable for image super-resolution. Figure 3a and Figure 3b show, respectively, a plain network that stacks CNN layers in a single path and a conventional inception block that extracts multi-scale feature information. The drawback of such blocks is that they contain more parameters, which makes the model more computationally expensive. Furthermore, the conventional inception block uses max-pooling, which considers only the maximum element in each pooling area and ignores the information in the other elements; therefore, we remove the max-pooling layer from our proposed block, as shown in Figure 3c. The proposed block consists of several filters of different sizes that extract features from the previous layer's output. Our proposed inception block uses three kernel sizes, 3 × 3, 5 × 5, and 7 × 7, each followed by LReLU. The outputs of these branches are then merged in a concatenation layer, which increases the efficiency of the block.

Figure 3.

Comparison of different types of blocks used for feature extraction: (a) linear or single-path block, (b) Inception-based multipath block, and (c) our proposed multipath Inception block.
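Concatenating the 3 × 3, 5 × 5, and 7 × 7 branches only works if every branch keeps the same spatial size, which "same" padding of k // 2 guarantees for odd kernels at stride 1. The sketch below checks that and counts the resulting channels and weights; the 64 input channels, 64 filters per branch, and 32 × 32 map size are assumptions for illustration, not values stated for the proposed block.

```python
def same_padding(k):
    """Zero padding that keeps the spatial size for an odd k x k, stride-1 conv."""
    return k // 2


def output_size(n, k, pad, stride=1):
    """Spatial output size of a convolution along one axis."""
    return (n + 2 * pad - k) // stride + 1


def inception_summary(in_channels, branch_channels, kernels=(3, 5, 7), size=32):
    """Output shape and weight count of parallel k x k branches joined by concatenation."""
    sizes = {output_size(size, k, same_padding(k)) for k in kernels}
    if sizes != {size}:
        raise ValueError("branches must keep the same spatial size to be concatenated")
    out_channels = branch_channels * len(kernels)  # concatenation stacks channels
    weights = sum(k * k * in_channels * branch_channels for k in kernels)
    return (size, size, out_channels), weights


print(inception_summary(64, 64))  # ((32, 32, 192), 339968)
```

The 7 × 7 branch alone accounts for 49 of the 83 kernel positions, which is why large-kernel branches dominate the cost of such blocks.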

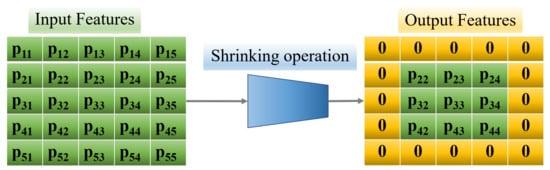

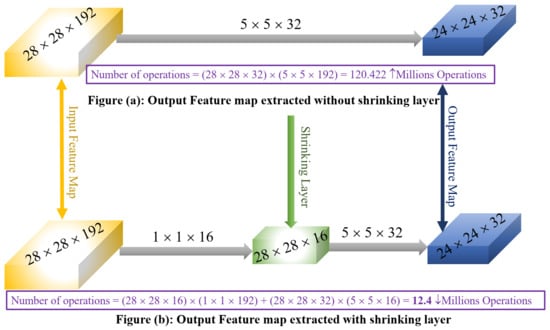

3.3.3. Shrinking Layer

Feeding a large number of feature maps directly into the deconvolution layer significantly increases the computational cost and the size of the model. To keep the model compact and improve computational efficiency, we use a bottleneck, or shrinking, layer, which is a convolution layer with a 1 × 1 kernel [82]. Figure 4 shows the basic operation of the shrinking layer, which reduces the dimension of the extracted feature maps. In Figure 4, the input feature map is of size 5 × 5 and the new output feature map is of size 3 × 3, with zeros simply inserted outside the boundary. To analyze the computational complexity, Figure 5 shows two two-layer networks, one without and one with a shrinking layer. Because of the shrinking layer, the network in Figure 5b requires far fewer operations (counted in millions) than the network in Figure 5a.

Figure 4.

Demonstration of the shrinking layer operation (zeros are inserted outside the boundary).

Figure 5.

Demonstration of the number of operations with and without the shrinking layer.
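The operation counts behind Figure 5 are not recoverable here, so the sketch below uses its own assumed sizes (a 32 × 32 LR feature map, a 9 × 9 deconvolution kernel, and ×2 upscaling) together with the 64-to-4 channel reduction described for the expanding layer, and counts multiply-accumulate operations. The numbers illustrate the trend only and are not the values reported in Figure 5. The saving comes from the fact that a 1 × 1 convolution changes the number of channels that the expensive deconvolution has to process.

```python
def conv_mults(h, w, k, c_in, c_out):
    """Multiply-accumulate count of a k x k (de)convolution over an h x w input grid."""
    return h * w * k * k * c_in * c_out


h = w = 32    # assumed LR feature-map size
k_deconv = 9  # assumed deconvolution kernel size
scale = 2     # assumed upscale factor

# (a) Feed all 64 feature maps straight into the deconvolution layer.
direct = conv_mults(h, w, k_deconv, 64, 64)

# (b) Shrink 64 -> 4 with a 1 x 1 conv, deconvolve the 4 maps,
#     then expand 4 -> 64 with a 1 x 1 conv on the upscaled grid.
shrink = conv_mults(h, w, 1, 64, 4)
deconv = conv_mults(h, w, k_deconv, 4, 4)
expand = conv_mults(h * scale, w * scale, 1, 4, 64)
bottleneck = shrink + deconv + expand

print(direct, bottleneck)
```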

3.3.4. Deconvolution Layer

The deconvolution layer is also called the transposed convolution layer. Its main purpose is to upscale LR image features into HR image features. Figure 6 shows how the deconvolution layer works. In the deconvolution operation, an input feature map of size 2 × 2 with a 3 × 3 kernel produces a 4 × 4 output. In the convolution operation, an input of size 4 × 4 with a 3 × 3 kernel produces a 2 × 2 output. Dark grey represents the input and yellow represents the reconstructed output.

Figure 6.

Demonstration of two-dimensional (a) deconvolution and (b) convolution operations.

Furthermore, earlier deep convolutional neural network-based super-resolution approaches, such as SRCNN [23], VDSR [26], REDNet [83], DRCN [27], and DRRN [64], used interpolation to upscale the input LR image to HR size before processing. These architectures extract feature information from the interpolated image, which introduces new noise into the model, limits performance, and increases computational cost. Therefore, recent works [24,69,84] use deconvolution layers to learn the upscaling filters while efficiently extracting detailed features from the LR image. We place the deconvolution layer at the end of the network because our entire feature extraction process is performed in the LR space.
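The cost argument for working in LR space is simple arithmetic: a convolution applied after bicubic pre-upsampling by a factor s runs over s² times as many pixels. The sketch below assumes a hypothetical 32 × 32 LR patch and a 3 × 3, 64-to-64-channel layer; only the ratio matters, and it does not depend on those choices.

```python
def layer_macs(height, width, kernel=3, in_ch=64, out_ch=64):
    """MACs for one stride-1, 'same'-padded convolution layer (hypothetical 64->64, 3x3)."""
    return height * width * kernel * kernel * in_ch * out_ch


lr_h, lr_w = 32, 32   # hypothetical LR patch size
for scale in (2, 4, 8):
    hr_cost = layer_macs(lr_h * scale, lr_w * scale)   # layer applied to the pre-upsampled image
    lr_cost = layer_macs(lr_h, lr_w)                   # same layer applied in LR space
    print(f"{scale}x: HR-space layer costs {hr_cost // lr_cost}x the LR-space layer")
```

At the 8× factor studied here, every layer moved into LR space is 64 times cheaper, which is why the upscaling step is deferred to the end of the network.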

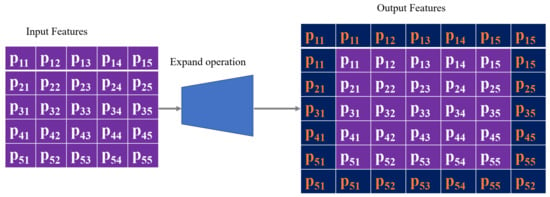

3.3.5. Expanding Layer

The expanding layer performs the inverse operation of the shrinking layer, and it greatly improves the reconstruction quality of the HR image: reconstructing the HR image directly from the LR features yields poor restoration quality. In our network, the shrinking layer reduces the 64-channel input to 4 feature maps for upsampling. After upsampling, we recover the original 64 feature maps from the 4-channel input using an expanding layer with a 1 × 1 kernel, followed by a leaky ReLU to add nonlinearity. As shown in Figure 7, a 5 × 5 input feature map passes through the 1 × 1 expanding convolution to produce a 7 × 7 output feature map, obtained simply by padding nearest-neighbor pixels outside the boundaries.
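A 1 × 1 convolution is an independent linear map across channels at every pixel. The sketch below shows only the channel expansion (4 → 64) followed by a leaky ReLU, at unchanged spatial size; the boundary padding drawn in Figure 7 is not modelled. The random weights and the negative slope of 0.2 are hypothetical choices, since the slope used in the paper is not stated.

```python
import random


def conv1x1(features, weights, bias):
    """1x1 convolution: the same C_in -> C_out linear map applied at every pixel.

    features: H x W x C_in nested lists; weights: C_in x C_out; bias: length C_out.
    """
    c_out = len(bias)
    return [[[bias[o] + sum(px[c] * weights[c][o] for c in range(len(px)))
              for o in range(c_out)]
             for px in row]
            for row in features]


def leaky_relu(features, alpha=0.2):
    """Element-wise leaky ReLU: x if x > 0 else alpha * x (alpha is a hypothetical choice)."""
    return [[[v if v > 0 else alpha * v for v in px] for px in row] for row in features]


random.seed(0)
H, W, C_IN, C_OUT = 5, 5, 4, 64   # 4 upsampled channels expanded back to 64
x = [[[random.uniform(-1, 1) for _ in range(C_IN)] for _ in range(W)] for _ in range(H)]
w = [[random.gauss(0, 0.1) for _ in range(C_OUT)] for _ in range(C_IN)]
b = [0.0] * C_OUT

y = leaky_relu(conv1x1(x, w, b))
print(len(y), len(y[0]), len(y[0][0]))   # 5 5 64
```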

Figure 7.

Demonstration of the expanding layer operation (nearest-neighbor pixels of the original input are padded outside the boundary).

3.3.6. Upsampling Layer

To improve computational efficiency and reduce memory consumption, we use a weight-free upsampling layer followed by LReLU activation. This layer upscales the features extracted by the ResNet blocks in Branch 1 and Branch 3, and its upsampling size depends on the scale factor.
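Because the upsampling layer has no trainable weights, its behaviour is fully determined by the scale factor and the interpolation rule. The sketch assumes nearest-neighbour interpolation (the default of Keras's `UpSampling2D`); the text does not state which interpolation the paper uses.

```python
def upsample_nearest(image, scale):
    """Weight-free nearest-neighbour upsampling: each pixel becomes a scale x scale block."""
    return [[row[j // scale] for j in range(len(row) * scale)]
            for row in image
            for _ in range(scale)]


lr = [[1, 2],
      [3, 4]]
for row in upsample_nearest(lr, 2):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

For the 8× setting, `scale=8` turns every LR pixel into an 8 × 8 block; the subsequent convolutional layers are then responsible for refining those blocks.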

3.4. Concatenation Layer

Earlier approaches [23,26] use only a single path to extract feature information for reconstructing the HR output image. Such architectures are simple, but they cannot extract feature information completely; as a result, the final layers suffer and, in some cases, behave as dead layers. To resolve these problems, we extract feature information along different routes (branches) and concatenate the results via a concatenation layer.
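Concatenation stacks the branch outputs along the channel axis, so all branches must share the same spatial size after upscaling. The sketch below uses hypothetical channel counts and constant-valued maps so the channel order is easy to see; in Keras the equivalent layer is `Concatenate(axis=-1)`.

```python
def const_map(h, w, c, value):
    """Hypothetical helper: an h x w feature map with c channels, all equal to value."""
    return [[[value] * c for _ in range(w)] for _ in range(h)]


def concat_channels(*branches):
    """Concatenate H x W x C_i feature maps along the channel (last) axis."""
    h, w = len(branches[0]), len(branches[0][0])
    if any(len(b) != h or len(b[0]) != w for b in branches):
        raise ValueError("all branches must have the same spatial size")
    return [[[c for b in branches for c in b[i][j]] for j in range(w)] for i in range(h)]


# Hypothetical channel counts for the three branch outputs.
branch1 = const_map(4, 4, 2, 1.0)
branch2 = const_map(4, 4, 3, 2.0)
branch3 = const_map(4, 4, 1, 3.0)

fused = concat_channels(branch1, branch2, branch3)
print(len(fused), len(fused[0]), len(fused[0][0]))   # 4 4 6
print(fused[0][0])                                    # [1.0, 1.0, 2.0, 2.0, 2.0, 3.0]
```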

3.5. Reconstruction Layer

In our proposed model, the resulting feature maps are used to reconstruct the high-resolution image via a reconstruction layer, which is a standard convolution layer with a 3 × 3 kernel.

4. Experiments

4.1. Training and Testing Datasets

For training, we combine two color-image datasets: 200 images from BSD200 [85] and 91 images from Yang et al. [43]. The data are split into 80% for training and 20% for testing using a k-fold cross-validation approach. To enlarge and diversify the training data, we apply data augmentation techniques such as flipping, rotation, and cropping. To create the training and testing datasets, we used the built-in Keras 2.5 function “image_dataset_from_directory”, with crop_size, upscale_factor, input_size, and batch_size as the main settings. We then rescale the images to the range [0, 1].
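The augmentation and rescaling steps can be sketched in pure Python as follows. The paper does not list its exact set of flips and rotations or its crop size, so the eight flip/rotation variants and the centre crop below are assumptions; an actual pipeline would apply equivalent TensorFlow image operations to the tensors produced by `image_dataset_from_directory`.

```python
def rotate90(img):
    """Rotate a 2-D list of pixels 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2-D list of pixels left to right."""
    return [row[::-1] for row in img]


def dihedral_variants(img):
    """The 8 combinations of 0/90/180/270-degree rotation with and without a horizontal flip."""
    variants, cur = [], img
    for _ in range(4):
        variants.extend([cur, hflip(cur)])
        cur = rotate90(cur)
    return variants


def center_crop(img, size):
    """Crop a size x size window from the centre of a 2-D list."""
    top = (len(img) - size) // 2
    left = (len(img[0]) - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def rescale(img, max_value=255.0):
    """Map 8-bit pixel values to the range [0, 1]."""
    return [[v / max_value for v in row] for row in img]


patch = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
variants = dihedral_variants(patch)
print(len(variants))   # 8
```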

To increase training efficiency, we convert images from the RGB color space to the YUV color space. For the low-resolution input, we crop each image, retrieve its Y (luminance) channel, and resize it using bicubic interpolation from Pillow, the Python imaging library. Our model is trained only on the luminance channel of the YUV color space, because humans are more sensitive to changes in luminance. During training, we also used callbacks to monitor the training process, including early stopping with a patience of 10. In the testing phase, we use five standard publicly available test datasets: Set5 [86], Set14 [87], BSDS100 [88], Urban100 [89], and Manga109 [90], which contain 5, 14, 100, 100, and 109 images, respectively. Each of these benchmarks has its own characteristics. Set5 [86], Set14 [87], and BSDS100 [88] contain natural scenes. Urban100 [89] contains challenging images with details in a variety of frequency bands. Finally, Manga109 [90] consists of Japanese comic (manga) images, a multimodal form of artwork.
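The luminance channel used for training is a fixed weighted sum of R, G, and B. The sketch below uses the ITU-R BT.601 luma weights, which are also the Y row of TensorFlow's `tf.image.rgb_to_yuv`; the text does not say which conversion routine the paper used, so treat this as an illustrative assumption.

```python
def rgb_to_y(pixel):
    """Luma (Y) of an RGB triple using ITU-R BT.601 weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def y_channel(image):
    """Extract the Y channel from an H x W image whose pixels are RGB triples."""
    return [[rgb_to_y(px) for px in row] for row in image]


image = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (128, 128, 128)]]
y = y_channel(image)
print([[round(v, 2) for v in row] for row in y])
```

Green dominates the weighting, which reflects the eye's higher sensitivity to it; the U and V chrominance channels are discarded for training and, in typical Y-only SR pipelines, upscaled separately with bicubic interpolation at inference.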

4.2. Implementation Details

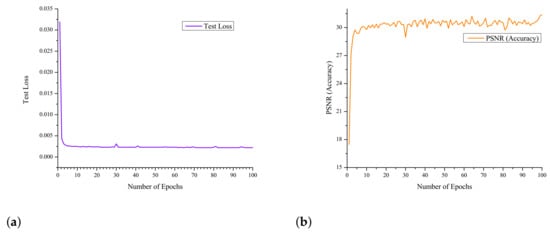

The LR images are generated with a bicubic kernel at the challenging enlargement scale factors 4× and 8×. To train the deep CNN, we used the Adam optimizer [91] rather than stochastic gradient descent (SGD), because SGD is extremely time-consuming. The initial learning rate is set to be . The experiments were run on the Windows 10 operating system using Keras, TensorFlow, and OpenCV, with CUDA 10.2, Python 3.7, and an NVIDIA GeForce RTX 2070 GPU. During training, the test loss initially decreased rapidly and then more gradually as the number of epochs increased, as shown in Figure 8a. Figure 8b shows that the test accuracy increased as training progressed. We observe that better results can be obtained by increasing the number of epochs and allowing a longer training time.
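For readers unfamiliar with Adam, the sketch below implements the standard Adam update on a toy one-dimensional objective, f(x) = (x − 3)², rather than the SR loss. The learning rate of 0.1 is chosen only for this toy problem; the paper's initial learning rate is not recoverable from the text and is not assumed here.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=100):
    """Minimise a 1-D function with the standard Adam update rule; returns the iterate path."""
    x, m, v = x0, 0.0, 0.0
    history = [x]
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients (first moment)
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients (second moment)
        m_hat = m / (1 - beta1 ** t)           # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
        history.append(x)
    return history


def f(x):
    """Toy objective, not the super-resolution loss."""
    return (x - 3.0) ** 2


path = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(f"x after 1 step: {path[1]:.4f}, after 100 steps: {path[-1]:.4f}")
```

Because Adam divides by a running estimate of the gradient magnitude, the first step has size close to the learning rate regardless of the gradient's scale. In Keras, the same optimizer is `tf.keras.optimizers.Adam`, and the early stopping described in Section 4.1 corresponds to the `tf.keras.callbacks.EarlyStopping` callback with `patience=10`; the monitored quantity is not stated in the text.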

Figure 8.

Curves of test loss and accuracy versus the number of epochs: (a) test loss and (b) test accuracy as functions of the epoch.

4.3. Comparisons with Current Existing State-of-the-Art Approaches

PSNR and SSIM are the most widely used full-reference quality metrics in image SR because they are directly related to image intensity. We evaluate our proposed model on five publicly available benchmark test datasets at the challenging enlargement factors 4× and 8×. For quantitative comparison, we use thirteen different image super-resolution methods together with the baseline method; their results and ours are shown in Table 1. Our proposed approach achieves better average PSNR/SSIM than the other image SR methods. In particular, on the Set5 dataset at the challenging upscale factor 8×, our model improves the overall PSNR by 1.88, 0.75, 0.90, 0.79, 0.69, 0.53, 0.95, 0.68, 0.35, 0.35, 0.14, 0.10, and 0.12 dB over bicubic, A+, RFL, SelfExSR, SCN, ESPCN, SRCNN, FSRCNN, VDSR, DRCN, LapSRN, DRRN, and MemNet, respectively.
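PSNR is computed from the mean squared error (MSE) as 10·log10(MAX²/MSE). The sketch assumes 8-bit images (MAX = 255) and omits details that benchmark scripts often add, such as evaluating only the Y channel or cropping image borders. It also converts the reported 1.88 dB gain into an MSE ratio to show what such a gain means.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized 2-D images."""
    sq_errors = [(r - e) ** 2
                 for ref_row, est_row in zip(reference, estimate)
                 for r, e in zip(ref_row, est_row)]
    mse = sum(sq_errors) / len(sq_errors)
    if mse == 0:
        return math.inf   # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


ref = [[100, 100], [100, 100]]
est = [[110, 90], [100, 100]]   # MSE = (100 + 100 + 0 + 0) / 4 = 50
print(f"PSNR = {psnr(ref, est):.2f} dB")

# A PSNR gain of d dB means the MSE shrinks by a factor of 10 ** (d / 10).
gain_db = 1.88   # reported 8x gain over bicubic on Set5
print(f"MSE ratio for +{gain_db} dB: {10 ** (gain_db / 10):.3f}")
```

A 1.88 dB gain therefore corresponds to an MSE about 1.54 times lower, that is, roughly 35% less squared error than bicubic interpolation.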

Table 1.

Quantitative PSNR/SSIM comparison of recent image super-resolution methods at the challenging enlargement factors 4× and 8×. The best result is shown in bold red and the second-best result in blue.

The performance of an image super-resolution model also correlates with network depth. As demonstrated by Kim et al. [26], a deeper model outperforms a shallow one because it has more parameters; however, those additional parameters also increase the computational cost. Table 2 compares existing image SR algorithms in terms of the number of filters, network depth (number of layers), number of parameters, and type of loss function. Owing to its multi-branch approach, our method uses significantly fewer parameters and a shallower network than VDSR, DRCN, LapSRN, and MemNet with the same number of filters. In this approach, we combine a ResNet block with an Inception block and use leaky ReLU activations, which greatly reduces the computational cost in terms of model parameters.

Table 2.

Comparison of existing well-known image super-resolution methods in terms of network parameters, number of layers (depth), number of filters, and type of loss function.

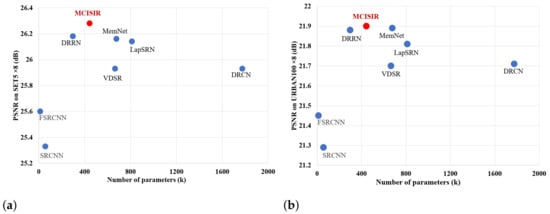

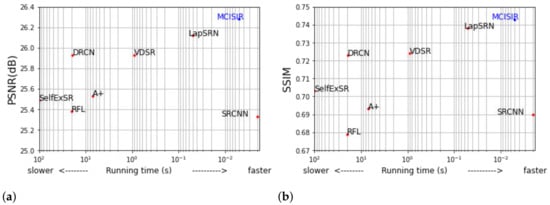

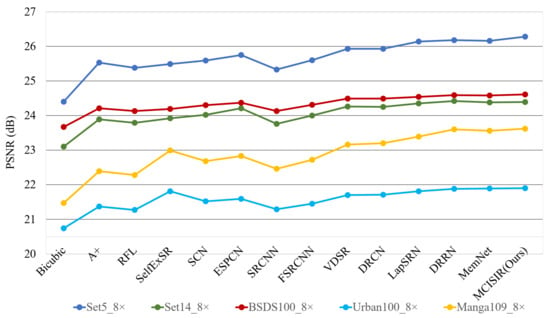

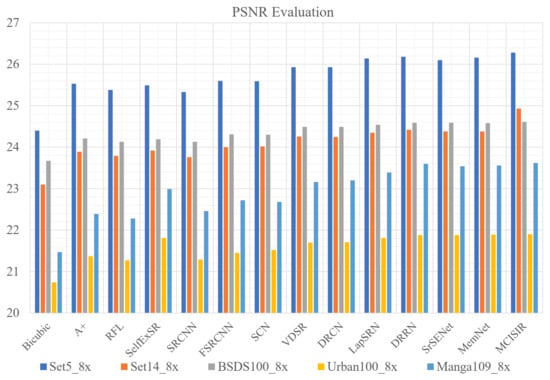

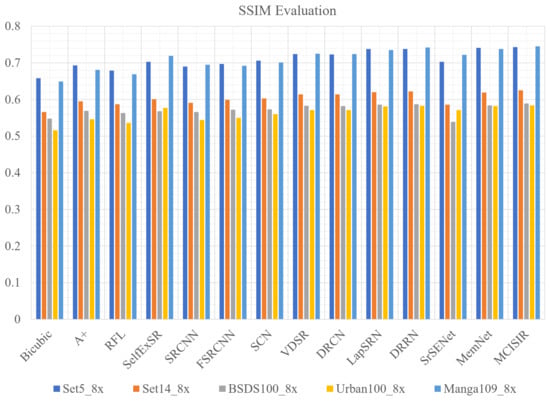

Figure 9 depicts the trade-off between model performance (PSNR) and model size (number of parameters) on the Set5 and Urban100 datasets at the challenging 8× enlargement factor. These quantitative evaluations show that our model outperforms current state-of-the-art approaches. For example, at scale factor 8×, our MCISIR achieves much better performance than VDSR, MemNet, LapSRN, and DRCN while using 33%, 35%, 45%, and 62% fewer network parameters, respectively. Figure 10a,b compares the run time of existing state-of-the-art methods against PSNR and SSIM, respectively, on the Set5 dataset. The average PSNR/SSIM of our proposed method is significantly higher, and its processing time is close to that of the fastest methods. Figures 11–13 show that our proposed model achieves better PSNR/SSIM on all public test datasets at the challenging scale factor 8×.

Figure 9.

Performance comparison in terms of PSNR versus number of network parameters on the (a) Set5 and (b) Urban100 test datasets at enlargement factor 8×.

Figure 10.

Performance comparison in terms of execution time versus (a) PSNR and (b) SSIM on the Set5 dataset at enlargement scale factor 8×.

Figure 11.

Peak signal-to-noise ratio of different algorithms at enlargement scale factor 8×.

Figure 12.

PSNR of different algorithms on all publicly available test datasets at enlargement scale factor 8×.

Figure 13.

SSIM of different algorithms on all publicly available test datasets at enlargement scale factor 8×.

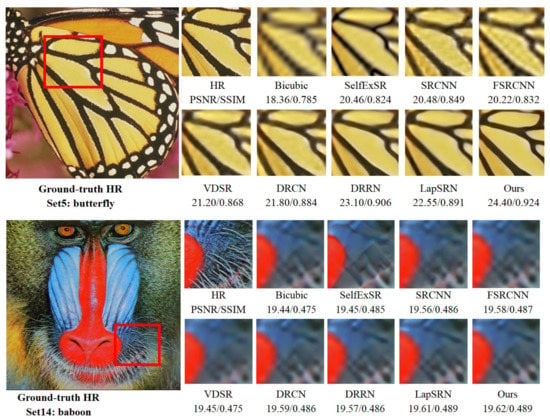

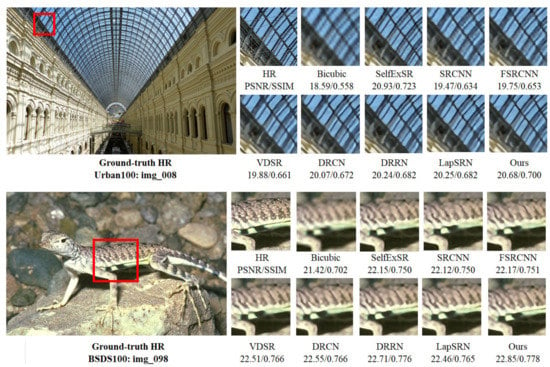

Figures 14 and 15 further compare the perceptual quality of our proposed model with that of recent state-of-the-art methods. Figure 14 shows the visual results of different approaches on the butterfly and baboon images from the publicly available Set5 and Set14 datasets at enlargement factor 8×. The upscaled region of each image, where fine texture is expected, is marked by a red rectangle. Bicubic interpolation fails to resolve the texture of the hair in the baboon's beard and produces a highly blurred output. VDSR, DRCN, DRRN, and LapSRN produce better textures than the baseline method, but their results are still largely blurry. Our proposed model reconstructs the texture details around the baboon's beard hair. In comparison, our method reduces edge-bending effects and efficiently reconstructs high-frequency details, which we attribute to the multi-path network architecture used to reconstruct the HR image. These results verify the superior performance of our MCISIR, especially for fine texture details in the reconstructed image patch. Similarly, we evaluate the perceptual quality of reconstructed images on two other challenging datasets, Urban100 and BSDS100: image_100 is taken from Urban100 and image_098 from BSDS100. For the lizard image (image_098) in Figure 15, our proposed method reconstructs a better patch than the bicubic, SelfExSR, SRCNN, and FSRCNN methods. The VDSR, DRCN, DRRN, and LapSRN reconstructions are fairly acceptable, but our method reproduces the lizard's stripes more strongly than the others.

Figure 14.

Visual quality comparison of image SR methods at 8× on the Set5 and Set14 datasets.

Figure 15.

Visual quality comparison of image SR methods at 4× on the Urban100 and BSDS100 datasets.

5. Conclusions and Future Work

In this paper, we proposed a novel deep learning-based CNN model, a multi-path deep CNN with residual and Inception networks for single image super-resolution. Our network predicts the super-resolved image through three branches. Branches 1 and 3 pass the original input LR image through a ResNet block and upscale the resulting features with an upsampling layer. Branch 2 passes the original input image through a ResNet block without batch normalization and an Inception block without a max-pooling layer, and upscales the result with a deconvolution layer. The branch outputs are finally combined to reconstruct a high-resolution image. This multi-branch alternative to a deeper network is intended to further reduce computational complexity and to avoid the vanishing gradient problem during training. The experimental results show that our proposed model achieves better reconstruction performance with fewer parameters than other state-of-the-art deep learning-based image super-resolution algorithms.

Although our model obtains promising results when reconstructing HR images at the enlargement scale factor 8×, it still has limitations in computational cost, speed, and visual perception. To address these limitations, in future work we will apply lightweight convolution operations, such as octave convolution and grouped convolution, within the ResNet block and Inception module, to further reduce computational cost and improve the perceptual quality of the super-resolved images.

Author Contributions

Conceptualization, W.M. and Z.B.; methodology, W.M. and Z.B.; investigation, A.A. and R.K.; writing—original draft preparation, W.M., Z.B. and A.H.; writing—review and editing, S.A.R.S., M.L.M. and I.T.; supervision, W.M., Z.B. and S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Research Fund of the Balochistan University of Engineering and Technology, Khuzdar, Pakistan.

Acknowledgments

The authors extend their gratitude to the anonymous reviewers for their valuable and constructive comments, which helped us to improve the quality of our manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| BN | Batch normalization |
| CARN | Cascading residual network |
| CMSC | Cascading multi-scale cross network |
| CNN | Convolutional neural network |
| CUDA | Compute unified device architecture |
| dB | Decibels |
| DnCNN | Denoising convolutional neural network |
| DRCN | Deeply recursive convolutional network |
| DRDN | Deeply residual dense network |
| DRFN | Deep recurrent fusion network |
| DRLN | Densely residual Laplacian network |
| DRRN | Deep recursive residual network |
| EDSR | Enhanced deep super resolution |
| ESPCNN | Efficient sub-pixel convolutional neural network |
| FSRCNN | Fast super-resolution convolutional neural network |
| GPU | Graphics processing unit |
| HCNN | Hierarchical convolutional neural network |
| IDN | Information distillation network |
| IKC | Iterative kernel correction |
| ILSVRC | ImageNet large scale visual recognition challenge |
| LapSRN | Laplacian pyramid super-resolution network |
| LDCASR | Lightweight dense connected approach with attention to single image super-resolution |
| LR | Low-resolution |
| LReLU | Leaky ReLU |
| MDSR | Multi-scale deep SR |
| MemNet | Memory network |
| MFFRnet | Multi-level feature fusion recursive network |
| MIRN | Multiple improved residual networks |
| MSE | Mean squared error |
| PIQE | Perception-based image quality evaluation |
| PSNR | Peak signal-to-noise ratio |
| RCAN | Residual channel attention networks |
| RED-Net | Residual encoding–decoding convolutional neural network |
| ReLU | Rectified linear unit |
| RIR | Residual in residual |
| SCRSR | Split-concate-residual super resolution |
| SGD | Stochastic gradient descent |
| SISR | Single image super-resolution |
| SRCNN | Super-resolution convolutional neural network |
| SRGAN | Super-resolution generative adversarial network |
| SRDenseNet | Super-resolution dense network |
| SSIM | Structural similarity index measure |
| UIQI | Universal image quality index |
| VDSR | Very deep super resolution |
| VGG-Net | Visual geometry group net architecture |
| ZSSR | Zero-shot SR |

References

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 14821–14831.

- Zhang, L.; Zhang, H.; Shen, H.; Li, P. A super-resolution reconstruction algorithm for surveillance images. Signal Process. 2010, 90, 619–626.

- Onie, S.; Li, X.; Liang, M.; Sowmya, A.; Larsen, M.E. The use of closed-circuit television and video in suicide prevention: Narrative review and future directions. JMIR Ment. Health 2021, 8, e27663.

- Hazra, D.; Byun, Y.-C. Upsampling real-time, low-resolution CCTV videos using generative adversarial networks. Electronics 2020, 9, 1312.

- Alom, M.Z.; Hasan, M.; Yakopcic, C.; Taha, T.M.; Asari, V.K. Inception recurrent convolutional neural network for object recognition. Mach. Vis. Appl. 2021, 32, 1–14.

- Hou, Q.; Cheng, M.; Hu, X.; Borji, A.; Tu, Z.; Torr, P. Deeply supervised salient object detection with short connections. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Thornton, M.W.; Atkinson, P.M.; Holland, D.A. Sub-pixel mapping of rural land cover objects from fine spatial resolution satellite sensor imagery using super-resolution pixel-swapping. Int. J. Remote Sens. 2006, 27, 473–491.

- Jiang, K.; Wang, Z.; Yi, P.; Jiang, J.; Xiao, J.; Yao, Y. Deep Distillation Recursive Network for Remote Sensing Imagery Super-Resolution. Remote Sens. 2018, 10, 1700.

- Jiang, K.; Wang, Z.; Yi, P. A progressively enhanced network for video satellite imagery superresolution. IEEE Signal Process. Lett. 2018, 25, 1630–1634.

- Jiang, K.; Wang, Z.; Yi, P.; Wang, G.; Lu, T.; Jiang, J. Edge-enhanced GAN for remote sensing image superresolution. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5799–5812.

- Gallardo, N.; Gamez, N.; Rad, P.; Jamshidi, M. Autonomous decision making for a driver-less car. In Proceedings of the IEEE 12th System of Systems Engineering Conference (SoSE), Waikoloa, HI, USA, 18–21 June 2017; pp. 1–6.

- Luo, W.; Zhang, Y.; Feizi, A.; Göröcs, Z.; Ozcan, A. Pixel super-resolution using wavelength scanning. Light Sci. Appl. 2016, 5, e16060.

- Zhou, F.; Yang, W.; Liao, Q. Interpolation-based image super-resolution using multisurface fitting. IEEE Trans. Image Process. 2012, 21, 3312–3318.

- Greenspan, H. Super-resolution in medical imaging. Comput. J. 2009, 52, 43–63.

- Dudczyk, J. A method of feature selection in the aspect of specific identification of radar signals. Bull. Pol. Acad. Sci. Tech. Sci. 2017, 65, 113–119.

- Zhang, L.; Wu, X. An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 2006, 15, 2226–2238.

- Aràndiga, F. A nonlinear algorithm for monotone piecewise bicubic interpolation. Appl. Math. Comput. 2016, 272, 100–113.

- Fattal, R. Image upsampling via imposed edge statistics. ACM Trans. Graph. 2007, 26, 95-es.

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Zhang, K.; Gao, X.; Tao, D.; Li, D. Single Image Super-Resolution With Non-Local Means and Steering Kernel Regression. IEEE Trans. Image Process. 2012, 21, 4544–4556.

- Stark, H.; Oskoui, P. High-resolution image recovery from image-plane arrays, using convex projections. J. Opt. Soc. Am. A 1989, 6, 1715–1726.

- Yang, X.; Zhang, Y.; Zhou, D.; Yang, R. An improved iterative back projection algorithm based on ringing artifacts suppression. Neurocomputing 2015, 162, 171–179.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 391–407.

- Shi, W.; Caballero, J.; Huszar, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Kim, J.; Lee, J.K.; Lee, K.M. Deeply-recursive convolutional network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Lai, W.; Huang, J.; Ahuja, N.; Yang, M. Deep laplacian pyramid networks for fast and accurate super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 294–310.

- Sun, J.; Xu, Z.; Shum, H.Y. Image super-resolution using gradient profile prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008.

- Wang, H.; Gao, X.; Zhang, K.; Li, J. Single image super-resolution using Gaussian process regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.

- Timofte, R.; De Smet, V.; Van Gool, L. Anchored neighborhood regression for fast example-based super-resolution. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013.

- Timofte, R.; De Smet, V.; Van Gool, L. A+: Adjusted anchored neighborhood regression for fast super-resolution. In Proceedings of the Asian Conference on Computer Vision (ACCV), Singapore, 1–5 November 2014; pp. 111–126.

- Ni, K.S.; Nguyen, T.Q. Image super-resolution using support vector regression. IEEE Trans. Image Process. 2007, 16, 1596–1610.

- Kim, K.I.; Kwon, Y. Single-image super-resolution using sparse regression and natural image prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1127–1133.

- Deng, C.; Xu, J.; Zhang, K.; Tao, D.; Li, X. Similarity constraints-based structured output regression machine: An approach to image super-resolution. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 2472–2485.

- Schulter, S.; Leistner, C.; Bischof, H. Fast and accurate image upscaling with super-resolution forests. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3791–3799.

- Chang, H.; Yeung, D.Y.; Xiong, Y. Super-resolution through neighbor embedding. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Washington, DC, USA, 27 June–2 July 2004.

- Shi, W.; Caballero, J.; Theis, L.; Huszar, F.; Aitken, A.; Ledig, C.; Wang, Z. Is the deconvolution layer the same as a convolutional layer? arXiv 2016, arXiv:1609.07009.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Dong, W.; Zhang, L.; Shi, G.; Li, X. Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 2013, 22, 1620–1630.

- Yang, W.; Tian, Y.; Zhou, F.; Liao, Q.; Chen, H.; Zheng, C. Consistent coding scheme for single-image super-resolution via independent dictionaries. IEEE Trans. Multimed. 2016, 18, 313–325.

- Li, J.; Gong, W.; Li, W. Dual-sparsity regularized sparse representation for single image super-resolution. Inf. Sci. 2015, 298, 257–273. [Google Scholar] [CrossRef]

- Gong, W.; Tang, Y.; Chen, X.; Qiane, Y.; Weigong, L. Combining edge difference with nonlocal self-similarity constraints for single image super-resolution. Neurocomputing 2017, 249, 157–170. [Google Scholar] [CrossRef]

- Chen, C.L.P.; Liu, L.; Chen, L.; Tang, Y.Y.; Zhou, Y. Weighted couple sparse representation with classified regularization for impulse noise removal. IEEE Trans. Image Process. 2015, 24, 4014–4026. [Google Scholar] [CrossRef]

- Liu, L.; Chen, L.; Chen, C.L.P.; Tang, Y.Y.; Pun, C.M. Weighted Joint Sparse Representation for Removing Mixed Noise in Image. IEEE Trans. Cybern. 2017, 47, 600–611. [Google Scholar] [CrossRef]

- Zhang, Y.; Shi, F.; Cheng, J.; Li, W.; Yap, P.T.; Shen, D. Longitudinally guided guper-resolution of neonatal brain magnetic resonance images. IEEE Trans. Cybern. 2019, 49, 662–674. [Google Scholar] [CrossRef] [PubMed]

- Jiang, J.; Yu, Y.; Tang, J.; Aizawa, M.A.; Aizawa, K. Context-patch face hallucination based on thresholding locality constrained representation and reproducing learning. IEEE Trans. Cybern. 2018, 50, 324–337. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wang, Z.; Liu, D.; Yang, J.; Han, W.; Huang, T. Deep networks for image super-resolution with sparse prior. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 370–378. [Google Scholar]

- Mass, A.L.; Hannum, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013. [Google Scholar]

- Ahn, N.; Kang, B.; Sohn, K.A. Fast, accurate, and lightweight super-resolution with cascading residual network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 252–268. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Fan, Y.; Honghui, S.; Jiahui, Y.; Ding, L.; Wei, H.; Haichao, Y.; Zhangyang, W.; Xinchao, W.; Thomas, S.H. Balanced two-stage residual networks for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 161–168. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Mudunuri, S.P.; Biswas, S. Low resolution face recognition across variations in pose and illumination. IEEE Trans. Pattern Anal. Machine Intell. 2015, 38, 1034–1040. [Google Scholar] [CrossRef] [PubMed]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Machine Intell. 2015, 38, 142–158. [Google Scholar] [CrossRef] [PubMed]

- Bai, Y.; Zhang, Y.; Ding, M.; Ghanem, B. Sod-mtgan: Small object detection via multi-task generative adversarial network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 206–221. [Google Scholar]

- Lobanov, A.P. Resolution limits in astronomical images. arXiv 2005, arXiv:astro-ph/0503225. [Google Scholar]

- Swaminathan, A.; Wu, M.; Liu, K.R. Digital image forensics via intrinsic fingerprints. IEEE Trans. Inf. Forensics Secur. 2008, 1, 101–117. [Google Scholar] [CrossRef] [Green Version]

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 2015. [Google Scholar]

- Tai, Y.; Yang, J.; Liu, X. Image super-resolution via deep recursive residual network. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photorealistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690. [Google Scholar]

- Musunuri, Y.; Kwon, O.S. Deep Residual Dense Network for Single Image Super-Resolution. Electronics 2021, 10, 555. [Google Scholar] [CrossRef]

- Ren, H.; Mostafa, E.; Lee, J. Image super resolution based on fusing multiple convolution neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Hui, Z.; Wang, X.; Gao, X. Fast and accurate single image super-resolution via information distillation network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 723–731.

- Muhammad, W.; Aramvith, S. Multi-scale inception based super-resolution using deep learning approach. Electronics 2019, 8, 892.

- Anwar, S.; Barnes, N. Densely residual Laplacian super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

- Zha, L.; Yang, Y.; Lai, Z.; Zhang, Z.; Wen, J. A Lightweight Dense Connected Approach with Attention on Single Image Super-Resolution. Electronics 2021, 10, 1234.

- Tong, T.; Li, G.; Liu, X.; Gao, Q. Image super-resolution using dense skip connections. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4799–4807.

- Tai, Y.; Yang, J.; Liu, X.; Xu, C. MemNet: A persistent memory network for image restoration. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4539–4547.

- Yang, X.; Mei, H.; Zhang, J.; Xu, K.; Yin, B.; Zhang, Q.; Wei, X. DRFN: Deep recurrent fusion network for single-image super-resolution with large factors. IEEE Trans. Multimed. 2019, 21, 328–337.

- Gu, J.; Lu, H.; Zuo, W.; Dong, C. Blind super-resolution with iterative kernel correction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1604–1613.

- Jin, X.; Xiong, Q.; Xiong, C.; Li, Z.; Gao, Z. Single image superresolution with multi-level feature fusion recursive network. Neurocomputing 2019, 370, 166–173.

- Liu, B.; Boudaoud, D.A. Effective image super resolution via hierarchical convolutional neural network. Neurocomputing 2020, 374, 109–116.

- Lin, D.; Xu, G.; Xu, W.; Wang, Y.; Sun, X.; Fu, K. SCRSR: An efficient recursive convolutional neural network for fast and accurate image super-resolution. Neurocomputing 2020, 398, 399–407.

- Qiu, D.; Zheng, L.; Zhu, J.; Huang, D. Multiple improved residual networks for medical image super-resolution. Future Gener. Comput. Syst. 2021, 116, 200–208.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Nah, S.; Kim, T.H.; Lee, K.M. Deep multi-scale convolutional neural network for dynamic scene deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Mao, X.; Shen, C.; Yang, Y.B. Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. Adv. Neural Inf. Process. Syst. 2016, 29, 2802–2810.

- Noh, H.; Hong, S.; Han, B. Learning deconvolution network for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 898–916.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the 23rd British Machine Vision Conference (BMVC), Surrey, UK, 3–7 September 2012.

- Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In Proceedings of the International Conference on Curves and Surfaces, Avignon, France, 24–30 June 2010.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the 8th IEEE International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001.

- Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

- Matsui, Y.; Ito, K.; Aramaki, Y.; Fujimoto, A.; Ogawa, T.; Yamasaki, T.; Aizawa, K. Sketch-based manga retrieval using Manga109 dataset. Multimed. Tools Appl. 2017, 76, 21811–21838.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

Short Biography of Authors

Wazir Muhammad is a Lecturer in the Electrical Engineering Department, Balochistan University of Engineering and Technology, Khuzdar. He received his doctoral degree from the Department of Electrical Engineering, Chulalongkorn University, Bangkok, Thailand, in 2019. He previously obtained an M.E. degree in Communication Systems and Networks from Mehran University of Engineering and Technology, Jamshoro, Sindh, Pakistan. His research interests lie in electrical engineering, communication systems, neural networks, and machine learning, specifically deep learning for image super-resolution.

Zuhaibuddin Bhutto received a Ph.D. degree in electronic engineering from Dong-A University, South Korea, in 2019. He also received a B.E. degree in software engineering and an M.E. degree in information technology from Mehran University of Engineering and Technology, Pakistan, in 2009 and 2011, respectively. Currently, he is pursuing a Ph.D. degree in electronics engineering at Dong-A University, South Korea. Since 2011, he has been an Assistant Professor in the Department of Computer System Engineering, Balochistan University of Engineering and Technology, Pakistan. His research interests include image processing and MIMO technology, in particular cooperative relaying, adaptive transmission techniques, energy optimization, machine learning, and deep learning.

Arslan Ansari received a B.S. degree in Electrical Engineering from Mehran University of Engineering and Technology, Jamshoro, Pakistan, in 2011, and a Ph.D. degree in Electronic System Engineering from Hanyang University, Ansan, South Korea, in 2016. He worked as a Lecturer at Mehran University of Engineering and Technology in 2010 and is currently an Assistant Professor in the Department of Electronic Engineering at Dawood University of Engineering and Technology, Karachi. His current research interests include multilevel inverters and grid-connected renewable energy systems. Mr. Ansari was a recipient of a scholarship for the Integrated Master's and Ph.D. Program from the Government of Pakistan.

Mudasar Latif Memon received a Ph.D. degree from Sungkyunkwan University, Korea, in 2019. He is currently Vice-Principal (Technical) of IBA Community College Naushahro Feroze, Sukkur Institute of Business Administration University, Pakistan. His research interests include artificial intelligence-based solutions to real-life engineering problems, emerging wireless networks, and healthcare systems. He has published 13 articles in international journals.

Ramesh Kumar received a B.E. degree in Computer Systems Engineering from Mehran University of Engineering and Technology, Jamshoro, Pakistan, in 2005, an M.S. degree in Electronic, Electrical, Control and Instrumentation Engineering from Hanyang University, Ansan, South Korea, in 2009, and a Ph.D. degree in Electronics and Computer Engineering from Hanyang University, Seoul, South Korea, in 2016. He worked as a Lecturer in the Electrical Engineering Department, The University of Faisalabad, Faisalabad, in 2010 and is currently an Associate Professor in the Department of Computer System Engineering at Dawood University of Engineering and Technology, Karachi. His current research interests include 6G communication systems, ultra-massive MIMO, mixed RF/FSO communication channels, and blockchain technology. Mr. Kumar received scholarships from the Higher Education Commission, Pakistan, for both his master's and Ph.D. programs.

Ayaz Hussain received his bachelor's degree from Mehran University of Engineering and Technology, Jamshoro, in 2006, an M.S. in Engineering from Hanyang University, South Korea, in 2010, and a Ph.D. degree from Sungkyunkwan University, South Korea, in 2018. He is a Professor in the Department of Electrical Engineering, Balochistan University of Engineering and Technology, Khuzdar, Pakistan. He is the author of many research articles. His research interests include robust control systems and wireless communication.

Syed Ali Raza Shah is an Associate Professor and Dean of the Faculty of Engineering at Balochistan University of Engineering and Technology, Khuzdar, Pakistan. He earned his B.E. in Mechanical Engineering from Balochistan University of Engineering and Technology, Khuzdar, Pakistan, and his M.E. in Mechanical Engineering from Eastern Mediterranean University, North Cyprus, Turkey. His research interests include quality management, energy management, operational management, sustainable manufacturing, small and medium-sized enterprises (SMEs), and sustainability. He is a professional member of the Pakistan Engineering Council (PEC).

Imdadullah Thaheem received his B.E. in Mechanical Engineering from Quaid-e-Awam University of Engineering, Science and Technology, Nawabshah, in 2010, his M.E. in Energy System Engineering from Mehran University of Engineering and Technology, Jamshoro, in 2015, and his Ph.D. in Energy Science and Engineering from DGIST, South Korea, in 2020. After his Ph.D., he joined the Energy Systems Engineering Department of Balochistan University of Engineering and Technology, Khuzdar, Pakistan, as an Assistant Professor in 2020.

Shamshad Ali is an Assistant Professor at Balochistan University of Engineering and Technology, Khuzdar, Pakistan. He received his Ph.D. from the University of Electronic Science and Technology of China in 2019 and his master's degree from Mehran University of Engineering and Technology, Pakistan, in 2013. He is currently working on highly efficient nitrogen-doped carbon anodes for lithium- and sodium-ion batteries. His research interests include lithium-ion batteries, lithium–sulfur batteries, sodium-ion batteries, wireless sensors, and image processing.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).