1. Introduction

A wide variety of sensors exists for sensing and perceiving the surrounding environment, including cameras, LiDAR, ultrasound, infrared (IR) and thermal cameras, radar, accelerometers, gyroscopes, and the global positioning system (GPS), to name a few. Although individual sensors can extract relevant features of their surroundings, none of them alone captures the rich detail necessary for reliable perception and navigation of autonomous systems [1,2,3,4]. Compared with ultrasound sensors, which provide 1D information, vision-based sensors such as cameras provide more detailed 2D information. However, they perform poorly in limited lighting, such as at night, and in adverse weather such as rain and fog. Furthermore, the spatio-temporal parameters of targets in the field of view (FoV) can only be captured through lengthy computations, which can cause unacceptable delay. Some of these problems, such as night vision and limited lighting, can be solved to some extent by integrating IR cameras with conventional cameras. Machine learning techniques that train models on images for object recognition and classification [5], as well as traffic sign recognition, are well developed. Even so, they are insufficient for fully autonomous and cyber-physical systems operating in varying weather conditions [6]. In addition, a camera does not provide a 3D representation of the environment.

To overcome these problems, LiDAR-based sensing is an appealing approach for acquiring a 3D map of the environment. However, a number of challenges remain, such as reducing the cost, form factor, and weight of the setup. Another is increasing the number of channels for higher resolution while reducing latency when capturing the dynamics of objects in the FoV [1,2]. mmWave radar sensors, on the other hand, are single-chip sensors with high resolution and low power consumption. They also work reliably in adverse weather conditions, but they provide no information on object morphology. Classification of different types of unmanned aerial vehicles (UAVs) using mmWave radars has been proposed in [7]. Machine learning techniques have also been used to categorize activities from radar data. Drone type classification has been proposed in [8,9,10]; however, these methods are computationally expensive. Recently, target classification using range-Doppler plots from a high-density phase-modulated continuous-wave (PMCW) MIMO mmWave radar has been proposed in [11]. However, range-Doppler processing relies on a 2D FFT and is therefore also computationally complex. Target classification by mmWave frequency-modulated continuous-wave (FMCW) radars using machine learning on range-angle images has been proposed in [12]. These range-angle images are created from range profiles obtained with a rotating radar. This approach is computationally less complex than the previously mentioned approaches but requires the radar to rotate. Nevertheless, classifying various types of targets under all weather conditions with low-complexity algorithms remains challenging.

To exploit the advantages of individual sensors, approaches based on sensor fusion have been implemented. For example, the authors of [13,14] fused data from a camera and a LiDAR to represent visual and depth information in a compact form and extracted the features of the objects of interest. The disadvantage of this technique is that it requires calibration and results in large errors if the calibration is not performed well. Similarly, radar-camera fusion has been used for object detection, classification, and capturing the dynamics of the environment [15]. The drawback of sensor fusion techniques is that they are flooded with a huge amount of data. Images, video, and point clouds, for example, all incur additional computational cost.

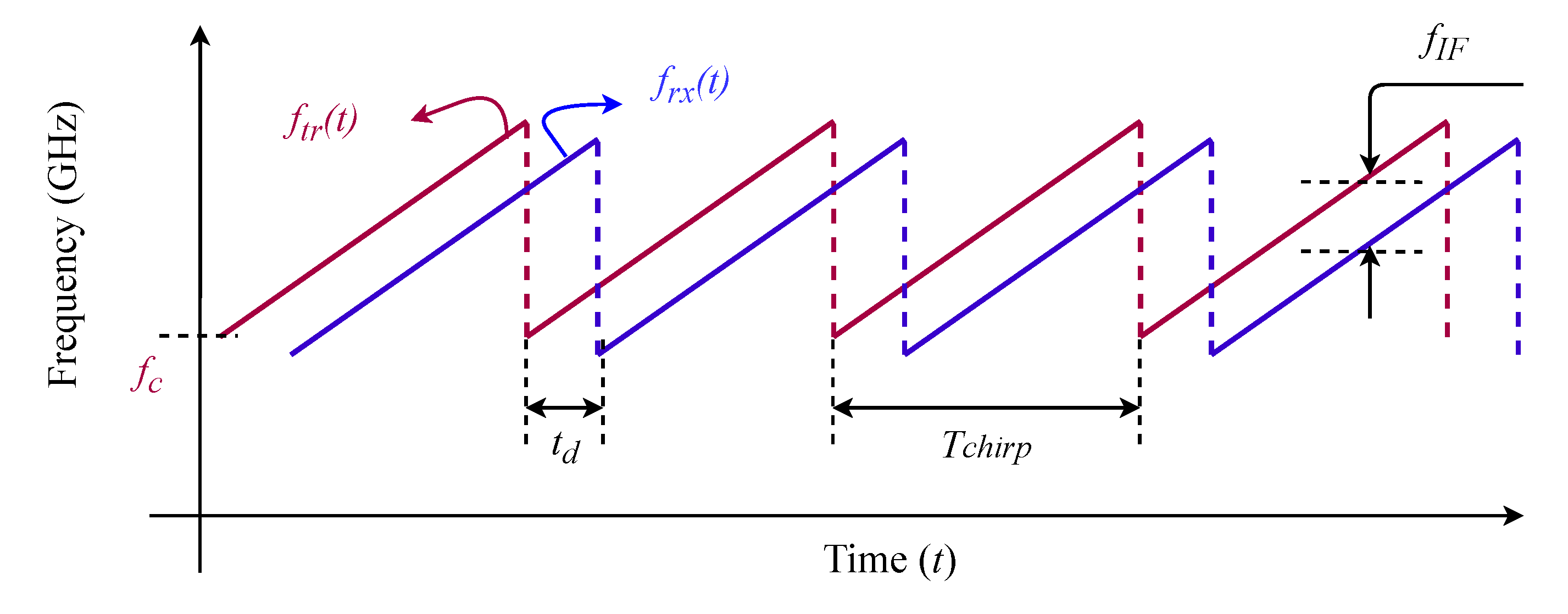

To detect and classify objects despite the limitations of individual sensors, radar has recently been used in autonomous systems, especially radar operating in the millimeter-wave band. This use involves extensive signal processing of its 2D (image) or 3D (point cloud) data. Radars detect object parameters such as radial range, relative velocity, and angle of arrival (AoA). A pulsed radar operates by measuring the delay of the pulse returned from a remote object relative to the transmitted pulse. An FMCW radar, in contrast, transmits a frequency chirp and mixes (beats) the returned signal with the transmitted one. This produces an intermediate frequency (IF) signal that contains information about the range and velocity of the objects. The FoV (beamwidth) of mmWave FMCW radars is adjustable up to 120°, and their maximum range is up to 300 m [16]. Furthermore, multiple radars can be cascaded to achieve a wider FoV.
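
As a minimal sketch of how the IF signal encodes range, the Python code below simulates the beat signal of a single ideal point target and recovers its range from the strongest FFT bin. It assumes an ideal linear chirp with slope S (Hz/s). For such a chirp, a target at range R produces a beat frequency f_IF = 2SR/c, so R = c·f_IF/(2S), and the range bin spacing is c/(2B) for a swept bandwidth B. The chirp slope, ADC sampling rate, number of samples, and target range are hypothetical values chosen for illustration. They are not the radar configuration used in this work, and noise, windowing, and multiple targets are ignored.

```python
import cmath
import math

C = 3e8  # speed of light (m/s), rounded


def beat_signal(range_m, slope_hz_per_s, fs_hz, n_samples):
    """Noise-free complex IF samples of one ideal point target (simplified model)."""
    f_if = 2 * slope_hz_per_s * range_m / C  # beat frequency: f_IF = 2*S*R/c
    return [cmath.exp(2j * math.pi * f_if * n / fs_hz) for n in range(n_samples)]


def dft_magnitude(x):
    """Direct O(N^2) DFT magnitude; a real pipeline would use an FFT routine."""
    n = len(x)
    return [abs(sum(x[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n)))
            for m in range(n)]


def estimate_range(x, slope_hz_per_s, fs_hz):
    """Map the strongest range bin back to distance: R = c * f_IF / (2*S)."""
    mag = dft_magnitude(x)
    peak_bin = max(range(len(mag)), key=mag.__getitem__)
    f_if = peak_bin * fs_hz / len(x)  # bin index -> beat frequency in Hz
    return C * f_if / (2 * slope_hz_per_s)


def range_resolution(bandwidth_hz):
    """Range bin spacing c / (2B) for a sweep of bandwidth B."""
    return C / (2 * bandwidth_hz)


if __name__ == "__main__":
    S, FS, N = 30e12, 10e6, 256  # hypothetical: 30 MHz/us slope, 10 MHz ADC, 256 samples
    est = estimate_range(beat_signal(10.0, S, FS, N), S, FS)
    print(f"estimated range: {est:.3f} m")  # about 9.96 m, within one bin of 10 m
    print(f"bin spacing: {range_resolution(S * N / FS):.4f} m")
```

With these hypothetical parameters the swept bandwidth is 768 MHz, so the range bin spacing is about 0.195 m. The estimate for a 10 m target therefore lands within one bin of the true value.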

Applying the fast Fourier transform (FFT) to the IF signal yields the range profile, and the peaks in the range profile determine the radial ranges of the objects. In addition, time-frequency analysis techniques such as micro-Doppler analysis have been investigated for targets with specific repeating patterns. This, however, increases the signal processing complexity, resulting in unacceptable latency in some application scenarios [17,18,19,20,21,22]. Furthermore, such techniques are limited to static targets [23,24].

Machine learning techniques have recently been investigated with mmWave radar data. Surface classification with millimeter-wave radar has been accomplished using temporal features [25]. In [26], small UAVs and birds were classified using micro-Doppler signatures derived from radar measurements. Deep learning-based classification of mini-UAVs using micro-Doppler spectrograms in cognitive radar has been proposed in [27]. Cadence velocity diagram (CVD) analysis has been proposed for detecting multiple micro-drones in [28]. Convolutional neural networks (CNNs) with merged Doppler images have been proposed in [8] for UAV classification. Micro-Doppler analysis has been used to classify UAVs in the Ka band in [29]. The detection of small UAVs using a radar-based empirical mode decomposition (EMD) algorithm and micro-Doppler signal extraction has been proposed in [30]. Radar-based detection of small UAVs using cyclostationary phase analysis of micro-Doppler parameters has been proposed in [31]. UAV detection using regularized 2D complex-log spectral analysis and micro-Doppler signature subspace reliability analysis has been proposed in [32]. A multilayer perceptron artificial neural network has been proposed for classifying single- and multi-propeller miniature drones in [33]. FMCW radar has been used to classify stationary and moving objects in road environments in [34]. The detection of road users such as pedestrians, cyclists, and cars from a 3D radar cube has been proposed in [35]; it uses a CNN for classification, followed by clustering. A method for classifying human activity with mmWave FMCW radar based on a Euclidean distance softmax layer has been proposed in [36]. Several deep learning-based methods for detecting human activity using radar are summarized in [37].

All of these works, however, use spectrograms or time-frequency representations derived from spectrograms, such as the cepstrogram and CVD, which require additional signal processing. The additional features of the range FFT profile of the IF signal have not been thoroughly investigated. It has been demonstrated in [38] that additional information about the objects can be extracted by using features of the range FFT profile.

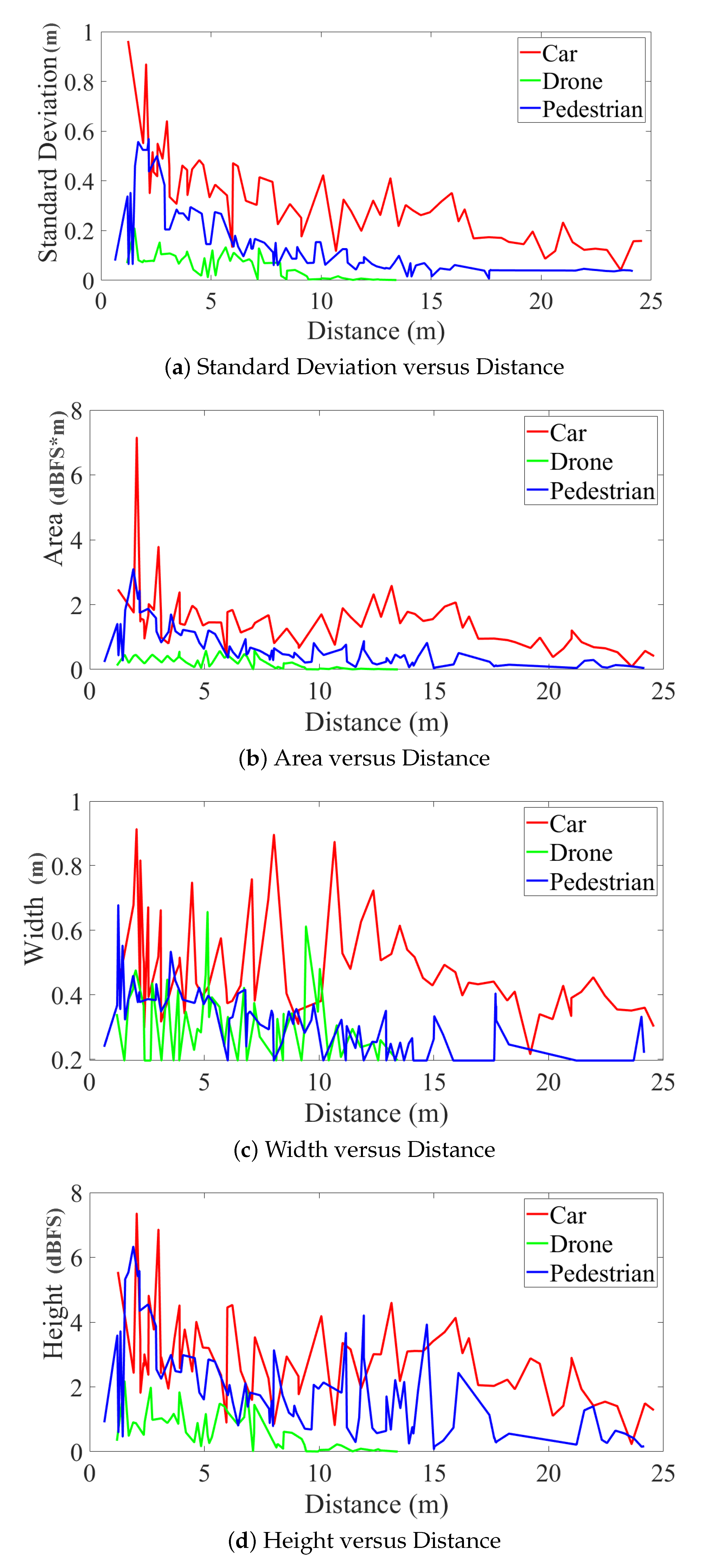

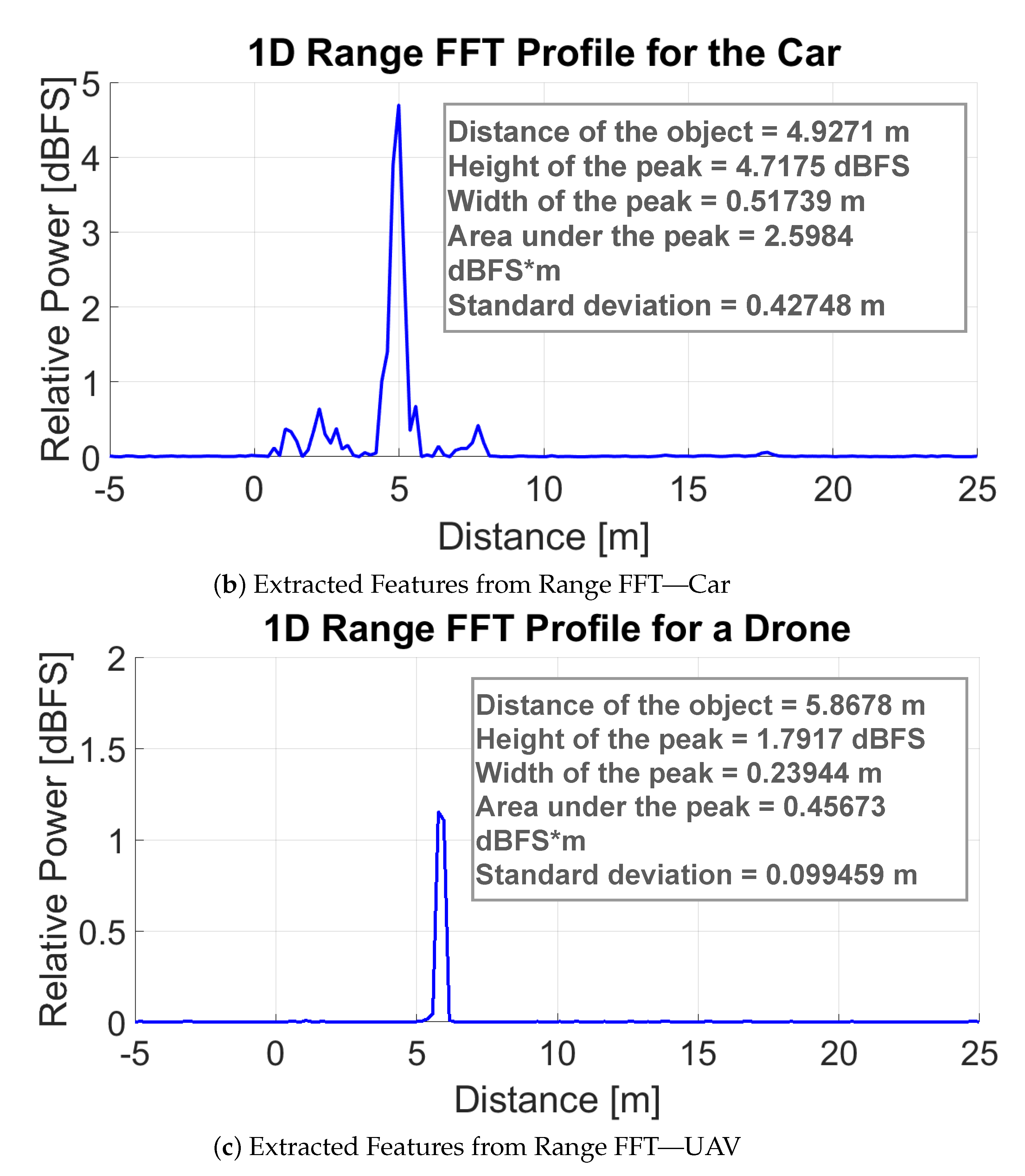

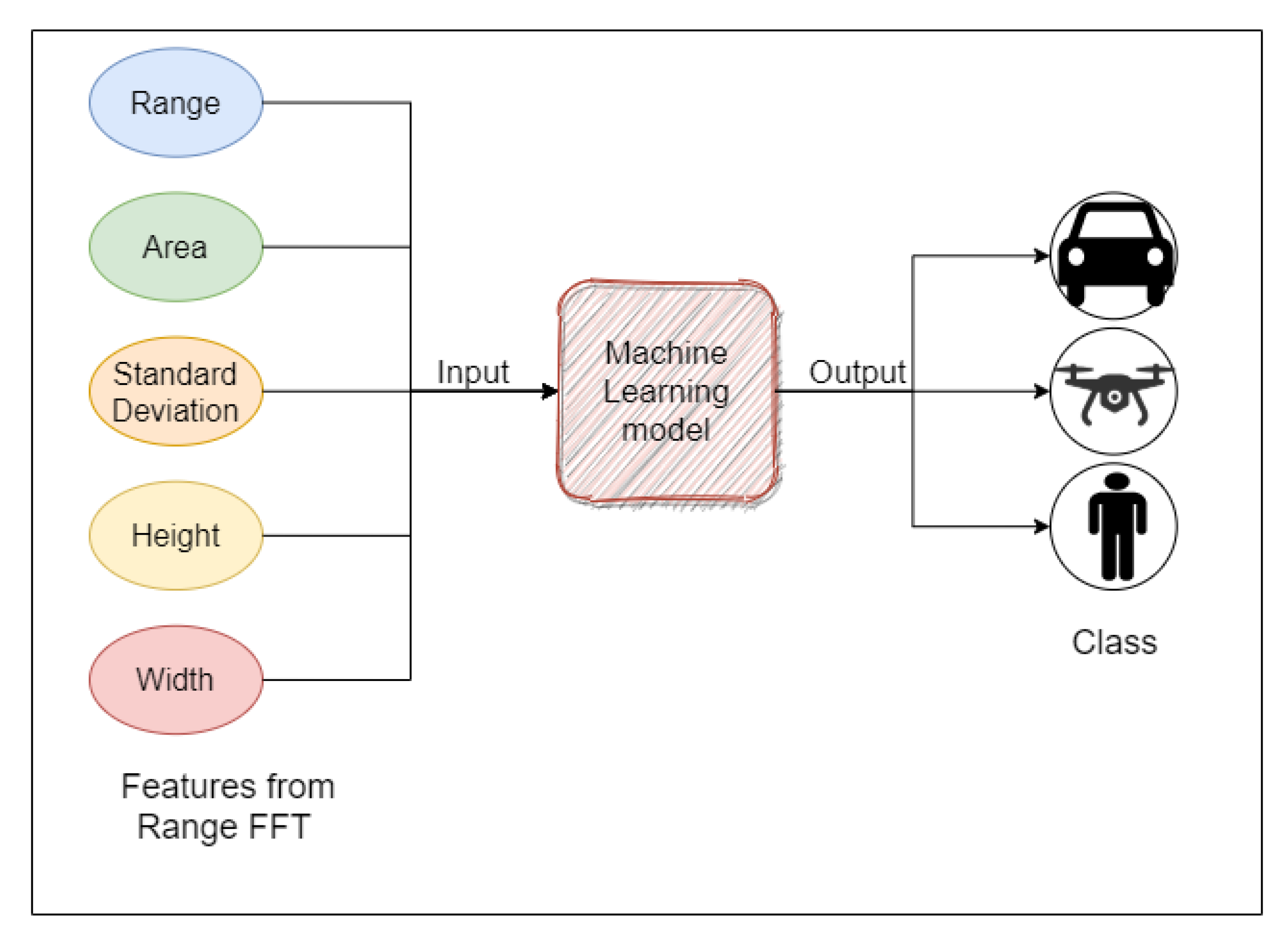

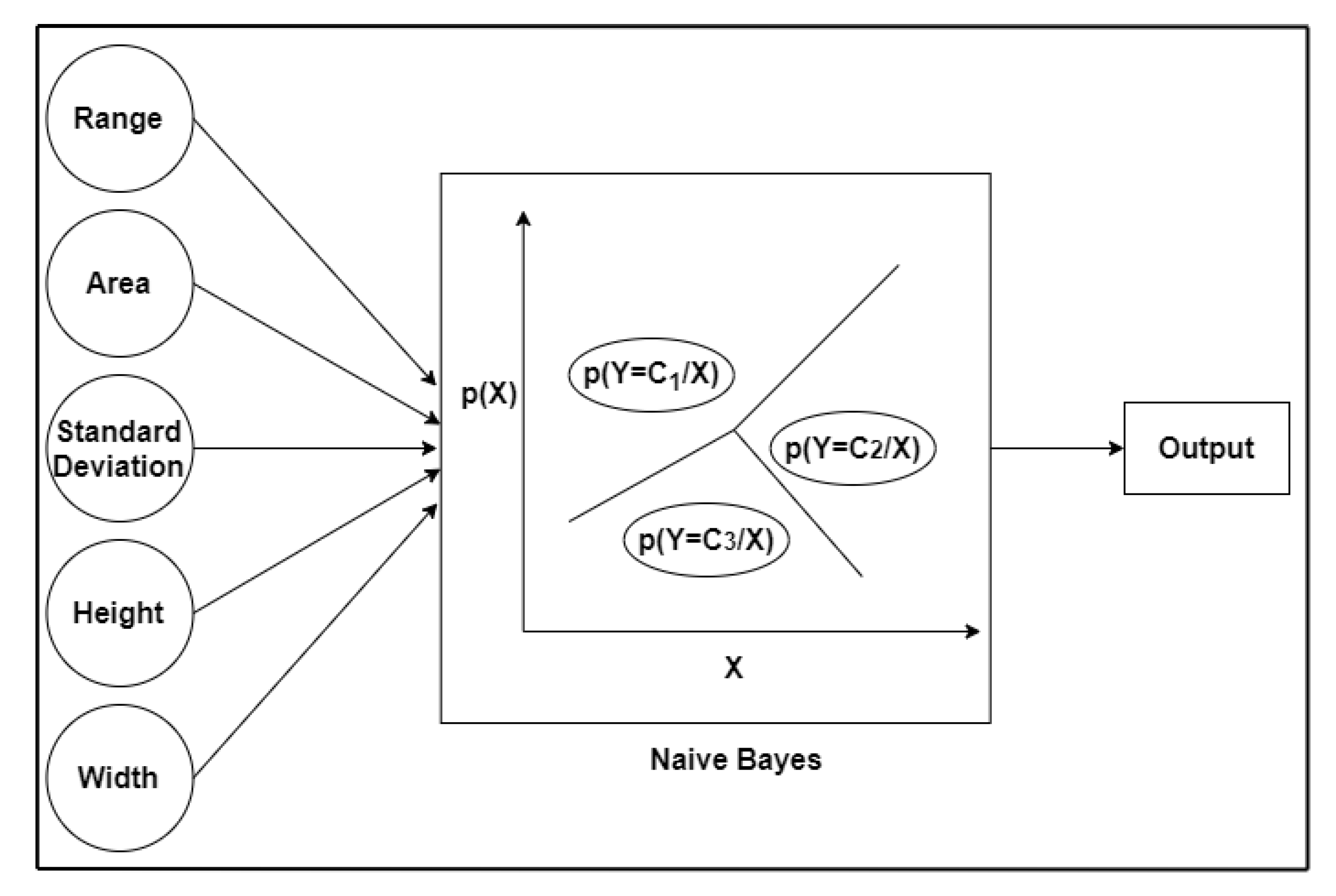

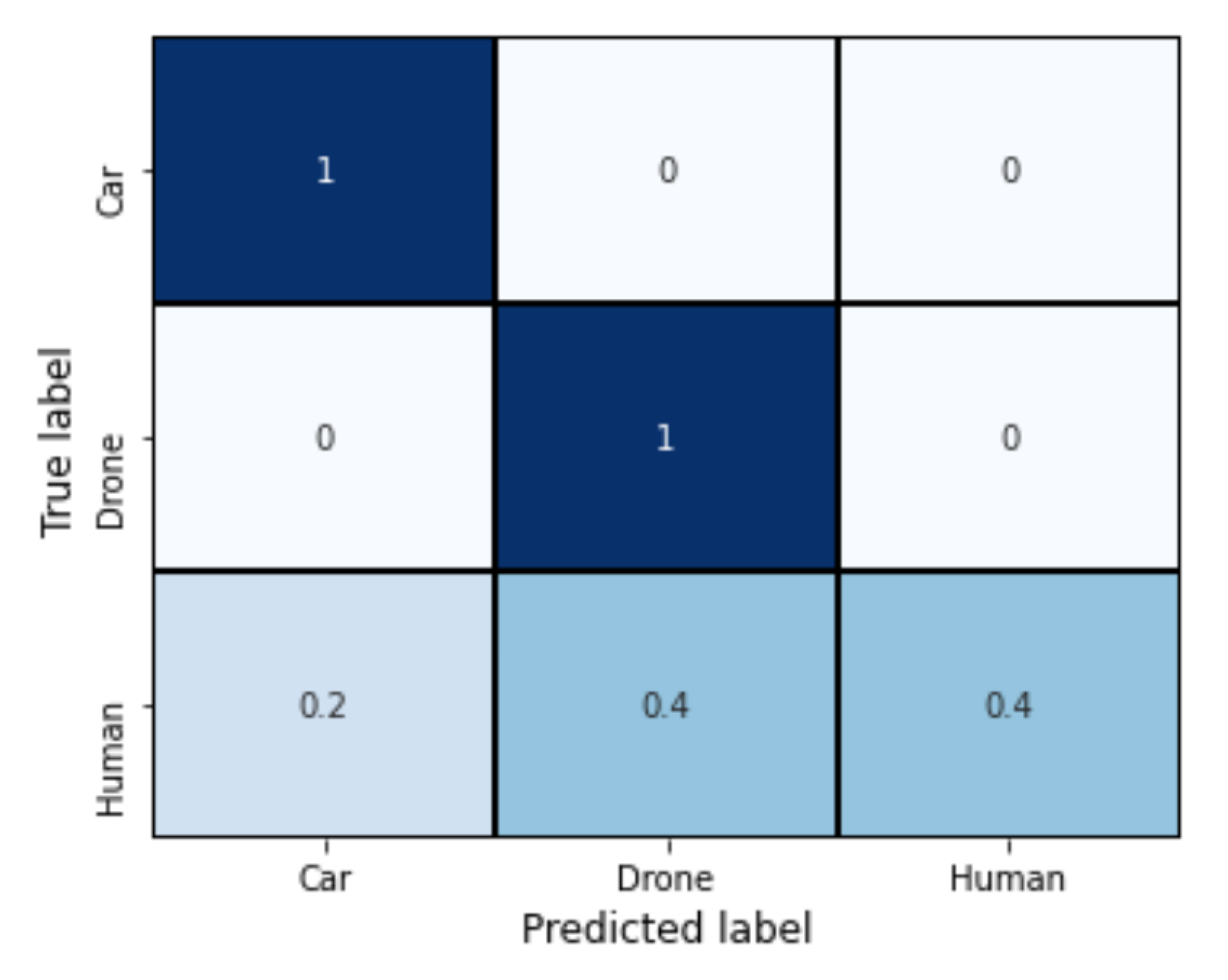

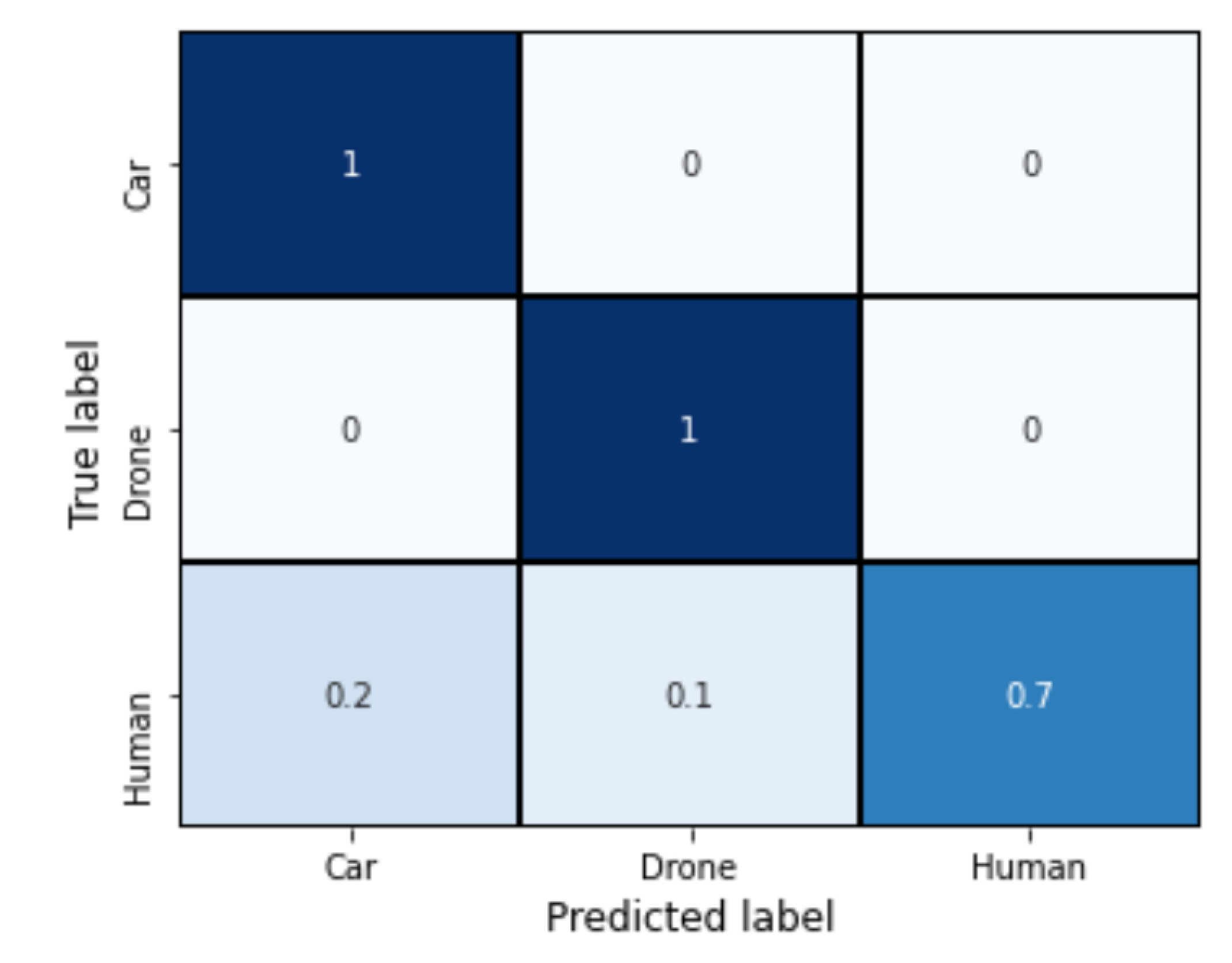

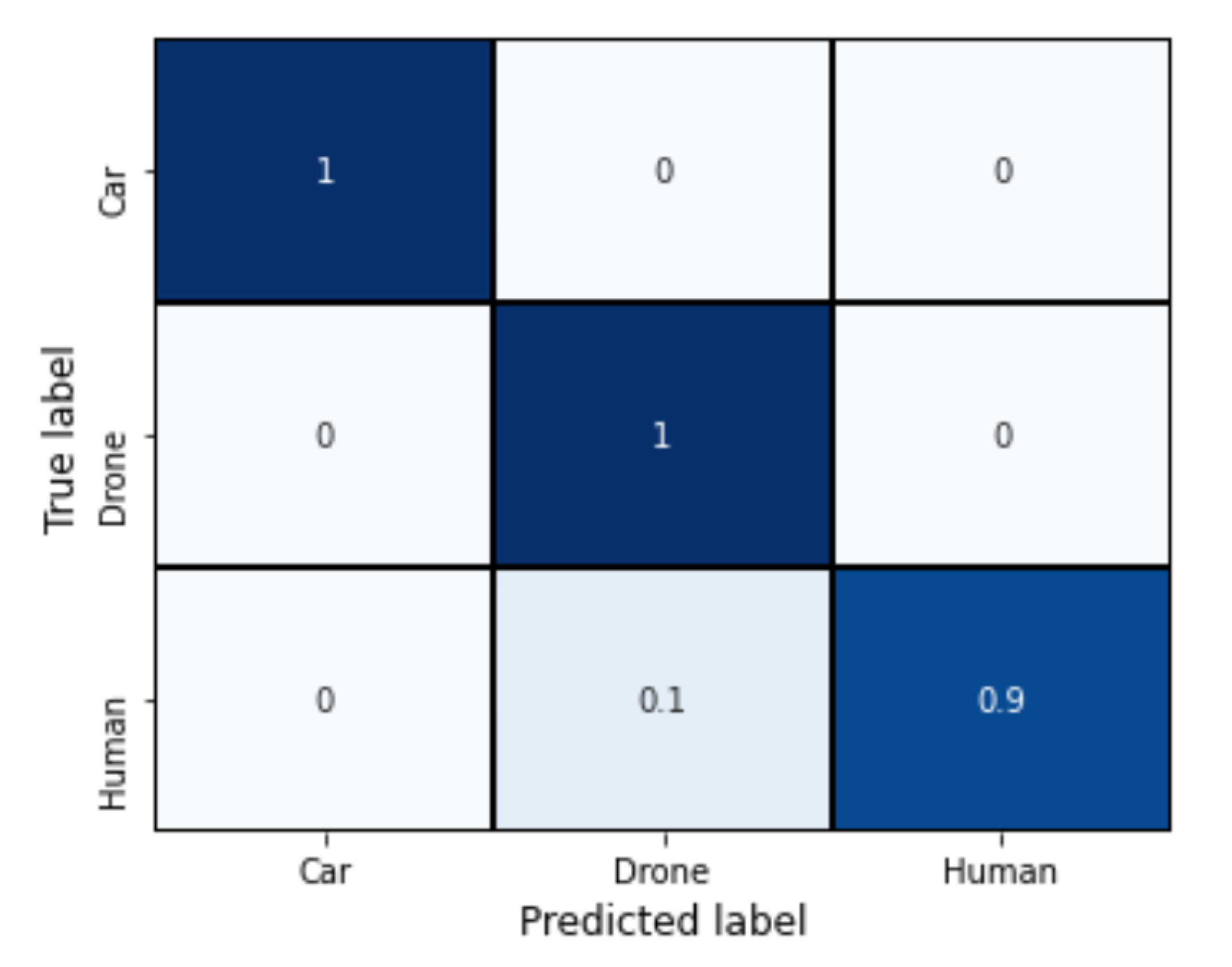

The ability to detect target features such as shape and size, as well as the dynamic parameters of these targets, is critical, because it improves the reliability and robustness of any system that uses radars. While the IF signal explicitly provides the object's range, the distinguishing characteristics of different objects can be obtained by extracting statistical parameters from the range FFT plot. These include peak height, peak width, standard deviation, and area under the peak. Experiments have been carried out to categorize three common objects: an unmanned aerial vehicle (UAV), a car, and a pedestrian. Several machine learning (ML) algorithms are used to classify the targets from statistical features extracted from the range FFTs of the IF signals measured for the different objects. The lightweight ML algorithms investigated are logistic regression, Naive Bayes, support vector machine (SVM), and Light Gradient Boosting Machine (LightGBM). This is the first paper to apply ML to range FFT statistical features for target classification with mmWave radars. The major contributions of this work are as follows:

- Outdoor experiments to categorize three common objects: an unmanned aerial vehicle (UAV), a car, and a pedestrian.
- Extraction of statistical parameters, such as peak height, peak width, standard deviation, and area under the peak, from the range profile of the radar data.
- Classification of the targets with various ML models using the statistical features extracted from the range FFTs of the IF signals measured for the different objects.

In complex situations, however, range profiles alone may not provide high classification accuracy. Combining mmWave radars with additional sensors, such as RGB, thermal, and infrared cameras, can improve reliability and classification accuracy.

The rest of this paper is structured as follows. The system is described in Section 2, and the experimental setup is described in detail in Section 3. Section 4 presents the data set, signal processing, details of the machine learning models, and their performance. The detailed data set and algorithms are available at https://github.com/aveen-d/Radar_classification (accessed on 19 June 2021) [39]. Finally, concluding remarks and possible future work are discussed in Section 5.

3. Measurements and Signal Processing

The measurement setup is lightweight and portable. It is made up of a mmWave FMCW radar with three transmitters and four receivers that operate in the frequency range of 77 to 81 GHz. The Texas Instruments’ mmWave Studio application is used to configure and control the radar setup. The configuration parameters of the radar used in these measurements are shown in

Table 1. The algorithm used for the feature extraction of the objects is shown in Algorithm 1. A flowchart is shown in

Figure 5 to explain the algorithm. Measurements are made with three common objects in an outdoor environment, as shown in

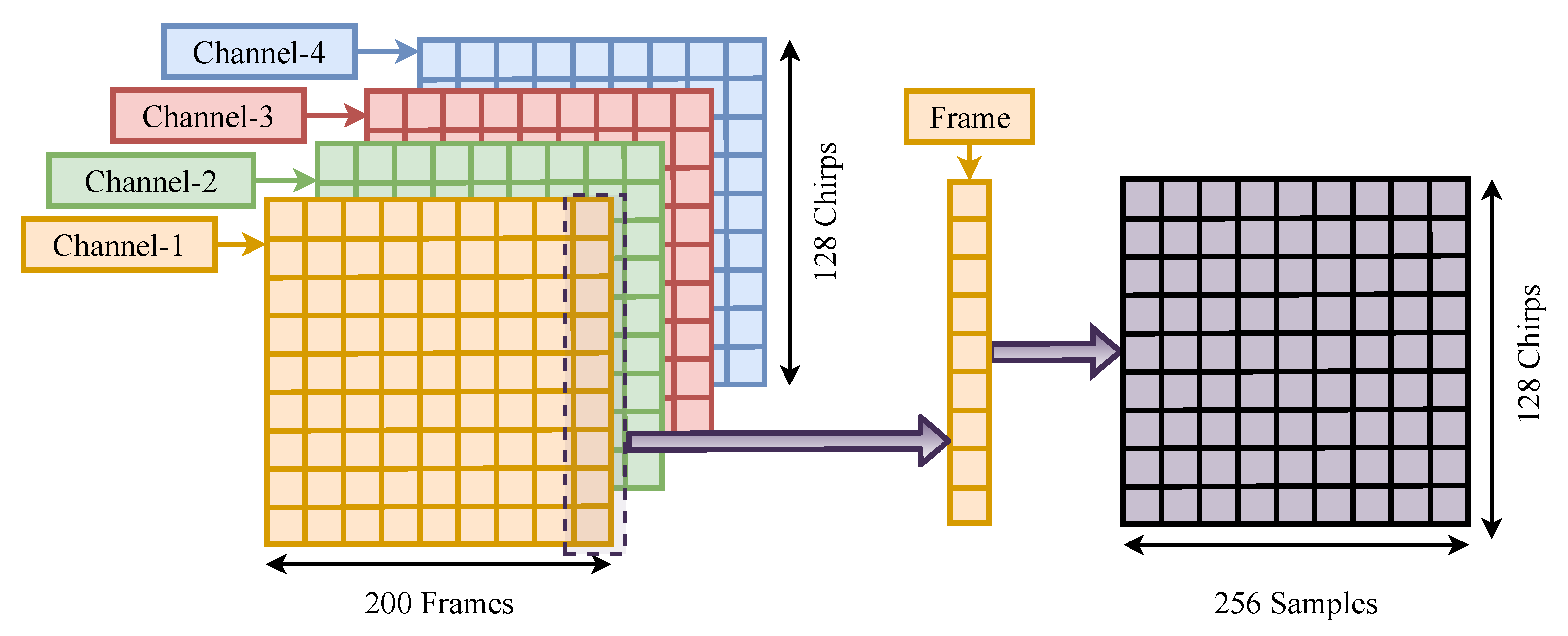

Figure 6. Drone used in the measurements is quite small in size, and it possesses a size of 214 × 91 × 84 mm when folded and 322 × 242 × 84 mm when unfolded. The vehicle used was a medium-sized automobile with dimensions of 4315 × 1780 × 1605 mm. Measurements for the pedestrian were taken with a 172 cm tall adult. All three objects are one of a kind, with distinct shapes and sizes. For each object, several measurements were taken in small range steps up to a range of 25 m, which was the measurement scene’s limitation. The radar station is fixed and objects were moved from the radar in small steps while taking the measurements. The data collected using mmWave sensor are arranged for four channels, and post processing is performed on 200*128 chirp loops of a channel. A Fast Fourier Transform is applied on these chirp loops consisting of 256 samples/chirp loop. Further dBFS and a mean of dBFS of all these chirploops is calculated for 256 samples. The mean dBFS vs. distance plot is obtained using MATLAB. The highest peak in the plot will indicate the object location. A sharp peak can be obtained after the removal of a static plane. The features of the highest peak are extracted from this plot. This work has established a relationship between these extracted peak features and the object. This relationship is used to identify the type of object present in the vicinity of the mmWave sensors. All the extracted features from the measurements are shown in

Figure 7. It is clear from

Figure 7 that features extracted from the range FFT plot, such as standard deviation of the peak, area under the peak, the peak width, and the peak height, provide distinguishable information about the targets. This makes sense because targets with a large cross-section reflect more power and, as a result, larger peaks in the range FFT plot.
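
The per-channel processing described above can be sketched in plain Python as follows: a windowed FFT of each chirp loop, conversion to dBFS, and averaging over all chirp loops. This is an illustrative sketch, not the authors' MATLAB code. Several details are assumptions:

- the symmetric Hann window;
- the full-scale reference passed as `full_scale`;
- the reading of static-plane removal as subtracting a target-free background profile in dB.

A small radix-2 FFT is included so that the example needs only the standard library.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two (e.g. 256 samples/chirp)."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def hann(n):
    """Symmetric Hann window of length n (n >= 2)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def chirp_dbfs(samples, full_scale):
    """Hann-windowed FFT of one chirp, in dB relative to an assumed full-scale magnitude."""
    w = hann(len(samples))
    spectrum = fft([s * wi for s, wi in zip(samples, w)])
    floor = 1e-12  # keeps log10 finite for empty bins
    return [20 * math.log10(max(abs(v), floor) / full_scale) for v in spectrum]


def mean_range_profile(chirps, full_scale):
    """Mean dBFS per range bin over all chirp loops (e.g. 200 frames x 128 chirps)."""
    profiles = [chirp_dbfs(c, full_scale) for c in chirps]
    return [sum(col) / len(profiles) for col in zip(*profiles)]


def remove_static(profile, background):
    """Assumed static-plane removal: subtract a target-free background profile (dB)."""
    return [p - b for p, b in zip(profile, background)]
```

As a check, consider a synthetic complex tone placed exactly at range bin 40 of a 256-sample chirp. The highest value returned by `mean_range_profile` then falls at bin 40. With real data, the input would be the chirp loops of one receiver channel.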

Algorithm 1. Object detection and feature extraction from the range FFT of mmWave FMCW radar.

- Require: raw IF signal ADC data u with 200 frames and 128 chirps per frame.
- for each frame (1 to 200) do
  - Ensure: the raw IF signal ADC data u contains the complex IF samples for receivers i = 1, 2, 3, 4.
  - for each chirp (1 to 128) do
    - Ensure: an FFT with a Hanning window is applied to the raw IF signal data u of receiver i = 1, 2, 3, 4 for the current frame and chirp, and dBFS values are calculated for each chirp FFT.
  - end for
- end for
- Ensure: the mean dBFS is calculated over all frames and chirps.
- Ensure: the distance, height, width, and standard deviation of the peaks are calculated for the detected target using the findpeaksSb function.
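
The final step of Algorithm 1 relies on the MATLAB function findpeaksSb, which is not reproduced here. The sketch below is a simplified, hypothetical stand-in. It takes the highest peak of a range profile and computes the following:

- its distance;
- its height above a baseline;
- its width, counted as the bins at or above half the peak height;
- the height-weighted standard deviation of position;
- its area, as a sum over the half-height region.

These exact feature definitions are assumptions for illustration and may differ from what findpeaksSb computes.

```python
import math


def peak_features(profile, bin_spacing_m, baseline=None):
    """Hypothetical stand-in for findpeaksSb: features of the highest peak in a range profile.

    Heights are measured above `baseline` (default: the profile minimum). The peak region
    is the contiguous run of bins whose height is at least half the peak height.
    """
    if baseline is None:
        baseline = min(profile)
    h = [v - baseline for v in profile]
    i_max = max(range(len(h)), key=h.__getitem__)
    if h[i_max] <= 0:
        raise ValueError("profile has no peak above the baseline")
    half = h[i_max] / 2
    lo = i_max
    while lo > 0 and h[lo - 1] >= half:  # walk left while still above half height
        lo -= 1
    hi = i_max
    while hi < len(h) - 1 and h[hi + 1] >= half:  # walk right likewise
        hi += 1
    region = range(lo, hi + 1)
    total = sum(h[k] for k in region)
    # Height-weighted mean and spread of the bin positions inside the peak region.
    mean_pos = sum(k * h[k] for k in region) / total
    var = sum(h[k] * (k - mean_pos) ** 2 for k in region) / total
    return {
        "distance_m": i_max * bin_spacing_m,
        "height": h[i_max],
        "width_m": (hi - lo + 1) * bin_spacing_m,
        "std_m": math.sqrt(var) * bin_spacing_m,
        "area": total * bin_spacing_m,  # rectangle-rule area over the half-height region
    }
```

Applied to a mean dBFS profile, `bin_spacing_m` would be the range resolution of the radar configuration. The returned values then form one feature vector per measurement.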

Figure 8 depicts a single outdoor measurement for each of three targets: a car, a pedestrian, and a UAV (drone). According to Figure 8, the areas under the peak extracted from the range FFT are 2.5984, 2.038, and 0.45673 for the car, the pedestrian, and the drone, respectively. The area therefore increases with the cross-section of the target. Similarly, the peak height, standard deviation, and width depend on target features such as shape. All of these extracted features are then processed with machine learning techniques for target classification.
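
The sketch below shows how such feature vectors can be classified by one of the lightweight models named in the introduction, Gaussian Naive Bayes, implemented from scratch. It is a minimal illustration, not the authors' trained model. Each class is modeled with an independent normal distribution per feature, such as peak height, width, standard deviation, and area. The small absolute `var_smoothing` term is our own choice to avoid zero variances; scikit-learn, for comparison, scales this term by the largest feature variance.

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Minimal Gaussian Naive Bayes: independent normal likelihood per feature and class."""

    def __init__(self, var_smoothing=1e-9):
        self.var_smoothing = var_smoothing  # added to every variance to avoid division by zero

    def fit(self, X, y):
        groups = defaultdict(list)
        for row, label in zip(X, y):
            groups[label].append(row)
        n_total = len(X)
        self.params = {}
        for label, rows in groups.items():
            n = len(rows)
            columns = list(zip(*rows))
            means = [sum(col) / n for col in columns]
            variances = [sum((v - m) ** 2 for v in col) / n + self.var_smoothing
                         for col, m in zip(columns, means)]
            # Store the log prior together with the per-feature Gaussian parameters.
            self.params[label] = (math.log(n / n_total), means, variances)
        return self

    def _log_posterior(self, row, label):
        """Unnormalized log posterior: log prior plus summed Gaussian log-likelihoods."""
        log_prior, means, variances = self.params[label]
        log_lik = sum(-0.5 * math.log(2 * math.pi * var) - (x - m) ** 2 / (2 * var)
                      for x, m, var in zip(row, means, variances))
        return log_prior + log_lik

    def predict(self, X):
        """Return the class with the highest log posterior for each feature vector."""
        return [max(self.params, key=lambda label: self._log_posterior(row, label))
                for row in X]
```

First call `fit` on a list of feature vectors and their labels (for example, "car", "pedestrian", and "drone"). `predict` then returns the most probable label for each new vector. The authors' own data set and algorithms are available in the repository cited in the introduction.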