Abstract

Cardiovascular disease (CVD), which seriously threatens human health, can be prevented by blood pressure (BP) measurement. However, measuring BP both conveniently and accurately remains an open problem. Although methods based on the easily collected pulse wave (PW) make it possible to monitor BP at any time and place, the current methods still require professional knowledge to process the medical data. In this paper, we combine the advantages of Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) networks to propose a CNN-LSTM BP prediction method based on PW data. In detail, the CNN first extracts features from the PW data, and the features are then fed into the LSTM for further training. The numerical results based on real-life data sets show that the proposed method can achieve high BP prediction accuracy while saving training time. As a result, CNN-LSTM can provide convenient BP monitoring in daily health care.

1. Introduction

Nowadays, work pressures and fast-paced lifestyles produce various health problems for most people, especially cardiovascular disease (CVD), which causes great damage to the human heart and brain [1]. Once CVD occurs, there is a high probability of severe cerebral hemorrhage or ischemia, which then seriously threatens the health of patients. The World Health Organization (WHO) considers CVD the primary cause of death all over the world, as it accounted for 31% of the global deaths reported in 2018 [2]. Blood pressure (BP), as one of the main characteristics of the cardiovascular system, is the main basis for clinical diagnosis and treatment. Controlling BP within a reasonable range is the primary goal in daily health [3,4]. Therefore, how to measure BP and predict the trend of BP values effectively has become a key point in preventing CVD [5].

BP measurement methods can be divided into two types: the invasive method and non-invasive method [6]. The invasive method refers to the arterial puncture method, which punctures or cuts the blood vessel and then puts the high-precision sensor of the measuring devices directly into the blood vessel [7,8]. Although the invasive method is the most accurate, it requires high professional skills and is not suitable for daily health monitoring. Non-invasive methods use various sensing technologies and signal processing methods to measure blood pressure indirectly, including the auscultatory method [9], oscillometric method [10], and pulse wave based method [11,12]. Among them, the pulse wave based method has attracted a wide range of research interests, due to its easy collection and accurate measurement results.

The pulse wave (PW), the regular pulsation of the arteries, is the cyclical change in pressure caused by the contraction and relaxation of the ventricles [13]. Because the PW signal contains important information, such as blood pressure and blood oxygen, some studies have already focused on BP measurement based on PW. In 1984, Tanaka et al. [14] proposed for the first time that BP could be measured by pulse wave velocity (PWV). The PWV refers to the propagation speed of pulse waves along the arterial vessel wall, which is affected by factors such as the elasticity and thickness of the vessel wall. Furthermore, PWV can be calculated from the pulse wave transit time (PWTT) and has a positive correlation with BP. As a result, Wibmer et al. [15] used PWTT to indirectly calculate the BP value. However, current PWTT methods based on wearable devices still lack clinical accuracy. Thus, a novel non-invasive BP monitoring method called CNAP2GO was proposed in [16], which has become a breakthrough for wearable sensors for BP monitoring in clinical settings. Alternatively, characteristics can be extracted from the PW, and models can then be established based on the correlation between these characteristics and BP for dynamic BP measurement [17,18,19].

However, current BP measurement methods require the PW data to be pre-processed, which is cumbersome and requires medical knowledge. Owing to the rapid development of artificial intelligence (AI), machine learning has become a potential solution for BP measurement and prediction, but the features still need to be manually extracted before they are input into the neural network. Therefore, we consider introducing Convolutional Neural Networks (CNN) for feature extraction. Generally, CNN is used in fields such as pattern recognition [20,21], natural language processing [22,23] and computer vision [24,25]. Furthermore, taking into account that PW is a time sequence, we can introduce the Long Short-Term Memory (LSTM) network to further process the features. LSTM is a specific type of Recurrent Neural Network (RNN), and has the ability to avoid the exploding gradient problem and the vanishing gradient problem in processing long sequences [26,27]. LSTM is widely used in the fields of speech recognition [28,29] and time sequence forecasting [30,31]. Nowadays, some researchers combine the advantages of CNN and LSTM to design models for sentiment analysis [32], time sequence forecasting [33] and other fields. In particular, several kinds of CNN-LSTM methods are used for BP estimation based on electrocardiograms (ECGs) and photoplethysmography (PPG) [34,35], but these methods are still not convenient enough for BP monitoring. In this paper, we focus on designing CNN-LSTM methods for BP prediction based on PW directly. Our contributions are as follows:

- We introduce state-of-the-art LSTM networks to predict blood pressure based on easy-to-collect pulse wave data so as to realize fast and convenient blood pressure monitoring;

- In order to avoid complicated processing of pulse wave data, we further use CNN to extract features from the pulse wave before feeding them to the LSTM, which achieves direct blood pressure prediction;

- We carry out experiments on real-life data sets and set two groups of benchmarks, where Group 1 only uses neural networks without CNN and Group 2 uses CNN to extract features first. The numerical results show that the proposed method can improve the prediction accuracy by up to 30.41% while saving training time.

The remainder of this paper is organized as follows. In Section 2, we introduce the related work on BP estimation and prediction. Section 3 describes the BP prediction problem based on PW data. The CNN-LSTM prediction method is then proposed in Section 4. We show the numerical results and analyze the performance in Section 5. Finally, we draw brief conclusions and discuss future work in Section 6.

2. Related Work

Artificial intelligence (AI) has been used for BP measurement and prediction without professional medical skills. Zhang et al. [36] used the Genetic Algorithm-Back Propagation Neural Network to estimate BP after extracting 13 parameters from PPG signals. Chen et al. [37] proposed a continuous BP measurement method based on the K-nearest-neighbor (KNN) algorithm. The experimental results on the MIMIC II data set achieved a root mean square error of 2.47 mmHg. In Reference [38], the authors set up a support vector machine regression model and random forest regression model for BP prediction, and the average absolute error was less than 5 mmHg. It is worth noting that all of the above methods require data pre-processing.

In recent years, CNN has also been applied to BP estimation and BP risk level prediction. In Reference [39], the authors used CNN to generate features from PW automatically to estimate BP from PPG, and achieved better accuracy than the conventional method. Sun et al. [40] adopted a new kind of CNN based on the Hilbert–Huang Transform (HHT) to predict the BP risk level from PPG. However, they did not make predictions about BP values. Generally speaking, CNN is rarely used in the field of BP measurement, but some researchers use CNN for feature extraction from medical images.

Because of the advantages of LSTM for long sequences, it has been applied to BP estimation. Zhao et al. [41] utilized the efficient processing characteristics of LSTM for time series to predict the systolic and diastolic BP. However, their LSTM model was used to predict the BP of adult goats and cannot be applied directly to humans. In Reference [42], the authors used LSTM for BP estimation. However, they designed a two-stage zero-order holding (TZH) algorithm to process the BP data before the LSTM networks. Tanveer et al. [43] proposed a hierarchical Artificial Neural Network-Long Short Term Memory (ANN-LSTM) model for BP estimation, where ANN layers were used to extract features from ECG and PPG waveforms, and LSTM layers were used to account for the time domain variation of the features. The mean absolute errors (MAE) of systolic and diastolic blood pressure were 1.10 and 0.58 mmHg, respectively. Furthermore, Eom et al. proposed an end-to-end CNN-RNN architecture using raw signals (ECG, PPG, etc.) without the process of extracting features; the MAE values were 4.06 and 3.33 mmHg for systolic and diastolic BP, respectively [35].

However, current BP estimation methods have strong dependence on ECG and PPG, which cannot be applied to convenient BP estimation. Therefore, we introduce CNN-LSTM networks to predict BP based on easy-to-collect PW data.

3. Problem Description

In our work, the PW and the BP data are obtained from the Multi-parameter Intelligent Monitoring in Intensive Care (MIMIC) data set [44], which can be downloaded freely from PhysioBank (https://archive.physionet.org/cgi-bin/atm/ATM, accessed on 12 July 2021). The MIMIC data set collects physiological data of over 90 patients in the intensive care unit (ICU), and each patient is denoted by u. The sampling frequency of the data is 500 Hz, and thus we divide time into discrete time slots indexed by t.

The PW data are obtained via a fingertip pulse oximeter, and the unit is millivolt (mV). The PW data of patient $u$ in time slot $t$ are denoted by $x_u(t)$. The BP data used in this paper are the arterial blood pressure (ABP), which is obtained by the invasive method; the unit is millimeter of mercury (mmHg), and the BP of patient $u$ in time slot $t$ is denoted by $y_u(t)$. When predicting the BP of patient $u$ for time slot $t+1$ in time slot $t$, we use the PW data of the most recent $n$ time slots, i.e., $\{x_u(t-n+1), \ldots, x_u(t)\}$, to predict $y_u(t+1)$. The BP prediction can be described as a stochastic process, which is shown as follows:

$$y_u(t+1) = f\big(x_u(t-n+1), \ldots, x_u(t); \theta\big) + \varepsilon(t+1), \tag{1}$$

where $f$ is a non-linear and complicated function, $\varepsilon(t+1)$ is the white noise, and $\theta$ is the parameter set. In other words, $y_u(t+1)$ is obtained from the mapping of the time sequence $\{x_u(t-n+1), \ldots, x_u(t)\}$. The white noise obeys a normal distribution with a mean of zero and a standard deviation of $\sigma_{\varepsilon}$, i.e., $\varepsilon(t+1) \sim \mathcal{N}(0, \sigma_{\varepsilon}^2)$.

In general, the function $f$ is difficult to obtain by traditional methods. Therefore, we introduce neural networks to obtain the approximate function $\hat{f}$, which is shown as follows:

$$\hat{y}_u(t+1) = \hat{f}\big(x_u(t-n+1), \ldots, x_u(t); \theta\big). \tag{2}$$

In order to evaluate the performance of the approximate function $\hat{f}$, a loss function $L(\theta)$ is constructed as follows:

$$L(\theta) = \frac{1}{|\mathcal{D}|} \sum_{t \in \mathcal{D}} \big(y_u(t+1) - \hat{y}_u(t+1)\big)^2, \tag{3}$$

where $\mathcal{D}$ denotes the set of samples used for the training of the neural networks. Obviously, the smaller $L(\theta)$ is, the better the performance of $\hat{f}$. Therefore, the aim of training the neural networks is to minimize $L(\theta)$ and find the optimal parameter set $\theta^{*}$:

$$\theta^{*} = \arg\min_{\theta} L(\theta). \tag{4}$$

During the training process, $\hat{y}_u(t+1)$ is obtained based on feedforward propagation, and the neural networks update the weight parameters according to the gradient based on back propagation. By repeating this process, the weight parameters are continuously updated until the error of the loss function meets the precision requirement, which means that the approximate function $\hat{f}$ is fitted [31].
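The training procedure described above (forward pass, gradient computation, parameter update, and a stopping rule on the loss) can be illustrated on a deliberately tiny model. The following is a minimal sketch and not the networks used in this paper: it assumes a two-parameter linear model, a mean-squared-error loss, and plain gradient descent, and the names `train_linear`, `lr`, `tol`, and `max_epochs` are hypothetical.

```python
def train_linear(xs, ys, lr=0.1, tol=1e-8, max_epochs=10_000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = float("inf")
    for _ in range(max_epochs):
        # Forward pass: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        if loss < tol:  # stop once the loss meets the precision requirement
            break
        # Backward pass: gradients of the loss with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Parameter update along the negative gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss


# Toy data generated from y = 2x + 1 (illustrative values only).
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, loss = train_linear(xs, ys)
```

A real network replaces the two scalar parameters with many weight matrices and obtains the gradients by back propagation, but the loop structure is the same.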

4. Proposed CNN-LSTM Prediction Method

In this section, we first introduce Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) Networks. Next, the proposed prediction method, which is based on CNN and LSTM, is described.

4.1. Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a kind of feedforward neural network. In this work, we mainly consider the convolutional layer and pooling layer of CNN.

Convolutional Layer. In CNN, convolution is the most fundamental operation. Basically, the filter can be seen as the neuron of this layer, which has a weighted input and produces an output value. The essence of convolution is 2-D spatial filtering; filters can only slide on the x-axis and y-axis to extract features. The number of filters and their kernel size need to be carefully determined to form feature maps.

Pooling Layer. There are still too many parameters in feature maps after the convolutional layer; thus, the pooling layer is used for subsampling. On the one hand, pooling makes the feature maps smaller to reduce complexity, and on the other hand, it extracts important features. The general idea of pooling is to create a new feature map by taking the maximum or average value, i.e., max pooling and average pooling, respectively [22].

In summary, we can take advantage of CNN to extract the features of the input data for obtaining better models.
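To make the two layer types concrete, the sketch below applies one filter and one max-pooling step to a short sequence. It is an illustration under assumptions, not the authors' implementation: it uses a 1-D sequence (as PW data are a time sequence), no padding, a stride of 1, and a hand-picked filter, whereas a trained CNN learns its filter weights. As in most deep learning libraries, the "convolution" here is computed without flipping the kernel. The function names are hypothetical.

```python
def conv1d_valid(signal, kernel, bias=0.0):
    """Slide `kernel` over `signal` (no padding) and return the feature map."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def max_pool1d(feature_map, pool_size=2):
    """Keep the maximum of each non-overlapping block of `pool_size` values."""
    return [
        max(feature_map[i:i + pool_size])
        for i in range(0, len(feature_map) - pool_size + 1, pool_size)
    ]


signal = [0.0, 1.0, 3.0, 2.0, 0.0, -1.0, 1.0, 4.0]
kernel = [-1.0, 0.0, 1.0]  # hypothetical filter that responds to rising slopes
features = conv1d_valid(signal, kernel)      # length 8 - 3 + 1 = 6
pooled = max_pool1d(features, pool_size=2)   # length 6 // 2 = 3
```

The feature map is shorter than the input by the kernel size minus one, and pooling halves it again, which is how the two layers reduce the amount of data passed to later layers.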

4.2. Long Short-Term Memory Networks

In a traditional neural network model, adjacent layers are fully connected, but the nodes within each layer are not connected to each other [45]. As a result, such a model is inefficient at handling sequence problems. As mentioned above, the PW and BP data are both time sequences. Accordingly, Recurrent Neural Networks (RNN) can be used for prediction. The nodes in the hidden layer of an RNN are connected, which is different from traditional neural networks. What is more, the input of the hidden layer includes not only the output of the input layer, but also the output of the hidden layer in the previous time slot. The recurrent connections of the RNN add feedback and memory to the network over time. In summary, RNN has a strong learning ability and input generalization ability for sequence problems.

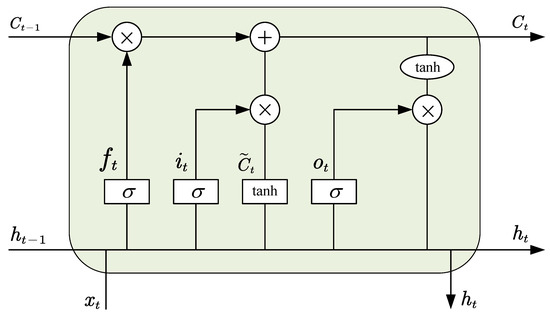

However, when back-propagation is used in a very deep RNN, the gradient of the neural network may become unstable, which will cause the exploding gradient problem or vanishing gradient problem [46], hence making the generated model unreliable. These problems can be solved by Long Short-Term Memory (LSTM) networks. As a variant of RNN, LSTM is composed of memory units and several gates. In Figure 1, we describe the structure of the LSTM unit.

Figure 1.

The structure of LSTM unit [27].
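The unstable gradient problem mentioned above can be seen in a toy calculation. The sketch below is a simplification under stated assumptions: it considers a scalar, linear recurrence in which the hidden state is multiplied by a single recurrent weight at every step, so the gradient propagated back through a number of steps is scaled by that weight raised to the number of steps. The function name is hypothetical.

```python
def gradient_scale(recurrent_weight, num_steps):
    """Scaling of a gradient after `num_steps` steps of the scalar linear
    recurrence h_t = recurrent_weight * h_{t-1}."""
    return recurrent_weight ** num_steps


vanishing = gradient_scale(0.9, 100)  # weight below 1: gradient shrinks
exploding = gradient_scale(1.1, 100)  # weight above 1: gradient grows
```

Even weights close to 1 lead to gradients that differ by many orders of magnitude after 100 steps, which is why plain RNNs are hard to train on long sequences.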

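In time slot $t$, we presume that the input and the output are $x_t$ and $h_t$, respectively. There are three gates in each unit, called the input gate $i_t$, the forget gate $f_t$ and the output gate $o_t$. The values of the three gates are calculated as follows:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right), \tag{5}$$

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right), \tag{6}$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right), \tag{7}$$

where $\sigma(\cdot)$ is the sigmoid function, and $W_i$, $W_f$, $W_o$, $b_i$, $b_f$, $b_o$ are the corresponding weight matrices and biases.

Then, the process of information updating in the LSTM unit is as follows. In time slot $t$, the forget gate decides which part of the previous unit state $C_{t-1}$ to drop, according to $f_t$. The input gate decides what information to store in the unit, according to $i_t$, and the candidate state $\tilde{C}_t$ is calculated as follows:

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right), \tag{8}$$

where $W_C$ and $b_C$ are the weight matrix and bias of the memory unit. In this way, the current unit state is updated as follows:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t. \tag{9}$$

In addition, the output data are calculated by the following:

$$h_t = o_t \odot \tanh(C_t). \tag{10}$$

After that, $C_t$ and $h_t$ pass to the next cell in the next time slot. In conclusion, each unit works like a state machine in which three gates have their own weights, so that LSTM can deal with sequence problems better.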
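The gate and state updates described above can be written out as a single time step. The following sketch assumes the common LSTM formulation in which each gate acts on the concatenation of the previous output and the current input; the parameter names (`W_i`, `b_i`, and so on), the list-based matrices, and the tiny sizes are illustrative choices, not the authors' code.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, bias, vector):
    """Return weights @ vector + bias for list-based matrices."""
    return [
        sum(w * v for w, v in zip(row, vector)) + b
        for row, b in zip(weights, bias)
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step: returns the new output h_t and unit state c_t."""
    z = h_prev + x_t  # list concatenation [h_{t-1}, x_t]
    i_t = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], z)]
    f_t = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], z)]
    o_t = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], z)]
    c_tilde = [math.tanh(a) for a in affine(params["W_c"], params["b_c"], z)]
    # Forget part of the old state, then add the gated candidate state.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


# Hypothetical sizes; all-zero weights make the result easy to check by hand:
# every gate equals sigmoid(0) = 0.5 and the candidate state equals tanh(0) = 0.
hidden, inputs = 2, 1
params = {
    name: [[0.0] * (hidden + inputs) for _ in range(hidden)]
    for name in ("W_i", "W_f", "W_o", "W_c")
}
params.update({name: [0.0] * hidden for name in ("b_i", "b_f", "b_o", "b_c")})
h_t, c_t = lstm_step([0.5], [0.0, 0.0], [1.0, -1.0], params)
```

With zero weights the forget gate keeps exactly half of the previous state, which shows how the gates scale the information flowing through the unit.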

4.3. CNN-LSTM Prediction Method

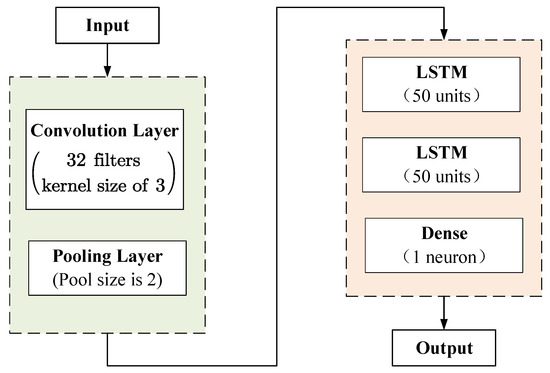

As mentioned above, we use a hybrid model that consists of CNN and LSTM to predict BP values based on PW data. After the PW data are input, feature extraction is performed by the CNN first. Then, the features are fed into the LSTM for further training. The topology of the proposed model is shown in Figure 2.

Figure 2.

The topology of the CNN-LSTM model.

In order to improve the accuracy of the BP prediction, we use the PW data of multiple recent time slots to predict the BP values of the next time slot, which is called the windows prediction method. As a result, we need to correlate the input data and output data to generate a data set for training and validation.
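The pairing of input windows with output values can be sketched as follows. This is a minimal illustration under assumptions: the function name `make_windows` and the toy values are hypothetical, and each target is taken to be the BP sample of the slot immediately after the window.

```python
def make_windows(pw, bp, window_length):
    """Pair each run of `window_length` PW samples with the next BP sample."""
    if len(pw) != len(bp):
        raise ValueError("pw and bp must have the same length")
    inputs, targets = [], []
    for t in range(window_length, len(pw)):
        inputs.append(pw[t - window_length:t])  # PW of the recent time slots
        targets.append(bp[t])                   # BP of the next time slot
    return inputs, targets


# Toy values only (PW in mV, BP in mmHg).
pw = [0.1, 0.2, 0.3, 0.4, 0.5]
bp = [80.0, 81.0, 82.0, 83.0, 84.0]
inputs, targets = make_windows(pw, bp, window_length=3)
```

A sequence of N samples yields N minus the window length training pairs, since the first window has no earlier data to draw on.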

Subsequently, we use zero-mean (z-score) normalization to standardize the PW and BP data. Assume that the data are denoted by $X$, and that $x \in X$ is an input value. The idea of z-score normalization is shown in Equation (11):

$$x' = \frac{x - \mu_X}{\sigma_X}, \tag{11}$$

where $\mu_X$ is the mean value of $X$ and $\sigma_X$ is the standard deviation of $X$. The mean of the processed data is 0, and the standard deviation is 1.
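The normalization described above, and the inverse mapping needed to read predictions in the original unit, can be sketched as follows. The function names are hypothetical, and the use of the population standard deviation (dividing by the number of samples) is an assumption, since the text does not state which estimator is used.

```python
import math


def z_score(values):
    """Return (normalized values, mean, standard deviation)."""
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation (divide by n); this choice is an assumption.
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0.0:
        raise ValueError("constant data cannot be z-score normalized")
    return [(v - mean) / std for v in values], mean, std


def inverse_z_score(normalized, mean, std):
    """Map normalized values back to the original unit (e.g., mmHg)."""
    return [z * std + mean for z in normalized]


raw = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # toy values only
normalized, mean, std = z_score(raw)
restored = inverse_z_score(normalized, mean, std)
```

When predictions are made on normalized BP values, the mean and standard deviation of the training data would need to be kept so that the outputs can be converted back to mmHg.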

Notice that the input of the model contains as many PW values as the window length, which increases the complexity of the model. Furthermore, as the window length grows, the features in the input become sparse and difficult to extract. Hence, we use CNN to extract features from the PW data. In detail, a convolutional layer with 32 filters and a kernel size of 3 is added, followed by a max pooling layer with a pool size of 2 for further subsampling. At this point, the input features are successfully extracted and are more streamlined than the original input data.

Then, the features are passed to two LSTM hidden layers for further training, and each hidden layer has 50 units. Since the BP prediction problem is a regression problem, we use the dense layer (i.e., fully connected layer) with one neuron to receive the tensor from the LSTM hidden layer and output the BP value. Finally, the output is the predicted BP values. As a result, the proposed method is more efficient for the prediction of BP, due to the combination of the feature extraction capability of CNN with the advantage of LSTM for the time sequence.
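As a rough check on the size of the model described above, the following sketch computes the tensor lengths and trainable parameter counts layer by layer. It rests on several assumptions that the text does not state: a window length of 20 (the value mentioned in Section 5.2), a single input channel, a 1-D convolution with stride 1 and no padding, and the standard LSTM parameterization with one bias vector per gate. The helper names are hypothetical.

```python
def conv1d_output_length(input_length, kernel_size):
    """Output length of a stride-1 convolution without padding."""
    return input_length - kernel_size + 1


def conv1d_params(in_channels, filters, kernel_size):
    """Weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def pool_output_length(input_length, pool_size):
    """Output length of non-overlapping pooling."""
    return input_length // pool_size


def lstm_params(input_dim, units):
    """Four weight blocks (three gates and the candidate state), each acting
    on the concatenated input and previous output, plus one bias each."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    return input_dim * units + units


window_length = 20                                    # assumed window length
conv_len = conv1d_output_length(window_length, 3)     # 32 filters, kernel 3
pool_len = pool_output_length(conv_len, 2)            # max pooling, size 2
total_params = (
    conv1d_params(1, 32, 3)   # convolutional layer
    + lstm_params(32, 50)     # first LSTM hidden layer, 50 units
    + lstm_params(50, 50)     # second LSTM hidden layer, 50 units
    + dense_params(50, 1)     # dense output layer with one neuron
)
```

Under these assumptions the LSTM layers see a sequence of 9 steps with 32 features each instead of 20 raw samples, and almost all of the trainable parameters sit in the two LSTM layers.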

5. Numerical Results and Performance Analysis

In this section, we evaluate the performance of the proposed CNN-LSTM prediction method via numerical results.

5.1. Benchmarks and Parameter Settings

As mentioned above, the PW and BP data used in this work come from the Multi-parameter Intelligent Monitoring in Intensive Care (MIMIC) data set, which collects physiological data of over 90 patients in ICU. The sampling frequency of the data is 500 Hz. As shown in Table 1, the PW data (PLETH) are obtained by fingertip pulse oximeter, and the unit is millivolt (mV). The BP data used in this paper are the arterial blood pressure (ABP), and the unit is millimeter of mercury (mmHg). MIMIC also includes ECG (III) and respiration (RESP) data, which are not used in our work. We selected the first three patients (their numbers in the data set are 39, 41, 55) in the MIMIC data set in our work, and the length of the data of each patient is 30,000 time slots (10 min in total).

Table 1.

Data samples of the MIMIC data set.

In order to evaluate the performance of the proposed prediction method, we set the following five benchmarks, which are shown in Table 2. The proposed method and the benchmarks can be classified into two groups. Group 1 contains MLP, RNN, and LSTM, which only use neural networks for prediction. Group 2 contains CNN-MLP (i.e., the classic CNN network), CNN-RNN, and CNN-LSTM, which use CNN to extract features first. We use Group 1 to identify the most suitable neural network for sequence problems, and Group 2 shows the effect of CNN on the prediction accuracy.

Table 2.

Proposed prediction method and the setup of benchmarks.

For the parameter settings of the neural networks, we set the number of input layer nodes according to the window length. There is one hidden layer in each method, and the number of units in each hidden layer is set to 50. The number of training epochs is set to 100. The PW and BP data are divided into a training set and a validation set, according to the order of the time series. The training set and validation set account for and , respectively.
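Splitting by time order, rather than shuffling, keeps the validation data strictly later than the training data. The sketch below shows one way to do this; the function name is hypothetical, and the fraction 0.8 used in the example is only a placeholder and not the proportion used in this work.

```python
def chronological_split(samples, train_fraction):
    """Split `samples` into an earlier training part and a later validation part."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]


samples = list(range(10))  # stand-in for time-ordered (window, target) pairs
train, validation = chronological_split(samples, train_fraction=0.8)  # placeholder value
```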

5.2. Results and Analysis

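In order to evaluate the performance of the proposed prediction method, we introduce three evaluation indexes, including the mean absolute error (MAE), the mean absolute percent error (MAPE), and the root mean square error (RMSE). The definitions of the three evaluation indexes are as follows:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| \hat{y}_i - y_i \right|, \tag{12}$$

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{\hat{y}_i - y_i}{y_i} \right|, \tag{13}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right)^2}, \tag{14}$$

where $\hat{y}_i$ is the predicted value, $y_i$ is the real value, and $n$ is the number of samples.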
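The three evaluation indexes can be computed directly from their standard definitions. The sketch below uses hypothetical function names and toy values, and it assumes that MAPE is reported in percent.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percent error, in percent; real values must be non-zero."""
    return 100.0 * sum(abs((p - t) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Toy BP values in mmHg (illustrative only, not results from this paper).
y_true = [100.0, 120.0, 80.0, 110.0]
y_pred = [102.0, 117.0, 84.0, 110.0]
scores = (mae(y_true, y_pred), mape(y_true, y_pred), rmse(y_true, y_pred))
```

MAE and RMSE are in mmHg, while MAPE is relative to the real value; RMSE penalizes large individual errors more strongly than MAE.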

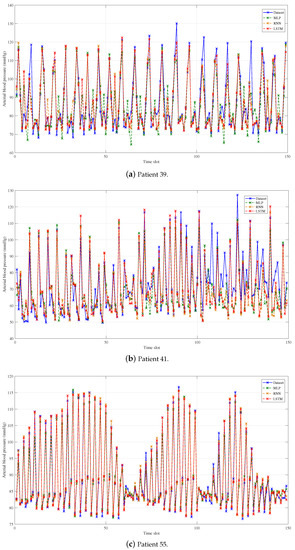

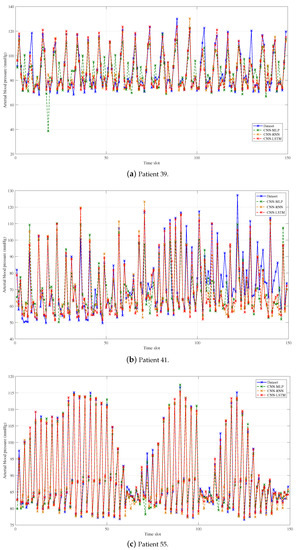

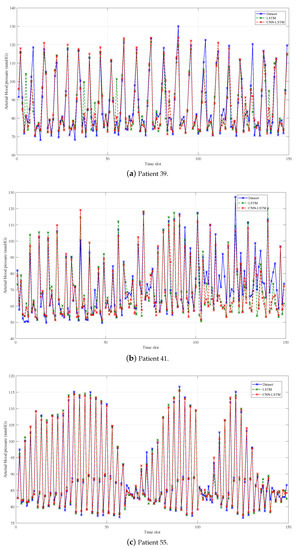

We decimate the original and the predicted data with a decimation factor of 200 in order to show the predicted performance more clearly, as shown in Figure 3, Figure 4 and Figure 5. What is more, the average predicted performance of all methods is shown in Table 3, and the evaluation indexes of all methods are shown in Table 4, Table 5 and Table 6. In general, the predicted BP values of all methods fit the original values of the data set very well, and the evaluation indexes are also acceptable compared to current methods, which demonstrates the effectiveness of BP prediction based on PW data. Our proposed CNN-LSTM prediction method has the best performance among all methods, and there are some results worth noting.

Figure 3.

Prediction results of Group 1: MLP, RNN, and LSTM.

Figure 4.

Prediction results of Group 2: CNN-MLP, CNN-RNN, and CNN-LSTM.

Figure 5.

Prediction results of LSTM and CNN-LSTM.

Table 3.

Average predicted performance of patients.

Table 4.

Predicted performance of patient 39.

Table 5.

Predicted performance of patient 41.

Table 6.

Predicted performance of patient 55.

As shown in Figure 3, group 1 shows that, without the help of CNN, LSTM has the best predictive performance of all evaluation indexes, while it requires the longest training time. Compared with MLP, LSTM can improve the training set MAE of three patients to , and , and can improve the validation set to , and . The training time of LSTM is more than 5 times that of MLP. That is because LSTM has a strong ability to deal with time sequences, but the network structure of LSTM is more complicated than that of MLP.

When we focus on group 2 in Figure 4, CNN-LSTM still has the best performance compared to other CNN-assisted methods. Compared with CNN-RNN, CNN-LSTM can improve the training set MAE to , and , and can improve the validation set to , and . Interestingly, the training time required for CNN-LSTM is lower than that of CNN-RNN by , and , respectively. The reason for the long training time of CNN-RNN is the deterioration in learning speed, which is caused by the unstable gradient problem, that is, the exploding gradient or vanishing gradient problem mentioned above.

When comparing the indexes between Group 1 and Group 2 in Figure 5, we can clearly find that combining neural networks with CNN can effectively improve the prediction performance. Recall that we set the window length to 20, i.e., the PW data of 20 time slots (40 ms) are entered into the network. The data are also sparse, while containing useful information. Therefore, the filters in the convolutional layer first extract features from the input data. Then, the pooling layer further subsamples the features before they enter the LSTM layers. As a result, LSTM accounts for the time correlation of the features, which is better than directly considering the time correlation in the input data. That is because the information in the features is more concise and effective than the original input data. Specifically, compared with LSTM, CNN-LSTM can improve the training set MAE of three patients to , and , respectively. For the validation set, the improvements of MAE are , and . Additionally, the proposed method is a kind of end-to-end network and does not need to be trained separately. Effective feature extraction reduces the training time of the three patients, compared to LSTM, to , and . The numerical results prove the effectiveness of the proposed CNN-LSTM prediction method.

However, combining CNN with neural networks, especially MLP and RNN, does not necessarily improve the predicted performance. In Table 5, the performance of CNN-MLP on the training set is worse than that of MLP. Combining CNN with MLP is equivalent to a classic Convolutional Neural Network, which is usually used for image recognition rather than data prediction. In other words, the features extracted by CNN cannot be learned well by the subsequent MLP. In Table 6, CNN-RNN has worse performance than RNN on both the training set and the validation set, which is caused by the unstable gradient problem. These results confirm the correctness and effectiveness of the proposed CNN-LSTM method once again.

6. Conclusions and Discussion

In this paper, we have proposed a novel CNN-LSTM prediction model of blood pressure (BP) based on pulse wave (PW) data. In the CNN-LSTM model, a convolutional layer and a pooling layer are used to extract features from PW data. Then, two LSTM hidden layers are used for further training. We set two groups of benchmarks. The experimental results based on the MIMIC data set show that the proposed method is close to or better than the existing BP estimation methods. In particular, the proposed method can significantly improve the prediction accuracy by up to 30.41% while saving training time, due to combining the advantages of CNN and LSTM.

However, in our current work we need to train a separate model for each patient in order to perform BP prediction, so the method cannot yet be widely used for a large number of patients. In the future, we will consider introducing state-of-the-art technologies in the area of AI, e.g., transfer learning, to improve the generalization ability of the model. By placing the generalized model in portable devices that can receive PW data from a fingertip pulse oximeter, we can provide convenient and real-time BP monitoring for a wider range of people.

Author Contributions

Conceptualization, J.Y. and H.M.; methodology, H.M.; software, H.M.; validation, H.M.; formal analysis, H.M.; investigation, H.M. and J.Y.; resources, H.M.; data curation, H.M. and J.Y.; writing—original draft preparation, H.M.; writing—review and editing, H.M. and J.Y.; visualization, H.M.; supervision, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nabel, E.G. Cardiovascular disease. N. Engl. J. Med. 2003, 349, 60–72. [Google Scholar] [CrossRef] [PubMed]

- Al-Absi, H.R.H.; Refaee, M.A.; Rehman, A.U.; Islam, M.T.; Belhaouari, S.B.; Alam, T. Risk factors and comorbidities associated to cardiovascular disease in Qatar: A machine learning based case-control study. IEEE Access 2021, 9, 29929–29941. [Google Scholar] [CrossRef]

- Mancia, G.; Sega, R.; Milesi, C.; Cessna, G.; Zanchetti, A. Blood-pressure control in the hypertensive population. Lancet 1997, 349, 454–457. [Google Scholar] [CrossRef]

- SPRINT Research Group. A randomized trial of intensive versus standard blood-pressure control. N. Engl. J. Med. 2015, 373, 2103–2116. [Google Scholar] [CrossRef]

- Mahmood, S.N.; Ercelecbi, E. Development of blood pressure monitor by using capacitive pressure sensor and microcontroller. In Proceedings of the International Conference on Engineering Technology and their Applications (IICETA), Al-Najaf, Iraq, 8–9 May 2018; pp. 96–100. [Google Scholar]

- Zakrzewski, A.M.; Huang, A.Y.; Zubajlo, R.; Anthony, B.W. Real-time blood pressure estimation from force-measured ultrasound. IEEE Trans. Biomed. Eng. 2018, 65, 861. [Google Scholar] [CrossRef] [PubMed]

- Parasuraman, S.; Raveendran, R. Measurement of invasive blood pressure in rats. J. Pharmacol. Pharmacother. 2012, 3, 172. [Google Scholar]

- Takci, S.; Yigit, S.; Korkmaz, A.; Yurdakök, M. Comparison between oscillometric and invasive blood pressure measurements in critically ill premature infants. Acta Paediatr. 2012, 101, 132–135. [Google Scholar] [CrossRef]

- Sebald, D.J.; Bahr, D.E.; Kahn, A.R. Narrowband auscultatory blood pressure measurement. IEEE Trans. Biomed. Eng. 2002, 49, 1038–1044. [Google Scholar] [CrossRef]

- Sapinski, A. Standard algorithm of blood-pressure measurement by the oscillometric method. Med. Biol. Eng. Comput. 1992, 30, 671. [Google Scholar] [CrossRef]

- Hirata, K.; Kawakami, M.; O’Rourke, M.F. Pulse wave analysis and pulse wave velocity a review of blood pressure interpretation 100 years after Korotkov. Circ. J. 2006, 70, 1231–1239. [Google Scholar] [CrossRef] [Green Version]

- Chen, Y.; Wen, C.; Tao, G.; Bi, M.; Li, G. Continuous and noninvasive blood pressure measurement: A novel modeling methodology of the relationship between blood pressure and pulse wave velocity. Ann. Biomed. Eng. 2009, 37, 2222–2233. [Google Scholar] [CrossRef] [PubMed]

- Avolio, A.P.; Butlin, M.; Walsh, A. Arterial blood pressure measurement and pulse wave analysis—Their role in enhancing cardiovascular assessment. Physiol. Meas. 2009, 31, R1–R47. [Google Scholar] [CrossRef] [PubMed]

- Tanaka, H.; Sakamoto, K.; Kanai, H. Indirect blood pressure measurement by the pulse wave velocity method. Jpn. J. Med. Electron. Biol. Eng. 1984, 22, 13–18. [Google Scholar]

- Wibmer, T.; Kropf, C.; Stoiber, K.; Ruediger, S.; Lanzinger, M.; Rottbauer, W.; Schumann, C. Pulse transit time and blood pressure during cardiopulmonary exercise tests. Physiol. Res. 2014, 63, 287. [Google Scholar] [CrossRef] [PubMed]

- Fortin, J.; Rogge, D.E.; Fellner, C.; Flotzinger, D.; Saugel, B. A novel art of continuous noninvasive blood pressure measurement. Nat. Commun. 2021, 12, 1387. [Google Scholar] [CrossRef] [PubMed]

- Peltokangas, M.; Vehkaoja, A.; Verho, J.; Mattila, V.M.; Romsi, P.; Lekkala, J.; Oksala, N. Age dependence of arterial pulse wave parameters extracted from dynamic blood pressure and blood volume pulse waves. IEEE J. Biomed. Health Inform. 2017, 21, 142–149. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Lai, S.; Wang, J.; Tan, T.; Huang, Y. The cuffless blood pressure measurement with multi-dimension regression model based on characteristics of pulse waveform. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6838–6841. [Google Scholar]

- Singla, M.; Sistla, P.; Azeemuddin, S. Cuff-less blood pressure measurement using supplementary ECG and PPG features extracted through wavelet transformation. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 4628–4631. [Google Scholar]

- Raschman, E.; Durackova, D. New digital architecture of CNN for pattern recognition. In Proceedings of the MIXDES-16th International Conference Mixed Design of Integrated Circuits and Systems, Lodz, Poland, 25–27 June 2009; pp. 662–666. [Google Scholar]

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Chen, T. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377. [Google Scholar] [CrossRef] [Green Version]

- Sainath, T.N.; Mohamed, A.; Kingsbury, B.; Ramabhadran, B. Deep convolutional neural networks for LVCSR. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8614–8618. [Google Scholar]

- Liao, S.; Wang, J.; Yu, R.; Sato, K.; Cheng, Z. CNN for situations understanding based on sentiment analysis of twitter data. Procedia Comput. Sci. 2017, 111, 376–381. [Google Scholar] [CrossRef]

- Luo, H.; Xiong, C.; Fang, W.; Love, P.E.; Zhang, B.; Ouyang, X. Convolutional neural networks: Computer vision-based workforce activity assessment in construction. Autom. Constr. 2018, 94, 282–289. [Google Scholar] [CrossRef]

- Khan, S.; Rahmani, H.; Shah, S.A.A.; Bennamoun, M. A guide to convolutional neural networks for computer vision. Synth. Lect. Comput. Vis. 2018, 8, 1–207. [Google Scholar] [CrossRef]

- Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin, Germany, 2012; pp. 37–45. [Google Scholar]

- Mou, H.; Liu, Y.; Wang, L. LSTM for Mobility Based Content Popularity Prediction in Wireless Caching Networks. In Proceedings of the IEEE Globecom Workshops (GC Wkshps), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6. [Google Scholar]

- Graves, A.; Jaitly, N.; Mohamed, A. Hybrid speech recognition with Deep Bidirectional LSTM. In Proceedings of the IEEE Workshop on Automatic Speech Recognition and Understanding, Olomouc, Czech Republic, 8–12 December 2013; pp. 273–278. [Google Scholar]

- Zeyer, A.; Doetsch, P.; Voigtlaender, P.; Schlüter, R.; Ney, H. A comprehensive study of deep bidirectional LSTM RNNs for acoustic modeling in speech recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 2462–2466.

- Zhao, Z.; Chen, W.; Wu, X.; Chen, P.C.; Liu, J. LSTM network: A deep learning approach for short-term traffic forecast. IET Intell. Transp. Syst. 2017, 11, 68–75.

- Liu, Y.; Guan, L.; Hou, C.; Han, H.; Liu, Z.; Sun, Y.; Zheng, M. Wind power short-term prediction based on LSTM and discrete wavelet transform. Appl. Sci. 2019, 9, 1108.

- Wang, J.; Yu, L.C.; Lai, K.R.; Zhang, X. Dimensional sentiment analysis using a regional CNN-LSTM model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 225–230.

- Kim, T.Y.; Cho, S.B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

- Miao, F.; Wen, B.; Hu, Z.; Fortino, G.; Li, Y. Continuous blood pressure measurement from one-channel electrocardiogram signal using deep-learning techniques. Artif. Intell. Med. 2020, 108, 101919.

- Eom, H.; Lee, D.; Han, S.; Hariyani, Y.S.; Lim, Y.; Sohn, I.; Park, K.; Park, C. End-To-End Deep Learning Architecture for Continuous Blood Pressure Estimation Using Attention Mechanism. Sensors 2020, 20, 2338.

- Zhang, Y.; Wang, Z. A hybrid model for blood pressure prediction from a PPG signal based on MIV and GA-BP neural network. In Proceedings of the 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Chongqing, China, 12–14 June 2020; pp. 1989–1993.

- Chen, Y.; Cheng, J.; Ji, W. Continuous blood pressure measurement based on photoplethysmography. In Proceedings of the 14th IEEE International Conference on Electronic Measurement and Instruments (ICEMI), Guilin, China, 29–31 July 2017; pp. 1656–1663.

- Chen, X.; Yu, S.; Zhang, Y.; Chu, F.; Sun, B. Machine Learning Method for Continuous Noninvasive Blood Pressure Detection Based on Random Forest. IEEE Access 2021, 9, 34112–34118.

- Shimazaki, S.; Kawanaka, H.; Ishikawa, H.; Inoue, K.; Oguri, K. Cuffless blood pressure estimation from only the Waveform of photoplethysmography using CNN. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 5042–5045.

- Sun, X.; Zhou, L.; Chang, S.; Liu, Z. Using CNN and HHT to predict blood pressure level based on photoplethysmography and its derivatives. Biosensors 2021, 11, 120.

- Zhao, Q.; Hu, X.; Lin, J.; Deng, X.; Li, H. A novel short-term blood pressure prediction model based on LSTM. AIP Conf. Proc. 2019, 2058, 020003.

- Lo, F.P.W.; Li, C.X.T.; Wang, J.; Cheng, J.; Meng, M.Q.H. Continuous systolic and diastolic blood pressure estimation utilizing long short-term memory network. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 23–27 July 2017; pp. 1853–1856.

- Tanveer, M.S.; Hasan, M.K. Cuffless blood pressure estimation from electrocardiogram and photoplethysmogram using waveform based ANN-LSTM network. Biomed. Signal Process. Control. 2019, 51, 382–392.

- Moody, G.B.; Mark, R.G. A database to support development and evaluation of intelligent intensive care monitoring. In Proceedings of the Computers in Cardiology, Indianapolis, IN, USA, 8–11 September 1996; pp. 657–660.

- MacKay, D.J.C. A practical Bayesian framework for backpropagation networks. Neural Comput. 1992, 4, 448–472.

- Tsai, K.C.; Wang, L.; Han, Z. Caching for mobile social networks with deep learning: Twitter analysis for 2016 U.S. election. IEEE Trans. Netw. Sci. Eng. 2020, 7, 193–204.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).