Intelligent Measurement of Morphological Characteristics of Fish Using Improved U-Net

Abstract

1. Introduction

- Contrast transformation and rotation are used to simulate the actual shooting environment, and appropriate translation and scaling transformations are applied to generate a large number of training samples;

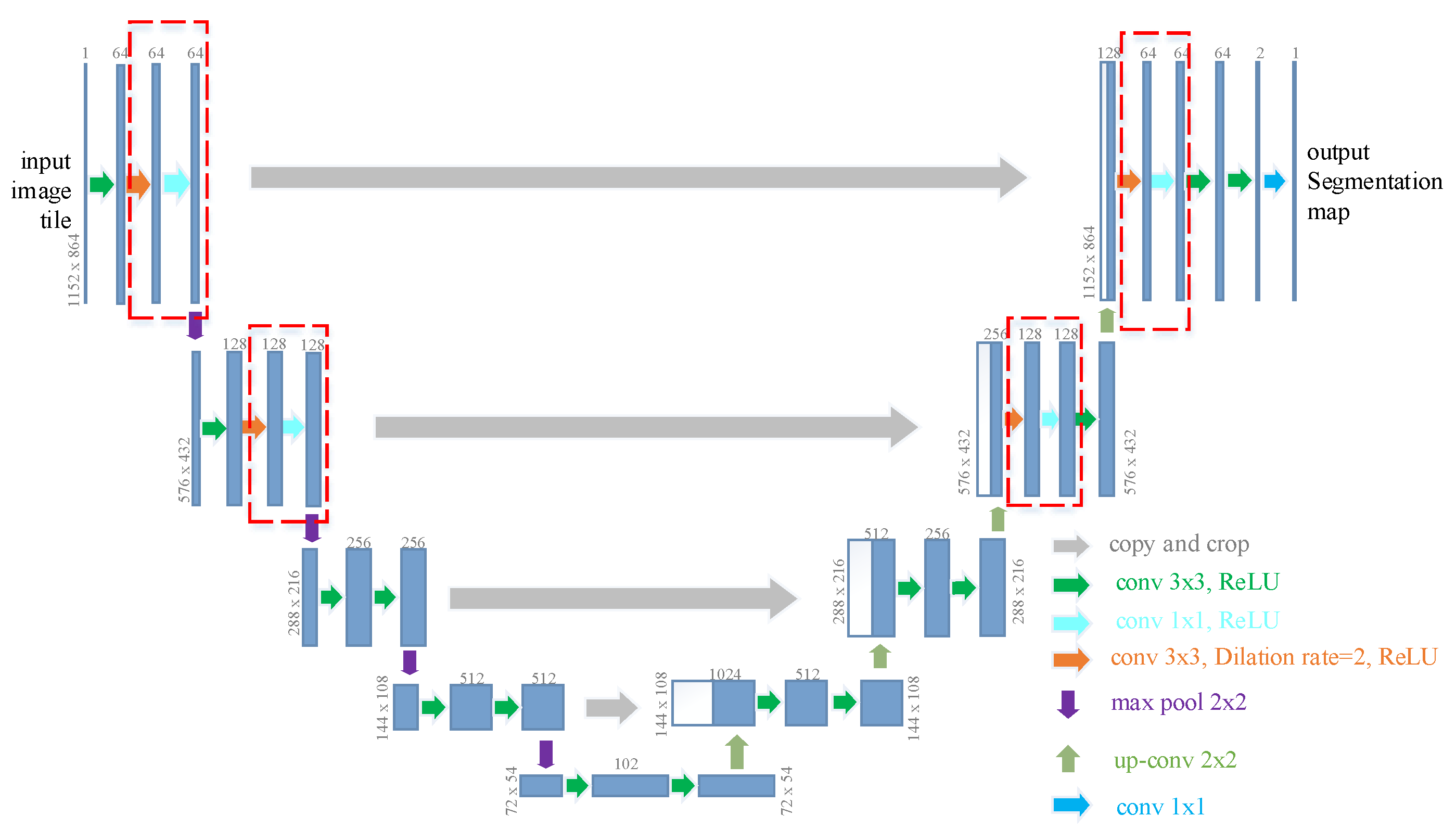

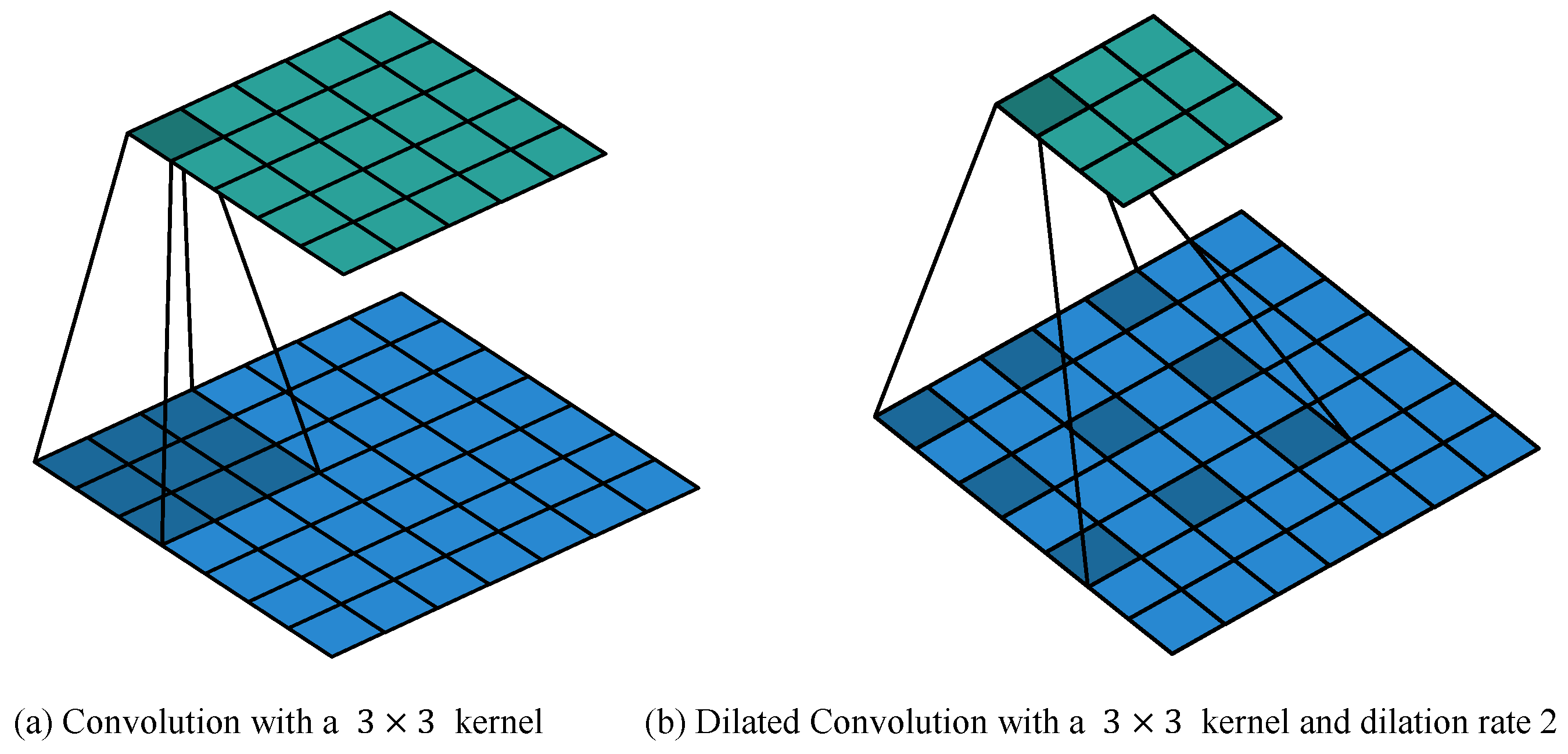

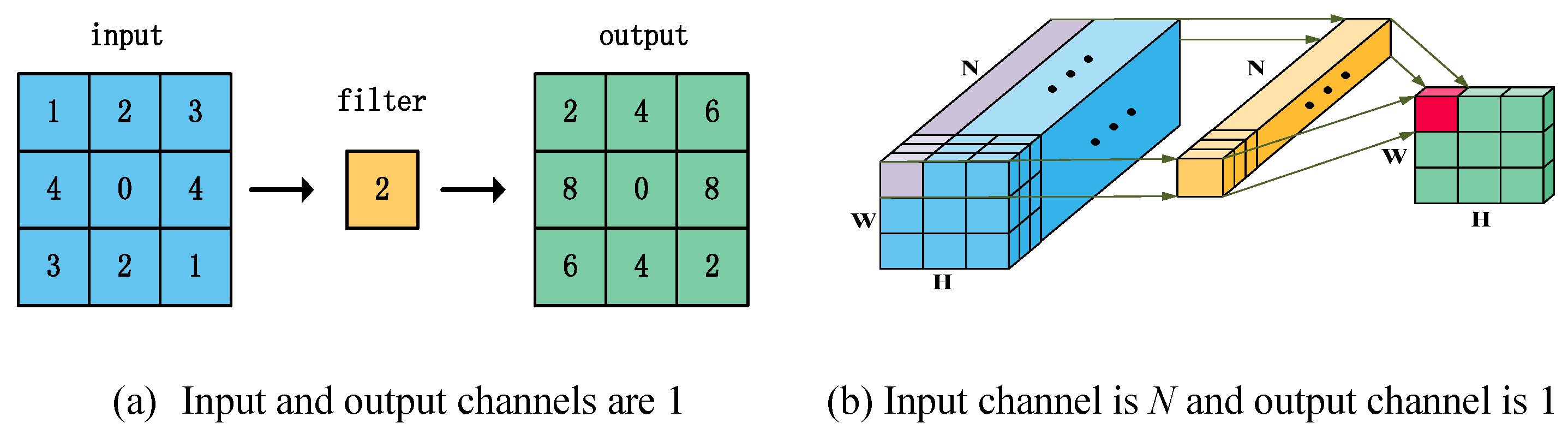

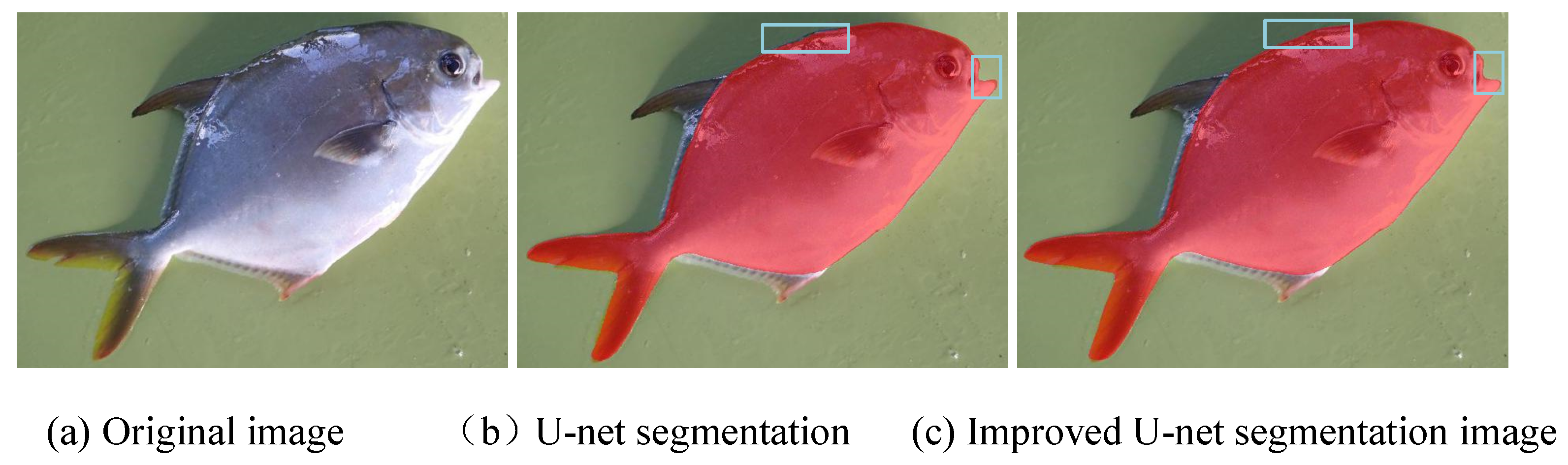

- According to the characteristics of the experimental dataset, the U-net structure is improved by partially replacing the 3 × 3 convolutions of the original network with a 3 × 3 dilated convolution (dilation rate 2) followed by a 1 × 1 convolution; this enlarges the receptive field of the replaced convolutions and yields more accurate segmentation;

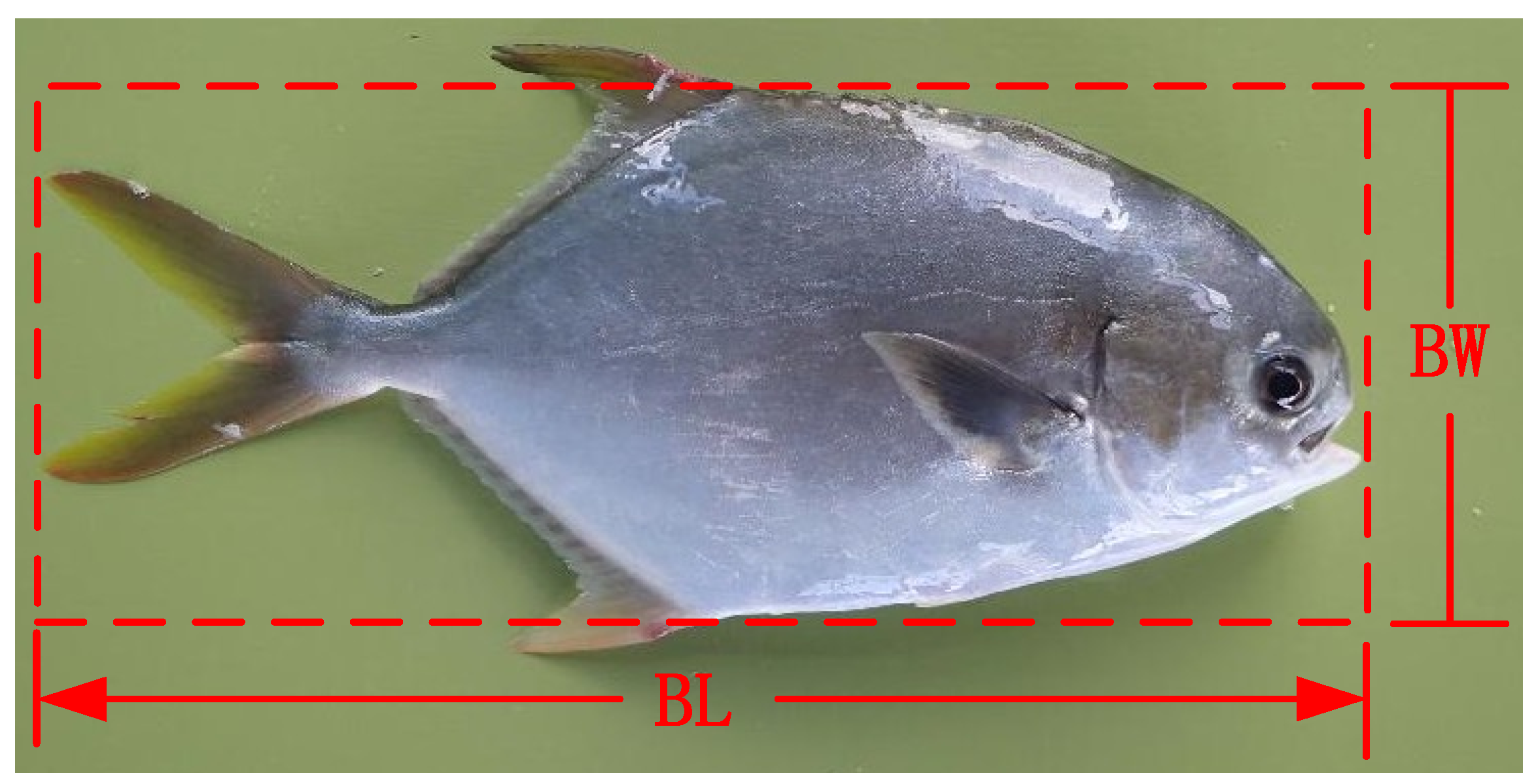

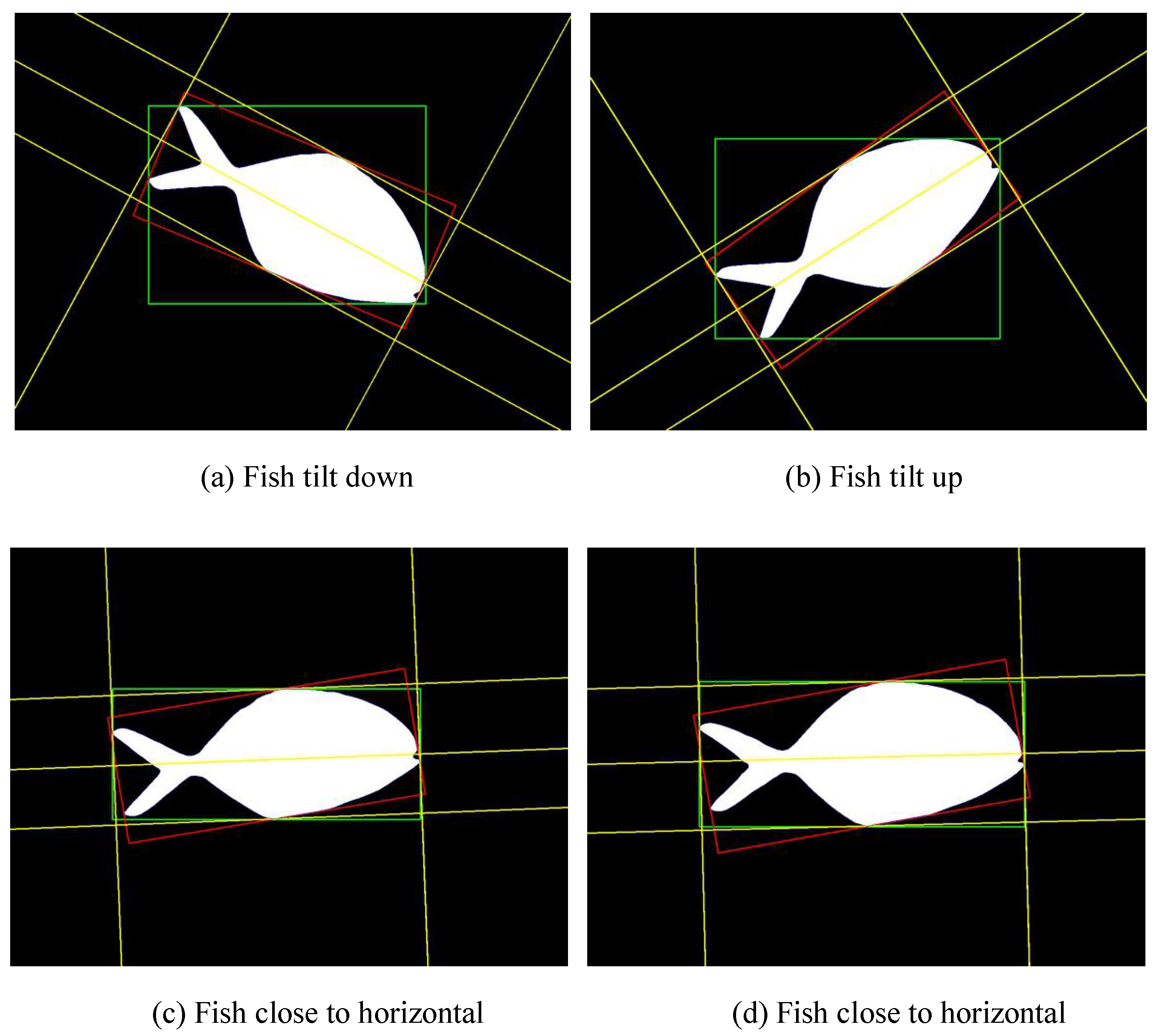

- Based on the shape characteristics of the fish body, a least squares line fitting method is adopted, which enables accurate measurement of the body length (BL) and body width (BW) of tilted fish.

2. Materials and Brief Description of Proposed Method

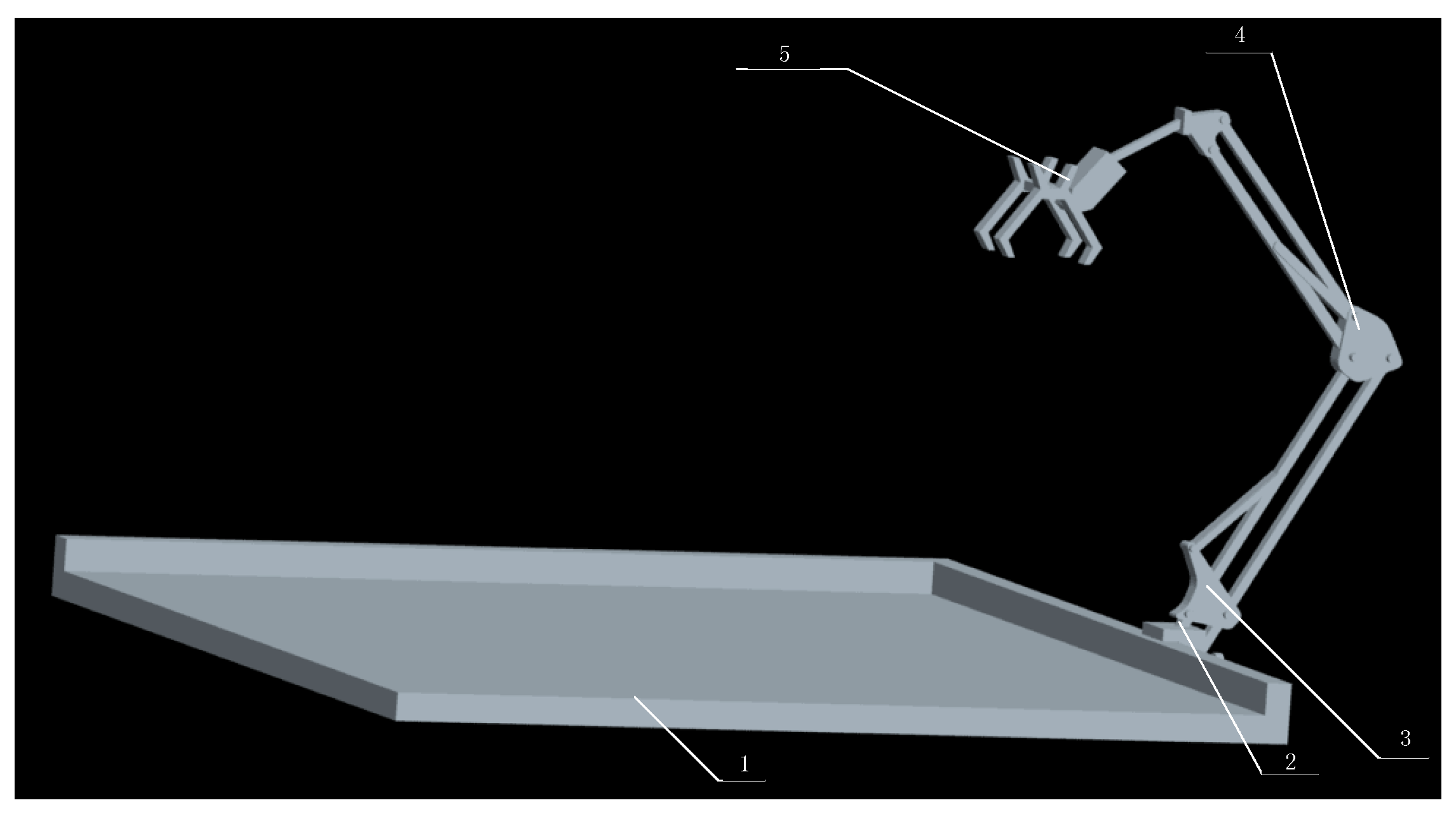

2.1. Data Acquisition

2.2. Proposed Scheme

3. Detailed Description of Proposed Measurement Method

3.1. Data Augmentation

3.1.1. Contrast Transformation
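The contrast transformation used to simulate different shooting conditions can be sketched as a linear rescaling of each pixel's deviation from the image mean. This is a minimal illustration under assumptions: the helper `adjust_contrast`, the grayscale list-of-lists image format and the factor `alpha` are hypothetical, and the exact transformation used in the paper is not recoverable from this text.

```python
def adjust_contrast(image, alpha):
    """Hypothetical helper: linear contrast change about the image mean.

    image: 2-D list of grayscale values in [0, 255].
    alpha > 1 increases contrast, 0 < alpha < 1 decreases it.
    Results are rounded and clipped back into [0, 255].
    """
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [[min(255, max(0, round(mean + alpha * (p - mean)))) for p in row]
            for row in image]
```

Only the input image is changed; the label mask of a segmentation sample stays as it is, because a photometric change does not move the fish.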

3.1.2. Rotation Transformation

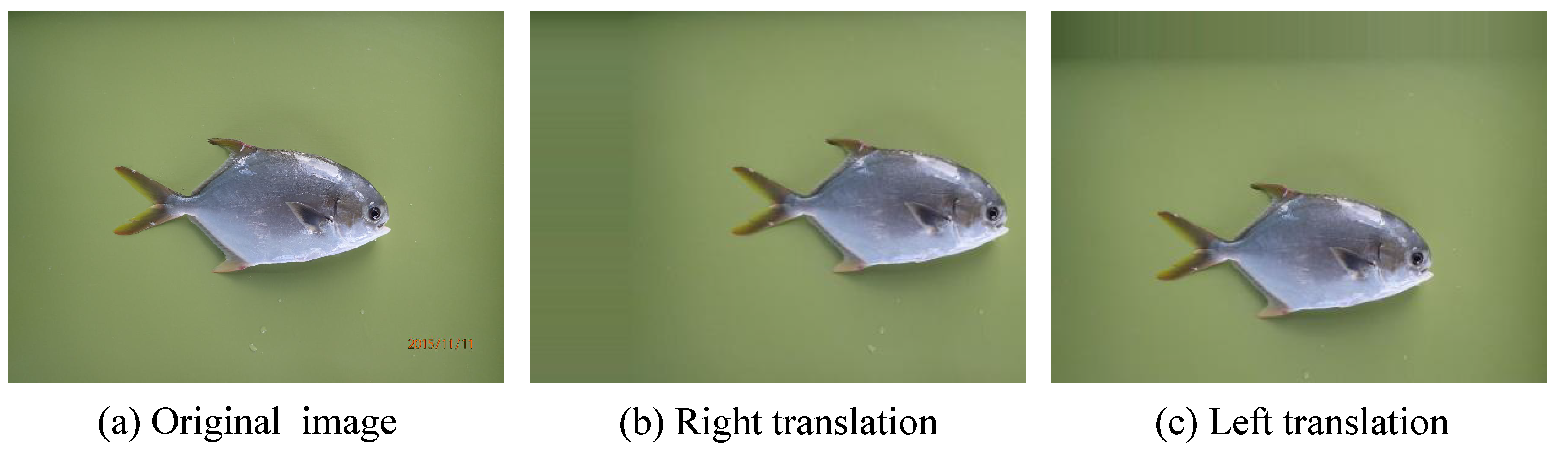
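A rotation about the image centre can be sketched with inverse mapping and nearest-neighbour sampling, so every output pixel is filled either from the source image or with a background value. The helper name `rotate`, the list-of-lists image format and the zero fill value are assumptions for illustration, not the authors' implementation.

```python
import math


def rotate(image, angle_deg, fill=0):
    """Hypothetical helper: rotate a 2-D image about its centre.

    Because the row index y grows downward, a positive angle appears
    clockwise on screen. Pixels that map outside the source get `fill`.
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse-map the output pixel (x, y) back into the source image.
            dx, dy = x - cx, y - cy
            sx = cos_a * dx + sin_a * dy + cx
            sy = -sin_a * dx + cos_a * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
    return out
```

For segmentation training, the same angle would be applied to the label mask so that image and annotation stay aligned.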

3.1.3. Translation Transformation
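A translation shifts the image content by a fixed offset and fills the vacated border. The sketch below is a hypothetical helper, assuming integer pixel offsets and a zero background.

```python
def translate(image, dx, dy, fill=0):
    """Hypothetical helper: shift content right by dx and down by dy pixels.

    Pixels uncovered by the shift are set to `fill`; content shifted past
    the border is discarded.
    """
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy  # source pixel that lands on (x, y)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out
```

As with rotation, the identical offset would be applied to the label mask.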

3.1.4. Scaling Transformation
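Scaling can be sketched as a nearest-neighbour resize by a factor. This is an illustrative helper under assumptions: the interpolation method is not stated in this text, and in a training pipeline the result would typically be cropped or padded back to the network input size.

```python
def scale(image, factor):
    """Hypothetical helper: nearest-neighbour rescale of a 2-D image.

    The output size is the input size times `factor`, rounded, and at least 1.
    Nearest neighbour keeps label masks binary when applied to them too.
    """
    h, w = len(image), len(image[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [[image[min(h - 1, int(y / factor))][min(w - 1, int(x / factor))]
             for x in range(new_w)]
            for y in range(new_h)]
```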

3.2. Improved U-Net Network Structure

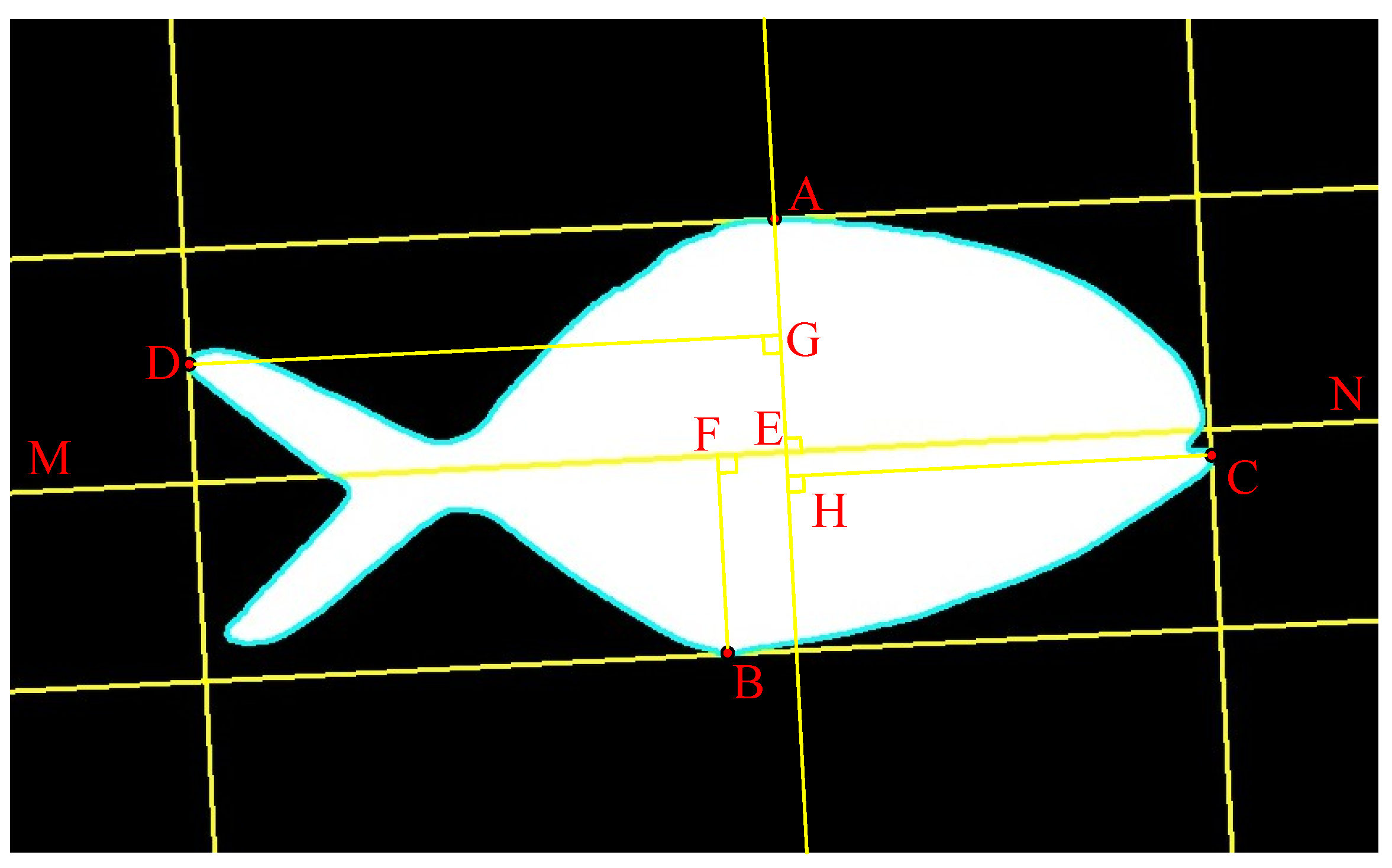
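The effect of replacing a 3 × 3 convolution with a 3 × 3 dilated convolution (dilation rate 2) can be checked with the standard receptive-field recurrence and the usual output-size formula (the relation between receptive field F, input feature size and output feature size listed in the abbreviations). The sketch below uses hypothetical helper names and describes layers as `(kernel, dilation, stride)` tuples; which exact layers of U-net are replaced is shown in the paper's figure and is not assumed here.

```python
def effective_kernel(k, d):
    """Spatial extent covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def receptive_field(layers):
    """Receptive field of stacked conv layers given as (kernel, dilation, stride).

    Standard recurrence: r_l = r_{l-1} + (k_eff - 1) * j_{l-1}, j_l = j_{l-1} * s,
    starting from r_0 = 1 and j_0 = 1.
    """
    rf, jump = 1, 1
    for k, d, s in layers:
        rf += (effective_kernel(k, d) - 1) * jump
        jump *= s
    return rf


def output_size(n_in, k, d=1, s=1, p=0):
    """Output feature size along one axis for input size n_in and padding p."""
    return (n_in + 2 * p - d * (k - 1) - 1) // s + 1
```

A single 3 × 3 layer sees a 3 × 3 window, while the dilated version sees 5 × 5 with the same 9 weights; `receptive_field([(3, 1, 1)])` gives 3 and `receptive_field([(3, 2, 1)])` gives 5. The 1 × 1 convolution does not change the receptive field (`(1, 1, 1)` adds 0); it only recombines channels. Setting the padding equal to the dilation rate keeps the feature size unchanged for a 3 × 3 kernel, e.g. `output_size(64, 3, d=2, p=2)` is 64.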

3.3. Line Fitting Scheme

Algorithm 1. Pseudocode description of the line fitting scheme.

Input: … The equation of line MN fitted by the least squares method is …
Output: …
Step 1: … Obtain the body_width.
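Since Algorithm 1 is only partially recoverable, the following is a hedged sketch of the general idea rather than the authors' procedure. It assumes (hypothetically) that line MN is fitted by ordinary least squares, y = kx + b, to a set of axis points of the segmented fish, and that BL and BW are the extents of the mask pixels along MN and perpendicular to it. The function names and the axis-point input are illustrative assumptions.

```python
import math


def fit_line_least_squares(points):
    """Ordinary least squares fit of y = k*x + b to (x, y) points."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("all x are equal; y = kx + b cannot describe a vertical line")
    k = (n * sxy - sx * sy) / denom
    b = (sy - k * sx) / n
    return k, b


def measure_along_axis(axis_points, mask_points):
    """Hypothetical BL/BW estimate in pixels.

    Fits line MN to axis_points, then projects every mask pixel onto the
    line direction (BL) and onto its normal (BW). Extents are measured
    between the extreme pixel centres.
    """
    k, _ = fit_line_least_squares(axis_points)
    norm = math.hypot(1.0, k)
    ux, uy = 1.0 / norm, k / norm  # unit vector along MN
    vx, vy = -uy, ux               # unit normal to MN
    along = [x * ux + y * uy for x, y in mask_points]
    across = [x * vx + y * vy for x, y in mask_points]
    return max(along) - min(along), max(across) - min(across)
```

Regressing y on x breaks down for near-vertical fish; swapping the roles of x and y in that case, or fitting the principal axis instead, avoids the singular case. Converting the pixel extents to physical units would additionally require camera calibration.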

4. Experimental Results and Analysis

4.1. Experimental Environment and Parameter Settings

4.2. Evaluation Indicators

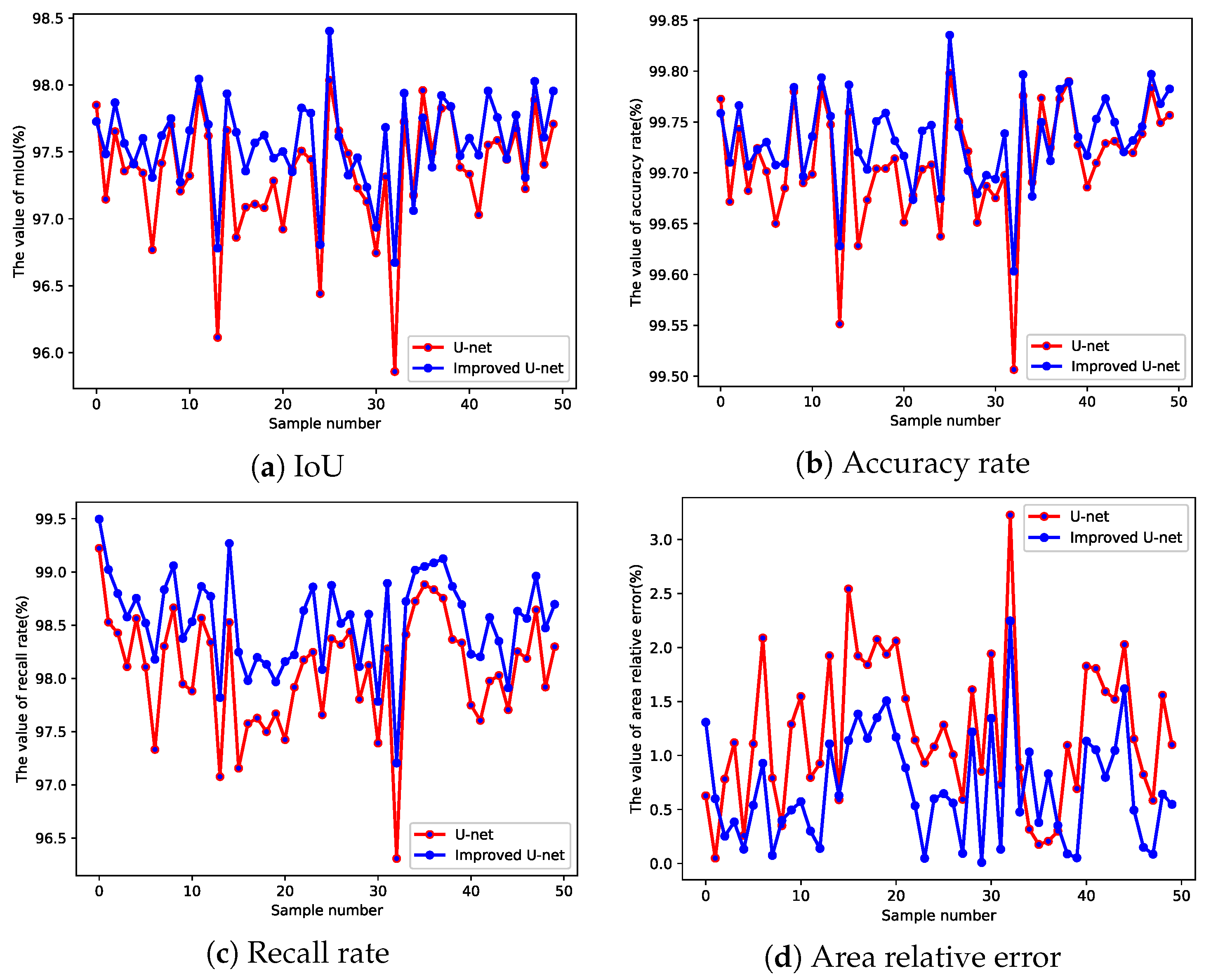
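The indicators reported in the result tables can be computed per image from a binary predicted mask and a binary ground-truth mask, then averaged over the test images. The sketch below uses the standard definitions as an assumption: in particular it reads "accuracy rate" as pixel accuracy, and the area relative error as the absolute difference between predicted and true fish area divided by the true area. The function name is hypothetical.

```python
def segmentation_metrics(pred, gt):
    """Metrics for equal-sized 2-D 0/1 masks (1 = fish, 0 = background).

    Assumes both classes are present so that no denominator is zero.
    """
    tp = fp = fn = tn = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
            else:
                tn += 1
    iou_fish = tp / (tp + fp + fn)
    iou_background = tn / (tn + fn + fp)
    return {
        "mIoU": (iou_fish + iou_background) / 2,   # mean over the two classes
        "pixel_accuracy": (tp + tn) / (tp + fp + fn + tn),
        "recall": tp / (tp + fn),
        "area_relative_error": abs((tp + fp) - (tp + fn)) / (tp + fn),
    }
```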

4.3. Improved U-Net Performance Verification

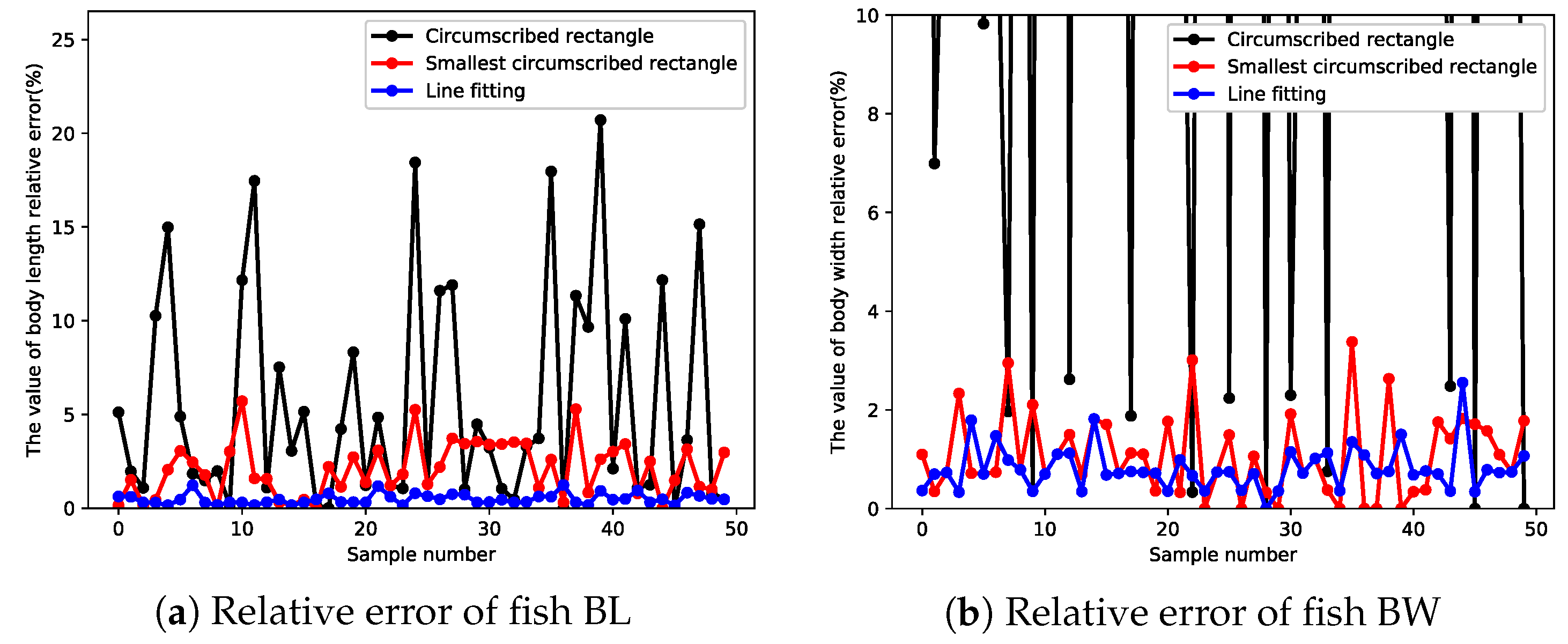

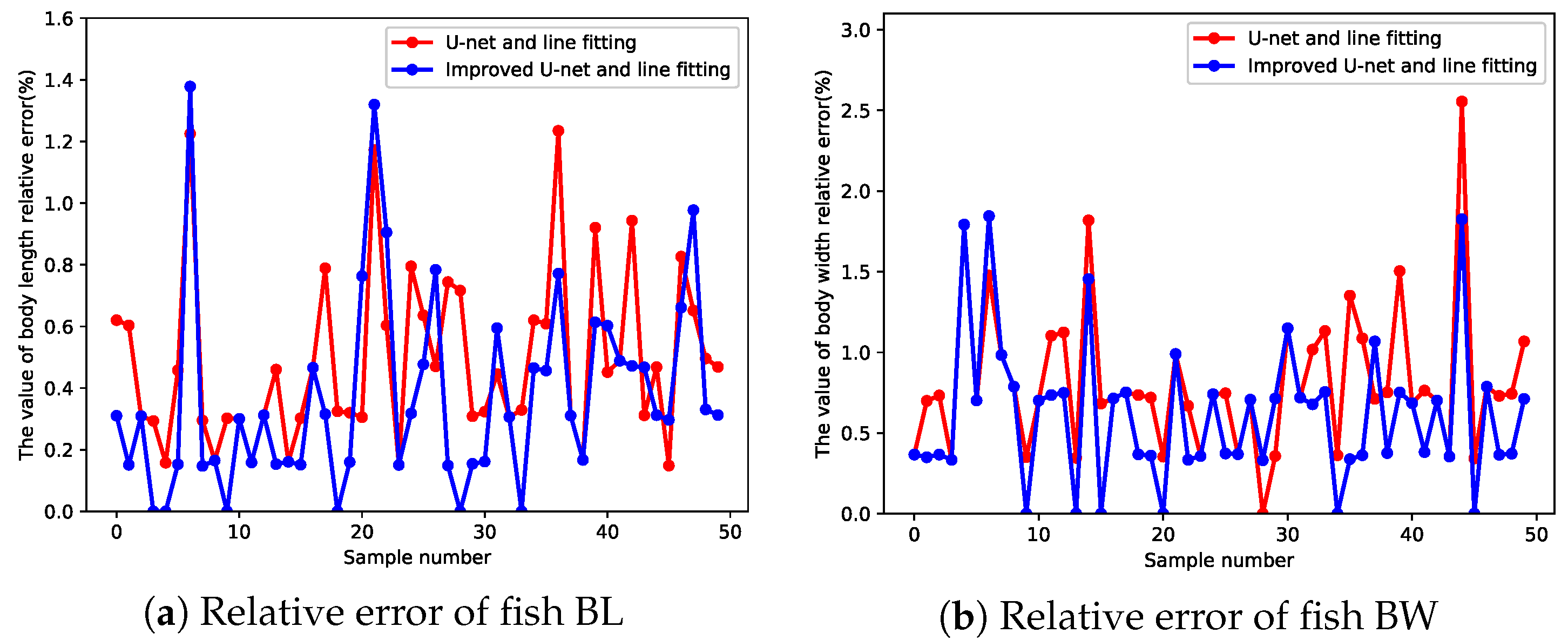

4.4. Feature Measurement for Tilted Fish
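Why an upright circumscribed rectangle overestimates the width of a tilted fish can be illustrated with an idealized rectangular "fish" of known length and width. This is a geometric illustration under that simplifying assumption, with hypothetical helper names; it does not reproduce the paper's data.

```python
import math


def rotated_rectangle_corners(length, width, angle_deg):
    """Corners of a length x width rectangle centred at the origin, rotated by angle_deg."""
    a = math.radians(angle_deg)
    ca, sa = math.cos(a), math.sin(a)
    half = [(length / 2, width / 2), (-length / 2, width / 2),
            (-length / 2, -width / 2), (length / 2, -width / 2)]
    return [(x * ca - y * sa, x * sa + y * ca) for x, y in half]


def axis_aligned_box_estimate(points):
    """BL and BW read off the upright circumscribed rectangle (longer side = BL)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    w, h = max(xs) - min(xs), max(ys) - min(ys)
    return max(w, h), min(w, h)
```

For a 100 × 20 rectangle tilted by 30°, the upright box gives BL ≈ 96.6 and BW ≈ 67.3 instead of 100 and 20: the width absorbs a component of the body length, which is consistent with the large BW error of the circumscribed rectangle scheme compared with the rotated (smallest) rectangle and line fitting schemes.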

5. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Antonio, S.; Rodolfo, P.; del Giudice, A.; Francesco, L.; Paolo, M.; Enrico, S.; Alberto, A. Semi-Automatic Guidance vs. Manual Guidance in Agriculture: A Comparison of Work Performance in Wheat Sowing. Electronics 2021, 10, 825.

- Ania, C.; Samuel, S. Use and Adaptations of Machine Learning in Big Data—Applications in Real Cases in Agriculture. Electronics 2021, 10, 552.

- Hu, Z.H.; Zhang, Y.R.; Zhao, Y.C.; Xie, M.S.; Zhong, J.Z.; Tu, Z.G.; Liu, J.T. A Water Quality Prediction Method Based on the Deep LSTM Network Considering Correlation in Smart Mariculture. Sensors 2019, 19, 1420.

- Liu, J.T.; Yu, C.; Hu, Z.H.; Zhao, Y.C.; Bai, Y.; Xie, M.S.; Luo, J. Accurate Prediction Scheme of Water Quality in Smart Mariculture With Deep Bi-S-SRU Learning Network. IEEE Access 2020, 8, 24784–24798.

- Li, D.L.; Liu, C. Recent advances and future outlook for artificial intelligence in aquaculture. Smart Agric. 2020, 2, 1–20.

- Hu, Z.H.; Li, R.Q.; Xia, X.; Yu, C.; Fan, X.; Zhao, Y.C. A method overview in smart aquaculture. Environ. Monit. Assess. 2020, 192, 1–25.

- Wageeh, Y.; Mohamed, H.E.D.; Fadl, A.; Anas, O.; ElMasry, N.; Nabil, A.; Atia, A. YOLO fish detection with Euclidean tracking in fish farms. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 5–12.

- Hu, J.; Zhao, D.; Zhang, Y.; Zhou, C.; Chen, W. Real-time nondestructive fish behavior detecting in mixed polyculture system using deep-learning and low-cost devices. Expert Syst. Appl. 2021, 178, 115051.

- Wu, H.; He, S.; Deng, Z.; Kou, L.; Huang, K.; Suo, F.; Cao, Z. Fishery monitoring system with AUV based on YOLO and SGBM. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 4726–4731.

- Liu, S.; Li, X.; Gao, M.; Cai, Y.; Rui, N.; Li, P.; Yan, T.; Lendasse, A. Embedded online fish detection and tracking system via YOLOv3 and parallel correlation filter. In Proceedings of the OCEANS 2018 MTS/IEEE Charleston, Charleston, SC, USA, 22–25 October 2018; pp. 1–6.

- Hao, M.M.; Yu, H.L.; Li, D.L. The measurement of fish size by machine vision—A review. IFIP Adv. Inf. Commun. Technol. 2016, 479, 15–32.

- An, A.Q.; Yu, Z.T.; Wang, H.Q.; Nie, Y.F. Application of machine vision technology in agricultural machinery. J. Anhui Agric. Sci. 2007, 12, 3748–3749.

- Yu, X.J.; Wu, X.F.; Wang, J.P.; Chen, L.; Wang, L. Rapid Detecting Method for Pseudosciaena crocea Morphological Parameters Based on the Machine Vision. J. Integr. Technol. 2014, 3, 45–51.

- Hu, Z.H.; Zhang, Y.R.; Zhao, Y.C.; Cao, L.; Bai, Y.; Huang, M.X. Fish eye recognition based on weighted constraint AdaBoost and pupil diameter automatic measurement with improved Hough circle transform. Trans. Chin. Soc. Agric. Eng. 2017, 33, 226–232.

- Hu, Z.H.; Cao, L.; Zhang, Y.R.; Zhao, Y.C. Study on fish caudal peduncle measuring method based on image processing and linear fitting. Fish. Mod. 2017, 44, 43–49.

- Yao, H.; Duan, Q.L.; Li, D.L.; Wang, J.P. An improved K-means clustering algorithm for fish image segmentation. Math. Comput. Model. 2013, 58, 790–798.

- Cook, D.; Middlemiss, K.; Jaksons, P.; Davison, W.; Jerrett, A. Validation of fish length estimations from a high frequency multi-beam sonar (ARIS) and its utilisation as a field-based measurement technique. Fish. Res. 2019, 218, 59–68.

- Yu, C.; Fan, X.; Hu, Z.H.; Xia, X.; Zhao, Y.C.; Li, R.Q.; Bai, Y. Segmentation and measurement scheme for fish morphological features based on Mask R-CNN. Inf. Process. Agric. 2020, 7, 523–534.

- Tseng, C.H.; Hsieh, C.L.; Kuo, Y.F. Automatic measurement of the body length of harvested fish using convolutional neural networks. Biosyst. Eng. 2020, 189, 36–47.

- Hu, Z.H.; Cao, L.; Zhang, Y.R.; Zhao, Y.C.; Huang, M.X.; Xie, M.S. Study on eye feature detection method of Trachinotus ovatus based on computer vision. Fish. Mod. 2017, 44, 15–23.

- He, L.F.; Ren, X.W.; Zhao, X.; Yao, B.; Kasuya, H.; Chao, Y.Y. An efficient two-scan algorithm for computing basic shape features of objects in a binary image. J. Real Time Image Process. 2019, 16, 1277–1287.

- Xu, S.Y.; Peng, C.L.; Chen, K.; Wang, L.Q.; Ren, X.F.; Duan, H.B. Measurement Method of Wheat Stalks Cross Section Parameters Based on Sector Ring Region Image Segmentation. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2018, 49, 53–59.

- Arbeláez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour Detection and Hierarchical Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–916.

- Petkovic, T.; Lončarić, S. Using Gradient Orientation to Improve Least Squares Line Fitting. In Proceedings of the 2014 Canadian Conference on Computer and Robot Vision, Montreal, QC, Canada, 6–9 May 2014; pp. 226–231.

- Fu, Y.; Li, X.T.; Ye, Y.M. A multi-task learning model with adversarial data augmentation for classification of fine-grained images. Neurocomputing 2020, 377, 122–129.

- Pandey, P.; Dewangan, K.K.; Dewangan, D.K. Enhancing the quality of satellite images by preprocessing and contrast enhancement. In Proceedings of the 2017 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 6–8 April 2017; pp. 56–60.

- Zhu, Q.Y.; Li, T.T. Semi-supervised learning method based on predefined evenly-distributed class centroids. Appl. Intell. 2020.

- Matsumoto, M. Cognition-based contrast adjustment using neural network based face recognition system. In Proceedings of the 2010 IEEE International Symposium on Industrial Electronics, Bari, Italy, 4–7 July 2010; pp. 3590–3594.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Wu, Y.P.; Jin, W.D.; Ren, J.X.; Sun, Z. A multi-perspective architecture for high-speed train fault diagnosis based on variational mode decomposition and enhanced multi-scale structure. Appl. Intell. 2019, 49, 3923–3937.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Chen, L.C.; Zhu, Y.K.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 833–851.

- Lin, G.M.; Wu, Q.W.; Qiu, L.D.; Huang, X.X. Image super-resolution using a dilated convolutional neural network. Neurocomputing 2018, 275, 1219–1230.

- Yu, F.; Koltun, V.; Funkhouser, T. Dilated residual networks. arXiv 2017, arXiv:1705.09914.

- Ma, J.J.; Dai, Y.P.; Tan, Y.P. Atrous convolutions spatial pyramid network for crowd counting and density estimation. Neurocomputing 2019, 350, 91–101.

- He, K.M.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018, arXiv:1703.06870.

- Lin, M.; Chen, Q.; Yan, S.C. Network In Network. arXiv 2014, arXiv:1312.4400.

- Szegedy, C.; Liu, W.; Jia, Y.Q.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 770–778.

- Hu, X.L.; Chen, Q.Y.; Shen, J. Study on Morphological characteristics and correlation analysis of Trachurus japonicus from southern East China Sea. Acta Zootaxonomica Sin. 2013, 38, 407–412.

- Song, S.M.; Wang, M.; Hu, X.W. Data reconstruction for nanosensor by using moving least square method. Process. Autom. Instrum. 2010, 31, 16–18.

- Mir, A.; Nasiri, J.A. KNN-based least squares twin support vector machine for pattern classification. Appl. Intell. 2018, 48, 4551–4564.

- Hsu, T.S.; Wang, T.C. An improvement stereo vision images processing for object distance measurement. Int. J. Autom. Smart Technol. 2015, 5, 85–90.

- Torralba, A.; Russell, B.C.; Yuen, J. LabelMe: Online Image Annotation and Applications. Proc. IEEE 2010, 98, 1467–1484.

- Zhang, H.; Zhang, H.; Wang, C.; Xie, J. Co-Occurrent Features in Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 548–557.

- Ma, F. Research on Image Annotation Based on Regional Segmentation; National University of Defense Technology: Changsha, China, 2017.

- Wang, L.Q.; Li, S.P.; Lv, Z.J. Study of tool wear extent monitoring based on contour extraction. Manuf. Technol. Mach. Tool 2019, 11, 94–98.

- Qin, Y.; Na, Q.C.; Liu, F.; Wu, H.B.; Sun, K. Strain gauges position based on machine vision positioning. Integr. Ferroelectr. 2019, 200, 191–198.

- Xiao, S.G. Position and Attitude Determination Based on Deep Learning for Object Grasping; Harbin Engineering University: Harbin, China, 2019.

| Abbreviations and Symbols | Explanation |
|---|---|
| mIoU | Mean Intersection over Union |
| IoU | Intersection over Union |
| BL | Body Length |
| BW | Body Width |
| BA | Body Area |
| YOLO | You Only Look Once |
| F | Receptive field |
| … | Output feature size |
| … | Input feature size |

| Model | mIoU (%) | Average Accuracy Rate (%) | Average Recall Rate (%) | Average Area Relative Error (%) |
|---|---|---|---|---|
| U-net | 97.56 | 99.73 | 98.14 | 1.29 |
| Improved U-net | 97.66 | 99.74 | 98.55 | 0.72 |

| Model | mIoU (%) | Average Accuracy Rate (%) | Average Recall Rate (%) | Average Area Relative Error (%) |
|---|---|---|---|---|
| U-net | 97.35 | 99.71 | 98.08 | 1.20 |
| Improved U-net | 97.57 | 99.73 | 98.54 | 0.69 |

| Scheme | U-net: Average Relative Error of BL (%) | U-net: Average Relative Error of BW (%) | Improved U-net: Average Relative Error of BL (%) | Improved U-net: Average Relative Error of BW (%) |
|---|---|---|---|---|
| Circumscribed rectangle | 5.56 | 39.91 | 5.56 | 40.08 |
| Smallest circumscribed rectangle | 2.07 | 1.09 | 1.99 | 1.07 |
| Line fitting | 0.49 | 0.81 | 0.37 | 0.61 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, C.; Hu, Z.; Han, B.; Wang, P.; Zhao, Y.; Wu, H. Intelligent Measurement of Morphological Characteristics of Fish Using Improved U-Net. Electronics 2021, 10, 1426. https://doi.org/10.3390/electronics10121426
