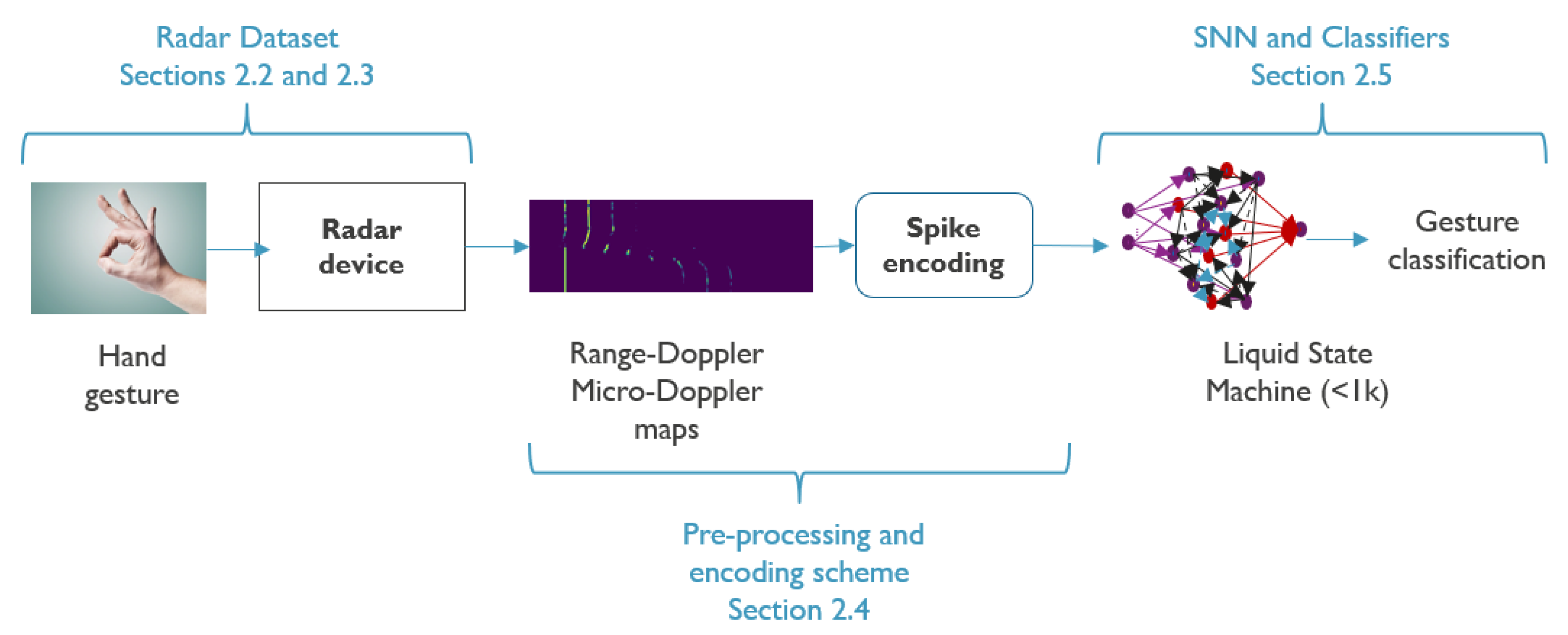

Radar-Based Hand Gesture Recognition Using Spiking Neural Networks

Abstract

1. Introduction

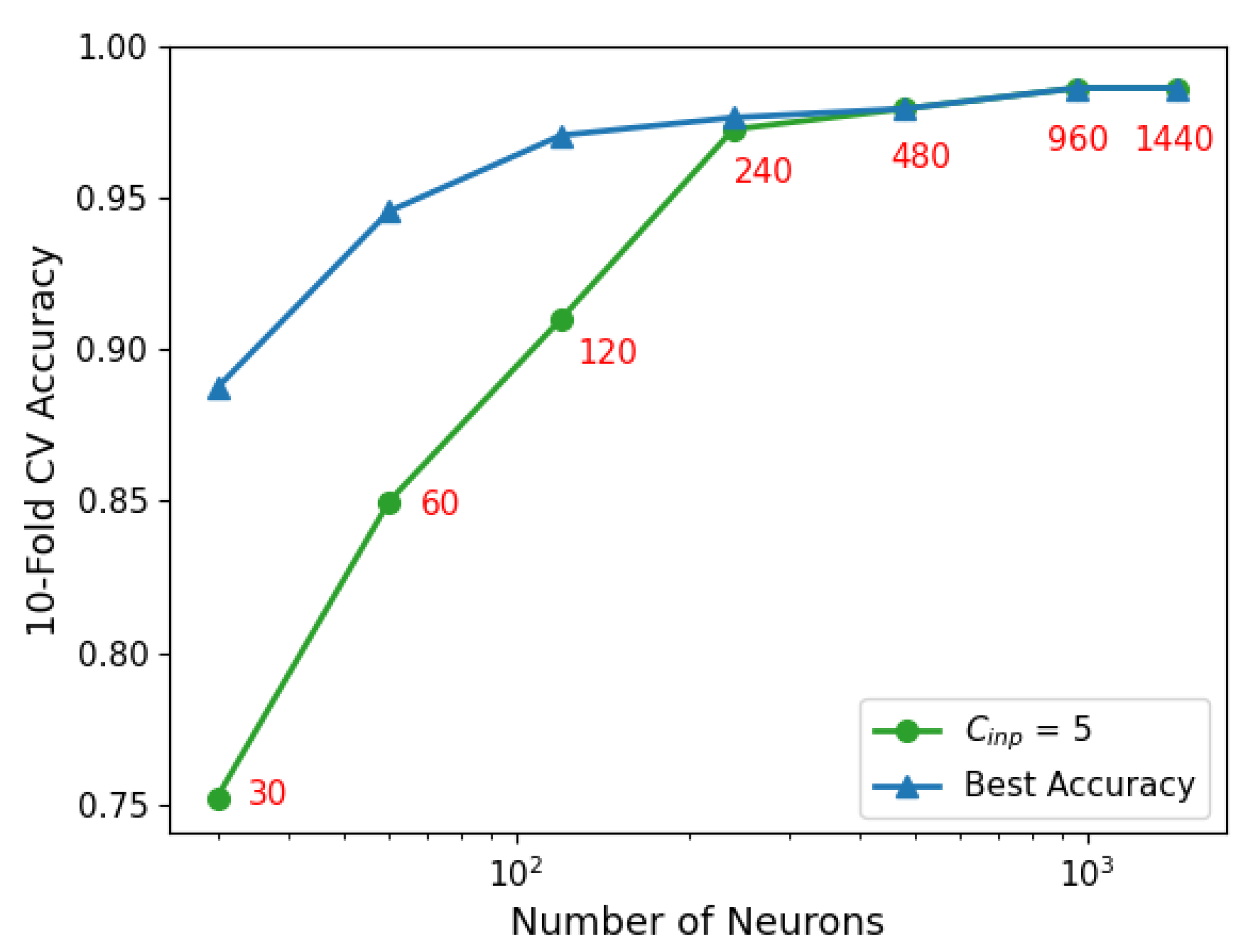

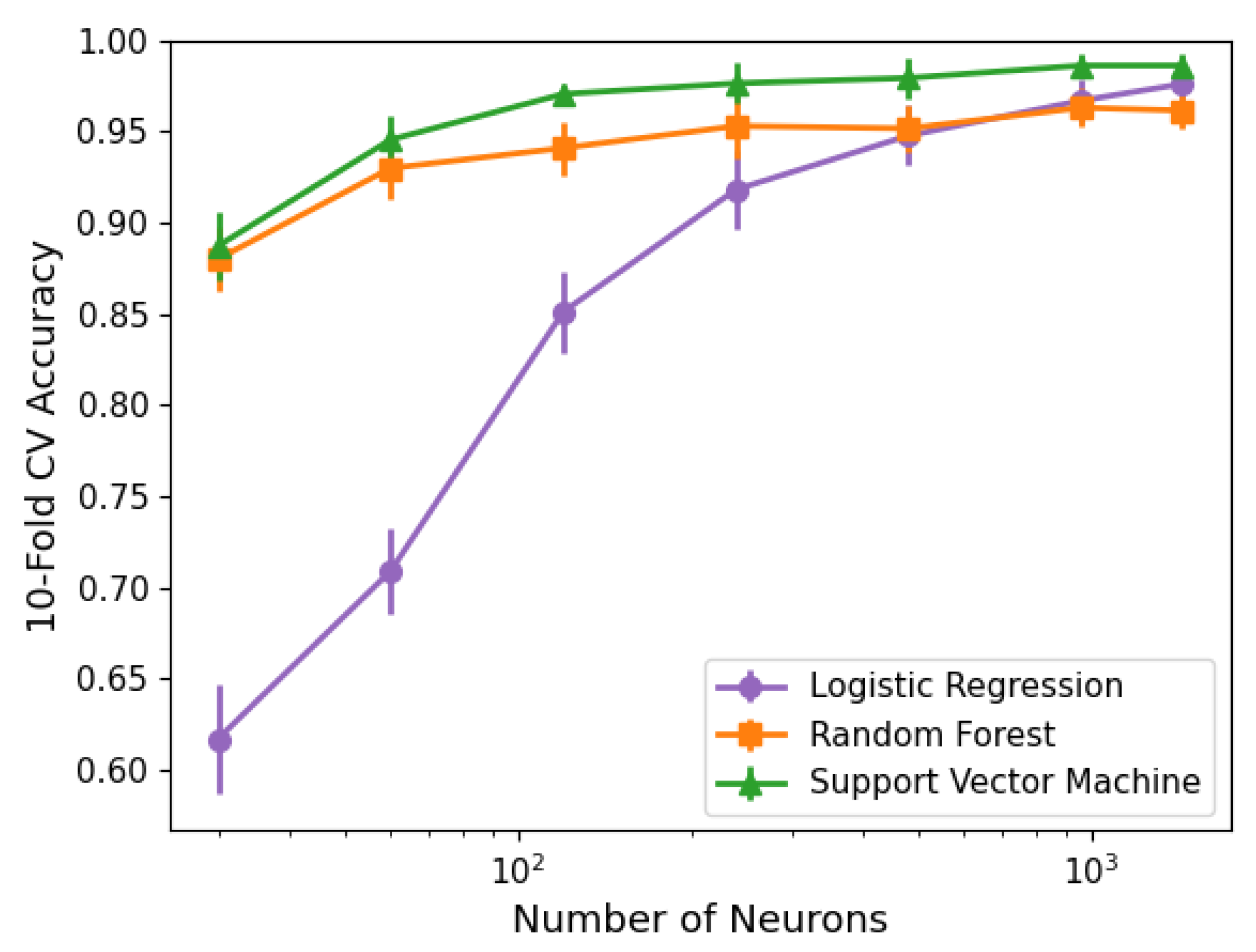

- We introduce a novel radar-signal-to-spike representation which, when used as input to a liquid state machine (LSM) with a classifier, achieves better than state-of-the-art results for radar-based hand gesture recognition (HGR).

- We present a much simpler processing pipeline for gesture recognition than most state-of-the-art neural-network solutions, with a small footprint in terms of neurons and synapses and therefore great potential for energy efficiency. It is based on an LSM, in which training is performed only at the readout layer, which simplifies the overall learning procedure and allows easy personalization.

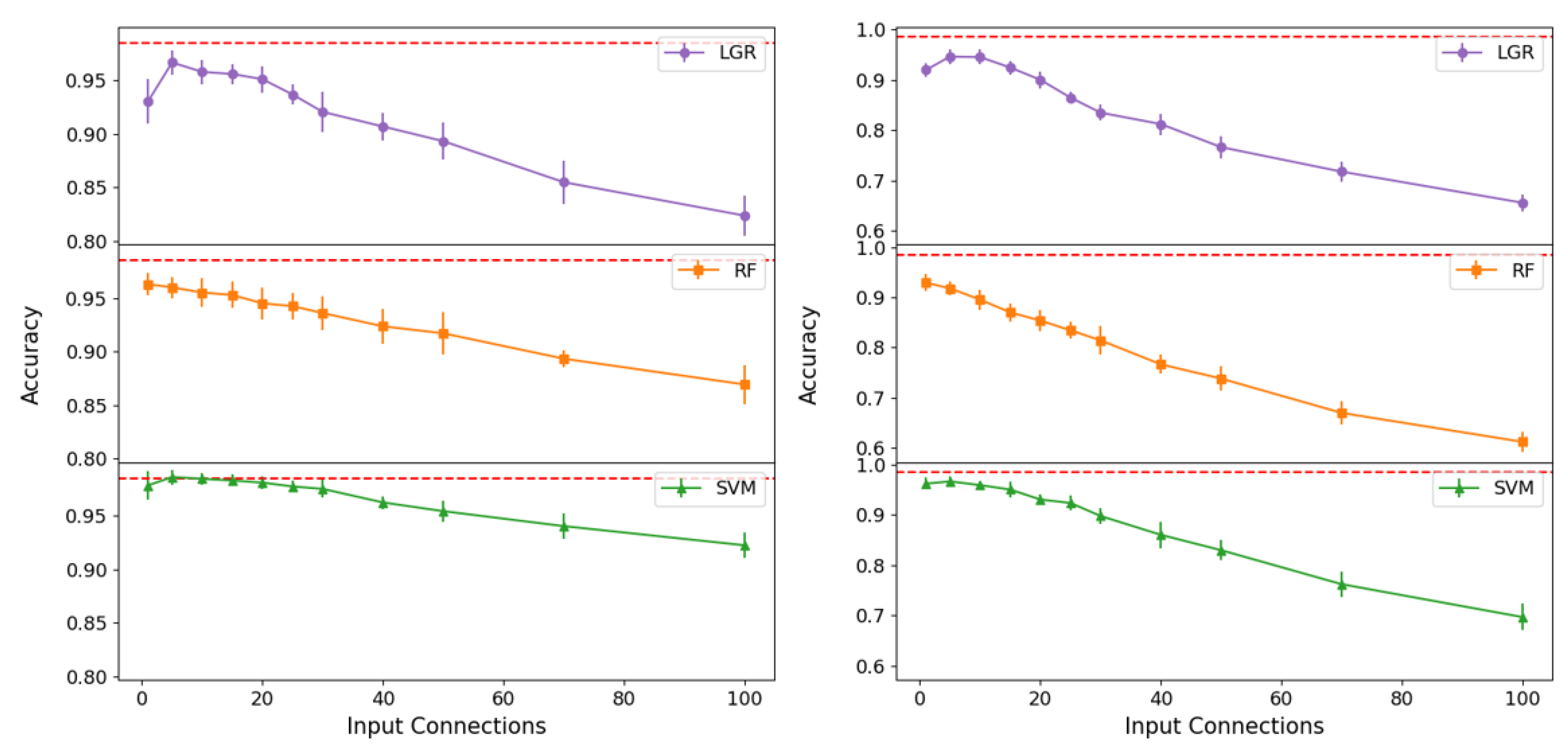

- We analyze the performance of our approach through extensive simulations that show how the network size, the input signal, and the choice of classifier affect the system’s overall capability.
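To make the "training only at the readout layer" idea concrete, the following is a minimal toy sketch in plain Python. It is not the model used in this work: the neuron model (a simple leaky integrate-and-fire update), the connection probability, the weight ranges, the perceptron readout, and all function names (`make_reservoir`, `liquid_state`, `train_readout`, `predict`) are assumptions chosen for illustration only. The point it shows is that the input and recurrent weights are drawn once and never updated, while only the readout weights are learned from the liquid's spike counts.

```python
import random

def make_reservoir(n_inputs, n_neurons, p_connect=0.2, seed=0):
    """Draw fixed random input and recurrent weights (never trained)."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(0.0, 1.0) if rng.random() < p_connect else 0.0
             for _ in range(n_inputs)] for _ in range(n_neurons)]
    w_rec = [[rng.uniform(-0.5, 0.5) if (i != j and rng.random() < p_connect) else 0.0
              for j in range(n_neurons)] for i in range(n_neurons)]
    return w_in, w_rec

def liquid_state(spikes, w_in, w_rec, leak=0.9, threshold=1.0):
    """Run leaky integrate-and-fire neurons; return per-neuron spike counts."""
    n = len(w_in)
    v = [0.0] * n          # membrane potentials
    prev = [0] * n         # reservoir spikes from the previous time step
    counts = [0] * n
    for frame in spikes:   # frame: list of 0/1 input spikes at one time step
        new = [0] * n
        for i in range(n):
            drive = sum(w * s for w, s in zip(w_in[i], frame))
            drive += sum(w * s for w, s in zip(w_rec[i], prev))
            v[i] = leak * v[i] + drive
            if v[i] >= threshold:   # fire and reset
                new[i] = 1
                v[i] = 0.0
                counts[i] += 1
        prev = new
    return counts

def predict(w, x):
    """Return the class whose readout unit has the highest score."""
    scores = [sum(wk * xk for wk, xk in zip(row, x + [1])) for row in w]
    return scores.index(max(scores))

def train_readout(states, labels, n_classes, epochs=20, lr=0.1):
    """Multi-class perceptron on liquid states: the only trained weights."""
    dim = len(states[0])
    w = [[0.0] * (dim + 1) for _ in range(n_classes)]  # +1 for a bias term
    for _ in range(epochs):
        for x, y in zip(states, labels):
            pred = predict(w, x)
            if pred != y:  # move the true class up, the wrong guess down
                for k, xk in enumerate(x + [1]):
                    w[y][k] += lr * xk
                    w[pred][k] -= lr * xk
    return w

# Toy usage: two hypothetical "gestures" that drive different input channels.
rng = random.Random(1)
w_in, w_rec = make_reservoir(n_inputs=4, n_neurons=20)

def toy_gesture(label, steps=30):
    return [[int(rng.random() < 0.6) if c // 2 == label else 0 for c in range(4)]
            for _ in range(steps)]

labels = [0, 1] * 10
states = [liquid_state(toy_gesture(y), w_in, w_rec) for y in labels]
readout = train_readout(states, labels, n_classes=2)
```

Because the reservoir is fixed, adapting such a system to a new user would only require re-running `train_readout` on that user's liquid states, which is the property the personalization claim above relies on.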

2. Datasets, Spike Encodings, and Network Configuration

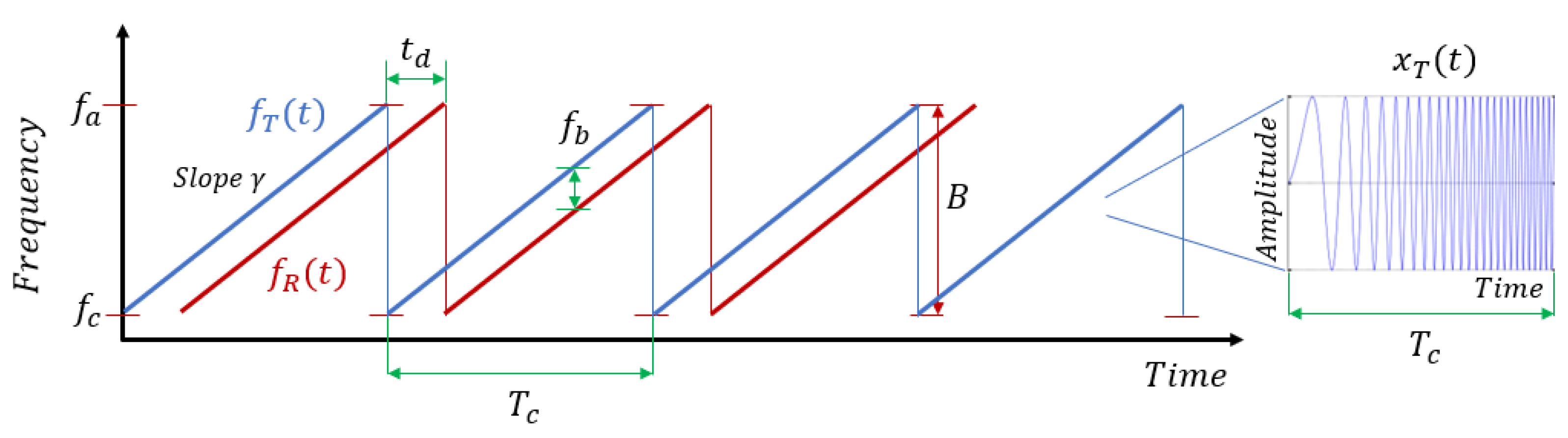

2.1. Radar System

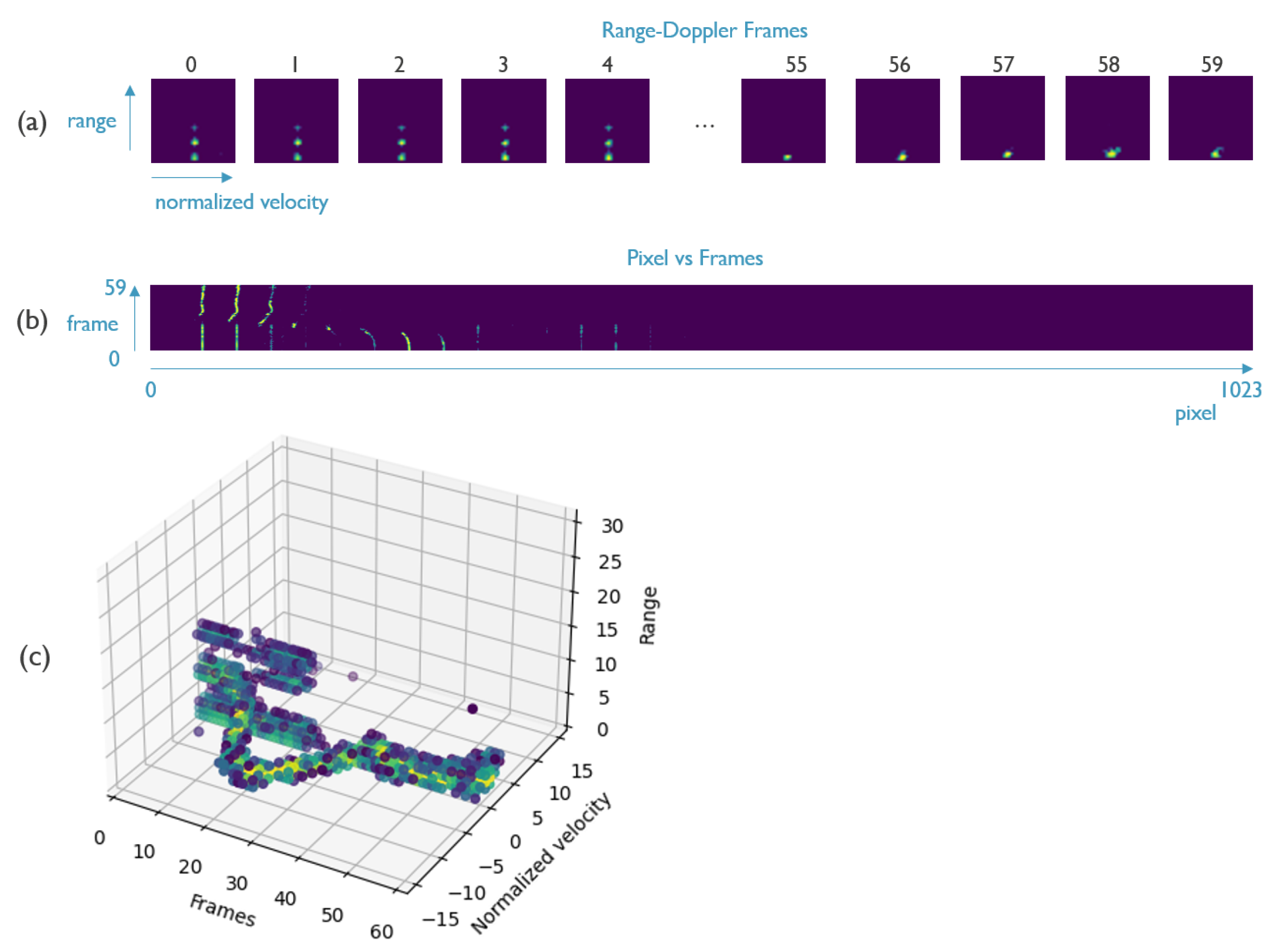

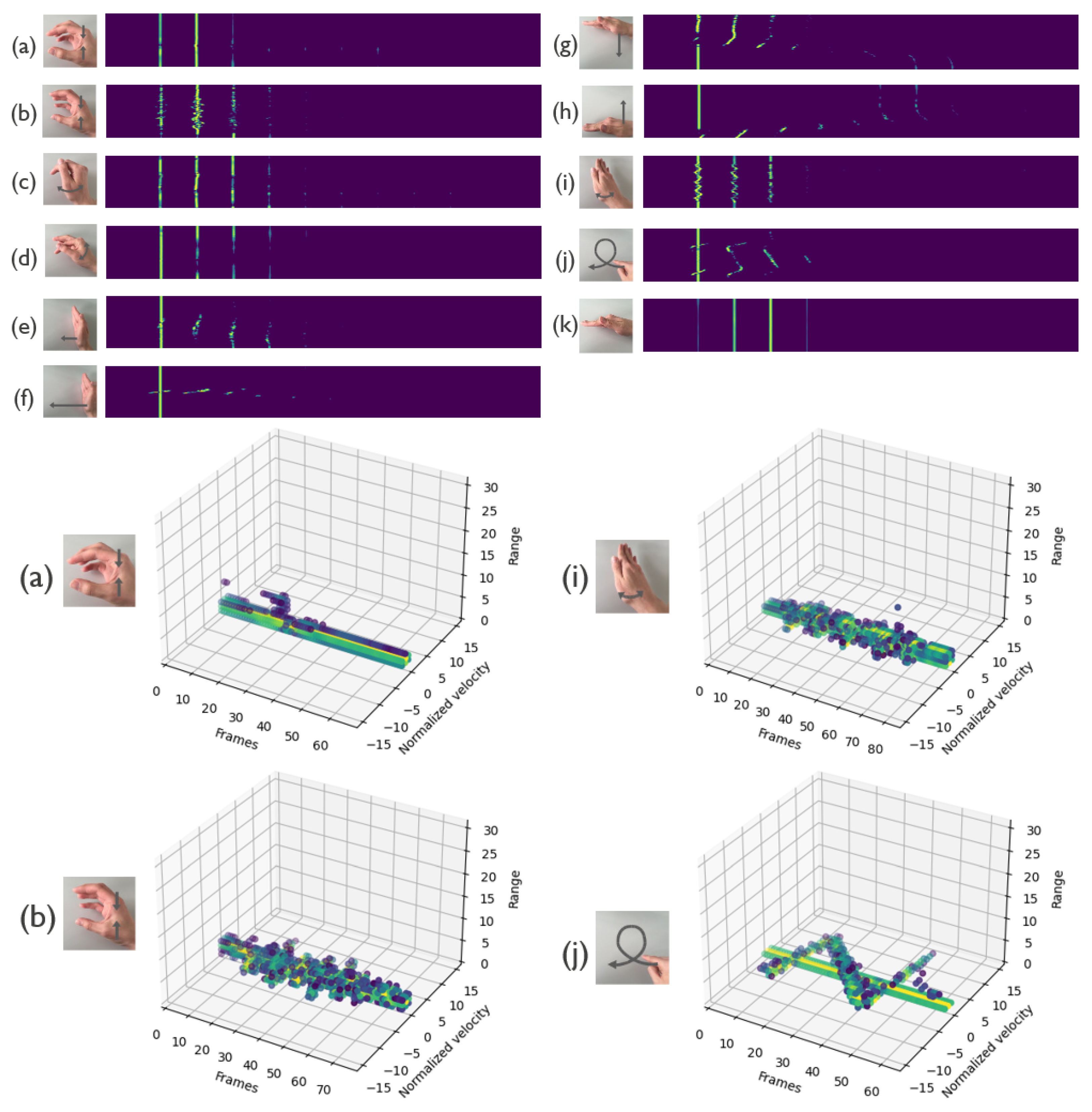

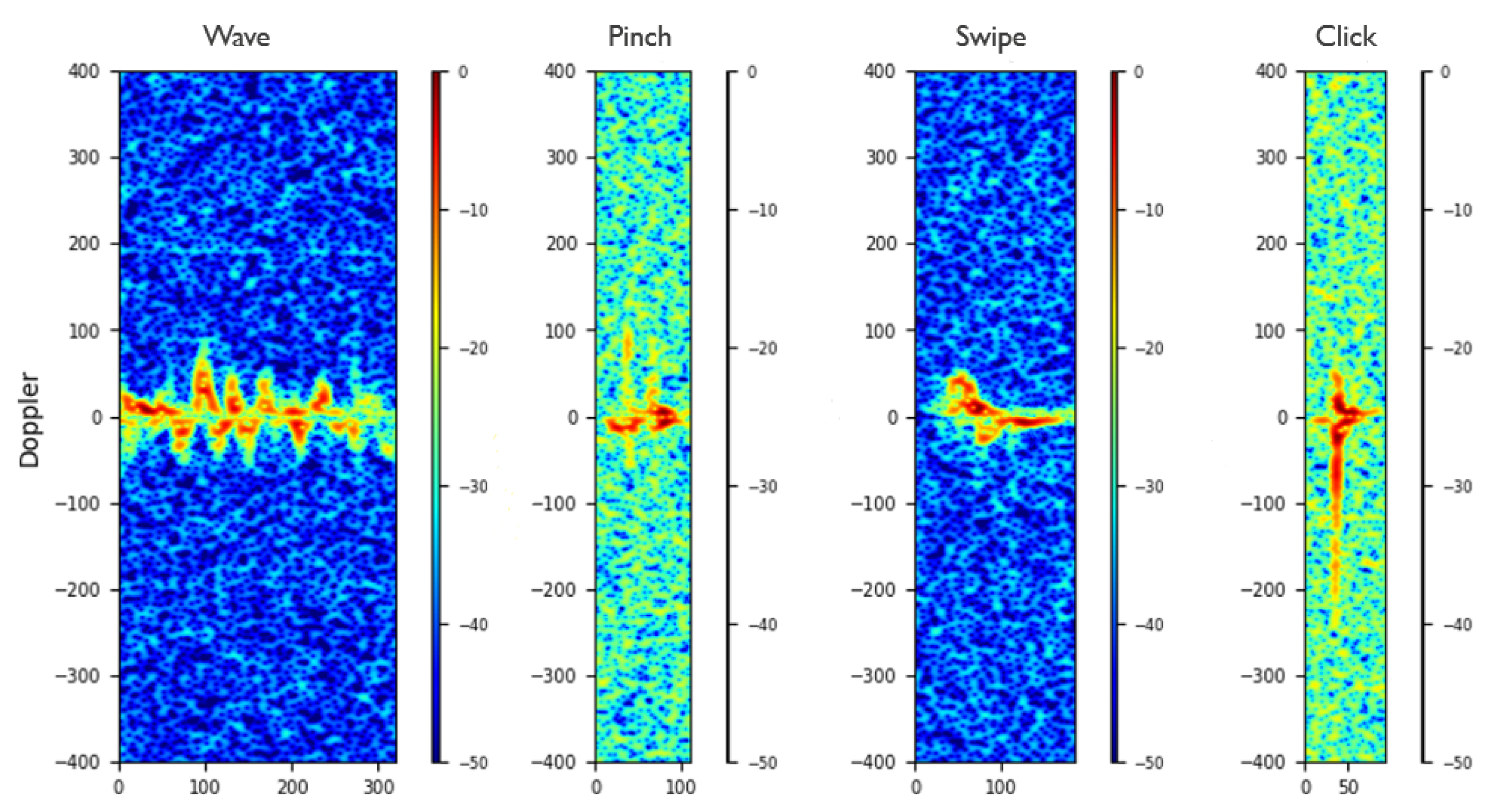

2.2. Soli Dataset

2.3. Dop-NET Dataset

2.4. Data and Spike Train Representation

2.5. Liquid State Machine

3. Experiments and Results

- K-fold cross-validation: partitioning the complete dataset into k complementary subsets, training on k−1 subsets and testing on the remaining one, and repeating this k times so that each subset serves once as the test set.

- Leave-one-subject-out cross-validation: training the system on all but one participant and testing on the remaining participant. It indicates how well a system pre-trained on several users would perform for unknown users.

- Leave-one-session-out cross-validation: training the system for a particular user only, using all but one of the user’s sessions for training and testing on the remaining one. It indicates how well the system could be used on a personal device trained for just one particular user.
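The three evaluation schemes above differ only in how sample indices are split into training and test sets. The sketch below illustrates this in plain Python; the function names and the toy `subjects` and `sessions` labels are hypothetical and are not taken from the datasets, and no shuffling is applied, which a real experiment would normally add.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_idx, test_idx) for k complementary folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

def leave_one_group_out(groups):
    """Yield (held_out, train_idx, test_idx), holding out one group at a time."""
    for held_out in sorted(set(groups)):
        test = [i for i, g in enumerate(groups) if g == held_out]
        train = [i for i, g in enumerate(groups) if g != held_out]
        yield held_out, train, test

def leave_one_session_out(subjects, sessions, subject):
    """Restrict to one subject, then hold out each of that subject's sessions."""
    own = [i for i, s in enumerate(subjects) if s == subject]
    own_sessions = [sessions[i] for i in own]
    for held_out, train, test in leave_one_group_out(own_sessions):
        # Map positions within the subject's data back to global indices.
        yield held_out, [own[i] for i in train], [own[i] for i in test]

# Toy labels: two subjects, two recording sessions each, two samples per session.
subjects = ["A", "A", "A", "A", "B", "B", "B", "B"]
sessions = [0, 0, 1, 1, 0, 0, 1, 1]

folds = list(k_fold_splits(n_samples=10, k=5))
by_subject = list(leave_one_group_out(subjects))
by_session = list(leave_one_session_out(subjects, sessions, subject="B"))
```

Leave-one-subject-out is simply `leave_one_group_out` with the subject label as the group. In practice, scikit-learn's `KFold` and `LeaveOneGroupOut` classes in `sklearn.model_selection` provide equivalent splitters.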

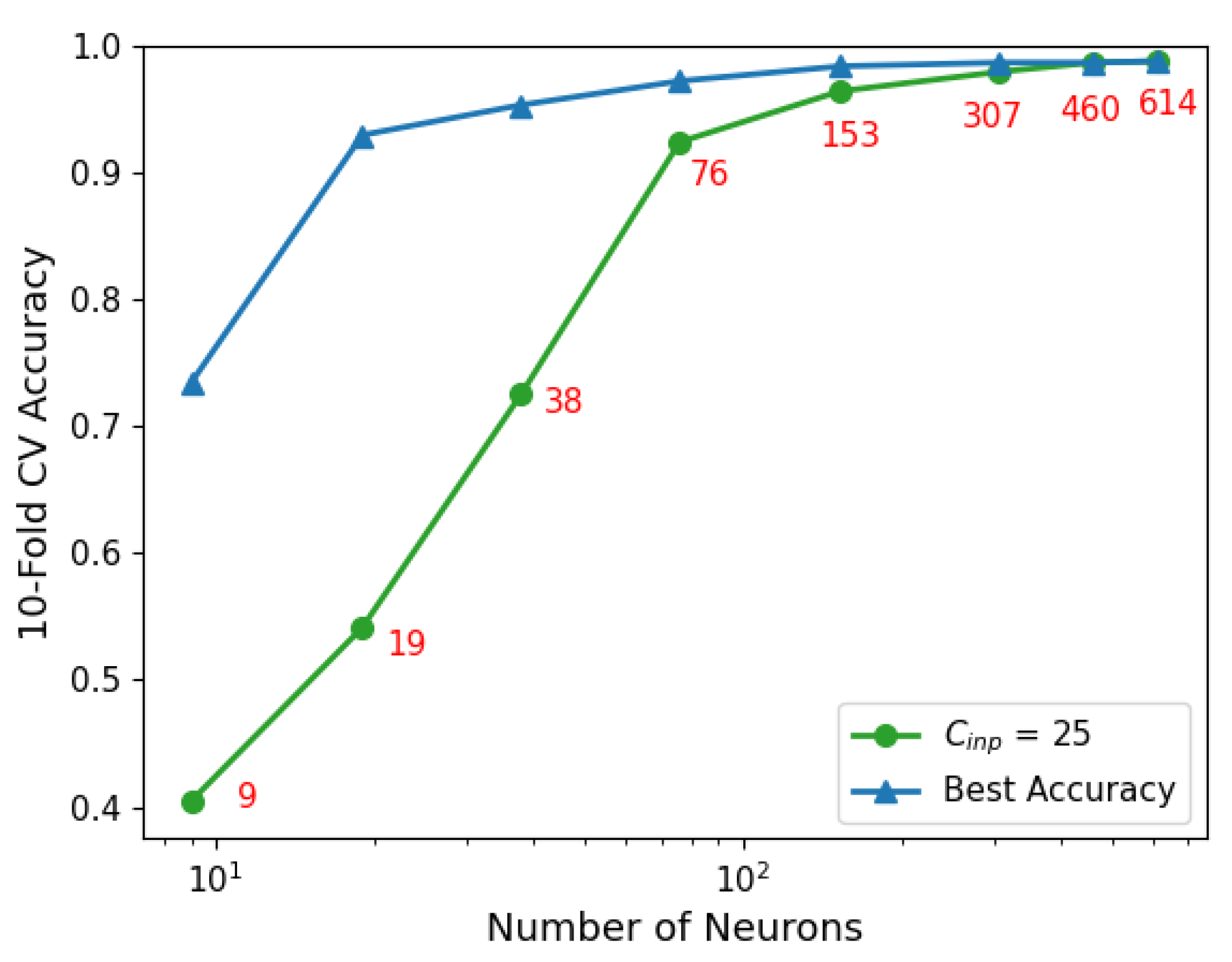

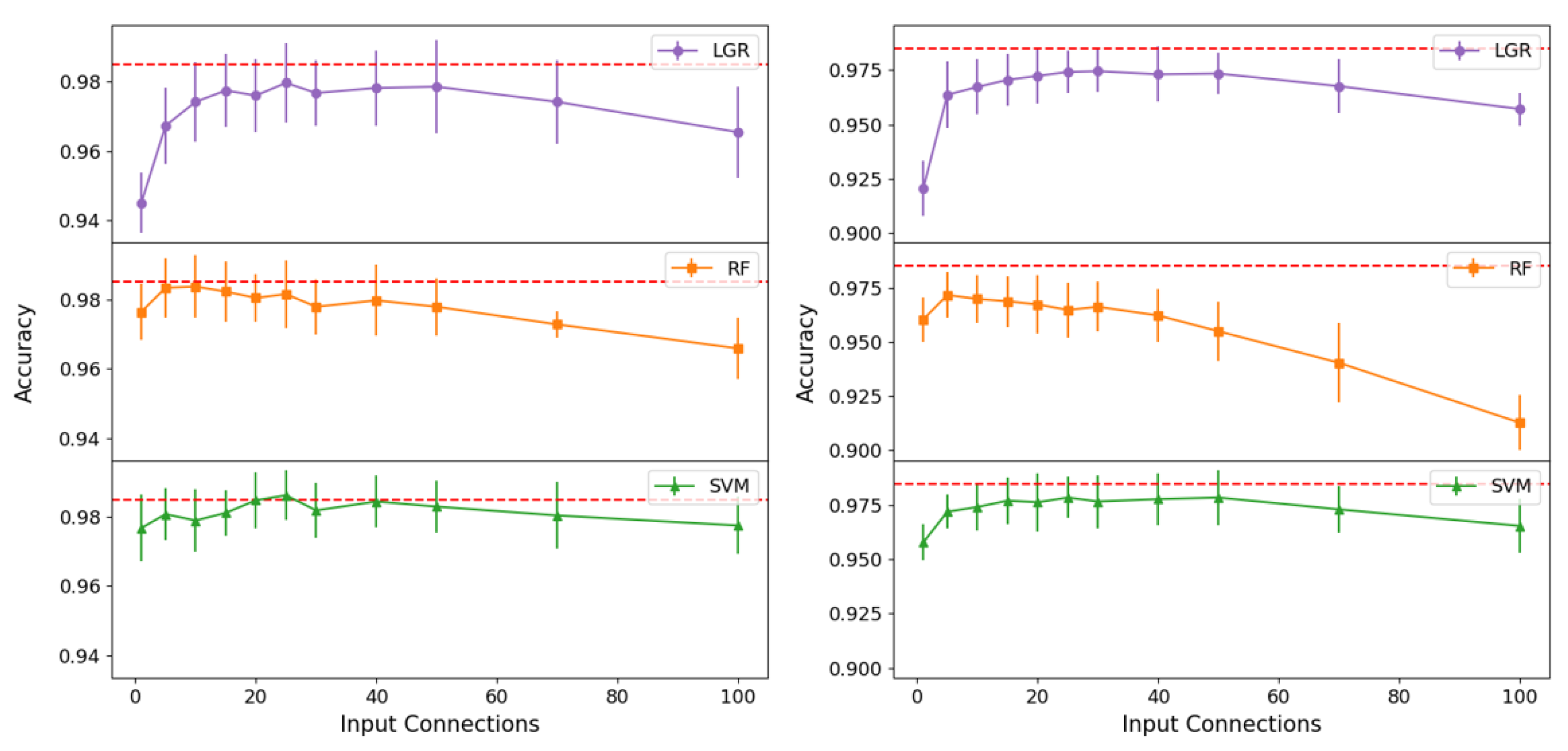

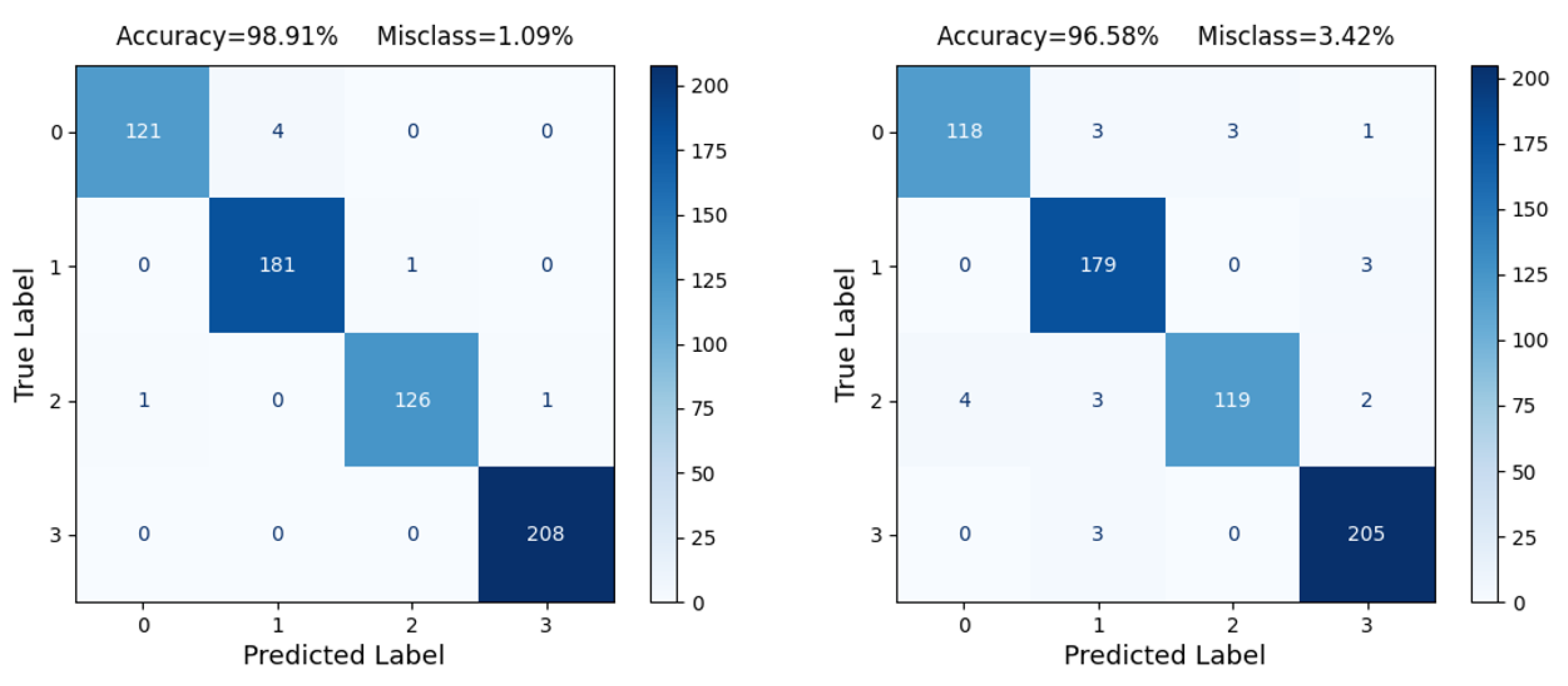

3.1. Soli

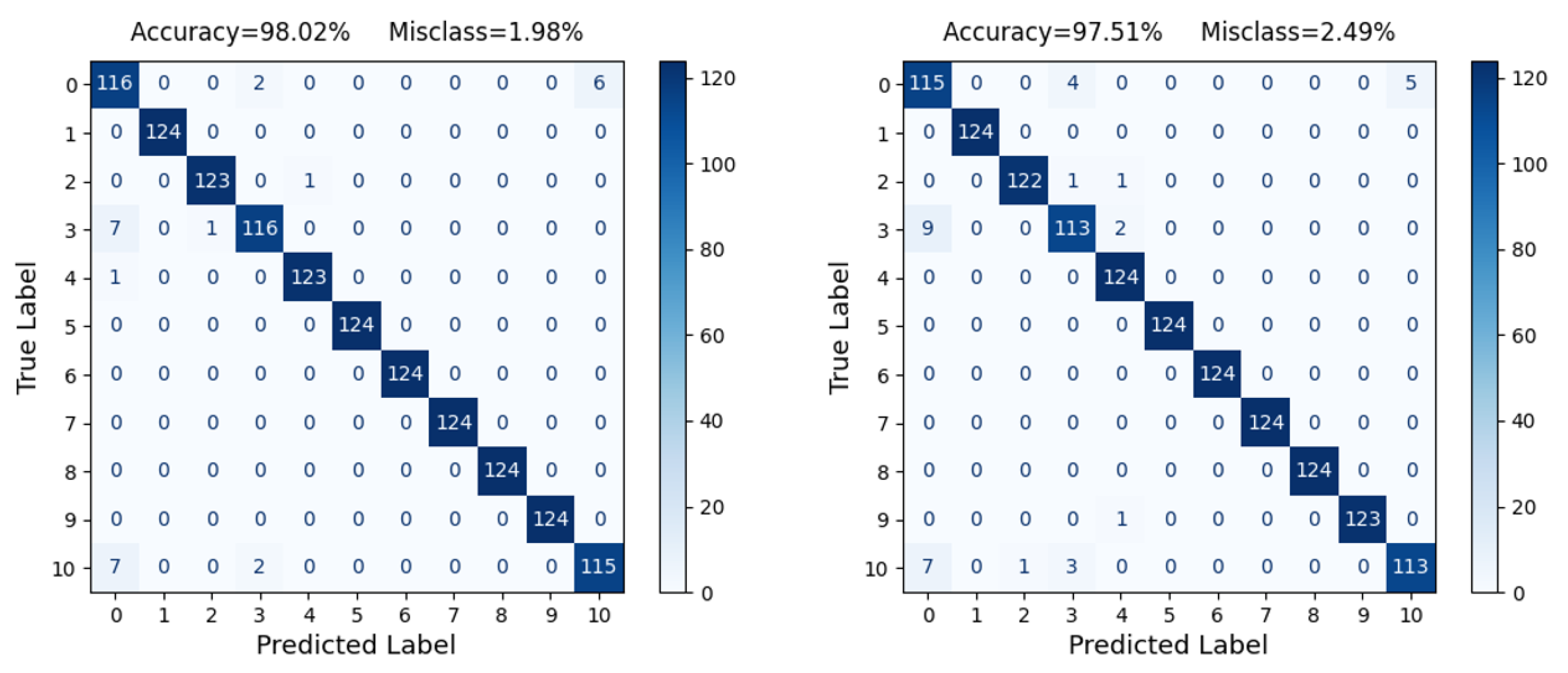

3.2. Dop-NET

3.3. State-of-the-Art Radar-Based Gesture Recognition

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Training Data | | | | |
|---|---|---|---|---|
| Person | Wave | Pinch | Swipe | Click |
| A | 56 | 98 | 64 | 105 |
| B | 112 | 116 | 72 | 105 |
| C | 85 | 132 | 80 | 137 |
| D | 70 | 112 | 71 | 93 |
| E | 56 | 98 | 91 | 144 |
| F | 87 | 140 | 101 | 208 |
| Test Data | | | | |
| 643 | | | | |

| Soli | Normalized | Variable | |
|---|---|---|---|
| Training and evaluation | | | |
| 10-fold cross-validation | | | |
| Leave-one-subject-out | | | |
| Leave-one-session-out | | | |

| Dop-NET | Normalized | Variable | |
|---|---|---|---|
| Training–Testing | | | |
| 10-fold cross-validation | | | |
| Leave-one-subject-out | | | |
| Leave-one-session-out | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsang, I.J.; Corradi, F.; Sifalakis, M.; Van Leekwijck, W.; Latré, S. Radar-Based Hand Gesture Recognition Using Spiking Neural Networks. Electronics 2021, 10, 1405. https://doi.org/10.3390/electronics10121405
