Abstract

In a non-Gaussian environment, the accuracy of the Kalman filter may degrade. In this paper, a two-dimensional Monte Carlo filter is proposed to address the challenge of filtering in non-Gaussian environments. The two-dimensional Monte Carlo (TMC) method is first proposed to improve sampling efficiency. Then, the TMC filter (TMCF) algorithm, based on the TMC method, is proposed to solve the non-Gaussian filtering problem. In the TMCF, particles are deployed uniformly in the confidence interval according to the sampling interval, and their weights are calculated based on Bayesian inference. As a result, the posterior distribution is described more accurately with fewer weighted particles. Unlike the particle filter (PF), the TMCF propagates the distribution through a series of weight calculations and uses particles only to occupy the state space within the confidence interval. Numerical simulations demonstrated that the accuracy of the TMCF approximates that of the Kalman filter (KF) (the error is about 10−6) in a two-dimensional linear/Gaussian environment. In a two-dimensional linear/non-Gaussian system, compared with the particle filter, the accuracy of the TMCF is improved by 0.01, and the computation time is reduced from 0.20 s to 0.067 s.

1. Introduction

Bayesian inference is one of the most popular theories in data fusion [1,2,3,4,5]. For a linear Gaussian dynamic system, the Bayesian filter is realized exactly by the well-known update equations of the Kalman filter (KF) [6]. However, the analytical solution of the Bayesian filter generally cannot be obtained in a non-Gaussian scenario [7]. This problem has attracted considerable attention for several decades because of its wide applications in signal processing [8,9], automatic control systems [10,11], biological information engineering [12], economic data analysis [13], and other fields [14]. Approximation is one of the most effective approaches for solving the nonlinear/non-Gaussian filtering problem.

Linearization of the state model is an important strategy for solving the nonlinear/non-Gaussian filtering problem. The extended Kalman filter (EKF) was introduced in [15] to approximate the nonlinear model using the first-order terms of the Taylor expansions of the state and observation equations. In [16], the unscented Kalman filter (UKF) was proposed to reduce the truncation error by introducing the unscented transformation (UT) [17]. The cubature Kalman filter, based on the third-degree spherical–radial cubature rule, was proposed in [18]. The third-degree cubature rule is a special form of the UT and has better numerical stability in filtering applications [19]. The Gauss–Hermite filter and the central difference filter (CDF) were proposed by Kazufumi Ito and Kaiqi Xiong in [20]; both assume a Gaussian noise model.

Sequential Monte Carlo (SMC) provides another important strategy for the nonlinear/non-Gaussian filtering problem and can conveniently approximate any probability density function (PDF) using weighted particles. The particle filter (PF) [21,22] is derived from the recursive Bayesian filter using the SMC approach and is used for data/information fusion in nonlinear/non-Gaussian environments [23]. The SMC approach was introduced into filtering to tackle nonlinear dynamic systems that are analytically intractable. The core idea of the PF is to describe the transformation of the state distribution through the propagation of particles in the nonlinear dynamic system and to represent the posterior probability using weighted particles. As a flexible approach that avoids solving complex integrals, the PF is widely used in data/information fusion for nonlinear systems, such as fault detection [24], cooperative navigation and localization, visual tracking, and melody extraction. In [25] and [26], the EKF and UKF, respectively, were introduced to optimize the proposal distribution within the PF framework. The feedback PF was designed based on an ensemble of controlled stochastic systems [27]. Additionally, because resampling helps to overcome the degeneracy problem, various resampling schemes were proposed in [28,29,30].

Both strategies are based on Bayesian theory, and approximation is their main approach for solving the nonlinear filtering problem [31]. However, the two strategies take different perspectives, which results in different characteristics. In the first strategy, the Kalman filter (KF) is regarded as the representative of Bayesian inference, and the goal is to obtain an analytical solution that is close to the true posterior distribution [32]. Researchers have attempted to approximate complex nonlinear non-Gaussian problems by linear Gaussian problems that can be solved directly using the KF [33]. This approximation inevitably introduces truncation error. Therefore, many improved algorithms based on the first strategy have been proposed, mainly to reduce the effect of the truncation error on nonlinear filtering [34]. However, it is difficult to obtain the posterior distribution of a nonlinear system accurately [35], so the first strategy is always accompanied by linearization errors. Reducing the influence of assuming Gaussian noise when the actual noise is non-Gaussian is another major problem for the first strategy [36]. In the second strategy, the SMC method is used to solve the difficult integration problem in Bayesian filtering [37]. Theoretically, this strategy (the PF and its improved algorithms) is not constrained by the nonlinearity of the model or by a non-Gaussian environment [38]. However, the PF has been plagued by sample degeneracy and impoverishment since it was proposed, and many improvement methods have been proposed to mitigate these two problems [39,40,41]. Increasing the number of particles is the most direct approach, but it is not very effective because the number of particles must increase exponentially to alleviate the two problems, which inevitably reduces the efficiency of the filter [42].
The two main approaches for addressing these problems are improving the proposal distribution and resampling [43,44]. An improved proposal distribution can greatly alleviate the impoverishment problem and improve the performance of the filter [45]; hence, related algorithms, such as the EPF and UPF, have been widely used in engineering practice [46]. Resampling, as an important means of alleviating the sample degeneracy of the PF, has been widely studied [47,48]. However, the model used to improve the proposal distribution must be based on a known noise model (such as the Gaussian model in the EPF and the Gaussian mixture model in the UPF), which cannot fully solve the impoverishment problem [49]. The resampling step can alleviate sample degeneracy but introduces resampling error [31]. The main problem of the second strategy is low particle utilization efficiency, which affects both the accuracy and the efficiency of filtering [40].
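To make the resampling step concrete, the following Python sketch shows one common scheme, systematic resampling, together with the effective sample size (ESS) that is often used to decide when to resample. It is a generic illustration, not one of the specific schemes in [28,29,30], and the function names are hypothetical.

```python
import random


def effective_sample_size(weights):
    """ESS = 1 / sum(w_i^2) for normalized weights; equals N for uniform weights."""
    return 1.0 / sum(w * w for w in weights)


def systematic_resample(weights, rng):
    """Return N particle indices drawn by systematic resampling.

    A single offset u0 ~ U[0, 1/N) is drawn, and the N evenly spaced points
    u0 + j/N are located in the cumulative weight distribution.
    """
    n = len(weights)
    u0 = rng.random() / n
    indices = []
    i = 0
    cumulative = weights[0]
    for j in range(n):
        u = u0 + j / n
        # Advance until the cumulative weight covers u; the index bound guards
        # against round-off when the weights sum to slightly less than 1.
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        indices.append(i)
    return indices


if __name__ == "__main__":
    rng = random.Random(0)
    w = [0.1, 0.6, 0.2, 0.1]
    print(effective_sample_size(w))
    print(systematic_resample(w, rng))
```

Because only one random number is drawn, each particle is copied either floor(N·w) or ceil(N·w) times, which keeps the extra randomness introduced by resampling small.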

Among the existing algorithms, the PF is the most flexible. Its main procedure can be summarized as follows: (1) draw particles from a proposal distribution; (2) calculate the prior weights and the likelihood weights according to the process noise model and the measurement noise model, respectively, and combine them; and (3) divide the combined weights by the corresponding density of the proposal distribution and normalize them. The posterior distribution is then represented by these weighted particles. This procedure shows that the selection of particles is random, whereas the weights are calculated precisely according to the noise models. The randomness of the particle positions introduces random disturbances that can affect the filtering accuracy.
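As a reference point for the procedure above, the following Python sketch implements one step of a bootstrap PF for a hypothetical scalar model x_k = a·x_{k-1} + v_{k-1}, z_k = x_k + n_k with Gaussian noise. It assumes that the transition density is used as the proposal distribution, in which case the prior weight and the proposal density cancel in step (3), leaving only the likelihood weight. The model, parameter names, and function names are illustrative assumptions.

```python
import math
import random


def gauss_pdf(x, mean, var):
    """Scalar Gaussian PDF with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def bootstrap_pf_step(particles, z, a, q, r, rng):
    """One bootstrap-PF step for x_k = a*x_{k-1} + v, z_k = x_k + n.

    Assumes v ~ N(0, q), n ~ N(0, r), equally weighted input particles,
    and the transition density as the proposal distribution.
    """
    # (1) Draw particles from the proposal (here: the transition density).
    predicted = [a * x + rng.gauss(0.0, math.sqrt(q)) for x in particles]
    # (2)-(3) With this proposal, prior weight / proposal density cancels,
    # so the unnormalized weight is just the likelihood p(z | x).
    weights = [gauss_pdf(z, x, r) for x in predicted]
    total = sum(weights)
    weights = [w / total for w in weights]
    estimate = sum(w * x for w, x in zip(weights, predicted))
    # Multinomial resampling returns equally weighted particles for the next step.
    resampled = rng.choices(predicted, weights=weights, k=len(predicted))
    return resampled, estimate


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    _, est = bootstrap_pf_step(particles, z=1.0, a=1.0, q=0.5, r=0.5, rng=rng)
    print(est)  # varies with the seed; the exact posterior mean here is 0.75
```

Even in this simple linear Gaussian case, the estimate fluctuates around the exact value from run to run, which is the random disturbance caused by random particle positions.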

To overcome the aforementioned problem, the two-dimensional Monte Carlo (TMC) method is proposed to improve the efficiency of the sampled particles. Then, the TMC filter (TMCF) algorithm is proposed to solve the non-Gaussian filter problem based on the principle of the TMC method. The main contributions arising from this study are as follows:

- (1)

- The TMC method, a deterministic sampling method, is proposed to improve the efficiency of particles. Particles are sampled uniformly in the confidence interval according to the sampling interval, and the posterior weight of each particle is calculated based on Bayesian inference. Consequently, any probability distribution can be described by a small number of weighted particles.

- (2)

- A discrete solution is proposed for describing how a known probability distribution is propagated through a linear or nonlinear state model. First, a small number of weighted particles are obtained using the TMC method. Then, the confidence interval at the next time step for a fixed confidence level is calculated from the state model, and new particles are placed uniformly in this confidence interval according to the sampling interval. The weights of these new particles are obtained through a series of calculations based on Bayesian inference, so that the propagated probability distribution is described by the new weighted particles.

- (3)

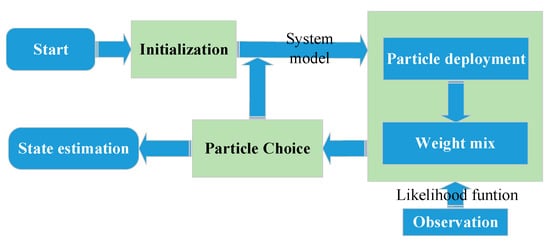

- The TMCF algorithm is proposed based on the above two points. It consists of four parts: initialization, particle deployment, weight mixing, and state estimation. The TMC method is used in the initialization step to generate efficient weighted particles. Particle deployment propagates the confidence interval of the state space for a given confidence level and deploys particles in it. The weight mixing step fuses several arbitrary continuous probability densities in the discrete domain. In the state estimation step, particles with negligible weights are first omitted (particle choice), and the state is estimated from the remaining weighted particles.

- (4)

- The performance of the TMCF was verified through numerical simulations. The results demonstrated that, with fewer particles and less computation, the proposed algorithm achieved better estimation accuracy than the PF in a linear Gaussian system, and outperformed both the KF and the PF in a linear system with Gaussian mixture noise.

The outline of this paper is as follows. In Section 2, the problem statement and Bayesian filter are presented. The TMC method is introduced in Section 3. In Section 4, the TMCF algorithm is introduced in detail. The numerical simulation is described in Section 5, and the validity of the proposed framework is demonstrated. In Section 6, the conclusion of this study is presented.

2. Problem Statement and Bayesian Filter

2.1. Problem Statement

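For the filtering algorithms considered in this paper, the state space model is defined as [37]:

$$
\begin{aligned}
x_k &= f(x_{k-1}, v_{k-1}),\\
z_k &= h(x_k, n_k),
\end{aligned}
$$

where $x_k \in \mathbb{R}^{n_x}$ and $z_k \in \mathbb{R}^{n_z}$ denote the state vector and observation at time step $k$, respectively; $n_x$ and $n_z$ denote the dimensions of the state vector and observation, respectively; $v_{k-1}$ and $n_k$ denote the system noise and observation noise, respectively; the mappings $f(\cdot)$ and $h(\cdot)$ describe the state transition equation and observation equation, respectively; and $z_{1:k} = \{z_1, \ldots, z_k\}$ denotes the observations up to time step $k$.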

In this paper, $v_{k-1}$ and $n_k$ are independent of each other, with PDFs $p_v(\cdot)$ and $p_n(\cdot)$, respectively. Meanwhile, the PDF of the initial state, $p(x_0)$, is known. The goal is to obtain an approximate Bayesian estimate in a nonlinear and non-Gaussian filtering environment.

2.2. Bayesian Estimation

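Recursive Bayesian filtering provides an effective framework for the real-time fusion of the state equation and observations. The Bayesian filter consists of prediction and update steps:

$$
p(x_k \mid z_{1:k-1}) = \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid z_{1:k-1})\, dx_{k-1},
$$

$$
p(x_k \mid z_{1:k}) = \frac{p(z_k \mid x_k)\, p(x_k \mid z_{1:k-1})}{p(z_k \mid z_{1:k-1})},
$$

where $p(x_k \mid x_{k-1})$ denotes the state transition PDF, $p(x_{k-1} \mid z_{1:k-1})$ denotes the posterior PDF at time step $k-1$, $p(x_k \mid z_{1:k-1})$ denotes the prior PDF at time step $k$, $p(z_k \mid x_k)$ denotes the likelihood PDF, and

$$
p(z_k \mid z_{1:k-1}) = \int p(z_k \mid x_k)\, p(x_k \mid z_{1:k-1})\, dx_k
$$

is the normalizing constant.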

In a linear Gaussian environment, this recursion is computed exactly by the celebrated KF, because both the prediction integral in Equation (1) and the likelihood can be evaluated in closed form. In a nonlinear and non-Gaussian environment, Equation (1) generally cannot be solved directly.
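For reference, the KF recursion for a hypothetical scalar linear Gaussian model x_k = a·x_{k-1} + v_{k-1}, z_k = h·x_k + n_k, with v ~ N(0, q) and n ~ N(0, r), is sketched below in Python. In this case the prediction integral and the update both reduce to closed-form updates of a mean and a variance. The scalar model and the parameter names are assumptions chosen for illustration.

```python
def kf_step(m, p, z, a, q, h, r):
    """One KF predict/update step for x_k = a x_{k-1} + v, z_k = h x_k + n.

    v ~ N(0, q) and n ~ N(0, r); (m, p) are the previous posterior mean/variance.
    """
    # Prediction: closed-form solution of the prediction integral
    m_pred = a * m
    p_pred = a * a * p + q
    # Update: Gaussian likelihood combined with the Gaussian prior
    s = h * h * p_pred + r          # innovation variance
    k = p_pred * h / s              # Kalman gain
    m_new = m_pred + k * (z - h * m_pred)
    p_new = (1.0 - k * h) * p_pred
    return m_new, p_new


if __name__ == "__main__":
    print(kf_step(0.0, 1.0, 1.0, a=1.0, q=0.5, h=1.0, r=0.5))  # (0.75, 0.375)
```

Only a mean and a variance are propagated; this closed form is exactly what is lost when the noise is non-Gaussian.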

3. Two-Dimensional Monte Carlo Method

The Monte Carlo approach provides a convenient way to infer the posterior PDF in a non-Gaussian environment. The PF, a family of filtering algorithms based on the Monte Carlo approach, is used to solve nonlinear and non-Gaussian filtering problems, and several improved particle filter algorithms exist. The core of the PF is to sample particles from a proposal distribution; the particles describe the transition of the PDF through the system model, and the observation is incorporated through the likelihood weights. The concept of weights thus makes Monte Carlo methods applicable to the filtering problem and plays an important role. In the following, the TMC method is introduced to make full use of the weights and the noise model to improve particle efficiency.

Suppose is the PDF of a two-dimensional noise model. Its marginal PDF can be expressed as:

where and denote the marginal PDF of and , respectively.

The confidence interval for confidence can be defined as:

where:

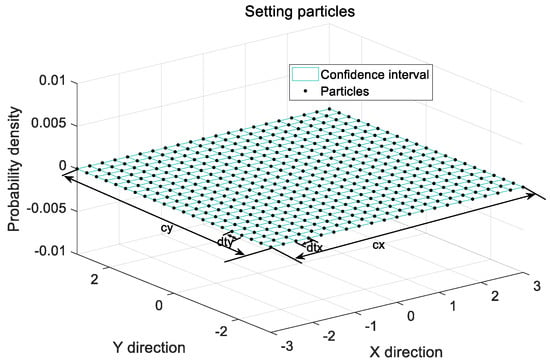

Particles can be set according to sampling interval for , as shown in Figure 1. Additionally,

where denotes the particle number.

Figure 1.

Sketch map of setting particles.

The weight of these particles is calculated as:

where:

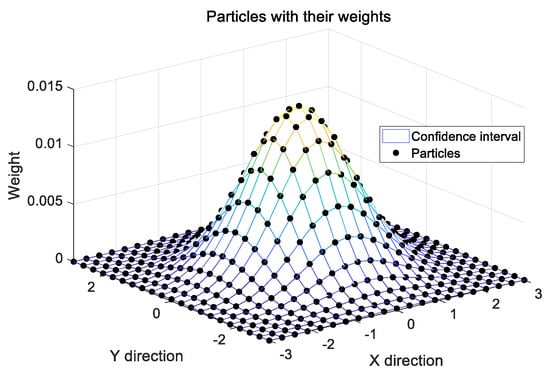

Then, is used to describe with the accuracy of in the confidence interval for confidence discretely. The sketch map of the particles and their weights is shown in Figure 2.

Figure 2.

Sketch map of particles and their weights.

Theorem 1.

Whenand, then and

Proof.

Suppose the probability space of is divided into small squares in terms of , where . When , and

□

As and are independent of ,

Hence,

Thus, .

Similarly,

.

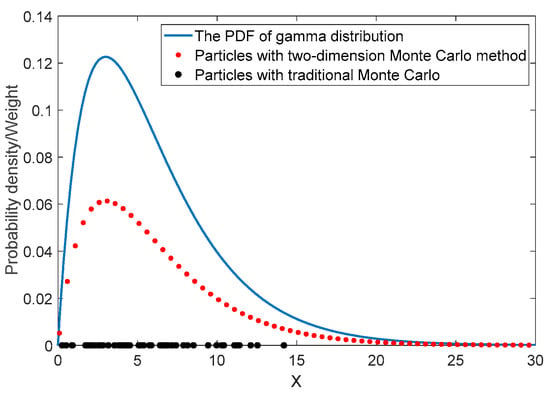
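The TMC construction can be sketched in Python for a hypothetical two-dimensional correlated Gaussian noise model. The sketch assumes an equal-tailed confidence interval for each marginal, a rectangular confidence region formed by the two intervals, and weights proportional to the PDF value at each grid point (the constant cell area cancels after normalization); these are illustrative choices, not necessarily the exact definitions used in this paper. The weighted particle mean approaches the true mean as the confidence increases and the sampling interval shrinks.

```python
import math
from statistics import NormalDist


def bivariate_gauss_pdf(x, y, mx, my, sx, sy, rho):
    """PDF of a correlated 2-D Gaussian (hypothetical noise model)."""
    dx, dy = (x - mx) / sx, (y - my) / sy
    quad = (dx * dx - 2.0 * rho * dx * dy + dy * dy) / (1.0 - rho * rho)
    return math.exp(-0.5 * quad) / (2.0 * math.pi * sx * sy * math.sqrt(1.0 - rho * rho))


def grid_axis(lo, hi, step):
    """Points lo, lo + step, ... not exceeding hi."""
    count = int(math.floor((hi - lo) / step)) + 1
    return [lo + m * step for m in range(count)]


def tmc_particles_2d(mx, my, sx, sy, rho, alpha, step):
    """Deterministic TMC-style particles and normalized weights."""
    tail = (1.0 - alpha) / 2.0
    # Equal-tailed confidence interval of each Gaussian marginal (assumption)
    nx, ny = NormalDist(mx, sx), NormalDist(my, sy)
    xs = grid_axis(nx.inv_cdf(tail), nx.inv_cdf(1.0 - tail), step[0])
    ys = grid_axis(ny.inv_cdf(tail), ny.inv_cdf(1.0 - tail), step[1])
    particles = [(x, y) for x in xs for y in ys]
    # Weights proportional to the joint PDF; the cell area cancels on normalization
    dens = [bivariate_gauss_pdf(x, y, mx, my, sx, sy, rho) for x, y in particles]
    total = sum(dens)
    return particles, [d / total for d in dens]


if __name__ == "__main__":
    parts, ws = tmc_particles_2d(1.0, -2.0, 1.0, 0.5, 0.3, 0.9999, (0.2, 0.1))
    mean_x = sum(w * p[0] for w, p in zip(ws, parts))
    mean_y = sum(w * p[1] for w, p in zip(ws, parts))
    print(len(parts), mean_x, mean_y)
```

No random numbers are involved: for a given confidence and sampling interval, the particle positions and weights are fully determined.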

A simple example further demonstrates that TMC improves particle efficiency. Consider a one-dimensional gamma distribution:

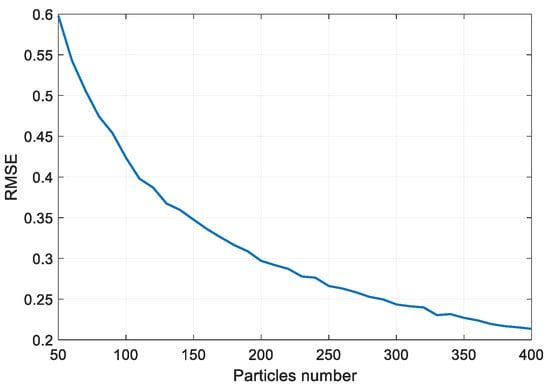

The MC and TMC methods are used to generate particles from the gamma distribution. Figure 3 shows the sampling results from the two sampling methods. For the MC method, the selection of particles is random. Increasing the particle number allows for a better description of the gamma distribution, and this is the only way to mitigate the indeterminacy. For the TMC method, the position of particles is determined when the confidence and sampling interval are provided. The task of describing the probability distribution is transferred to the weights corresponding to the particles. The estimation results for the expectation errors of the Monte Carlo method for the gamma distribution are shown in Figure 4. Considering the indeterminacy of MC, it is run 10,000 times and the RMSE is used to reflect the size of the error:

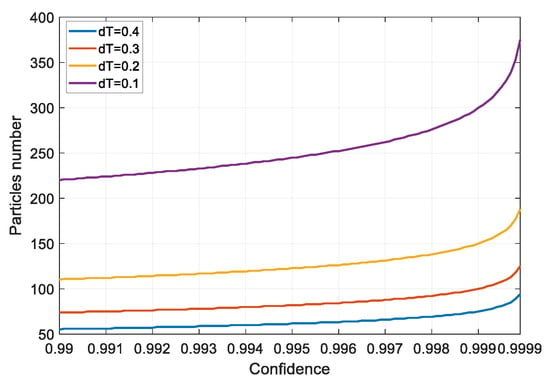

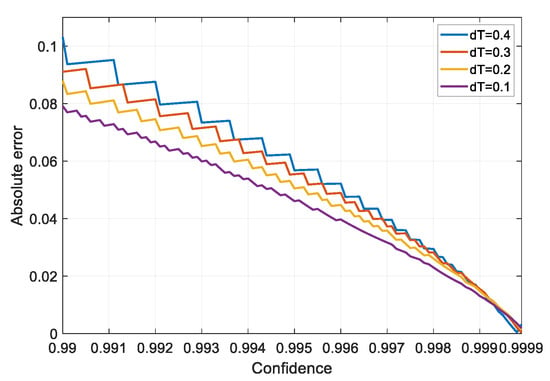

where $N$ denotes the particle number and $M$ denotes the number of Monte Carlo runs. The RMSE decreases as the particle number increases; Figure 4 shows that the RMSE is about 0.21 when the particle number is 400. For TMC, the relationships between the confidence level, the particle number, and the absolute expectation error are shown in Figure 5 and Figure 6. The absolute expectation error decreases rapidly as the confidence increases, whereas the particle number increases only slowly with confidence for a fixed sampling interval. With a confidence of 0.999 and a sampling interval of 0.4, the absolute expectation error is reduced to 0.015 using only 75 particles.

Figure 3.

Sampling results from TMC and MC.

Figure 4.

Variation of the expectation RMSE with the particle number for MC.

Figure 5.

Variation of the particle number with confidence for TMC.

Figure 6.

Variation of the absolute expectation error with confidence for TMC.

The results demonstrate that particles generated by TMC can describe the noise distribution more efficiently than particles generated by MC.
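The comparison can be reproduced qualitatively with the Python sketch below. The gamma parameters used in this paper are not reproduced here; the sketch assumes a hypothetical shape of 2 and scale of 1 (an Erlang distribution, whose CDF has a closed form), an equal-tailed confidence interval, and weights proportional to the PDF at each grid point. It computes the TMC expectation error and the RMSE of the plain MC sample mean, so its numbers will differ from Figures 4–6.

```python
import math
import random

SHAPE, SCALE = 2, 1.0  # hypothetical gamma parameters (integer shape => Erlang)


def gamma_pdf(x, k=SHAPE, theta=SCALE):
    if x <= 0.0:
        return 0.0
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta ** k)


def gamma_cdf(x, k=SHAPE, theta=SCALE):
    """Closed-form CDF, valid only for an integer shape k."""
    if x <= 0.0:
        return 0.0
    t = x / theta
    return 1.0 - math.exp(-t) * sum(t ** n / math.factorial(n) for n in range(k))


def gamma_quantile(prob, lo=0.0, hi=100.0):
    """Bisection on the CDF."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if gamma_cdf(mid) < prob:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def tmc_mean(alpha, step):
    """Expectation from deterministic grid particles in the confidence interval."""
    a = gamma_quantile((1.0 - alpha) / 2.0)
    b = gamma_quantile((1.0 + alpha) / 2.0)
    xs = [a + m * step for m in range(int((b - a) / step) + 1)]
    dens = [gamma_pdf(x) for x in xs]
    total = sum(dens)
    return sum(x * d for x, d in zip(xs, dens)) / total, len(xs)


def mc_rmse(n, runs, rng):
    """RMSE of the plain MC sample mean over repeated runs."""
    true_mean = SHAPE * SCALE
    sq = 0.0
    for _ in range(runs):
        est = sum(rng.gammavariate(SHAPE, SCALE) for _ in range(n)) / n
        sq += (est - true_mean) ** 2
    return math.sqrt(sq / runs)


if __name__ == "__main__":
    est, count = tmc_mean(0.999, 0.4)
    print("TMC:", count, "particles, |error| =", abs(est - SHAPE * SCALE))
    print("MC, 400 particles, RMSE =", mc_rmse(400, 200, random.Random(1)))
```

With these assumed parameters, TMC uses a few dozen deterministic particles, whereas the MC error decays only as one over the square root of the particle number.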

4. Proposed Filter Algorithm

Each efficient particle generated by TMC represents a possible state estimate, and its weight represents the probability that the particle is the state estimate. The continuous probability distribution is thus discretized by these particles and their weights. The TMCF is designed as shown in Figure 7. The filter consists of four parts: initialization, particle deployment, weight mixing, and state estimation. In this section, the four parts are explained in detail, and the TMCF algorithm is presented.

Figure 7.

TMCF system block diagram.

4.1. Initialization

The goal of initialization is to place a small number of efficient particles that describe the initial probability distribution discretely, to facilitate the subsequent filtering process. After the confidence level $\alpha$ and the sampling interval $\Delta$ are set, the initial particles $X_0$ and their weights $w_0$ are obtained in the confidence interval using the TMC method according to the known initial PDF $p(x_0)$. Additionally, the confidence interval $C_v$ of the system noise PDF for confidence $\alpha$ can be obtained according to Equation (8), and the amplification of the interval is then defined as:

$$
D_{(i)} = C_{v,(i)} - \mathrm{E}(v), \qquad i = 1, 2,
$$

where $(\cdot)_{(i)}$ denotes the $i$th column of a matrix/vector (the first and second columns of $C_v$ hold its lower and upper bounds), and $\mathrm{E}(v)$ denotes the expectation of the system noise $v$.

The true state therefore lies in the initial confidence interval with probability $\alpha$, and $p(x_0)$ is described by $\{X_0, w_0\}$ with an accuracy determined by $\Delta$. The probability that each particle is the true state is described by its weight.

4.2. Particle Deployment

The goal of this step is to analyze how the confidence interval evolves from time step $k-1$ to time step $k$ and then to deploy particles. Following the principle of the TMC method, the confidence interval at time step $k-1$ is spanned by the particles $X_{k-1}$:

$$
C_{k-1} = \left[\min(X_{k-1}),\; \max(X_{k-1})\right],
$$

where $\min(\cdot)$ and $\max(\cdot)$ denote the minimum and maximum of each row of a matrix/vector. When the particles are transferred through the system model without system noise (i.e., with the noise set to its expectation), the transferred particles can be expressed as:

$$
\bar{x}_k^{(i)} = f\left(x_{k-1}^{(i)}, \mathrm{E}(v_{k-1})\right), \qquad i = 1, \ldots, N_{k-1}.
$$

Each particle in $\bar{X}_k = \{\bar{x}_k^{(i)}\}$ is then regarded as a possible noise-free state at time step $k$, and its probability is the weight $w_{k-1}^{(i)}$. Considering the system noise, the confidence interval of each particle for confidence $\alpha$ is

$$
C_k^{(i)} = \bar{x}_k^{(i)} + D,
$$

where $D$ is the amplification of the system noise confidence interval (Section 4.1) and $C_k^{(i)}$ denotes the confidence interval for confidence $\alpha$ corresponding to $\bar{x}_k^{(i)}$. Then, the complete confidence interval at time step $k$ is obtained as the union of all these confidence intervals:

$$
C_k = \bigcup_{i=1}^{N_{k-1}} C_k^{(i)},
$$

where $N_{k-1}$ denotes the number of particles in $X_{k-1}$.

For simplicity, the complete confidence interval can also be estimated roughly by:

$$
\hat{C}_k = \left[\min(\bar{X}_k) + D_{(1)},\; \max(\bar{X}_k) + D_{(2)}\right],
$$

where $D_{(1)}$ and $D_{(2)}$ denote the first (lower) and second (upper) columns of $D$.

As $C_k \subseteq \hat{C}_k$, the confidence corresponding to $\hat{C}_k$ is greater than or equal to $\alpha$. However, enlarging the confidence interval may increase the number of deployed particles. In some cases, particularly in high dimensions, this leads to too many particles, which may cause the filtering to fail.

Then, particles are deployed uniformly in the confidence interval $C_k$ or $\hat{C}_k$ at time step $k$ according to the sampling interval $\Delta_k$. Generally, $\Delta_k$ is set to a constant vector:

$$
\Delta_k = \Delta.
$$

When the confidence interval is unstable (increases or decreases over time), a specific strategy corresponding to the specific system needs to be designed to change the size of the sampling interval.

In this step, the deployed particles are distributed in this confidence interval uniformly, which is preparation for the subsequent step.
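The deployment step can be sketched in Python for a hypothetical scalar state with additive Gaussian system noise, so that the noise-free transfer is f(x) + E(v). The sketch assumes an equal-tailed noise confidence interval, computes the amplification as the offsets of its bounds from the noise mean, and deploys grid particles either in the rough interval or in the union of the per-particle intervals. Function names are illustrative.

```python
import math
from statistics import NormalDist


def amplification(noise_mean, noise_std, alpha):
    """Offsets of the equal-tailed noise confidence bounds from the noise mean."""
    nd = NormalDist(noise_mean, noise_std)
    tail = (1.0 - alpha) / 2.0
    return nd.inv_cdf(tail) - noise_mean, nd.inv_cdf(1.0 - tail) - noise_mean


def union_of_intervals(intervals):
    """Merge overlapping [lo, hi] intervals."""
    merged = []
    for lo, hi in sorted(intervals):
        if merged and lo <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], hi)
        else:
            merged.append([lo, hi])
    return [tuple(iv) for iv in merged]


def deploy_particles(prev_particles, f, noise_mean, noise_std, alpha, step, rough=True):
    """Noise-free transfer, confidence interval at step k, and uniform deployment."""
    d_lo, d_hi = amplification(noise_mean, noise_std, alpha)
    moved = [f(x) + noise_mean for x in prev_particles]  # transfer with E(v)
    if rough:
        intervals = [(min(moved) + d_lo, max(moved) + d_hi)]
    else:
        intervals = union_of_intervals([(m + d_lo, m + d_hi) for m in moved])
    grid = []
    for lo, hi in intervals:
        count = int(math.floor((hi - lo) / step)) + 1
        grid.extend(lo + j * step for j in range(count))
    return moved, grid


if __name__ == "__main__":
    _, rough_grid = deploy_particles([0.0, 10.0], lambda x: x, 0.0, 1.0, 0.99, 0.5)
    _, union_grid = deploy_particles([0.0, 10.0], lambda x: x, 0.0, 1.0, 0.99, 0.5, rough=False)
    print(len(rough_grid), len(union_grid))  # 31 22
```

When the transferred particles are far apart, the union avoids deploying particles in the gap between them, while the rough interval is simpler to compute but deploys more particles.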

4.3. Weight Mix

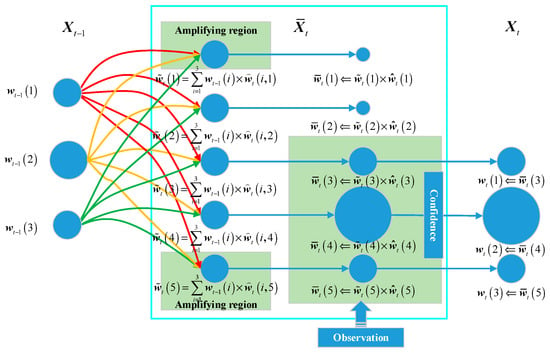

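In the weight mixing step, the relationship between the particles $X_{k-1}$ and the deployed particles $X_k$ is analyzed to obtain the prior weights of $X_k$; the likelihood weights are then calculated, and the posterior weights are obtained.

As the distribution of the system noise is continuous, in theory each particle in $X_{k-1}$ can reach any particle in $X_k$. The probability of each particle in $X_{k-1}$ being transferred to each particle in $X_k$ can be expressed as:

$$
P_{ij} = p\left(x_k^{(j)} \mid x_{k-1}^{(i)}\right), \qquad i = 1, \ldots, N_{k-1},\; j = 1, \ldots, N_k,
$$

where $P_{ij}$ denotes the probability of the $i$th particle in $X_{k-1}$ being transferred to the $j$th particle in $X_k$, and $N_k$ denotes the number of particles in $X_k$. As shown in Figure 8, the prior weight is calculated as:

$$
w_{k|k-1}^{(j)} = \sum_{i=1}^{N_{k-1}} w_{k-1}^{(i)} P_{ij}.
$$

Figure 8.

Schematic diagram of particle and weight changes.

The likelihood weight is written as:

$$
l_k^{(j)} = p\left(z_k \mid x_k^{(j)}\right).
$$

Additionally, the posterior weight is mixed by:

$$
w_k^{(j)} = \frac{w_{k|k-1}^{(j)}\, l_k^{(j)}}{\sum_{j'=1}^{N_k} w_{k|k-1}^{(j')}\, l_k^{(j')}}.
$$

Then, the posterior distribution of the state at time step $k$ is described discretely by $\{X_k, w_k\}$.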
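A minimal Python sketch of the weight-mixing step follows, for a hypothetical scalar model with additive Gaussian system and observation noise. It evaluates the transition probability as the system-noise PDF between each transferred particle and each deployed particle, sums it against the previous weights to obtain the prior weights, multiplies by the likelihood weights, and normalizes. The function signature is an illustrative assumption.

```python
import math


def gauss_pdf(x, mean, var):
    """Scalar Gaussian PDF."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def weight_mix(prev_particles, prev_weights, new_particles, f, h, z, q, r):
    """Return (prior, likelihood, posterior) weights for the deployed particles.

    Assumes x_k = f(x_{k-1}) + v with v ~ N(0, q) and z_k = h(x_k) + n with n ~ N(0, r).
    """
    moved = [f(x) for x in prev_particles]
    # Prior weight of particle j: sum_i w_i * p(x_j | x_i)
    prior = [sum(w * gauss_pdf(xj, m, q) for m, w in zip(moved, prev_weights))
             for xj in new_particles]
    # Likelihood weight of particle j: p(z | x_j)
    like = [gauss_pdf(z, h(xj), r) for xj in new_particles]
    post = [p * l for p, l in zip(prior, like)]
    total = sum(post)
    return prior, like, [p / total for p in post]


if __name__ == "__main__":
    grid = [-6.0 + 0.1 * i for i in range(121)]
    w0 = [gauss_pdf(x, 0.0, 1.0) for x in grid]
    w0 = [w / sum(w0) for w in w0]
    _, _, post = weight_mix(grid, w0, grid, lambda x: x, lambda x: x, 1.0, 0.5, 0.5)
    print(sum(w * x for w, x in zip(post, grid)))
```

In this linear Gaussian usage example, the exact posterior is N(0.75, 0.375), so the weighted mean of the mixed weights can be checked directly against the KF result.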

4.4. Particle Choice and State Estimation

$\{X_k, w_k\}$ describes the posterior distribution after the fusion of the prior distribution and the likelihood distribution. All of the distribution information is concentrated in the weights $w_k$; the role of the particles is only to occupy the distribution space. Generally, many particles with very low weights emerge after fusion. Because these particles contribute very little to an accurate description of the distribution, they can simply be omitted. With the weights sorted in descending order, the smallest number $N_k'$ of particles is chosen such that the sum of their weights reaches a threshold $\beta$:

$$
\sum_{j=1}^{N_k'} w_k^{(j)} \geq \beta.
$$

Then, the weights of the chosen particles are normalized:

$$
\tilde{w}_k^{(j)} = \frac{w_k^{(j)}}{\sum_{j'=1}^{N_k'} w_k^{(j')}}, \qquad j = 1, \ldots, N_k'.
$$

The state estimate is obtained by

$$
\hat{x}_k = \sum_{j=1}^{N_k'} \tilde{w}_k^{(j)} x_k^{(j)}.
$$
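The particle choice and state estimation steps can be sketched in Python as follows. The threshold name `beta` and the function name are hypothetical; the sketch keeps the largest-weight particles until their cumulative weight reaches `beta`, renormalizes the kept weights, and returns their weighted mean.

```python
def choose_particles(particles, weights, beta):
    """Keep the largest weights until their sum reaches beta, then estimate."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    chosen, acc = [], 0.0
    for i in order:
        chosen.append(i)
        acc += weights[i]
        if acc >= beta:
            break
    total = sum(weights[i] for i in chosen)
    kept = [particles[i] for i in chosen]
    kept_w = [weights[i] / total for i in chosen]  # renormalized weights
    estimate = sum(w * x for w, x in zip(kept_w, kept))
    return kept, kept_w, estimate


if __name__ == "__main__":
    print(choose_particles([0.0, 1.0, 2.0, 3.0], [0.05, 0.5, 0.4, 0.05], 0.85))
```

Here the two low-weight particles are dropped, and the estimate is computed from the remaining two with renormalized weights 5/9 and 4/9.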

In conclusion, the TMCF algorithm is summarized in Algorithm 1.

| Algorithm 1 | TMCF |
|---|---|
| 1 | Initialization: |
| 2 | Set $\alpha$ and $\Delta$ |
| 3 | Generate $X_0$ and $w_0$ according to the TMC method and Equation (21) |
| 4 | // Over all time steps: |
| 5 | for $k = 1$ to $T$ do |
| 6 | Set $\Delta_k = \Delta$, or use another strategy to select $\Delta_k$ |
| 7 | Choose the confidence interval according to Equation (25) or (26) |
| 8 | Deploy particles according to $\Delta_k$ |
| 9 | Fuse the weights according to Equations (28)–(31) |
| 10 | Choose particles and their weights according to Equations (32) and (33) |
| 11 | Estimate the state according to Equation (34) |
| 12 | end for |
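Putting the steps together, the following Python sketch runs a one-dimensional analogue of Algorithm 1 on a hypothetical scalar linear Gaussian model and compares it with the KF, which is the exact Bayesian solution in that case. It assumes additive zero-mean Gaussian noise, equal-tailed confidence intervals, the rough confidence interval, a constant sampling interval, and a hypothetical cumulative-weight threshold `beta` for particle choice. It illustrates the structure of the algorithm and is not the authors' two-dimensional implementation.

```python
import math
import random
from statistics import NormalDist


def gauss_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def axis(lo, hi, step):
    """Uniform grid from lo with spacing step, not exceeding hi."""
    return [lo + j * step for j in range(int(math.floor((hi - lo) / step)) + 1)]


def tmcf_1d(zs, a, q, r, m0, p0, alpha=0.9999, step=0.1, beta=0.9999):
    """TMCF-style filter for x_k = a x_{k-1} + v, z_k = x_k + n (scalar, Gaussian)."""
    zq = NormalDist().inv_cdf(1.0 - (1.0 - alpha) / 2.0)  # equal-tailed quantile
    # Initialization: TMC particles and weights for x_0 ~ N(m0, p0)
    xs = axis(m0 - zq * math.sqrt(p0), m0 + zq * math.sqrt(p0), step)
    ws = [gauss_pdf(x, m0, p0) for x in xs]
    total = sum(ws)
    ws = [w / total for w in ws]
    d = zq * math.sqrt(q)  # amplification of the zero-mean noise interval
    estimates = []
    for z in zs:
        # Particle deployment in the rough confidence interval
        moved = [a * x for x in xs]
        grid = axis(min(moved) - d, max(moved) + d, step)
        # Weight mixing: prior from transition probabilities, then likelihood
        prior = [sum(w * gauss_pdf(g, m, q) for m, w in zip(moved, ws)) for g in grid]
        post = [p * gauss_pdf(z, g, r) for p, g in zip(prior, grid)]
        total = sum(post)
        post = [p / total for p in post]
        # Particle choice: keep the largest weights until their sum reaches beta
        order = sorted(range(len(grid)), key=lambda j: post[j], reverse=True)
        keep, acc = [], 0.0
        for j in order:
            keep.append(j)
            acc += post[j]
            if acc >= beta:
                break
        xs = [grid[j] for j in keep]
        total = sum(post[j] for j in keep)
        ws = [post[j] / total for j in keep]
        # State estimation: weighted mean of the chosen particles
        estimates.append(sum(w * x for w, x in zip(ws, xs)))
    return estimates


def kalman_1d(zs, a, q, r, m0, p0):
    """Reference KF for the same scalar model."""
    m, p, out = m0, p0, []
    for z in zs:
        m, p = a * m, a * a * p + q
        k = p / (p + r)
        m, p = m + k * (z - m), (1.0 - k) * p
        out.append(m)
    return out


if __name__ == "__main__":
    rng = random.Random(42)
    a, q, r = 0.9, 0.5, 0.5
    x, zs = rng.gauss(0.0, 1.0), []
    for _ in range(20):
        x = a * x + rng.gauss(0.0, math.sqrt(q))
        zs.append(x + rng.gauss(0.0, math.sqrt(r)))
    diff = max(abs(t - k) for t, k in zip(tmcf_1d(zs, a, q, r, 0.0, 1.0),
                                           kalman_1d(zs, a, q, r, 0.0, 1.0)))
    print(f"max |TMCF - KF| = {diff:.2e}")
```

Because no particle is drawn at random, repeated runs on the same observations give identical estimates, and the gap to the KF shrinks as the confidence increases and the sampling interval decreases.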

5. Numerical Simulation

In this section, a two-dimensional linear system is used to assess the performance of the TMCF [43]:

where $x_k$ and $z_k$ denote the state and observation at time step $k$, respectively; $v_k$ denotes the system noise sequence at time step $k$; and $n_k$ denotes the observation noise sequence at time step $k$. In the two experiments, and the initial state is . The initial probability satisfies . It is well known that the KF is the optimal filter for a linear Gaussian system under the Bayesian filtering principle. Hence, the performance of the TMCF is first assessed in a linear Gaussian system, where the KF estimates are used as a reference to evaluate how closely the TMCF approximates Bayesian filtering. Because the TMCF algorithm is based on the Monte Carlo principle, its performance is also compared with that of the PF in this experiment. Second, a heavy-tailed distribution (non-Gaussian environment) is considered in the same linear system, and the performance of the TMCF is compared with that of the KF and PF. Four sets of parameters are selected for the TMCF algorithm, as shown in Table 1. Two forms of the mean square error (MSE) are used to evaluate the performance of the algorithms:

Table 1.

Parameters of the TMCF.

In this section, MATLAB is used to build the simulation environment, in which the performance of the TMCF, KF, and PF (including filtering precision, number of particles, and filtering time) is verified and compared. All data are generated by the simulation program. The configuration of the simulation computer is given in Table 2.

Table 2.

Configuration environment.

5.1. Gaussian Distribution System

In this experiment, Gaussian models are selected for both the system noise and the observation noise: , and .

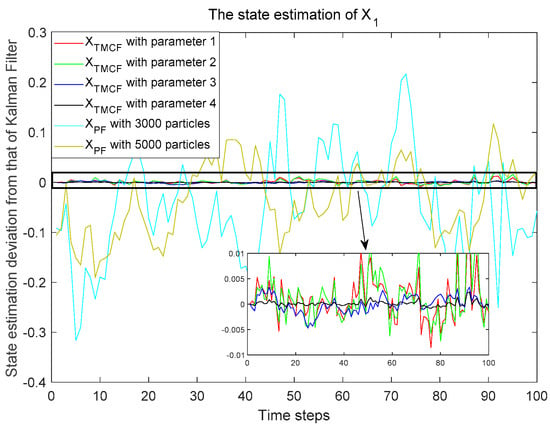

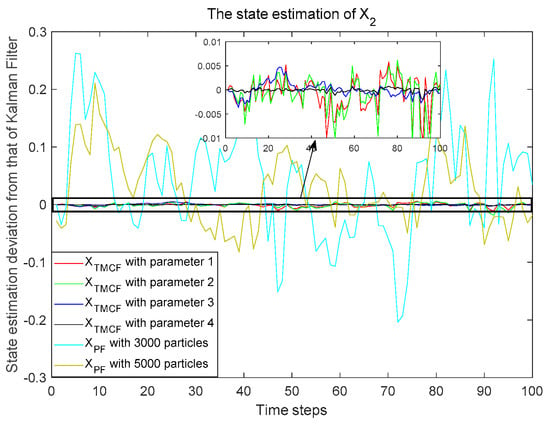

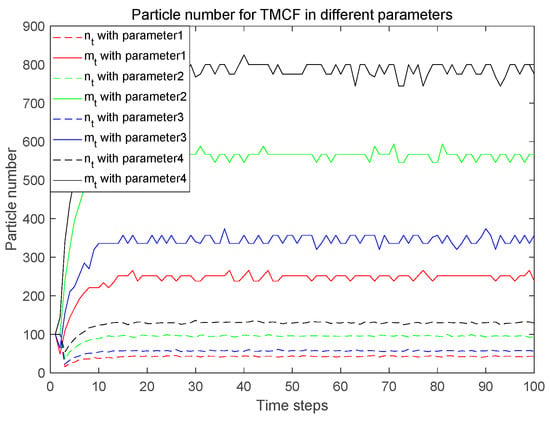

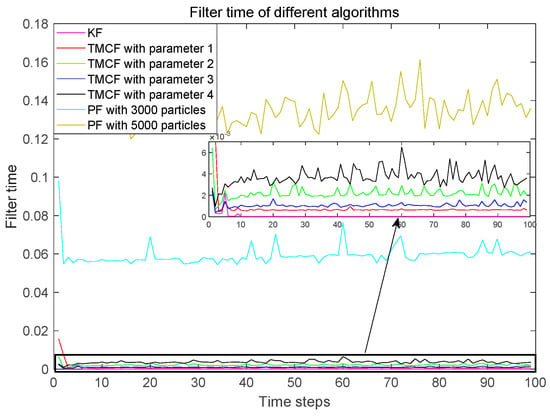

PFs with 3000 and 5000 particles are used as comparison algorithms for the TMCF. Figure 9 and Figure 10 show the deviation between the filtering results of each algorithm and those of the KF for the two state components, respectively. The results show that it is difficult for the PF to approximate the performance of the KF in the linear Gaussian system. Even with 3000 particles, the results of the PF deviate from those of the KF by about 0.15 in each filtering step. Moreover, as the number of particles increases substantially, this deviation declines very slowly: it is still about 0.1 with 5000 particles. This is caused by the randomness of the Monte Carlo method, which is greatly reduced when the TMC method is used to generate particles. With the TMC method, the results of the TMCF are very close to those of the KF: the difference is less than 0.01 for all four parameter sets. The deviation decreases as the confidence increases and the sampling interval decreases; in particular, it is less than 0.001 when the confidence is 0.9999 and the sampling interval is 0.8. Figure 11 shows that, for parameter set 1, only about 40 particles need to be transferred in each filtering step, and about 250 particles are deployed. The number of required particles increases as the sampling interval decreases and the confidence increases; for parameter set 4, about 130 particles are transferred and only about 800 particles are deployed. Figure 12 shows the time consumed in each filtering step by the different algorithms on the same computer. The computation time of the TMCF is much less than that of the PF. Table 3 shows the filtering results over 5000 time steps, evaluated using Equations (37) and (38). The MSE of the PF with 5000 particles is about 0.01, and its computation time is about 0.1 s per filtering step.
The corresponding MSE of the TMCF with parameter set 4 is far smaller (Table 3), and its computation time is only about 0.0035 s. The results demonstrate that, compared with the PF, the TMCF approximates the KF better with fewer particles and less computation in linear Gaussian systems. Unlike the KF, the TMCF does not rely on the propagation of the conditional means and covariances of Gaussian noise in linear systems; therefore, it is also applicable to non-Gaussian noise.

Figure 9.

Difference between the state estimation results of the different algorithms and those of KF for .

Figure 10.

Difference between the state estimation results of different algorithms and those of KF for .

Figure 11.

Number of particles required for the TMCF algorithm with different parameters.

Figure 12.

Computation time for the TMCF algorithm with different parameters.

Table 3.

Performance of the different filter algorithms with different parameters in the linear/Gaussian system.

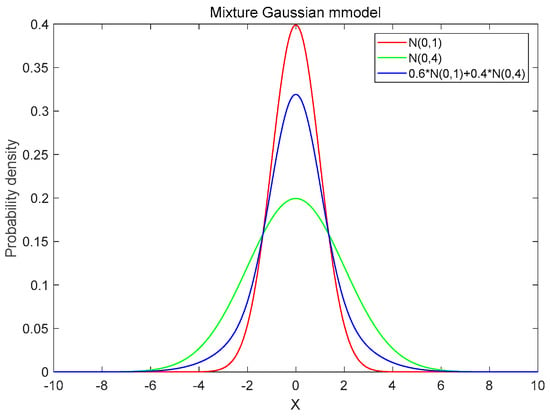

5.2. Gaussian Mixture Distribution System

In this experiment, Gaussian mixture models are selected for both the system noise and the observation noise: , and .
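Because the TMCF only needs to evaluate the noise PDF (for the weights) and its confidence interval (for particle deployment), a Gaussian mixture noise model can be used directly. The Python sketch below shows both operations, plus sampling for simulation, for a hypothetical scalar mixture; the component weights, means, and variances are placeholders, not the values used in this experiment.

```python
import math
import random
from statistics import NormalDist

# Hypothetical heavy-tailed mixture: (weight, mean, variance) per component
COMPONENTS = [(0.8, 0.0, 1.0), (0.2, 0.0, 25.0)]


def mixture_pdf(x, comps=COMPONENTS):
    return sum(p * NormalDist(m, math.sqrt(v)).pdf(x) for p, m, v in comps)


def mixture_cdf(x, comps=COMPONENTS):
    return sum(p * NormalDist(m, math.sqrt(v)).cdf(x) for p, m, v in comps)


def mixture_interval(alpha, comps=COMPONENTS, lo=-1e3, hi=1e3):
    """Equal-tailed confidence interval found by bisection on the mixture CDF."""
    def quantile(prob):
        a, b = lo, hi
        for _ in range(200):
            mid = 0.5 * (a + b)
            if mixture_cdf(mid, comps) < prob:
                a = mid
            else:
                b = mid
        return 0.5 * (a + b)
    tail = (1.0 - alpha) / 2.0
    return quantile(tail), quantile(1.0 - tail)


def mixture_sample(rng, comps=COMPONENTS):
    """Pick a component by weight, then draw from that Gaussian."""
    u, acc = rng.random(), 0.0
    for p, m, v in comps:
        acc += p
        if u < acc:
            return rng.gauss(m, math.sqrt(v))
    p, m, v = comps[-1]  # guard against round-off in the weight sum
    return rng.gauss(m, math.sqrt(v))


if __name__ == "__main__":
    print(mixture_interval(0.99))
    rng = random.Random(0)
    print([round(mixture_sample(rng), 3) for _ in range(5)])
```

The wide second component produces the heavy tails; with these placeholder values the 0.99 confidence interval is much wider than that of the dominant unit-variance component alone.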

The noise model is shown in Figure 13. Similar to Table 3 in the previous experiment, Table 4 shows the filtering results over 5000 time steps evaluated using Equation (37). The MSE of the PF with 3000 particles is greater than that of the KF. The TMCF with parameter set 1 performs better than both the PF with 5000 particles and the KF, while transferring only 110 particles and deploying only 650 particles. Its computation time is 0.006 s, much less than that of the PF. As the sampling interval decreases and the confidence increases, the filtering accuracy improves and the computation time increases. With parameter set 4, compared with the PF with 5000 particles, the accuracy of the TMCF is improved by 0.01, and the computation time is reduced from 0.20 s to 0.067 s.

Figure 13.

Probability model of Gaussian mixture noise.

Table 4.

Performance of the different filter algorithms with different parameters in the linear/mixture Gaussian system.

6. Conclusions

In this paper, the TMCF algorithm was proposed to overcome the challenge of non-Gaussian filtering. First, the TMC method was proposed to sample particles in the confidence interval according to the sampling interval; its performance was demonstrated through simulation, and a property of the method (Theorem 1) was proved. Second, the TMCF algorithm was proposed by introducing the TMC method into the PF framework. Unlike the PF, the TMCF propagates the distribution through a series of weight calculations and uses particles only to occupy the state space within the confidence interval. Third, numerical simulations demonstrated that the MSE between the TMCF and Kalman filter (KF) estimates was about 10−6 in a two-dimensional linear/Gaussian system. In a two-dimensional linear/non-Gaussian system, the MSE of the TMCF with parameter set 4 was 0.04 and 0.01 lower than those of the KF and the PF with 5000 particles, respectively. The single-step filtering time of the TMCF was 0.006 s with parameter set 1 and 0.067 s with parameter set 4, compared with 0.2 s for the PF with 5000 particles.

In summary, we have designed an improved PF algorithm, which we call the TMCF algorithm. In non-Gaussian filtering environments, it can both improve the filtering accuracy and reduce the computation time. In emerging fields such as artificial intelligence, multi-sensor data fusion, and multi-target tracking, data are becoming increasingly diverse and their sources increasingly complex, so the quality of nonlinear/non-Gaussian filtering methods is becoming increasingly important in data fusion. Our work lays a theoretical foundation for nonlinear/non-Gaussian filtering and can be used to improve filtering precision while reducing computation time in some non-Gaussian filtering environments. In the future, we will apply the algorithm to integrated navigation systems to improve the positioning accuracy of satellite navigation.

Author Contributions

Conceptualization, R.X. and X.Q.; methodology, R.X.; software, X.Q.; validation, R.X., X.Q. and Y.Z.; formal analysis, X.Q.; writing—original draft preparation, X.Q.; writing—review and editing, R.X. and X.Q.; supervision, Y.Z.; funding acquisition, R.X., X.Q. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Research and Development Program of China, grant number 2017YFB0503400, and in part by the National Natural Science Foundation of China, grant numbers U2033215, U1833125 and 61803037.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhu, T.; Pimentel, M.A.F.; Clifford, G.D.; Clifton, D.A. Unsupervised Bayesian Inference to Fuse Biosignal Sensory Estimates for Personalizing Care. IEEE J. Biomed. Health Inform. 2019, 23, 47–58. [Google Scholar] [CrossRef]

- Gao, Y.; Wen, Y.; Wu, J. A Neural Network-Based Joint Prognostic Model for Data Fusion and Remaining Useful Life Prediction. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 117–127. [Google Scholar] [CrossRef] [PubMed]

- Lan, H.; Sun, S.; Wang, Z.; Pan, Q.; Zhang, Z. Joint Target Detection and Tracking in Multipath Environment: A Variational Bayesian Approach. IEEE Trans. Aerosp. Electron. Syst. 2019, 56, 2136–2156. [Google Scholar] [CrossRef]

- Nitzan, E.; Halme, T.; Koivunen, V. Bayesian Methods for Multiple Change-Point Detection with Reduced Communication. IEEE Trans. Signal Process. 2020, 68, 4871–4886. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, H. Accurate Smoothing for Continuous-Discrete Nonlinear Systems with Non-Gaussian Noise. IEEE Signal Process. Lett. 2019, 26, 465–469. [Google Scholar] [CrossRef]

- Yin, X.; Zhang, Q.; Wang, H.; Ding, Z. RBFNN-Based Minimum Entropy Filtering for a Class of Stochastic Nonlinear Systems. IEEE Trans. Autom. Control 2020, 65, 376–381. [Google Scholar] [CrossRef]

- Huang, Y.; Zhang, Y.; Li, N.; Chambers, J. Robust student’s t based nonlinear filter and smoother. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2586–2596. [Google Scholar] [CrossRef]

- Ouahabi, A. Signal and Image Multiresolution Analysis; ISTE-Wiley: London, UK, 2012.

- Gao, M.; Cai, Q.; Zheng, B.; Shi, J.; Ni, Z.; Wang, J.; Lin, H. A Hybrid YOLOv4 and Particle Filter Based Robotic Arm Grabbing System in Nonlinear and Non-Gaussian Environment. Electronics 2021, 10, 1140.

- Shoushtari, H.; Willemsen, T.; Sternberg, H. Many Ways Lead to the Goal—Possibilities of Autonomous and Infrastructure-Based Indoor Positioning. Electronics 2021, 10, 397.

- Adeli, H.; Ghosh-Dastidar, S.; Dadmehr, N. A Wavelet-Chaos Methodology for Analysis of EEGs and EEG Subbands to Detect Seizure and Epilepsy. IEEE Trans. Biomed. Eng. 2007, 54, 205–211.

- Khorshidi, R.; Shabaninia, F.; Vaziri, M.; Vadhva, S. Kalman-Particle Filter Used for Particle Swarm Optimization of Economic Dispatch Problem. In Proceedings of the IEEE Global Humanitarian Technology Conference, Seattle, WA, USA, 21–24 October 2012; pp. 220–223.

- Ouahabi, A. A Review of Wavelet Denoising in Medical Imaging. In Proceedings of the International Workshop on Systems, Signal Processing and Their Applications (IEEE/WOSSPA’13), Algiers, Algeria, 12–15 May 2013; pp. 19–26.

- Sidahmed, S.; Messali, Z.; Ouahabi, A.; Trépout, S.; Messaoudi, C.; Marco, S. Nonparametric denoising methods based on contourlet transform with sharp frequency localization: Application to electron microscopy images with low exposure time. Entropy 2015, 17, 2781–2799.

- Julier, S.; Uhlmann, J. A New Extension of the Kalman Filter to Nonlinear Systems. Proc. SPIE 1997, 3068, 182–193.

- Wan, E.A.; van der Merwe, R. The Unscented Kalman Filter for Nonlinear Estimation. In Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No.00EX373), Lake Louise, AB, Canada, 4 October 2000; pp. 153–158.

- Julier, S.J.; Uhlmann, J.K. The Scaled Unscented Transformation. In Proceedings of the 2002 American Control Conference, Anchorage, AK, USA, 8–10 May 2002; pp. 4555–4559.

- Arasaratnam, I.; Haykin, S. Cubature Kalman Filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269.

- Jia, B.; Xin, M.; Cheng, Y. High-degree cubature Kalman filter. Automatica 2013, 49, 510–518.

- Ito, K.; Xiong, K. Gaussian filters for nonlinear filtering problems. IEEE Trans. Autom. Control 2000, 45, 910–927.

- Gordon, N.J.; Salmond, D.J.; Smith, A.F.M. Novel Approach to Nonlinear/non-Gaussian Bayesian State Estimation. IEE Proc. F 1993, 140, 107–113.

- Carpenter, J.; Clifford, P.; Fearnhead, P. Improved particle filter for nonlinear problems. Proc. Inst. Elect. Eng. Radar Sonar Navig. 1999, 146, 2–7.

- Chen, Z. Bayesian Filtering: From Kalman Filters to Particle Filters, and Beyond; McMaster Univ.: Hamilton, ON, Canada, 2003.

- Abdzadeh-Ziabari, H.; Zhu, W.; Swamy, M.N.S. Joint Carrier Frequency Offset and Doubly Selective Channel Estimation for MIMO-OFDMA Uplink with Kalman and Particle Filtering. IEEE Trans. Signal Process. 2018, 66, 4001–4012.

- Freitas, J.D.; Niranjan, M.; Gee, A.H.; Doucet, A. Sequential Monte Carlo Methods to Train Neural Network Models. Neural Comput. 2000, 12, 955–993.

- Van Der Merwe, R.; Doucet, A.; De Freitas, N.; Wan, E.A. The Unscented Particle Filter. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2001; pp. 584–590.

- Zhang, C.; Taghvaei, A.; Mehta, P.G. Feedback Particle Filter on Riemannian Manifolds and Matrix Lie Groups. IEEE Trans. Autom. Control 2017, 63, 2465–2480.

- Li, T.; Bolic, M.; Djuric, P.M. Resampling Methods for Particle Filtering: Classification, implementation, and strategies. IEEE Signal Process. Mag. 2015, 32, 70–86.

- Liu, S.; Tang, L.; Bai, Y.; Zhang, X. A Sparse Bayesian Learning-Based DOA Estimation Method With the Kalman Filter in MIMO Radar. Electronics 2020, 9, 347.

- Kitagawa, G. Monte Carlo filter and smoother for non-Gaussian nonlinear state space models. J. Comput. Graph. Statist. 1996, 5, 1–25.

- Bergman, N.; Doucet, A.; Gordon, N. Optimal estimation and Cramer-Rao bounds for partial non-Gaussian state space models. Ann. Inst. Statist. Math. 2001, 53, 97–112.

- Kulikov, G.Y.; Kulikova, M.V. The Accurate Continuous-Discrete Extended Kalman Filter for Radar Tracking. IEEE Trans. Signal Process. 2016, 64, 948–958.

- Wang, J.; Wang, J.; Zhang, D.; Shao, X. Stochastic Feedback Based Kalman Filter for Nonlinear Continuous-Discrete Systems. IEEE Trans. Autom. Control 2018, 63, 3002–3009.

- Arasaratnam, I.; Haykin, S.; Hurd, T.R. Cubature Kalman Filtering for Continuous-Discrete Systems: Theory and Simulations. IEEE Trans. Signal Process. 2010, 58, 4977–4993.

- Gultekin, S.; Paisley, J. Nonlinear Kalman Filtering with Divergence Minimization. IEEE Trans. Signal Process. 2017, 65, 6319–6331.

- Fasano, A.; Germani, A.; Monteriù, A. Reduced-Order Quadratic Kalman-Like Filtering of Non-Gaussian Systems. IEEE Trans. Autom. Control 2013, 58, 1744–1757.

- Vo, B.; Singh, S.; Doucet, A. Sequential Monte Carlo methods for multitarget filtering with random finite sets. IEEE Trans. Aerosp. Electron. Syst. 2005, 41, 1224–1245.

- Tulsyan, A.; Huang, B.; Gopaluni, R.B.; Forbes, J.F. A Particle Filter Approach to Approximate Posterior Cramer-Rao Lower Bound: The Case of Hidden States. IEEE Trans. Aerosp. Electron. Syst. 2013, 49, 2478–2495.

- Li, K.; Pfaff, F.; Hanebeck, U.D. Unscented Dual Quaternion Particle Filter for SE(3) Estimation. IEEE Control Syst. Lett. 2021, 5, 647–652.

- Ahwiadi, M.; Wang, W. An Enhanced Mutated Particle Filter Technique for System State Estimation and Battery Life Prediction. IEEE Trans. Instrum. Meas. 2019, 68, 923–935.

- Haque, M.S.; Choi, S.; Baek, J. Auxiliary Particle Filtering-Based Estimation of Remaining Useful Life of IGBT. IEEE Trans. Ind. Electron. 2018, 65, 2693–2703.

- Li, Y.; Coates, M. Particle Filtering with Invertible Particle Flow. IEEE Trans. Signal Process. 2017, 65, 4102–4116.

- Yang, T.; Mehta, P.G.; Meyn, S.P. Feedback Particle Filter. IEEE Trans. Autom. Control 2013, 58, 2465–2480.

- Vitetta, G.M.; Viesti, P.D.; Sirignano, E.; Montorsi, F. Multiple Bayesian Filtering as Message Passing. IEEE Trans. Signal Process. 2020, 68, 1002–1020.

- Lin, Y.; Miao, L.; Zhou, Z. An Improved MCMC-Based Particle Filter for GPS-Aided SINS In-Motion Initial Alignment. IEEE Trans. Instrum. Meas. 2020, 69, 7895–7905.

- Lim, J.; Hong, D. Gaussian Particle Filtering Approach for Carrier Frequency Offset Estimation in OFDM Systems. IEEE Signal Process. Lett. 2013, 20, 367–370.

- Andrieu, C.; de Freitas, N.; Doucet, A. Rao-Blackwellised particle filtering via data augmentation. Adv. Neural Inform. Process. Syst. 2002, 14, 561–567.

- Kouritzin, A.M. Residual and stratified branching particle filters. Comp. Stat. Data Anal. 2017, 111, 145–165.

- Qiang, X.; Zhu, Y.; Xue, R. SVRPF: An Improved Particle Filter for a Nonlinear/Non-Gaussian Environment. IEEE Access 2019, 7, 151638–151651.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).