Cybersafety Approach to Cybersecurity Analysis and Mitigation for Mobility-as-a-Service and Internet of Vehicles

Abstract

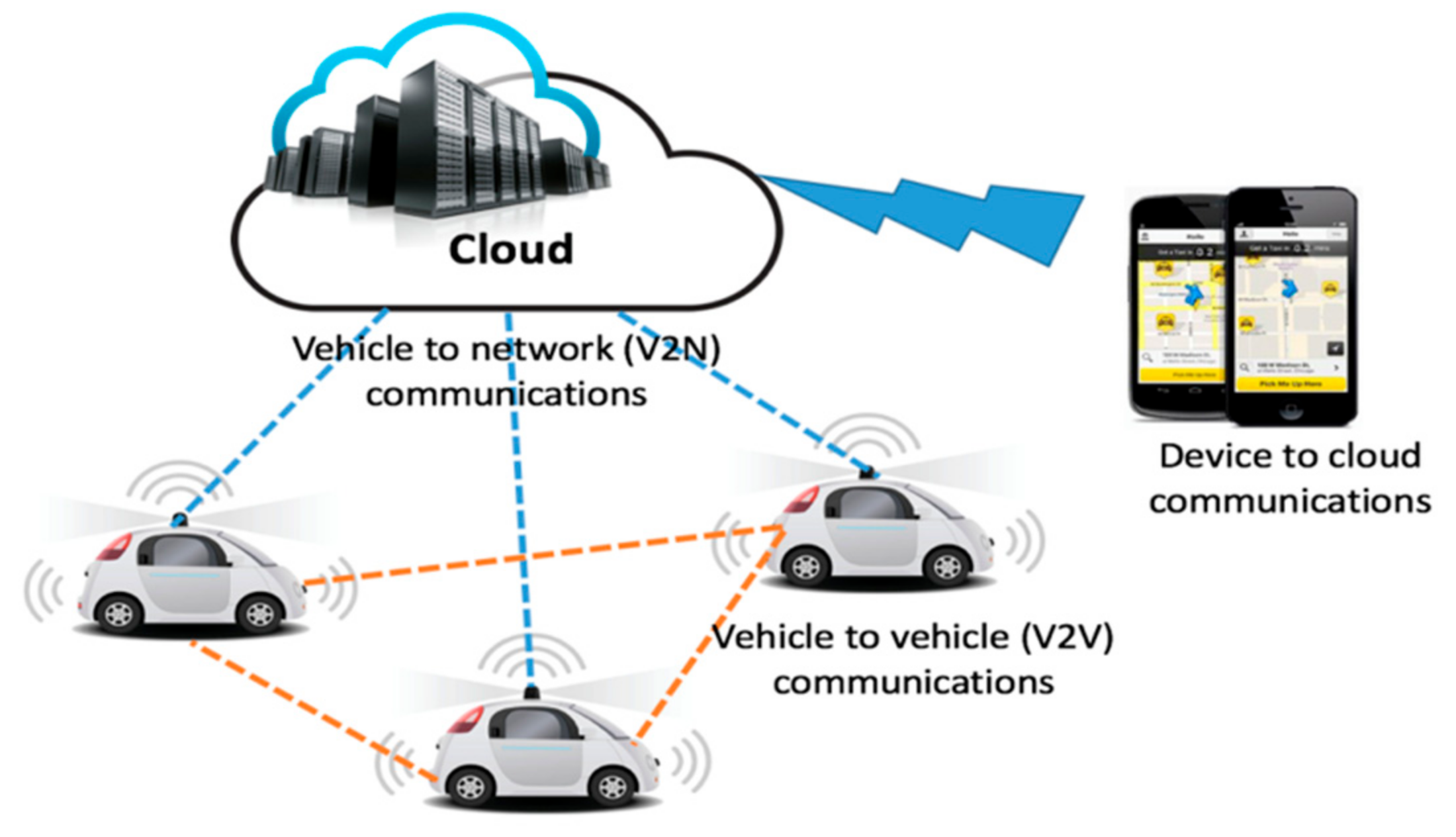

1. Introduction: Autonomous Vehicles for Urban Mobility

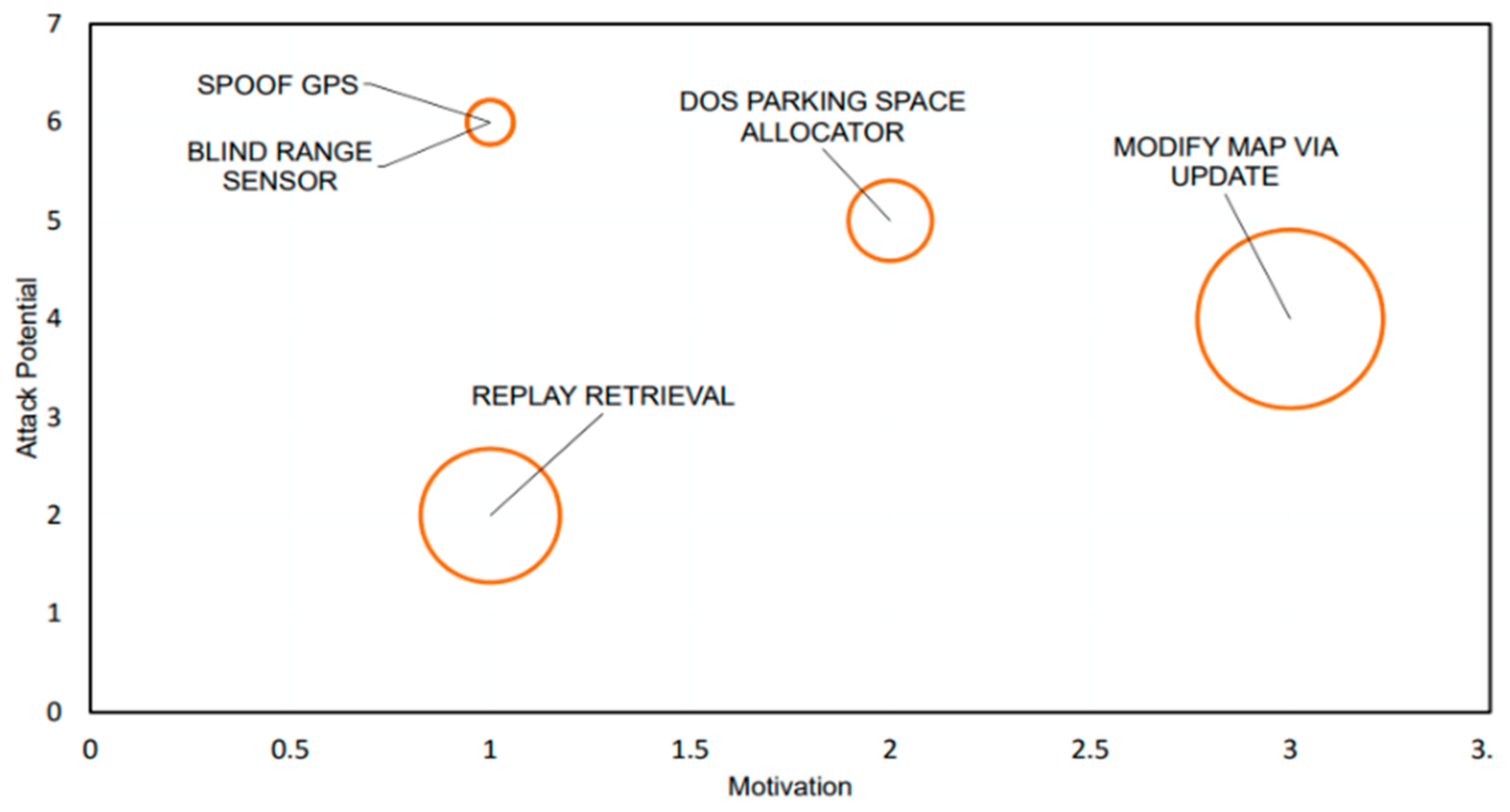

2. Materials and Methods

- Attack potential (vertical axis): Considers the difference between the threat agent’s ability to execute a successful attack and the system’s ability to withstand such attacks. Parameters include the time required for an attacker to identify a vulnerability and launch an attack; availability of attacker’s finances versus finances required to launch a successful attack; attacker’s skill set versus the system’s required skills.

- Motivation (horizontal axis): Considers the motivation and determination of the threat agent to execute the attack. Parameters include financial gain, ideology, passion, and risk.

- Impact (size of circle): Considers the losses to stakeholders in the event of a successful attack, factoring in financial loss, privacy, and safety consequences.
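As a rough illustration of how the three dimensions above could be recorded before plotting, the sketch below models each threat as one point on the risk map. The 1-to-5 scales, the field names, the example threats, and the use of a simple difference for attack potential are all assumptions made for this example; the text describes the parameters qualitatively and does not prescribe a scoring formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatPoint:
    """One circle on the risk map (all scales are hypothetical, 1 = low, 5 = high)."""
    name: str
    attacker_capability: int  # time, finances, and skills available to the threat agent
    system_resilience: int    # time, finances, and skills the system demands of an attacker
    motivation: int           # horizontal axis
    impact: int               # size of circle

    @property
    def attack_potential(self) -> int:
        # Vertical axis: attacker's ability minus the system's ability to withstand attack.
        return self.attacker_capability - self.system_resilience


def rank_threats(points):
    """Order threats by attack potential, then motivation, then impact (highest first)."""
    return sorted(
        points,
        key=lambda p: (p.attack_potential, p.motivation, p.impact),
        reverse=True,
    )


# Illustrative values only; they are not taken from the study.
threats = [
    ThreatPoint("infotainment defacement", 3, 3, 2, 1),
    ThreatPoint("remote takeover of AV", 4, 2, 5, 5),
]

for point in rank_threats(threats):
    print(point.name, point.attack_potential, point.motivation, point.impact)
```

Keeping attack potential as a derived property rather than a stored field means the two underlying estimates stay visible and can be revised independently.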

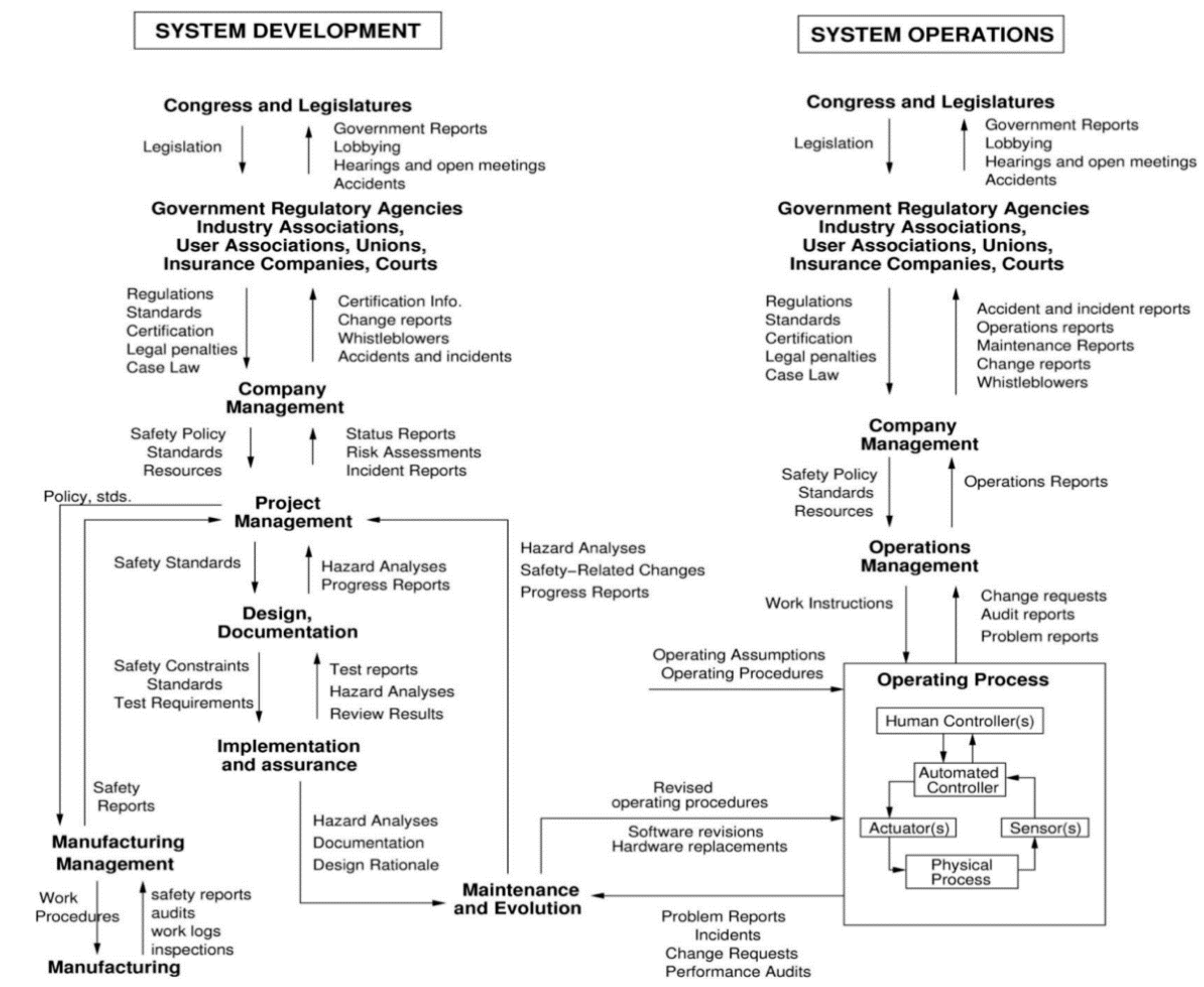

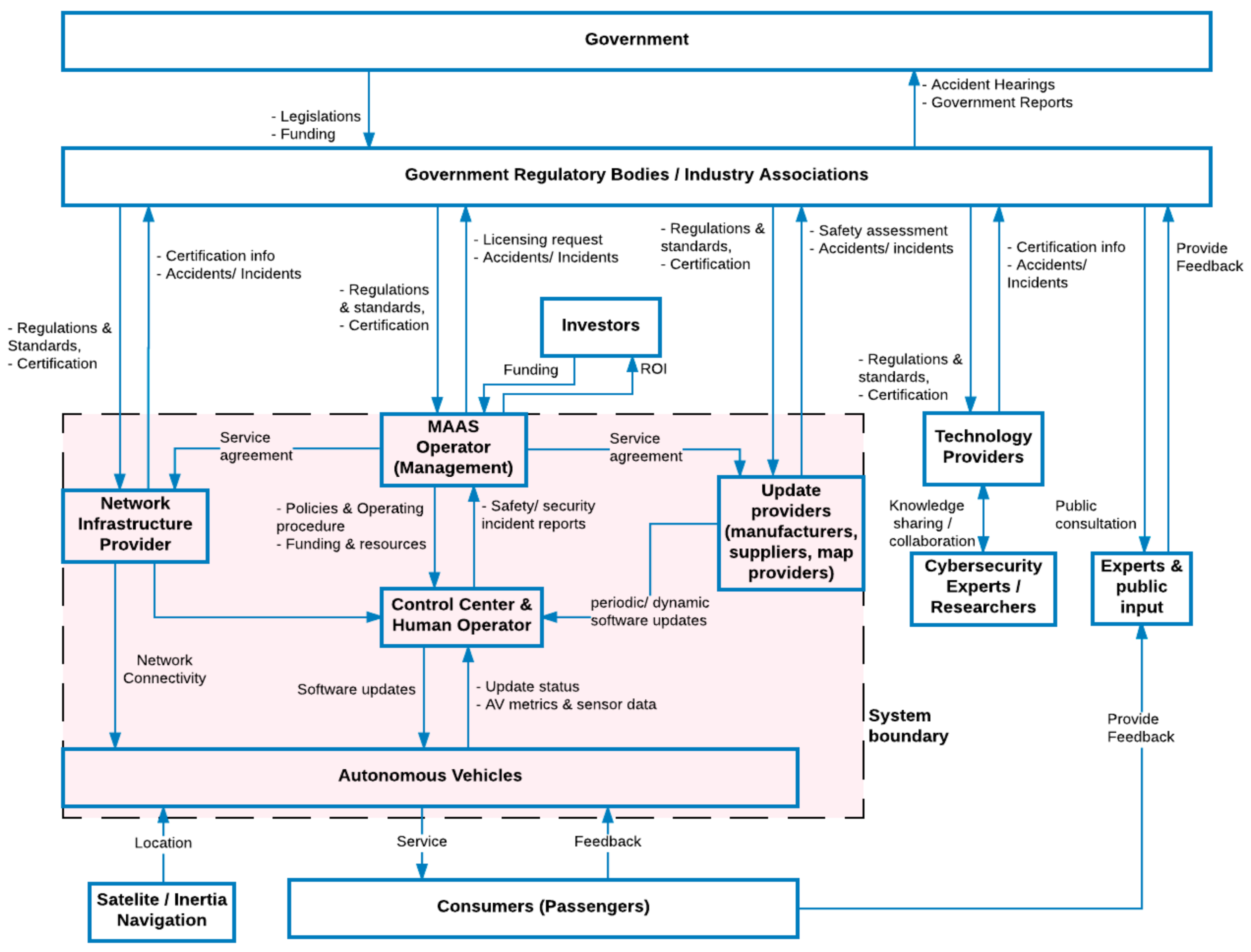

2.1. Applying Cybersafety on Mobility-as-a-Service Vehicle Fleets

- Establish the system engineering foundation, which includes defining and framing the problem, as well as identifying accidents/losses and hazards related to the case.

- Identify potentially unsafe/unsecure control actions, which documents the generic functional control structure and control actions that may lead to the identified hazards.

- Identify causes of unsafe/unsecure control actions and eliminate or control them, which includes identification of scenarios leading to unsafe/unsecure control actions, and using the identified unsafe control actions to create safety requirements and constraints.

2.2. Stage 1: Establish the System Engineering Foundation

- A1: Damage to vehicle or public property

- A2: Injury or death to people

- A3: Degradation of system availability or performance

- A4: Loss of critical information

2.3. Stage 2: Identify Potentially Unsafe/Unsecure Control Actions: Functional Control Structure

2.4. Unsafe/Unsecure Control Actions

- A control action required for safety is not provided;

- An unsafe/unsecure control action is provided that leads to a hazard;

- A potentially safe control action is provided too late, too early, or out of sequence;

- A safe control action is stopped too soon or applied too long.
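The four categories above can be treated as guidewords that are applied to every control action in the functional control structure. The following is a minimal sketch of that enumeration step; the enum member names and the `candidate_prompts` helper are hypothetical and only illustrate how an analyst might generate a checklist to review.

```python
from enum import Enum


class UCAType(Enum):
    """The four ways a control action can be unsafe/unsecure, as listed above."""
    NOT_PROVIDED = "A control action required for safety is not provided"
    PROVIDED_UNSAFE = "An unsafe/unsecure control action is provided that leads to a hazard"
    WRONG_TIMING = "A potentially safe control action is provided too late, too early, or out of sequence"
    WRONG_DURATION = "A safe control action is stopped too soon or applied too long"


def candidate_prompts(controller: str, controlled: str, control_action: str):
    """Return one review prompt per guideword for a single control action."""
    return [
        f"{controller} -> {controlled} | {control_action} | {uca_type.value}"
        for uca_type in UCAType
    ]


for prompt in candidate_prompts("Control Center", "AV", "Periodic/dynamic software updates"):
    print(prompt)
```

Each prompt is only a candidate: the analyst still decides whether it is hazardous, not hazardous, or not applicable in context.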

2.5. Stage 3: Identify Causes for Unsafe/Unsecure Control Actions and Propose Mitigation Measures

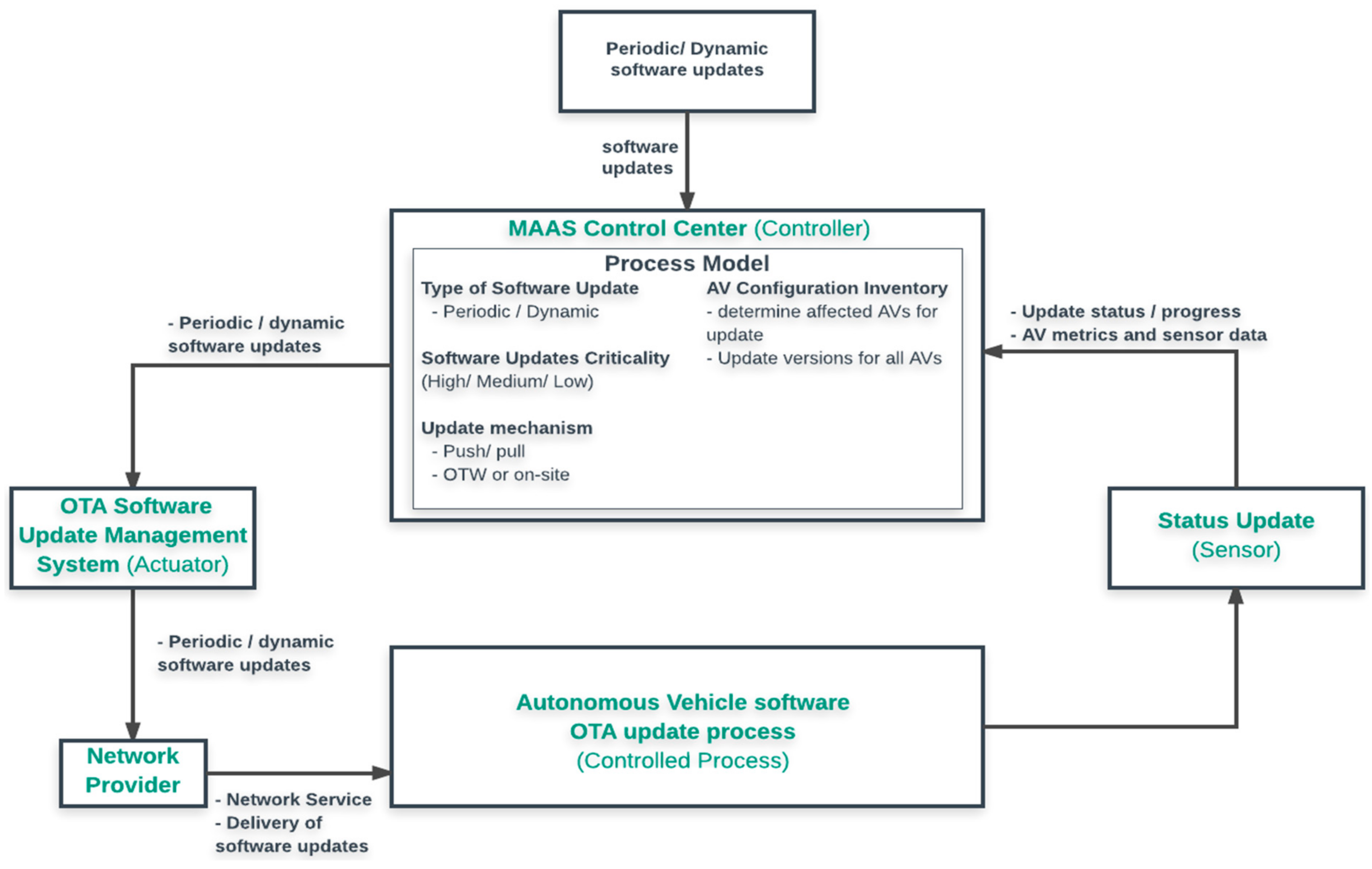

2.6. Interactions between MaaS Control Center and AVs

3. Results: Summary of Cybersafety Analysis

- Based on a top-down approach, its scope of analysis is bounded by the unacceptable accidents/losses and hazards identified upfront. One key lesson from this study is that even for a relatively narrow system boundary, the number of causal scenarios and mitigation actions can expand substantially. It is therefore recommended to begin the analysis at a higher level of abstraction and then add detail by zooming further into the functional control structure in subsequent iterations.

- One of the distinguishing features of this approach is the consideration of sociotechnical interactions beyond the technical operational aspects of the system. Analyzing the interactions within the whole ecosystem can yield insights into how external interactions may affect the process models of components further down the control structure.

- Incorporating heuristics could aid the identification of unsafe/unsecure interactions, as well as the causal scenarios in which such interactions may take place. In Stage 2, the four ways in which unsafe control actions can occur (i.e., control action not provided, unsafe/unsecure control action provided, control action provided too late/too early/out of sequence, and control action stopped too soon or applied too long) are to some extent similar to HAZOP guidewords. In Stage 3, in addition to classifying causal factors to identify possible accident scenarios, it may be useful to apply the Confidentiality, Integrity, and Availability (“CIA”) guidewords as a creativity aid for identifying cybersecurity causal factors.

- An additional benefit is the ability to trace mitigation requirements to the hazard(s) that these requirements are intended to mitigate against. Having clear traceability to the intent is important to help developers and testers validate the system, as well as ensure that the mitigation measures are maintained should the system be upgraded or replaced.
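The traceability benefit noted in the last point can be made concrete with a small lookup structure. The sketch below uses a subset of the unsafe/unsecure control actions, hazards, and requirements reported in this article's tables; the dictionary layout and the function names are assumptions for illustration, not part of the Cybersafety method itself.

```python
# Hazards referenced by each unsafe/unsecure control action (subset of the UCA table).
UCA_HAZARDS = {
    "UCA-7": ["H1", "H2"],
    "UCA-8": ["H1", "H2"],
    "UCA-10": ["H1", "H2"],
    "UCA-12": ["H1", "H2", "H4"],
}

# UCAs addressed by each requirement (subset of the Stage 3 scenario table).
REQUIREMENT_UCAS = {
    "R-1": ["UCA-7", "UCA-8"],
    "R-4": ["UCA-10", "UCA-12"],
}


def hazards_for(requirement: str):
    """Trace a requirement forward to the hazards it is intended to mitigate."""
    hazards = set()
    for uca in REQUIREMENT_UCAS[requirement]:
        hazards.update(UCA_HAZARDS[uca])
    return sorted(hazards)


def requirements_for(hazard: str):
    """Trace a hazard back to every requirement that mitigates it."""
    return sorted(
        req for req in REQUIREMENT_UCAS if hazard in hazards_for(req)
    )


print(hazards_for("R-4"))       # ['H1', 'H2', 'H4']
print(requirements_for("H4"))   # ['R-4']
```

A reverse lookup such as `requirements_for` is what lets a tester confirm, after an upgrade or replacement, that no hazard has been left without a mitigating requirement.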

3.1. Comparison between Cybersafety and Combined Harm Analysis of Safety and Security for Information Systems (CHASSIS)

3.2. Analysis Approach

3.3. Level of Abstraction

3.4. Scope of Analysis

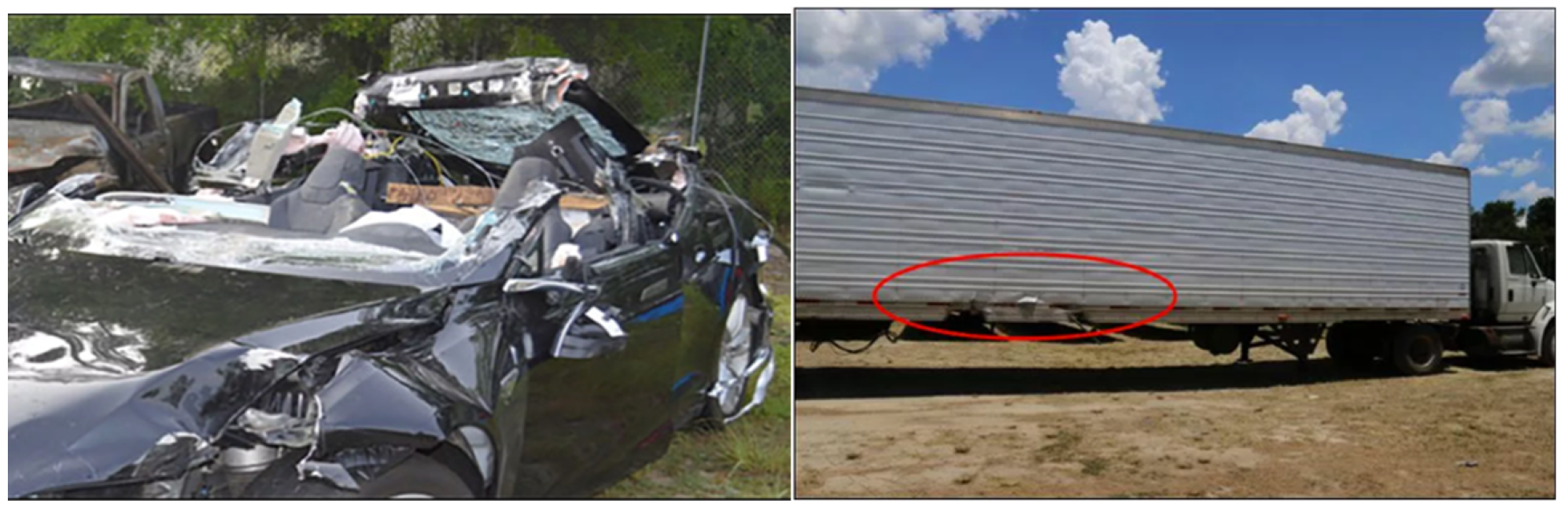

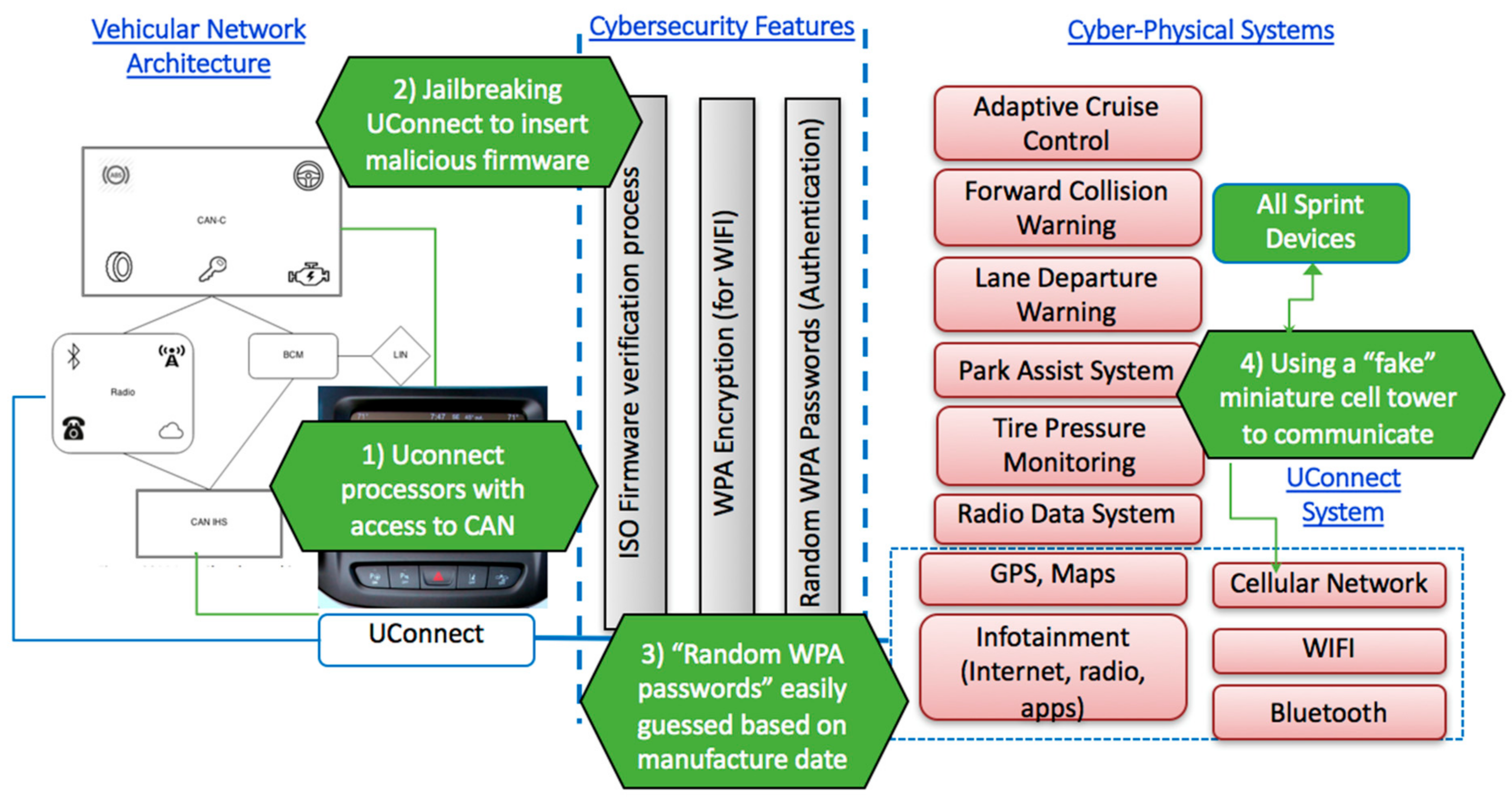

4. Discussion: Backtesting against a Past Cyber Hack Scenario

Case Analysis of Recommendations from Cybersafety

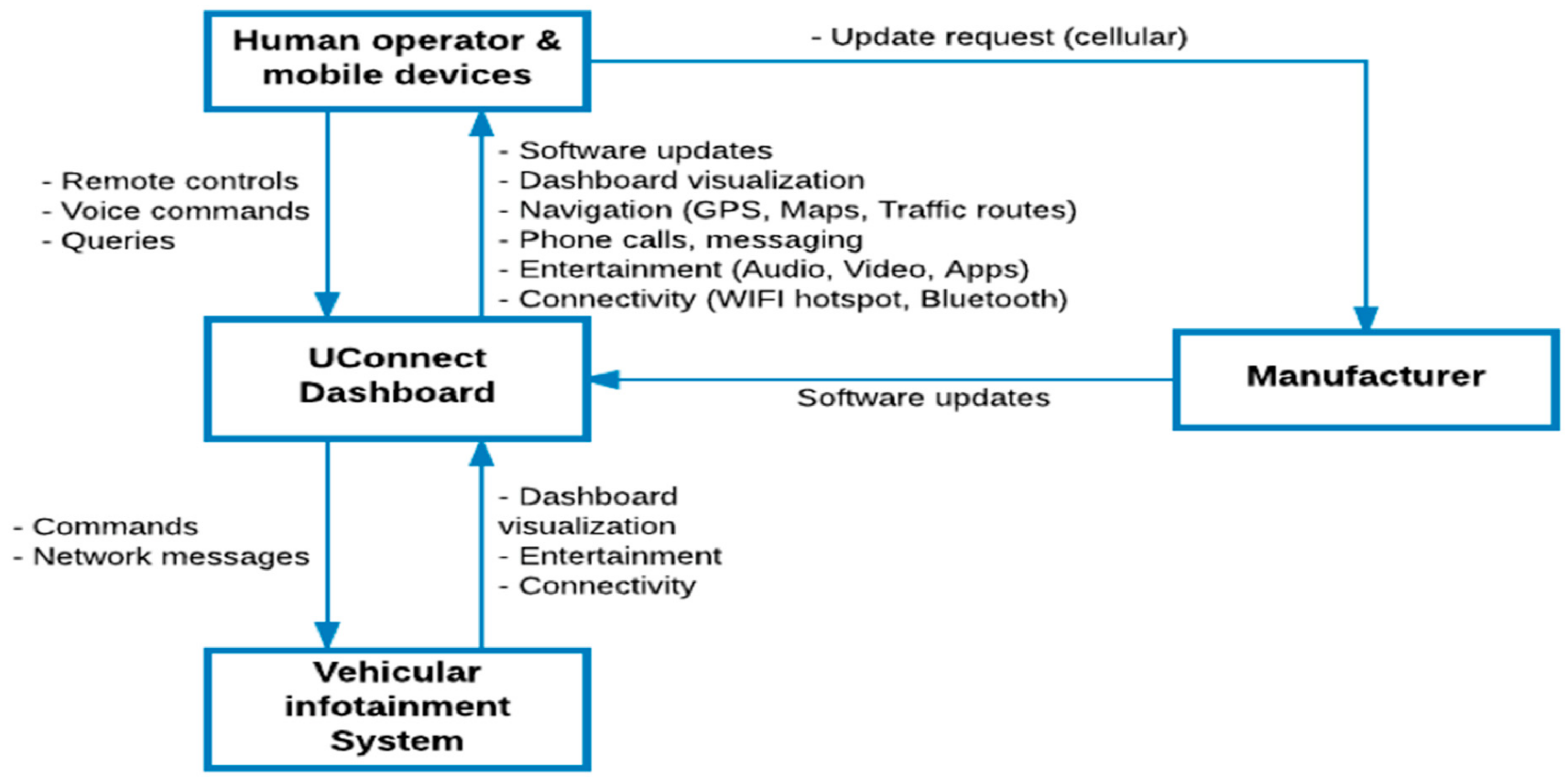

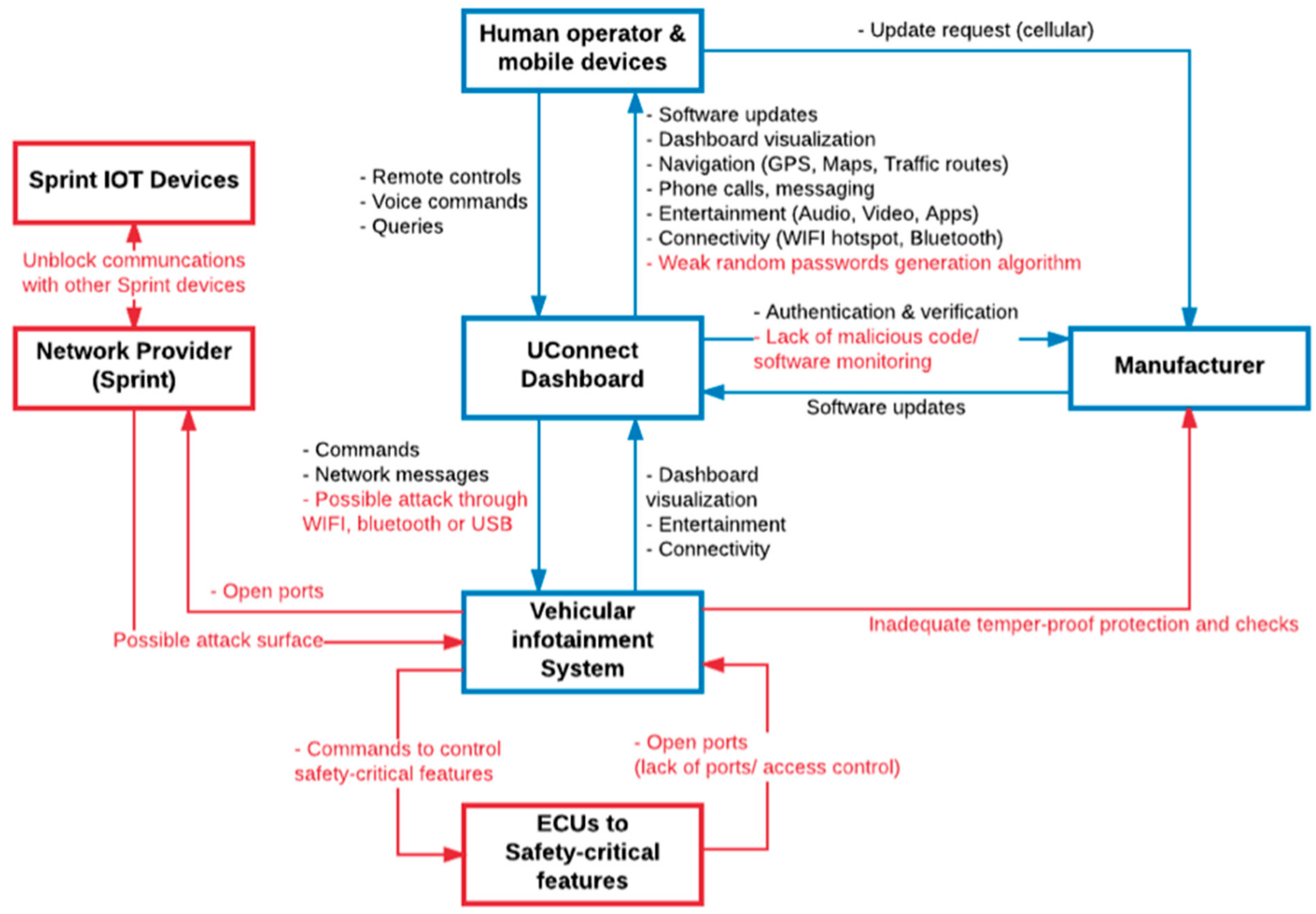

- The researchers identified a microcontroller and software within the UConnect head unit that connects to other components of the vehicle through the vehicle’s internal network, known as the Controller Area Network (CAN) bus. The CAN bus is critical infrastructure that enables communications among the vehicle’s electronic control units. Others that have looked at concerns and vulnerabilities related to the CAN bus include [30,31]. (Some papers have focused on vehicle sensors, such as [32].)

- Using this as an entry point, Miller and Valasek planted their code in the firmware of the entertainment system hardware, disabling checks and balances in the vehicle computer units and enabling them to send commands to the vehicle’s CAN bus.

- For wireless access to the vehicular network over WiFi, the researchers found that each vehicle’s WPA password was generated based on the epoch time (in seconds) from the time the vehicle was manufactured to the first start-up. The researchers were able to narrow the candidates down to a few dozen combinations, so the WPA password used to access the vehicle network could be guessed quite easily.

- The UConnect system uses Sprint’s 3G network to communicate with other vehicles, and with the vehicle manufacturer for software updates. The researchers found that it was possible to communicate with other Sprint devices connected anywhere in the country. This network vulnerability allowed the researchers to increase their range of attack by exploiting cellular access into the vehicle.

- At the physical system (vehicle) level, the unauthorized control of the vehicle’s safety-critical components violates a safety/security constraint. The driver is also unable to safely override or take over control of the vehicle. Based on the analysis, the physical failures or inadequate controls that led to the violation are the Electronic Control Unit (ECU) executing unauthorized commands, as well as indirect linkages between ECUs performing safety-critical functions and ECUs performing infotainment functions.

- For the in-car controller, the safety/security constraints violated concern the transmission and execution of unauthorized commands and unauthorized access to the vehicle’s safety-critical features. A number of inadequate controls were observed, including unencrypted messages, inadequate authorization and authentication mechanisms, and the availability of a back door for attackers to insert malicious code.

- For the vehicle manufacturer, the safety/security constraints possibly violated relate to an inadequate secure development process, inadequate cybersecurity-related competencies, and inadequate quality-assurance processes to ensure cybersecurity risks are mitigated. It is also important to consider contextual factors, such as the supply of parts from different manufacturers and a competitive industry that calls for new features, which may lead to new attack surfaces.

- Inadequate controls for the driver to override or take over control of the vehicle when it is compromised by an attacker.

- Unsafe/unsecure interactions between the remotely accessible infotainment system and the CAN bus connected to safety-critical features can lead to security vulnerabilities.

- Lack of feedback to alert the driver or manufacturer when the vehicle’s safety and security features are compromised. Possible points of compromise include jailbreaking the UConnect console to enable unauthorized software updates, installation of unauthorized software updates, or suspicious access into the system to alter safety-critical features of the vehicle.

- Awareness and technical competencies of staff in vehicular cybersecurity

- Effect of competition and time to market on security test adequacy

- Effect of the lack of standards and regulations on cybersecurity in the automotive industry

- Adequacy of cybersecurity activities (design, build, and test) in the automotive development approach

- Adequacy of training and resources allocated to cybersecurity efforts in the organization
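One of the findings above is the unsafe/unsecure interaction between the remotely accessible infotainment system and the CAN bus serving safety-critical features, for which the analysis recommends segregating the networks (R-10) and preventing unauthorized traffic at the gateway (R-7). A minimal sketch of the allowlist idea behind such a gateway follows. The segment names, the message identifier `0x3E8`, and the `Frame` type are hypothetical; a production gateway would be implemented in vehicle firmware or hardware rather than in Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """A simplified CAN-style message (hypothetical fields)."""
    source_segment: str   # network segment the frame arrived from
    arbitration_id: int   # message identifier
    data: bytes


# Hypothetical policy: the only identifier the infotainment segment may
# forward to the safety-critical segment (e.g., a read-only status request).
ALLOWED_TO_SAFETY_BUS = {
    "infotainment": frozenset({0x3E8}),
}


def may_forward_to_safety_bus(frame: Frame) -> bool:
    """Default-deny: forward only identifiers explicitly allowed for the source segment."""
    allowed_ids = ALLOWED_TO_SAFETY_BUS.get(frame.source_segment, frozenset())
    return frame.arbitration_id in allowed_ids


status_request = Frame("infotainment", 0x3E8, b"\x01")
brake_command = Frame("infotainment", 0x200, b"\xff")

print(may_forward_to_safety_bus(status_request))  # True
print(may_forward_to_safety_bus(brake_command))   # False
```

The default-deny design means that a compromised head unit can only emit the small set of messages the policy anticipates, rather than any command the safety-critical ECUs would accept.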

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Keeney, T. Mobility-as-a-Service: Why Self-Driving Cars Could Change Everything. ARK Invest. Available online: https://research.ark-invest.com/self-driving-cars-white-paper (accessed on 17 June 2017).

- Lee, E. Mobility as a Service Market Size worth USD 523.61 Billion by 2027. Emergen Research. 14 December 2020. Available online: https://www.globenewswire.com/news-release/2020/12/14/2144252/0/en/Mobility-as-a-Service-Market-Size-Worth-USD-523-61-Billion-by-2027-Emergen-Research.html (accessed on 21 September 2017).

- Weilun, S. nuTonomy Driverless-Car Accident due to ‘Extremely Rare’ Software Glitches: One-North Trial Resumes. The Business Times. Available online: http://www.businesstimes.com.sg/transport/nutonomy-driverless-car-accident-due-to-extremely-rare-software-glitches-one-north-trial (accessed on 29 October 2017).

- Golson, J. Tesla and Mobileye Disagree on Lack of Emergency Braking in Deadly Autopilot Crash. The Verge. 1 July 2016. Available online: https://www.theverge.com/2016/7/1/12085218/tesla-autopilot-crash-investigation-radar-automatic-emergency-braking (accessed on 21 September 2017).

- Controlling Vehicle Features of Nissan LEAFs across the Globe via Vulnerable APIs. Troy Hunt. 24 February 2016. Available online: https://www.troyhunt.com/controlling-vehicle-features-of-nissan/ (accessed on 21 July 2017).

- Hackers Remotely Kill a Jeep on the Highway—With Me in It. WIRED. Available online: https://www.wired.com/2015/07/hackers-remotely-kill-jeep-highway/ (accessed on 5 September 2017).

- Automotive Security (ASQ). Challenges, Standards, and Solutions; ASQ: Milwaukee, WI, USA, 2016.

- Leveson, N.G. Engineering a Safer World: Systems Thinking Applied to Safety; The MIT Press: Cambridge, MA, USA, 2012.

- J1739: Potential Failure Mode and Effects Analysis in Design (Design FMEA) and Potential Failure Mode and Effects Analysis in Manufacturing and Assembly Processes (Process FMEA) and Effects Analysis for Machinery (Machinery FMEA); SAE International: Warrendale, PA, USA. Available online: http://standards.sae.org/j1739_200208/ (accessed on 1 September 2017).

- Ericson, C.A., II. Fault Tree Analysis Primer, 2nd ed.; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2011.

- Banerjee, A.; Venkatasubramanian, K.K.; Mukherjee, T.; Gupta, S.K.S. Ensuring Safety, Security, and Sustainability of Mission-Critical Cyber-Physical Systems. Proc. IEEE 2012, 100, 283–299.

- Schneider, D.; Armengaud, E.; Schoitsch, E. Towards Trust Assurance and Certification in Cyber-Physical Systems. In Computer Safety, Reliability, and Security; Springer: Cham, Switzerland, 2014; pp. 180–191.

- Macher, G.; Sporer, H.; Berlach, R.; Armengaud, E.; Kreiner, C. SAHARA: A security-aware hazard and risk analysis method. In Proceedings of the 2015 Design, Automation Test in Europe Conference Exhibition (DATE), Grenoble, France, 9–13 March 2015; pp. 621–624.

- Security Application of Failure Mode and Effect Analysis (FMEA). Available online: https://link.springer.com/chapter/10.1007/978-3-319-10506-2_21 (accessed on 19 August 2017).

- Raspotnig, C.; Karpati, P.; Katta, V. A Combined Process for Elicitation and Analysis of Safety and Security Requirements. In Enterprise, Business-Process and Information Systems Modeling; Springer: Berlin/Heidelberg, Germany, 2012; pp. 347–361.

- Dominic, D.; Chhawri, S.; Eustice, R.M.; Ma, D.; Weimerskirch, A. Risk Assessment for Cooperative Automated Driving. In Proceedings of the 2nd ACM Workshop on Cyber-Physical Systems Security and Privacy, New York, NY, USA, 16 October 2016; pp. 47–58.

- Martinez, R.S. System Theoretic Process Analysis of Electric Power Steering for Automotive Applications. Master’s Thesis, Massachusetts Institute of Technology, Engineering Systems Division, Cambridge, MA, USA, June 2015.

- Peper, N.A. Systems Thinking Applied to Automation and Workplace Safety. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, June 2017.

- Fleming, C.H.; Leveson, N.G. Improving Hazard Analysis and Certification of Integrated Modular Avionics. J. Aerosp. Inf. Syst. 2014, 11, 397–411.

- Pawlicki, T.; Samost, A.; Brown, D.W.; Manger, R.P.; Kim, G.Y.; Leveson, N.G. Application of systems and control theory-based hazard analysis to radiation oncology. J. Med. Phys. 2016, 43, 1514–1530.

- Young, W.; Leveson, N.G. An Integrated Approach to Safety and Security Based on Systems Theory. Commun. ACM 2014, 57, 31–35.

- Salim, H. Cybersafety: A Systems Thinking and Systems Theory Approach to Managing Cyber Security Risks. Master’s Thesis, Engineering Systems Division, Massachusetts Institute of Technology, Cambridge, MA, USA, 2014.

- Young, W.; Leveson, N. Systems thinking for safety and security. In Proceedings of the 29th Annual Computer Security Applications Conference (ACSAC ’13 ACM), New Orleans, LA, USA, 16 December 2013.

- Salim, H.; Madnick, S. Cyber Safety: A Systems Theory Approach to Managing Cyber Security Risks—Applied to TJX Cyber Attack. Working Paper CISL 2016-09. August 2016. Available online: http://web.mit.edu/smadnick/www/wp/2016-09.pdf (accessed on 3 April 2021).

- Nourian, A.; Madnick, S. A Systems Theoretic Approach to the Security Threats in Cyber Physical Systems Applied to Stuxnet; Working Paper CISL 2014-13; September 2014.

- Nourian, A.; Madnick, S. A Systems Theoretic Approach to the Security Threats in Cyber Physical Systems Applied to Stuxnet. IEEE Trans. Dependable Secur. Comput. 2018, 15, 2–13.

- Young, W., Jr. Understanding STPA-Sec through a Simple Roller Coaster Example. In Proceedings of the 2016 STAMP Conference, Boston, MA, USA, 23 March 2016.

- Lee, C.W. A System Theoretic Approach to Cybersecurity Risks Analysis of Passenger Autonomous Vehicles. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, February 2018.

- Valasek, C.; Miller, C. Remote Exploitation of an Unaltered Passenger Vehicle. Available online: http://illmatics.com/Remote%20Car%20Hacking.pdf (accessed on 7 May 2015).

- Casillo, M.; Coppola, S.; De Santo, M.; Pascale, F.; Santonicola, E. Embedded Intrusion Detection System for Detecting Attacks over CAN-BUS. In Proceedings of the 2019 4th International Conference on System Reliability and Safety (ICSRS), Rome, Italy, 20–22 November 2019; pp. 136–141.

- Castiglione, A.; Palmieri, F.; Colace, F.; Lombardi, M.; Santaniello, D.; D’Aniello, G. Securing the Internet of vehicles through lightweight block ciphers. Pattern Recognit. Lett. 2020, 135, 264–270.

- El-Rewini, Z.; Sadatsharan, K.; Sugunaraj, N.; Selvaraj, D.F.; Plathottam, S.J.; Ranganathan, P. Cybersecurity Attacks in Vehicular Sensors. IEEE Sens. J. 2020, 20, 13752–13767.

| Hazards | Associated Accidents/Losses |
|---|---|
| H1: Adversaries take over control of safety-critical functions of AV | A1, A2, A3 |
| H2: AV operating with unsafe/unsecure/outdated software | A1, A2, A3 |
| H3: Adversaries compromise network/critical infrastructure supporting AV | A3, A4 |
| H4: AV traveling on unsafe/unauthorized road | A1, A2 |
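The hazard table above maps each hazard to the accidents/losses it can lead to. As a small illustration, the mapping can be inverted to answer the opposite question, namely which hazards must be controlled to avoid a given loss. The dictionary below transcribes the table; the function name is an assumption for this example.

```python
from collections import defaultdict

# Hazard -> associated accidents/losses, transcribed from the table above.
HAZARD_LOSSES = {
    "H1": ("A1", "A2", "A3"),
    "H2": ("A1", "A2", "A3"),
    "H3": ("A3", "A4"),
    "H4": ("A1", "A2"),
}


def hazards_by_loss(hazard_losses):
    """Invert the mapping so each accident/loss lists the hazards that can cause it."""
    inverted = defaultdict(list)
    for hazard, losses in hazard_losses.items():
        for loss in losses:
            inverted[loss].append(hazard)
    return dict(inverted)


for loss, hazards in sorted(hazards_by_loss(HAZARD_LOSSES).items()):
    print(loss, hazards)
```

For example, the inverted view shows that loss of critical information (A4) is reachable only through H3, whereas injury or death (A2) is reachable through H1, H2, and H4.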

| Control Action | Not Providing Causes Hazard | Providing Causes Hazard | Too Early/Too Late/Wrong Order Causes Hazard | Stopping Too Soon/Applying Too Long Causes Hazard |
|---|---|---|---|---|
| UCAs between MaaS Operator (Management) and software update provider | | | | |
| Service agreement | UCA-1: Service agreement with network provider not provided before system is operationalized [H3, H4] | Not hazardous | Not applicable | Not applicable |
| UCAs between MaaS Operator (Management) and Control Center | | | | |
| Safety/security policies and operating procedure | UCA-3: Safety policies and operating procedure not provided before system is operationalized [H1–H4] | Not hazardous | Not applicable | Not applicable |
| UCAs between software update providers and Control Center | | | | |
| Periodic software updates | UCA-7: Software updates not provided by providers when new threats/vulnerabilities exist [H1, H2] | Not hazardous | UCA-8: Software updates provided too late by providers when new threats/vulnerabilities exist [H1, H2] | Not applicable |
| UCAs between Control Center and AV | | | | |
| Periodic/dynamic software updates | UCA-10: Software updates not applied to AVs when threats or vulnerabilities exist in AV [H1, H2] | UCA-11: Unauthorized software updated into AVs [H1, H2, H4] | UCA-12: Software update not applied to AVs in timely manner when threats or vulnerabilities exist in AV [H1, H2, H4] | Not applicable |

| Unsafe/Unsecure Control Actions | Possible Safety/Security Constraints |
|---|---|
| UCA-1: Service agreement with network provider not provided before system is operationalized [H3, H4] | SC-1: The MaaS operator shall establish a service level agreement with the network service provider to ensure adequate network coverage, availability, and protection levels against cybersecurity threats. |
| UCA-3: Safety policies and operating procedure not provided before system is operationalized [H1–H4] | SC-2: The MaaS operator shall translate applicable regulatory requirements and standards to safety policies and operating procedures. |
| UCA-7: Software updates not provided by providers when new threats/vulnerabilities exist [H1, H2] | SC-7: The MaaS operator shall establish protocols for periodic or ad hoc software updates upon detection of vulnerabilities. |
| UCA-8: Software updates provided too late by providers when new threats/vulnerabilities exist [H1, H2] | SC-3: The MaaS operator shall establish protocols for timely delivery of critical software updates that need to be installed on AVs expeditiously. |
| UCA-10: Software updates not applied to AVs when threats or vulnerabilities exist in AV [H1, H2] | SC-10: The MaaS operator shall, by working with associated providers, ensure that software updates are provided to fix detected vulnerabilities. |
| UCA-11: Unauthorized software updated into AVs [H1, H2, H4] | SC-11: The MaaS operator shall, by working with relevant parties, prevent unauthorized software from being installed into AVs. |
| UCA-12: Software update not applied to AVs in timely manner when threats or vulnerabilities exist in AV [H1, H2, H4] | SC-12: The MaaS operator shall ensure timely response to vulnerable software by providing fixes/patches through a pre-emptive or quick recovery approach. |

| Scenario | Associated Causal Factors | Requirements/Design Features | Allocated to | Rationale |
|---|---|---|---|---|
| UCA-7: Software updates not provided by providers when new threats/vulnerabilities exist [H1, H2]; UCA-8: Software updates provided too late by providers when new threats/vulnerabilities exist [H1, H2] | | | | |
| Outdated software; software updates with vulnerabilities | Inadequate service level agreement with update providers | R-1: Establish service level agreement with update providers to ensure preventive bug and vulnerability fixes are included | MaaS Operator (Management team) | Improve organizational cybersecurity plans and strategies |
| | Long lead time to implement software updates | R-2: Ensure proactive vulnerability monitoring, for example, a bug bounty program that invites “ethical hackers” to find security vulnerabilities; R-3: Dedicated, independent cybersecurity team that conducts regular security audit tests and detects new threats/vulnerabilities | MaaS Operator (Management team) | Improve organizational cybersecurity plans and strategies |
| | Lack of proactive vulnerability monitoring and security maintenance | | | |
| UCA-10: Software updates not applied to AVs when threats or vulnerabilities exist in AV [H1, H2]; UCA-12: Software update not applied to AVs in timely manner when threats or vulnerabilities exist in AV [H1, H2, H4] | | | | |
| Delayed software updates to AVs | Inadequate policies and operating procedures | R-4: Develop cybersecurity policies and operating procedures to determine update lead time based on different levels of criticality | MaaS Operator (Management team) | Improve organizational cybersecurity plans and strategies |
| | Inadequate cybersecurity response plans and strategies | R-5: Develop cybersecurity response plans and strategies, ensuring all parties involved know their roles and responsibilities in the event of a cyber-attack (malicious software updates); R-6: Provide a system for response plans to be exercised and lessons learnt incorporated to improve existing plans | MaaS Operator (Management team) | Improve organizational cybersecurity plans and strategies |
| AV internal or external network overloaded | Excessive traffic restricting software updates to AV | R-7: AV gateway designed to prevent unauthorized traffic | MaaS IT Infra/security team | Improving critical infrastructure protection |
| | File size of software updates too large | R-8: MaaS operator to work with providers to limit file size of software updates | MaaS Operator | Coordination and cooperation with external providers |
| | Too many concurrent downloads | R-9: MaaS operator to stagger software updates to minimize network congestion | MaaS Operator | Enhancing internal policies and procedures |
| | Backdoor attacks within vehicular network (CAN bus) | R-10: Segregate networks for safety-critical functions and non-safety-critical functions | AV Manufacturer | Improving AV security design |
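Requirement R-9 in the table above calls for staggering software updates to limit network congestion. One simple way to do this is to split the fleet into fixed-size waves released at regular intervals, as sketched below. The function name, the vehicle identifiers, and the wave size and interval are hypothetical; in practice they would follow from the criticality-based lead times envisaged in R-4 and from measured network capacity.

```python
def plan_update_waves(vehicle_ids, max_concurrent, wave_interval_min):
    """Split a fleet into update waves of at most `max_concurrent` vehicles.

    Returns a list of (start_offset_in_minutes, vehicles_in_wave) tuples.
    """
    if max_concurrent < 1:
        raise ValueError("max_concurrent must be at least 1")
    waves = []
    for start in range(0, len(vehicle_ids), max_concurrent):
        wave_number = start // max_concurrent
        offset = wave_number * wave_interval_min
        waves.append((offset, vehicle_ids[start:start + max_concurrent]))
    return waves


# Hypothetical fleet of five AVs, two downloads at a time, 15 minutes apart.
fleet = ["AV-1", "AV-2", "AV-3", "AV-4", "AV-5"]
for offset, wave in plan_update_waves(fleet, max_concurrent=2, wave_interval_min=15):
    print(f"t+{offset} min: {wave}")
```

Staggering trades update latency for network headroom, so a critical security patch may justify a larger wave size or a shorter interval than a routine update.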

| Categories of Requirements | Cybersafety | CHASSIS |
|---|---|---|
| Managerial aspects | 7 | 2 |
| Organizational/operations aspects | 14 | 7 |
| Technical (AV design) aspects | 13 | 10 |
| Technical (MaaS IT Infrastructure) aspects | 14 | 4 |
| Total requirements | 48 | 23 |

| Mitigations in Both Cybersafety and CHASSIS | Mitigations in Cybersafety Only | Mitigations in CHASSIS Only |
|---|---|---|
| Both methods identified mitigations to strengthen control and protection at key components under system boundary. | | |

| Domain | Analysis |
|---|---|
| Safety and security responsibilities and constraints violated | Prevent unauthorized control of vehicle’s safety-critical components |
| Emergency and Safety Equipment (Controls) | Driver unable to override or take over command of vehicle; driver may attempt to take over control or switch off the vehicle |
| Physical Failures and Inadequate Controls | ECU for safety-critical components executes unauthorized command; ECUs for safety-critical components (e.g., accelerator, brakes, and steering) and non-safety-critical components (infotainment, wipers, etc.) are on the same network bus |
| Contextual Factors | Driver may completely switch off the vehicle, but this may be dangerous while the vehicle is moving; driver may enable vehicular network (e.g., WiFi, cellular) and expose the vulnerability |

| Domain | Analysis |
|---|---|
| Safety and security responsibilities and constraints violated | Prevent unauthorized software or commands from being sent and executed on vehicle controller; prevent access to vehicle’s safety-critical features |
| Emergency and Safety Equipment (Controls) | UConnect system does not have direct features to control safety-critical features of the vehicle |
| Physical Failures and Inadequate Controls | Messages transmitted over the air are not encrypted, which allows attackers to interpret messages and plan attacks; inadequate authorization and authentication allow unauthorized software to be installed in vehicle; vehicle installed with vulnerable software provides a backdoor for attackers to send commands and remotely control safety features of vehicle |
| Contextual Factors | By default, UConnect is designed with features for drivers to remotely control non-safety-critical features of vehicle; vehicle is accessible from anywhere through the Internet, which makes it possible for attackers to launch large-scale attacks remotely; unsecure interaction between the infotainment system and CAN bus that connects to safety-critical features |

| Domain | Analysis |
|---|---|
| Safety- and security-related responsibilities | Ensure secure development process, including safety/security hazards analysis, development, and testing; ensure staff are trained in cybersecurity; ensure vehicles manufactured are secure with cybersecurity risks mitigated |
| Unsafe decisions and control | Unsafe interactions of new UConnect feature with existing architecture that shares vehicular network between safety-critical and non-safety-critical features; procurement of parts/components with vulnerabilities; inadequate training or resources on cybersecurity |
| Process/mental flaws | Manufacturer assumed UConnect can only access infotainment features and that it is not possible to remotely access safety-critical features; use of legacy components and parts |
| Context in which decisions were made | Highly competitive industry, which may cause manufacturers to develop new features to attract buyers; manufacturer may not be aware of security vulnerabilities in parts/components procured from suppliers; automotive industry may be new to cybersecurity risks, and the team may not have adequate competencies |

| Inadequate Controls/Unsafe Decisions | Examples of Mitigations from Cybersafety | Examples of Mitigations from CHASSIS |
|---|---|---|
| ECU for safety-critical components executes unauthorized command | R-7: AV gateway designed to prevent unauthorized traffic; R-21: AVs to check for certified updates before processing these updates; R-26: Built-in intrusion detection system; R-32: Build in tamper-proof design for critical functions of AV | MP-6: Design and develop a mechanism to certify updates are from trusted sources; MP-1: AV shall have capability to detect messages that may be altered or are from unidentified sources; MP-2: AV shall ensure updates are from authorized and authenticated sources before receiving these updates |
| ECUs for safety-critical components (e.g., accelerator, brakes, and steering) and non-safety-critical components (infotainment, wipers, etc.) on the same network bus | R-10: Segregate networks for safety-critical functions and non-safety-critical functions; R-33: Build protection mechanism to prevent unauthorized traffic from accessing AV internal traffic | Nil |
| Messages transmitted over the air not encrypted, which allows attackers to interpret messages and plan attacks | R-15: Protect communications channel, using secure transport protocol and encryption techniques whenever possible | MP-7: (1) Ensure security-critical information is sent using secure network protocol; (2) Ensure security-critical information is encrypted before transmission |
| Inadequate authorization and authentication allow unauthorized software to be installed in vehicle ECU | R-23: Enforce strong authentication and authorization mechanisms to ensure validity of commands/software; R-20: Create a certificate authority, with all updates submitted for certification before they can be accepted by AV | MP-14: Design and implement authentication and authorization mechanism; MP-15: Ensure that the authentication and authorization mechanism is tamper-proof; MP-6: Design and implement tamper-proof ECU to prevent unauthorized injection of commands to ECU to send wrong/inaccurate metrics |
| Vehicle installed with vulnerable software provides backdoor for attackers to send commands and remotely control safety features of vehicle | R-46: Provide anomaly detection and analysis tool to detect potential attacks; R-25: Send alerts to control stations when unauthorized modifications are detected | Nil |
| Unsafe interactions of new UConnect feature with existing architecture that shares vehicular network between safety-critical and non-safety-critical features | R-22: Enforce secure software development lifecycle (SDLC) and conduct audits/checks to ensure development teams follow it | Nil |
| Inadequate training or resources on cybersecurity | R-37: Build/strengthen CS technical competencies in organization; R-43: Ensure AV manufacturer has known track record for safety and security; R-39: Ensure that staff at all levels are familiar with their CS roles and responsibilities; R-42: Translate applicable standards and regulatory guidelines into actionable tasks for the organization | MP-21: Provide clear CS guidelines, policies, and training to ensure that staff at all levels are familiar with their CS roles and responsibilities; MP-22: Include standard operating procedures to update management on CS incidents based on criticality/severity |
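Several mitigations in the table above (e.g., R-21, R-23, and MP-2) require the vehicle to confirm that an update comes from an authorized source before processing it. The sketch below illustrates the accept/reject step using a keyed hash (HMAC-SHA256) from the Python standard library. This is a simplifying assumption: a certificate-authority scheme such as the one suggested in R-20 would normally rely on asymmetric signatures, and the key, payload, and function names here are hypothetical.

```python
import hashlib
import hmac


def sign_update(key: bytes, payload: bytes) -> str:
    """Compute an authentication tag for an update package (stand-in for a signature)."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_update(key: bytes, payload: bytes, tag: str) -> bool:
    """Accept the update only if the tag matches; uses a constant-time comparison."""
    expected = sign_update(key, payload)
    return hmac.compare_digest(expected, tag)


# Hypothetical shared key and update contents, for illustration only.
shared_key = b"example-key-not-for-production"
update = b"firmware v2.1 contents"
tag = sign_update(shared_key, update)

print(verify_update(shared_key, update, tag))                 # True
print(verify_update(shared_key, update + b" tampered", tag))  # False
```

The essential property is that verification happens before the update is processed, so an altered or unsigned package is rejected rather than installed.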

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, C.W.; Madnick, S. Cybersafety Approach to Cybersecurity Analysis and Mitigation for Mobility-as-a-Service and Internet of Vehicles. Electronics 2021, 10, 1220. https://doi.org/10.3390/electronics10101220
