An Accuracy Improvement Method Based on Multi-Source Information Fusion and Deep Learning for TSSC and Water Content Nondestructive Detection in “Luogang” Orange

Abstract

1. Introduction

2. Materials and Methods

2.1. “Luogang” Orange Samples

2.2. Nondestructive Sampling Platforms

2.3. TSSC and Water Content Test

2.4. Data Analysis

2.4.1. Analysis Procedure

2.4.2. Initial Feature Formation

2.4.3. Feature Vector Conversion for CNN Input

2.4.4. Detection Effect Evaluation

2.4.5. Software

3. Results and Discussion

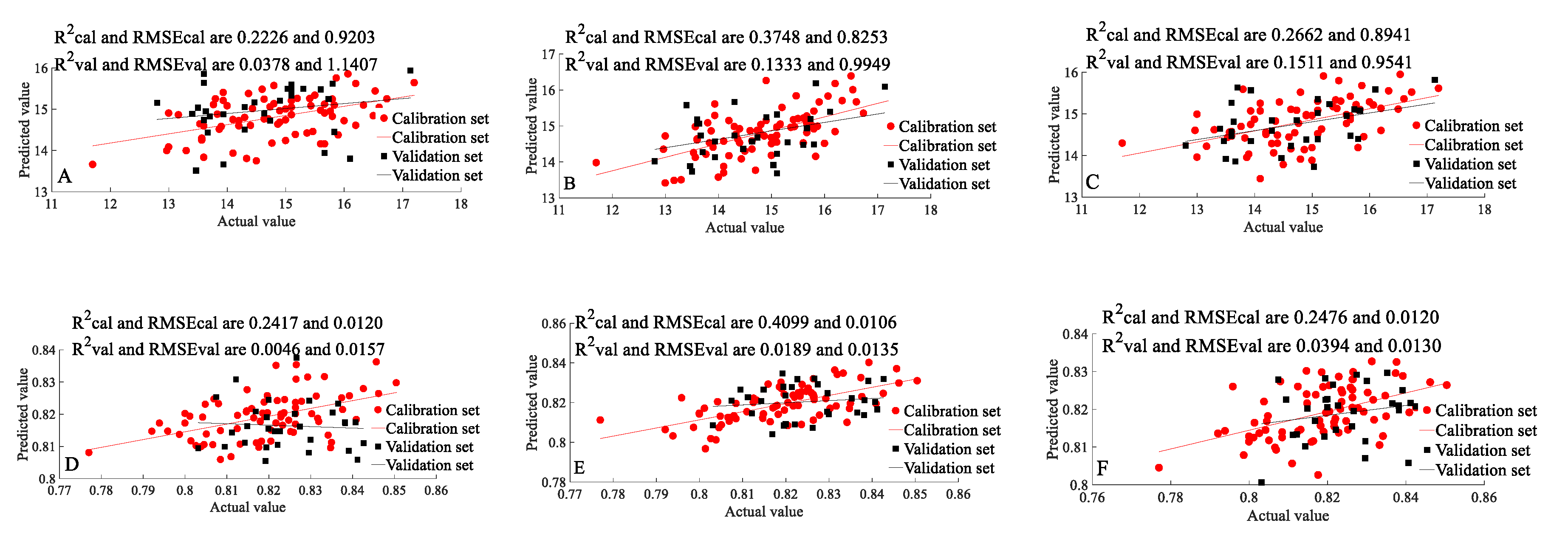

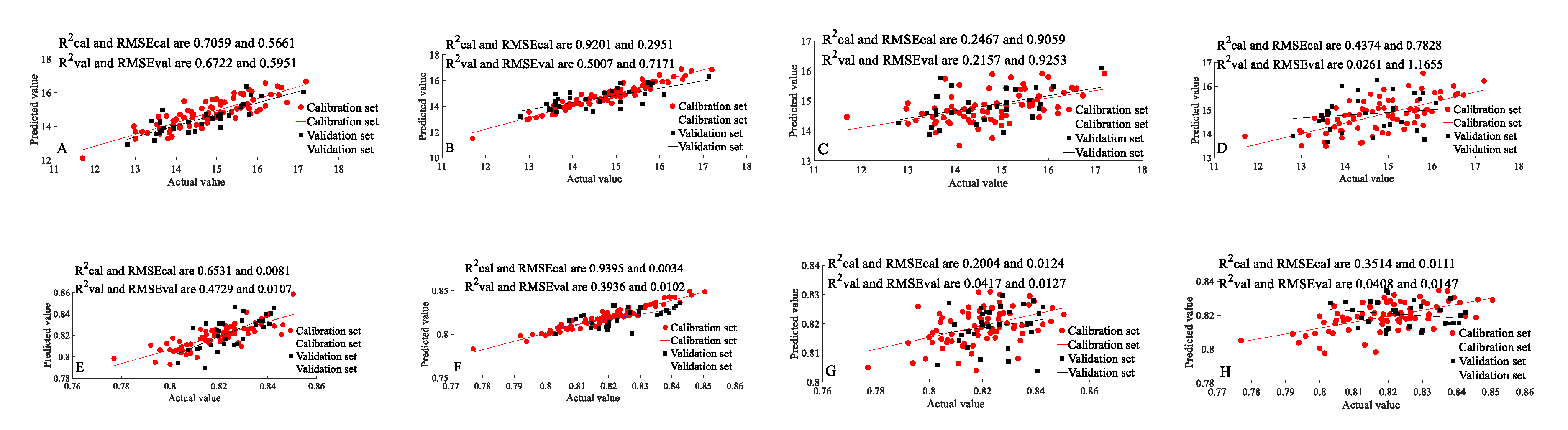

3.1. Detection Based on Raw Data of Single Detectors

3.1.1. Detection Based on VIS/NIR Spectrometer (400–1100 nm)

3.1.2. Detection Based on NIR Spectrometer (900–1700 nm)

3.1.3. Detection Based on Machine Vision

3.1.4. Detection Based on Electronic Nose

3.1.5. Detection after SG and GA Preprocessing

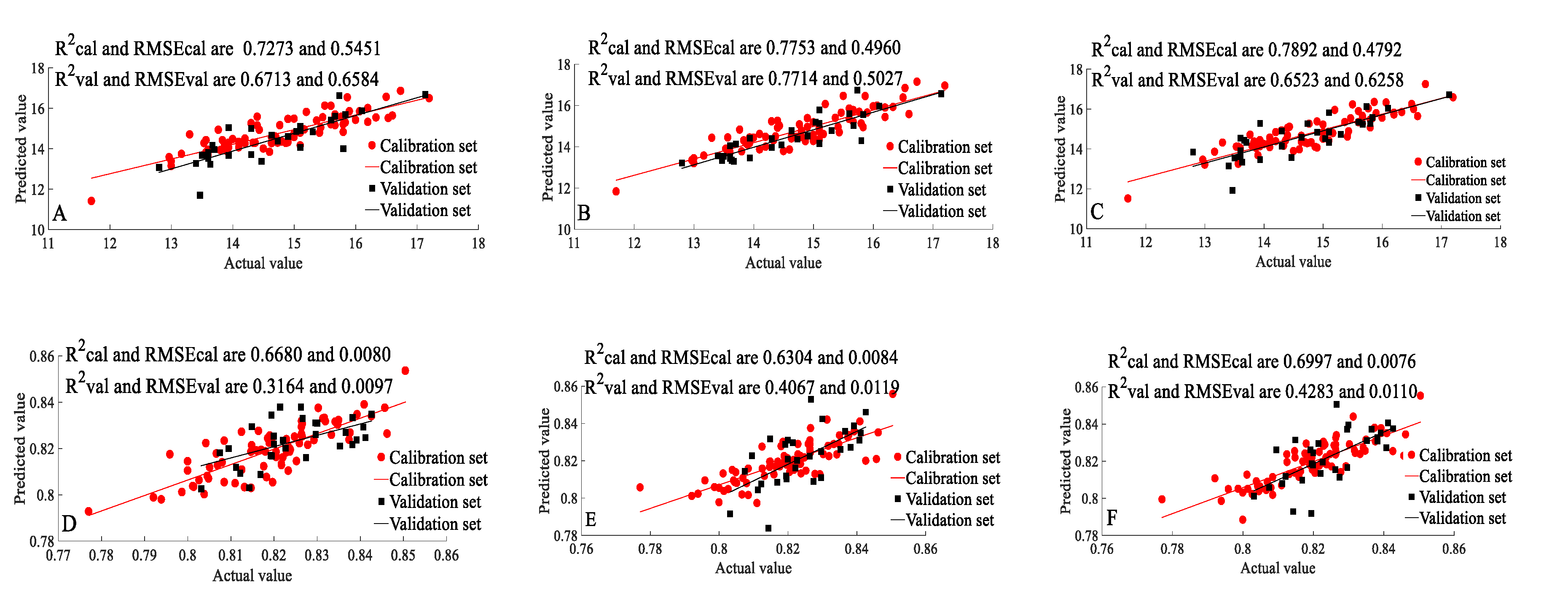

3.2. Detection Based on MIF

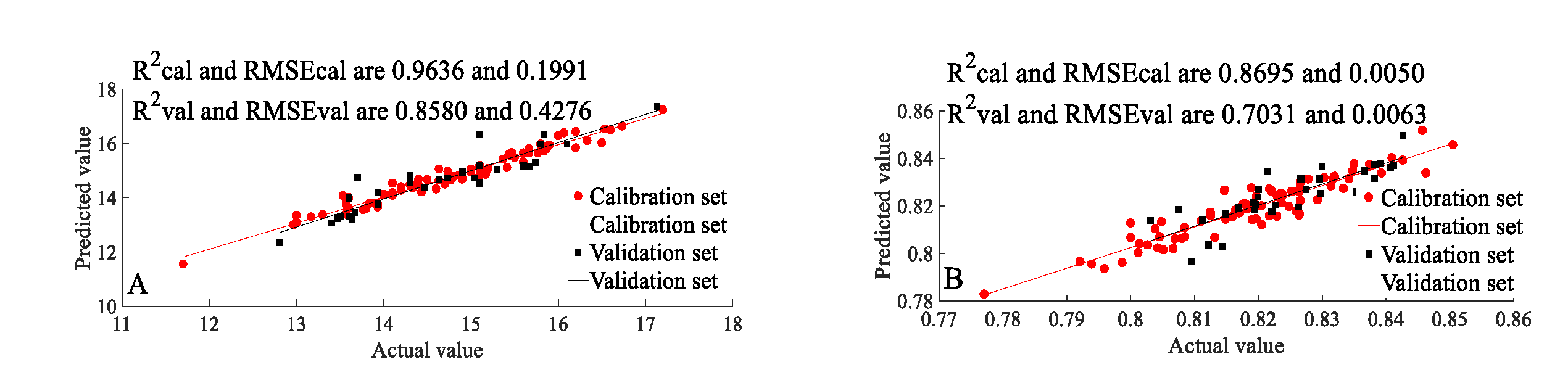

3.3. Detection Based on MIF and Deep Learning

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Detection target | CNN Layer 1: FMN | CNN Layer 1: FMS | CNN Layer 1: PS | CNN Layer 2: FMN | CNN Layer 2: FMS | CNN Layer 2: PS | Learning Rate | Iterations |
|---|---|---|---|---|---|---|---|---|
| TSSC | 6 | 3 | 2 | 4 | 2 | 2 | 0.85 | 300 |
| Water content | 7 | 3 | 2 | 3 | 2 | 2 | 0.80 | 500 |
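
The settings in the table above can be written down as a small configuration object, which makes it easy to check how large the feature vector is after the second layer. The sketch below is illustrative only and rests on assumptions that this section does not confirm: FMN is read as the number of feature maps, FMS as the side length of a square convolution kernel, and PS as the side length of a non-overlapping pooling window. The class names, the function `flattened_length`, and the 16 × 16 input size are hypothetical and are not taken from the article.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ConvPoolLayer:
    fmn: int  # assumed meaning: number of feature maps produced by the layer
    fms: int  # assumed meaning: side length of the square convolution kernel
    ps: int   # assumed meaning: side length of the pooling window


@dataclass(frozen=True)
class CnnConfig:
    layers: Tuple[ConvPoolLayer, ...]
    learning_rate: float
    iterations: int


# Numbers copied from the table; only their interpretation is assumed.
TSSC = CnnConfig((ConvPoolLayer(6, 3, 2), ConvPoolLayer(4, 2, 2)), 0.85, 300)
WATER = CnnConfig((ConvPoolLayer(7, 3, 2), ConvPoolLayer(3, 2, 2)), 0.80, 500)


def flattened_length(config: CnnConfig, input_side: int) -> int:
    """Return the feature-vector length after the last pooling step.

    Assumes a square single-channel input, stride-1 convolution without
    padding, and non-overlapping pooling that drops any remainder.
    """
    side = input_side
    maps = 1
    for layer in config.layers:
        side = side - layer.fms + 1  # unpadded convolution shrinks each side
        if side <= 0:
            raise ValueError("input is too small for this layer stack")
        side = side // layer.ps      # pooling divides each side
        if side <= 0:
            raise ValueError("input is too small for this layer stack")
        maps = layer.fmn             # each layer outputs fmn feature maps
    return maps * side * side


if __name__ == "__main__":
    # Hypothetical 16 x 16 input, chosen only for illustration.
    for name, cfg in (("TSSC", TSSC), ("Water content", WATER)):
        print(name, flattened_length(cfg, 16), cfg.learning_rate, cfg.iterations)
```

Under these assumptions, a 16 × 16 input shrinks to 14, 7, 6, and then 3 pixels per side in both networks, so the two configurations differ only in how many feature maps they keep at the end.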

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, S.; Lu, H.; Ference, C.; Zhang, Q. An Accuracy Improvement Method Based on Multi-Source Information Fusion and Deep Learning for TSSC and Water Content Nondestructive Detection in “Luogang” Orange. Electronics 2021, 10, 80. https://doi.org/10.3390/electronics10010080